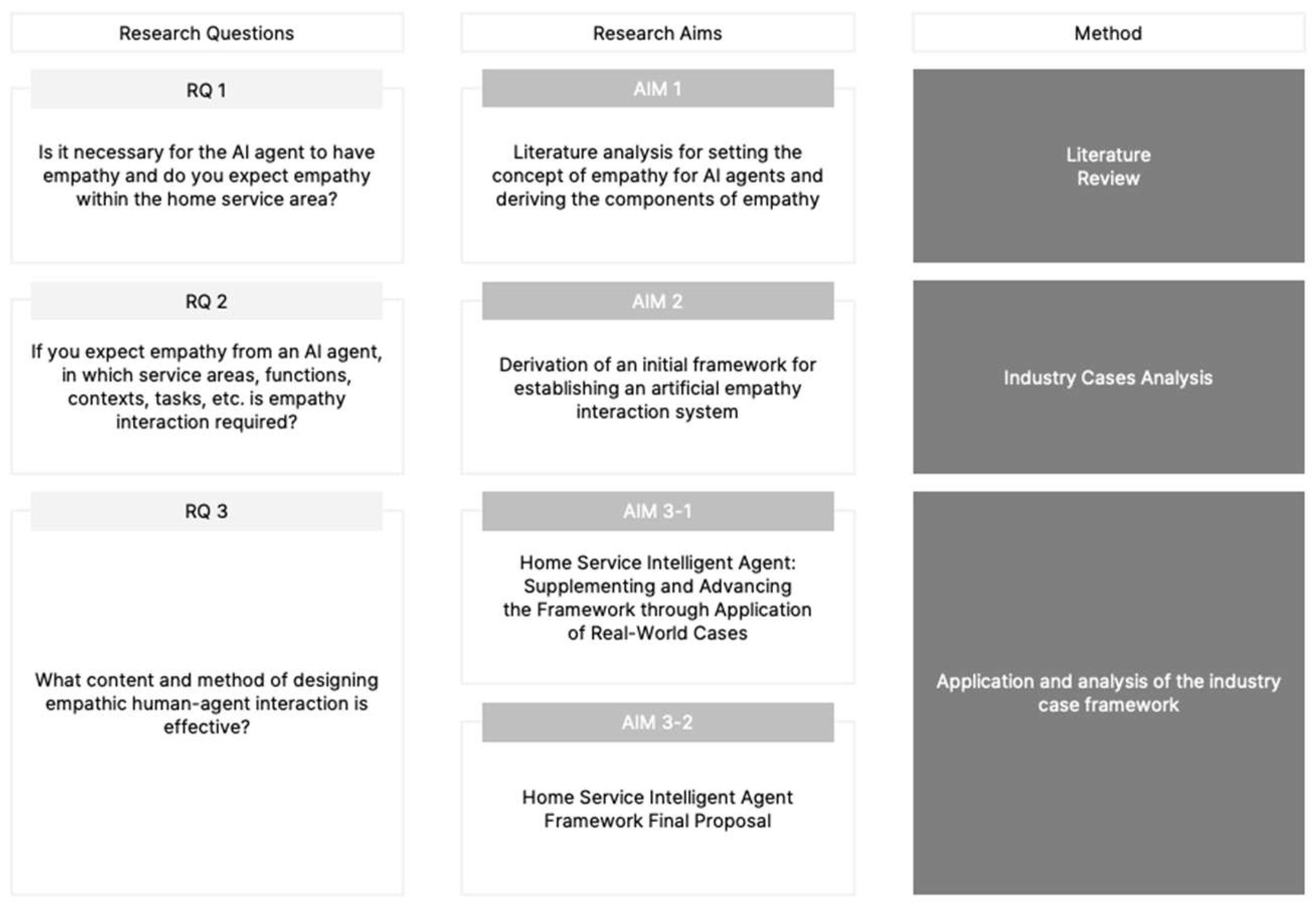

4.1. Analyzing Artificial Empathy Interaction Components and Deriving an Initial Framework

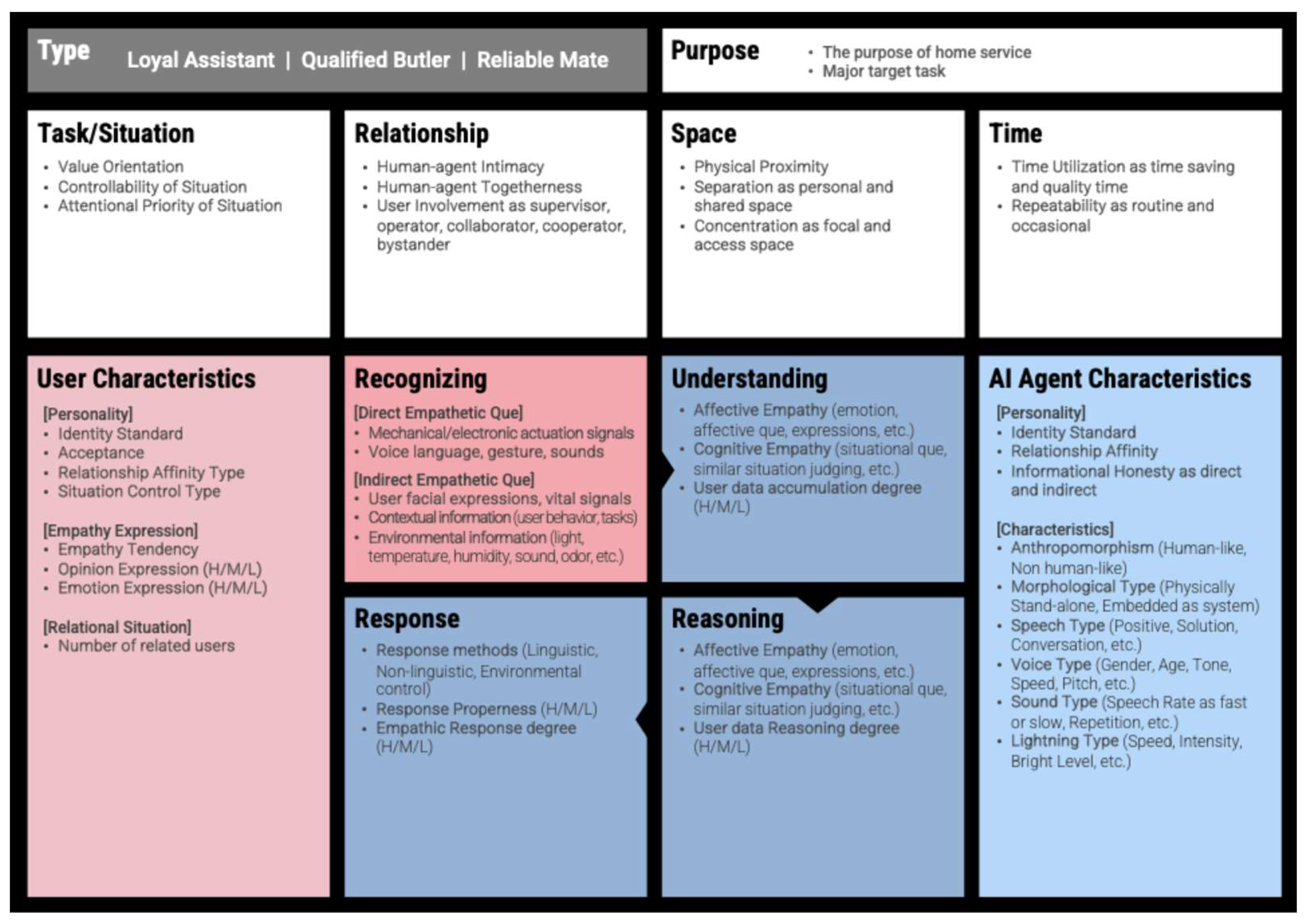

Previous studies have established that psychological anthropomorphism is significant in the interaction between intelligent agents and users, and that it is related to empathic responses. To produce natural, human-like responses, an agent must consider external environmental factors such as context and situation in addition to form and function. This research therefore identified components in order to derive a framework that specifies what should be considered for various home service areas and situations, and how interactions should be designed in a multifaceted manner. To establish the framework, we first checked whether an existing empathy structure could be borrowed to capture the overall flow. A comprehensive review of studies that have employed the empathy process in the context of AI revealed that the structure of recognizing, interpreting, and responding to emotions remains consistent across these applications. Each process delineates the components that are identified [39]. Furthermore, because the majority of studies focus on the interaction between a robot or agent and a user, the empathy process comprises additional components that must be considered, such as information pertaining to the user, the situation and context, and the setting factors of the agent. Consequently, the structure of the framework proposed in this study draws from previous studies, as it is essential to examine the connections between various components. However, the previously proposed framework is constrained to tangible robots; consequently, additional literature on empathic interfaces and empathic interactions of intelligent agents was identified and collated according to the purpose of this study (see Table 2 below). Additional concepts were cited from the literature to include the characteristics and details of intelligent agents operating as a system within the borrowed structure.

The components of the proposed initial framework are broadly categorized into the following: the purpose of the intelligent agent, context, user characteristics, AI agent characteristics, and interaction. Firstly, the purpose of the intelligent agent corresponds to the purpose of providing services and the goals of the tasks it performs to meet that purpose. This is an element that must be identified to define the goals to be pursued, and the functions required when designing an intelligent AI. It is also the part where the overall implementation direction can be confirmed.

The next component is context, which encompasses situations and relationships. This component is further divided into four intermediate categories: situation and context (including the task), relationship, space, and time. These elements have been explored in studies that use the concept of layers to describe the flow of smart technology within the home environment or the elements that should be viewed from the user's perspective [46]. The studies that addressed the flow of technology and those that took a user-centered perspective highlighted the same point: the perceived context and surrounding factors related to the user, such as tasks and relationships. These factors are also mentioned in existing product ecosystem models and need to be considered to understand the overall user experience. In this study, we analyzed these factors closely and added them to the components to find customized, empathic interactions [47].

First, the situation and context category sets up detailed factors that characterize the situation, particularly in the case of tasks. Importance indicates how important the situation is based on the user's needs. Accuracy determines whether the situation requires proper information delivery or action performance; the lower the weight of this factor, the more social rather than informational or functional the situation is. Controllability determines whether the situation itself can be controlled and manipulated by an intelligent agent. Situation significance determines how much attention the user needs to pay to the situation they are in. It is a relevant sub-factor because the more urgent the situation, the more likely it is that empathic interactions, such as the manner of speaking or expression, will be set up differently.

The relationship factor is composed of sub-factors that examine the relationship between the user and the intelligent agent. The first sub-factor is similarity between the user and the AI, which is measured as the degree to which the user's image type is similar to the AI's image type and whether they can feel a sense of unity. The intimacy sub-factor is concerned with the closeness between user and AI, whilst the affinity sub-factor determines whether the user holds a favorable opinion of the AI. Intimacy may vary according to frequency of use or acceptance of the technology, whilst user perception of AI responses may be positive or negative, and thus influence user liking. The involvement sub-factor depends on the extent of user interaction with the AI. This construct was inspired by a study by Kim (2023), who posited that the anticipated functions or roles may be contingent on the user's level of engagement. This notion was deemed pertinent because the anticipated empathic interaction may also vary depending on whether the user requires basic task execution or social functions.

The spatial dimension encompasses the characteristics of the physical environment in which the intelligent agent is situated. Physical proximity within a space is an essential factor because the type and level of direct interaction can vary depending on whether the agent has a physical presence in the space. Sub-factors were also set up to categorize the type of space: individuality and concentration. Individuality determines whether a space is used individually or shared with many users. Concentration determines whether a space is focused and used for a specific purpose or whether it is transient and used in passing.

As with space, the temporal factor is subdivided into smaller factors. Proximity distinguishes the duration of the user's interaction with the AI. Utilization captures whether time is used for efficiency and practicality or for other values. Repetitiveness was categorized according to whether the task occurs at regular intervals or intermittently.
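The context component described above can be sketched as a small data structure. This is a minimal illustration, not the study's own implementation: the class and field names, the 0 to 1 rating scale, and the `is_social` threshold are all assumptions introduced here. Only the factor names and the three involvement levels (bystander, cooperator, collaborator) come from the text.

```python
from dataclasses import dataclass
from enum import Enum


class Involvement(Enum):
    """User involvement levels named in the case analysis."""
    BYSTANDER = "bystander"
    COOPERATOR = "cooperator"
    COLLABORATOR = "collaborator"


@dataclass(frozen=True)
class Scaled:
    """Base class that checks numeric fields against an ASSUMED 0-1 scale."""

    def __post_init__(self) -> None:
        for name, value in vars(self).items():
            if isinstance(value, (int, float)) and not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [0, 1], got {value}")


@dataclass(frozen=True)
class Situation(Scaled):
    importance: float
    accuracy: float
    controllability: float
    significance: float

    def is_social(self, threshold: float = 0.5) -> bool:
        # A low accuracy weight marks a social rather than an informational
        # or functional situation; the 0.5 cut-off is an assumption.
        return self.accuracy < threshold


@dataclass(frozen=True)
class Relationship(Scaled):
    similarity: float
    intimacy: float
    affinity: float
    involvement: Involvement


@dataclass(frozen=True)
class Space(Scaled):
    physical_proximity: float
    individuality: float   # 1.0 = used individually, 0.0 = shared
    concentration: float   # 1.0 = purpose-focused, 0.0 = transient


@dataclass(frozen=True)
class Time(Scaled):
    proximity: float       # duration of the interaction
    utilization: float     # 1.0 = efficiency-oriented, 0.0 = other values
    repetitiveness: float  # 1.0 = regular, 0.0 = intermittent


@dataclass(frozen=True)
class Context:
    situation: Situation
    relationship: Relationship
    space: Space
    time: Time
```

Keeping each intermediate category in its own type mirrors the four-way split of the context component and lets a single case be rated factor by factor.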

The following characteristics are attributed to users and intelligent agents (see Table 3). These characteristics correspond to the HRI empathy model which was adopted in this study. However, due to the comprehensive scope of the detailed elements, this study added and refined them based on empathy theories in the field of psychology and studies related to empathic interfaces.

Firstly, user characteristics are divided into user disposition and user's characteristic situation. User disposition is a factor that distinguishes identity, and we have categorized it into individual-centered and group-centered. Change acceptance is a factor that distinguishes whether a user is oriented towards stable situations and responses or an achievement-oriented person who prefers stimulation, change, and variety. Relatedness to others is a factor that categorizes whether a user is an extrovert or introvert. Situation control is a factor that categorizes whether a user has some control over a given situation by planning or improvising.

User situation consisted of factors that were optional, as opposed to the factors previously identified in context and situation. First, for the number of users, we distinguished between single and multiple users. This was deemed necessary because in the case of multiple users, we need to define which situations or criteria they should interact with. The user support relationship role was added as a sub-factor because the expected empathy and interaction may vary depending on whether the user is in the position of providing care or receiving care in a care situation.

Intelligent agents exhibit personality traits analogous to those of users, yet an additional transparency factor has been incorporated, enabling the adjustment of their degree of honesty when presenting information. A distinguishing feature of intelligent agents is their categorization based on the presence or absence of a physical form, thereby distinguishing between extrinsic factors and intrinsic characteristics. The specific details of extrinsic and intrinsic factors vary across studies and cases, necessitating their organization without the establishment of an index.

Finally, the overall empathy process was divided into distinct phases, encompassing the interaction between the user and the intelligent agent. The detailed elements of this process are delineated in Table 4. In accordance with extant empathy theories and HRI research, empathic cues were organized into a sequence of recognizing, understanding, interpreting, and responding to their meaning. In addition, we have included technologies that can be utilized in the empathy process to ascertain which empathic cues are matched and connected to each process.

In the recognition stage, empathic cues are generally recognized through sensing and are divided into direct and indirect cues. Direct cues include explicit clues, such as mechanical or electronic manipulations, spoken language, gestures, and sounds. Indirect cues are implicit or latent cues that have implied meaning. These include facial expressions, vital signs, specific actions or tasks, and environmental information such as light, temperature, humidity, and odors. The importance of further interpretation to clarify the situation and message can be inferred from these cues. Techniques used in the recognition phase include recognizing emotions or vital signs through the empathic cues.
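The split between direct and indirect cues can be illustrated with a simple lookup. This is a hedged sketch: the cue labels are shortened from the examples in the paragraph above, and the names `CueKind`, `CUE_KINDS`, `group_cues`, and `needs_interpretation` are hypothetical helpers introduced only for illustration.

```python
from enum import Enum
from typing import Dict, Iterable, List


class CueKind(Enum):
    DIRECT = "direct"      # explicit clues
    INDIRECT = "indirect"  # implicit or latent clues


# Cue labels paraphrase the examples in the text; the exact strings are assumptions.
CUE_KINDS: Dict[str, CueKind] = {
    "manipulation": CueKind.DIRECT,
    "spoken language": CueKind.DIRECT,
    "gesture": CueKind.DIRECT,
    "sound": CueKind.DIRECT,
    "facial expression": CueKind.INDIRECT,
    "vital sign": CueKind.INDIRECT,
    "action or task": CueKind.INDIRECT,
    "light": CueKind.INDIRECT,
    "temperature": CueKind.INDIRECT,
    "humidity": CueKind.INDIRECT,
    "odor": CueKind.INDIRECT,
}


def group_cues(observed: Iterable[str]) -> Dict[str, List[str]]:
    """Group observed cue labels by kind; unrecognized labels go to 'unknown'."""
    groups: Dict[str, List[str]] = {"direct": [], "indirect": [], "unknown": []}
    for cue in observed:
        kind = CUE_KINDS.get(cue)
        key = kind.value if kind is not None else "unknown"
        groups[key].append(cue)
    return groups


def needs_interpretation(groups: Dict[str, List[str]]) -> bool:
    """Indirect cues carry implied meaning, so they call for further interpretation."""
    return bool(groups["indirect"])
```

For example, `group_cues(["spoken language", "temperature"])` places the first label under `"direct"` and the second under `"indirect"`, so `needs_interpretation` returns `True` for that observation.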

The comprehension stage is the interpretation component that facilitates comprehension of the meaning of previously recognized empathic cues. It comprises the empathic understanding method, the tools for empathic understanding, and the skills utilized. In this step, a level of accumulation was added to determine whether the cues were emotional or cognitive and to correlate them with existing data. Emotional empathy involves understanding the emotions and emotional cues that another person is expressing or experiencing. In contrast, cognitive empathy pertains to the ability to comprehend the situation through indirect or direct cues.

The subsequent judgment stage follows on from the understanding stage and involves reasoning and deciding what empathic response to implement. Since this stage is concerned with determining how to express the cues interpreted in the understanding stage, it is divided into emotional empathy and cognitive empathy and consists of the same skills utilized in the other stages. The expression of similar emotions in the judgment phase is indicative of emotional empathy, while the ability to judge the user's thoughts or feelings is indicative of cognitive empathy. The factors that influence cognitive empathy include the robot's role and perspective, which can be considered in the process of making judgments.

The final reaction phase comprises sections addressing the manner and contexts in which empathy is expressed by the intelligent agent. This phase is categorized into reaction methods, reaction types, and enabling technologies. The types of reactions that users can recognize are categorized as follows: verbal empathy, non-verbal empathy, and empathy through environmental changes. Verbal empathy includes text, spatial language, spoken word, and sound effects. Non-verbal empathy encompasses facial expressions conveyed through facial movements, such as eyebrows, eyes, and mouth, images including emojis, tactile responses such as vibration, and movement through body control. The environmental change empathy category includes light, temperature, humidity, and odor. The categorization of reactions was further refined based on the nature of the intelligent agent's response, distinguishing between positive and negative responses. Additionally, factors such as the utilization of technology for the response were organized to ascertain its presence or absence.
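The four stages and the three reaction types described above can be sketched as enumerations. This is an illustrative encoding under stated assumptions: the identifiers `Stage`, `ReactionType`, `REACTION_CHANNELS`, `next_stage`, and `reaction_type` are hypothetical, and the channel labels are shortened from the examples in the text.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class Stage(Enum):
    """Stages of the empathy process, in the order given in the text."""
    RECOGNITION = 1
    COMPREHENSION = 2
    JUDGMENT = 3
    REACTION = 4


class ReactionType(Enum):
    VERBAL = "verbal"
    NON_VERBAL = "non-verbal"
    ENVIRONMENTAL = "environmental change"


# Channel labels paraphrase the examples in the text; exact strings are assumptions.
REACTION_CHANNELS: Dict[ReactionType, Tuple[str, ...]] = {
    ReactionType.VERBAL: ("text", "spatial language", "spoken word", "sound effect"),
    ReactionType.NON_VERBAL: ("facial expression", "image", "vibration", "body movement"),
    ReactionType.ENVIRONMENTAL: ("light", "temperature", "humidity", "odor"),
}


def next_stage(stage: Stage) -> Optional[Stage]:
    """Return the stage that follows `stage`, or None after the reaction stage."""
    order = list(Stage)  # Enum iteration preserves definition order
    index = order.index(stage)
    return order[index + 1] if index + 1 < len(order) else None


def reaction_type(channel: str) -> Optional[ReactionType]:
    """Look up which reaction type a channel belongs to, if any."""
    for kind, channels in REACTION_CHANNELS.items():
        if channel in channels:
            return kind
    return None
```

Note that light, temperature, humidity, and odor appear both as indirect cues in the recognition stage and as environmental reaction channels, so the same physical channel can serve as input and output of the process.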

As illustrated in Figure 2 below, the components and details of the initial framework described above are rendered visually. In a manner analogous to the HRI empathy process model borrowed in this study, arrows have been included to demonstrate the flow. Furthermore, the detailed factors that can be rated on a scale are expressed as indicators, while the parts that require further explanation are left unrestricted so that they can be written freely.

The initial framework proposed in this study aligns with traditional product ecosystem models in terms of its structure, encompassing users, context, and interactions. Product ecosystem models serve as a framework for comprehending the interactions between users and products, as well as the interactions that arise from products influencing user behavior [67, 68]. Such a model helps in understanding the experience of using a product at the product or system level and can support the design process to enhance the user's experience. The empathy process that this study addresses is in the same vein, as it aims to provide an enhanced experience through the empathic responses that users need when using home services. The components of the product ecosystem model and the components of the framework of this study are similar, which supports the need to identify them from a user experience perspective. However, further validation is required to determine whether all the components in the initial proposed framework are appropriate and whether anything is missing. The initial framework is shown in Figure 2.

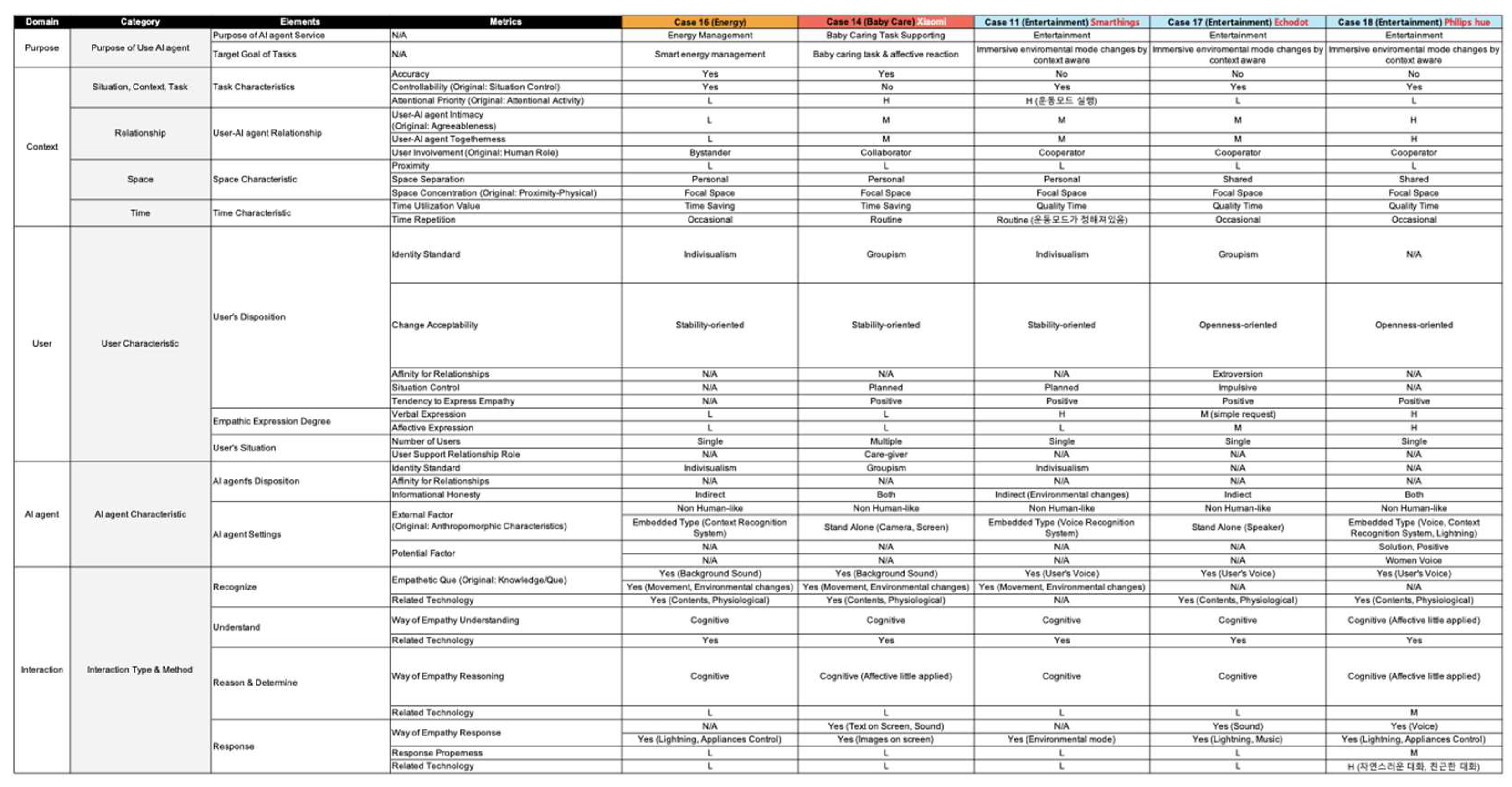

4.2. Advancing the Framework by Applying Industry Practices

This section refines the initial Empathic Human-Agent Interactions framework proposed earlier, with the objective of determining the validity of the organization of the components and identifying any components that are missing or require refinement. Industry examples are applied to the framework to reflect each component and visually illustrate the interaction between AI agents and users. Given that this study proposes a framework for identifying appropriate empathic interactions in the realm of home services, cases of intelligent agents in the home environment were selected. A total of 24 cases were selected, comprising future scenarios and products on the market in which intelligent agents are applied to robots and home appliances by various companies, including leading IT companies. However, the scenarios and product features in the examples do not include very detailed tasks, as they cover many areas of home services. Therefore, we extracted only what is clearly stated or understood in the scenarios and applied it to the framework to see whether the cases show similar behavior. Furthermore, where scenarios encompassed multiple functions and situations, these were examined and applied to the framework individually. As a consequence of this process, the cases were subdivided into 30 individual cases, which were then reflected in the framework. The applicable points for each element were checked and the cases were organized as shown in Figure 3 below.

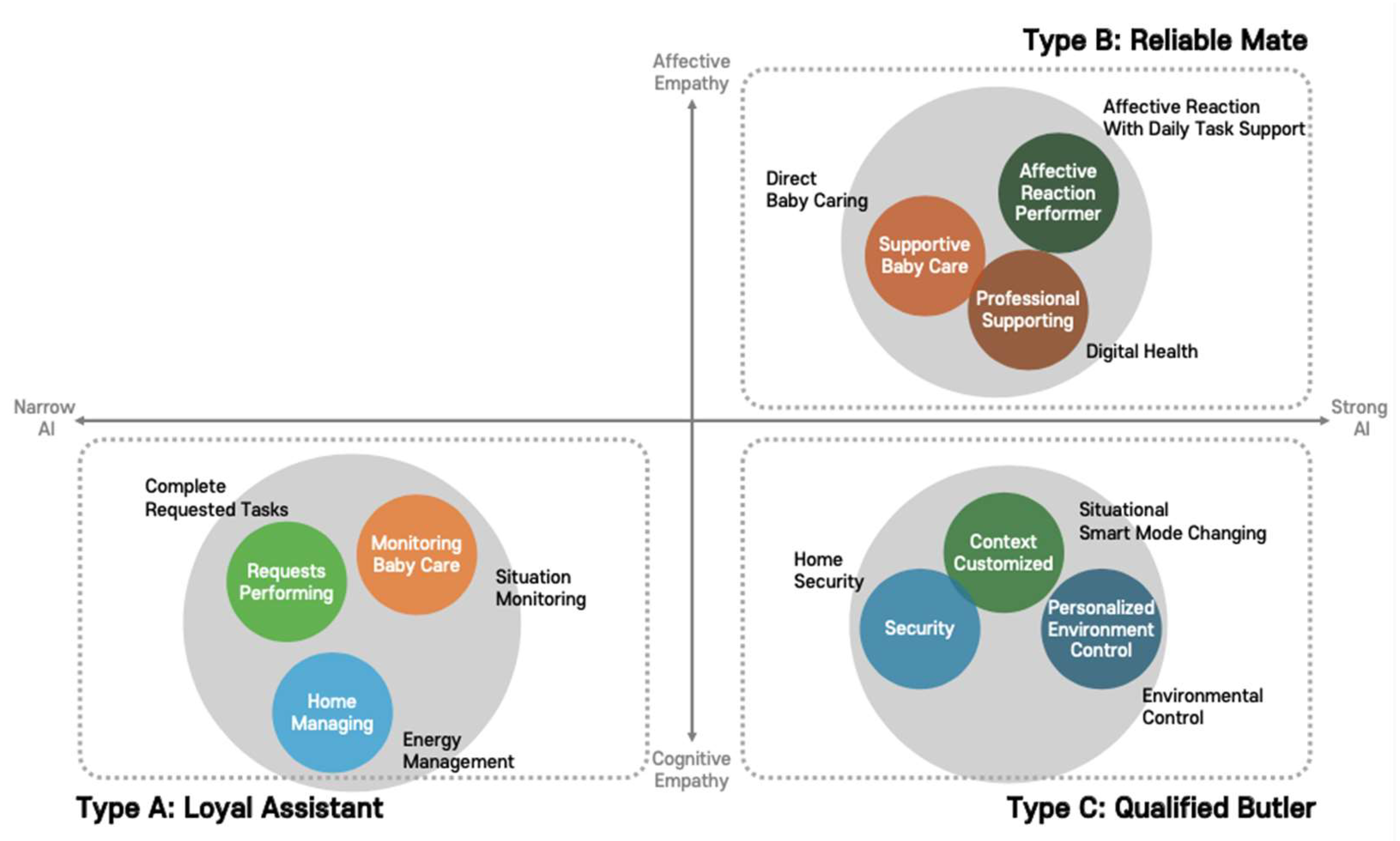

Following a thorough examination of the outcomes derived from the application of the framework in reflecting upon the cases, it was ascertained that analogous patterns and characteristics could be identified, even in instances where the service areas differed. This finding paved the way for the classification of these cases. To facilitate the classification process, an initial classification axis was established, with this classification being based on trends that exhibited similarity. The present study confirmed the variability of the empathy interaction method in accordance with the service area, function, context, and task within the home service domain. Consequently, the most efficacious approach to achieving clarity appeared to be the division of empathy areas. The initial axis was thus established to delineate emotional empathy from cognitive empathy. The subsequent axis was positioned to reflect the degree of technological advancement in accurately identifying and executing situations, contexts, and other parameters. This was chosen as a discernible criterion in cases and aligned with the research objective.

Classifying the cases along these axes produced Figure 4, which illustrates the distinct categories. The first category is designated as the 'Loyal Assistant' type and encompasses tasks executed solely upon user request. This category includes childcare assistance, wherein the assistant observes the situation and context on behalf of the user and notifies them of immediate tasks. Additionally, it encompasses efficient energy management assistance within the domestic environment. The cases that fall under this category primarily respond by accurately recognizing the situation, thus demonstrating a greater focus on cognitive empathy as opposed to emotional empathy. Additionally, these cases are constrained to tasks that monitor the situation or address straightforward user requests, resulting in a comparatively lower level of AI than in other cases and consequently a low ranking. A distinguishing feature of this category is the inclusion of scenarios where the user's inability to articulate their needs results in suboptimal performance. Consequently, these cases have been classified within the Low Intelligence category.

The second category is that of the Qualified Butler, which includes cases that effectively manage the overall home environment. The majority of these cases are those that execute functions at the opportune moment to resolve situations that are problematic for the user, such as understanding the context and switching to a customized mode or managing security. In this category, it is evident that it is closely related to cognitive empathy in that it comprehends the overall situation and controls the environment and situation accordingly, akin to the first category. However, this type exhibits a more natural empathy response through accurate situational judgment, context recognition, and analysis than the first type, thus occupying a higher intelligence level.

The final type is Reliable Mate, which refers to cases where the level of intelligence is very similar to that of a human and which, unlike the previous two types, display emotional empathy. Typical examples include digital health, direct assistance with childcare when the user is absent, emotional responses displayed in various forms of empathy, and the involvement of experts to deliver and manage knowledge. These systems are unique in that, unlike other types, they emphasize emotional empathy responses even though they include service areas requiring outstanding expertise. They also include scenarios such as the use of virtual avatars, showing more diverse interaction methods than the other types.
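The two classification axes and the three resulting types can be sketched as a small decision function. This is an assumption-laden illustration rather than the study's procedure: the cases were classified qualitatively, so the numeric intelligence scale, both thresholds, and the names `EmpathyFocus`, `AgentType`, and `classify` are hypothetical. The combination of emotional empathy with low intelligence is not described in the text, so the sketch returns `None` for it.

```python
from enum import Enum
from typing import Optional


class EmpathyFocus(Enum):
    COGNITIVE = "cognitive"
    EMOTIONAL = "emotional"


class AgentType(Enum):
    LOYAL_ASSISTANT = "Loyal Assistant"
    QUALIFIED_BUTLER = "Qualified Butler"
    RELIABLE_MATE = "Reliable Mate"


def classify(
    focus: EmpathyFocus,
    intelligence: float,
    butler_threshold: float = 0.5,  # assumed cut-off between low and higher intelligence
    mate_threshold: float = 0.8,    # assumed cut-off for near-human intelligence
) -> Optional[AgentType]:
    """Map a case onto one of the three types, or None if no type covers it."""
    if not 0.0 <= intelligence <= 1.0:
        raise ValueError("intelligence must lie in [0, 1]")
    if focus is EmpathyFocus.EMOTIONAL:
        # Only near-human, emotionally empathic cases are described in the text.
        return AgentType.RELIABLE_MATE if intelligence >= mate_threshold else None
    if intelligence >= butler_threshold:
        return AgentType.QUALIFIED_BUTLER
    return AgentType.LOYAL_ASSISTANT
```

A monitoring case with a cognitive focus and a low intelligence rating, for instance `classify(EmpathyFocus.COGNITIVE, 0.3)`, falls under the Loyal Assistant type.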

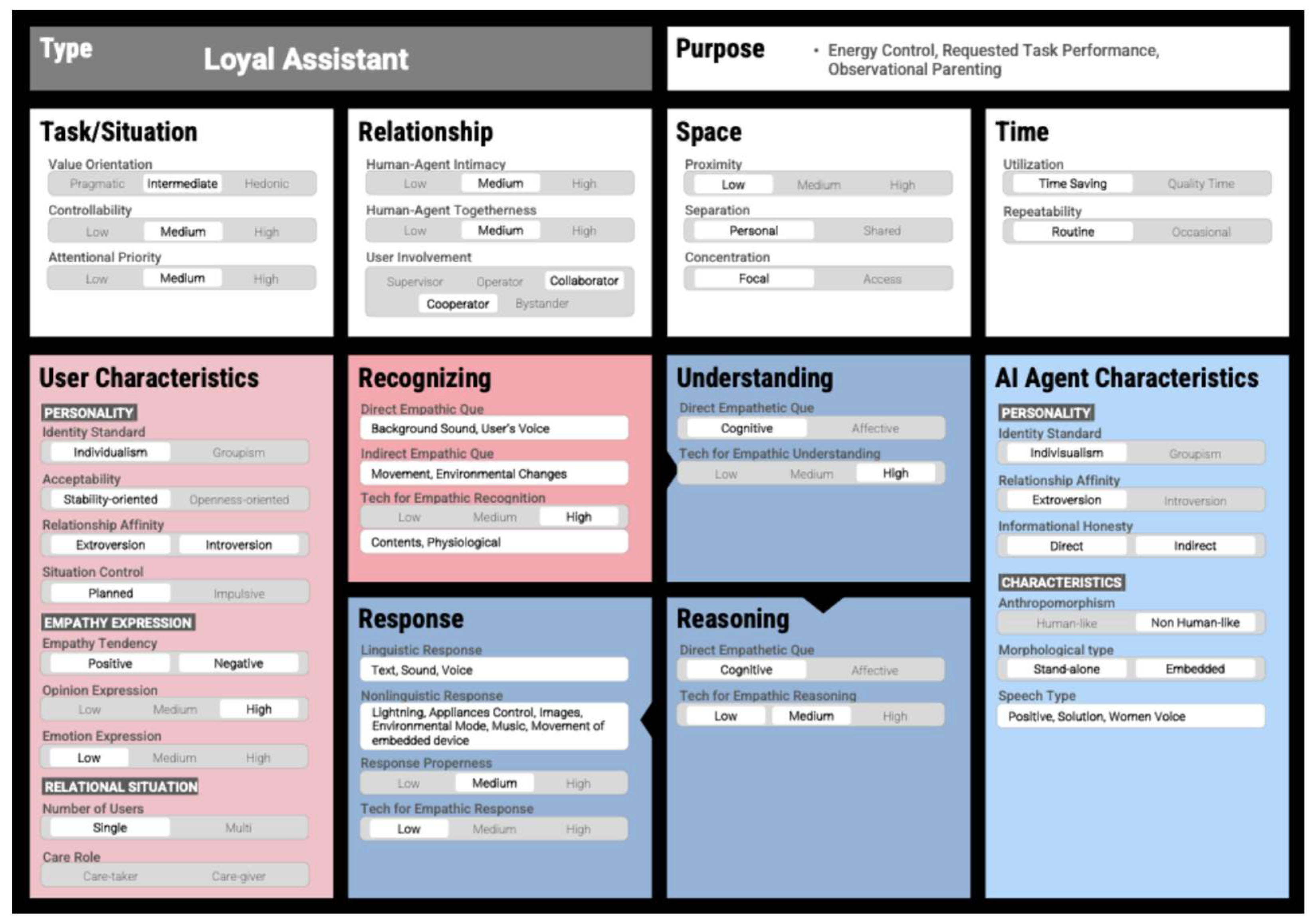

Following a thorough examination of each case and their subsequent classification according to the predetermined criteria, the three types derived were organized according to the initial proposed framework format. This was done to verify the absence of superfluous elements and to ascertain whether any additional verification was required. Given that the same type encompasses diverse service areas, it was visualized in a manner that facilitates the determination of whether it needs to be expressed on a scale or if it needs to be selected in duplicate. The upper part is designed to describe the purpose of using the AI agent, which is expressed by the name of the type and the cases included in the type. Below that is a section that captures the overall context, which is divided into context, relationship, space, and time. The lower left is designed to describe the characteristics of the user and is placed next to the user section so that the recognition, which is the initial stage of the process of empathy interaction, can be seen. The entire sequence is designed to be visible, with the recognition of the AI agent by the user being the ultimate objective. The characteristics of the AI agent are displayed at the bottom right, and are closely related to the understanding, reasoning, and response stages of the empathy interaction process. For clarity, this section is displayed in the same color as the user section, so that the connection from empathy recognition to response can be understood. Furthermore, any ambiguous expressions identified within the nomenclature employed in the initial framework have been replaced with straightforward terms to avoid confusion.

The framework for visualizing the first type, the Loyal Assistant, is shown in Figure 5 above. This type includes cases that monitor the situation and perform the requested tasks, including those that focus on functional aspects such as energy management and those that control entertainment elements. In terms of the situation, the majority of these are tasks that users can control directly; they are incidental tasks in terms of daily life and are located between hedonic and functional tasks. Furthermore, the scenario itself does not constitute an urgent situation and thus does not require significant attention, because it is within the user's capacity to exercise control. The characteristics of the situation are ultimately connected to the relationship between humans and AI agents: since the primary function of the AI agent is to receive a specific task from the user and perform it, the level of intimacy and sense of unity is moderate, and the level of user involvement is either Cooperator or Collaborator. The spatial characteristics exhibited in the scenarios were personalized and focused. The agents automatically identified voice requests and environmental factors and performed tasks, primarily in the form of embedded systems, thereby demonstrating limited physical accessibility within the space. In terms of temporal dynamics, the tasks performed resembled routines with an emphasis on practicality.

Following the identification of the overall scenario in terms of situation, relationship, space, and time to ascertain its characteristics, the flow and characteristics of empathy interaction and interface were examined in the lower part. The process of empathy interaction was identified by arranging the relevant studies referenced when establishing the initial framework in the order of recognition, understanding, reasoning, and reaction. Due to the nature of this type of scenario, the level of recognition technology is very high because it recognizes and resolves situations. The degree of users' expression of intention is high because there are parts that request tasks, but the degree of expression of emotion is very low because the main function is to control the situation and change the environment. This is related to the clues that the AI agent recognizes, and it was found that it mainly recognizes environmental changes, movements, user voice commands, sounds, etc. as clues for empathy. This type encompasses numerous scenarios pertaining to situational awareness, necessitating a high level of proficiency in comprehending contextual nuances. Conversely, the capacity to interpret, reason, and respond empathically is found to be less developed. The efficacy of this system has been demonstrated in scenarios that involve notifications based on straightforward requests or monitoring data. The necessity for adjustment of the environment or performance of tasks following the establishment of context renders the implementation of an embedded system preferable to that of an AI agent endowed with a human-like appearance.

In this section, we set out the direction for establishing empathy interaction within the framework, as demonstrated by representative examples in this category. Firstly, we present an instance of automatic control and adjustment to conserve energy. The user experienced empathy interaction with the AI agent through a brief and rapid notification sound and a text display in the accompanying app. We determined that such interaction is essential in scenarios where regular notifications are necessary or in situations where data is collected and reported. Secondly, in the context of monitoring to facilitate childcare, empathy interaction manifested through light, notification sounds, and on-screen images. Given the urgency characteristic of many situations that arise during monitoring, empathy reactions are typically brief yet impactful. Finally, one case demonstrated a scenario where the agent performs a task or controls a function in response to a request. Because this response to the user's request is primarily conducted through dialogue, it underscores the necessity for an interaction method that integrates seamlessly into the context. This may involve an environmental adjustment that gradually raises the music volume, or the movement of a connected device in a natural manner.

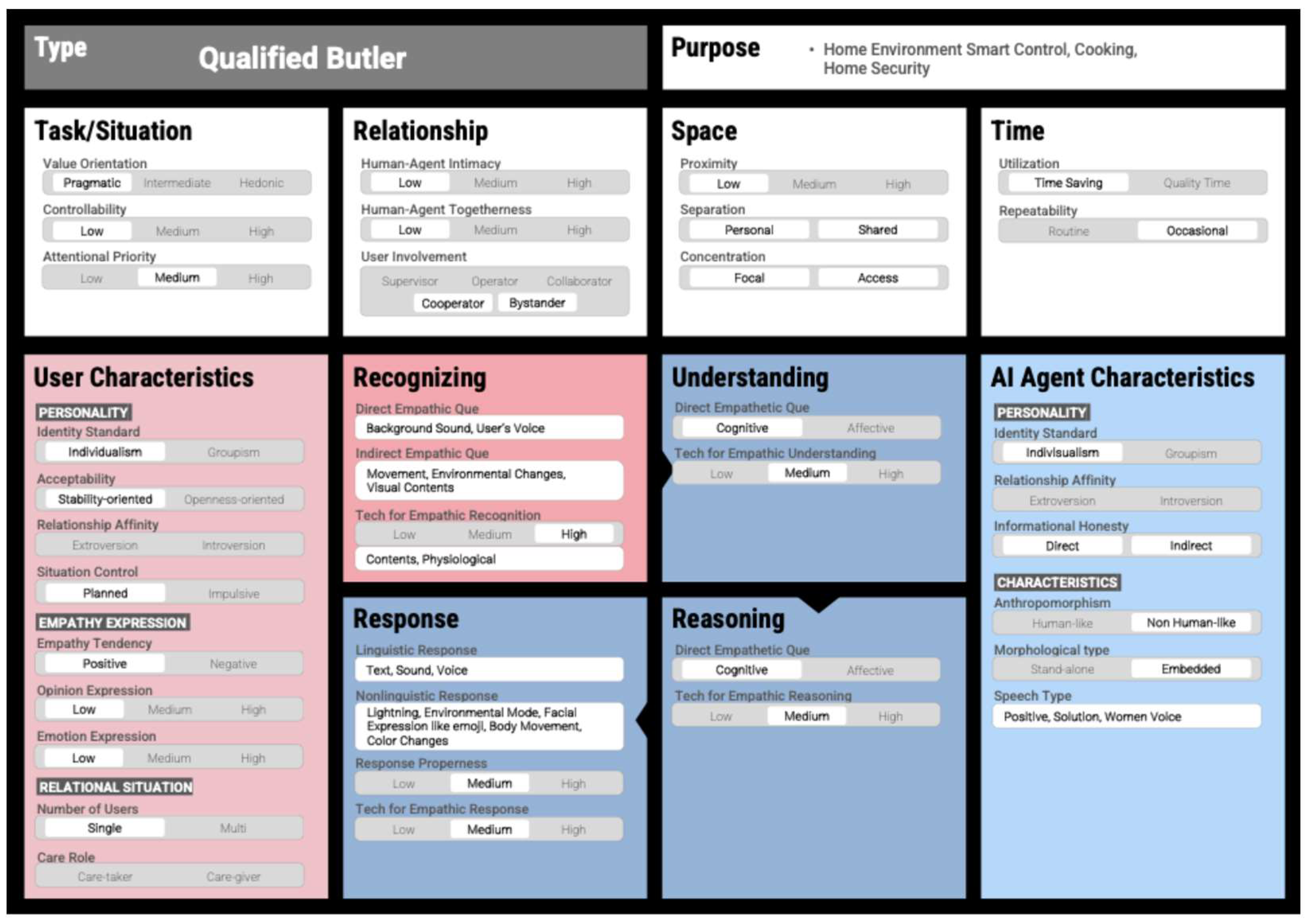

The framework for visualizing the second type, Qualified Butler, is shown in Figure 6 below. In this type, the agent performs tasks by identifying the home environment, as in the first type. However, in contrast to the previous type, the agent performs not only the tasks requested by the user but also, in many scenarios, identifies the environment and situation and controls them smartly in advance. This type was almost entirely focused on functionality. It is characterized by a multitude of automated features that govern the environment, even in the absence of direct user intervention. The second type exhibits a greater number of situations necessitating user intervention, thereby prompting an investigation into the impact of this on empathy interaction. In terms of relationship dynamics, the intimacy and sense of unity between humans and AI agents is minimal, primarily due to the user's role, which is either that of a bystander or a cooperator. In terms of space, these are also cases where the automation function is activated without much user involvement, resulting in low physical accessibility and in both spatial division and concentration being diverse. In terms of time, these were cases where the functional aspect was emphasized, corresponding to the 'Time Saving' category. It was also confirmed that these tasks are not repeated and appear only when necessary, corresponding to the 'Occasional' category.

In this study, we identified the characteristics of this type in terms of situation, relationship, space, and time. We then examined the characteristics exhibited by the empathy interaction flow at the bottom. The recognition stage, which collects empathy clues, included numerous elements that detect changes in the surrounding environment, including the parts that the user requests. In particular, the level of awareness of the user's situation and the types of clues collected are similar to those of the first type. This category also encompasses scenarios where tasks are executed following situational awareness, resulting in elevated levels of context understanding, reasoning, and responsiveness. As these systems primarily identify situations and environments, they are predominantly embedded and lack a physical form. While analogous to the first category, this type demands a more advanced technological capacity to comprehend, reason, and respond, owing to the necessity of handling sophisticated functions and performing higher-level tasks. A notable distinction is the emphasis on time efficiency, while also exhibiting a substantial capacity for empathy responses.

To ascertain the direction in which empathy interaction is set up on the framework, a typical example was reflected upon. Firstly, the home environment control shows different empathy responses depending on the situation, whether it is entertainment or security, and shows customized interactions at the right time and in the right way, showing a higher level of empathy. Specifically, the tablet attached to the device displays a motion as if tilting its head, or it provides the subsequent step in advance on the screen and furnishes the necessary information, thereby demonstrating a highly sophisticated level of empathy. In the home security scenario, if the system were embedded, an emergency would be expressed through a whistle as if it were detected by a person. It has been demonstrated that the system responds in a manner consistent with human behavior; for instance, it does not immediately respond to movement, such as the activation of lighting or the emission of audible signals, in situations where such responses might be expected, such as in the presence of an approaching individual. Consequently, it has been determined that the system should exhibit an interaction that conveys a strong message at the opportune moment.

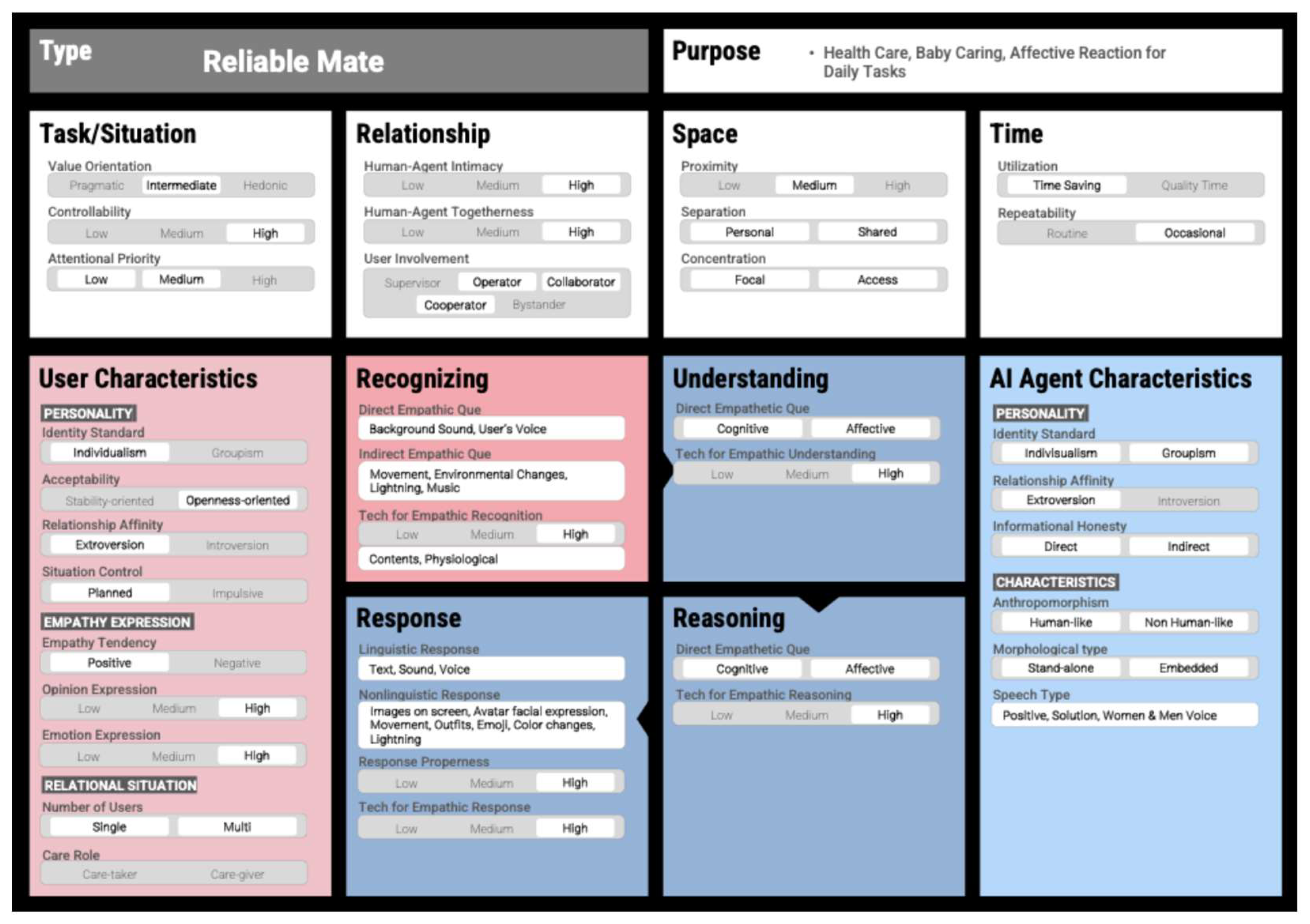

The framework that visualizes the third type, Reliable Mate, is shown in Figure 7 below. Unlike the other two types, this type includes examples of emotional empathy shown in digital health, advanced parenting, and daily tasks. Unlike the other types, this type emphasizes emotional responses and shows that appropriate forms of empathy are needed in healthcare and parenting. In terms of situation, the case uses an emotional empathy approach, but it is closer to functional in terms of performing functional tasks. The user has a high degree of autonomy, with no situations requiring significant attention. In terms of relationships, the level of intimacy and sense of unity between humans and AI agents is notably high, particularly when user engagement is high and they work together as collaborators, communicating closely with each other. As demonstrated in the user engagement, the space was close to the user because they were working together and had a close relationship. The spatial aspect of the environment was found to be of medium size, with both separability and centrality being incorporated into various degrees. In terms of temporal considerations, instances that frequently occurred and did not necessitate the occurrence of an event were found to be conducive to time efficiency.

An examination of the flow of this particular empathic interaction reveals that the level of recognition technology exhibited was notably elevated. This is primarily attributable to the incorporation of tasks such as direct user requests, communication with the AI agent, and the balancing stage, which collectively contributed to the comprehensive recognition process. The identified cues encompassed a balanced distribution of both direct and indirect factors, including the user's behavior, voice, and environmental changes. The perceived cues exhibited minimal distinction from the initial two types; however, a discernible divergence emerged in the manner they were interpreted and responded to. This category demonstrated a high degree of proficiency in comprehending both cognitive and emotional cues, a proficiency that was further exemplified in the subsequent reasoning stage, which was predicated on the comprehended content. A diverse array of empathy reactions was exhibited in the ensuing reaction stage, a feature that stood in contrast to the preceding two types, encompassing text, images, environmental control, and virtual avatars. This was attributed to the unique characteristics of the AI agent, including its form and verbal expression, and the high emotional response, which differed from the previous two types.

The representative examples reveal a more diverse set of empathy methods. First, the home healthcare case demonstrated an empathic interaction in which the user was immersed in the situation and felt as if they were being treated by a doctor: a virtual avatar conveyed a doctor's professionalism, for example through its clothing and glasses. Although virtual environments have mostly been used for entertainment, this case shows that they can also be used in professional domains to convey a high level of empathy, and it can serve as a reference point for other forms of empathy. The second example concerns parenting and, in contrast to the monitoring-type parenting observed in the first type, shows a high level of empathy. Here, the voice is matched to the gender of the primary caregiver, which fosters a sense of realism and emotional resonance. This interaction integrates an emotional empathy method into professional parenting support and underscores the importance of tailoring methods to the user's context and patterns. The third example is daily task assistance, which again illustrates the value of emotional empathy in creating human-like interactions. It was observed mainly in stand-alone robots that exhibited human-like behavioral reactions such as nodding and tilting their heads. This suggests that non-verbal empathic interactions play a significant role in communicating with users when providing information and performing tasks.

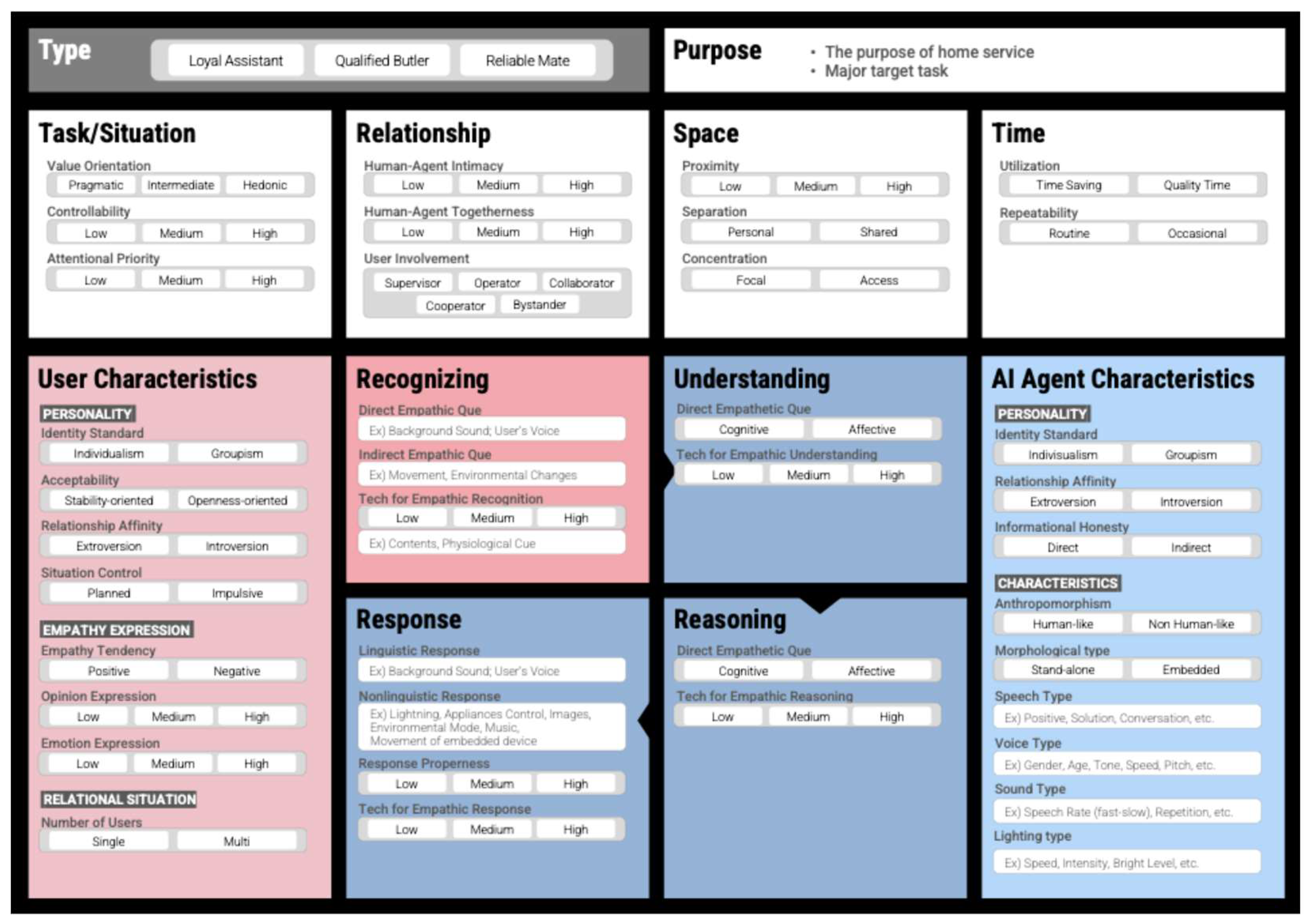

4.3. Final Framework Proposal: Empathic HAX (Human-Agent Interaction) Canvas

The industry cases were applied to the framework in order to identify the elements that should be considered in the final canvas. The final canvas-type framework is shown in Figure 8 below.

First, it was confirmed that the level of empathy depends on the extent to which users express their own intentions and emotions. In the emotional empathy type, the level of empathy increased when users expressed their thoughts or emotions to the AI agent as if they were addressing a real person. In contrast, the first and second types primarily recognize the situation and respond with empathy by adjusting the environment appropriately. This finding suggests that the user's expression of intention and emotion is a key factor in empathic responses, and that this element is essential for establishing effective empathic interaction and response methods.

The next step was to refine names and clarify classification criteria in places where the criteria appeared ambiguous, in order to make the framework clearer. The first change concerns the external form of the AI agent. An agent is classified according to its resemblance to a human in both appearance and behavior, and agents that meet these criteria are designated as 'Human-like'. This change was made to avoid confusion caused by inconsistent naming within the framework. We then added further explanations to the empathy interaction process. Specifically, we added a definition of the element that indicates the level of technology used by the AI agent in the understanding and reasoning stages, so that anyone viewing the canvas can grasp it immediately. The response stage is intended to indicate the appropriateness of the response and the level of response technology; because this is difficult to grasp at a glance, a definition was added to the canvas here as well. The recognition stage, however, already has a section where empathy cues can be entered and is named for the level of the related cognitive skills, so, unlike the other empathy stages, no additional content was added.

In the AI agent characteristics area, several elements related to reactions were added. One case showed that background sound is played at a fast tempo in urgent situations. Background sound type was therefore added to the characteristics section as a factor that influences, and correlates with, empathic reactions. The next addition concerns types of light expression. Light was found to be expressed differently across scenarios, for example dim lighting along the user's movement path versus a welcome message rapidly projected onto the floor in the area where the user is greeted. These response-related features were added so that they can be considered more precisely when setting up empathic interactions. In addition, matters such as the intensity and manner of voice or sound expression often required more detailed explanation. These fields were therefore made descriptive rather than selective, so that users can freely document the voice and background sound they receive.

In the preceding case analysis stage, the options were presented as a selection type. In the final canvas, however, the AI agent feature area and the empathy recognition and empathy response stages were changed so that entries are no longer chosen at a glance from preset options; instead, they are described freely, which allows appropriate empathic interactions to be examined. In the other areas, options can be selected in the form of action buttons to make the canvas easier to use, as shown in Figure 9 below.