1. Introduction

Standardized object datasets like the YCB Object and Model Set [1] are crucial for benchmarking in computer vision and robotics, providing consistent evaluation metrics and reproducible results. While the YCB set’s 77 objects across food, tools, and task items have proven valuable for tasks from recognition to pose estimation, international researchers often face accessibility challenges when attempting to acquire the complete set. Additionally, there remains a notable gap in standardized datasets for supermarket objects with varying deformability and mass distributions.

In response to these challenges, we introduce the Australian Supermarket Object Set (ASOS)¹ (Figure 1), designed for accessibility and relevance to real-world scenarios. This dataset contains 50 objects commonly found in Australian supermarkets, along with their 3D textured meshes. These objects were chosen based on their affordability, availability, and representativeness of typical household items, ensuring their usability for both real-world and simulated environments. Unlike many existing datasets that rely on synthetic models or lack real-world counterparts, the supermarket object set provides tangible objects that can be easily sourced locally, overcoming barriers of cost and availability while addressing practical issues like deformability and mass distribution that are difficult to replicate in simulations.

The availability of this object set addresses a gap in the existing literature: while datasets like LINEMOD [2] and BigBIRD [3] focus on pose estimation and RGB-D tasks, their reliance on online meshes or specialized items limits their real-world applicability. Similarly, while the YCB and ACRV datasets [4] enable real-world object benchmarking, their emphasis on general household items leaves room for a domain-specific dataset that captures objects encountered in daily shopping contexts. The Australian Supermarket Object Set bridges this gap by offering a cost-effective, practical, and diverse collection of items that can be leveraged to evaluate robotics and computer vision algorithms in real-world and simulated environments.

This paper outlines the design principles, data collection methodology, and metadata associated with the supermarket object set. By providing an open-access dataset and addressing challenges like sim-to-real discrepancies, we aim to advance reproducibility and innovation in robotics and vision research.

Table 1. Object datasets present in the literature. In the "Real objects?" column, we consider objects to be "3D printable" if the dataset presented the 3D-printed models as the object dataset, as opposed to a dataset containing 3D-printable objects that would produce objects with different properties than those in the dataset. Similarly, we generally consider real objects to be available if they can be obtained in their original form without much effort and for a reasonable monetary cost, based solely on the information provided by the authors of the dataset.

| Dataset Name | Type | Theme | Number of objects | Real objects? |
|---|---|---|---|---|
| PSB [5] | Meshes | General | 1814 | |
| 3DNet [6] | Meshes | General | 3433 | |
| KIT database [7] | Textured meshes, stereo RGB images | Household | 145 | |
| LINEMOD [2] | Textured meshes, RGB images with poses | General | 15 | |
| BigBIRD [3] | Textured meshes, RGB-D images | Household | 125 | |
| ModelNet [8] | Meshes | General | 151k | |
| Rutgers APC [9] | Textured meshes, RGB-D images with poses | Household | 25 | |
| ShapeNetCore [10] | Meshes with WordNet annotations | General | 51k | |
| YCB [1] | Textured meshes, RGB-D images, shopping list | Daily life | 77 | ✓ |
| ACRV [4] | Textured meshes, shopping list | Household | 42 | ✓ |
| MVTec ITODD [11] | Meshes, RGB-D images with poses | Industrial | 28 | |
| T-LESS [12] | Textured meshes, RGB-D images with poses | Industrial | 30 | |
| RBO [13] | Articulated meshes, RGB-D images | Articulation | 14 | |
| TUD-L & TYO-L [14] | Textured meshes, RGB-D images with poses | Varied lighting | 24 | |
| ContactDB [15] | Meshes with contact maps, RGB-D & thermal images | Contact | 3750 | 3D printable |
| HomebrewedDB [16] | Textured meshes, RGB-D images with poses | Household, industry, toy | 33 | |
| EGAD [17] | Meshes, 3D printing instructions | Generated | 2282 | 3D printable |
| Household Cloth Object Set [18] | Meshes, microscopic images, object details | Cloth | 27 | ✓ |
| ABO [19] | Textured meshes with metadata including WordNet annotations, RGB images, physically-based renders | Amazon.com household | 7953 | |
| AKB-48 [20] | Articulated meshes | Articulation | 2037 | |
| GSO [21] | High-quality textured meshes with metadata | Household | 1030 | |
| HOPE [22] | Textured meshes, shopping list | Toy Grocery | 28 | ✓ |
| MP6D [23] | Meshes, RGB-D images | Industrial | 20 | |
| ObjectFolder [24,25] | Neural representations that can be used to generate visual appearance, impact sounds and tactile data | Multisensory | 1000 | |
| PCPD [26] | RGB-D images | Power grid | 10 | |
| TransCG [27] | Meshes, RGB-D images | Transparent | 51 | |
| Ours | Textured meshes, shopping list | Supermarket | 50 | ✓ |

2. Related Works

Object sets have been widely used as benchmarking tools for various tasks. One of the oldest and still commonly used object sets is the Princeton Shape Benchmark [5], which focuses on a range of geometries with semantic labels indicating their purpose. However, this object set only provides untextured meshes and is primarily used for shape-based tasks such as geometric matching. Other object sets like 3DNet [6], ModelNet [8], and ShapeNet [10] emphasize geometrical properties and lack texture. While these sets contain semantically labeled CAD models, a major limitation is that there are no guarantees about how their models correspond to real-world objects. These object sets are usually curated by humans to eliminate unrealistic geometries and ensure correct semantic labels, but many of the models were synthetically constructed and so may not map to real-world objects. Additionally, the absence of texture hinders the integration of visual cues for tasks like segmentation and pose estimation.

Many other object sets have been proposed for benchmarking object detection and pose estimation, among other tasks, that consist of textured object models with direct real-world analogs. Early object sets with these features include LINEMOD [2], the KIT database [7] and BigBIRD [3]. More recently introduced datasets improve on these early benchmarks, such as GSO [21], which consists of much higher quality scans, and ABO [19], which contains a wide range of realistic textured meshes. Many object sets have also been proposed that focus on a specific task and include extra data directly relevant to that task. Object sets designed primarily for pose estimation, such as the Rutgers APC RGB-D dataset [9], TUD-L, TYO-L [14] and HomebrewedDB [16], contain RGB-D images annotated with object poses in addition to textured object meshes.

All object sets mentioned so far consist of meshes that are only accessible online. While many of these object sets may have been constructed by scanning real-world objects, there is no easy way to access the original objects used to construct them in order to perform real-world tests. This is especially an issue for robotics tasks, where direct interaction with the object is commonplace, and there can often be a large sim-to-real gap because many properties of real-world objects, such as surface roughness and deformability, are not accounted for by the simulated meshes. To rectify this issue, object sets have been introduced that aim to provide access to the real-world objects corresponding to the meshes in the datasets, for use in robotic benchmarking of tasks like grasping. Two primary examples of such object sets are the YCB Object and Model Set [1] and ACRV [4], which consist of household objects and come with shopping lists that allow researchers to easily purchase the real-world objects.

3. Supermarket Object Set

The Supermarket Object Set is a collection of 50 household items that can be easily obtained from Coles, a major Australian supermarket chain. This object set data is accessible online². Each object in the set is accompanied by a high-quality 3D water-tight mesh, as well as detailed information about its mass and dimensions. This object set is specifically designed to facilitate benchmarking of robotic manipulation and computer vision tasks, providing researchers with accessible and common objects for evaluation. The inclusion of high-quality meshes in the Supermarket Object Set enables accurate simulation of real-world objects, allowing for benchmarking of manipulation and vision techniques. However, it is important to note that certain properties of real objects, such as flexibility, deformability, and durability, are challenging to simulate effectively. Additionally, properties like mass distribution are difficult to measure accurately for real objects. The accessibility of the real-world object set provides an opportunity to compare algorithms in these hard-to-simulate circumstances.

3.1. Object Choices

The Supermarket Object Set comprises 50 household items categorized into 10 different categories, with each category containing between 4 and 6 objects. The properties of these objects, including shape, size, and weight, can be found in Table 2, and the objects are depicted in Figure 2. The selection of objects for the Supermarket Object Set was guided by several criteria:

3.2. Cost

The chosen objects in the Supermarket Object Set are easily obtainable on a budget. This is achieved in three ways: Firstly, all objects can be obtained from Coles, a major Australian supermarket chain, making in-person acquisition simple for local researchers. Secondly, the objects are selected as cheap, generically branded items. Lastly, they are non-perishable and robust, reducing the need for frequent replacement. As a result, the Supermarket Object Set is easily accessible, cost-effective, and durable.

3.3. Commonality

The objects in the Supermarket Object Set are chosen from the most commonly purchased generic items at Coles, making the object set representative of standard household objects in Australia. Algorithms that perform effectively on this object set are more likely to exhibit better generalization to real-world Australian settings compared to randomly selected object sets.

3.4. Shape, Size and Weight

The graspability of objects is influenced by their size, shape, and weight [17]. To ensure a well-balanced dataset, the objects in the Supermarket Object Set are chosen to cover a diverse range of shapes and sizes. The object categories are explicitly defined based on these properties. For most common household objects, shapes can be categorized as either boxes or cylinders. Therefore, the object set includes separate categories for regular cylinders (e.g., cans), irregular cylinders (e.g., sauce bottles), and boxes (e.g., toothpaste boxes). To ensure size diversity among the selected boxes, separate categories for small and large boxes are included. This distinction is not necessary for cylinders as it was found that the selection criteria for the boxes already provided a wide range of sizes. The weight of an object is also a crucial factor in determining its graspability. Objects in the Supermarket Object Set are carefully selected to represent the range of weights typically encountered in a domestic setting. The dataset includes objects with a diverse set of weights, ranging from light objects weighing a minimum of 18 g to heavy objects weighing a maximum of 1458 g. The maximum weight is chosen to remain below the maximum payload for most standard robotic manipulators.
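The payload argument can be made concrete with a small check. This is only an illustrative sketch: the 18 g and 1458 g limits come from the text, while the arm payload, the gripper mass, and the helper name `within_payload` are hypothetical values chosen for the example.

```python
# Mass range of the object set, as stated in the text (grams).
MIN_MASS_G = 18
MAX_MASS_G = 1458


def within_payload(object_mass_g: float, payload_kg: float,
                   gripper_mass_kg: float = 0.0) -> bool:
    """Return True if the object fits in the payload left over after the gripper."""
    available_g = (payload_kg - gripper_mass_kg) * 1000.0
    return object_mass_g <= available_g


# Hypothetical arm: 3 kg rated payload carrying a 0.9 kg gripper.
print(within_payload(MAX_MASS_G, payload_kg=3.0, gripper_mass_kg=0.9))
```

Because the gripper counts against the rated payload, the heaviest object is the one worth checking before running real-world grasping trials.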

3.5. Variety

The objects in the Supermarket Object Set are chosen to be representative of a range of household items used in various tasks. This includes non-perishable food items, drinks, cleaning goods, personal hygiene products, and health items. The dataset intentionally includes items with outlier shapes or properties, such as deformable biscuit packets or irregularly shaped spray bottles, to enhance its real-world applicability. The objects are categorized into 10 categories based on their shape, including boxes, cylinders, large objects, and packets.

3.6. Data Collection Methodology

To construct the object meshes, a Structure-from-Motion approach called COLMAP [

28,

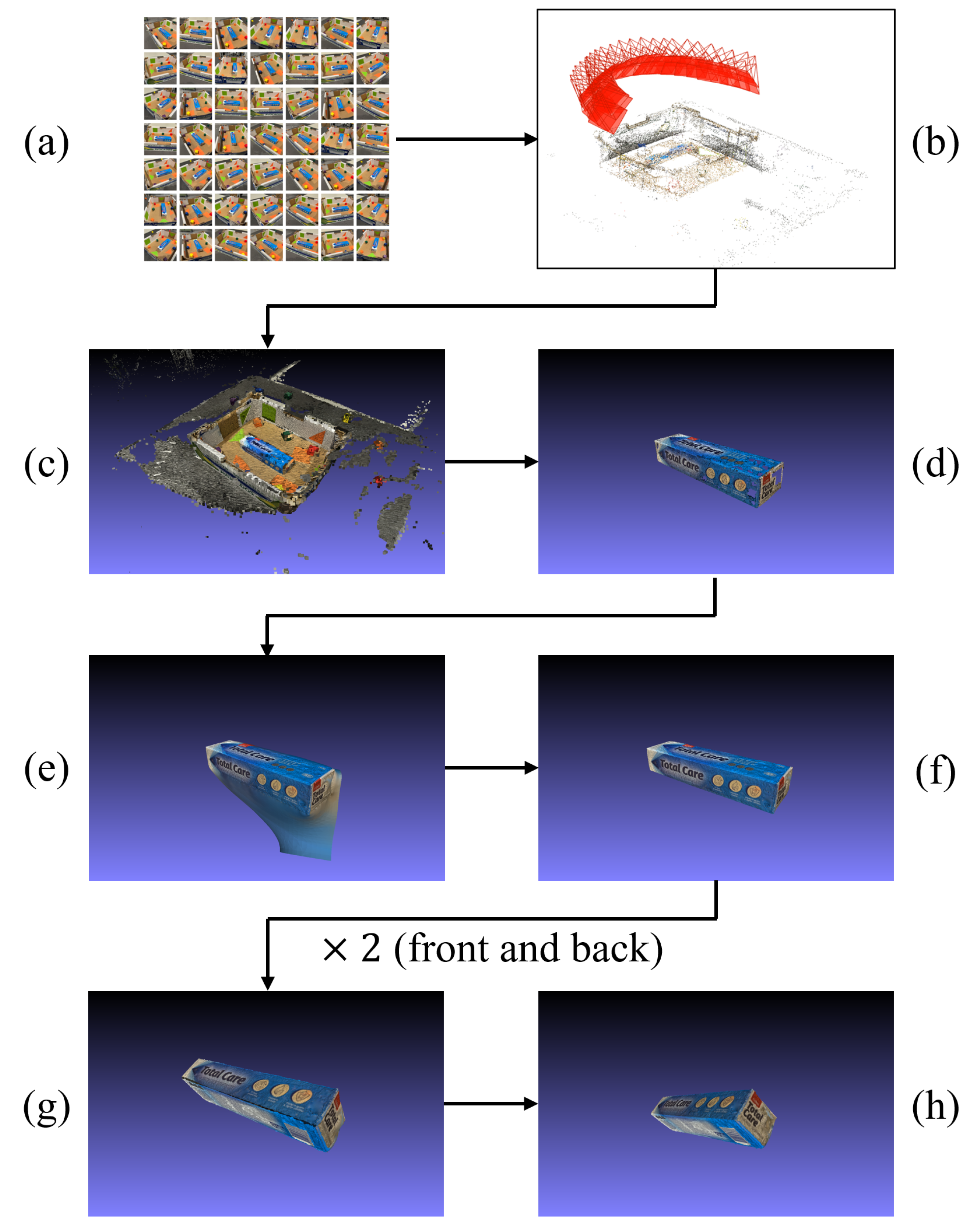

29] was utilized. The mesh construction process for each object involved the following steps. A diagram illustrating each step of the data collection and post-processing procedure can be found in

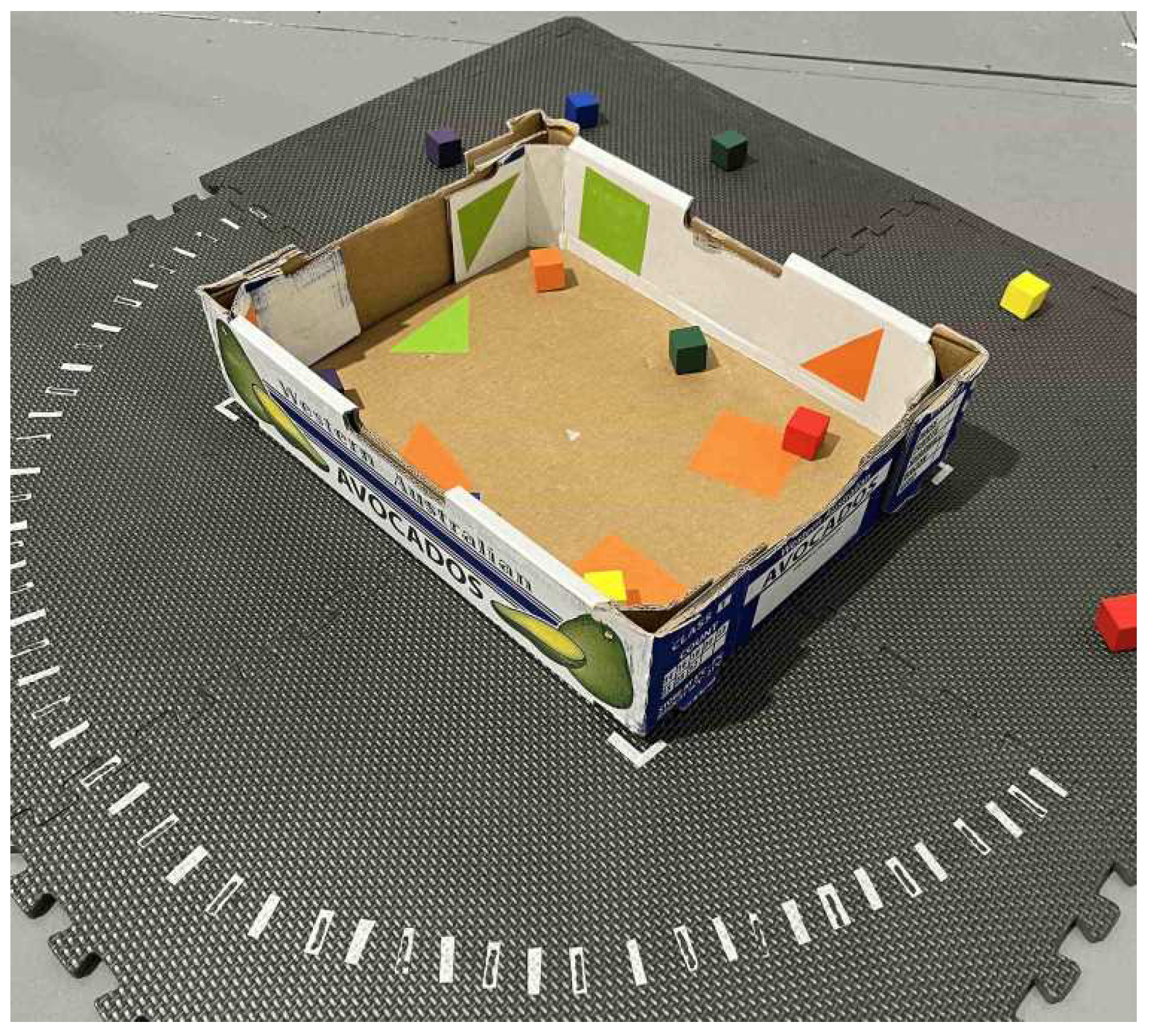

Figure 4. First, the object was placed in a feature-rich box, as depicted in

Figure 3. The box contained various colored shapes, facilitating easy feature detection and matching using SIFT [

30] and RANSAC [

31]. Next, the front and side of the object were imaged using an iPhone 13 mini from 25 different views, covering a semicircle around the object. For each view, a photo of the scene was taken, resulting in a total of 50 photos captured for each object (25 views × 2 sides). This is depicted in

Figure 4a and

Figure 4b. A high-quality point cloud of the scene was then reconstructed using COLMAP (

Figure 4c). The object was isolated and cleaned using Meshlab tools (

Figure 4d). Subsequently, screened Poisson surface reconstruction [

32] was employed to create a watertight mesh (

Figure 4e). This process introduced some artifacts that were subsequently cleaned (

Figure 4f). As the bottom of the object, which rests on the table, was not visible and therefore not imaged, the resulting mesh was incomplete. To address this issue and fully reconstruct the mesh, the object was flipped to expose the previously unseen faces, and the aforementioned steps were repeated to generate a second mesh. The two meshes were combined using point-based gluing utilising Iterative Closest Point (ICP) algorithm [

33] (

Figure 4g). This process returns a high-quality water-tight mesh of the object from the object set (

Figure 4h). This process was undertaken for all 50 objects in the object set to collect our dataset.
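The COLMAP stage of the procedure above can be sketched as a sequence of command-line calls. The sub-commands and flags follow COLMAP's documented command-line interface, but the directory layout (`images/`, `database.db`, `sparse/`, `dense/`), the choice of exhaustive matching, and the helper name `colmap_commands` are assumptions made for illustration; the text does not state which COLMAP settings were used.

```python
from pathlib import Path


def colmap_commands(workspace: str, colmap_bin: str = "colmap") -> list[list[str]]:
    """Build (but do not run) a typical COLMAP sparse + dense reconstruction."""
    ws = Path(workspace)
    images = ws / "images"        # assumed location of the 50 photos of one object
    database = ws / "database.db"
    sparse = ws / "sparse"        # must exist before the mapper is run
    dense = ws / "dense"
    return [
        # SIFT feature detection on every photo.
        [colmap_bin, "feature_extractor",
         "--database_path", str(database), "--image_path", str(images)],
        # Pairwise matching with geometric (RANSAC-based) verification.
        [colmap_bin, "exhaustive_matcher", "--database_path", str(database)],
        # Incremental Structure-from-Motion: camera poses and a sparse cloud.
        [colmap_bin, "mapper", "--database_path", str(database),
         "--image_path", str(images), "--output_path", str(sparse)],
        # Undistort images in preparation for multi-view stereo.
        [colmap_bin, "image_undistorter", "--image_path", str(images),
         "--input_path", str(sparse / "0"), "--output_path", str(dense),
         "--output_type", "COLMAP"],
        # Dense depth estimation (requires a CUDA-enabled COLMAP build).
        [colmap_bin, "patch_match_stereo", "--workspace_path", str(dense),
         "--workspace_format", "COLMAP"],
        # Fuse the depth maps into a single dense point cloud.
        [colmap_bin, "stereo_fusion", "--workspace_path", str(dense),
         "--workspace_format", "COLMAP", "--input_type", "geometric",
         "--output_path", str(dense / "fused.ply")],
    ]


for cmd in colmap_commands("scan_workspace"):
    print(" ".join(cmd))
```

Each command list could be passed to `subprocess.run(cmd, check=True)` once the `sparse/` and `dense/` directories exist. The fused point cloud would then be the input to the isolation, Poisson reconstruction, and ICP gluing steps, which the text describes as being carried out in Meshlab.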

3.7. Metadata

The Supermarket Object Set was constructed from a total of 2,500 images, with each object represented by 50 images. Each image had a resolution of 4032 × 3024 pixels. The resulting object set consists of 50 files in the Polygon File Format (.ply), which can be loaded using popular computer graphics software such as Meshlab or Blender. When uncompressed, the full dataset occupies a storage space of 14.6 GB.
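For a quick inspection without a graphics package, the header of a .ply file can be read directly, since the PLY header is plain text even when the body is binary. The following is a minimal sketch using only the Python standard library; the function name `read_ply_header` and the in-memory sample are illustrative and do not describe the actual files in the dataset.

```python
def read_ply_header(data: bytes) -> dict:
    """Parse a PLY header and return the storage format and element counts."""
    header, sep, _ = data.partition(b"end_header")
    if not data.startswith(b"ply") or not sep:
        raise ValueError("not a PLY file or header is truncated")
    info = {"format": None, "elements": {}}
    for line in header.decode("ascii", errors="replace").splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "format":
            info["format"] = parts[1]          # ascii or binary_*_endian
        elif len(parts) == 3 and parts[0] == "element":
            info["elements"][parts[1]] = int(parts[2])
    return info


# Hand-written sample header (a cube-sized mesh), not taken from the dataset.
sample = (
    b"ply\n"
    b"format binary_little_endian 1.0\n"
    b"element vertex 8\n"
    b"property float x\nproperty float y\nproperty float z\n"
    b"element face 12\n"
    b"property list uchar int vertex_indices\n"
    b"end_header\n"
)
print(read_ply_header(sample))
```

For a file on disk, reading the first few kilobytes in binary mode is usually enough to cover the header, although a header with long comments may need a larger read.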

4. Conclusions

The Australian Supermarket Object Set provides a unique and practical addition to the landscape of standardized benchmarking datasets. By offering a collection of 50 easily accessible supermarket items along with high-quality 3D textured meshes, it addresses key challenges faced by researchers, such as accessibility, affordability, and real-world applicability. The dataset enables the benchmarking of algorithms in both simulated and real-world environments while addressing practical challenges like deformability, mass distribution, and durability that are often neglected in existing datasets.

The accessibility of the object set through a local supermarket chain ensures its usability for Australian researchers, while its diversity of shapes, sizes, and categories makes it relevant for a wide range of robotics and computer vision applications. The systematic data collection process, employing high-resolution imaging and Structure-from-Motion techniques, ensures the reliability and accuracy of the dataset.

By focusing on common household items encountered in daily life, the supermarket object set not only serves as a valuable resource for academic research but also has the potential to improve the generalizability of algorithms in real-world settings. We anticipate that this dataset will play a significant role in advancing reproducibility and fostering innovation in robotic manipulation, object detection, and related fields. Future work may expand this dataset to include additional categories or explore its application in domain-specific tasks like grocery sorting or packaging automation.

Funding

This research was funded by Coles Group, Australia.

Data Availability Statement

References

1. Calli, B.; Singh, A.; Walsman, A.; Srinivasa, S.; Abbeel, P.; Dollar, A.M. The YCB object and model set: Towards common benchmarks for manipulation research. In Proceedings of the International Conference on Advanced Robotics (ICAR); 2015.
2. Hinterstoißer, S.; Lepetit, V.; Ilic, S.; Holzer, S.; Bradski, G.R.; Konolige, K.; Navab, N. Model Based Training, Detection and Pose Estimation of Texture-Less 3D Objects in Heavily Cluttered Scenes. In Proceedings of the Asian Conference on Computer Vision; 2012.
3. Singh, A.; Sha, J.; Narayan, K.S.; Achim, T.; Abbeel, P. BigBIRD: A large-scale 3D database of object instances. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA); 2014; pp. 509–516.
4. Leitner, J.; Tow, A.W.; Sünderhauf, N.; Dean, J.E.; Durham, J.W.; Cooper, M.; Eich, M.; Lehnert, C.; Mangels, R.; McCool, C.; et al. The ACRV picking benchmark: A robotic shelf picking benchmark to foster reproducible research. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA); 2017; pp. 4705–4712.
5. Shilane, P.; Min, P.; Kazhdan, M.; Funkhouser, T. The Princeton shape benchmark. In Proceedings of the IEEE Shape Modeling Applications; 2004.
6. Wohlkinger, W.; Aldoma, A.; Rusu, R.B.; Vincze, M. 3DNet: Large-scale object class recognition from CAD models. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation; 2012; pp. 5384–5391.
7. Kasper, A.; Xue, Z.; Dillmann, R. The KIT object models database: An object model database for object recognition, localization and manipulation in service robotics. The International Journal of Robotics Research 2012, 31, 927–934.
8. Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2015.
9. Rennie, C.; Shome, R.; Bekris, K.E.; de Souza, A.F. A Dataset for Improved RGBD-Based Object Detection and Pose Estimation for Warehouse Pick-and-Place. IEEE Robotics and Automation Letters 2015, 1, 1179–1185.
10. Chang, A.X.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. ShapeNet: An Information-Rich 3D Model Repository. Technical Report arXiv:1512.03012 [cs.GR], Stanford University, Princeton University, Toyota Technological Institute at Chicago, 2015.
11. Drost, B.; Ulrich, M.; Bergmann, P.; Härtinger, P.; Steger, C. Introducing MVTec ITODD - A Dataset for 3D Object Recognition in Industry. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW); 2017; pp. 2200–2208.
12. Hodaň, T.; Haluza, P.; Obdržálek, Š.; Matas, J.; Lourakis, M.; Zabulis, X. T-LESS: An RGB-D Dataset for 6D Pose Estimation of Texture-less Objects. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV); 2017.
13. Martín-Martín, R.; Eppner, C.; Brock, O. The RBO dataset of articulated objects and interactions. The International Journal of Robotics Research 2019, 38, 1013–1019.
14. Hodaň, T.; Michel, F.; Brachmann, E.; Kehl, W.; Glent Buch, A.; Kraft, D.; Drost, B.; Vidal, J.; Ihrke, S.; Zabulis, X.; et al. BOP: Benchmark for 6D Object Pose Estimation. In Proceedings of the European Conference on Computer Vision (ECCV); 2018.
15. Brahmbhatt, S.; Ham, C.; Kemp, C.C.; Hays, J. ContactDB: Analyzing and Predicting Grasp Contact via Thermal Imaging. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2019; pp. 8701–8711.
16. Kaskman, R.; Zakharov, S.; Shugurov, I.; Ilic, S. HomebrewedDB: RGB-D Dataset for 6D Pose Estimation of 3D Objects. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Los Alamitos, CA, USA, October 2019; pp. 2767–2776.
17. Morrison, D.; Corke, P.; Leitner, J. EGAD! An evolved grasping analysis dataset for diversity and reproducibility in robotic manipulation. IEEE Robotics and Automation Letters 2020, 5, 4368–4375.
18. Garcia-Camacho, I.; Borràs, J.; Calli, B.; Norton, A.; Alenyà, G. Household Cloth Object Set: Fostering Benchmarking in Deformable Object Manipulation. IEEE Robotics and Automation Letters 2022, 7, 5866–5873.
19. Collins, J.; Goel, S.; Deng, K.; Luthra, A.; Xu, L.; Gundogdu, E.; Zhang, X.; Yago Vicente, T.F.; Dideriksen, T.; Arora, H.; et al. ABO: Dataset and Benchmarks for Real-World 3D Object Understanding. In Proceedings of the CVPR; 2022.
20. Liu, L.; Xu, W.; Fu, H.; Qian, S.; Han, Y.J.; Lu, C. AKB-48: A Real-World Articulated Object Knowledge Base. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2022; pp. 14789–14798.
21. Downs, L.; Francis, A.; Koenig, N.; Kinman, B.; Hickman, R.; Reymann, K.; McHugh, T.B.; Vanhoucke, V. Google Scanned Objects: A High-Quality Dataset of 3D Scanned Household Items. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA). IEEE Press; 2022; pp. 2553–2560.
22. Tyree, S.; Tremblay, J.; To, T.; Cheng, J.; Mosier, T.; Smith, J.; Birchfield, S. 6-DoF Pose Estimation of Household Objects for Robotic Manipulation: An Accessible Dataset and Benchmark. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS); 2022.
23. Chen, L.; Yang, H.; Wu, C.; Wu, S. MP6D: An RGB-D Dataset for Metal Parts’ 6D Pose Estimation. IEEE Robotics and Automation Letters 2022, 7, 5912–5919.
24. Gao, R.; Si, Z.; Chang, Y.Y.; Clarke, S.; Bohg, J.; Fei-Fei, L.; Yuan, W.; Wu, J. ObjectFolder 2.0: A Multisensory Object Dataset for Sim2Real Transfer. In Proceedings of the CVPR; 2022.
25. Gao, R.; Chang, Y.Y.; Mall, S.; Fei-Fei, L.; Wu, J. ObjectFolder: A Dataset of Objects with Implicit Visual, Auditory, and Tactile Representations. In Proceedings of the CoRL; 2021.
26. Liu, X.; Li, S.; Liu, X. A Multiform Power Components Dataset for Robotic Maintenance in Power Grid. In Proceedings of the 2022 International Conference on Advanced Robotics and Mechatronics (ICARM); 2022; pp. 1116–1121.
27. Fang, H.; Fang, H.S.; Xu, S.; Lu, C. TransCG: A Large-Scale Real-World Dataset for Transparent Object Depth Completion and a Grasping Baseline. IEEE Robotics and Automation Letters 2022, 7, 7383–7390.
28. Schönberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR); 2016.
29. Schönberger, J.L.; Zheng, E.; Pollefeys, M.; Frahm, J.M. Pixelwise View Selection for Unstructured Multi-View Stereo. In Proceedings of the European Conference on Computer Vision (ECCV); 2016.
30. Lowe, D.G. Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision 2004, 60, 91–110.
31. Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.
32. Kazhdan, M.; Hoppe, H. Screened Poisson Surface Reconstruction. ACM Trans. Graph. 2013, 32.
33. Zhang, Z. Iterative Point Matching for Registration of Free-Form Curves and Surfaces. Int. J. Comput. Vision 1994, 13, 119–152.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).