Submitted:

11 February 2025

Posted:

12 February 2025

Abstract

Keywords:

I. Introduction

II. Methodology

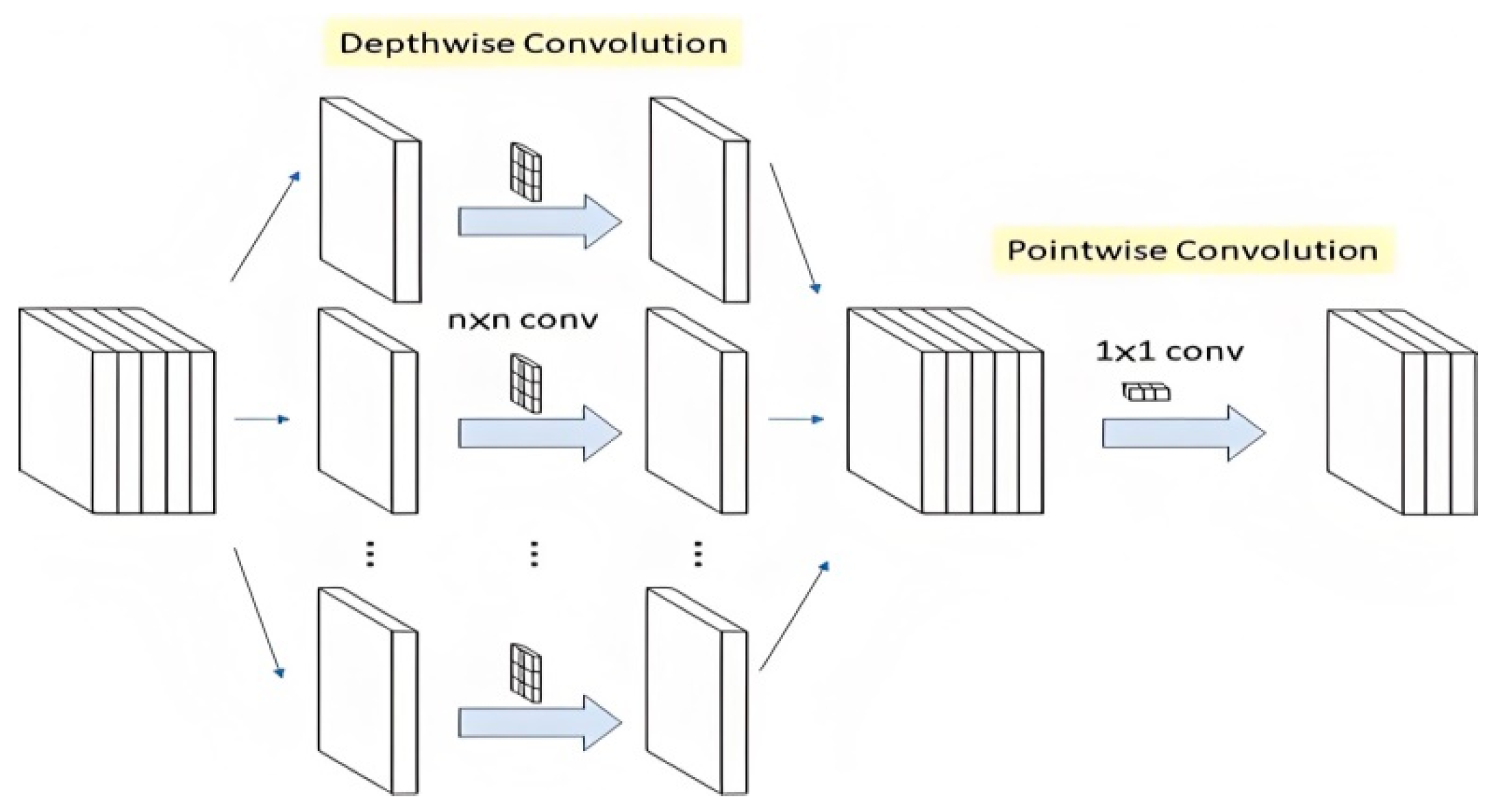

A. Convolutional Neural Networks (CNN)

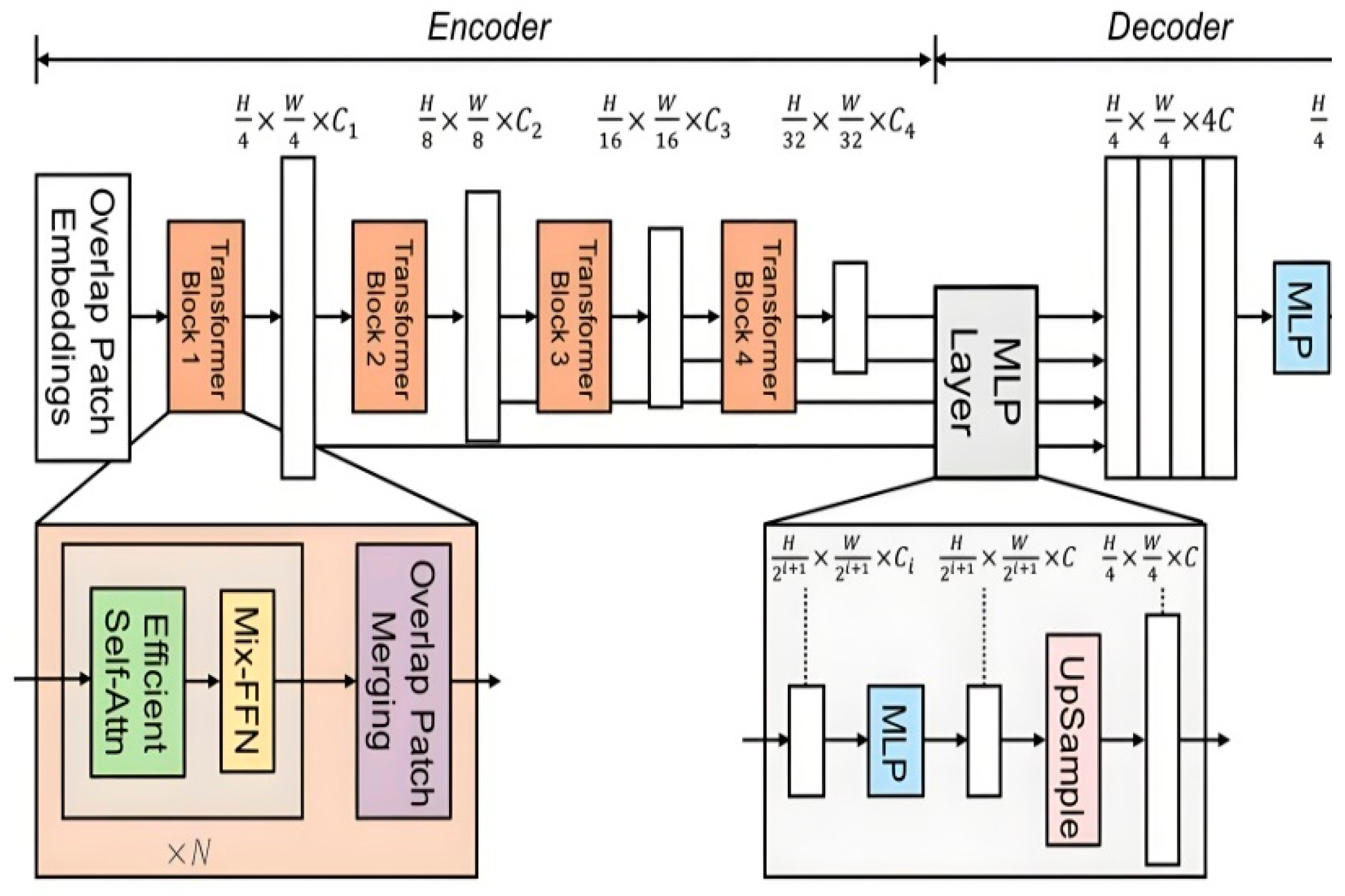

B. Transformers

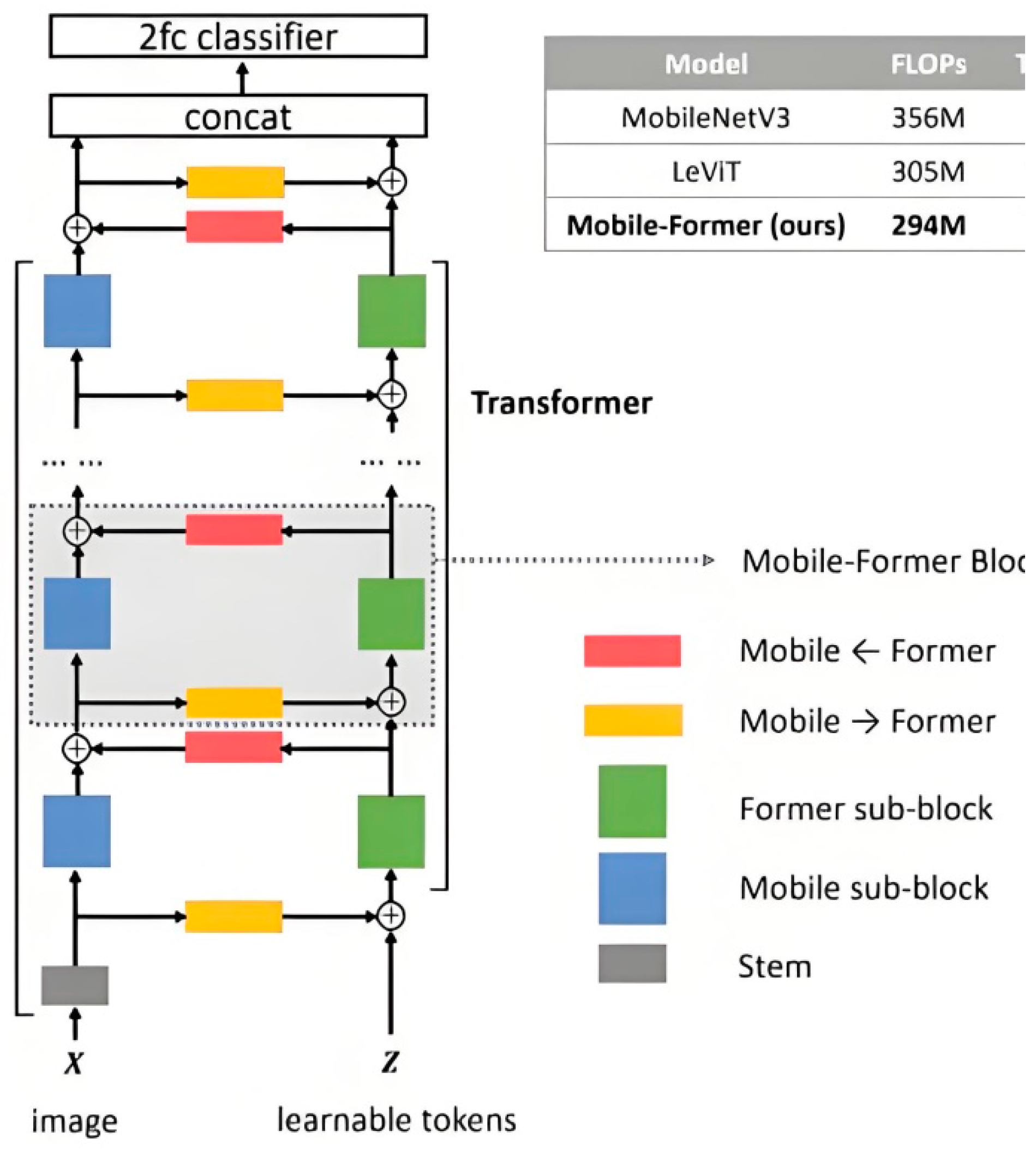

C. CNN-Transformer

III. Data

A. Data

B. Data Preprocessing

C. Feature Selection

IV. Results

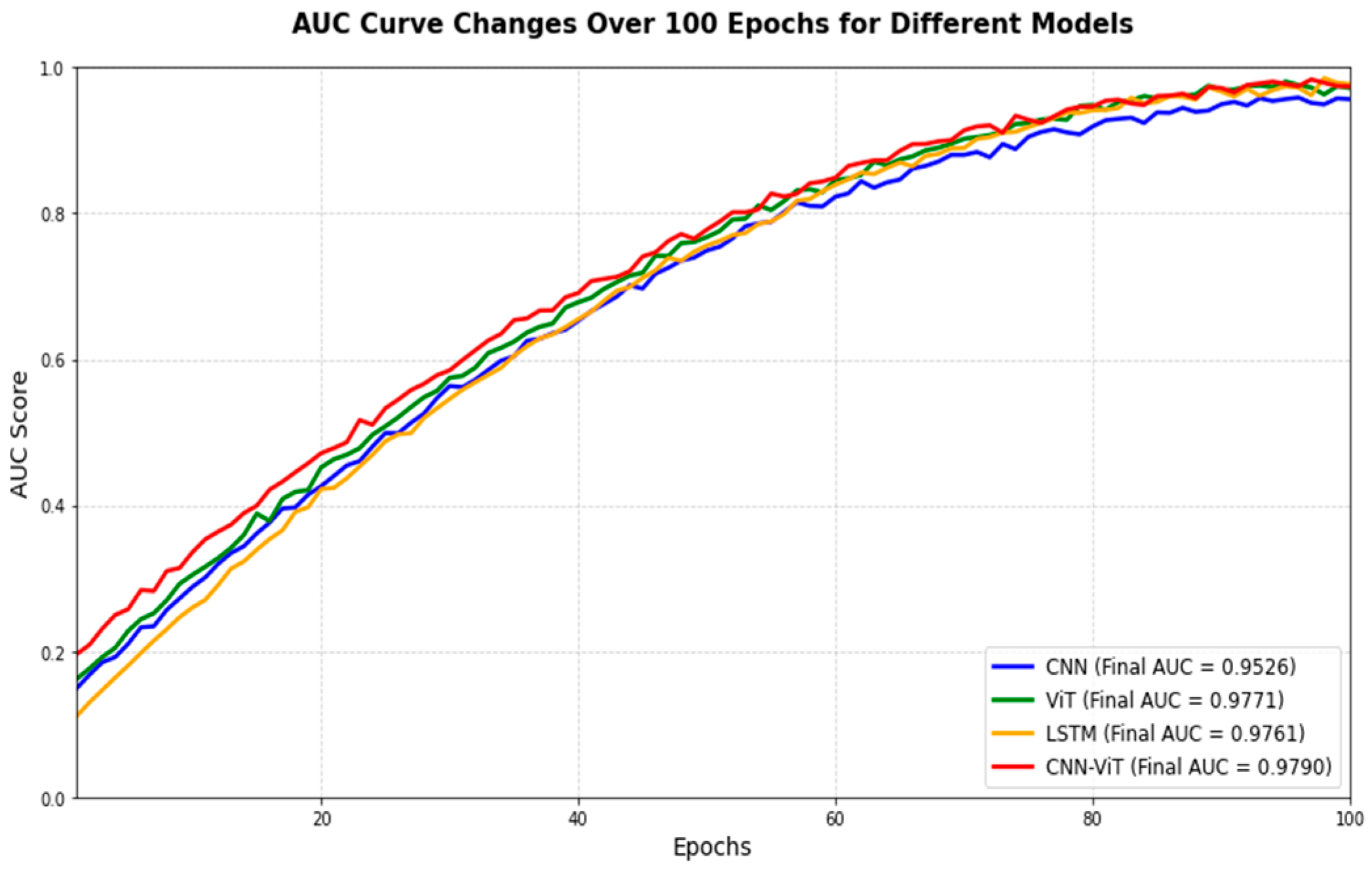

A. Composite Model Performance

B. Comparison of Different Model Performances

V. Conclusions

| No. | Variable |
|---|---|
| 1 | Basic earnings per share |
| 2 | Disposal of fixed assets, intangible assets, etc., net cash received |
| 3 | Ex-rights date |
| 4 | Operating expenses |
| 5 | Diluted earnings per share growth rate |
| 6 | Total comprehensive income attributable to owners of the parent company |
| 7 | Report disclosure date |
| 8 | Total shareholders' equity (or stockholders' equity) |
| 9 | Cash received related to other business activities |
| 10 | Actual receipt time |
| 11 | Actual investment (or cost) |
| 12 | Issue date |
| 13 | Other industries |
| 14 | Accounts receivable |
| 15 | Deferred income |
| 16 | Purchase of fixed assets, intangible assets, and others |
| 17 | Non-current liabilities total |
| 18 | Operating foreign income |
| 19 | Long-term equity investments/assets |
| 20 | Short-term loans |
| 21 | Asset impairment loss |
| 22 | Financial expenses |
| 23 | Total comprehensive income |
| 24 | Addition: Beginning cash and cash equivalents balance |
| 25 | Total comprehensive income attributable to owners (or shareholders) of the parent company |
| 26 | Other non-current assets |
| 27 | Total profit (indicated as a negative number if a loss) |
| 28 | Operating profit (indicated as a negative number if a loss) |
| 29 | Cash received from borrowing |
| 30 | Total investment cash inflows |
| 31 | Undistributed profit |
| 32 | Undistributed profit |
| 33 | Taxes and fees paid |
| 34 | Operating profit |
| 35 | Other receivables |
| 36 | Deferred taxes |
| 37 | Employee payable compensation |
| 38 | Research and development expenses (R&D expenses) |

| Kernel Size | Accuracy | Precision | Recall | F1 Score | AUC |
|---|---|---|---|---|---|
| 3 | 0.9663 | 0.9594 | 0.9761 | 0.9682 | 0.9771 |
| 6 | 0.9692 | 0.9624 | 0.9771 | 0.9692 | 0.9790 |
| 9 | 0.9682 | 0.9614 | 0.9771 | 0.9682 | 0.9790 |
| 12 | 0.9692 | 0.9594 | 0.9780 | 0.9682 | 0.9780 |

| Model | Accuracy | Precision | Recall | F1 Score | AUC |
|---|---|---|---|---|---|
| CNN | 0.9183 | 0.8879 | 0.9673 | 0.9212 | 0.9526 |
| ViT | 0.9663 | 0.9594 | 0.9741 | 0.9663 | 0.9771 |
| LSTM | 0.9594 | 0.9467 | 0.9731 | 0.9604 | 0.9761 |
| CNN-ViT | 0.9692 | 0.9624 | 0.9771 | 0.9692 | 0.9790 |
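
For readers less familiar with the metrics reported in the tables above, the following is a minimal sketch of how accuracy, precision, recall, and F1 score are conventionally computed from binary confusion-matrix counts. It is not the authors' evaluation code: the function name `classification_metrics` and the example counts are hypothetical and chosen only for illustration. AUC is omitted because it depends on ranked prediction scores rather than on the four counts alone.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard binary classification metrics from confusion-matrix counts.

    tp/fp/fn/tn are true positives, false positives, false negatives and
    true negatives. Ratios with a zero denominator are reported as 0.0.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts for illustration only; not taken from the paper's data.
metrics = classification_metrics(tp=90, fp=10, fn=5, tn=95)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Under this per-class definition, F1 always lies between precision and recall. Scores averaged across classes or folds need not reproduce the harmonic mean of the averaged precision and recall exactly.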

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).