Introduction

Neural information represents nature's solution for managing the diverse afferent and efferent information of a complex biological entity: nervous systems integrate the management of internal and external information in order to ensure the entity's survival in an unpredictable, nondeterministic environment. The specific way in which neural systems organize and process information has long been a challenge, with reverberations in many fields: biology (the specificity of cellular neural information in living entities), neuroscience (frameworks for understanding how neural circuits manage information and how nervous systems function), medicine (neural-specific diagnostics and treatment strategies), cognitive sciences (modeling decision-making and perception as outcomes of neural information in mental architectures and representations), computer science (inspiring new architectures for artificial intelligence, but also providing a relevant scientific and technological environment for testing and validating models of neural information), robotics (integrating neural characteristics as bio-inspired control of afferent and efferent information), linguistics (understanding language acquisition and processing as emergent neural information dynamics on hierarchical semantic structures), philosophy (the emergence of consciousness, knowledge representation, distributed causality, the genesis of ideas, and concepts of intelligence), psychology (correlating the cognitive processes of neural information with motivational ones in human behavior), natural sciences (the correlation and limits of scientific knowledge in relation to objective neural perceptions of nature), education (optimizing learning methodologies through neuroadaptive strategies), arts (generating creative elements and understanding aesthetic perception and nondeterministic neural effects), sociology (explaining and modeling emergent collective behavior and social neuromimetic networks by treating the group itself as a neural entity), anthropology (the influence of neural information on cultural transmission and evolution), environmental sciences (neural information exchange with, and impact on, the environment), organizational science (understanding and optimizing information flows in complex institutional structures), legal studies (understanding decision-making in judicial processes), etc. [1–16].

However, even though the sophistication of neural information has led to numerous competing models and frameworks, often tailored to specific aspects rather than addressing universal characteristics, the principles of neural information can be identified through the synthesis provided by information technology. Information technology now stands at the forefront of neural systems research and has become an important testing ground, both because it offers objective and immediate feedback and because computing systems have become sufficiently powerful in terms of processing speed and the amount of information they can manage. It serves as the primary catalyst in unveiling the fundamental aspects of neural information, with a dual focus on hardware development and software implementations of neural methods, and it provides a unique laboratory for testing and refining our understanding of neural specificity. Many neural elements are now being integrated into programming tools as complementary and augmentative elements of formal digital ones, either as auxiliary or as embedded libraries [17–20]. In the following sections, the principles of neural information organization and processing are presented concisely, targeting both an axiomatic structure and a transdisciplinary perspective.

0. Function Principle

The principle of neural function is the most comprehensive principle: it synthesizes the essence of a neural system, which can be reduced to a fundamental function tied to its fundamental objective (survival in the biological case, delivery of a contextual text in the case of a chatbot, etc.) and which incorporates the subsequent functions and characteristics highlighted by the other objectives and principles. The general function of the ensemble is reflected (fragmented) in many other functions at successively lower levels: the specific functions of parts (organs), regions, and operations, down to the function of an elementary decision-making information node (neuron) and of an elementary information connection or storage element (synapse).

Figure 0.

Neural Function Illustration.

Any neural function has an objective (utility, purpose, target, functionality, the function itself, etc.) and is finitely and constrainedly structured in order to use the finite available resources optimally. Therefore, the neural function provides an adaptive result (a compromise approximation) by balancing objectives against constraints, both of which are nuanced by the other principles and highlight the finite and limited nature of a neural system. The neural informational function serves as the fundamental operational element that bridges afferent and efferent information, a concrete mechanism that performs specific input-output transformations from the cellular level up to the abstract emergence of cognitive capabilities. The neural function enables the management of hierarchical and modular neural information and represents the most comprehensive concept for understanding and describing the operational and organizational mechanisms of natural and artificial neural systems.
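As a minimal illustration, and not as the author's formalism, the hierarchy of functions described above can be sketched in Python: an ensemble-level function is composed of region-level functions, each of which is a set of elementary node functions. The names `neuron`, `region`, and `ensemble`, the sigmoid activation, and the weights are hypothetical choices made only for this sketch.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]
Fn = Callable[[Vector], List[float]]

def neuron(weights: Vector, bias: float) -> Callable[[Vector], float]:
    """Elementary decision node: weighted aggregation followed by a sigmoid output."""
    def f(x: Vector) -> float:
        s = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-s))
    return f

def region(nodes: List[Callable[[Vector], float]]) -> Fn:
    """Region-level function: several node functions read the same input."""
    return lambda x: [node(x) for node in nodes]

def ensemble(*stages: Fn) -> Fn:
    """Ensemble-level function: sub-functions chained from the afferent to the efferent side."""
    def f(x: Vector) -> List[float]:
        out = list(x)
        for stage in stages:
            out = stage(out)
        return out
    return f

# Hypothetical parameters; in a trained system these would be memorized values.
network = ensemble(
    region([neuron([1.0, -1.0], 0.0), neuron([0.5, 0.5], -0.2)]),
    region([neuron([2.0, -2.0], 0.1)]),
)
print(network([0.3, 0.9]))
```

The fixed number of nodes and parameters mirrors the finite, constrained structure mentioned above; adapting the parameters toward an objective (training) is deliberately left out of the sketch.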

1. Memorization Principle

The neural information memorization principle expresses the capacity of neural information to be preserved at several scales: from the simple memorization of informational fragments (at the synapse level), to more correlated (combined) fragments in regionally extended and more abstractly structured levels of informational organization, up to the preservation of information from one entity (generation) to another by means other than genetic ones, namely through culture. Nature memorizes elements of neural information for several reasons: information compression (storing patterns), energy efficiency and resource optimization (minimizing the energy spent on reprocessing known inputs and avoiding metabolically costly constant relearning), adaptive efficiency (providing crucial survival benefits such as avoiding dangers and finding food, responding quickly to recurring patterns or stimuli, and storing critical patterns and vital environmental cues), predictive modeling (anticipating future outcomes based on past experiences), behavioral flexibility (allowing adaptation without genetic evolution), redundancy reduction (encoding essential information while filtering noise), learning optimization (building on prior knowledge without starting from scratch), hierarchical abstraction (representing complex ideas through simplified structures), time integration and information correlation (bridging temporal or detail gaps between causes and effects), etc.

Figure 1.

Memorization Illustration.

Neural information is memorized by storing its fragments in distributed locations, by placing multiple copies in different locations and in association with other information, and also in the form of information mixtures. Memorization accompanies the processes highlighted by the other principles; for example, functions contain memorized components (parameters).
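A classic artificial example of distributed, superposed storage is the Hopfield network [10] with Hebbian weights [4]. The Python sketch below is only an illustration of that standard model, not the author's method; the functions `store` and `recall` and the two patterns are hypothetical. Every stored pattern is spread over all connections of one shared weight matrix, and a corrupted cue is pulled back to the stored pattern.

```python
from typing import List

Pattern = List[int]  # bipolar pattern: every entry is +1 or -1

def store(patterns: List[Pattern]) -> List[List[float]]:
    """Hebbian storage: all patterns are superposed in one shared weight matrix,
    so each pattern is distributed over every connection."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w

def recall(w: List[List[float]], cue: Pattern, sweeps: int = 5) -> Pattern:
    """Asynchronous threshold updates pull a corrupted cue toward a stored pattern."""
    s = list(cue)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s

a = [1, 1, 1, 1, -1, -1, -1, -1]
b = [1, -1, 1, -1, 1, -1, 1, -1]
w = store([a, b])
cue = [-1] + a[1:]  # pattern a with its first fragment corrupted
print(recall(w, cue) == a)  # prints True
```

The two patterns are chosen orthogonal so that they interfere minimally; real capacity limits and cross-talk between many stored patterns are outside the scope of this sketch.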

2. Nondeterminism Principle

The neural information nondeterminism principle highlights the inherent tendency of natural and artificial neural systems to operate beyond strictly deterministic frameworks in response to a nondeterministic environment. Nature identified the most efficient way of adapting to environmental nondeterminism as the use of nondeterministic elements within the neural system itself, in fact imitating the similar solution used at the genetic level. Consequently, neural information carries a degree of uncertainty, to which other related characteristics also contribute, such as variability (using multiple, different, or diverse responses to increase the chances of success), indeterminism, randomness, stochasticity, etc. Nondeterminism is reflected in many aspects considered positive (desirable, useful, etc.), such as: creativity (generating novel ideas or solutions), adaptability (adjusting to new or changing environments, with noise as a source of evolutionary potential), exploration (discovering alternative paths or strategies), resilience (overcoming incomplete or noisy inputs), flexibility (handling ambiguous or conflicting information), emergent behaviors (spontaneously achieving complex outcomes), stochastic resonance for enhanced signal detection, synaptic exploration during learning and development, emotional variability allowing for rich experiences, hormonal fluctuations enabling diverse physiological responses, genetic expression variability supporting evolutionary adaptation, the capacity for divergent thinking, free will, etc.

Figure 2.

Nondeterminism Illustration.

Nondeterminism is also reflected in many negative aspects (undesirable, useless, etc.), such as: unpredictability (difficulty in forecasting outcomes), instability (susceptibility to chaotic or erratic information), error-proneness (misinterpreting or misclassifying), bias amplification (exaggerating existing biases during the learning stage), inefficiency (wasting resources on unproductive exploration), fragility (vulnerability to adversarial information or perturbations), susceptibility to pathological states, vulnerability to noise-induced errors, challenges in maintaining stable neural representations across time and contexts, etc. Nondeterminism is indispensable to a realistic neural system: suppressing it in artificial neural systems in order to turn them into deterministic and predictable systems cancels out most of their neural-specific characteristics. Therefore, at least a minimal degree of nondeterminism must be maintained.
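One common way in which artificial systems keep a controlled degree of nondeterminism is sampling from a softmax distribution with a temperature parameter, as done in many text-generating models. The Python sketch below illustrates that technique under stated assumptions (the functions `softmax` and `choose` and the scores are hypothetical): a temperature of zero collapses the choice to a deterministic argmax, while any positive temperature preserves some variability.

```python
import math
import random
from typing import List, Optional, Sequence

def softmax(scores: Sequence[float], temperature: float) -> List[float]:
    """Turn response scores into probabilities; a lower temperature sharpens them."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp((s - m) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def choose(scores: Sequence[float], temperature: float,
           rng: Optional[random.Random] = None) -> int:
    """Select a response index; temperature == 0 reduces to a deterministic argmax."""
    if temperature == 0:
        return max(range(len(scores)), key=lambda i: scores[i])
    if rng is None:
        rng = random.Random()
    probs = softmax(scores, temperature)
    return rng.choices(range(len(scores)), weights=probs, k=1)[0]

scores = [2.0, 1.5, 0.2]  # hypothetical preferences for three candidate responses
rng = random.Random(42)
deterministic = {choose(scores, 0.0) for _ in range(1000)}
stochastic = {choose(scores, 1.0, rng) for _ in range(1000)}
print(deterministic, stochastic)
```

The deterministic set always contains only the best-scored response, whereas the stochastic set also contains less likely alternatives, which is the exploratory (and potentially error-prone) variability discussed above.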

3. Fragmentation Principle

The neural information fragmentation principle highlights the fact that the fragmentation of information and of related processes occurs at every level, starting with the neural ensemble of the entity, continuing through various intermediate levels (organs, neuronal regions), down to the cellular level of decision-making atoms (neurons), and even further down to their connecting parts, the memorization atoms (synapses).

Figure 3.

Fragmentation Illustration.

Fragmentation is achieved both as a way of organizing information (essentially a cellular/atomic one) and as a transformation of the information flow. Input information (coming from the sense organs, for example) is initially fragmented (at the level of the retina in the visual case, and later of the optic nerves) and is then further fragmented at the level of the input interface neuron layers. In the visual case, natural fragmentation directly performs the convolutional segregation, whereas artificial systems apply explicit convolution operations for feature extraction. Fragmentation subsequently alternates with aggregation processes that abstract the information; these abstractions can in turn be subjected to further fragmentation, which also implies the distribution of information, etc. Functionality (the function), as a fundamental neural objective, has a fragmented structure, being divided into sub-functions related to sub-processes. Although fragmentation is usually associated with input information, it also accompanies many afferent (incoming) and efferent (outgoing) information flows and processes, both interface and internal, such as: encoding, segregation, disassembly, gauging, sensing, splitting, dispatching, etc.
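The artificial case mentioned above, in which an input is fragmented into local receptive fields and an explicit convolution extracts a feature from each fragment, can be sketched in Python. This is an illustrative sketch only; the functions `fragment` and `convolve`, the image, and the edge kernel are hypothetical.

```python
from typing import List

Grid = List[List[float]]

def fragment(image: Grid, size: int, stride: int = 1) -> List[Grid]:
    """Split an image into square, possibly overlapping, receptive fields."""
    rows, cols = len(image), len(image[0])
    return [[row[c:c + size] for row in image[r:r + size]]
            for r in range(0, rows - size + 1, stride)
            for c in range(0, cols - size + 1, stride)]

def convolve(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D cross-correlation (what most CNN libraries call convolution):
    the same kernel is applied to every fragment to extract one local feature."""
    k = len(kernel)
    rows, cols = len(image) - k + 1, len(image[0]) - k + 1
    return [[sum(kernel[i][j] * image[r + i][c + j] for i in range(k) for j in range(k))
             for c in range(cols)]
            for r in range(rows)]

image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]  # a vertical dark-to-bright edge
edge_kernel = [[-1, 1],
               [-1, 1]]  # hypothetical vertical-edge detector
print(len(fragment(image, 2)), convolve(image, edge_kernel))
# prints 9 [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

Each of the nine overlapping 2×2 fragments yields one response, and only the fragments straddling the edge respond strongly, which is the kind of local segregation the text attributes to early visual processing.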

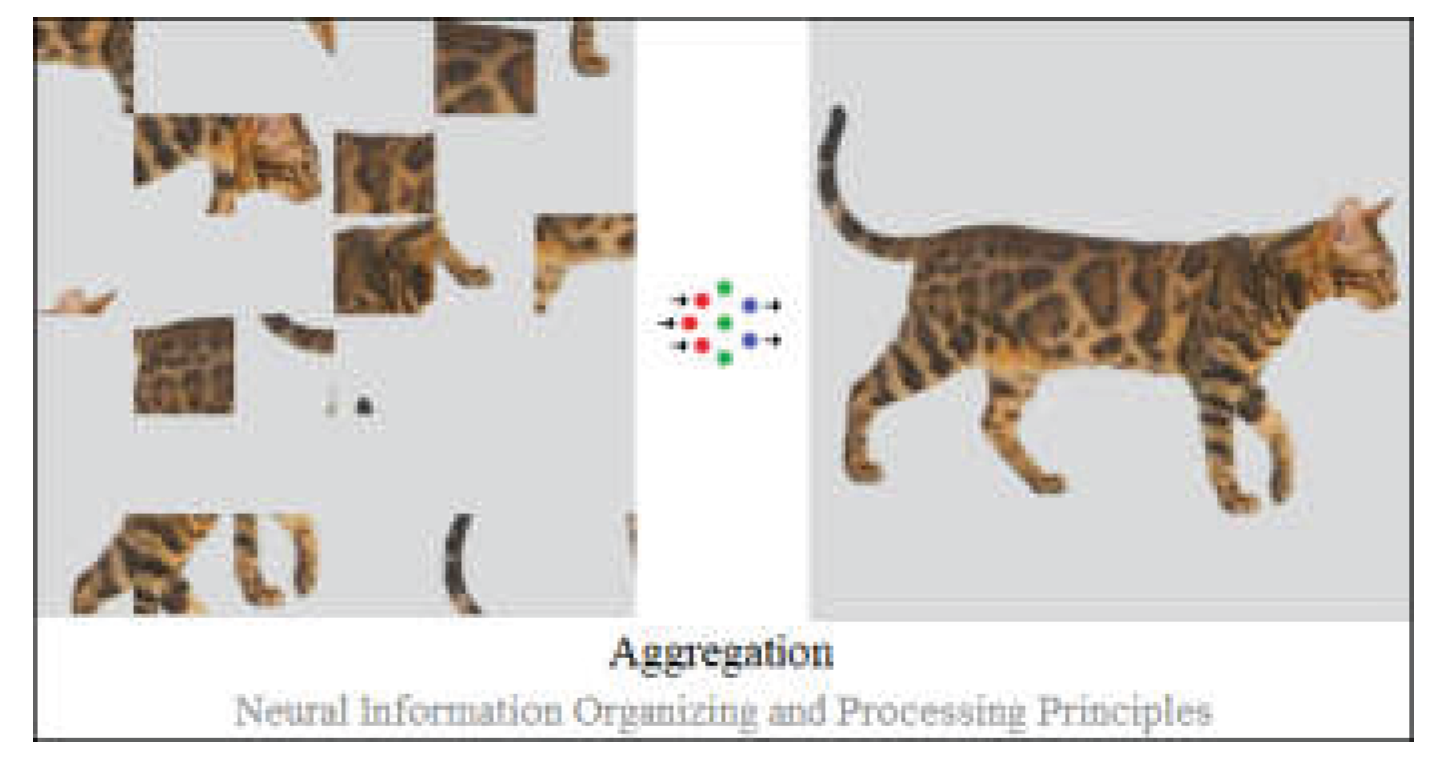

4. Aggregation Principle

The neural information aggregation principle represents a set of structures and transformations that facilitate the collection, correlation, and integration of informational fragments or of other aggregates. Neural information aggregation is present at every level: from the elementary decision node (neuron) as action output, to neuronal regions as feature detection, up to the network level as perceptions and motor reactions, and, more abstractly, as categories, concepts, and even social characteristics related to other external entities, the environment, etc. It can be seen that, at the fundamental level, a neural node (neuron) is both a fragmentation center and an aggregation center.

Figure 4.

Aggregation Illustration.

In terms of the structure of neural functions, aggregation indicates how information components are correlated and integrated in order to obtain the function output that achieves its objective. It is a dynamic process through which multiple parallel streams of information converge and combine, resulting in a unified, coherent intermediate or final output guided by the contextual relevance highlighted by other principles such as objectivation. The aggregation principle is also involved in multimodal integration, combining and correlating information from different sources to form complex and coherent representations, sometimes even completing missing informational components, which can also generate creative or illusion-like representations. Aggregation is usually associated with processes that serve output demands, both interface and internal, such as: collecting, correlation, decoding, integration, assembly, controlling, actuating, efference, etc.
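Multimodal integration is often modeled in the literature by reliability-weighted (inverse-variance) cue combination, a technique swapped in here for illustration rather than taken from the text. In the hypothetical Python sketch below, each modality reports a (mean, variance) estimate; the aggregate gives more weight to the more reliable source and is still produced when a modality is missing.

```python
from typing import Dict, Optional, Tuple

Estimate = Tuple[float, float]  # (mean, variance) reported by one modality

def integrate(estimates: Dict[str, Optional[Estimate]]) -> Estimate:
    """Inverse-variance weighted fusion: reliable modalities weigh more, and
    missing modalities (None) are skipped so an aggregate is still formed."""
    available = [e for e in estimates.values() if e is not None]
    if not available:
        raise ValueError("nothing to aggregate")
    weights = [1.0 / var for _, var in available]
    total = sum(weights)
    mean = sum(w * m for w, (m, _) in zip(weights, available)) / total
    return mean, 1.0 / total

# Hypothetical location estimates (e.g., in degrees) from two senses.
print(integrate({"vision": (10.0, 1.0), "hearing": (14.0, 4.0)}))
print(integrate({"vision": (10.0, 1.0), "hearing": None}))
```

With both sources, the fused estimate (about 10.8, variance 0.8) lies closer to the more reliable visual estimate and is less uncertain than either input alone, a simple quantitative reading of "the aggregate is more coherent than its fragments".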

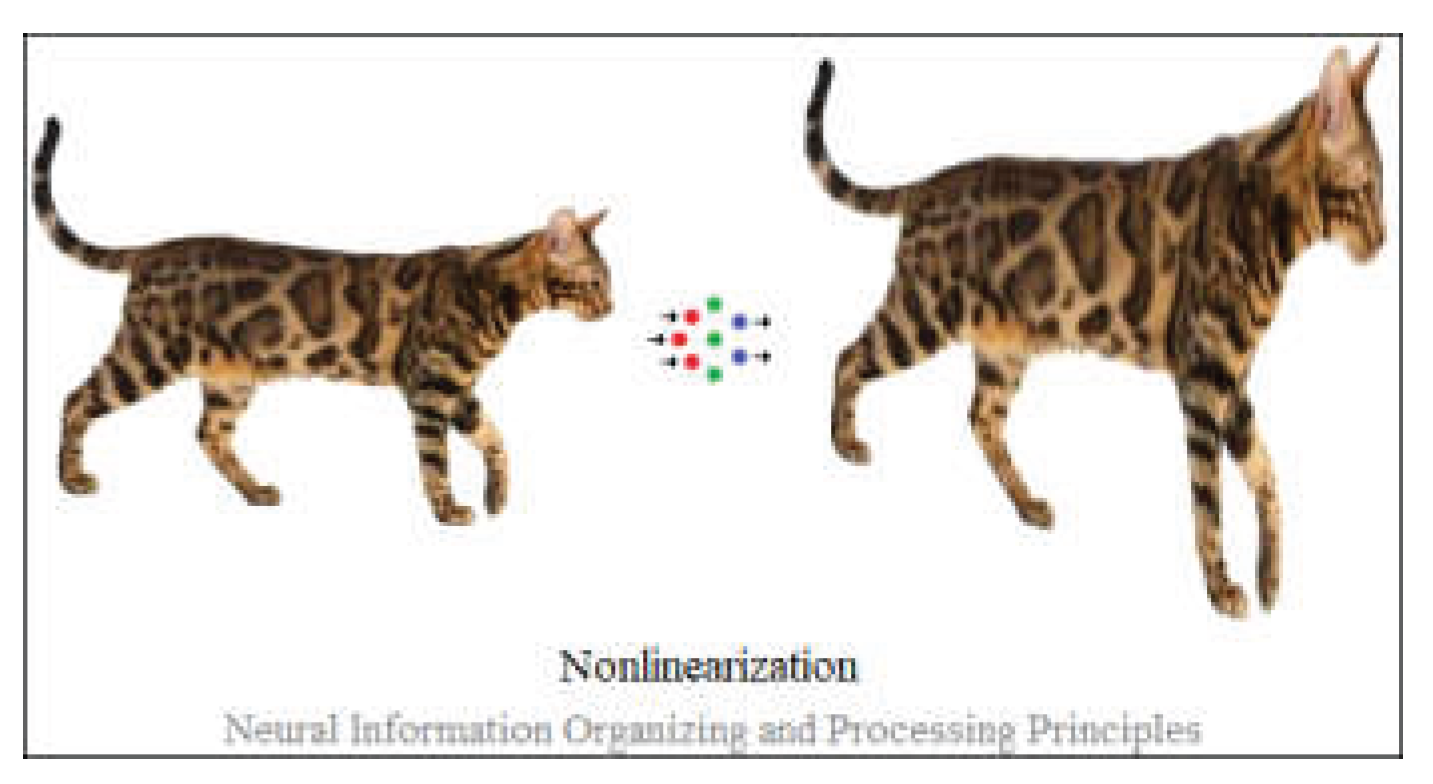

5. Nonlinearization Principle

The neural information nonlinearization principle highlights the nonlinear character of information transformations in neural systems, reflected at all levels. Nonlinearity also induces or implies asymmetry, a characteristic with both structural and processing relevance. Essentially, nonlinearity allows certain information that carries little quantitative weight to acquire decision-making relevance in a given context.

Figure 5.

Nonlinearization Illustration.

At the synaptic level, information accumulation is in reality nonlinear, even though numerical models usually use linear weightings for ease of calculation. At the level of neuronal nodes (neurons), the information coming from afferent nodes is integrated nonlinearly, and the neuronal response (output) is also a nonlinear activation, through thresholds that create firing patterns. At a more extensive level, that of a group of interconnected nodes or a region, the selection of relevant information is also carried out nonlinearly, with emergent pattern detection and multistable information selection and switching. At the network level, many complex dynamics show attractors, spontaneous functional clustering, and nonlinear associative information effects. At the cognitive level, categorical perception, decision-making, and sudden insight transitions appear as higher-order manifestations of the underlying neural nonlinearity. At the behavioral level, nonlinearity is reflected in threshold-based responses and abrupt state transitions in both actions and emotional states. Even at the social level, neural entities in groups demonstrate avalanche effects, spontaneous clustering, and phase transitions in collective behavior, all stemming from fundamental neural nonlinearity. Stated literally (mathematically), the nonlinearization principle means that the neural ensemble is more than the sum of its parts, and that in certain contexts an insignificant part can provide the most relevant contribution and response.
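The combination described above (a linear weighted sum used for convenience, followed by a nonlinear threshold) can be sketched with a classic threshold unit in the spirit of McCulloch and Pitts [3]. The function names and weights below are hypothetical; the weights are chosen as exact binary fractions so that the sum lands exactly on the threshold.

```python
from typing import Sequence

def step(x: float, threshold: float = 1.0) -> int:
    """All-or-none activation: fire (1) only when the input reaches the threshold."""
    return 1 if x >= threshold else 0

def node(inputs: Sequence[float], weights: Sequence[float], threshold: float = 1.0) -> int:
    """Linear weighted sum (the usual numerical simplification), then a nonlinear threshold."""
    return step(sum(w * x for w, x in zip(weights, inputs)), threshold)

weights = [0.5, 0.375, 0.125]  # hypothetical; the third input is the "insignificant" one
print(node([1, 1, 0], weights))  # strong inputs alone: 0.875 < 1, no firing
print(node([0, 0, 1], weights))  # weak input alone: 0.125 < 1, no firing
print(node([1, 1, 1], weights))  # together: 1.0 >= 1, the node fires
```

The outputs are 0, 0, and 1: the smallest contribution decides the response, and the response to the whole differs from the sum of the responses to its parts (`step(0.875) + step(0.125)` is 0, while `step(1.0)` is 1).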

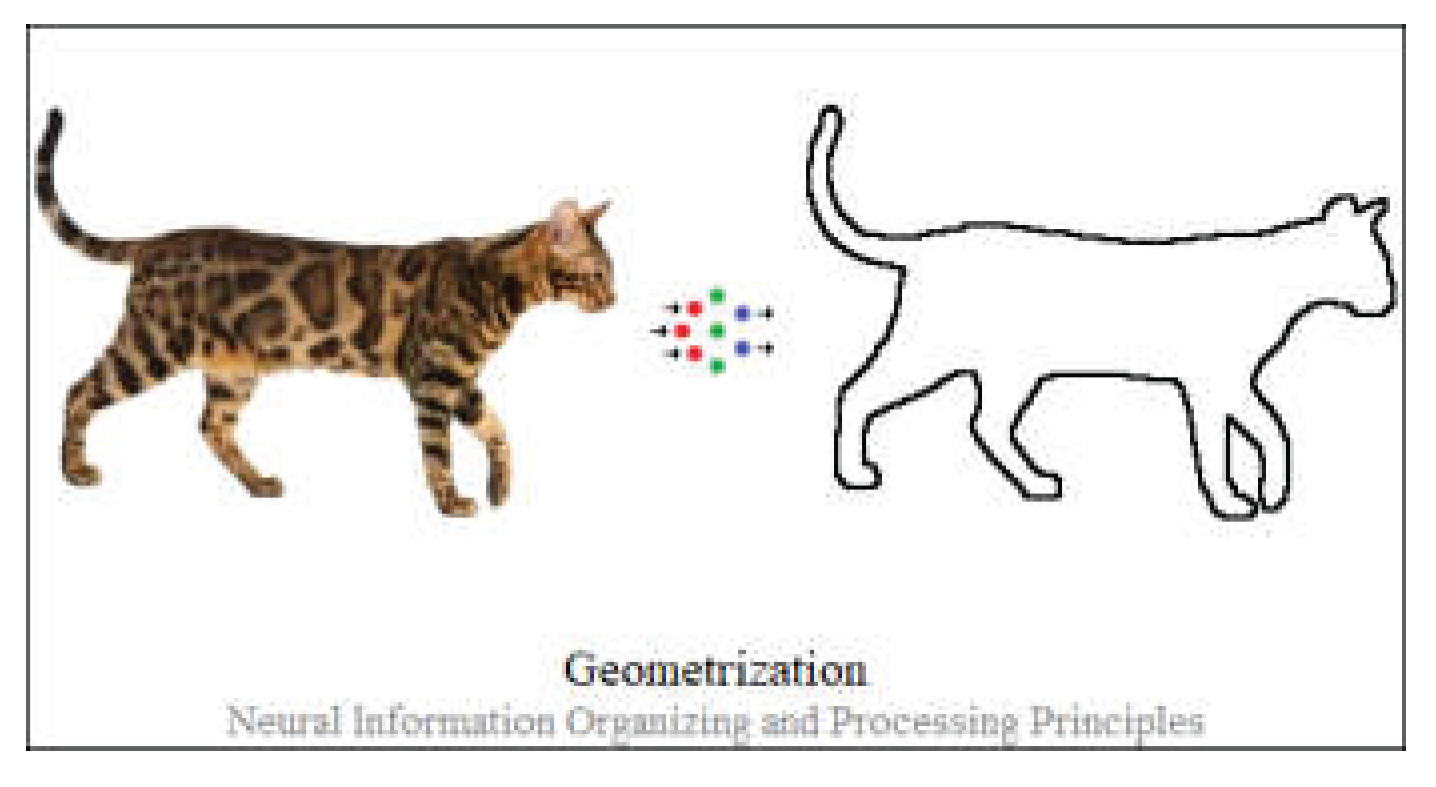

6. Geometrization Principle

The neural information geometrization principle represents a set of organizing and processing characteristics that allow neural information to be correlated on the basis of geometric locations and similarities and to form abstract geometric representations, thus conferring significance on perceptions by preserving the relationships and the relative concrete or abstract geometric positions of their components. The geometrization of neural information is achieved through the symbiosis between the architectural geometry of the neural network and the geometrization of the information flow circulating through it, the two influencing and transforming each other, including through alternations of the afferent type (grouping and correlating source information) and of the efferent type (related to destinations).

Figure 6.

Geometrization Illustration.

The geometrization of neural information is present at all levels, where both topological and informational neighborhoods leave their mark on neural processes. For example, visual information is taken from the retina already geometrically structured: neighboring retinal areas are connected through the optic nerves to similarly neighboring or correlated neuronal areas, which allows the relative localization of relevant visual information. Moreover, to achieve a more relevant final geometric representation of neural information, nature has doubled or multiplied some receptors (eyes, ears, etc.) as well as some processing regions. Geometrization also enables deeper, more abstract information representations (a thought, an idea, etc.) and explains, for example, the formation of illusions (not just optical ones) when some missing information fragments are geometrically compensated and completed by the network, forming a representation that differs from the real one.
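The completion of missing fragments from geometric neighborhoods can be illustrated with a deliberately simple neighbor-averaging scheme. This is a hypothetical Python sketch (the function `complete` and the input contour are assumptions), not a model of the visual system: unknown cells take the mean of their known 4-neighbors, so a contour with a gap is filled in with values that were never actually observed.

```python
from typing import List, Optional

Grid = List[List[Optional[float]]]

def complete(grid: Grid, sweeps: int = 10) -> Grid:
    """Fill unknown cells (None) with the mean of their known 4-neighbours.
    Filled cells can inform later cells, so larger gaps close over several steps."""
    g = [row[:] for row in grid]
    rows, cols = len(g), len(g[0])
    for _ in range(sweeps):
        changed = False
        for r in range(rows):
            for c in range(cols):
                if g[r][c] is None:
                    near = [g[rr][cc]
                            for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                            if 0 <= rr < rows and 0 <= cc < cols and g[rr][cc] is not None]
                    if near:
                        g[r][c] = sum(near) / len(near)
                        changed = True
        if not changed:
            break
    return g

# A bright contour with a gap in the middle (hypothetical input).
print(complete([[1.0, 1.0, None, None, 1.0, 1.0]]))
```

Because cells are updated in place in scan order, the result can depend on that order; this is a simplification chosen for brevity.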

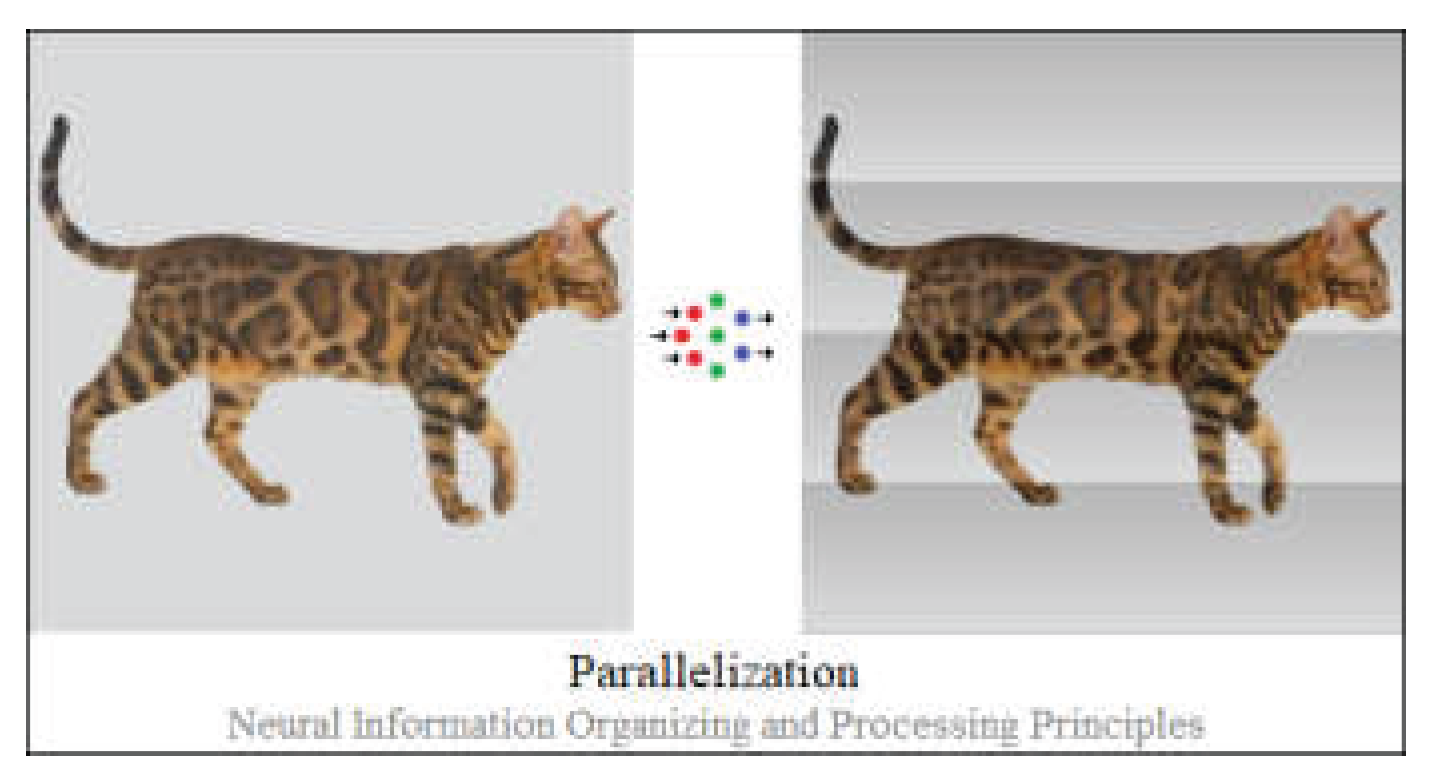

7. Parallelization Principle

The neural information parallelization principle highlights the ability of neural systems to process information simultaneously in multiple ways, with different information streams across multiple neural pathways. Parallelization is essential for the simultaneous management of both multiple affectors (sense organs, sensors, etc.) and multiple effectors (motor elements, actuators, etc.), located in peripheral areas, and also in central areas, through alternative processing regions that highlight different aspects and features of the information. Thus, parallelization enables biological or artificial neural systems to integrate diverse sensory inputs, manage multiple complex tasks, and respond to the environment in real time.

Figure 7.

Parallelization Illustration.

Parallelization is achieved by involving other principles, such as information fragmentation in a preliminary stage and aggregation at the end, where information is recombined into cohesive representations. Neural information parallelization is optimized by feedback mechanisms, both internal and external. Neural parallelism is architecturally and informationally present throughout the entire neural ensemble and at different levels of processing: from the signal level (direct stimulus information), through intermediate levels with numerous distributed processing paths related to specialized tasks, up to the most abstract cognitive and social processes.
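The pattern described above (dispatch the same input to several specialized pathways, process them concurrently, then aggregate the results) can be sketched with Python's standard `concurrent.futures` module. The three pathway functions and the `perceive` helper are hypothetical feature extractors chosen only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

def intensity(signal: List[float]) -> float:
    """Mean level of the stimulus."""
    return sum(signal) / len(signal)

def contrast(signal: List[float]) -> float:
    """Range between the strongest and the weakest sample."""
    return max(signal) - min(signal)

def change(signal: List[float]) -> float:
    """Total variation between consecutive samples."""
    return sum(abs(b - a) for a, b in zip(signal, signal[1:]))

PATHWAYS: Dict[str, Callable[[List[float]], float]] = {
    "intensity": intensity, "contrast": contrast, "change": change,
}

def perceive(signal: List[float]) -> Dict[str, float]:
    """Dispatch the same input to every pathway concurrently, then aggregate the outputs."""
    with ThreadPoolExecutor(max_workers=len(PATHWAYS)) as pool:
        futures = {name: pool.submit(fn, signal) for name, fn in PATHWAYS.items()}
        return {name: f.result() for name, f in futures.items()}

print(perceive([1.0, 3.0, 2.0, 2.0]))
```

Threads keep the sketch short and show the dispatch-and-aggregate structure; for CPU-heavy pure-Python pathways, a `ProcessPoolExecutor` would be needed to obtain actual parallel speed-up in CPython.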

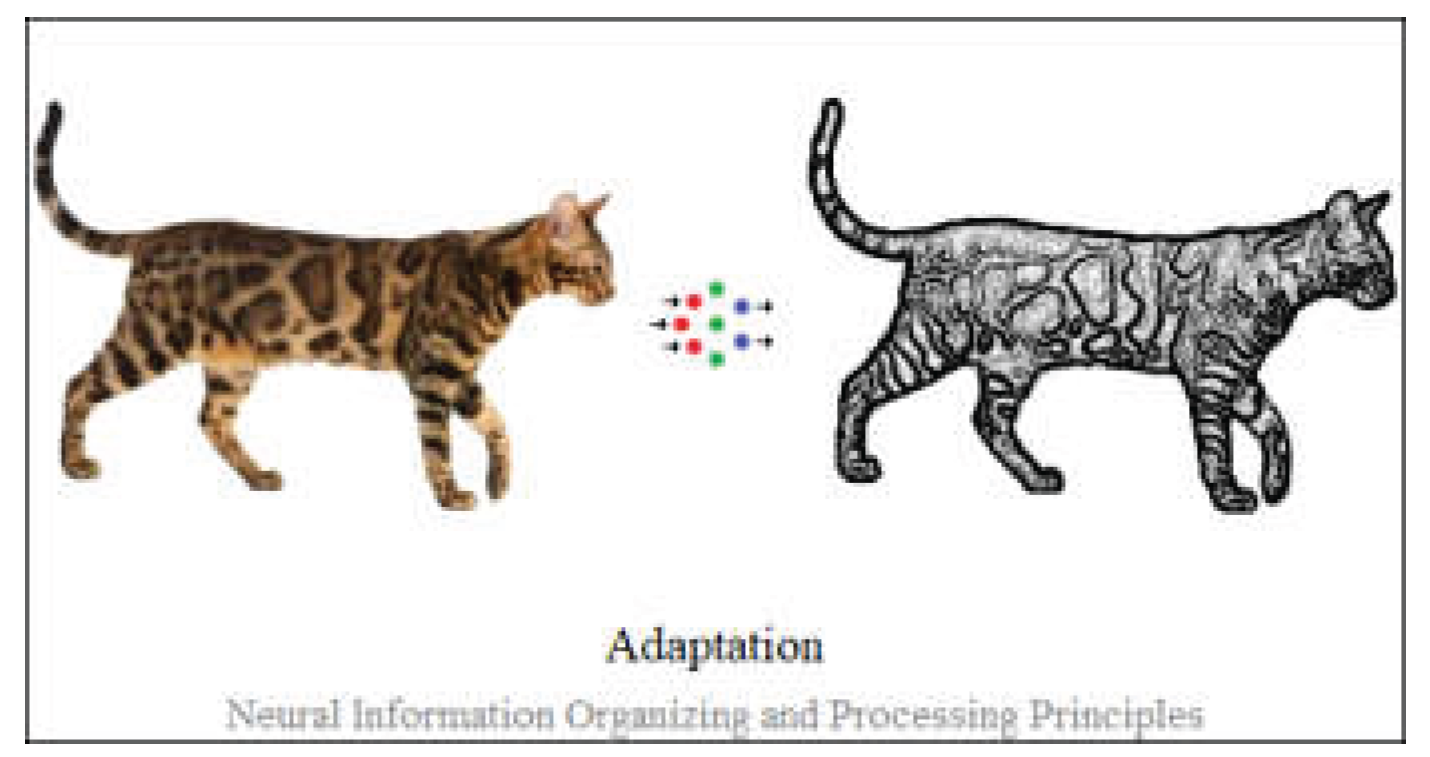

8. Adaptation Principle

The neural information adaptation principle is the capacity of neural structures and processes to modify their properties, configurations, functional characteristics, and behavior in response to both intrinsic and extrinsic factors, enabling optimal information processing and behavioral outcomes in dynamic environments. Mathematically (algorithmically), adaptation means approximation (flexibility) in achieving the functional objective by tolerating some variation in the information, a feature reflected in many neural characteristics: fault tolerance, error tolerance, modification tolerance, injury tolerance, pattern recognition, dynamic stability, resilience to environmental uncertainty, etc.

Figure 8.

Adaptation Illustration.

Neural adaptability is a multi-scale characteristic, ranging from molecular and cellular processes to large-scale cognitive and social ones. Adaptability, whether natural or artificial, manifests in many ways: at the fundamental level (synaptic, homeostatic, and morphological plasticity; adjustable weights, biases, thresholds, activation parameters, and functions), at the network level (regional plasticity, network homeostasis, neuromodulation, neurofiltration), at the entity level (feedback, emotional regulation), at the social level (empathy, communication, collaboration, deception), etc. In correlation with other principles, such as objectivation, it contributes to establishing the relevance of a part (fragment) within an ensemble (aggregate) in a given context by neglecting unimportant information, as in the attention mechanism [15].
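The attention mechanism mentioned above can be illustrated with single-query scaled dot-product attention, the form used in the Transformer [15]. This pure-Python sketch is illustrative only; the function name and the query, key, and value vectors are hypothetical. Keys that do not match the current query receive near-zero weight, so their information is effectively neglected in the aggregate.

```python
import math
from typing import List, Sequence, Tuple

def attention(query: Sequence[float], keys: List[Sequence[float]],
              values: List[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Scaled dot-product attention for one query: returns (weights, output)."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Output: relevance-weighted aggregation of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output

query = [4.0, 0.0]                               # what the current context is "looking for"
keys = [[4.0, 0.0], [0.0, 4.0], [-4.0, 0.0]]     # hypothetical descriptors of three fragments
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]   # the information carried by each fragment
weights, output = attention(query, keys, values)
print([round(w, 4) for w in weights], [round(o, 4) for o in output])
```

Changing the query re-weights the same fragments without changing the fragments themselves, which is one concrete, context-dependent form of the adaptation described above.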

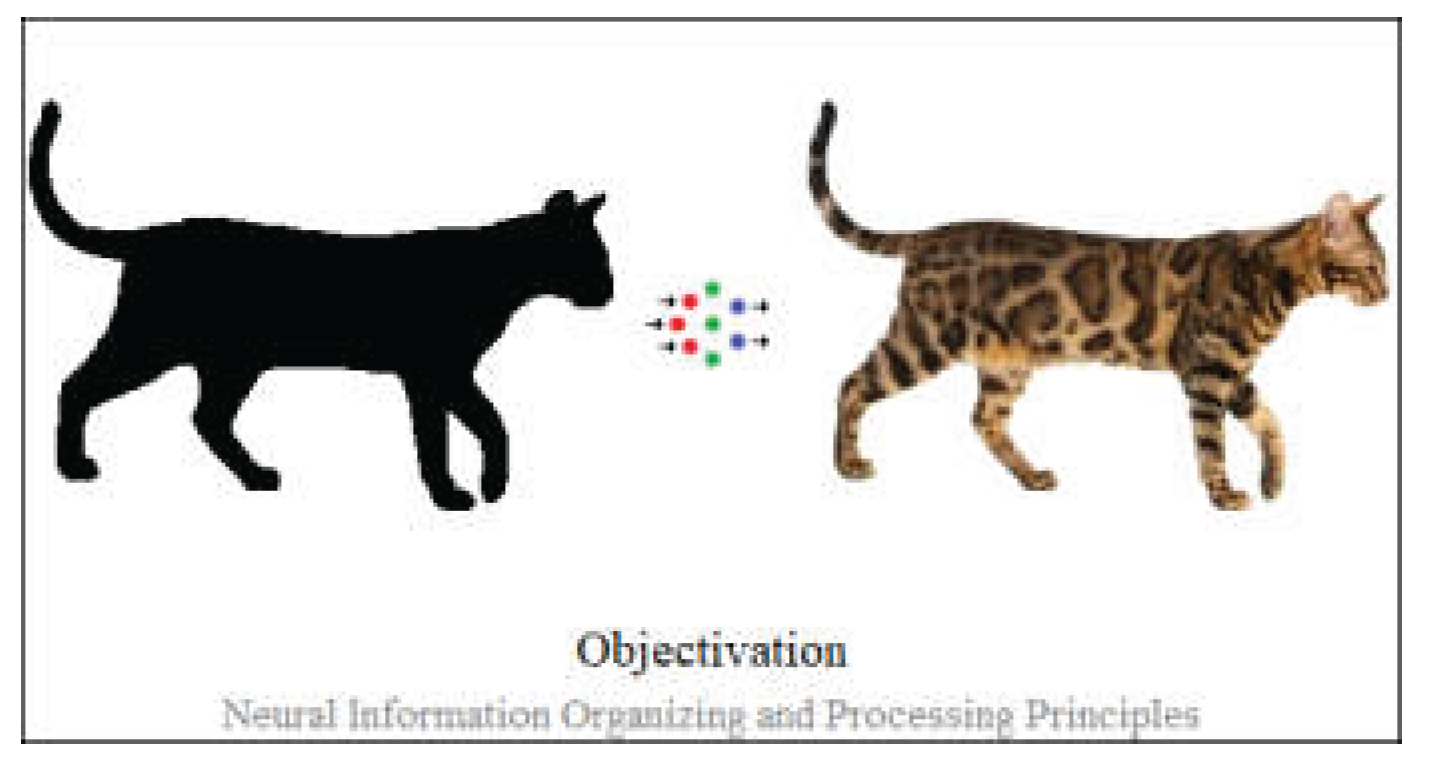

9. Objectivation Principle

The neural information objectivation principle highlights the ability of a neural entity to form objective perceptions of itself and of the environment with which it interacts, to establish a relevant quantitative management of neural information, and to pursue objectives at different neural levels. Internally, this principle acts through decision-making elements that balance stimulating information against inhibitory information in relation to a local objective, and it continues in a much more abstract way, in combination with other principles (such as fragmentation, aggregation, parallelization, etc.), by selecting the relevant information across successive deep processing levels, chains of reasoning, etc.

Figure 9.

Objectivation Illustration.

The objectivation of neural information, whether in natural or artificial systems, emerges through the interplay of deterministic mechanisms spanning multiple organizational levels, involving differentiation-integration balancing mechanisms such as: voltage-gated ion channels and neurotransmitter concentration thresholds at the synaptic scale, redundant parallel pathways and inhibitory circuits at the network level, distributed consensus mechanisms at the collective level, error correction, noise resilience, hierarchical processing, predictive modeling, feedback loops, collective fragmentation-aggregation, emergent phenomena, etc. The goal is to obtain representations (sensations, perceptions, concepts, semantics, etc.) that are as objective as possible and that contribute to a rigorous and unbiased processing framework, ensuring reliable information certification across the micro, network, cognitive, and social levels. Objectivation allows a neural system or agent to distinguish between beneficial (useful) and harmful (useless) information, an essential characteristic in reinforcement learning, adversarial learning, etc. The objectivation principle constrains the neural entity to remain balanced in its use of resources, in establishing causal correlations, and in achieving objective representations of internal and external elements, and it underlies even deeper characteristics such as consciousness, reasoning, socialization, etc.
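The role of redundant parallel pathways and distributed consensus in making an unreliable substrate behave reliably, a theme that goes back to von Neumann's analysis [6], can be illustrated with a simple majority vote. In the hypothetical Python sketch below (`noisy_pathway`, `consensus`, and `error_rate` are illustrative names, and the noise model is an assumption), each pathway reports a binary value that is flipped with a fixed probability; aggregating an odd number of such pathways by majority lowers the error rate well below that of any single pathway.

```python
import random
from typing import List

def noisy_pathway(truth: int, flip_prob: float, rng: random.Random) -> int:
    """One unreliable pathway: reports the true binary value, flipped with probability flip_prob."""
    return 1 - truth if rng.random() < flip_prob else truth

def consensus(votes: List[int]) -> int:
    """Majority decision over redundant pathways (an odd number of votes avoids ties)."""
    return 1 if 2 * sum(votes) > len(votes) else 0

def error_rate(n_pathways: int, flip_prob: float, trials: int = 10_000, seed: int = 0) -> float:
    """Fraction of trials in which the consensus disagrees with the true value."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        truth = rng.randint(0, 1)
        votes = [noisy_pathway(truth, flip_prob, rng) for _ in range(n_pathways)]
        errors += int(consensus(votes) != truth)
    return errors / trials

print(error_rate(1, 0.2), error_rate(9, 0.2))
```

With a 20% per-pathway error, a single pathway is wrong about one time in five, while the binomial probability that five or more of nine independent pathways fail is roughly 2%, so the consensus is far more reliable than its parts.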

Conclusions

The neural information organizing and processing principles proposed here emerged from synthesizing and abstracting the informational characteristics of biological neural systems, correlated with the features of neural implementations, both as software components within computing tools and as architectural components of hardware; these implementations also represent the most rigorous and accessible means available for testing and confirmation. Accordingly, even though explicit mathematical or algorithmic descriptions were avoided in presenting the principles, the principles were identified and highlighted so as to be: biologically relevant, transdisciplinarily applicable, mathematically formalizable, algorithmically implementable, electronically (hardware) embeddable, etc.

The presented neural information principles may require future additions and refinements: since they express the current synthesis of knowledge in the field, both their order and their number may be subject to reconsideration. In addition, they refer only to the fundamental organization and processing to which information is subjected in a neural system; aspects other than informational ones, such as energy and metabolism, may complement them. However, many other aspects can be found as detailing elements of the presented principles, or as sub-principles or combinations of them.

Supplementary Materials

The following supporting information can be downloaded at the website of this paper posted on Preprints.org.

References

- C. S. Sherrington, The Integrative Action of the Nervous System, Oxford University Press, 1906.

- E. D. Adrian, The Basis of Sensation: The Action of the Sense Organs, W. W. Norton & Company, 1928.

- W. S. McCulloch, W. Pitts, A logical calculus of the ideas immanent in nervous activity, Bulletin of Mathematical Biophysics 5 (1943) 115-133.

- D. O. Hebb, The Organization of Behavior: A Neuropsychological Theory, John Wiley and Sons, 1949.

- A. M. Turing, Computing Machinery and Intelligence, Mind 59 (1950) 433-460.

- J. von Neumann, Probabilistic logics and the synthesis of reliable organisms from unreliable components, Automata Studies, Annals of Mathematics Studies 34 (1956) 43-98.

- F. Rosenblatt, The Perceptron: A Perceiving and Recognizing Automaton, Cornell Aeronautical Laboratory, Report 85-460-1, 1957.

- F. Rosenblatt, Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms, Cornell Aeronautical Laboratory, Report VG-1196-G-8, 1962.

- S. Linnainmaa, Taylor expansion of the accumulated rounding error, BIT 16 (1976) 146-160.

- J. J. Hopfield, Neural networks and physical systems with emergent collective computational abilities, Proceedings of the National Academy of Sciences 79 (1982) 2554-2558.

- D. Rumelhart, G. Hinton, R. Williams, Learning representations by back-propagating errors, Nature 323 (1986) 533-536.

- G. M. Edelman, Neural Darwinism: The Theory of Neuronal Group Selection, New York, 1987.

- D. J. Chalmers, The conscious mind: In search of a fundamental theory, Oxford Paperbacks, 1997.

- E. R. Kandel, J. H. Schwartz, T. M. Jessell, Principles of Neural Science, New York, 2000.

- A. Vaswani et al., Attention Is All You Need, Advances in neural information processing systems 30 (2017).

- R. Wang, Y. Wang, X. Xu, Y. Li, X. Pan, Brain works principle followed by neural information processing: a review of novel brain theory, Artificial Intelligence Review 56 (2023) 285-350.

- I. Petrila, Implementation of general formal translators. arXiv:2212.08482 (2022).

- I. Petrila, @C – augmented version of C programming language. arXiv:2212.11245 (2022).

- I. Petrila, Neural Information Organizing and Processing – Neural Machines. arXiv:2404.03676 (2024).

- I. Petrila, @JavaScript: Augmented JavaScript, Preprints 2025020081 (2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).