1. Introduction

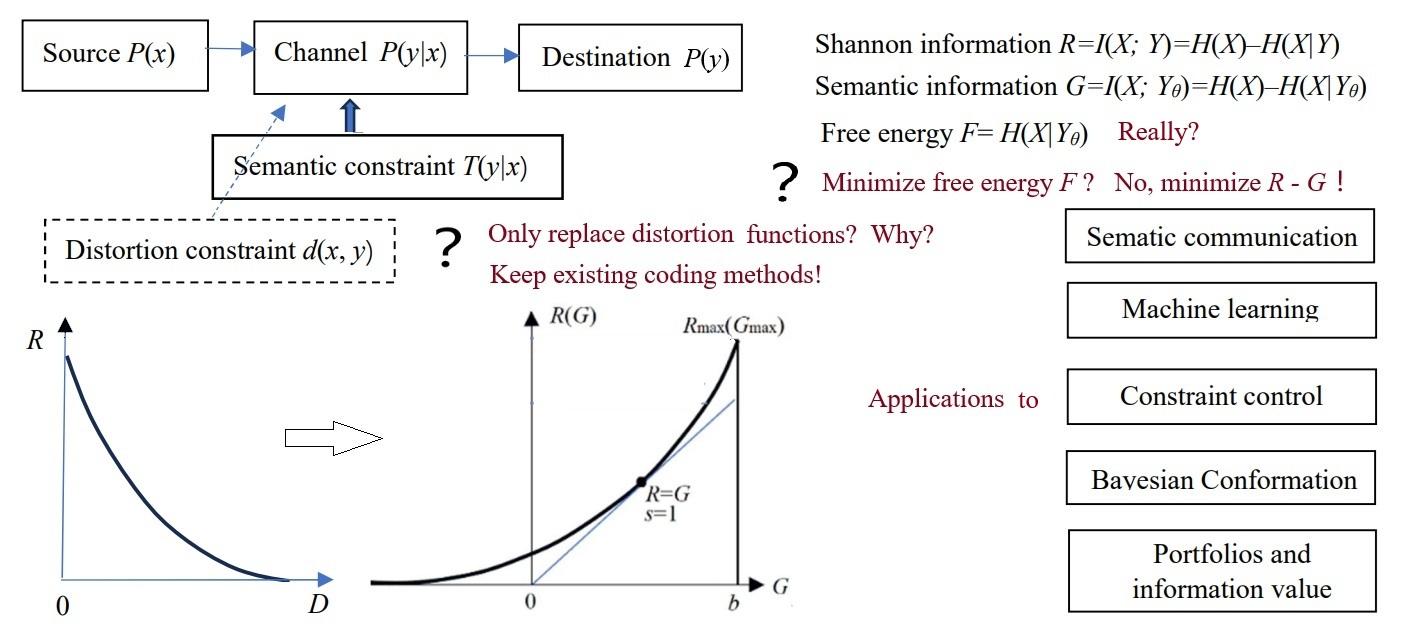

Although Shannon's information theory [

1] has achieved remarkable success, it faces three

significant limitations that restrict its semantic communication and machine

learning applications. First, it cannot measure semantic information. Second,

it relies on the distortion function to evaluate communication quality, but the

distortion function is subjectively defined and lacks an objective standard.

Third, it is challenging to incorporate model parameters into entropy formulas.

In contrast, machine learning often requires cross-entropy and cross Mutual

Information (MI) involving model parameters (

Appendix

A lists all abbreviations with original texts). Moreover, the minimum

distortion criterion resembles the philosophy of "absence of fault is a

virtue," whereas a more desirable principle might be "merit

outweighing fault is a virtue." Why did Shannon's information theory use

the distortion criterion instead of the information criterion? This is

intriguing.

The study of semantic information gained attention

soon after Shannon's theory emerged. Weaver initiated research on semantic

information and information utility [

2], and

Carnap and Bar-Hillel proposed a semantic information theory [

3]. Thirty years ago, the author of this article

extended Shannon's theory to a semantic information framework [

4,

5,

6,

7], now known as the semantic information G

theory (abbreviated as the G theory) [

8].

Here, "G" stands for generalization, reflecting the G theory's role

as a generalized form of Shannon's information theory. Earlier contributions to

semantic information theories include works by Carnap and Bar-Hillel, Dretske [

9], Wu [

10], and

Zhong [

11], while more recent contributions

after the author's generalization include those by Floridi [

12,

13] and others [

14].

These theories primarily address natural language information and semantic

information measures (upstream problems). In contrast, newer approaches have

focused on electronic semantic communication over the past decade, particularly

semantic compression (downstream problems) [

14,

15,

16,

17].

These explorations are highly valuable.

Researchers hold two extreme views on semantic

information theory. One view argues that Shannon's theory suffices, rendering a

dedicated semantic information theory unnecessary; at most, semantic distortion

needs consideration; the opposing view advocates for a parallel semantic

information theory alongside Shannon's framework. Among parallel approaches,

some researchers (e.g., Carnap and Bar-Hillel) use only logical probability,

avoiding statistical probability, while others incorporate semantic sources, semantic

channels, semantic destinations, and semantic information rate distortion [

17].

The G theory offers a compromise between these

extremes. It fully inherits Shannon's information theory, including its derived

theories. Only the semantic channel composed of truth functions is newly added.

Based on Davidson's truth-conditional semantics [

18],

truth functions represent the extensions and semantics of concepts or labels.

By leveraging the semantic channel, the G theory can:

derive the likelihood function from the truth function and source, enabling semantic probability predictions, thereby quantifying semantic information, and

replace the distortion constraint in Shannon's theory with semantic constraints, which include semantic distortion, semantic information quantity, and semantic information loss constraints.

The semantic information measure does not replace

Shannon's information measure but supplants the distortion metric used to

evaluate communication quality. Truth functions can be derived from sample

distributions using machine learning techniques with the maximum semantic

information criterion, addressing the challenges of defining classic distortion

functions and optimizing Shannon channels with an information criterion. A key

advantage of generalization over reconstruction is that semantic constraint functions

can be treated as new or negative distortion functions, allowing the use of

existing coding methods without additional electronic semantic communication

coding considerations.

In addition to Shannon's ideas, the G theory

integrates Popper's views on semantic information, logical probability, and

factual testing [

19,

20]; Fisher's maximum

likelihood principle [

21]; and Zadeh's fuzzy

set theory [

22,

23]. To unify Popper's logical

probability with Zadeh's fuzzy sets, the author proposed the P-T probability

framework [

8,

24], simultaneously accommodating

both statistical and logical probabilities.

Thirty years ago, the G theory was applied to image

data compression based on visual discrimination [

5,

7].

In the past decade, the author has introduced model parameters into truth

functions, utilized truth functions as learning functions [

7,

25], and optimized them with sample

distributions. The G theory has also been employed to optimize semantic

communication for machine learning tasks, including multi-label learning,

maximum MI classification, mixture models [

8],

Bayesian confirmation [

26,

27], semantic

compression [

28], constraint control [

29], and latent variable solutions [

30]. The concept of mutually aligning semantic and

Shannon channels aids in understanding decentralized machine learning and

reinforcement learning methods.

The main motivations and objectives of this paper

are as follows:

Semantic communication urgently requires a semantic

compression theory analogous to the information rate-distortion theory [

31,

32,

33]. The author extends the information

rate-distortion function to derive the information rate fidelity function

R(

G)(where

R is the minimum Shannon MI for a given semantic MI

G), which

provides a theoretic foundation for semantic compression theory.

Estimated MI (a specific case of semantic MI) [

25,

34,

35] and Shannon MI minimization [

36] have been utilized in deep learning. However,

researchers often conflate estimated MI with Shannon MI and remain unclear

about which should be maximized or minimized [

37,

38,

39].

The G theory can clarify these distinctions.

The G theory has undergone continuous refinement,

with many results scattered across more than 20 articles by the author. This

paper aims to provide a comprehensive overview, helping future researchers

avoid redundant efforts.

The remainder of this paper is organized as

follows:

Section 2 introduces the G

theory;

Section 3 discusses electronic

semantic communication;

Section 4

explores goal-oriented information and information value (in conjunction with

portfolio theory); and

Section 5 examines

the G theory's applications to machine learning. The final section provides

discussions and conclusions, including comparing the G theory with other

semantic information theories, exploring the concept of information, and

identifying the G theory's limitations and areas for further research.

2. From Shannon's Information Theory to the Semantic Information G Theory

2.1. Semantics and Semantic Probabilistic Predictions

Popper stated in his 1932 book

The Logic of

Scientific Discovery [

19] (p. 102): the

significance of scientific hypotheses lies in their predictive power, and

predictions provide information; the smaller the logical probability and the

more it can withstand testing, the greater the amount of information it

provides. He also explicitly emphasized the necessity of distinguishing between

two types of probability: statistical probability and logical probability. The

G theory incorporates two kinds of probabilities and probability predictions,

with

T representing logical probability and truth value. Statistical

probability predictions are expressed using Bayes' formula, and semantic

probability predictions follow a similar approach.

The semantics of a word or label encompass both its

connotation and extension. Connotation refers to an object's essential

attributes, while extension denotes the range of objects the term refers to.

For example, the extension of "adult" includes individuals aged 18

and above, while its connotation is "over 18 years old." Extensions

for some concepts, like "adult," may be explicitly defined by

regulations, whereas others, such as "elderly," "heavy

rain," "excellent grades," or "hot weather," are more

subjective and evolve through usage. Connotation and extension are

interdependent; one can often infer one from the other.

According to Tarski's truth theory [

40] and Davidson's truth-conditional semantics [

9], a concept's semantics can be represented by a

truth function, which reflects the concept's extension. For a crispy set, the

truth function acts as the characteristic function of the set. For example,

x

is age, and

y1 is the label of the set {adult}, we denote

the truth function as

T(

y1|x), which is also the

characteristic function of the set {adult}.

In 1931, Popper put forward in the book "

The

Logic of Scientific Discovery" [

39]

(P.96) that the smaller the logical probability of a scientific hypothesis, the

greater the amount of (semantic) information if it can stand the test. We can

say that Popper is the earliest researcher of semantic information [

19]. Later, he proposed a logical probability axiom

system. He emphasized that there are two kinds of probabilities, statistical

and logical probabilities, at the same time ([

39]

(pp. 252-258). But he had not established a probability system that includes

both.

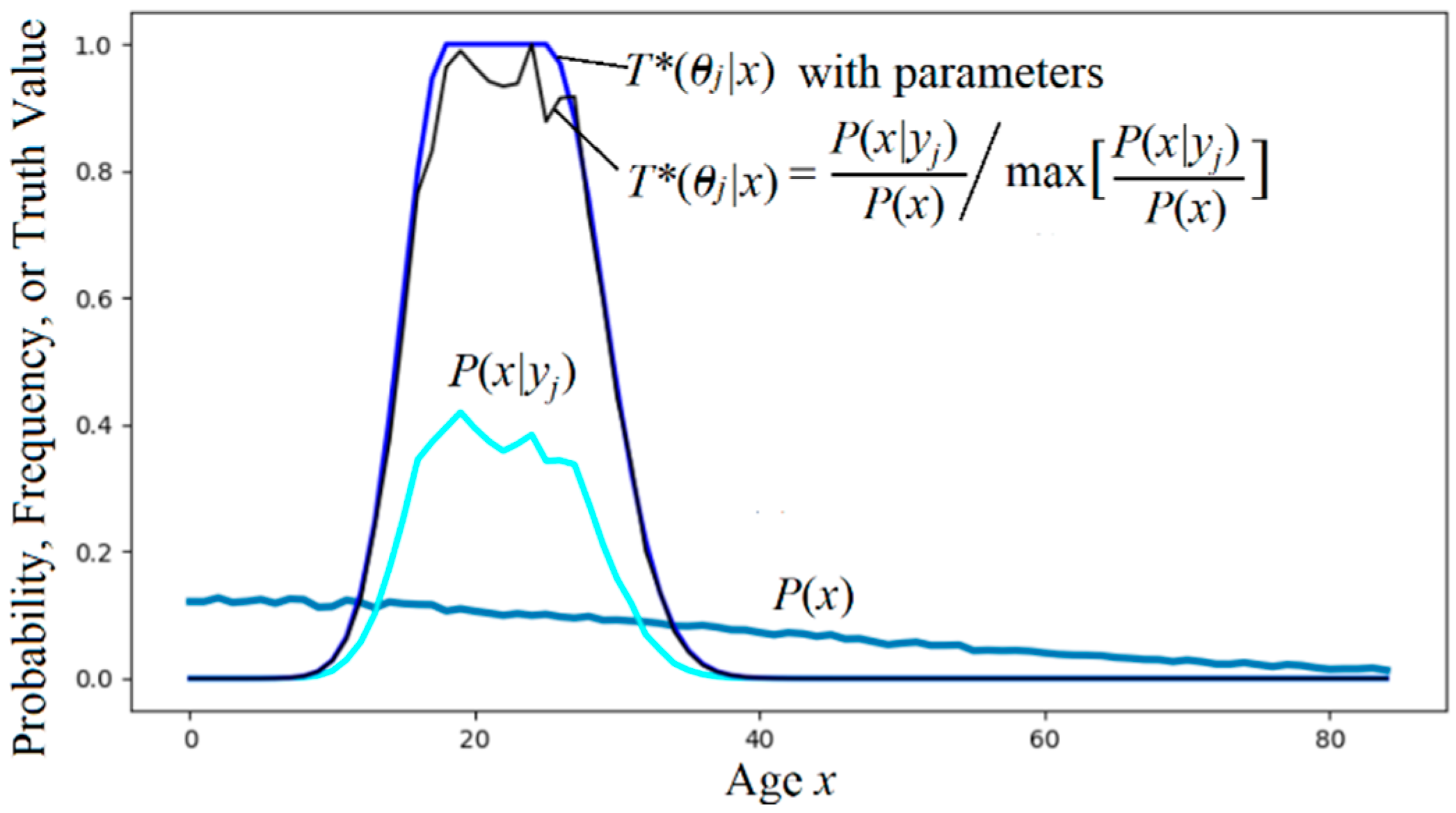

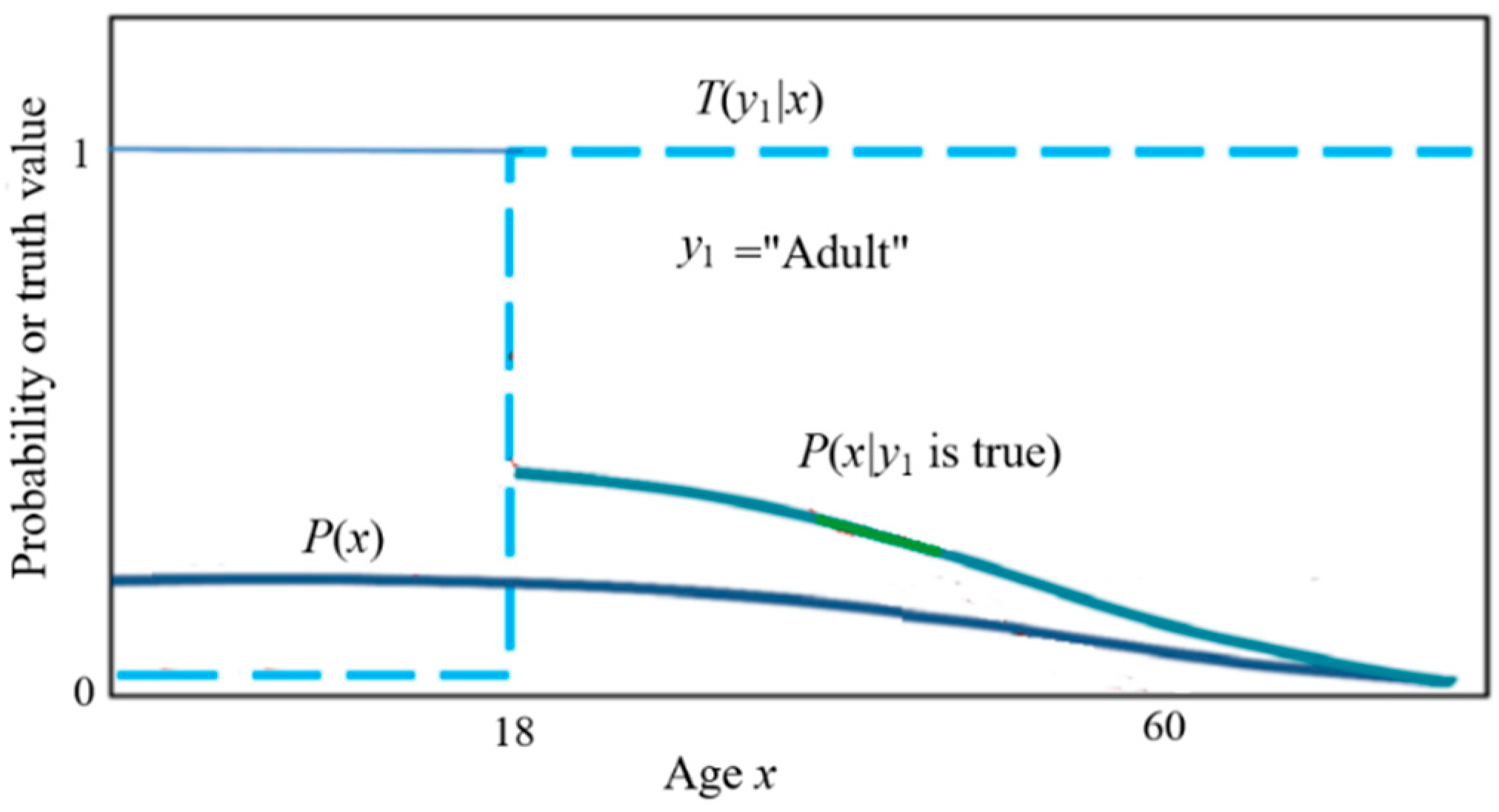

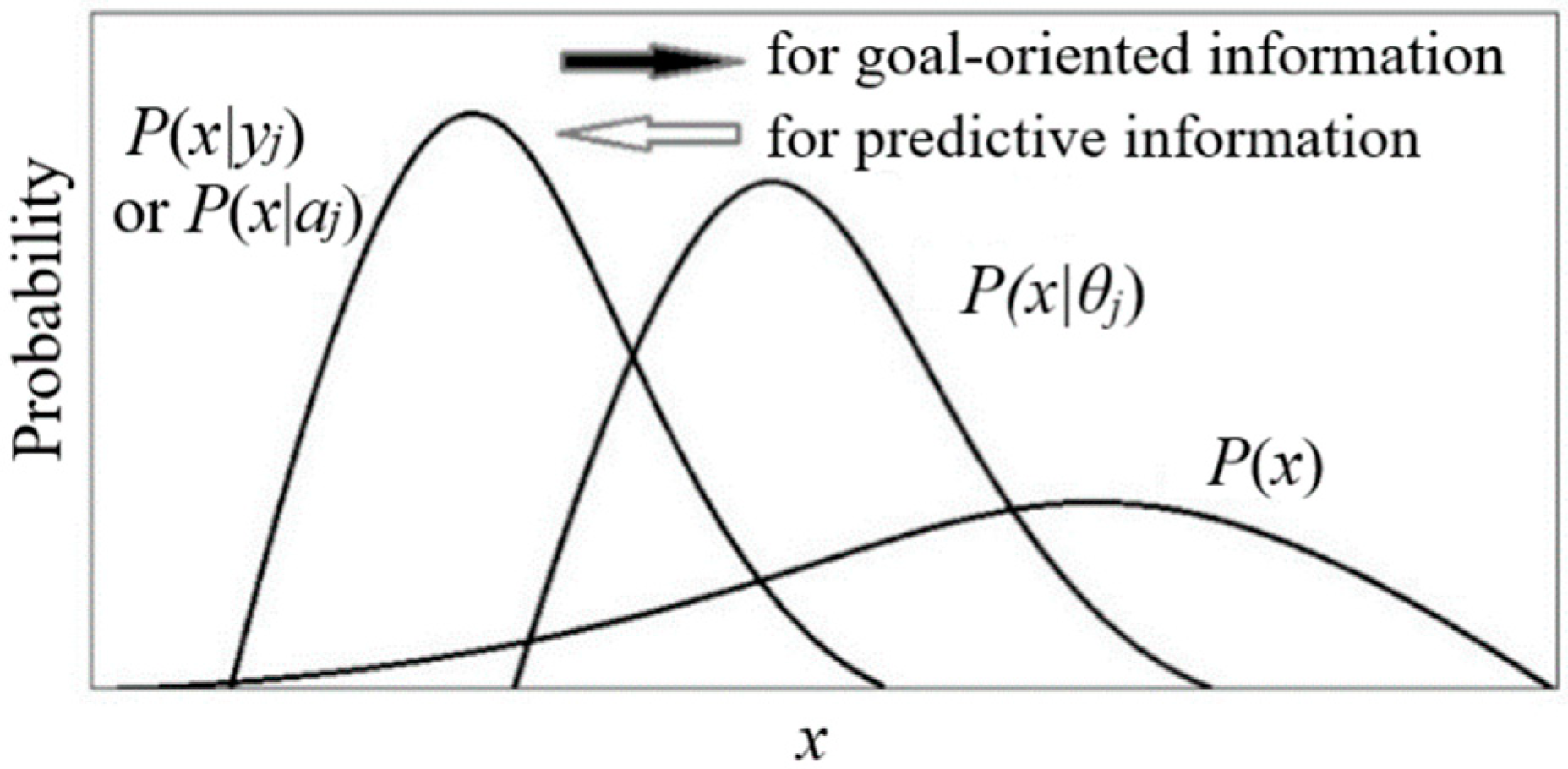

The truth function serves as the tool for semantic

probability predictions (illustrated in

Figure 1).

The formula is:

If "adult" is changed to

"elderly", the crispy set becomes a fuzzy set, the truth function is

equal to the membership function of the fuzzy set, and the above formula

remains unchanged.

The extension of a sentence can be regarded as a

fuzzy range in a high-dimensional space. For example, an instance described by a

sentence with a subject, object, and predicate structure can be regarded as a point

in the Cartesian product of three sets, and

the extension of a sentence is a fuzzy subset in the Cartesian

product. For example, the subject and the predicate are two people in

the same group, and the predicate can be selected as one of "bully",

"help", etc. The extension of "Tom helps Jone" is an

element in the three-dimensional space, and the extension of "Tom helps an

old man" is a fuzzy subset in the three-dimensional space. The extension

of a weather forecast is a subset in the multidimensional space with time,

space, rainfall, temperature, wind speed, etc., as coordinates. The extension

of a photo or a compressed photo can be regarded as a fuzzy set, including all

things with similar characteristics.

Floridi affirms that all sentences or labels that may

be true or false contain semantics and provide semantic information [

26]. The author agrees with this view and suggests

converting the distortion function and the truth function

T(

yj|x)

to each other. To this end, we define:

where exp and log are a pair of inverse functions; d(yj|x)

means the distortion when yj represents xi.

We use d(yj|x) instead of d(x, yj)

because the distortion may be asymmetrical.

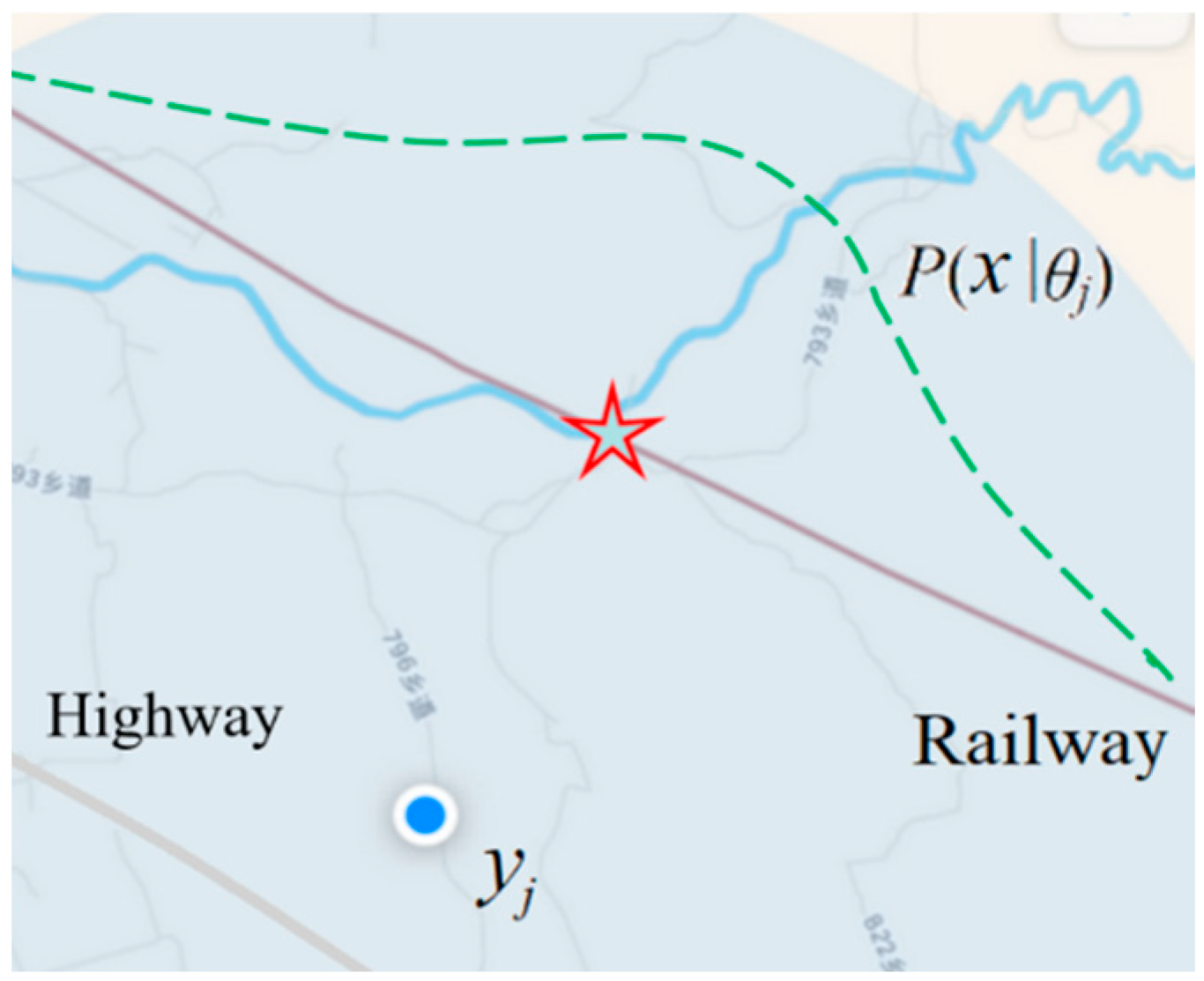

For example, the pointer on a Global Positioning

System (GPS) map has relative error or distortion; the distortion function can

be converted to a truth function or similarity function:

where

σ is the standard deviation; the

smaller it is, the higher the precision.

Figure 2 shows the mobile phone positioning seen by someone on a train.

According to the semantics of the GPS pointer, we

can predict that the actual position is an approximate normal distribution on

the high-speed rail, and the red five-star indicates the maximum possible

position. If a person is on a specific highway, the prior probability

distribution P(x) will change, and the maximum possible position

is the place closest to the small circle on that highway.

Clocks, scales, thermometers, and various economic

indices are similar to the positioning pointers and can all be regarded as

estimates (), with error ranges or extensions, so they can all

be used for semantic probability prediction and provide semantic information.

Color perception can also be regarded as an estimate of color or color light.

The higher the discrimination of the human eye (similar to the smaller σ),

the smaller the extension. A Gaussian function can also express its truth

function or discrimination function.

2.2. The P-T Probability Framework

Carnap and Bar-Hillel only use logical probability.

We use logical probability, truth value, and statistical probability in the

above semantic probability prediction. The truth value is conditional logical

probability. The G theory is based on the P-T probability framework.

Why do we need a new probabilistic framework?

Because a hypothesis or label, such as "adult", has two probabilities

simultaneously. One is the probability of the set represented by the label,

which is defined by Kolmogorov [

41]; it is not

normalized. Another is the probability in which the label is selected. It is

defined by Mises [

42]; it is normalized. The

P-T probability framework attempts to unify the two probabilities and

generalize the set to the fuzzy set.

We define:

X and Y are two random variables, taking x ϵ U={x1, x2, …} and y ϵ V ={y1, y2, …} as their values. For machine learning, xi is an instance, and yj is a label or hypothesis; yj(xi) is a proposition, and yj(x) is a proposition function.

The θj is a fuzzy subset of the domain U, whose elements make yj true. We have yj(x) = "x ϵ θj". The θj can also be understood as a model or a set of model parameters.

Probability defined by “=”, such as P(yj)≡P(Y = yj), is a statistical probability; probability defined by “ϵ”, such as P(X ϵ θj), is a logical probability. To distinguish P(Y = yj) and P(X ϵ θj), we define the logical probability of yj as T(yj)≡ T(θj)≡ P(X ϵ θj).

T(yj|x) ≡ T(θj|x)≡ P(X ϵ θj|X = x)∈[0,1] is the truth function of yj and also the membership function mθj(x) of the fuzzy set θj, that is,

The logical probability of a label is generally not

equal to its statistical probability. The logical probability of a tautology is

1, while its statistical probability is close to 0. We have P(y1)

+ P(y2) + … + P(yn) = 1, but

it is possible that T(y1) + T(y2)

+ … + T(yn) > 1. For example, the age labels

include "adult", "non-adult", "child",

"youth", "elderly", etc., and the sum of their statistical

probabilities is 1, while the sum of their logical probabilities is greater

than 1 because the sum of the logical probabilities of "adult" and

"non-adult" alone is equal to 1.

According to the above definition, we have:

This is the probability of a fuzzy event defined by

Zadeh [

23].

We can put T(θj|x) and P(x)

into the Bayesian formula to obtain the semantic probability prediction

formula:

To

P(x|θj) is the likelihood function P(x|yj,

θ) in the popular method. We use P(x|θj) here

because the j-th parameter is bound to yj. We call the

above formula the semantic Bayesian formula:

Because the maximum value of T(yj|x)

is 1, from P(x) and P(x|θj), we

derive a new formula:

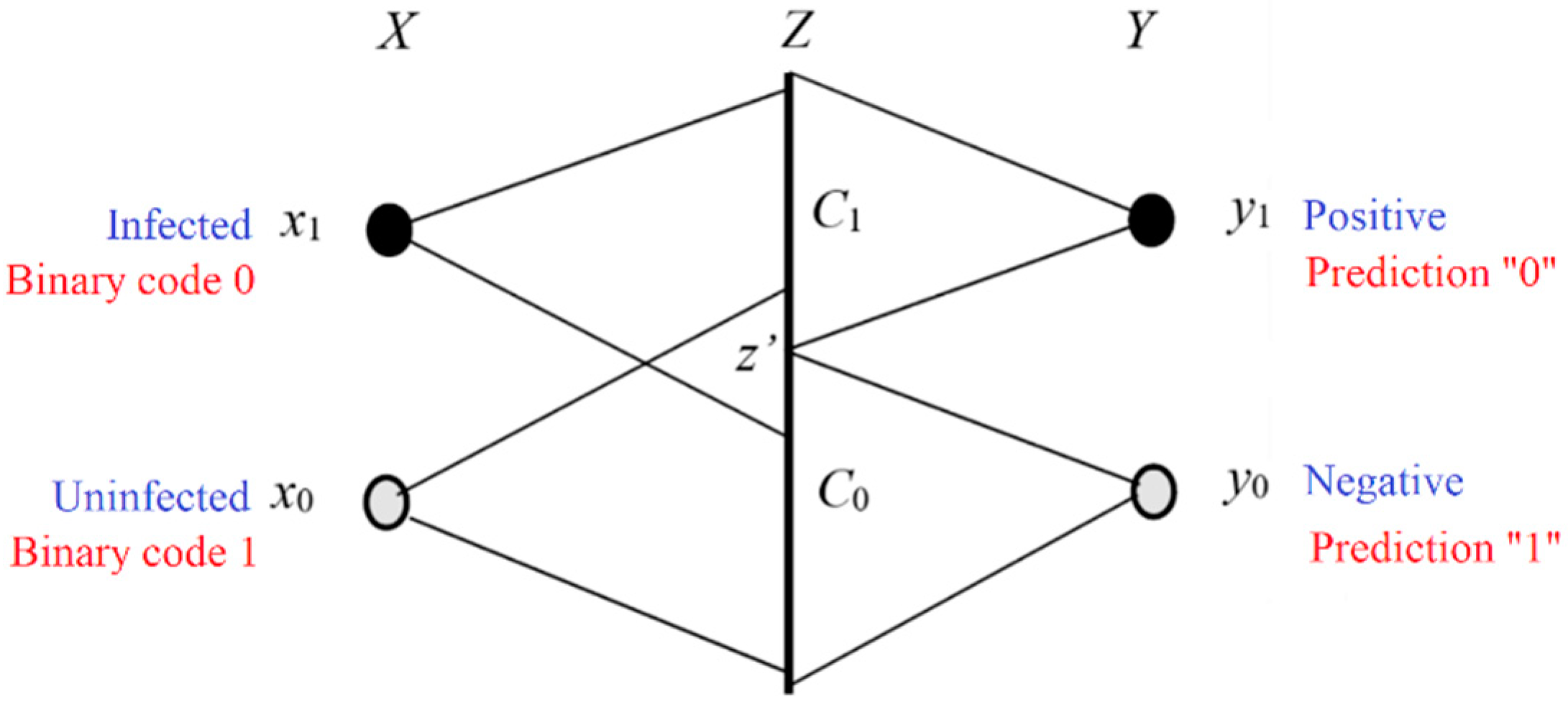

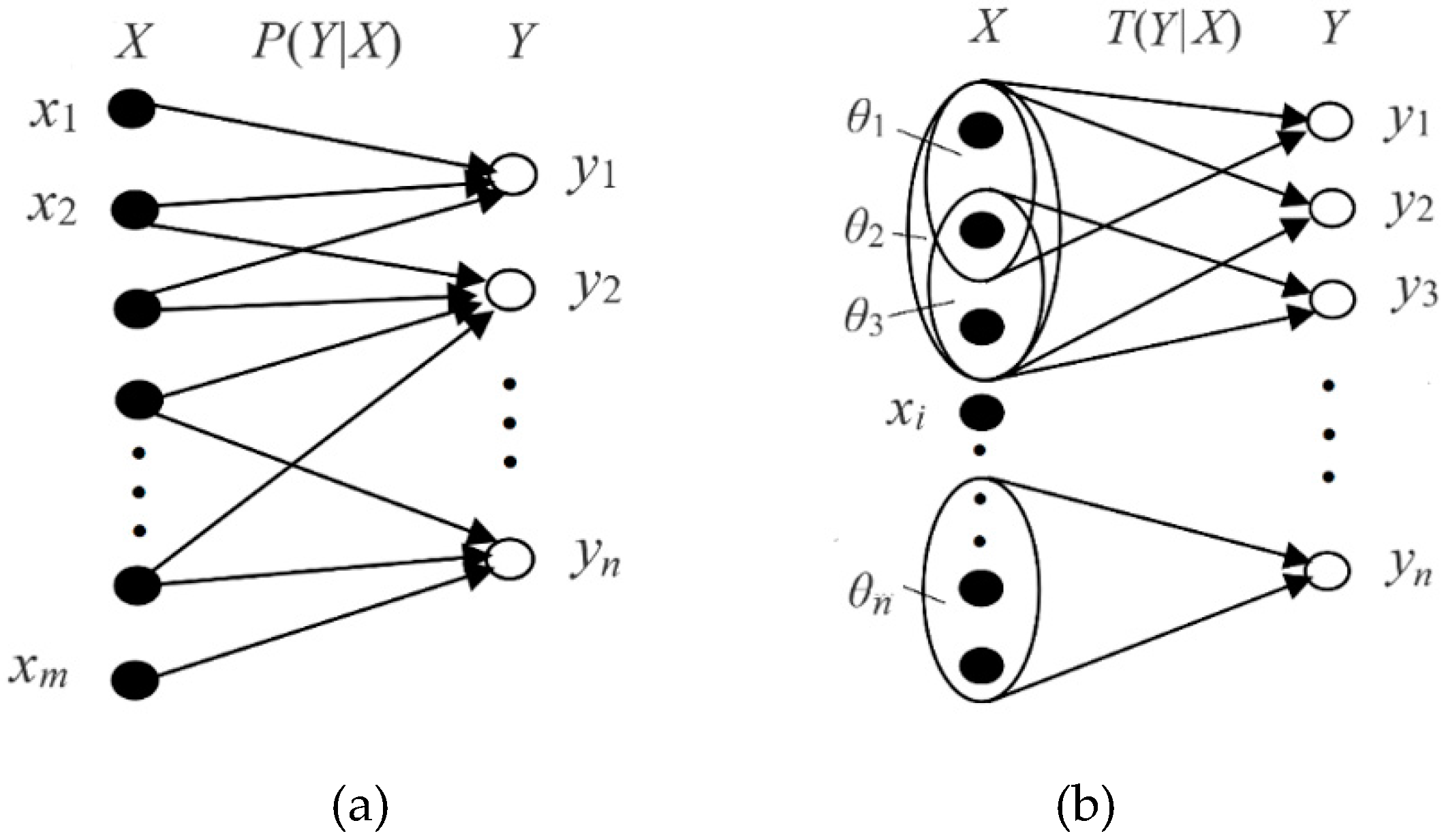

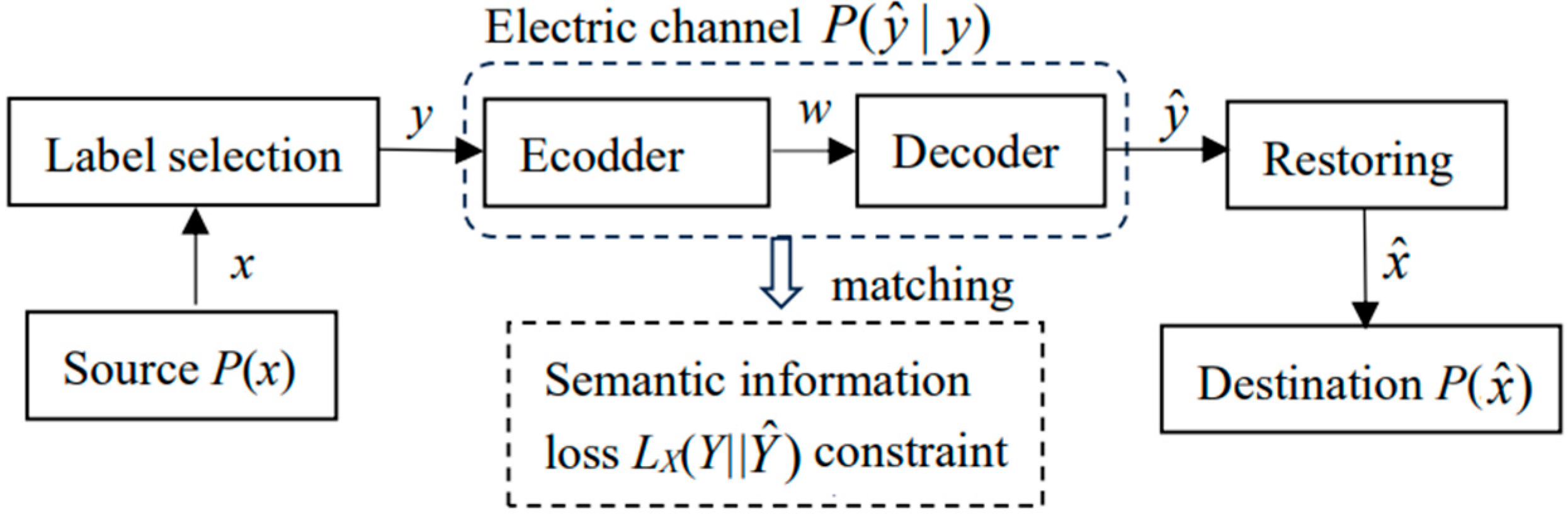

2.3. Semantic Channel and Semantic Communication Model

Shannon calls

P(

X)

, P(

Y|X),

and

P(

Y) the source, the channel, and the destination. Just as a

set of transition probability functions

P(

yj|x)(

j=1,

2, …) constitutes a Shannon channel, a set of truth value functions

T(

θj|x)

(

j=1,2,…) constitutes a semantic channel. The comparison of the two

channels is shown in

Figure 3. For

convenience, we also call

P(

x)

, P(

y|x), and

P(

y)

the source, the channel, and the destination, and we call

T(

y|x)

the semantic channel.

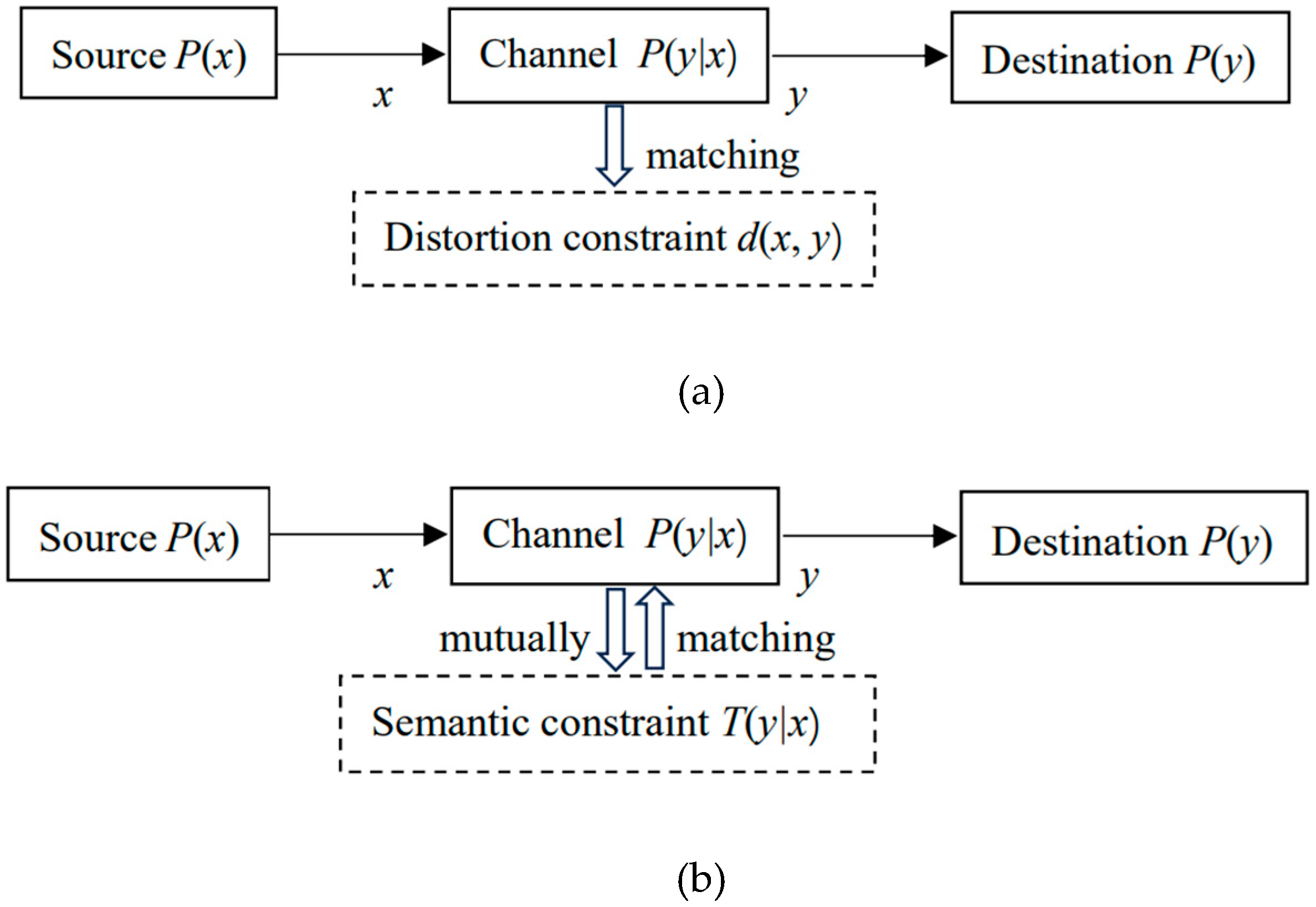

The semantic channel reflects the semantics or

extensions of labels, while the Shannon channel indicates the usage of labels.

The comparison between the Shannon and the semantic communication models is

shown in

Figure 4. The distortion

constraint is usually not drawn, but it actually exists.

The semantic channel contains information about the

distortion function, and the semantic information represents the communication

quality, so there is no need to define a distortion function anymore.

Optimizing the model parameters is to make the semantic channel match the

Shannon channel, that is, T(θj|x)∝P(yj|x)

or P(x|θj) = P(x|yj) (j=1,2,…),

so that the semantic MI reaches its maximum value and is equal to the Shannon

information. Conversely, when the Shannon channel matches the semantic channel,

the information difference reaches the minimum, or the information efficiency

reaches the maximum.

2.4. Generalizing Shannon Information Measure to Semantic Information G Measure

Shannon MI can be expressed as.

where H(X) is the entropy of X,

reflecting the minimum average code length. H(X|Y) is the

posterior entropy of X, reflecting the minimum average code length after

predicting x based on Y. Therefore, Shannon MI means the average

code length saved due to the prediction.

We replace P(xi|yj)

on the right side of the log with the likelihood function P(xi|θj).

Then we get the semantic MI:

where H(X|Yθ) is

the semantic posterior entropy of x:

H(

X|Yθ) is the free energy

F in the Variational Bayes method (VB) [

44,

45]

and the Minimum Free Energy (MFE) principle [

46].

The smaller it is, the greater the amount of semantic information.

H(

Yθ|X)

is called the fuzzy entropy, equal to the average distortion

. Because according to Equation (2), there is:

H(Yθ) is the semantic

entropy:

Note that P(x|yj) on the

left side of the log is used for averaging and represents the sample

distribution. It can be a relative frequency and may not be smooth or

continuous. P(x|θj) and P(x|yj)

may differ, reflecting that obtaining information needs factual testing. It is

easy to see that the maximum semantic MI criterion is equivalent to the maximum

likelihood criterion and is similar to the Regularized Least Squares (RLS)

criterion. Semantic entropy is the regularization term. Fuzzy entropy is a more

general average distortion than the average square error.

Semantic entropy has a clear coding meaning. Assume

that the sets θ1, θ2, … are crispy sets;

the distortion function is:

We regard

P(

Y) as the source and

P(

X)

as the destination, then the parameter solution of the information

rate-distortion function is [

31]:

It can be seen that the minimum Shannon MI is equal

to the semantic entropy, that is, R(D=0)=H(Yθ).

The following formula indicates the relationship

between Shannon MI and semantic MI and the encoding significance of semantic

MI:

where KL(…) is the Kullbak-Leibler (KL) divergence

with a likelihood function, which Akaike [

43] first

used to prove that the minimum KL divergence criterion is equivalent to the

maximum likelihood criterion. The last term in the above formula is always

greater than 0, reflecting the average code length of residual coding.

Therefore, the semantic MI is less than or equal to the Shannon MI; it reflects

the average code length saved due to semantic prediction.

From the above formula, the semantic MI reaches its

maximum value when the semantic channel matches the Shannon channel. According

to Equation (15), letting P(x|θj)= P(x|yj),

we can obtain the optimized truth function from the sample distribution:

When Y=yj, the semantic MI becomes the semantic KL information:

The KL divergence cannot usually be interpreted as information because the smaller it is, the better. But I(X; θj) above can be said to be information because the larger it is, the better.

Solving T*(θj|x) with equation (16) requires that the sample distributions P(x) and P(x|yj) are continuous and smooth. Otherwise, by using Equation (17), we can obtain:

The above method for solving

T*(

θj|x) is called Logical Bayesian Inference (LBI) [

8] and can be called the random point falling shadow method. This method inherits Wang's idea of random set falling shadow [

47,

48].

Suppose the truth function in (10) becomes a similarity function. In that case, the semantic MI becomes the estimated MI [

25], which has been used by deep learning researchers for Mutual Information Neural Estimation (MINE) [

34] and Information Noise Contrast Estimation (InfoNCE) [

35].

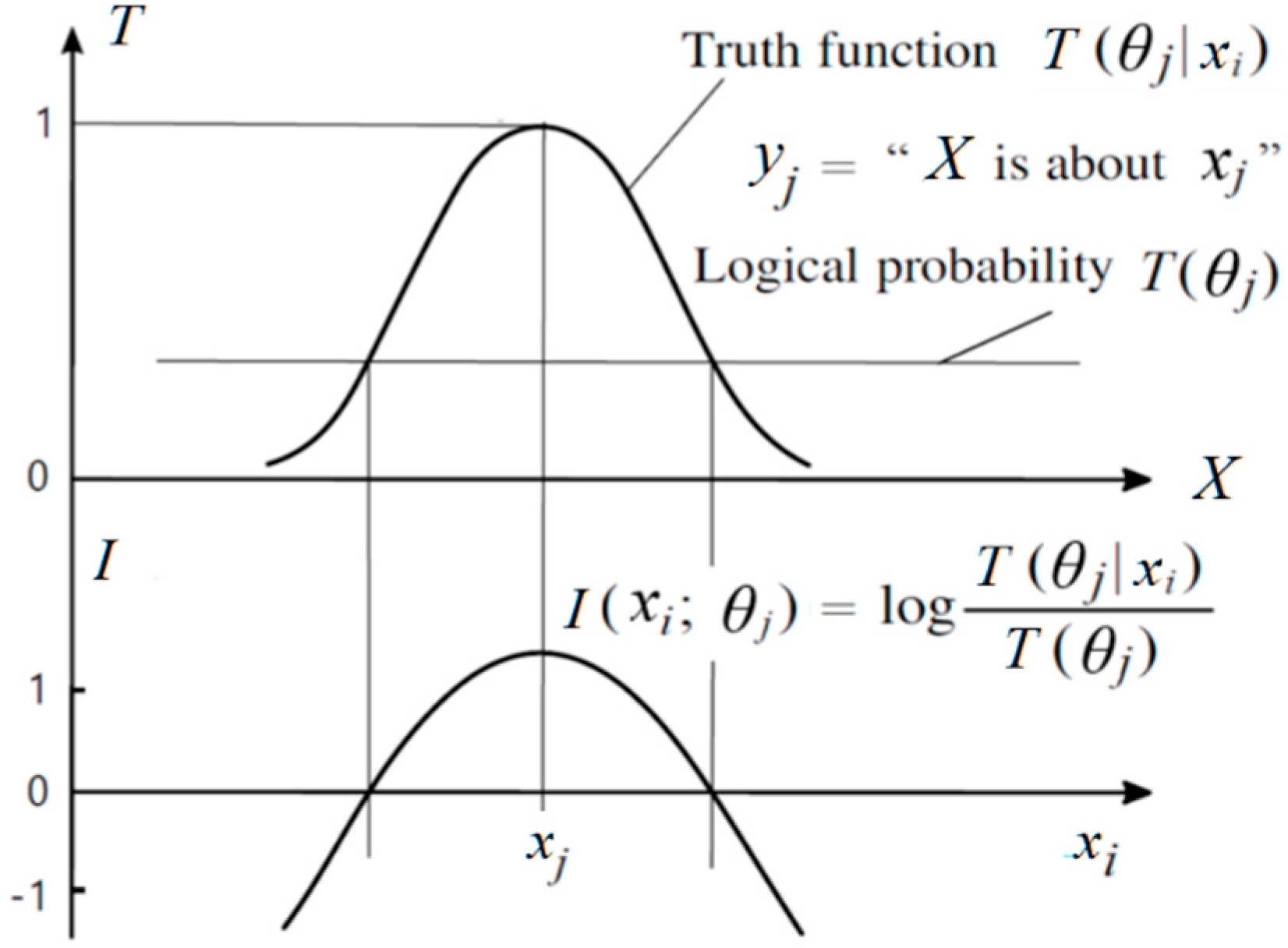

In the semantic KL information formula, when X=xi, I(X; θj) becomes the semantic information between a single instance xi and a single label yj:

The above formula reflects Popper's idea about factual testing.

Figure 5 illustrates the above formula. It shows that the smaller the logical probability, the greater the absolute value of the information; the greater the deviation, the smaller the information; wrong assumptions convey negative information.

Bring Equation (2) into (19), we have

which means I(X; θj) equals Carnap and Bar-Hillel's semantic information minus distortion. If T(θj|x) is always 1, the two amounts of information become equal.

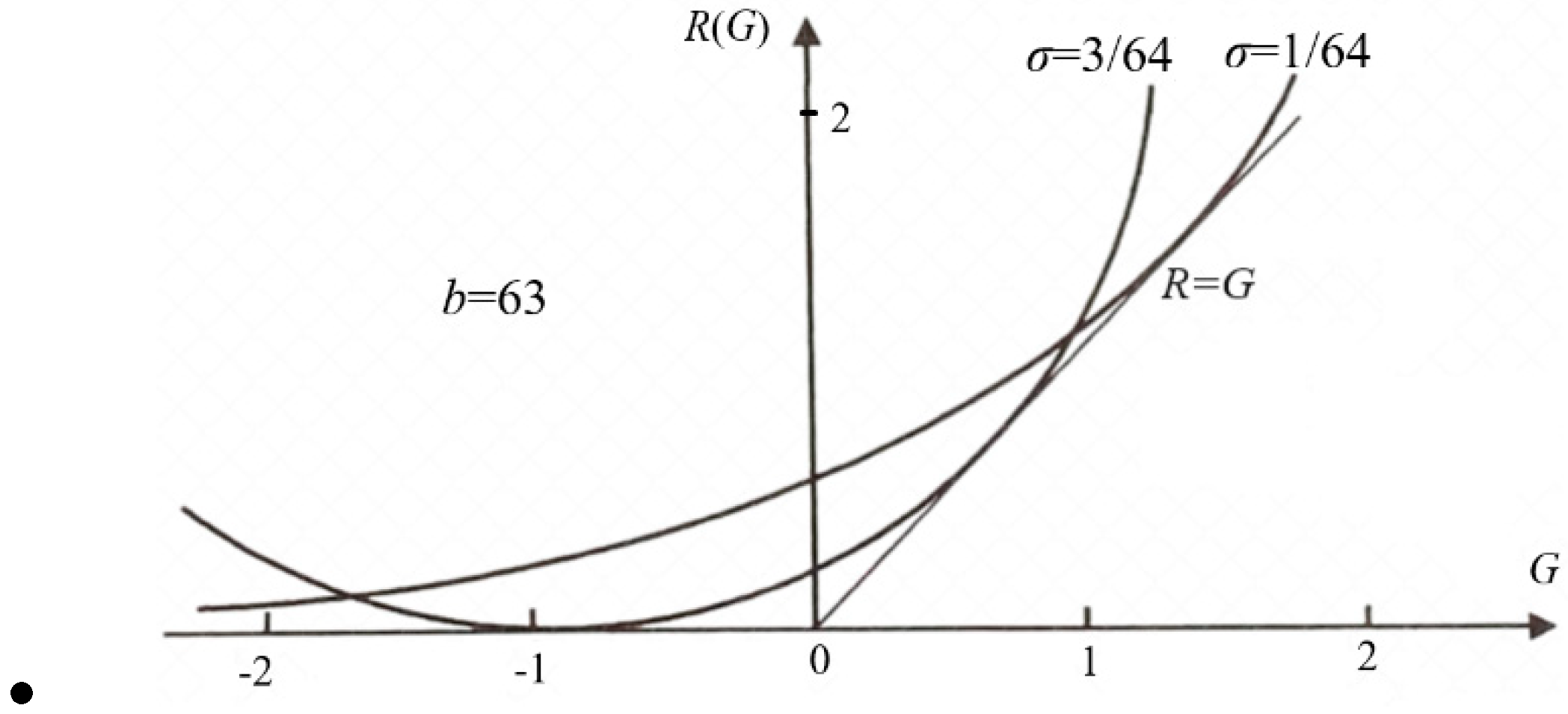

2.5. From the Information Rate-distortion Function to the Information Rate-fidelity Function

Shannon defines that given a source P(x), a distortion function d(y|x), and the upper limit D of the average distortion , we change the channel P(y|x) to find the minimum MI R(D). R(D) is the information rate-distortion function, which can guide us in using Shannon information economically.

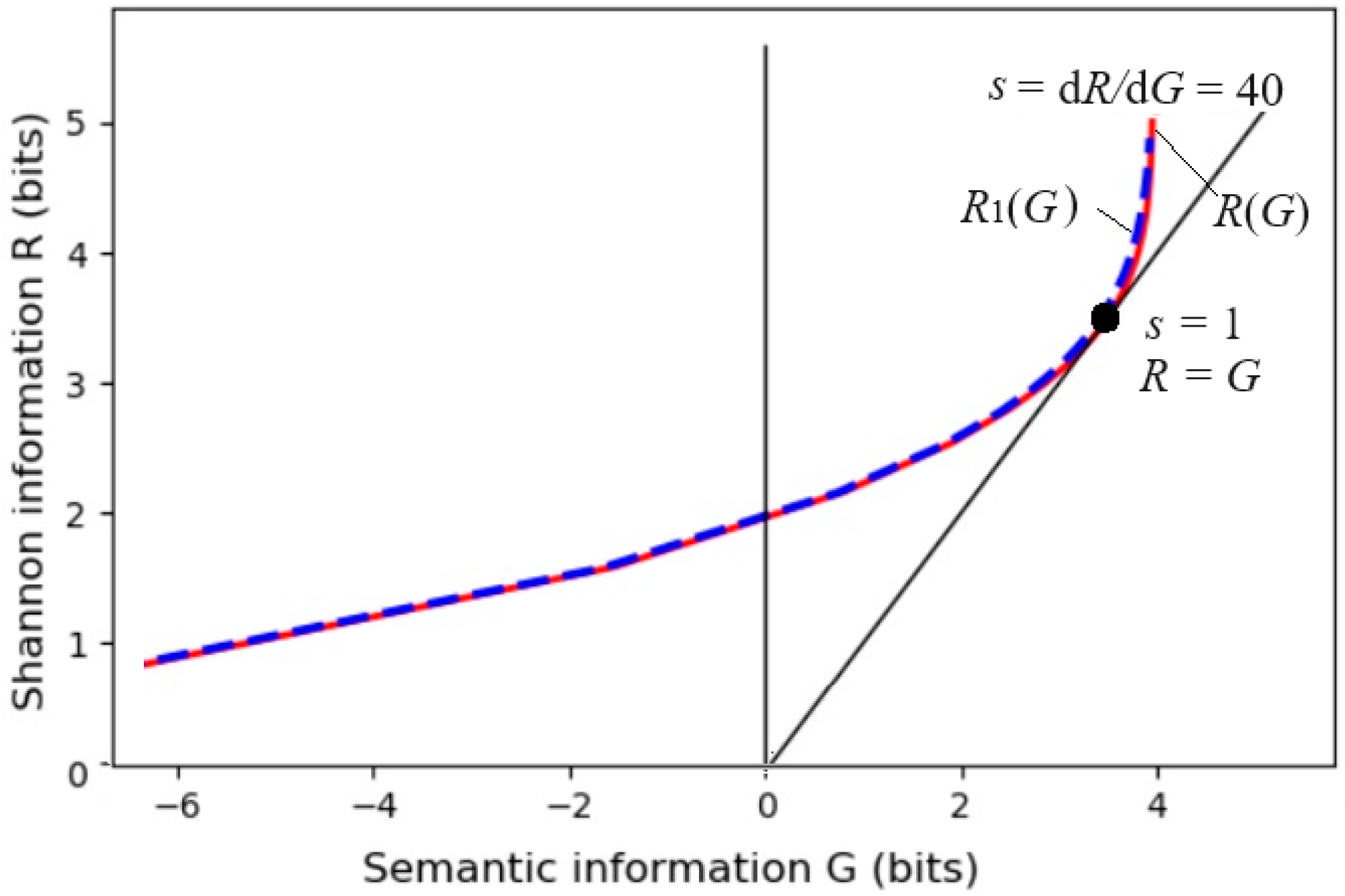

Now, we replace d(yj|xi) with I(xi; θj), replace with I(X; Yθ), and replace D with the lower limit G of the semantic MI to find the minimum Shannon MI R(G). R(G) is the information rate-fidelity function. Because G reflects the average code length saved due to semantic prediction, Using G as the constraint is more consistent in shortening the code length, and G/R can better reflect information efficiency.

The author uses the word "fidelity" because Shannon originally proposed the information rate-fidelity criterion [

12], and later used minimum distortion to express maximum fidelity. The author has previously referred to

R(

G) as "the information rate of keeping precision" [

16] or "information rate-verisimilitude" [

25].

The R(G) function is defined as

We use the Lagrange multiplier method to find the minimum MI. The Lagrangian function is:

Using P(y|x) a variation, we let . Then, we obtain:

where mij=P(xi|θj)/P(xi)=T(θj|xi)/T(θj). Using P(y) a variation, we let . Then, we obtain:

where

P+1(

yj) means the next

P(

yj). Because

P(

y|x) and

P(

y) are interdependent, we can first assume a

P(

y) and then repeat the above two formulas to obtain convergent

P(

y) and

P(

y|x) (see [

33] (P. 326)). We call this method the Minimum Information Difference (MID) iteration.

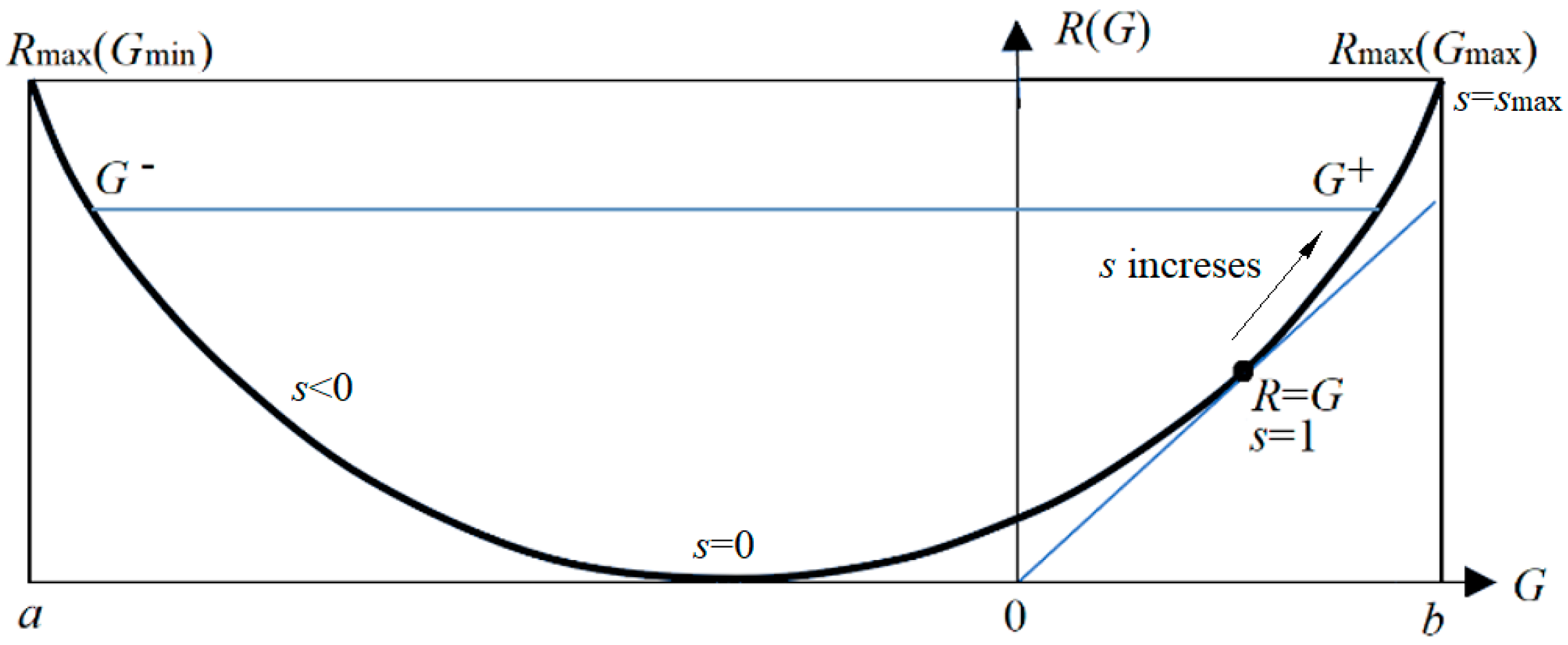

The parameter solution of the

R(

G) function (as illustrated in

Figure 6) is:

Any

R(

G) function is bowl-shaped (possibly not symmetrical) [

6], with the second derivative greater than 0. The

s = d

R/d

G is positive on the right. When

s = 1,

G equals

R, meaning the semantic channel matches the Shannon channel.

G/R represents information efficiency; its maximum is 1.

G has a maximum value G

+ and a minimum value G

– for given

R.

G– means how small the semantic information the receiver receives can be when the sender intentionally lies.

We can apply the

R(

G) function to image compression based on visual discrimination [

5,

6], maximum MI classification of unseen instances, the convergence proof of mixture models [

7], and semantic compression [

28].

It is worth noting that, given a semantic channel T(y|x), matching the Shannon channel with the semantic channel, i.e., letting P(yj|x) ∝ T(yj|x) or P(x|yj) = P(x|θj), does not maximize the semantic MI, but minimizes the information difference between R and G or the information efficiency G/R. Then, we can increase G and R simultaneously by increasing s. When s-->∞ in Equation (23), P(yj|x) (j = 1, 2, …, n) only takes the value 0 or 1, becoming a classification function.

We can also replace the average distortion with fuzzy entropy

H(

Yθ|X) (using semantic distortion constraints) to obtain the information rate truth function

R(

Θ) [

28]. In situations where information rather than truth is more important,

R(

G) is more appropriate than

R(

D) and

R(

Θ)

. P(

y) and

P(

y|x) obtained for

R(

Θ) are different from those obtained for

R(

G) because the optimization criteria are different. Under the minimum semantic distortion criterion,

P(

y|x) becomes:

where T(θxi|y) is a constraint function so that the distortion function d(xi|y) =–log T(θxi|y). R(Θ) becomes R(D). If T(θj) is small, the P(yj) required for R(G) will be larger than the P(yj) required for R(D) or R(Θ).

2.6. Semantic Channel Capacity

Shannon calls the maximum MI obtained by changing the source P(x) for the given Shannon channel P(y|x) the channel capacity. Because the semantic channel is also inseparable from the Shannon channel, we must provide both the semantic and Shannon channels to calculate the semantic MI. Therefore, after the semantic channel is given, there are two cases: 1) the Shannon channel is fixed; 2) we must first optimize the Shannon channel according to a specific criterion.

When the Shannon channel is fixed, the semantic MI is less than the Shannon MI, so the semantic channel capacity is less than or equal to the Shannon channel capacity. The difference between the two is shown in Equation (15).

If the Shannon channel is variable, we can use the MID iteration to find the Shannon channel for R=G after each change of the source P(x), and then use s––>∞ to find the Shannon channel P(y|x) that makes R and G reach their maxima simultaneously. At this time, P(y|x)∈{0,1} becomes the classification function. Then, we calculate the semantic MI. For different P(x), the maximum semantic MI is the semantic channel capacity. That is

where

Gmax is

G+ when

s—>∞ (see

Figure 6). Hereafter, the semantic channel capacity only refers to

CT(y|x) in the above formula.

Practically, to find CT(Y|X), we can look for x(1), x(2), …, x(j) ∈ U, which are instances under the highest points of T(y1|x), T(y2|x), …, T(yn|x) respectively. Let P(x(j))=1/n, j=1, 2, …, n, and the probability of any other x equals 0. Then we can choose the Shannon channel: P(yj|x(j))=1, j=1, 2, …, n. At this time, I(X; Y))=H(Y)=logn, which is the upper limit of CT(Y|X). If there is xi among the n x(j)s, which makes more than one truth function true, then either T(yj)>P(yj) or the fuzzy entropy is not 0. CT(Y|X) will be slightly less than logn in this case.

According to the above analysis, the encoding method to increase the capacity of the semantic channel is:

.Try to choose x that only makes one label's true value 1 (avoid ambiguity and reduce the logical probability of y);

Encoding should make P(yj|xj)=1 as much as possible (to ensure that Y is used correctly).

Choose P(x) so that each Y's probability and logical probability are as equal as possible (close to 1/n, thereby maximizing the semantic entropy).

3. Electronic Semantic Communication Optimization

3.1. Electronic Semantic Communication Model

The previous discussion of semantic communication did not consider conveying semantic information by electronic communication. Assuming that the time and space distance between the sender and the receiver is very far, we must transmit information through cables or disks. At this time, we need to add an electronic channel based on the previous communication model, as shown in

Figure 7:

Electronic semantic communication is still electronic communication, in essence. The difference is that we need to use semantic information loss instead of distortion as the optimization criterion. The choice of Y includes the optimization of the semantic channel P(y|x) and the Shannon channel T(y|x) between x and y.

3.2. Optimization of Electronic Semantic Communication with Semantic Information Loss as Distortion

Consider electronic semantic communication. If there is no distortion, that is,

ŷj=yj, the semantic information about

x transmitted by both is the same, and there is no semantic information loss. If

ŷj ≠ yj, there is semantic information loss. Farsad et al. call it the semantic error and propose the corresponding formula [

49,

50]. Papineni et al. also proposed a similar formula for translation [

51]. For more discussion, see [

52].

According to the G theory, the semantic information loss caused by using ŷj instead of yj is:

LX(yj||ŷj) is a generalized KL divergence because there are three functions. It indicates the average code length of residual coding.

Since the loss is generally asymmetric, there may be LX(yj||ŷj)≠LX(yj||ŷj). For example, when "motor vehicle" and "car" are substituted for each other, the information loss is asymmetric. The reason is that there is a logical implication relationship between the two. Using "motor vehicle" to replace "car", although it reduces information, it is not wrong; while using "car" to replace "motor vehicle" may be wrong, because the actual may be a truck or a motorcycle. When an error occurs, the semantic information loss is enormous. An advantage of using the truth function to generate the distortion function is that it reflects concepts' implications or similarity relationships.

Assuming that yj is the correct label used, it comes from sample learning, so P(x|θj)=P(x|yj), and LX(yj||ŷj)=KL(P(x|θj)||P(x|j)). The average semantic information loss is:

Consider using P(y) as the source and P(Ŷ) as the destination to encode y. Let d(ŷk|yj)=LX(yj||ŷk); we can obtain the information rate-distortion function R(D) for replacing Y with Ŷ. We can code Y for data compression according to the parameter solution of the R(D) function.

In the electronic communication part (from Y to Ŷ), other problems can be resolved by classical electronic communication methods, except for using semantic information loss as distortion.

If finding I(x;j) is not too difficult, we can also use I(x;j) as a negative distortion function. Minimizing I(X; ŷ) for given when G= I(X; ŷθ), we can get the R(G) function between x and Ŷ and compress the data accordingly.

3.3. Experimental Results: Compress Image Data According to Visual Discrimination

The simplest visual discrimination is the discrimination of human eyes to different colors or gray levels. The nest is the spatial discrimination of points. Suppose the movement of a point on the screen is not detected. In that case, the fuzzy movement range can represent the spatial position discrimination, which can be represented by a truth function (such as the Gaussian function). What is more complicated is to distinguish whether two figures are the same person. Advanced image compression needs to extract image features like Autoencoder and use features to represent images. The following methods need to be combined with the feature extraction method in deep learning to get better applications.

The simplest gray-level discrimination is taken as an example to illustrate digital image compression.

1) Measuring Color Information

A color can be represented by a vector (B, G, R). For convenience, we assume that the color is one-dimensional (or we only consider the gray level), expressed in x, and the color sense Y is the estimation of x, similar to the GPS indicator. The universes of x and Y are the same, and yj="x is about xj". If the color space is uniform, the distortion function can be defined by distance, that is, d(yj|x) = exp[–(x–xj)2/(2σ2)]. Then there is the average information of color perception, I(X; Yθ)=H(Yθ)–.

Given the source P(x) and the discrimination function T(y|x), we can solve P(y|x) and P(y) using the SVB method. The Shannon channel is matched with the semantic channel to maximize the information efficiency.

2) Gray Level Compression

We use an example to illustrate color data compression. Assuming that the original gray level is 256 (8-bit pixels) and is now compressed into 8 (3-bit pixels), we can define eight constraint functions, as shown in

Figure 8a.

Considering that human visual discrimination varies with the gray level (the higher the gray level, the lower the discrimination), we use the eight truth functions shown in

Figure 8a, representing eight fuzzy ranges. Appendix C in Reference [

28] shows how these curves are generated. The task is to use the Maximum Information Efficiency (MIE) criterion to find the Shannon channel

P(

y|x) that makes

R close to

G (

s=1).

The convergent

P(

y|x) is shown in

Figure 8b.

Figure 8c shows that Shannon MI and semantic MI gradually approach in the iteration process. Comparing figures 8a and 8b, we find it easy to control

P(

y|x) by

T(

y|x). However, defining the distortion function without using the truth function is difficult. It is also difficult to predict the convergent

P(

y|x) by

d(

y|x).

If we use s to strengthen the constraint, we get the parametric solution of the R(G) function. As s→∞, P(yj|x) (j=1,2,…) display as rectangles and becomes classification functions.

3) Influence of Discrimination and Quantization Level on the R(G) Function

Consider the semantic information of gray pixels. The discrimination function determines the semantic channel

T(

y|x), and the source entropy

H(

X) increases with the quantization level

b=2

n (

n is the number of quantization bits).

Figure 9 shows that when the quantization level is enough, the

R and

G variation range increases with the discrimination increasing (i.e., with

σ decreasing). The discrimination determines the semantic channel capacity.

For more discussion on visual information, see

Section 6 in [

6].

4. Goal-Oriented Information, Information Value, Physical Entropy and Free Energy

4.1. Three Kinds of Information Related to Value

We call the increment of utility the value. Information involves utility and value in three aspects:

1)Information about utility. For example, the information about university admission or the bumper harvest of grain is about utility.

The measurement of this information is the same as the previous semantic information measurement. Before providing information, we have the prior probability distribution P(x) of grain production. The information is provided in the form of range, such as "about 2000 kg per acre", which can be expressed by a truth function. The previous semantic information formula is also applicable.

2) Goal-oriented information. It is also purposeful information or constraint control feedback information.

For example, a passenger and a driver watch GPS maps in a taxi. Assume that the probability distribution of the taxi position without looking at the positioning map (or without some control) is P(x), and the destination is a fuzzy range, which a truth function can represent. The actual position is the probability distribution P(x|aj) (conditioned on action aj). The positioning map provides information. For the passenger, this is purposeful information (about how the control result comforts the purpose); for the driver, this is the control feedback information. We call both goal-oriented information. This information involves constraint control and reinforcement learning. The following section discusses the measurement and optimization of this information.

3) Information that brings value. For example, Tom made money by buying stocks based on John's prediction of stock prices. The information provided by John brings Tom increased utility, so John's information is valuable to Tom.

The value of information is relative. For example, weather forecast information is different for workers and farmers, and forecast information about stock markets is worth 0 to people who do not buy stocks. The value of information is often difficult to judge. For example, defining value losses due to missed reporting and false reporting is difficult regarding medical cancer tests. In most cases, missed reporting of low-probability events often causes more loss than false reporting, such as for medical tests and earthquake forecasts. In these cases, the semantic information criterion can be used to reduce missed reporting of low-probability events.

For investment portfolios, quantitative analysis of information value is possible.

Section 4.3 focuses on the information value of portfolios.

4.2. Goal-Oriented Information

4.2.1. Similarities and Differences Between Goal-Oriented Information and Prediction Information

Previously, we used the G measure to measure prediction information, requiring the prediction P(x|θj) to conform to the fact P(x|yj). Goal-oriented information is the opposite, requiring the fact to conform to the purpose.

An imperative sentence can be regarded as a control instruction. We need to know whether the control result conforms to the control purpose. The more consistent the result is, the more information there is.

A truth function or a membership function can represent a control target. For example, there are the following targets:

"Workers' wages should preferably exceed 5000 dollars";

"The age of death of the population had better exceed 80 years old";

"The cruising distances of electric vehicles should preferably exceed 500 kilometers";

"The error of train arrival time had better be less than one minute".

The semantic KL information formula can measure purposeful information:

In the formula, θj is a fuzzy set, indicating that the control target is a fuzzy range. yj here becomes aj, indicating the action corresponding to the j-th control task yj. If the control result is a specific xi, the above formula becomes the semantic information I(xi; aj|θj).

If there are several control targets y1, y2,… we can use the semantic MI formula to express the purposeful information:

where A is a random variable taking a value a or aj. Using SVB, the control ratio P(a) can be optimized to minimize the control complexity (i.e., Shannon MI) when the purposive information is the same.

4.2.2. Optimization of Goal-Oriented Information

Goal-oriented information can be regarded as the cumulative reward in constraint control. However, the goal here is a fuzzy range, which is expressed by a plan, command, or imperative sentence. The optimization task is similar to the active inference task using the MFE principle [

46].

The semantic information formulas of imperative and descriptive (or predictive) sentences are the same, but the optimization methods differ (see

Figure 10). For descriptive sentences, the fact is unchanged, and we hope that the predicted range conforms to the fact, that is, fix

P(

yj|x) so that

T(

θj|x)∝

P(

yj|x), or fix

P(

x|yj) so that

P(

x|θj)=

P(

x|yj). For imperative sentences, we hope that the fact conforms to the purpose, that is, fix

T(

θj|

x) or

P(

x|θj), and minimize the information difference or maximize the information efficiency

G/R by changing

P(

yj|x) or

P(

x|yj), or balance between the purposiveness and the efficiency.

For multi-target tasks, the objective function to be minimized is:

When the actual distribution P(x|aj) is close to the constrained distribution P(x|θj), the information efficiency (not information) reaches its maximum value of 1. To further increase the two types of information, we can use the MID iteration formula to obtain:

Compared with VB [

47,

48], the above method is simpler and can change the constraint strength by

s.

Because the optimized P(x|aj) is a function of θj and s, we write P*(x|aj)=P(x|θj, s). It is worth noting that many distributions P(x|aj) satisfy the constraint and maximize I(X; aj/θj), but only P*(x|aj) minimizes I(X; aj).

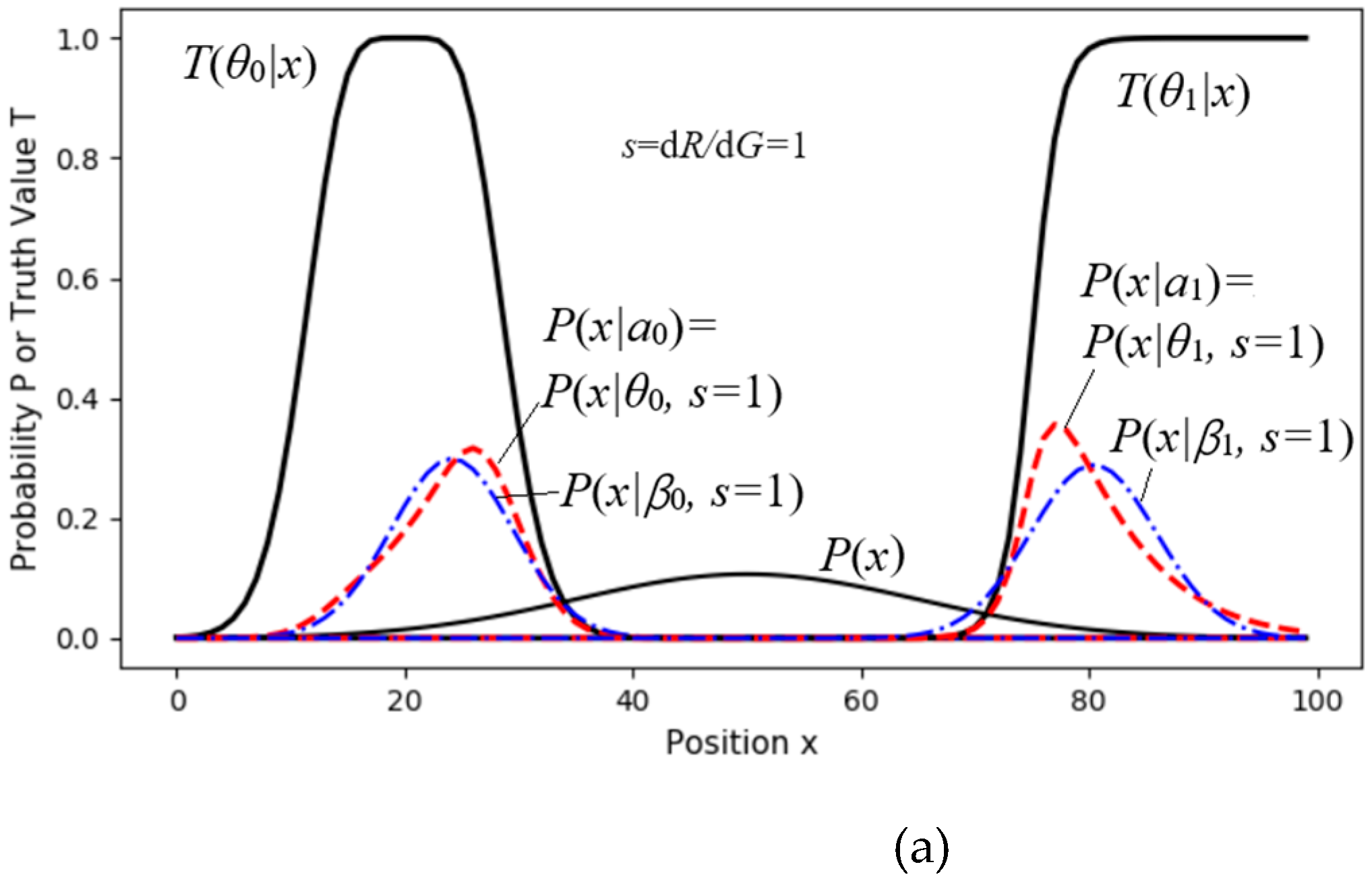

4.2.3. Experimental Results: Trade-Off Between Maximizing Purposiveness and MIE

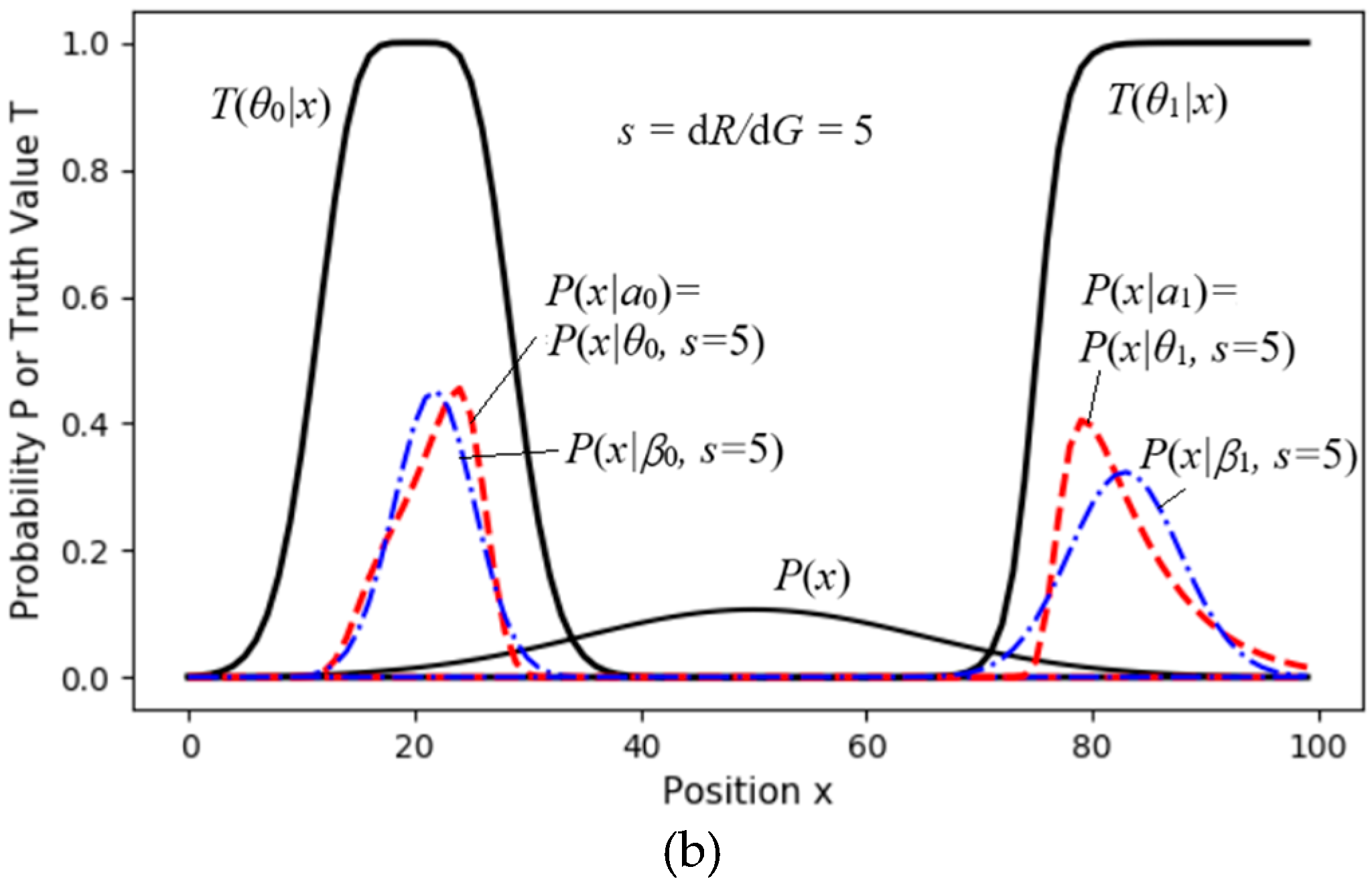

Figure 11shows a two-objective control task, with objectives represented by the truth functions

T(

θ0|x) and

T(

θ1|x). We can imagine these as two pastures with fuzzy boundaries where we need to herd sheep. Without control, the density distribution of the sheep is

P(

x). We need to solve an appropriate distribution

P(

a).

For different s, we set the initial proportions: P(a0)=P(a1)=0.5. Then, we used (33) and (34) for the MID iteration to obtain proper P(aj|x) (j=0,1). Then, we got P(x|aj)=P(x|θj,s) by using (33). Finally, we obtained G(s), R(s), and R(G) by using (25).

The dashed line for

R1(

G) in

Figure 12 indicates that if we replace

P(

x|aj)=

P(

x|θj,

s) with a normal distribution,

P(

x|βj,

s),

G and

G/

R1 do not obviously become worse.

4.3. Investment Portfolios and Information Values

4.3.1. Capital Growth Entropy

Markowitz's portfolio theory [

53] uses a linear combination of expected income and standard deviation as the optimization criterion. In contrast, the compound interest theory of portfolios uses compound interest, i.e., geometric mean income, as the optimization criterion.

The compound interest theory began with Kelley [

54], followed by Latanne and Tuttle [

55], Arrow [

56], Cover [

57], and the author of this article. The famous American information theory textbook "Elements of Information Theory" [

58] co-authored by Cover and Thomas, introduced Cover's research. Arrow, Cover, and the author of this article also discussed the value of information. The author published a monograph, "Entropy Theory of Portfolios and Information Value" [

59] 1997, and obtained many different conclusions.

The following is a brief introduction to the Capital Growth entropy proposed by the author.

Assuming that the principal is A, the profit is B, and the sum of principal and interest is C. The investment income is r=B/A, and the rate of return on investment (i.e., output ratio: output/input) is R=C/A=1+r.

N security prices form an N-dimensional vector, and the price of the k-th security has nk possible prices, k=1, 2, ..., N. There are W=n1×n2×...×nN possible price vectors. The i-th price vector is xi = (xi1, xi2, ... xiN), i=1, 2, ..., W; the current price vector is x0=(x01, x02, ..., x0N). Assuming that one year later, the price vector xi occurs, then the rate of return of the k-th security is Rik=xik/x0k, and the total rate of return is:

where qk is the investment proportion in the k-th security, q0 is the proportion of cash (or risk-free assets) held by the investor; R0k=R0=(1+r0), r0 is the risk-free interest rate.

Suppose we conduct m investment experiments to get the price vectors, and the number of times xi or ri occurs is mi. The average multiple of the capital growth after each investment period or the geometric mean output ratio is

When m→∞, mi/m=P(xi), we have the capital growth entropy

If the log is base 2, Hg represents the doubling rate.

If the investment turns into betting on horse racing, where only one horse (the k-th horse) wins each time. The winner's return rate is Rk, and the others lose their wagers. Then, the above formula becomes

where q0 is the proportion of funds not betted, and 1–q0–qk is the proportion of funds paid.

4.3.2. Generalization of Kelley's Formula

Kelley, a colleague of Shannon, found that the method used by Shannon's information theory can be used to optimize betting, so he proposed the Kelley formula [

54].

Assume that In a gambling game, if you lose, you will lose r1=1 time; if you win, you will earn r2 >0 times. The probability of winning is P, then the optimal ratio is:

Using the capital growth entropy can lead to more general conclusions. Let r1 < 0. The capital growth entropy is:

Letting dHg/dq=0, we derive:

where E is the expected income. For example, for a coin toss bet, if one wins, he earns twice as much; if he loses, he loses 1 time; the probabilities of winning and losing are equal. Then E is 0.5, and the optimal investment ratio is q*=0.5/(1*2)=0.25.

Assuming r0=0 above, if we consider the opportunity cost or the risk-free income, then r0>0. At this time, the optimal ratio is:

Letting dHg/dq=0, we can get:

where R0=1+r0, d1=r1+r0, d2=r2–r0.

The book [

59] also discusses optimizing the investment ratio when short selling and leverage are allowed (see

Section 3.3) and optimizing the investment ratio for multi-coin betting. The book derives the limit theorem of diversified investment: If the number of coins increases infinitely, the geometric mean income equals the arithmetic mean income.

4.3.3. Risk Measurement, Investment Channels, and Investment Channel Capacity

Markowitz uses expected income E and standard deviation σ to represent the income and risk of a portfolio. Similarly, we use Rg and Rr to represent the return and risk of a portfolio. Rr is defined in the following formula:

where Ra=1+E. Assuming that the geometric mean return of any portfolio is equivalent to the geometric mean return of a coin toss bet with an equal probability of gain or loss, then

Let sinα=

Rr/Ra ϵ[0,1], which represents the bankruptcy risk better. When sinα is close to 1, the investment may go bankrupt (see

Figure 3.9).

Figure 14.

Relationship between relative risk sinα and Rr, Ra, and Rg.

Figure 14.

Relationship between relative risk sinα and Rr, Ra, and Rg.

We call the pair (P, R) the investment channel, where P=(P1, P2, ... PM) is the future price vector, R=(Rik) is the return matrix, and the set of all possible investment ratio vectors is qC. Then, the capacity of the investment channel (abbreviated as investment capacity) is defined as

where q*= q*(R, P) is the optimal investment ratio.

For example, for a typical coin toss bet (with equal probabilities of winning and losing, and r0=0), q*=E/(r1r2), the investment capacity is:

Since 1/(1–x)=1+x+x2+…≈1+x, when E/Rr<<1, there is an approximate formula:

In comparison with the Gaussian channel capacity formula for communication:

we can see that the investment capacity formula is very similar to the Gaussian channel capacity formula. This similarity means that investment needs to reduce risk, just as communication needs to reduce noise.

4.3.4. Information Value Formula Based on Capital Growth Entropy

Weaver, who co-authored the book "A Mathematical Theory of Communication" [

30] with Shannon, proposed three communication levels related to Shannon' 's information, semantic information, and information value.

According to the common usage of "information value", information value mentioned in the academic community does not refer to the value of information on markets but to the utility or utility increment generated by information. We define the information value as the increment of capital growth entropy [

59].

Assume that the prior probability distribution of different returns is P(x), and the return matrix is (Rik), then the expected capital growth entropy is Hg(X). The optimal investment ratio vector q* is q*=q*(P(x),(Rik)). When the probability distribution of the predicted return becomes P(x|θj), the capital growth entropy becomes

The optimal investment ratio becomes q**=q**(P(x|θj), (Rik)). We define the increment of the capital growth entropy obtained after the semantic KL information I(X; θj) is provided as the information value (i.e., the average information value):

It can be seen that V(X; θj) and I(X; θj) have similar structures. For the above formula, when xi is determined to occur, the information value of yj becomes

Information value also needs to be verified by facts; wrong predictions may bring negative information value.

4.3.5. Comparison with Arrow's Information Value Formula

The utility function defined by Arrow is [

56]:

where U(qiRi) is the utility obtained by the investor when the i-th return occurs.

Under the restriction of ∑i qi=1, qi=Pi(i=1,2,…) maximizes U so that

After receiving the information, the investor knows which income will occur and thus invests all his funds in it. Hence, there is

The information value is defined as the difference in utility between investment with and without information and is equal to Shannon entropy, that is

The optimal investment ratio obtained from the above formula is inconsistent with the Kelley formula and the conclusion of the author of this article. For example, according to the Kelley formula, the optimal ratio is 25% for the coin toss bet above. The compound interest is 0.061%, and the investment capacity is 0.084<<1 bit.

According to Arrow's theory, how does one bet? Should one bet 50% on each of the profit and loss?

Arrow seems to confuse the k-th security with the i-th return. He uses U(qiRi)=log(qiRi), while the author uses

Arrow does not consider the non-bet proportion q0, nor the paid proportion 1–q0–qk. The utility calculated in this way is puzzling.

Cover and Thomas inherited Arrow's method and concluded that when there is information, the optimal investment doubling rate increment equals Shannon MI [

57] (see

Section 6.2). Their conclusion has the same problem.

4.4. Information, Entropy, and Free Energy in Thermodynamic Systems

To clarify the relationship between information and free energy in physics, we discuss information, entropy, and free energy in thermodynamic systems.

According to Stirling's formula, ln

N! =

Nln

N − N (when

N→∞), there is a simple connection between Boltzmann entropy and Shannon entropy [

60]:

where S' is entropy, k is the Boltzmann constant, xi is the i-th microscopic state, N is the number of molecules, and T is the absolute temperature, which equals a molecule's average translational kinetic energy. P(xi|T) represents the probability density of molecules in state xi at temperature T. The Boltzmann distribution is:

where Z is the partition function.

Considering the information between temperature and molecular energy, we use xi as energy ei. Let Gi denote the number of microscopic states with energy ei and G denote the number of all states. Then P(xi) = Gi/G is the prior probability of xi. So, Equation (58) becomes:

Under the energy constraint, when the system reaches equilibrium, Equation (59) becomes:

Now, we can interpret exp[−ei/(kT)] as the truth function T(θj|x), Z as the logical probability T(θj), and Equation (61) as the semantic Bayesian formula.

S and S' differ by a constant c (which does not change with temperature). There is S’=S+c, c=∑i P(xi)lnGi. If c is ignored, there is lnG=H(X)+c=H(X), and

Consider a local non-equilibrium system. Different regions yj(j = 1, 2, …) of the system have different temperatures Tj(j = 1, 2, …), so we have

Since P(yj)=Nj/N, we can get:

This formula shows the relationship between Shannon MI and physical entropy. It shows that the physical entropy S is similar to the posterior entropy H(X|Y) of x. The above formula shows that the Maximum Entropy (ME) law in physics can be equivalently expressed as the minimum MI law.

According to (47) and (48), when the local equilibrium is reached, there is

The above formula shows that for a local equilibrium system, the minimum Shannon MI can be expressed by the semantic MI formula.

Helmholtz's free energy formula is:

where F is free energy, and E is the system's internal energy. When the internal energy and temperature remain unchanged, the increase in free energy is

Comparing the above equation with Equations (63) and (64), we can find that Shannon MI is like the increase in free energy; semantic MI is like the increase in local equilibrium systems, which is smaller than Shannon MI, just as work is smaller than free energy. We can also regard k

NT and k

NTj as the unit information values [

5], so the increase in free energy is similar to the increase in information value.

5. The G Theory for Machine Learning

5.1. Basic Methods of Machine Learning: Learning Functions and Optimization Criteria

The most basic machine learning method is:

First, we use samples or sample distributions to train the learning functions with a specific criterion, such as maximum likelihood or RLS criterion;

Then, we make probability predictions or classifications utilizing the learning function with minimum distortion, minimum loss, or maximum likelihood criteria.

When learning, we generally use maximum likelihood or RLS criteria; the criteria may differ for different tasks when classifying. For prediction tasks where information is important, we generally use maximum likelihood and RLS criteria. To judge whether a person is guilty or not, where correctness is essential, we may use the minimum distortion (or loss) criterion. The maximum semantic information criterion is equivalent to the maximum likelihood criterion, similar to the RLS criterion. Compared with the minimum distortion criterion, the maximum semantic information criterion can reduce the underreporting of small probability events.

We generally do not use P(x|yj) to train P(x|θj), because if P(x) changes, the originally trained P(x|θj) will become invalid. Using parameterized transition probability function P(θj|x) as a learning function is unaffected by P(x) changes. However, using P(θj|x) as a learning function also has essential defects. When category n >2, it is difficult to construct P(θj|x)(j=1,2,…) because of the normalization restriction, that is, ∑j P(θj|x)=1 (for each x). As we will see below, there is no restriction when using truth or membership functions as learning functions.

5.2. For Multi-Label Learning and Classification

Consider multi-label learning, a supervised learning task. From the sample {(xk, yk), k = 1,2,…, N}, we can get the sample distribution P(x, y). Then, use formula (16) or (18) for the optimized truth functions.

Assume that a truth function is a Gaussian function, there should be:

So, we can use the expectation and standard deviation of P(x|yj)/P(x) or P(yj|x) as the expectation and standard deviation of T(θj|x). If the truth function is like a dam cross-section, we can get it through some transformation.

Figure 15.

Using prior and posterior distributions

P(

x) and

P(

x|yj) to obtain the optimized truth function

T*(

θj|

x). For details, see Appendix B in [

8].

Figure 15.

Using prior and posterior distributions

P(

x) and

P(

x|yj) to obtain the optimized truth function

T*(

θj|

x). For details, see Appendix B in [

8].

If we only know P(yj|x) but not P(x), we can assume that P(x) is equally probable, that is, P(x)=1/|U|, and then optimize the membership function using the following formula:

For multi-label classification, we can use the classifier:

If the distortion criterion is used, we can use –log T(θj|x) as the distortion function or replace I(X; θj) with T(θj|x).

The popular binary relevance method (Binary Relevance [

61]) converts an

n-label learning task into an

n-pair label learning task. In comparison, the channel matching method is much simpler.

5.3. Maximum MI Classification for Unseen Instances

This type of classification belongs to semi-supervised learning. We take the medical test and the signal detection as examples (see

Figure 16).

Figure 5.

Illustrating the medical test and the signal detection. We choose yj according to z∈Cj. The task is to find the dividing point z' that results in MaxMI between X and Y.

Figure 5.

Illustrating the medical test and the signal detection. We choose yj according to z∈Cj. The task is to find the dividing point z' that results in MaxMI between X and Y.

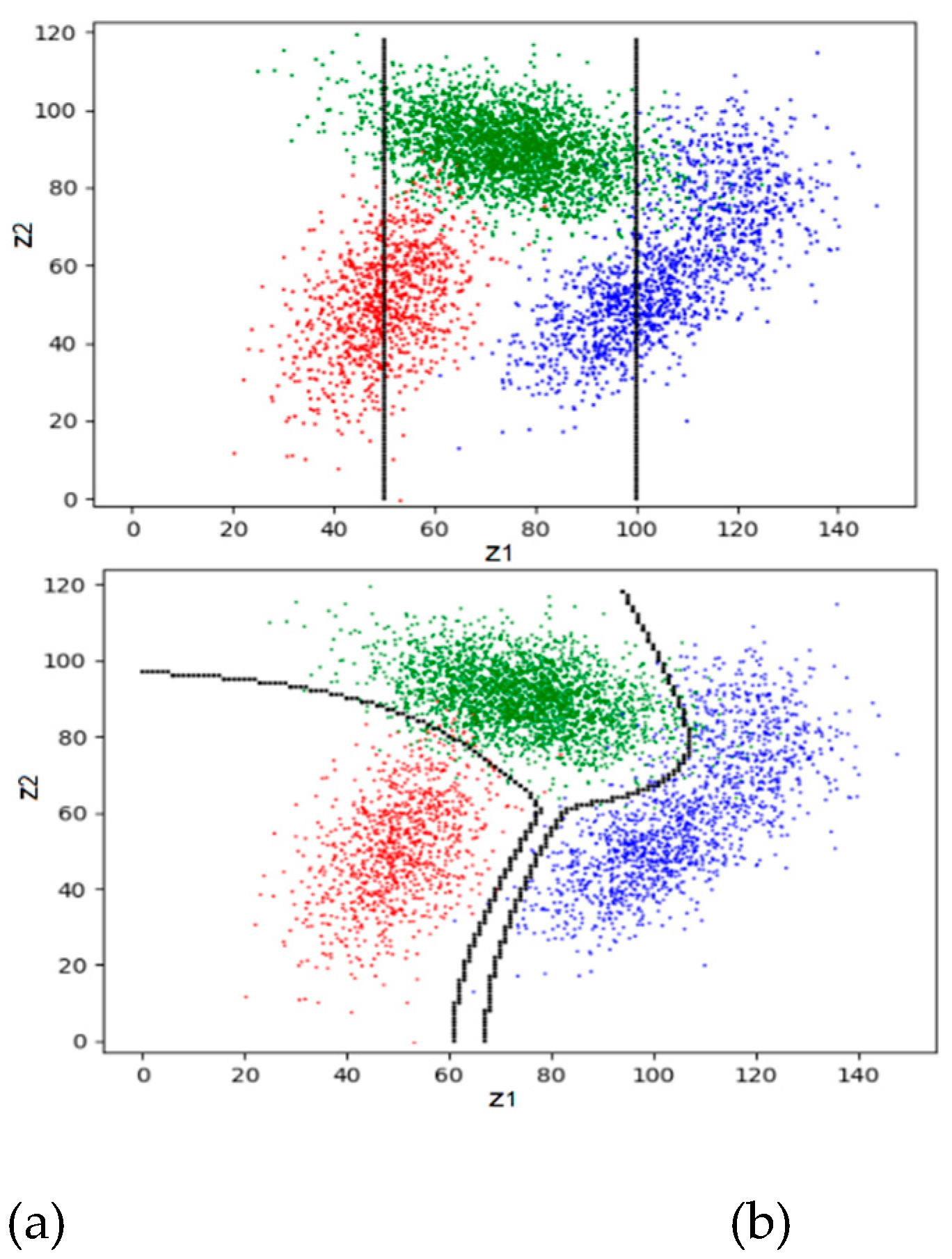

The following algorithm is not limited to binary classifications. Let Cj be a subset of C and yj = f(z|z∈Cj); hence S={C1, C2, …} is a partition of C. Our task is to find the optimized S, which is

First, we initiate a partition. Then we do the following iterations.

Matching I: Let the semantic channel match the Shannon channel and set the reward function. First, for given S, we obtain the Shannon channel:

Then we obtain the semantic channel T(y|x) from the Shannon channel and T(θj) (or mθ(x,y) = m(x, y)). Then we have I(xi; θj). For given z, we have conditional information as the reward function:

Matching II: Let the Shannon channel match the semantic channel by the classifier:

Repeat

Matching I and

Matching II until

S does not change. Then, the convergent

S is

S* we seek. The author explained the convergence with the

R(

G) function (see

Section 3.3 in [

13]).

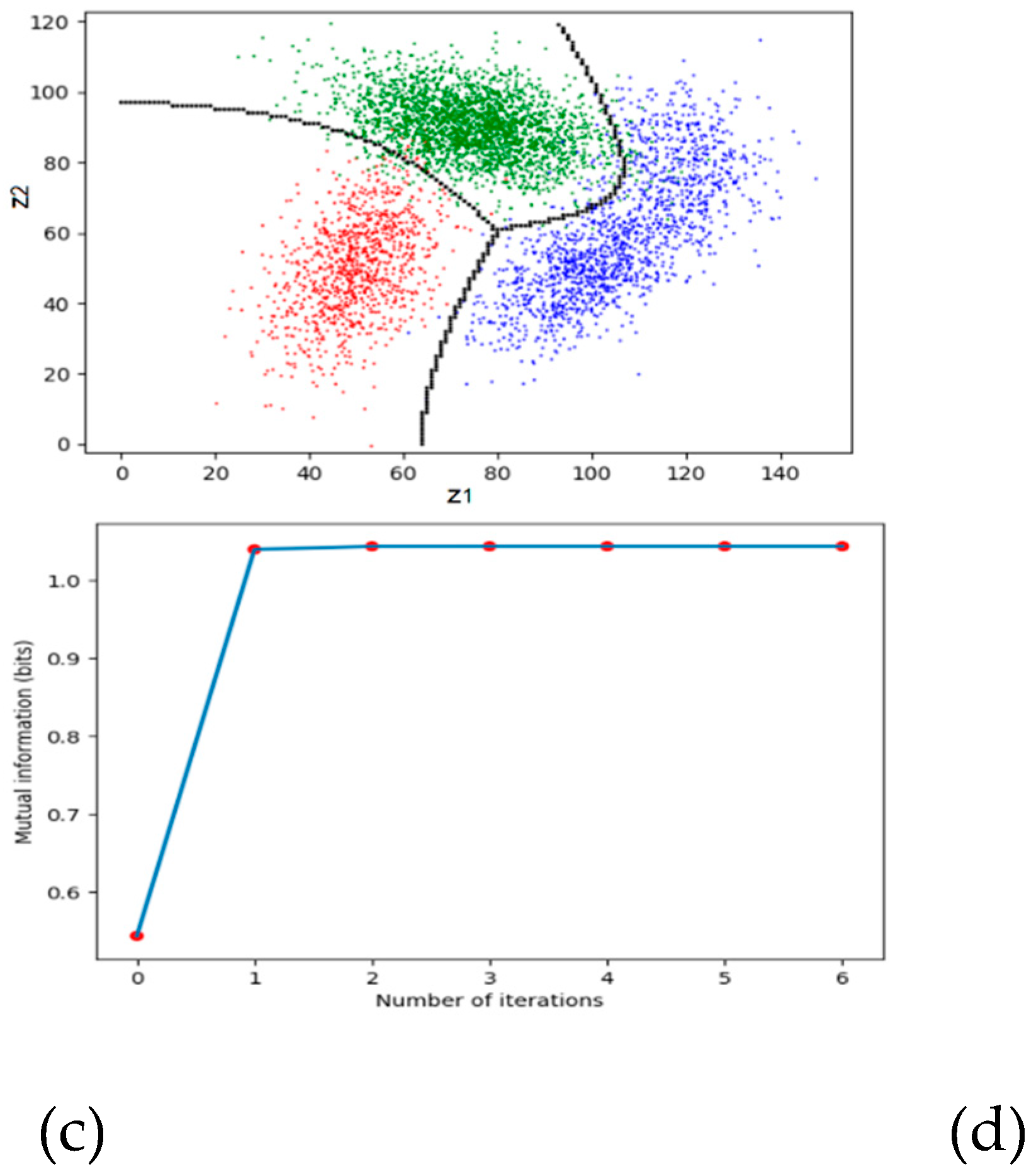

Figure 6 shows an example. The detailed data can be found in

Section 4.2 of [

13]. The two lines in

Figure 6a represent the initial partition.

Figure 6d shows that the convergence is very fast.

Figure 7.

The maximum MI classification. (a) A very bad initial partition; (b) after the first iteration; (c) after the second iteration; (d) the MI changes with the iteration number.

Figure 7.

The maximum MI classification. (a) A very bad initial partition; (b) after the first iteration; (c) after the second iteration; (d) the MI changes with the iteration number.

However, this method is unsuitable for maximum MI classification in high-dimensional space. We need to combine neural network methods to explore more effective approaches.

5.4. Explanation and Improvement of the EM Algorithm for Mixed Models

The EM algorithm [

45,

62,

63] is usually used for mixed models or clustering, an unsupervised learning method.

We know that P(x)=∑j P(yj)P(x|yj). Given a sample distribution P(x), we use Pθ(x)=∑j P(yj)P(x|θj) to approximate P(x) so that the relative entropy or KL divergence KL(P‖Pθ) is close to 0. P(y) is the probability distribution of the latent variable to be sought.

The EM algorithm first presets P(x|θj) and P(yj), j = 1, 2, …, n. E-step obtains:

Then, in the M-step, the log-likelihood of the complete data (usually represented by Q) is maximized. The M-step can be divided into two steps: M1-step for

and M2-step for

which optimizes the likelihood function. For Gaussian mixture models, we can use the expectation and standard deviation of P(x)P(yj|x)/P+1(yj) as the expectation and standard deviation of P(x|θj+1).

From the perspective of the G theory, the M2-step is to make the semantic channel match the Shannon channel, the E-step is to make the Shannon channel match the semantic channel, and the M1-step is to make the destination P(y) match the source P(x). Repeating the above three steps can make the mixture model converge. The converged P(y) is the required probability distribution of the latent variable. According to the derivation process of the R(G) function, the E-step and M1-step minimize the information difference R–G; the M-step maximizes the semantic MI. Therefore, the optimization criterion used by the EM algorithm is the MIE criterion.

However, there are two problems with the above method to find the latent variable: 1) P(y) may converge slowly; 2) If the likelihood functions are also fixed, how do we solve P(y)?

Based on the R(G) function analysis, the author improved the EM algorithm to the EnM algorithm [

64]. The EnM algorithm includes the E-step for

P(

y|x), n-step for

P(

y), and M-step for

P(

x|

θj)(

j=1,2,…). The n-step repeats the E-step and M1-step in the EM algorithm

n times so that

P+1(

y) ≈

P(

y). The EnM algorithm also uses the MIE criterion. The n-step can speed up the solution of

P(

y). M2-step only optimizes the likelihood functions. Because

P(

yj)

/P+1(

yj) is approximately equal to 1, we can use the following formula to optimize the model parameters:

Without n-step, there will be P(yj) ≠ P+1(yj), and ∑ i P(xi)P(x|θj)/Pθ(xi) ≠ 1. When solving the mixed model, we can choose a smaller n, such as n=3. When solving P(y) specifically, we can select a larger n until P(y) converges. When n=1, the EnM algorithm becomes the EM algorithm.

The following mathematical formula proves that the EnM algorithm converges. After the M-step, the Shannon MI becomes:

We define:

Then, we can deduce that after E-step, there is

where KL(P||Pθ) is the relative entropy or KL divergence between P(x) and Pθ(x); the right KL divergence is:

It is close to 0 after the n-step.

Equation (81) can be used to prove that the EnM algorithm converges. Because the M-step maximizes G, and the E-step and the n-step minimize R–G and KL(PY +1)‖PY), H(P‖Pθ) can be close to 0. We can also use the above method to prove that the EM algorithm converges.

In most cases, the EnM algorithm performs better than the EM algorithm [

64], especially when

P(

y) is hard to converge.

Some researchers believe that EM makes the mixture model converge because the complete data log-likelihood

Q = –

H(

X,Yθ) continues to increase [

39], or negative free energy

F′=

H(

Y)

+Q continues to increase [

45]. However, we can easily find counterexamples where

R–G continues to decrease, but

Q and

F′ do not necessarily continue to increase.

Figure 18 shows the example used by Neal and Hinton [

45], but the mixture proportion in the true model is changed from 0.3:0.7 to 0.7:0.3.

Figure 18.

The convergent process of the mixture model from Neal and Hinton [

45]. The mixture proportion is changed from 0.7:0.3 to 0.3:0.7. (a) The iteration starts; (b) the iteration converges; (c) the iteration process.

P(

x, θj)

=P(

yj)

P(

x|θj) (

j=0, 1).

Figure 18.

The convergent process of the mixture model from Neal and Hinton [

45]. The mixture proportion is changed from 0.7:0.3 to 0.3:0.7. (a) The iteration starts; (b) the iteration converges; (c) the iteration process.

P(

x, θj)

=P(

yj)

P(

x|θj) (

j=0, 1).

This experiment shows that the decrease in R–G, not the increase in Q or F', is the reason for the convergence of the mixture model.

The free energy of the true mixture model (with true parameters) is the Shannon conditional entropy

H(

X|Y). If the standard deviation of the true mixture components is large,

H(

X|Y) is also large. If the initial standard deviation is small,

F is small initially. After the mixture model converges,

F must be close to

H(

X|Y). Therefore,

F increases (i.e.,

F' decreases) during the convergence process. Many experiments [

25,

64] have shown that this is indeed the case.

Equation (77) can also explain pre-training in deep learning, where we need to maximize the model's predictive ability and minimize the information difference R–G (or compress data).

5.5. Semantic Variational Bayes: A Simple Method for Solving Hidden Variables

Given

P(

x) and constraints

P(

x|

θj),

j=1,2,…, we need to solve

P(

y) that produces

P(

x)=∑

j P(

yj)

P(

x|

θj).

P(

y) is the probability distribution of the latent variable

y, sometimes called the latent variable. The popular method is the Variational Bayes method (VB for short) [

65]. This method originated from the article by Hinton and Camp [

44]. It was further discussed and applied in the articles by Neal and Hinton [

45], Beal [

66], and Koller [

67] (ch.11). Gottwald and Braun's article "

Two Free Energy and the Bayesian Revolution" [

68] discusses the relationship between the MFE principle and ME principle in detail.

VB uses P(y) (usually written as g(y)) as a variation to minimize the following function:

It is equal to the semantic posterior entropy H(X|Yθ) of X. The smaller F is, the larger the semantic MI I(X; Y))=H(X)–H(X|Yθ) is.

It is easy to prove that when the semantic channel matches the Shannon channel, that is,

T(

θj|x)∝

P(

yj|x) or

P(

x|θj)=

P(

x|yj) (

j=1,2,…),

F is minimized and the semantic MI is maximized. This can optimize the prediction model

P(

x|θj)(

j=1,2,…), but it cannot optimize

P(

y). For optimizing

P(

y), the mean field approximation [

45,

65] is usually used; that is,

P(

y|x) instead of

P(

y) is used as the variation. Only one

P(

yj|x) is optimized at a time, and the other

P(

yk|x) (

k≠j) remains unchanged. Minimizing

F in this way is actually maximizing the log-likelihood of

x or minimizing

KL(

P||Pθ). In this way, optimizing

P(

y|x) also indirectly optimizes

P(

y).

Unfortunately, when optimizing

P(

y) and

P(

y|x),

F may not decrease (see

Figure 18). So, VB is good as a tool and is imperfect as a theory.

Fortunately, it is easier to solve

P(

y|x) and

P(

y) using the MID iteration in solving

R(

D) and

R(

G) functions. The MID iteration plus LBI for optimizing the prediction model is the Semantic Variational Bayes' method (abbreviated as SVB) [

30]. It uses the MIE criterion.

When the constraint changes from likelihood functions to truth functions or similarity functions, P(yj|xi) in the MID iteration formula is changed from

to

From P(x) and the new P(y|x), we can get the new P(y). Repeating the formulas for P(y|x) and P(y) will lead to convergence of P(y). Using s allows us to tighten the constraints for increasing R and G. Choosing proper s enables us to balance between maximizing semantic information and maximizing information efficiency.

The main tasks of SVB and VB are the same: using variational methods to solve latent variables based on observed data and constraints. The differences are:

Criteria: In the definition of VB, it adopts the MFE (i.e., minimum semantic posterior entropy) criterion, whereas, for solving P(y), it uses P(y|x) as the variation, actually uses the maximum likelihood criterion that makes the mixture model converge. In contrast, SVB uses the MID criterion, equal to the maximum likelihood criterion (optimizing model parameters) plus the ME criterion.

Variational method: VB only uses P(y) or P(y|x) as the variation, while SVB alternatively uses P(y|x) and P(y) as the variation.

Computational complexity: VB uses logarithmic and exponential functions to solve

P(

y|x) [

65]; the calculation of

P(

y|x) in SVB is relatively simple (for the same task, i.e., when

s=1).

Constraints: VB only uses likelihood functions as constraint functions. In contrast, SVB allows using various learning functions (including likelihood, truth, membership, similarity, and distortion functions) as constraints. In addition, SVB can use the parameter s to enhance constraints.

Because SVB is more compatible with the maximum likelihood criterion and the ME principle, it should be more suitable for many occasions in machine learning. However, because it does not consider the probability of parameters, it may not be as applicable as VB in some occasions. See [

30] for more details of SVB.

5.6. Bayesian Confirmation and Causal Confirmation

Logical empiricism was opposed by Popper's falsificationism [

19,

20], so it turned to confirmation (i.e., Bayesian confirmation) instead of induction or positivism [

69,

70]. Bayesian confirmation was previously a field of concern for researchers in the philosophy of science [

61,

72], and now many researchers in natural sciences have also begun to study it [

26,

73,

74]. The reason is that uncertain reasoning requires major premises, which need to be confirmed.

The main reasons why researchers have different views on Bayesian confirmation are:

There are no suitable mathematical tools; for example, statistical and logical probabilities are not well distinguished.

Many people do not distinguish between the confirmation of the relationship (i.e. →) in the major premise y→x and the confirmation of the consequent (i.e., x occurs);

No confirmation measure can reasonably clarify the Raven Paradox.

To clarify the Raven paradox, the author wrote the article "Channels' confirmation and predictions' confirmation: from medical tests to the Raven paradox" [

26].

In the author's opinion, the task of Bayesian confirmation is to evaluate the support of the sample distribution for the major premise. For example, for the medical test (see

Figure 16), a major premise is "If a person tests positive (

y1), then he is infected (

x1)", abbreviated as

y1→

x1. For a channel's confirmation, a truth (or membership) function can be viewed as a combination of a clear truth function

T(

y1|x)∈{0,1} and a tautology's truth function (always 1):

A tautology's proportion

b1' is the degree of disbelief. The credibility is

b1, and its relationship with

b1’ is

b1’=1–|

b1|. See

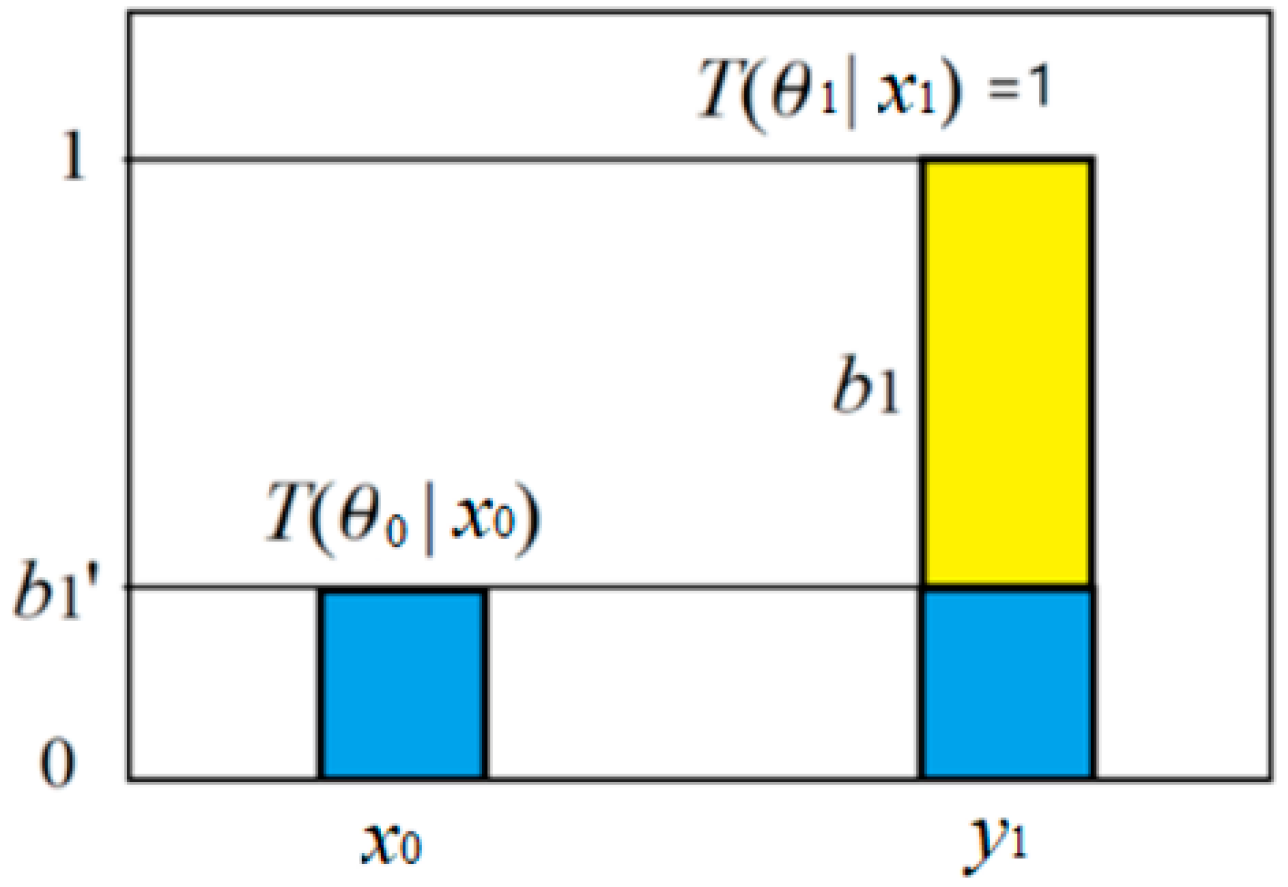

Figure 19.

Figure 19.

A truth function includes a believable proportion b1 and unbelievable proportion b1' = 1 − |b1|.

Figure 19.

A truth function includes a believable proportion b1 and unbelievable proportion b1' = 1 − |b1|.

We change b1 to maximize the semantic KL information I(X; θ1), the optimized b1, denoted as b1*, is the confirmation degree:

where R+=P(y1|xi)/P(y1|x0) is the positive likelihood ratio, indicating the reliability of the test- positive. This conclusion is compatible with medical test theory.

Considering the prediction confirmation degree, we assume that P(x|θ1) is a combination of the 0-1 part and the equal probability part. The ratio of the 0-1 part is the prediction credibility, and the optimized credibility is the prediction confirmation degree:

where a is the number of positive examples, and c is the number of negative examples.

Both confirmation degrees can be used for probability predictions, that is, to calculate

P(

x|θ1)[

26].

Hemple proposed a confirmation paradox, namely the raven paradox [

61]. According to the equivalence condition in classical logic, "if x is a raven, then x is black" (Rule 1) is equivalent to "if x is not black, then x is not a raven" (Rule 2). According to this, white chalk supports Rule 2; therefore, it also supports Rule 1. However, according to common sense, a black crow supports Rule 1, and a non-black Raven opposes Rule 1; something that is not a Raven, such as a black cat or a white chalk, is irrelevant to Rule 1. Therefore, there is a paradox between the equivalence condition and common sense. Using the confirmation measure

c1*, we can be sure that common sense is correct and the equivalence condition is wrong (for fuzzy major premises), thus eliminating the Raven paradox. However, other confirmation measures cannot eliminate the Raven paradox [

26].

Causal probability is used in causal inference theory [

75]:

It indicates the necessity of the cause

x1 replacing

x0 to lead to the result y1. Where P(y1|x)=P(y1|do(x)) is the posterior probability of y1 caused by intervention

x. The author uses the semantic information method to obtain the channel causal confirmation degree [

27]:

It is compatible with the above causal probability but can express negative causal relationships, such as the necessity of vaccines inhibiting infection.

5.7. Emerging and Potential Applications

1) About self-supervised Learning

Applications of estimated MI have emerged in the field of self-supervised learning. The estimated MI is a special case of semantic MI. Both MINE proposed by Belghazi et al. [

34] and InfoNCE proposed by Oord et al. [

35] use estimated MI.

MINE and InfoNCE are essentially the same as the semantic information methods. Their common features are:

The membership function T(θj|x) or similarity function S(x, yj) proportional to P(yj|x) is used as the learning function. Its maximum value is generally 1, and its average is the partition function Zj.

The estimated information or semantic information between x and yj is log[T(θj|x)/Zj] or log[S(x, yj)/Zj].

The statistical probability distribution P(x, y) is still used when calculating the average information.

However, many researchers are still unclear about the relationship between estimated MI and Shannon MI. The G theory's R(G) function can help readers understand this relationship.

2) About Reinforcement Learning

Goal-oriented information introduced in

Section 4.2 can be used as a reward for reinforcement learning. Assuming that the probability distribution of

x in state

sk is

P(

x|ak–1), which becomes

P(

x|ak) in state

sk+1. The reward of

ak is:

Reinforcement learning is to find the optimal action sequence a1, a2, …, so that the sum of rewards r1+r2+… is maximized.

Like constraint control, reinforcement learning also needs the trade-off between maximum purposefulness and minimum control cost. The R(G) function should be helpful.

3) About the Truth function and Fuzzy Logic for Neural Networks

When we use the truth, distortion, or similarity function as the weight parameters of the neural network, the neural network contains semantic channels. Then, we can use semantic information methods to optimize the neural network. Using the truth function T(θj|x) as weight is better than using the parameterized inverse probability function P(θj|x) because there is no normalization restriction when using truth functions.

However, unlike the clustering of points on the plane, a point becomes an image for the clustering of graphics, and the similarity function between images needs different methods. A common method is to regard an image as a vector and use cosine similarity between vectors. However, cosine similarity may have negative values, which require activation functions and biases to make necessary conversions. Combining existing neural network methods and channel-matching algorithms needs further exploration.

Fuzzy logic, especially fuzzy logic compatible with Boolean algebra, seems to be useful in neural networks; for example, the activation function

Relu(

a–b) = max(0,

a–b) commonly used in neural networks is the logical difference operation

f(

a)=max(0,

a–b) used in the author's color vision mechanism model [

76,

77,

78]. Truth functions, fuzzy logic, and the semantic information method used in neural networks should make neural networks easier to understand.

4) Explaining Data Compression in Deep Learning

To explain the success of deep neural networks such as AutoEncoders [

36] and Deep Belief Networks [

79], Tishby et al. [

39] proposed the information bottleneck explanation, arguing that when optimizing deep neural networks, we maximize the Shannon MI between some layers and minimize the Shannon MI between other layers. However, from the perspective of the

R(

G) function, each coding layer of the Autoencoder needs to maximize the semantic MI

G and minimize the Shannon MI

R; pre-training is to let the semantic channel match the Shannon channel so that

G≈R and

KL(

P||Pθ)≈0 (as if for mixture models to converge). Fine-tuning increases

R and

G at the same time by increasing

s (making the partition boundaries steeper).