I. Introduction

1. Background on Cloud Computing

Cloud computing, a cornerstone of the digital age, has its roots in the 1950s and 1960s, when mainframes and time-sharing systems introduced the concept of shared computing resources. These early innovations enabled multiple users to access a central computer, laying the foundation for resource efficiency. The advent of ARPANET further propelled the field, allowing remote access to information and applications and shaping the trajectory of cloud computing.

By the 2000s, cloud computing had evolved into its three key pillars: software, platform, and infrastructure services. Salesforce pioneered the delivery of business applications via the web, spearheading the software-as-a-service (SaaS) revolution. In 2006, Amazon Web Services (AWS) marked a pivotal milestone by introducing scalable, on-demand cloud infrastructure services, redefining how organizations approach IT resources.

Today, cloud computing continues to echo the historical principles of time-sharing, enabling the efficient allocation of computing resources among users. Its core benefits—scalability and accessibility—have driven its widespread adoption. Modern cloud systems enable seamless data and storage management by allowing applications and devices to communicate and share resources over the Internet, optimizing both costs and resource utilization.

As technology advances, the cloud remains a foundation for emerging fields like quantum computing, showcasing its enduring impact and capacity for innovation.

2. Importance of AI in Modern Technology

The Transformative Power of AI and Deep Neural Networks

Deep neural networks have revolutionized artificial intelligence (AI) by enabling systems to perform tasks with exceptional precision, previously thought to be unattainable. Advancements in deep learning have significantly enhanced interactions with technologies such as Amazon Alexa and Google Search, showcasing how AI is seamlessly integrated into our daily lives. In the medical field, AI-powered systems have reached a level of accuracy in identifying cancer cells on MRIs comparable to that of skilled radiologists, marking a breakthrough in diagnostics and healthcare. AI systems excel at handling complex, computationally intensive tasks, but they still rely on human expertise for configuration and task-specific optimization. Rather than existing as stand-alone technologies, AI enhances and integrates into current systems, as exemplified by Apple's Siri, which has transformed user interaction with mobile devices.

Applications Across Domains

AI-driven innovations leverage massive datasets to power tools such as chatbots, automation systems, and smart devices, creating smarter, more efficient home and office environments. For example, the development of fraud detection systems, once deemed impossible, is now feasible due to the combination of big data and superior computational power. These systems analyze patterns and anomalies in real time, protecting financial systems with unprecedented accuracy.
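As a rough illustration of what analyzing patterns and anomalies in real time can mean, the sketch below flags a transaction whose amount lies far from the running mean of earlier amounts. It is a deliberately simple statistical stand-in, not a description of any production fraud system; the class name, the threshold of three standard deviations, the warm-up length, and the sample amounts are all assumptions made for illustration.

```python
import math


class RunningAnomalyDetector:
    """Flags values far from the running mean, using Welford's online algorithm."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold  # allowed distance, in standard deviations
        self.warmup = warmup        # observations to collect before flagging
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations

    def observe(self, amount):
        """Return True if `amount` looks anomalous, then fold it into the statistics."""
        is_anomaly = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(amount - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford update keeps mean and variance current in constant memory.
        self.count += 1
        delta = amount - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (amount - self.mean)
        return is_anomaly


# Hypothetical stream of transaction amounts; the last one is far out of pattern.
detector = RunningAnomalyDetector()
amounts = [20.0, 22.5, 19.0, 21.0, 20.5, 23.0, 950.0]
flags = [detector.observe(a) for a in amounts]
```

Real systems combine many signals and learned models, but the streaming structure (score each event on arrival, then update the state) is the part this sketch is meant to show.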

The Role of Data in AI

The effectiveness of AI is heavily reliant on data availability. Deep learning models require large amounts of data for training, and their performance improves with more extensive datasets. This dynamic has transformed data into a critical resource in today's competitive landscape. Businesses using AI to extract actionable insights from data gain a significant competitive edge. As AI continues to evolve, it underscores the principle that better data systems lead to better results.

In conclusion, AI is reshaping industries by enhancing existing technologies and delivering new capabilities. Whether it's detecting fraud, assisting radiologists, or powering smart devices, AI is creating a future where data-driven insights and efficiency define success.

3. Purpose and Scope of the Article

This article examines the significant ways in which artificial intelligence (AI) is transforming cloud computing, with a focus on its effects on scalability, resource management, and predictive analytics. As cloud computing becomes increasingly critical to modern enterprises, understanding how AI can enhance its functionalities is vital for organizations looking to maximize the benefits of these technologies. By providing an in-depth analysis, the article aims to showcase how AI innovations are redefining cloud infrastructures, enabling businesses to respond to changing demands, improve resource efficiency, and boost operational effectiveness.

Scope and Key Areas of Focus

Dynamic Resource Allocation and Auto-Scaling:

The article explores how AI empowers cloud systems to dynamically allocate resources and implement auto-scaling mechanisms to adapt to real-time workload fluctuations.

These features enhance system performance and ensure reliability, enabling seamless responses to shifting demands.

AI-Powered Resource Optimization:

AI-driven techniques for resource allocation and utilization are analyzed, particularly machine learning algorithms that anticipate resource requirements and optimize costs.

The discussion centers on how these techniques enhance efficiency, cost-effectiveness, and overall system performance in cloud computing.

Predictive Analytics in Cloud Systems:

The role of AI in facilitating advanced data analysis is examined, with an emphasis on how predictive analytics improves forecasting and operational insights.

Real-world examples illustrate how AI enhances decision-making processes and strengthens system reliability.

Challenges and Risks:

The article highlights potential risks, including data privacy concerns, security issues, and algorithmic bias, associated with integrating AI into cloud systems.

Strategies for mitigating these challenges are discussed to present a balanced view of the benefits and potential downsides.

Emerging Trends and Future Directions:

Insights into emerging trends in AI and cloud computing are shared, along with an exploration of future advancements in these domains.

Recommendations are provided to help organizations prepare for technological advancements and align their strategies with evolving trends.

4. Structure of the Article

The hardware and infrastructure layer forms the backbone of AI systems, as it must meet demanding requirements for compute power, bandwidth, and reliability. Key factors such as CPU/GPU processing power, network bandwidth, storage performance, and overall system power efficiency directly influence the success of AI applications. For instance, in a business scenario, inefficient utilization of hundreds of expensive GPUs during a distributed training job could result in significant computational and financial waste. Ensuring that the infrastructure is optimized to avoid such losses is critical for maintaining resource efficiency.
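The scale of such waste can be estimated with simple arithmetic. The sketch below assumes a flat hourly price per GPU and a single average utilization figure for the whole job; the function name and every number in the example are hypothetical and chosen only to make the calculation concrete.

```python
def idle_gpu_cost(num_gpus, hourly_rate, hours, utilization):
    """Estimate the cost attributable to idle GPU time during a job.

    utilization is the average fraction of GPU capacity doing useful work (0..1).
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    total_cost = num_gpus * hourly_rate * hours
    return total_cost * (1.0 - utilization)


# Hypothetical job: 256 GPUs at $2.00 per GPU-hour for 72 hours at 55% utilization.
wasted = idle_gpu_cost(num_gpus=256, hourly_rate=2.00, hours=72, utilization=0.55)
```

Under these assumed figures, a little under half of the total spend pays for capacity that sat idle.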

The compute and network stack can be built using either bare metal servers or virtual machines (VMs), deployed on-premises or in the cloud. While bare metal servers generally offer superior performance due to the absence of virtualization overhead, VMs provide more flexibility and scalability. These compute nodes are interconnected via high-performance fabrics such as InfiniBand or other networks that support RDMA (Remote Direct Memory Access), which enable efficient data transfer and the high-speed communication essential for handling AI workloads.

GPUs (Graphics Processing Units) are the cornerstone of AI infrastructure and serve as a major performance differentiator in AI workloads. A pool of GPUs with varying performance capabilities is often required to cater to different application needs. To maximize GPU utilization, GPU scheduling and optimization strategies are implemented through orchestration layers and drivers. Advanced features like fractional GPU utilization allow for more granular resource allocation but may require additional licensing from vendors. Proper management of GPUs ensures optimal performance and cost-effectiveness in AI systems.
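To make fractional allocation concrete, the following sketch places jobs that each request a fraction of one GPU onto a small pool using a best-fit rule (choose the GPU with the least free capacity that still fits). This is one plausible scheduling policy assumed for illustration, not the behavior of any specific vendor's orchestration layer; the function name, GPU identifiers, and job names are hypothetical.

```python
def allocate_fractional(gpu_free, requests):
    """Best-fit placement of fractional GPU requests.

    gpu_free: dict mapping GPU id -> free fraction (0.0 to 1.0).
    requests: list of (job name, requested fraction) in arrival order.
    Returns (placement, remaining), where placement maps job -> GPU id or None.
    """
    free = dict(gpu_free)  # work on a copy so the caller's pool is unchanged
    placement = {}
    for job, need in requests:
        candidates = [(cap, gid) for gid, cap in free.items() if cap >= need - 1e-9]
        if not candidates:
            placement[job] = None  # no single GPU has enough free capacity
            continue
        cap, gid = min(candidates)  # tightest fit leaves larger gaps for later jobs
        free[gid] = round(cap - need, 6)
        placement[job] = gid
    return placement, free


pool = {"gpu0": 1.0, "gpu1": 0.5}
jobs = [("notebook", 0.25), ("inference", 0.5), ("training", 1.0), ("batch", 0.5)]
placement, remaining = allocate_fractional(pool, jobs)
```

In this example the full-GPU training job cannot be placed because smaller jobs arrived first, which hints at why production schedulers also consider priorities and queueing rather than arrival order alone.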

II. Overview of Cloud Computing

Cloud computing has become a fundamental strategy for organizations, offering significant business and technical advantages that are transforming how companies operate. By providing a remote, virtual pool of on-demand resources for compute, storage, and networking, cloud computing enables businesses to scale rapidly and efficiently.

At the core of cloud computing lies virtualization technology, which allows multiple virtual machines (VMs) to run on a single physical server. Each VM operates with its own operating system and applications, sharing the underlying hardware resources without interference. This approach offers several benefits, including:

Reduced Capital Expenditure: By minimizing the need for physical hardware, virtualization lowers costs associated with infrastructure.

Smaller Footprint: Less hardware translates to reduced space requirements in data centers, along with lower power and cooling costs.

Resource Optimization: Cloud environments maximize the efficiency of shared infrastructure, benefiting both vendors and consumers.
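The consolidation benefit described above can be sketched as a packing problem: given VMs with known vCPU needs, how few physical hosts are required? The example uses the first-fit decreasing heuristic, a common approximation assumed here for illustration; real placement engines also weigh memory, affinity, and failure domains. The VM names and the 16-vCPU host size are hypothetical.

```python
def pack_vms(vm_cpus, host_capacity):
    """Pack VMs onto hosts with first-fit decreasing.

    vm_cpus: dict mapping VM name -> vCPUs required.
    Returns a list of hosts, each a list of the VM names placed on it.
    """
    hosts = []  # each entry is [remaining capacity, [vm names]]
    # Largest VMs first; ties are broken by name so the result is deterministic.
    for name, cpus in sorted(vm_cpus.items(), key=lambda kv: (-kv[1], kv[0])):
        if cpus > host_capacity:
            raise ValueError(f"{name} does not fit on any host")
        for host in hosts:
            if host[0] >= cpus:
                host[0] -= cpus
                host[1].append(name)
                break
        else:
            # No existing host had room, so bring another host into use.
            hosts.append([host_capacity - cpus, [name]])
    return [names for _, names in hosts]


vms = {"web": 4, "db": 8, "cache": 2, "batch": 6, "ci": 4}
hosts = pack_vms(vms, host_capacity=16)
```

Five workloads that might otherwise occupy five physical machines fit on two hosts in this toy case, which is the source of the capital and footprint savings listed above.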

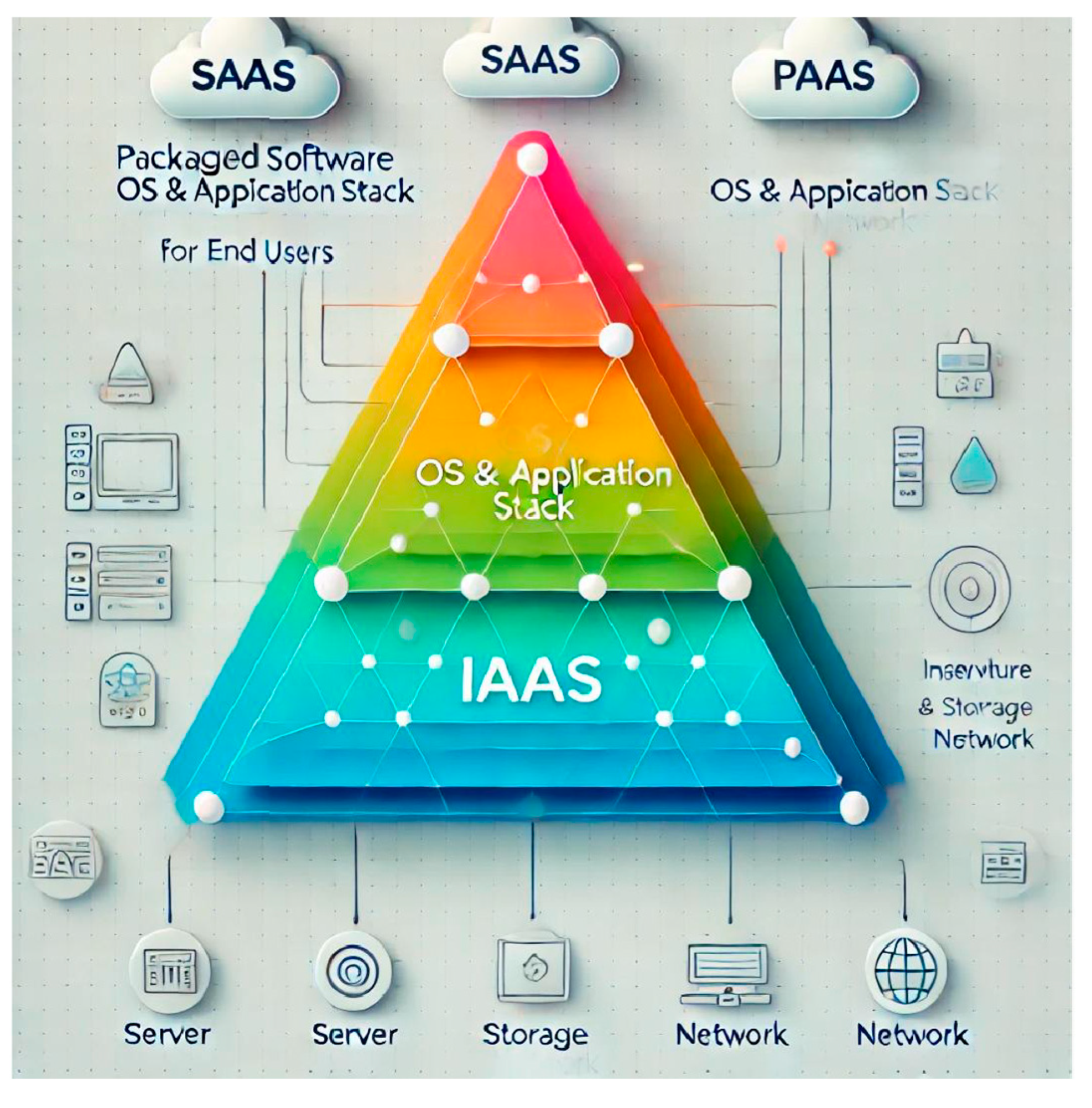

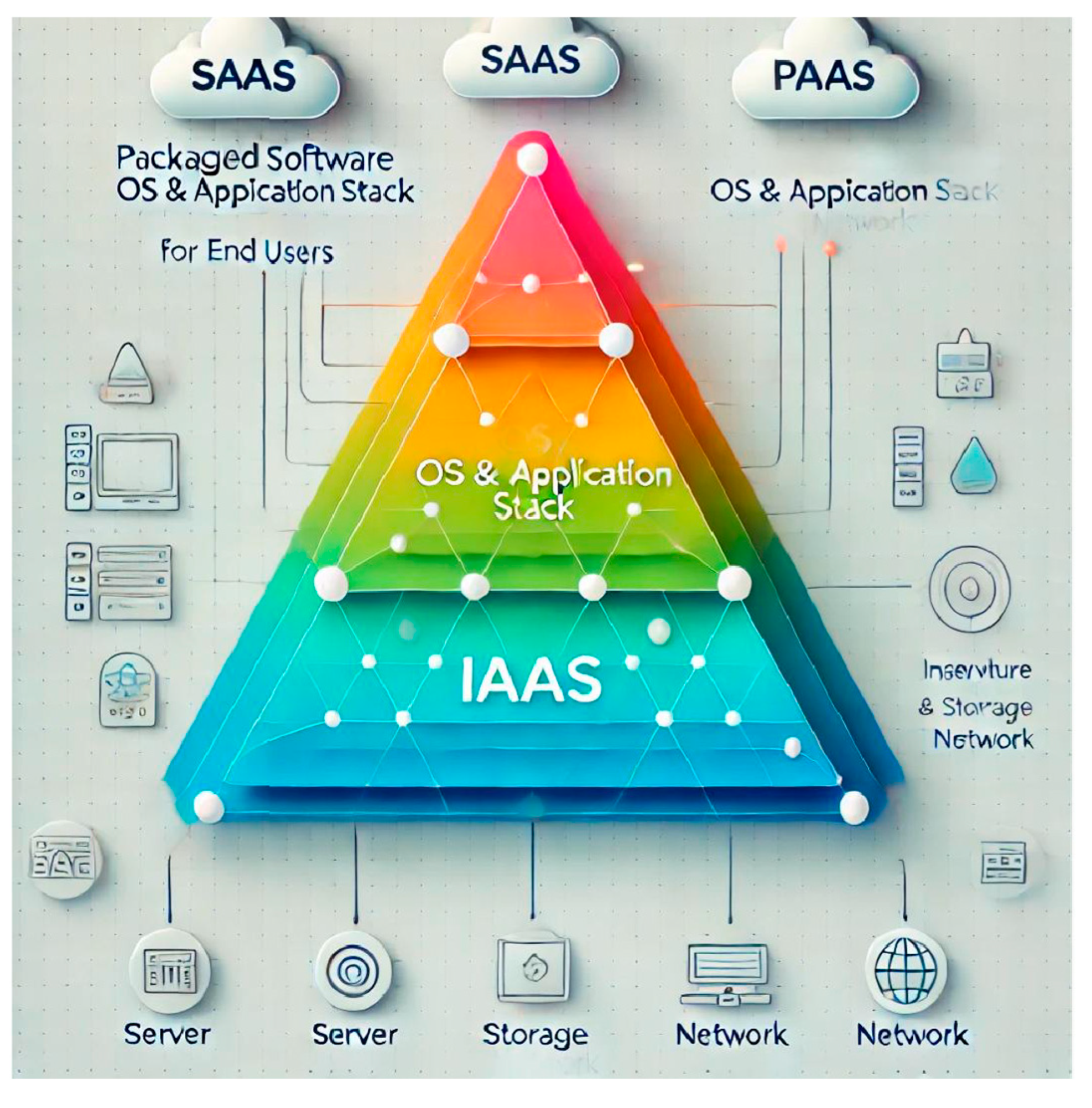

Cloud services are categorized into three primary models: SaaS (Software as a Service), PaaS (Platform as a Service), and IaaS (Infrastructure as a Service).

1. SaaS

Software as a Service (SaaS) is a delivery model where the cloud provider hosts applications, making them accessible to customers over the Internet. Instead of investing in and maintaining infrastructure, businesses pay a subscription or usage-based (pay-as-you-go) fee.

Key Benefits:

Quick Deployment: SaaS enables businesses to get started with innovative technologies without extensive setup.

Automatic Updates: Reduces the need for in-house maintenance.

Scalability: Customers can scale services to meet fluctuating workloads by adding features as needed.

Use Cases: SaaS solutions cater to diverse business needs, including:

Customer experience and relationship management (CRM).

Enterprise resource planning (ERP).

Financial management, supply chain management, and payroll.

2. PaaS

Platform as a Service (PaaS) provides developers with the tools needed to create, manage, and deploy applications without requiring investment in underlying infrastructure. The cloud provider hosts the infrastructure and middleware, and users access the services through a web browser.

Key Features:

Ready-to-Use Programming Components: Enables integration of cutting-edge technologies like AI, blockchain, and IoT.

Comprehensive Solutions: Supports analysts, IT administrators, and developers with tools for big data analytics, database management, and security.

Benefits: PaaS accelerates development cycles, allowing businesses to innovate faster and focus on building robust applications without worrying about managing infrastructure.

3. IaaS

Infrastructure as a Service (IaaS) offers on-demand access to compute, storage, and networking resources. The cloud provider manages the physical infrastructure, while subscribers are responsible for installing and maintaining software, including applications and middleware.

Advantages:

Flexibility: Businesses can scale infrastructure as needed, paying only for what they use.

Reduced Hardware Costs: Eliminates the need for maintaining on-premises infrastructure.

Control: Subscribers retain full control over software configurations and maintenance.

Use Cases: IaaS is ideal for running workloads in the cloud, providing the foundational components for hosting databases, applications, and middleware.

III. The Role of AI in Cloud Computing

The integration of Artificial Intelligence (AI) and cloud computing is nothing short of revolutionary. These two technologies, which have independently transformed industries, are now coming together to create solutions that were once thought impossible. AI brings its incredible ability to process and analyze vast datasets, automate intricate workflows, and provide highly accurate predictive insights. On the other hand, cloud computing delivers the flexible, scalable infrastructure that allows AI to flourish by meeting its immense computational demands.

When combined, the possibilities are limitless. Imagine cloud platforms that not only adapt to your needs but anticipate them, thanks to AI-driven predictive analytics. Think about how personalized experiences, from smart assistants to tailored content recommendations, are now powered by this seamless collaboration between AI and the cloud.

Organizations no longer have to struggle with underutilized resources or outdated IT infrastructure. Instead, they can optimize operations, cut costs, and boost efficiency by relying on AI-powered automation and resource management in the cloud.
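A minimal sketch of anticipating demand, rather than only reacting to it, is shown below: a naive moving-average forecast of request rate is converted into a replica count with some headroom. A production system would use a learned forecasting model; the moving average, the function names, the 20% headroom, and the per-replica capacity are all assumptions chosen to keep the example small.

```python
import math


def forecast_next(history, window=3):
    """Naive forecast: the mean of the most recent `window` observations."""
    if not history:
        raise ValueError("history must not be empty")
    recent = history[-window:]
    return sum(recent) / len(recent)


def replicas_needed(history, capacity_per_replica, headroom=0.2,
                    min_replicas=1, max_replicas=20):
    """Translate forecast load into a replica count, clamped to configured bounds."""
    predicted = forecast_next(history)
    target = predicted * (1.0 + headroom)  # spare capacity for forecast error
    needed = math.ceil(target / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical request rates (requests per second) over recent intervals.
requests_per_second = [120, 150, 180, 240, 300]
replicas = replicas_needed(requests_per_second, capacity_per_replica=100)
```

Swapping `forecast_next` for a stronger model leaves the scaling logic unchanged, which is one reason to keep prediction and actuation separate.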

But the impact goes beyond businesses. For developers and tech enthusiasts, this integration represents a goldmine of opportunities. Whether you’re building AI models to deploy on platforms like AWS, Google Cloud, or Azure, or leveraging machine learning algorithms to solve real-world problems, the potential to innovate and make a difference is immense. And for individuals aspiring to start a career in this field, the demand for skilled professionals is skyrocketing. The tools, knowledge, and resources to succeed are more accessible than ever, making this an exciting time to explore and thrive in the AI-cloud ecosystem. The future of technology is being written today, and AI and cloud computing are leading the charge!

IV. Transforming Scalability with AI

4.1.1. Standard Software Engineering Practices

Organizations can enhance the value of their AI investments by adopting standard software engineering technologies. Continuous integration and deployment (CI/CD) pipelines and automated testing frameworks enable the automation of AI model building, testing, and deployment processes. These tools establish standardized deployment patterns for machine learning (ML) models and ensure seamless integration with broader IT infrastructure. Additionally, promoting a collaborative culture and shared responsibility through these technologies can expedite time-to-market, minimize errors, and improve the overall quality of AI applications. For instance, a leading Asian bank successfully reduced the time required to scale ML use cases from 18 months to less than five months by implementing new protocols and tooling.
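One way automated testing can enter an ML deployment pipeline is as a quality gate: a candidate model is promoted only if it clears an absolute accuracy floor and does not regress noticeably against the model currently in production. The sketch below is an assumed, simplified form of such a gate; the function names, thresholds, and evaluation data are hypothetical.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def passes_quality_gate(candidate_acc, baseline_acc,
                        min_accuracy=0.90, max_regression=0.01):
    """Allow promotion only above the floor and within the regression tolerance."""
    meets_floor = candidate_acc >= min_accuracy
    no_regression = candidate_acc >= baseline_acc - max_regression
    return meets_floor and no_regression


# Hypothetical held-out labels and candidate-model predictions.
labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
predictions = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]
candidate_acc = accuracy(predictions, labels)
promote = passes_quality_gate(candidate_acc, baseline_acc=0.905)
```

In a CI/CD pipeline a check like this would run as a test step, so that a failing gate blocks deployment in the same way a failing unit test does.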

4.1.2. Data and ML Best Practices

Establishing best practices for data and ML workflows is essential for scaling AI applications effectively within an organization. Standardized protocols streamline processes such as data ingestion, feature engineering, model development, and deployment. Post-deployment, regular monitoring and maintenance are crucial to optimize model performance.

These best practices should be documented in comprehensive guides outlining the sequence of tasks, deliverables, and stakeholder roles, including data scientists, engineers, and business professionals. By adhering to these practices, organizations can efficiently scale AI initiatives and foster collaboration across functional teams.
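Post-deployment monitoring often includes a check for drift between the data a model was trained on and the data it now receives. The sketch below measures how far the live mean of one feature has moved from the training mean, in units of the training standard deviation. This is one simple heuristic assumed for illustration; the function names, the threshold of 2.0, and the sample values are hypothetical.

```python
import statistics


def mean_shift_score(training_values, live_values):
    """Shift of the live mean from the training mean, in training standard deviations."""
    mu = statistics.mean(training_values)
    sigma = statistics.stdev(training_values)
    if sigma == 0:
        raise ValueError("training values have zero variance")
    return abs(statistics.mean(live_values) - mu) / sigma


def needs_retraining(training_values, live_values, threshold=2.0):
    """Flag the feature when the live data has moved beyond the threshold."""
    return mean_shift_score(training_values, live_values) > threshold


# Hypothetical values of a single numeric feature.
training = [10.0, 12.0, 11.0, 13.0, 9.0, 11.0]
live_stable = [11.0, 12.0, 10.0, 11.0]
live_drifted = [16.0, 17.0, 15.0, 18.0]

stable_flag = needs_retraining(training, live_stable)
drift_flag = needs_retraining(training, live_drifted)
```

A documented workflow would state who receives such an alert and what the follow-up is, for example retraining or investigating an upstream data change.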

4.1.3. Ethical and Legal Considerations

As machine learning models grow more sophisticated and impactful, adherence to legal and ethical norms becomes paramount. Establishing clear guidelines ensures that ML systems remain compliant with laws and ethical standards; non-compliance can otherwise hinder scalability and reliability. Incorporating ethical and regulatory compliance into the AI development process minimizes risks and ensures that models conform to predefined guidelines before deployment. This approach enhances trust among stakeholders and reinforces the long-term viability of AI initiatives.

4.2. Challenges and Limitations

Overcoming Technology Roadblocks

Although AI research dates back to the 1950s, modern applications such as chatbots, face-swapping apps, and robotics have only recently become mainstream. No universally proven formula yet exists for deploying AI systems at enterprise scale, which leads to several common challenges:

Suboptimal Architecture Choices: Effective AI solutions must balance predictive accuracy with performance, scalability, and manageability. Poor architectural decisions can result in overly complex systems that are difficult to manage as demands grow. For instance, multi-tenant AI-as-a-Service (AIaaS) applications require robust designs to handle high user volumes without performance degradation.

Insufficient or Poor-Quality Training Data: AI performance heavily depends on high-quality, adequately labeled data. In industries like healthcare, access to sufficient training data is often constrained by privacy concerns. Data annotation tools such as Supervise.ly help prepare datasets. Gartner predicted that, through 2022, 85% of AI projects would deliver erroneous outcomes because of bias in data, algorithms, or the teams managing them.

Lack of Explainability: Explainable AI (XAI) aims to clarify how AI models arrive at decisions, making their processes interpretable for developers and stakeholders. While white-box models offer transparency, they often sacrifice accuracy compared to black-box approaches like neural networks. Balancing explainability and performance is critical for industries requiring detailed insights into AI decision-making.
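The transparency of a white-box model can be shown with a linear scorer, where each feature's contribution to a prediction is simply its weight times its value. The sketch below is a generic illustration under that assumption; the feature names, weights, and input values are hypothetical and do not come from any real model.

```python
def explain_linear(weights, bias, features):
    """Score an input with a linear model and rank each feature's contribution.

    Returns (score, ranked), where ranked lists (feature, contribution) pairs
    ordered from largest to smallest absolute contribution.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical model and applicant record.
weights = {"income": 0.5, "debt": -2.0, "age": 0.25}
applicant = {"income": 4.0, "debt": 1.5, "age": 2.0}
score, ranked = explain_linear(weights, bias=1.0, features=applicant)
```

Here the explanation falls directly out of the model's structure, whereas a deep neural network needs separate attribution techniques to produce a comparable breakdown.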

Translating Lab Results to Real-Life Applications

While AI-powered tools often demonstrate exceptional performance in controlled environments, replicating these results in real-world scenarios remains challenging. For example, AI models have achieved remarkable success in detecting diseases like cancer or COVID-19 using advanced neural networks and vast training datasets. However, most companies struggle to reproduce such high levels of accuracy and reliability outside the laboratory, mainly due to differences in hardware, data, and operational environments.

Scaling AI Implementations

Scaling AI solutions presents significant challenges, with only 53% of AI projects successfully transitioning from prototypes to production, according to Gartner. Achieving scalability requires technical expertise, robust infrastructure, and careful planning. Organizations often encounter issues such as software scalability, insufficient resources, and gaps in technical competencies.

Overestimating AI Capabilities

Many businesses are drawn to the hype surrounding AI and initiate ambitious projects without thoroughly assessing their needs, infrastructure capabilities, or associated costs. For example, DHL successfully implemented AI-driven systems to optimize cargo plane loading, but only after refining the system through human expertise. Many companies fail to adopt this balanced approach, leading to unrealistic expectations and underperforming solutions.

Addressing Ethical Concerns in AI

The growing adoption of AI introduces several ethical challenges:

Algorithmic Bias: Flawed training data can lead to biased AI systems, perpetuating social and historical inequities. For instance, facial recognition systems may disproportionately misidentify non-white individuals.

Job Displacement: While AI is expected to create new job opportunities, it also raises concerns about replacing human workers with automation.

Transparency and Accountability: Black-box AI models, such as deep learning systems, often lack transparency, making it difficult to explain or justify their recommendations. Ensuring accountability for AI-driven decisions remains a significant challenge.
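A first step toward detecting the kind of algorithmic bias described above is to compare error rates across groups. The sketch below computes a per-group misclassification rate and the gap between the best- and worst-served groups. It is a basic audit assumed for illustration, not a complete fairness methodology; the group labels and records are hypothetical.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Compute the misclassification rate for each group.

    records: iterable of (group, predicted label, actual label).
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_disparity(rates):
    """Gap between the highest and lowest group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation records: (group, predicted, actual).
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 0), ("A", 1, 1),
    ("B", 0, 1), ("B", 1, 1), ("B", 1, 0), ("B", 0, 0),
]
rates = error_rate_by_group(records)
gap = max_disparity(rates)
```

A large gap does not by itself establish the cause, but it indicates where the training data and model behavior deserve closer review.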

V. Transforming Resource Management with AI

The integration of Artificial Intelligence (AI) into resource management is transforming the way organizations allocate, utilize, and forecast resources. By leveraging AI and machine learning, businesses can analyze real-time and historical data, continuously learn from it, and make informed, data-driven decisions with unprecedented speed and precision.

AI empowers organizations to process vast amounts of data swiftly and extract actionable insights, enabling them to optimize resource management. Here are the key ways AI is reshaping resource planning:

Intelligent Resource Recommendation:

AI algorithms analyze project requirements, skill sets, and resource availability to provide tailored recommendations. By examining past data and usage patterns, AI ensures that resources are allocated efficiently, enhancing project outcomes and overall organizational success.

Dynamic Skill Matching:

AI systems match projects with resources that possess the required skills. These systems learn from past assignments, employee performance, and skill proficiency data to make precise recommendations. This results in improved project efficiency, enhanced team productivity, and higher client satisfaction.

Continued Learning and Optimization:

AI continuously evolves by learning from ongoing processes and refining its resource allocation strategies. This dynamic improvement ensures that AI becomes increasingly adept at meeting unique resource management needs over time.
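The recommendation and skill-matching ideas above can be sketched as a scoring function: each candidate is scored by the share of required skills covered, scaled by availability. A learned system would also draw on past assignments and performance data; this rule-based scoring, along with the function names and sample data, is an assumption made for illustration.

```python
def match_score(required, candidate_skills, availability):
    """Share of required skills the candidate covers, scaled by availability (0..1)."""
    if not required:
        raise ValueError("required skills must not be empty")
    coverage = len(required & candidate_skills) / len(required)
    return coverage * availability


def recommend(required, candidates, top_n=2):
    """Return the names of the best-scoring candidates, excluding zero scores."""
    scored = [
        (match_score(required, c["skills"], c["availability"]), c["name"])
        for c in candidates
    ]
    scored.sort(key=lambda item: (-item[0], item[1]))  # best score first, then name
    return [name for score, name in scored[:top_n] if score > 0]


# Hypothetical project requirement and staff records.
required = {"python", "kubernetes", "ml"}
candidates = [
    {"name": "Ana", "skills": {"python", "ml", "sql"}, "availability": 1.0},
    {"name": "Ben", "skills": {"python", "kubernetes", "ml"}, "availability": 0.5},
    {"name": "Chen", "skills": {"java"}, "availability": 1.0},
]
shortlist = recommend(required, candidates)
```

Ana ranks ahead of Ben here despite covering fewer skills because Ben is only half available, which shows how the weighting choice shapes the recommendation.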

In summary, AI employs predictive analytics, simulations, and advanced optimization techniques to craft intelligent, data-driven resource plans that grow smarter with use. By simplifying complex analyses, AI supports human resource planners and empowers organizations to manage resources more effectively every day. Whether through intelligent recommendations, dynamic matching, or ongoing optimization, AI in resource management is not just a tool for today—it’s a system that keeps improving, setting the stage for sustained organizational success.

VI. Future Trends and Innovations

Artificial Intelligence (AI) has emerged as one of the most transformative technologies in history, revolutionizing how businesses operate by enabling smarter decision-making, task automation, and unparalleled data utilization. Over the past decade, AI's influence has expanded exponentially, driven by advancements in machine learning, cloud computing, and the proliferation of Internet of Things (IoT) devices.

We now live in a world where IoT devices collect and share vast amounts of data, providing a foundation for powerful analytics and AI-driven insights. A key enabler of this transformation is deep learning neural networks, a technology rooted in decades-old research but dramatically enhanced by the internet and access to vast datasets. These neural networks power today's AI applications, from image recognition to natural language processing, fundamentally changing what is possible with machine learning.

Another critical development is the rise of cloud computing, which provides the massive computational power and storage required for AI. Tasks such as training algorithms to recognize objects or process natural language demand extraordinary processing capacity, which, without the cloud, would be prohibitively expensive for most businesses. Cloud computing democratizes AI, making advanced analytics and applications accessible to organizations of all sizes. This democratization is a game-changer, allowing smaller businesses to harness AI to deliver supercomputer-powered services, previously the domain of tech giants and well-funded enterprises.

The potential economic value of AI is immense, with a widely cited PwC estimate projecting a contribution of up to $15.7 trillion to the global economy by 2030. Companies leveraging AI through cloud-based services are already seeing transformative results. For instance, Netflix uses predictive analytics to drive 80% of its viewers' content decisions, while German retailer Otto forecasts sales with 90% accuracy, significantly reducing waste and optimizing operations.

The COVID-19 pandemic has accelerated digital transformation across industries, compressing years of progress into months. This has fueled the rapid migration to the cloud as businesses adapt to the demands of remote work and distributed operations. Tools like Office 365 and Slack have become vital in enabling collaboration, communication, and continuity. Similarly, multi-cloud and hybrid-cloud approaches are gaining traction, allowing businesses to maintain control over their data while benefiting from the flexibility and scalability of the cloud.

As AI and cloud computing evolve, businesses of all sizes have unprecedented opportunities to innovate, optimize, and thrive. The integration of these technologies is not only reshaping industries but also leveling the playing field, ensuring that organizations of all scales can harness the power of AI to drive value and achieve transformative outcomes.

VII. Conclusion

In summary, the integration of artificial intelligence (AI) into cloud computing marks a transformative milestone, significantly improving scalability, resource management, and predictive analytics within distributed systems. By harnessing AI technologies, organizations can achieve remarkable efficiency in resource allocation, allowing for dynamic and real-time adjustments to fluctuating demands. Additionally, AI-powered analytics enable businesses to process and leverage vast amounts of data, driving informed decision-making and delivering enhanced operational performance.

However, realizing the full potential of these advancements necessitates addressing critical challenges, including data privacy, security vulnerabilities, and the risks associated with algorithmic bias. Proactively mitigating these issues is essential to building trust and ensuring that the benefits of AI are equitably distributed across industries and communities.

As cloud computing continues to evolve, sustained research and innovation in AI will be pivotal. Future efforts should prioritize the development of robust and transparent AI systems capable of addressing the unique needs of diverse industries. By embracing these advancements, organizations can not only optimize their operations but also position themselves as leaders in an increasingly competitive and data-driven digital landscape.



Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).