1. Introduction and Related Work

In recent years, research on deep neural network models has made significant progress, as various large language models have been proposed and have evolved at a fast pace. However, these models still cannot achieve reasonable performance in logical and mathematical reasoning without substantial human intervention, even though their latest versions contain more than 10 billion trainable parameters. The failure of these sophisticated models on some seemingly simple math problems inspired us to propose a non-Turing computer structure to address this challenge. Previous works on non-Turing machines are relatively few [1–7], and they mostly discuss the idea conceptually and psychologically without giving a specific workable architecture comparable to the Turing machine. Among these, [2] and [7] argued that analog or natural computation should be used for calculations involving continuous real numbers. However, the potential adaptivity of this type of model is restricted to the range between the discrete approximation of a continuous number and its unrepresented portion. Moreover, their models lack interactions between the discrete domain and the continuous domain for solving problems. Recently, [8] and its variants investigated so-called Neural Turing Machines, which use neural networks such as LSTM [9] as the controller so that the entire system is differentiable and can be trained end to end. In contrast, the abstract controller in the proposed non-Turing machine (hereafter called “Ren’s machine” for simplicity) can remain discrete (binary) for logical reasoning. This structure allows the machine to switch back and forth between the abstract domain and the specific domain, approaching a solution to a given problem with rules summarized or learned from thought or tool experiments during the process. It also enables specific contents and abstract contents on both types of tapes to collaborate in commanding and instructing the controller to complete a task.

2. The Proposed Architecture

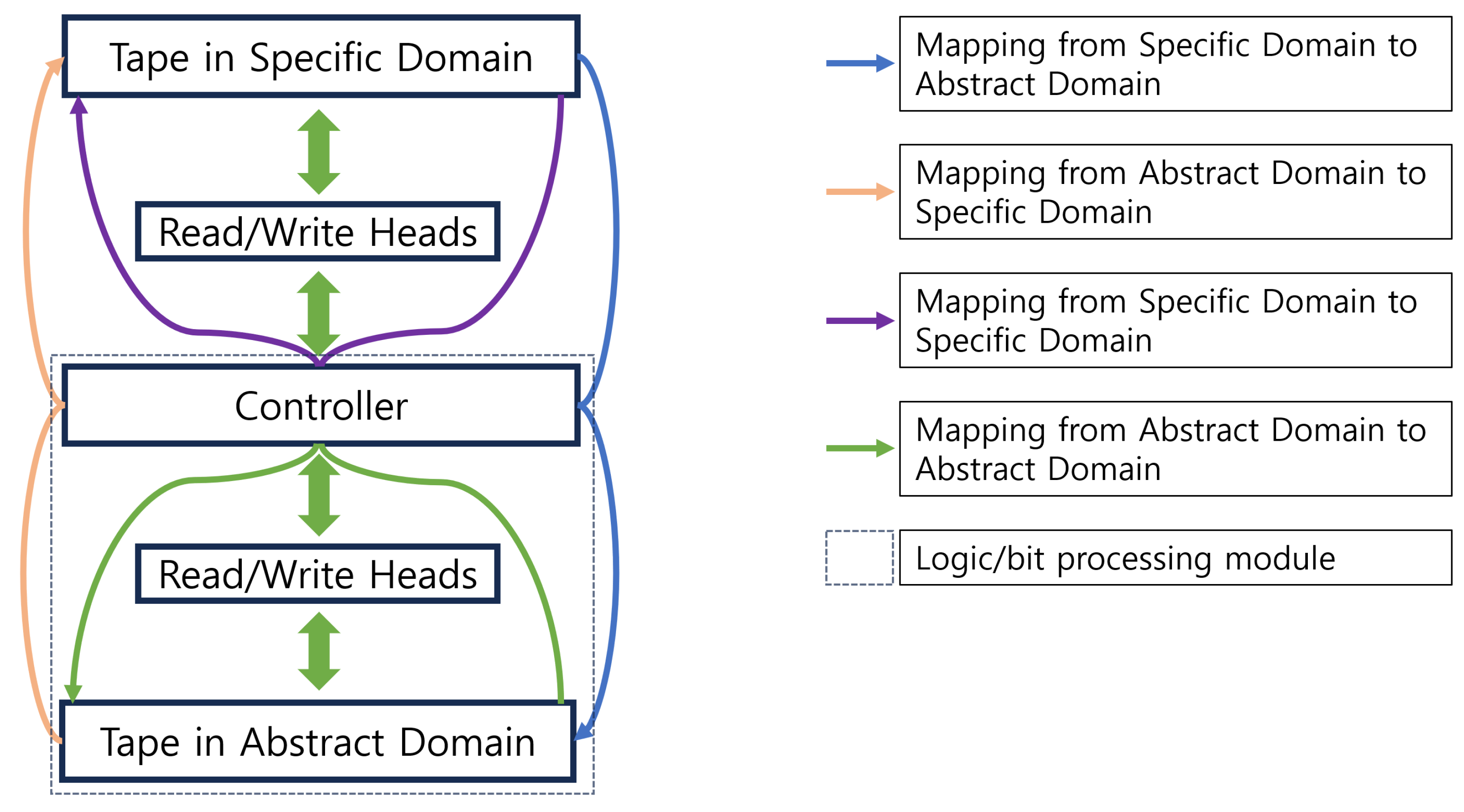

Figure 1 illustrates the components of Ren’s machine and their relations. The structure consists of a controller and two types of read/write heads and tapes, one in the specific domain and the other in the abstract domain. Interactions between and within the specific domain and the abstract domain are realized by the different types of mappings annotated in the figure. These mappings can be implemented by applying prior knowledge or learned rules (e.g., neural networks or memorized tables), or simply by rendering with a built-in function on the screen. Note that the writing and reading performed by the heads in the specific domain are affected by the real-world environment. As a result, some information from the outside world also enters these processes.
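To make the data flow of Figure 1 concrete, the following is a minimal Python sketch under loudly stated assumptions: tapes are modeled as growable lists, specific-domain items (images on a screen) are stood in for by plain strings, and the mappings are arbitrary callables keyed by name. The class and mapping names (`Tape`, `RensMachineSketch`, `render`, `observe`, `"display"`, `"recognize"`) are hypothetical, the controller's decision policy is omitted, and environmental influence on the specific-domain heads is not modeled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical sketch of the components in Figure 1. All names are
# illustrative assumptions, not the authors' implementation.


@dataclass
class Tape:
    """A growable tape; each cell holds an abstract symbol or a specific-domain
    item (here a plain string standing in for an image on the screen)."""
    cells: List[str] = field(default_factory=list)

    def write(self, item: str) -> int:
        self.cells.append(item)
        return len(self.cells) - 1  # position of the newly written cell

    def read(self, pos: int) -> str:
        return self.cells[pos]


@dataclass
class RensMachineSketch:
    abstract_tape: Tape = field(default_factory=Tape)
    specific_tape: Tape = field(default_factory=Tape)
    # Cross-domain mappings: any callable may serve, e.g. a learned model,
    # a memorized lookup table, or a built-in rendering function.
    to_specific: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    to_abstract: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def render(self, mapping: str, abstract_pos: int) -> int:
        """Map an abstract cell into the specific domain and write it there."""
        item = self.to_specific[mapping](self.abstract_tape.read(abstract_pos))
        return self.specific_tape.write(item)

    def observe(self, mapping: str, specific_pos: int) -> int:
        """Map a specific-domain cell back into the abstract domain."""
        symbol = self.to_abstract[mapping](self.specific_tape.read(specific_pos))
        return self.abstract_tape.write(symbol)


# Usage: a round trip abstract -> specific -> abstract.
machine = RensMachineSketch()
machine.to_specific["display"] = lambda s: f"<screen:{s}>"  # stand-in renderer
machine.to_abstract["recognize"] = lambda img: img[len("<screen:"):-1]  # stand-in vision model
start = machine.abstract_tape.write("12*34")
pos = machine.observe("recognize", machine.render("display", start))
```

Keeping the mappings as plain callables mirrors the paper's point that a mapping may be a neural network, a memorized table, or a simple rendering function, without committing to any one of them.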

3. Experiments

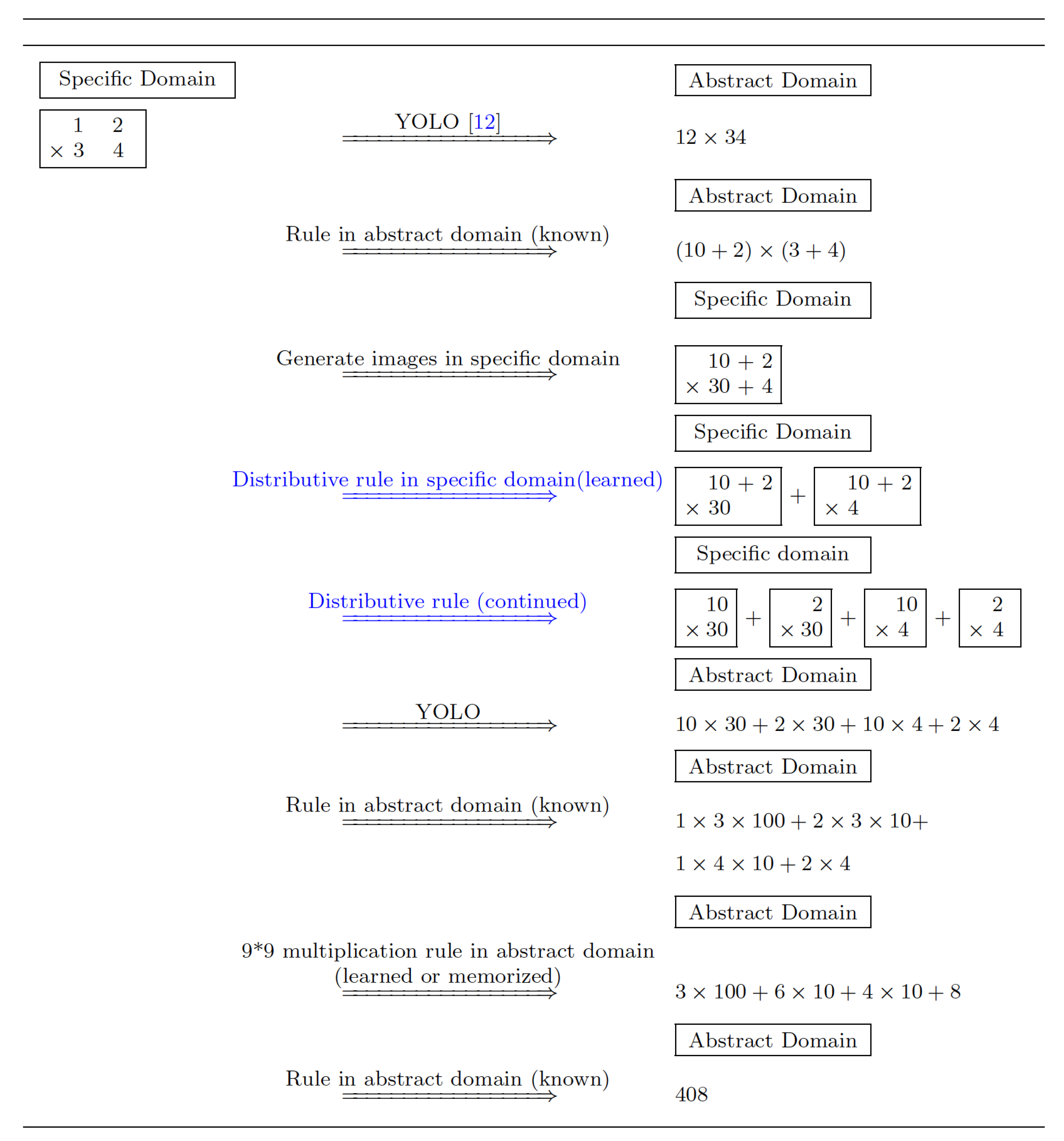

In the first experiment, we aim to have the machine autonomously find a method for computing the product of any two positive integers using only very basic algebraic rules that are already known. Figure 2 shows the workflow of Ren’s machine for calculating such an expression: the machine learns the distributive rule of multiplication from experiments generated in the specific domain and then uses the rule to obtain the correct result.

In this process, whenever a rule is recognized as technically applicable to any objects in either the abstract domain or the specific domain, the rule is called and applied to them as a trial to move towards a solution, even if applying it seems ‘meaningless’ (a search strategy such as reinforcement learning could certainly be added for more ‘efficient’ lookup). New rules are summarized or learned from thought or tool experiments generated from prior knowledge and from the rules learned so far. For example, in the computing process demonstrated in Figure 2, the distributive rule is learned through the cooperation of specific observations and abstract prior knowledge. Specifically, Ren’s machine can compute the products of small integers such as

,

, and

using repeated addition, following the original definition of multiplication. It then finds that the equation

holds true. It then generates a corresponding example in the specific domain simply by displaying the equation on the screen. Using a similar procedure, it can generate a large number of examples (images) in the specific domain showing that the distributive property holds. Neural networks can then be trained to learn the transformation from the images of the left-hand side of the equation to those of the right-hand side. The experiment shows that such networks (e.g., LLaMA [10], ChatGPT [11]) are able to generalize to pairs of integers unseen in training, even when the integers are very large. After the transformation is applied in the visual domain, computer vision models such as YOLO [12] can convert the equation back into the abstract domain. This procedure eventually enables Ren’s machine to autonomously learn a general method of multiplying arbitrary positive integers.
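The following minimal Python sketch illustrates the idea under simplifying assumptions. The image-to-image transformation and the YOLO read-back are replaced by symbolic rewriting, and we assume the learned distributive rule is applied by splitting each operand by place value. Under that assumption, only single-digit products (computed by repeated addition, as in the original definition of multiplication), appending zeros, and additions are needed. All function names are hypothetical; this is not the authors' implementation.

```python
from typing import List, Tuple

# Hypothetical sketch: neural transformations are replaced by symbolic rewriting.


def mul_by_addition(a: int, b: int) -> int:
    """Multiplication by its original definition: add `a` to itself `b` times."""
    total = 0
    for _ in range(b):
        total += a
    return total


def distributive_examples(limit: int) -> List[Tuple[int, int, int]]:
    """'Thought experiments': triples (a, b, c) with 1 <= a, b, c < limit for which
    a*(b+c) == a*b + a*c, checked using repeated addition only."""
    examples = []
    for a in range(1, limit):
        for b in range(1, limit):
            for c in range(1, limit):
                lhs = mul_by_addition(a, b + c)
                rhs = mul_by_addition(a, b) + mul_by_addition(a, c)
                if lhs == rhs:
                    examples.append((a, b, c))
    return examples


def split_by_place_value(n: int) -> List[int]:
    """Write n as a sum of nonzero digit * 10**k terms, e.g. 345 -> [300, 40, 5]."""
    s = str(n)
    return [int(d) * 10 ** (len(s) - 1 - i) for i, d in enumerate(s) if d != "0"]


def shift(x: int, zeros: int) -> int:
    """Multiply by a power of ten by appending zeros to the numeral."""
    return int(str(x) + "0" * zeros)


def multiply_with_distributive_rule(x: int, y: int) -> int:
    """Distribute over the place-value terms of both operands, so that only
    single-digit products, zero-appending, and additions are required."""
    total = 0
    for xs in split_by_place_value(x):
        for ys in split_by_place_value(y):
            dx, ex = int(str(xs)[0]), len(str(xs)) - 1  # leading digit, exponent
            dy, ey = int(str(ys)[0]), len(str(ys)) - 1
            total += shift(mul_by_addition(dx, dy), ex + ey)
    return total
```

For example, `multiply_with_distributive_rule(12, 34)` expands to 10·30 + 10·4 + 2·30 + 2·4, which is the distributive property applied twice. Because each step only involves small products, the method extends to arbitrarily large operands.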

We test the computation of products for the following 20 pairs of positive integers:

| 2-digit | 3-digit | 4-digit | 5-digit | 6-digit |
|---|---|---|---|---|
| 12*34 | 123*345 | 1234*3456 | 12345*34567 | 123456*345678 |
| 23*45 | 234*456 | 2345*4567 | 23456*45678 | 234567*456789 |
| 34*56 | 345*567 | 3456*5678 | 34567*56789 | 345678*567891 |
| 45*67 | 456*678 | 4567*6789 | 45678*67891 | 456789*678912 |

The results in this experiment show that the accuracy (

) achieved by the proposed method on Ren’s machine is significantly higher than that (

) achieved by the state-of-the-art large language model used in Microsoft Copilot [13].
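The paper does not state how accuracy is computed. Assuming it is the fraction of exactly correct products over the 20 pairs listed above, a hypothetical scoring harness could look like the sketch below. `PAIRS` and `exact_match_accuracy` are illustrative names, and `predict` stands for any system under test. No results from the paper are reproduced here.

```python
from typing import Callable, List, Tuple

# The 20 operand pairs from the test table (hypothetical harness).
PAIRS: List[Tuple[int, int]] = [
    (12, 34), (123, 345), (1234, 3456), (12345, 34567), (123456, 345678),
    (23, 45), (234, 456), (2345, 4567), (23456, 45678), (234567, 456789),
    (34, 56), (345, 567), (3456, 5678), (34567, 56789), (345678, 567891),
    (45, 67), (456, 678), (4567, 6789), (45678, 67891), (456789, 678912),
]


def exact_match_accuracy(predict: Callable[[int, int], int],
                         pairs: List[Tuple[int, int]] = PAIRS) -> float:
    """Assumed metric: fraction of pairs whose predicted product is exactly right."""
    correct = sum(1 for a, b in pairs if predict(a, b) == a * b)
    return correct / len(pairs)
```

Exact match is a strict choice: a single wrong digit in a 12-digit product counts as a failure, which is the behavior typically reported for LLM arithmetic.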

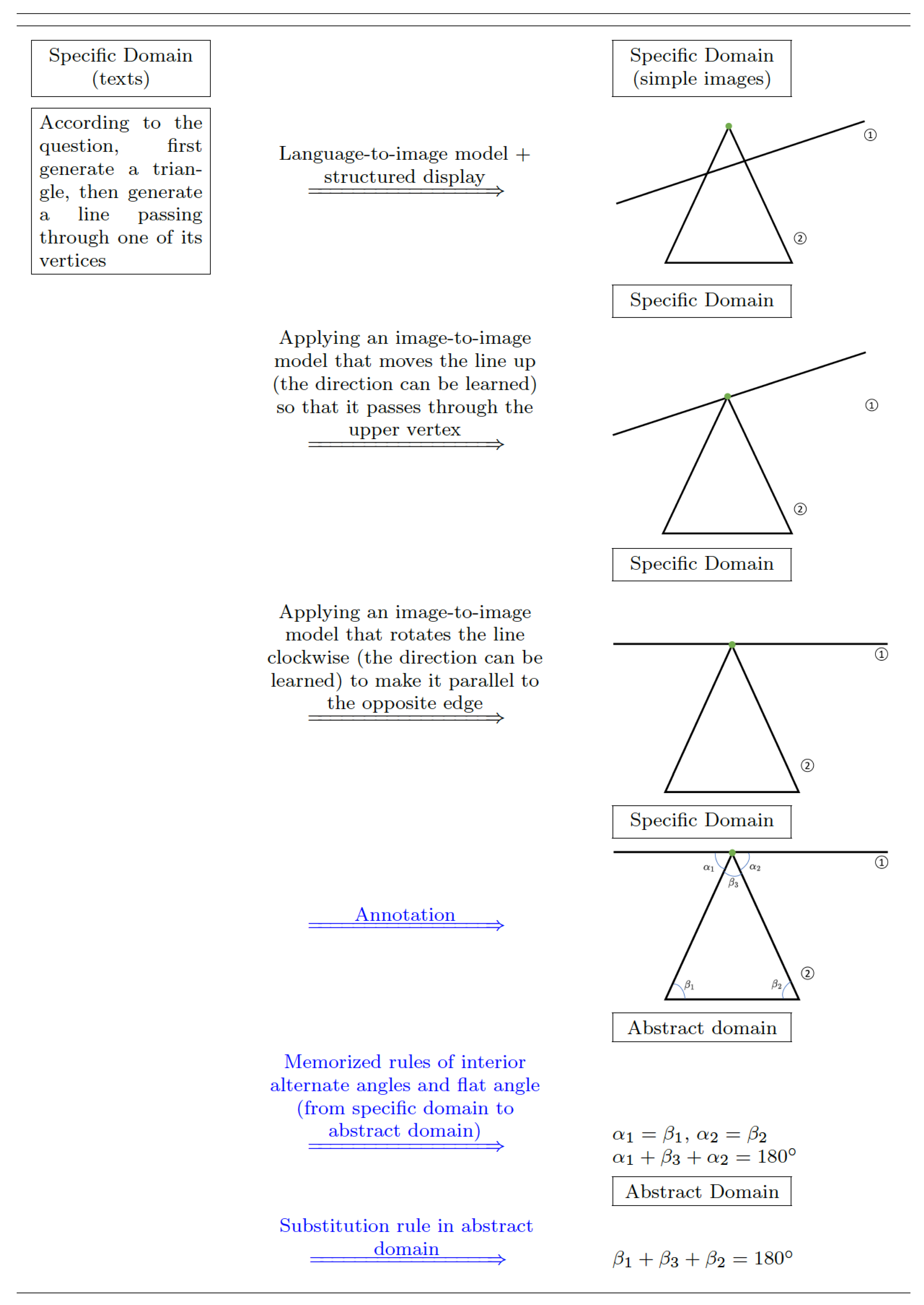

In the second experiment, we show how Ren’s machine completes the proof of the statement that “the sum of the interior angles of a triangle is 180°” using rules learned in advance and a prior knowledge base.

First, we assume that the machine knows a priori that a flat angle (i.e., a straight line) is 180°, a quantity that also appears in the conclusion to be proved. Then, a language model can be trained to generate a text prompt such as “According to the question, first generate a triangle, then generate a line passing through one of its vertices” that may help prove the conclusion with the added auxiliary line. Next, rules based on a language-to-image model and a structured display of geometric objects (a line and a triangle) map the texts to simple images in the specific domain. In our observation, current LLM-based methods cannot correctly generate a structured image from such text prompts. However, image-to-image models can be trained to rotate and translate the geometric objects in the proper directions so that the straight line passes through one vertex of the triangle and becomes parallel to the opposite edge. After that, an annotation mapping rule in the specific domain can be applied to label the involved angles in the generated image. Subsequently, the previously learned and memorized rules of alternate interior angles and of the flat angle convert the images in the specific domain into angular equations in the abstract domain, as shown in Figure 3. Finally, the proof is completed by applying the stored substitution rule in the abstract domain to the generated equations.
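The final abstract-domain step can be sketched in a few lines of Python. This sketch assumes that the annotation step has already produced angle names for triangle ABC with an auxiliary line DE drawn through vertex A parallel to BC. The point labels and the function `substitute` are hypothetical, and each equation is represented only by the list of angles summing to 180°.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical abstract-domain step: line DE passes through vertex A of
# triangle ABC and is parallel to BC (all labels are illustrative).


def substitute(terms: List[str], equalities: Dict[str, str]) -> List[str]:
    """Substitution rule: replace each angle that has a known equal angle."""
    return [equalities.get(t, t) for t in terms]


# Flat-angle rule: the three angles at A along line DE sum to 180 degrees.
flat_angle = ["DAB", "BAC", "CAE"]        # DAB + BAC + CAE = 180
# Alternate interior angles (DE parallel to BC; AB and AC are transversals).
alternate = {"DAB": "ABC", "CAE": "BCA"}  # DAB = ABC, CAE = BCA

result = substitute(flat_angle, alternate)  # ABC + BAC + BCA = 180
target = ["BAC", "ABC", "BCA"]              # the three interior angles of ABC
proved = Counter(result) == Counter(target)
```

Comparing multisets rather than ordered lists reflects that the order of terms in a sum is irrelevant to the conclusion.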

Figure 2. The Workflow of Computing the Multiplication of Positive Integers on Ren’s Machine


Figure 3. The Workflow of Proving That the Sum of the Interior Angles of a Triangle Is 180° on Ren’s Machine


4. Conclusions and Discussion

In this paper, we propose a non-Turing architecture, Ren’s machine, that solves a problem by autonomously learning rules and building knowledge bases from experiments it generates while approaching an answer. It performs input and output through two types of read/write heads, one in the specific domain and one in the abstract domain. The mappings among these domains can be based on learned rules such as neural networks, memorized tables, or simply built-in rendering functions. In this way, the controller can be commanded and instructed cooperatively by the contents on the tape in the abstract domain and those on the tape in the specific domain. The experiments demonstrate that this flexible mechanism enables Ren’s machine to solve the multiplication problem for positive integers in a self-directed manner, with higher accuracy than the state-of-the-art large language model used in Microsoft Copilot. The machine’s reasoning ability is also demonstrated by the proof of a theorem in plane geometry.

Further advantages of Ren’s machine could be explored by tackling problems that a Turing machine intrinsically cannot solve. The halting problem is the problem of determining whether an arbitrary program will terminate or run forever. It is proven to be undecidable on a Turing machine: no general algorithm on a Turing machine can solve the halting problem for all possible pairs of program and input. On Ren’s machine, by contrast, the controller is also connected to the specific domain through another type of read/write head, so it can be commanded and instructed by the contents shown on the tape in the specific domain. Thus, the halting problem can be addressed with high accuracy in practice, for example by including a clock in the specific domain to measure how long a program has been running, by using neural networks to recognize the text patterns of never-ending programs, or by counting the number of steps from their printouts on the screen. In this regard, rather than imitating humans in conversation, a machine that can actively generate rules from thought or tool experiments and generalize them to approach an answer to the problem it is trying to solve would be closer to true artificial general intelligence.
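One of the practical strategies mentioned above, a clock or step counter observed from outside the program, can be sketched as follows. This is a heuristic predictor under stated assumptions, not a solution to the halting problem. A program is modeled as a hypothetical deterministic `step` function over a hashable state that returns `None` on halting. An exactly repeated state proves an infinite loop, whereas exhausting the step `budget` only yields a guess that can be wrong.

```python
from typing import Callable, Hashable, Optional

# Hypothetical halting heuristic: an external step counter ("clock") plus
# exact-state repetition detection. This does NOT decide halting in general.


def predict_halts(step: Callable[[Hashable], Optional[Hashable]],
                  state: Hashable, budget: int = 10_000) -> bool:
    seen = set()
    for _ in range(budget):
        if state is None:
            return True   # the program halted within the budget
        if state in seen:
            return False  # exact repeat of a deterministic state: provably loops
        seen.add(state)
        state = step(state)
    if state is None:
        return True       # halted exactly when the budget ran out
    return False          # budget exhausted: guess "runs forever" (may be wrong)


countdown = lambda n: None if n == 0 else n - 1  # halts after n + 1 steps
cycle = lambda n: (n + 1) % 3                    # revisits states 0, 1, 2 forever
```

The budget encodes the trade-off between accuracy and waiting time: a program such as `countdown` started from 1000 with a budget of 10 is misclassified as non-halting, which is why this approach can only be accurate in practice rather than in general.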

5. Non-Turing Robotics

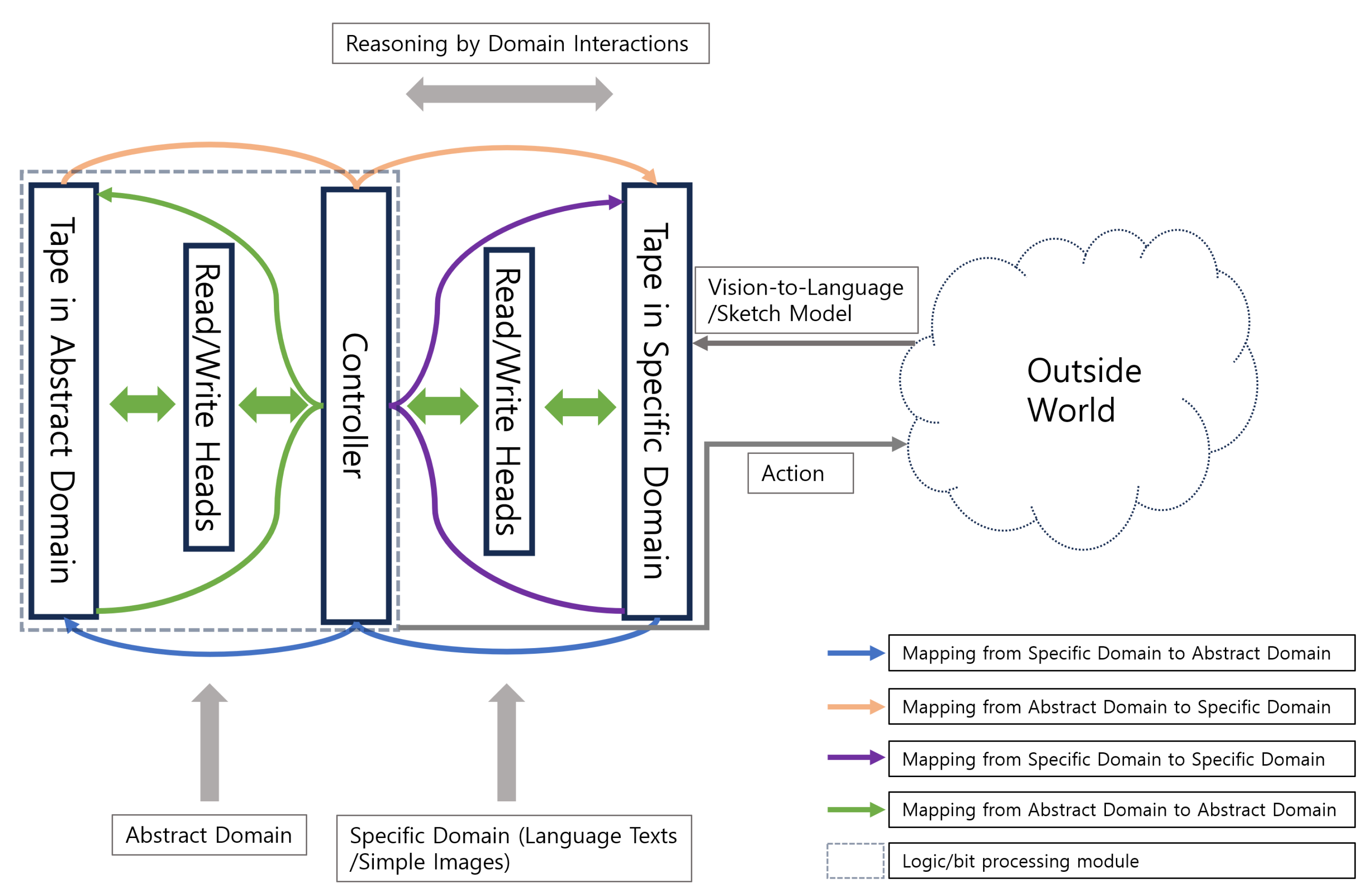

Although recent Vision-Language-Action (VLA) models enable robots to complete a variety of basic tasks, they still suffer from two drawbacks: (1) they lack sound reasoning ability for handling complicated tasks, and (2) they require intensive environment setups, datasets, and computation for training and fine-tuning. To address these challenges, we propose a non-Turing robotic architecture based on Ren’s machine (see Figure 4), which conducts spatial-temporal reasoning through interactions between the specific domain and the abstract domain.

In the proposed non-Turing robotic architecture based on Ren’s machine, reasoning is carried out through interactions between the abstract domain and the specific domain. The robot comprehends the current situation in the outside world by first converting videos of the scene into sketches in the specific domain using a vision-to-language/sketch model. If this model is trained properly, the sketches will, with high probability, contain the information needed to determine whether a collision is about to occur. A mapping (e.g., a classification neural network) then converts the sketches into contents in the abstract domain, such as ‘True’ or ‘False’ flags for collision. In addition, a generative model can be used to generate sketches of the resulting scene, assuming that a tentative movement is executed by the robot. With this mechanism, the robot can conduct multiple virtual trials (thought experiments) to find the best policy for avoiding potential collisions. The action generated by the best policy is then executed by the robot in the real world. Note that in this process the reasoning and computation operate on simple sketches rather than on full videos of real-world scenes. As a result, the computational cost, as well as the cost of setting up environments and generating data, will be markedly reduced in the proposed robotic architecture.
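The thought-experiment loop described above can be sketched in Python under heavy simplifying assumptions, none of which come from the paper. A "sketch" is reduced to the robot's 2D position plus circular obstacles. The generative model is replaced by straight-line kinematics (`predict_sketch`), and the collision classifier is replaced by a distance test (`collision_flag`). `best_move` runs one virtual trial per candidate movement. All names and the `robot_radius` parameter are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Obstacle = Tuple[Point, float]  # (center, radius)

# Hypothetical stand-ins: straight-line kinematics for the generative model
# and a distance test for the collision classifier.


def predict_sketch(robot: Point, move: Point) -> Point:
    """Stand-in generative model: robot position after a tentative move."""
    return (robot[0] + move[0], robot[1] + move[1])


def collision_flag(robot: Point, obstacles: List[Obstacle],
                   robot_radius: float = 0.5) -> bool:
    """Stand-in classifier mapping a sketch to the abstract flag True/False."""
    return any(math.dist(robot, center) < r + robot_radius
               for center, r in obstacles)


def best_move(robot: Point, goal: Point, moves: List[Point],
              obstacles: List[Obstacle]) -> Optional[Point]:
    """Run one virtual trial per candidate move, discard those flagged as
    collisions, and return the move ending closest to the goal (None if all
    candidates collide)."""
    best, best_dist = None, math.inf
    for move in moves:
        nxt = predict_sketch(robot, move)
        if collision_flag(nxt, obstacles):
            continue
        d = math.dist(nxt, goal)
        if d < best_dist:
            best, best_dist = move, d
    return best


candidates = [(1.0, 0.0), (1.0, 1.0), (1.0, -1.0), (0.0, 1.0)]
choice = best_move((0.0, 0.0), (5.0, 0.0), candidates, [((1.0, 0.0), 0.4)])
```

Only the end position of each move is checked here. A fuller sketch would also test intermediate positions along the path, but even this toy version shows why reasoning over compact sketches is cheap compared with simulating full video frames.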

The fundamental differences between our architecture and the Agent AI structure recently proposed in [14] are as follows:

- 1) In their structure, the virtual world is meant to be as close to the real world as possible. Their virtual world is therefore more static, and its complexity approaches that of the real world, whereas the sketches of scenes in the workspace (the additional tape) in the specific domain of our robotic architecture are actively generated, relevant, simpler, and more dynamic.

- 2) In our architecture, reasoning is realized through interactions between and within the abstract domain and the specific domain of Ren’s machine.

- 3) Their structure, based on the Turing machine, has no actual sensor or camera observing the virtual world; it relies only on internal parameters to reconstruct the complex virtual world. In contrast, in our robotic architecture, the contents of the workspace (the additional tape) in the specific domain can be efficiently observed and mapped into various domains, which significantly facilitates the reasoning process.

The spatial-temporal reasoning workflow described above illustrates how these differences enable the proposed architecture based on Ren’s machine to overcome the insufficient reasoning ability and the high computation and setup costs commonly encountered by Turing-based robotic architectures.

References

1. Holyoak, K.J. Why I am not a Turing machine. Journal of Cognitive Psychology 2024, pp. 1–12.

2. MacLennan, B.J. Natural computation and non-Turing models of computation. Theoretical Computer Science 2004, 317, 115–145.

3. Harel, D.; Marron, A. The Human-or-Machine Issue: Turing-Inspired Reflections on an Everyday Matter. Communications of the ACM 2024, 67, 62–69.

4. Brynjolfsson, E. The Turing trap: The promise & peril of human-like artificial intelligence. In Augmented Education in the Global Age; 2023; pp. 103–116.

5. Mitchell, M. The Turing Test and our shifting conceptions of intelligence, 2024.

6. Hoffmann, C.H. Is AI intelligent? An assessment of artificial intelligence, 70 years after Turing. Technology in Society 2022, 68, 101893.

7. MacLennan, B.J. Mapping the territory of computation including embodied computation. In Handbook of Unconventional Computing: Volume 1: Theory; 2022; pp. 1–30.

8. Graves, A.; Wayne, G.; Danihelka, I. Neural Turing Machines. arXiv preprint arXiv:1410.5401, 2014.

9. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Computation 1997, 9, 1735–1780.

10. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971, 2023.

11. OpenAI. ChatGPT, 2023.

12. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016.

13. Microsoft. Microsoft Copilot: Your AI companion, 2023.

14. Durante, Z.; Huang, Q.; Wake, N.; Gong, R.; Park, J.S.; Sarkar, B.; Taori, R.; Noda, Y.; Terzopoulos, D.; Choi, Y.; et al. Agent AI: Surveying the horizons of multimodal interaction. arXiv preprint arXiv:2401.03568, 2024.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).
