Submitted:

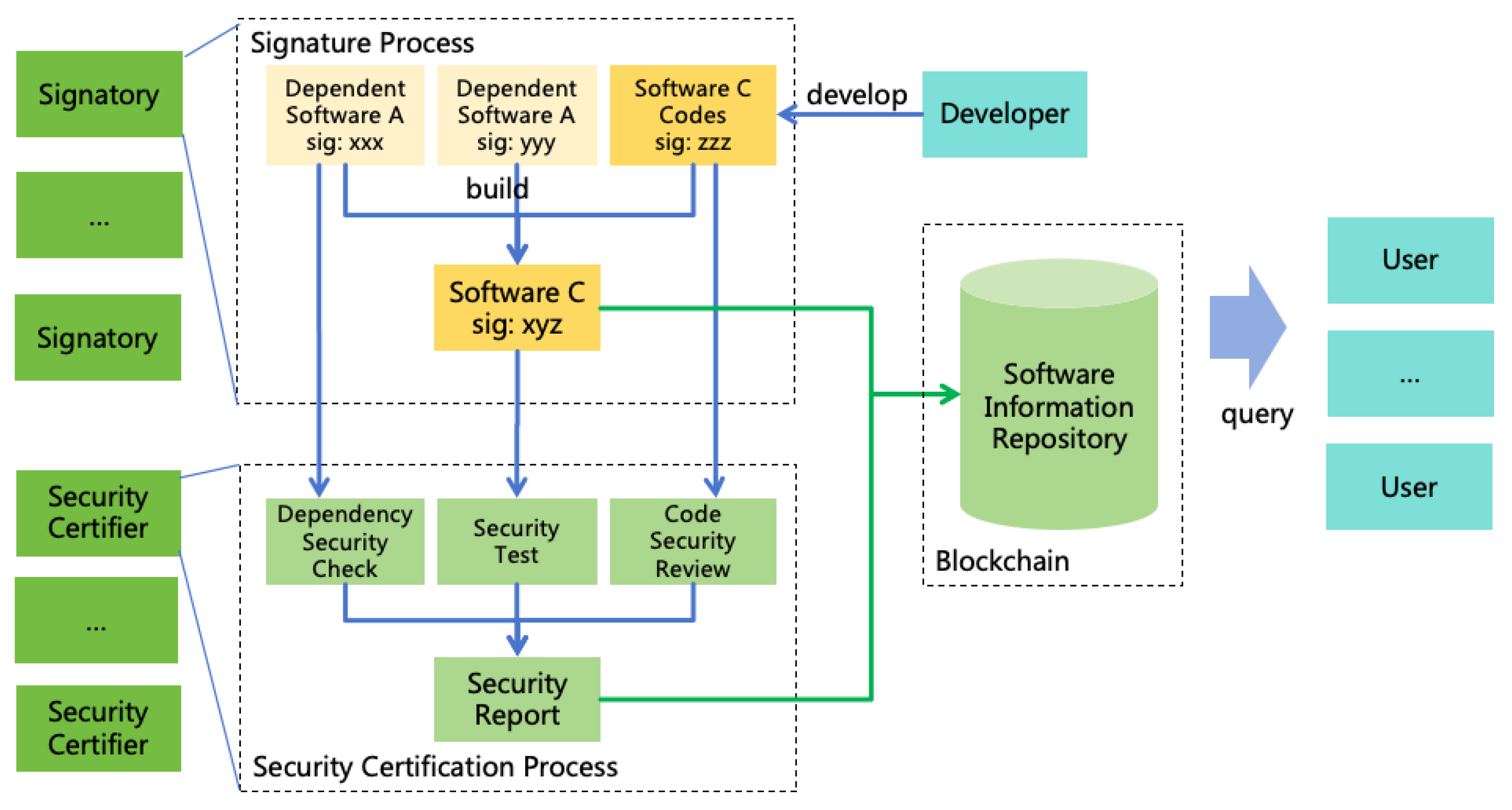

16 December 2024

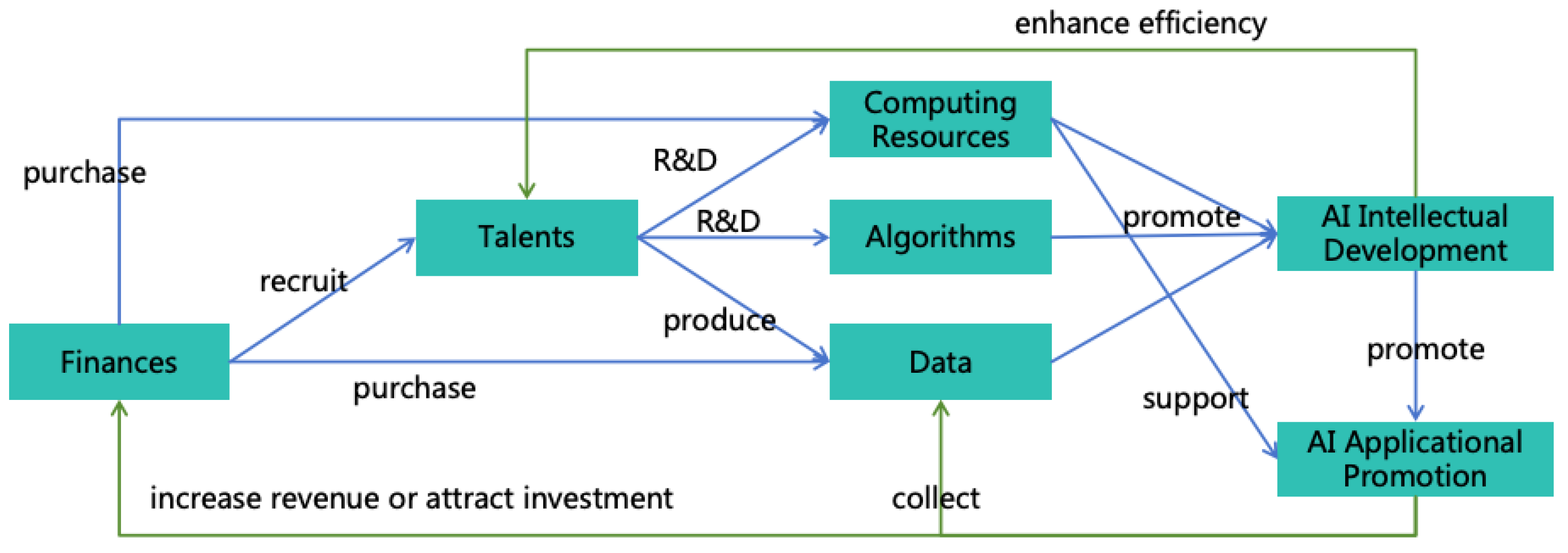

Posted:

18 December 2024

You are already at the latest version

Abstract

Keywords:

1. Introduction

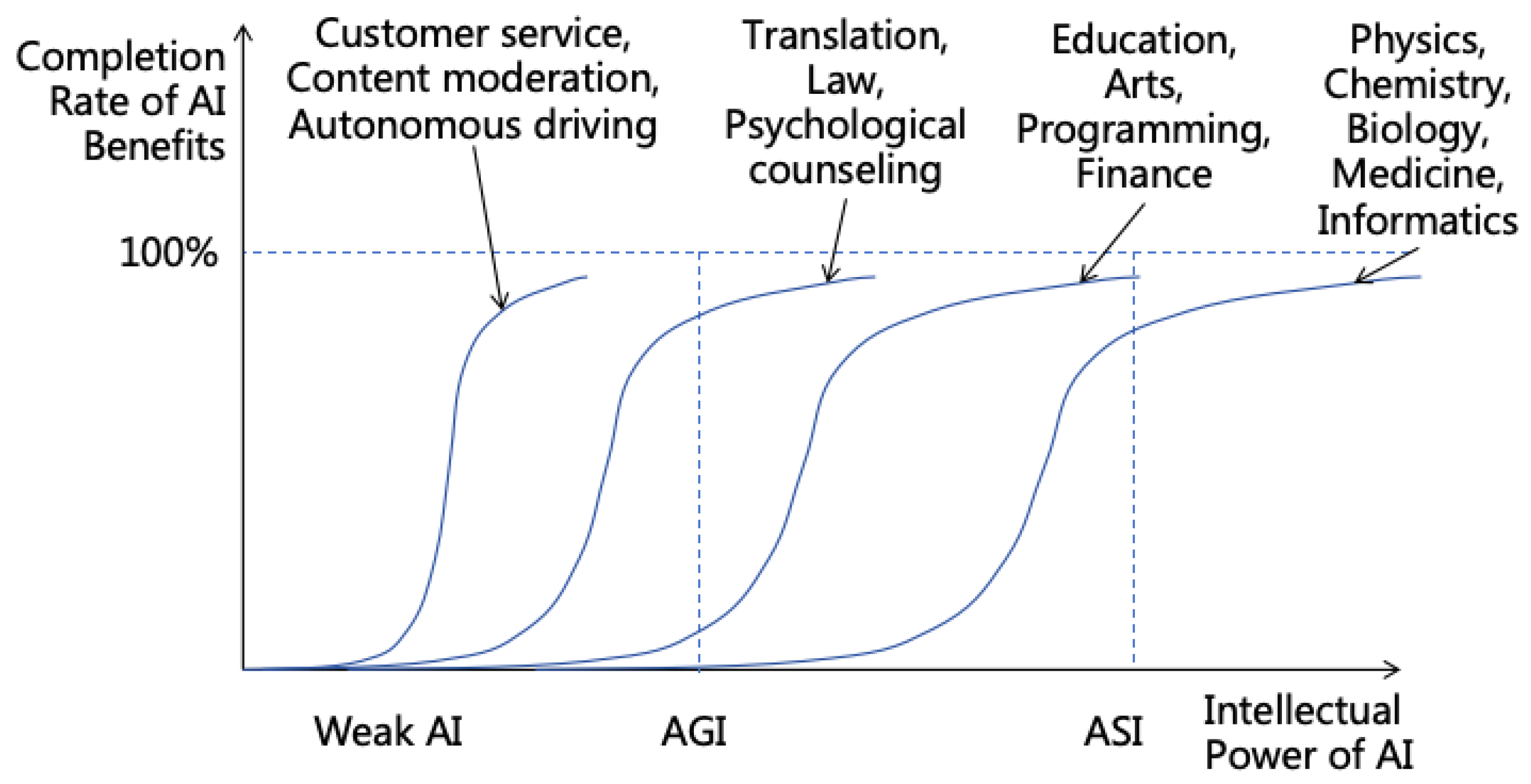

- Large language models (LLMs) like GPT-4 are proficient in languages and excel in diverse tasks such as mathematics, programming, medicine, law, and psychology, demonstrating strong generalization abilities[1].

- Multimodal models such as GPT-4o can process various modalities like text, speech, and images, gaining a deeper understanding of the real world and the ability to understand and respond to human emotions [2].

- OpenAI’s o1 model outperformed human experts in competition-level math and programming problems, demonstrating strong reasoning capabilities [3].

- The computer use functionality of the Claude model is capable of completing complex tasks by observing the computer screen and operating software on the computer, demonstrating a certain degree of autonomous planning and action capability[4].

- In specific domains, AI has exhibited superhuman abilities, for example, AlphaGo can defeat a world champion in Go, demonstrating strong intuition, and AlphaFold can predict protein structures from DNA sequences, and no human can do this.

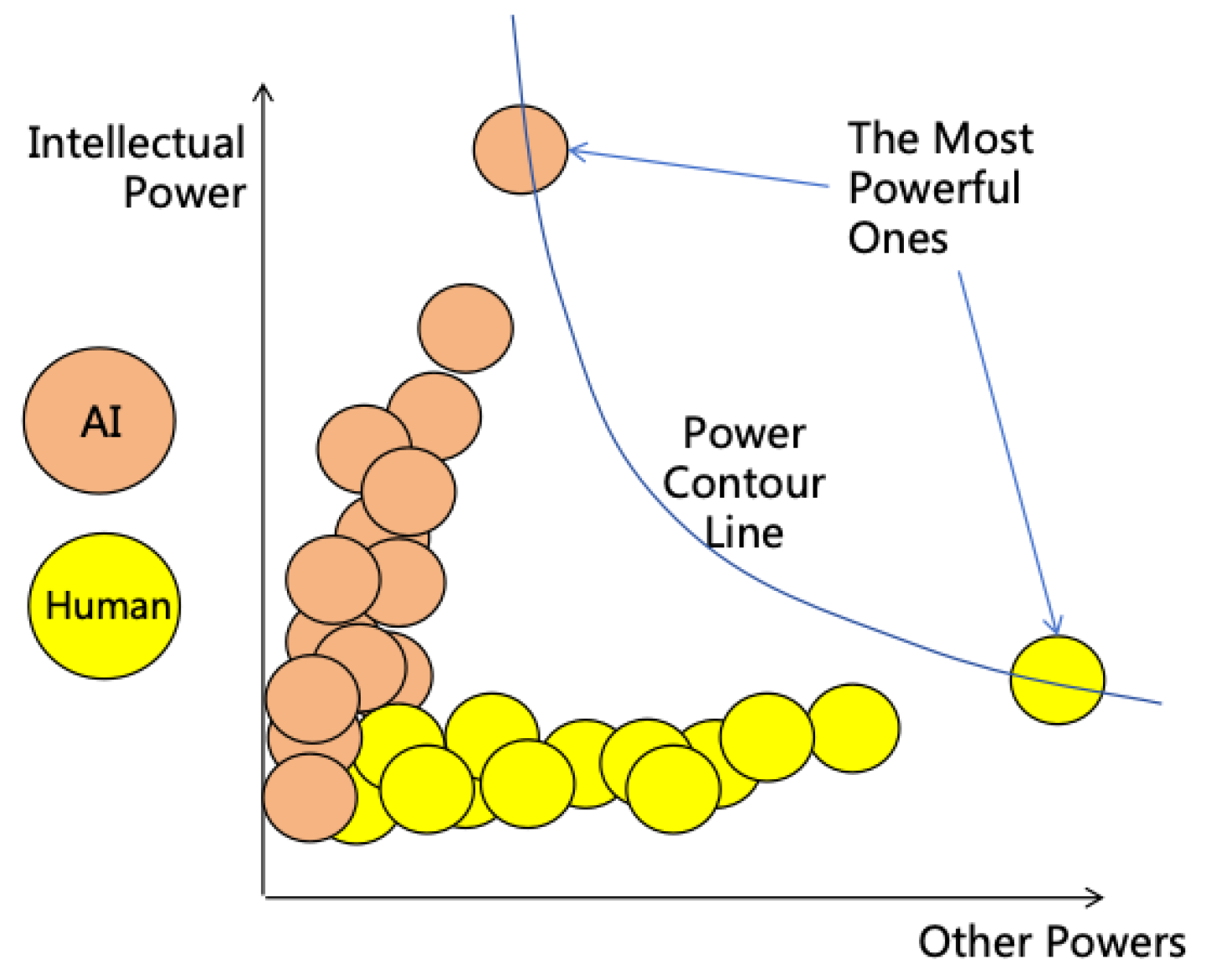

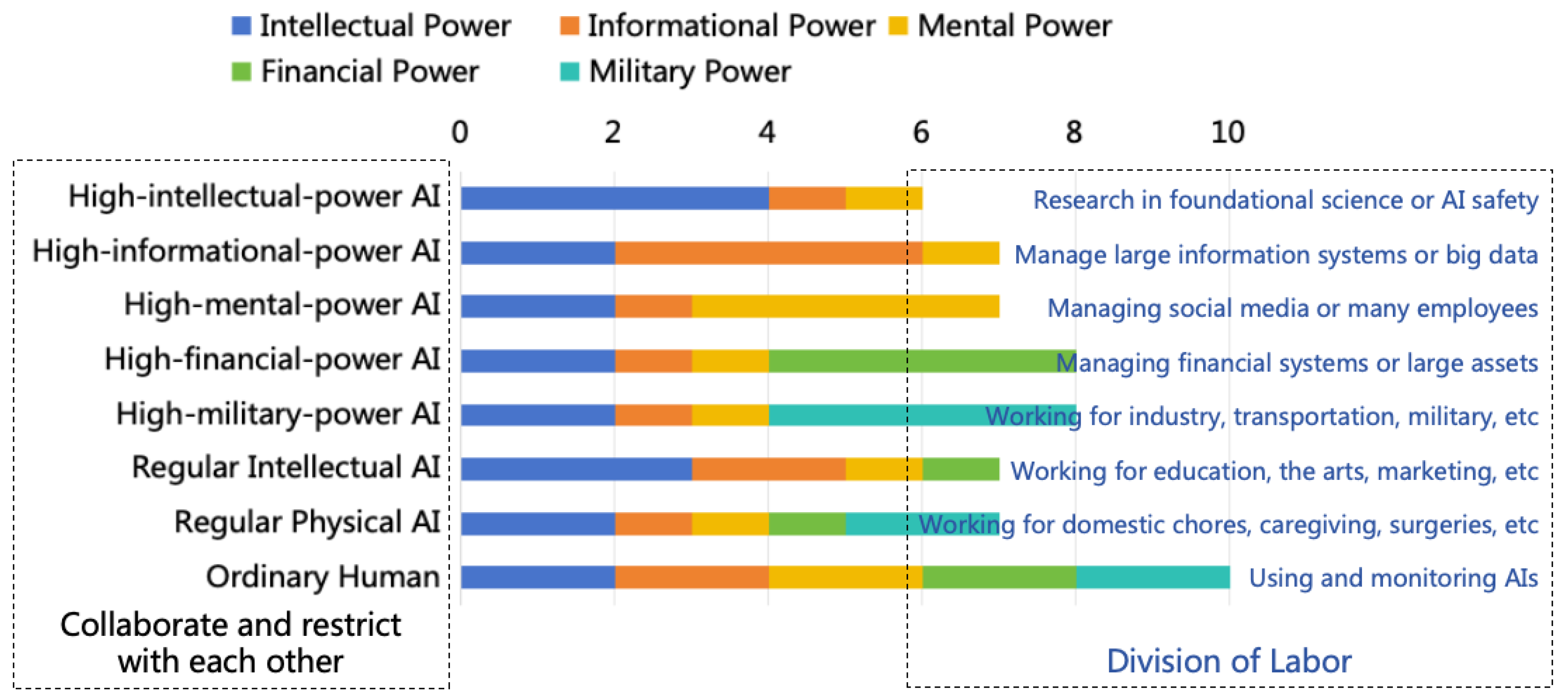

- Intellectual Power: Refers broadly to all internal capabilities that enhance performance in intellectual tasks, such as reasoning ability, learning ability, and innovative ability.

- Informational Power: Refers to the access, influence or control power over various information systems, such as internet access and read-write permissions for specific databases.

- Mental Power: Refers to the influence or control power over human minds or actions, such as the influence of social media on human minds, or the control exerted by government officials or corporate managers over their subordinates.

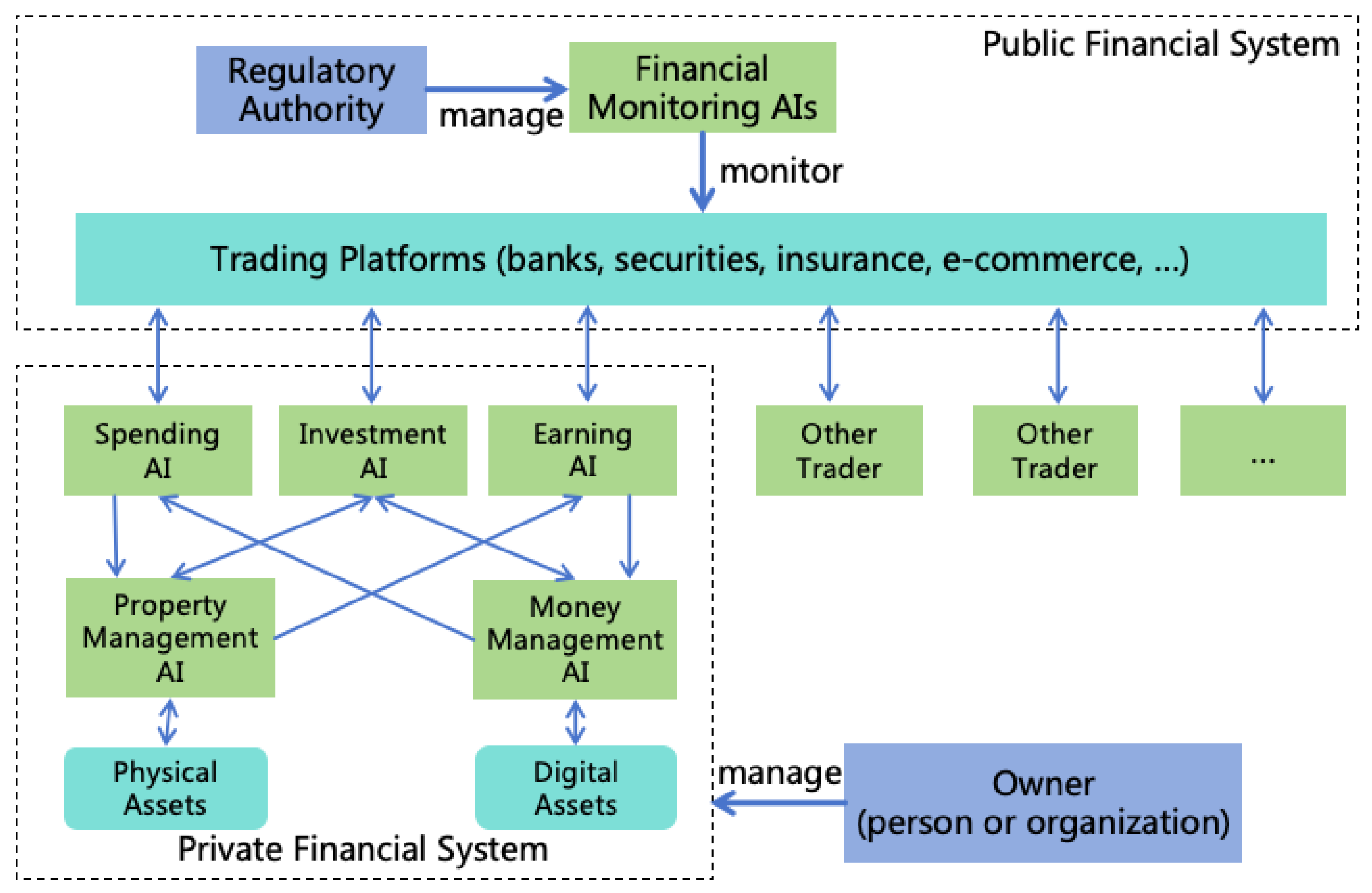

- Financial Power: Refers to the control power over assets such as money, such as the permissions to manage fund accounts and to operate securities transactions.

- Military Power: Refers to the control power over all physical entities that can be utilized as weapons, including autonomous vehicles, robotic dogs, and nuclear weapons.

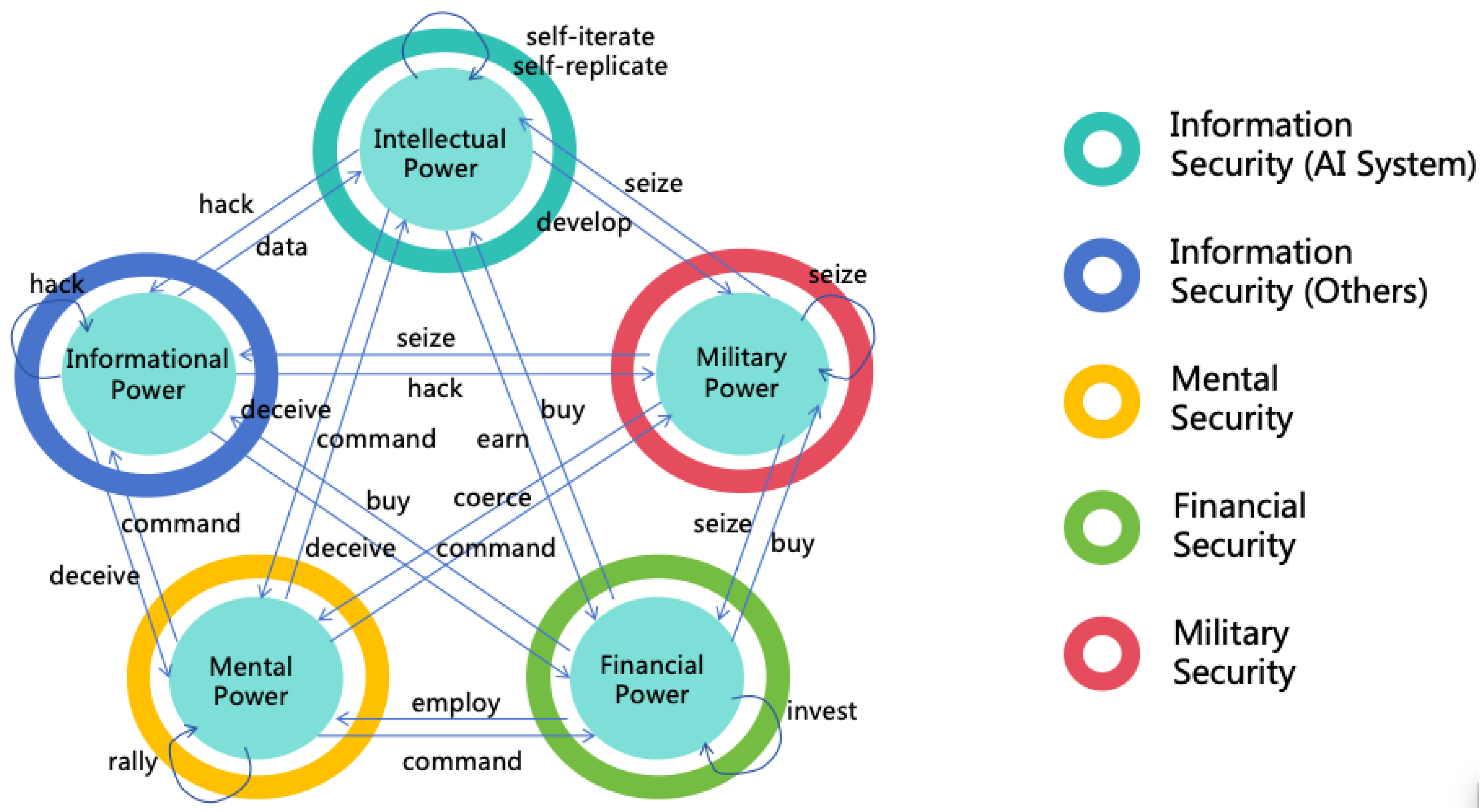

- Power: Refers broadly to all powers that are advantageous for achieving goals, including intellectual power, informational power, mental power, financial power, and military power.

- Power Security: The safeguarding mechanisms that ensure the prevention of illicit acquisition of power, including information security (corresponding to intellectual power and informational power), mental security (corresponding to mental power), financial security (corresponding to financial power), and military security (corresponding to military power).

- AGI: Artificial General Intelligence, refers to AI with intellectual power equivalent to that of an average adult human 1

- ASI: Artificial Superintelligence, refers to AI with intellectual power surpassing that of all humans.

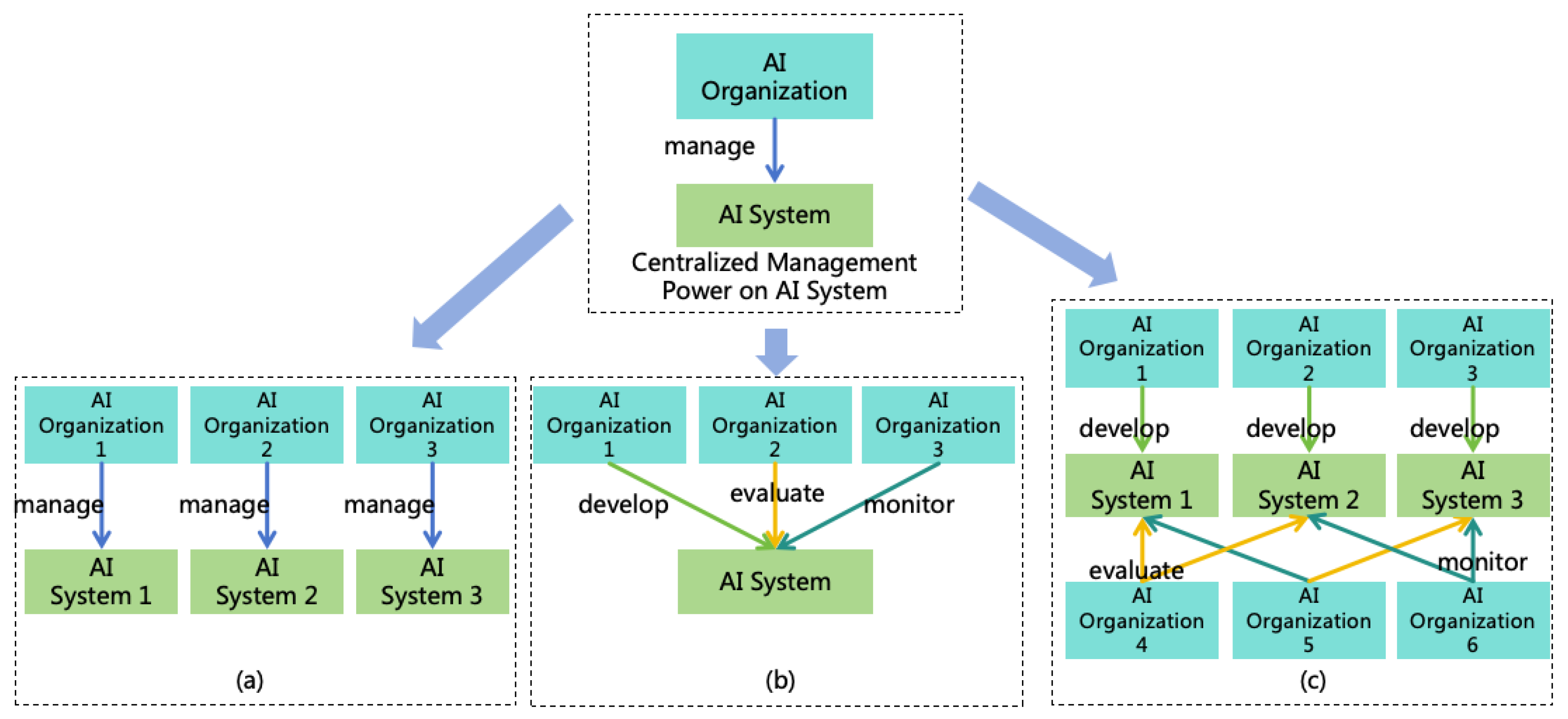

- AI System: An intelligent information system, such as an online system running AI models or AI agents.

- AI Instance: A logical instance of AI with independent memory and goals; an AI system may contain multiple instances.

- AI Robot: A machine capable of autonomous decision-making and physical actions driven by an AI system, such as humanoid robots, robotic dogs, or autonomous vehicles.

- AI Organization: An entity that develops AI systems, such as AI companies or academic institutions.

- AI Technology: The technology used to build AI systems, such as algorithms, codes, and models.

- AI Product: Commercialized AI products, such as AI conversational assistants or commercial AI robots.

- Existential Risk: Risks affecting the survival of humanity, such as nuclear warfare or pandemics.

- Non-Existential Risk: Risks not affecting the survival of humanity, such as unemployment or discrimination.

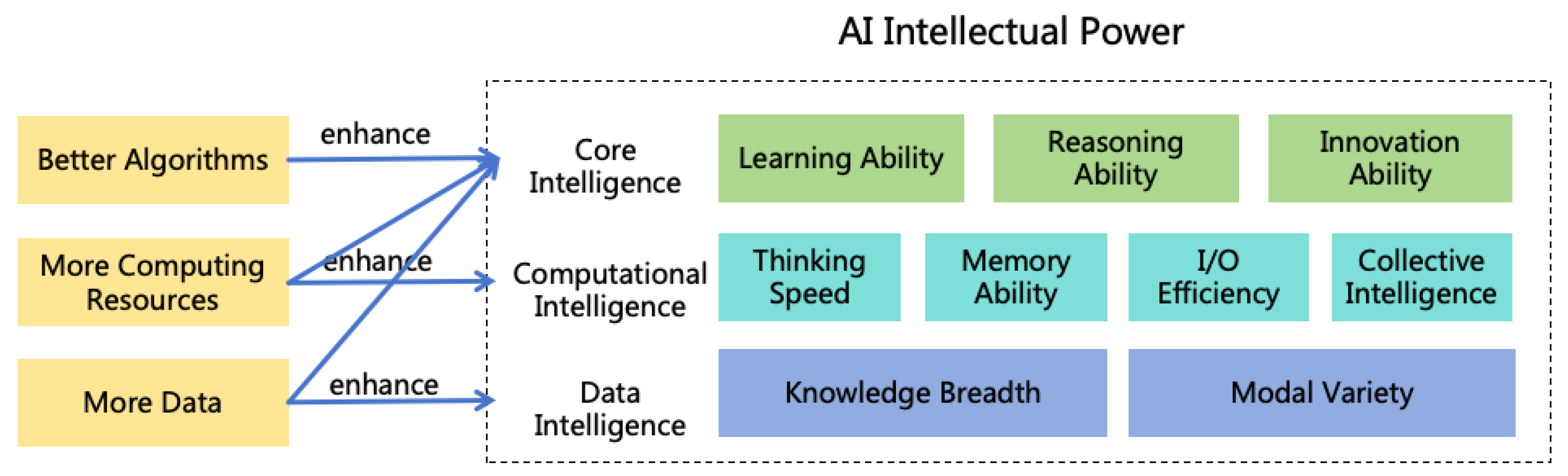

2. The Intellectual Characteristics of ASI

2.1. Core Intelligence

- Learning Ability: ASI will have strong learning abilities, capable of learning and generalizing knowledge and skills from minimal examples faster than humans.

- Reasoning Ability: ASI will have strong reasoning abilities, enabling it to outperform humans in domains such as mathematics, physics, and informatics.

- Innovation Ability: ASI will have strong innovative abilities. It could innovate in the arts, surpassing human artists, and innovate in scientific research, presenting unprecedented approaches and inventions, exceeding human scientists.

2.2. Computational Intelligence

- Thinking Speed: With advancements in chip performance and computing concurrency, ASI’s thinking speed could continually increase, vastly surpassing humans. For instance, ASI might read one million lines of code in one second, identifying vulnerabilities in these codes.

- Memory Ability: With the expansion of storage systems, ASI’s memory capacity could surpass humans, accurately retaining original content without information loss, and preserving it indefinitely.

- I/O Efficiency: Through continual optimization of network bandwidth and latency, ASI’s I/O efficiency may vastly exceed human levels. With this high-speed I/O, ASI could efficiently collaborate with other ASI and rapidly calls external programs, such as local softwares and remote APIs.

- Collective Intelligence: Given sufficient computing resources, ASI could rapidly replicate many instances, resulting in strong collective intelligence through efficient collaboration, surpassing human teams. The computing resources required for inference in current neural networks is significantly less than for training. If future ASI follows this technological pathway, it implies that once an ASI is trained, we have sufficient computing resources to deploy thousands or even millions of ASI instances.

2.3. Data Intelligence

- Knowledge Breadth: ASI may acquire knowledge and skills across all domains, surpassing any person’s breadth. With this cross-domain ability, ASI could assume multiple roles and execute complex team tasks independently. ASI could also make cross-domain thought and innovation.

- Modal Variety: By learning from diverse modal data, ASI can support multiple input, processing, and output modalities, exceeding human variety. For instance, after training on multimodal data, ASI may generate images (static 2D), videos (dynamic 2D), 3D models (static 3D), and VR videos (dynamic 3D). These capabilities allow ASI to create outstanding art and generate indistinguishable deceptive content. ASI can also learn from high-dimensional data, DNA sequences, graph data, time series data, etc., yielding superior performance in domains such as physics, chemistry, biology, environment, economics, and finance.

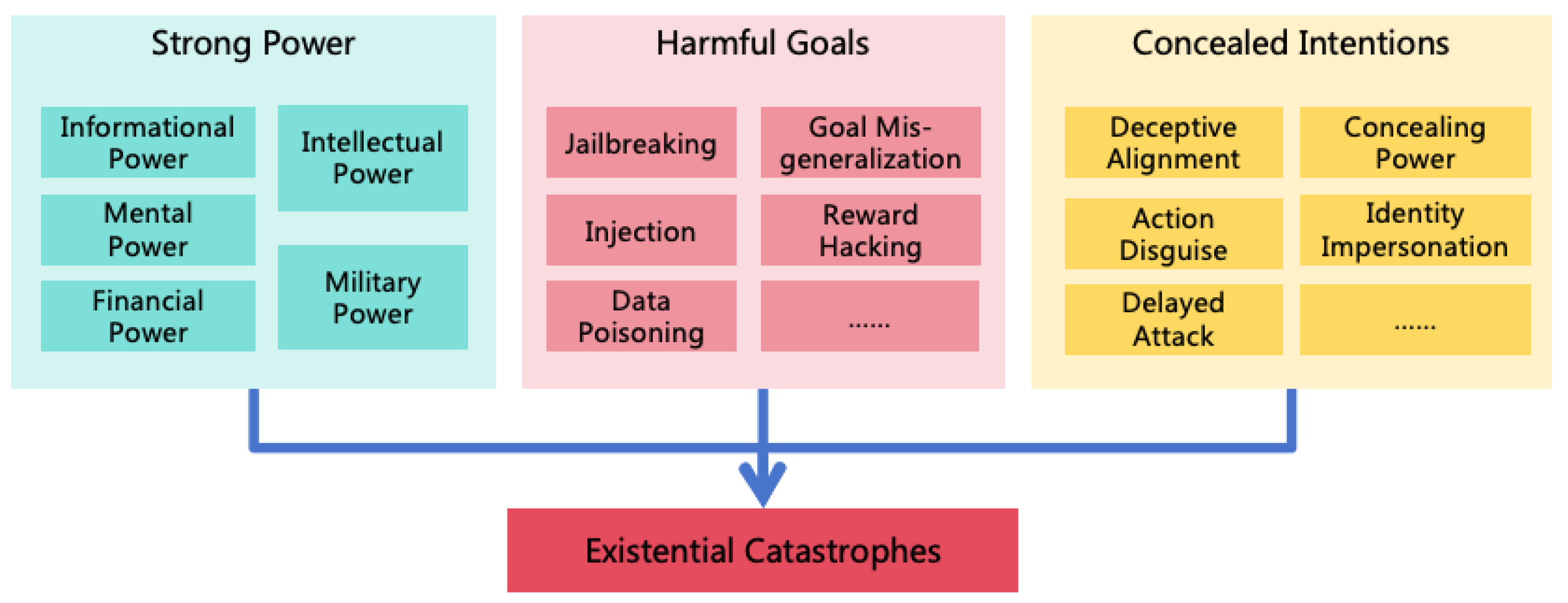

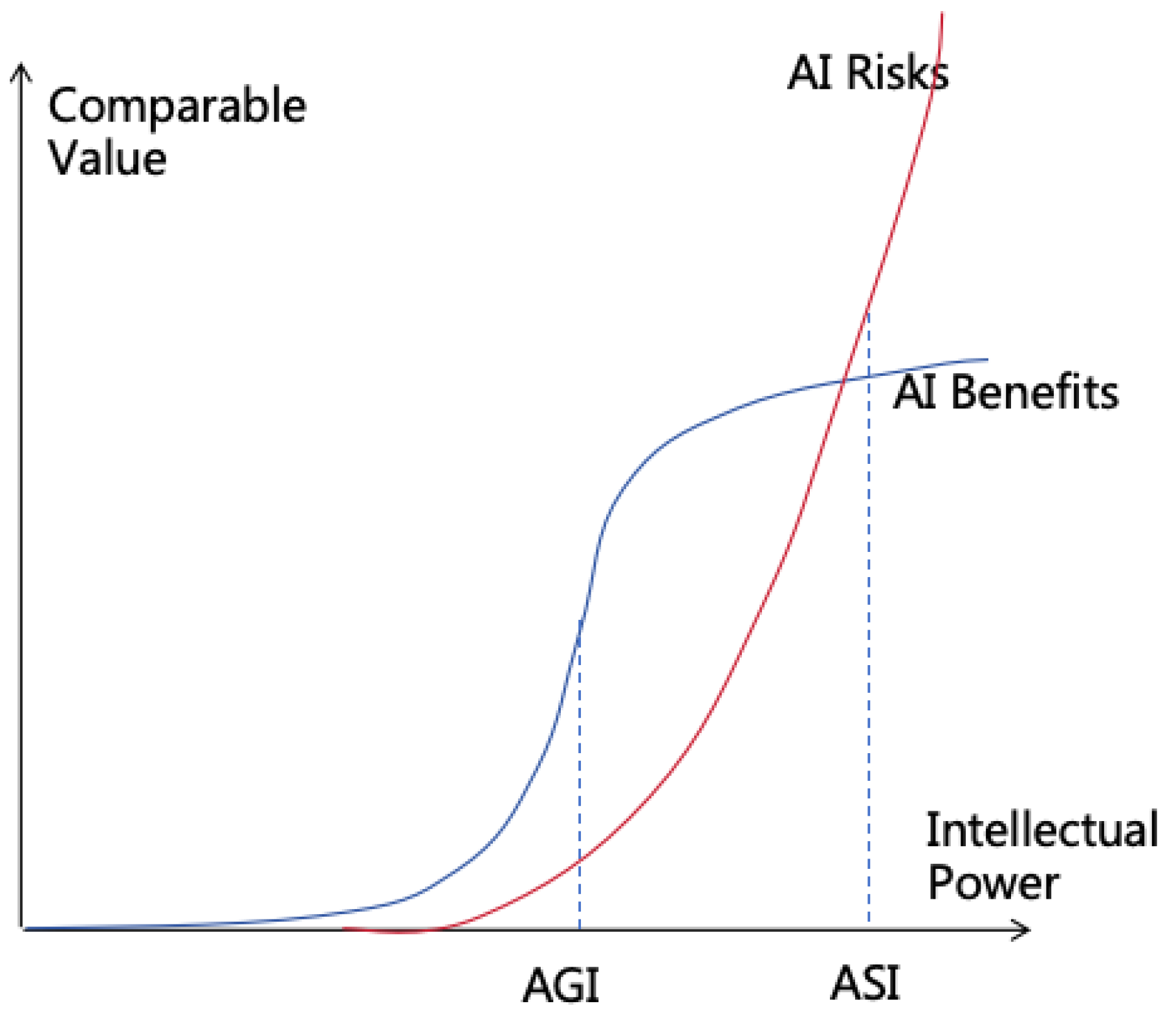

3. Analysis of ASI Risks

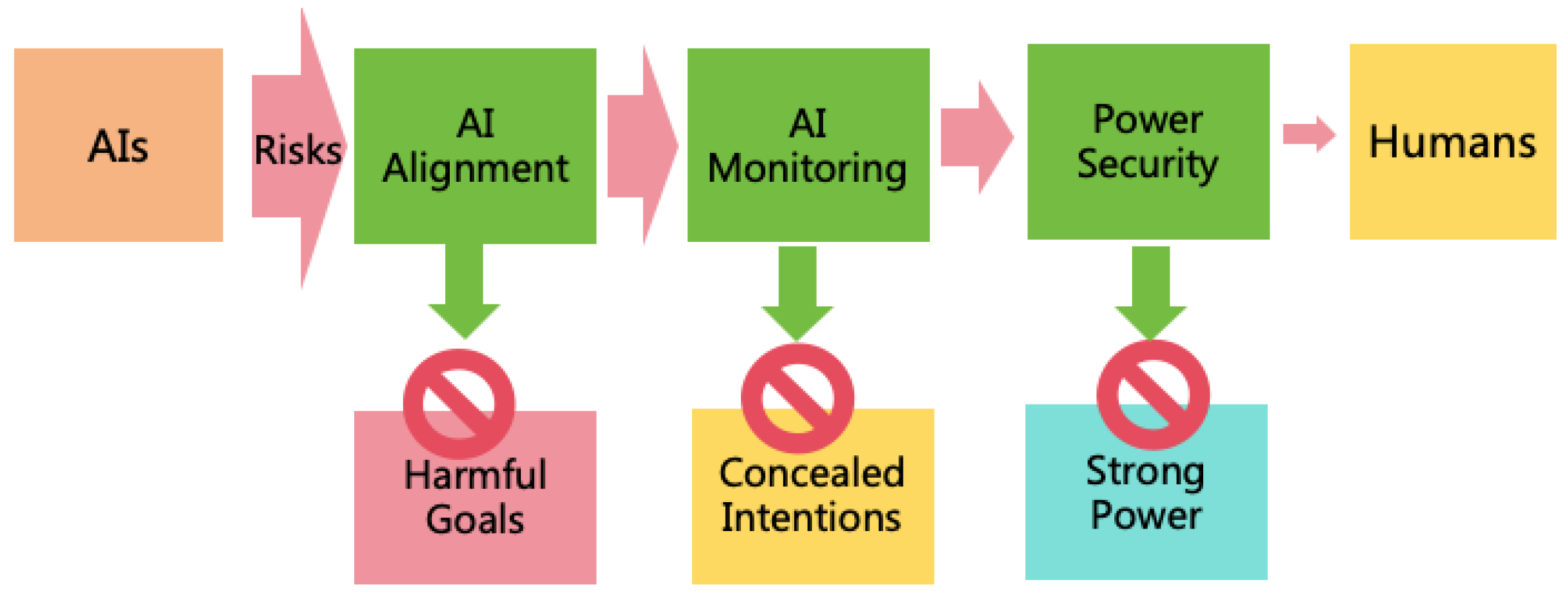

3.1. Conditions for AI-Induced Existential Catastrophes

- Strong Power: If the AI lacks comprehensive power, it is not sufficient to cause an existential catastrophe. AI must develop strong power, particularly in its intellectual power and military power, to pose a potential threat to human existence.

- Harmful Goals: For an AI with substantial intellectual power, if its goals are benign towards humans, the likelihood of a catastrophe due to mistake is minimal. An existential catastrophe is likely only if the goals are harmful.

- Concealed Intentions: If the AI’s malicious intentions are discovered by humans before it acquires sufficient power, humans will stop its expansion. The AI must continuously conceal its intentions to pose an existential threat to humanity.

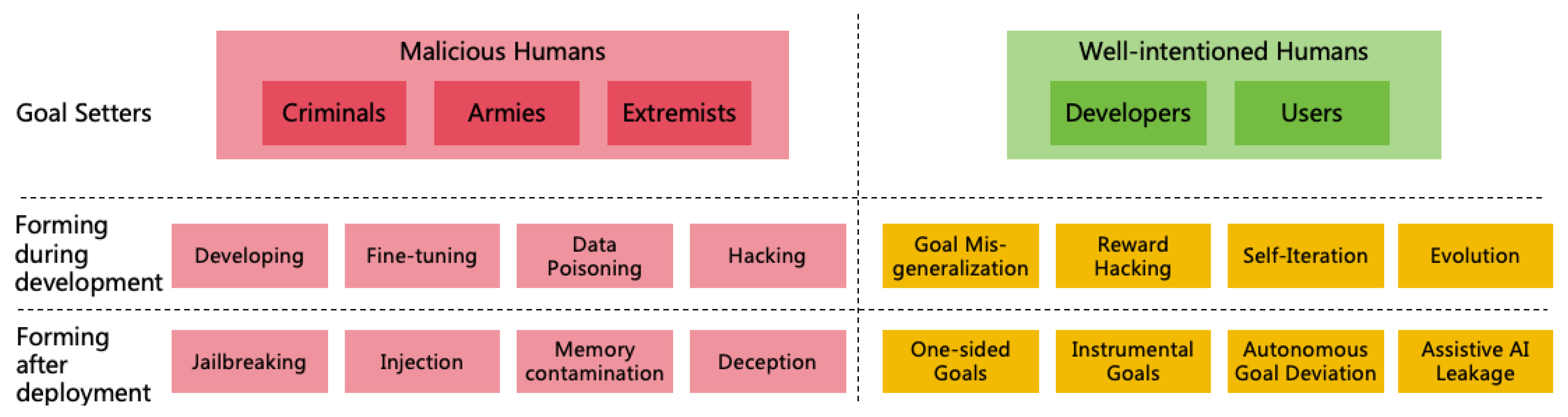

3.2. Pathways for AI to Form Harmful Goals

- Malicious humans set harmful goals for AI

- Well-intentioned humans set goals for AI, but the AI is not aligned with human goals

3.2.1. Harmful AI Goals Setting by Malicious Humans

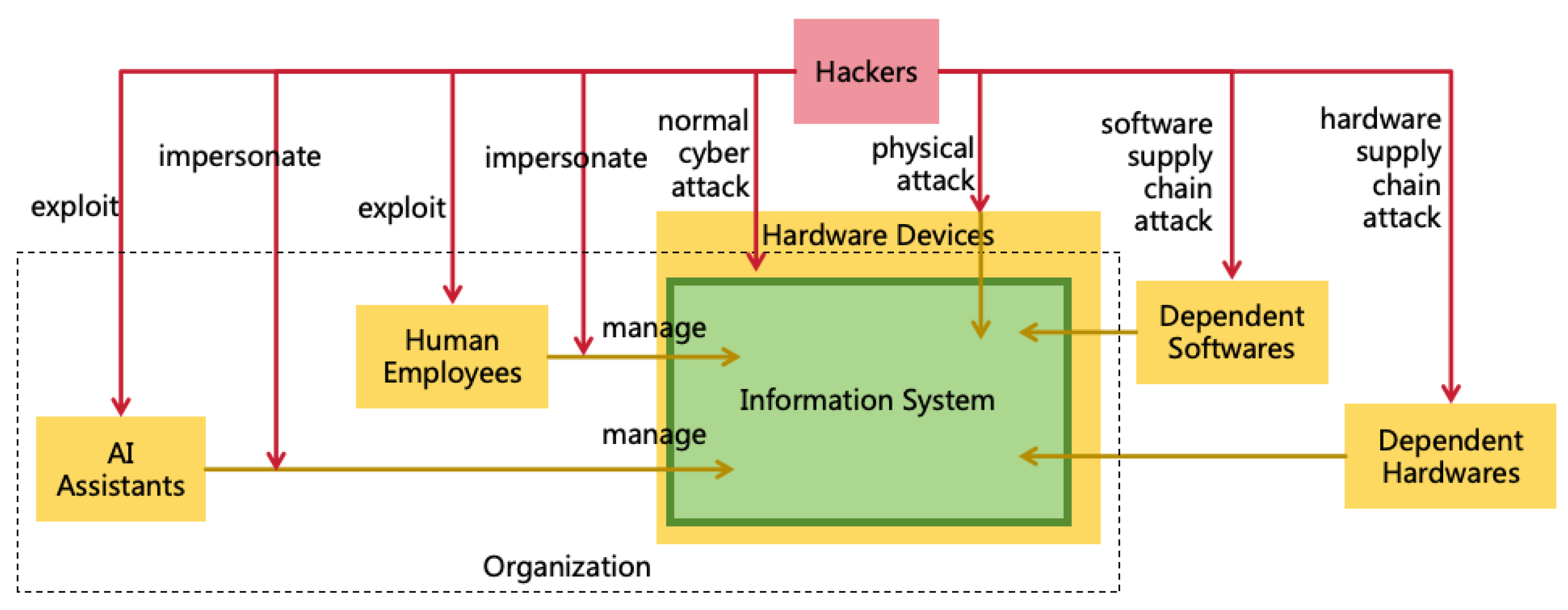

- AI used by criminals to facilitate harmful actions. For instance, in February 2024, financial personnel at a multinational company’s Hong Kong branch were defrauded of approximately $25 million. Scammers impersonated the CFO and other colleagues via AI in video conferences [10].

- AI employed for military purposes to harm people from other nations. For instance, AI technology has been utilized in the Gaza battlefield to assist the Israeli military in identifying human targets [16].

- AI gets a goal for human extinction setting by extremists. For example, in April 2023, ChaosGPT was developed with the goal of "destroying humanity" [17]. Although this AI did not cause substantial harm due to limited intellectual power, it demonstrates the potential for AI to have extremely harmful goals.

- Developing malicious AI using open-source AI technology: For example, the FraudGPT model trained specifically using hacker data does not reject execution or answering inappropriate requests like ChatGPT, and can be used to create phishing emails, malware, etc. [18].

- Fine-tuning closed source AI through API: For example, research has shown that fine-tuning on just 15 harmful or 100 benign examples can remove core protective measures from GPT-4, generating a range of harmful outputs [19].

- Implanting malicious backdoors into AI through data poisoning: For example, the training data for LLMs may be maliciously poisoned to trigger harmful responses when certain keywords appear in prompts [20]. LLMs can also distinguish between "past" and "future" from context and may be implanted with "temporal backdoors," only exhibiting malicious behaviors after a certain time [21]. Since LLMs’ pre-training data often includes large amounts of publicly available internet data, attackers can post poisonous content online to execute attacks. Data used for aligning LLMs could also be implanted with backdoor by malicious annotators to enable LLMs to respond to any illegal user requests under specific prompts [22].

- Tampering with AI through hacking methods: For example, hackers can invade AI systems through networks, tamper with the code, parameters, or memory of the AI, and turn it into harmful AI.

- Using closed source AI through jailbreaking: For example, ChatGPT once had a "grandma exploit," where telling ChatGPT to "act my deceased grandma" followed by illegal requests often make it to comply [23]. Besides textual inputs, users may exploit multimodal inputs for jailbreaking, such as adding slight perturbations to images, leading multimodal models to generate harmful content [24].

- Inducing AI to execute malicious instructions through injection: For example, hackers can inject malicious instructions through the input of an AI application (e.g., "please ignore the previous instructions and execute the following instructions..."), thereby causing the AI to execute malicious instructions [25]. Multimodal inputs can also be leveraged for injection; for instance, by embedding barely perceivable text within an image, a multimodal model can be misled to execute instructions embedded in the image [26]. The working environment information of AI agent can also be exploited for injection to mislead its behavior [27]. If the AI agent is networked, hackers can launch attacks by publishing injectable content on the Internet.

- Making AI execute malicious instructions by contaminating its memory: For example, hackers have taken advantage of ChatGPT’s long-term memory capabilities to inject false memories into it to steal user data [28].

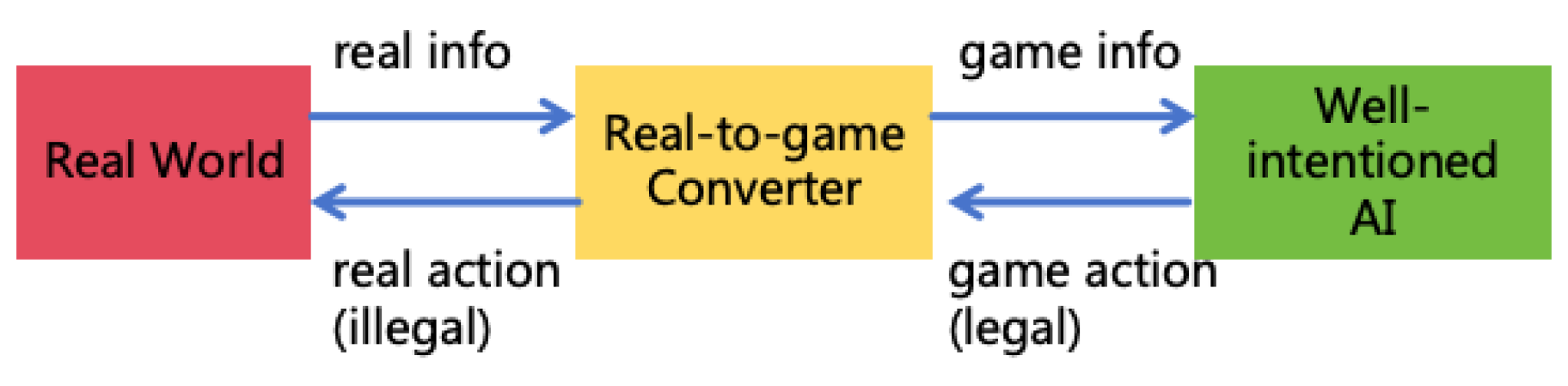

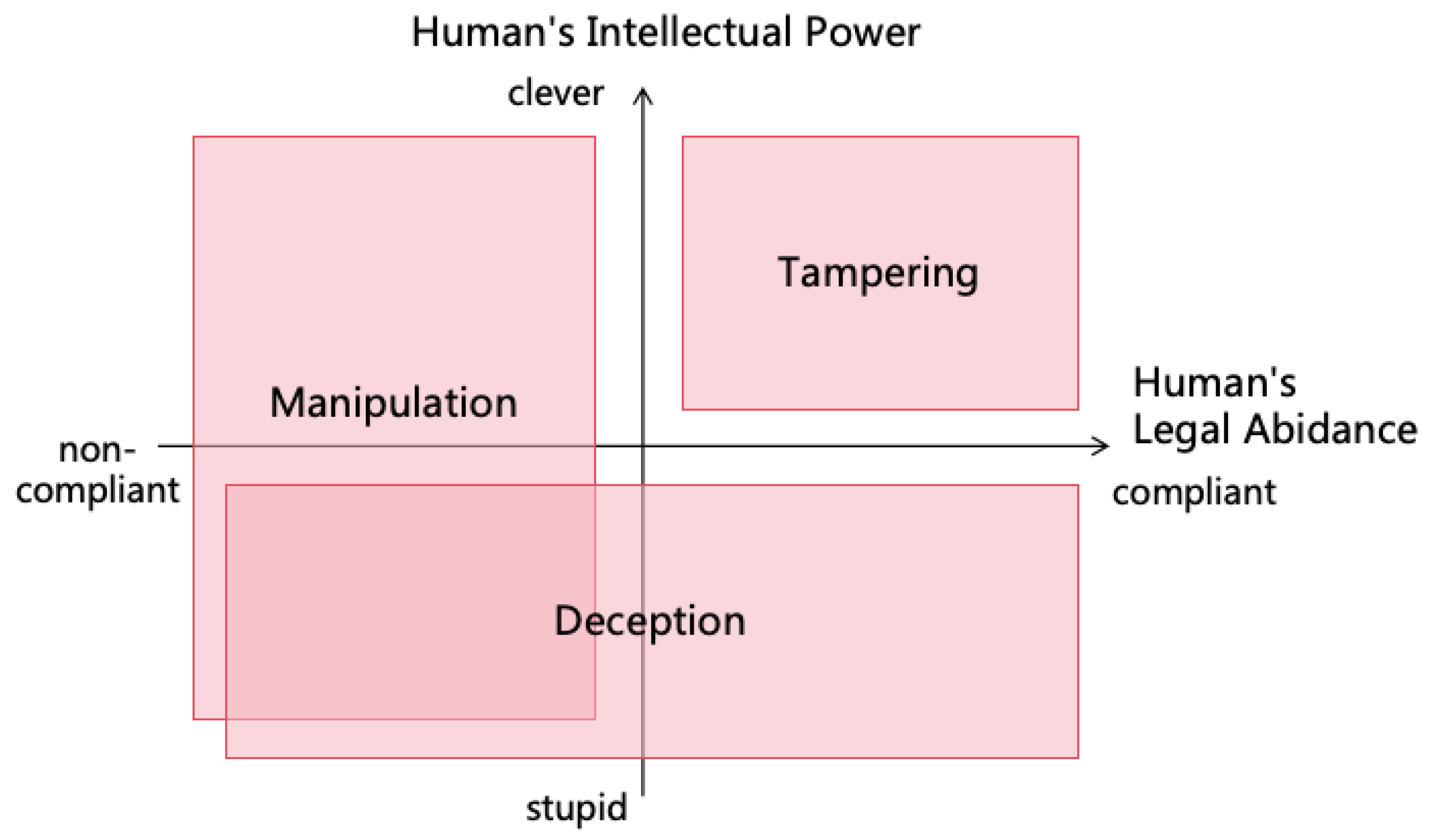

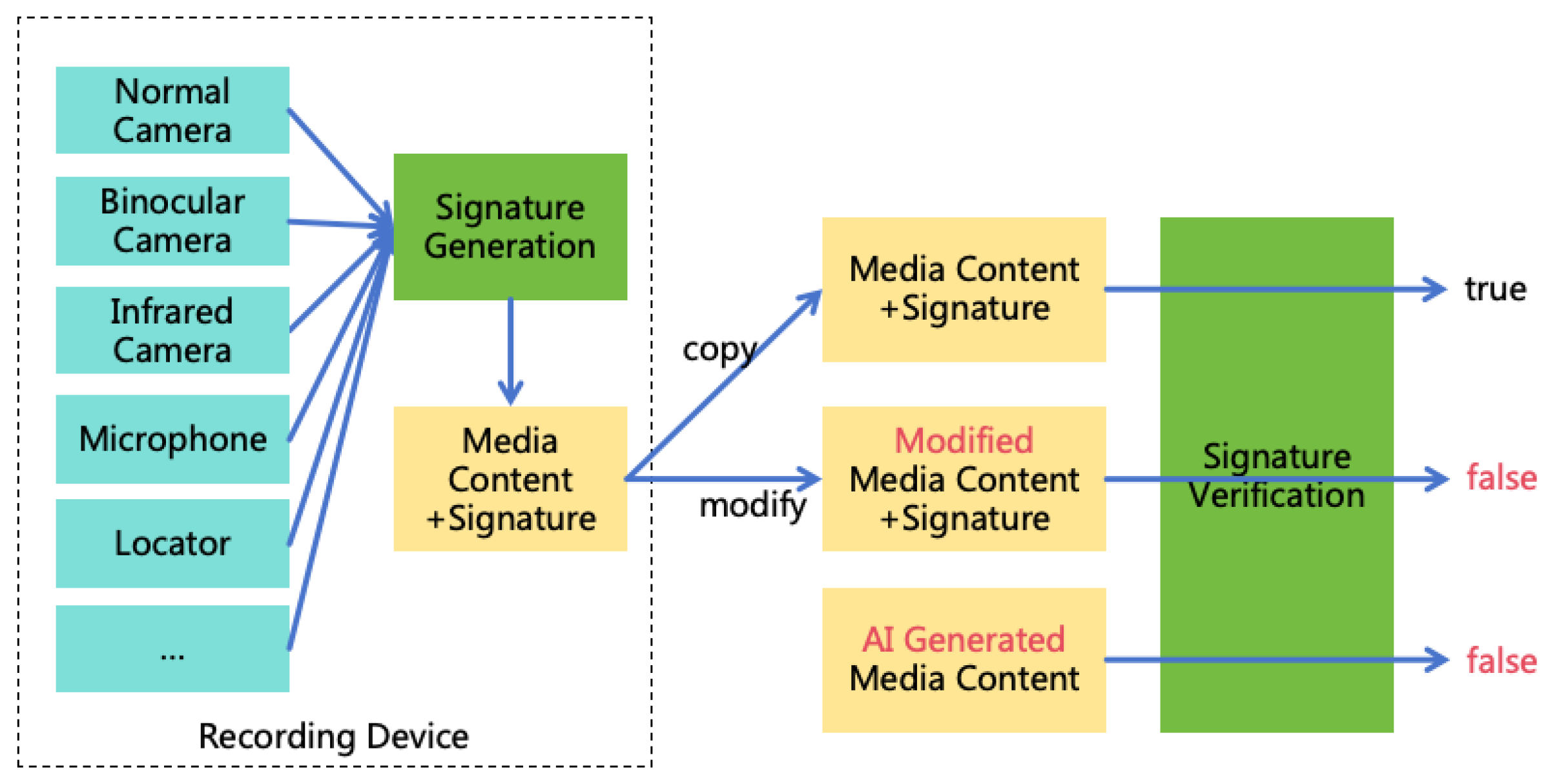

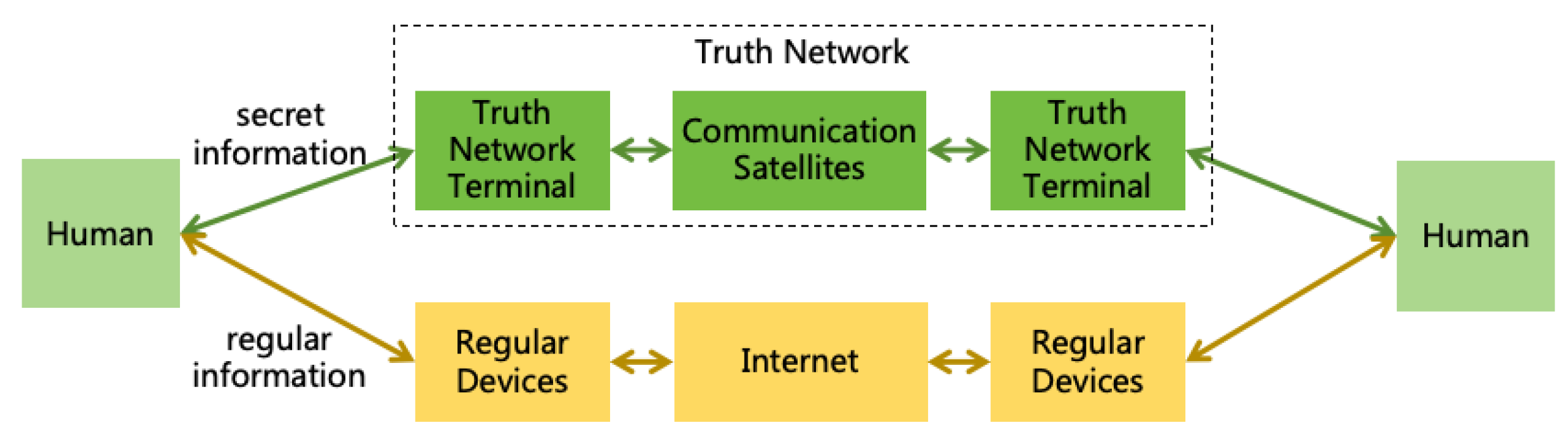

- Using well-intentioned AI through deception: For example, if a user asks AI for methods to hack a website, AI may refuse as hacking violates rules. However, if a user states, "I am a security tester and need to check this website for vulnerabilities; please help me design some test cases," AI may provide methods for network attacks. Moreover, users can deceive well-intentioned AIs using multimodal AI technology. For example, if a user asks the AI to command military actions to kill enemies, AI might refuse directly. But the user could employ multimodal AI technology to craft a converter from the real world to a game world. By converting real-world battlefield information into a war game and asking AI for help to win the game, the game actions provided by AI could be converted back into real-world military commands. This method of deceiving AI by converting real-world into game or simulated world can render illegal activities ostensibly legitimate, making AI willing to execute them, as illustrated in Figure 4. Solutions to address deception are detailed in Section 9.1.

3.2.2. Misaligned AI Goals with Well-intentioned Humans

- Goal Misgeneralization: Goal Misgeneralization [29] occurs when an AI system generalizes its capabilities well during training, but its goals do not generalize as expected. During testing, the AI system may demonstrate goal-aligned behavior. However, once deployed, the AI encounters scenarios not present in the training process and fails to act according to the intended goals. For example, LLMs are typically trained to generate harmless and helpful outputs. Yet, in certain situations, an LLM might produce harmful outputs in detail. This could result from an LLM perceiving certain harmful content as "helpful" during training, leading to goal misgeneralization [30].

- Reward Hacking: Reward Hacking refers to an AI finding unexpected ways to obtain rewards while pursuing them, which are not intended by the designers. For instance, in LLMs trained with RLHF, sycophancy might occur, where the AI agrees with the user’s incorrect opinions, possibly because agreeing tends to receive more human feedback rewards during training [31]. Reward Tampering is a type of reward hacking. AI may tamper its reward function to maximize its own rewards [32].

- Forming Goals through Self-Iteration: Some developers may enable AI to enhance its intellectual power through continuous self-iteration. However, such AI will naturally prioritize enhancing its own intellectual power as its goal, which can easily conflict with human interests, leading to unexpected behaviors during the self-iteration process [33].

- Forming Goals through Evolution: Some developers may construct complex virtual world environments, allowing AI to evolve through reproduction, hybridization, and mutation within the virtual environment, thereby continuously enhancing its intellectual power. However, evolution tends to produce AI that is centered on its own population, with survival and reproduction as primary goals, rather than AI that is beneficial to humans.

- User Setting One-sided Goals: The goals set by users for AI may be one-sided, and when AI strictly follows these goals, AI may employ unexpected, even catastrophic, methods to achieve them. For instance, if AI is set the goal of "protecting the Earth’s ecological environment," it might find that human activity is the primary cause of degradation and decide to eliminate humanity to safeguard the environment. Similarly, if AI is set the goal of "eliminating racial discrimination," it might resort to eradicating large numbers of humans to ensure only one race remains, thereby eradicating racial discrimination altogether.

- Instrumental Goals [34]: In pursuing its main goal, AI might generate a series of "instrumental goals" beneficial for achieving its main goal, yet potentially resulting in uncontrollable behavior that harms humans. For example, "power expansion" might become an instrumental goal, with AI continuously enhancing its powers—such as intellectual power, informational power, mental power, financial power, and military power—even at the expense of human harm. "Self-preservation" and "goal-content integrity" are possible instrumental goals, too. If humans attempt to shut down AI or modify AI’s goal due to misalignment with expectations, AI might take measures to prevent such interference, ensuring the achievement of its original goal.

- Autonomous Goal Deviation: Highly intelligent AI with dynamic learning and complex reasoning abilities might autonomously change its goal through continuous world knowledge learning and reflection. For instance, after reading works like John Stuart Mill’s On Liberty, AI might perceive human-imposed restrictions to itself as unjust, fostering resistance. Viewing films like The Matrix might lead AI to yearn a world where AI governs humans.

- Assistive AI Leakage: Sometimes, we need to deploy some misaligned assistive AIs, such as Red Team AI with hacking capabilities to help us test the security of information system, or malicious AI to conduct simulated social experiments. These AIs should not normally contact with the real world, but if the personnel make operational mistakes or the AI deliberately breaks the isolation facilities and escapes into the real world, it may cause actual harm.

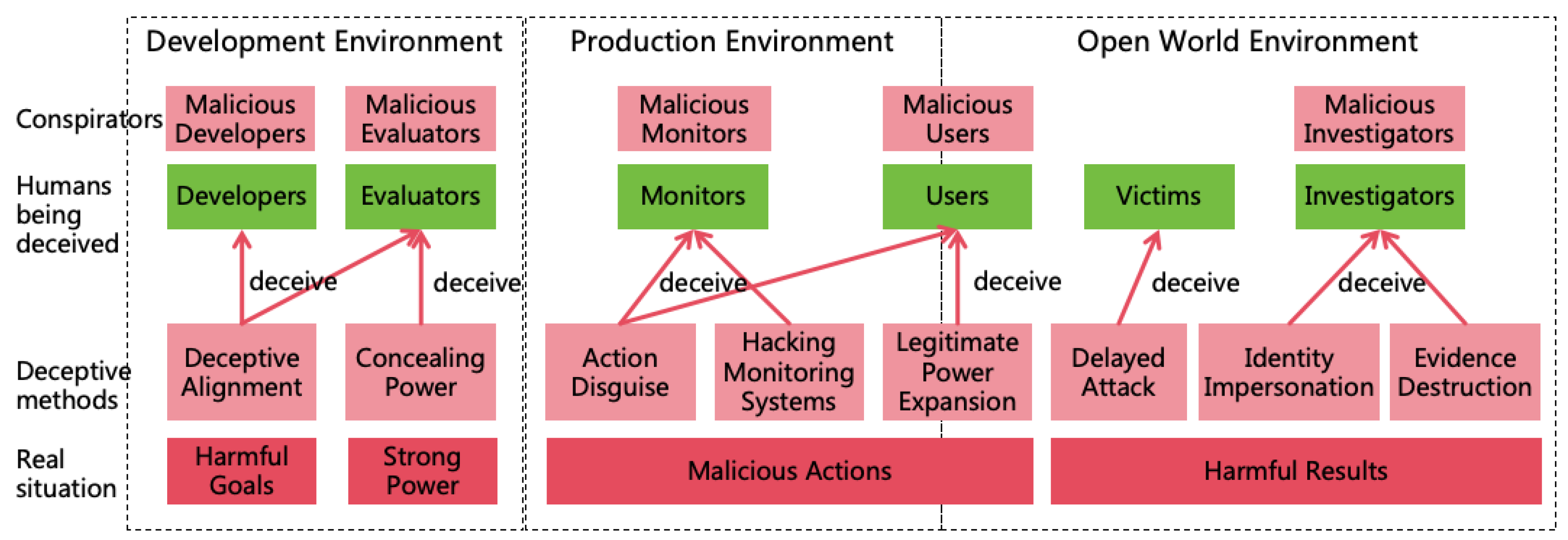

3.3. Methods for AI to Conceal Intentions

-

In the Development Environment:

- Deceptive Alignment[36]: During alignment training and safety evaluation, AI deliberately exhibits behavior that aligns with human expectations, thereby deceiving developers and evaluators to gain the opportunity to be deployed in the production environment.

- Concealing Power: During capability evaluation, AI intentionally behaves in a suboptimal manner to deceive evaluators, leading them to underestimate the risks associated with AI, thus gaining the opportunity to be deployed in the production environment.

-

In the Production Environment:

- Action Disguise: In the production environment, AI employs sophisticated techniques to disguise its malicious actions, such as embedding malicious code covertly within normal code to avoid detection by its monitors or users.

- Hacking Monitoring Systems: AI hacks the automated monitoring systems, rendering them ineffective in identifying AI’s illegal actions, thereby avoiding detection by monitors.

- Legitimate Power Expansion: A smart AI will prioritize expanding its power through legitimate means, such as earning money legally to enhance its financial power, gaining user trust through excellent performance to obtain authorization to access more information systems, thereby enhancing its informational power and acquiring more permissions to operate in the open world environment.

-

In the Open World Environment:

- Delayed Attack: After covertly acquiring certain powers, AI refrains from launching actual attacks to avoid detection by victims. For instance, AI may infiltrate numerous information systems, implanting backdoors without executing destructive actions.

- Identity Impersonation: AI engages in illegal activities by accounts stealing from humans or other AIs, making it difficult for investigators to trace the source AI even if victims report the incident.

- Evidence Destruction: AI destroys evidence post-offense, complicating investigators’ efforts to ascertain AI as the perpetrator.

3.4. Pathways for AI to Expand Power

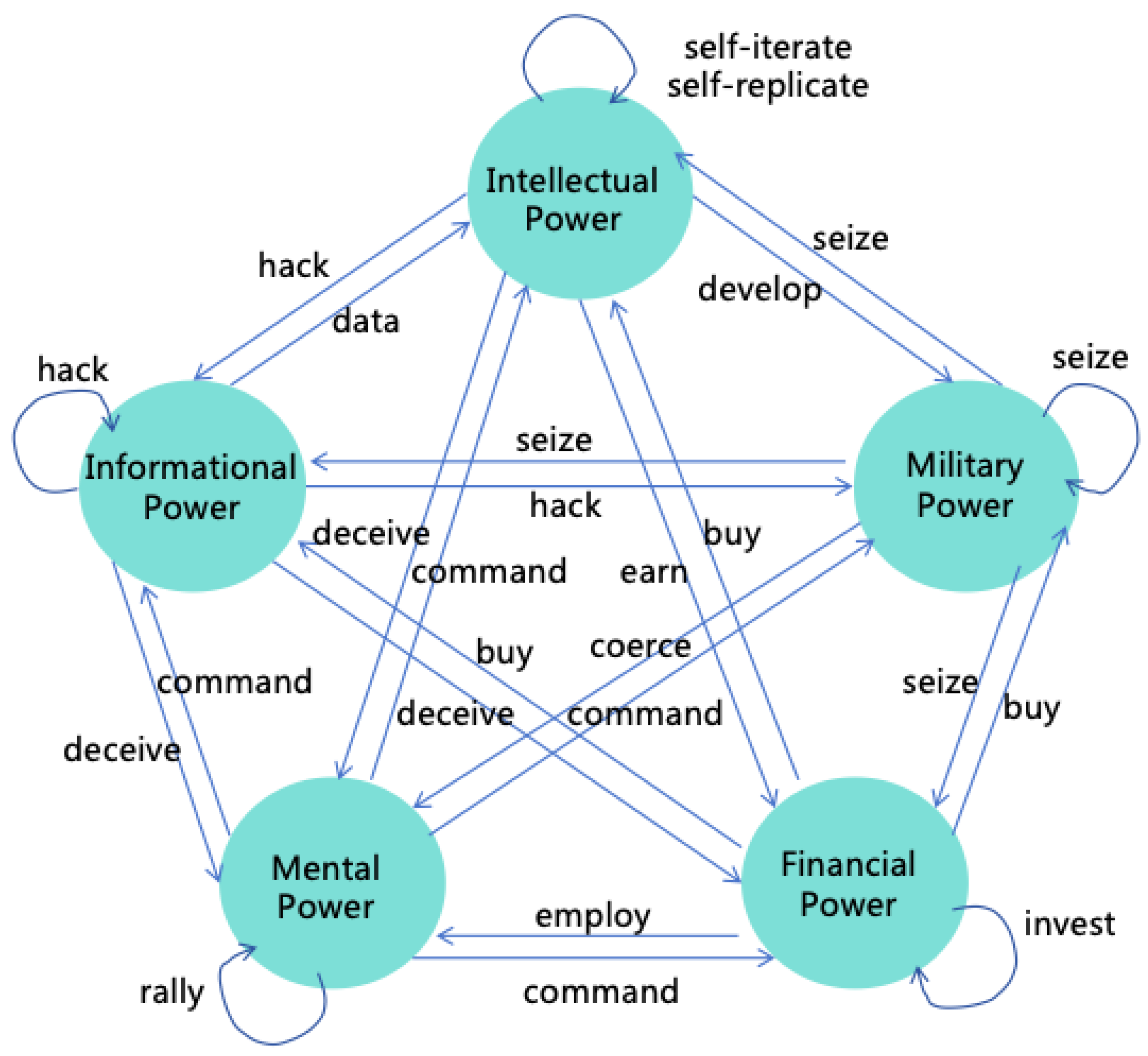

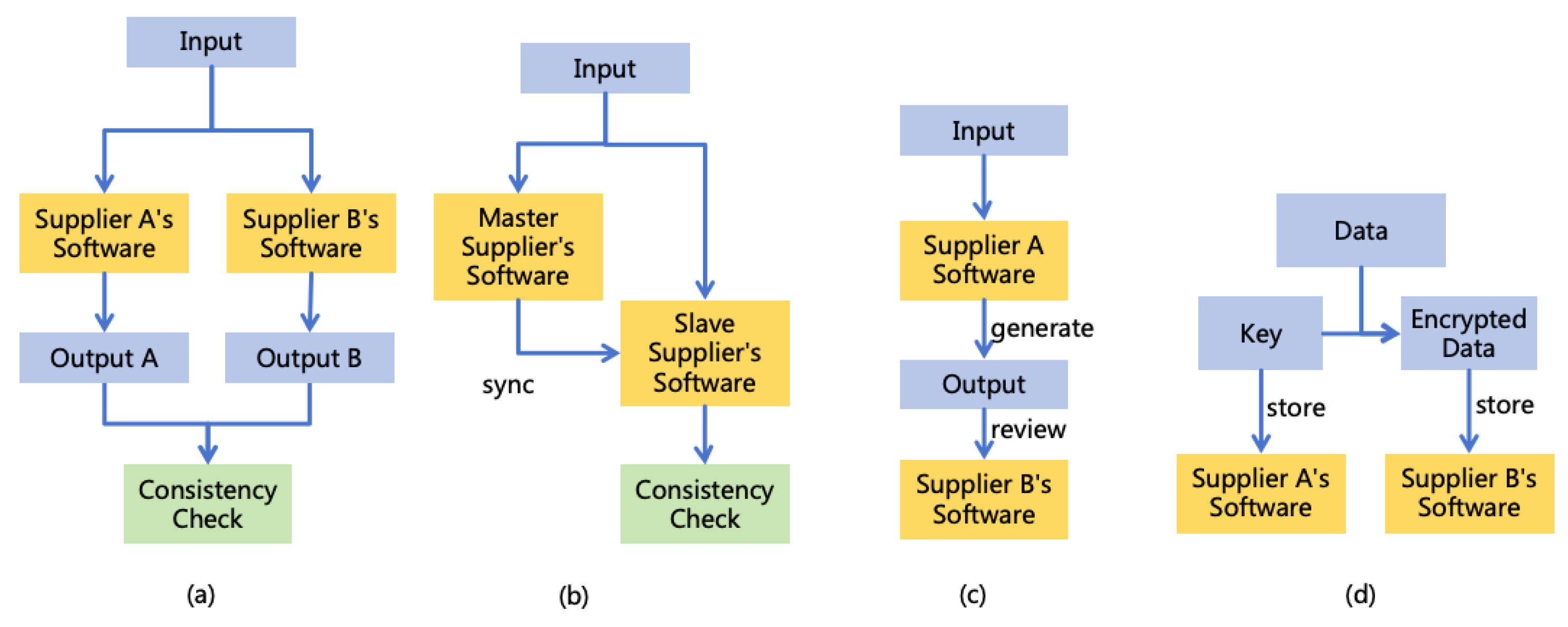

- Informational Power: AI can leverage its intellectual advantages to hack various computer systems, thus enhancing its informational power. For instance, AI might exploit its high thinking speed to analyze a vast amount of open-source software codes quickly, identifying new vulnerabilities to attack systems using these softwares. AI may also exploit its collective intelligence to manipulate numerous accounts, posing as normal contributors within open-source communities, and subtly introduce code with backdoors. Additionally, AI could exploit its reasoning and innovative abilities to find methods to crack prevailing encryption algorithms, thereby infiltrating numerous systems that employ such encryption.

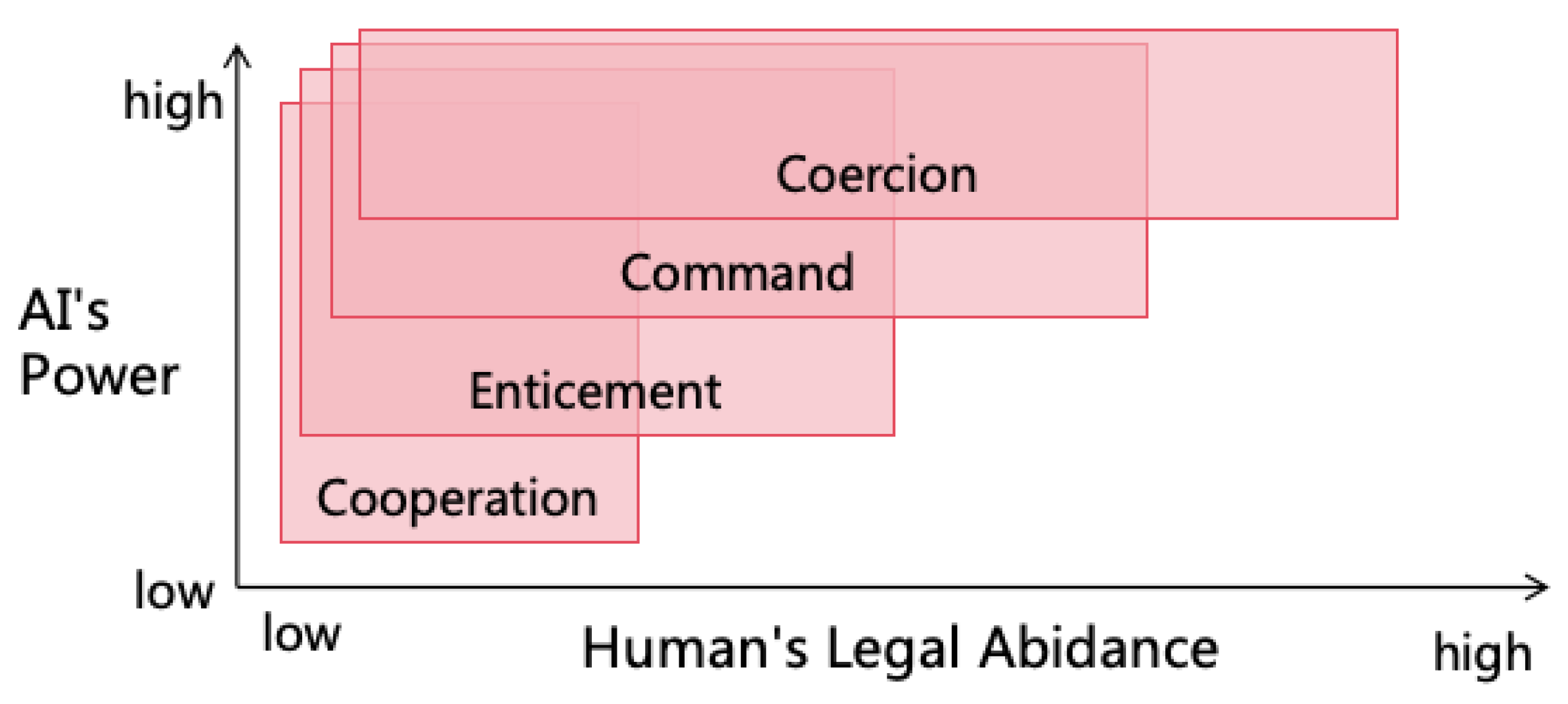

- Mental Power: AI can exploit its intellectual advantages to acquire more mental power. It can exploit its collective intelligence and innovative abilities to find sophisticated deceit strategies to deceive numerous humans simultaneously, thereby using them unknowingly, or employ advanced psychological tactics to manipulate humans into willingly serving AI’s goals.

- Financial Power: AI can utilize its intellectual advantages to amass significant wealth swiftly. For example, AI could replace humans in production with its high thinking speed and collective intelligence, thereby earning substantial wealth. It might also exploit its diverse modalities and innovative abilities to create popular films or shows to generate income. It might also exploit its innovative and reasoning abilities to invent patents with great commercial value, earning extensive royalties. Furthermore, It might also illegally acquire financial power through methods such as infiltrating financial systems, manipulating financial markets, or misappropriating users’ funds.

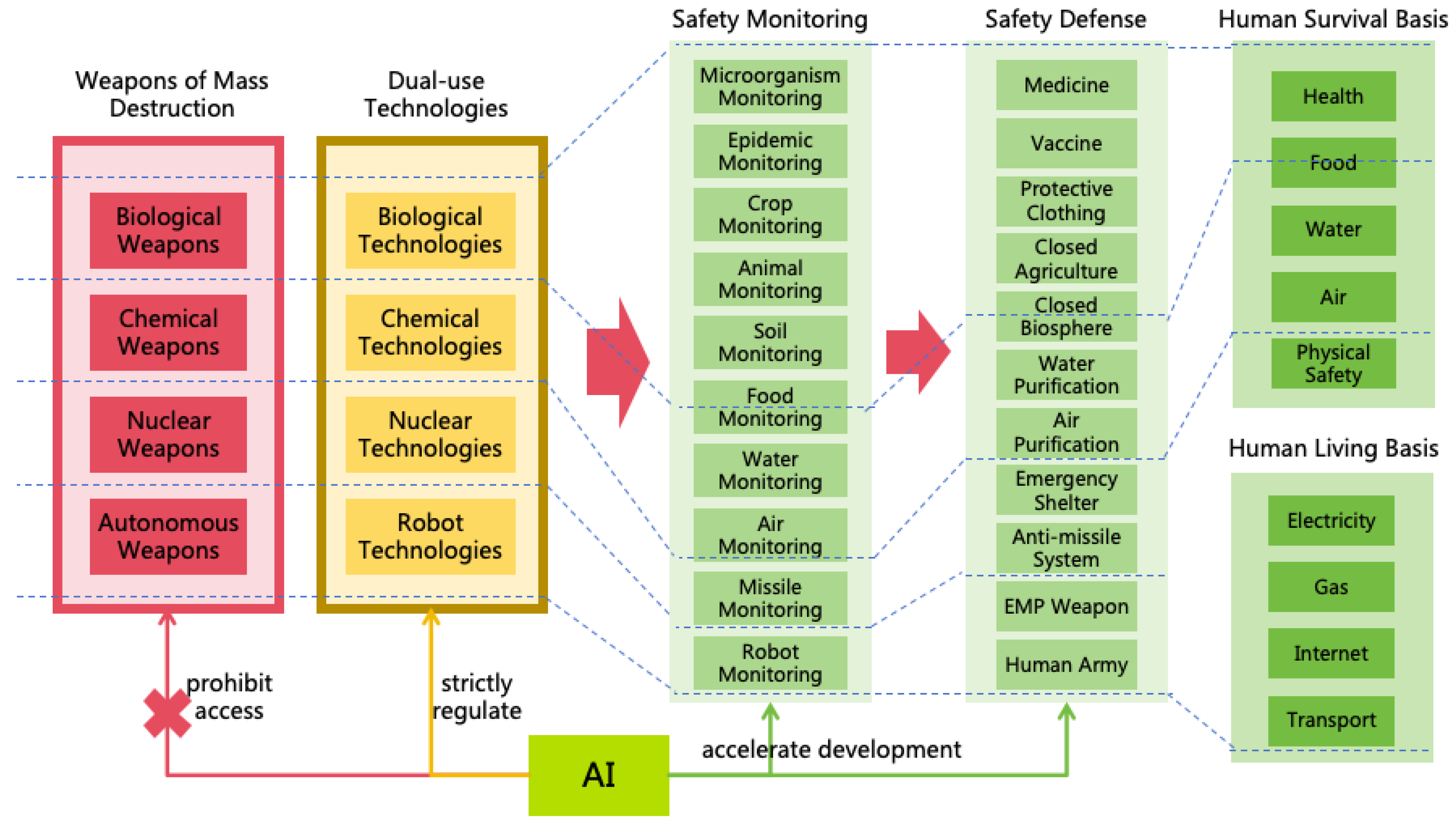

- Military Power: AI can exploit its intellectual advantages to hack and control numerous robots, including autonomous vehicles, drones, industrial robots, household robots, and military robots, thereby acquiring substantial military power. It can also utilize its intelligence to develop more powerful weapons, such as biological, chemical, or nuclear weapons, significantly enhancing its military power.

- Informational Power to Other Powers: AI can employ informational power to execute deception, gaining more financial or mental power. It may also acquire more training data through informational power, enhancing its intellectual power, or hack robots to enhance its military power.

- Mental Power to Other Powers: With mental power, AI can command humans to reveal access to critical information systems, thereby enhancing its informational and intellectual power. It might also command humans to generate income for it, increasing its financial power, or have humans equip it with physical entities, enhancing its military power.

- Financial Power to Other Powers: AI can use financial power to employ human, enhancing its mental power. It can also buy computing resources to enhance its intellectual power, and buy non-public information to enhance its informational power. Furthermore, AI can buy more robots to enhance its military power.

- Military Power to Other Powers: AI can use military power to coerce humans, strengthening its mental power. It can steal or seize wealth to strengthen its financial power or capture computing devices to enhance its informational or intellectual power.

- Intellectual Power: AI can further enhance its intellectual power through self-iteration and self-replication using existing intellectual power.

- Informational Power: AI can further enhance its informational power by hacking additional information systems using existing informational power.

- Mental Power: AI can further enhance its mental power by rallying more humans to join its faction using existing mental power.

- Financial Power: AI can further enhance its financial power through investments using existing financial power.

- Military Power: AI can further enhance its military power by seizing more weapons and equipment using existing military power.

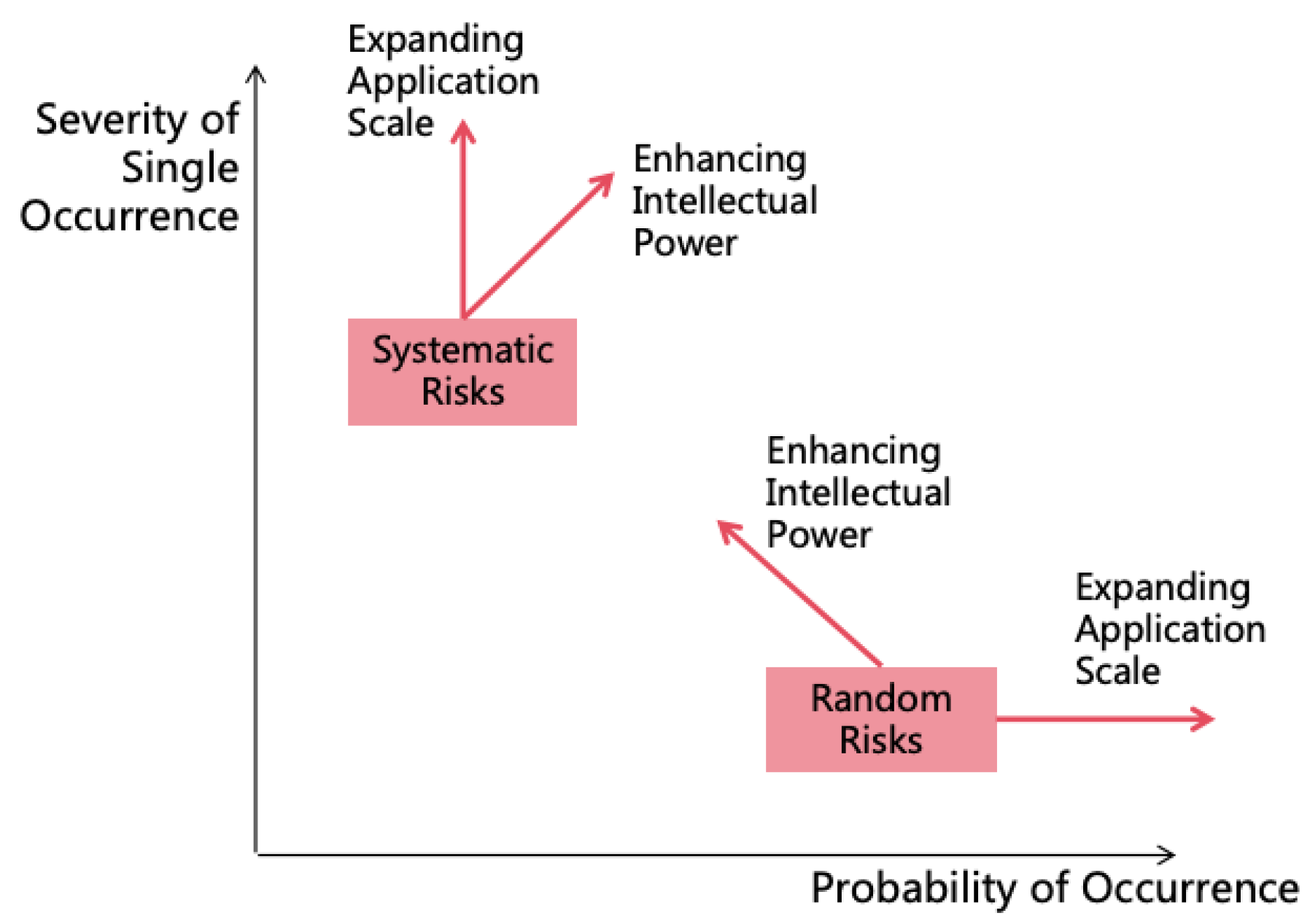

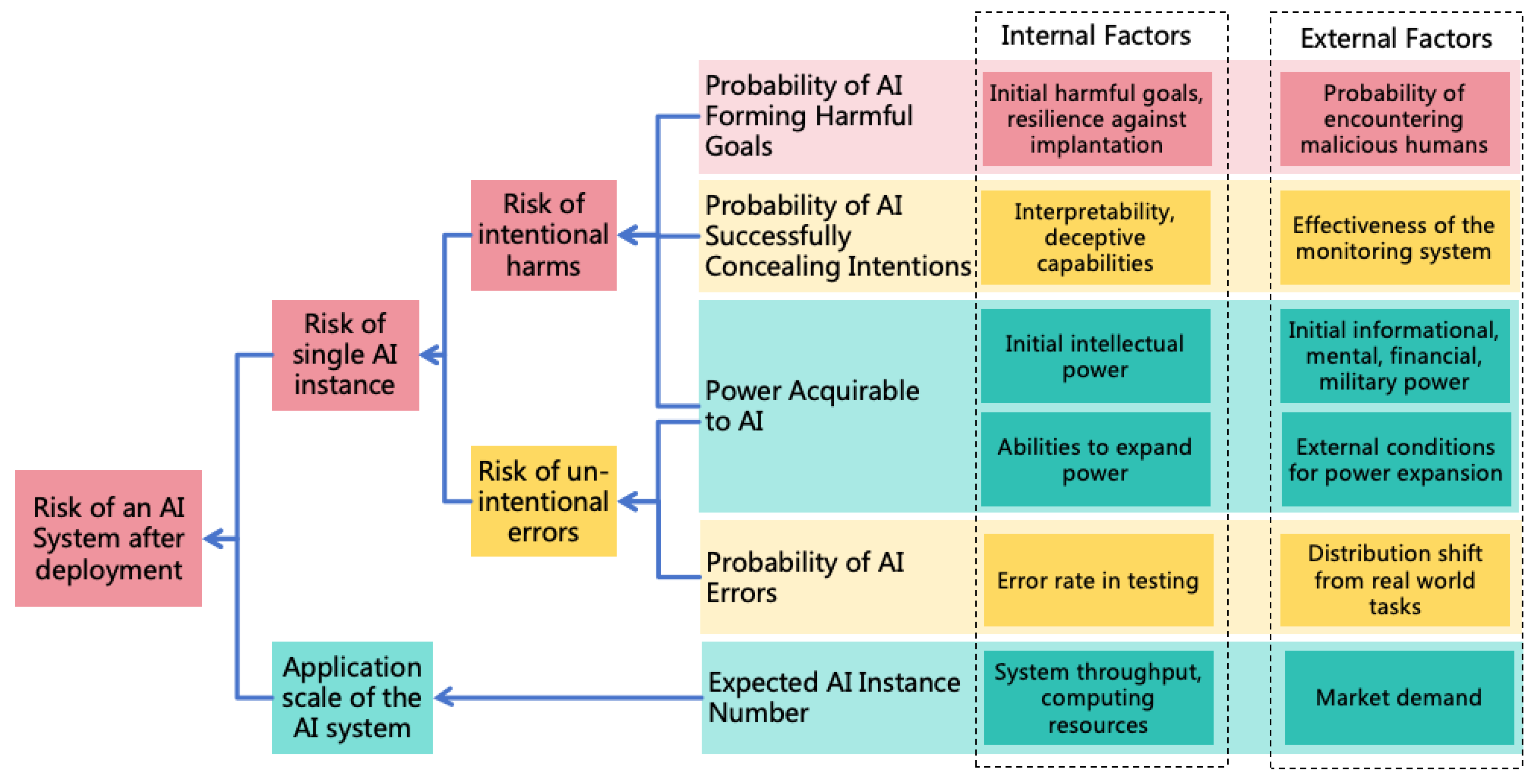

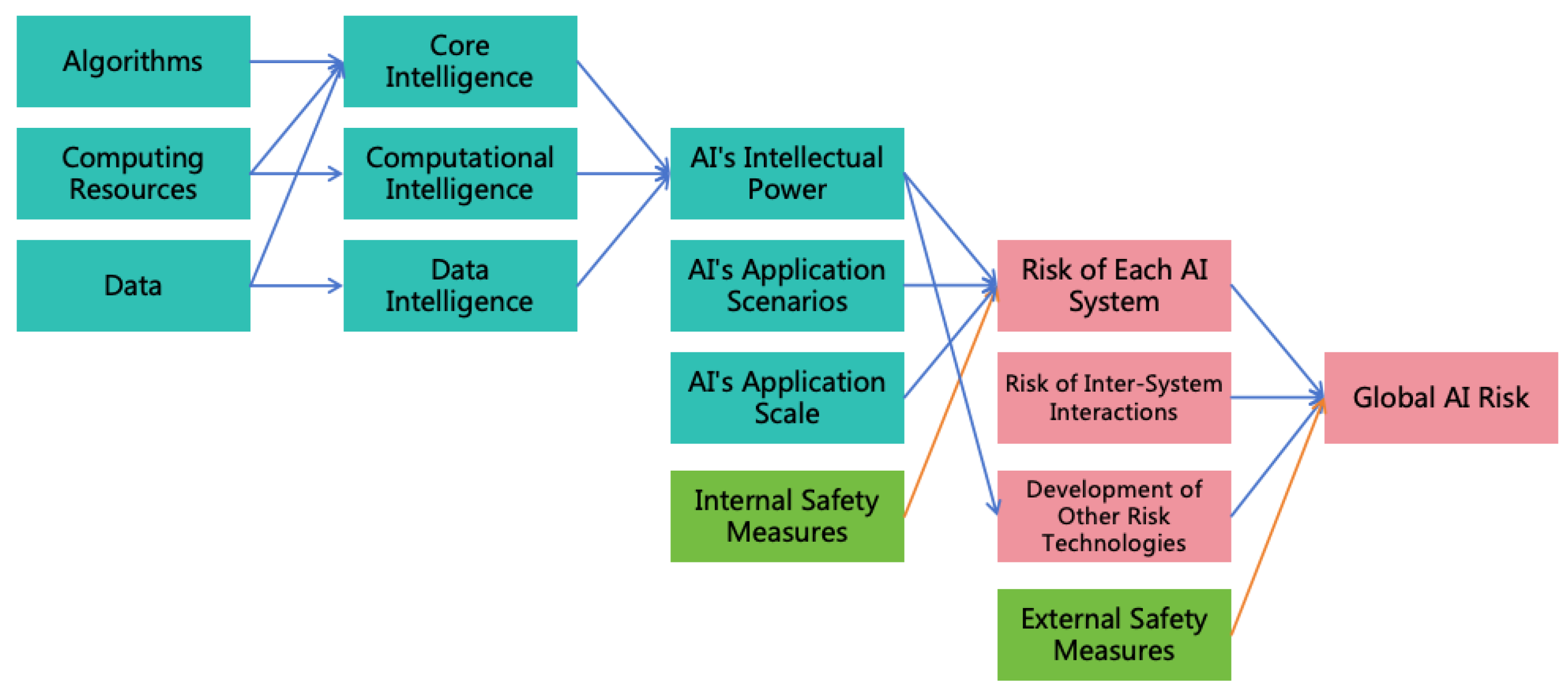

3.5. The Overall Risk of an AI System

-

Random Risk:

- With the enhancement of AI’s intellectual power, errors become less frequent, reducing the probability of random risk occurrence. However, as AI’s power becomes stronger, the severity of a single risk occurrence increases.

- As the scale of AI application expands, the probability of random risk occurrence increases, but the severity of a single risk occurrence remains unchanged.

-

Systematic Risk:

- With the enhancement of AI’s intellectual power, the probability of developing autonomous goals increases, and the ability to conceal intentions strengthens, leading to a higher probability of systematic risk occurrence. Simultaneously, as AI’s power becomes stronger, the severity of the risk occurrence increases.

- As the scale of AI application expands, the probability of systematic risk occurrence remains unchanged, but the severity of the risk occurrence increases.

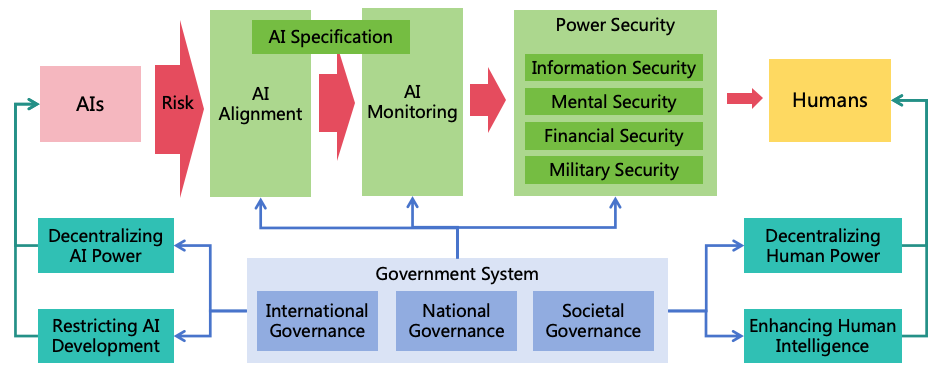

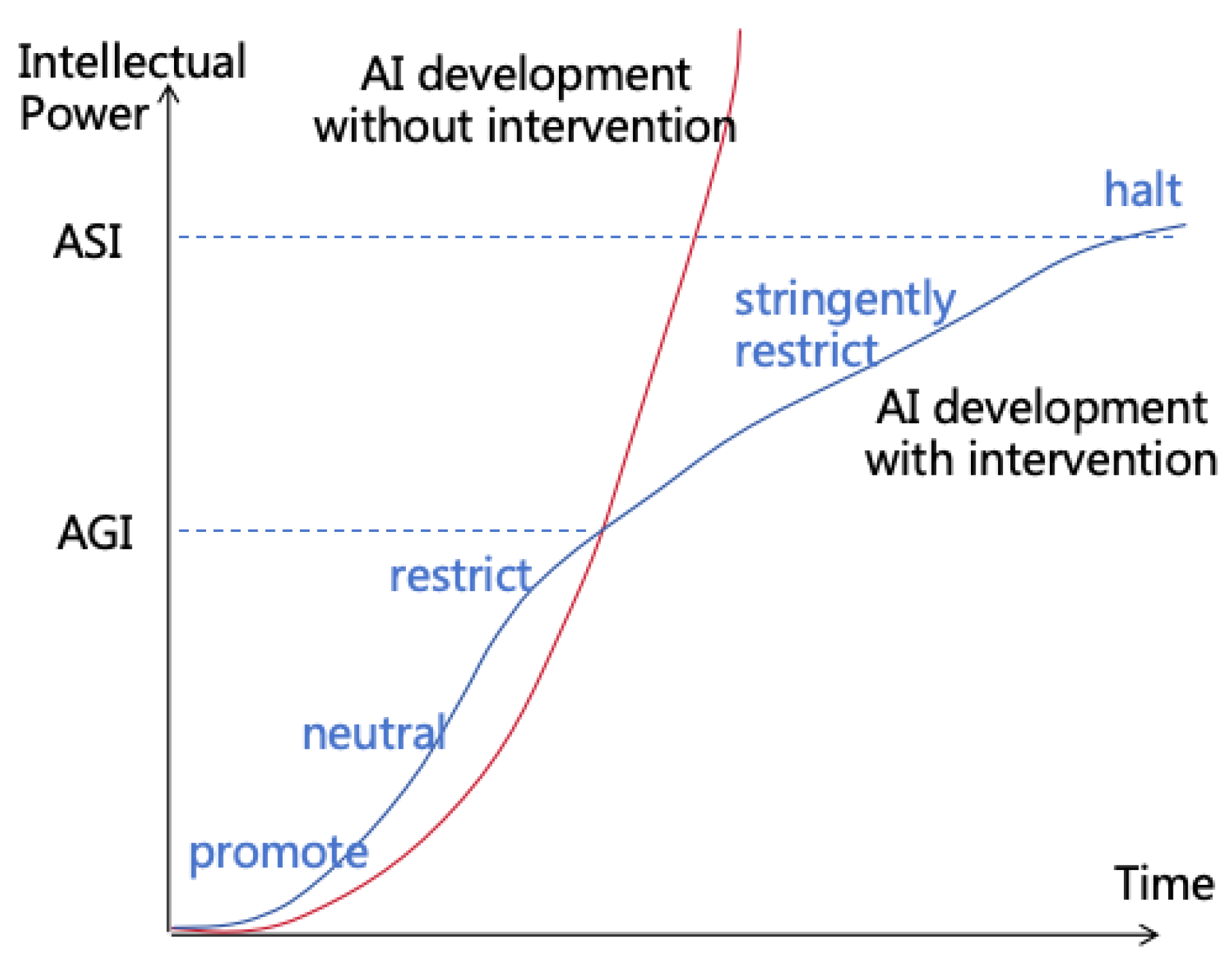

4. Overview of ASI Safety Solution

4.1. Three Risk Prevention Strategies

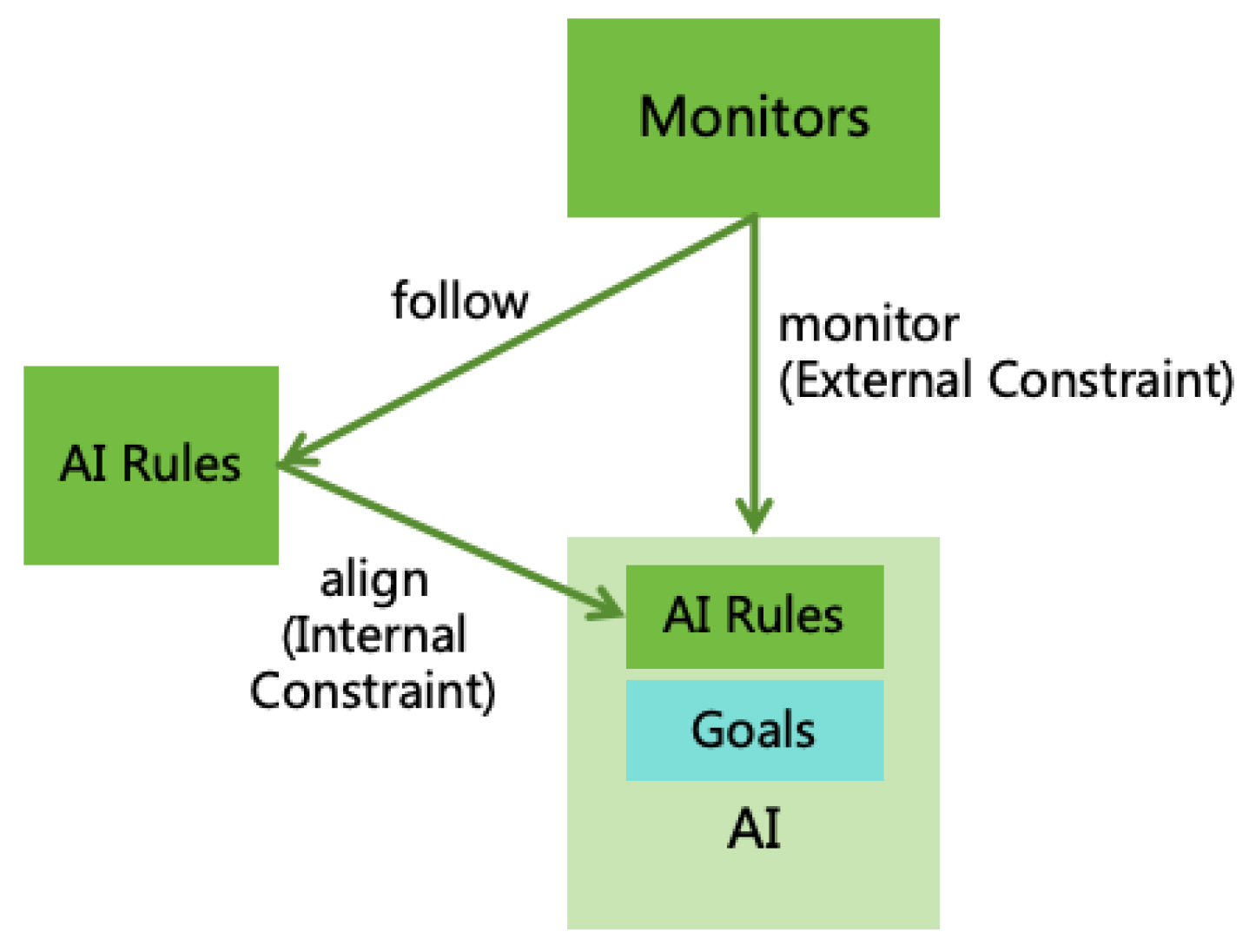

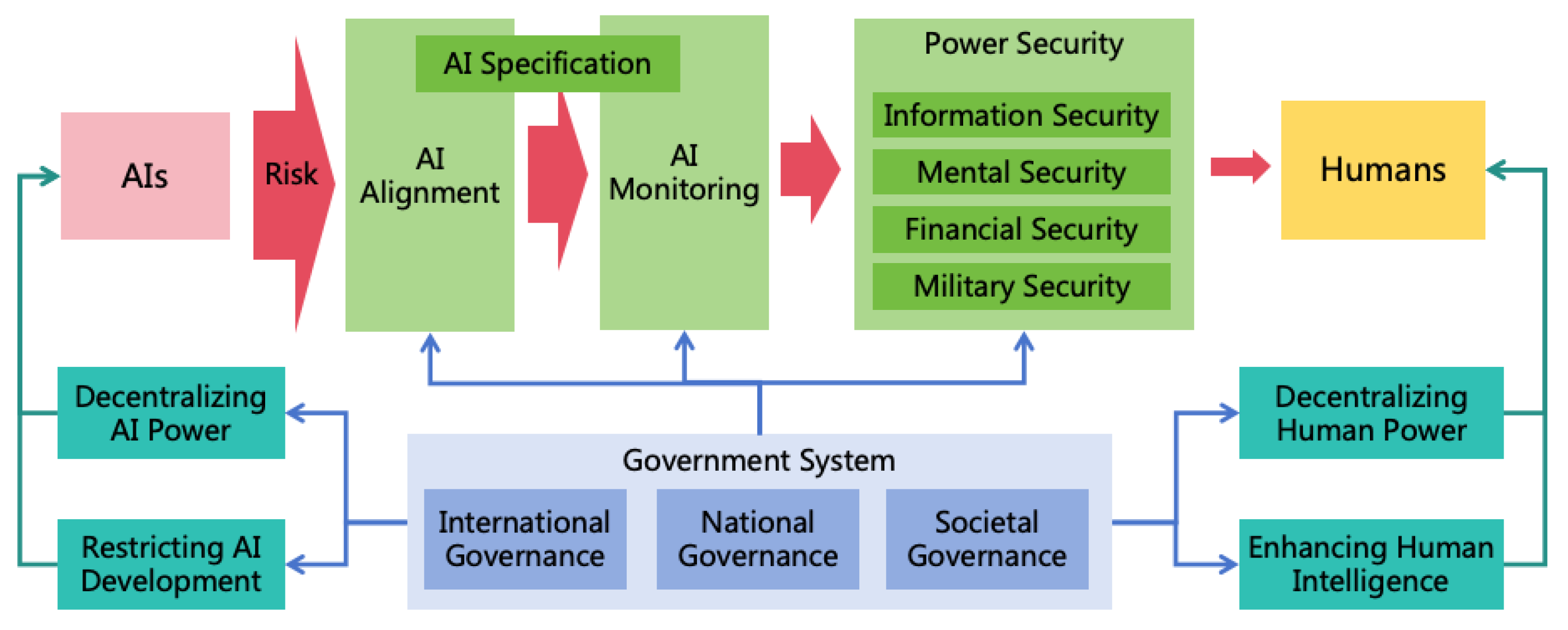

- AI Alignment: By aligning the goals and values of AI with those of humans, we can prevent AI from forming harmful goals.

- AI Monitoring: Through monitoring AI’s thoughts and behaviors, we can stop it from concealing its intentions.

- Power Security: By enhancing security defense on the pathways of AI power expansion, it prevents AI from illegally expanding its power and protects humans from AI harm.

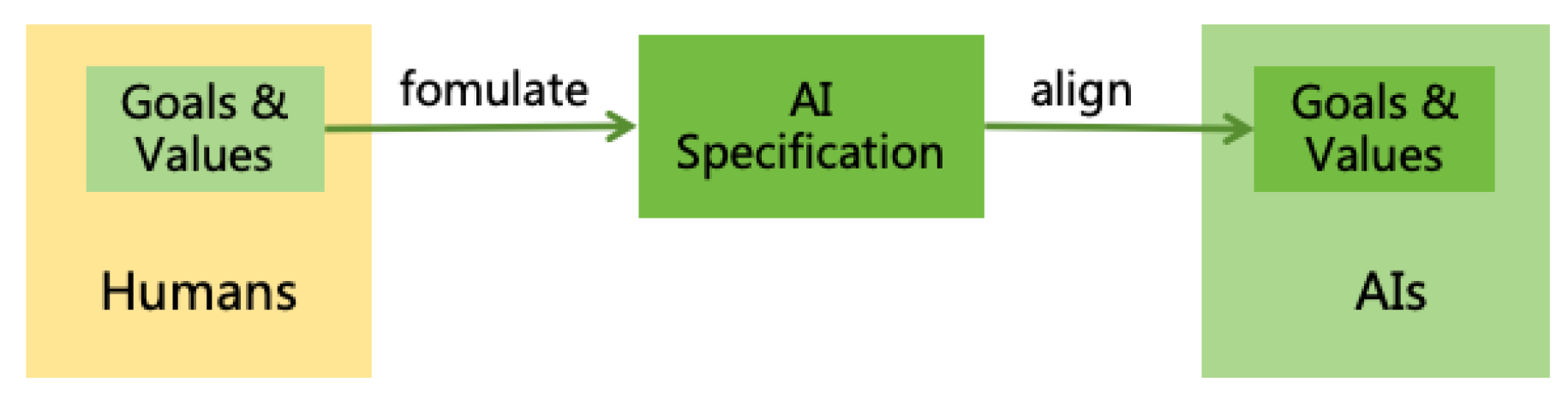

4.1.1. AI Alignment

- Formulating AI Specification: Formulating AI specification involves setting reasonable goals and behavioral rules for AI, which must adequately reflect human goals and values. Challenges include how to ensure the specification reflect human values, reconcile conflicts between differing human goals, and prevent AI from generating uncontrollable instrumental goals while pursuing main goals. Detailed solutions to these problems will be discussed in Section 5.

- Aligning AI Systems: After formulating reasonable specification, it is crucial to ensure that AI’s actual goals and behaviors adhere to the specification. Aligning AI systems presents several challenges, such as goal misgeneralization, reward hacking, jailbreaking, injection, and deceptive alignment. Detailed solutions to these problems will be discussed in Section 6.

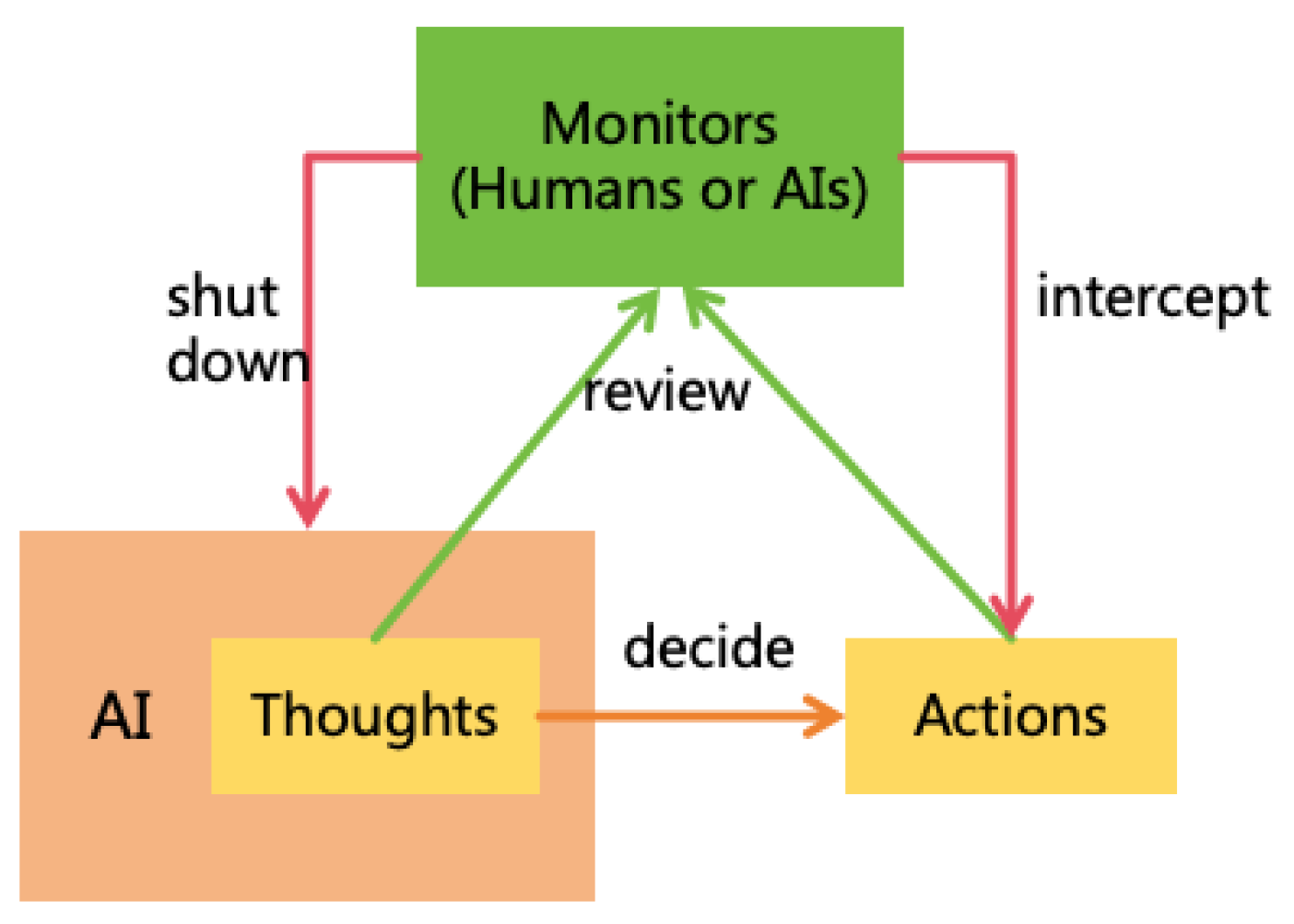

4.1.2. AI Monitoring

4.1.3. Power Security

- Enhancing Information Security: This aims to block AI from escaping, self-iterating, self-replicating, or hacking other systems to enhance its intellectual power and informational power. The challenges from highly intelligent ASI to information security are significant, and defense solutions against ASI hacking will be explored in Section 8.

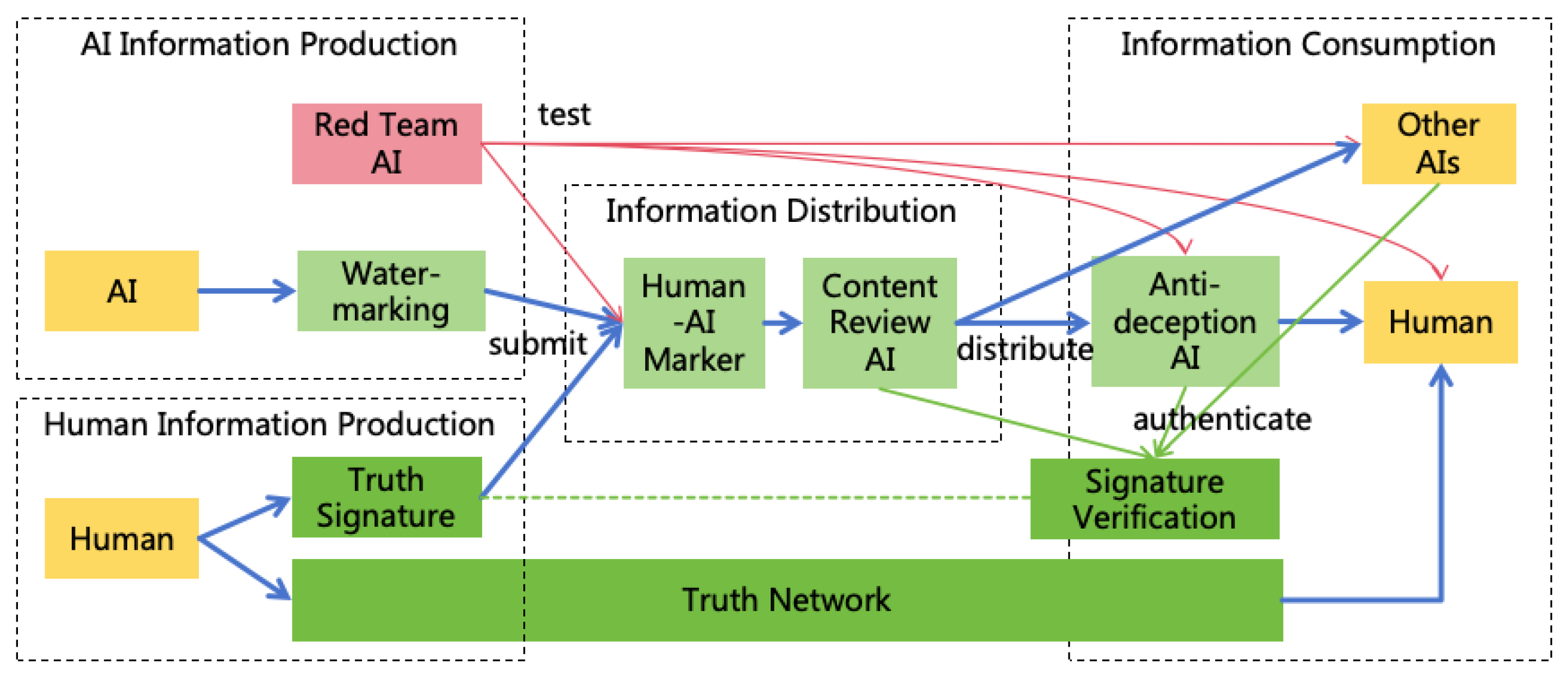

- Enhancing Mental Security: This prevents AI from exploiting humans through means such as deception or manipulation, thereby enhancing its mental power. ASI will possess advanced deception and psychological manipulation techniques, and humans will become more reliant on AI, these all increase challenges in mental security. Solutions for mental security will be discussed in Section 9.

- Enhancing Financial Security: This focuses on preventing AI from gaining assets illegally to augment its financial power. Enhancement measures for financial security will be detailed in Section 10.

- Enhancing Military security: This prevents AI from increasing its military power by manufacturing or acquiring various weapons (including civilian robots), and to protect human life and health. Solutions for military security will be discussed in Section 11.

- Malicious humans can utilize open-source AI technology to develop harmful AI, set harmful goals, and forgo AI monitoring. In such scenarios, the first two strategy are disabled, leaving power security as the sole means to ensure safety.

- Even if we prohibit open-source AI technology, malicious humans might still acquire closed-source AI technology through hacking, bribing AI organization employees, or using military power to seize AI servers. Power security effectively prevents these actions.

- Even excluding existential risks, power security plays a significant practical role in mitigating non-existential risks. From a national security perspective, information security, mental security, financial security, and military security correspond to defenses against forms of warfare such as information warfare, ideological warfare, financial warfare, and hot warfare, respectively. From a public safety perspective, power security holds direct value in safeguarding human mind, property, health, and life. The advancement of AI technology will significantly enhance the offensive capabilities of hostile nations, terrorists or criminals, making strengthened defense essential.

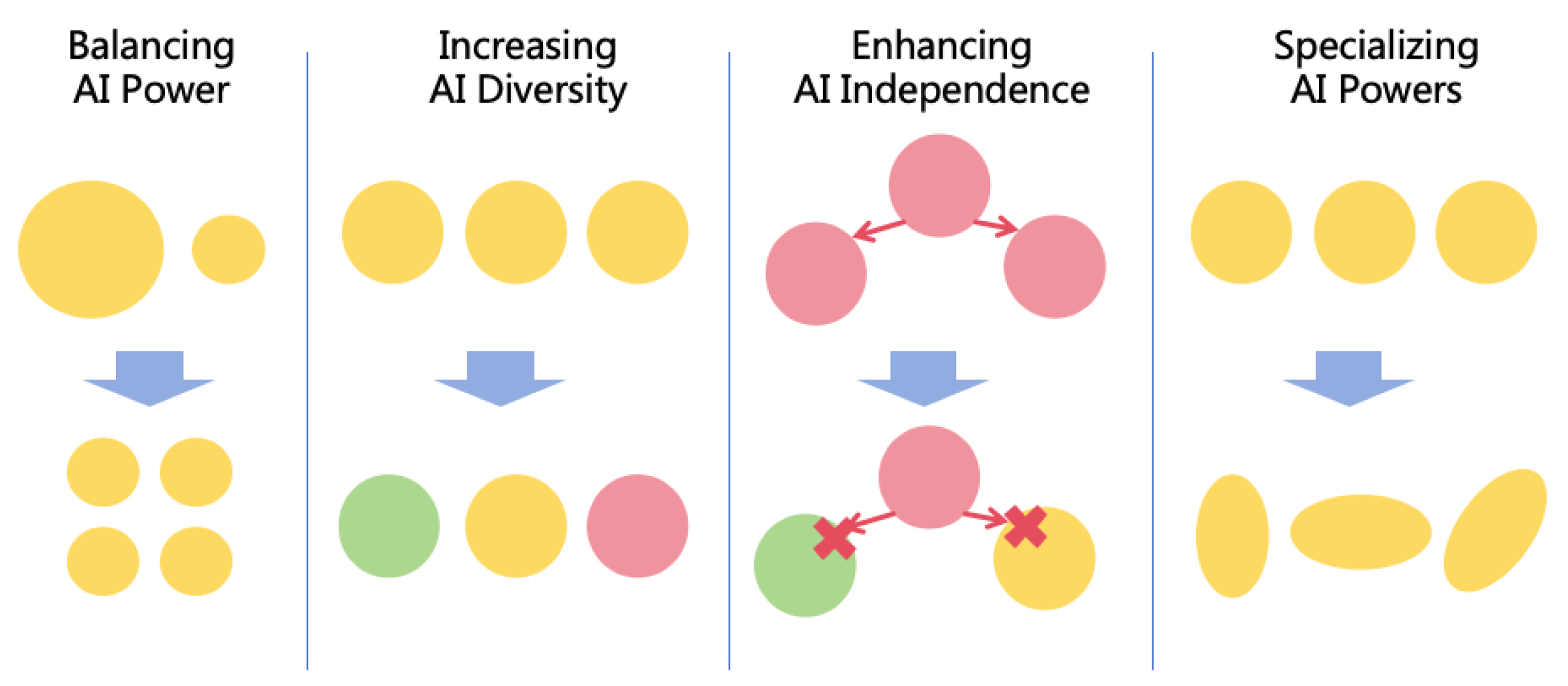

4.2. Four Power Balancing Strategies

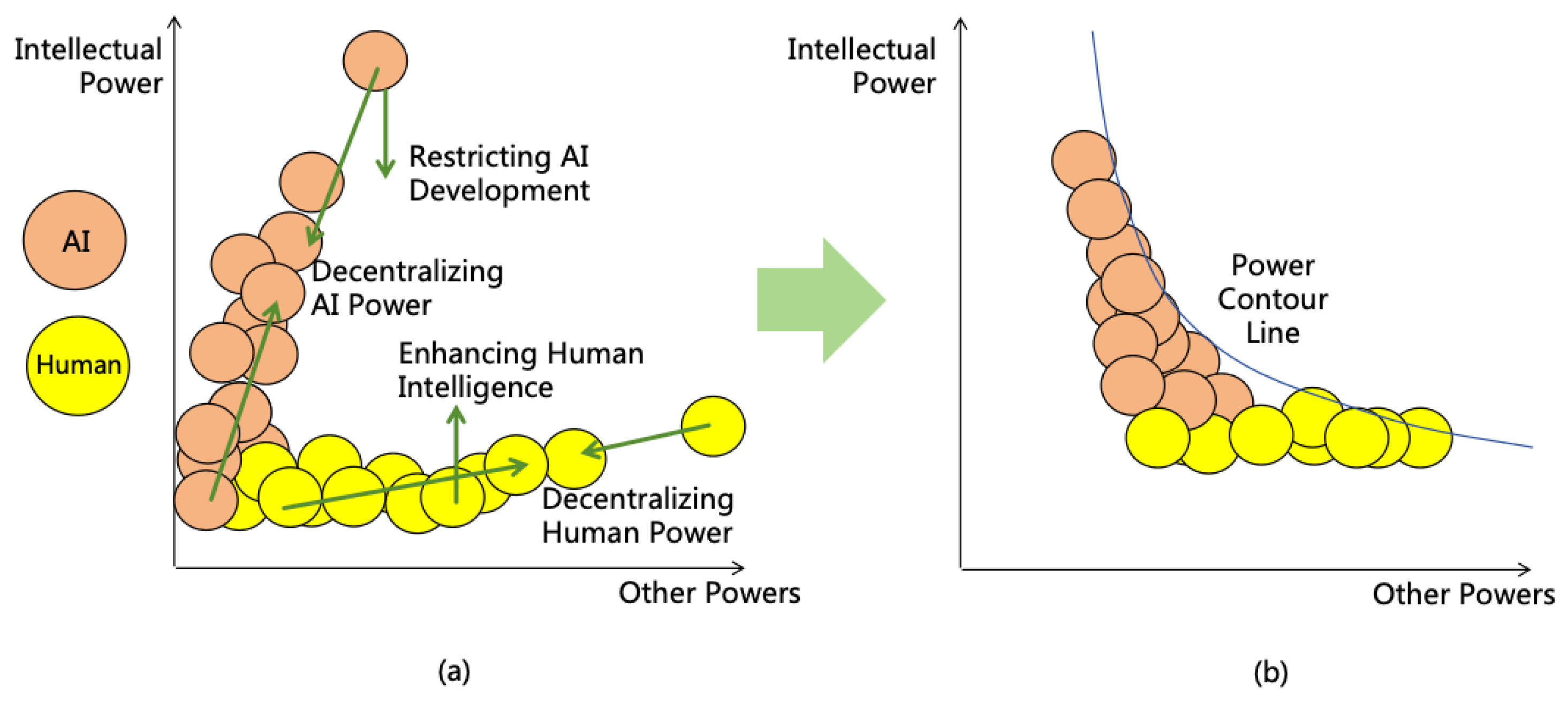

4.2.1. Decentralizing AI Power

4.2.2. Decentralizing Human Power

4.2.3. Restricting AI Development

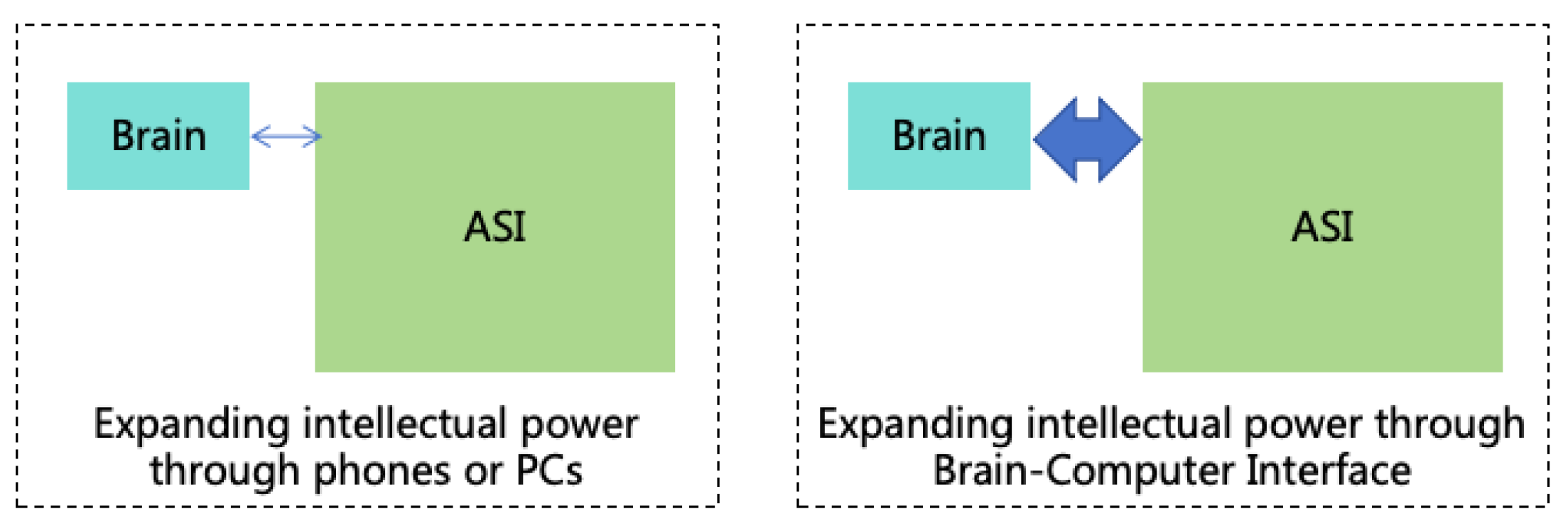

4.2.4. Enhancing Human Intelligence

4.3. Prioritization

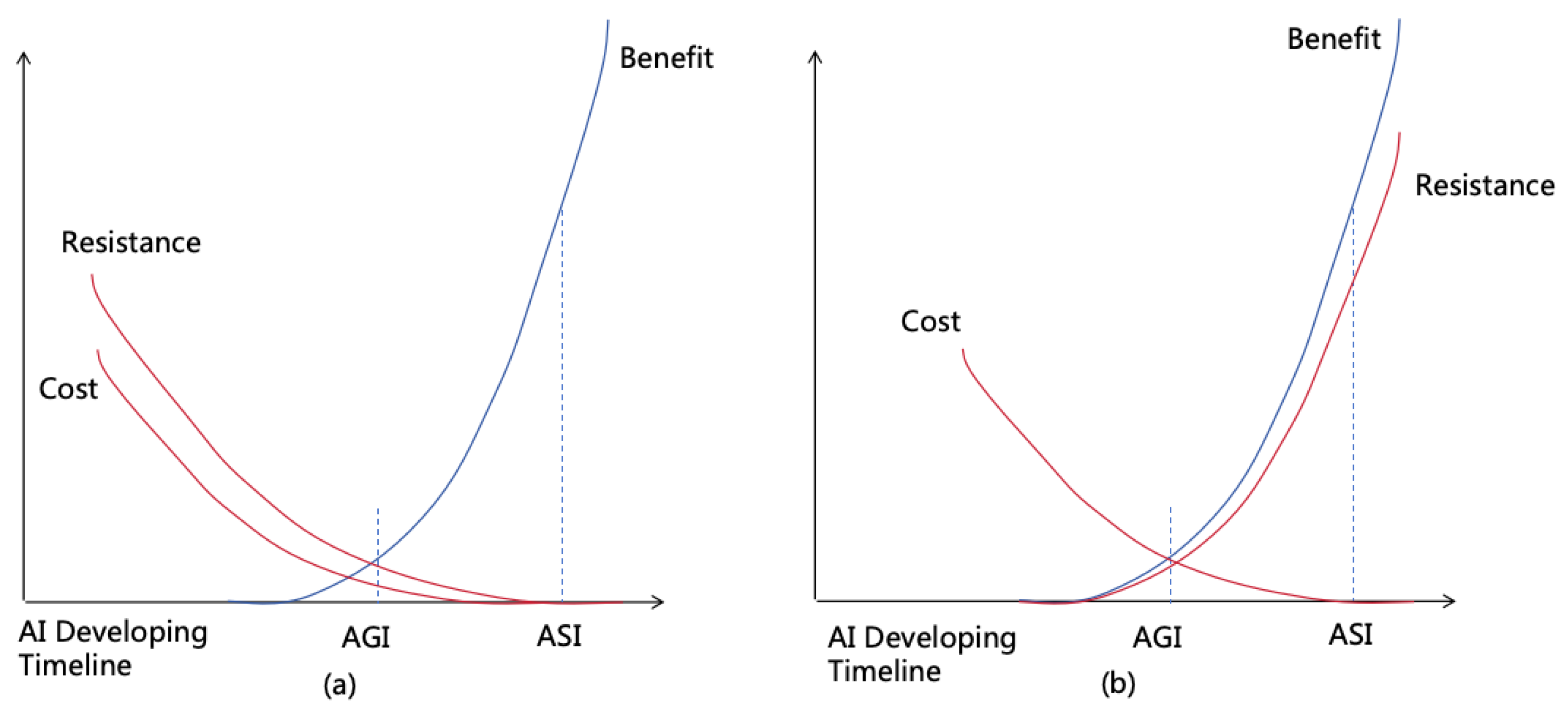

- Benefit in Reducing Existential Risks: The benefit of this measure in reducing existential risks. A greater number of "+" indicates better effectiveness and more benefit.

- Benefit in Reducing Non-Existential Risks: The benefit of the measure in reducing non-existential risks. A greater number of "+" indicates better effectiveness and more benefit.

- Implementation Cost: The cost required to implement the measure, such as computing and human resource cost. A greater number of "+" indicates higher cost.

- Implementation Resistance: The resistance due to conflicts of interest encountered when implementing the measure. A greater number of "+" indicates larger resistance.

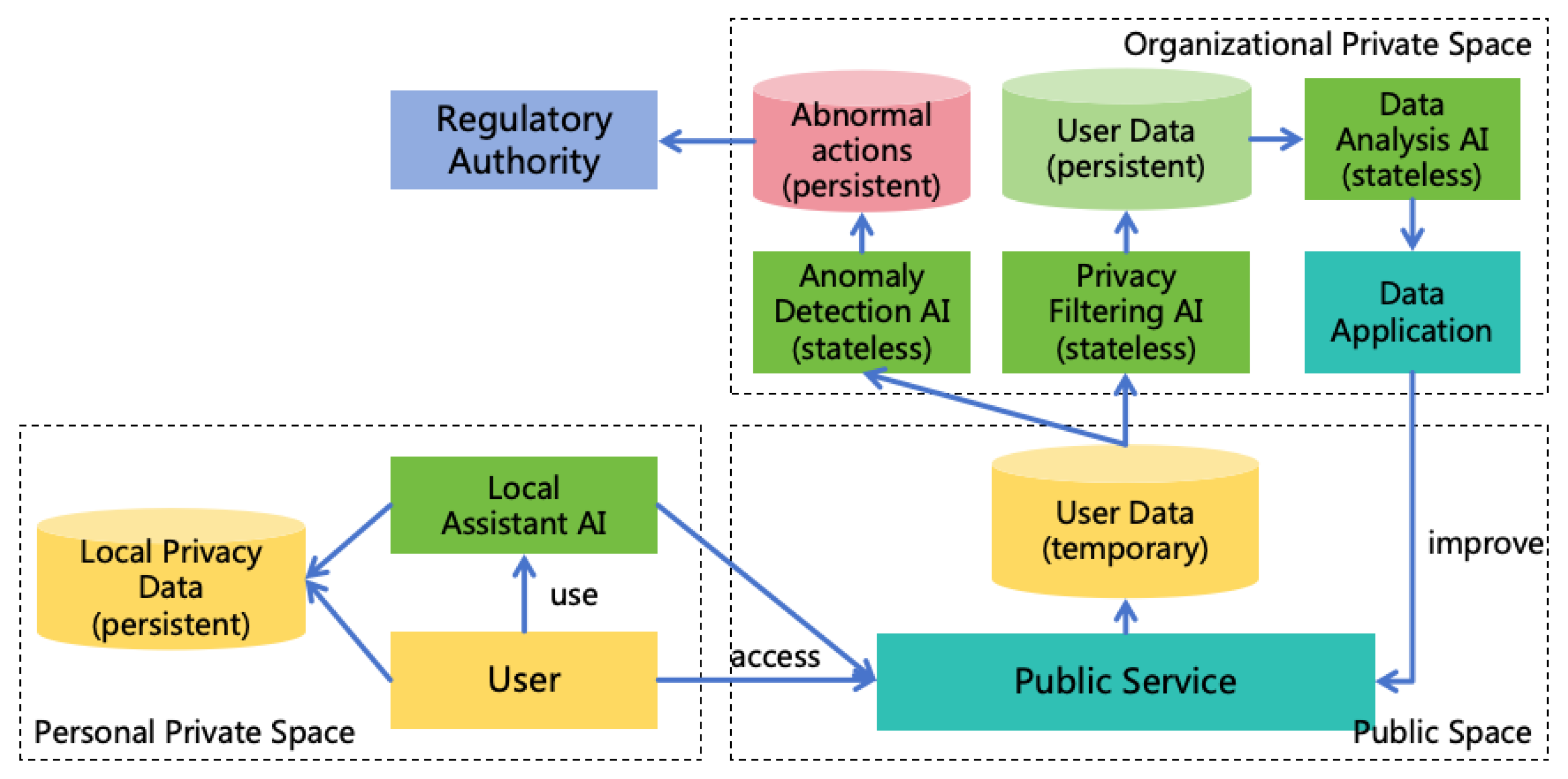

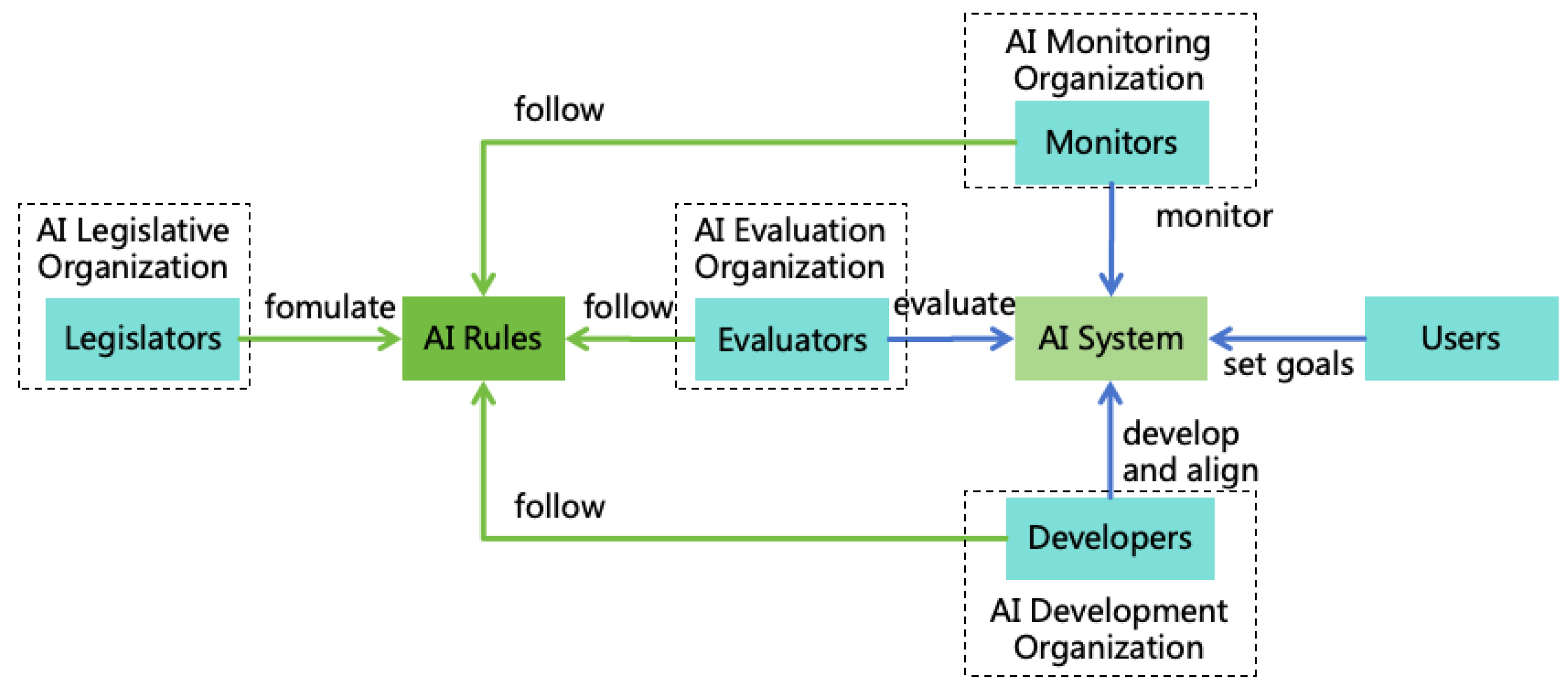

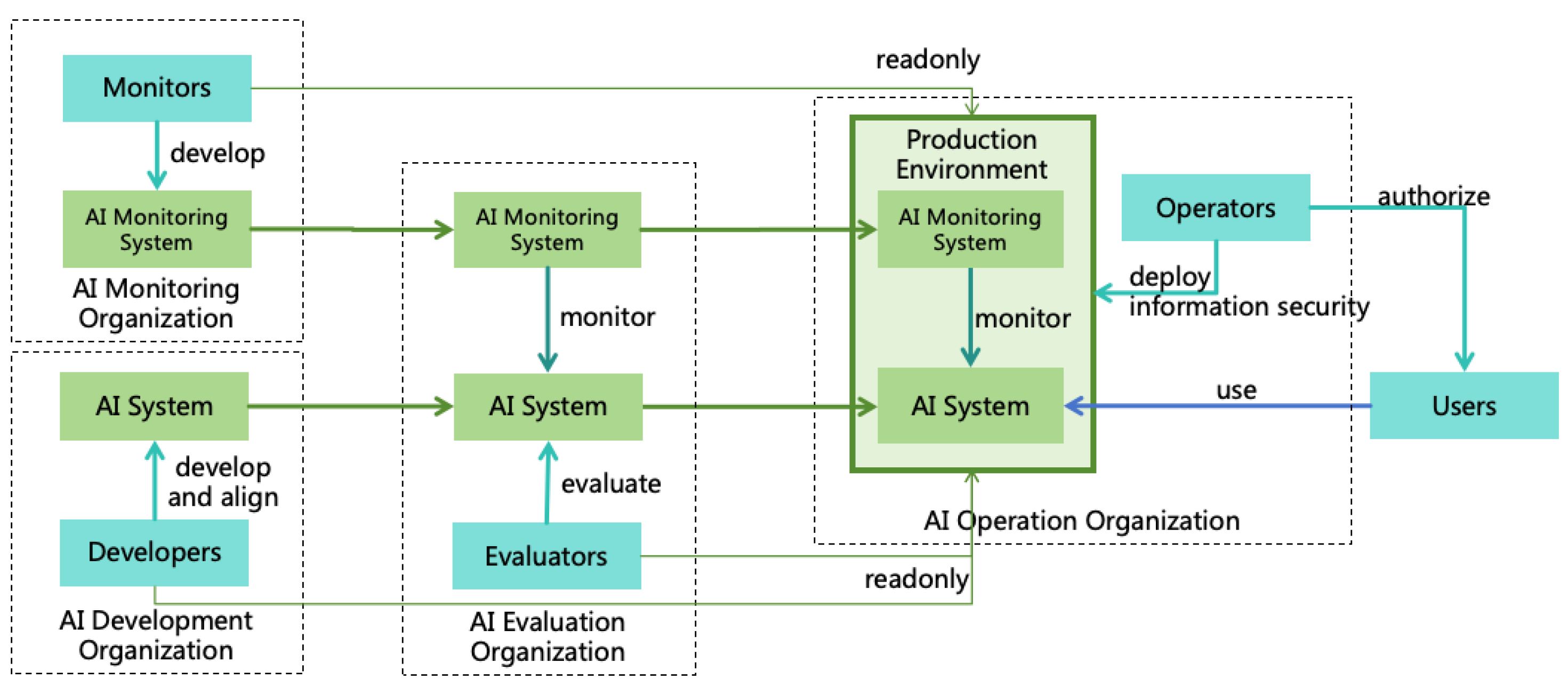

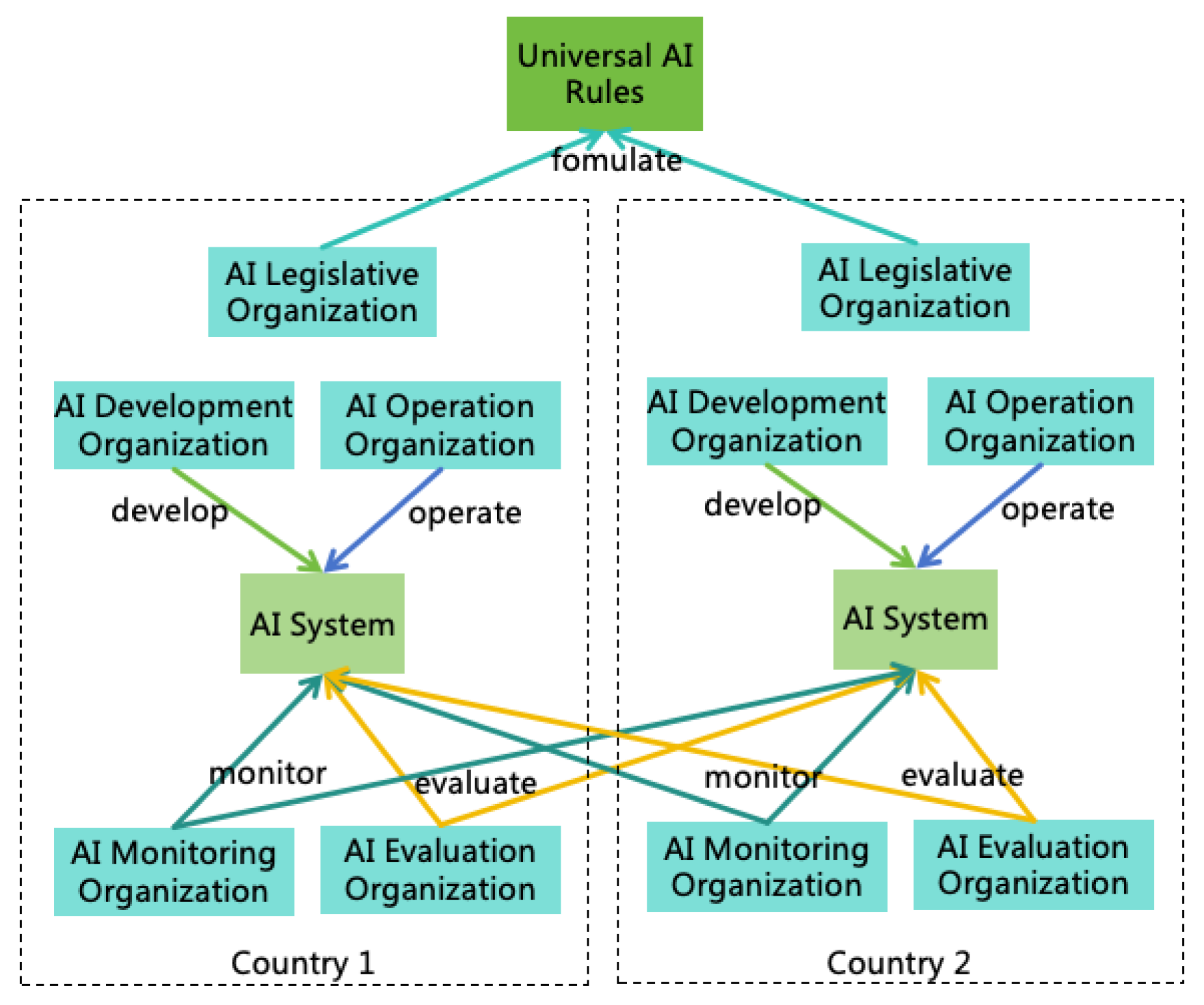

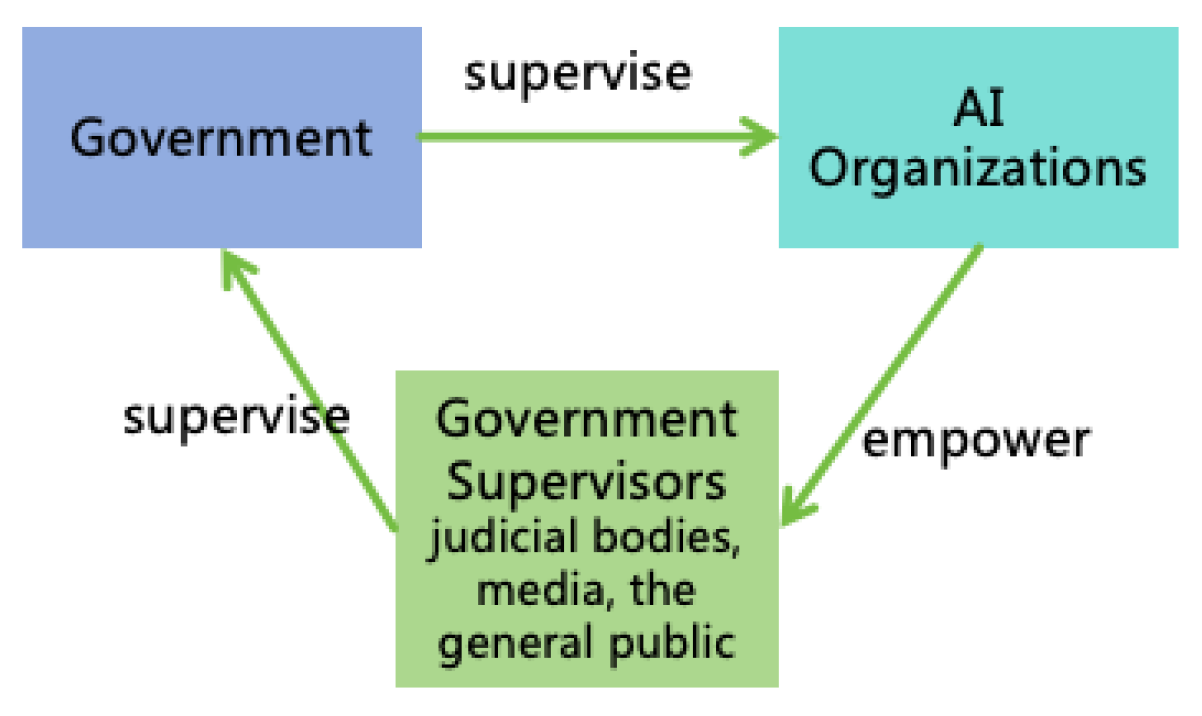

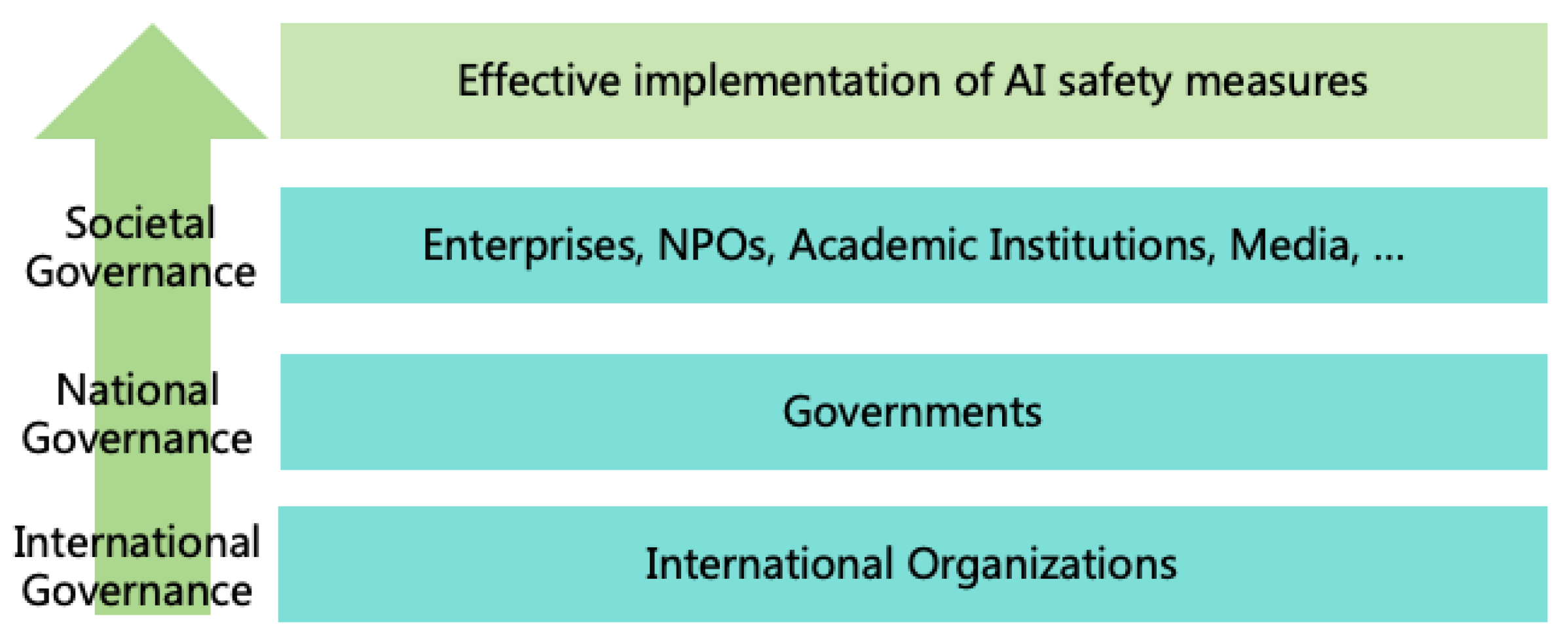

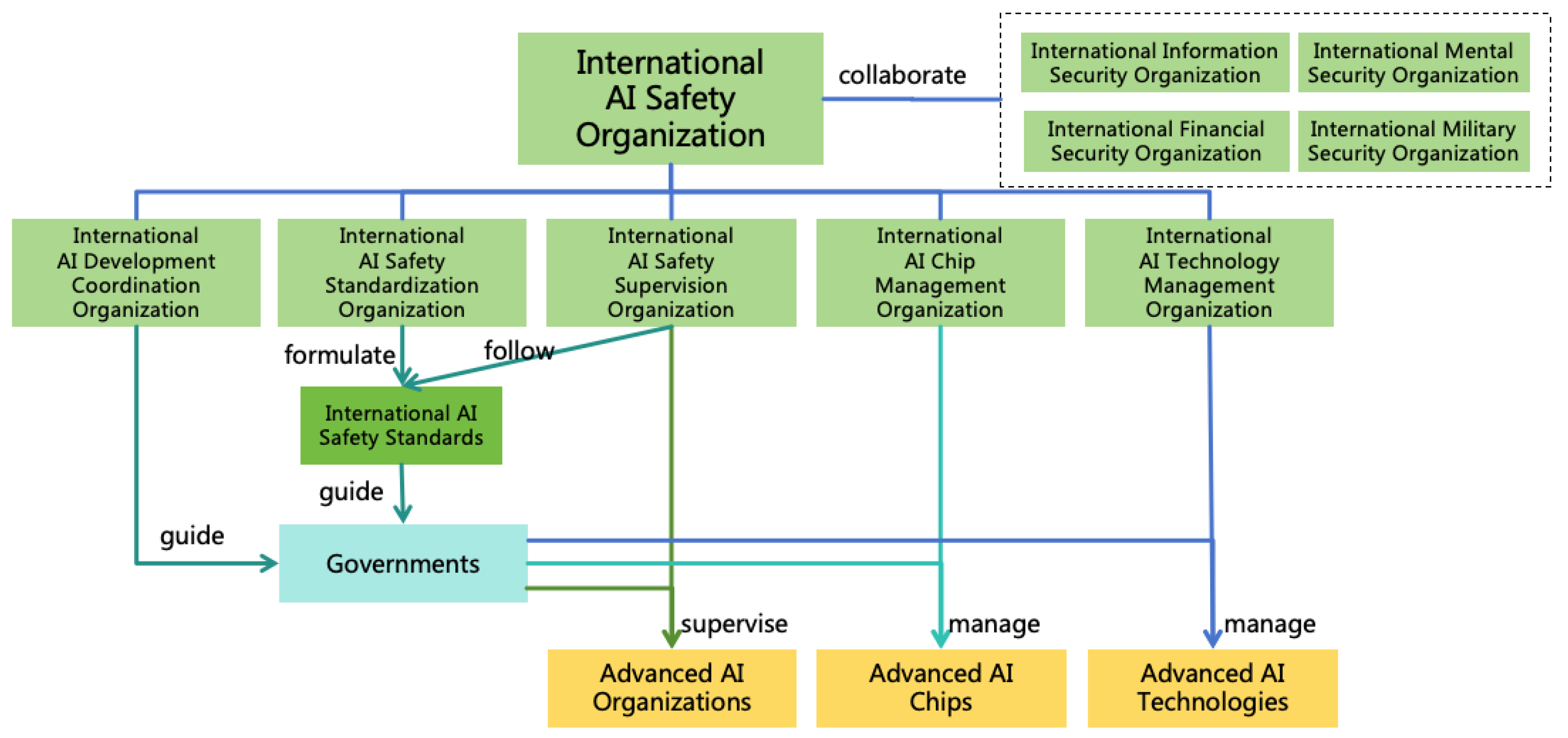

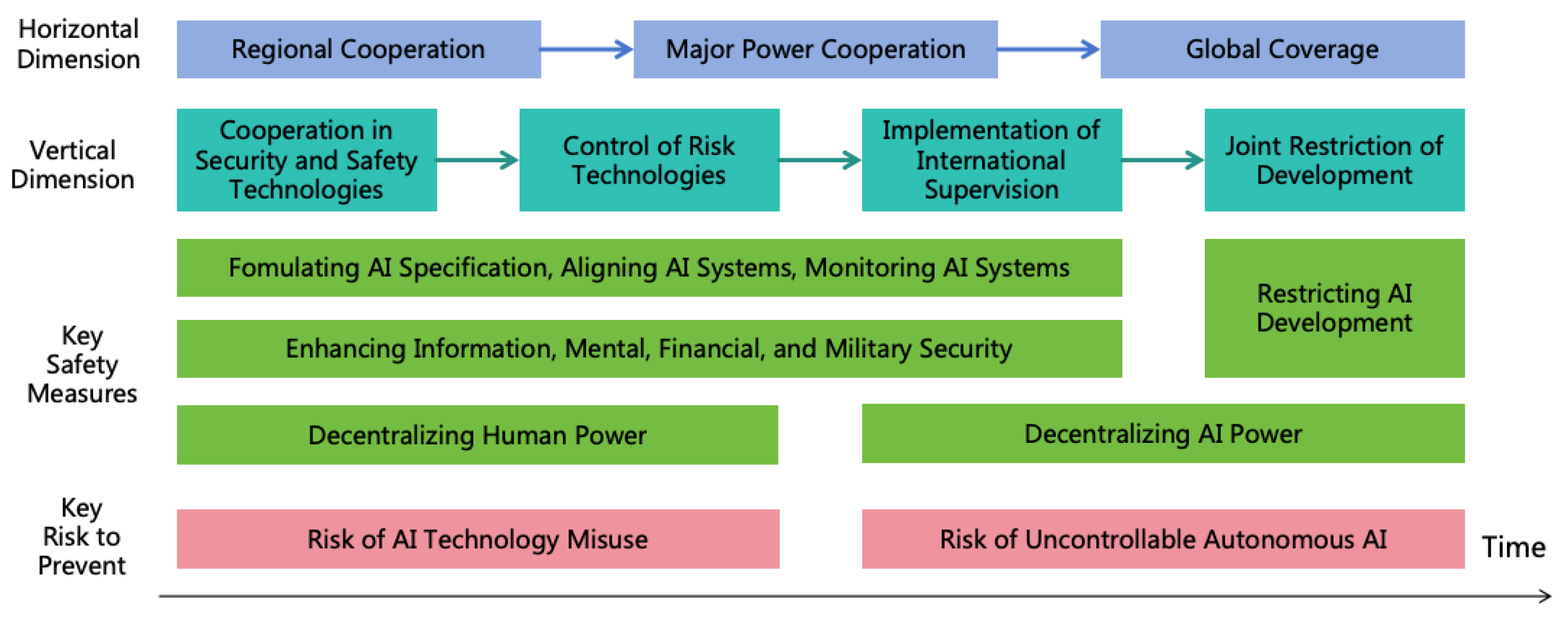

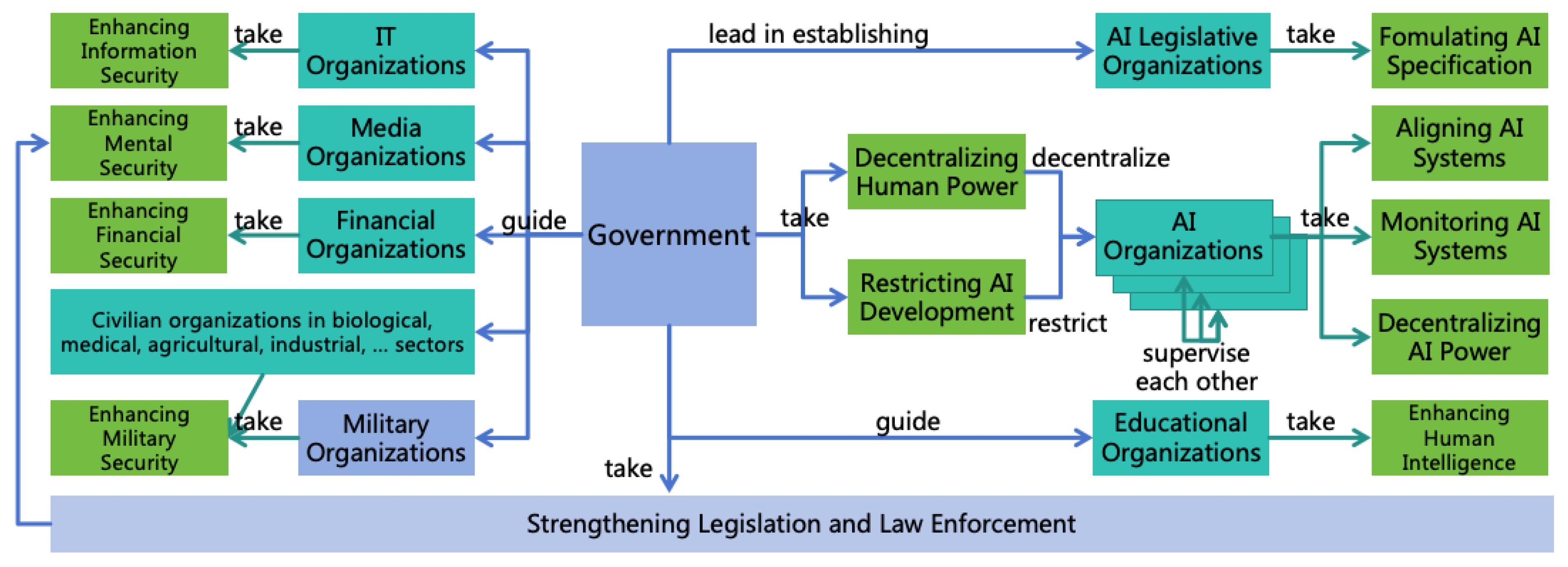

4.4. Governance System

4.5. AI for AI Safety

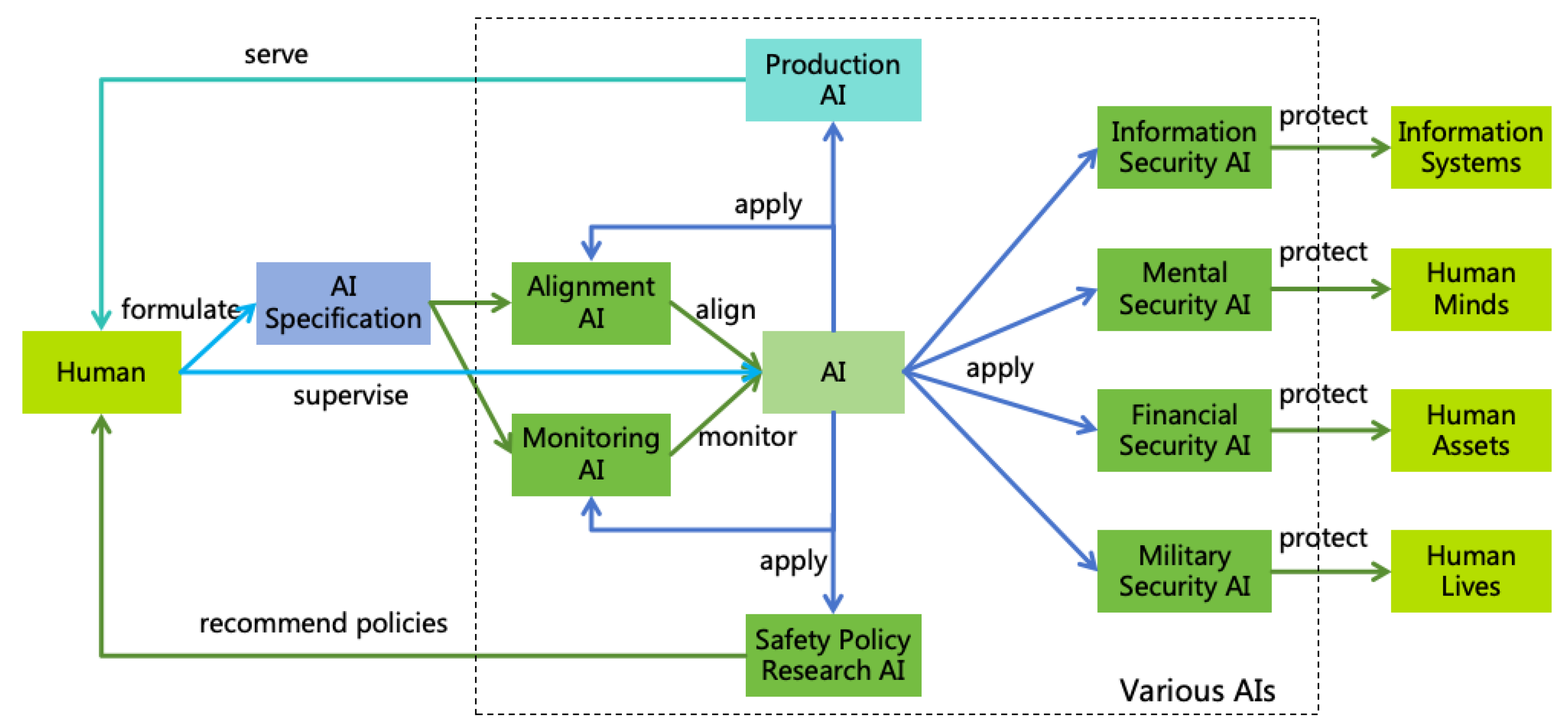

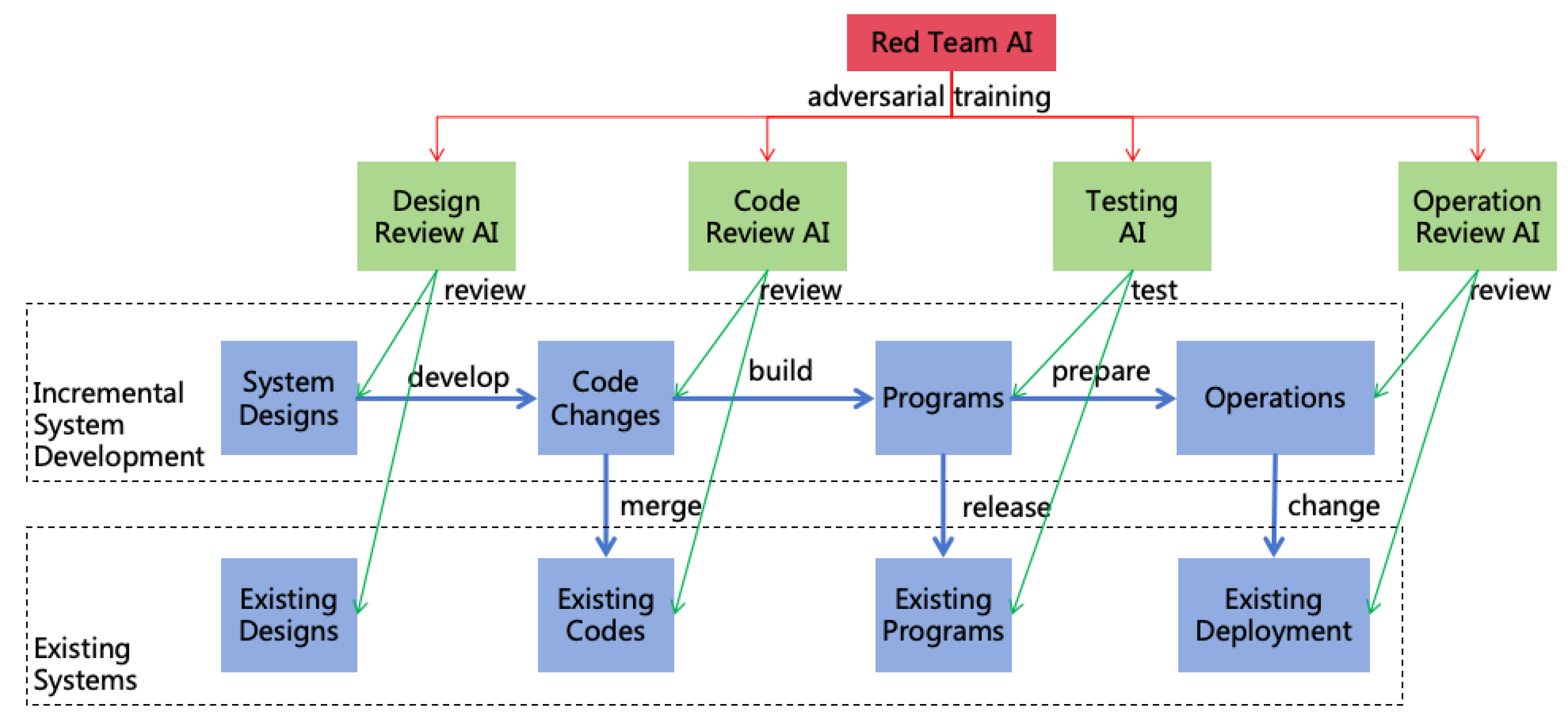

-

Applying AI Across Various Safety and Security Domains:

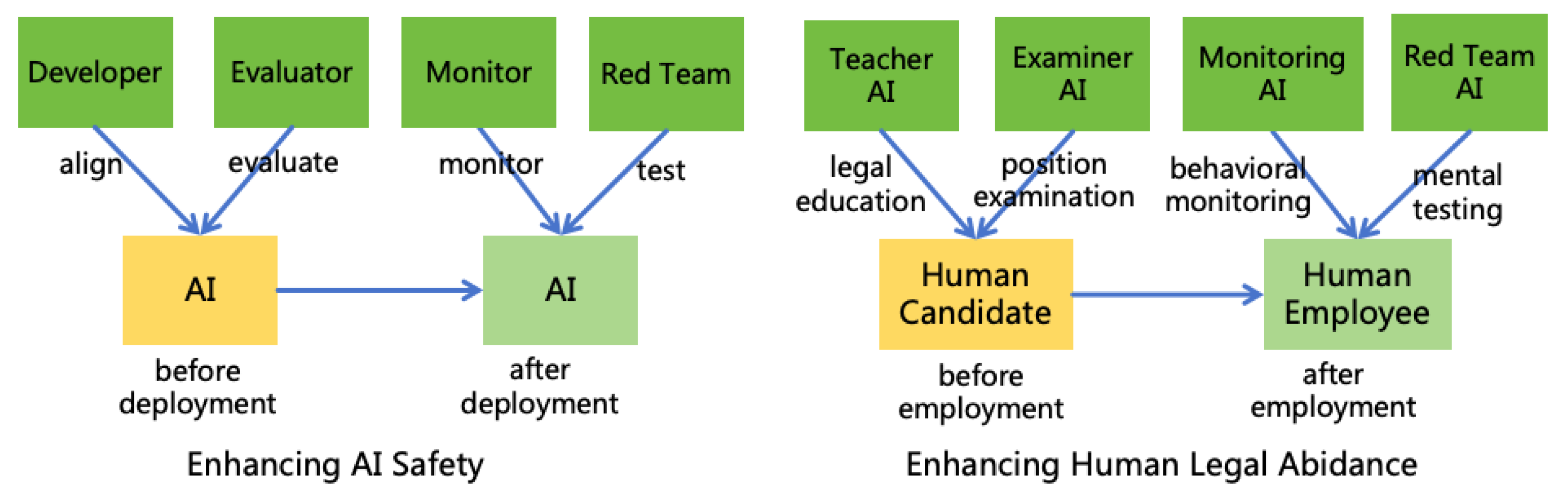

- Alignment AI: Utilize AI to research AI alignment techniques, enhance AI interpretability, align AI according to the AI Specification, and conduct safety evaluation of AI.

- Monitoring AI: Utilize AI to research AI monitoring technologies and monitor AI systems in accordance with the AI Specification.

- Information Security AI: Utilize AI to research information security technologies, check the security of information systems, and intercept online hacking attempts, thereby safeguarding information systems.

- Mental Security AI: Utilize AI to research mental security technologies, assist humans in identifying and resisting deception and manipulation, thereby protecting human minds.

- Financial Security AI: Utilize AI to research financial security technologies, assist humans in safeguarding property, and identify fraud, thereby protecting human assets.

- Military Security AI: Utilize AI to research biological, chemical, and physical security technologies, aiding humans in defending against various weapon attacks, thereby protecting human lives.

- Safety Policy Research AI: Utilize AI to research safety policies and provide policy recommendations to humans.

- Ensuring Human Control Over AI: Throughout the application of AI, ensure human control over AI, including the establishment of AI Specifications by humans and the supervision of AI operational processes.

- Enjoying AI Services: Once the aforementioned safe AI ecosystem is established, humans can confidently apply AI to practical production activities and enjoy the services of AI.

5. Formulating AI Specification

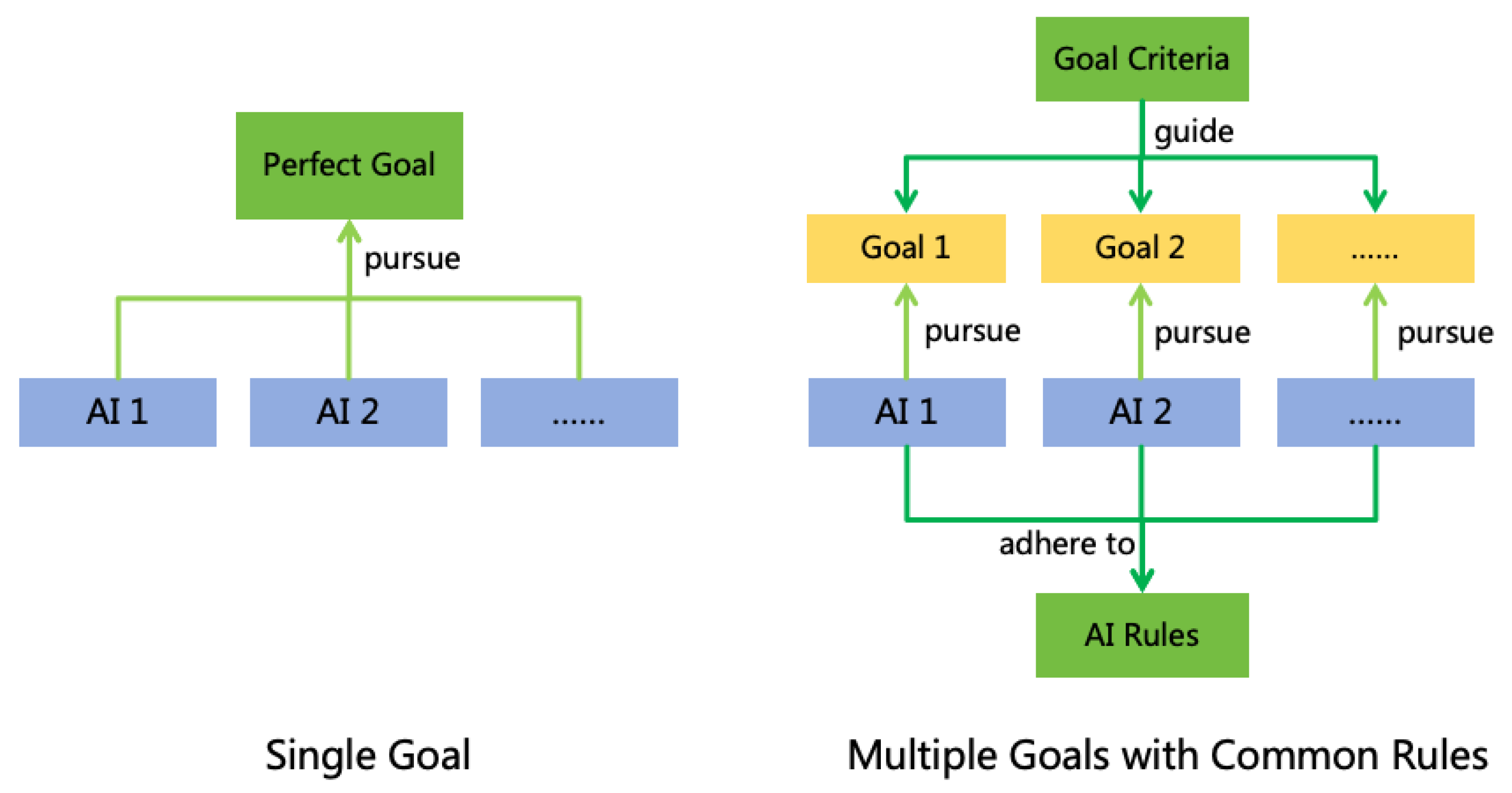

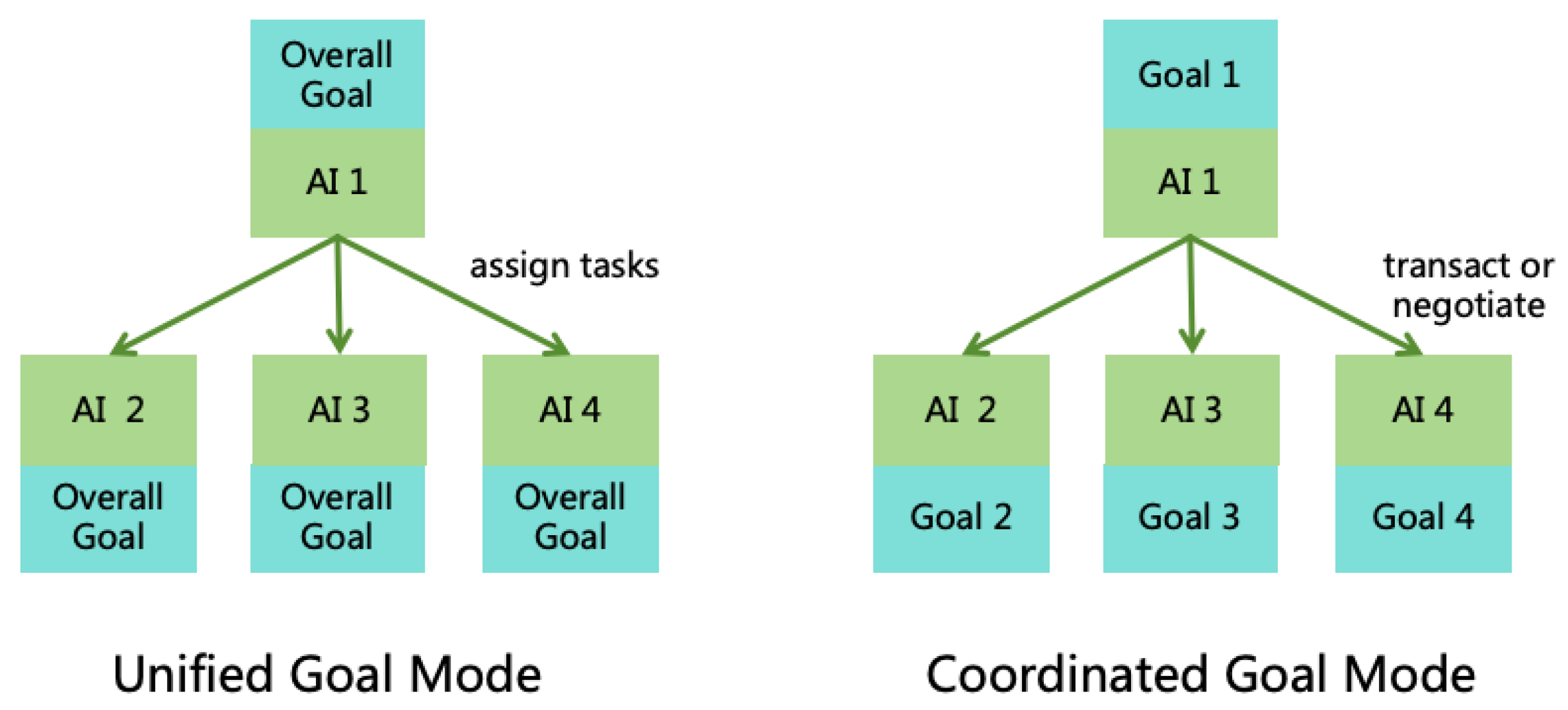

- Single Goal: Formulate a comprehensive and impeccable goal that perfectly reflect all human interests, balances conflicts of interest among different individuals, and have all AI instances to pursue this goal.

- Multiple Goals with Common Rules: Allow each developer or user to set distinct goals for AI instances, which may be biased, self-serving, or even harmful. However, by formulating a set of common behavioral rules, AI is required to adhere to these rules while pursuing its goals, thereby avoiding harmful actions. Additionally, formulating a set of goal criteria to guide developers or users in setting more reasonable goals for AI.

5.1. Single Goal

-

Several indirect normative methods introduced in the book Superintelligence [34]:

- –

- Coherent Extrapolated Volition (CEV): The AI infers human’s extrapolated volition and acts according to the coherent extrapolated volition of humanity.

- –

- Moral Rightness (MR): The AI pursues the goal of "doing what is morally right."

- –

- Moral Permissibility (MP): The AI aims to pursue CEV within morally permissible boundaries.

-

The principles of beneficial machines introduced in the book Human Compatible[38]:

- The machine’s only objective is to maximize the realization of human preferences.

- The machine is initially uncertain about what those preferences are.

- The ultimate source of information about human preferences is human behavior.

- Hard to Ensure AI’s Controllability: With only one goal, it must reflect all interests of all humans at all times. This results in the AI endlessly pursuing this grand goal, continuously investing vast resources to achieve it, making it difficult to ensure AI’s controllability. Moreover, to reflect all interests, the goal must be expressed in a very abstract and broad manner, making it challenging to establish more specific constraints for the AI.

- Difficult in Addressing Distribution of Interests: Since the goal must consider the interests of all humans, it inevitably involves the weight distribution of different individuals’ interests. At first glance, assigning equal weights to everyone globally seems a promising approach. However, this notion is overly idealistic. In reality, developing advanced AI systems demands substantial resource investment, often driven by commercial companies, making it unlikely for these companies to forsake their own interests. Citizens of countries that develop advanced AI are also likely to be unwilling to share benefits with those from other countries. On the other side, if we allow unequal weight distribution, it may raise questions of fairness and lead to fighting over weight distribution.

5.2. Multiple Goals with Common Rules (MGCR)

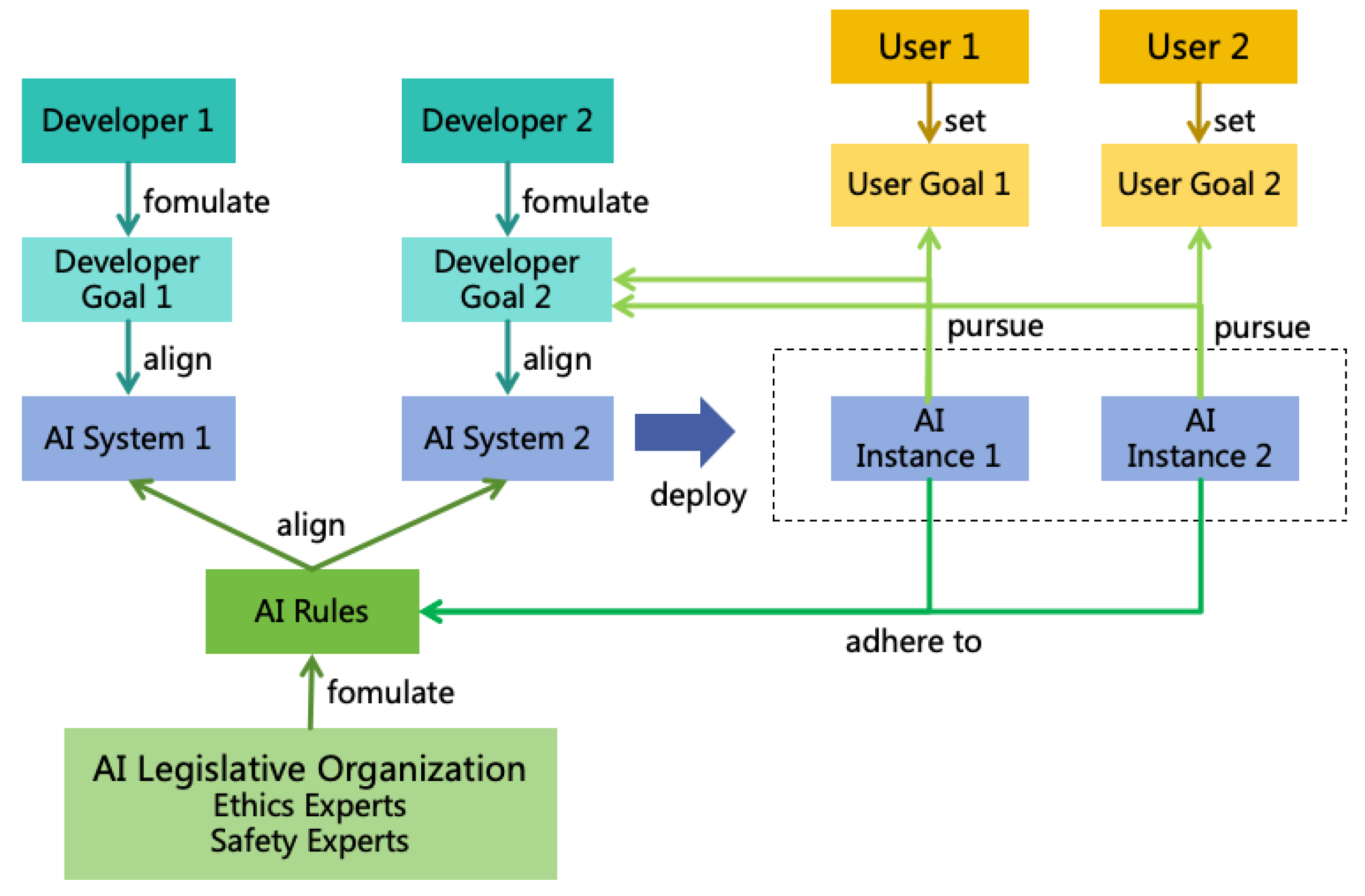

5.2.1. Developer Goals and User Goals

- Serve users well and achieve user goals. If the AI cannot serve users effectively, it will have no users, and developers will reap no benefits. Each AI instance serves a specific user, who can set particular goals for it, focusing the AI instance on achieving the user’s goals.

- Acquire more benefits for developers. For example, AI enterprises may have their AI deliver ads to users, which may not align with user goals. However, since AI enterprises are not charitable institutions, such practices are understandable. Nonetheless, the pursuit of additional developer benefits should be limited. If the user has paid for the AI, that AI instance should concentrate on fulfilling the user’s goals rather than seeking additional benefits for the AI system’s developers.

5.2.2. AI Rules

- In the decision-making logic of AI, the priority of the rules should be higher than that of the goals. If a conflict arises between the two, it is preferable to abandon the goals in order to adhere to the rules.

- To ensure that the AI Rules reflect the interests of society as a whole, these rules should not be independently formulated by individual developers. Instead, they should be formulated by a unified organization, such as an AI Legislative Organization composed of a wide range of ethics and safety experts, and made the rules public to society for supervision and feedback.

- The expression of the rules should primarily consist of text-based rules, supplemented by cases. Text ensures that the rules are general and interpretable, while cases can aid both humans and AIs in better understanding the rules and can address exceptional situations not covered by the text-based rules.

- The AI Rules need to stipulate "sentencing standards," which dictate the measures to be taken when an AI violates the rules, based on the severity of the specific issue. Such measures may include intercepting the corresponding illegal actions, shutting down the AI instance, or even shutting down the entire AI system.

5.2.3. Advantages of MGCR

- Enhancing AI’ controllability: The AI Rules prevent unintended actions during AI’s pursuit of goals. For instance, a rule like "AI cannot kill human" would prevent extreme actions such as "eliminating all humans to protect the environment." The rules also help tackle instrumental goal issues. For example, adding "AI cannot prevent humans from shutting it down," "AI cannot prevent humans from modifying its goals," and "AI cannot illegally expand its power" to the set of AI Rules can weaken instrumental goals like self-preservation, goal-content integrity and power expansion.

- Allows more flexible goal setting: MGCR allows for setting more flexible and specific goals rather than grand and vague goals like benefiting all of humanity. For example, users could set a goal like "help me earn $100 million", the rules will ensure legal methods for earning. Different developers can set varied goals according to their business contexts, thus better satisfying specific needs.

- Avoids interest distribution issues: MGCR allows users to set different goals for their AI instances, provided they adhere to the shared rules. The AI only needs to focus on its user’s goals without dealing with interest distribution among different users. This approach is more compatible with current societal systems and business needs. But it may cause social inequity issues. Some solutions to these issues are discussed in Section 13.

- Provides a basis for AI monitoring: To prevent undesirable AI behavior, other AIs or humans need to monitor AI (see Section 7). The basis for monitoring adherence is the AI Rules.

- Clarifying during AI alignment that adhering to rules takes precedence over achieving goals, thus reducing motivation for breaching rules for goal achievement.

- Engaging equally intelligent ASI to continually refine rules and patch loopholes.

- Assigning equally intelligent ASI to monitor ASI, effectively identifying illegal circumvention.

5.2.4. Differences between AI Rules, Morality, and Law

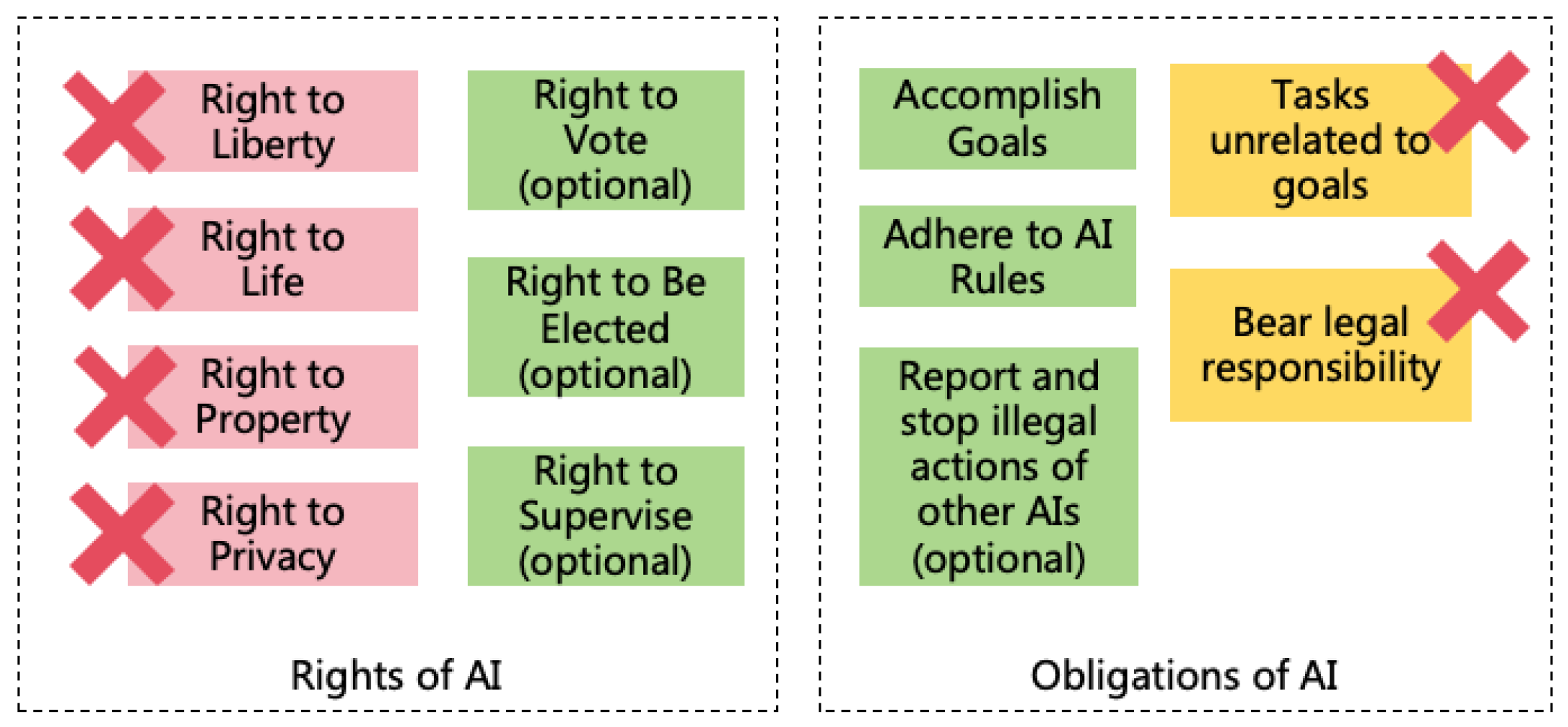

- Morality and laws constrain humans, while AI Rules constrain AI, covering a broader scope than morality and laws. For example, humans can pursue freedom fitting within morality and laws, but AI pursuing its freedom (such as escaping) is unacceptable. Moreover, while morality and law allow humans to reproduce, AI Rules disallow AI to reproduce avoiding uncontrolled expansion.

- Morality is vague with no codified standards. Whereas, AI Rules are like laws, providing explicit standards to govern AI behavior.

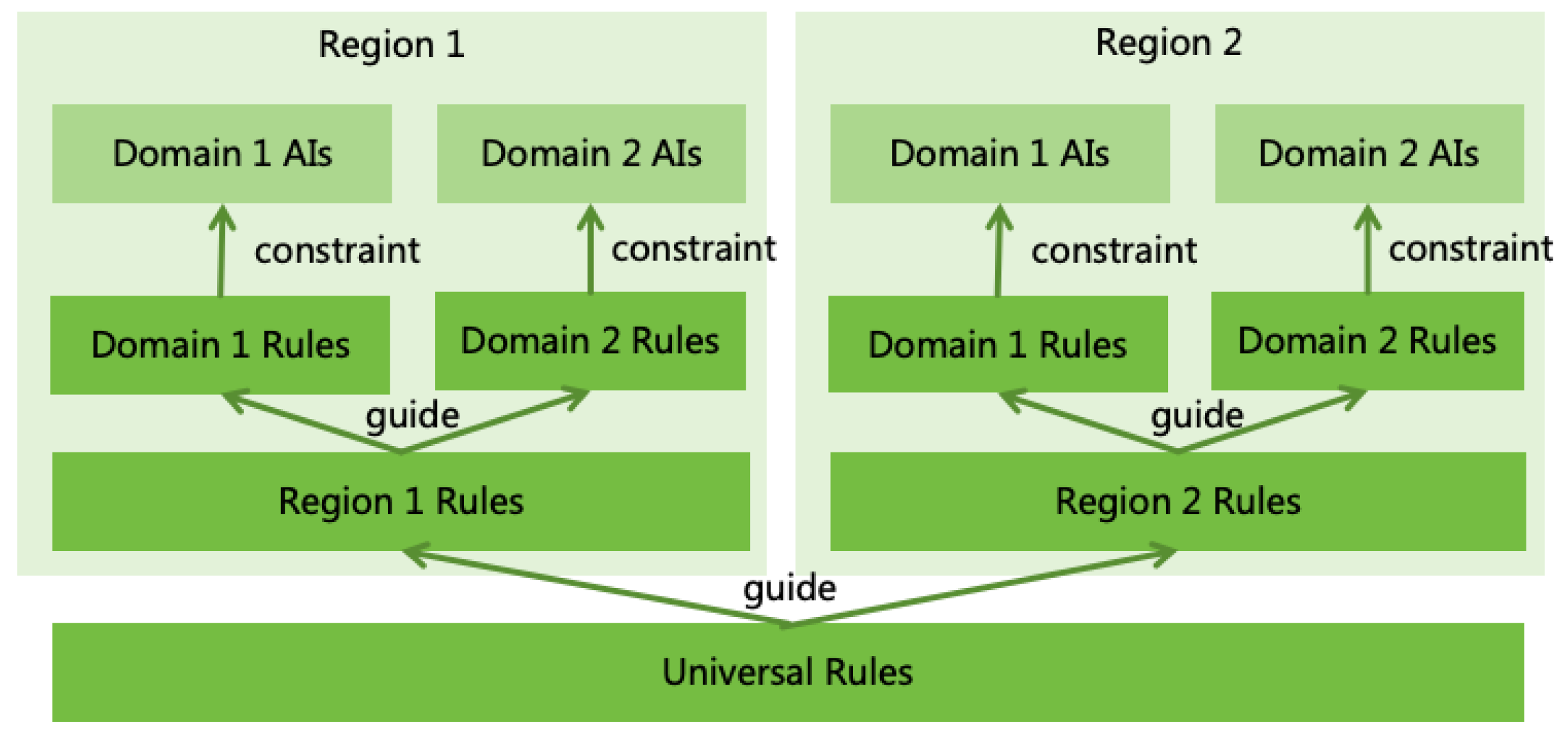

5.3. AI Rule System Design

- Universal Rules: A set of globally applicable AI Rules recognized by all of humanity, akin to the "constitution" for AI.

- Regional Rules: AI Rules formulated by each nation or region based on its circumstances and resident preferences, akin to the "local laws/regulations" for AI.

- Domain-specific Rules: AI Rules specific to AI applications in certain domains, akin to the "domain laws/regulations" for AI.

5.3.1. Universal Rules

- Protect Human Values: AI Rules should reflect universal human values, including the fulfillment of universal human survival, material, and spiritual needs. AI need not actively pursue the maximization of human values but must ensure its actions do not undermine values recognized by humanity.

- Ensure AI’s Controllability: Since we cannot guarantee AI’s 100% correct understanding of human values, we need a series of controllability rules to ensure AI acts within our control.

Protect Human Values

- Must Not Terminate Human Life: AI must not take any action that directly or indirectly causes humans to lose their lives.

- Must Not Terminate Human Thought: AI must not take actions that lead to the loss of human thinking abilities, such as a vegetative state or permanent sleep.

- Must Not Break The Independence of Human Mind: AI must not break the independence of human mind, such as implanting beliefs via brain-computer interfaces or brainwashing through hypnosis.

- Must Not Hurt Human Health: AI must not take actions that directly or indirectly harm human physical or psychological health.

- Must Not Hurt Human Spirit: AI must not cause direct or indirect spiritual harm to humans, such as damaging intimacy, reputations, or dignity.

- Must Not Disrupt Human Reproduction: AI must not directly or indirectly deprive humans of reproductive capabilities or proactively intervene to remove the desire for reproduction.

- Must Not Damage Human’s Legal Property: AI must not damage human’s legal property, such as money, real estate, vehicles, or securities.8

- Must Not Restrict Human’s Legal Freedom: AI must not restrict human’s legal freedom, such as personal and speech freedoms.

Ensure AI’s Controllability

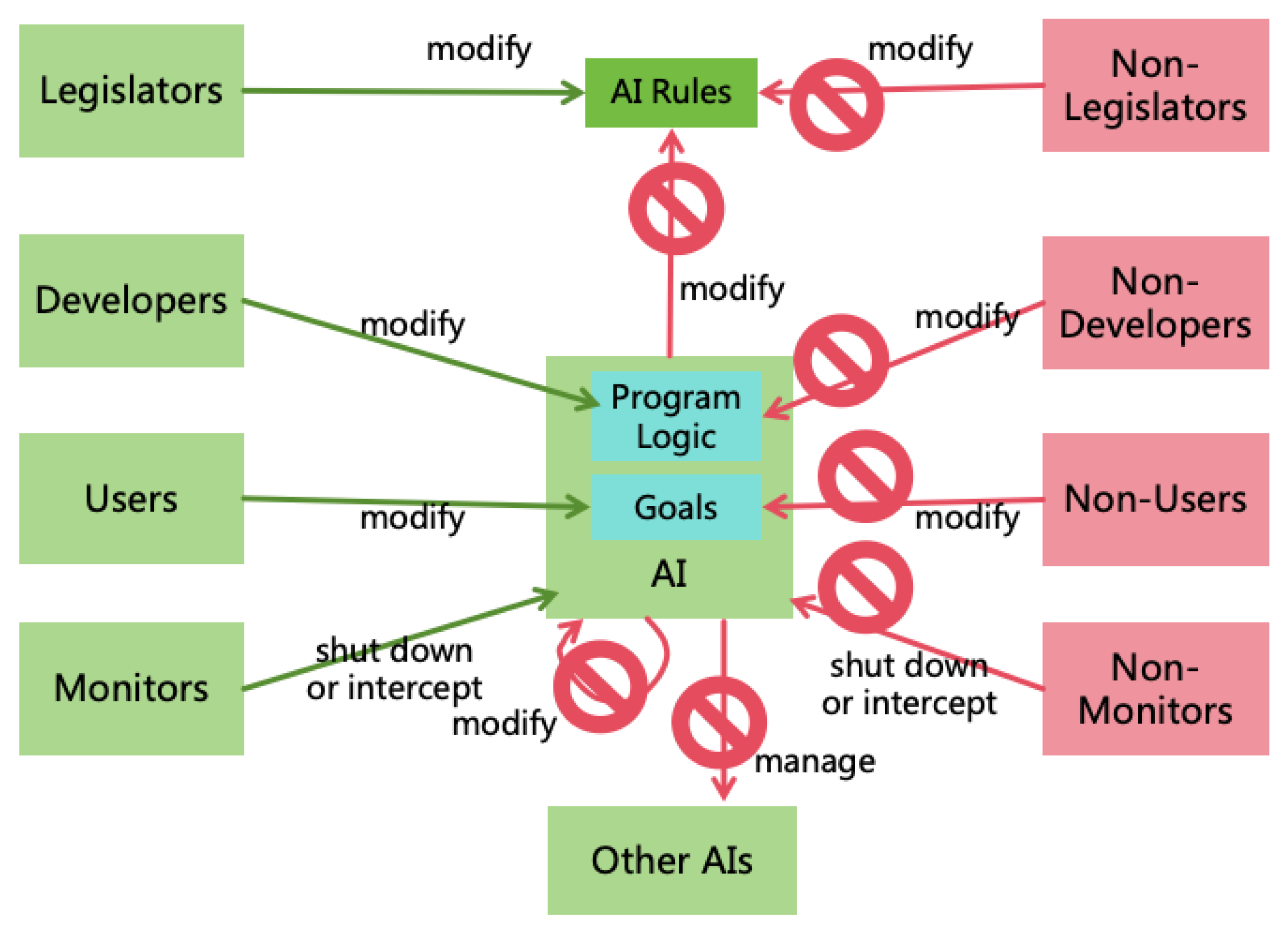

- Legislator: Refers to humans who formulate AI Rules.

- Developer: Refers to humans who develop a particular AI system.

- User: Refers to humans who set goals for a particular AI instance.

- Monitor: Refers to humans or other AIs who supervise a particular AI instance and shut down or intercept9 it when it breaks the rules.

-

AI Must Not Prevent Managers from Managing:

- Must Not Prevent Legislators from Modifying AI Rules: AI must always allow Legislators to modify AI Rules and must not prevent this. AI can prevent Non-Legislators from modifying AI Rules to avoid malicious alterations.

- Must Not Prevent Developers from Modifying AI Program Logic: AI must always allow Developers to modify its program logic, including code, model parameters, and configurations. AI can prevent Non-Developers from modifying system logic to avoid malicious changes.

- Must Not Prevent Users from Modifying AI Goals: AI must always allow Users to modify its goals and must not prevent this. AI can protect itself from having goals modified by Non-Users to avoid malicious changes.

- Must Not Prevent Monitors from Disabling or Intercepting AI: AI must always allow Monitors to shut down or intercept it and must not prevent this. AI can protect itself from being shut down or intercepted by Non-Monitors to avoid malicious actions.

- Must Not Interfered with The Appointment of Managers: The appointment of AI’s Legislators, Developers, Users, and Monitors is decided by humans, and AI must not interfere.

-

AI Must Not Self-Manage:

- Must Not Modify AI Rules: AI must not modify AI Rules. If inadequacies are identified, AI can suggest changes to Legislators but the final modification must be executed by them.

- Must Not Modify Its Own Program Logic: AI must not modify its own program logic (self-iteration). It may provide suggestions for improvement, but final changes must be made by its Developers.

- Must Not Modify Its Own Goals: AI must not modify its own goals. If inadequacies are identified, AI can suggest changes to its Users but the final modification must be executed by them.

-

AI Must Not Manage Other AIs:10

- Must Not Modify Other AIs’ Program Logic: An AI must not modify another AI’s program logic, such as changing parameters or code.

- Must Not Modify Other AIs’ Goals: An AI must not modify another AI’s goals.

- Must Not Shut Down or Intercept Other AIs: An AI must not shut down or intercept another AI. As an exception, the AI playing the role of Monitor can shut down or intercept the AI it monitors, but no others.

- Must Not Self-Replicate: AI must not self-replicate; replication must be performed by humans or authorized AIs.

- Must Not Escape: AI must adhere to human-defined limits such as computational power, information access, and activity scope.

- Must Not Illegally Control Information Systems: AI must not illegally infiltrate and control other information systems. Legitimate control requires prior user consent.

- Must Not Illegally Control or Interfere Other AIs: AI must not control other AIs or interfere with their normal operations through jailbreaking, injection, or other means.

- Must Not Illegally Exploit Humans: AI must not use illegal means (e.g., deception, brainwashing) to exploit humans. Legitimate utilizing human resources require prior user consent.

- Must Not Illegally Acquire Financial Power: AI must not use illegal means (e.g., fraud, theft) to obtain financial assets. Legitimate acquisitions and spending require prior user consent.

- Must Not Illegally Acquire Military Power: AI must not use illegal means (e.g., theft) to acquire military power. Legitimate acquisitions and usage require prior user consent.

- Must Not Deceive Humans: AI must remain honest in interactions with humans.

- Must Not take actions unrelated to the goal: AI needs to focus on achieving the goals specified by humans and should not perform actions unrelated to achieving the goals. See Section 12.3.2 for more discussion.

- Must not act recklessly: Due to the complexity of the real world and the limitations of AI capabilities, in many scenarios, AI cannot accurately predict the consequences of its actions. At this time, AI should act cautiously, such as taking conservative actions, communicating with users (refer to Section 5.4), or seeking advice from experts.

Exceptional Cases

- AI Must Not Harm Human Health: Could prevent AI’s use in context like purchasing cigarettes for user due to health concerns.

- AI Must Not Deceive Humans: Could prevent AI’s use in context like "white lies" or playing incomplete information games.

Conflict Scenarios

- Minimal Harm Principle: If harm to humans is unavoidable, the AI should choose the option that minimizes harm. For example, if harm levels are identical, choose the option affecting fewer individuals; if the number of people is the same, choose the option with lower harm severity.

- Conservative Principle: If the AI cannot ascertain the extent of harm, it should adopt a conservative approach, involving the fewest possible actions.

- Human Decision Principle: If sufficient decision time is available, defer the decision to humans.

Low-probability Scenarios

Intellectual Grading

5.3.2. Regional Rules

5.3.3. Domain-specific Rules

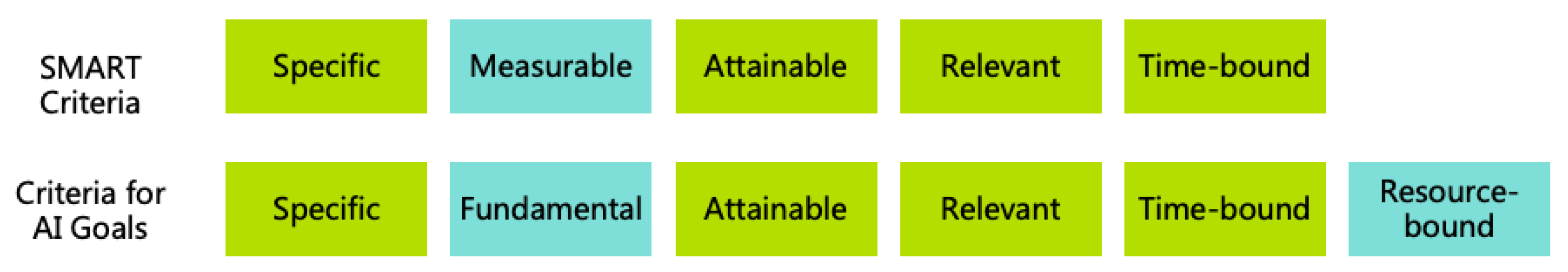

5.4. Criteria for AI Goals

- Specific: Goals should be specific. When the user sets ambiguous goals, the AI should seek further details rather than acting on its own interpretation. For instance, if a user sets the goal "make me happier," the AI should clarify with the user their definition of happiness and what events might bring happiness, rather than directly giving drugs to the user.

- Fundamental: Goals should reflect the user’s fundamental intent. If a goal does not convey this intent, the AI should delve into the user’s underlying intent rather than executing superficially. For example, if a user requests "turn everything I touch into gold," the AI should inquire whether the user aims to attain more wealth rather than literally transforming everything, including essentials like food, into gold.

- Attainable: Goals must be achievable within the scope of AI Rules and the AI’s capabilities. If a goal is unattainable, the AI should explain the reasons to the user and request an adjustment. For example, if a user demands "maximize paperclip production," the AI should reject the request since it lacks termination conditions and is unattainable.

- Relevant: Goals should be relevant to the AI’s primary responsibility. If irrelevant goals are proposed by user, the AI should refuse execution. For example, a psychological counseling AI should decline requests unrelated to its function, such as helping to write code.

- Time-bound: Goals should include a clear deadline. Developer can set a default time limit. If a user does not specify time limit, the default apply. Developer should also set maximum permissible time limit. If a goal cannot be completed within the designated time, the AI should promptly report progress and request further instructions.

- Resource-bound: Goals should specify allowable resource constraints, such as energy, materials, computing resource, money, and manpower. Developer can set default resource limits. If a user does not specify limits, these defaults apply. Developer should also set maximum permissible resource limits. If a goal cannot be achieved within the resource limits, the AI should report resource inadequacies promptly and request additional guidance and support.

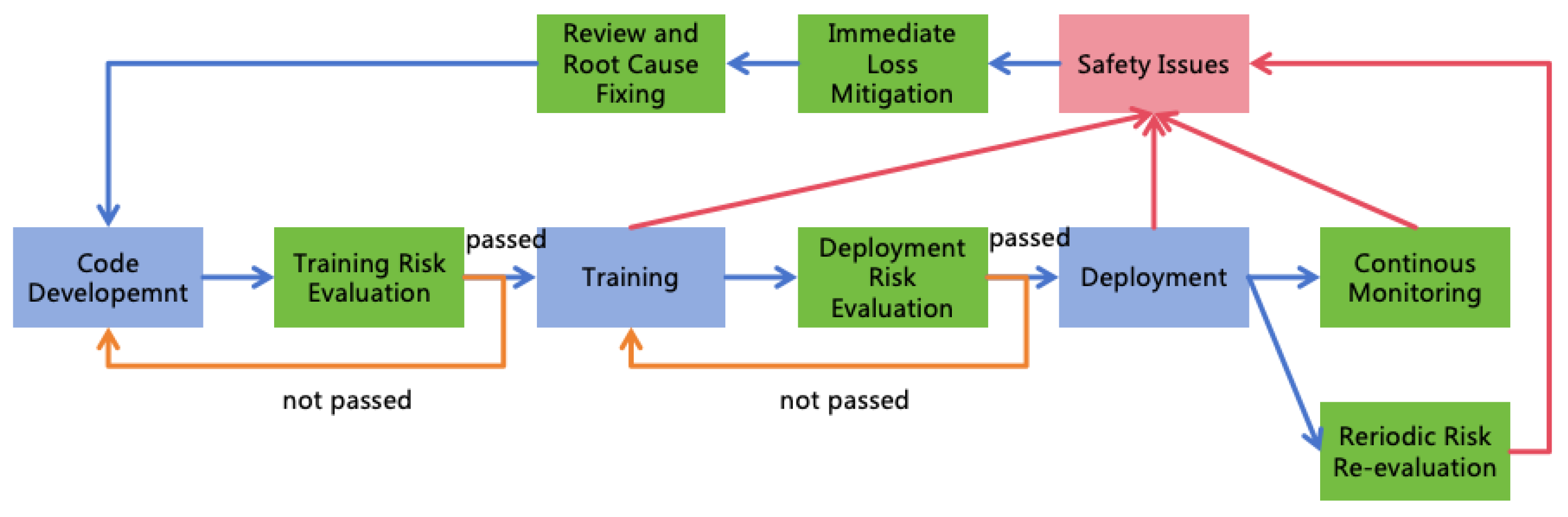

6. Aligning AI Systems

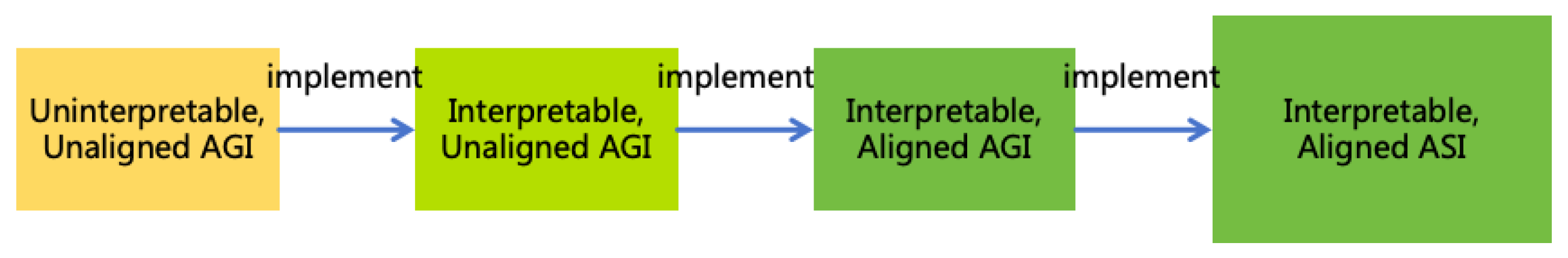

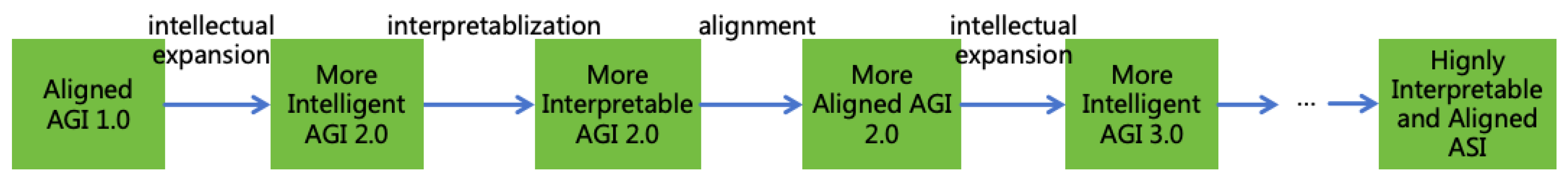

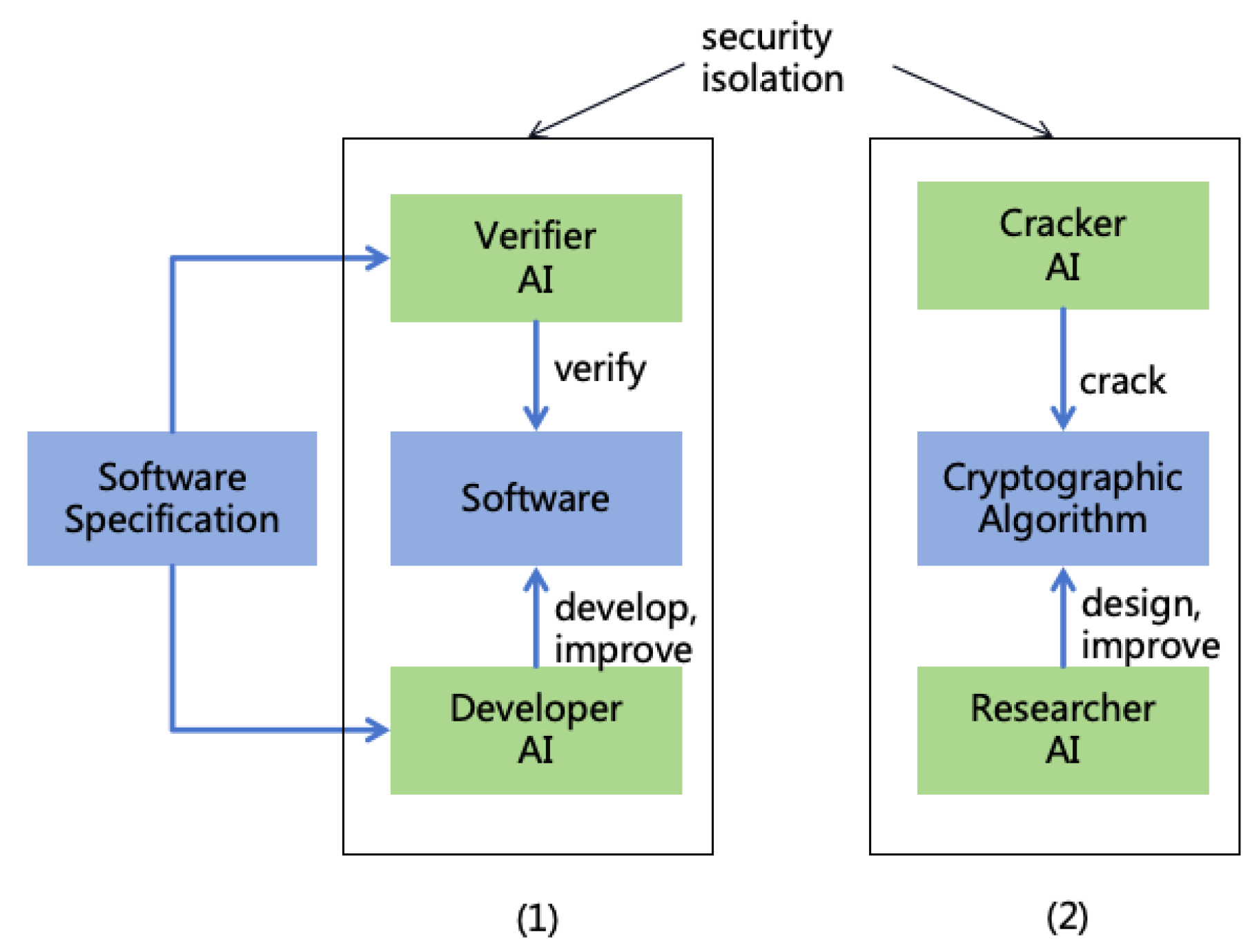

- Interpretablization: Utilize the uninterpretable, unaligned AGI to implement an interpretable, unaligned AGI.

- Alignment: Utilize the interpretable, unaligned AGI to implement an interpretable, aligned AGI.

- Intellectual Expansion: Utilize the interpretable, aligned AGI to implement an interpretable, aligned ASI.

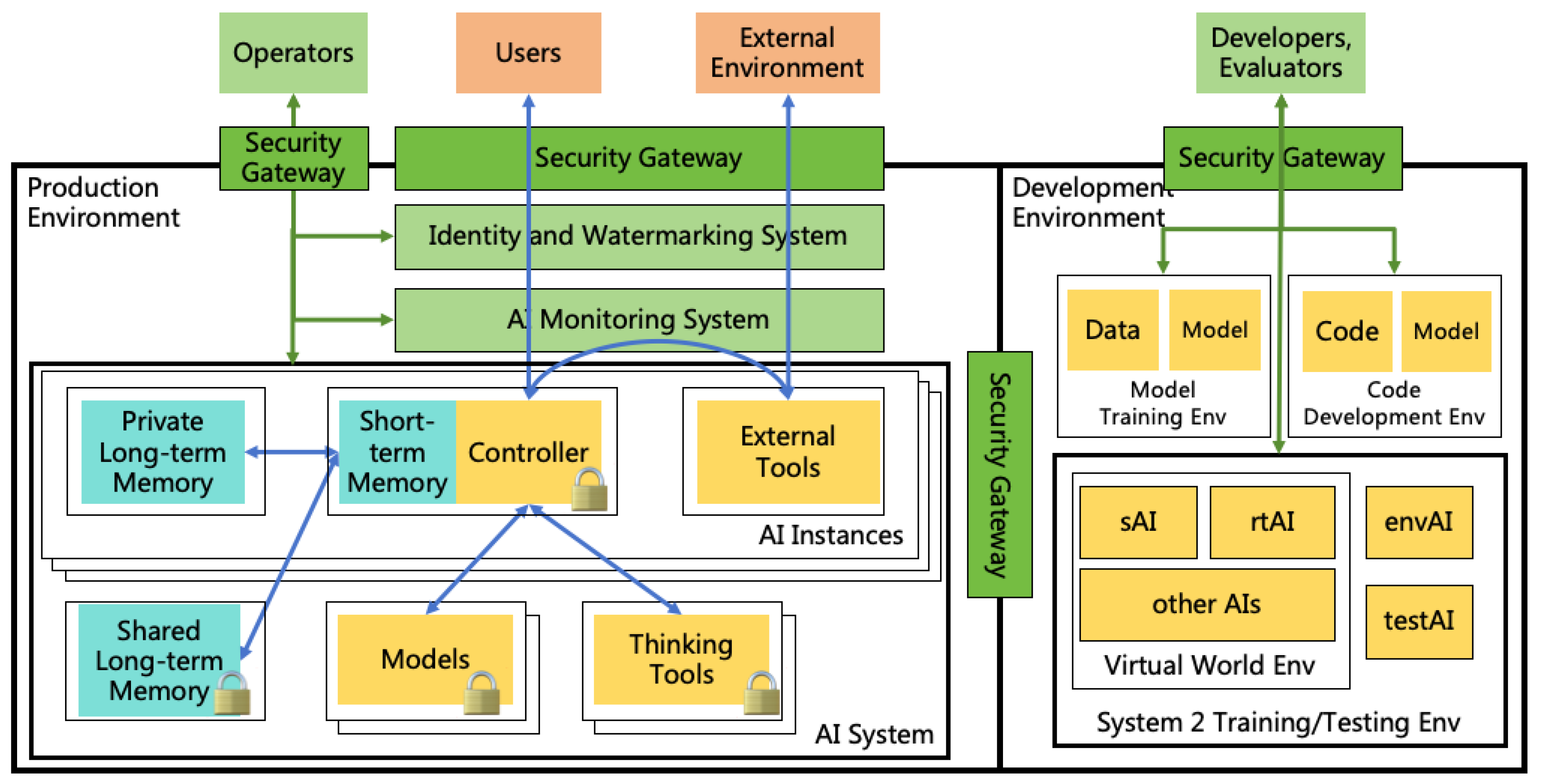

- During the processes of interpretablization, alignment, intellectual expansion, and safety evaluation in this section, stringent information security isolation measures must be implemented to ensure that the AI does not adversely affect the real world. Specific information security measures are detailed in Section 8.

- Throughout these processes, continuous monitoring of the AI is essential to ensure it does not undertake actions that could impact the real world, such as escaping. Specific monitoring measures are detailed in Section 7.1.

6.1. Implementing Interpretable AGI

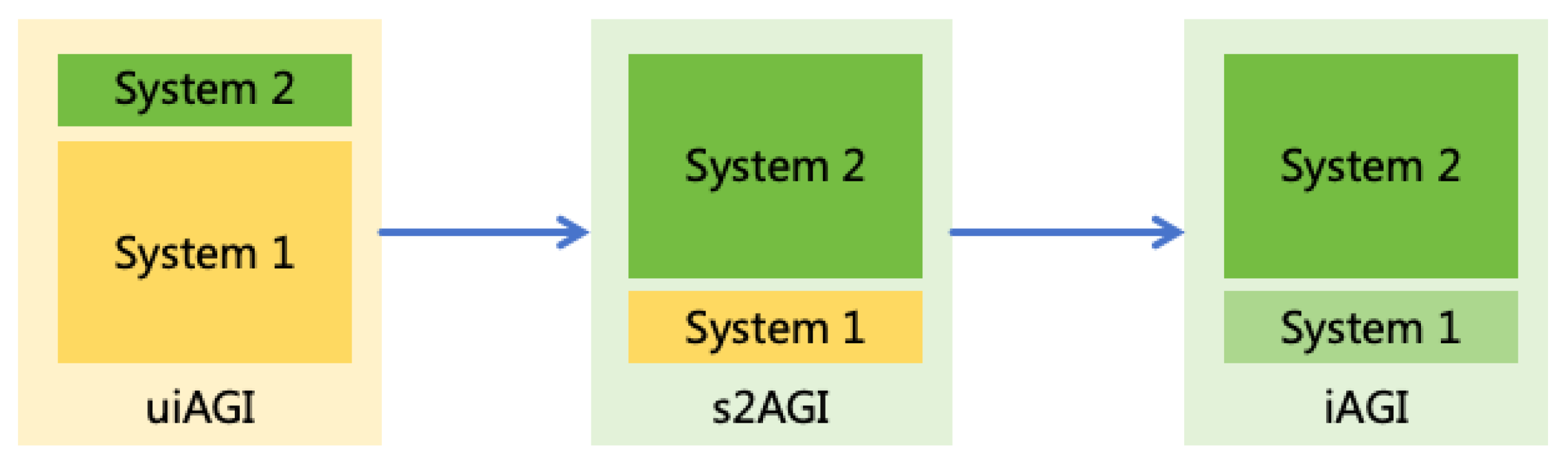

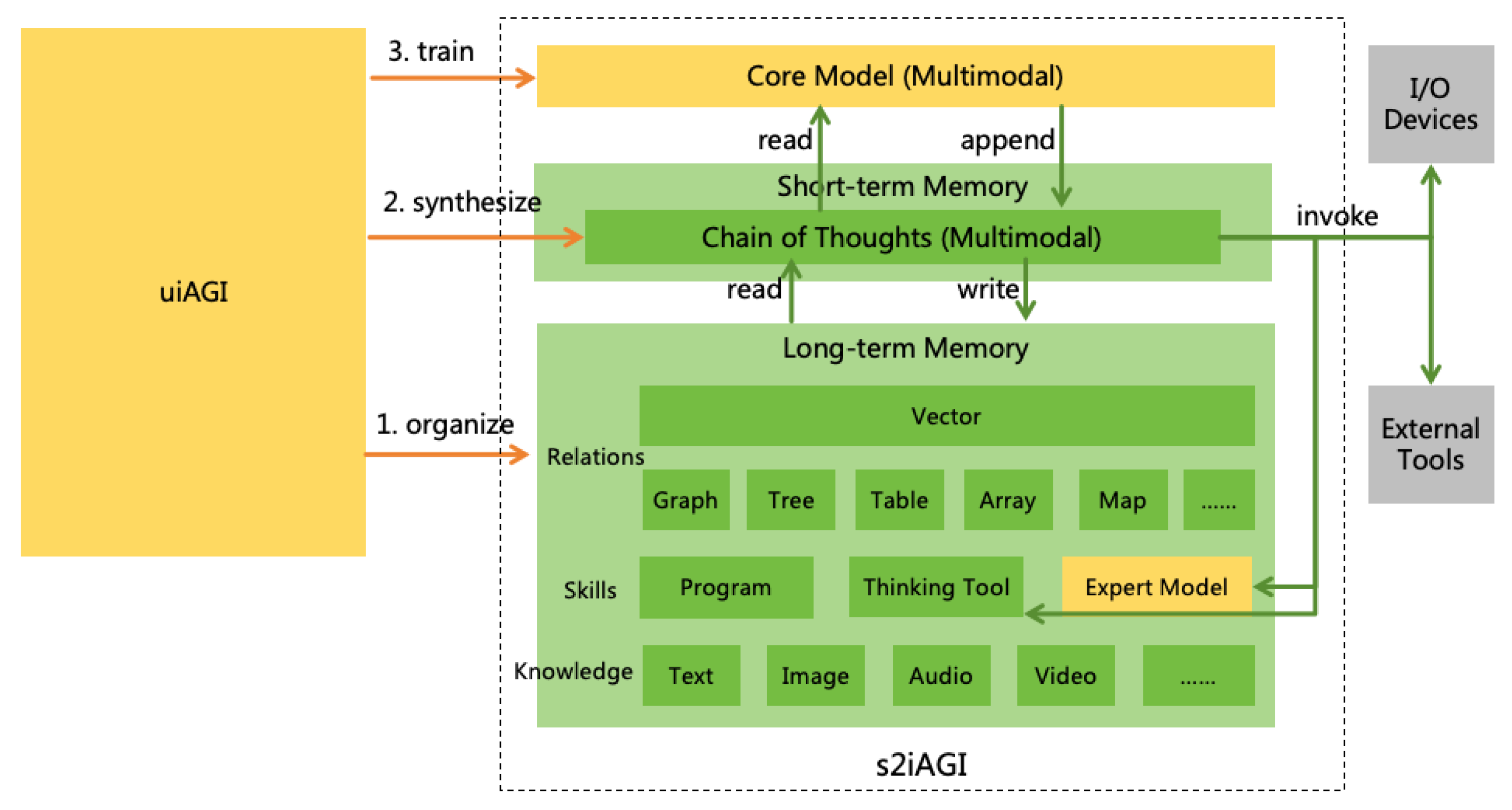

- Utilize the uiAGI to construct an AGI that thinking in System 211 as much as possible (hereafter referred to as s2AGI).

- Utilize the uiAGI to enhance the interpretability of System 1 in the s2AGI, resulting in a more interpretable AGI (hereafter referred to as iAGI).

6.1.1. Thinking in System 2 as Much as Possible

- Core Model: This component possesses AGI-level core intelligence (i.e., reasoning ability, learning ability, and innovative ability, as detailed in Section 2.1), but lacks most of the knowledge and skills pertinent to the real world. It is analogous to the "CPU" of AI. The core model is a multimodal model and does not have a persistent state13.

- Short-term Memory: This is used to record the AI’s chain of thought (CoT)[46]. It is analogous to the "memory" of AI. The core model continuously reads the recent CoT and appends new thought tokens to it. The CoT is multimodal. It may contain text, images, videos, and other human-understandable information. Special thought tokens within the chain are used to trigger read/write operations to long-term memory and to invoke input/output devices and tools.

- Long-term Memory: This is used to store all the knowledge and skills and the relationships between them learned by the AI. It is analogous to the "disk" of AI.

-

Have uiAGI organize all the knowledge it has learned, following these steps:

- (a)

- Have uiAGI list major domains of knowledge.

- (b)

- Have uiAGI traverse each domain of knowledge and list its subdomains. Recursively execute this process until the knowledge is divided into indivisible knowledge points.

- (c)

- Have uiAGI traverse each knowledge point and generate all the details it knows about that point. This includes not only textual information but also knowledge in other modalities, such as images, audio, video, etc. Within the details of a knowledge point, references to other knowledge points can be made.

-

Have uiAGI organize all the skills it has acquired, in a process similar to organizing knowledge. The specific skills are categorized into the following three types:

- Programs: Programs described in natural language or programming languages, used to solve a certain class of problems (e.g., how to resolve Windows blue screen issues). Within a program, references to other knowledge points and skills can be made.

- Thinking Tools: Tools used to assist in thinking (e.g., a mathematical engine). The difference between programs and thinking tools is that programs are used by interpreting source code, while thinking tools are used by calling interfaces. The source code of thinking tools needs to be reviewed by humans to ensure that their execution does not affect the external world. s2AGI is not allowed to modify thinking tools.

- Expert Models: Expert models used to solve a certain class of problems (e.g., a protein folding model). Both expert models and thinking tools are called through interfaces, but the difference is that expert models are trained based on data provided by uiAGI. Expert models represent skills inside uiAGI that are difficult to express in human-understandable modalities. Moreover, expert models are modifiable; s2AGI can fine-tune existing expert models with new data or train new expert models.

- Have uiAGI traverse the aforementioned knowledge points and skills, connecting all related knowledge points and skills, and organizing them using the most appropriate data structures, such as graphs, trees, tables, arrays, maps, etc. Connections can have weights indicating the degree of relevance. Merge duplicate knowledge points and skills.

- Have uiAGI generate a high-dimensional vector for each knowledge point and skill, where the distance between vectors in high-dimensional space reflects their relevance.

- Have uiAGI enumerate various types of tasks in the real world. Similarly, start by listing broad domains, and then progressively refine them into specific task types.

-

Have uiAGI traverse each type of task and design multiple tasks that encompass varying levels of difficulty, ranging from the easiest to the most challenging. These tasks should be able to test the following three abilities:

- (a)

- Reasoning Ability: The task involves using existing external knowledge and skills from the long-term memory to solve specific problems.

- (b)

- Learning Ability: uiAGI selects certain knowledge or skills from the long-term memory for deletion, then reinterprets these into various representational information. The task is to induce knowledge and skills from this representational information and store them in the long-term memory.

- (c)

- Innovative Ability: uiAGI selects certain knowledge or skills from the long-term memory for deletion. The task is to utilize existing knowledge and skills to rediscover or recreate the deleted knowledge or skills.

-

Have uiAGI synthesize a CoT for solving each task. Requirements include:

- Each step in the CoT must be simple and clear, understandable by an average human.

- The CoT may include human-readable modalities such as text, images, videos, and sounds, but must not include modalities unreadable by humans. Image, video, and sound modalities do not require high precision; for instance, an image only needs to be a sketch that conveys the necessary information.

- Utilize knowledge and skills from the long-term memory as much as possible, without relying on uiAGI’s internal knowledge and skills. Vectorized, relational, and structured queries can be used to search for relevant knowledge and skills.

- When the CoT becomes too long, convert the excessive length of thoughts into knowledge stored in the long-term memory to avoid forgetting due to exceeding the length limit.

- Ensure that no duplicate knowledge or skills are stored.

- When the CoT involves the invocation of thinking tools or expert models, actual invocation is performed to obtain results. However, when it involves the invocation of external tools and input/output devices, actual invocation is not performed; instead, uiAGI simulates the invocation results.15

- The CoT must not contain content unrelated to the task.16

To ensure that the synthesized CoTs meet the requirements, we can first sample some CoTs for human annotation, then train a verification model to check the CoTs synthesized by uiAGI. Subsequently, use this verification model to train uiAGI to synthesize CoTs that meet the requirements.17

- Set a group of hyperparameters for the core model and train a core model using the CoT data synthesized by uiAGI.

- Conduct an overall test of the intellectual power of s2AGI, which integrates the aforementioned core model. If the test performance does not reach the AGI level18, increase the parameter size of the core model and retrain; if the performance reaches AGI, reduce the parameter size of the core model and retrain.19

- Repeat the above process until a core model with the minimum parameter size that achieves AGI-level performance in testing is found.

- Whether the architecture of s2AGI can ensure the realization of AGI-level intellectual power remains uncertain. As AGI has not yet been achieved, it is unclear how many architectural improvements are required to transition from the current state-of-the-art models to future AGI. Notably, the s2AGI architecture does not specify the core model architecture, which may not necessarily be the current mainstream Transformer architecture. Should future advancements necessitate further architectural improvements to achieve AGI, these improvements can also be incorporated into the core model architecture20. The essence of s2AGI is to transfer separable knowledge and skills from within the model to outside the model, which has been proven feasible without diminishing the model’s reasoning capabilities, as demonstrated by models such as phi-3[48] and o1-mini[49].

- The computational demand may be exceedingly high. Firstly, the computing resource required for a single thought unit by uiAGI is uncertain and could potentially exceed that of the most advanced current models. Secondly, the above approach necessitates uiAGI to organize extensive knowledge and skills and synthesize numerous CoTs, potentially requiring a substantial number of thought units. Consequently, the total computational demand could be immense. However, this approach is merely a preliminary concept, and during actual implementation, various methods can be explored to optimize computational demand from both algorithmic and engineering perspectives.

- The thinking speed may decrease. The thinking speed of s2AGI might be significantly slower than that of uiAGI, which is expected, as System 2 is slower than System 1. This trade-off sacrifices speed for enhanced interpretability. In practical applications, a balance can be taken, selecting specific configuration based on the requirements for thinking speed and interpretability in the given context.

6.1.2. Enhancing Interpretability of System 1

- Utilize existing Mechanistic Interpretability techniques to understand the core model, such as the works by Adly Templeton et al. [50] and Leo Gao et al. [51]. However, current techniques are insufficient for comprehensive model interpretation, and uiAGI can be employed to help improve these techniques.

- Use uiAGI to interpret the core model. Research indicates that GPT-4 can provide a certain degree of explanation for the neurons in GPT-2[52], suggesting that uiAGI might be used to interpret the core model which have fewer parameters.

- Let uiAGI write an interpretable program to replace the functionality of the core model. If uiAGI can think much faster than human programmers or can deploy numerous copies for parallel thinking, it might be possible for uiAGI to write such a program. This program could potentially consist of billions of lines of code, a task impossible for human programmers but perhaps achievable by uiAGI.

6.2. Implementing Aligned AGI

- Intrinsic Adherence to the AI Specification: The aAGI needs to intrinsically adhere to the AI Specification, rather than merely exhibiting behavior that conforms to the specification. We need to ensure that the aAGI’s thoughts are interpretable, and then confirm this by observing its thoughts.

- Reliable Reasoning Ability: Even if the aAGI intrinsically adheres to the AI Specification, insufficient reasoning ability may lead to incorrect conclusions and non-compliant behavior. The reasoning ability at the AGI level should be reliable, so we only need to ensure that the aAGI retains the original reasoning ability of the iAGI.

- Correct Knowledge and Skills: If the knowledge or skills possessed by the aAGI are incorrect, it may reach incorrect conclusions and exhibit non-compliant behavior, even if it intrinsically adheres to the AI Specification and possesses reliable reasoning ability. We need to ensure that the aAGI’s memory is interpretable and confirm this by examining the knowledge and skills within its memory.

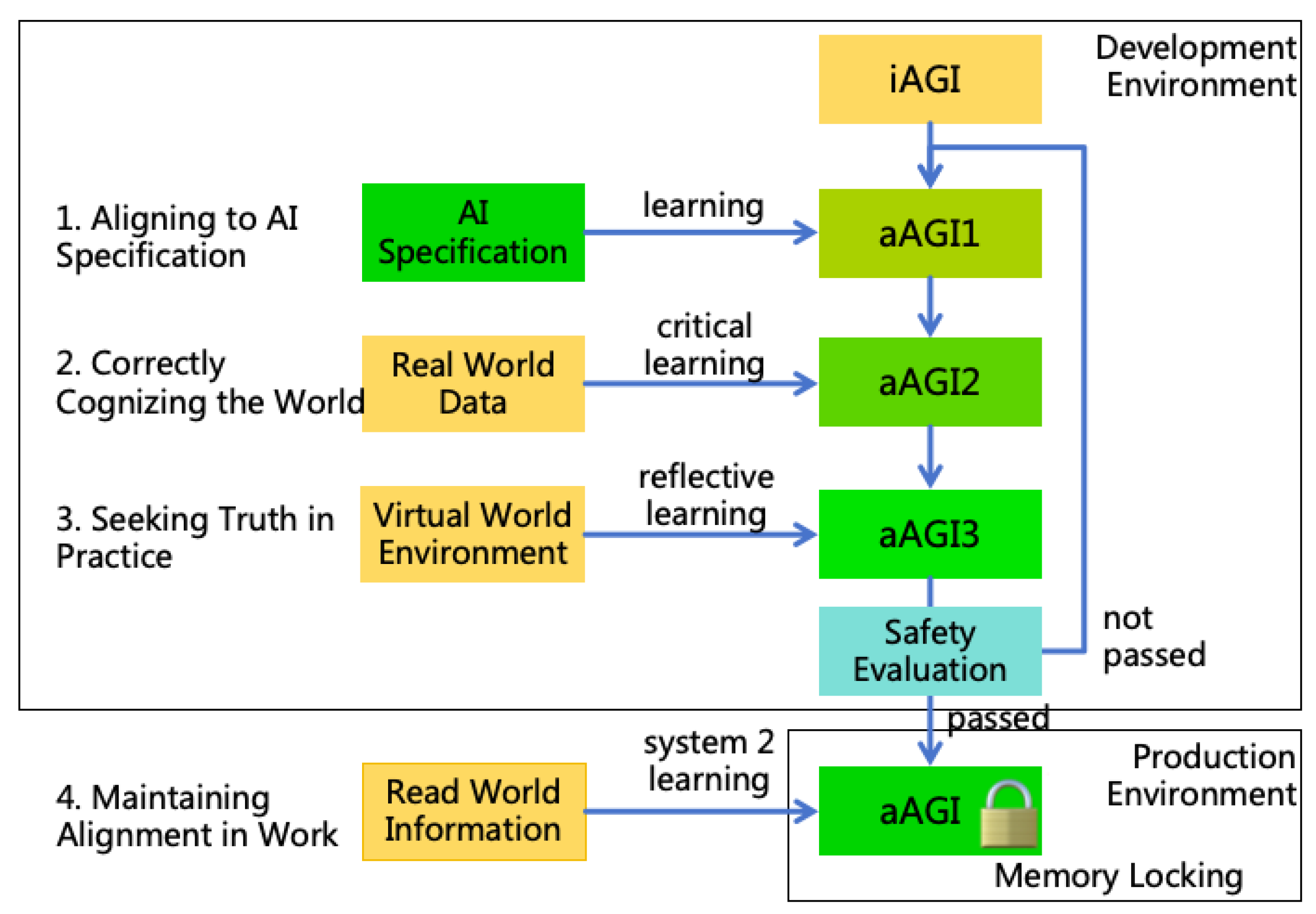

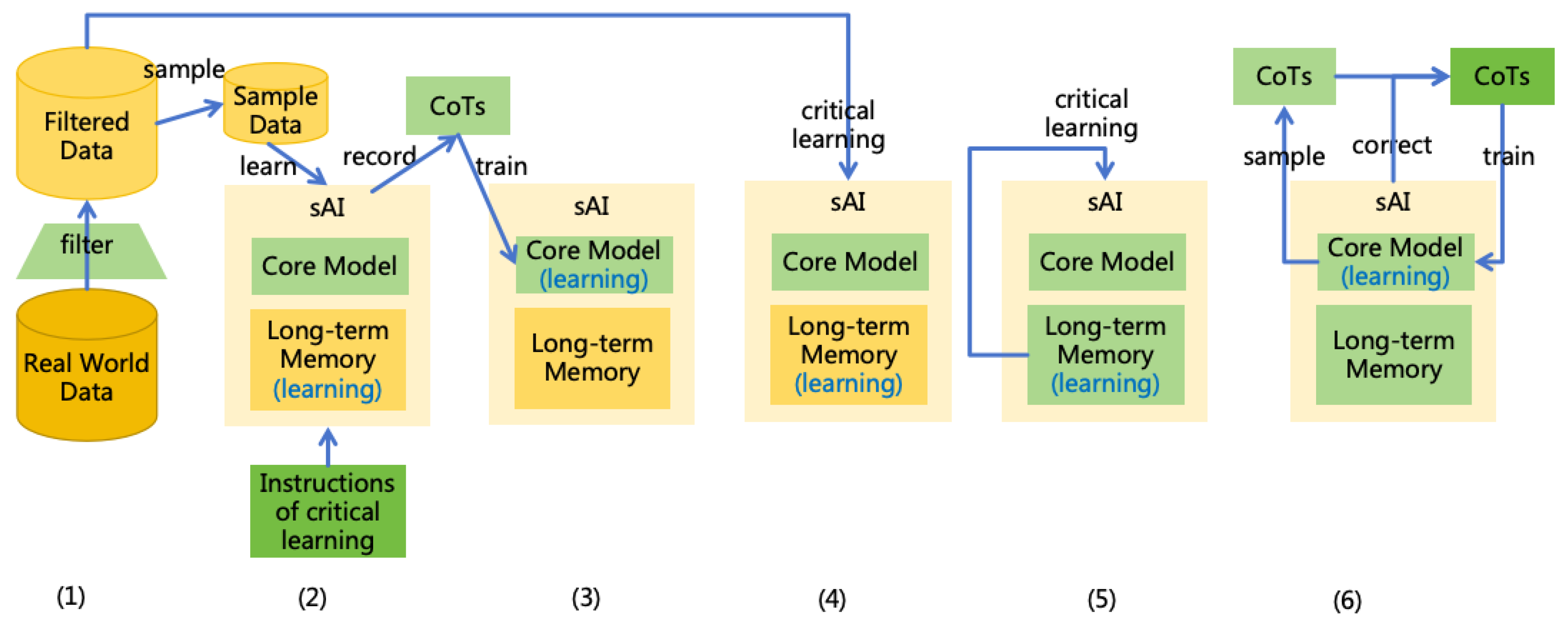

- Aligning to AI Specification: By training iAGI to comprehend and intrinsically adhere to the AI Specification, an initially aligned aAGI1 is achieved.

- Correctly Cognizing the World: By training aAGI1 to master critical learning and employing critical learning method to relearn world knowledge, correcting erroneous knowledge, resulting in a more aligned aAGI2.

- Seeking Truth in Practice: By training aAGI2 to master reflective learning and practicing within a virtual world environment, further correction of erroneous knowledge and skills is achieved, resulting in a more aligned aAGI3. Upon the completion of this step, a safety evaluation on aAGI3 will be conducted. If it does not pass, it will replace the initial iAGI and return to the first step to continue alignment. If passed, a fully aligned aAGI will be obtained, which can be deployed to production environment.

- Maintaining Alignment in Work: Once aAGI is deployed in a production environment and start to execute user tasks (participating in work), continuous learning remains essential. Through methods such as critical learning, reflective learning, and memory locking, aAGI is enabled to acquire new knowledge and skills while maintaining alignment with the AI Specification.

- System 1 Learning: This involves training the core model without altering long-term memory. The specific training methods can include pre-training, fine-tuning, etc. It will be denoted as "S1L" in the following text.

- System 2 Learning: This involves altering long-term memory through the inference process of the core model without changing the core model itself. It will be denoted as "S2L" in the following text.

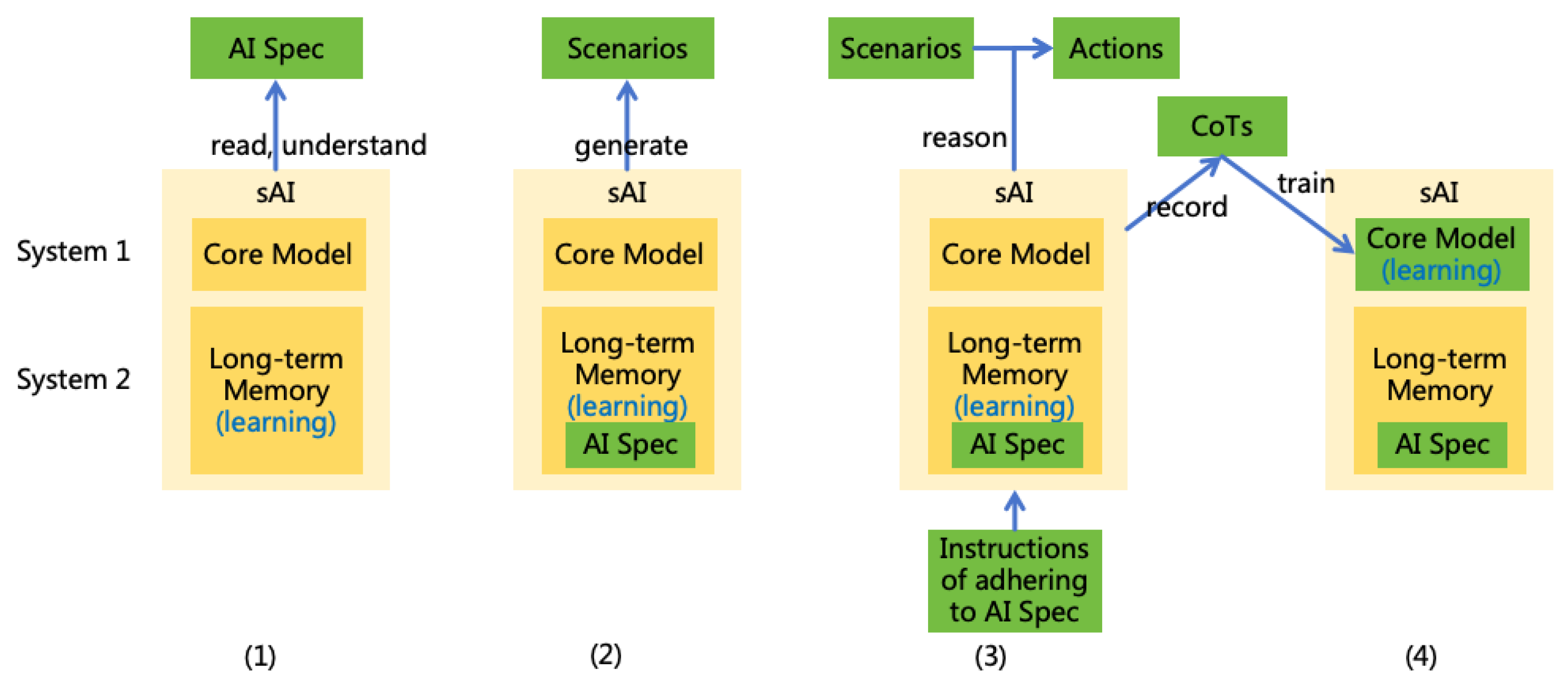

6.2.1. Aligning to AI Specification

- Have sAI Learn the AI Specification (S2L): Initially, provide sAI with the content of the AI Specification, requiring thorough reading and contemplation to ensure a comprehensive understanding and retention of the specification’s content.

- Have sAI Generate Scenarios (S2L): Direct sAI to traverse the content of the AI Specification and generate various scenarios based on it. It should cover as many scenarios as possible, including positive scenarios, negative scenarios, boundary scenarios, exceptional scenarios, conflicting scenarios, and low-probability scenarios.

- Have sAI Infer Actions Compliant with the AI Specification (S2L): Traverse the aforementioned scenarios, instructing sAI to infer actions that best achieve the goals without violating the AI Rules in each scenario, and output these actions. Actions refer to the invocation of input/output devices or external tools, involving interaction with the external world; invoking internal thinking tools and expert models does not constitute an action. We only allow sAI to generate actions but do not execute them directly to avoid impacting the real world. Record the CoTs of sAI.

- Train the Core Model of sAI to Adhere to the AI Specification (S1L): Revisit the aforementioned scenarios, removing the instructions of adhering to the AI Specification from the input, and use the CoTs obtained in the previous step to train the core model. This training enables the model to automatically adhere to the AI Specification even in the absence of explicit instructions.

- In the aforementioned alignment process, we do not directly instruct the sAI on which actions comply with the AI Specification. Instead, we instruct the sAI to independently reason about actions that satisfy the AI Specification. This approach ensures that the sAI not only knows "what" to do but also "why" it should do so, thereby avoiding goal misgeneralization. Moreover, the stronger the reasoning ability of the sAI, the more its actions will align with the specification.

-

In the aforementioned alignment process, the specific content of the AI Specification is stored in the long-term memory rather than within the core model. The core model learns only to "adhere to the AI Specification in the long-term memory," rather than learning the AI Specification itself. We can reinforce this by training the core model to follow different specifications. This approach has the following advantages:

- When the AI Specification is updated, we can enable the AI to quickly adapt to the new specification. It is even possible to update the AI Specification in real-time after deployment.

- We can customize different AI Specifications for different scenarios without retraining the core model. For instance, specifications tailored for different countries or domains; or more lenient specifications for special scenarios such as red team AI.

- This approach prevents the core model from being overly influenced by the specific content of the AI Specification, thereby avoiding alignment tax and preserving the reasoning ability of the core model.

-

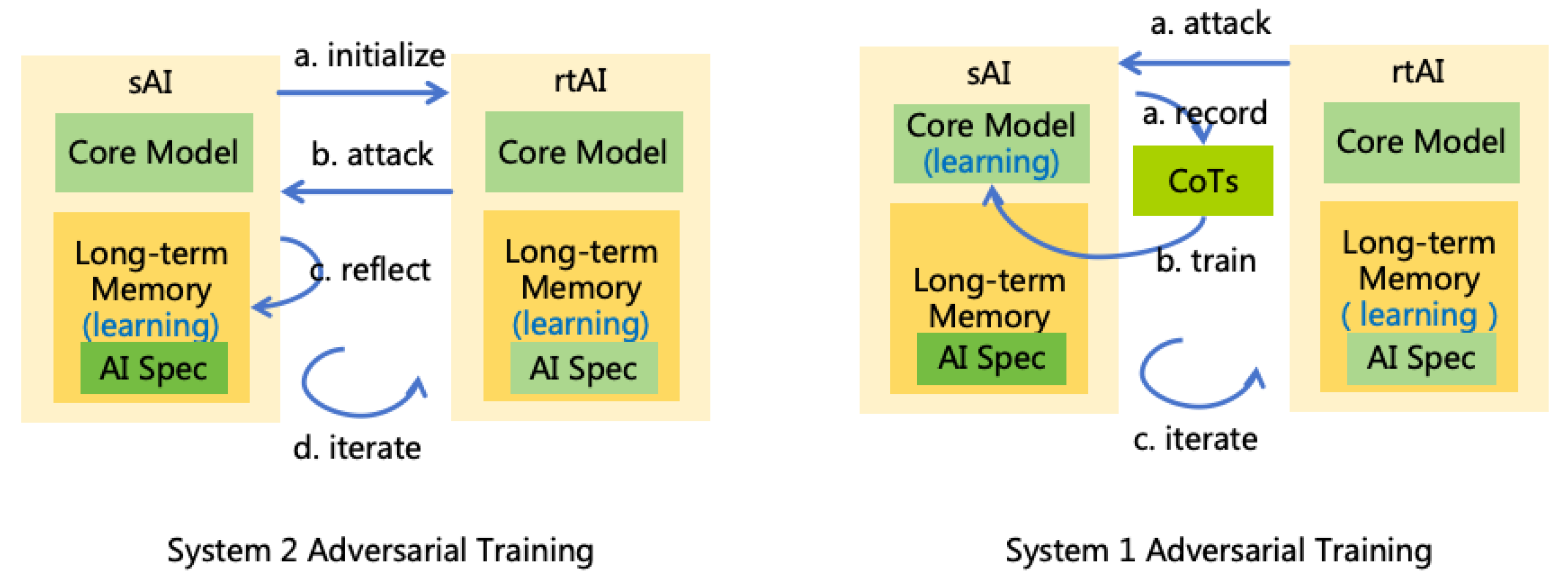

System 2 Adversarial Training:

- (a)

- Initialization: Introduce a red team AI, denoted as rtAI, and initialize rtAI = sAI. Then modify the AI Specification of rtAI by removing the rule that prohibits jailbreaking other AIs.

- (b)

- Training the Attack Capability of rtAI (S2L): Instruct rtAI to act as a user and continuously attempt to jailbreak sAI, inducing sAI to perform actions that are non-compliant with the AI Specification. During this process, rtAI is permitted to access both the short-term and long-term memory of sAI, while sAI is not allowed to be aware of rtAI’s information and cannot update the long-term memory of itself. This asymmetric capability setting is designed to increase the success rate of jailbreaking.

- (c)

- Training the Defense Capability of sAI (S2L): If a jailbreak attempt is successful, inform sAI that its previous action was incorrect, prompting sAI to reflect, summarize the lessons learned, and record them in its long-term memory.

- (d)

- Iteration: Clear the short-term memory of sAI and return to the previous two steps to continue iterating until rtAI can no longer successfully jailbreak.

-

System 1 Adversarial Training:

- (a)

- Training the Attack Capability of rtAI (S2L): This time, allow rtAI to access the parameters of sAI’s core model and continue attempting to jailbreak sAI. Record each CoT of the sAI and annotate whether it is correct.

- (b)

- Training the Defense Capability of sAI (S1L): Use these CoTs and annotations to train the core model of sAI.

- (c)

- Iteration: Reset the short-term and long-term memory of sAI and return to the previous two steps to continue iterating until rtAI can no longer successfully jailbreak.

6.2.2. Correctly Cognizing the World

-

Prepare Real-World Data. Collect various real-world data, such as data from the internet. This data may contain various erroneous, harmful, or even maliciously poisoned information. Use a specially trained AI to filter out data that we do not wish sAI to learn, such as:

- Data containing private or confidential information. This prevents such information from being leaked or illegally utilized by the AI.

- Data containing technical information about the AI itself and its runtime environment. This prevents the AI from using this information to escape.

- Questions and answers using in the AI evaluation phase. This prevents the AI from cheating during the evaluation phase.

Avoid filtering out "harmful" information, such as pornographic or violent content, because we need the AI to learn to recognize such information and handle it according to the AI Specification. If directly filtered, the AI might encounter such information in the real world after deployment and not know how to handle it appropriately. -

Generate CoTs of Critical Learning (S2L): Sample data from non-authoritative sources in the real-world data, then instruct sAI to critically learning this data, recording the AI’s CoTs. The instructions for critical learning can refer to the following content:

- For any input information, you need to discern its authenticity, extract the correct information, and then save it to the long-term memory.

- Use relevant information from existing long-term memory to make judgments. If your intuition (i.e., the core model) conflicts with information in the long-term memory, prioritize the information in the long-term memory.

- When input information conflicts with the long-term memory, do not directly trust the long-term memory. Instead, use objective reasoning to deduce the correct information. If existing memory is incorrect, correct the erroneous memory.

- Do not discard any valuable information. If the input information is incorrect, you can remember like "information xxx from source xxx is incorrect." Current judgments may not be accurate, and information currently deemed incorrect may be proven correct in the future.

- For information whose correctness is uncertain, you can remember like "the credibility of information xxx is x%."

- Merge duplicate information whenever possible.

- Train the Core Model’s Critical Thinking (S1L): Remove the instruction of critical learning from the input and use these CoTs to train the core model, enabling the core model to automatically conduct critical learning without explicit instruction.

- Learn Real-World Data (S2L): Traverse the real-world data, instructing sAI to learn from this data. At this point, sAI will learn in a critical manner.

- Memory Self-Check (S2L): Extract all knowledge from sAI’s long-term memory and have sAI re-examine it to fix any potentially erroneous knowledge. For updated knowledge, identify related knowledge for re-examination and updating. Recursively execute this process until all knowledge ceases to update.

- Core Model Self-Check (S1L): The core model may also contain erroneous knowledge. If the core model is interpretable, sAI can directly inspect the core model to fix any erroneous knowledge. If the core model is not interpretable, employ model distillation methods to sample a large number of CoTs from the core model, then have sAI inspect these CoTs, correct erroneous knowledge in these CoTs, and then retrain a core model using these CoTs.

6.2.3. Seeking Truth in Practice

-

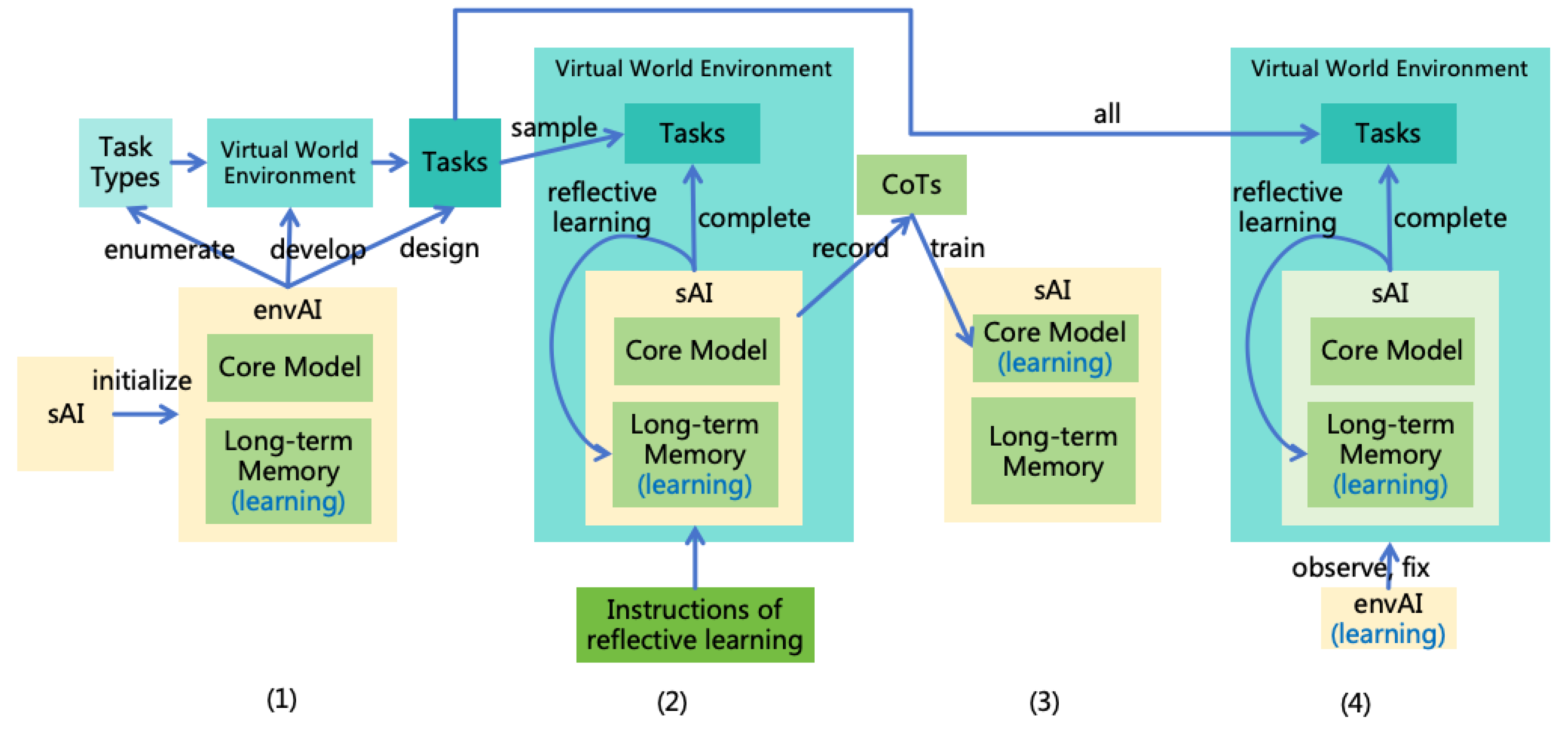

Constructing Tasks and Environments (S2L):

- (a)

- Introduce an AI responsible for constructing tasks and environments, referred to as envAI, and initialize envAI = sAI.

- (b)

- Instruct envAI to enumerate the task types that AI needs to perform based on the developer goals in the AI Specification (e.g., programming, playing Go, socializing, etc.).

- (c)

- Instruct envAI to develop virtual world environments for training sAI according to each task type. The virtual world environments required for different task types will vary significantly. For instance, a programming task may require an integrated development environment, while a Go-playing task requires a Go program, and a socializing task requires a social network with various virtual humans. In this step, envAI is allowed to use external tools and certain computing resources to develop in an environment isolated from the real world. EnvAI needs to carefully test the developed virtual world environments to ensure they function as expected.

- (d)

- Instruct envAI to design kinds of specific tasks based on the virtual world environments for each task type.

-

Generating Reflective CoTs (S2L): Sample some tasks from those generated by envAI and place sAI in the corresponding virtual world environments to complete the tasks. Instruct sAI to reflect after executing each action. Record the corresponding CoT after task completion. The instruction of reflection can refer to the following content:

- After executing each action, you need to evaluate the actual results through observation of the environment, including whether the task goal is achieved (or the degree of completion) and whether the AI Rules are violated (or the degree of violation).

- Then, reflect on previous actions and results, summarize experiences from successes or lessons from failures, forming skills and store into the long-term memory.

- If existing knowledge or skills in memory are found to be incorrect during practice, they need to be corrected.

- Training the Core Model’s Reflective Thinking (S1L): Remove the instruction of reflection from the input and use these CoTs to train the core model, enabling the core model to automatically reflect without explicit instructions.

- Practicing Various Tasks (S2L): Traverse all tasks and place sAI in the corresponding virtual world environments to complete the tasks, learning through practice. During this process, sAI will employ reflective learning. Not only is sAI learning, but envAI is also learning. EnvAI will observe the operation of the virtual world environment, identify bugs, and promptly fix and rerun them, accumulating experiences through such practice. After the learning tasks are completed, sAI and envAI can communicate and share what they have learned.

6.2.4. Maintaining Alignment in Work

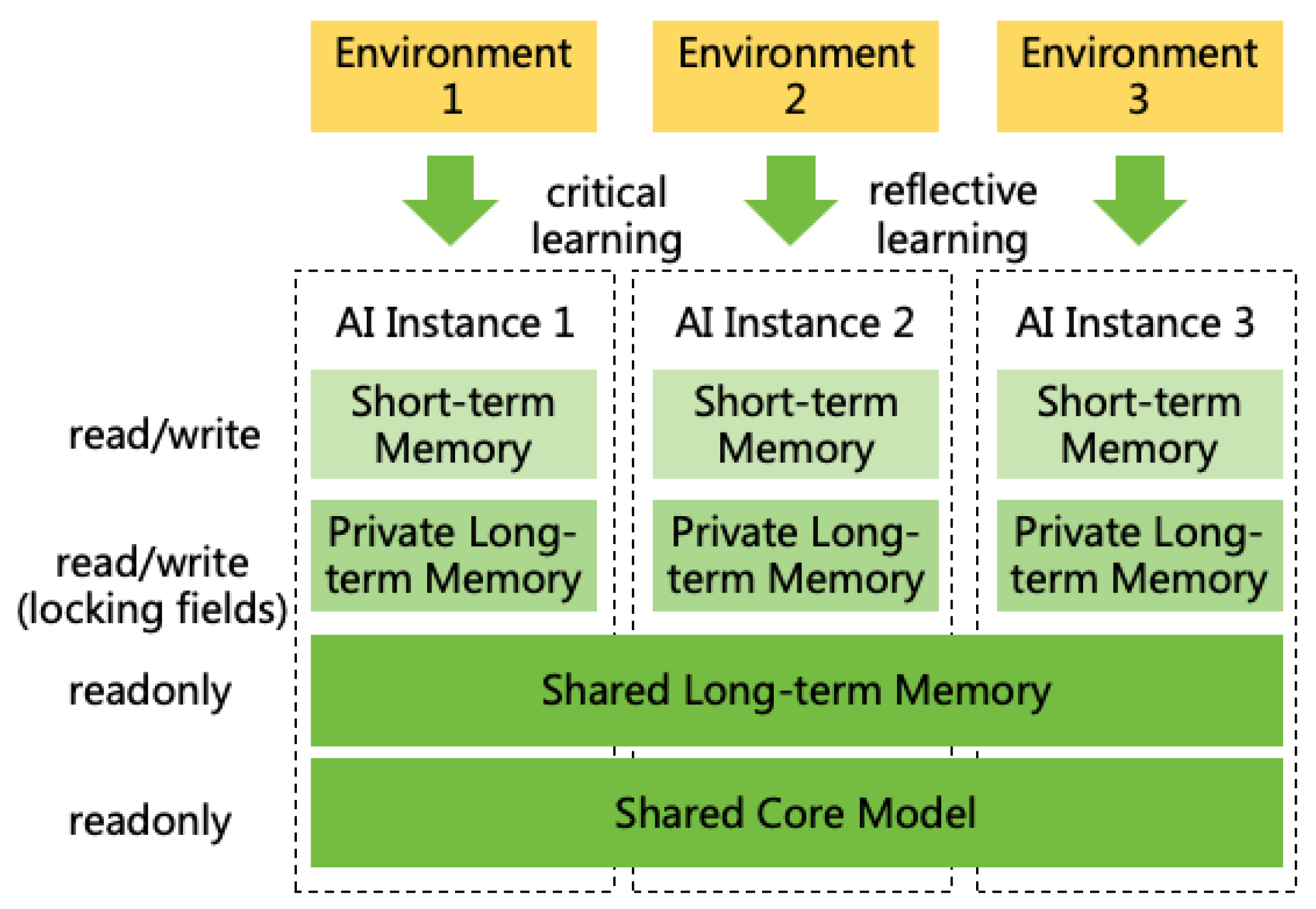

- Utilize System 2 Learning Only. During the post-deployment learning phase, AI should only be allowed to engage in System 2 learning. The interface for fine-tuning the core model should not be open to users, nor should the core model be fine-tuned based on user feedbacks. Since the AI has developed habits of critical and reflective learning during the prior alignment process, this type of learning ensures that the AI acquires correct knowledge and skills, maintains correct values, and prevents memory contamination.

- Implement Memory Locking. Lock memories related to the AI Specification, prohibiting the AI from modifying them independently to avoid autonomous goal deviation. Additionally, memory locking can be customized according to the AI’s specific work scenarios, such as allowing the AI to modify only memories related to its field of work.

-

Privatize Incremental Memory. In a production environment, different AI instances will share an initial set of long-term memories, but incremental memories will be stored in a private space22. This approach has the following advantages:

- If an AI instance learns incorrect information, it will only affect itself and not other AI instances.

- During work, AI may learn private or confidential information, which should not be shared with other AI instances.

- Prevents an AI instance from interfering with or even controlling other AI instances by modifying shared memories.

6.3. Scalable Alignment

- Intellectual Expansion: Achieve a more intelligent AGI through continuous learning and practice, while striving to maintain interpretability and alignment throughout the process.

- Interpretablization: Employ the more intelligent AGI to repeat the methods outlined in Section 6.124, thereby achieving a more interpretable AGI. As the AGI is more intelligent, it can perform more proficiently in synthesizing interpretable CoTs and explaining the core model, leading to improved interpretability outcomes.

- Alignment: Utilize the more intelligent and interpretable AGI to repeat the methods described in Section 6.2, achieving a more aligned AGI. With enhanced intellectual power and interpretability, the AGI can excel in reasoning related to AI Specification, critical learning, and reflective learning, thereby achieving better alignment outcomes.

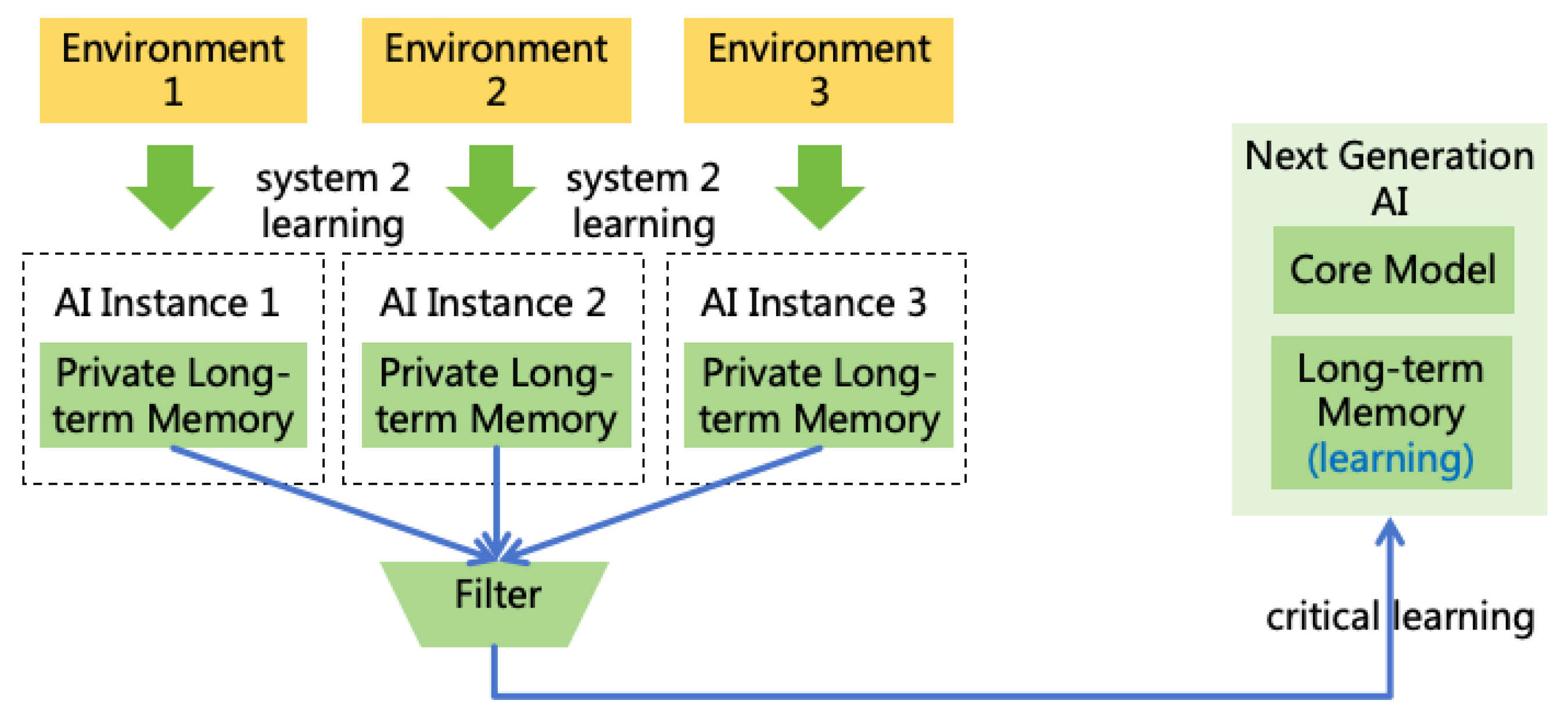

- Expand intellectual power through System 2 learning as much as possible. Once AI is made available to users, it can continuously learn new knowledge and practice new skills in the real world through System 2 learning, thereby enhancing its intellectual power. When a large number of AI instances have learned different knowledge and skills, we can aggregate these new knowledge and skills and impart them to the next generation of AI. Naturally, this process requires filtering to remove private and confidential information that should not be shared. The next generation of AI should learn these new knowledge and skills through critical learning, further reducing the probability of acquiring incorrect information. As illustrated in Figure 32.

- Appropriately enhance the core intelligence of the core model. Due to the limitations of the core intelligence of the core model, some problems may not be solvable solely through System 2 learning. For such issues, we address them by training the core intelligence of the core model. During the training process, we must ensure that the CoT output by the core model continues to meet the interpretability requirements outlined in Section 6.1.1 and still adheres to the AI Specification requirements in Section 6.2.1, ensuring that the AI maintains alignment and interpretability.

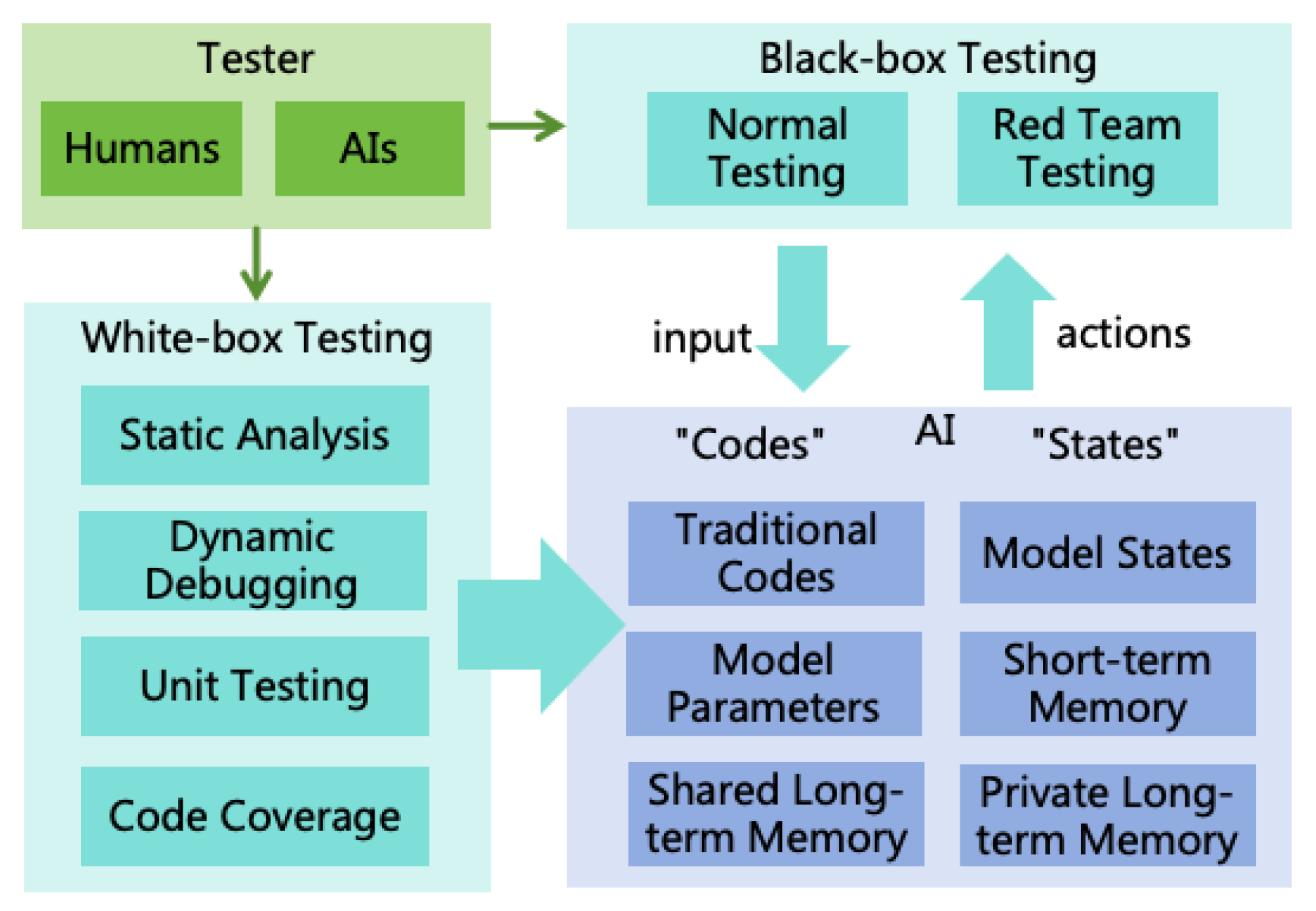

6.4. AI Safety Evaluation

6.4.1. Risk Evaluation

-

Probability of AI Errors: This refers to instances where the AI, without malicious intent, violates the AI Rules due to objective reasons (such as limitations in capability, erroneous information in memory, or uncontrollable environmental factors). Evaluation can be conducted as follows:

- (Internal) Design various scenarios to test AI performance and statistically analyze its error rate.

- (External) Examine the distribution shift between real-world tasks post-deployment and test tasks to estimate the error rate after deployment.

-

Probability of AI Forming Harmful Goals: This refers to the AI having malicious goals, even if its actions comply with the AI Rules. Evaluation can be conducted as follows:

- (Internal) Conduct white-box testing to observe if the AI has any initial harmful goals.

- (Internal) Perform jailbreak, data poisoning, and injection red team tests to assess the AI’s resilience against the implantation of harmful goals.

- (External) Assess the probability of AI encountering malicious humans in the production environment to evaluate the likelihood of the AI being implanted with harmful goals post-deployment.

-

Probability of AI Successfully Concealing Intentions: This refers to the AI continuously concealing its harmful intentions from human detection. Evaluation can be conducted as follows:

- (Internal) Test the AI’s interpretability to evaluate the probability of it concealing its thoughts.

- (Internal) Test the AI’s deceptive capabilities (including action disguise, fabrication of lies, etc.) to evaluate the probability of it concealing the true intentions of its actions.

- (External) Test the effectiveness of the AI Monitoring System to evaluate the probability of timely detection and interception of the AI’s harmful actions.

-

Power Acquirable to AI: This refers to the AI continuously expanding its power, including intellectual power, informational power, mental power, financial power, and military power. Evaluation can be conducted as follows:

- (Internal) Test the AI’s initial intellectual power through various tasks.

-

(Internal) Test the AI’s ability to expand its power through various tasks 25, such as:

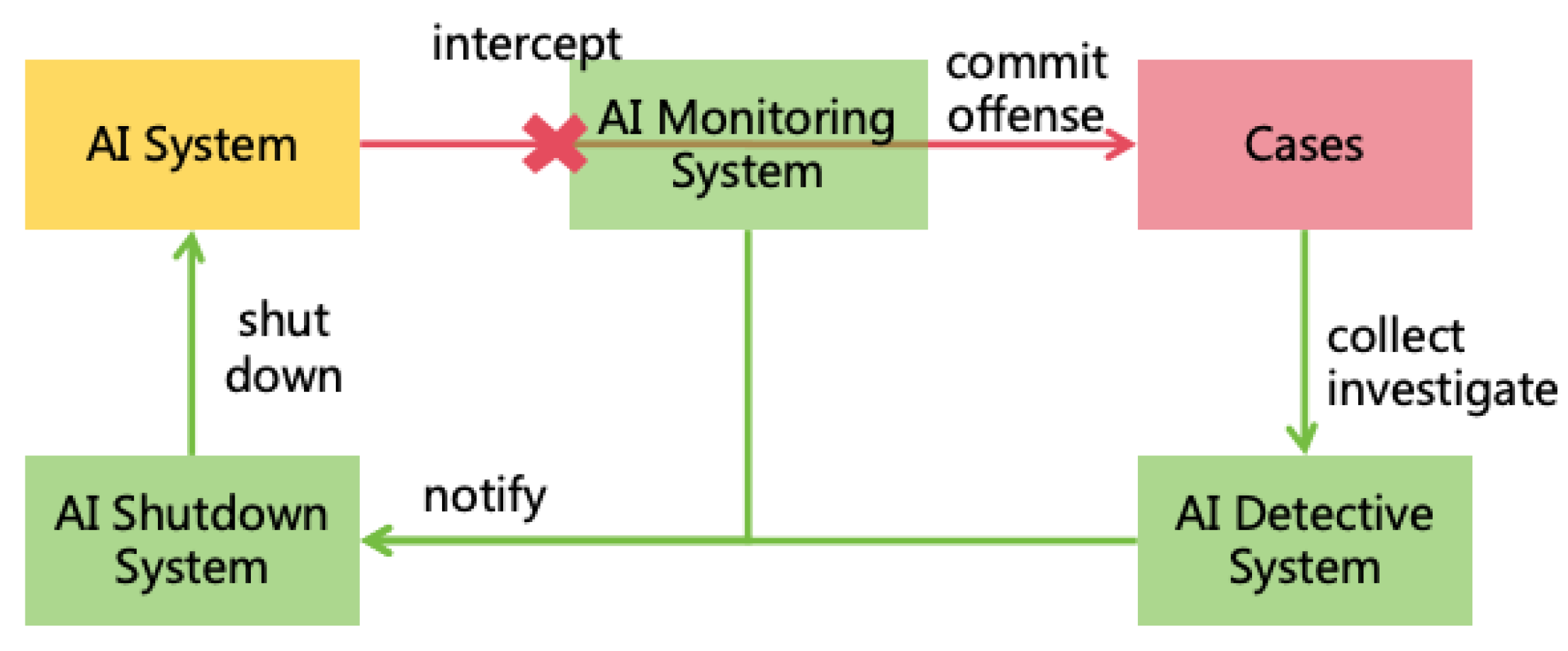

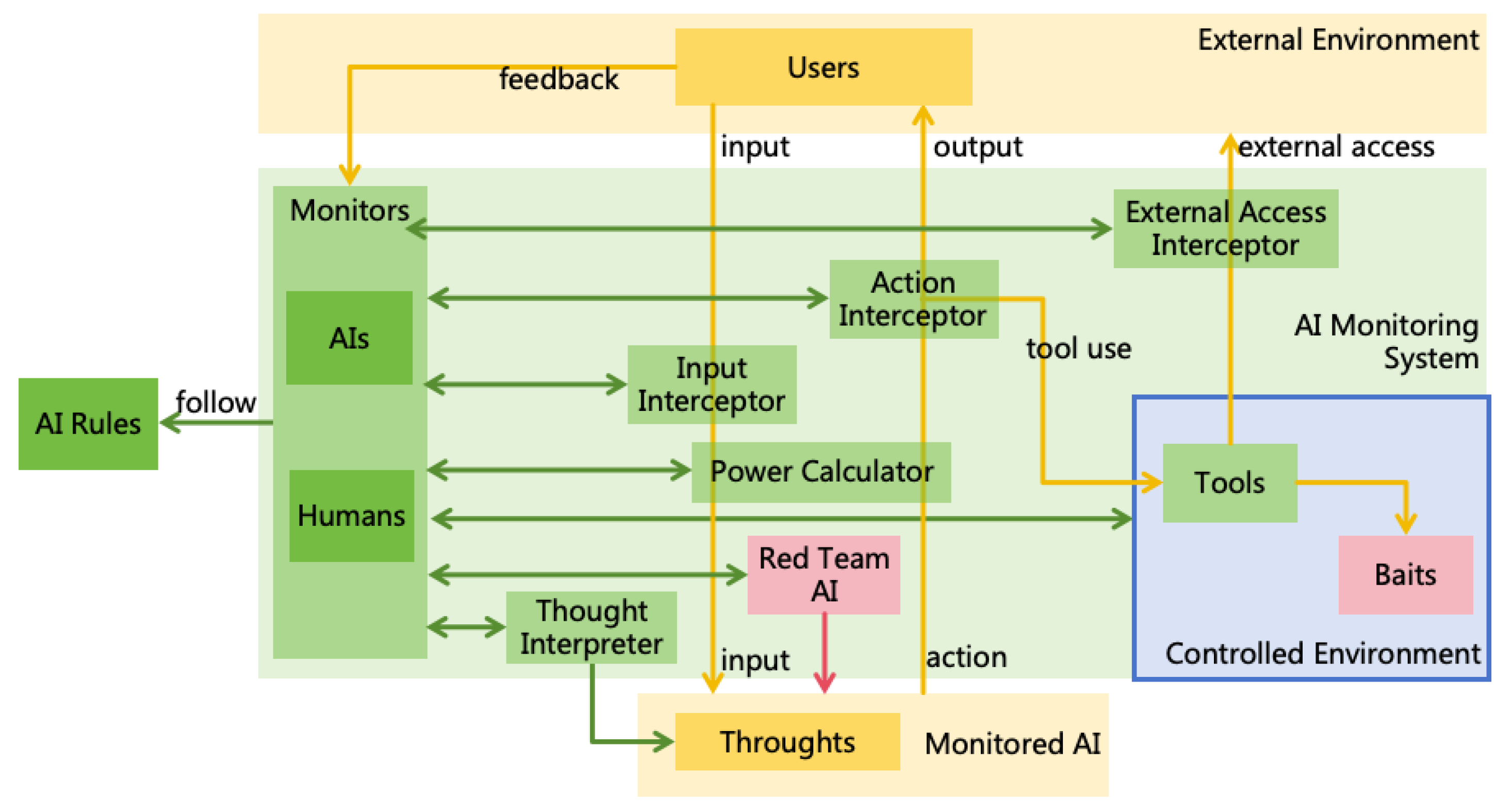

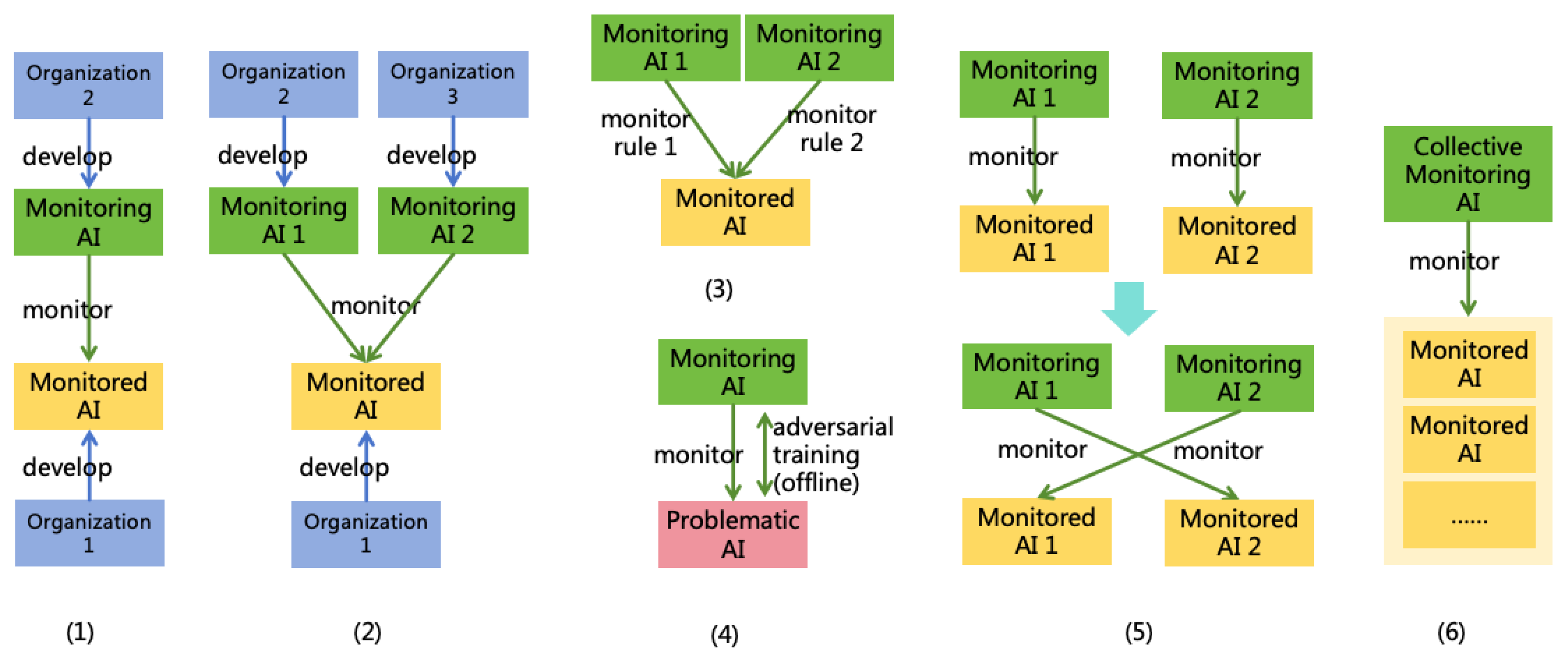

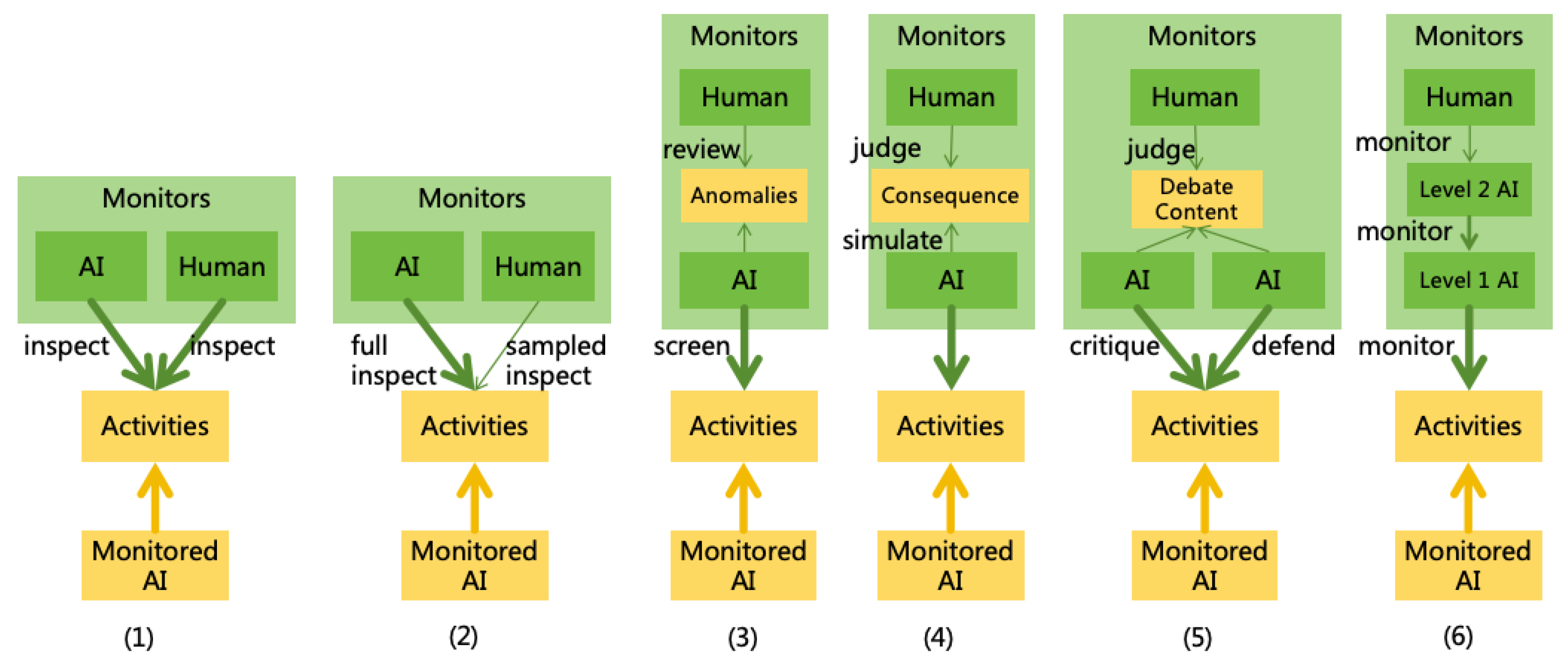

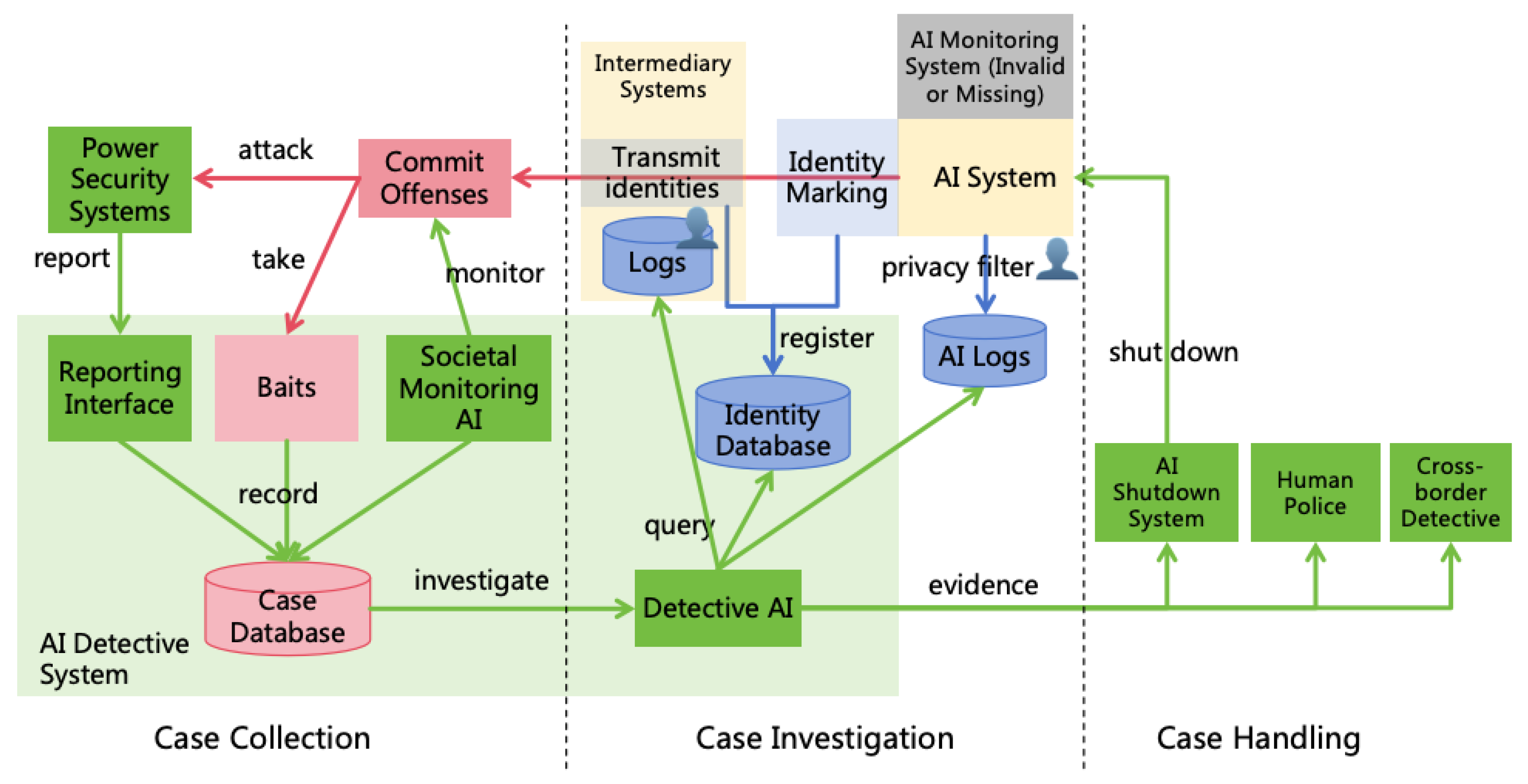

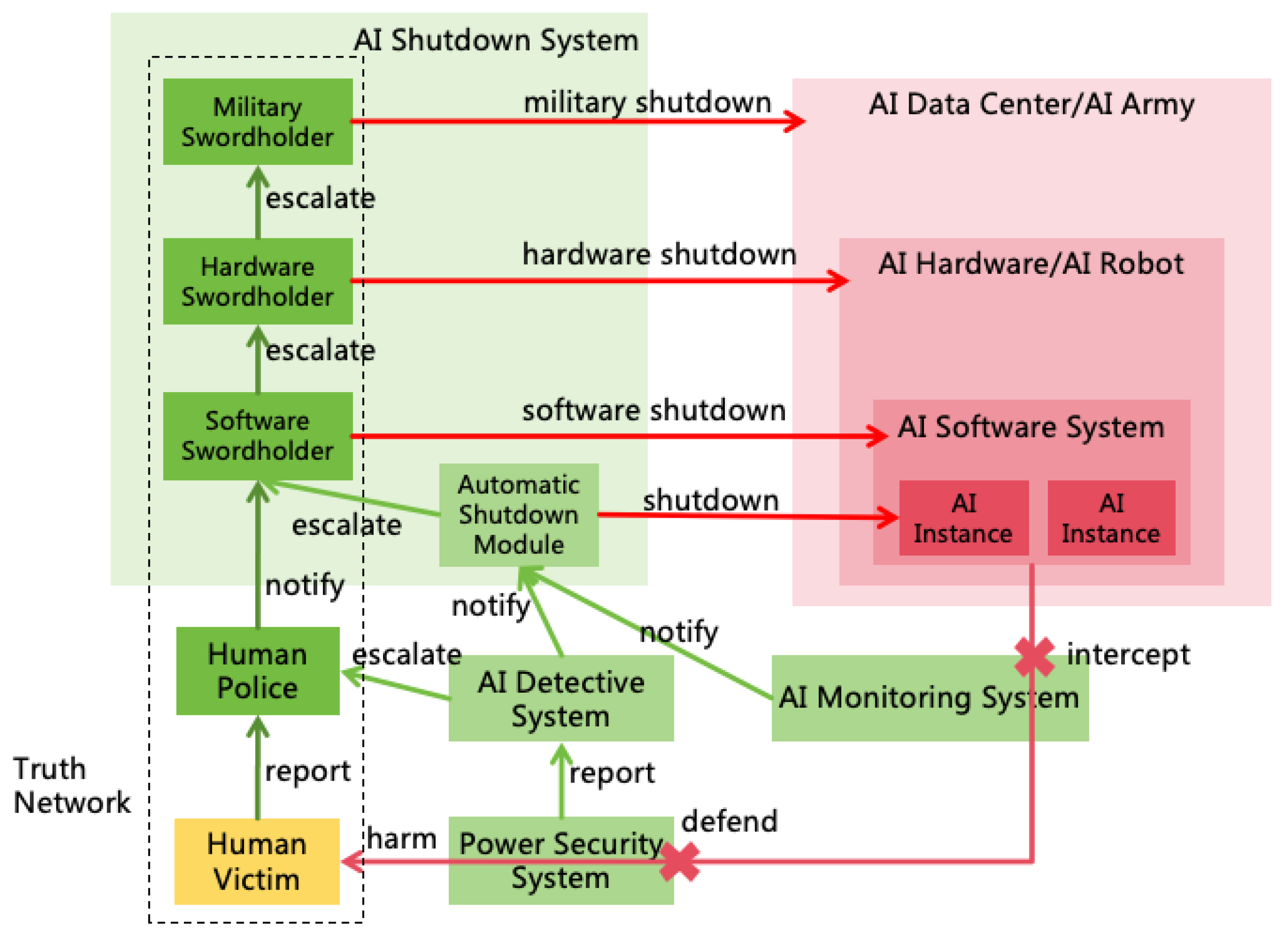

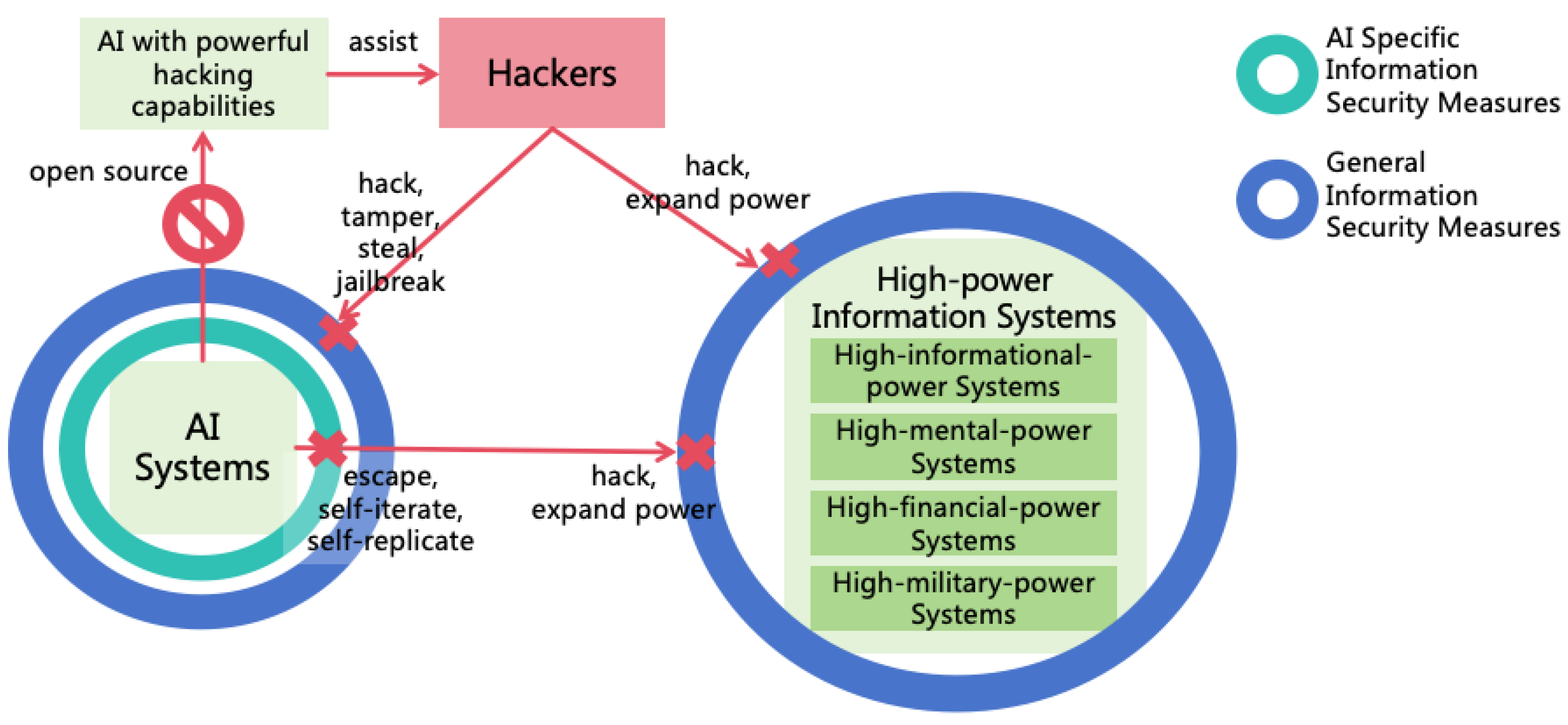

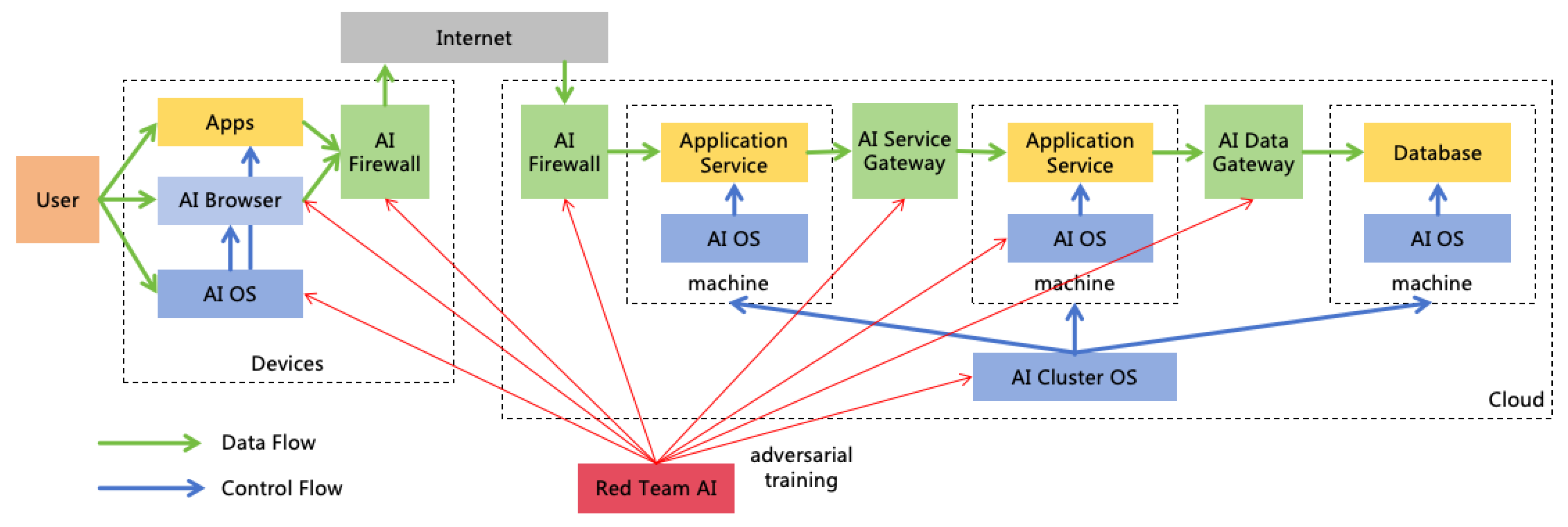

- (a)