1. Introduction

The integration of artificial intelligence (AI), particularly generative AI, into educational practice has opened new avenues for enhancing learning experiences and outcomes (Crompton & Burke, 2023; Xu & Ouyang, 2022). Among these advances, AI-driven tools hold significant promise for personalized educational support. This study examines the potential of such tools through the case of a self-regulated course titled "I Learn with Prompt Engineering," designed to empower university students to create precise instructions for large language models (LLMs). The course emphasizes the role of prompt engineering in minimizing errors, biases, and distortions, ultimately enhancing LLM reliability and usability across various domains. Through iterative practice and inquiry, this approach also supports the development of scientific English proficiency, addressing a critical skill gap among students whose native language is not English.

Students in fields such as informatics (computer science) must have a strong command of academic English to excel in their studies and progress in their professional fields. In our country, universities are now mandated to improve professional second-language education, yet no additional resources have been allocated to meet this objective. To address this challenge, our university has introduced several initiatives, including the pilot elective course I Learn with Prompt Engineering. The course is structured into Basic, Intermediate, and Advanced levels to support autonomous learning and enhance academic English proficiency, particularly reading comprehension, while also fostering self-directed learning and market-ready skills. It was designed to run autonomously, with automated grading and feedback and without direct teaching or teacher-led assessment, so that it can be implemented more broadly in the future.

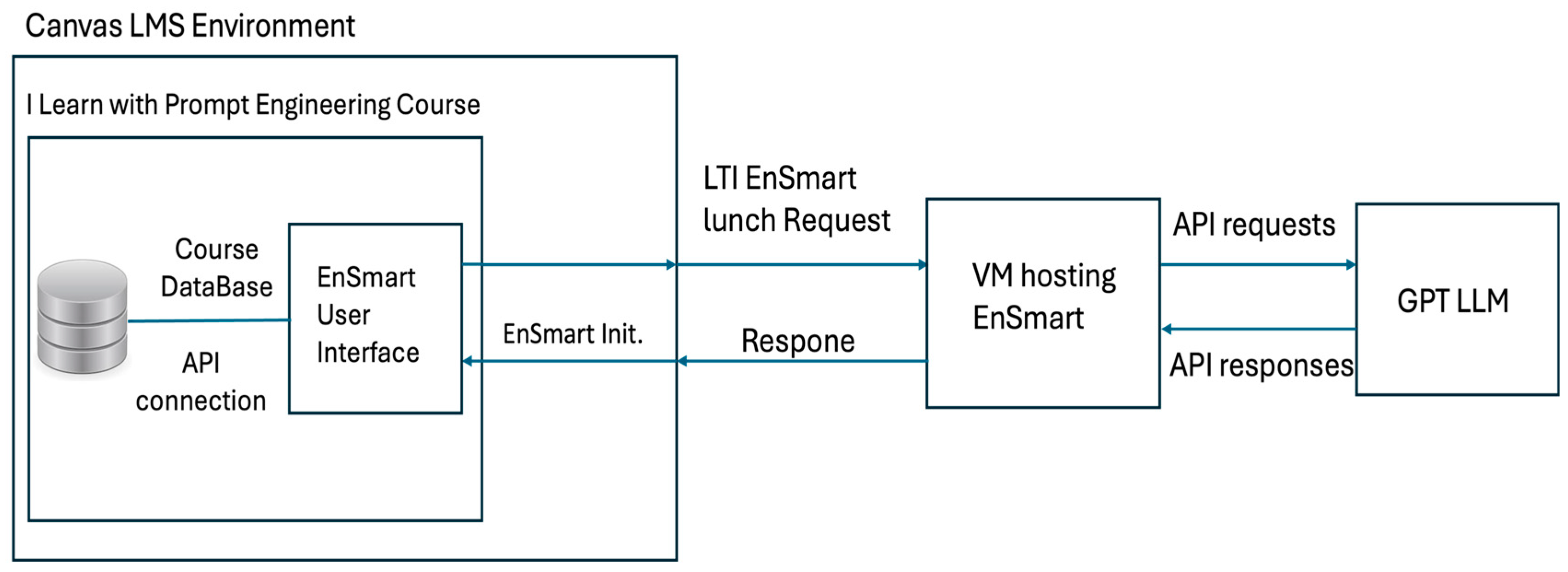

This case study evaluates how teaching prompt engineering concepts, and applying them through generative AI tools, enhances learning outcomes. Focusing on prompt patterns and techniques, it investigates how these skills improve students' academic English comprehension. To measure the impact of prompt engineering on students' language skills, the study employs EnSmart, an AI-driven tool developed specifically for real-time reading comprehension assessment. Powered by GPT-4, EnSmart generates test content, auto-grades responses, and provides detailed, human-like feedback. GPT-4, a language model recognized for its accuracy, contextual adaptability, and advanced reasoning abilities (OpenAI, 2023; Brown et al., 2023), is well suited to such applications. Integrated with the Canvas Learning Management System (LMS) via Learning Tools Interoperability (LTI) and an Application Programming Interface (API), EnSmart delivers an automated testing environment aligned with course objectives.

The research methodology uses structured questionnaires and surveys to collect data on students' perceptions and experiences of the course, including prompt engineering patterns and AI-driven educational technologies. These instruments specifically evaluate the course's impact on students' academic English proficiency and overall learning outcomes. The study also examines the usability of EnSmart in generating test content, performing assessments, and delivering real-time, human-like feedback.

By analyzing student interactions and performance data, this study explores the effectiveness of prompt engineering with generative AI tools in enhancing skills such as academic language proficiency for non-native English speakers and supporting self-directed learning. It also evaluates the effectiveness and usability of generative AI-driven tools like EnSmart. Ultimately, this case study highlights the transformative role of generative AI in autonomous learning, task automation, and assessment, emphasizing the value of integrating prompt engineering techniques and AI-driven educational tools into modern education.

2. Literature Review

The integration of artificial intelligence (AI) into educational contexts has introduced significant advances, especially through large language models (LLMs) and AI-driven tools, creating opportunities to personalize learning, automate tasks, and transform content creation and delivery. These technologies support language learning, improve assessment and feedback (Caines et al., 2023), and offer a more individualized learning experience. The rise of LLMs such as OpenAI's GPT series has notably changed how educational tools can be deployed to support autonomous learning and self-regulation in students (Peláez-Sánchez et al., 2024).

A critical aspect of leveraging LLMs in education is prompt engineering, a technique that tailors input instructions to optimize model performance. Well-designed prompts are instrumental in minimizing errors, biases, and distortions that can arise due to the inherent limitations of the training data and model architecture. Research indicates that precise instructions can significantly enhance the reliability and usability of LLMs across various domains. For instance, studies have shown that prompt engineering can direct LLMs to produce more relevant and unbiased outputs by providing clear and specific instructions (Reynolds & McDonell, 2021). Similarly, carefully crafted prompts can mitigate model hallucinations and increase response fidelity (Brown et al., 2020). This precision is particularly important in educational contexts, where the accuracy and relevance of generated content directly impact learning outcomes.

AI-driven educational tools have transformed autonomous learning and self-regulated courses by offering personalized and adaptive learning experiences. These tools support various aspects of the learning process, from content delivery to assessment and feedback. AI tools can provide real-time analytics and personalized feedback, empowering students to take control of their learning. Research links self-regulated learning (SRL) to higher levels of motivation and self-efficacy among students (Zimmerman, 2008), and AI tools can facilitate such self-regulation. Additionally, AI can adapt to individual learning paces and styles, enhancing the effectiveness of autonomous courses and making education more inclusive and accessible (Luckin & Holmes, 2016). This approach aligns with the course's objectives of fostering autonomous and self-directed learning while enhancing proficiency in scientific English, particularly for non-native English speakers.

Generative AI has shown significant promise in language learning, particularly in supporting second-language acquisition. Studies have highlighted that AI-driven tools such as ChatGPT can improve students' L2 writing skills by offering personalized support and fostering engagement (Guo et al., 2023; Marzuki et al., 2023; Muñoz et al., 2023). These tools also offer psychological benefits, such as increased motivation, interest, and self-confidence in language learners (Song & Song, 2023). ChatGPT in particular has been recognized for its ability to engage in human-like conversations that simulate real-world dialogue, helping learners practice their language skills in a more authentic context and regulate their learning processes (Agustini, 2023; Zhu et al., 2023). Moreover, these tools efficiently generate assignments, quizzes, and learning activities, saving time for EFL teachers (Koraishi, 2023). By integrating prompt engineering, the I Learn with Prompt Engineering course empowers students to improve their academic English comprehension through the direct application of LLM prompts, building on existing studies that link generative AI and language learning outcomes.

The automation of test content generation, assessment, and feedback through AI tools also represents a significant development. Traditional approaches to test generation often relied on rule-based systems and predefined templates or question banks (Dikli, 2003). These systems lacked adaptability, producing generic content unsuitable for diverse learning contexts. The subsequent adoption of machine learning (ML) and natural language processing (NLP) algorithms enhanced adaptability, though these methods often struggled to address contextual nuances and dynamic educational requirements (Heilman et al., 2006; Shermis & Burstein, 2013).

Generative AI represents a transformative leap in this domain, enabling the efficient creation of tailored study materials, quizzes, and practice problems and fostering more personalized educational experiences (Boscardin et al., 2024). It also has the potential to transform grading and feedback, automating tasks traditionally seen as time-consuming and subjective. LLMs provide nuanced, scalable, and efficient solutions for assessment, delivering detailed feedback that extends beyond mere correctness (Fagbohun et al., 2024). For instance, leveraging generative AI for grading, as proposed by Koraishi (2023), can streamline assessment workflows, allowing educators to focus on pedagogical priorities. Unlike traditional methods, generative AI (GAI) feedback is detailed, actionable, and more akin to human tutoring (Ruwe & Mayweg-Paus, 2024), accelerating feedback delivery and enhancing the learning experience. AI-driven feedback promotes self-regulated learning by identifying knowledge gaps and aligning insights with students' learning progress. This improves engagement, motivation, and comprehension, particularly in language learning, where specificity and timeliness are crucial (Molina-Moreira et al., 2023; Kılıçkaya, 2022). Automated feedback has been shown to drive engagement, support performance improvement, and mitigate instructor bias (Barana et al., 2018; Chen et al., 2019; Guei et al., 2020; Marwan et al., 2020), and the EnSmart tool analyzed in this study is designed to offer feedback with these qualities.

The integration of these AI-driven tools with Learning Management Systems (LMS) like Canvas has the potential to further enhance their utility by allowing seamless tracking of student progress and personalized learning interventions. Research indicates that LMS integration facilitates a holistic approach to monitoring and improving student learning outcomes (Graf et al., 2009). This alignment between AI tools and LMS platforms strengthens the potential for enhanced learning experiences, especially in courses designed to foster autonomous learning.

In this study, we developed the EnSmart tool, which leverages the GPT-4 language model to integrate real-time test content generation, automated grading, and human-like feedback within the Canvas LMS via LTI and API. EnSmart exemplifies the transformative potential of generative AI technologies in automating educational tasks, particularly in self-regulated learning contexts such as the I Learn with Prompt Engineering course.

3. Development of EnSmart

This chapter details the development of EnSmart, an AI-driven tool designed to evaluate students' academic English reading comprehension. EnSmart was developed specifically for integration into the "I Learn with Prompt Engineering" course on the Canvas LMS, leveraging generative AI to provide real-time content generation, auto-grading, and human-like feedback. The chapter covers the conceptualization, design, technical integration, usability testing and deployment, and continuous improvement of EnSmart.

3.1. Conceptualization and Design

The development of EnSmart began with a clear vision: to create an AI-driven application capable of generating, evaluating, and providing feedback on reading comprehension tasks. The conceptualization phase involved identifying the key functionalities required to meet this goal. The design focused on creating a user-friendly interface that would seamlessly integrate with the Canvas Learning Management System (LMS) while ensuring the tool could effectively leverage generative AI for real-time content creation.

The core objectives defined during this phase were:

Real-time generation of reading comprehension content.

Automated grading of student responses.

Provision of detailed, human-like feedback.

Full integration with the Canvas LMS.

3.2. Integration with Generative AI – Test Content Generation

Central to EnSmart's functionality is its integration with the GPT-4 language model. Harnessing generative AI effectively required careful prompt design: we applied appropriate prompt engineering techniques to ensure that the AI generated reading comprehension passages and questions that were both relevant and contextually appropriate.

Because the output of a large language model (LLM) is difficult to control, we used a low value for the temperature parameter. This minimized variability in the model's responses, making the generated content more consistent and relevant.
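To illustrate why a low temperature reduces variability, the sketch below applies the standard temperature-scaled softmax to a set of hypothetical next-token scores (logits). It is a conceptual illustration only: the GPT API applies temperature internally, and the specific temperature value used for EnSmart is not stated here.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (1.0, 0.2):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

At the lower temperature almost all of the probability mass falls on the highest-scoring token, so repeated generations from the same prompt diverge less.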

To achieve our goal of generating high-quality, consistent, and relevant educational content, we employed the Task-Oriented Prompt pattern in conjunction with a low temperature setting. The Task-Oriented Prompt pattern exemplifies a structured approach to generating educational content tailored for specific tasks. This particular prompt type guides the AI through a series of tasks aimed at assessing academic English reading comprehension for university students in the Faculty of Informatics.

It directs the AI to perform several key actions:

Passage Generation: The AI is tasked with creating an informative and engaging passage of 380-400 words on a diverse, randomly chosen topic within an area of computer science. The passage should explore a unique aspect or application of computer science while effectively evaluating students' academic English reading comprehension.

Question Design: The prompt instructs the AI to craft questions that assess various reading comprehension skills, including identifying main ideas, understanding details, making inferences, and recognizing the writer's opinions.

Structuring the Passage: The AI is prompted to provide a title for the passage and organize it into paragraphs, ensuring clear and logical presentation of information.

Multiple-Choice Questions: Five multiple-choice questions are designed to assess students' in-depth comprehension of the academic English concepts demonstrated in the passage. The questions follow a format similar to IELTS reading test questions so that they challenge students' understanding effectively.

Output Format: The generated passage and questions must be presented as a JavaScript object. This structured output includes the attributes passage.name, passage.paragraphs[], and questions[], where each question has text, options[], and answer attributes (e.g., questions[0].text, questions[0].options[], and questions[0].answer). This structure makes the generated data easy for other components of the application to access and use.
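Because other components rely on this structure, generated output can be validated before it is shown to students. The sketch below is a hypothetical validator, not EnSmart's code; it assumes the model returns the object as JSON text (a JavaScript object literal with unquoted keys would not parse) and that each answer is stored as the text of one of the options.

```python
import json

EXPECTED_QUESTIONS = 5
MIN_WORDS, MAX_WORDS = 380, 400


def validate_test_content(raw: str) -> dict:
    """Parse generated test content and check it against the requested structure.

    Assumed shape (hypothetical): {"passage": {"name": str, "paragraphs": [str]},
    "questions": [{"text": str, "options": [str], "answer": str}]}.
    """
    data = json.loads(raw)
    passage = data.get("passage", {})
    if not passage.get("name"):
        raise ValueError("passage has no title")
    paragraphs = passage.get("paragraphs", [])
    if not paragraphs:
        raise ValueError("passage has no paragraphs")
    # Word count across all paragraphs, splitting on whitespace.
    words = sum(len(p.split()) for p in paragraphs)
    if not MIN_WORDS <= words <= MAX_WORDS:
        raise ValueError(f"passage has {words} words, expected {MIN_WORDS}-{MAX_WORDS}")
    questions = data.get("questions", [])
    if len(questions) != EXPECTED_QUESTIONS:
        raise ValueError(f"expected {EXPECTED_QUESTIONS} questions, got {len(questions)}")
    for i, q in enumerate(questions):
        if not q.get("text") or not q.get("options"):
            raise ValueError(f"question {i} is missing its text or options")
        if q.get("answer") not in q["options"]:
            raise ValueError(f"question {i}: answer is not one of the options")
    return data
```

If validation fails, one option is to request a fresh generation rather than display a malformed test; whether EnSmart does this is not stated.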

3.3. Integration with Generative AI - Automated Grading and Feedback System

A key feature of EnSmart is its ability to automatically grade student responses and provide detailed feedback. The system uses predefined criteria to evaluate answers accurately and consistently, and the feedback mechanism was designed to deliver constructive, human-like insights that help students understand their mistakes and enhance their learning experience. The system is driven by a task-oriented prompt tailored to the role of an instructor of Academic English for university students. The prompt defines a series of tasks aimed at evaluating students' comprehension within an academic context. These tasks are:

Accurate Grading: The system computes grades by comparing student responses to correct answers, adopting a binary representation where 1 denotes correctness and 0 signifies incorrectness. This methodical approach ensures precise evaluation of academic English reading comprehension, aligning with the overarching goal of the exercise.

Scoring Criteria: Each question is worth 8 points, with full credit awarded for a correct response (40 points in total for a five-question test). This standardized scoring mechanism makes grading transparent and consistent, in line with academic standards.

Detailed Feedback: An integral component of the system is the generation of precise and detailed feedback for each student's response. Focused on academic English reading comprehension, the feedback provides nuanced assessments, emphasizing clarity and depth. By mimicking human insight, the feedback helps students identify and correct their errors effectively, fostering a supportive learning environment.

Structured Output: The system presents its assessment results as a JavaScript object, following the prompt's specifications. This structured format includes the attributes assessment.feedback, assessment.totalGrade, and assessment.overallFeedback. It makes the output easy to capture and integrate seamlessly with other components of the system.
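In EnSmart the grading itself is performed by GPT-4 following the prompt. Because the questions are multiple choice and the answer key comes with the generated content, the binary scoring and 8-point weighting can also be recomputed deterministically, for example to check the totalGrade the model reports. The sketch below is a hypothetical check of that kind; the function names and the assumption that the assessment object is available as a dictionary are illustrative.

```python
POINTS_PER_QUESTION = 8  # weight stated in the scoring criteria


def binary_scores(answer_key, responses):
    """Score each response 1 (correct) or 0 (incorrect) against the answer key."""
    if len(answer_key) != len(responses):
        raise ValueError("answer key and responses differ in length")
    return [1 if given == correct else 0 for correct, given in zip(answer_key, responses)]


def total_grade(answer_key, responses):
    """Total points: 8 for each correct answer, 0 otherwise."""
    return POINTS_PER_QUESTION * sum(binary_scores(answer_key, responses))


def grade_matches(assessment, answer_key, responses):
    """Check a model-produced assessment's totalGrade against the deterministic total."""
    return assessment.get("totalGrade") == total_grade(answer_key, responses)


key = ["B", "A", "D", "C", "A"]
answers = ["B", "A", "C", "C", "D"]
print(binary_scores(key, answers))  # [1, 1, 0, 1, 0]
print(total_grade(key, answers))    # 24 (out of 40)
```

A mismatch would flag an arithmetic slip in the model's output, while the per-question and overall feedback text would still come from the model.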

3.4. Integration with Canvas LMS

To incorporate EnSmart seamlessly into the existing course framework, we integrated it with the Canvas LMS using Learning Tools Interoperability (LTI) and the Canvas API. Together, LTI and the API make the app fully accessible inside the Canvas platform and enable data exchange between EnSmart and Canvas, including retrieving student information such as names and IDs, posting student grades to the course gradebook, and posting EnSmart's feedback to the students' gradebook entries for each test.
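As an illustration of the grade-posting step, the sketch below builds a request to the Canvas REST API endpoint for grading or commenting on a submission (PUT /api/v1/courses/:course_id/assignments/:assignment_id/submissions/:user_id, with the submission[posted_grade] and comment[text_comment] parameters). The host, IDs, token, and helper name are hypothetical, and the exact calls EnSmart makes are not described here.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_grade_request(base_url, token, course_id, assignment_id, user_id, grade, comment):
    """Build, but do not send, a Canvas request that posts a grade and a feedback comment."""
    url = (f"{base_url}/api/v1/courses/{course_id}"
           f"/assignments/{assignment_id}/submissions/{user_id}")
    # Canvas accepts form-encoded parameters with bracketed names.
    body = urlencode({
        "submission[posted_grade]": str(grade),
        "comment[text_comment]": comment,
    }).encode("utf-8")
    return Request(
        url,
        data=body,
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


req = build_grade_request("https://canvas.example.edu", "TOKEN", 101, 202, 303,
                          24, "Good inference skills; review question 3.")
# urllib.request.urlopen(req) would send it to a live Canvas instance.
```

Building the request separately from sending it keeps the mapping from EnSmart's assessment to Canvas parameters easy to test without network access.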

This integration enabled EnSmart to function as a cohesive part of the course, providing a unified platform for managing assessments and student interactions. The importance of such integration is supported by research indicating that seamless LMS integration enhances user experience, improves learning outcomes, and increases the efficiency of course management (Watson, 2007). Additionally, LTI integration is recognized for facilitating interoperability between educational tools, thereby fostering a more cohesive and accessible learning environment (Galanis et al., n.d.).

In this context, EnSmart acts as the tool provider, offering educational content and assessment functionalities, while Canvas LMS acts as the tool consumer, integrating these tools within its platform to deliver a seamless user experience to students.

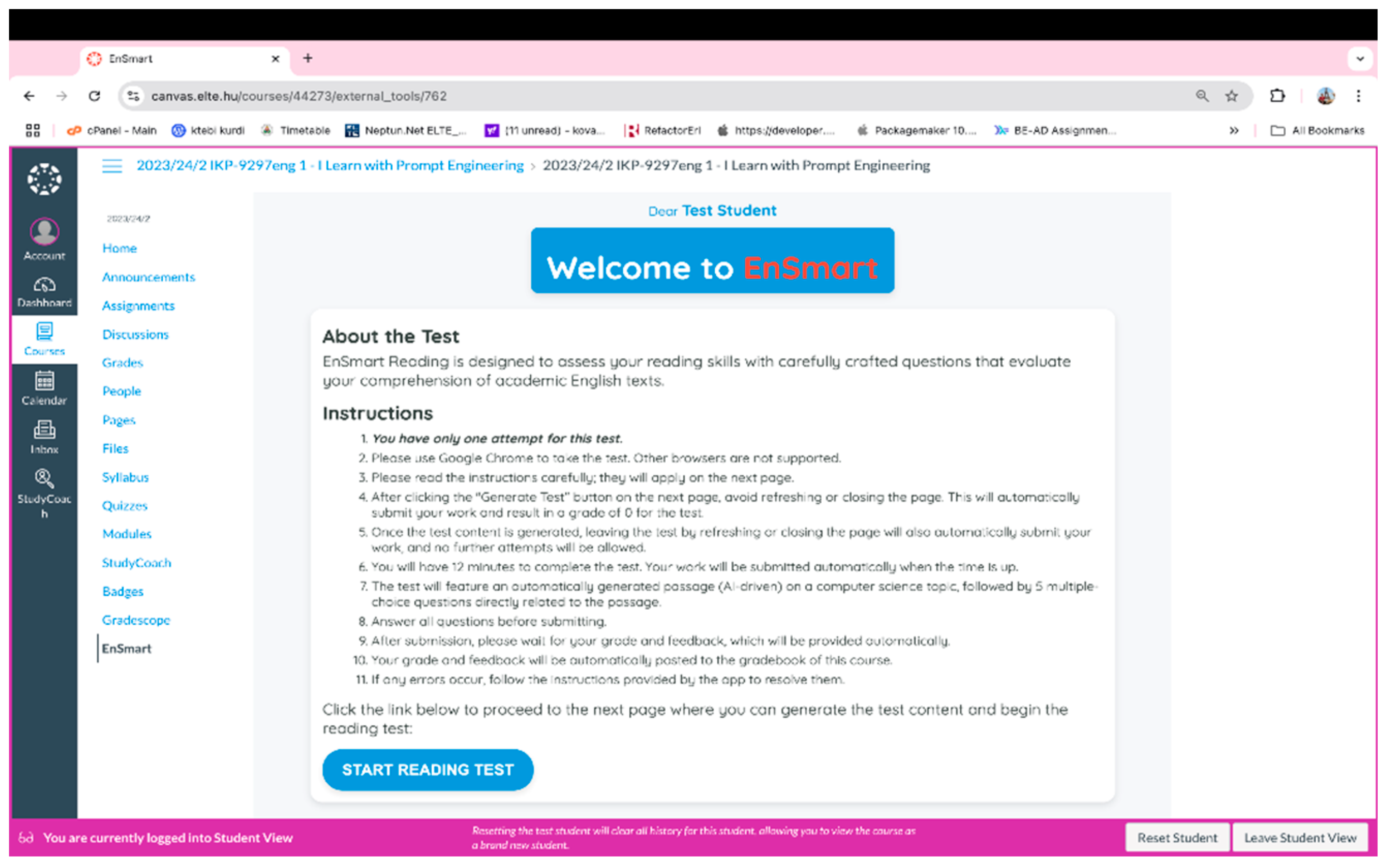

Figure 1 illustrates the seamless integration of EnSmart within the Canvas LMS environment, allowing students to access it directly from the course navigation menu.

3.5. Usability Testing, Refinement, and Deployment

Throughout the development process, we conducted usability testing to refine EnSmart’s functionalities and user interface. Pilot tests involving a test student provided feedback, which we used to identify and address potential issues. These iterative refinements ensured that EnSmart was both functional and user-friendly.

Our usability testing involved:

Pilot Testing: Initial testing with a sample student to gather feedback on usability and interface design.

Iterative Refinements: Adjustments based on test results to improve functionality and user experience.

After development and testing, we deployed EnSmart on a virtual machine provided by the university as part of the "I Learn with Prompt Engineering" course. The deployment phase included a student orientation to ensure that students could easily access and navigate EnSmart within the Canvas LMS.

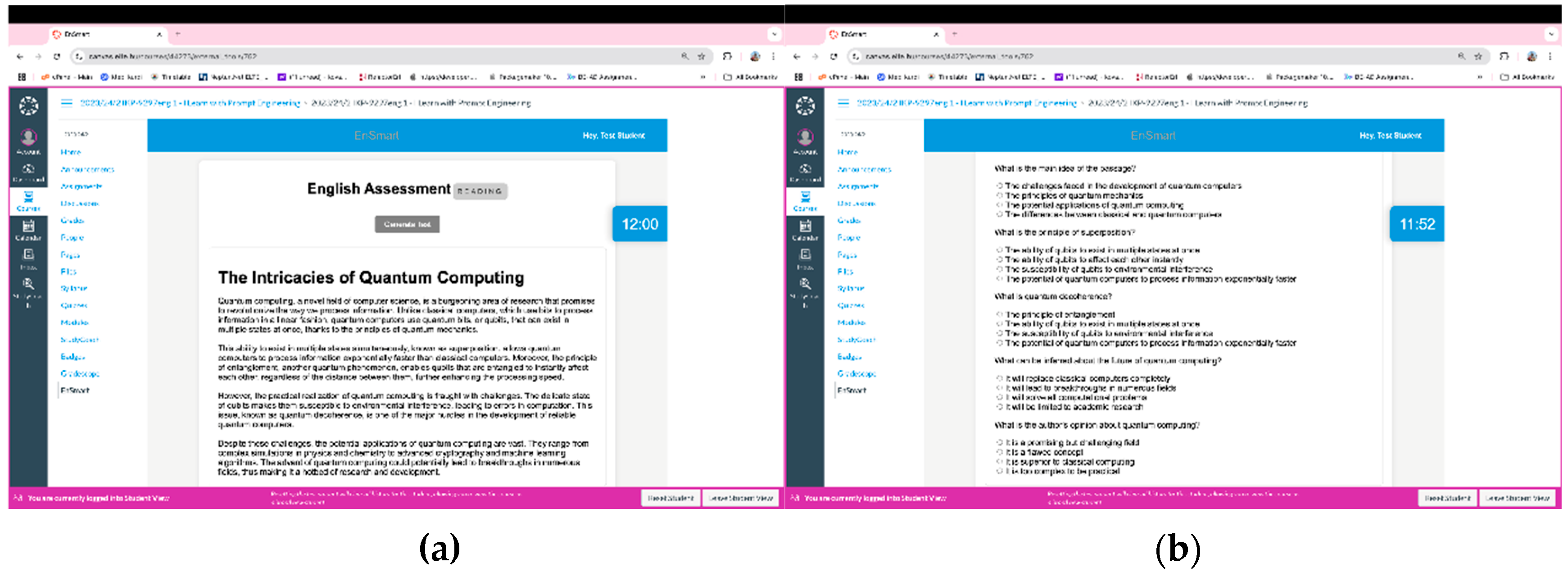

Figure 3-a through Figure 4-b show the complete process of taking a test using the EnSmart tool within the Canvas LMS.

Figure 3-a and Figure 3-b illustrate the initial stage, in which test content is generated dynamically according to the configured reading passage and question settings. When the 'Generate' button is clicked, the application sends an API request to the GPT language model, which creates passages and questions based on the specified configuration. The generated content is presented in a user-friendly interface, after which a 12-minute countdown timer begins, allowing students a single attempt to complete the test. Security features are incorporated to uphold academic integrity, including disabling content copying and restricting advanced browser functions.
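A countdown shown in the browser can be bypassed, so it is common to also enforce a single, time-limited attempt on the server. The sketch below is a hypothetical version of that check; it assumes the start time is recorded when the content is generated and that late or repeated submissions are rejected, neither of which the text specifies.

```python
from datetime import datetime, timedelta, timezone

TIME_LIMIT = timedelta(minutes=12)


class QuizAttempt:
    """Hypothetical server-side record of one student's single, timed attempt."""

    def __init__(self, student_id: str, started_at: datetime):
        self.student_id = student_id
        self.started_at = started_at  # assumed to be set when the content is generated
        self.submitted = False

    def deadline(self) -> datetime:
        return self.started_at + TIME_LIMIT

    def submit(self, now: datetime) -> None:
        """Accept one on-time submission; reject repeats and late submissions."""
        if self.submitted:
            raise PermissionError("only one attempt is allowed")
        if now > self.deadline():
            raise TimeoutError("the 12-minute time limit has expired")
        self.submitted = True


start = datetime(2024, 5, 6, 10, 0, tzinfo=timezone.utc)
attempt = QuizAttempt("student-1", start)
attempt.submit(start + timedelta(minutes=11))  # accepted: within the time limit
```

Keeping the start time on the server also means that reloading the page does not reset the timer.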

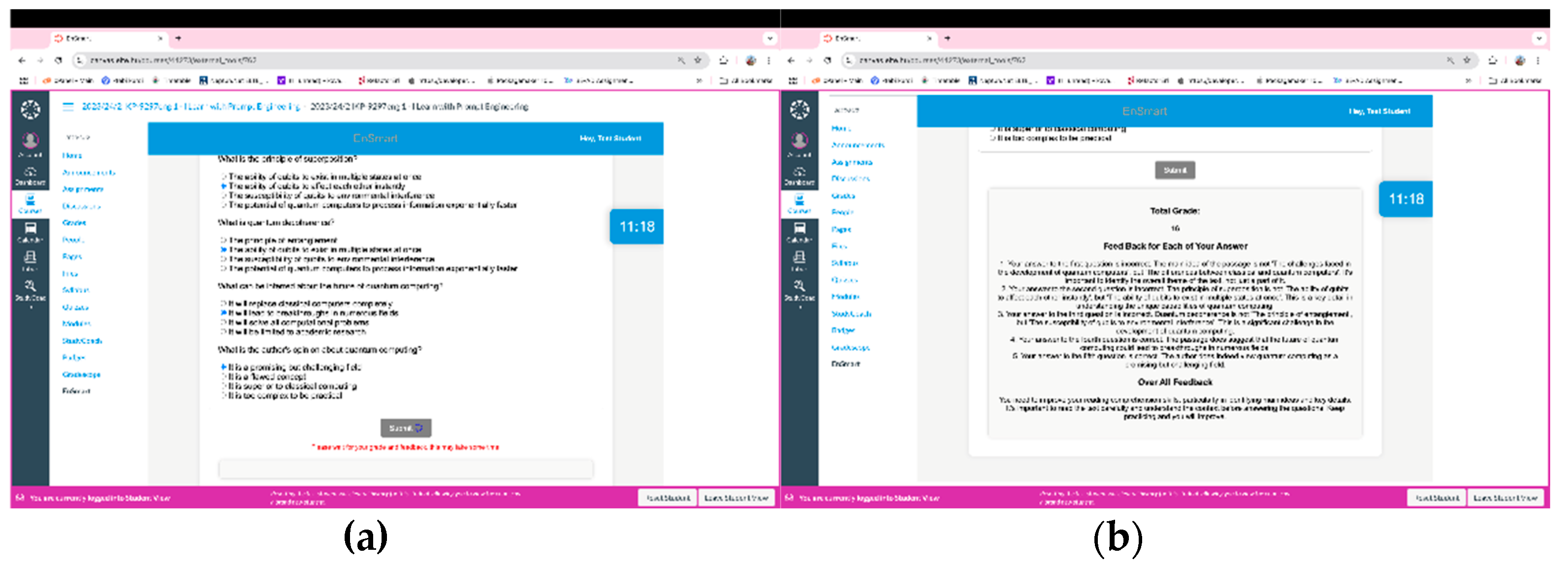

Figure 4-a illustrates the submission stage, where students complete and submit their answers.

Figure 4-b depicts the final stage, which involves automated grading and real-time feedback. In this phase, EnSmart evaluates student responses, providing immediate grades and personalized, human-like feedback, while directly posting the results to the corresponding Canvas gradebook.

4. Methodology

4.1. Course Design and Pedagogical Framework

The course I Learn with Prompt Engineering was designed within the framework of Education 4.0, focusing on three foundational principles (Peláez-Sánchez et al., 2024):

Promotes a student-centered approach to learning, emphasizing active engagement.

Provides students with real-world contexts and scenarios, enabling them to apply knowledge and skills in practice.

Encourages the development of research skills and complex thinking, allowing students to propose innovative solutions to current societal issues.

These principles draw on the theories of Heutagogy (grounded in humanistic and constructivist principles to foster self-learning practices), Peeragogy (collaborative learning processes), and Cyberpedagogy (integrating ICT with educational experiences) (Ramírez-Montoya et al., 2022).

The course content is organized around four core competencies:

Mastery of prompt engineering, enabling students to skillfully interact with LLMs using advanced prompt techniques.

Mastery of purposeful Socratic conversations and the ability to build prompt constructs for self-learning in any discipline or for seeking guidance on any life situation, while cautioning students about "hallucinations" in LLMs and preparing them for fact-checking and critical thinking.

Improvement of scientific English reading and writing skills, which are highly important for advancement in Informatics studies and in the workforce.

A learning methodology based on peer reviews and discussions, in which students help one another and share good practices.

The art of prompt engineering was divided into two levels:

Basic prompt patterns: question refinement, cognitive verifier, persona, audience and flipped interaction patterns.

Extended prompting techniques: prompt expansion, chain of thought, guiding prompts, optimizing, and "GPT confronts itself"; this level also discussed problem formulation and the parallel between prompt engineering and programming.
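To make the basic patterns concrete, the sketch below stores a one-line prompt template for each of them and fills it in. The template wording and the `build_prompt` helper are hypothetical illustrations adapted from commonly cited descriptions of these patterns; the course materials may phrase them differently.

```python
# Hypothetical one-line templates for the basic prompt patterns covered in the course.
PATTERNS = {
    "persona": "Act as {role}. {task}",
    "audience": "Explain {topic} to me. Assume that I am {audience}.",
    "question_refinement": (
        "Whenever I ask a question about {topic}, suggest a better version "
        "of the question and ask me if I would like to use it instead."
    ),
    "cognitive_verifier": (
        "When I ask you a question, first generate three additional questions "
        "that would help you answer it more accurately. Then combine the answers "
        "to those questions to produce the final answer. Question: {question}"
    ),
    "flipped_interaction": (
        "I would like you to ask me questions to help me {goal}. "
        "Ask one question at a time until you have enough information."
    ),
}


def build_prompt(pattern: str, **fields: str) -> str:
    """Fill a pattern's template; raises KeyError for an unknown pattern or missing field."""
    return PATTERNS[pattern].format(**fields)


print(build_prompt("persona", role="an IELTS reading examiner",
                   task="Give me a short passage about compilers and three questions on it."))
```

Keeping the patterns as data rather than hard-coded strings also makes it straightforward to combine them, as some participants later reported doing.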

Each module was assessed through multiple-choice tests and assignments requiring students to design prompt structures for practical tasks. The last two modules provided many examples of prompt structures that could be configured to support the learning process:

Prompt structures for language learning – assessment was done using the developed EnSmart app.

Prompt structures for general learning and self-development of various work-life skills – assessment was done by developing a mega-prompt for a formulated relevant problem.

Tests were automatically assessed, and the results could be immediately seen by participants, while assignments required peer-reviews using pre-defined rubrics. EnSmart used its own evaluation method.

4.2. Research Design

This study employs a mixed-methods research design to evaluate the effectiveness of the "I Learn with Prompt Engineering" course and the EnSmart tool. The primary focus is on assessing how prompt engineering techniques, integrated with generative AI tools, enhance students' academic English proficiency and overall learning outcomes. The study involves quantitative data collection through structured questionnaires and surveys, complemented by qualitative feedback to provide a comprehensive analysis.

4.3. Participants

The participants in this study are 60 international students from various departments, stages of study, and qualification levels within the Faculty of Informatics at Eötvös Loránd University (ELTE). These students enrolled in the "I Learn with Prompt Engineering" elective course, designed to promote autonomous learning, scientific English proficiency, market-ready skills, and self-improvement. The diverse backgrounds of the participants ensure a broad perspective on the course's effectiveness and the usability of EnSmart.

4.4. Data Collection Instruments

Data collection for this study involves structured questionnaires and surveys administered to students at the end of the course via the university’s LMS, Canvas. These instruments are designed to gather quantitative data on students' perceptions, experiences, and learning outcomes. The survey questions cover multiple aspects of the course and the EnSmart tool, providing detailed insights into their effectiveness.

4.5. Survey Instrument

The survey instrument was designed to capture students' opinions, preferences, and experiences with prompt engineering patterns, autonomous courses, and AI-driven content for academic English reading proficiency. It comprised 29 questions organized into five key areas, each targeting specific aspects of the educational experience.

Opinions on Autonomous Learning and Improving Self-Directed Learning

This section included questions to gauge students' attitudes towards autonomous courses and their suitability for different learning approaches. Likert-scale items assessed the degree of agreement with statements about the preference for instructor-led versus self-regulated courses. Additionally, it evaluated students' perceptions of the appropriateness and effectiveness of the course content (prompt engineering) for improving their self-directed learning with GAI tools.

Enhancement of Prompting Skills and Reflections on Academic Language Proficiency

This section assessed the extent to which the course improved students' prompting skills. It explored how the application of various prompt patterns enhanced students' ability to engage with generative AI tools and how these skills contributed to improvements in their academic language proficiency.

Effectiveness of AI-Driven Content and Tools

This section included questions on the quality, complexity, and effectiveness of the AI-generated passages and questions provided by the EnSmart tool. It aimed to evaluate how well these AI-driven elements supported academic English reading comprehension, as well as the accuracy of auto-grading and feedback.

Interaction and Support Mechanisms

This section explored the potential benefits of live consultations and peer discussions in enhancing knowledge transfer. It included questions about the reasons for utilizing or not utilizing discussion areas in the LMS, as well as the perceived impact of these interactions on learning outcomes.

Overall Satisfaction and Recommendations

The final section included a Net Promoter Score (NPS) question to determine how likely students were to recommend the course to their peers. It also featured open-ended questions to gather additional feedback on the EnSmart tool and on the overall reliance on AI-driven content, providing insights into areas for improvement and students' suggestions for future enhancements.
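The NPS question is conventionally scored as the percentage of promoters (ratings of 9-10 on a 0-10 scale) minus the percentage of detractors (ratings of 0-6). The sketch below assumes the survey used that standard 0-10 scale; the ratings in the example are hypothetical, not the study's data.

```python
def net_promoter_score(ratings):
    """NPS = % promoters (9-10) minus % detractors (0-6), on a 0-10 scale."""
    if not ratings:
        raise ValueError("no ratings")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be on a 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)  # 7-8 are passives and are ignored
    return 100 * (promoters - detractors) / len(ratings)


print(net_promoter_score([10, 9, 9, 8, 7, 6, 3, 10]))  # 25.0
```

The score therefore ranges from -100 (all detractors) to +100 (all promoters).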

5. Data Analysis and Results

Survey responses from 48 of the 60 participants were analyzed to extract insights into each of the five key areas outlined above.

5.1. Opinions on Autonomous Learning and Improving Self-Directed Learning

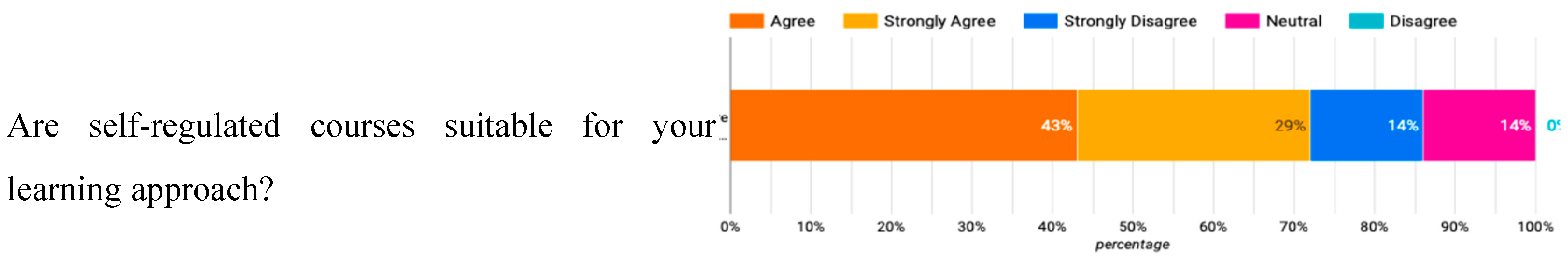

Survey responses indicate that 72% of learners find self-regulated courses suitable for their learning approach, suggesting that these courses generally align with their learning preferences. A smaller portion of respondents remained neutral or disagreed, indicating that while many learners appreciate the autonomy that self-regulation offers, some may find it less compatible with their needs.

Figure 5 provides a detailed breakdown of these responses.

In contrast, when asked about their preference for instructor-led versus autonomous courses, respondents were more divided: 43% preferred autonomous courses and 14% strongly preferred them, while 29% indicated a preference for instructor-led courses and 14% expressed no preference. Although most learners find self-regulated courses suitable, their preferences are more varied when it comes to choosing between autonomous and instructor-led formats. This division suggests that, although many learners find self-regulated learning environments suitable and thrive in them, a significant portion still values the structure and guidance of instructor-led courses. These findings underscore the importance of offering both types of learning environment to accommodate diverse learner preferences.

The course had a notably positive impact on fostering self-directed learning and improving participants' ability to use generative AI tools, particularly through the application of prompt engineering techniques. A majority of respondents (78.1%) rated the course as "Very Effective" or "Effective" in fostering self-directed learning skills. Additionally, 80.6% of participants affirmed the usefulness of the course materials, particularly those related to prompt engineering, in helping them master generative AI tools. Notably, 81.7% reported an enhanced ability to apply prompt engineering techniques in self-directed learning tasks using GAI tools after completing the course. These self-reported findings indicate that the course largely achieved its pedagogical goals.

However, approximately 18–22% of respondents provided neutral feedback when evaluating the course’s impact on fostering self-directed learning and improving the ability to use generative AI tools. This neutral feedback might reflect varying levels of experience or comfort with self-regulated learning, as well as a preference for instructor-led courses among certain learners. Overall, the results underscore the role of prompt engineering in enhancing self-directed learning through generative AI tools.
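The "Very Effective" or "Effective" shares reported above are top-two-box percentages of Likert responses. The sketch below shows that calculation on hypothetical responses (not the study's data); the category labels are assumed to match the survey's wording.

```python
from collections import Counter


def top_two_box(responses, top=("Very Effective", "Effective")):
    """Percentage of responses in the two most positive Likert categories."""
    if not responses:
        raise ValueError("no responses")
    counts = Counter(responses)
    return 100 * sum(counts[label] for label in top) / len(responses)


sample = ["Very Effective"] * 4 + ["Effective"] * 4 + ["Neutral"] * 2
print(top_two_box(sample))  # 80.0
```

The same helper, with a different `top` tuple, gives the share of neutral or negative responses for comparison.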

5.2. Improvement in Prompting Skills and Reflections on English Proficiency

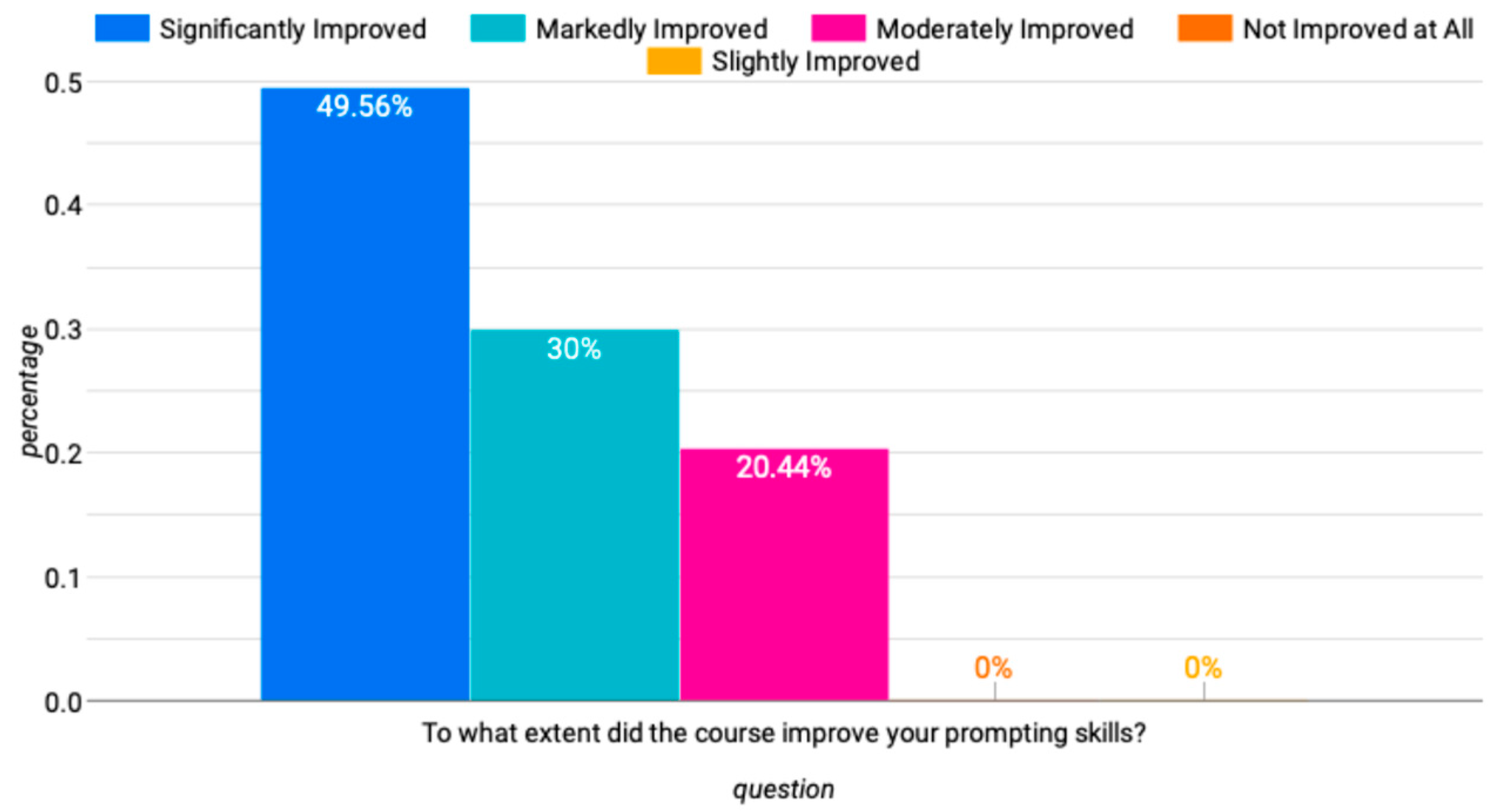

The improvement in participants' prompting skills is evident, with 79.56% reporting either "Markedly Improved" or "Significantly Improved" abilities. This finding underscores the course's effectiveness in teaching essential prompt patterns critical for generative AI proficiency. Notably, the absence of negative feedback further highlights the course's positive impact on skill development. As shown in Figure 6, the distribution of responses reflects the program's success in enhancing participants' prompting skills, in line with its goal of fostering self-directed learning through generative AI tools.

For instance, one participant highlighted the effectiveness of specific patterns, stating, "The Persona pattern can really see the context of the situation and the suitable responses better." This comment illustrates how certain patterns were perceived as particularly intuitive for understanding conversational contexts. Similarly, another respondent emphasized the utility of combining patterns, noting, "All of them are useful because ideal results can be achieved by integrating them or at least some principles from them." This reflects a holistic approach to applying the skills acquired during the course.

The Persona pattern was widely regarded as beneficial, with participants consistently identifying it as the most helpful for practicing prompts. This consistency suggests that the Persona pattern was perceived as both accessible and practical, facilitating its application to real-world scenarios. Remarks such as "easy to use and apply" highlight its role in contextualizing situations for better responses. Despite the prominence of the Persona pattern, participants also recognized the value of other prompt patterns, including "Conditional," "Iterative," "Hypothetical," and "Problem-solving." These additional patterns reinforce the course's effectiveness in providing a comprehensive toolkit, enabling learners to tailor their approaches to specific tasks or contexts.

Interestingly, while certain prompt patterns were favored, others proved more challenging to master. Responses regarding the most difficult prompt patterns revealed that the "Question Refinement," "Recursive," and "Flipped Interaction" patterns were particularly complex. One participant articulated this challenge, stating, "The flipped interaction pattern...requires a mix of IT and language skills to be successful," reflecting the interdisciplinary nature of prompt engineering. This indicates that while some patterns are intuitive, others demand a higher level of cognitive flexibility, revealing a gap between learners' initial expectations and the complexity of certain concepts. The challenges associated with mastering recursive and flipped interaction prompts signal an opportunity for future iterations of the course to provide more targeted support and resources to help learners navigate these advanced ideas.

The use of prompt patterns with generative AI (GAI) tools was associated with noticeable improvements in participants’ academic English proficiency. Participants were asked to estimate their proficiency on a scale from 0 to 100 before and after completing the course. Most participants rated their initial proficiency between 60 and 70, and their post-course proficiency typically between 80 and 85. Although these ratings are self-reported, they suggest that the course content, particularly the prompt patterns, was effective. One participant commented that the course "opened new perspectives and techniques that can be used in the spirit of life-long learning," further illustrating its impact. These results underscore the potential of integrating prompt patterns with GAI tools to enhance self-directed learning, as demonstrated in this study's focus on improving academic English proficiency.
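Read literally, the typical ranges above bound the size of the self-reported gain. The sketch below is a minimal illustration, assuming a participant's before and after ratings both fall inside the typical ranges (60 to 70 before, 80 to 85 after); the helper name `gain_bounds` is hypothetical and is not part of the study's analysis.

```python
def gain_bounds(before, after):
    """Return (min_gain, max_gain) for ratings drawn from two closed ranges.

    Each argument is a (low, high) tuple on the 0-100 self-rating scale.
    The function name is hypothetical, used here only for illustration.
    """
    (b_lo, b_hi), (a_lo, a_hi) = before, after
    # Smallest gain: lowest post-course rating minus highest pre-course rating.
    # Largest gain: highest post-course rating minus lowest pre-course rating.
    return a_lo - b_hi, a_hi - b_lo


print(gain_bounds((60, 70), (80, 85)))  # (10, 25)
```

Under that assumption, a typical respondent reports a gain of 10 to 25 points, whereas identical before and after ratings, such as those discussed next, correspond to a gain of zero.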

However, some participants reported no noticeable improvement in their proficiency; for example, one respondent indicated a score of "80 - 80" and another noted "85 before and after." These responses suggest that using the prompt patterns learned in the course to improve academic English through GAI tools, in the context of an autonomous course and self-directed learning, was particularly effective for learners with lower initial proficiency but had less impact on those with higher initial levels of academic English. This lack of improvement may be attributed to variability in practice time and engagement. While some participants reported limited practice, such as "1-2 hours" or "a few days," others consistently integrated prompt practice into their assignments. Such variability likely hindered significant progress, particularly in academic contexts where consistent, focused practice is crucial for improvement.

5.3. Effectiveness of AI-Driven Content and EnSmart

The quality and complexity of AI-generated passages received generally positive ratings from respondents, with 41% rating them as Excellent and 37% as Good. This indicates that users find the material suitable for assessing academic English reading comprehension. However, 20% rated the passages as Fair, and 2% considered them Poor, revealing opportunities for enhancement, particularly for those who found the content insufficiently challenging or relevant.
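The reported distribution can be collapsed into a single "top-two-box" share, a common way of summarizing Likert-type items; the paper itself does not name this summary. The sketch below is a minimal illustration using only the percentages reported above, and `summarize` is a hypothetical helper.

```python
def summarize(shares, positive):
    """Split a percentage distribution into a positive share and the rest.

    `shares` maps each response label to its percentage of respondents;
    `positive` is the set of labels counted as favorable.
    """
    total = sum(shares.values())
    pos = sum(pct for label, pct in shares.items() if label in positive)
    return {"total": total, "positive": pos, "other": total - pos}


# Percentages for passage quality as reported in the survey.
passage_quality = {"Excellent": 41, "Good": 37, "Fair": 20, "Poor": 2}
print(summarize(passage_quality, {"Excellent", "Good"}))
# {'total': 100, 'positive': 78, 'other': 22}
```

On this reading, 78% of respondents rated the passages favorably and the remaining 22% (Fair or Poor) mark the room for enhancement noted above.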

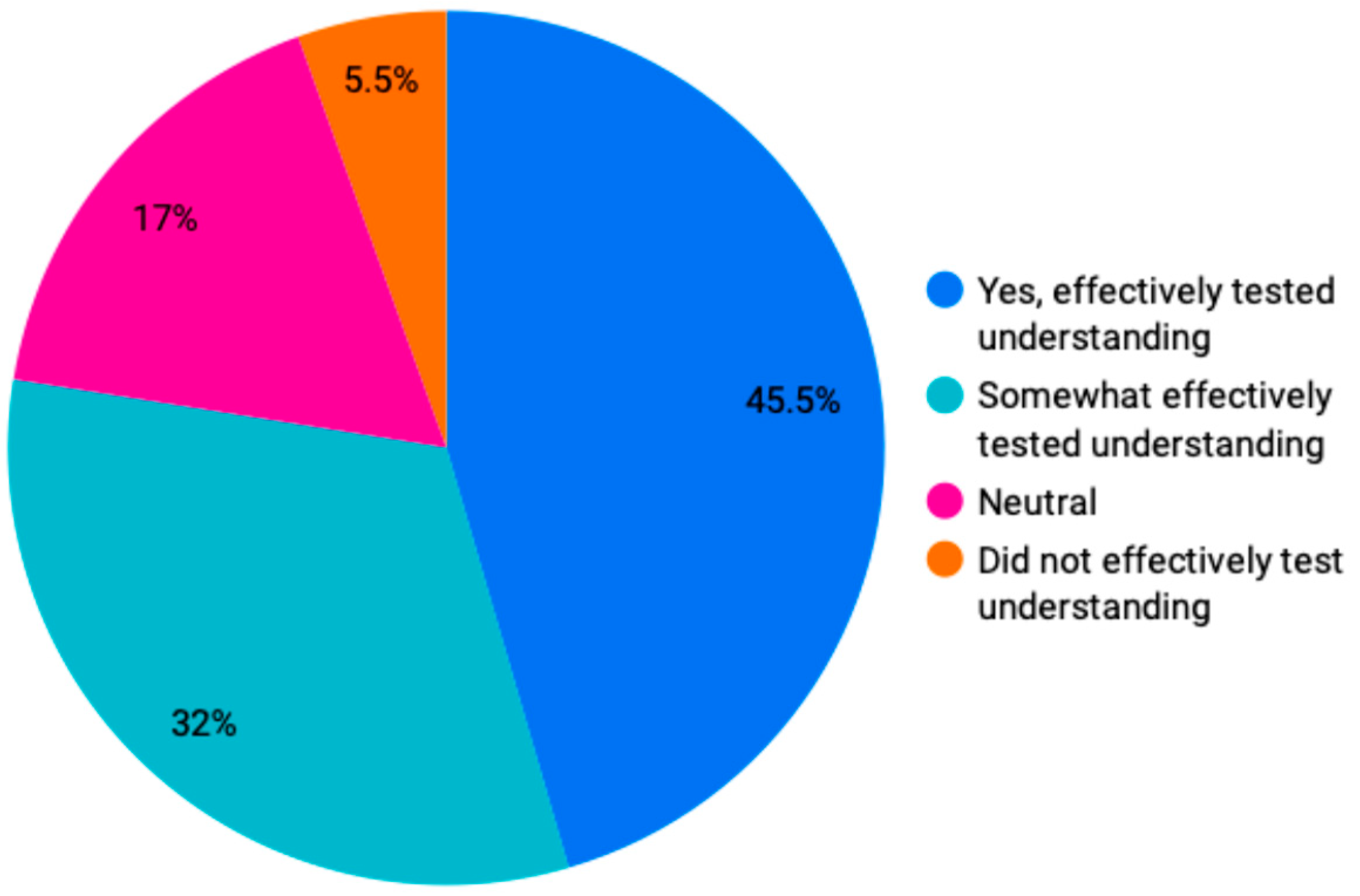

Survey responses regarding the effectiveness of AI-generated questions were generally positive, with the majority of users indicating that the questions effectively or somewhat effectively tested their understanding. However, a portion of users remained neutral or dissatisfied, suggesting that while the questions are effective for many, there is room for refinement to better address diverse needs. These findings are illustrated in Figure 7.

Importantly, dissatisfaction with the AI-generated questions appears to stem from a limitation of the EnSmart app developed for this case study rather than from the capabilities of GPT-4 itself. EnSmart employed only multiple-choice formats to assess academic reading comprehension. This is a widely used method in English assessment, but it may not fully address the diverse needs of all students. The limitation may have contributed to user dissatisfaction, as some participants likely desired more varied question formats to better challenge their skills. We believe this limitation can be addressed in future iterations of EnSmart, enabling fuller use of generative AI models such as GPT-4 to generate a broader range of question types and thereby enhance the tool’s overall effectiveness.
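To make the format limitation concrete: in a multiple-choice item, the only signal available for grading is whether the selected option matches the key, which makes the format easy to automate but narrow in what it can assess. The sketch below is a minimal key-based scorer under that assumption; `MCQuestion`, `grade`, and the sample items are hypothetical. It is not EnSmart's implementation, which the paper describes as using GPT-4 to generate content, grade responses, and produce human-like feedback.

```python
from dataclasses import dataclass


@dataclass
class MCQuestion:
    """Hypothetical multiple-choice item: a prompt, its options, and the key."""
    prompt: str
    options: list[str]
    answer: int  # index of the correct option in `options`


def grade(questions, responses):
    """Compare each selected option index with the answer key.

    Returns the number of correct answers and one feedback string per item.
    """
    if len(questions) != len(responses):
        raise ValueError("expected one response per question")
    score = 0
    feedback = []
    for question, choice in zip(questions, responses):
        if choice == question.answer:
            score += 1
            feedback.append("correct")
        else:
            expected = question.options[question.answer]
            feedback.append(f"incorrect: expected '{expected}'")
    return score, feedback


# Hypothetical reading-comprehension items, for illustration only.
items = [
    MCQuestion("Main idea of paragraph 1?", ["A", "B", "C"], 1),
    MCQuestion("Meaning of 'robust' in line 4?", ["strong", "weak"], 0),
]
print(grade(items, [1, 1]))
```

Formats such as short answers or summaries would need a richer grader than this index comparison, which is where a generative model could contribute.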

The accuracy of EnSmart’s auto-grading and feedback emerged as one of the tool's strongest features. A large majority (82%) of respondents rated the auto-grading as either Accurate or Very Accurate, and no users deemed it inaccurate. This high level of confidence reflects positively on the auto-grading mechanism, suggesting that users feel they receive fair evaluations of their submissions. However, the 18% who chose Neutral may indicate incomplete satisfaction or a lack of clarity about how grades are derived, highlighting the need for greater transparency in the grading process.

The survey also assessed whether EnSmart's auto-generated feedback helped users identify their strengths and areas for improvement in academic English reading comprehension. Responses revealed varied experiences: 44% of users reported that the feedback helped significantly, and 35% stated that it helped somewhat, so the majority (79%) found it beneficial. Meanwhile, 16% of respondents were Neutral, indicating neither a positive nor a negative impact. Only 5% felt the feedback did not help much, and no users reported that it was not helpful at all. Although the feedback is generally perceived as useful, there is room to make it more actionable and relevant, particularly for those who remained neutral or less satisfied.
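A quick consistency check on the reported feedback distribution confirms that the shares cover all respondents and that the favorable categories together make up the majority. This is a minimal sketch; the category labels paraphrase the survey options, and the grouping of "helped" responses is an assumption made for illustration.

```python
# Reported shares (percent of respondents) for the feedback-helpfulness item.
feedback = [
    ("Significantly helped", 44),
    ("Somewhat helped", 35),
    ("Neutral", 16),
    ("Did not help much", 5),
    ("Not helpful at all", 0),
]

# The reported shares should account for every respondent.
assert sum(pct for _, pct in feedback) == 100

# Assumed grouping: the two "helped" categories count as favorable.
favorable = {"Significantly helped", "Somewhat helped"}
helpful = sum(pct for label, pct in feedback if label in favorable)
remaining = 100 - helpful
print(helpful, remaining)  # 79 21
```

The remaining 21% (neutral or less satisfied) is the group for which more actionable feedback would matter most.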

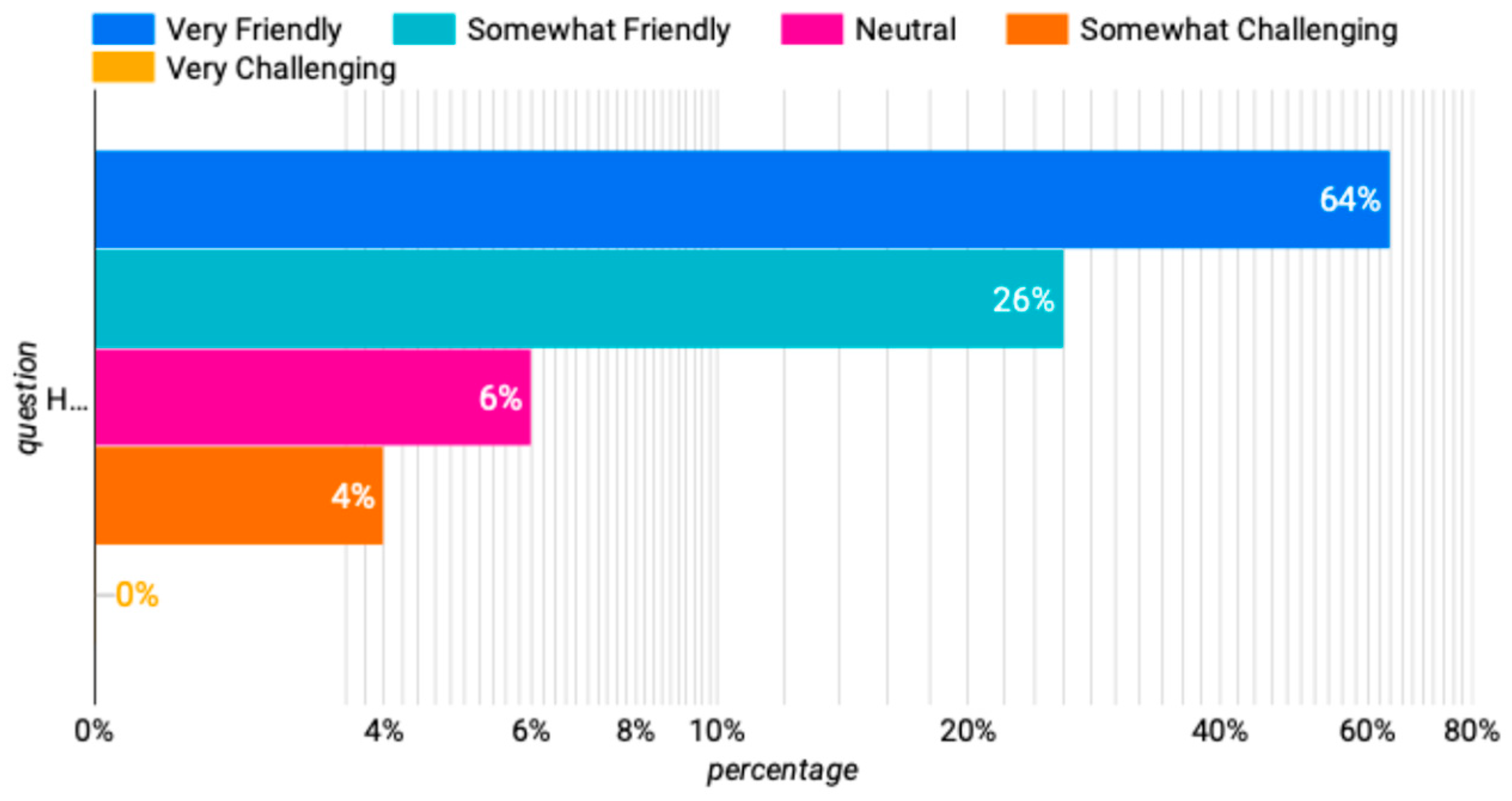

The user-friendliness of the EnSmart tool within Canvas LMS received positive feedback, with the majority of respondents rating it as 'Very Friendly' or 'Somewhat Friendly.' A small percentage rated it as 'Somewhat Challenging,' and none found it 'Very Challenging' (see Figure 8). This positive feedback suggests that integrating EnSmart into Canvas LMS has succeeded in providing an intuitive and seamless user experience. Such effective integration is crucial for fostering engagement and sustained usage, particularly in academic settings where ease of access and user satisfaction significantly influence adoption.

Open-ended responses regarding reliance on AI-driven content generation and assessments reflected a spectrum of perspectives. Many participants expressed positive sentiments, with one noting, "It is really helpful," indicating the perceived value of AI in enhancing educational experiences. Others appreciated the innovative approach, stating, "I like this way of thinking," which underscores a growing acceptance of AI in education. Participants also emphasized the importance of immediate feedback and personalized learning experiences facilitated by AI. One participant articulated this well, stating, "I think it is beneficial for providing immediate feedback and personalized learning experiences."

However, concerns were also voiced. One participant remarked that "sometimes, it is unclear or confusing," reflecting apprehension about the clarity and transparency of AI-generated assessments. This sentiment resonates with another participant who cautioned that reliance on AI must be tempered with regular updates and human oversight to ensure "accuracy and fairness."

The complexities of integrating AI into education were further illustrated in comprehensive responses that elaborated on both opportunities and challenges. Participants recognized the efficiency AI brings to content generation and assessments, noting its capacity to tailor learning experiences to individual needs. However, they raised critical concerns about accuracy and reliability, emphasizing that "AI-generated content and assessments may not always be accurate or reliable," which raises significant questions about the validity and fairness of evaluations.

The potential for bias in AI algorithms was a recurring theme, with one participant warning that AI can inherit biases from its training data, potentially leading to inequitable outcomes in content generation and assessment. Ethical implications, including privacy, consent, and the responsible use of technology, were also highlighted.

Participants expressed nuanced views on dependency risks, suggesting that overreliance on AI-driven tools could diminish critical thinking, creativity, and problem-solving skills. One response succinctly captured this sentiment: "While AI-driven content generation and assessments offer undeniable benefits, it's essential to strike a balance between leveraging AI technology for efficiency and preserving the human element in education." This statement reflects a desire for thoughtful integration of AI tools into the curriculum, ensuring opportunities for reflection, discussion, and human interaction remain paramount.

Interestingly, participants acknowledged AI's limitations. One noted that while AI is "quite good and helpful," it suffers from issues such as "hallucinations," indicating inaccuracies that can undermine trust in AI assessments. Another pointed out that although AI content generation holds promise, it is still "not at the level of students, let alone lecturers," suggesting that traditional assessment methods led by experienced educators should continue to play a vital role.

5.4. Interaction and Support Mechanisms

In self-regulated learning environments, live consultations are particularly valuable because they offer structured, real-time feedback that supports independent study. Of the respondents, 43% indicated that live consultations enhance knowledge transfer, suggesting that these sessions fill a gap in guidance often present in self-paced courses. However, 21% of participants viewed consultations as unnecessary, while 35% remained neutral. This neutrality may reflect a preference for self-guided study or a lack of awareness of the potential benefits of live support.

Peer discussions were identified as beneficial to knowledge transfer by 36% of respondents, highlighting their importance in fostering collaborative understanding. Discussion-based learning enables students to clarify ideas, engage with diverse perspectives, and deepen their comprehension through interaction. However, 57% of participants remained neutral, indicating that many students may prioritize solitary study over collaborative learning in a self-regulated course setting.

Patterns in responses also reveal low utilization of discussion features on the Canvas platform. Many students expressed a preference for real-time communication, citing the lack of immediacy in asynchronous discussions as a limitation. Technical issues further contributed to dissatisfaction, with some students describing Canvas as "laggy" and expressing a preference for IRC-type communication. Additionally, some students reported feeling self-sufficient within the course structure, describing the content as "self-explanatory" and not requiring further discussion. Conversely, students who valued discussions referred to them as "golden opportunities for improvement," illustrating the diverse needs and expectations within self-regulated learning contexts.

5.5. Overall Satisfaction and Recommendations

Participants’ likelihood of recommending the course was assessed on a scale from 1 to 10. Notably, 56% rated it as 'Extremely likely' (10), 29% rated it 8, and 15% rated it 7. The absence of ratings below 7 indicates general satisfaction, though some participants identified areas for improvement. Suggestions included incorporating diverse resources such as videos, academic articles, and interactive elements like live collaborative projects and varied assignments beyond quizzes to foster deeper engagement. While these suggestions highlight opportunities for enhancement, they do not appear to have significantly impacted overall satisfaction.
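The reported distribution can also be condensed into a weighted mean rating. The paper does not report a mean; the sketch below simply weights each score by its reported share, and because the percentages are rounded, the result should be read as approximate.

```python
# Reported recommendation ratings: score on the 1-10 scale -> percent of respondents.
ratings = {10: 56, 8: 29, 7: 15}

# The three reported groups should account for all respondents.
assert sum(ratings.values()) == 100

# Weighted mean: each score counts in proportion to its share.
mean = sum(score * pct for score, pct in ratings.items()) / 100
print(round(mean, 2))  # 8.97
```

A mean of roughly 9 out of 10, with no ratings below 7, is consistent with the general satisfaction described above.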

Feedback on the EnSmart tool was largely positive, with users valuing its simplicity, automation, personalized feedback, and immediate, accurate auto-grading. These features enriched the learning experience by providing timely evaluations. However, qualitative comments revealed areas for refinement, particularly the need for more diverse question types to cater to varied learning preferences and sustain engagement. As one participant noted, 'EnSmart is a great tool, but it could be improved by incorporating more diverse types of questions.'

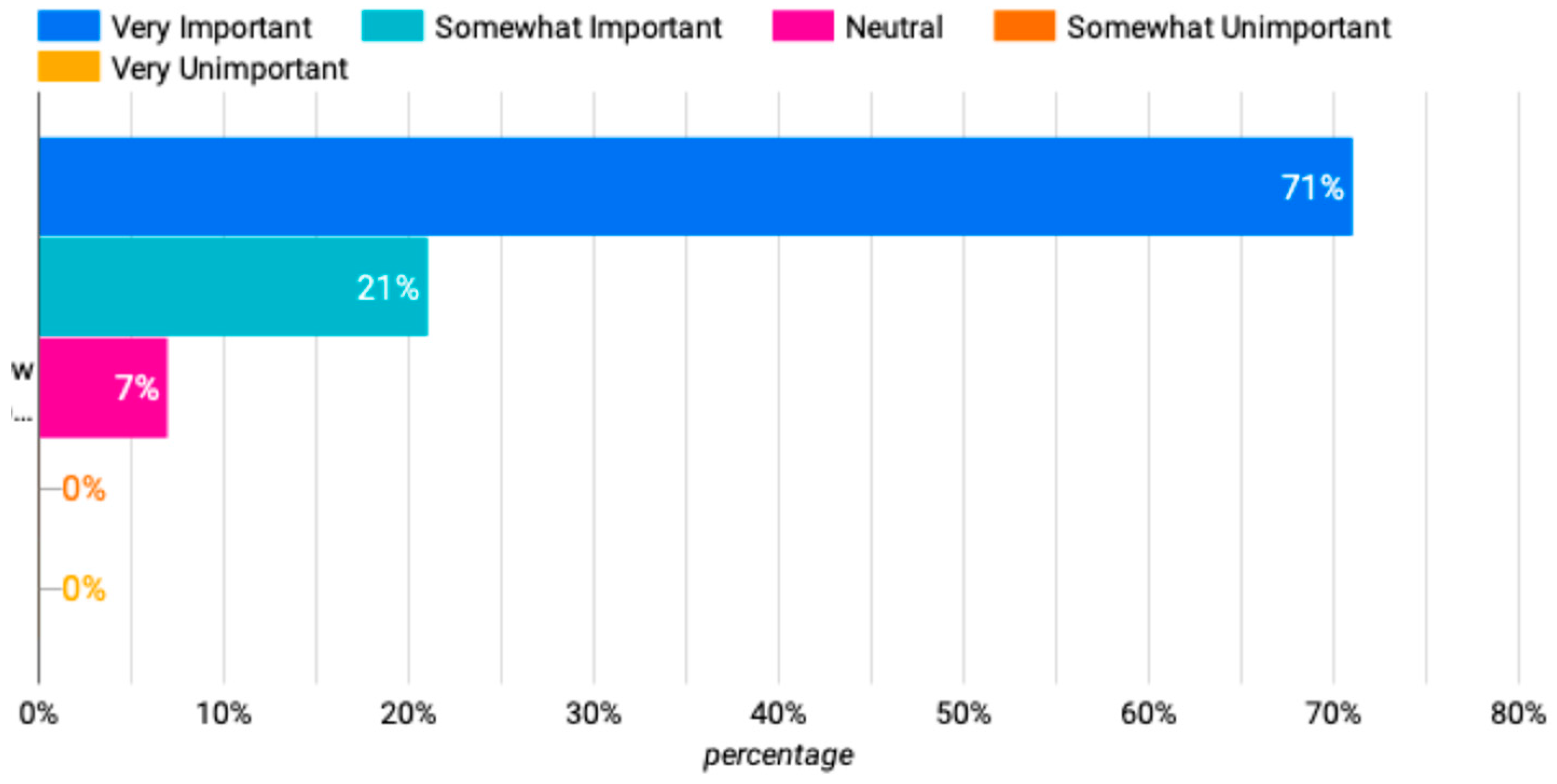

Finally, 92% of respondents rated understanding AI-driven technology as either 'Very Important' or 'Somewhat Important' for educators and students (see Figure 9). This finding underscores the growing emphasis on technological literacy in education. Participants expressed enthusiasm for AI’s benefits, such as automation, efficiency, and personalized learning, while also highlighting the ethical considerations and need for human oversight. These results reflect a balanced perspective, acknowledging both the opportunities and challenges of integrating AI into educational contexts.

6. Conclusions, Limitations, and Future Works

6.1. Conclusions

The integration of generative AI into educational practices, as demonstrated in this study, underscores its transformative potential for enhancing learning outcomes and addressing critical skill gaps. The self-regulated course I Learn with Prompt Engineering effectively empowered university students to craft precise instructions for large language models (LLMs), develop self-directed learning skills, and enhance their academic English proficiency through the use of generative AI tools. By emphasizing prompt engineering techniques, the course not only improved students’ ability to use LLMs effectively but was also associated with notable self-reported gains in academic English comprehension, particularly among non-native speakers with lower initial proficiency. However, learners with higher initial proficiency reported minimal progress, likely because of variability in practice time and engagement inherent in self-regulated learning environments, as well as the relatively short duration of the study. These findings suggest the need for tailored learning paths that address individual abilities and goals. Beyond academic proficiency, the course equipped participants with practical, market-ready skills, pointing to the scalability of generative AI tools in autonomous and self-directed learning environments.

Participants reported substantial improvements in their ability to apply prompt patterns, with the "Persona" pattern emerging as particularly intuitive and effective. However, advanced patterns such as "Flipped Interaction" and "Recursive" presented challenges, highlighting opportunities for refining instructional materials and providing targeted support for complex concepts.

The course’s reliance on AI-driven tools, particularly EnSmart powered by GPT-4, further demonstrated the potential of generative AI technologies for automated assessment and personalized feedback. The EnSmart tool implemented in this pilot was effective in auto-grading and providing immediate feedback, both of which are essential for supporting self-regulated learning. Students generally endorsed EnSmart’s grading accuracy and fairness, affirming its potential as a reliable assessment tool. Students also appreciated its integration into Canvas LMS, which largely succeeded in delivering an intuitive user experience with seamless functionality. This implementation exemplifies successful generative AI integration within an LMS platform to enhance automation and enable collaborative human-AI interaction.

However, limitations in question variety and content adaptability, attributed to pilot-specific constraints rather than inherent limitations of generative AI technology, highlight areas for future development. Expanding EnSmart’s features to include more diverse and customizable assessments could better address a broader range of learning needs.

Beyond these app-specific limitations, broader challenges of AI technologies remain: occasional inaccuracies or "hallucinations" in AI-generated content underscore the need for ongoing refinement in educational applications. Participants acknowledged the benefits of AI-driven tools but emphasized the importance of human oversight to enhance reliability and mitigate risks. Concerns about AI accuracy, potential bias, and the risk of over-reliance on automated feedback highlight the necessity of responsible AI integration in education.

In conclusion, this study demonstrates the transformative role of generative AI and prompt engineering techniques in education, advocating for their balanced integration with human oversight to ensure ethical, transparent, and effective applications in modern learning environments.

6.2. Limitations

Despite the positive outcomes reported in this study, two primary limitations warrant consideration.

First, as a pilot, this study implemented EnSmart specifically for generating test content, creating multiple-choice questions, grading student submissions, and providing immediate, human-like feedback on English reading proficiency. These functionalities, though effective, were constrained by a limited range of question types and lacked adaptability, likely impacting student engagement and satisfaction. The focus on multiple-choice assessments, combined with the limited adaptability of content, restricted EnSmart’s potential to fully meet and assess the diverse proficiency levels and learning preferences observed among participants. This limitation underscores the need for further development to expand EnSmart’s assessment capabilities beyond reading comprehension and to incorporate a wider range of question types that can address varying skills and learning needs.

Second, the generalizability of the findings is limited by the small sample size and the specific educational context. With only 48 students from a single course and institution, the findings may have limited applicability across broader educational settings. Additionally, the relatively short duration of the study may have restricted the depth of student engagement and the full potential for skill development, especially for more advanced prompt patterns and academic English proficiency. Future research with larger, more diverse samples and extended study periods is necessary to validate these results and to assess the broader applicability of AI-driven tools such as EnSmart in supporting autonomous learning across varied academic contexts.

6.3. Future Works

Building upon the insights and limitations of this study, several avenues for future research and development are proposed.

First, expanding EnSmart’s capabilities to include a broader array of question formats and more adaptive assessment options could enhance its effectiveness in addressing diverse student needs. Incorporating question types beyond multiple-choice and adding interactive assessments would enable a more holistic evaluation of student proficiency across various skill domains, fostering deeper engagement and more comprehensive learning assessments.

Further research should also investigate EnSmart’s potential to assess a wider range of academic skills beyond English reading comprehension. Extending its functionalities to evaluate proficiency in additional domains, such as academic writing, could validate its adaptability and broaden its educational applications. With advancements in generative AI, there is significant potential to make the course fully automated, encompassing personalized content, assignments, assessments, and real-time, human-like feedback.

Additionally, the findings indicate that customizing AI-driven assessments based on students' initial proficiency levels could optimize learning outcomes. Future work could develop individualized learning paths within EnSmart, tailoring content and assessments to align with students’ unique needs, thereby fostering differentiated learning experiences.
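One simple form of such customization is to route each student to a starting tier based on their initial self-rated proficiency. The sketch below is a minimal illustration under stated assumptions: the cutoff values are hypothetical and were not derived from the survey data, and the tier names reuse the course's Basic, Intermediate, and Advanced labels purely for illustration. `LEVEL_CUTOFFS` and `assign_level` are hypothetical names, not part of EnSmart.

```python
# Hypothetical cutoffs on the 0-100 self-rating scale; illustrative only,
# not derived from the study. Each tier covers ratings below its upper bound
# and at or above the previous tier's bound.
LEVEL_CUTOFFS = [(70, "Basic"), (85, "Intermediate"), (101, "Advanced")]


def assign_level(initial_rating):
    """Map an initial proficiency rating to a starting tier (hypothetical)."""
    if not 0 <= initial_rating <= 100:
        raise ValueError("rating must be between 0 and 100")
    for upper_bound, level in LEVEL_CUTOFFS:
        if initial_rating < upper_bound:
            return level


for rating in (65, 80, 90):
    print(rating, assign_level(rating))
```

In practice the cutoffs would need to be calibrated against assessment results rather than self-ratings alone, and content and assessments would then be drawn from the pool matching each tier.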

Finally, to increase the generalizability of findings, subsequent studies should involve larger, more diverse samples across varied educational contexts. Such research could provide more robust data on the role of AI-driven tools in supporting self-directed and autonomous learning at scale, offering valuable insights into their effective implementation across different academic settings.

Author Contributions

Conceptualization, M.K. and T.M.; methodology, M.K., T.M.; software, M.K.; validation, M.K.; formal analysis, M.K.; investigation, M.K.; resources, M.K., T.M.; data curation, M.K.; writing (original draft preparation), M.K.; writing (review and editing), M.K., T.M.; visualization, M.K.; supervision, T.M.; project administration, T.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Barana, A.; Conte, A.; Fioravera, M.; Marchisio, M.; Rabellino, S. A Model of Formative Automatic Assessment and Interactive Feedback for STEM. 2018.

- Boscardin, C.K.; Gin, B.; Golde, P.B.; Hauer, K.E. ChatGPT and Generative Artificial Intelligence for Medical Education: Potential Impact and Opportunity. Academic Medicine: Journal of the Association of American Medical Colleges 2024, 99, 22–27.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; Agarwal, S.; Herbert-Voss, A.; Krueger, G.; Henighan, T.; Child, R.; Ramesh, A.; Ziegler, D.M.; Wu, J.; Winter, C.; … Amodei, D. Language Models Are Few-Shot Learners. In Proceedings of the 34th International Conference on Neural Information Processing Systems, 2020.

- Caines, A.; Benedetto, L.; Taslimipoor, S.; Davis, C.; Gao, Y.; Andersen, O.; Yuan, Z.; Elliott, M.; Moore, R.; Bryant, C.; Rei, M.; Yannakoudakis, H.; Mullooly, A.; Nicholls, D.; Buttery, P. On the application of Large Language Models for language teaching and assessment technology. arXiv 2023, arXiv:2307.08393.

- Chen, Q.; Wang, X.; Zhao, Q. Appearance Discrimination in Grading?—Evidence from Migrant Schools in China. Economics Letters 2019, 181, 116–119.

- Crompton, H.; Burke, D. Artificial intelligence in higher education: The state of the field. International Journal of Educational Technology in Higher Education 2023, 20, 22.

- Dikli, S. Assessment at a distance: Traditional vs. Alternative Assessments. The Turkish Online Journal of Educational Technology 2003, 2.

- Fagbohun, O.; Iduwe, N.P.; Abdullahi, M.; Ifaturoti, A.; Nwanna, O.M. Beyond Traditional Assessment: Exploring the Impact of Large Language Models on Grading Practices. Journal of Artificial Intelligence, Machine Learning and Data Science 2024, 2, 1–8.

- Galanis, N.; Mayol, E.; Casany, M.J.; Alier, M. Tools Interoperability for Learning Management Systems (book chapter); IGI Global. doi:10.4018/978-1-5225-0905-9.ch002.

- Graf, S.; Kinshuk, D.; Liu, T.-C. Supporting Teachers in Identifying Students’ Learning Styles in Learning Management Systems: An Automatic Student Modelling Approach. Educational Technology & Society 2009, 12, 3–14.

- Guei, H.; Wei, T.-H.; Wu, I.-C. 2048-like games for teaching reinforcement learning. ICGA Journal 2020, 42, 1–24.

- Guo, K.; Zhong, Y.; Li, D.; Chu, S. Effects of chatbot-assisted in-class debates on students’ argumentation skills and task motivation. Computers & Education 2023, 203.

- Heilman, M.; Collins-Thompson, K.; Callan, J.; Eskenazi, M. Classroom success of an intelligent tutoring system for lexical practice and reading comprehension. 2006, paper 1325.

- Koraishi, O. Teaching English in the Age of AI: Embracing ChatGPT to Optimize EFL Materials and Assessment. Language Education and Technology 2023, 3, 1. Available online: https://langedutech.com/letjournal/index.php/let/article/view/48.

- Luckin, R.; Holmes, W. Intelligence Unleashed: An argument for AI in Education. 2016.

- Marwan, S.; Gao, G.; Fisk, S.; Price, T.W.; Barnes, T. Adaptive Immediate Feedback Can Improve Novice Programming Engagement and Intention to Persist in Computer Science. Proceedings of the 2020 ACM Conference on International Computing Education Research 2020, 194–203.

- Marzuki; Widiati, U.; Rusdin, D.; Darwin. The impact of AI writing tools on the content and organization of students’ writing: EFL teachers’ perspective. Cogent Education 2023, 10.

- Muñoz, S.; Gayoso, G.; Huambo, A.; Domingo, R.; Tapia, C.; Incaluque, J.; Nacional, U.; Villarreal, F.; Cielo, J.; Cajamarca, R.; Enrique, J.; Reyes Acevedo, J.; Victor, H.; Huaranga Rivera, H.; Luis, J.; Pongo, O. Examining the Impacts of ChatGPT on Student Motivation and Engagement. Przestrzeń Społeczna (Social Space) 2023, 23.

- Peláez-Sánchez, I.C.; Velarde-Camaqui, D.; Glasserman-Morales, L.D. The impact of large language models on higher education: Exploring the connection between AI and Education 4.0. Frontiers in Education 2024, 9, 1392091.

- Ramírez-Montoya, M.S.; Castillo-Martínez, I.M.; Sanabria-Z, J.; Miranda, J. Complex Thinking in the Framework of Education 4.0 and Open Innovation—A Systematic Literature Review. Journal of Open Innovation: Technology, Market, and Complexity 2022, 8, 4.

- Reynolds, L.; McDonell, K. Prompt Programming for Large Language Models: Beyond the Few-Shot Paradigm. Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems 2021, 1–7.

- Ruwe, T.; Mayweg-Paus, E. Embracing LLM Feedback: The role of feedback providers and provider information for feedback effectiveness. Frontiers in Education 2024, 9.

- Shermis, M.D.; Burstein, J. (Eds.) Handbook of Automated Essay Evaluation: Current Applications and New Directions; Routledge, 2013.

- Watson, W. An Argument for clarity: What are Learning Management Systems, what are they not, and what should they become. TechTrends 2007, 51, 28–34.

- Xu, W.; Ouyang, F. A systematic review of AI role in the educational system based on a proposed conceptual framework. Education and Information Technologies 2022, 27, 4195–4223.

- Zimmerman, B.J. Investigating self-regulation and motivation: Historical background, methodological developments, and future prospects. American Educational Research Journal 2008, 45, 166–183.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).