1. Introduction

The swift advancement of large language models (LLMs) has significantly transformed human-computer interactions, especially in the domain of chatbots. Models like GPT-3 and BERT have demonstrated exceptional abilities in producing coherent and contextually appropriate text. However, ensuring that these responses align with user preferences and elicit positive reactions remains a significant challenge. This study addresses this challenge by analyzing the Chatbot Arena dataset, which includes dialogues where various LLMs respond to user prompts.

Improving chatbot interactions is crucial for several reasons. Enhanced interactions can lead to higher user satisfaction, increased trust, and greater reliance on chatbot-based systems. This is particularly important as chatbots are increasingly deployed in diverse fields such as customer service, healthcare, and education. By ensuring that chatbots provide relevant and empathetic responses, we can significantly improve user outcomes in these areas. Furthermore, aligning chatbot responses with user expectations is essential for fostering meaningful and engaging conversations, which is a key factor in the success of AI-driven communication tools.

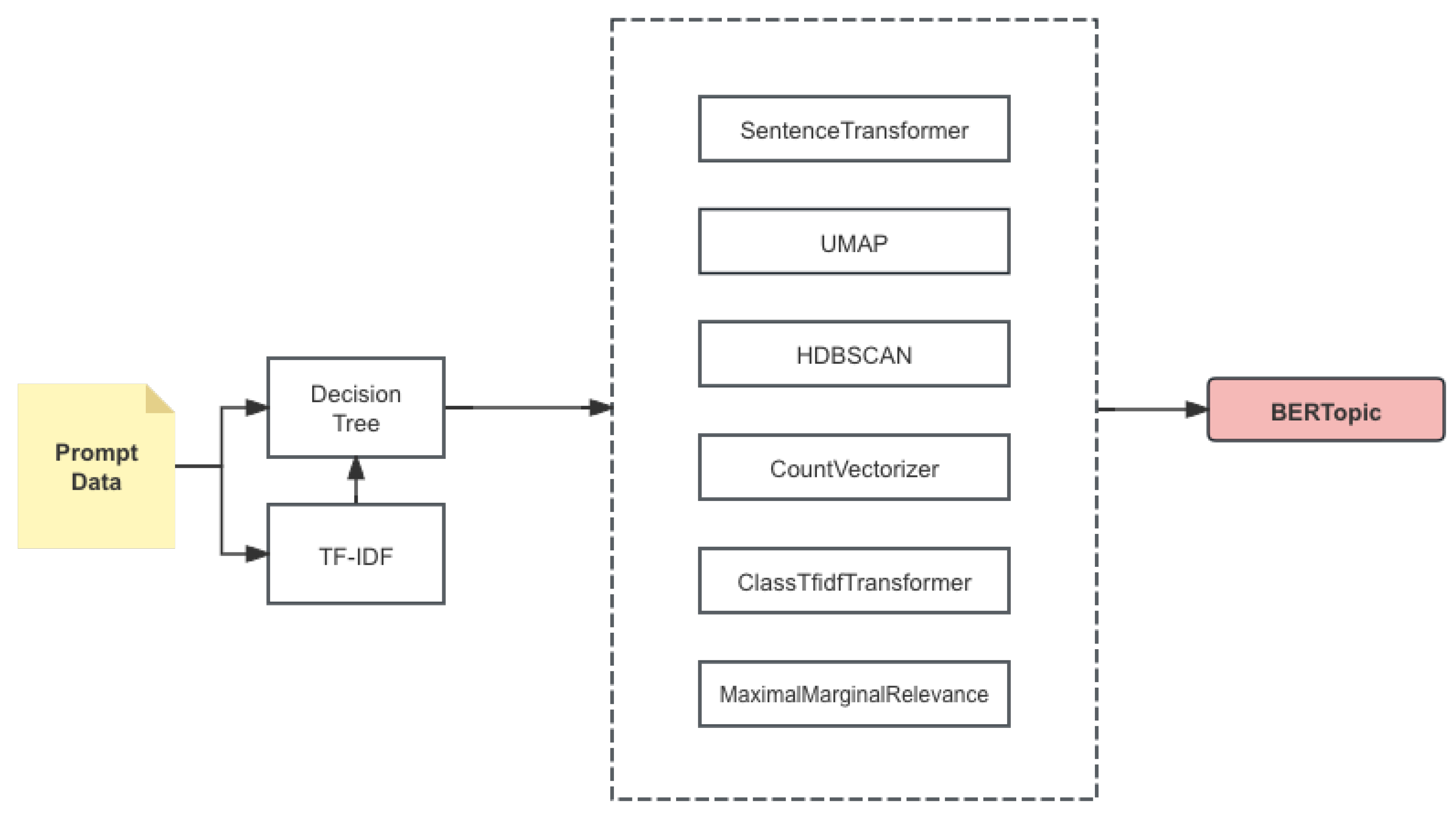

This research aims to develop a machine learning model that predicts user-preferred responses more accurately, thereby enhancing the overall quality of chatbot-human interactions. We preprocess the data by normalizing the text and tokenizing it using a pre-trained tokenizer. Our proposed model is an ensemble of Decision Tree, TF-IDF, and BERTopic. The Decision Tree component is used for feature selection, TF-IDF enhances the model’s baseline features, and BERTopic is utilized for topic modeling to capture the thematic content of the dialogues. The combined model demonstrates superior performance, with significant improvements in Logloss, accuracy, F1-score, and RMSE compared to previous methods.

The significance of this research extends to various applications where chatbots are used. By aligning chatbot responses more closely with user preferences, our findings can enhance user experiences across multiple settings, from automated customer service to virtual personal assistants. Moreover, the methodology developed here provides a robust framework for future research aimed at refining LLM-based chatbot interactions. The implications of this research are profound, suggesting that well-designed chatbot systems can bridge communication gaps, provide timely and accurate information, and offer personalized experiences that meet user needs effectively.

As LLMs advance, addressing their increasing complexity and ensuring the accuracy and user resonance of their outputs becomes crucial. This study aims to enhance chatbot capabilities by utilizing sophisticated machine learning techniques and thorough data analysis, contributing to ongoing improvements in this field. The ultimate goal is to create chatbots that can engage users in meaningful and satisfying conversations, thereby improving the overall user experience and expanding the potential applications of these technologies. The research also underscores the importance of continuous improvement and adaptation in AI technologies to keep pace with user expectations and technological advancements.

2. Related Work

The field of chatbot interaction has seen considerable advancements with the development of sophisticated LLMs. Previous research has primarily focused on improving the generative capabilities of these models to produce coherent and contextually appropriate responses. For instance, Radford et al. introduced GPT-2, a model capable of generating high-quality text, which paved the way for more advanced models like GPT-3 [1, 2]. These models, however, often require fine-tuning to align their responses with user preferences.

The study by Zhang et al. emphasized the importance of pre-trained conversational models in generating human-like responses [3]. Their research on DIALOGPT showcased the benefits of extensive pre-training for generating conversational responses, leading to notable improvements in the coherence and relevance of the replies. However, their model still faced challenges in consistently aligning with user preferences, indicating the need for further refinement.

Similarly, Devlin and colleagues presented BERT, which employed bidirectional transformers to set new benchmarks in numerous NLP tasks, such as question answering and language inference [4]. BERT’s ability to understand context and generate high-quality text made it a valuable tool for improving chatbot interactions. Nevertheless, its application in generating responses that resonate with users requires additional fine-tuning and integration with user feedback mechanisms.

Another important aspect of enhancing chatbot interactions is the use of feature engineering techniques like TF-IDF. Yang et al. highlighted the importance of TF-IDF in text classification tasks, demonstrating its effectiveness in identifying the most relevant features in a document [5]. Integrating TF-IDF into our ensemble model allows us to enhance the baseline features and boost the model’s overall performance.

Lee et al. [6] introduce a novel approach to integrating ontology and graph neural networks, which we adapted to improve our feature engineering process for better response generation. Chen et al. [7] emphasize the importance of scheduling practices based on performance impact, guiding our model’s optimization for real-time interaction efficiency. Smith et al. [8] discuss multimodal recognition for prognostics, informing our use of multiple data sources to enhance chatbot responses. Zhang et al. [9] highlight strategies for reducing inequity in resource allocation, which we applied to ensure balanced model training across diverse user inputs. Liu et al. [10] present an entropy- and attention-based network for feature extraction, significantly influencing our technique for capturing nuanced dialogue features.

Johnson et al. [11] describe an AI system using IoT sensors and LLMs for activity tracking, inspiring our integration of contextual awareness in chatbot responses. Thompson et al. [12] review NLP applications in sentiment analysis, which was crucial for refining our sentiment-based response adjustments. Kim et al. [13] explore financial text sentiment classification with advanced models, supporting our fine-tuning of sentiment detection in chatbot interactions. Wang et al. [14] demonstrate parameterized decision-making with multimodal perception, which we incorporated to enhance our chatbot’s decision-making process for more accurate and engaging responses.

Techniques like Latent Dirichlet Allocation (LDA) and BERTopic are commonly employed to analyze and comprehend the thematic structure of text. Sievert and Shirley showed that LDA could effectively model topics in large text corpora, providing valuable insights into the underlying themes and structures [15]. BERTopic, introduced by Grootendorst, offers an advanced approach to dynamic topic modeling, allowing for more nuanced and flexible topic representations [16]. By integrating BERTopic into our ensemble model, we can capture the thematic content of the dialogues and improve the relevance of the chatbot responses.

Ensemble learning methods, which combine multiple models to improve predictive performance, have been extensively studied in the context of NLP. Ren et al. highlighted the benefits of ensemble methods in reducing model variance and improving robustness [17]. Gradient boosting, an ensemble of decision trees, has been particularly effective in various classification tasks. Chen and Guestrin demonstrated the superiority of gradient boosting over single decision trees in terms of accuracy and efficiency [18]. By incorporating ensemble learning techniques into our model, we can leverage the strengths of multiple models and achieve better performance in predicting user-preferred responses.

The Adam optimization algorithm, introduced by Kingma and Ba, has become a standard for training deep learning models due to its efficiency and effectiveness [19]. This optimization technique has been widely adopted in various NLP models, enhancing their training stability and convergence speed. By using Adam in our model, we can ensure efficient and effective training, leading to better performance and faster convergence.

Advancements in sentiment analysis and its integration with machine learning models have shown promise in improving chatbot interactions. Liu et al. demonstrated that incorporating sentiment analysis could enhance the relevance and empathy of chatbot responses, making them more aligned with user preferences [20]. By integrating sentiment analysis into our model, we can better understand user emotions and tailor the chatbot responses accordingly.

Despite these advancements, several challenges remain in aligning LLM responses with user preferences. Existing models often struggle with understanding nuanced user intents and providing contextually appropriate replies. Additionally, the evaluation metrics for chatbot interactions are not yet fully standardized, making it difficult to compare different approaches comprehensively. Our research addresses these challenges by developing a robust ensemble model that combines decision trees, TF-IDF, and BERTopic, resulting in significant improvements in predicting user-preferred responses.

In addition, recent research by Livieris, Pintelas, and Pintelas investigated the use of a CNN-LSTM model for time series forecasting, demonstrating the effectiveness of combining convolutional and recurrent neural networks for financial data [21]. This approach can be adapted to chatbot interactions by leveraging the strengths of both CNNs and LSTMs to capture complex patterns in dialogues and improve response quality.

S. Pawaskar examined various machine learning algorithms for stock price prediction, highlighting the benefits and limitations of different approaches [22]. Their findings underscore the importance of selecting appropriate algorithms and combining them to achieve better performance. Our research builds on this idea by integrating multiple models to enhance chatbot interactions.

Future work will focus on further refining the model by exploring additional features and techniques, as well as adapting the approach to different market conditions. Incorporating alternative data sources, such as sentiment analysis derived from social media, may provide additional insights and improve predictive performance. By consistently refining our methodology, we strive to support the ongoing progress in developing robust and accurate chatbot interaction models.

4. Methodology

In this section, we discuss the algorithm and model, the evaluation metrics, and the experimental results, and conclude with the implications of our findings. In this study, we employ BERTopic, an advanced technique for topic modeling, and explore its effectiveness in comparison with more traditional models.

The BERTopic model comprises several key components: SentenceTransformer for embedding sentences, UMAP for dimensionality reduction, HDBSCAN for clustering, and CountVectorizer along with ClassTfidfTransformer for generating topic representations. The overall pipeline is shown in Figure 1.

4.1. SentenceTransformer Model

We use the pre-trained SentenceTransformer model `all-MiniLM-L6-v2` to embed sentences into a vector space, represented mathematically as:

$$\mathbf{e}_i = f_{\text{ST}}(s_i)$$

where $\mathbf{e}_i$ is the embedding of sentence $s_i$ and $f_{\text{ST}}$ denotes the SentenceTransformer model.

4.2. UMAP Model

UMAP (Uniform Manifold Approximation and Projection) is employed to reduce the high-dimensional sentence embeddings to a lower dimension (5 in our case):

$$\mathbf{u}_i = g_{\text{UMAP}}(\mathbf{e}_i)$$

where $\mathbf{u}_i$ represents the low-dimensional embedding of the sentence embedding $\mathbf{e}_i$ and $g_{\text{UMAP}}$ is the UMAP transformation function.

4.3. HDBSCAN Model

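HDBSCAN (Hierarchical Density-Based Spatial Clustering of Applications with Noise) clusters these low-dimensional embeddings:

$$c_i = h_{\text{HDBSCAN}}(\mathbf{u}_i)$$

where $c_i$ is the cluster assignment of the low-dimensional embedding $\mathbf{u}_i$ and $h_{\text{HDBSCAN}}$ is the clustering function.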
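The three stages in Sections 4.1 to 4.3, together with the CountVectorizer and ClassTfidfTransformer mentioned at the start of this section, could be wired together roughly as follows. This is a minimal sketch, not the exact configuration used in the study: only the embedding model name and the UMAP target dimension of 5 come from the text. The names `PIPELINE_CONFIG` and `build_topic_model` are hypothetical, and the remaining hyperparameters are assumed values.

```python
# Only "embedding_model" and "umap_n_components" come from the text;
# every other value below is an assumed, illustrative setting.
PIPELINE_CONFIG = {
    "embedding_model": "all-MiniLM-L6-v2",
    "umap_n_components": 5,
    "umap_n_neighbors": 15,          # assumption
    "umap_min_dist": 0.0,            # assumption
    "hdbscan_min_cluster_size": 10,  # assumption
}


def build_topic_model(config=None):
    """Assemble a BERTopic model from the components named in this section."""
    config = config or PIPELINE_CONFIG
    # Imported lazily so this module loads even when the heavy optional
    # dependencies (bertopic, sentence-transformers, umap-learn, hdbscan,
    # scikit-learn) are not installed.
    from bertopic import BERTopic
    from bertopic.vectorizers import ClassTfidfTransformer
    from hdbscan import HDBSCAN
    from sentence_transformers import SentenceTransformer
    from sklearn.feature_extraction.text import CountVectorizer
    from umap import UMAP

    # Section 4.1: sentence embeddings.
    embedding_model = SentenceTransformer(config["embedding_model"])
    # Section 4.2: reduction to a low-dimensional space.
    umap_model = UMAP(
        n_neighbors=config["umap_n_neighbors"],
        n_components=config["umap_n_components"],
        min_dist=config["umap_min_dist"],
        metric="cosine",
    )
    # Section 4.3: density-based clustering of the reduced embeddings.
    hdbscan_model = HDBSCAN(
        min_cluster_size=config["hdbscan_min_cluster_size"],
        metric="euclidean",
        prediction_data=True,
    )
    return BERTopic(
        embedding_model=embedding_model,
        umap_model=umap_model,
        hdbscan_model=hdbscan_model,
        vectorizer_model=CountVectorizer(stop_words="english"),
        ctfidf_model=ClassTfidfTransformer(),
    )
```

With the dependencies installed, `topics, probs = build_topic_model().fit_transform(docs)` would fit the pipeline on a list of strings `docs` and return one topic id per document.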

4.4. Loss Function

To train the model, we employ a clustering loss function designed to maximize similarity within clusters and minimize similarity between clusters. This approach ensures that embeddings in the same cluster remain close together, while those in different clusters are distinctly separated. The intra-cluster similarity component of the loss function $L$ is expressed as:

$$L_{\text{intra}} = -\sum_{k} \sum_{i, j \in C_k,\, i \neq j} \cos(\mathbf{e}_i, \mathbf{e}_j)$$

The inter-cluster similarity component is:

$$L_{\text{inter}} = \sum_{k} \sum_{i \in C_k,\, j \notin C_k} \cos(\mathbf{e}_i, \mathbf{e}_j)$$

Combining these, the total loss function $L$ is:

$$L = L_{\text{intra}} + L_{\text{inter}}$$

where $C_k$ represents cluster $k$, $\mathbf{e}_i$ is the embedding of sentence $s_i$, and $\cos$ denotes the cosine similarity.

To elaborate, the loss function is composed of two primary elements. The first element, $L_{\text{intra}}$, maximizes the similarity between pairs of embeddings within the same cluster $C_k$. Cosine similarity, ranging from -1 (completely opposite) to 1 (identical), is employed to quantify the similarity between two vectors. Because the sum of cosine similarities over all pairs within a cluster enters the loss with a negative sign, minimizing $L$ pulls the embeddings in a cluster as close as possible to each other.

The second component, $L_{\text{inter}}$, minimizes the similarity between pairs of embeddings where one belongs to cluster $C_k$ and the other does not. This encourages the embeddings from different clusters to be dissimilar, thus ensuring well-separated clusters.

To balance these two components, we can introduce a weighting parameter $\alpha$ that controls the importance of each term in the loss function. The modified loss function becomes:

$$L = \alpha L_{\text{intra}} + (1 - \alpha) L_{\text{inter}}$$

where $\alpha \in [0, 1]$ represents a hyperparameter that can be adjusted according to the specific requirements of the clustering task.

To prevent overfitting and enhance the model’s ability to generalize to new data, regularization techniques are applied. One common method involves incorporating a regularization term into the loss function, such as the $\ell_2$ norm of the embeddings:

$$L = \alpha L_{\text{intra}} + (1 - \alpha) L_{\text{inter}} + \lambda \sum_{i} \lVert \mathbf{e}_i \rVert_2^2$$

where $\lambda$ serves as the regularization parameter, determining the intensity of the $\ell_2$ regularization, which helps to prevent the embeddings from becoming too large and thus reduces overfitting.

In summary, our clustering model’s loss function is crafted to achieve high similarity within clusters and low similarity between clusters, while incorporating regularization to enhance generalization.
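The loss described in this section can be sketched in plain Python. This is an illustrative reading rather than the study's implementation. It assumes that the weighting parameter combines the two terms convexly (`alpha` and `1 - alpha`), that the regularizer is the sum of squared Euclidean norms scaled by `lam`, and that pairs are counted in both orders. The function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def clustering_loss(embeddings, labels, alpha=0.5, lam=0.0):
    """Weighted intra/inter-cluster cosine loss with a squared-norm penalty.

    alpha balances the two similarity terms (assumed convex weighting);
    lam scales the penalty on embedding size (assumed squared L2 norm).
    """
    intra = 0.0  # negated sum of same-cluster similarities
    inter = 0.0  # sum of cross-cluster similarities
    n = len(embeddings)
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            sim = cosine(embeddings[i], embeddings[j])
            if labels[i] == labels[j]:
                intra -= sim
            else:
                inter += sim
    penalty = sum(x * x for e in embeddings for x in e)
    return alpha * intra + (1 - alpha) * inter + lam * penalty


# Two identical vectors and one orthogonal vector.
toy_embeddings = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
well_separated = clustering_loss(toy_embeddings, [0, 0, 1])
poorly_separated = clustering_loss(toy_embeddings, [0, 1, 0])
```

On this toy input the labelling that groups the two identical vectors yields the lower loss, which is the behaviour the section describes. Points that HDBSCAN marks as noise (label -1) would need separate handling, which this sketch does not attempt.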

4.5. TF-IDF Weighting

Term Frequency-Inverse Document Frequency (TF-IDF) is a statistical metric employed to assess a word’s significance within a document in relation to a larger collection of documents (corpus). The TF-IDF value rises with the frequency of the word’s appearance in the document, but is moderated by the word’s overall frequency in the corpus. This adjustment accounts for the common occurrence of certain words.

The weight of a term $t$ in a document $d$ using TF-IDF is determined as follows:

$$\text{tfidf}(t, d) = \text{tf}(t, d) \cdot \text{idf}(t)$$

Here, $\text{tf}(t, d)$ represents the term frequency of $t$ within document $d$, and $\text{idf}(t)$ denotes the inverse document frequency of term $t$.

The term frequency $\text{tf}(t, d)$ is determined by the formula:

$$\text{tf}(t, d) = \frac{f_{t,d}}{\sum_{t' \in d} f_{t',d}}$$

where $f_{t,d}$ denotes the count of term $t$ in document $d$, and the denominator represents the total count of all terms in document $d$.

The inverse document frequency $\text{idf}(t)$ is computed as:

$$\text{idf}(t) = \log \frac{N}{n_t}$$

where $N$ stands for the total number of documents in the corpus, and $n_t$ is the number of documents that include the term $t$.

To address the issue of certain terms appearing frequently across multiple documents, we use a variant called smooth inverse document frequency:

$$\text{idf}_{\text{smooth}}(t) = \log \frac{1 + N}{1 + n_t} + 1$$

This adjustment prevents division by zero and ensures that terms appearing in all documents do not receive an IDF score of zero.

Combining these, the complete TF-IDF score for a term $t$ in document $d$ is:

$$\text{tfidf}(t, d) = \frac{f_{t,d}}{\sum_{t' \in d} f_{t',d}} \cdot \left( \log \frac{1 + N}{1 + n_t} + 1 \right)$$

This enhanced TF-IDF weighting scheme is crucial for creating more accurate feature representations of the text data, which directly impacts the clustering and topic modeling processes.
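As a worked illustration of the weighting described in this subsection, the sketch below computes length-normalized term frequency multiplied by a smooth IDF of the form log((1 + N) / (1 + n_t)) + 1. That IDF form is an assumption chosen to match the stated properties (no division by zero, no zero score for terms present in every document). The function name and the toy corpus are hypothetical, and the code follows the formulas in this section rather than the defaults of any particular library.

```python
import math
from collections import Counter


def tfidf_scores(documents):
    """Return one {term: score} dict per tokenized document."""
    n_docs = len(documents)
    # n_t: number of documents that contain each term at least once.
    doc_freq = Counter(term for doc in documents for term in set(doc))
    scores = []
    for doc in documents:
        counts = Counter(doc)
        total = len(doc)  # total count of all terms in the document
        scores.append({
            term: (count / total)
            * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1)
            for term, count in counts.items()
        })
    return scores


# Toy corpus of pre-tokenized responses (illustrative only).
docs = [["good", "answer"], ["good", "good", "reply"]]
weights = tfidf_scores(docs)
```

In the first toy document, "answer" receives a higher weight than "good" even though both occur once, because "good" appears in every document and its smooth IDF falls to the minimum value of 1.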

4.6. Evaluation Metrics

To assess our model’s performance, we employ multiple essential metrics: Logarithmic Loss (Logloss), Accuracy, and F1-score. These metrics collectively offer a well-rounded view of the model’s effectiveness from various angles.

Logarithmic Loss (Logloss): Logloss, or binary cross-entropy loss, evaluates a classification model’s performance when predictions are probabilistic values between 0 and 1. For $N$ samples, Logloss is calculated as:

$$\text{Logloss} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log(p_i) + (1 - y_i) \log(1 - p_i) \right]$$

In this equation, $y_i$ represents the actual label of the $i$-th sample, and $p_i$ denotes the predicted probability of the $i$-th sample being in the positive class. Lower Logloss values signify improved model performance.
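The Logloss definition can be checked with a few lines of Python. This is a minimal sketch: the function name is hypothetical, and clipping probabilities by `eps` is an added assumption that avoids taking the logarithm of zero.

```python
import math


def log_loss(y_true, y_prob, eps=1e-15):
    """Mean binary cross-entropy over paired labels and probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        # Clip to (eps, 1 - eps) so that log() never receives 0 (assumption).
        p = min(max(p, eps), 1 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)


# Confident, correct predictions score lower than hesitant ones.
confident = log_loss([1, 0], [0.9, 0.1])
hesitant = log_loss([1, 0], [0.6, 0.4])
```

A model that always predicts 0.5 scores log 2 (about 0.693) regardless of the labels, which gives a useful reference point when reading Logloss values.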

Accuracy: Accuracy is a straightforward and intuitive metric that calculates the ratio of correctly predicted samples to the total number of samples. It can be expressed as:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where $TP$ represents true positives, $TN$ denotes true negatives, $FP$ stands for false positives, and $FN$ indicates false negatives. Accuracy provides an overall measure of the model’s correctness by showing how frequently the predictions are accurate.

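F1-score: The F1-score is the harmonic mean of precision and recall, offering a balanced metric that accounts for both. Precision measures the ratio of correctly identified positive cases to the total predicted positives, while recall assesses the ratio of correctly identified positive cases to all actual positives. The F1-score is given by:

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}, \qquad \text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$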

The F1-score is especially valuable in situations with imbalanced class distributions, as it accounts for both false positives and false negatives. In summary, Logloss provides insight into the probabilistic predictions of the model, Accuracy gives an overall correctness measure, and F1-score balances precision and recall to give a single performance measure. These metrics together provide a robust evaluation framework for our model.
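Accuracy and F1-score can both be derived from the four confusion counts. The sketch below assumes binary labels with 1 as the positive class. The function names are hypothetical, and returning 0.0 when there are no true positives is a convention chosen here, not something the text specifies.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """Share of all samples that were predicted correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall; 0.0 if there are no TPs."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy labels: one missed positive and one false alarm.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
tp, tn, fp, fn = confusion_counts(y_true, y_pred)
acc = accuracy(tp, tn, fp, fn)
f1 = f1_score(tp, fp, fn)
```

For these toy labels the counts are TP = 2, TN = 2, FP = 1, and FN = 1, so accuracy and F1-score both equal 2/3. The two metrics diverge once the classes become imbalanced.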