1. Introduction

Every year, traffic collisions claim the lives of approximately

million people. One of the main risk factors is the non-use of helmets, seat belts, and child restraint systems; seat belts in particular can reduce the risk of death among vehicle occupants by up to 50%. With respect to the driver, one of the main causes of accidents is fatigue and drowsiness. According to the 2022 statistical report of road accidents issued by SUTRAN [1,2], road accidents amounted to 5449 cases nationwide, of which 47% were caused by a crash, 45% by carelessness, 5% by a hit-and-run, and 1% by a rollover. Given this level of accidents, monitoring of the driver’s condition was also considered, complementing the passenger monitoring system. In this article, the integration of a monitoring and data collection system in long-distance bus service is proposed in order to reduce the fatality risk factor in the event of an accident. The main innovation lies in the design, modification, and adaptation of existing codes, combining artificial vision techniques and monitoring sensors to create an effective and accessible system that improves safety in interprovincial transportation.

Several systems have been proposed to monitor seat belt use and to improve driver safety, but they have not been combined into a single system.

Passenger Sensor Systems:

- QRC sensors: measure the load fluctuation caused by biological signals such as respiration and pulse waves to determine whether a person or luggage is on the seat [3].

- Wireless inductive sensors: detect seat occupancy by means of compression between an inductor and a ferrite surface. They measure the load fluctuation caused by biological signals such as respiration and pulse waves; in addition to indicating whether there is a load on the sensor, this helps to determine whether the load is a person or luggage, and even whether it is an adult or a child [4].

- Systems with WiFi modules: use the seat belt buckle to power a WiFi module that sends its IP address to a smartphone application [5].

- EL20 sensors: measure the force exerted by a person and are used in automotive testing [6].

Driver state monitoring:

- Drowsiness and syncope detection in drivers using computer vision: uses neural networks to monitor the driver’s face, detecting signs of fatigue and drowsiness with the dlib library [7].

- Drowsiness detection and notification-based system for car drivers: uses the face tracking SDK in conjunction with a Kinect system and webcams for drowsiness detection [8].

- Real-time blink detection: uses facial landmarks to detect blinks, improving reliability and reducing processing time [9].

- Facial expression recognition: uses Haar cascades and facial landmarks to detect blinks and yawns, with processing on a Raspberry Pi 4 [10].

The article is organized as follows. Section 1 presents the introduction. Section 2 presents the related works. Section 3 describes the proposed methodology, including materials and methods. Section 4 details the results and discussion. Section 5 concludes and summarizes the achievement of the article's objectives.

2. Related works

The following works are related to this article. In [11], the authors note that recent progress in autonomous vehicle research and development has led to increasingly widespread testing of fully autonomous vehicles on public roads, where complex traffic scenarios arise. Along with these vehicles, partially autonomous vehicles, manually driven vehicles, pedestrians, cyclists, and some animals can be present on the road, and autonomous vehicles must react to all of them.

The article in [12] proposes a synergistic, integrated, holistic framework (FAGVinSCF) for fully autonomous ground vehicles (FAGVs) and smart cities (SC). In it, all the components of SC and FAGVs, including recent and impending technological advancements, are shaped to smooth the transition from today’s driving society to a next-generation driverless society and to make self-driving technology a harmonious part of cities with sustainable urban development.

The work in [13] considers a widely studied problem in which autonomous vehicles arriving at an intersection adjust their speeds to traverse it as rapidly as possible while avoiding collisions. The authors propose a coordination control algorithm, assuming stochastic models for the arrival times of the vehicles.

The paper in [14] analyses human-on-the-loop (HOTL), real-time, haptic, delay-sensitive teleoperation with fully autonomous self-driving vehicles (FA-SDVs) in terms of human-vehicle teamwork, by establishing two similar remote parallel worlds.

The research in [15] tackles one of the most difficult issues in the realm of self-driving cars: the testing of advanced self-driving application scenarios. The study proposes a simulation testing system based on hardware-in-the-loop simulation technology.

The paper in [16] proposes a learning control method for a vehicle platooning system using a deep deterministic policy gradient (DDPG)-based PID. Its main contribution is automating the PID weight tuning process by formulating it as a deep reinforcement learning (DRL) problem. The longitudinal control of the vehicle platoon is divided into upper and lower control structures, and the upper-level controller, based on the DDPG algorithm, adjusts the current PID controller parameters.

In [17], a personalized behavior learning system (PBLS) is proposed to improve the performance of the traditional motion planner. The system is based on the neural reinforcement learning (NRL) technique, which can learn from human drivers online using on-board sensing information and achieves human-like longitudinal speed control (LSC) through the learning from demonstration (LFD) paradigm.

This article aims to create a system that uses a Raspberry Pi 4 and an Arduino NANO to collect and store monitoring data. A conventional camera and MediaPipe are used to monitor the driver’s drowsiness, while commercially available sensors and a sensor of our own design are used to monitor the passengers. RS485 communication is used to store driver and passenger data. The simulations carried out show a high level of reliability in detecting driver drowsiness under specific conditions and correct operation of the passenger monitoring sensors. It is therefore possible to conclude that the proposed system is feasible and can be implemented to produce experimental results in real tests.

3. Methodology

This section presents the materials and methods used.

3.1. Data Collection and Passenger Monitoring

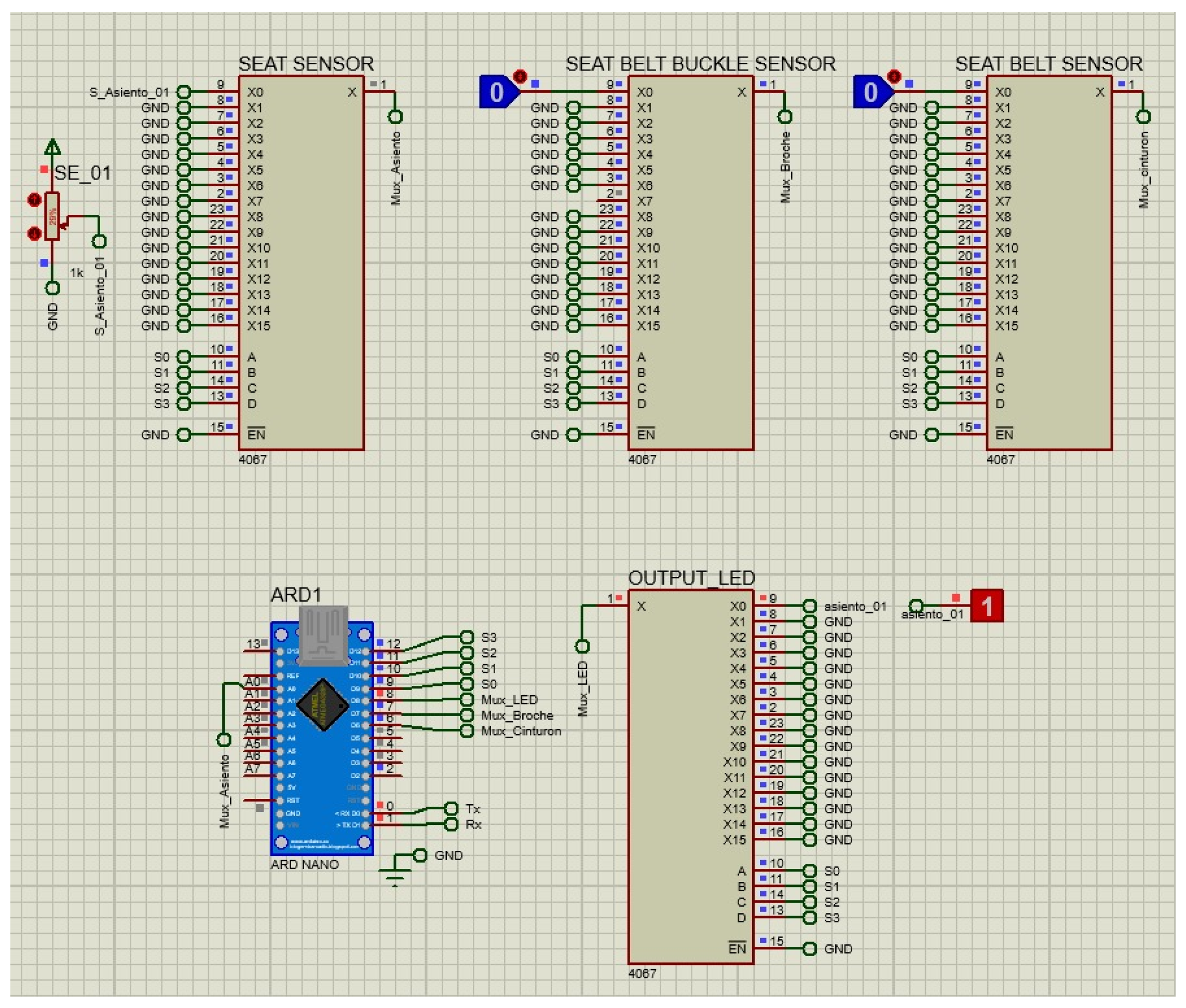

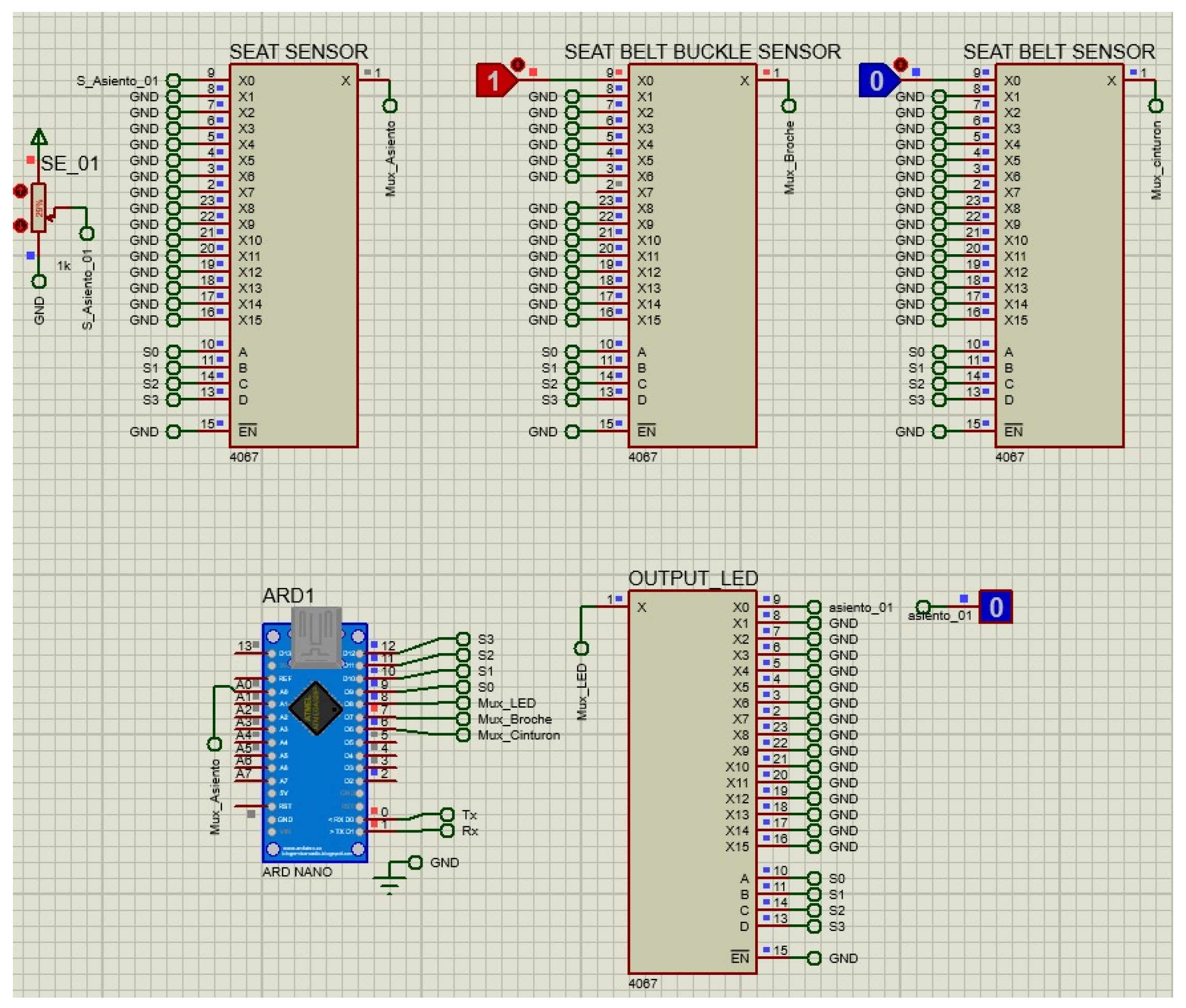

Figure 1 shows the sensors used in each seat for data collection.

A standard seat occupancy sensor [

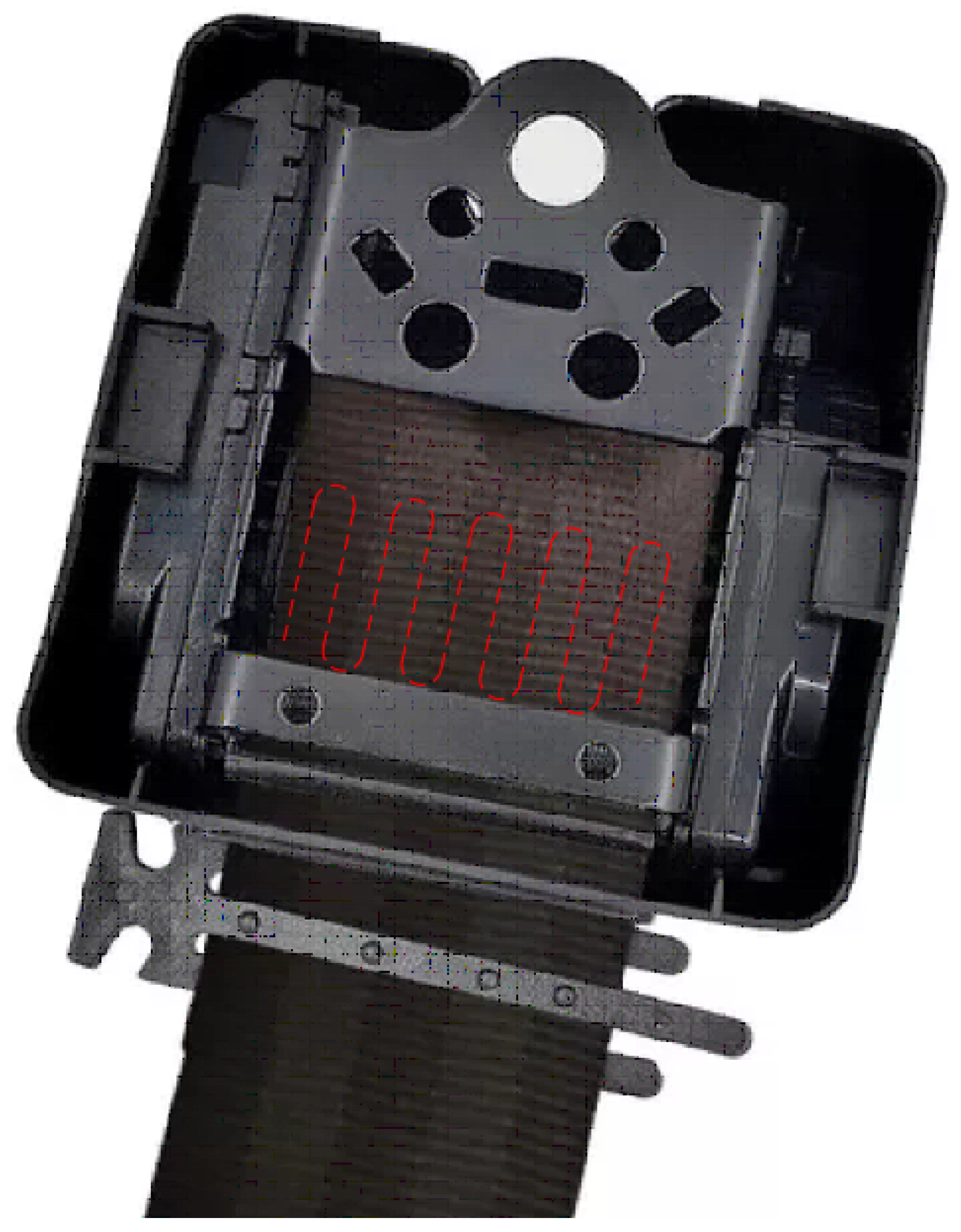

18] was used. This standard seat sensor consists of an array of electrical resistors that help us to determine the seat occupancy, generally the values that it gives us range from 0 ohms to 800 ohms without interruptions or failures and, through a range of resistive values, it can be determined if there is a person on the sensor. A magnetic type sensor which is mounted on the buckle guard commonly used in automobiles [

19], and a belt sensor which was designed for this project consisting of a normally open contact attached to the beginning of the seat belt reel and a conductive thread seam, which is located on a part of the seat belt. This conductive thread seam will be at a distance that indicates that the seat belt is buckled but is on the seat. While the seat belt is in this state (

Figure 2), the conductive thread will close the contact as shown in

Figure 3.

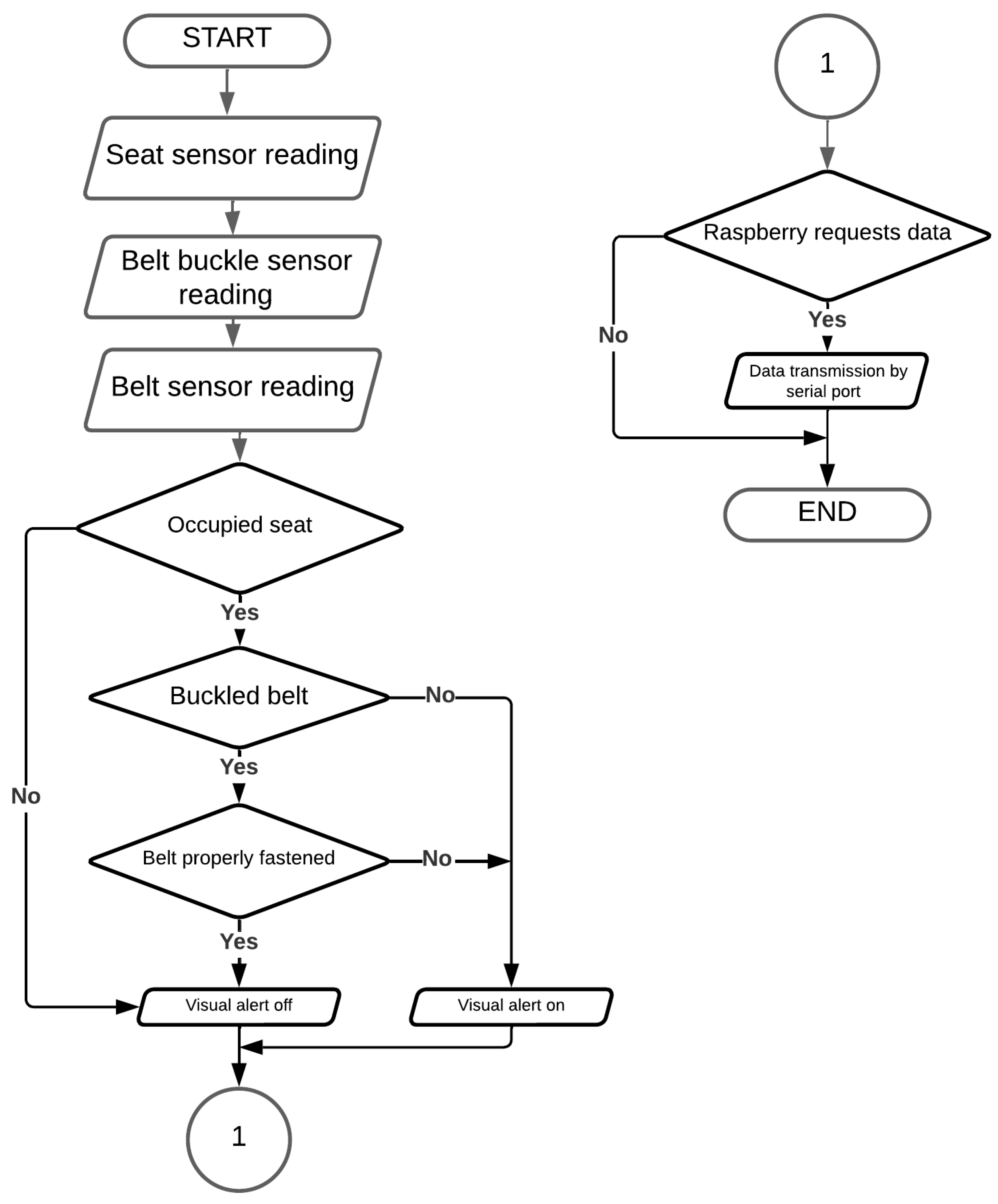

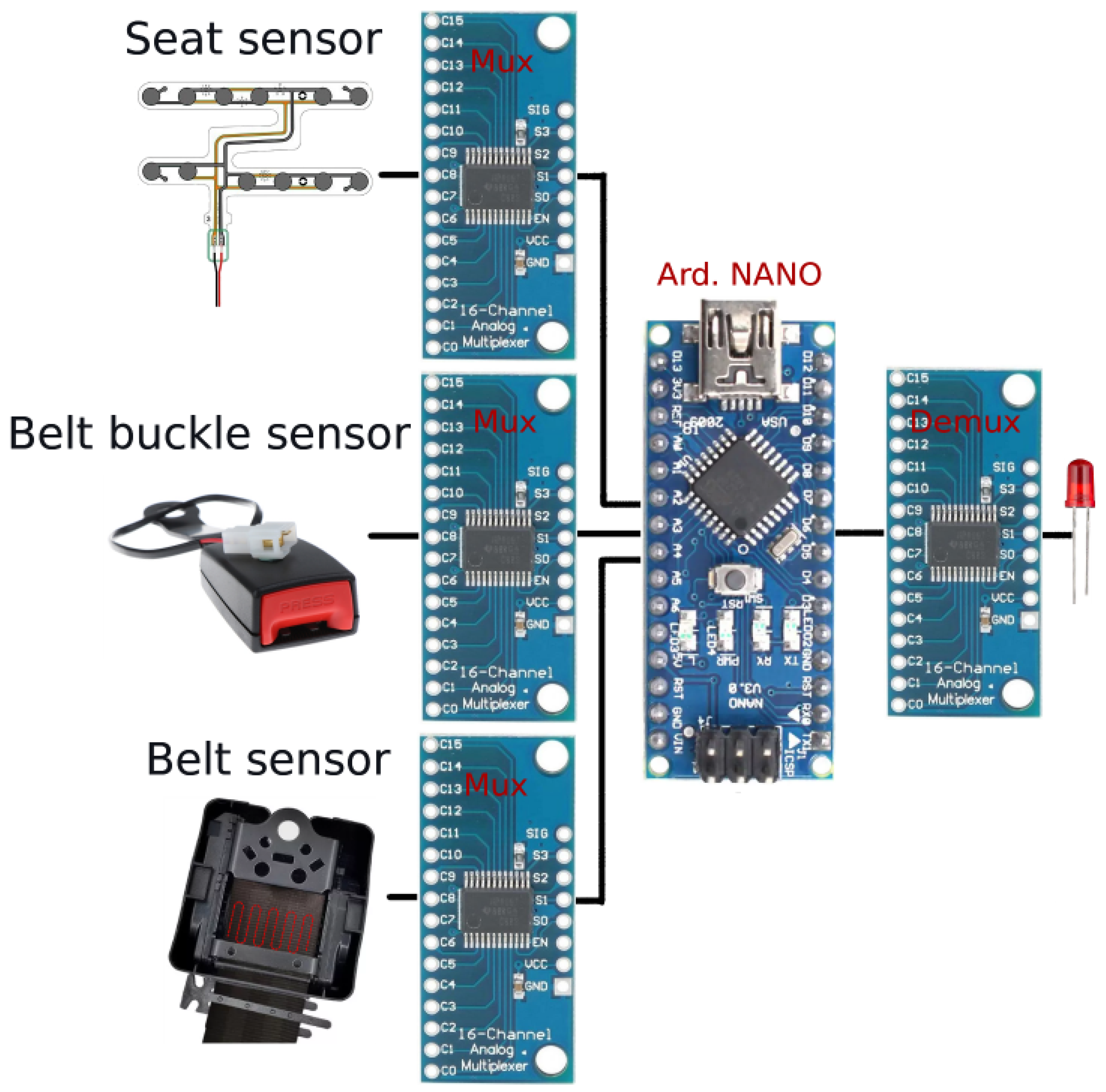

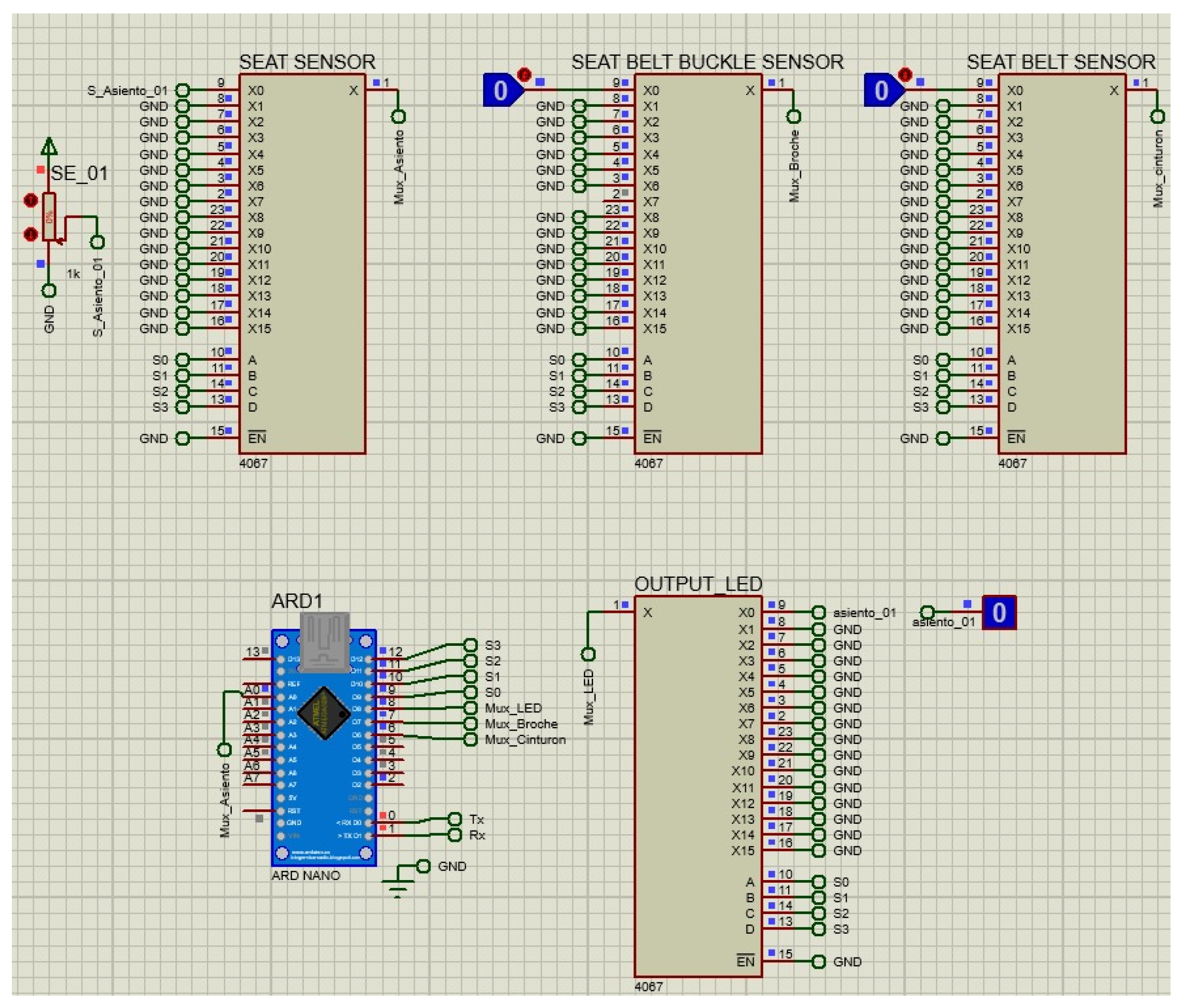

The seat-mounted sensors described above send their signals to an Arduino NANO based on the ATmega328 [21]. The Arduino then checks the serial port for a message from the Raspberry Pi; if a message is present, it sends the seat sensor data for that instant through the serial port (Figure 4).

Because the number of input signals exceeds the number of input pins available on the Arduino, CD74HC4067 multiplexers were used to expand its analog and digital inputs and outputs [22]. This allows one Arduino to monitor up to 16 seats (48 sensors). The Arduino receives digital signals (belt buckle sensor and belt sensor) and an analog signal (seat sensor). For the latter, the resistive value range that indicates a person on the sensor is checked with an if conditional to convert the reading to a binary value. With all signals in the same format, Table 1 is developed.
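The conversion of the three seat signals into a single seat state can be sketched in Python as follows. This is a minimal illustration, not the Arduino code used in the article: the resistance limits, the names `SeatReading` and `seat_state`, and the three state labels are assumptions, and the decision rule is inferred from the sensor description rather than taken from Table 1.

```python
from dataclasses import dataclass

# Hypothetical resistance band (ohms) treated as "occupied". The article only
# states that readings span 0 to 800 ohms, so these limits are placeholders.
OCCUPIED_MIN_OHMS = 50.0
OCCUPIED_MAX_OHMS = 800.0


@dataclass(frozen=True)
class SeatReading:
    seat_resistance_ohms: float  # analog seat occupancy sensor
    buckle_closed: bool          # digital magnetic buckle sensor
    belt_on_seat: bool           # digital conductive-thread contact (closed = belt lying on seat)


def is_occupied(resistance_ohms: float) -> bool:
    """Convert the analog seat reading to a binary value with a range check."""
    return OCCUPIED_MIN_OHMS <= resistance_ohms <= OCCUPIED_MAX_OHMS


def seat_state(reading: SeatReading) -> str:
    """Classify one seat as 'empty', 'belt_ok', or 'belt_incorrect' (assumed labels)."""
    if not is_occupied(reading.seat_resistance_ohms):
        return "empty"
    # Buckled, and the belt is pulled out beyond the "lying on the seat" length.
    if reading.buckle_closed and not reading.belt_on_seat:
        return "belt_ok"
    return "belt_incorrect"
```

With these placeholder limits, a reading of 400 ohms with the buckle closed and the thread contact open is classified as correct use, while the same reading with the contact closed is classified as incorrect use.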

The result of the sensor analysis enables an Arduino output pin which, by means of a demultiplexer, sends a visual alert to the passenger in the corresponding seat.

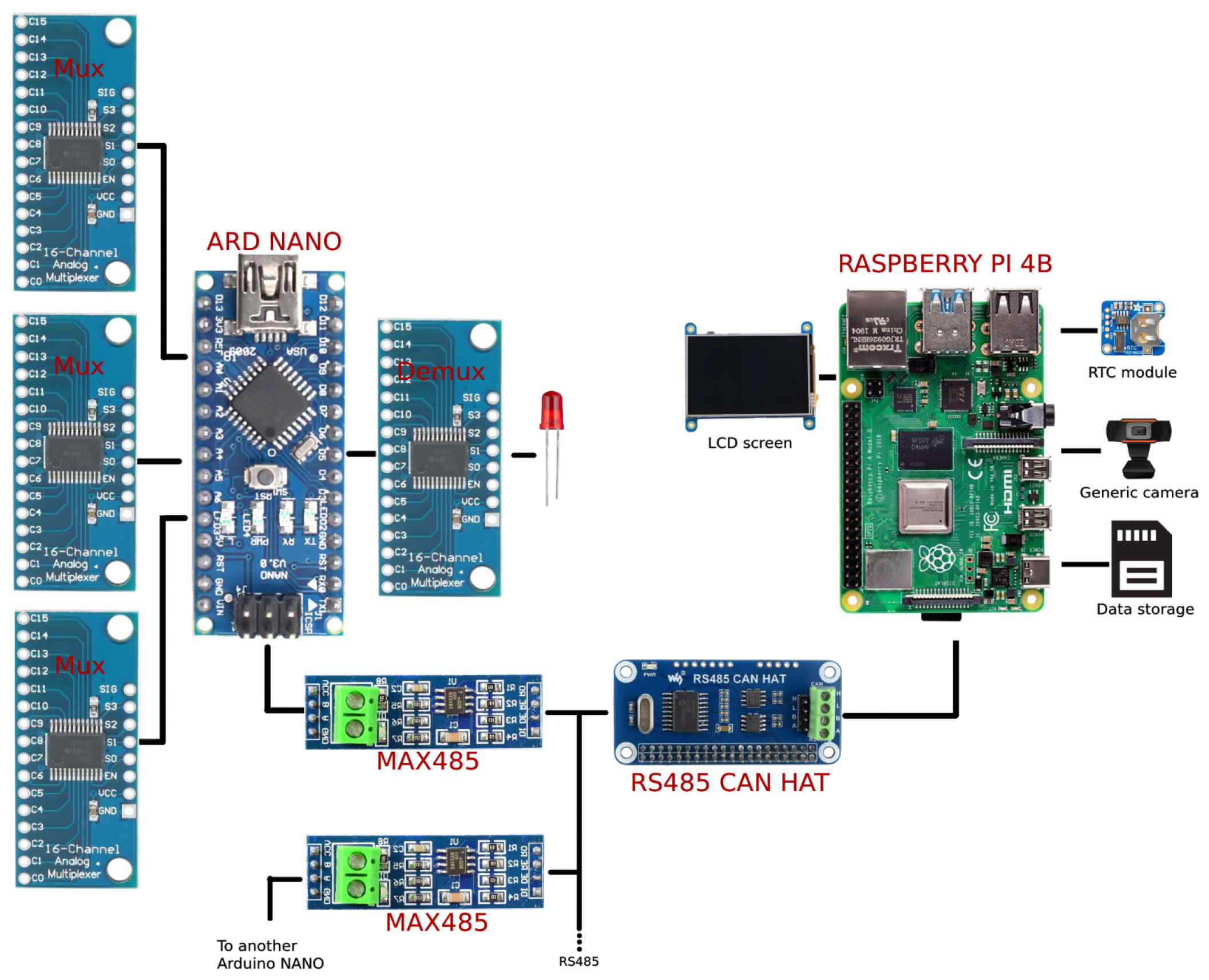

Figure 5 shows the connection of the devices to be used.

The Arduino was programmed using the open-source Arduino IDE. The Mux.h library [23] was used to manage the channels of the 74HC4067 multiplexers. This library provides a class called Mux, whose constructor simplifies the declaration of the input/output pins and the selector pins of the multiplexer/demultiplexer.
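To illustrate what selecting a multiplexer channel involves, the following Python sketch computes the four select-line levels for a 16-channel device and reproduces the sensor count quoted for one Arduino. It is not the Mux.h API; the function names are hypothetical and the code only models the addressing arithmetic.

```python
CHANNELS_PER_MUX = 16   # the CD74HC4067 is a 16-channel device
SENSORS_PER_SEAT = 3    # seat occupancy, buckle, and belt sensors


def select_bits(channel: int) -> tuple:
    """Return the (S0, S1, S2, S3) levels that address one multiplexer channel."""
    if not 0 <= channel < CHANNELS_PER_MUX:
        raise ValueError("channel must be between 0 and 15")
    # S0 is the least significant bit of the channel number.
    return tuple((channel >> bit) & 1 for bit in range(4))


def sensors_per_arduino(seats: int = CHANNELS_PER_MUX) -> int:
    """Total sensor signals when each seat contributes three sensors."""
    return seats * SENSORS_PER_SEAT
```

For example, channel 5 is addressed with S0 and S2 high and S1 and S3 low, and 16 seats with three sensors each give the 48 sensors mentioned in the text.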

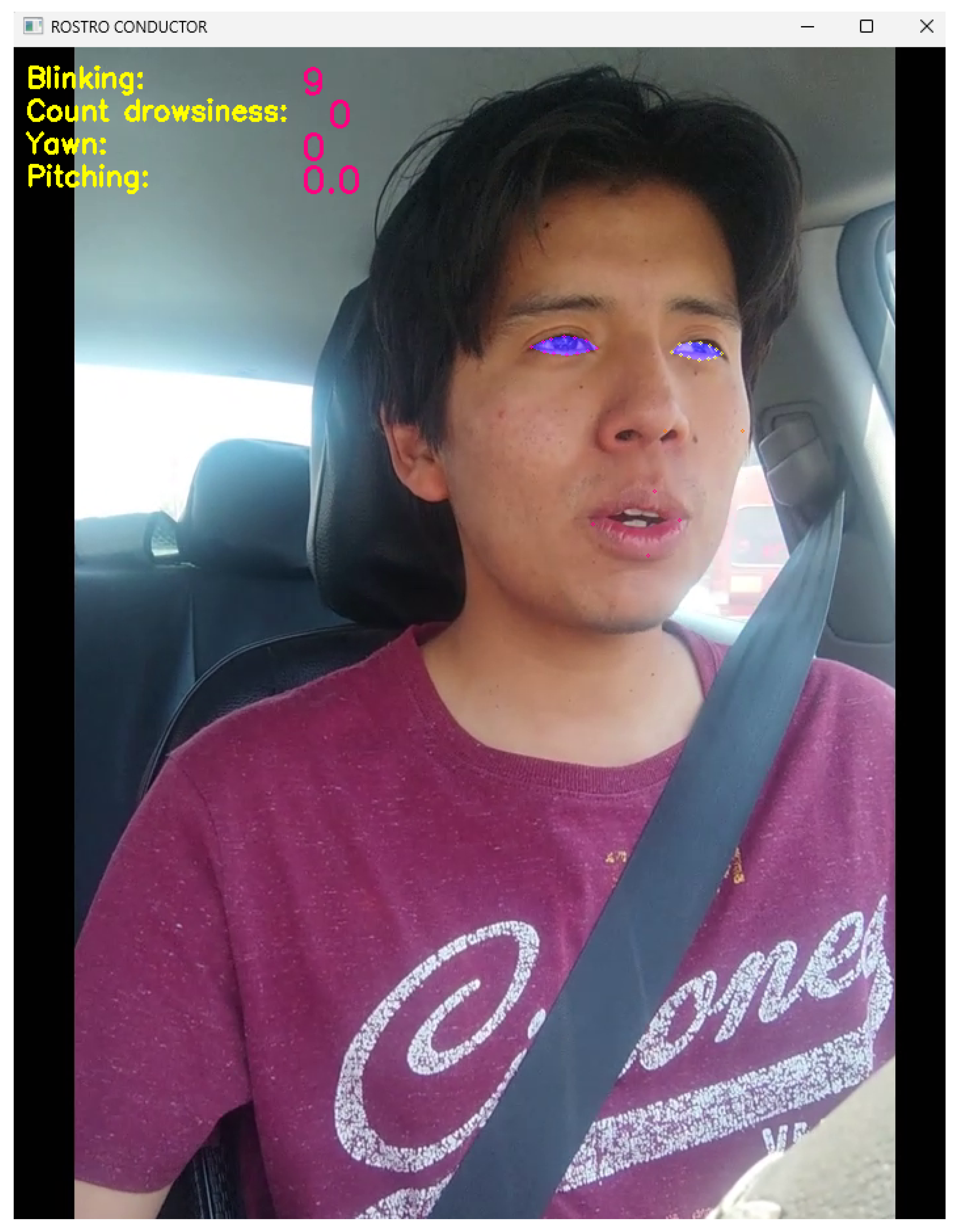

3.1.1. Data Collection and Driver Monitoring

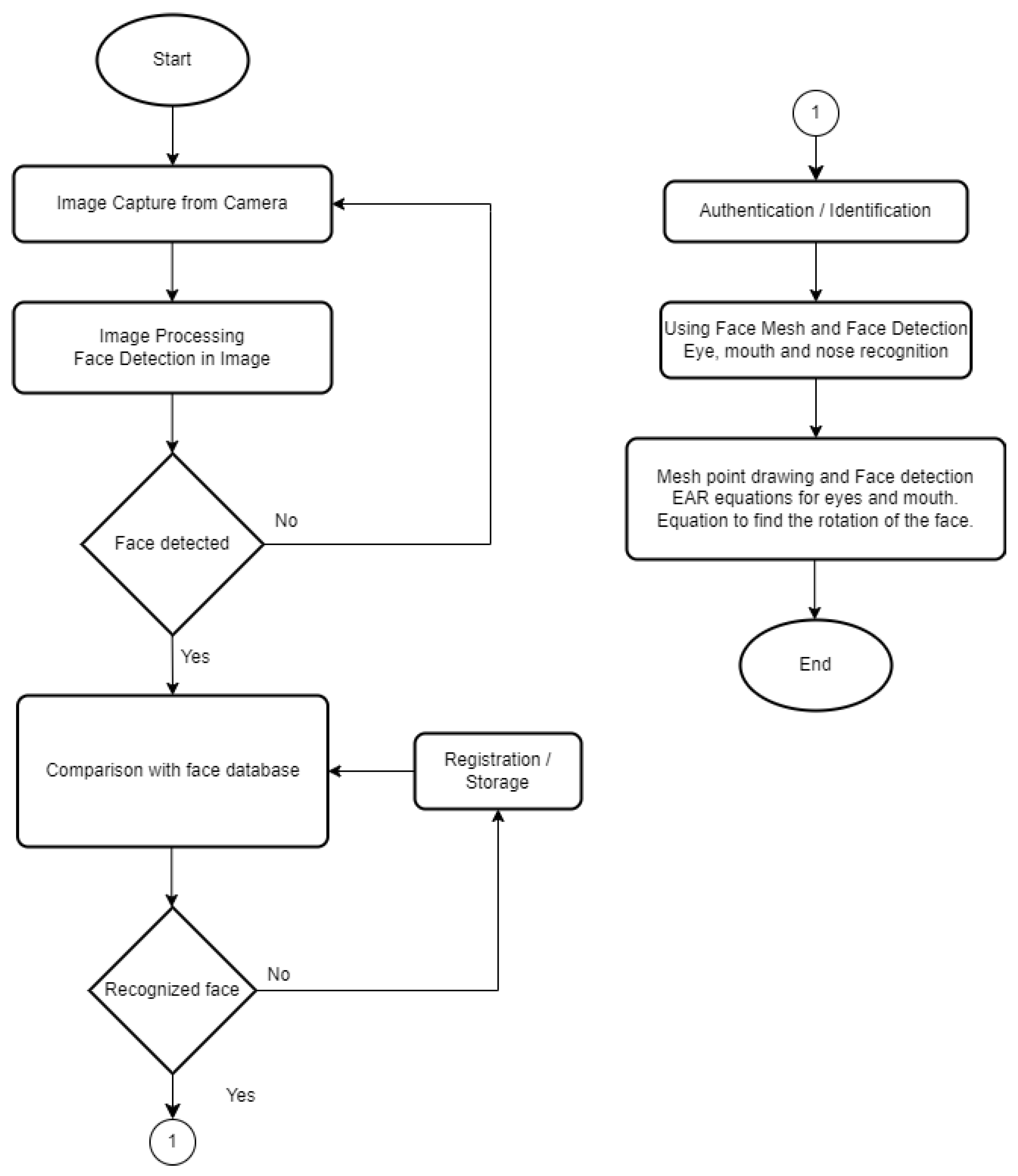

The goal was a drowsiness monitoring system based on blinking, yawning, and nodding that does not have a high computational cost or require a graphics processor, so the code was made as efficient as possible. The system was written in Python using the Visual Studio Code IDE. The workflow is shown in Figure 6.

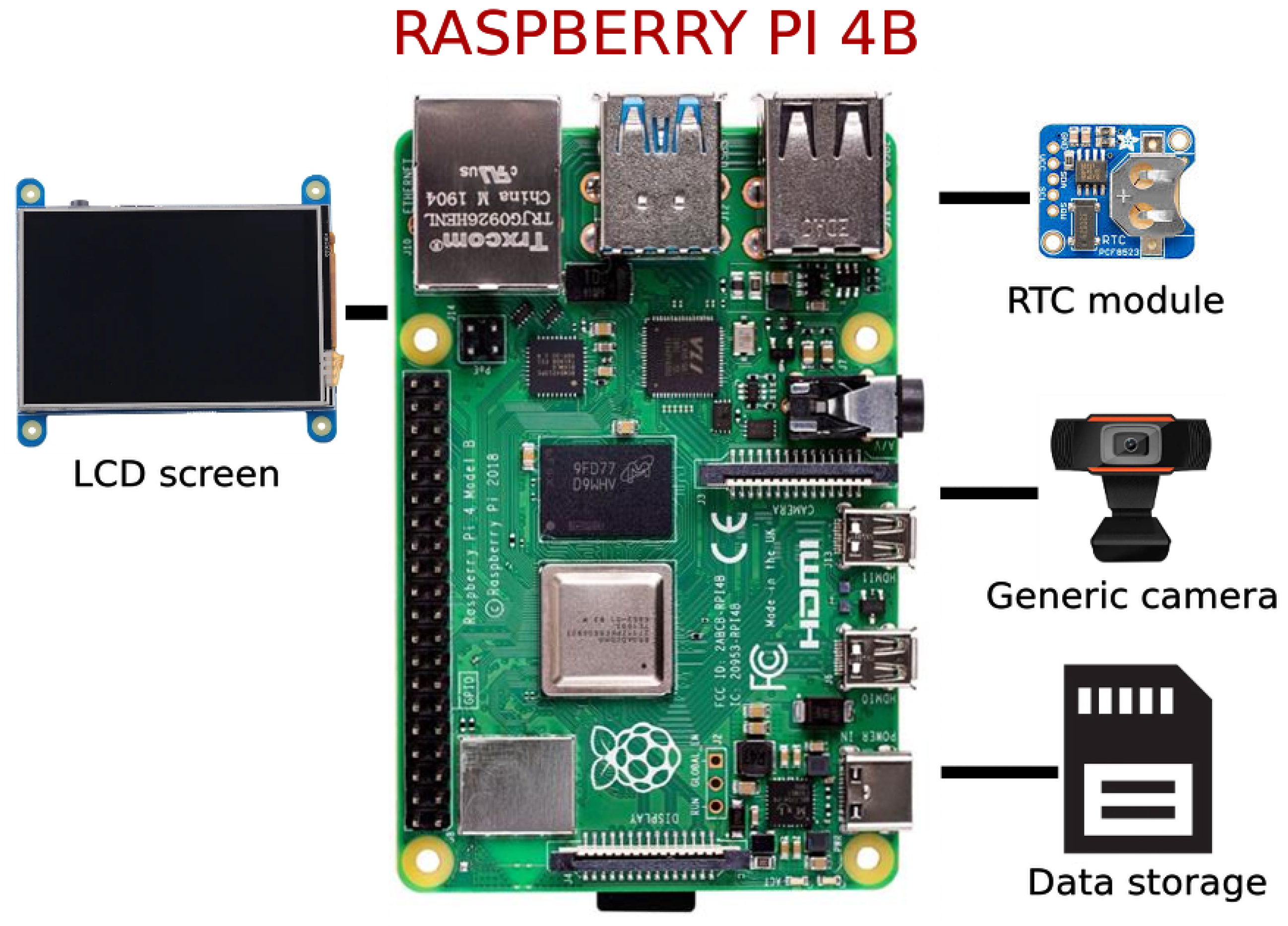

Real-time digital image processing places a heavy workload on the processor, which also serves as the central point for receiving the data sent by the Arduino. For this reason, a Raspberry Pi 4 [24] was chosen. The camera is a wired USB 2.0 model that records AVI video at FHD and 30 fps and has an integrated microphone [25]. The monitoring data must be stored with time and date. Since the Raspberry Pi obtains these values only when it has an internet connection, an RTC module [26] was added; its battery preserves the time and date when the system is de-energized. An LCD display [27] shows the status of the passengers’ seats, the drowsiness count, and the video capture in which the monitored driver can be observed. The Raspberry Pi 4 receives the information transmitted by the Arduino NANO via RS485 communication and displays it on the LCD screen (Figure 7).

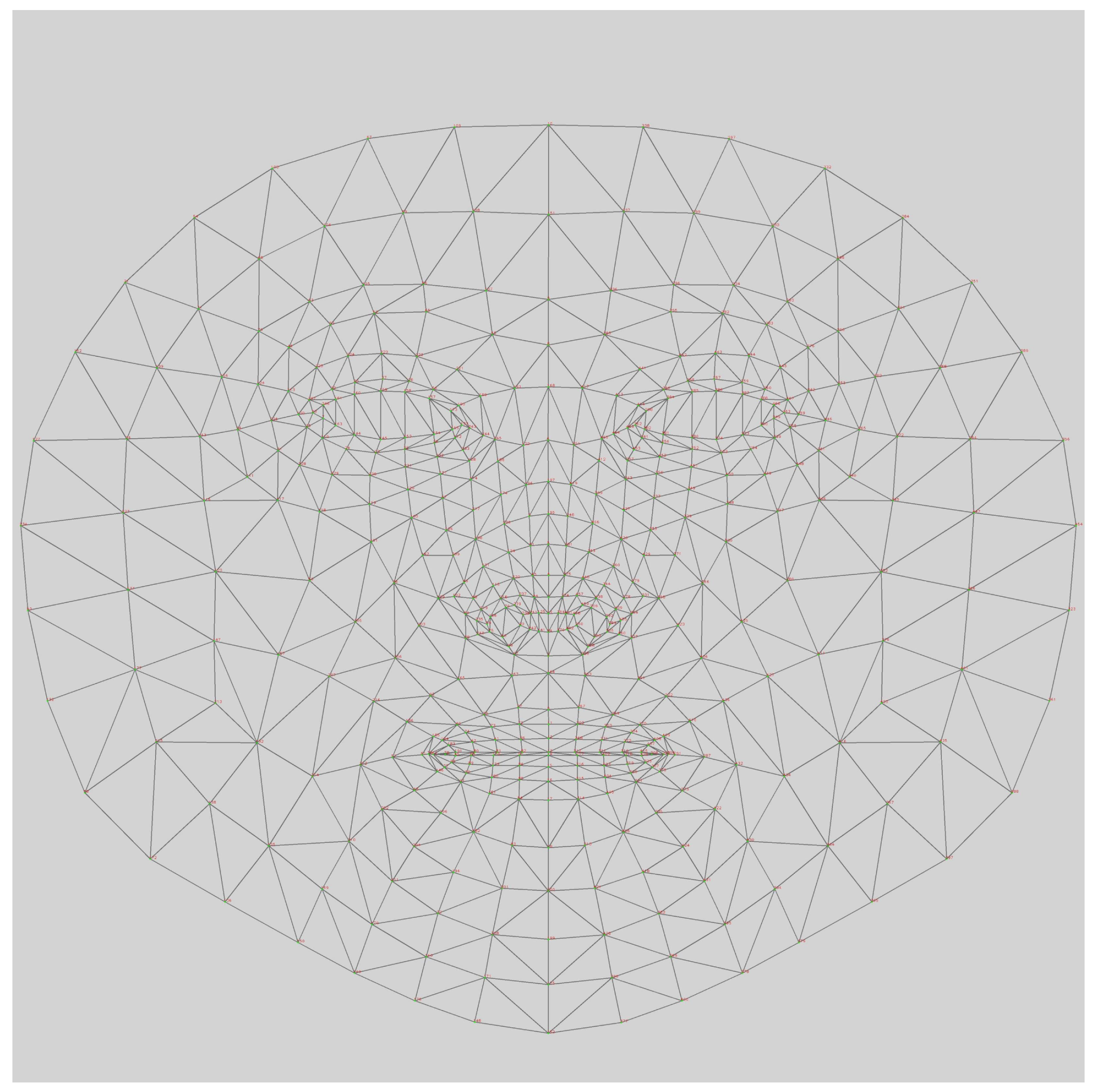

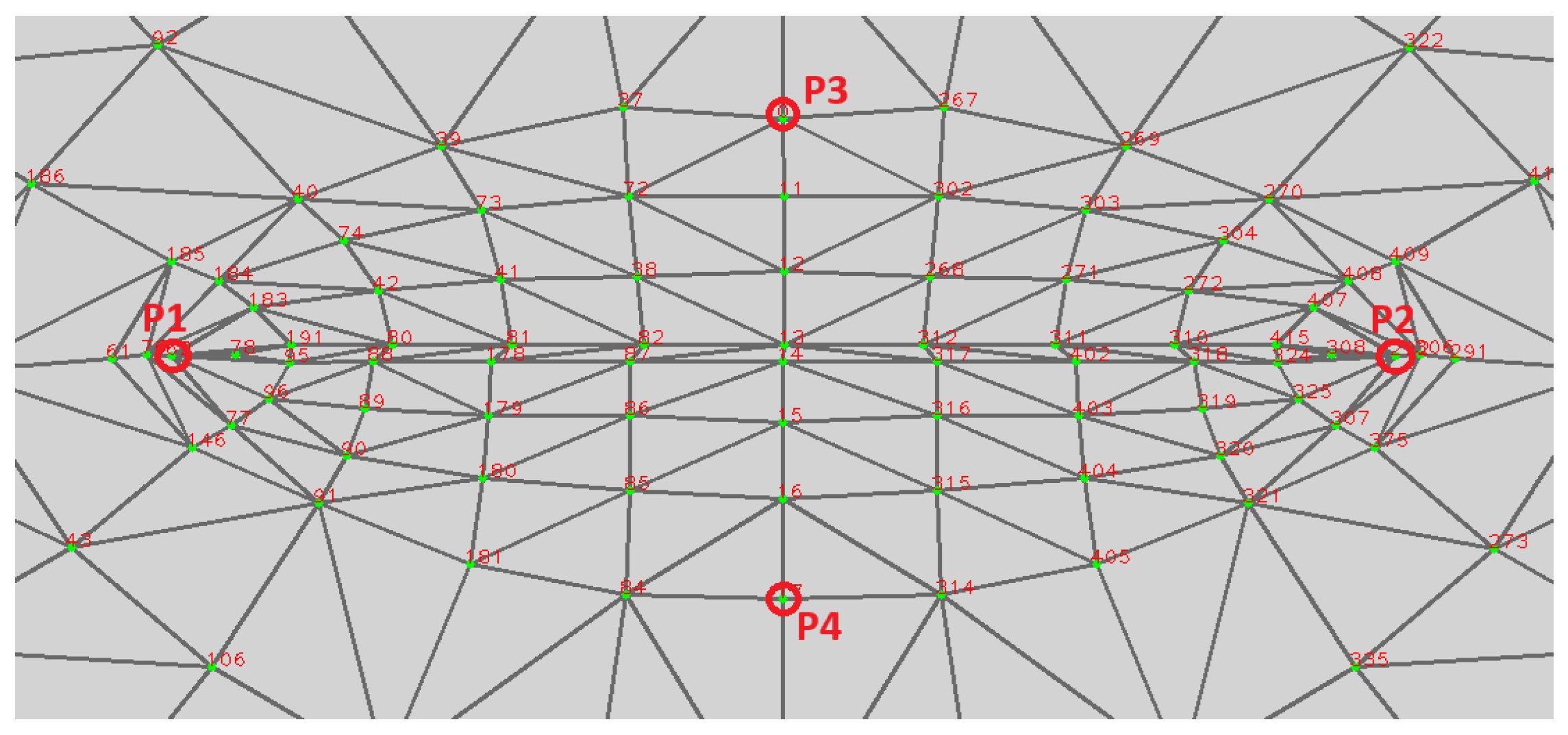

MediaPipe was used to detect facial landmarks. The MediaPipe face mesh (Figure 8) has a total of 468 facial landmarks.

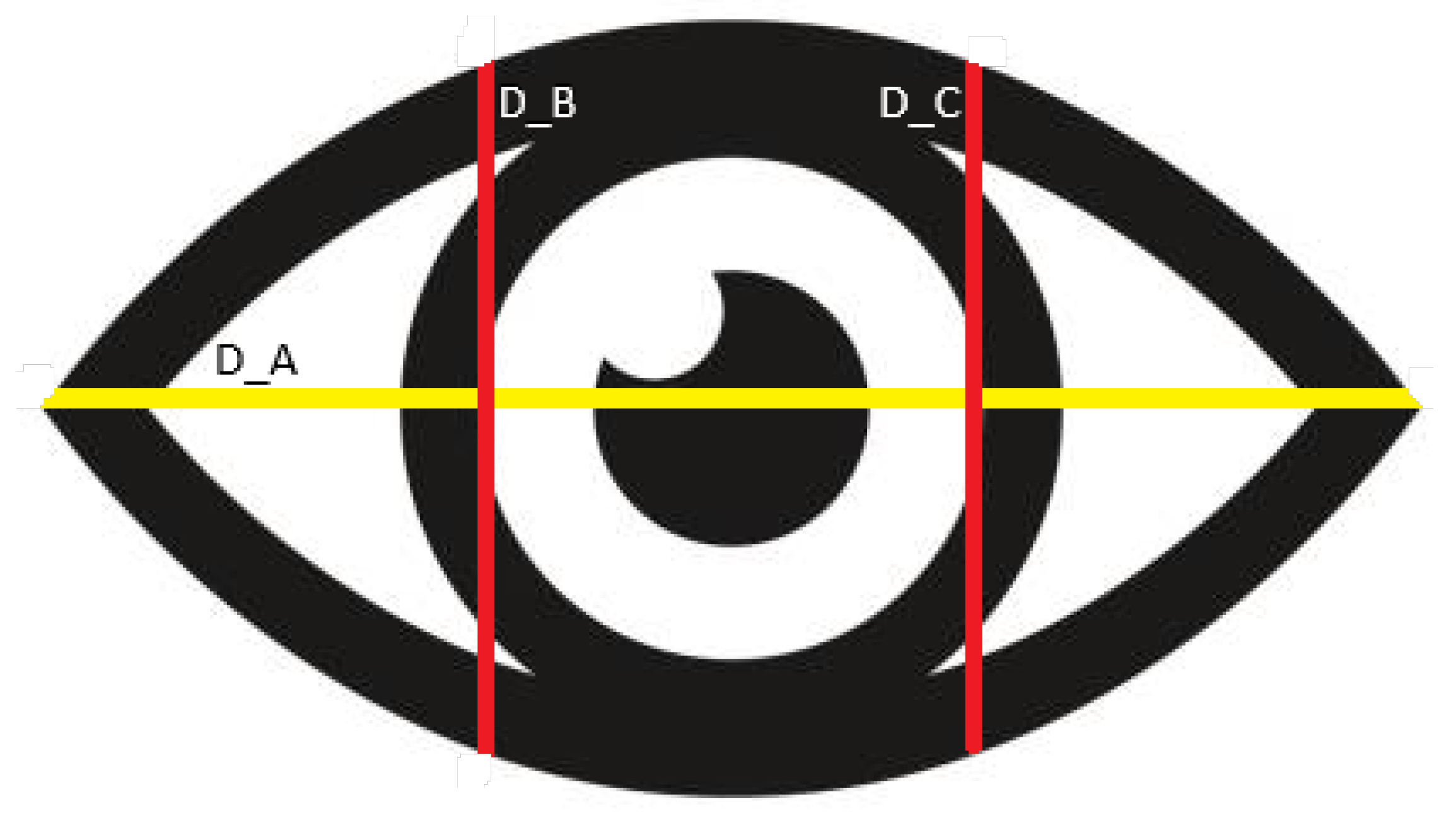

3.1.2. Monitoring of the Eyes

Eye blinking is taken into account: the blinks are counted and their duration is measured. An LSTM neural network implemented in PyTorch adjusts the EAR threshold every 5 seconds. The duration is computed from the camera frame rate. A conventional camera captures 24 to 30 frames per second, so at 30 frames per second each frame lasts 1/30 ≈ 0.033 seconds. A blink is estimated to last between 300 and 400 milliseconds; therefore, 13 of the 30 frames are taken as the threshold, which corresponds to approximately 433 ms and thus exceeds the duration of a normal blink.
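The frame arithmetic can be checked with a short Python sketch. The function names are hypothetical; the 30 fps rate and the 400 ms upper bound for a blink come from the text. Reading 13 as the first frame count that lasts longer than 400 ms is one plausible interpretation of the threshold, not a statement from the article.

```python
import math


def frames_to_ms(frames: int, fps: float) -> float:
    """Duration in milliseconds covered by a number of frames."""
    return frames * 1000.0 / fps


def first_frame_count_exceeding(duration_ms: float, fps: float) -> int:
    """Smallest number of frames whose total duration is strictly longer than duration_ms."""
    return math.floor(duration_ms * fps / 1000.0) + 1
```

At 30 fps, 12 frames last exactly 400 ms, so 13 frames (about 433 ms) is the shortest closure that cannot be a blink of at most 400 ms.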

Figure 9 explains how the EAR is obtained from the height and width of the eye, taking as a reference the equation given in [9] (Real-Time Eye Blink Detection using Facial Landmarks). Equation (1) uses distance A, distance B, and distance C. It follows that if the eye is closed or nearly closed, the EAR value is close to zero. The value varies from person to person, hence the use of the neural network. The EAR (eye aspect ratio) of both eyes is averaged and used to detect drowsiness.
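As an illustration, the eye aspect ratio of [9] is defined over six eye landmarks p1 to p6 as EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖). The Python sketch below computes it and averages both eyes. It assumes that A and B are the two vertical distances and C is the horizontal distance; that mapping, the function names, and the point ordering are assumptions, since the article's Equation (1) is not reproduced here.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six landmarks ordered p1..p6 as in Soukupova and Cech [9]."""
    p1, p2, p3, p4, p5, p6 = eye
    a = math.dist(p2, p6)  # first vertical distance (assumed to be A)
    b = math.dist(p3, p5)  # second vertical distance (assumed to be B)
    c = math.dist(p1, p4)  # horizontal distance (assumed to be C)
    if c == 0:
        raise ValueError("eye corners coincide; EAR is undefined")
    return (a + b) / (2.0 * c)


def mean_ear(left_eye: Sequence[Point], right_eye: Sequence[Point]) -> float:
    """Average the EAR of both eyes, as described in the text."""
    return (eye_aspect_ratio(left_eye) + eye_aspect_ratio(right_eye)) / 2.0
```

When all six points lie on one horizontal line (a fully closed eye), both vertical distances are zero and the function returns 0, which matches the behaviour described above.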

An alarm is activated when the eye closure reaches 13 frames, and if the closure is long enough, a record is created with the duration, date, and time of the incident.
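The frame-counting logic can be sketched as a small state machine in Python. This is an illustration under stated assumptions: the fixed EAR threshold of 0.2 is a placeholder (the article adapts the threshold with an LSTM), the class and attribute names are hypothetical, and the record stores only the duration, leaving out the date and time that the article also saves.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClosureMonitor:
    ear_threshold: float = 0.2   # placeholder; the article adjusts this value adaptively
    alarm_frames: int = 13       # frame count from the text
    fps: float = 30.0
    closed_frames: int = 0
    incidents_ms: List[float] = field(default_factory=list)

    def update(self, ear: float) -> bool:
        """Process the EAR of one frame; return True while the alarm should sound."""
        if ear < self.ear_threshold:
            self.closed_frames += 1
            return self.closed_frames >= self.alarm_frames
        # Eye reopened: record the closure if it reached the alarm length, then reset.
        if self.closed_frames >= self.alarm_frames:
            self.incidents_ms.append(self.closed_frames * 1000.0 / self.fps)
        self.closed_frames = 0
        return False
```

A short blink of a few frames resets the counter without creating a record, while a closure of 13 or more consecutive frames raises the alarm and is logged once the eye reopens.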

3.1.3. Mouth Monitoring

Figure 10 shows the mesh of points that MediaPipe uses for monitoring. After testing, it was concluded that points P1, P2, P3, and P4, which are located at the corners of the lips, provide the best tracking. This tracking is based on the angle obtained through the arctangent of the straight lines P1P2 and P3P4 [29]. The duration of the mouth opening indicates whether or not a yawn has occurred, taking 5 seconds as the estimated duration [30].
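A possible reading of this step is sketched below in Python. The article does not give the exact geometry, so treating the mouth opening as the angle between the lines P1P2 and P3P4 is an assumption, as are the function names; only the 5-second yawn duration comes from the text.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

YAWN_SECONDS = 5.0  # estimated yawn duration given in the text


def line_angle_deg(start: Point, end: Point) -> float:
    """Direction of the line start->end, from the arctangent of its slope."""
    return math.degrees(math.atan2(end[1] - start[1], end[0] - start[0]))


def opening_angle_deg(p1: Point, p2: Point, p3: Point, p4: Point) -> float:
    """Smallest angle between lines P1P2 and P3P4 (assumed mouth-opening measure)."""
    diff = abs(line_angle_deg(p1, p2) - line_angle_deg(p3, p4)) % 360.0
    return min(diff, 360.0 - diff)


def is_yawn(open_frames: int, fps: float) -> bool:
    """True when the mouth has stayed open for at least the yawn duration."""
    return open_frames / fps >= YAWN_SECONDS
```

At 30 fps, the mouth would need to stay open for 150 consecutive frames before the opening is counted as a yawn.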

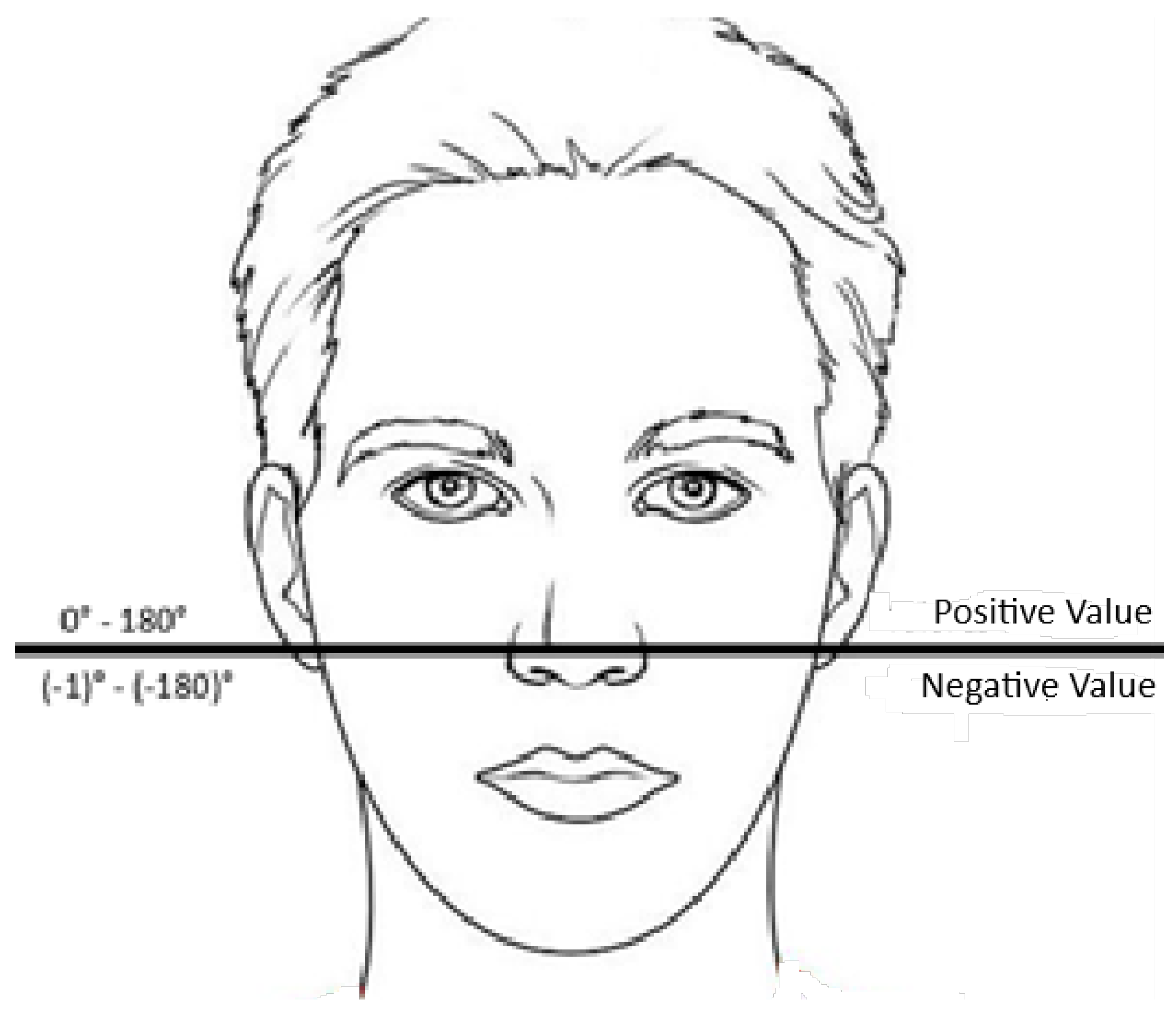

3.1.4. Pitch Monitoring

Based on the code presented by Santiago Sanchez in his YouTube video [31], a lightweight version was obtained with the features needed to determine the head orientation, and it was applied with MediaPipe. Two reference points are used: a fixed central point at the height of the nose, numbered in the mesh as point 1, and point 366, located at the side of the cheek. The arctangent of the line formed by the two points gives the result observed in Figure 11, where values above the line are positive and those below are negative. With the camera placed in a correct position, it is therefore possible to monitor microsleeps or nodding that occurs during drowsiness and tiredness. The system alerts the person in real time based on the number of frames and saves the nodding data with its duration, date, and time. It also issues an alert when a certain time elapses.
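The angle computation can be sketched in Python as follows. The landmark numbers come from the text; the function names, the sign convention for image coordinates, and the nodding limit of -15 degrees are assumptions made for illustration.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

NOSE_INDEX = 1      # landmark numbers given in the text
CHEEK_INDEX = 366


def pitch_angle_deg(nose: Point, cheek: Point) -> float:
    """Angle of the nose->cheek line; positive when the cheek point is above the nose."""
    dx = cheek[0] - nose[0]
    # Image y grows downward, so the difference is flipped to make "above" positive.
    dy = nose[1] - cheek[1]
    return math.degrees(math.atan2(dy, dx))


def is_nodding(angle_deg: float, limit_deg: float = -15.0) -> bool:
    """Flag a frame as nodding when the angle falls below a hypothetical limit."""
    return angle_deg < limit_deg
```

In practice the two points would be read from the MediaPipe landmark list at indices `NOSE_INDEX` and `CHEEK_INDEX` and scaled to pixel coordinates before calling `pitch_angle_deg`.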

3.1.5. RS485 Based Communication

RS485 is a standard communication interface. It uses a two- or three-wire cable (a data wire, an inverted data wire, and a ground wire), typically a 22 or 24 AWG twisted pair. The maximum cable length is approximately 1200 meters [32].

For RS485 communication, both devices (Raspberry Pi 4 and Arduino NANO) have a dedicated module operating in half-duplex mode, as shown in Figure 12, with the Raspberry Pi 4 acting as master. The Raspberry Pi uses an RS485 CAN HAT module, which allows it to communicate with other devices through RS485 and CAN [33]. The Arduino NANO uses the MAX485 module [34]. This communication allows the Raspberry Pi to store both the driver drowsiness monitoring data and the passenger seat belt monitoring data, and to display the latter on the LCD screen, as described in the previous section.
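The master-slave exchange can be illustrated with a pure-Python sketch that leaves out the serial hardware. The article does not specify a message format, so the poll byte, the frame layout (three comma-separated bits per seat, seats separated by semicolons, followed by a checksum), and all function names are hypothetical.

```python
from typing import List, Optional, Tuple

Seat = Tuple[int, int, int]   # (occupied, buckle closed, belt contact closed)
POLL = b"?"                   # hypothetical request byte sent by the master


def encode_frame(seats: List[Seat]) -> bytes:
    """Build the slave's reply: seat bits plus a one-byte checksum in hex."""
    body = ";".join(",".join(str(bit) for bit in seat) for seat in seats)
    checksum = sum(body.encode("ascii")) % 256
    return f"{body}*{checksum:02X}\n".encode("ascii")


def decode_frame(frame: bytes) -> List[Seat]:
    """Parse and verify a reply on the master side."""
    body, _, check = frame.decode("ascii").strip().rpartition("*")
    if sum(body.encode("ascii")) % 256 != int(check, 16):
        raise ValueError("checksum mismatch")
    return [tuple(int(bit) for bit in seat.split(",")) for seat in body.split(";")]


def slave_respond(request: bytes, seats: List[Seat]) -> Optional[bytes]:
    """Half-duplex behaviour: the slave only transmits after being polled."""
    return encode_frame(seats) if request == POLL else None
```

Because the link is half-duplex, only one side transmits at a time; in this sketch the slave stays silent unless it receives the poll, which mirrors the Arduino replying only when a message from the Raspberry Pi is present.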

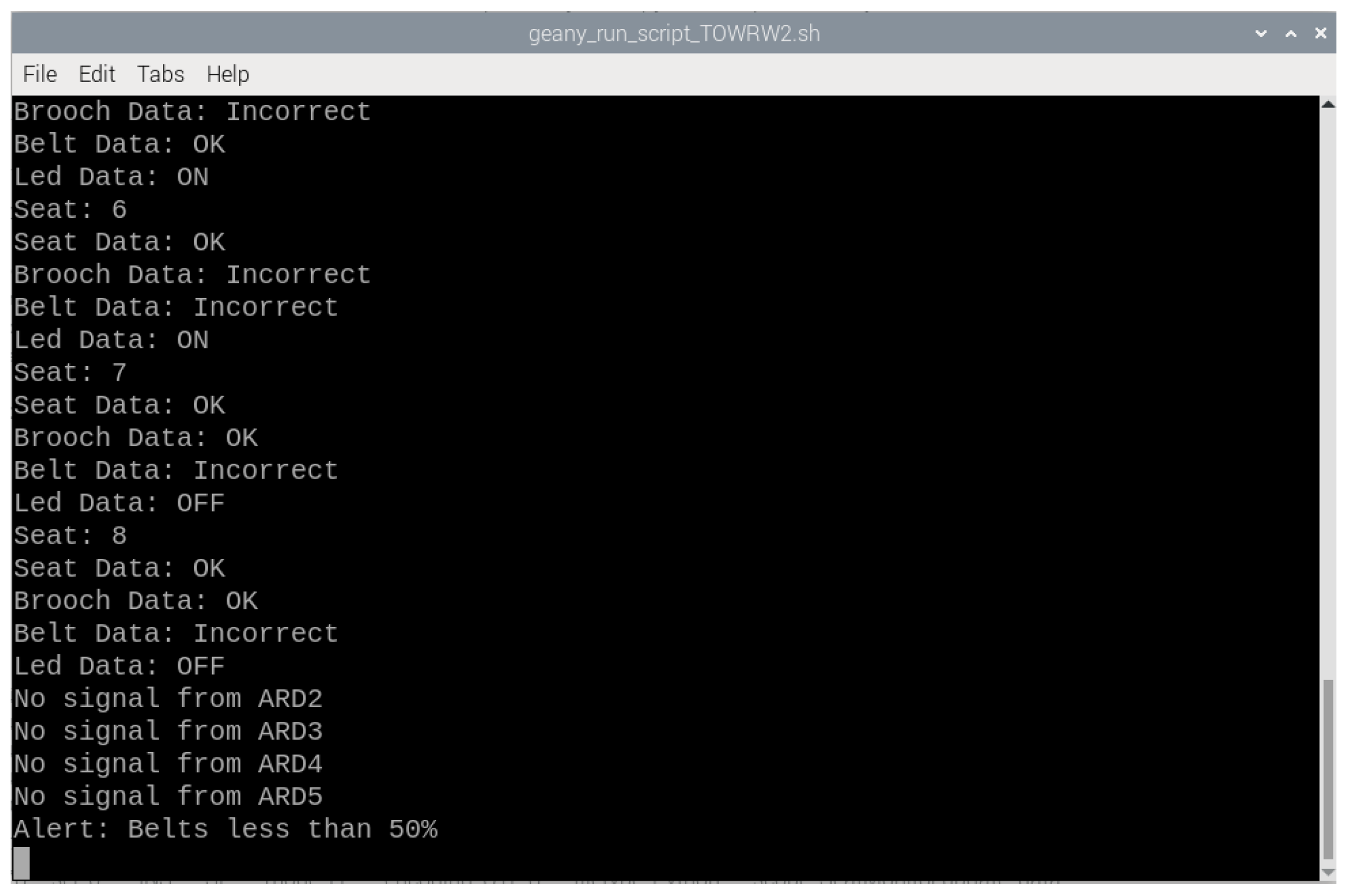

4. Results and Discussion

Following the development process, tests were carried out to verify the system. The tests were done in parts: a simulation of the passenger-oriented system in the Proteus software, and tests of the driver-oriented system on the Raspberry Pi 4. A communication test between the Arduino and the Raspberry Pi was also performed to verify that the collected data are saved correctly in a text file.

4.1. Passenger Supervision Simulation

The passenger-side system was simulated in the Proteus V8.13 electronic design automation software. Figure 13, Figure 14, and Figure 15 show the possible states of a seat. Figure 16 presents the blink, yawn, and vertical pitch detection system.

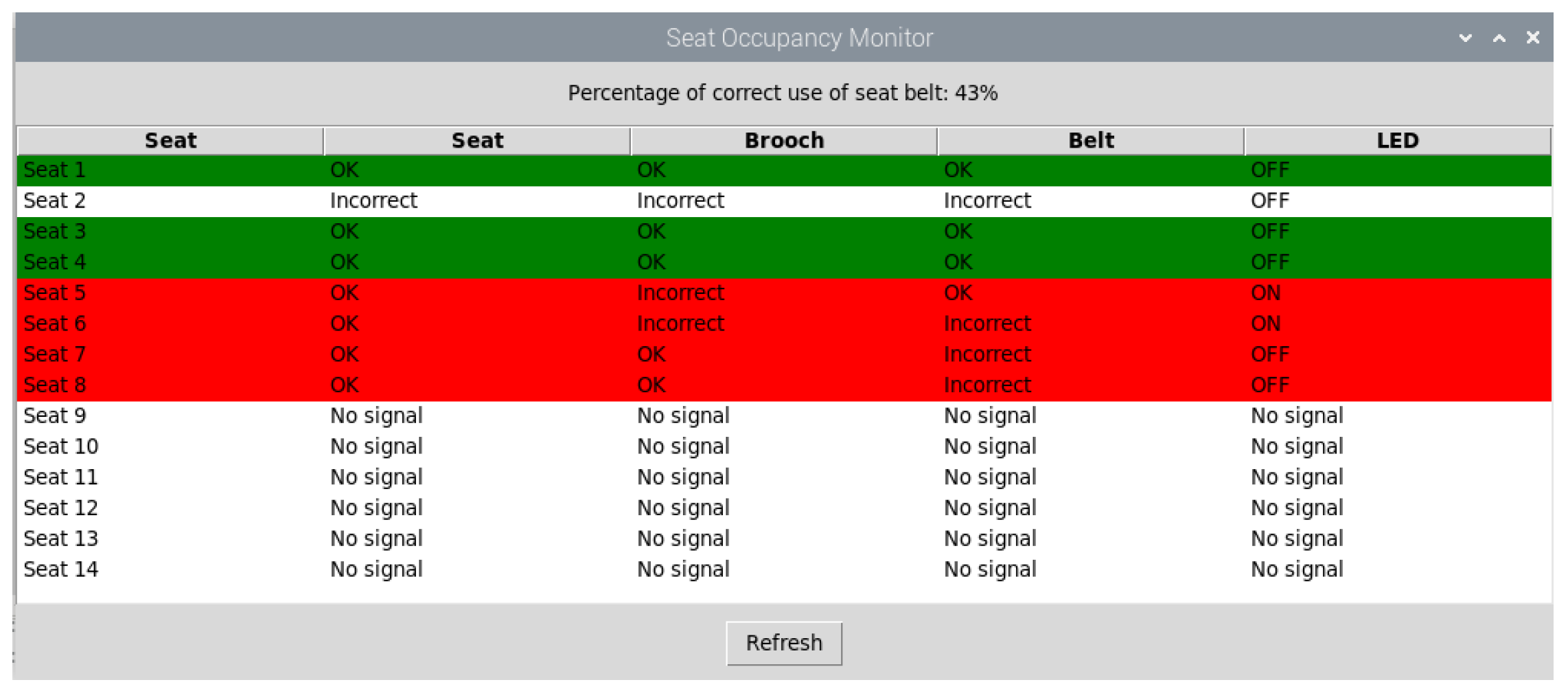

A visual interface showing the status of the seats in the interprovincial bus was designed. Figure 18 shows the use of 2 Arduino NANO boards covering a total of 16 seats. Green indicates correct use of the seat belt by the passenger, red indicates incorrect use, and white (no coloring) indicates that no person is detected. The percentage shown at the top of the window is the share of occupied seats with correct seat belt use. If this percentage is less than 50%, an alert is issued.
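The percentage and alert rule can be expressed in a few lines of Python. The color labels and the 50% threshold follow the text; the function names and the choice to return `None` when no seat is occupied are assumptions.

```python
from typing import Optional, Sequence

ALERT_BELOW_PERCENT = 50.0  # alert threshold stated in the text


def belt_use_percent(seat_colors: Sequence[str]) -> Optional[float]:
    """Percentage of occupied seats (green or red) whose belt is worn correctly."""
    correct = sum(1 for color in seat_colors if color == "green")
    incorrect = sum(1 for color in seat_colors if color == "red")
    occupied = correct + incorrect
    if occupied == 0:
        return None  # no passengers detected, so no percentage is defined
    return 100.0 * correct / occupied


def should_alert(seat_colors: Sequence[str]) -> bool:
    """True when fewer than 50% of the occupied seats show correct belt use."""
    percent = belt_use_percent(seat_colors)
    return percent is not None and percent < ALERT_BELOW_PERCENT
```

White seats are excluded from the denominator, so an almost empty bus with one unbelted passenger still triggers the alert.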

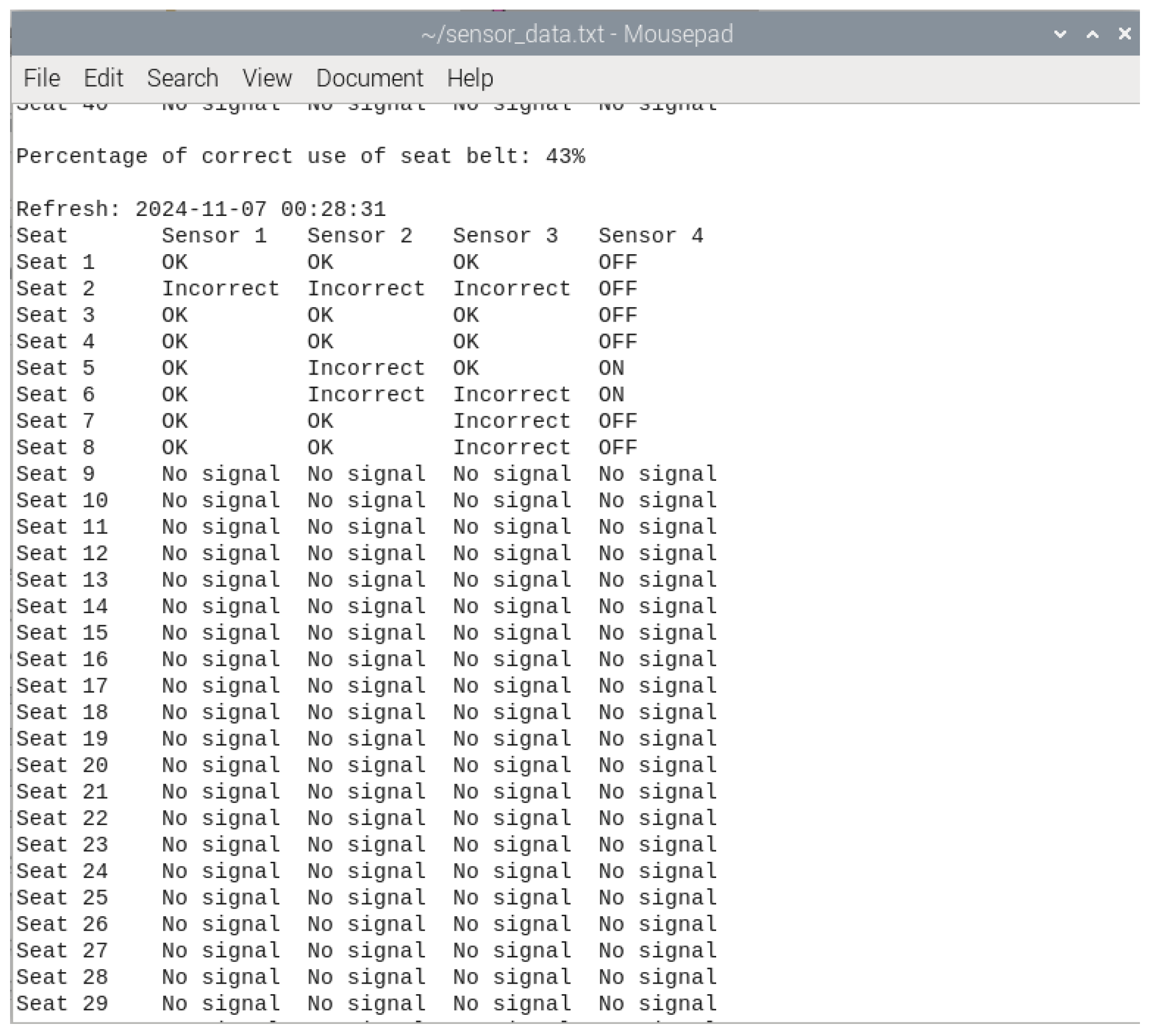

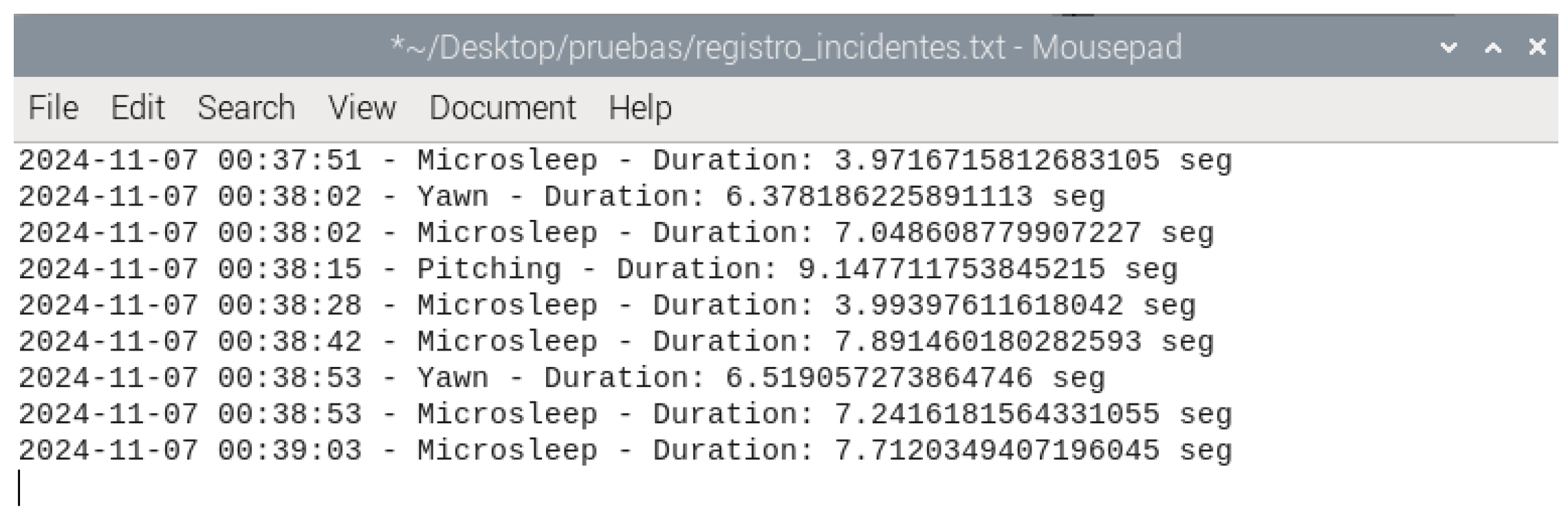

Figure 19 shows how the information from the sensors in each seat is stored in a text file with the time, date, and percentage of correct seat belt use. The same test was performed for the computer vision system: a text file called an incident log is generated, in which the nodding, microsleeps, and yawns recognized by the system are recorded along with the date and time (Figure 20).
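A minimal Python sketch of this kind of logging is shown below. The record layout (semicolon-separated fields), the function names, and the file handling are assumptions; the article only states which values are stored.

```python
from datetime import datetime
from typing import Sequence

STAMP_FORMAT = "%Y-%m-%d %H:%M:%S"


def format_seat_record(when: datetime, seat_bits: Sequence[int], percent: float) -> str:
    """One line for the seat log: timestamp, seat states, and belt-use percentage."""
    bits = "".join(str(bit) for bit in seat_bits)
    return f"{when.strftime(STAMP_FORMAT)};{bits};{percent:.1f}"


def format_incident(when: datetime, kind: str, duration_ms: float) -> str:
    """One line for the incident log: timestamp, incident type, and duration."""
    return f"{when.strftime(STAMP_FORMAT)};{kind};{duration_ms:.0f}"


def append_record(path: str, record: str) -> None:
    """Append one record to a text file, creating the file if needed."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(record + "\n")
```

On the Raspberry Pi, the timestamp passed to these functions would come from the system clock kept by the RTC module, for example `datetime.now()`.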

5. Conclusions

The proposed design satisfactorily fulfills the objective of monitoring, collecting, and storing information from both the passengers and the driver. The stored data could be used for behavioral studies, among other purposes.

The proposed system promotes passenger compliance with road safety standards, such as fastening seat belts during the journey (Art. 41 of the MTC National Transport Regulation - Peru). In the case of the driver, it supports control of their rest condition through the collected data (Art. 30 of the MTC National Transport Regulation - Peru) and helps them stay awake while driving by means of an alarm. The driver monitoring system does not require much computing power. To reduce costs, a conventional webcam was used for drowsiness detection, without additional features such as IR sensors for night vision; this would lead to data collection failures during night trips. The location of the camera is important in a computer vision system: correct placement allows effective monitoring and avoids data collection failures. The advantages of the proposed system are that it is non-invasive, easy to install, and its components are easy to replace.

The article still lacks implementation in a real environment, which is necessary to confirm the feasibility and viability of the system. An independent power supply is required for the sensors placed on the seats. The limited processing power of the CPU and GPU constrains the use of more robust methods for detecting driver drowsiness. The use of MediaPipe, with its facial and multifaceted reference points, provides greater reliability in facial tracking, and the system is adaptable because it can work with different types of components. As future work, a PCB with embedded modules and the Arduino NANO will be designed, and the proposed system will be fully implemented in interprovincial buses. For the driver, it is planned to use artificial intelligence systems such as YOLO (You Only Look Once), which will provide more robust image processing. Finally, for better control, sending the data collected in the text files to a database was considered, automating the loading and unloading of such data for possible later use. Reliability could also be improved by adding medical variables such as pulse.

Author Contributions

A.A.O., J.F.M.V., and G.A.E.E. conceived and designed the study; A.A.O., J.F.M.V., G.A.E.E., and J.M.M.V. were responsible for the methodology; A.A.O., J.F.M.V., and J.M.M.V. performed the simulations and experiments; A.A.O., J.F.M.V., J.M.M.V., and E.R.L.V. reviewed the manuscript and provided valuable suggestions; A.A.O., J.F.M.V., J.M.M.V., and E.R.L.V. wrote the paper; G.A.E.E. and A.O.S. were responsible for supervision. All authors have read and agreed to the published version of the manuscript.

Acknowledgment

We thank the National University of San Agustín, our alma mater, for providing us with the knowledge necessary to carry out this project.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| DDPG | deep deterministic policy gradient |
| DRL | deep reinforcement learning |
| PBLS | personalized behavior learning system |
| LSC | longitudinal speed control |
| LFD | learning from demonstration |

References

- Traumatismos causados por el tránsito. Who.int. Available: 24 August 2024. https://www.who.int/es/news-room/fact-sheets/detail/road-traffic-injuries.

- Reporte estadístico de siniestros viales 2022. Gob.pe. Available: 24 August 2024. https://cdn.www.gob.pe/uploads/document/file/4489498/Reporte.

- Murozaki, Y.; Sakuma, S.; Arai, F. Improvement of the Measurement Range and Temperature Characteristics of a Load Sensor Using a Quartz Crystal Resonator with All Crystal Layer Components. Sensors, 2017, 17(5), 1067. [CrossRef]

- Kisic, M.G.; Blaz, N.V.; Babkovic, K.B.; Zivanov, L.D.; Damnjanovic, M.S. Detection of Seat Occupancy Using a Wireless Inductive Sensor. IEEE Transactions on Magnetics, 2017, 53(4), 1–4. [CrossRef]

- Cheng, H.C.; Chang, C.C.; Wang, W.J. An Effective Seat Belt Detection System on the Bus. 2020 IEEE International Conference on Consumer Electronics - Taiwan (ICCE-Taiwan), Taoyuan, Taiwan, 2020, pp. 1–2. [CrossRef]

- Sensores de fuerza para cinturón. Sensing. Sensores de Medida en línea, 2017. Available: 24 May 2024. https://sensores-de-medida.es/catalogo/sensores-de-fuerza-para-cinturon/.

- Berlanga, J.M.J. Detección de somnolencia y síncope en conductores mediante visión artificial. Available: 24 July 2024. https://openaccess.uoc.edu/bitstream/10609/132366/7/jmjberlangaTFM0621memoria.pdf.

- Muños, E.L.B.; Mendez, M.M.M. Sistema basado en la detección y notificación de somnolencia para conductores de autos. Montería: Universidad de Córdoba, 2015. Available: 24 July 2024. https://repositorio.unicordoba.edu.co/server/api/core/bitstreams/2ac71ade-9e9b-47e8-b787-66e0b3721aae/content.

- Soukupova, T.; Cech, J. Real-time eye blink detection using facial landmarks. Uni-lj.si. Available: 24 July 2024. https://vision.fe.uni-lj.si/cvww2016/proceedings/papers/05.pdf.

- Madruga, J.M. Sistema de detección de emociones faciales mediante técnicas de Machine Learning adaptado a ROS para un robot de bajo coste basado en Raspberry Pi. España: Universidad Rey Juan Carlos, 2022. Available: 24 July 2024. https://gsyc.urjc.es/jmvega/teaching/tfgs/2021-22_JavierMartinez.pdf.

- Ghorai, P.; Eskandarian, A.; Kim, Y.-K.; Mehr, G. State Estimation and Motion Prediction of Vehicles and Vulnerable Road Users for Cooperative Autonomous Driving: A Survey. IEEE Transactions on Intelligent Transportation Systems, 2022, 23(10), 16983–17002. [CrossRef]

- Kuru, K.; Khan, W. A Framework for the Synergistic Integration of Fully Autonomous Ground Vehicles With Smart City. IEEE Access, 2021, 9, 923–948. [CrossRef]

- Miculescu, D.; Karaman, S. Polling-Systems-Based Autonomous Vehicle Coordination in Traffic Intersections With No Traffic Signals. IEEE Transactions on Automatic Control, 2020, 65(2), 680–694. [CrossRef]

- Kuru, K. Conceptualisation of Human-on-the-Loop Haptic Teleoperation With Fully Autonomous Self-Driving Vehicles in the Urban Environment. IEEE Open Journal of Intelligent Transportation Systems, 2021, 2, 448–469. [CrossRef]

- Xu, Z.; Zhang, Y.; Ding, P.; Tu, F. Design of a Collaborative Vehicle Formation Control Simulation Test System. Electronics, 2023, 12(21), 4385. [CrossRef]

- Yang, J.; Peng, W.; Sun, C. A Learning Control Method of Automated Vehicle Platoon at Straight Path with DDPG-Based PID. Electronics, 2021, 10(21), 2580. [CrossRef]

- Lu, C.; Gong, J.; Lv, C.; Chen, X.; Cao, D.; Chen, Y. A Personalized Behavior Learning System for Human-Like Longitudinal Speed Control of Autonomous Vehicles. Sensors, 2019, 19(17), 3672. [CrossRef]

- Xinjiejia, SBR JYJ-105. diaphragm pressure Shenzhen xinjie jia electronic thin film switch co. Available: 24 July 2024. http://en.szxjj.com/index.phpm=content&c=index&a=show&catid=13&id=3.

- Sensor de proximidad del cinturón de seguridad. Directindustry.es. Available: 16 July 2024. https://trends.directindustry.es/soway-tech-limited/project-161356-168240.html.

- Apple Bus Carrocerias. Asientos Soft-Banner. Available: 24 July 2024. https://www.applebus.com.pe/portfolio/soft/.

- Arduino documentation. Arduino.cc. Available: 24 July 2024. https://docs.arduino.cc/hardware/nano/.

- Módulo 74HC4067 multiplexor analógico 16ch. Naylamp Mechatronics - Perú. Available: 24 July 2024. https://naylampmechatronics.com/circuitos-integrados/644-modulo-74hc4067-multiplexor-analogico-16ch.html.

- Chizzolini, S. arduino-ad-mux-lib, 2020. Available: 24 July 2024. https://github.com/stechio/arduino-ad-mux-lib.

- Raspberrypi.com. Available: 24 July 2024. https://www.raspberrypi.com/products/raspberry-pi-4-model-b/specifications/.

- Cámara Web Full Hd 1920 X 1080p Con Micrófono Usb Pc Laptop. Com.pe. Available: 24 July 2024. https://articulo.mercadolibre.com.pe/MPE-670143902-camara-web-full-hd-1920-x-1080p-con-microfono-usb-pc-aptop-JM?

- Gizmo Mechatronics Central. TinyRTC I2C Module, 2016. Available: 24 July 2024. https://pdf.direnc.net/upload/tinyrtc-i2c-modul-datasheet.pdf.

- Product overview, 800 x 480, DFR0550. Available: 24 July 2024. https://www.mouser.com/pdfDocs/ProductOverviewDFRobot-DFR0550-2.pdf.

- Mediapipe facemesh vertices mapping. Stack Overflow. Available: 24 July 2024. https://stackoverflow.com/questions/69858216/mediapipe-facemesh-vertices-mapping.

- Berlanga, J.M.J. Detección de somnolencia y síncope en conductores mediante visión artificial. Available: 24 July 2024. https://openaccess.uoc.edu/bitstream/10609/132366/7/jmjberlangaTFM0621memoria.pdf.

- Medrano, J. Vivir, bostezar, morir. Rev. Asoc. Esp. Neuropsiq., 2013, vol. 33, no. 117. Available: 24 July 2024. https://scielo.isciii.es/pdf/neuropsiq/v33n117/11.pdf.

- Sanchez, S. Detección de rotacion del rostro| Deteccion de rostros en 3D con Phython y Opencv, 2022. Available: 24 July 2024. https://youtu.be/cTpTjGK8HME?si=DZcaUixHaZQ7-iZd.

- Conceptos fundamentales de RS485 y RS422. Novusautomation.com. Available: 24 July 2024. https://cdn.novusautomation.com/downloads/conceptos%20fundamentales%20de%20rs485%20y%20rs422%20-%20espa%C3%B1ol.pdf.

- Waveshare RS485 CAN HAT User Manual. Available: 24 July 2024. https://www.waveshare.com/w/upload/2/29/RS485-CAN-HAT-user-manuakl-en.pdf.

- Maxim Integrated. MAX481/MAX483/MAX485/MAX487–MAX491/MAX1487, 2014. Available: 24 July 2024. https://www.analog.com/media/en/technical-documentation/data-sheets/MAX1487-MAX491.pdf.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).