Submitted: 18 September 2024

Posted: 19 September 2024


Abstract

Keywords:

1. Introduction

2. Related Work

2.1. Understanding Large Language Models

2.2. Retrieval Augmented Generation

2.3. Applications of LLMs

3. Proposed Method

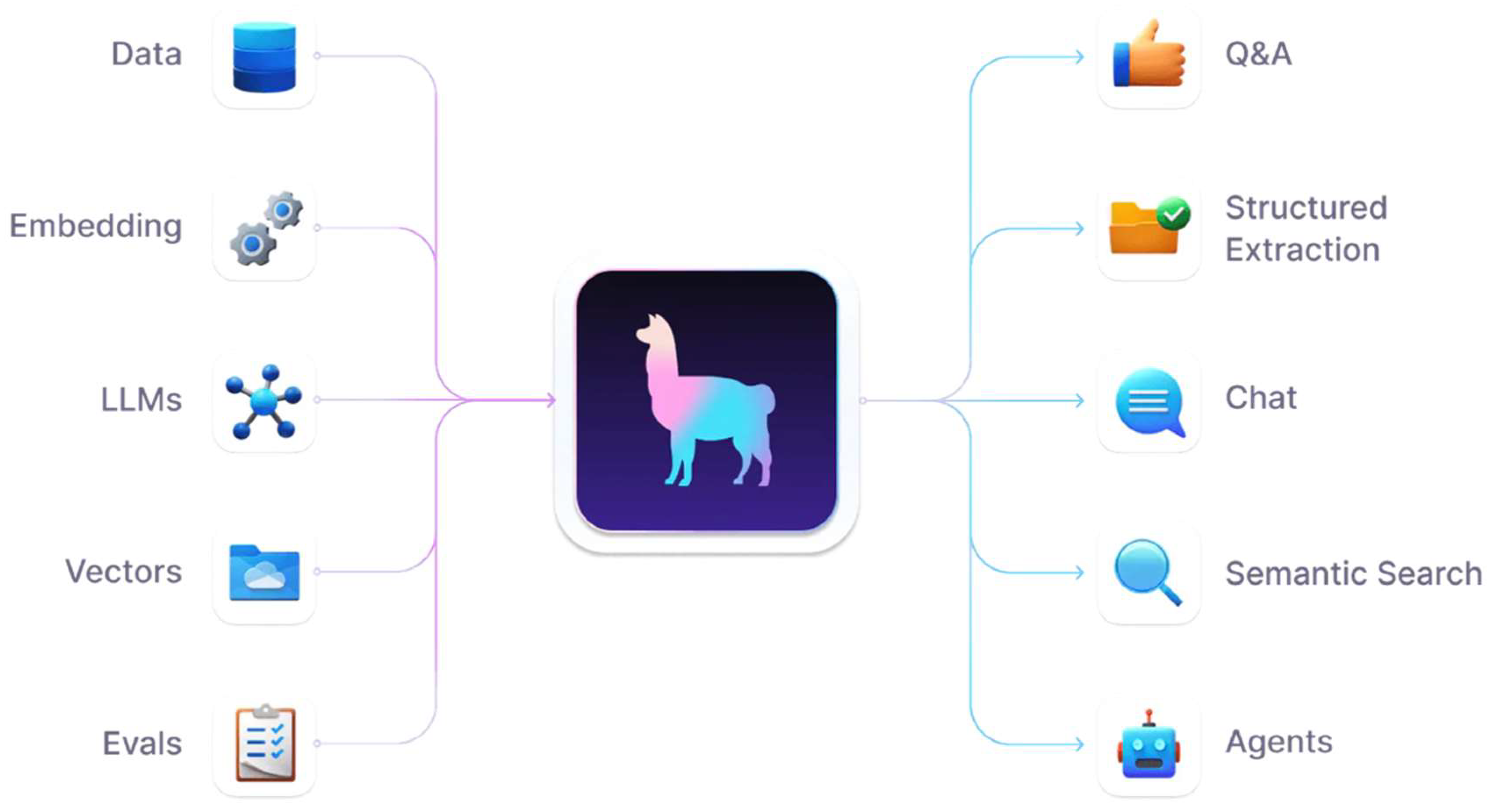

3.1. Framework: LlamaIndex

1. Data Sources (Left side):

- Data: Denotes the unprocessed information in files, databases, or other data repositories.

- Preprocessing: This stage entails cleaning, converting, and arranging the data to prepare it for use by LLMs.

- LLMs: They use the processed data for a variety of purposes.

- Indexes: These are organized data representations that make effective searching and retrieval possible.

2. LlamaIndex (Center):

- The central icon represents the heart of the LlamaIndex framework. This gradient-colored llama unifies all the parts and facilitates communication between data sources and language models.

3. Applications (Right side):

- Q&A: The LLMs can respond precisely to user inquiries because of the processed data and indexes.

- Document retrieval: Enables pertinent documents to be found within a sizable corpus in response to user requests or specifications.

- Chat: Enables intelligent conversational interfaces that can access and use external data.

- Semantic search: Improves search performance by deciphering query context and semantics.

- Agents: Automated agents with decision-making and task-performing capabilities based on integrated data.
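The flow described above (data, preprocessing, indexes, then retrieval for Q&A) can be illustrated with a minimal, dependency-free sketch. This is not the LlamaIndex API: the function names (`chunk_text`, `embed`, `build_index`, `retrieve`), the bag-of-words "embedding", and the sample documents are all assumptions chosen for illustration. A real deployment would use a learned embedding model and a vector store.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Preprocessing: split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy stand-in for an embedding: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents, max_words=40):
    """Indexing: store each chunk together with its vector."""
    index = []
    for doc in documents:
        for chunk in chunk_text(doc, max_words):
            index.append((chunk, embed(chunk)))
    return index


def retrieve(index, query, top_k=1):
    """Retrieval: return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


# Hypothetical sample documents, not taken from the paper's data.
docs = [
    "Gas turbines convert fuel into mechanical energy that drives a generator.",
    "Wind turbines convert the kinetic energy of wind into electricity.",
]
index = build_index(docs)
top = retrieve(index, "How does a gas turbine use fuel?")
```

In a Q&A application, the retrieved chunks would then be placed in the LLM prompt as context for the answer.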

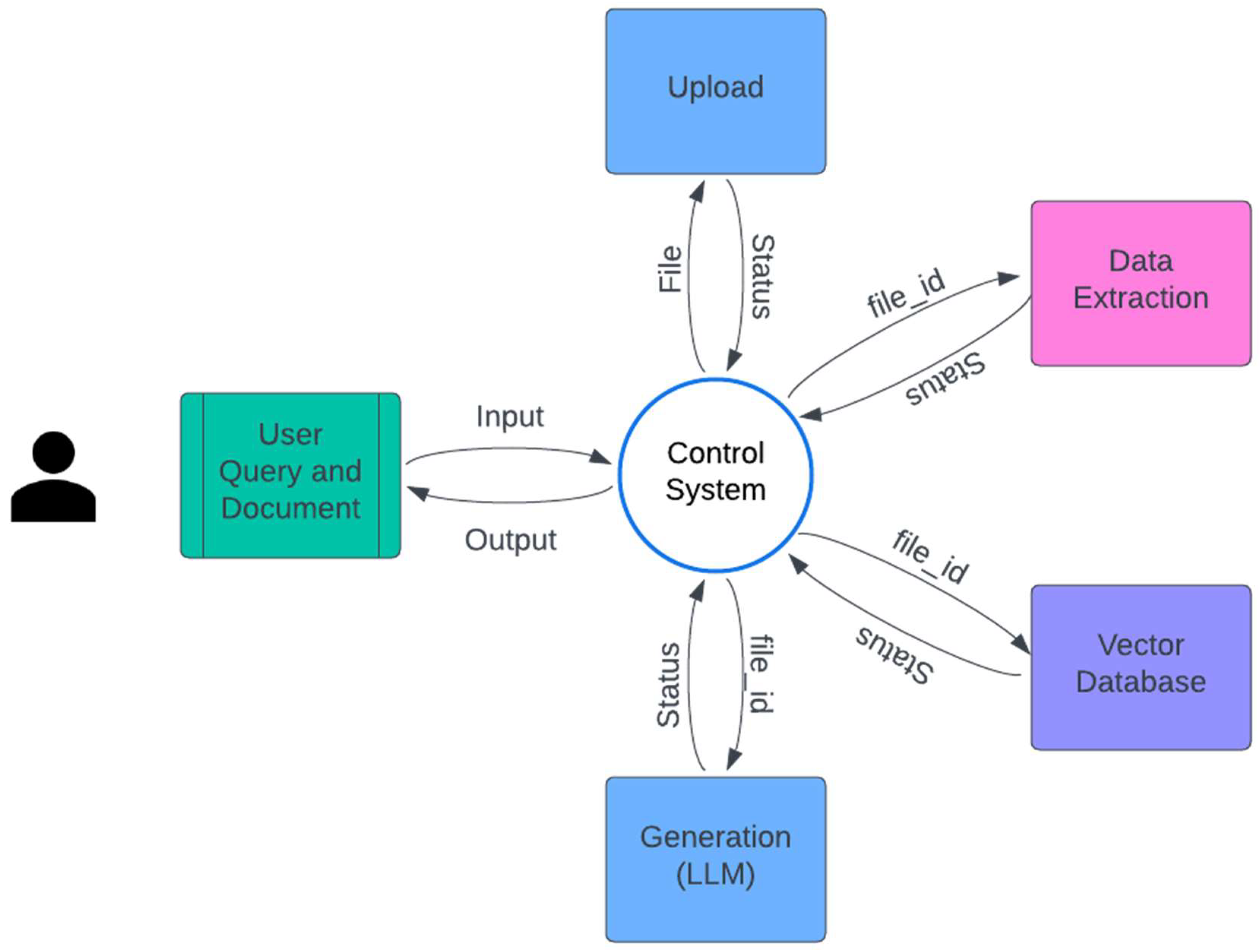

3.2. Architecture

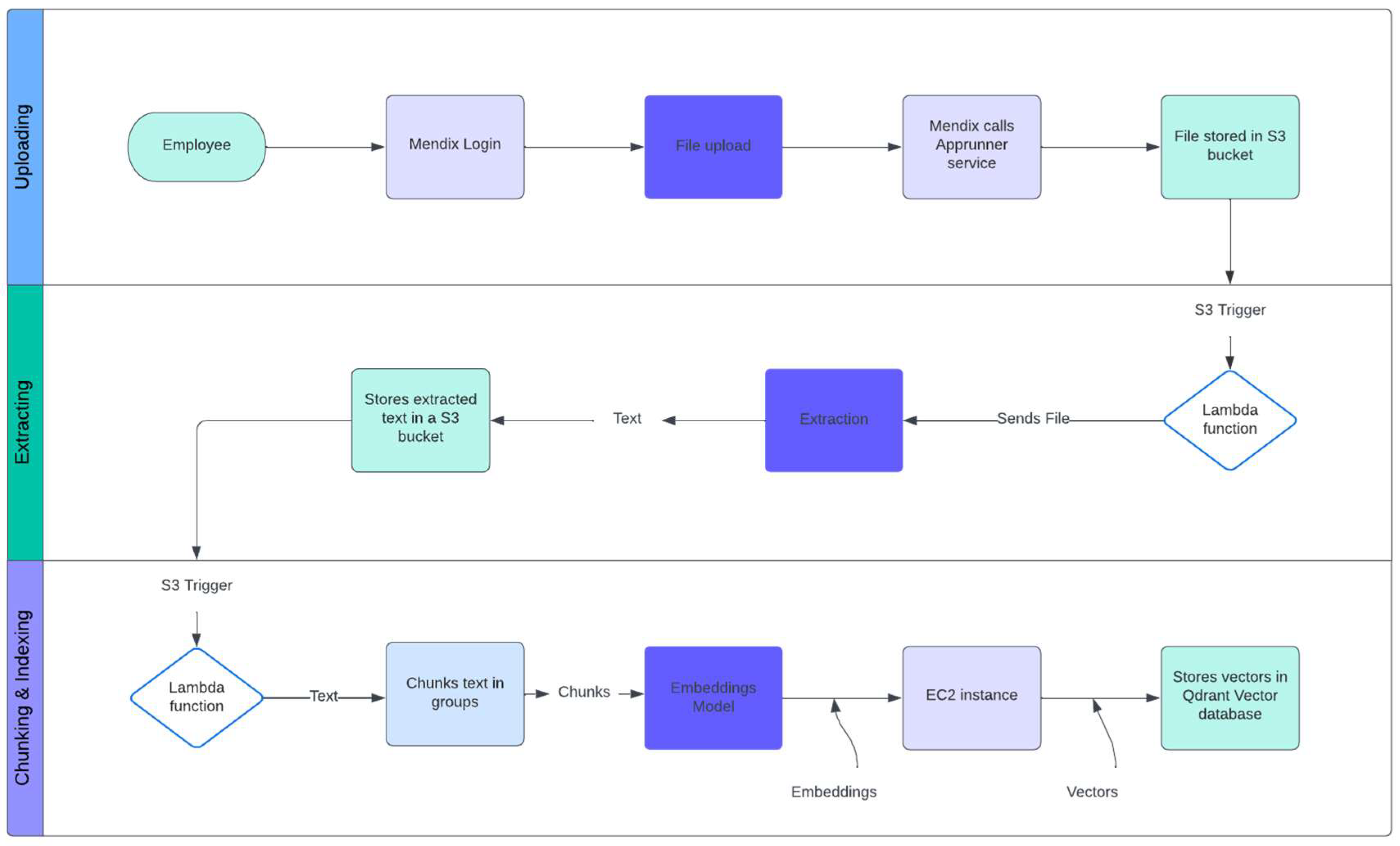

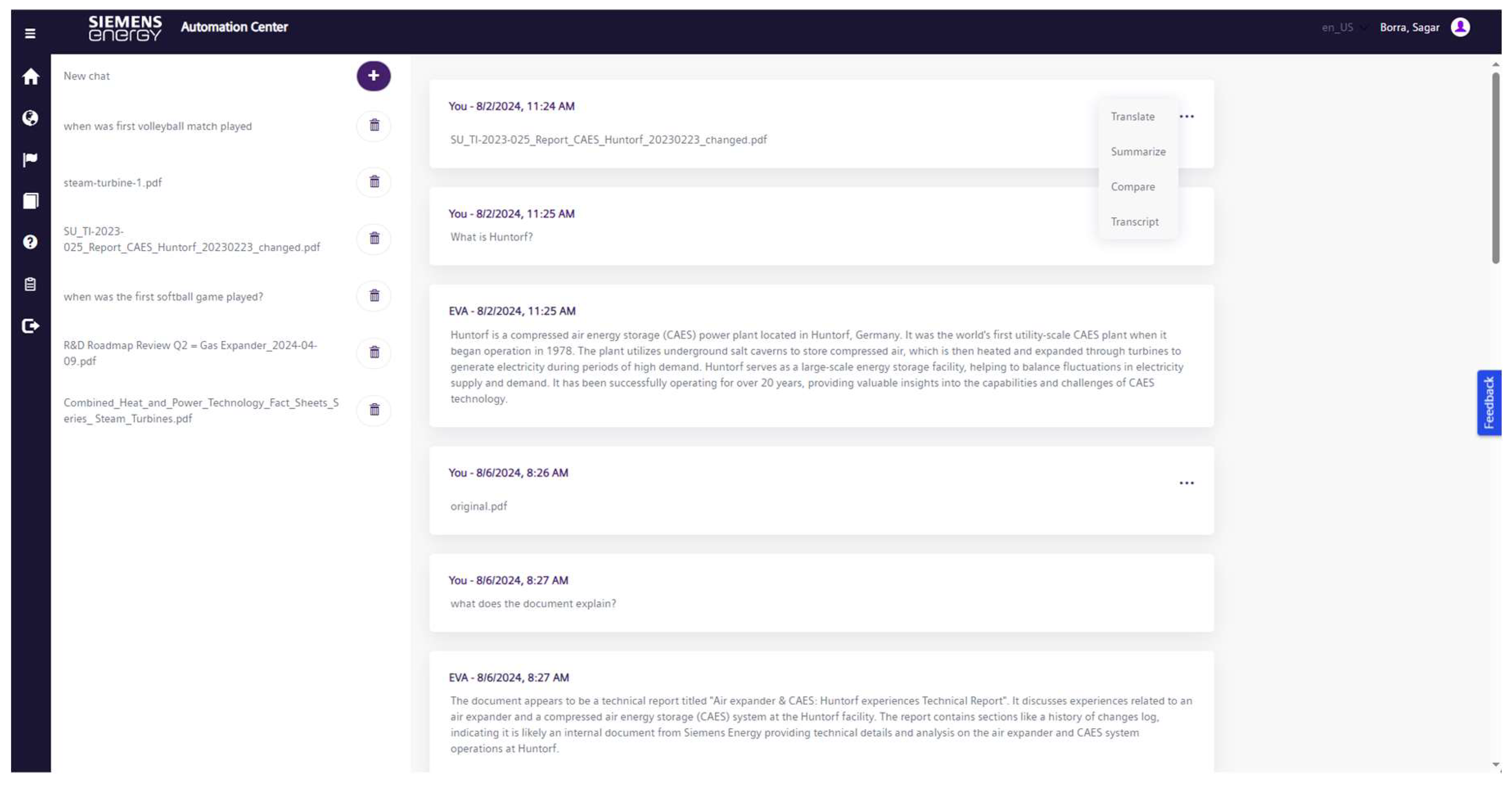

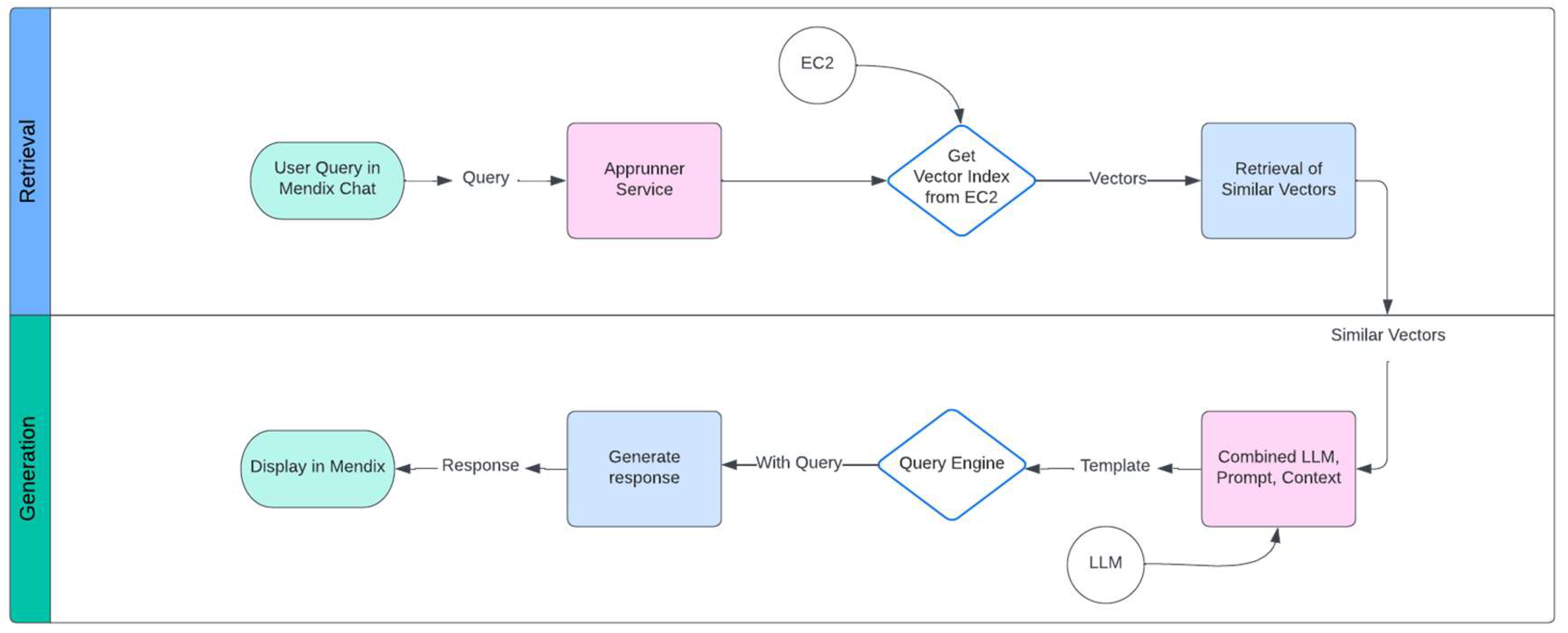

3.3. Workflow of Different Processes

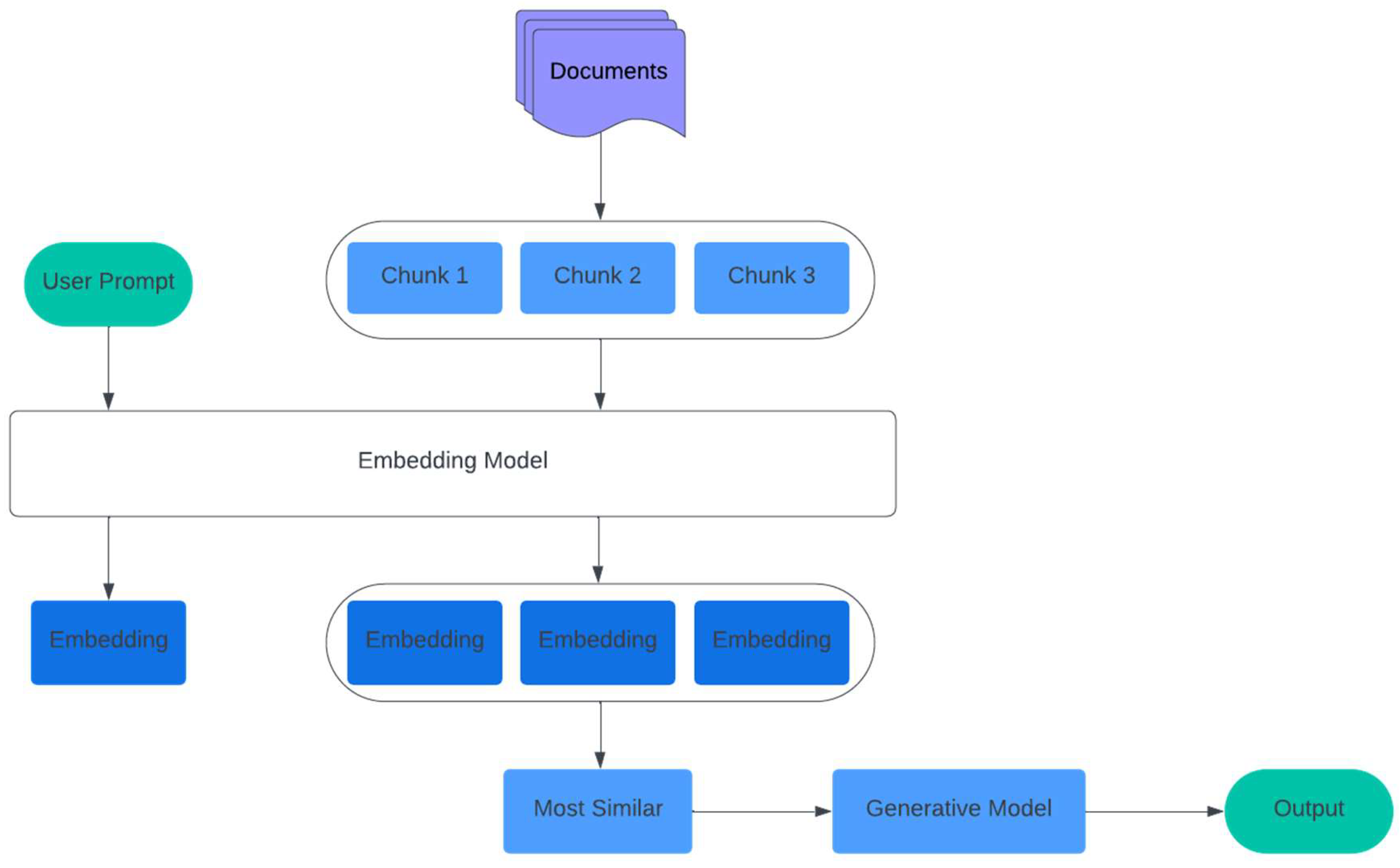

3.3.1. Upload, Extraction, Chunking, and Indexing

3.3.2. Retrieval and Generation

3.4. Data

3.4.1. Data Types and Sources

3.5. How Exactly Does It Work?

3.5.1. System Design

3.5.2. Data Ingestion and Preprocessing

3.5.3. Chunking and Indexing

3.5.4. Retrieval Augmented Generation (RAG)

3.5.5. Natural Language Understanding and Response Generation

3.5.6. Workflow Orchestration

3.5.7. Real-Time Query Handling

3.5.8. Support for Multiple Languages and Document Formats

3.5.9. Continuous Learning and Adaptation

3.5.10. Integration with Siemens Energy's Digital Ecosystem

3.6. Summary of Methodology

4. Findings and Discussion

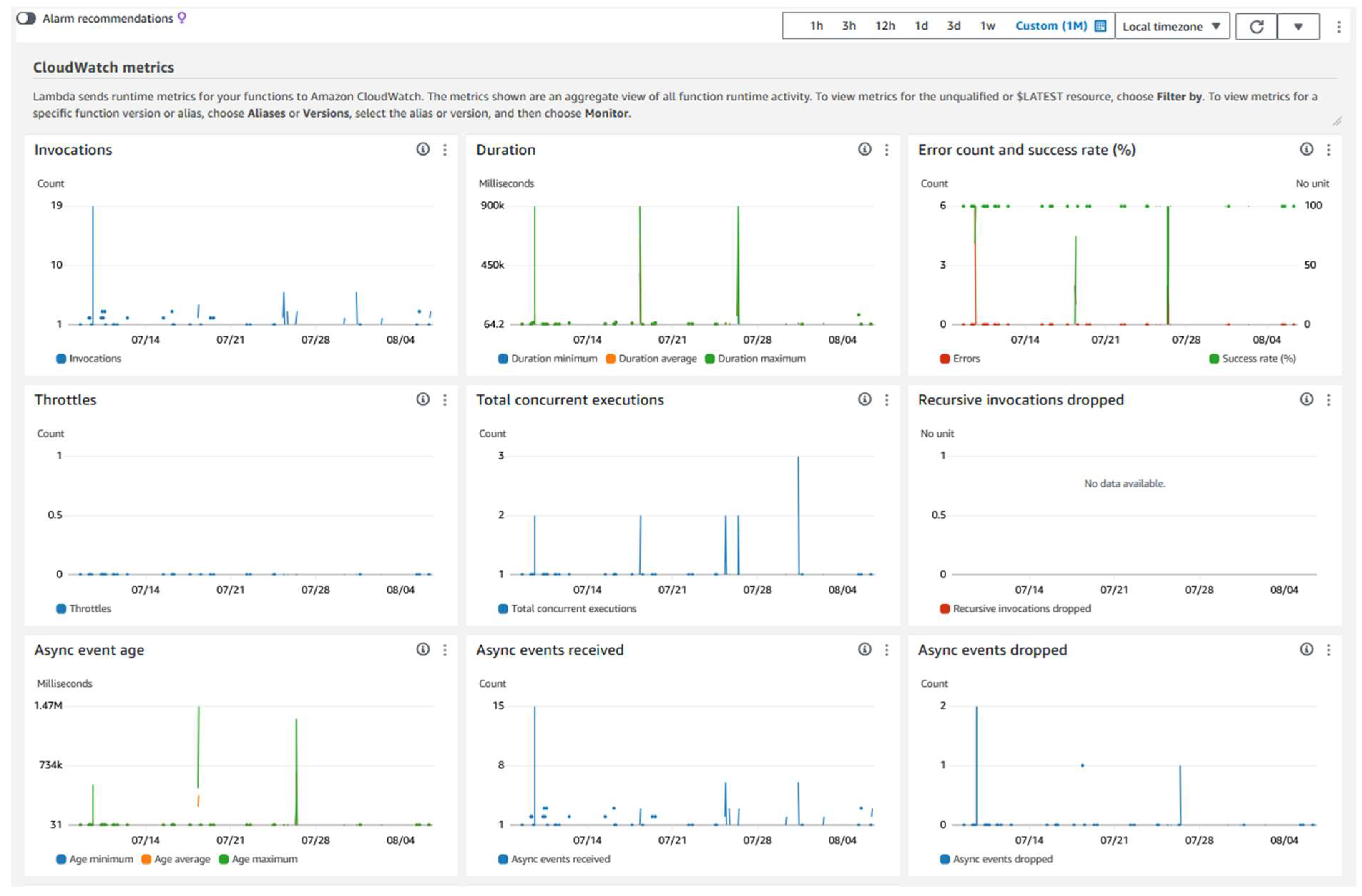

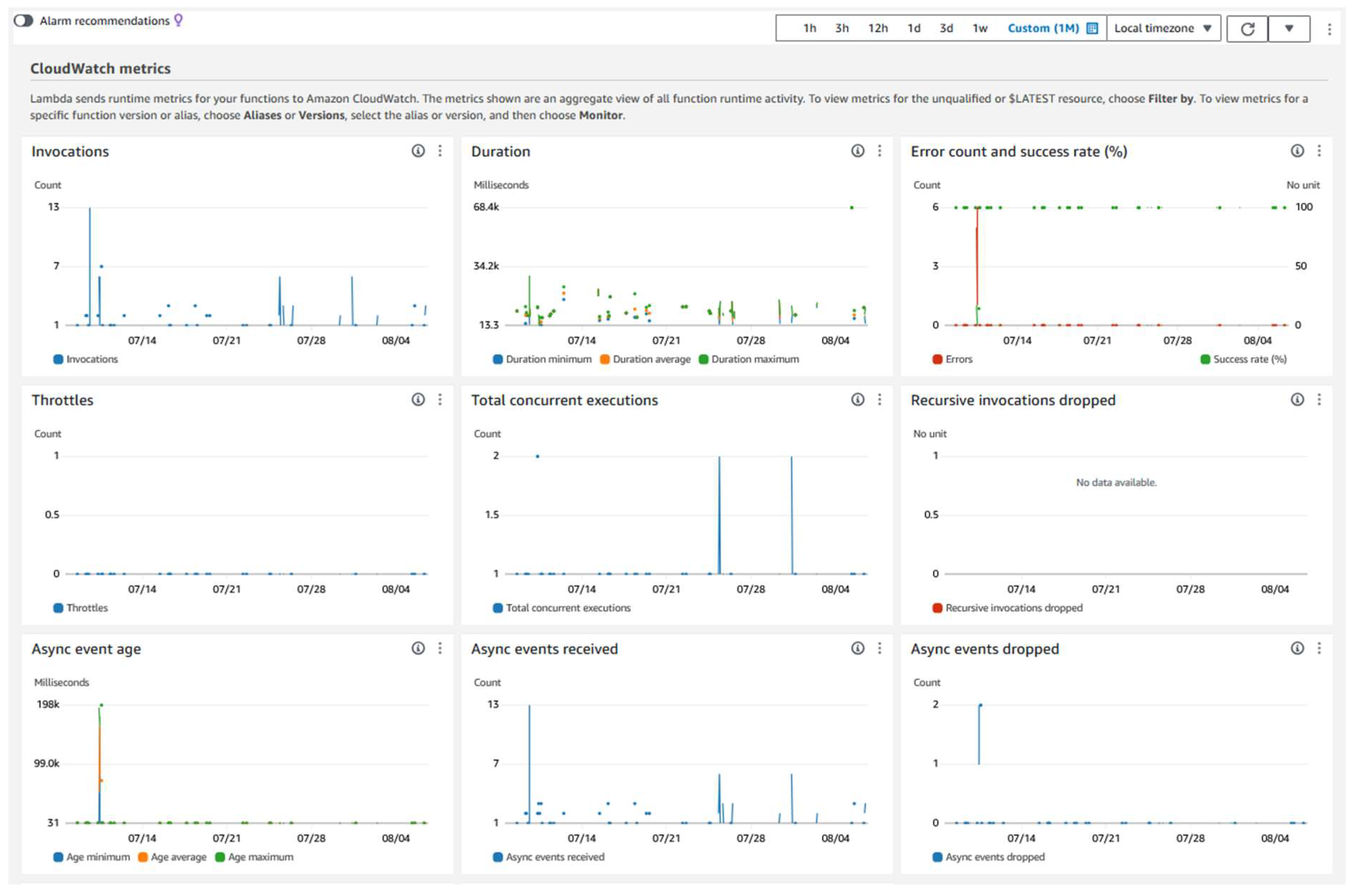

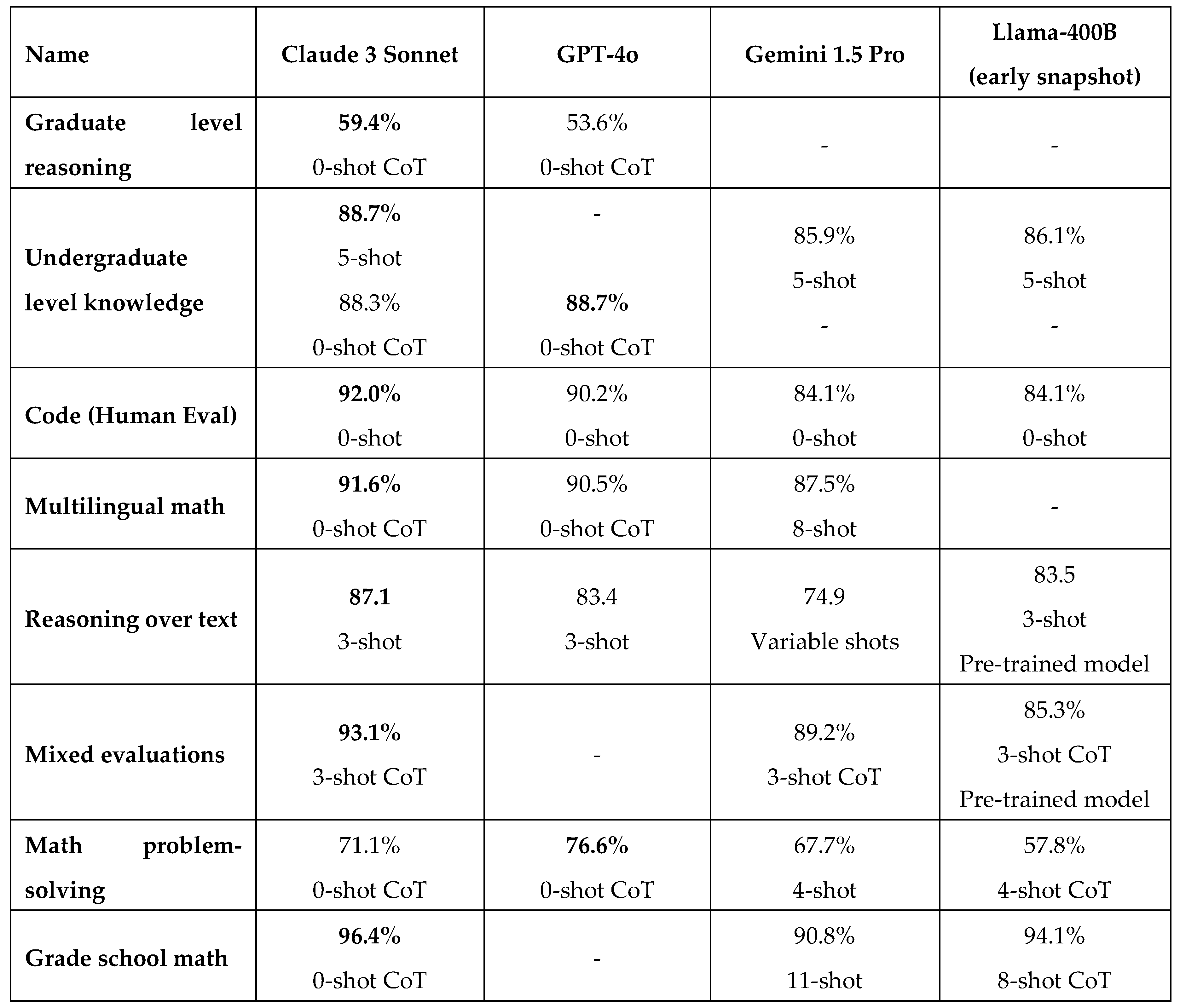

4.1. LLM Results

Tasks and Metrics:

- Graduate-Level Reasoning (GPQA, Diamond): Assesses the model's capacity for graduate-level reasoning.

- Undergraduate-Level Knowledge (MMLU): Evaluates the model's comprehension at the undergraduate level.

- Code (HumanEval): Assesses the model's ability to understand and write code.

- Multilingual Math (MGSM): Evaluates the model's multilingual math problem-solving skills.

- Reasoning Over Text (DROP, F1 Score): Gauges the model's capacity to analyze textual content and derive pertinent information.

- Mixed Evaluations (BIG-Bench-Hard): A combination of different challenging tests to assess overall performance.

- Math Problem-Solving (MATH): Evaluates how well the model can solve mathematical problems.

- Grade School Math (GSM8K): Evaluates the model's aptitude for solving math problems usually assigned at the grade school level.

Metrics of Performance:

- 0-shot CoT (Chain of Thought): The model receives no worked examples in the prompt and is asked to reason step by step before answering.

- 3-shot, 4-shot, 5-shot, and so forth: The prompt includes that many worked examples (shots) for the model to follow before it completes the task.
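The difference between the two settings lies only in how the prompt is assembled. The sketch below is a hypothetical illustration: the `build_prompt` helper, the Q/A layout, and the "Let's think step by step" cue are assumptions, since the source does not give the prompts actually used in the benchmarks.

```python
def build_prompt(question, examples=()):
    """Build a prompt with len(examples) worked examples (shots).

    With no examples, the result is a 0-shot chain-of-thought prompt.
    """
    parts = []
    for ex_question, ex_answer in examples:
        # Each shot is a solved example shown to the model before the task.
        parts.append(f"Q: {ex_question}\nA: {ex_answer}")
    # The final question ends with a cue that invites step-by-step reasoning.
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)


zero_shot = build_prompt("What is 17 + 25?")
three_shot = build_prompt(
    "What is 17 + 25?",
    examples=[
        ("What is 2 + 3?", "5"),
        ("What is 10 + 4?", "14"),
        ("What is 7 + 8?", "15"),
    ],
)
```

Here `zero_shot` contains only the target question, while `three_shot` contains three solved examples followed by the same question.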

4.1.1. Why Claude 3 Sonnet Is More Reliable: Excellent Results

- Versatility and Generalization: The model demonstrates substantial versatility by displaying a great capacity to generalize across many task types, such as multilingual math and reasoning over text.

- Effectiveness of Chain of Thought (CoT): Claude 3 Sonnet scores well on 0-shot CoT tasks, reaching 88.3% on undergraduate-level knowledge (MMLU) and 71.1% on math problem-solving (MATH).

- Comprehensive Evaluations: With a 93.1% score in mixed evaluations (BIG-Bench-Hard), the model performs exceptionally well, demonstrating its adaptability to complex tasks.

- Knowledge and Reasoning: Claude 3 Sonnet scores 59.4% on graduate-level reasoning (GPQA, Diamond) and 87.1% on reasoning over text (DROP, F1 score).

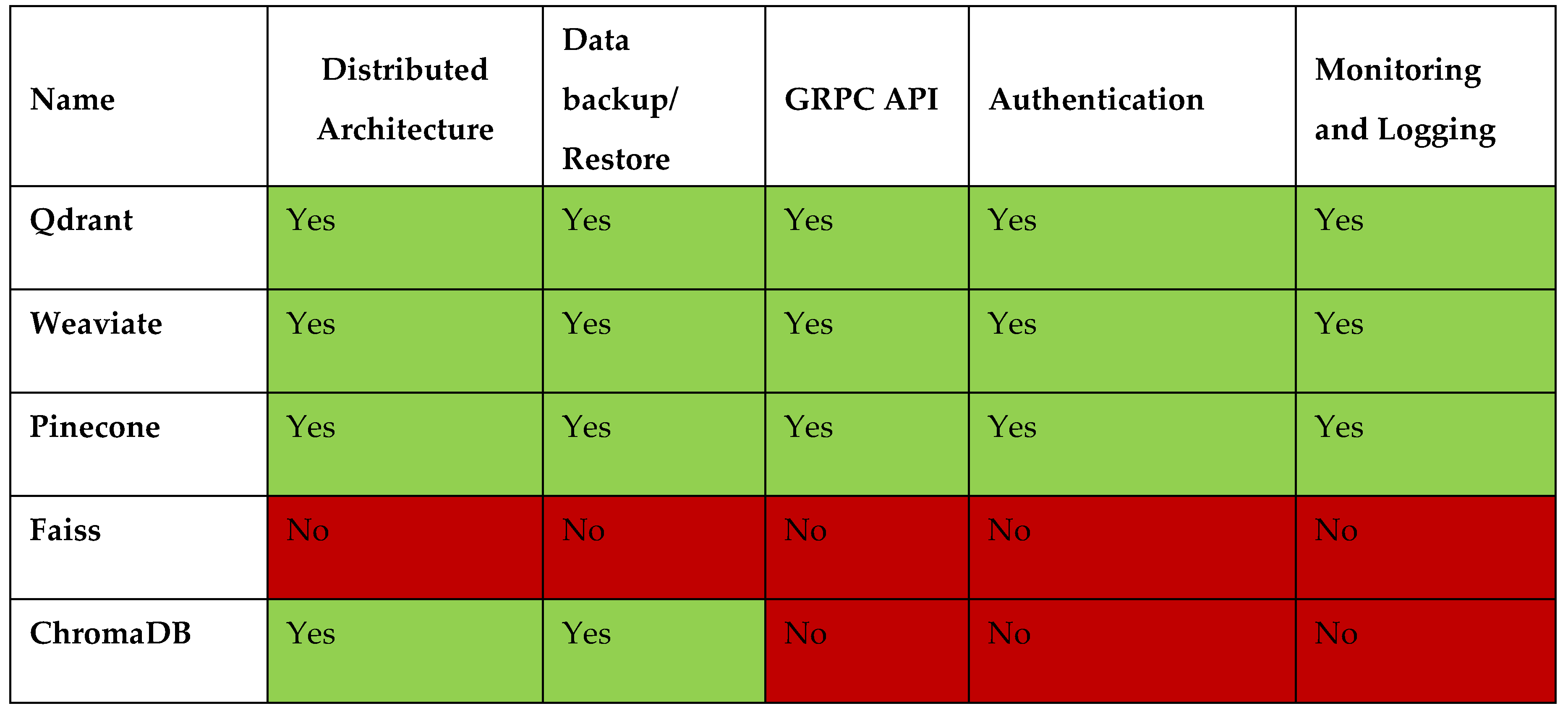

4.2. Key Differences between Vector Databases


4.2.1. Key Points About Qdrant

- Qdrant is written in Rust, which helps it deliver fast and reliable vector storage even under heavy load.

- Qdrant provides client APIs for developers working with Rust, Go, Python, and TypeScript/JavaScript.

- Qdrant uses the HNSW algorithm for approximate nearest-neighbor search and supports several distance metrics, such as Dot, Cosine, and Euclidean, so developers can select the one that best suits their use case.

- Qdrant offers a scalable managed cloud service with a free tier for exploration. Its cloud-native architecture is designed to maintain performance as data volume grows.
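The three distance metrics named above can rank the same vectors differently, which is why the choice matters. The following is a plain-Python sketch of the metrics themselves, not of Qdrant's client API; the function names and the two-dimensional sample vectors are assumptions for illustration.

```python
import math


def dot_product(a, b):
    """Dot product: higher means more similar; sensitive to vector length."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine similarity: compares direction only, ignoring vector length."""
    norm = math.sqrt(dot_product(a, a)) * math.sqrt(dot_product(b, b))
    return dot_product(a, b) / norm if norm else 0.0


def euclidean_distance(a, b):
    """Euclidean distance: lower means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Hypothetical query and stored vectors.
query = [1.0, 0.0]
candidates = {"a": [2.0, 0.0], "b": [0.6, 0.8]}

best_by_dot = max(candidates, key=lambda k: dot_product(query, candidates[k]))
best_by_cosine = max(candidates, key=lambda k: cosine_similarity(query, candidates[k]))
best_by_euclidean = min(candidates, key=lambda k: euclidean_distance(query, candidates[k]))
```

In this example, vector `a` points in exactly the same direction as the query but is twice as long, so Dot and Cosine prefer `a`, while Euclidean prefers the closer point `b`.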

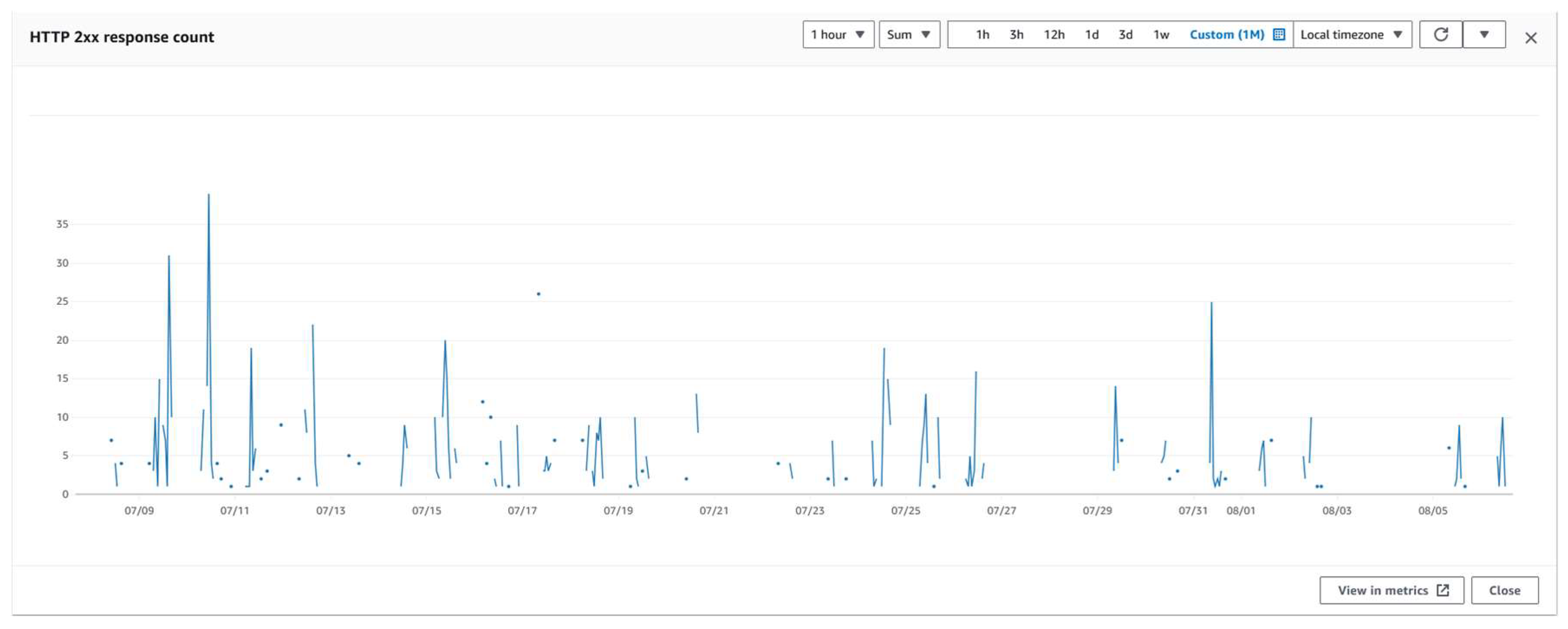

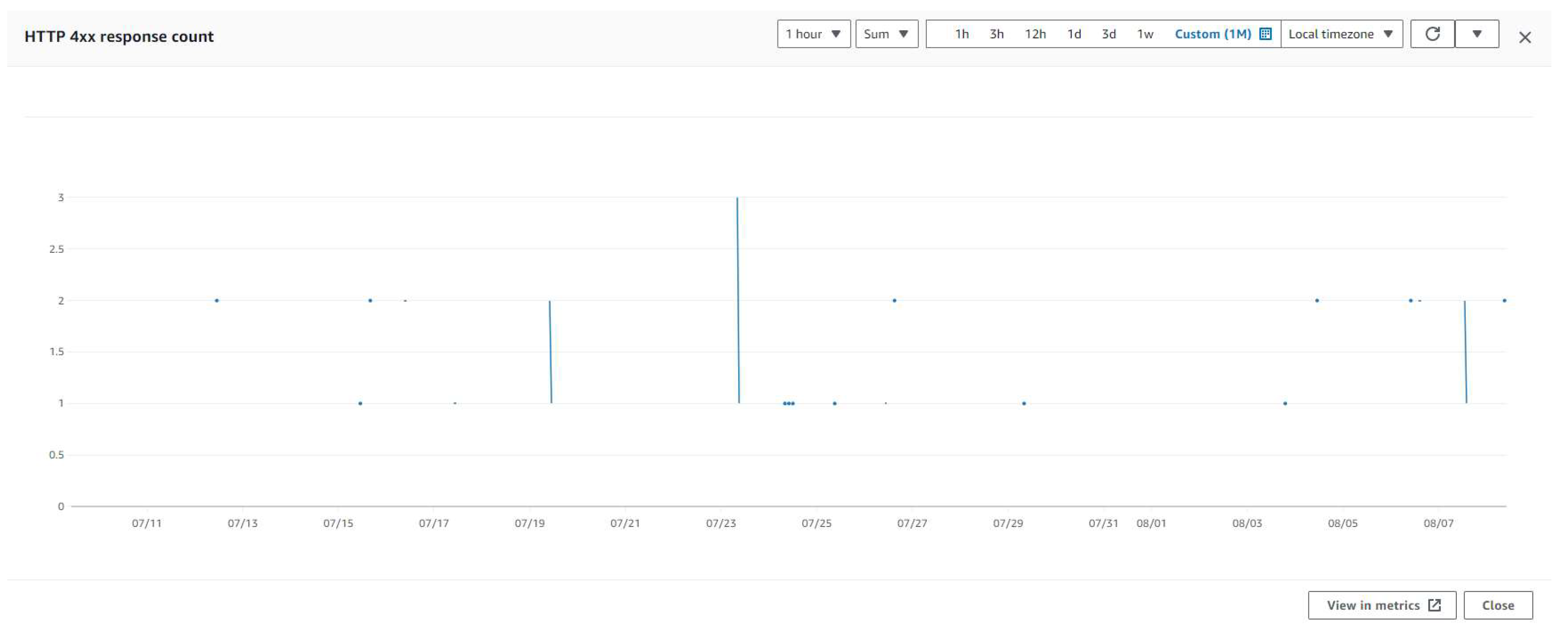

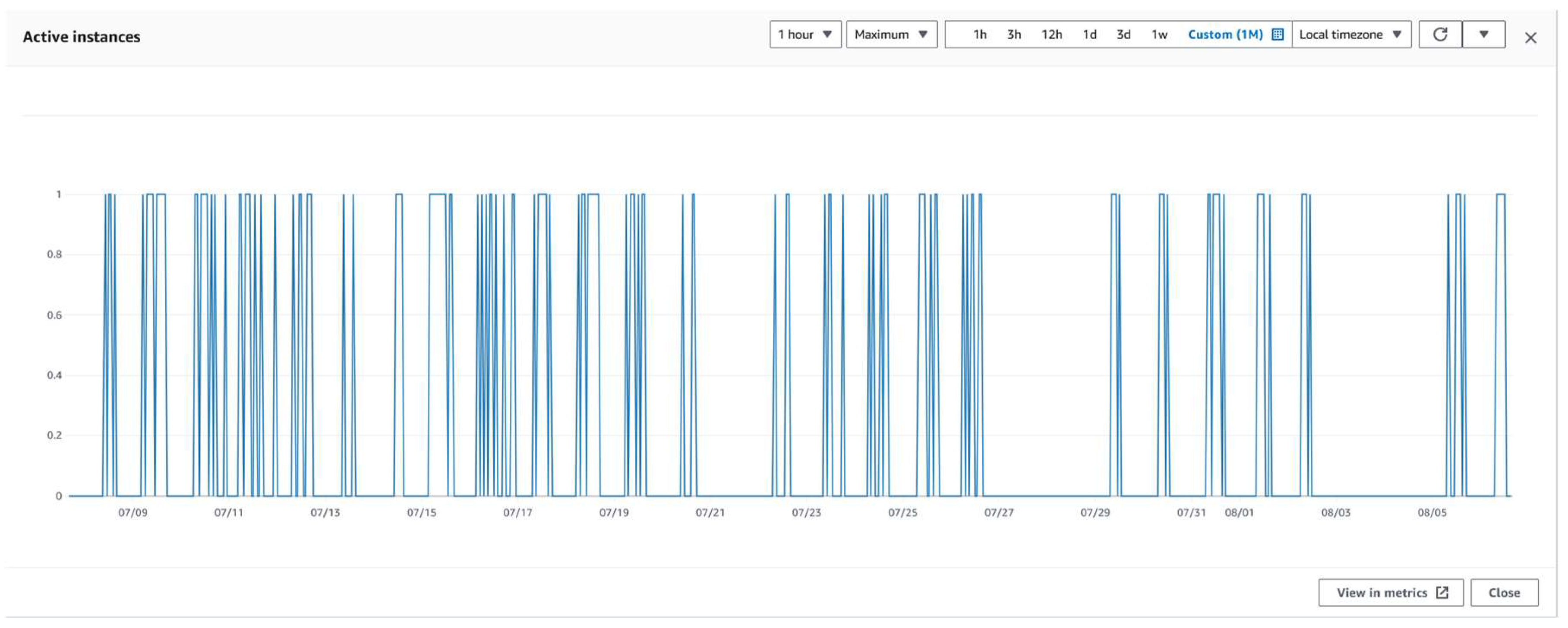

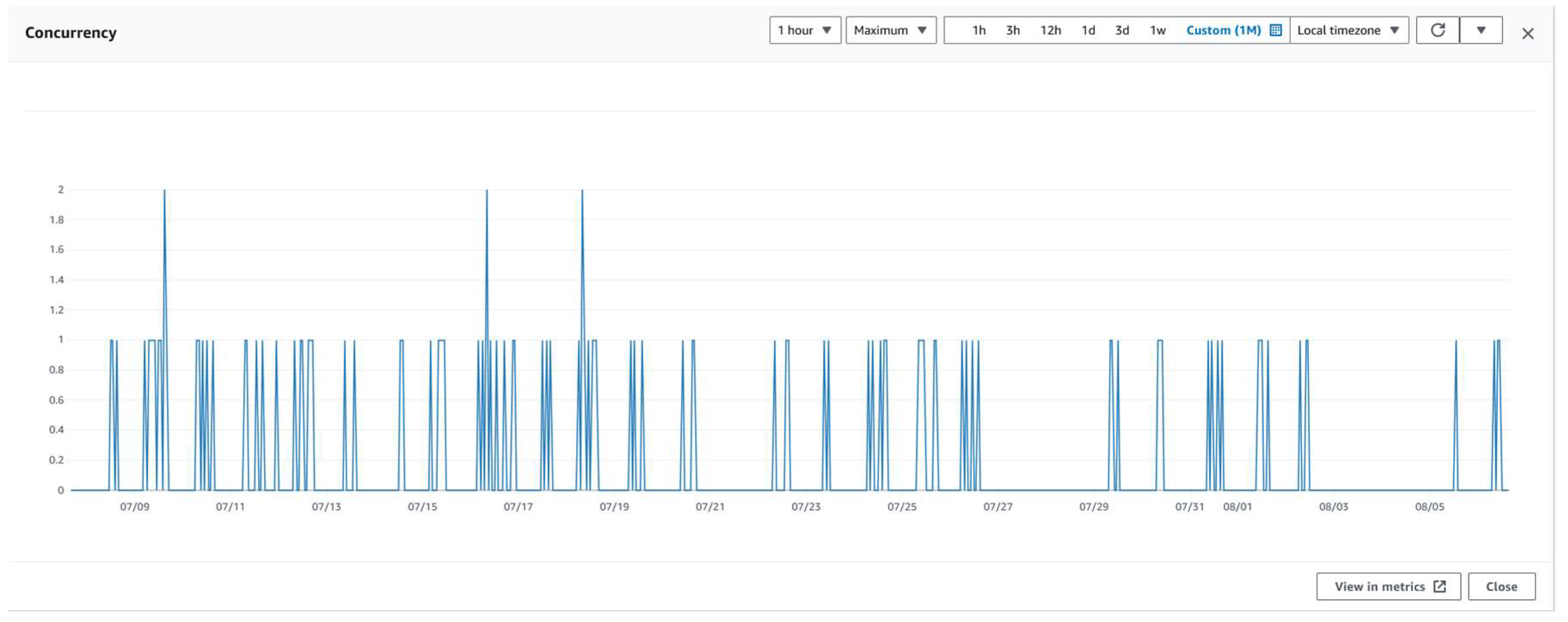

4.3. Explanation of AWS Services

4.3.1. EVA-Command: An App Runner Service

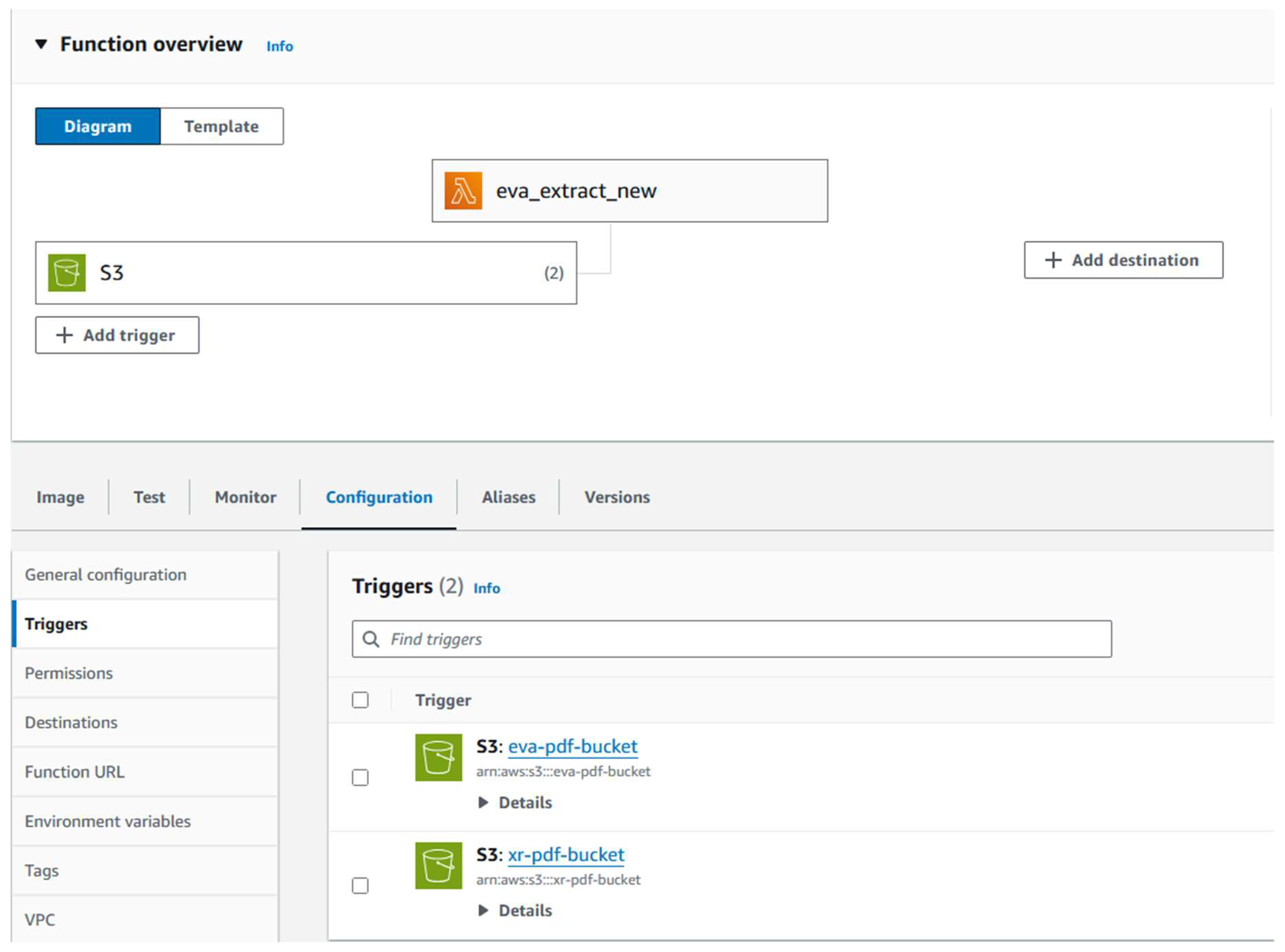

4.3.2. EVA-Extraction: A Lambda Function

4.3.3. EVA-Vector Database: A Lambda Function

5. Conclusion and Future Work


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).