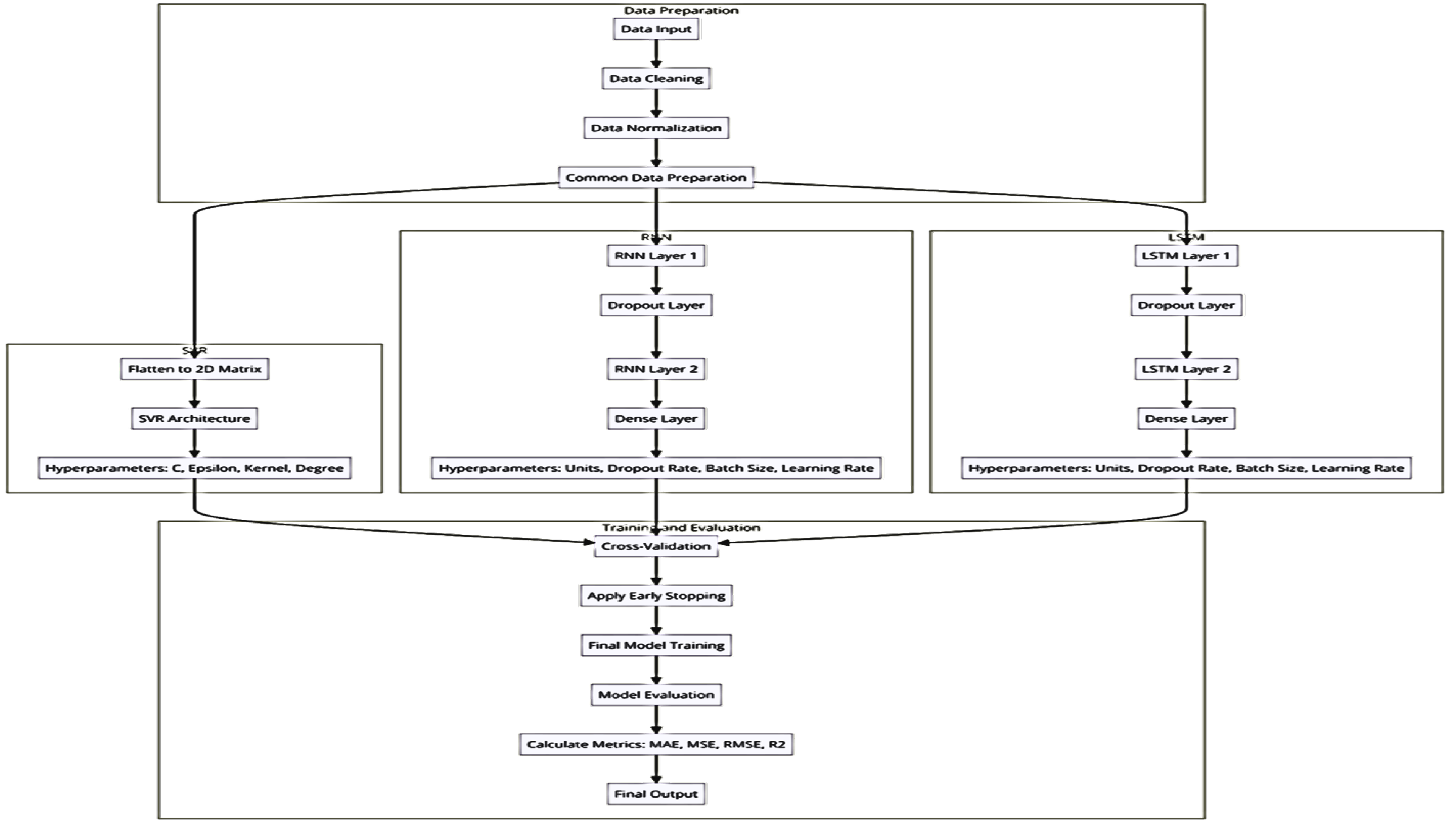

The evaluations of predictive models are discussed below. SVR, RNN and LSTM models were trained and tested using historical data from the Nigerian Stock Exchange, with a particular focus on forecasting the NGX All Share Index.

3.1. Support Vector Machines for Regression (SVR)

The NGX dataset's features were converted into a 2-dimensional matrix format for the SVR model. Table 2 shows the evaluation results of the SVR using regression metrics.

Table 2.

Evaluation Result of SVR.


| Metric | Returns & Price Indicators | OBV Inclusion |
| --- | --- | --- |
| Best hyperparameters | {'C': 0.2520357450941616, 'epsilon': 0.027026985756138284, 'kernel': 'linear'} | {'C': 1.1625895249237326, 'epsilon': 0.005801399787450704, 'kernel': 'rbf'} |
| Cross Validation Training Loss | 0.00110 | 0.00133 |
| Average Final Validation Loss | 0.00197 | 0.0136 |
| Test MAE | 0.0143 (1231.61) | 0.027 (2318.66) |
| MSE | 0.000459 (3396945.36) | 0.00242 (17878018.81) |
| RMSE | 0.0214 (1843.08) | 0.0491 (4228.24) |
| MAPE (%) | 2.03 | 3.40 |
| R-Squared | 0.99 | 0.95 |

Table 2 shows that the training loss is relatively low for both the classic approach (0.00110) and the OBV inclusion approach (0.00133), so the SVR models performed well on the training data. The slightly lower training loss of the classic approach suggests that SVR fits the training data better with the classic features. On the validation set, the classic approach (0.00197) significantly outperforms the OBV inclusion approach (0.0136); the lower validation loss indicates that it generalized better to unseen data during cross-validation. The classic approach also has a lower MAE (0.0143) than the OBV inclusion approach (0.027), which means less average deviation from the actual values and better predictive accuracy. Its MSE (0.000459) is lower than that of the OBV inclusion approach (0.00242), showing that its predictions are closer to the actual values and contain fewer large errors. RMSE, the square root of MSE, measures the typical magnitude of prediction errors; the classic approach has a lower RMSE (0.0214) than OBV inclusion (0.0491), so its prediction errors are smaller on average and it is more reliable. MAPE is a normalized measure of error; the lower MAPE of the classic approach (2.03%, against 3.40% with OBV inclusion) indicates more accurate predictions on a percentage basis. R-squared indicates how well the model explains the variance in the target variable; the classic approach (0.99, i.e. 99%) has a higher R-squared than the OBV inclusion approach (0.95), so it explains more of the variance in the data and is likely the better model.

Overall, the classic approach outperforms the OBV inclusion approach across all the evaluated metrics. The lower error rates and higher R-squared value suggest that the inclusion of OBV (On-Balance Volume) did not improve SVR model performance but rather introduced more complexity to the model.
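For reference, the regression metrics compared above can be computed as in the following minimal sketch. It uses only the Python standard library, and the function name `regression_metrics` and the sample values are illustrative assumptions rather than the study's code or data.

```python
import math

def regression_metrics(actual, predicted):
    """Return MAE, MSE, RMSE, MAPE (%) and R-squared for two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    # MAPE divides by the actual values, so it assumes none of them is zero.
    mape = 100.0 * sum(abs(e / a) for e, a in zip(errors, actual)) / n
    mean_actual = sum(actual) / n
    ss_res = sum(e * e for e in errors)                    # residual sum of squares
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)   # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "MAPE": mape, "R2": r2}

# Hypothetical index levels, not taken from the NGX dataset.
actual = [100.0, 110.0, 120.0, 130.0]
predicted = [102.0, 108.0, 123.0, 129.0]
metrics = regression_metrics(actual, predicted)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because MSE and RMSE square the errors, a single large miss moves them more than it moves MAE, which is why the metrics are read together rather than individually.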

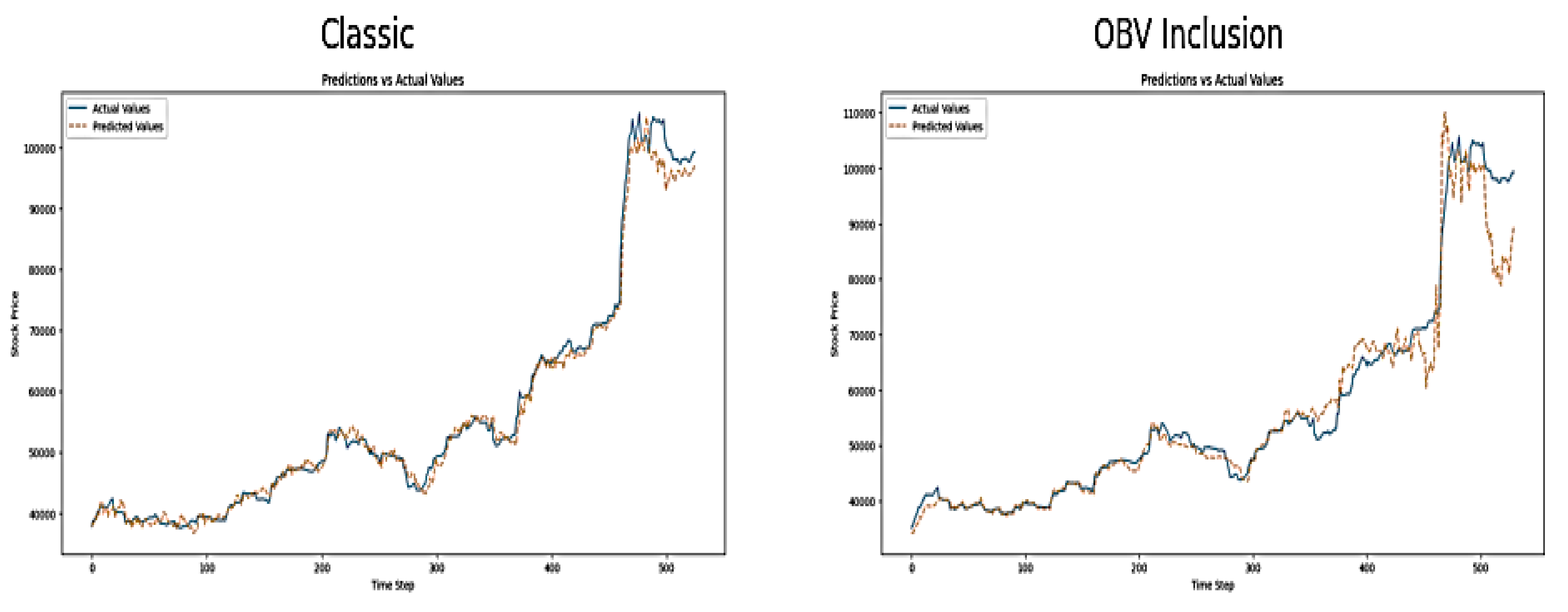

Visual Inspection

The plots of predicted and actual values for the classic and OBV inclusion approaches are shown in Figure 2 below:

Figure 2.

Plot of Predictions versus Actual Values on SVR Model.


There is a close alignment between the predicted and actual NGX index values for the classic approach, especially in the early and middle segments of the dataset. This shows the effectiveness of the model in capturing general market trends. However, there are some divergences towards the end of the series, particularly around the peak. The OBV inclusion approach, on the other hand, shows significant divergence between the predicted and actual values, particularly after the stock price peak. This indicates that the inclusion of OBV might be leading to poor model calibration during volatile market periods. The SVR model seems to struggle with high volatility, which makes the predicted prices overshoot or lag behind the actual prices and reduces predictive accuracy.

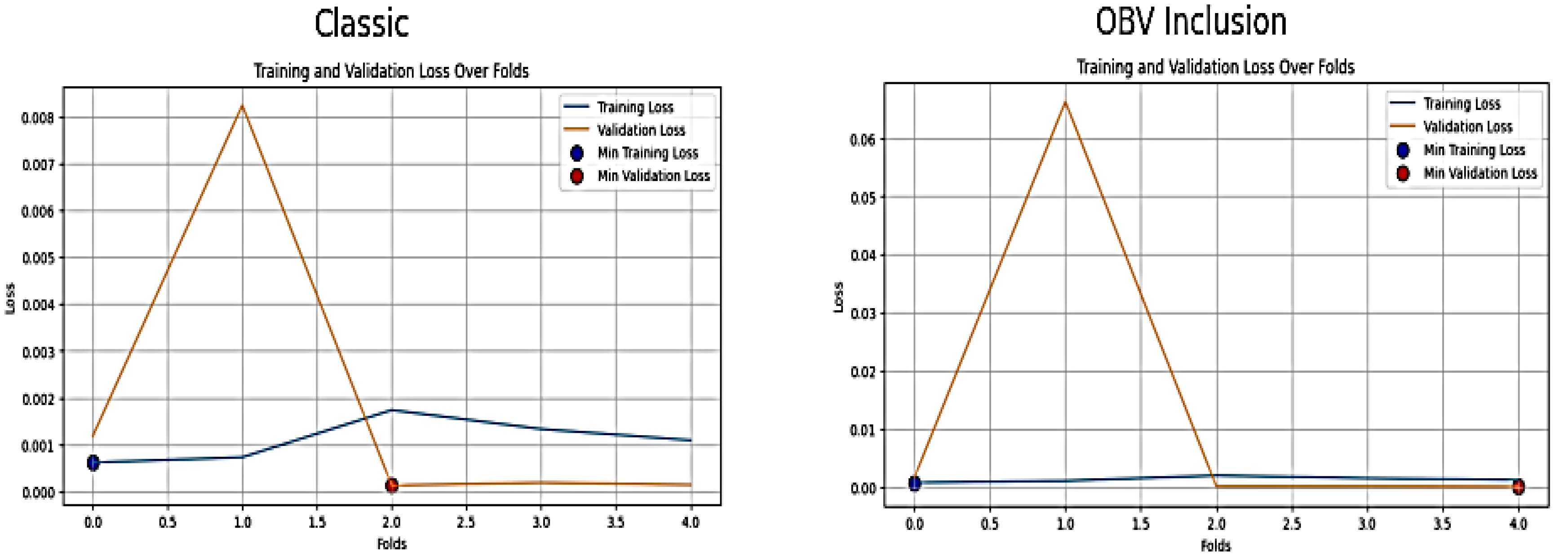

Diagnostic Evaluation

The training loss over folds in Figure 3 below shows that the classic approach has a low and relatively stable training loss, with a minimum of 0.000620 at fold 1. The consistently low loss values suggest that the classic model generalizes well, with minimal overfitting. The validation loss is also low (average loss: 0.00197), with a minimum of 0.000133 at fold 3. However, there is a spike at fold 2, which was initially attributed to an outlier or to a subset of the data that is fundamentally more difficult to model. The inclusion of OBV in the SVR model introduces higher variability in the validation loss (average loss: 0.0136) than the classic approach, with a minimum of 0.000093 at fold 5. The large spike at fold 2 of the OBV inclusion approach could likewise initially indicate overfitting, poor generalization on that specific subset of the data, the inability of OBV to improve model stability, or the introduction of noise and complexity that SVR could not withstand. These observations prompted further checks to resolve the spike: revisiting data scaling, outlier analysis, re-running hyperparameter tuning with more constraints, a slight increase in regularization, and careful feature re-engineering. None of these adjustments produced an improvement; instead, they worsened the results, so the original result plotted below was retained:

Moreover, removing perceived outliers from the NGX time series is more challenging than with non-temporal data, because of inherent characteristics of time series data such as temporal dependency, the risk of losing valuable data, and the impact on model performance. NGX datasets are sequential in nature, and removing a perceived outlier might disrupt these temporal dependencies, leading to inaccurate analysis of price movement and prediction. The definition of an outlier in time series data is also context-based: stock market variation is complex, and what is perceived as an outlier could represent a significant market event, such as a market crash or natural disaster, that should be analyzed rather than removed. The normal value in time series data changes over time due to trends, seasonal patterns, and other factors. According to Tawakuli, traditional outlier detection methods (like Z-scores) may not work well because they assume a static baseline or distribution [18].

Further investigation into the actual cause of the fold 2 spike in the validation loss found that the period was marked by high market volatility and non-linearity that the RBF (Radial Basis Function) kernel of SVR could not capture well. The RBF kernel in SVR is specifically designed to capture nonlinear relationships in the data [19]. However, it struggled to capture NGX market patterns at this fold. This could be because stock prices are influenced by a wide range of factors, including economic events, investor sentiment, and market anomalies, which are often highly volatile, non-stationary, and subject to sudden changes. Despite the regularization put in place and the addition of technical indicators such as moving averages to smooth the series and reduce the impact of the underlying market trend, the SVR model still generated a higher validation loss during this fold.
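The point about Z-scores assuming a static baseline can be illustrated with a small sketch. The rolling Z-score below is an illustrative alternative, not a method used in the study, and the trending series with a single shock is hypothetical. A whole-sample Z-score measures each point against one fixed mean, whereas a rolling Z-score measures it against the most recent observations.

```python
import statistics

def global_zscores(series):
    """Z-score of each point against the mean and spread of the whole sample."""
    mean = statistics.fmean(series)
    std = statistics.pstdev(series)
    return [(x - mean) / std for x in series]

def rolling_zscores(series, window):
    """Z-score of each point against the `window` observations before it.

    The first `window` points have no history and are returned as None.
    """
    scores = [None] * len(series)
    for i in range(window, len(series)):
        past = series[i - window:i]
        std = statistics.pstdev(past)
        scores[i] = (series[i] - statistics.fmean(past)) / std if std > 0 else 0.0
    return scores

# Hypothetical upward-trending series with one shock at index 15.
series = [100.0 + 10.0 * t for t in range(30)]
series[15] += 80.0

threshold = 3.0
global_flags = [i for i, z in enumerate(global_zscores(series)) if abs(z) > threshold]
rolling_flags = [
    i for i, z in enumerate(rolling_zscores(series, window=5))
    if z is not None and abs(z) > threshold
]
print("flagged by whole-sample Z-score:", global_flags)
print("flagged by rolling Z-score:", rolling_flags)
```

In this toy series the trend inflates the whole-sample spread so much that the shock is not flagged at all, while the rolling version isolates it. Whether a flagged point should be removed is a separate question, as argued above.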

Figure 3.

Training and Validation Loss of SVR Model over Folds.


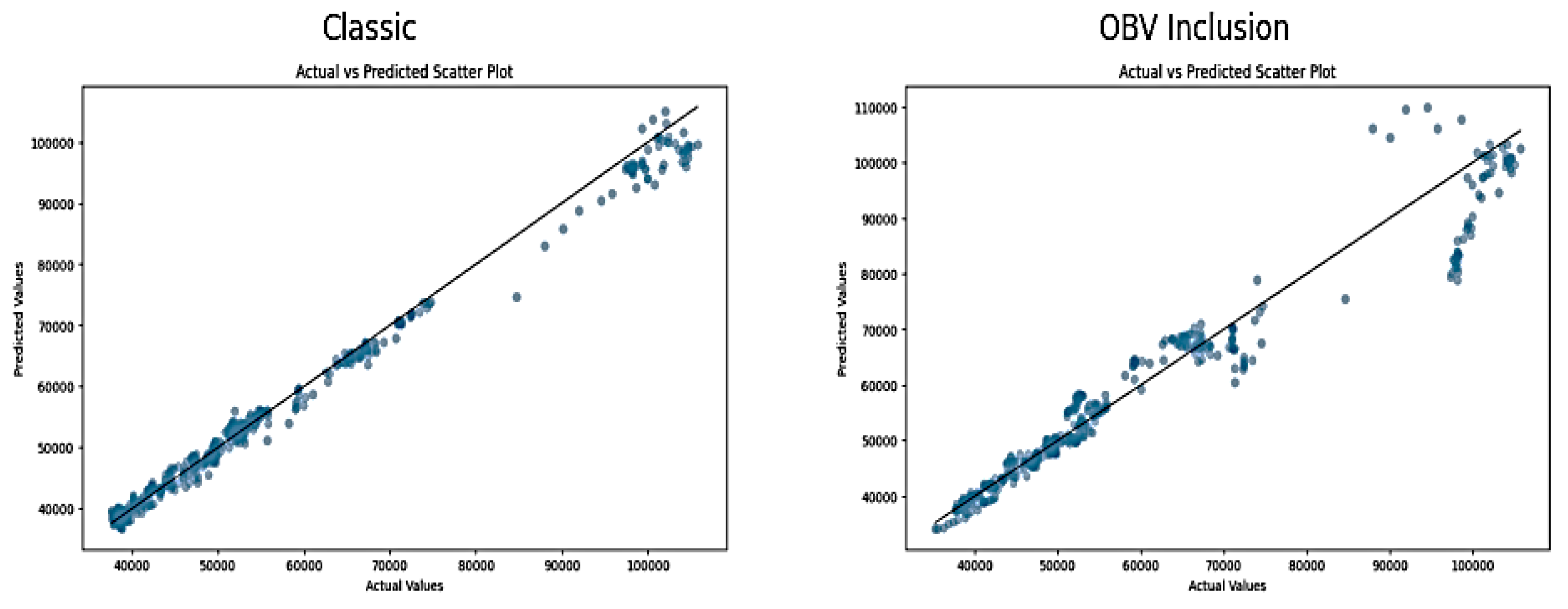

The actual versus predicted scatter plot of the classic approach below shows a strong linear relationship between the actual and predicted NGX values, with most points lying close to the diagonal line. This shows that the SVR model is generally accurate in predicting the NGX price index, with relatively few extreme outliers.

Figure 4.

Scatter plot of Actual versus Predicted NGX Index Value on SVR.


The "OBV Inclusion" scatter plot shows a more dispersed relationship, as shown by several data points that deviate slightly from the diagonal line. The dispersion and occurrence of deviant data points indicate that the On-Balance Volume might be introducing more complexity instead of providing valuable predictive insights. This reduces the accuracy of the model.

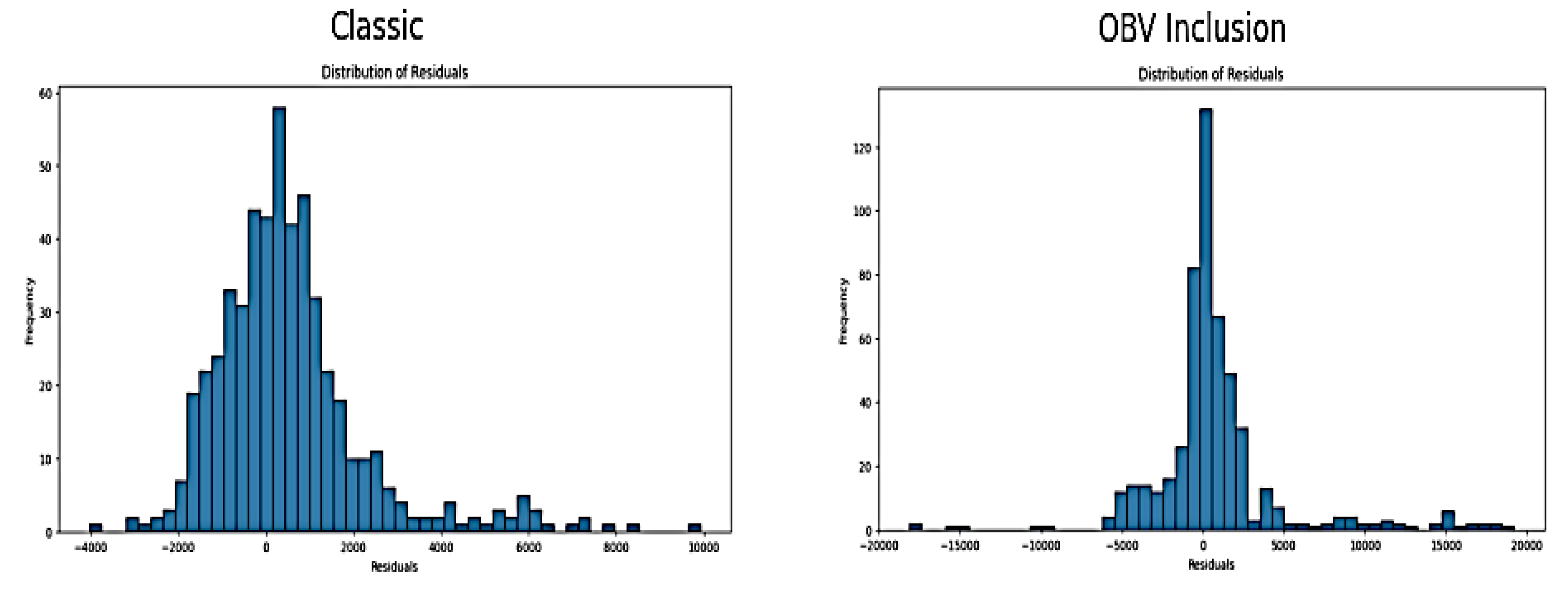

Residuals are the differences between the actual observed values and the values predicted by the model. The distributions of residuals for the classic and OBV inclusion approaches are shown in Figure 5 below:

Figure 5.

Distribution of Residual of SVR Classic and OBV Inclusion Approach.


The residual distribution for the classic model is relatively centered around zero, with a bell-shaped distribution. This suggests that the errors are approximately normally distributed and that there is no significant bias in the SVR model's predictions. The residual distribution for the OBV inclusion approach, on the other hand, shows a wider spread and more extreme residuals, especially on the positive side. Since residuals are defined as actual minus predicted values, this skewed distribution suggests that the model frequently underestimates the NGX value in certain market conditions, which leads to a less accurate model.

The classic approach appears to be the more reliable of the two SVR configurations for NGX value prediction. The inclusion of the OBV feature adds complexity that the SVR model cannot handle effectively. Although the regression metrics are high, the further diagnostics tell a different story: the increased variability in validation loss, the poorer alignment in the predictions versus actual plot, and the wider spread of the residual distribution when moving from the classic approach to the OBV inclusion method suggest that the SVR model cannot withstand NGX market complexity, high market volatility and non-linearity, market shocks, and market reversals. SVR is therefore not the best model for predicting this NGX dataset.

3.3.2. Recurrent Neural Network

The best initial SimpleRNN result, obtained by manually combining hyperparameters (without hyperparameter optimisation) with an input shape of 60-day time-steps, was unsatisfactory. The model performed moderately with a 30-day time-step, a SimpleRNN input layer of 50 units, a dropout of 0.2, a dense layer of 1 unit, and the input features of the classic approach. The results for the best 30-day time-step model, with MSE as the training and validation loss, are shown in the table below as normalised values and as values transformed back to the original scale:

Table 3.

Evaluation Metrics of the best Un-optimised Simple RNN model.


| Metric Evaluation | 30 day step-time (Normalised value) | Transformed Original value |
| --- | --- | --- |
| MAE | 0.07483 | 6434.68 |
| MSE | 0.01043 | 77130110.92 |
| RMSE | 0.10213 | 8782.37 |
| MAPE (%) | 11.27 | 11.27 |
| R-Squared | 0.78613 | 0.79 |

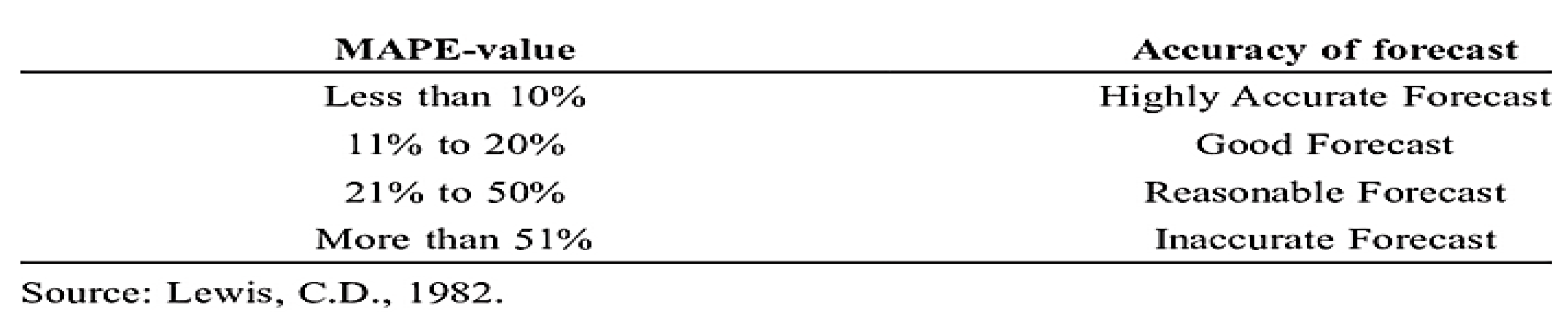

Both the normalised and the transformed original values show high training and validation loss and high error on the NGX test dataset, with MAE (0.0748), MSE (0.010), RMSE (0.10), MAPE (11%) and a suboptimal R-squared of 0.79. Although MSE is differentiable everywhere, whereas MAE is not differentiable at zero, the high error on the other metrics confirms the inaccuracy of the regression model. MAPE is the percentage error between the model's fitted values and the observed data values. According to Lewis, C.D., as shown in the table below, an error rate of 11% is a good forecast but is not highly accurate [22].

Table 12.

Acceptable MAPE Values.


However, acceptability depends on the industry, application, and specific context. In the NGX stock market, a MAPE above 10% is considered inaccurate because of the financial implications and the potential loss on investment. The error and loss results above clearly show that, in practice, SimpleRNN cannot perform accurately on the NGX dataset without hyperparameter fine-tuning. In addition, the plot of actual versus predicted NGX values below shows the inability of the Simple RNN model with the classic approach to predict the underlying stock market patterns accurately, especially during volatile periods, when the error rate was high enough to cause wide deviations of the predicted price from the actual price. The plot of actual versus predicted NGX price value is shown below:

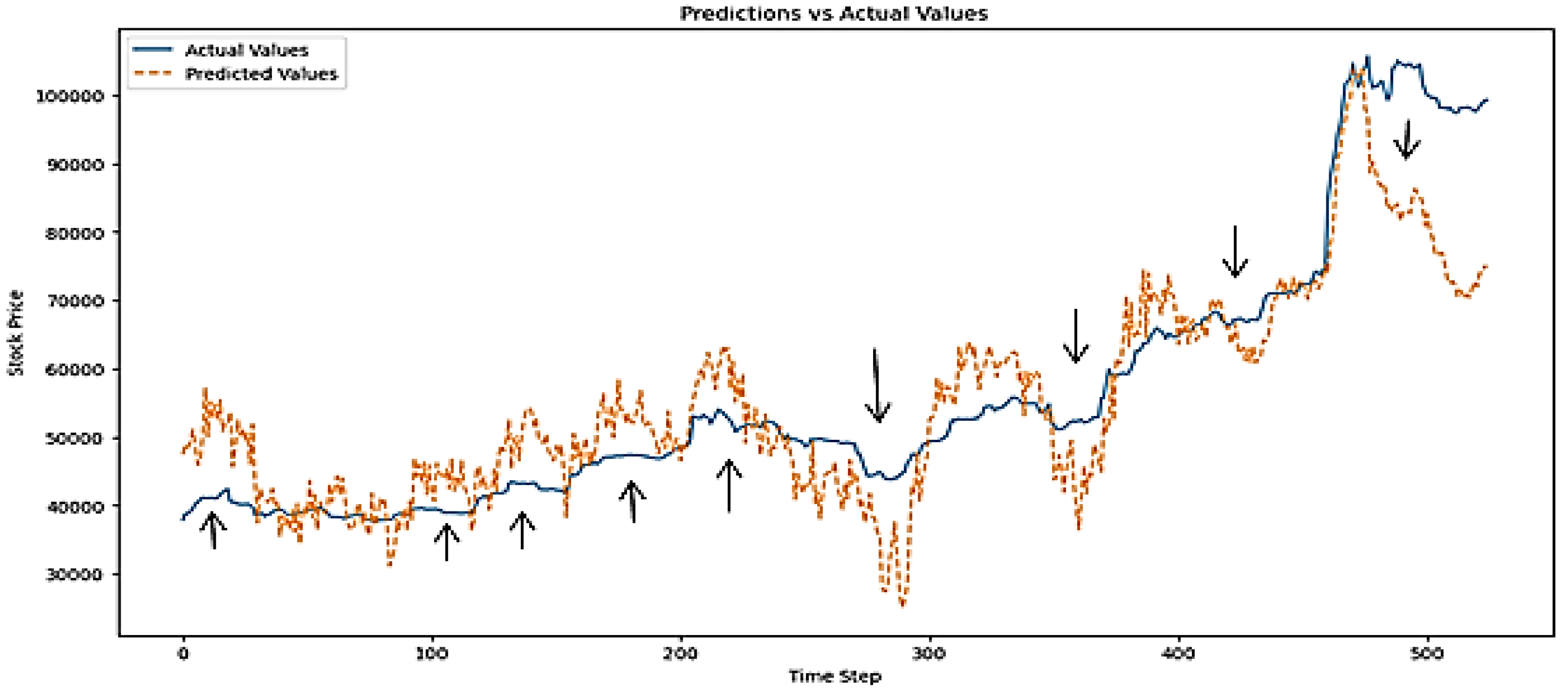

Figure 6.

Plot of Actual versus Predicted NGX Values on Un-optimised RNN Model.


The un-optimised SimpleRNN results led to the adoption of an optimisation technique, Optuna, to fine-tune the hyperparameters and generate the best hyperparameters for training the RNN model.

A custom objective function and constraints were defined for Optuna to search over different hyperparameter combinations (mentioned during model training) and generate the best hyperparameters for optimising the evaluation results of the SimpleRNN model. Prediction was done using both the classic and the OBV inclusion approaches. The financial market has several endogenous variables, such as volatility, dividend yield, bid-ask spread and market sentiment, but the main endogenous variables are price, volume and returns. Endogenous variables are those whose values are determined within a system [23]. Although the majority of NGX dealing members use returns only, in an ARIMA model, for prediction, this study used both the classic and the OBV inclusion methods to comprehensively investigate their impact on price prediction with different models. The best hyperparameters generated are tabulated below:

Table 4.

Simple RNN Best Hyperparameter.


| Best Hyperparameter | Classic Approach, 30 day time-step | Classic Approach, 60 day time-step | OBV Inclusion Approach, 30 day time-step | OBV Inclusion Approach, 60 day time-step |
| --- | --- | --- | --- | --- |
| Units | 122 | 105 | 94 | 79 |
| Dropout rate | 0.21057927576994717 | 0.15860460902099602 | 0.39808512912645366 | 0.28896636206794524 |
| Batch size | 32 | 64 | 32 | 64 |
| Learning rate | 0.00045995847308281305 | 0.004964007144265714 | 0.0033264056797172536 | 0.009618218785212735 |

Classic Approach: input features include Returns, Historical Price and technical indicators only. OBV Inclusion Approach: all features and On-Balance Volume.

The table below shows the result of the evaluation metrics of SimpleRNN model:

Table 5.

Performance of RNN model using Evaluation Metrics.


| RNN Model | Classic Approach, 30 day time-step | Classic Approach, 60 day time-step | OBV Inclusion Approach, 30 day time-step | OBV Inclusion Approach, 60 day time-step |
| --- | --- | --- | --- | --- |
| Training Loss | 0.004067 | 0.004150 | 0.005530 | 0.003635 |
| Validation Loss | 0.000125 | 0.000096 | 0.000138 | 0.000119 |
| Test Loss | 0.000388 | 0.000613 | 0.000872 | 0.000982 |
| Test Evaluation Metrics | | | | |
| MAE | 0.011321 (973.52) | 0.012490 (1074.05) | 0.019642 (1689.04) | 0.017465 (1501.84) |
| MSE | 0.000388 (2870386.08) | 0.000613 (4533240.36) | 0.000872 (6447080.45) | 0.000982 (7264937.75) |
| RMSE | 0.019702 (1694.22) | 0.024760 (2129.14) | 0.029528 (2539.11) | 0.031345 (2695.35) |
| MAPE (%) | 1.57 | 1.65 | 2.72 | 2.17 |
| R-Squared | 0.992 | 0.987 | 0.982 | 0.980 |

Classic Approach: input features include Returns, Historical Price and technical indicators only. OBV Inclusion Approach: all features and On-Balance Volume.

The training loss reflects how well the model fits the training data. As shown in the table above, the training loss of the classic approach increased from 0.004067 at 30 time-steps to 0.004150 at 60 time-steps. This indicates that the SimpleRNN model may find it harder to learn as the number of time-steps increases. Salem, F.M.'s study of RNN limits from system analysis and filtering viewpoints emphasised the vanishing gradient problem attributable to RNNs [24]. The present results are consistent with that problem, which makes SimpleRNN less effective at capturing long-term dependencies. However, when OBV is added to the classic input features, the training loss falls from 0.005530 to 0.003635. This suggests that including OBV alongside the classic price prediction features may help to ameliorate the effect of the SimpleRNN vanishing gradient problem over the medium to long term. This appears to be a novel observation: no prior evidence was found to support it, and this area of research does not appear to have been extensively explored.

The minimum loss observed from time series cross-validation is useful for model selection on time series data. The Simple RNN validation loss reduces from 0.000125 (30 time-steps) to 0.000096 (60 time-steps) with the classic method, and from 0.000138 (30 time-steps) to 0.000119 (60 time-steps) with OBV inclusion. The low values in both setups show robust RNN performance and a good fit (without overfitting) across the different feature sets.

The test loss from the regression evaluation metrics shows the performance of the SimpleRNN model on unseen data that did not pass through the training and validation phases, and so provides an assessment of the model's predictive power. The test MSE is closely aligned with the validation MSE in both approaches, which suggests that the model generalizes well to real-world situations. However, the test MSE increases from 0.000388 to 0.000613 with the classic input features and from 0.000872 to 0.000982 with the inclusion of OBV. This increase in test loss as the time-step increases can be attributed to the RNN's difficulty in generalizing to longer sequences. Transforming the normalised test MSE back to the original scale, to gauge the significance of the increase, shows a wide margin for the classic approach, from 2,870,386 (30 time-steps) to 4,533,240 (60 time-steps), and a marginal increase for OBV inclusion, from 6,447,080 (30 time-steps) to 7,264,937 (60 time-steps).

Mean Squared Error (MSE) penalizes large errors more heavily, because squaring the differences lets them affect the overall error metric disproportionately. It is therefore important to consider other error metrics for a more comprehensive evaluation of SimpleRNN performance. The MAE for the classic approach increases slightly, from 0.011 (973.5) at 30 time-steps to 0.012 (1074) at 60 time-steps. In contrast, the MAE for the OBV inclusion approach decreases from 0.020 (1689) at 30 time-steps to 0.017 (1501) at 60 time-steps. The absolute error of the OBV inclusion approach therefore falls over the longer window, while that of the classic prediction method rises. Despite the reduction, the absolute error of the OBV inclusion approach remains higher than that of the classic approach over short to medium periods. This could be due to initial complexity: as the model processes more data over longer sequences, it likely becomes better at leveraging OBV for improved performance. Further research is needed to evaluate the long-term impact of the OBV approach and to confirm the extent of its effectiveness over extended time periods.

The MAPE results follow the same pattern as MAE, with the error relative to the actual values lying between 1.5% and 2.7%. This is low, with OBV inclusion showing the larger error. RMSE increases with the time-step in both the classic and the OBV inclusion methods, which indicates that errors are larger with more features and longer time-steps. The contradiction between the RMSE and MAE results for OBV inclusion may be due to specific data segments that are difficult for the RNN to model accurately. Despite the variation in the other metrics, the R-squared of both approaches remains high, ranging from 0.98 to 0.99.
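The relationship between the normalised errors and the original-scale values in parentheses can be checked with a short sketch. It assumes, without confirmation from the text, that the target was min-max scaled, so that MAE and RMSE scale with the range (maximum minus minimum) of the index and MSE scales with the square of that range. The range is not reported; here it is inferred from one MAE pair in Table 5, and the function names are illustrative.

```python
def implied_range(mae_norm, mae_orig):
    """Range (max - min) implied by one normalised/original MAE pair,
    assuming min-max scaling of the target."""
    return mae_orig / mae_norm

def rescale_errors(mae_norm, mse_norm, rmse_norm, value_range):
    """Map errors from the normalised scale back to the original scale."""
    return {
        "MAE": mae_norm * value_range,        # absolute errors scale linearly
        "MSE": mse_norm * value_range ** 2,   # squared errors scale quadratically
        "RMSE": rmse_norm * value_range,
    }

# Classic approach, 30-day time-step, values as reported in Table 5.
value_range = implied_range(0.011321, 973.52)
rescaled = rescale_errors(0.011321, 0.000388, 0.019702, value_range)

print(f"implied range: {value_range:.1f}")
print(f"RMSE: {rescaled['RMSE']:.2f}  (reported: 1694.22)")
print(f"MSE:  {rescaled['MSE']:.2f}  (reported: 2870386.08)")
```

Under this assumption the recomputed RMSE and MSE agree with the reported values to within the rounding of the normalised figures. The quadratic scaling also explains why modest differences in normalised MSE look large on the original scale.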

In conclusion, adding OBV to the classic price prediction method reduces the training loss over the medium to long term, may minimize the error relative to the actual value over the long term, and can capture more market dynamics. However, its error rate within the 30 to 60 day time-step window (short to medium term) is slightly higher than that of the classic approach. This could reflect the adjustment period that the RNN model requires to utilize OBV effectively. The results also show that the error rate of the classic method increases consistently as the time-step increases, which clearly indicates that SimpleRNN is ineffective at capturing medium to long-term dependencies in financial time series.
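The 30 and 60 day time-steps compared above correspond to the length of the input window given to the recurrent model. The sketch below shows one common way to build such windows. It assumes a one-step-ahead target and a single feature for brevity, whereas the study uses several input features and does not spell out its exact windowing code. The name `make_windows` and the sample series are illustrative.

```python
def make_windows(values, time_steps):
    """Split a series into (window, next_value) pairs.

    Each input holds `time_steps` consecutive observations and the target
    is the observation that immediately follows the window.
    """
    inputs, targets = [], []
    for end in range(time_steps, len(values)):
        inputs.append(values[end - time_steps:end])
        targets.append(values[end])
    return inputs, targets

# Hypothetical series of 100 observations standing in for normalised prices.
series = [float(v) for v in range(100)]

x30, y30 = make_windows(series, time_steps=30)
x60, y60 = make_windows(series, time_steps=60)
print(len(x30), len(x30[0]))  # number of samples and window length at 30 steps
print(len(x60), len(x60[0]))  # number of samples and window length at 60 steps
```

A longer window gives each sample more history but leaves fewer samples from the same series, and it lengthens the sequence through which gradients must flow during training.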

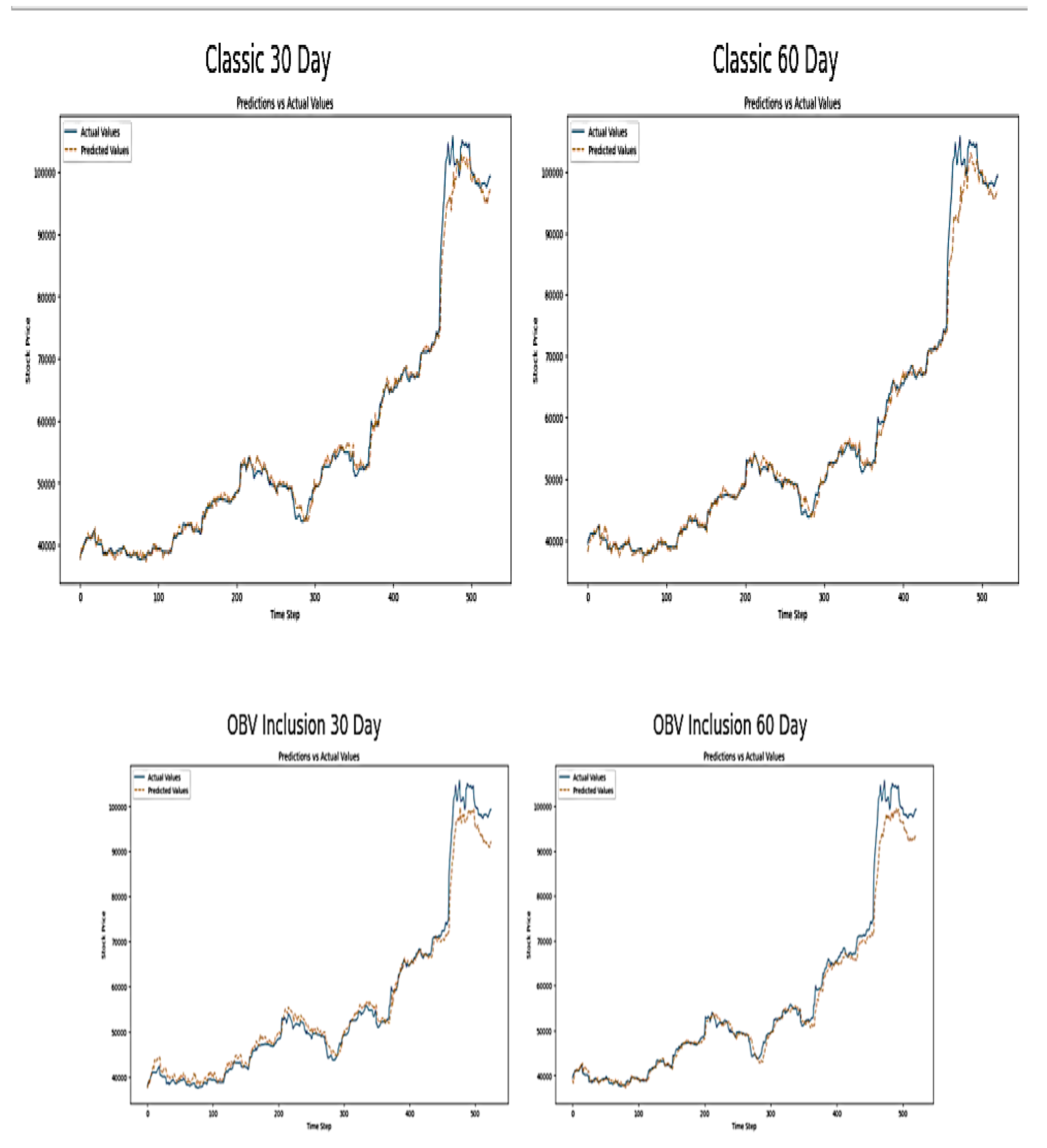

Visual Inspection

The plots below show the actual and predicted NGX values modelled over 30 and 60 time-step periods. For the classic approach, the predicted values at both 30-day and 60-day time-steps closely follow the actual NGX market values. This shows that the RNN can capture trends and fluctuations in stock prices, and suggests good model accuracy. The classic 30-day time-step model, however, gives a smoother prediction, which confirms that shorter time-steps allow the RNN to capture underlying patterns better.

Figure 7.

Plot of Actual versus Predicted NGX Price Index on RNN Model.


The plot of actual and predicted NGX index values at 30 and 60 time-steps above shows that adding OBV to the SimpleRNN exposes the model to potentially high volatility in its predictions, which the scatter plot below further confirms. This price movement shows sensitivity to volume changes (which OBV measures) and indicates that OBV helps the model to respond dynamically to significant price movements. Compared with the classic approach, the OBV inclusion method appears to make the RNN predictions more responsive to sudden changes in the market. This can increase accuracy during volatile periods but may also introduce noise into the predictions during stable periods, as can be observed in the slightly higher deviations from the actual values towards the end of the OBV graphs.

Diagnostic Evaluation

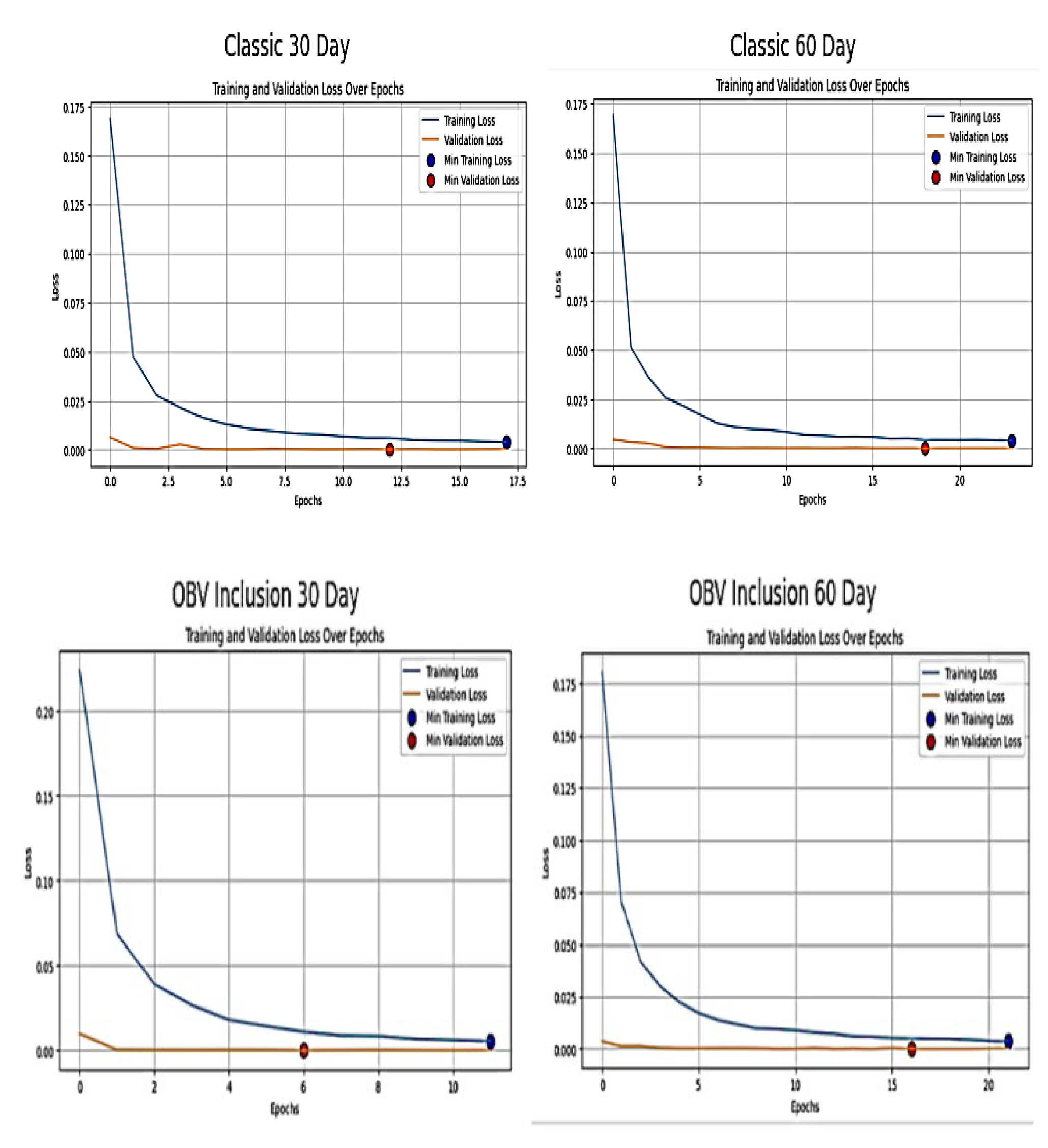

The plot below shows the training and validation loss of the Simple RNN over a series of epochs using the classic and OBV inclusion approaches.

Figure 8.

Training and Validation Loss over Epochs of RNN Model.


The training loss of the classic 30 time-step model in the figure above declines rapidly and steeply in the first few epochs before reaching a minimum of 0.002479. This suggests that the SimpleRNN model learns quickly from the training dataset. The validation loss behaves in the same way but flattens out before reaching its minimum, which indicates that the model generalizes well to unseen data without overfitting. The training loss of the classic 60 time-step model follows a similar pattern, with a slightly higher initial loss between epochs 0 and 5, before reaching a minimum of 0.002. This indicates that the RNN has more difficulty learning longer and more complex sequences. The validation loss decreases in line with the training loss and stabilizes at a minimum of 0.00008, which shows good model performance and generalization.

The training loss of the 30 time-step OBV inclusion model starts higher than that of the classic 30 time-step model, reflecting the initial complexity added by the OBV feature. However, it decreases quickly to a minimum of 0.0055, which implies quick learning by the Simple RNN model. The validation loss also starts higher but decreases steadily to a minimum of 0.000138, which implies that the addition of OBV helps the model to capture more complex patterns. The training loss of the 60 time-step OBV inclusion model is similar to that of the classic 60 time-step model: it starts higher and falls rapidly to a minimum of 0.0036. This shows that the OBV inclusion method does not significantly change the learning pattern but makes the RNN architecture more robust. The validation loss falls in sync with the training loss to a minimum of 0.0001, which implies that the RNN model generalizes well despite the added complexity of OBV. In short, adding OBV to the classic approach provides meaningful information for prediction. Most of the learning occurs in the early epochs, although the loss continues to reduce as training progresses. This is typical of neural network training, where early iterations make significant updates to the weights.

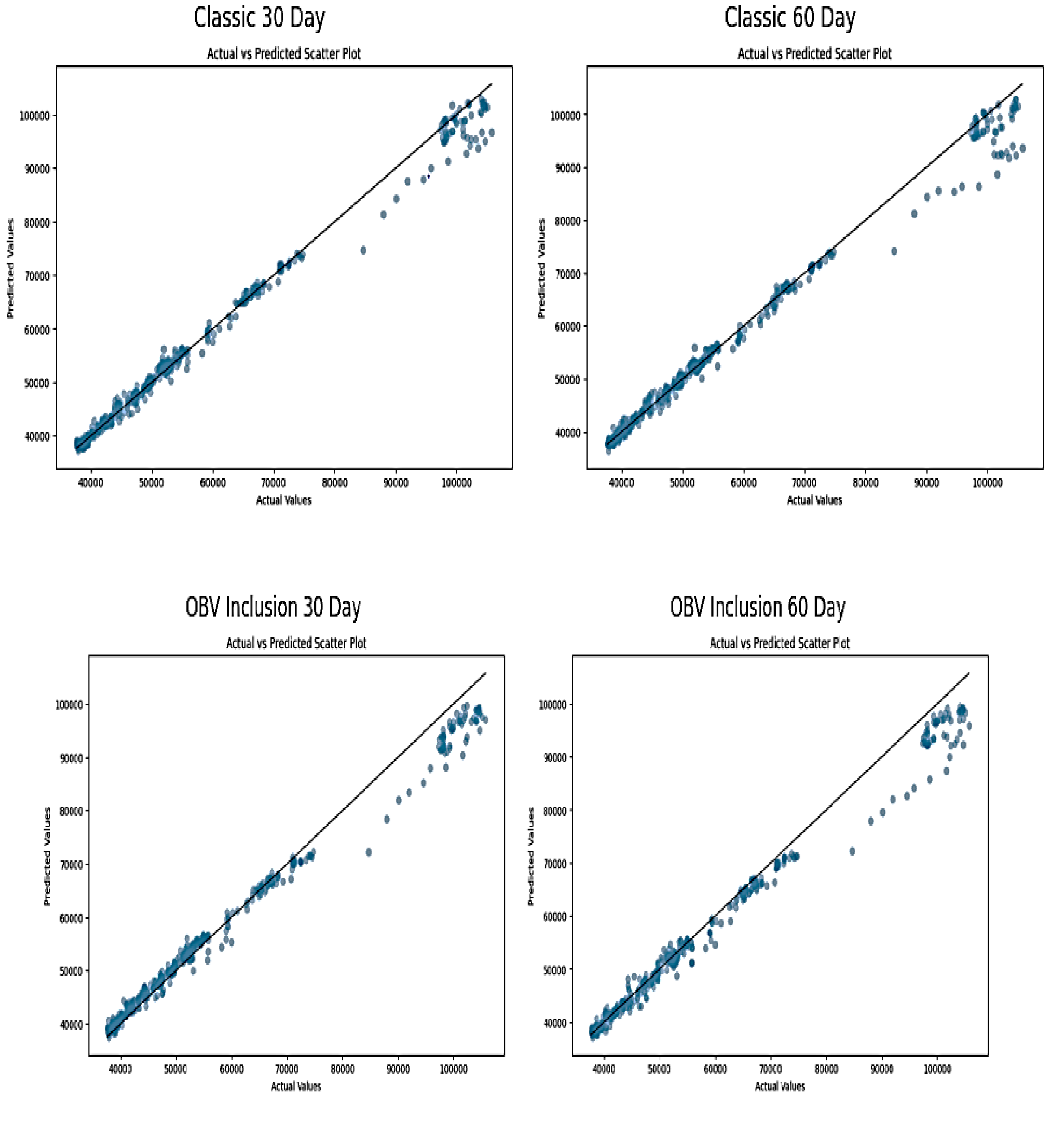

The scatter plots below show the relationship between the actual stock prices and those predicted by the RNN models. These plots are useful for assessing the accuracy and predictive power of models used in forecasting financial market data. For the classic approach at both 30 and 60 time-steps, the data points cluster near the diagonal line, which represents perfect prediction, indicating a strong linear relationship between the actual and predicted values. This suggests good accuracy of the RNN model, and the near alignment shows that the classic approach is good at capturing the underlying trend. However, the 60-day model shows a slightly looser cluster around the line, which implies less accuracy with a longer data sequence.

Figure 9.

Plots of Actual and Predicted NGX Price Index Scatter Plot of RNN Model.


The plots for OBV inclusion at 30 and 60 time-steps show a good correspondence between actual and predicted values at the beginning, a looser fit towards the end, and a tight fit again at the end. OBV integration thus appears to preserve prediction quality and to provide volume-related information that complements price data, especially in the 60-day model, where data points are closer to the perfect prediction line. A comparison of the four plots shows consistently high accuracy, with slight differences in the spread of points around the diagonal. In general, the results suggest robust model performance across varying configurations, which validates the use of RNNs in financial forecasting. The analysis reflects the RNN's ability to learn from historical data to predict future values, and demonstrates the potential benefits of sophisticated models in financial forecasting.

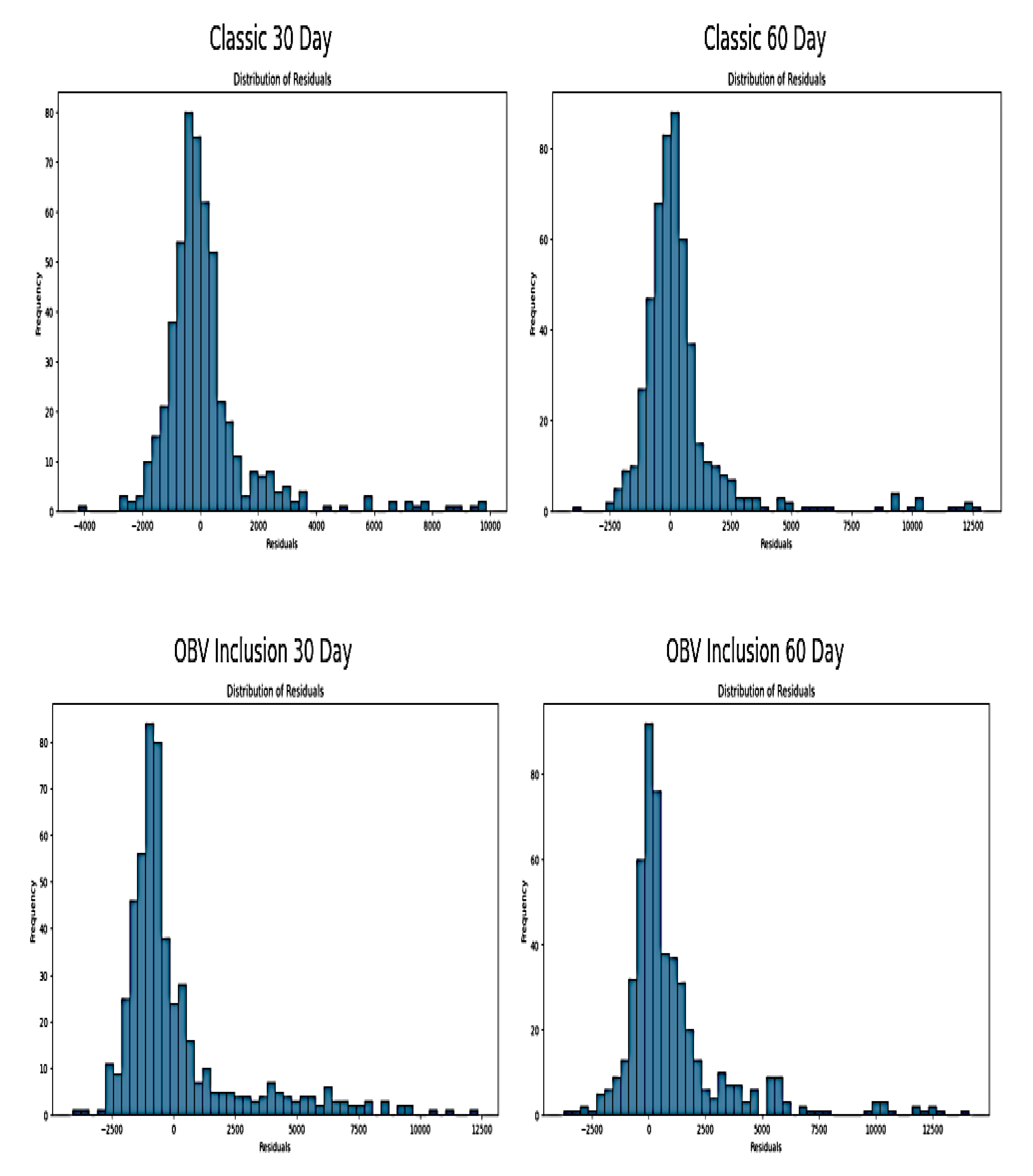

The residual plots below show the distribution of residuals (i.e. the differences between actual and predicted values) of the SimpleRNN models used for predicting the NGX price index. For the classic approach, the 30 and 60 time-step distributions appear positively skewed, with a long tail towards the right. Residual frequency decreases gradually from negative to positive values, reflecting occasional large positive residuals where the prediction is lower than the actual value. The residuals are centered around zero, with the 60-day plot appearing to have a slightly tighter distribution than the 30-day plot, which indicates that the model's predictions are generally accurate. The residuals range from about -4,000 to 10,000 for the classic 30-day model and from approximately -2,500 to over 12,500 for the classic 60-day model.

Figure 10.

Plots of Distribution of Residuals of RNN Model.


The residual plots for OBV inclusion are similar to the classic plots. The majority of the residuals are centred around zero, though with a different, narrow spread, which indicates that on average the model predictions are close to the actual values. The distribution is positively skewed, which implies that there are more large positive residuals than large negative ones: the model occasionally underestimates the actual values, as previously shown in the plot of actual versus predicted values. The range of residuals, from approximately -2,500 to over 10,000, indicates variability in prediction accuracy across the dataset. The cluster around zero shows that the models generally predict close to the actual values, while the tails show the instances where predictions differ from the actual values. The positive skewness could be attributed to underlying characteristics of the NGX data, such as non-linearities, fat tails and asymmetrical volatility, which were already identified in the initial EDA before model testing.
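The reading of the residual histograms above can be complemented by simple summary statistics. The following sketch computes the mean, standard deviation and moment-based skewness of residuals defined as actual minus predicted. The data are hypothetical and the helper `residual_summary` is an illustrative assumption, not part of the study's code.

```python
import statistics

def residual_summary(actual, predicted):
    """Mean, population standard deviation and skewness of actual - predicted."""
    residuals = [a - p for a, p in zip(actual, predicted)]
    mean = statistics.fmean(residuals)
    std = statistics.pstdev(residuals)
    if std == 0:
        raise ValueError("residuals are constant; skewness is undefined")
    # Third standardised moment: positive when the right tail is longer.
    skewness = sum(((r - mean) / std) ** 3 for r in residuals) / len(residuals)
    return {"mean": mean, "std": std, "skewness": skewness}

# Hypothetical values: the prediction is usually close, but one actual value
# lies well above it, giving a single large positive residual.
predicted = [100.0] * 8
actual = [99.0, 100.0, 99.0, 100.0, 101.0, 100.0, 99.0, 108.0]

summary = residual_summary(actual, predicted)
print(summary)
```

With this sign convention, a positive skewness together with a mean near zero matches the pattern described above: most predictions are close, with occasional underestimates forming the right tail.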

3.3.3. Long Short-Term Memory Network

LSTM followed a similar procedure to the Simple RNN, starting with a manual combination of different hyperparameters (without optimization) to identify the best configuration for the classic approach. The selected hyperparameters were: input shape (time-steps) of 30 and 60, input features comprising returns and technical indicators, dropout of 0.2, 60 units, a dense layer of 1 unit, batch size of 64, 50 epochs, the Adam optimizer, and a patience setting of 5. The LSTM results improved from the 30-day to the 60-day time-step. This is a clear initial indication that LSTM can largely capture underlying stock market patterns and learn long-term dependencies in time series without a hyperparameter optimiser, which makes it highly effective for financial or stock forecasting. For the record, the 60-day time-step was adopted because of its high accuracy, as shown in the table below:

Table 6.

Evaluation Metrics of the best Un-optimised LSTM model.


| Metric Evaluation | 60 day step-time (Normalised value) | Transformed Original value |
| --- | --- | --- |
| MAE | 0.015733 | 1352.93 |
| MSE | 0.000734 | 5425600.48 |
| RMSE | 0.027088 | 2329.29 |
| MAPE (%) | 2.02 | 2.02 |
| R-Squared | 0.985 | 0.98 |

The plot of actual and predicted value of NGX price is shown below:

Figure 11.

Plot of Actual versus Predicted value from LSTM.


The plot of actual versus predicted NGX price values shows that LSTM can predict accurately. However, the search for the lowest possible error, the highest accuracy and a generally better result than the one depicted above led to the use of Optuna for fine-tuning, to generate the best hyperparameters for training the LSTM model.

Model Evaluation

The study suggested various hyperparameter combinations for Optuna, including the number of units in each LSTM layer, the number of training instances used in each iterative cycle (batch size), the step size for updating the model weights (learning rate), and mitigation measures such as the dropout rate. It also set the optimizer for training and validation, the early stopping threshold, and the number of passes over the entire training dataset (epochs). The study also defined criteria for assessing model performance during training and validation. The best hyperparameters from the optimization process are tabulated below:

Table 7.

LSTM Optimal Hyperparameter.


| LSTM Model | Classic Approach (input features include returns, historical price and technical indicators only) | | OBV Inclusion Approach (all features and On-Balance Volume) | |
| --- | --- | --- | --- | --- |
| Best Hyperparameter | 30-day time step | 60-day time step | 30-day time step | 60-day time step |
| Units | 122 | 65 | 122 | 104 |
| Dropout rate | 0.10131318703976958 | 0.20445711460250626 | 0.27017135632721034 | 0.2657214090579303 |
| Batch size | 128 | 32 | 128 | 32 |
| Learning rate | 0.0038833897789281994 | 0.007529066043550584 | 0.009731527413432832 | 0.0036850279954446555 |
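The early-stopping rule used alongside the tuned hyperparameters can be sketched as follows. This is a minimal, framework-independent illustration of patience-based stopping on validation loss; the function name, the `min_delta` parameter, and the toy loss values are assumptions, and the patience of 5 simply mirrors the value reported for the un-optimised run.

```python
def early_stopping_epoch(val_losses, patience=5, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for per-epoch validation losses.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs. If that never
    happens, the last epoch is returned as the stop epoch.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Toy validation losses (illustrative only): improvement stalls after epoch 3.
toy_val_losses = [0.010, 0.006, 0.004, 0.0035, 0.0036,
                  0.0037, 0.0036, 0.0038, 0.0039, 0.0040]

stop_epoch, best_epoch = early_stopping_epoch(toy_val_losses, patience=5)
print(stop_epoch, best_epoch)  # 8 3
```

In practice the weights from the best epoch are usually restored after stopping, so the reported validation loss corresponds to `best_epoch` rather than `stop_epoch`.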

The table below shows the model evaluation results in both normalised and original (inverse-transformed) values:

Table 8.

LSTM Evaluation Metric Result.

| LSTM Model | Classic Approach | | OBV Inclusion Approach | |
| --- | --- | --- | --- | --- |
| Losses | 30-day time step | 60-day time step | 30-day time step | 60-day time step |
| Training Loss | 0.002148 | 0.001993 | 0.002493 | 0.002028 |
| Validation Loss | 0.000283 | 0.000077 | 0.000113 | 0.000076 |
| Test Loss | 0.000745 | 0.000485 | 0.0007561 | 0.000343 |
| Test Evaluation Metrics | | | | |
| MAE | 0.015346 (1319.58) | 0.012397 (1066.05) | 0.014333 (1232.53) | 0.009662 (830.84) |
| MSE | 0.000745 (5512396.17) | 0.000485 (3586624.63) | 0.000756 (5593662.96) | 0.000343 (2536732.36) |
| RMSE | 0.027303 (2347.85) | 0.022024 (1893.84) | 0.027504 (2365.09) | 0.018522 (1592.71) |
| MAPE (%) | 1.96 | 1.61 | 1.96 | 1.33 |
| R-Squared | 0.984 | 0.990 | 0.984 | 0.993 |

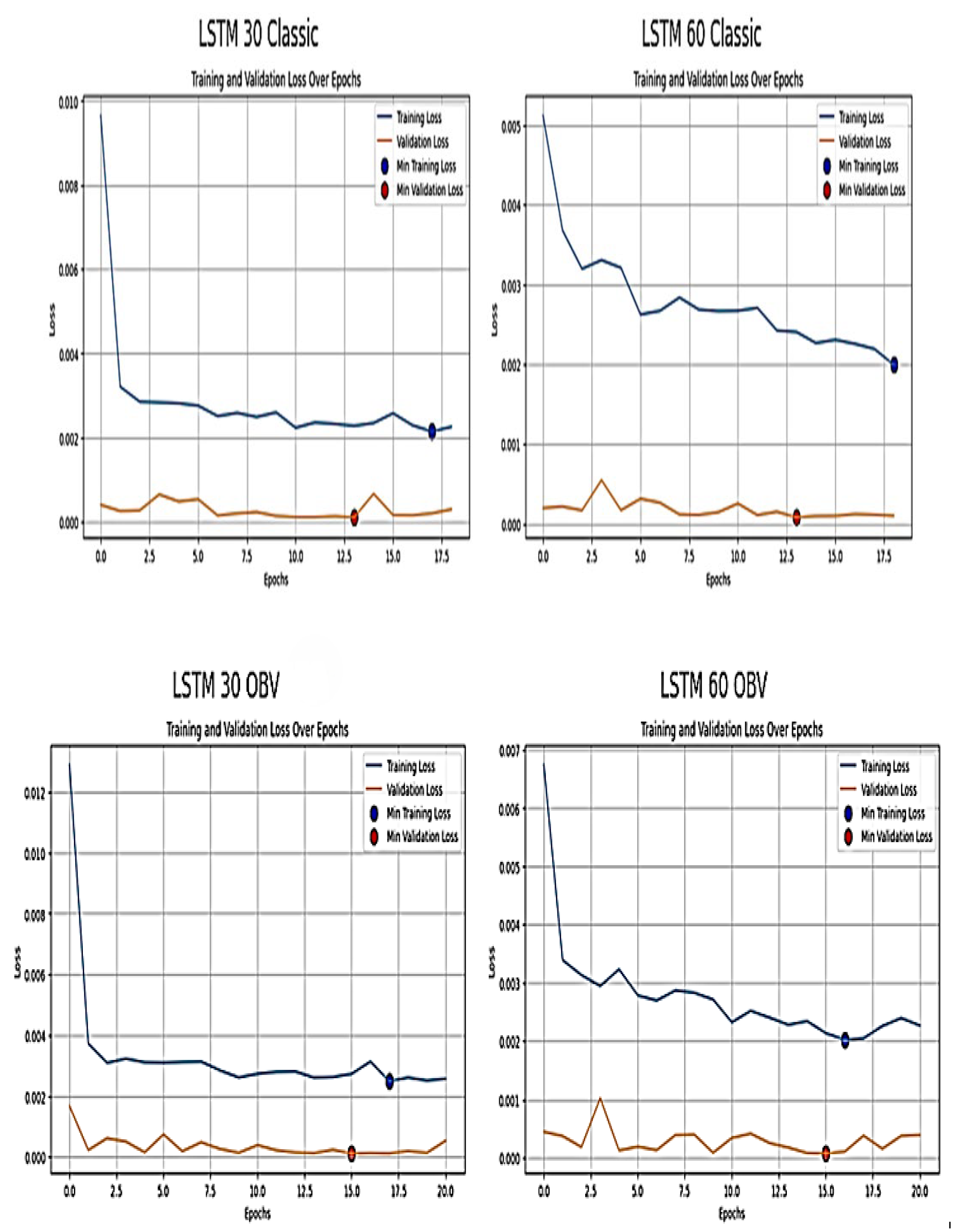

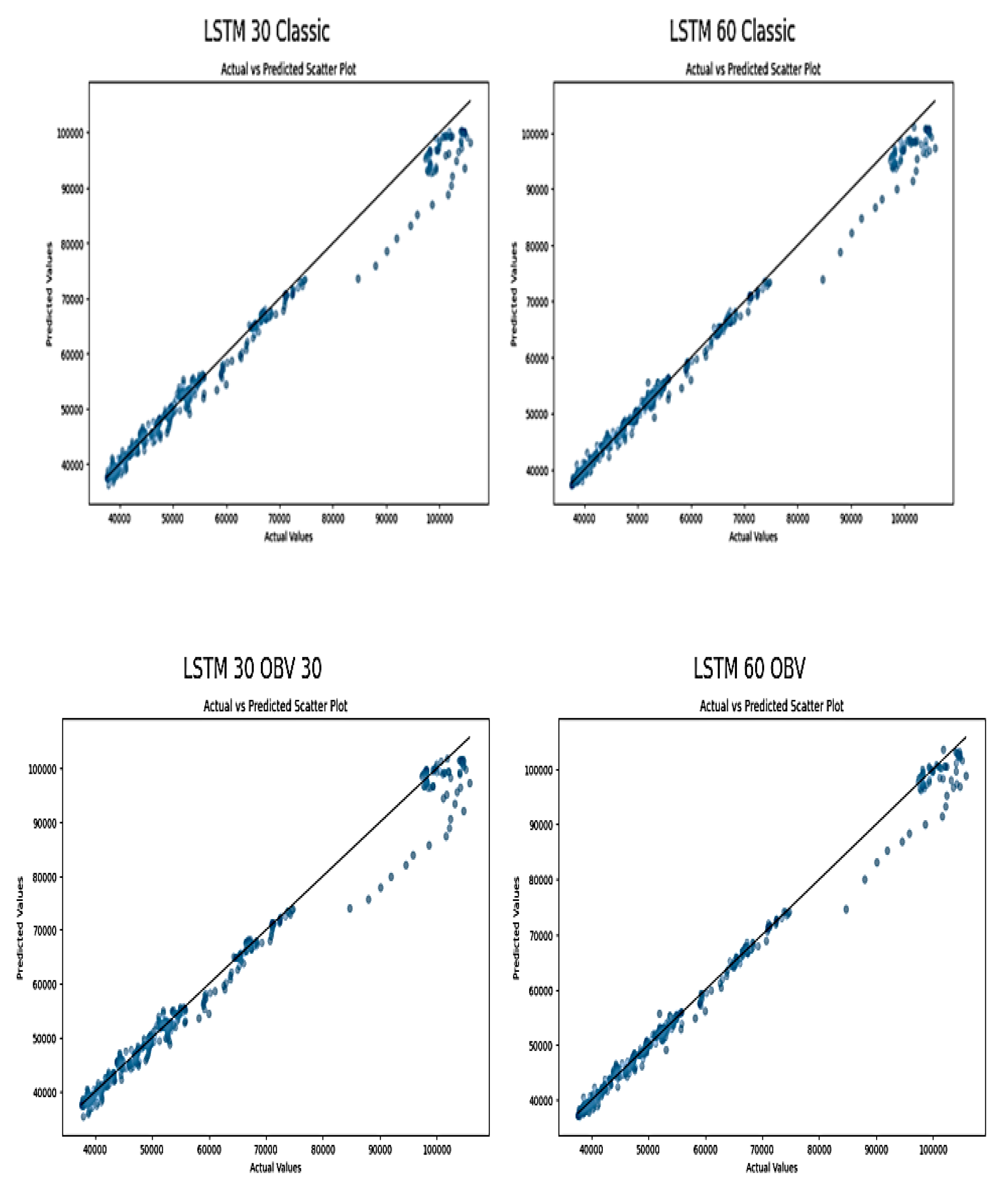

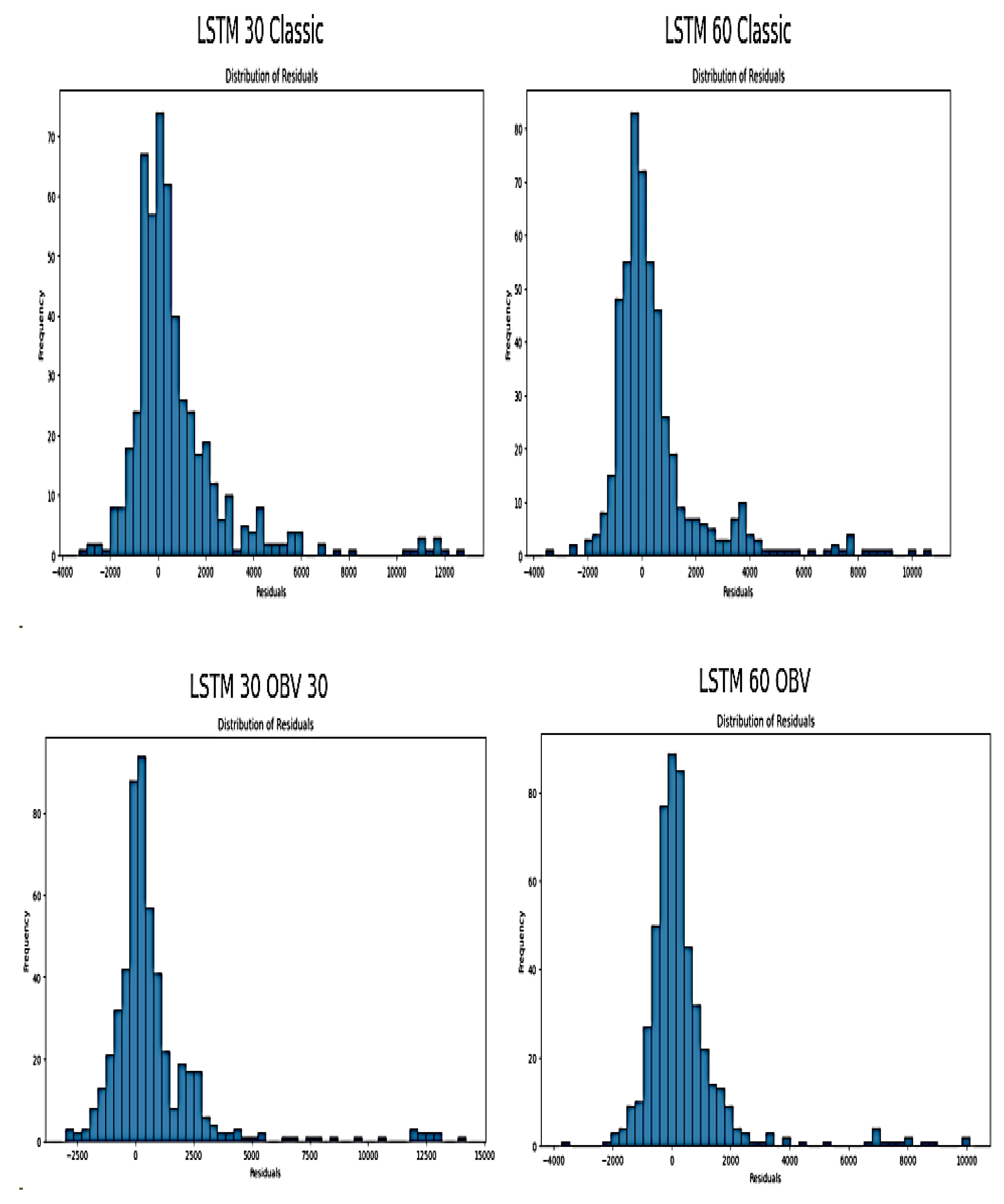

The training loss of the LSTM model decreases from 0.002148 (30 days) to 0.001993 (60 days) in the classic approach, and from 0.002493 (30 days) to 0.002028 (60 days) in the OBV inclusion approach. The training loss therefore decreases as the time step increases from 30 to 60 days in both feature sets, which indicates that the LSTM model is potentially more stable and more effective at capturing patterns over longer time windows. The validation loss follows the same pattern, decreasing as the time step increases for both the classic and OBV inclusion approaches. This indicates that the model generalizes effectively from the training data to the validation data. It also reflects the effect of adopting time series cross-validation on the time-dependent NGX data. The use of TimeSeriesSplit ensures that each validation fold is a true forward-looking scenario, which is necessary in time series forecasting to avoid bias. In addition, the combination of Optuna for hyperparameter tuning and early stopping based on validation performance can be referred to as “adaptive validation strategies”. These methods help the LSTM model to fit the training data and generalize well to new, unseen data by adjusting the training process and model configuration based on feedback from the validation phase. They help the study to achieve lower and more stable validation loss, preserve the time structure of the data, prevent data leakage, and ensure that the model is tested on genuinely unseen data.
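The forward-looking folds described above can be sketched with an expanding-window split. The function below is a stdlib-only illustration that is similar in spirit to scikit-learn's `TimeSeriesSplit`; it is not the study's code, and the fold sizing rule and the sample counts are assumptions chosen for the example.

```python
def expanding_window_splits(n_samples, n_splits):
    """Yield (train_indices, validation_indices) pairs in time order.

    Every validation fold lies strictly after its training data, and the
    training window grows from one fold to the next.
    """
    if n_splits < 1 or n_samples < n_splits + 1:
        raise ValueError("not enough samples for the requested splits")
    fold_size = n_samples // (n_splits + 1)
    for k in range(1, n_splits + 1):
        train_end = n_samples - (n_splits - k + 1) * fold_size
        val_end = train_end + fold_size
        yield list(range(train_end)), list(range(train_end, val_end))


# Toy example: 10 time-ordered samples and 3 folds.
splits = list(expanding_window_splits(10, 3))
for train_idx, val_idx in splits:
    print(train_idx, val_idx)
# [0, 1, 2, 3] [4, 5]
# [0, 1, 2, 3, 4, 5] [6, 7]
# [0, 1, 2, 3, 4, 5, 6, 7] [8, 9]
```

Because no validation index ever precedes a training index, information from the future cannot leak into the fitted model, which is the property the paragraph above relies on.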

The evaluation metrics also show that MAE (from 0.015 to 0.012) and MSE (from 0.000745 to 0.000485) decrease from the 30-day to the 60-day time step in the classic approach. The addition of OBV lowers the 60-day MAE further to 0.009662. This suggests that OBV provides additional useful information that enhances the predictive accuracy of the LSTM model. RMSE follows the same trend as MAE and MSE, decreasing with longer time steps and, at the 60-day time step, with the inclusion of OBV. MAPE also decreases with the longer time step, from 1.96 to 1.61 in the classic approach and from 1.96 to 1.33 with the inclusion of OBV. This confirms the predictive accuracy of the LSTM model with minimal error, and it suggests that the addition of OBV enhanced the performance of the model. R-squared, which measures how closely the model predictions align with the actual data, is close to 1. For both the classic and OBV inclusion approaches, this indicates that the model accounts for nearly all of the variability in the target variable, which makes it a good fit [33]. R-squared also rises to 0.993 for the 60-day OBV inclusion model, the configuration with the most input features and the longest time step. This shows that the predictive accuracy of the LSTM model increases with the inclusion of OBV and that the model fits well with an increased time step.

Generally, the study reveals that increasing the time step in LSTM improves performance and captures underlying trends. The addition of On-Balance Volume improves model accuracy across all metrics at the 60-day time step, while at the 30-day time step the effect is mixed (lower MAE, marginally higher MSE and RMSE, and unchanged MAPE and R-squared). The improvement could be because OBV acts as a proxy for market sentiment and volume trends, which are necessary for predicting market price movements.

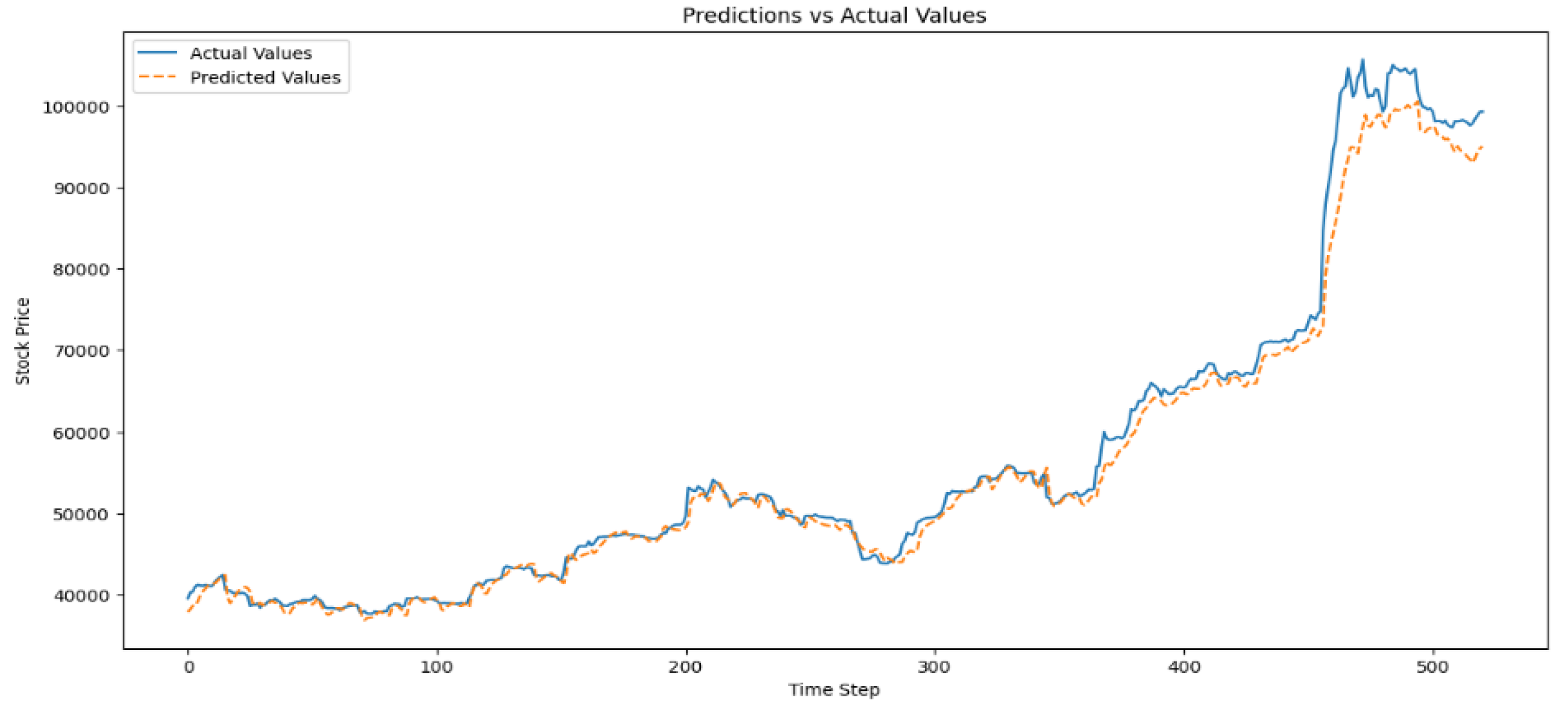

Visual Inspection

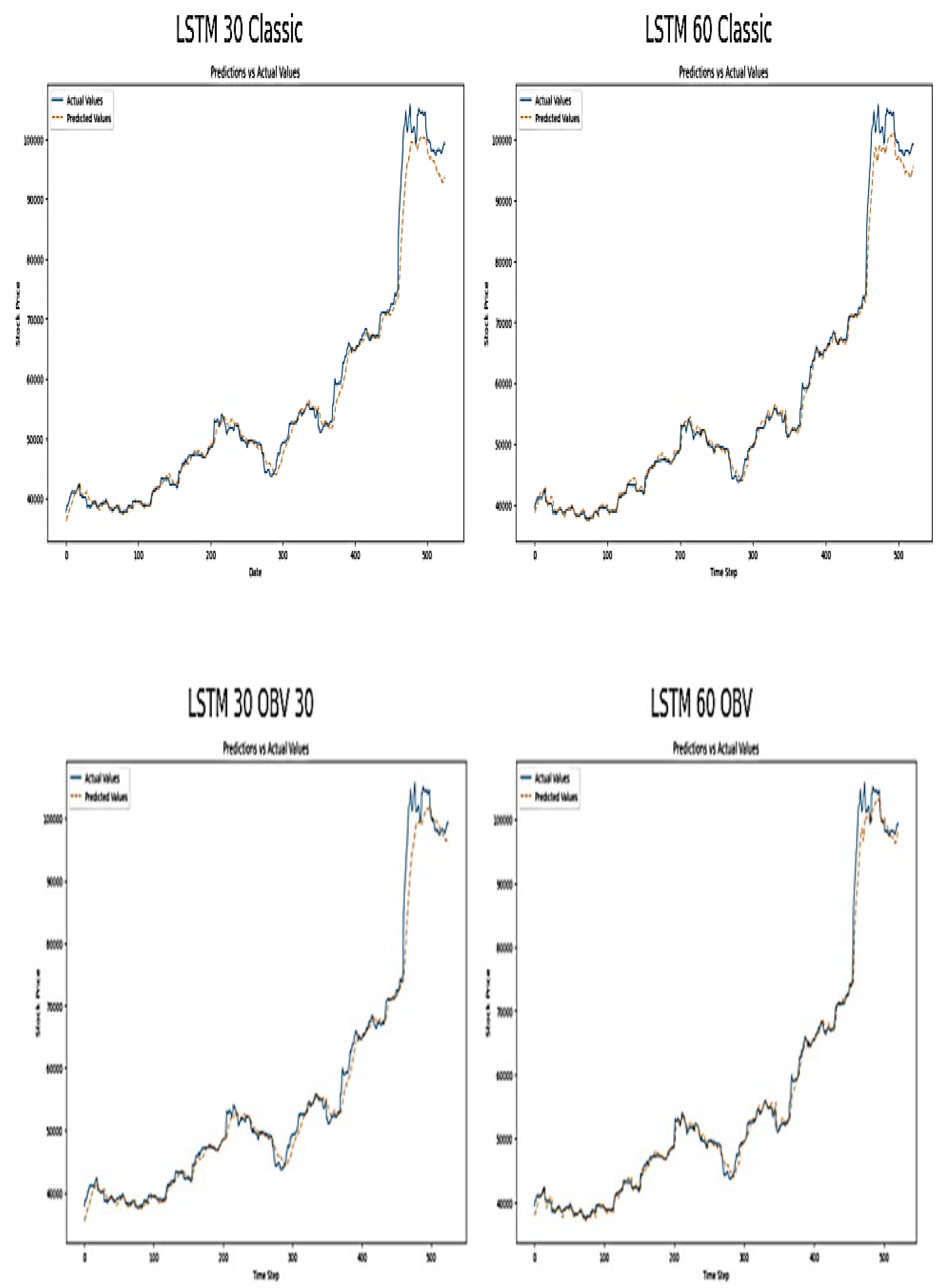

Figure 12.

Plot Actual vs. Predicted NGX Price Values on LSTM Model.

Figure 12 shows the actual values (blue line) versus the predicted values (orange line) from an LSTM model applied to NGX market data. The x-axis shows the data points, which represent individual samples, while the y-axis shows the value of the target variable, i.e. the NGX market price.

The plots above show the actual versus predicted values of the NGX price index using LSTM models with the classic and OBV inclusion approaches at 30-day and 60-day time steps. Each plot shows how well the LSTM model predicts NGX price movement. The predictions of the classic 30-day and 60-day models track the actual NGX price closely from the beginning until near the end of the plots, where a significant rise in the NGX price occurs. At those points the model lags behind the actual values. The 60-day model appears to follow the actual NGX price with slightly more accuracy and less delay than the 30-day model. The high accuracy of the classic 60-day model in tracking the actual NGX value suggests that longer time steps capture more relevant temporal dependencies for predicting NGX price movements, especially upward trends.

Furthermore, the inclusion of OBV appears to improve the predictive capability of the LSTM. This is seen towards the end of the 60-day OBV inclusion plot, where the predicted NGX values are closer to the actual values during rapid price changes. OBV captures volume trends, which are essential during periods of significant price change. It signals stronger buying or selling pressure, which is also evident in the classic approach plots. All four plots show some degree of discrepancy (lag) behind the actual NGX values during periods of rapid and peak price changes. The lag could be due to the reactive nature of the input features and the inherent characteristics of the LSTM model, in which past data influences the prediction more strongly than sudden changes. The addition of OBV seems to reduce this discrepancy slightly, which suggests that volume data provides essential information during market volatility. The lag regions indicate where the model predictions could be improved or where additional feature engineering may be required.
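The lag discussed above is assessed visually in the study. One possible way to quantify it, offered here only as an illustrative diagnostic and not as part of the study's method, is to shift the predicted series back by a number of steps and find the shift that minimises the mean absolute error against the actual series. The function names and toy data below are hypothetical.

```python
def mean_abs_error(a, b):
    """Mean absolute difference between two equal-length sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def estimate_lag(actual, predicted, max_lag=10):
    """Return the shift (in steps) that best aligns predicted with actual.

    A result of k means predicted[t] most closely resembles actual[t - k],
    i.e. the predictions trail the actual series by about k steps.
    """
    best_lag, best_err = 0, float("inf")
    for lag in range(max_lag + 1):
        shifted_actual = actual[:len(actual) - lag]
        shifted_pred = predicted[lag:]
        err = mean_abs_error(shifted_actual, shifted_pred)
        if err < best_err:
            best_lag, best_err = lag, err
    return best_lag


# Toy series: a rising curve and a copy of it delayed by three steps.
toy_actual = [float(i * i) for i in range(30)]
toy_predicted = [toy_actual[0]] * 3 + toy_actual[:-3]

print(estimate_lag(toy_actual, toy_predicted))  # 3
```

Applied separately to each of the four models, a measure like this would make it possible to compare how much delay each configuration shows during rapid price rises, rather than relying on visual inspection alone.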