Submitted:

11 September 2024

Posted:

12 September 2024

Abstract

Keywords:

1. Introduction

2. Bayesian Analyses for Secure Domains

2.1. A Probabilistic Foundation for Framing Protocols

- Bayesian decision support systems are prescriptive in nature. They therefore almost automatically provide a systematic framework around which the protocol for technological transfer outlined above can be built.

- Bayesian methodologies are now widely developed and arguably provide the best modelling framework for a spectrum of challenging inferential settings. More specifically, they have been successfully applied across a myriad of complex domains very similar to the secure domains onto which technologies need to be transferred.

- Bayesian methods interface well with cutting-edge data analytic methods currently being developed in both the computational statistics and machine learning communities.

- A critical feature of many secure environments is that streaming time series and historic data are often systematically missing not at random, disguised and, for some central variables, completely latent. This is typically the case for high-threat criminal plots, for which data about the plot usually become available only after the plot has been carried out or prevented. Models therefore usually need expert judgements to be explicitly embedded before they are fit for purpose. The Bayesian paradigm provides a formally justifiable way of embedding such expert judgements into the inferential framework through priors that can be updated with further judgements and data as they become available.

- Once such prior probabilities are elicited, Bayes Rule and graphical propagation algorithms can be embedded in the code to update police beliefs about the underlying processes even when that data is seriously contaminated or disguised - in ways we illustrate in this paper. In this way, Bayesian methodology transparently informs and helps police adjust their current beliefs in terms of what they observe which, as well as being consistent with the way they make inferences, puts the police centre stage. The approach therefore helps them own the support given by the academic team.
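The prior-to-posterior updating described in the last two bullets can be illustrated with a minimal Python sketch of Bayes Rule for a single binary hypothesis. The function name `bayes_update` and every number below are hypothetical and chosen only for illustration; they are not taken from any elicitation reported here.

```python
def bayes_update(prior, lik_if_true, lik_if_false):
    """Posterior probability of a hypothesis after one observation (Bayes Rule)."""
    numerator = prior * lik_if_true
    evidence = numerator + (1.0 - prior) * lik_if_false
    return numerator / evidence

# Hypothetical elicited prior that a suspect is actively plotting.
prior_active = 0.10
# Hypothetical probabilities of seeing a given signal under each hypothesis.
p_signal_if_active = 0.60
p_signal_if_inactive = 0.05

# The posterior after one signal becomes the prior for the next one.
posterior = bayes_update(prior_active, p_signal_if_active, p_signal_if_inactive)
# A second signal, assumed conditionally independent of the first, updates it again.
posterior2 = bayes_update(posterior, p_signal_if_active, p_signal_if_inactive)
print(round(posterior, 3), round(posterior2, 3))
```

Each posterior serves as the prior for the next observation, which is the sense in which elicited judgements can be revised as further data arrive.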

2.2. Eliciting a Bayesian Network for Sensitive Domains

2.2.1. Four Elicitation Steps and Some Causal Hypotheses

2.2.2. Causality and Libraries of Crime Models

- The chosen BN provides a template for the way many different crimes within a given category might unfold. It must therefore have the generic quality called causal consistency in [9]. We note that this type of concept has recently reappeared in a rather different form as abstraction transport [10].

- To second-guess a criminal’s reactions to an intervention police might make, it would be helpful if the police team tried to ensure that the structural beliefs expressed through the graph were shared by the criminal [4,11]. We refer to this strengthened version of the long-established coherence property as causal common knowledge.

2.3. Data and Information in Secure Domains

- Criminologists’ and sociologists’ models of criminal behaviour that lie within the open domain. These are especially important because they often provide a rich source through which both to categorise different classes of crime and to describe their development.

- Open source data about analogous past incidents. These typically appear in articles by journalists and within scholarly case studies of specific events written by criminologists. Although this is often not data in a statistical sense, each such report can give information about the development of past instances within a particular category and so inform the CPT of an associated BN.

- Access to someone from behind the firewall. Such a person will be free to disclose relevant, less sensitive domain information that might begin to fill out newly arriving information necessary to build both the structure and the probability factors of a probability model with sufficient specificity to be part of a Bayesian decision support system.

- Securely emulated data generated through in-house software unknown to the academic co-creator, calibrated with secure inputs. This has proved to be a valuable tool for checking predictive algorithms provided by academic teams - although of course such tests can only be as good as the outputs of the emulation tool used to perform them.

3. Criminal Plots

3.1. Introduction

3.2. Plots as a Hierarchical Bayesian Model

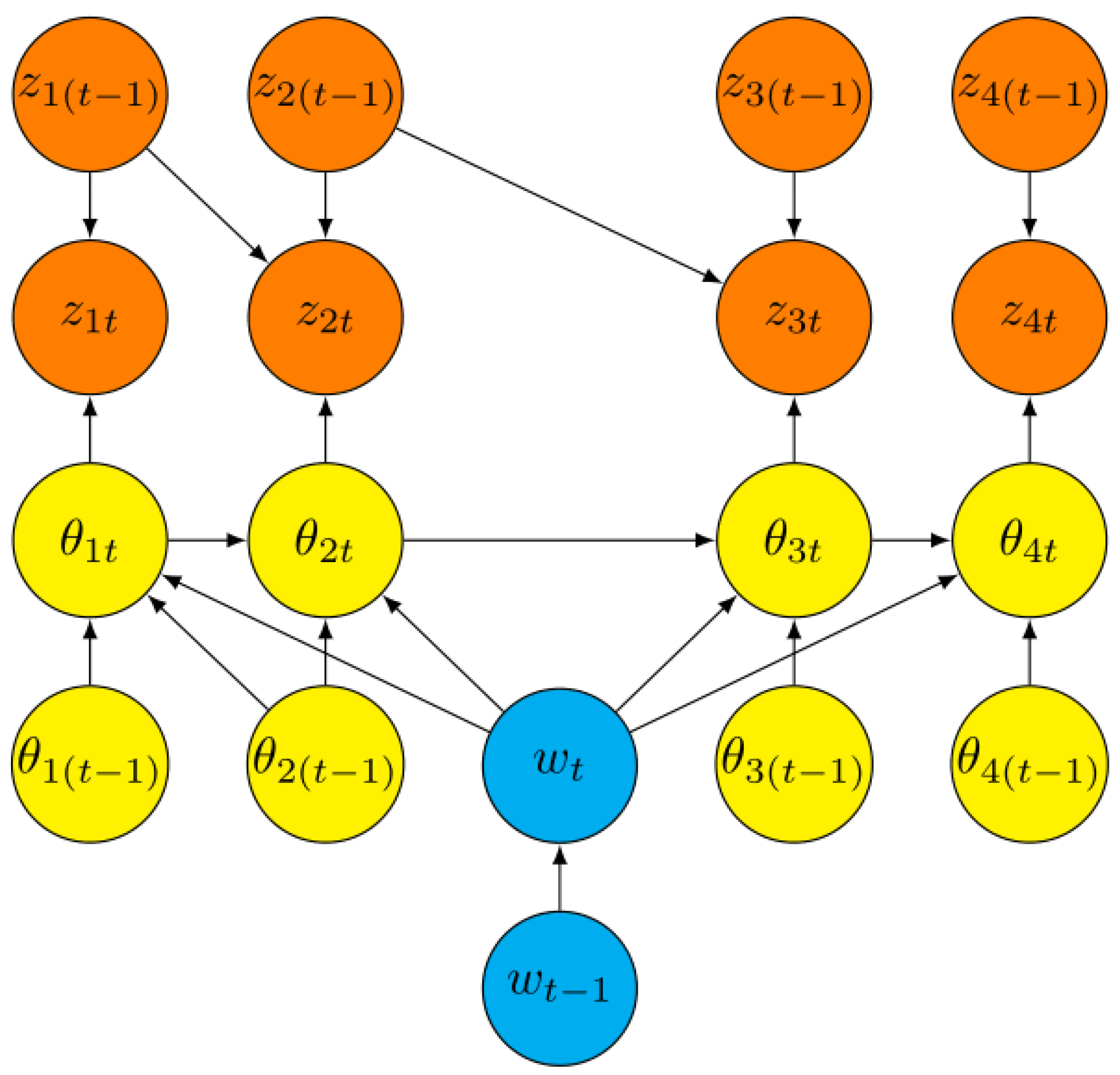

- At the deepest level of this hierarchy is a latent discrete Markov process modelled by a time series of random variables. This discrete time series indicates in which of a number of preparatory phases - elicited from domain experts to characterise a particular class of crime - the given plot might lie at any given time. The phase of a particular plot at time t is represented by a set of indicator variables, one for each phase. One particular phase is called the inactive phase - an absorbing state where the criminal has aborted their plot. The other phases we call active phases. The time series of an incident’s phase is typically latent to police - although insider information or occasional revelations might directly inform it.

- Within a plot, when in a particular phase, a criminal will need to complete certain tasks - characteristic of a certain class of crime - before they can transition to a subsequent phase. At each time step, this intermediate layer of the hierarchical model is a task vector of component tasks. These are often indicator variables of whether or not the criminal is engaged in the given task. Subvectors of the task vector, called task sets, are defined for each active phase as those tasks whose marginal distributions given that phase are distinct from their distributions given the inactive phase. Activity in these tasks therefore suggests that the suspect might be in the corresponding phase. Again, the components of the task vector will typically be latent and only inferred by police, although, on occasion, police might happen to observe directly that a particular task is underway or complete.

- Police will typically have routinely available to them streaming time series of observations, called intensities, about the progress of a suspected plot. The components of the intensity vector are chosen to help police discriminate whether or not an agent is engaging in each task at time t. We illustrate this below. These are, by definition, seen by police. However, for any ongoing incident, academics outside the firewall will typically not have access to the values of these data streams, at least not until the criminal has been convicted. So often the components of the intensity vector, and nearly always the values they take within an ongoing incident, will be missing to the academics guiding police support. This information is highly sensitive because criminals could disguise the signals they emit, or even distort these to deceive their observers, if they learned what police could see of their activities.
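The three layers described above (latent phase, latent tasks, observed intensities) can be sketched as a small generative simulation. This is only an illustrative sketch under stated assumptions: the phase labels, the two tasks, all probabilities and the Gaussian form of the intensities are hypothetical and are not the elicited model.

```python
import random

# Hypothetical phase labels; index 0 is the absorbing inactive phase.
PHASES = ["inactive", "phase_a", "phase_b"]

# Row-stochastic phase transition matrix (assumed values).
TRANSITION = [
    [1.00, 0.00, 0.00],   # inactive is absorbing
    [0.05, 0.80, 0.15],
    [0.05, 0.00, 0.95],
]

# P(task is engaged | phase) for two hypothetical tasks.
TASK_PROB = [
    [0.02, 0.02],   # inactive: background activity only
    [0.70, 0.10],   # phase_a: its task set is mainly task 0
    [0.20, 0.80],   # phase_b: its task set is mainly task 1
]

def simulate(n_steps, start_phase=1, seed=0):
    """Simulate (phase, tasks, intensities) triples for one hypothetical plot."""
    rng = random.Random(seed)
    phase = start_phase
    path = []
    for _ in range(n_steps):
        # Middle layer: which tasks are engaged, given the current phase.
        tasks = [int(rng.random() < p) for p in TASK_PROB[phase]]
        # Surface layer: noisy intensity per task (Gaussian is an assumption).
        intensities = [rng.gauss(4.0 if on else 1.0, 1.0) for on in tasks]
        path.append((PHASES[phase], tasks, intensities))
        # Deepest layer: Markov transition of the latent phase.
        phase = rng.choices(range(len(PHASES)), weights=TRANSITION[phase])[0]
    return path

for phase_name, tasks, intensities in simulate(5):
    print(phase_name, tasks, [round(x, 2) for x in intensities])
```

In such a simulation only the intensity column would be visible to police, while the phase and task columns would remain latent unless revealed by other intelligence.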

3.3. Causal Modelling for Decision Support on Plots

3.4. Graphs in a Library of Terrorist Plots

3.4.1. An Example of a Library of Plot Models

- At any time, a potential perpetrator may choose, or may be forced, to abort a plot and so transition into the absorbing inactive state. This means that there is a benefit to police in making it more difficult for the terrorist to transition through the phases required to be in a position to perpetrate a crime.

- By definition, a suspect must have been recruited in the past, and so have passed through the recruitment state, before transitioning to later states.

- Once a given suspect has been skilled up to perpetrate a plot, i.e. has passed through the training phase, then within the time frames police would be working in, that suspect will remain trained and will not lose these skills.

- However, the suspect can review and substitute one identified target with another, or can equip themselves or discard their equipment, at any time they choose. So just because a suspect has at one time been in the phase of having identified a target or of being equipped does not mean they are currently equipped or have a current target identified.

- The perpetrator cannot enter the attack phase until all other active phases have been completed.

3.4.2. An Example of a Graph in a Library - Vehicle Attacks

4. Co-Creating a Library of Plots

4.1. Introduction

- A generic description of plots, expressed in the phase relationships in the lowest layer of this hierarchical model, lies largely in the public domain - within sociological and criminological articles, open source case studies and information that police experts can freely communicate. It is therefore directly accessible to the academic co-creators, who can guide its accommodation into this structure. These sources helpfully categorise and explain various plots and the motivations and capabilities of the different types of potential perpetrators of the plots in focus. Some generic evidence to inform the generic priors on the CPTs associated with the transitions between stages will also be available. These priors can later be refined by police using more secure information available only to them.

- Generic information about the tasks that need to be completed to move from one phase to another, and the probabilities linked to both the choice of the task and their ease of completion associated with various categories of criminals, is also straightforward for the academics to elicit from sources mentioned in the bullet above. Again - using instructions from the academic teams - the CPTs can then be refined by police using other more sensitive evidence they have about various crimes, using as priors the probabilities on the CPTs based on open source data.

- On the other hand, the full extent of the data streams police might currently have available to them, indicating which tasks are being engaged in within any ongoing investigation, can be highly confidential. For example, if a criminal learned that their messages on the dark web could be decrypted, then they could use this knowledge to disguise their messages or deceive police and so be harder to apprehend. However, independently of police and based on open source data, the academic team can of course conjecture what these data streams might be, as we illustrated above. They can then communicate this open source model to police, populating the topology of the BN and the associated CPTs ready for adjustment of the police model behind the firewall in light of what only police know.

- The actual data that police collect concerning specific individuals is highly sensitive and cannot be shared with the academic team. Were the current suspect to learn what the police knew about the progress of any plot truly in progress, they would become far more dangerous. Moreover, no personal information about a given suspect can be shared until they have been convicted. If the suspect is in fact innocent, then, as soon as this has been discovered, personal information about them cannot ethically be retained. Such information might be about the category assigned by police to a suspect, the nature of the information being collected on them and the values of this data at any given time. However, there is a rich, although usually still incomplete, bank of open source information about proven criminals, for example provided by press releases and court reports. So, based on academic conjectures and rehashing particular use cases, the academic team can demonstrate how the model might learn in light of such synthetic data sets and share this with police. The potential usefulness of the open source code can then be demonstrated as documented in [1].

4.2. A Protocol for Co-Creating Plot Models

4.2.1. Notation and Setting

4.2.2. Step 1 - Initial Library Based on Open Source Information

- Informed by previous studies undertaken when building the current academic library, supplemented by other open source information pertinent to the new category, and guided by police sharing their own open source knowledge, academics choose a graph representing the BN of the next category and type of crime. This stage typically involves further engagement with experts and a deep dive into the literature to discover new information about the new entry to the library.

- The names of the vertices in the new graph are made as compatible as possible with the names given to vertices in the graphs already in the library. Note that this sometimes entails relabelling vertices in the existing graphs in light of the meaning of vertices in the new vertex set. This is a delicate process - see e.g. [14], albeit in the very different context of ecological modelling. This harmonisation step helps to maximise the amount of local structure and probabilistic information that can be shared across different BNs in the library and so helps minimise duplicated effort in establishing the next entry in the library.

- We next begin populating the CPTs of the new library entry that would be valid were police not to intervene. The academic team first elicit from police the likely nature of the partition of these CPTs. They then need to elicit from the police team which of these CPTs - because of their shared meaning with other models in the library - already appear in the library, and which CPTs are unique and hence need to be populated.

- To populate the sets of CPTs that open source information can inform, the academic team then proceed as they would in non-secure settings. For the first set, they elicit the uncertain probabilities and then refine these judgements based on available data using Bayes Rule to construct open source posterior tables. They repeat this process for the second set. At this stage the academic team can use their skills to identify and adapt statistical and AI methodologies, in particular to use time series data extracted from particular past instances to calibrate and efficiently estimate the parameters associated with these probability tables.

- Dummy entries are then chosen by the academic team for those new CPTs that will be informed primarily through secure in-house information, and are labelled as such. We nevertheless recommend that these dummy entries be chosen to appear as plausible as possible to police and, where possible, calibrated against any available open source case studies or outputs - at least as they might apply to one category of suspect.

- We note that different CPTs elicited here may need to be selected for different types of suspect. Thus the MO and training of a right-wing terrorist recruit might be very different from those of a terrorist affiliated to IS, which in turn may be very different from those of a suspect who is acting completely autonomously. In our running examples, it will usually be possible for police to reliably categorise any given triaged suspect, although, in some plot libraries - like those designed to protect against exfiltration attacks - such prior categorisation will be less certain. In either case, when the library is designed to be applied to make inferences about different categories of criminal, different collections of CPTs will need to be constructed for each such category. We note, however, that most of the CPTs will be shared across different categories; those that do differ help to formally discriminate the possible type of suspect faced by police when this is uncertain.

- Academics next need to populate the CPTs associated with each potential intervention that police might contemplate making. To do this, they repeat the elicitation process described for the unintervened process in the 3 bullets above for the different categories of suspect. Superficially, this might look to be a very large task. However, if the BN is well-chosen, then whenever it can be described as causal, as we have argued that plot models can be [12], this will typically only require the addition of a few select CPTs for each potential intervention. This will be so even if it will be apparent to the criminal that such interventions have been made.

- The models are then coded up as software. The code developed by the academic team will be much larger than the distilled code delivered to the police team. This allows the academic team to explore various modelling choices that the police team do not need to explore themselves, and allows rigorous verification to be performed before the library is transferred to police. The academic team check the plausibility of the outputs of this code and the faithfulness of the code itself against synthetic cases. They first simulate use cases from their model. They then use open source data about real past incidents, supplemented by any synthetic data about records they believe might have been observed but are now lost, and perform the standard statistical diagnostics normally used to check the performance of such a BN.

- The rationale behind the chosen structures within the library, the real CPTs, any data used to calibrate these and the methodology used to accommodate them is recorded for future appraisal by police. It is vital to provide the in-house team with a report carefully documenting the rationale behind the choice of model for the new category, and to add this to any such documentation applying to previous models in the library. For an example of the description of the outputs of such software and their embedded algorithms, see [1,3,21].

- Because these statistical models are all based on open source information, they can be freely submitted to proper peer review and criticism. The models, methodologies and applications can thus be properly quality controlled to this point for later technical revision if this is necessary at the earliest stage of the co-creation.

- A handbook is created or modified for the new library. This explains how the new, well-documented transferred code works. It also demonstrates the estimation, statistical diagnostics and dummy examples provided, which in-house statisticians are then able to replicate for the most recent library entry. The handbook and a distillation of the code - both based solely on open source materials - are then delivered to the police team.
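One way to picture the bookkeeping in Step 1 is a library in which CPTs live in a shared pool, new entries reuse pooled CPTs wherever their meaning is shared, dummy entries are labelled as such, and each intervention replaces only a few CPTs. The following Python sketch is a hypothetical data structure, not the delivered code: the class names, CPT names, the intervention name and all probabilities are assumptions made for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class CPT:
    name: str
    table: dict    # parent configuration -> list of probabilities
    source: str    # "open", or "dummy" for entries awaiting secure in-house values

@dataclass
class PlotModel:
    category: str
    cpt_names: list                                    # looked up in the shared pool
    interventions: dict = field(default_factory=dict)  # intervention -> {name: CPT}

@dataclass
class Library:
    pool: dict = field(default_factory=dict)     # CPT name -> CPT, shared by models
    models: dict = field(default_factory=dict)   # category -> PlotModel

    def add_model(self, model, new_cpts):
        """Add a model, registering only the CPTs that are new to the library."""
        for cpt in new_cpts:
            if cpt.name in self.pool:
                raise ValueError(f"CPT {cpt.name!r} already in library; reuse it")
            self.pool[cpt.name] = cpt
        missing = [n for n in model.cpt_names if n not in self.pool]
        if missing:
            raise KeyError(f"unpopulated CPTs: {missing}")
        self.models[model.category] = model

    def cpts_for(self, category, intervention=None):
        """CPTs of a model, with intervention-specific replacements applied."""
        model = self.models[category]
        cpts = {n: self.pool[n] for n in model.cpt_names}
        cpts.update(model.interventions.get(intervention, {}))
        return cpts

    def dummy_entries(self, category):
        """Names of CPTs still holding labelled dummy values."""
        return [n for n, c in self.cpts_for(category).items() if c.source == "dummy"]

lib = Library()
lib.add_model(
    PlotModel("bomb", ["phase_transition"]),
    [CPT("phase_transition", {"active": [0.1, 0.9]}, "open")],
)
lib.add_model(
    PlotModel(
        "vehicle",
        ["phase_transition", "intensity_given_task"],
        interventions={"hypothetical_intervention": {
            "phase_transition": CPT("phase_transition", {"active": [0.3, 0.7]}, "open"),
        }},
    ),
    [CPT("intensity_given_task", {"task_on": [0.5, 0.5]}, "dummy")],
)
print(lib.dummy_entries("vehicle"))
```

Keeping the `source` label on every CPT makes it straightforward for the in-house team to list which entries they still need to replace with securely elicited values.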

4.2.3. Step 2 - Police Team Create Their First In-House Library

- Police take the delivered code and the enhanced library and test that they can run and emulate the results provided in the handbook of the delivered system. This ensures that the new library has been successfully transferred.

- The new model within the academic library is then applied by both co-creation teams to any available securely emulated data carefully constructed and delivered by the police team. The emulation is calibrated using secure information so as to provide the academic team with shareable, informative outputs. These can be used to check whether or not the outputs of the academic model look broadly plausible to police, given that this model is informed only by open source data. If they do not, then the academic team need to liaise with the police team to adjust the model. However, within this co-creation step it is important for both teams to bear in mind that this quality control will only be as good as the in-house emulated data sets.

- Conditional on this emulation being verified, the mismatch is likely due to either the misspecification of the elicited graph or the inaccuracy of the academic guesses about the secure CPTs. In the former case, the academic team will need to perform further elicitation to resolve this issue as they would in contexts where there is no security issue. In the latter case, the police team will need to give hints about how the priors within the open source model might be better calibrated to reality, or, if this information is too sensitive, to acknowledge the discrepancy and nevertheless retain the mismatching entry.

- The next step is to translate the extended academic library containing the new entry, taking the current police library behind the firewall and adding an adjusted version of this model to form an initial construction of the extended police library. Note that any non-empty extant police library will typically contain a more refined suite of models than the entries in the library used by academics. In particular, the CPTs in the police library may be much more accurate than their equivalents in the academic library. This in turn will mean that CPTs for the new entry that are matched to other models in the police library should give more reliable results than those matched to the academic library. This will need to be acknowledged within this translation step.

- Police then adjust the pre-existing BNs within this initial construction of their library - for example, changing node names to more generic terms - so that these are consistent with the revised library. They then make any necessary adjustments to the topology of the new entry so that it contains any structural information known only to them. In the case of plot models, these additions are most likely to be those associated with intensity measures they might secretly use to inform them about whether or not various tasks are being undertaken by a suspect. This is because academic teams are more likely to make erroneous guesses about the highly secure information police have that forms the intensity structure of the plot model than about its task and phase structures. Any new types of undisclosable measurements they might have available that relate to tasks in the new entry but to no other entry in the library will need to be represented.

- Police have been trained to elicit in-house any prior probabilities needed for the secure CPTs. This is the most delicate part of the operation to manage. Any in-house representative who will be needed to act as a facilitator but has not been trained in probabilistic elicitation should first attend one of the aforementioned probability elicitation programmes. We have also found that the in-house technician can often benefit from more bespoke training delivered by the expert academic team, in which they take part in a mock elicitation that is as close as possible to the real task. The academic team will be ready to answer any generic questions the in-house facilitator might have about this process. This will need to be repeated for all categories of suspect-environment pair.

- Police then populate the prior probabilities needed for the secure CPTs, elicited behind closed doors and facilitated by the trained in-house representative. These CPTs will usually consist of analogues in the police library of CPTs in the academic library - where academic guesses of the topological structure of the network are accurate - as well as the CPTs associated with any new intensity measures that the academic team did not include in their model based on open source information.

- Data is then embedded by the in-house expert, emulating how they have seen the academic team do this for CPTs whose expert judgements are not sensitive and whose training data is open source. Because the mathematical equations and supporting code for performing these tasks tend to be generic, such information can usually flow freely between the two teams.

- Police will now be able to use their adjusted code and algorithms to make their improved predictions and inferences. They then emulate the statistical estimation and diagnostics they have seen the academic team apply to calibrate their models and check their plausibility against the totality of data they have available to them. If through this process they discover inadequacies in their model, they share these with the academic team - see Step 3 below.

- Otherwise they write their own in-house handbook to the new code using the handbook of the open source software as a template to support other in-house users and to prepare for the next library entry.
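The first bullet of this step, in which police confirm that they can reproduce the handbook results, amounts to a reproducibility check. A minimal sketch, assuming the handbook lists named numerical outputs, is given below. The function `check_transfer`, the output names and the values are all hypothetical.

```python
import math

def check_transfer(reference, reproduced, tol=1e-6):
    """Return names of handbook outputs that the in-house run fails to reproduce."""
    mismatches = []
    for name, expected in reference.items():
        got = reproduced.get(name)
        if got is None or not math.isclose(got, expected, abs_tol=tol):
            mismatches.append(name)
    return mismatches

# Hypothetical reference values printed in the open source handbook.
handbook = {"p_phase_b_day_10": 0.412, "p_inactive_day_10": 0.127}
# Hypothetical values obtained by the in-house team from the delivered code.
in_house_run = {"p_phase_b_day_10": 0.412, "p_inactive_day_10": 0.131}

print(check_transfer(handbook, in_house_run))
```

An empty list would indicate that the open source library has been transferred successfully. Any names returned can be discussed with the academic team without disclosing secure information, since the reference cases are themselves open source.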

4.2.4. Step 3 - Police and Academics Toggle Models until Requisite

- For any identified problems about the outputs of their internal code, police feed back to academics carefully sanitised points of concern about the code or outputs of their in-house models associated with the new entry. Examples might be "The model seems to consistently underestimate threats from people of type x", "The vast amount of data we have available means that estimating various parameters of the model using the analogues in the academic library simply does not seem to work.", "We have no data at all that might inform x given y, and no obvious source of expert judgement either.", and "For realistically informed secure use cases, the system seems to learn far too slowly to provide operational decision support." just to give a few.

- Vigorous conversations now take place between the two teams about the problems, communicated in a sufficiently generic way to keep all confidential information behind the firewall. The types of alterations the academic team might perform may include changing the topology of the graph, the parameters, the probabilities, and the estimation algorithms in the academic library for police to copy when modifying entries in their own library. Examples of replies from academics might be "It appears we might have missed within our description of the underlying processes some of the ways that these threats might happen - perhaps we can find these?", "Method y has proved to be a very efficient feature extraction algorithm in analogous settings. We provide a version of this that you might be able to embed in your code.", "Perhaps we could use alternative sources that at least give us some idea about y?", "Do you have effect data sets about past incidents whose outputs might allow these explanatory parameters to be calibrated? If so we will show you how to use this to set plausible values of these probabilities" and "We suspect that you have set the signal-to-noise ratios too high - if you set it in this way then..." among others.

- The in-house team check the performance of their model on confidential data sets about past criminals or current suspects. Any new issues like the above are then shared securely with the academic team to help find solutions. Through this interaction, the teams make all necessary adjustments and co-create the latest versions of the academic and in-house libraries.

- The in-house handbooks of these two libraries are rewritten and annotated, templated on the previous handbooks and embedding all the new structures and settings from this last interaction. In particular, this should include the rationale for choosing its settings and any new synthetic and real examples appropriate to each given library.

- The academic library is now ready for the input of the next plot model so that the co-creation development cycle described above can be repeated as necessary.

4.2.5. Maintenance of the Library as Environments Change

5. Uncertainty Handling in a Secure Library

5.1. Introduction

5.1.1. Three Special Structural Features of BNs of Plots

5.2. Setting Up Uncertain Priors within a Library

5.2.1. Introduction

5.2.2. Modular Learning on a Single BN

- Ancestral data from given past incidents. If, after such events, the values of all the variables can be reconstructed up to a given point, so that if data is available for a particular variable then it is also available for all its parents, then global independence will automatically hold a posteriori. This means we can simply perform a prior-to-posterior analysis on each of the CPTs - using relevant count data from criminals advancing through the phases of their plots.

- Designed random sampling data from relevant observational studies. Here we choose to sample from various CPTs in the model - assumed to be invariant across incidents - stratifying the sample across the different combinations of the levels within the CPTs. When considering a library of plot models, we would recommend sampling from the task CPTs conditioning on the inactive phase. Note that such sampling data will usually be open source, being informed by the actions of innocent people, and so shared across the libraries of both the academic and in-house teams. Some of the task dependence data associated with criminal activities may only be available to the in-house team, however, and so can only be accommodated in this way in-house.

- Both teams accommodate all available open source data whose likelihood separates into their shared CPTs in the way we describe above.

- The academic team embed any further open source data that destroys global independence but strongly informs the inference about some of the probabilities that otherwise would be extremely uncertain to both teams, and then update this joint distribution numerically [32] or with appropriate partial inference [33]. Then the academic team approximate this posterior with a conjugate distribution - a product Dirichlet (or a mixture) exhibiting global independence across the CPTs with exponential (or more general) waiting times [27]. The academic team need to ensure that such an approximation is a close one in an appropriate sense or abort the accommodation of such data. These distributions are then translated into the library.

- The in-house team now refine their in-house distributions of their library of CPTs using the secure data available only to them whose likelihood separates into their shared CPTs, replacing the independent posterior densities provided in the academic library with new distributions for these conditional probabilities in the in-house library.

- All other unused in-house and open source data are then simply used to inform and adjust the setting of priors only for future incidents as police deem appropriate.
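When global independence holds and the likelihood separates across CPTs, the prior-to-posterior analysis described above reduces to a conjugate Dirichlet update of each CPT row from count data. The Python sketch below illustrates this two-stage flow, first with open source counts and then with secure counts that never leave the firewall. The row labels, prior parameters and counts are all hypothetical.

```python
def dirichlet_update(alpha, counts):
    """Conjugate update of one CPT row: Dirichlet(alpha) prior plus multinomial counts."""
    return [a + n for a, n in zip(alpha, counts)]

def posterior_mean(alpha):
    """Expected conditional probabilities under a Dirichlet(alpha) distribution."""
    total = sum(alpha)
    return [a / total for a in alpha]

# Hypothetical CPT with one Dirichlet row per parent configuration.
elicited_prior = {"phase_a": [2.0, 1.0, 1.0], "phase_b": [1.0, 1.0, 2.0]}
open_source_counts = {"phase_a": [6, 3, 1], "phase_b": [0, 2, 8]}
secure_counts = {"phase_a": [10, 0, 0], "phase_b": [1, 1, 3]}   # in-house only

# Stage 1 (academic team): rows update independently from open source counts.
academic_posterior = {
    row: dirichlet_update(elicited_prior[row], open_source_counts[row])
    for row in elicited_prior
}

# Stage 2 (in-house team): the academic posterior acts as the prior.
in_house_posterior = {
    row: dirichlet_update(academic_posterior[row], secure_counts[row])
    for row in academic_posterior
}

for row in in_house_posterior:
    print(row, [round(p, 3) for p in posterior_mean(in_house_posterior[row])])
```

Because each row is updated separately, the in-house team can refine only those rows for which they hold secure counts and leave the remaining open source posteriors untouched.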

5.3. Learning CPTs from Past Incidents and Across Different Graphs

5.4. Learning within an Ongoing Incident with a Known Suspected Perpetrator

- Police match as closely as they can the category of incident they face to one they have catalogued within their library. Differentials between models in the library will often include the likely affiliation of the suspect, their broad intent and their likely expression of attack - here the type of plot they are likely to execute (for example, a bomb attack or a vehicle attack). We assume that their library is rich enough to contain a plot model whose structure sufficiently matches the suspected incident.

- Police then need to construct an appropriate set of CPTs that match the current incident. Depending on the maturity of the library, and depending on the category of incident and suspect, there will be CPTs of this 2TDBN populated with benchmark probabilities - whose expectations have been estimated from an archive of previous incidents and domain knowledge. Police embed these into the model whenever these benchmark generic expectations are available. Here we have assumed this is the case for most of the CPTs. Note that after an incident has occurred, and particularly if this goes to court, then values of variables associated with tasks and phases will often become subsequently available. These can then be used to calibrate these otherwise latent variables using the classifications mentioned above. This is especially useful for the matching of future incidents of the same or similar category to the current culminated plot, allowing a more accurate and calibrated translation of CPT entries from the developed library to ongoing cases.

- Police then elicit the probabilities they need in-house to complete the probability model of the suspected perpetrator and incident based on private and covert information they have about the given suspect that goes beyond the category found in the library best matching the current case.

- Such secure information is then embedded by the in-house team to sensitively adjust any entries in the CPTs to the current case, using the techniques they have developed through teaching from the academic team and through training in elicitation methods.

- Police then simply apply this model to the current unfolding incident - embedding the specific routinely collected intensity data as well as sporadic intelligence data to update predictions concerning the efficacy of any interventions they contemplate making. How this works has been documented elsewhere [1,3,21].
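The final bullet, in which streaming intensity data update beliefs about a latent phase and inform the comparison of interventions, can be sketched as a forward filtering recursion on a small hidden Markov model. This is a simplified stand-in for the 2TDBN propagation documented in [1,3,21]: the three phases, both transition matrices, the starting belief and the likelihood values are assumptions made purely for illustration.

```python
def predict(belief, transition):
    """Push a belief over phases one step forward through the transition matrix."""
    n = len(belief)
    return [sum(belief[i] * transition[i][j] for i in range(n)) for j in range(n)]

def forward_step(belief, transition, likelihood):
    """One filtering step: predict, then reweight by P(observed intensities | phase)."""
    predicted = predict(belief, transition)
    unnorm = [p * l for p, l in zip(predicted, likelihood)]
    total = sum(unnorm)
    return [u / total for u in unnorm]

# Hypothetical three-phase model; index 0 is the absorbing inactive phase.
NO_INTERVENTION = [
    [1.00, 0.00, 0.00],
    [0.05, 0.80, 0.15],
    [0.05, 0.00, 0.95],
]
# Hypothetical intervention: progression is harder and aborting more likely.
WITH_INTERVENTION = [
    [1.00, 0.00, 0.00],
    [0.20, 0.75, 0.05],
    [0.20, 0.00, 0.80],
]

belief = [0.2, 0.6, 0.2]   # benchmark prior drawn from the library (assumed)
# P(intensities at time t | phase), assumed already computed from the task layer.
stream = [[0.1, 0.3, 0.6], [0.1, 0.2, 0.7]]
for likelihood in stream:
    belief = forward_step(belief, NO_INTERVENTION, likelihood)

# One-step-ahead probability of the final active phase under each option.
p_final_no_action = predict(belief, NO_INTERVENTION)[2]
p_final_intervene = predict(belief, WITH_INTERVENTION)[2]
print(round(p_final_no_action, 3), round(p_final_intervene, 3))
```

Only the transition CPT changes between the two scenarios, which mirrors the earlier observation that a causal BN typically needs just a few additional CPTs per intervention.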

5.5. Learning through Intensities

- The guesses the academic team make about these aspects of the model will often be unreliable, so the in-house team will need to override a significant proportion of them, in particular by updating numerous entries of the corresponding CPTs.

- The streaming information available for one incident and suspect can be very different from that available for another. Therefore, when populating the BN for a particular incident, this information will often need to be bespoke by default and hence entered by police in-house.
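One way to keep track of which placeholder entries have been overridden in-house is sketched below, assuming that whole CPT rows are replaced at a time and that each row carries a provenance label. The class `CPT`, the functions `from_placeholders`, `override` and `share_overridden`, and the labels themselves are hypothetical and are not part of any existing software.

```python
from dataclasses import dataclass, field


@dataclass
class CPT:
    # Parent state -> probabilities over the child states.
    rows: dict
    # Parent state -> provenance label for that row.
    source: dict = field(default_factory=dict)


def from_placeholders(rows):
    """Wrap the academic team's guesses, marking every row as a placeholder."""
    return CPT(
        rows={parent: list(probs) for parent, probs in rows.items()},
        source={parent: "academic placeholder" for parent in rows},
    )


def override(cpt, in_house_rows, tol=1e-9):
    """Return a new CPT in which the given rows are replaced by in-house values."""
    rows = {parent: list(probs) for parent, probs in cpt.rows.items()}
    source = dict(cpt.source)
    for parent, probs in in_house_rows.items():
        if parent not in rows:
            raise KeyError(f"unknown parent state: {parent}")
        if len(probs) != len(rows[parent]):
            raise ValueError(f"row for {parent} has the wrong length")
        if any(p < 0 for p in probs) or abs(sum(probs) - 1.0) > tol:
            raise ValueError(f"row for {parent} is not a probability vector")
        rows[parent] = list(probs)
        source[parent] = "in-house"
    return CPT(rows=rows, source=source)


def share_overridden(cpt):
    """Fraction of rows whose values were supplied in-house."""
    labels = list(cpt.source.values())
    return sum(1 for label in labels if label == "in-house") / len(labels)


# Illustrative placeholder values only.
placeholder = from_placeholders({
    "inactive": [0.90, 0.10, 0.00],
    "preparing": [0.05, 0.80, 0.15],
    "mobilised": [0.00, 0.10, 0.90],
})
adjusted = override(placeholder, {
    "preparing": [0.05, 0.70, 0.25],
    "mobilised": [0.00, 0.05, 0.95],
})
print(share_overridden(adjusted))
```

Returning a new object rather than editing in place leaves the academic placeholders intact, so the two versions can be compared after the incident has concluded.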

5.6. Co-Creation of Probability Models of a Vehicle Attack

5.6.1. Building on generic structures of plots into a BN

6. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| BN | Bayesian network |
| CPT | Conditional probability table |
| DAG | Directed acyclic graph |
| DBN | Dynamic Bayesian network |
| 2TDBN | Two time-slice dynamic Bayesian network |
| MO | Modus operandi |
| SEU | Subjective expected utility |

Appendix A. Structure of the Phase Transition Matrix

References

1. Bunnin, F.O.; Smith, J.Q. A Bayesian Hierarchical Model for Criminal Investigations. Bayesian Analysis 2021, 16, 1–30.

2. Smith, J.Q. Bayesian decision analysis: Principles and practice; Cambridge University Press, 2010.

3. Shenvi, A.; Bunnin, F.O.; Smith, J.Q. A Bayesian decision support system for counteracting activities of terrorist groups. Journal of the Royal Statistical Society Series A: Statistics in Society 2023, 186, 294–312.

4. Ramiah, P.; Smith, J.Q.; Bunnin, O.; Liverani, S.; Addison, J.; Whipp, A. Bayesian Graphs of Intelligent Causation. arXiv preprint 2024.

5. Korb, K.B.; Nicholson, A.E. Bayesian Artificial Intelligence; Computer Science and Data Analysis Series, CRC Press, 2011.

6. Wilkerson, R.L.; Smith, J.Q. Customized Structural Elicitation. In Expert Judgement in Risk and Decision Analysis; Springer International Publishing, 2021; pp. 83–113.

7. Pearl, J. Causality: Models, Reasoning and Inference; Cambridge University Press: New York, 2000.

8. Spirtes, P.; Glymour, C.; Scheines, R. Causation, Prediction, and Search; Lecture Notes in Statistics, Springer New York, 1993.

9. Hill, A.B. The Environment and Disease: Association or Causation? Proceedings of the Royal Society of Medicine 1965, 58, 295–300.

10. Felekis, Y.; Zennaro, F.M.; Branchini, N.; Damoulas, T. Causal Optimal Transport of Abstractions. Third Conference on Causal Learning and Reasoning; Locatello, F.; Didelez, V., Eds. PMLR, 2024, Vol. 236, pp. 462–498.

11. Smith, J.; Allard, C. Rationality, Conditional Independence and Statistical Models of Competition. In Computational Learning and Probabilistic Reasoning; Gammerman, E., Ed.; Wiley, 1996; chapter 14.

12. Ramiah, P.; Bunnin, O.; Liverani, S.; Smith, J.Q. A Causal Analysis of the Plots of Intelligent Adversaries. Currently restricted access - awaiting permission to release. 2024.

13. Smith, J.; Shenvi, A. Assault Crime Dynamic Chain Event Graphs. Warwick Research Report (WRAP) 2018.

14. Sobenko Hatum, P.; McMahon, K.; Mengersen, K.; Pao-Yen Wu, P. Guidelines for model adaptation: A study of the transferability of a general seagrass ecosystem Dynamic Bayesian Networks Model. Ecology and Evolution 2022, 12.

15. Rowe, G.; Wright, G. The Delphi technique as a forecasting tool: issues and analysis. International Journal of Forecasting 1999, 15, 353–375.

16. Cooke, R.M. Experts In Uncertainty: Opinion and Subjective Probability in Science; Oxford University Press: New York, NY, 1991.

17. Hanea, A.; others. Investigate Discuss Estimate Aggregate for structured expert judgement. International Journal of Forecasting 2017, 33, 267–279.

18. Gosling, J.P. SHELF: The Sheffield Elicitation Framework. In Elicitation: The Science and Art of Structuring Judgement; Dias, L.; Morton, A.; Quigley, J., Eds.; Springer International Publishing, 2017; pp. 61–93.

19. O’Hagan, A.; Buck, C.E.; Daneshkhah, A.; Eiser, J.R.; Garthwaite, P.H.; Jenkinson, D.J.; Oakley, J.E.; Rakow, T. Uncertain judgements: Eliciting experts’ probabilities; Statistics in Practice, John Wiley & Sons, 2006.

20. European Food Safety Authority. Guidance on Expert Knowledge Elicitation in Food and Feed Safety Risk Assessment. EFSA Journal 2014, 12, 3734.

21. Bunnin, F.; Smith, J. A Bayesian Hierarchical Model of Violent Criminal Threat. JMS, 2021.

22. Phillips, L.D. A theory of requisite decision models. Acta Psychologica 1984, 56, 29–48.

23. Lauritzen, S.L.; Spiegelhalter, D.J. Local computations with probabilities on graphical structures and their application to expert systems. J. R. Stat. Soc. Series B Stat. Methodol. 1988, 50, 157–194.

24. Cowell, R.; Philip Dawid, A.; Lauritzen, S.; Spiegelhalter, D. Probabilistic Networks and Expert Systems; Springer New York, 1999.

25. Leonelli, M.; Smith, J.Q. Bayesian decision support for complex systems with many distributed experts. Annals of Operations Research 2015, 235, 517–542.

26. Leonelli, M.; Riccomagno, E.; Smith, J.Q. Coherent combination of probabilistic outputs for group decision making: an algebraic approach. OR Spectrum 2020, 42, 499–528.

27. Shenvi, A.; Liverani, S. Beyond conjugacy for chain event graph model selection. International Journal of Approximate Reasoning 2024, 173, 109252.

28. Croft, J.; Smith, J. Discrete mixtures in Bayesian networks with hidden variables: a latent time budget example. Computational Statistics & Data Analysis 2003, 41, 539–547.

29. Mond, D.; Smith, J.; van Straten, D. Stochastic factorizations, sandwiched simplices and the topology of the space of explanations. Proceedings of the Royal Society of London. Series A: Mathematical, Physical and Engineering Sciences 2003, 459, 2821–2845.

30. Zwiernik, P.; Smith, J.Q. Implicit inequality constraints in a binary tree model. Electronic Journal of Statistics 2011, 5.

31. Zwiernik, P.; Smith, J.Q. Tree cumulants and the geometry of binary tree models. Bernoulli 2012, 18.

32. Barons, M.J.; Fonseca, T.C.O.; Davis, A.; Smith, J.Q. A Decision Support System for Addressing Food Security in the United Kingdom. Journal of the Royal Statistical Society Series A: Statistics in Society 2022, 185, 447–470.

33. Volodina, V.; Sonenberg, N.; Smith, J.Q.; Challenor, P.G.; Dent, C.J.; Wynn, H.P. Propagating uncertainty in a network of energy models. 2022 17th International Conference on Probabilistic Methods Applied to Power Systems (PMAPS), 2022, pp. 1–6.

34. Dent, C.; Mawdsley, B.; Smith, J.; Wilson, K. CReDo Technical Report 3: Assessing asset failure, 2022.

35. Albrecht, D.; Nicholson, A.E.; Whittle, C. Structural Sensitivity for the Knowledge Engineering of Bayesian Networks. 7th European Workshop on Probabilistic Graphical Models. Springer, 2014, pp. 1–16.

36. Leonelli, M.; Smith, J.Q.; Wright, S.K. The diameter of a stochastic matrix: A new measure for sensitivity analysis in Bayesian networks, 2024.

37. Banks, D.L.; Rios Aliaga, J.M.; Rios Insua, D. Adversarial risk analysis; Chapman & Hall/CRC: Philadelphia, PA, 2021.

38. Rios Insua, D.; Banks, D.; Rios, J. Modeling Opponents in Adversarial Risk Analysis. Risk Analysis 2016, 36, 742–755.

39. Rios Insua, D.; Naveiro, R.; Gallego, V.; Poulos, J. Adversarial Machine Learning: Bayesian Perspectives. Journal of the American Statistical Association 2023, 118, 2195–2206.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).