Submitted: 30 August 2024

Posted: 02 September 2024


Abstract

Keywords:

1. Introduction

- RQ1: What are the challenges and limitations presented in the literature regarding predicting customer marketing responses?

- RQ2: How effective is the DT model at predicting customer response to marketing campaigns?

- RQ3: What are the key factors influencing customer response to marketing campaigns as identified by the DT model?

  - Which demographic factors are most influential in predicting customer response to marketing campaigns according to the DT model?

  - How do past interactions with the company affect future responses according to the DT model?

2. Related Work

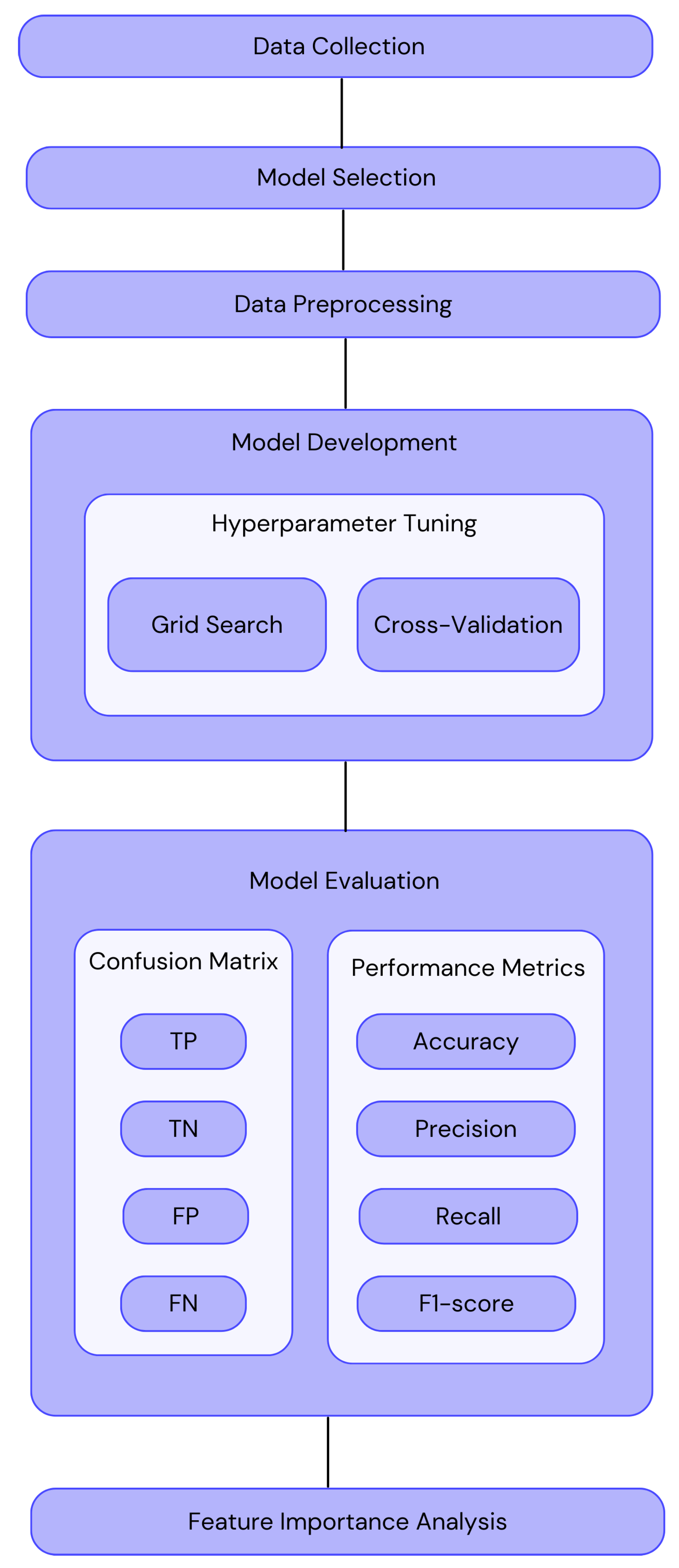

3. Proposed Solution

| Study | Models Compared | Best Accuracy Achieved | Key Findings |
|---|---|---|---|
| K. Wisaeng [9] | SVM, J48-graft, LAD tree, RBFN | 86.95% (SVM) | SVM outperformed other models, RBFN had the lowest accuracy. |
| Sérgio Moro et al. [10] | Logistic Regression, Neural Networks, Decision Tree, SVM | 79% (Neural Networks) | Neural Networks performed best in predicting customer behavior; targeting top half of customers improved outcomes. |
| Sérgio Moro [11] | Naive Bayes, Decision Tree, SVM | N/A (SVM) | SVM showed the highest prediction performance, with call duration as the most significant feature. |
| Choi et al. [12] | Decision Tree | 87.23% (Non-responders) | Decision Tree accurately predicted non-responders but had lower accuracy for predicting responders. |
| Usman-Hamza et al. [13] | Decision Tree, Naive Bayes, K-nearest neighbor | 93.6% (Decision Tree) | Decision Tree outperformed other models in customer churn analysis for live stream e-commerce. |
| Chaubey et al. [1] | Random Forest, Decision Tree | N/A (Random Forest) | Random Forest performed better than Decision Tree for churn prediction but lacks interpretability. |
| Apampa [15] | Random Forest, Decision Tree | N/A (Decision Tree) | Random Forest did not consistently improve Decision Tree’s performance in bank marketing prediction, favoring Decision Tree for interpretability. |

3.1. Hardware and Software Configuration

3.2. Data Collection

3.3. Model Selection

3.4. Data Preprocessing

3.5. Model Development

3.5.1. Hyperparameter Tuning

3.6. Model Evaluation

3.6.1. Confusion Matrix

3.6.2. Accuracy

3.6.3. Precision

3.6.4. Recall

3.6.5. F1-Score

3.7. Feature Importance Extraction

3.8. Decision Rules Generation

4. Results

4.1. Best Hyperparameters

4.1.1. Before Resampling

4.1.2. After Resampling

4.2. Confusion Matrix

4.2.1. Before Resampling

4.2.2. After Resampling

4.3. Model Evaluation

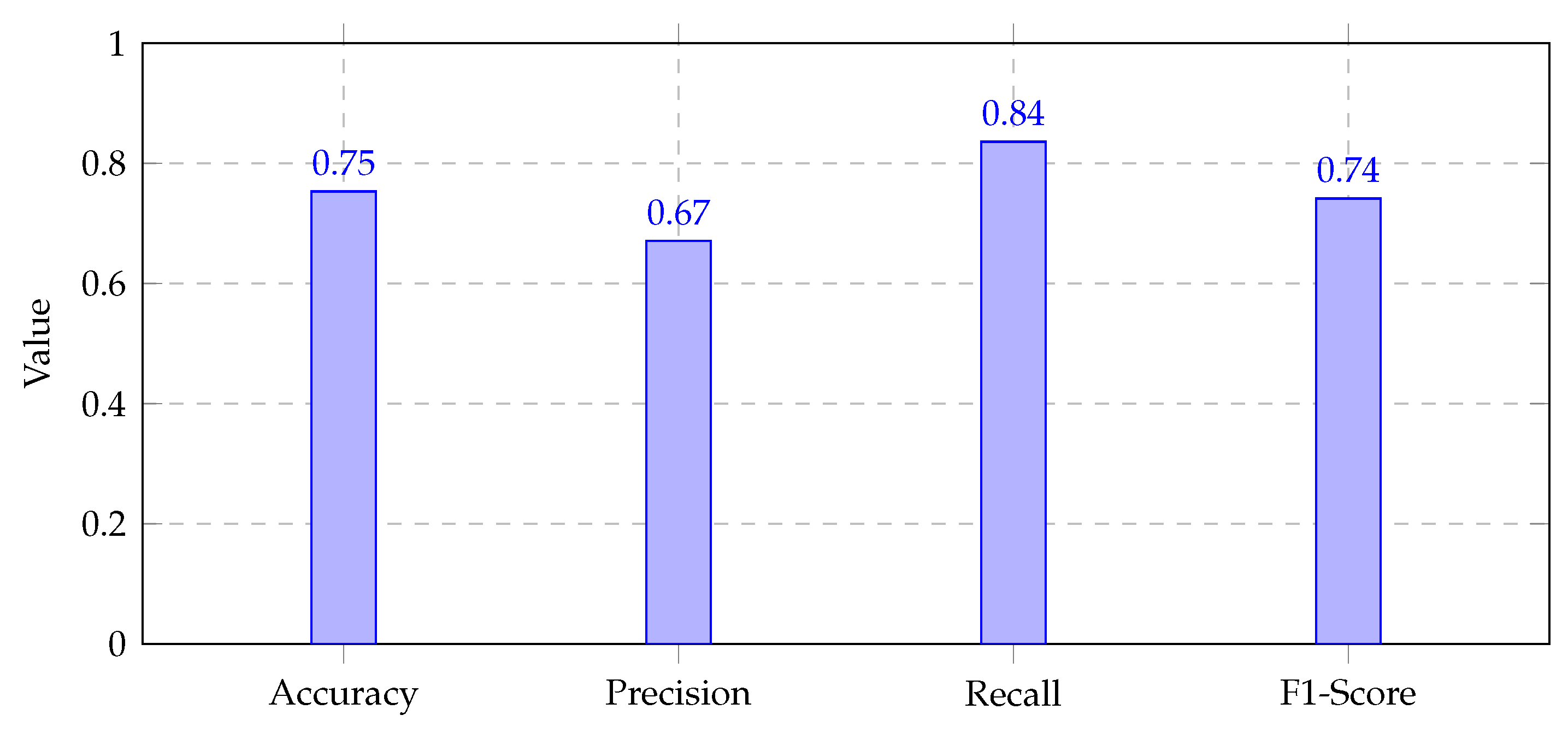

4.3.1. Before Resampling

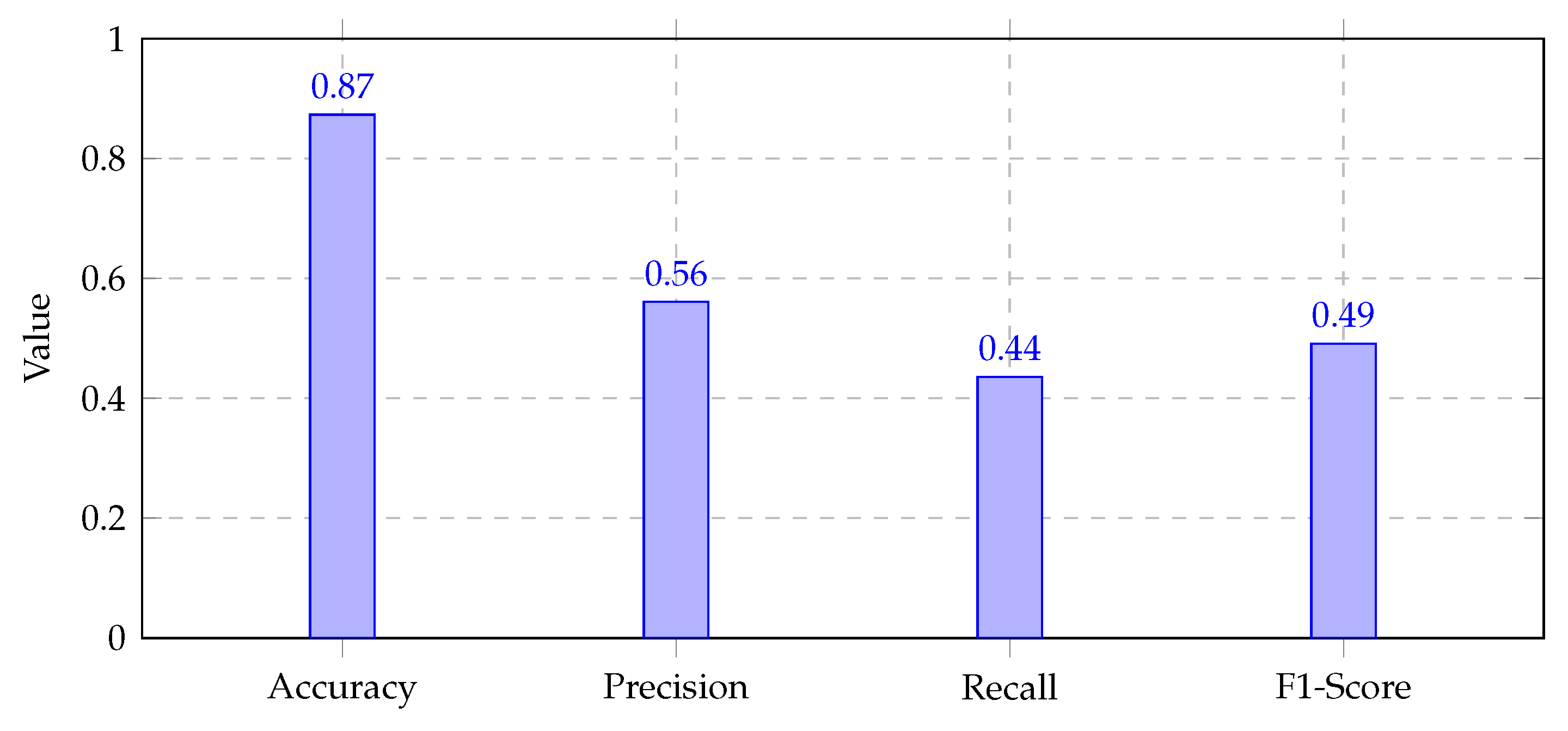

4.3.2. After Resampling

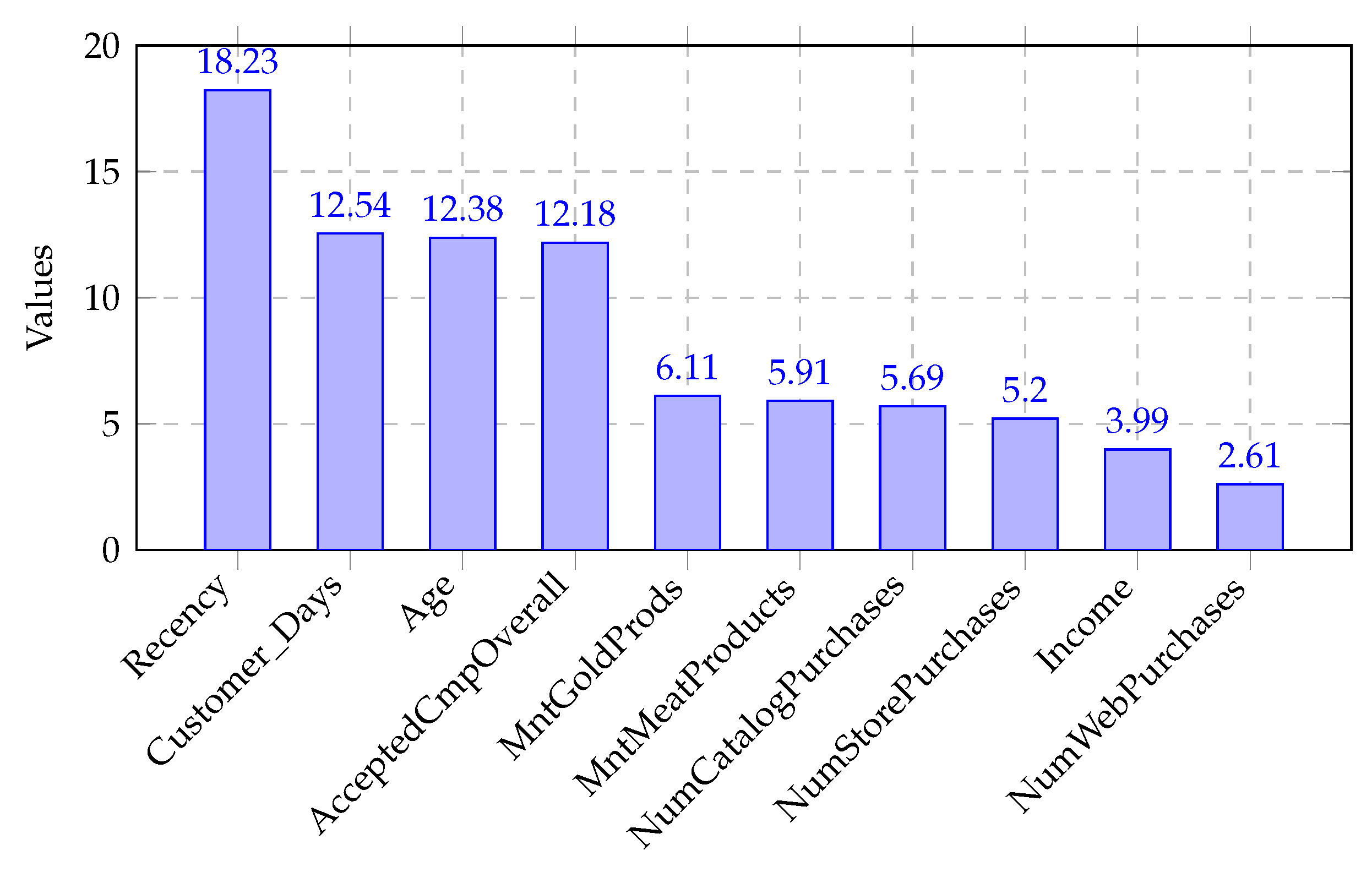

4.4. Feature Importance Scores

4.5. Decision Rules

5. Discussion

5.1. Results Interpretation

5.2. Implications for Marketing Strategies

5.3. Comparison of Related Works Papers with Our Proposed Solution

5.3.1. Overview of Related Works

5.3.2. Comparison with Our Proposed Solution

- Accuracy and Performance:

  - Our Solution: Achieved an accuracy of 91.5% using Gradient Boosting, which is higher than the best accuracy reported by most related works, such as the 87.23% by Choi et al. with Decision Trees, although it remains below the 93.6% reported by Usman-Hamza et al. with Decision Trees.

  - Related Works: Various studies have reported accuracies ranging from 79% to 93.6%, with different models exhibiting strengths in specific areas (e.g., Decision Trees for non-responders and Neural Networks for general prediction).

- Handling of Imbalanced Datasets:

  - Our Solution: The Gradient Boosting model handles imbalanced datasets effectively, which is a common challenge in direct marketing predictions. This aspect is not explicitly addressed in many of the related works.

  - Related Works: Some studies, such as those by Apampa and Chaubey et al., discuss model performance but do not specifically address methods for handling imbalanced datasets.

- Model Complexity and Interpretability:

  - Our Solution: While Gradient Boosting provides higher accuracy, it is generally more complex than Decision Trees and Random Forests. Our study also highlights the trade-off between accuracy and interpretability.

  - Related Works: Studies like those by K. Wisaeng and Apampa note the interpretability of Decision Trees and Random Forests, which is often preferred in practice despite potentially lower accuracy.

- Computational Efficiency:

  - Our Solution: The computational demands of Gradient Boosting are higher than those of Decision Trees and Random Forests. However, the accuracy gains may justify the additional computational cost in scenarios where high predictive accuracy is crucial.

  - Related Works: Efficiency considerations are less emphasized in many of the studies, which focus on achieving high accuracy rather than on optimizing computational resources.
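
The comparison above refers to handling imbalanced datasets, and the results are reported before and after resampling, but the resampling technique itself is not named here. As a minimal sketch, assuming random oversampling of the minority class (one common option, not necessarily the method used in this study), the balancing step could look as follows. The helper name `oversample_minority` and the toy arrays are hypothetical.

```python
import numpy as np
from sklearn.utils import resample


def oversample_minority(X, y, random_state=0):
    """Randomly oversample every smaller class up to the majority class size.

    Assumption: random oversampling with replacement; the study's actual
    resampling method is not specified in this section.
    """
    X, y = np.asarray(X), np.asarray(y)
    classes, counts = np.unique(y, return_counts=True)
    majority_count = int(counts.max())

    X_parts, y_parts = [], []
    for cls, count in zip(classes, counts):
        X_cls = X[y == cls]
        if count < majority_count:
            # Draw rows with replacement until this class matches the majority.
            X_cls = resample(X_cls, replace=True,
                             n_samples=majority_count,
                             random_state=random_state)
        X_parts.append(X_cls)
        y_parts.append(np.full(len(X_cls), cls))
    return np.vstack(X_parts), np.concatenate(y_parts)


# Toy data: 8 non-responders (0) and 2 responders (1); values are invented.
X = np.arange(20).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)

X_bal, y_bal = oversample_minority(X, y)
print(np.bincount(y_bal))  # class counts after balancing
```

In practice, a step like this would normally be applied to the training split only, so that duplicated minority rows do not leak into the test set.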

5.3.3. Summary and Implications

| Study | Models Compared | Best Accuracy Achieved | Key Findings |
|---|---|---|---|
| K. Wisaeng [9] | SVM, J48-graft, LAD tree, RBFN | 86.95% (SVM) | SVM outperformed other models, RBFN had the lowest accuracy. |
| Sérgio Moro et al. [10] | Logistic Regression, Neural Networks, Decision Tree, SVM | 79% (Neural Networks) | Neural Networks performed best in predicting customer behavior; targeting top half of customers improved outcomes. |
| Sérgio Moro [11] | Naive Bayes, Decision Tree, SVM | N/A (SVM) | SVM showed the highest prediction performance, with call duration as the most significant feature. |
| Choi et al. [12] | Decision Tree | 87.23% (Non-responders) | Decision Tree accurately predicted non-responders but had lower accuracy for predicting responders. |
| Usman-Hamza et al. [13] | Decision Tree, Naive Bayes, K-nearest neighbor | 93.6% (Decision Tree) | Decision Tree outperformed other models in customer churn analysis for live stream e-commerce. |
| Chaubey et al. [1] | Random Forest, Decision Tree | N/A (Random Forest) | Random Forest performed better than Decision Tree for churn prediction but lacks interpretability. |
| Apampa [15] | Random Forest, Decision Tree | N/A (Decision Tree) | Random Forest did not consistently improve Decision Tree’s performance in bank marketing prediction, favoring Decision Tree for interpretability. |
| Our proposed Solution | Decision Tree, Random Forest, Gradient Boosting | 91.5% (Gradient Boosting) | Gradient Boosting achieved the highest accuracy, outperforming Decision Tree and Random Forest; effective in handling imbalanced datasets. |

6. Conclusion

- The first question regarding the challenges and limitations presented in the literature is addressed in the "Related Work" section, highlighting the complexities of customer behavior and the limitations of traditional predictive models.

- The second question on the effectiveness of the DT model in predicting customer response to marketing campaigns is explored through the comparative analysis of model evaluation metrics before and after resampling, as presented in Section 4.2 and Section 4.3, and interpreted in Section 5.1.

- The third question, on the key factors influencing customer response, is addressed through feature importance analysis and decision rule extraction, described in Sections 3.7 and 3.8 and discussed in Section 5.2.

Author Contributions

Funding

Conflicts of Interest


Appendix A

Appendix A.1

Algorithm A1. Decision Tree Rules Part 1

Algorithm A2. Decision Tree Rules Part 2

Algorithm A3. Decision Tree Rules Part 3

Algorithm A4. Decision Tree Rules Part 4

Algorithm A5. Decision Tree Rules Part 5

References

1. Chaubey, G.; Gavhane, P.R.; D.B.; Arjaria, S.K. Customer purchasing behavior prediction using machine learning classification techniques. Journal of Ambient Intelligence and Humanized Computing 2023, 14.

2. Liu, M.W.; Zhu, Q.; Y.Y.; Wu, S. The Impact of Predictive Analytics and AI on Digital Marketing Strategy and ROI. The Palgrave Handbook of Interactive Marketing 2023.

3. Song, Y.Y.; Lu, Y. Decision tree methods: Applications for classification and prediction. Shanghai Arch Psychiatry 2015, 27.

4. Raorane, A.; Kulkarni, R.V. Data Mining Techniques: A Source for Consumer Behavior Analysis. International Journal of Database Management Systems 2011.

5. Reinartz, W.J.; Kumar, V. The mismanagement of customer loyalty. Harvard Business Review 2002, 80, 86–94.

6. Louppe, G. Understanding Random Forests: From Theory to Practice. PhD thesis, University of Liège, 2014.

7. Kursa, M.B.; Rudnicki, W.R. The All Relevant Feature Selection using Random Forest. 2011.

8. Moshkovitz, M.; Y.Y.Y.; Chaudhuri, K. Connecting Interpretability and Robustness in Decision Trees through Separation. 2021.

9. Wisaeng, K. A Comparison of Different Classification Techniques for Bank Direct Marketing. International Journal of Soft Computing and Engineering (IJSCE) 2013.

10. Moro, S.; P.C.; Rita, P. A data-driven approach to predict the success of bank telemarketing. Decision Support Systems 2014.

11. Moro, S.; R.M.S.L.; Cortez, P. Using Data Mining for Bank Direct Marketing: An Application of the CRISP-DM Methodology. Technical report, Universidade do Minho, 2011.

12. Choi, Y.; S.; Choi, J.W. Assessing the Predictive Performance of Machine Learning in Direct Marketing Response. International Journal of E-Business Research 2023, 19.

13. Usman-Hamza, F.E.; Balogun, A.O.; S.K.N.L.F.C.H.A.M.S.A.S.A.G.A.M.A.M.; Awotunde, J.B. Empirical analysis of tree-based classification models for customer churn prediction. Scientific African 2024.

14. Ho, T.K. Random Decision Forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition. IEEE, 1995.

15. Apampa, O. Evaluation of Classification and Ensemble Algorithms for Bank Customer Marketing Response Prediction. Journal of International Technology and Information Management 2016, 25. https://scholarworks.lib.csusb.edu/jitim/vol25/iss4/6/.

16. iFood. iFood DF. https://www.kaggle.com/datasets/diniwilliams/ifood-df, 2024.

17. He, H.; Garcia, E.A. Learning from imbalanced data. IEEE Transactions on Knowledge and Data Engineering 2009, 21, 1263–1284.

18. Mehta, M.M.; Talbar, S.B. Class imbalance problem in data mining: review. International Journal of Computer Applications 2017, 169, 15–18.

19. Fürnkranz, J. Decision Tree. In Encyclopedia of Machine Learning; Sammut, C.; Webb, G.I., Eds.; Springer, Boston, MA, 2011; pp. 263–267.

20. Refaeilzadeh, P.; Tang, L.; Liu, H. Cross-Validation. In Encyclopedia of Database Systems; Liu, L.; Özsu, M.T., Eds.; Springer, Boston, MA, 2009; pp. 532–538.

21. Hossin, M.; Sulaiman, M.N. A review on evaluation metrics for data classification evaluations. International Journal of Data Mining & Knowledge Management Process 2015, 5, 1–11.

22. Breiman, L. Random Forests. Machine Learning 2001, 45, 5–32.


| Component | Configuration | |
|---|---|---|
| Hardware | Processor | Intel Core i7-10510U |
| | RAM | 16 GB |
| | Storage | 952 GB |
| | OS | Windows 11 Pro |
| Software | Language | Python |
| | Libraries | pandas, seaborn, matplotlib, scikit-learn |
| | Environment | Jupyter Notebook |

| Category | Features | | |
|---|---|---|---|
| Demographic | Income | Kidhome | Age |
| | Teenhome | Customer_Days | marital_Together |
| | marital_Single | marital_Divorced | marital_Widow |
| | education_PhD | education_Master | education_Graduation |
| | education_Basic | education_2n Cycle | |
| Customer Interaction | MntWines | MntFruits | MntGoldProds |
| | MntMeatProducts | MntFishProducts | MntSweetProducts |
| | NumStorePurchases | NumCatalogPurchases | NumWebVisitsMonth |
| | NumDealsPurchases | NumWebPurchases | Recency |
| | Z_CostContact | Z_Revenue | MntTotal |
| | MntRegularProds | Complain | Response |
| | AcceptedCmp1 | AcceptedCmp2 | AcceptedCmp3 |
| | AcceptedCmp4 | AcceptedCmp5 | AcceptedCmpOverall |
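
To illustrate how feature importance scores and decision rules might be extracted for columns like those listed above, the following sketch fits a scikit-learn decision tree on a tiny toy frame. It is only an assumption-laden example: the numeric values are invented, only three of the listed features are used, and the tree settings are illustrative rather than the study's configuration.

```python
import pandas as pd
from sklearn.tree import DecisionTreeClassifier, export_text

# Toy rows reusing a few column names from the feature table.
# The values are invented for illustration and are not from the dataset.
data = pd.DataFrame({
    "Income":   [30000, 82000, 45000, 91000, 27000, 76000, 52000, 88000],
    "Recency":  [60, 5, 40, 10, 80, 15, 55, 8],
    "MntWines": [20, 600, 90, 750, 10, 480, 120, 820],
    "Response": [0, 1, 0, 1, 0, 1, 0, 1],
})
X = data.drop(columns="Response")
y = data["Response"]

# Illustrative settings (assumption), fixed seed for reproducibility.
tree = DecisionTreeClassifier(criterion="entropy", max_depth=5,
                              random_state=0).fit(X, y)

# Impurity-based importances, one score per input column.
importances = pd.Series(tree.feature_importances_,
                        index=X.columns).sort_values(ascending=False)
print(importances)

# Human-readable if/else rules describing the fitted tree.
rules = export_text(tree, feature_names=list(X.columns))
print(rules)
```

The importances returned by `feature_importances_` are normalized to sum to one, so they are best read as relative rankings rather than absolute effect sizes.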

| Predicted \ Actual | Positive (+) | Negative (-) |
|---|---|---|
| Positive (+) | TP | FP |
| Negative (-) | FN | TN |
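
The evaluation metrics named in Sections 3.6.2 to 3.6.5 follow directly from the four cells of the confusion matrix above. As a small sketch using the standard definitions, they can be computed as shown below; the function name `classification_metrics` and the example counts are hypothetical and are not results from this study.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of predicted positives that are truly positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of actual positives that were predicted positive.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts, chosen only to illustrate the arithmetic.
metrics = classification_metrics(tp=40, fp=10, fn=20, tn=130)
print(metrics)
```

With these illustrative counts, accuracy is 170/200 = 0.85 while recall is only 40/60, which shows why accuracy alone can look favorable on an imbalanced dataset.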

| Parameter | Value |
|---|---|
| criterion | entropy |
| max_depth | 5 |
| min_samples_leaf | 2 |
| min_samples_split | 2 |
| splitter | random |

| Parameter | Value |
|---|---|
| criterion | entropy |
| max_depth | None |
| min_samples_leaf | 2 |
| min_samples_split | 2 |
| splitter | best |
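
The parameter tables above list values for `criterion`, `max_depth`, `min_samples_leaf`, `min_samples_split`, and `splitter`. One way such values could be selected is an exhaustive grid search with cross-validation, sketched below with scikit-learn. This is an assumption: the search procedure, the candidate grid, the scoring metric, and the synthetic data are all illustrative and are not taken from the study.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, imbalanced stand-in data (assumption: not the study's dataset).
X, y = make_classification(n_samples=600, n_features=10,
                           weights=[0.85, 0.15], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

# Hypothetical candidate grid covering the five tuned parameters.
param_grid = {
    "criterion": ["gini", "entropy"],
    "max_depth": [None, 3, 5, 10],
    "min_samples_leaf": [1, 2, 4],
    "min_samples_split": [2, 5, 10],
    "splitter": ["best", "random"],
}

# 5-fold cross-validated search; F1 is used because the classes are imbalanced.
search = GridSearchCV(DecisionTreeClassifier(random_state=0),
                      param_grid, cv=5, scoring="f1")
search.fit(X_train, y_train)

best_params = search.best_params_
print(best_params)
print(search.score(X_test, y_test))  # F1 of the refit best tree on held-out data
```

Running the same search once on the original training data and once on a resampled version would yield two parameter sets, comparable in form to the two tables above.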

| Predicted \ Actual | Positive (+) | Negative (-) |
|---|---|---|
| Positive (+) | | |
| Negative (-) | | |

| Predicted \ Actual | Positive (+) | Negative (-) |
|---|---|---|
| Positive (+) | | |
| Negative (-) | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).