Submitted: 16 August 2024

Posted: 19 August 2024

Abstract

Keywords:

1. Introduction

- Application description and requirements: we’ll examine the overall application features and the functional requirements of the system. We’ll describe some of the functional challenges the system needs to overcome to satisfy the fundamental pillars of modern software development.

- System design: the system design must deliver the required functionality. We’ll present the required building blocks, the reasoning behind each choice, the role and behavior of each block, and its connections to the other parts of the system. We’ll outline how the system architecture is built, beginning with a simple foundational structure and progressively adding complexity as technical challenges are introduced.

- Technology background: because the end goal is complex, the technology stack is correspondingly complex. We’ll investigate the technologies used for each building block, the general infrastructure, and the technology principles applied in the design and development of the collaborative system.

- System development: we’ll detail the actual development of the application, covering topics such as conflict resolution, storage handling, application code, services configuration, tooling usage, and microservices interactions.

- Cloud integration: we’ll detail the platform and cloud-native technologies [15] used to move the system from an on-premise infrastructure to the global network of the public cloud providers.

- Architecture validation: we’ll validate the proposed application architecture in terms of scalability, availability, throughput, and resource consumption [16]. Individual components of the system are subjected to a series of challenges, disaster scenarios, and tests to showcase their contribution towards a modern, available, and scalable application. To further support the validity of the work, performance metrics and test results are provided.

2. Application Description and Requirements

2.1. Core Entity

2.2. Core Features

- seeing added/deleted characters in a specific part of the document

- seeing the cursor indicator of a user while navigating through the document

- seeing actual logs of all the events received during a session

2.3. Performance Metrics

- Concurrency: the number of users supported at the same time in a collaboration session. Because this is a matter of resource management and sharing, the metric indicates how many users can join a session given the system’s available resources [18].

3. System Design

3.1. Technical Requirements

- Users can create accounts, and their credentials are stored securely in a persistent manner. With the same credentials, users can authorize actions and interact with the application resources.

- In a session, users can create the base document resource and act upon it with CRUD (Create, Read, Update, Delete) operations [19]. All the described operations must be persisted on the final resource. Only the appropriate document owner can interact with the resource at this level.

- Being a real-time application, users should be informed about various events in a near real-time manner (notification system). When a live update is not possible, users should receive the missing updates in an alternative manner.

- The system must provide a real-time document editing experience to all the involved peers. Users should be able to dispatch events resembling the natural interactions a human would normally perform on a text resource. The system must handle a considerable number of users simultaneously: it must support concurrent users on the order of thousands.

- The system must use the appropriate communication technologies to deliver the promised functionality. Data should be delivered in such a way that 95% of the users receive a response to the action they executed within an appropriate time frame.

- The persistence solution must provide a robust and reliable storage layer where all the relevant user-generated content is stored.
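The 95% delivery requirement can be checked against measured response times with a percentile computation. The following is a minimal sketch using the nearest-rank method. The sample latencies and the 200 ms threshold are illustrative assumptions, not values taken from the system.

```python
import math

def percentile(samples, pct):
    """Return the nearest-rank percentile (0 < pct <= 100) of a non-empty list."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Nearest-rank: smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]

def meets_target(latencies_ms, threshold_ms, pct=95):
    """True when pct% of the requests completed within threshold_ms."""
    return percentile(latencies_ms, pct) <= threshold_ms

# Hypothetical response times in milliseconds (illustrative data only).
latencies_ms = [12, 15, 18, 20, 22, 25, 30, 35, 40, 45,
                50, 55, 60, 70, 80, 90, 110, 130, 150, 900]
p95 = percentile(latencies_ms, 95)
```

With this data, the single 900 ms outlier does not affect the result, which is why a percentile target is a more robust requirement than a maximum.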

3.2. Application Structure

- Client-side applications: generally, web applications (usually created with technologies like HTML, CSS, and JavaScript) [21,23], mobile applications (created with technologies like Objective-C, Kotlin, Swift, Dart, Flutter, etc.) [24], and desktop applications [25]. This part of the product aims to deliver a consistent and streamlined experience to the user, covering efficient data handling, user interactions, animations and transitions, basic client-side security, and low-effort user interface interactions.

3.3. System Organization

- Monolithic

- Distributed microservices

3.4. Scaling

- Vertical: where more hardware resources are provided to keep up with the load.

- Horizontal: where more instances of the running process are provided to split the work among other workers.
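Horizontal scaling only helps if incoming work is actually spread across the instances. As a minimal sketch, assuming a simple round-robin policy (the instance names are hypothetical and the policy is not necessarily the one used by the system):

```python
import itertools
from collections import Counter

class RoundRobinBalancer:
    """Cycles through worker instances so each receives an equal share of requests."""

    def __init__(self, instances):
        if not instances:
            raise ValueError("at least one instance is required")
        self._cycle = itertools.cycle(list(instances))

    def next_instance(self):
        return next(self._cycle)

def distribute(request_count, balancer):
    """Count how many requests each instance would receive."""
    return Counter(balancer.next_instance() for _ in range(request_count))

# Three hypothetical instances of the same service.
balancer = RoundRobinBalancer(["worker-1", "worker-2", "worker-3"])
load = distribute(9, balancer)
```

Adding a fourth instance to the list lowers the share handled by each worker without changing the hardware of any of them, which is the distinction from vertical scaling.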

3.5. Microservices

- Scalability: Independent services can be scaled individually, optimizing resource allocation.

- Flexibility: Enables faster development and deployment cycles with each service developed, deployed, and scaled independently.

- Fault isolation: Failures in one microservice instance do not affect the entire system, enhancing reliability.

- Technology diversity: Allows the use of different technologies for different services, optimizing for specific needs.

- Easy maintenance: Easier to maintain and update as changes to one service do not necessarily impact others.

- Continuous delivery and integration: Supports continuous integration and delivery practices, facilitating a more streamlined development process.

- Resilience: Improved fault tolerance and resilience due to the distributed nature of services.

- Autonomy: Teams can work independently on different services, fostering autonomy and speeding up development.

- Better resource utilization: Efficient use of resources as each microservice can be optimized for its specific task.

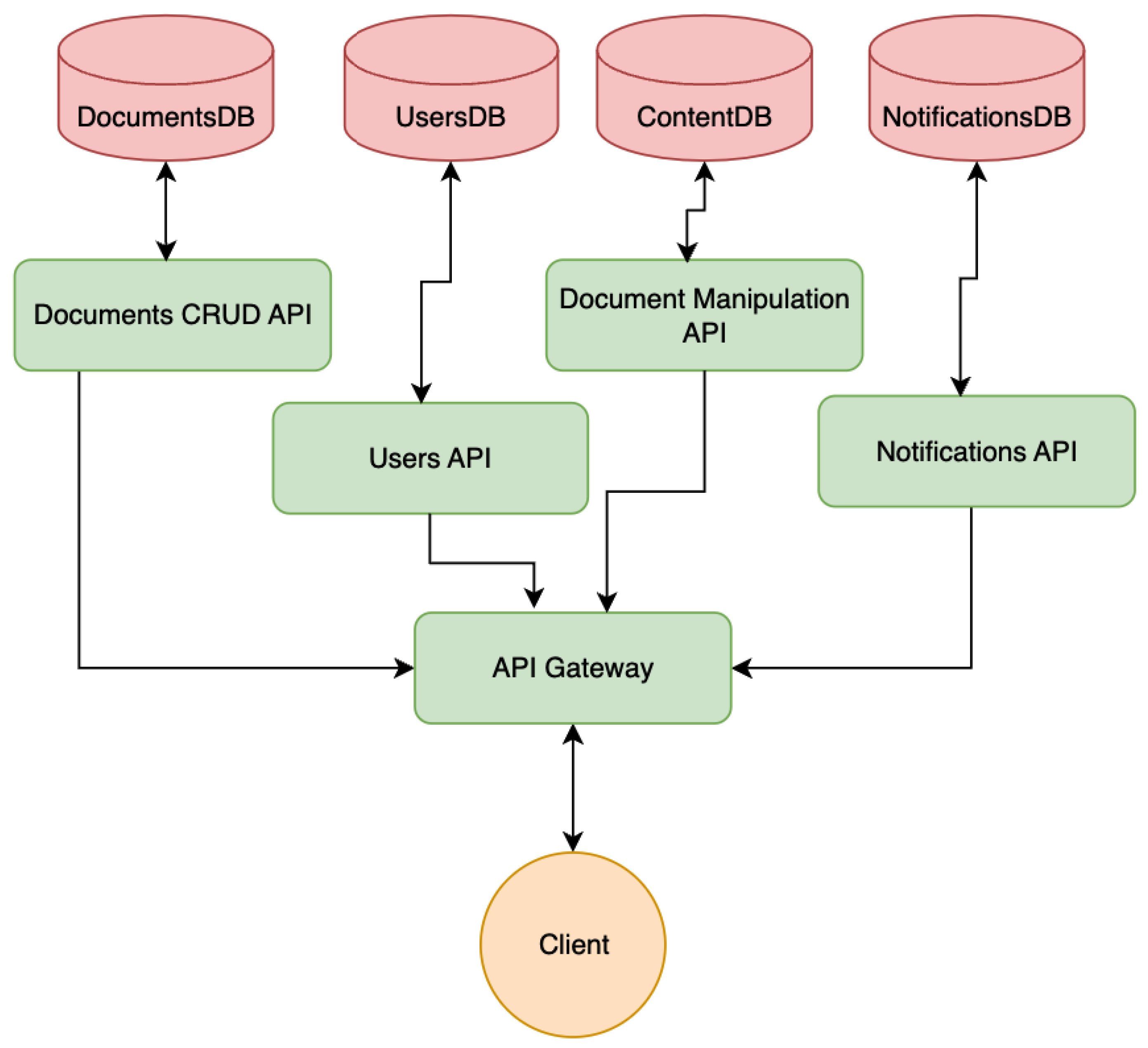

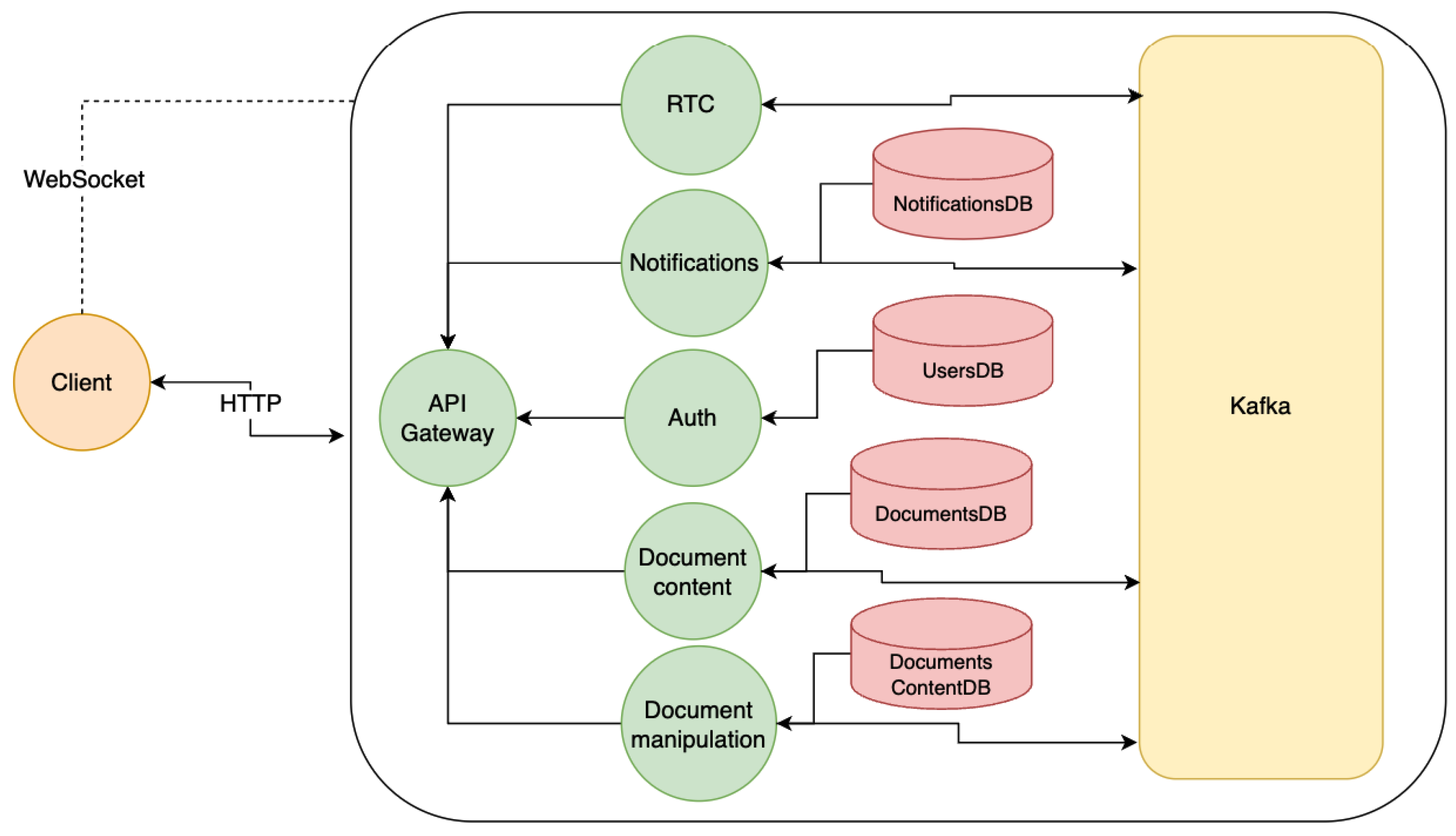

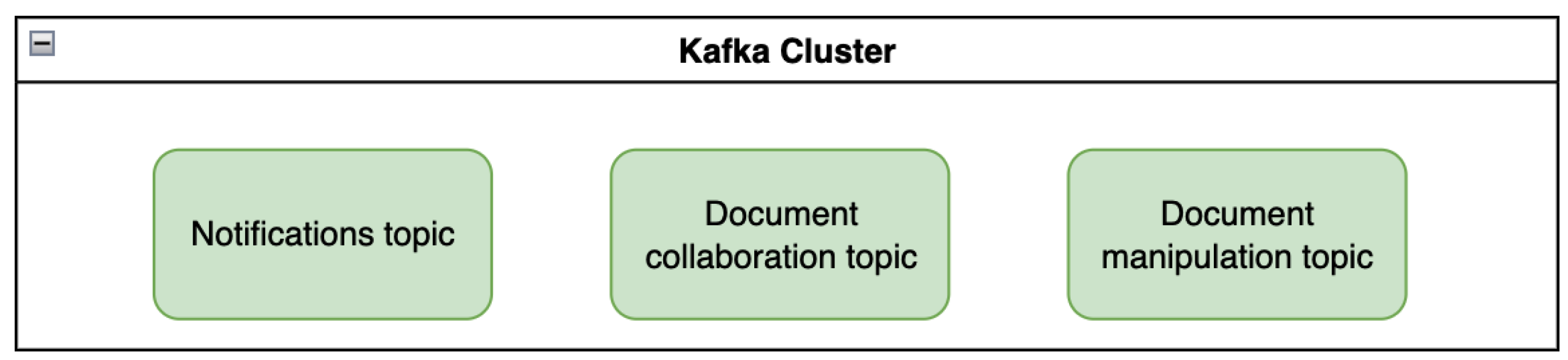

- Documents API: a module for handling the actual document creation and basic manipulation of all the resources besides the document content.

- Document Manipulation API: an API designated for handling collaboration sessions in real-time between the users and responsible for storing the data generated in the sessions.

- Notifications API: a channel for delivering notifications to users in real time whenever possible, with offline capabilities for storage and late delivery.

- The client implementation doesn’t need to know about the specifics of the services providing the functionality. A single point of entry can “hide” all the building blocks from the client and provide only what is necessary.

- A single point of entry facilitates global operations like monitoring, logging, authorization, etc.

- Provides routing to the needed functionality.

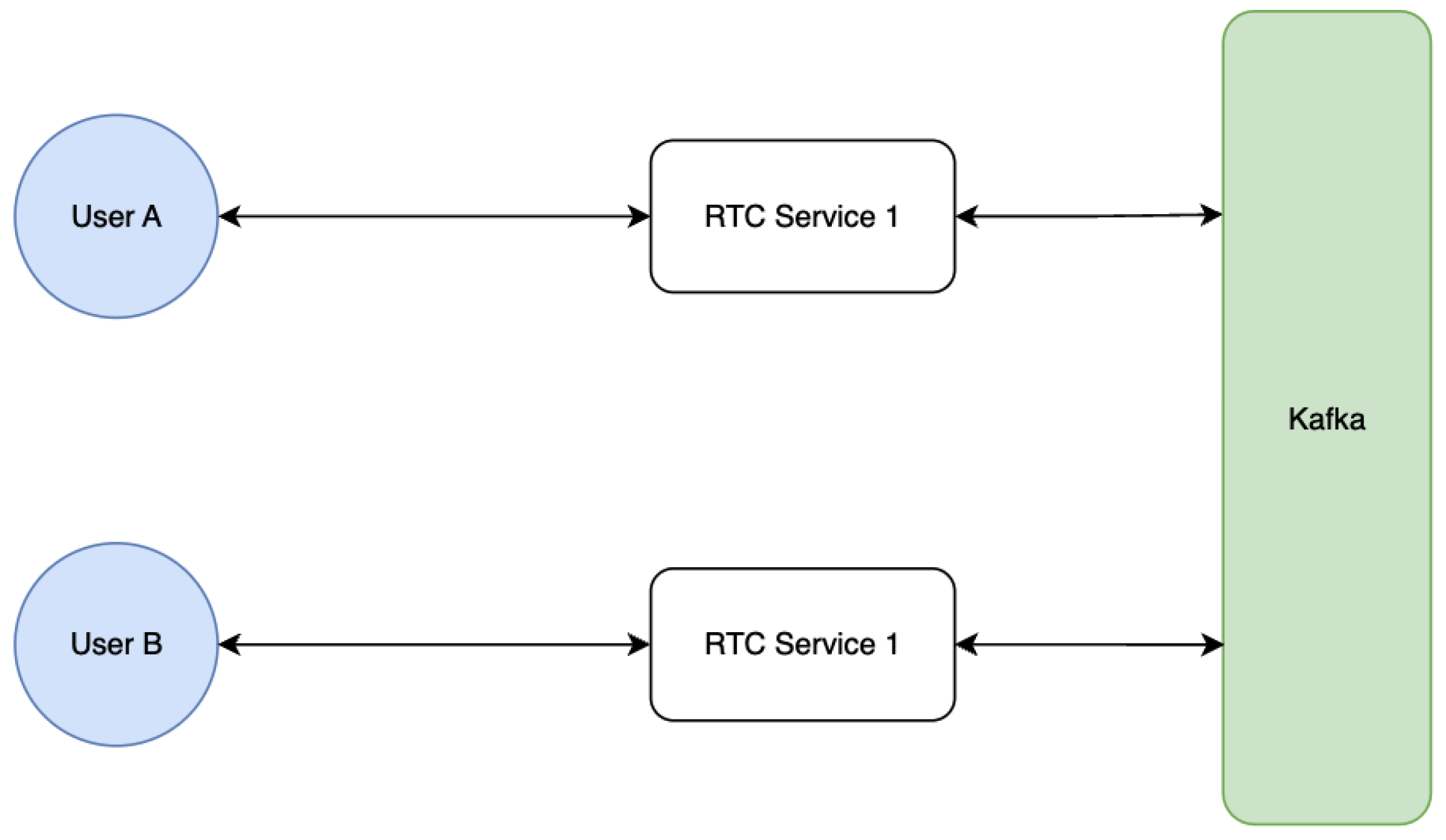
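The routing role of the single entry point can be sketched as a lookup from a request path to the service that owns it. This is a minimal illustration: the path prefixes and service names below are hypothetical, and the longest-prefix rule is an assumption made for the example.

```python
class ApiGateway:
    """Single entry point that maps a request path to the service owning its prefix."""

    def __init__(self, routes):
        # Longest prefixes first so that more specific routes win.
        self._routes = sorted(routes.items(), key=lambda kv: len(kv[0]), reverse=True)

    def resolve(self, path):
        for prefix, service in self._routes:
            if path == prefix or path.startswith(prefix + "/"):
                return service
        return None  # No service owns this path; a gateway would answer 404.

# Hypothetical route table (illustrative prefixes and service names).
gateway = ApiGateway({
    "/documents": "documents-api",
    "/documents/content": "document-manipulation-api",
    "/notifications": "notifications-api",
})
```

The client only ever addresses the gateway, so services can be renamed, split, or moved by editing the route table alone.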

3.6. Cross-Service Communications

- Synchronous communication between services is a communication pattern in which a service sends a request and waits for the response before continuing its execution.

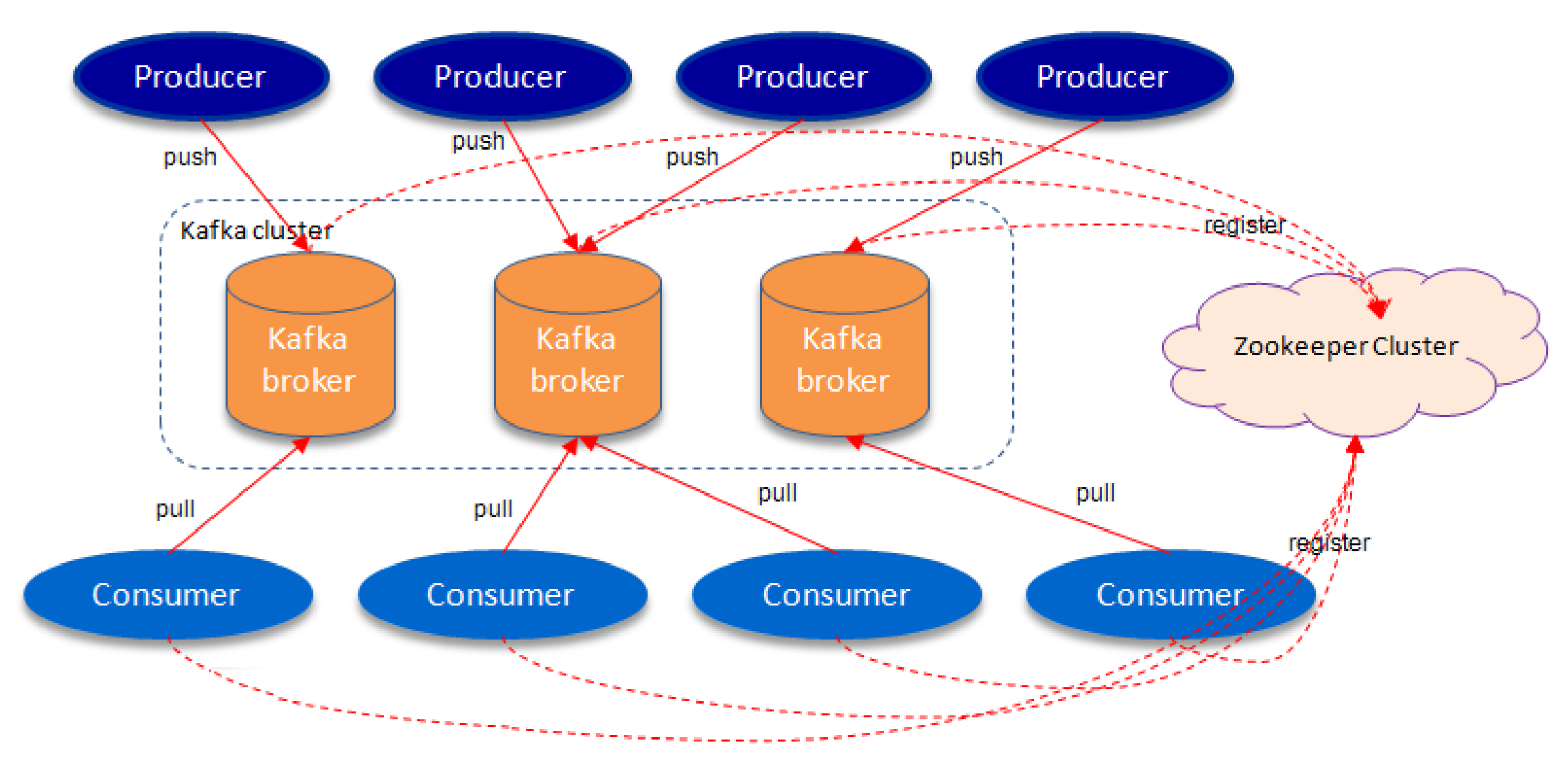

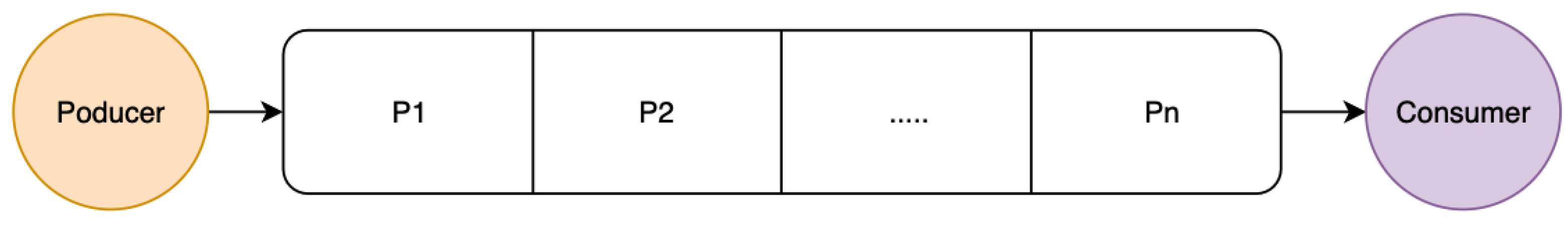

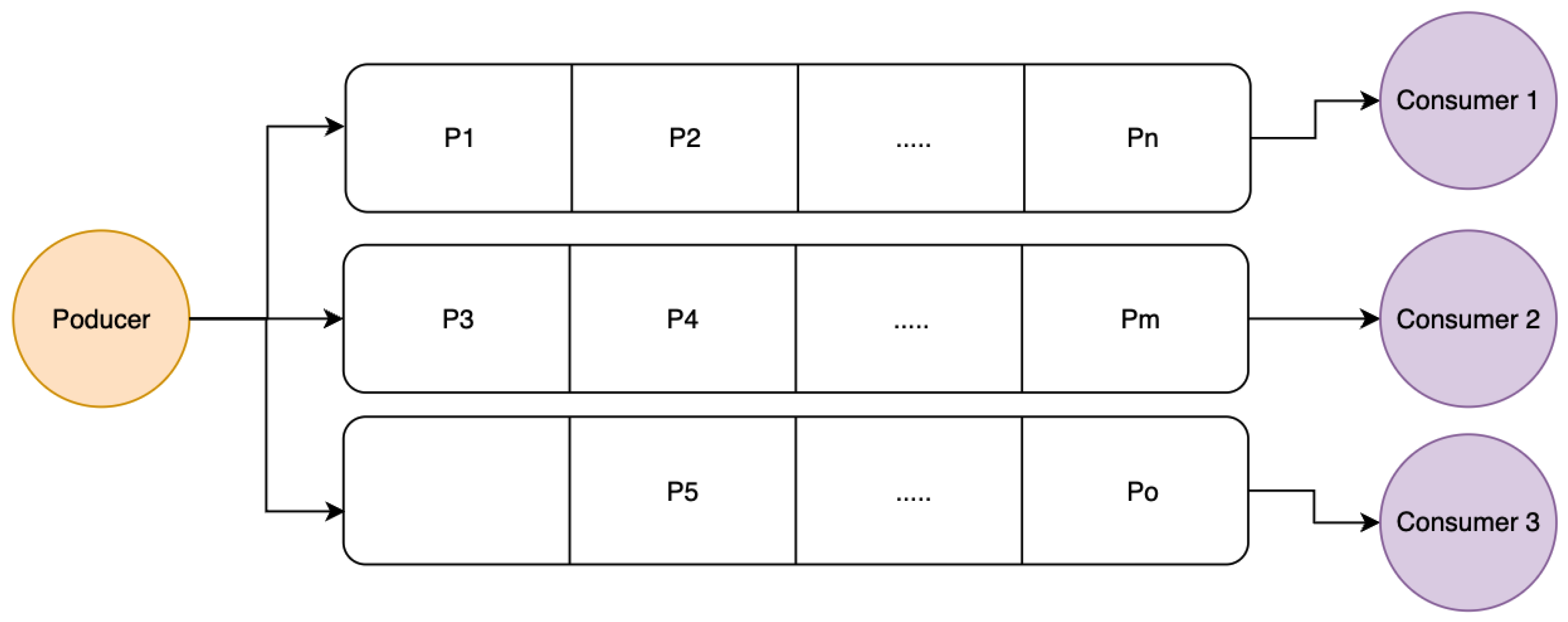

- Asynchronous communication between services is a pattern in which a service sends a message or request without waiting for an immediate response. In contrast to synchronous communication, where the sender blocks until the response arrives, asynchronous communication allows services to operate independently and process messages over time.

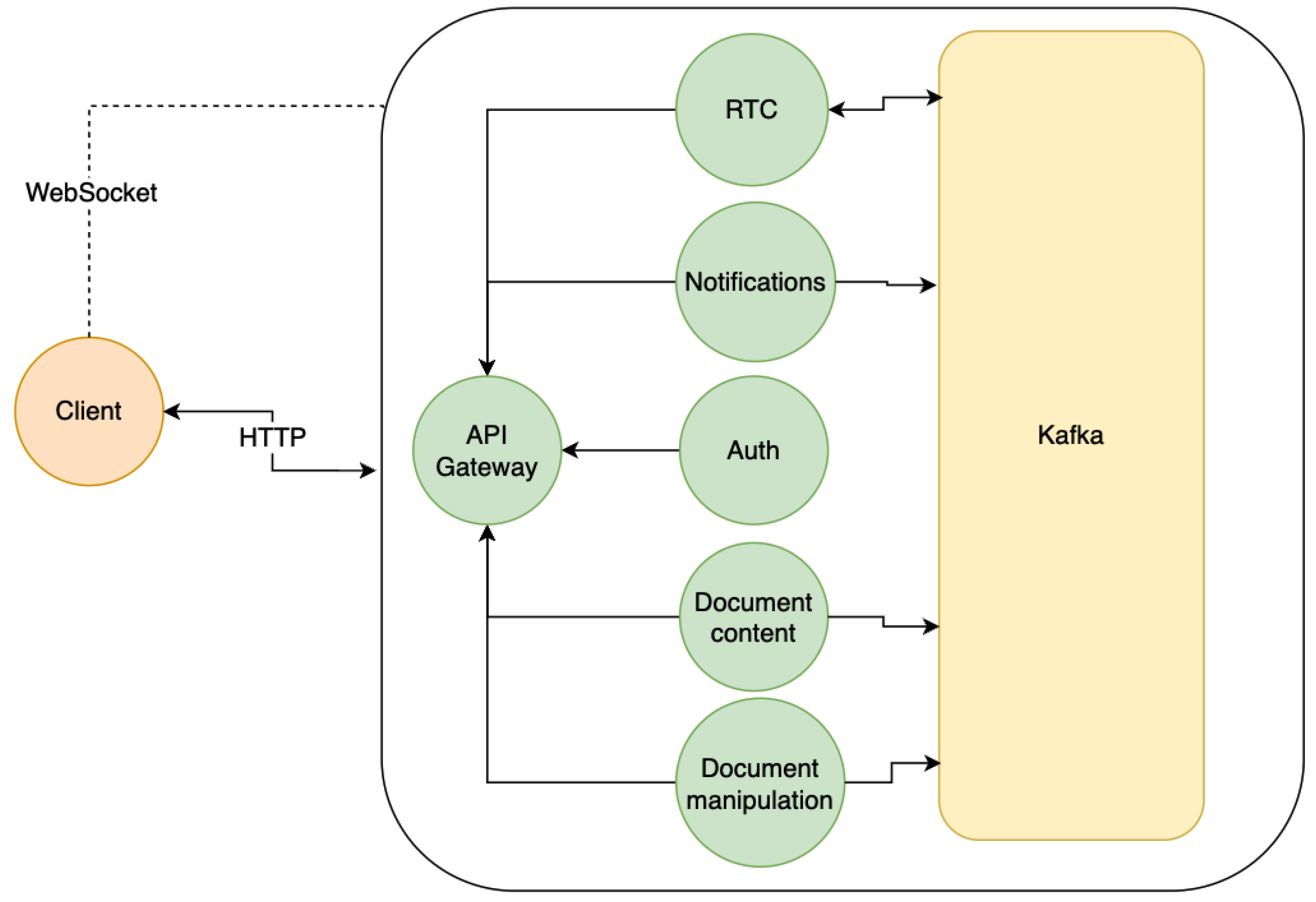

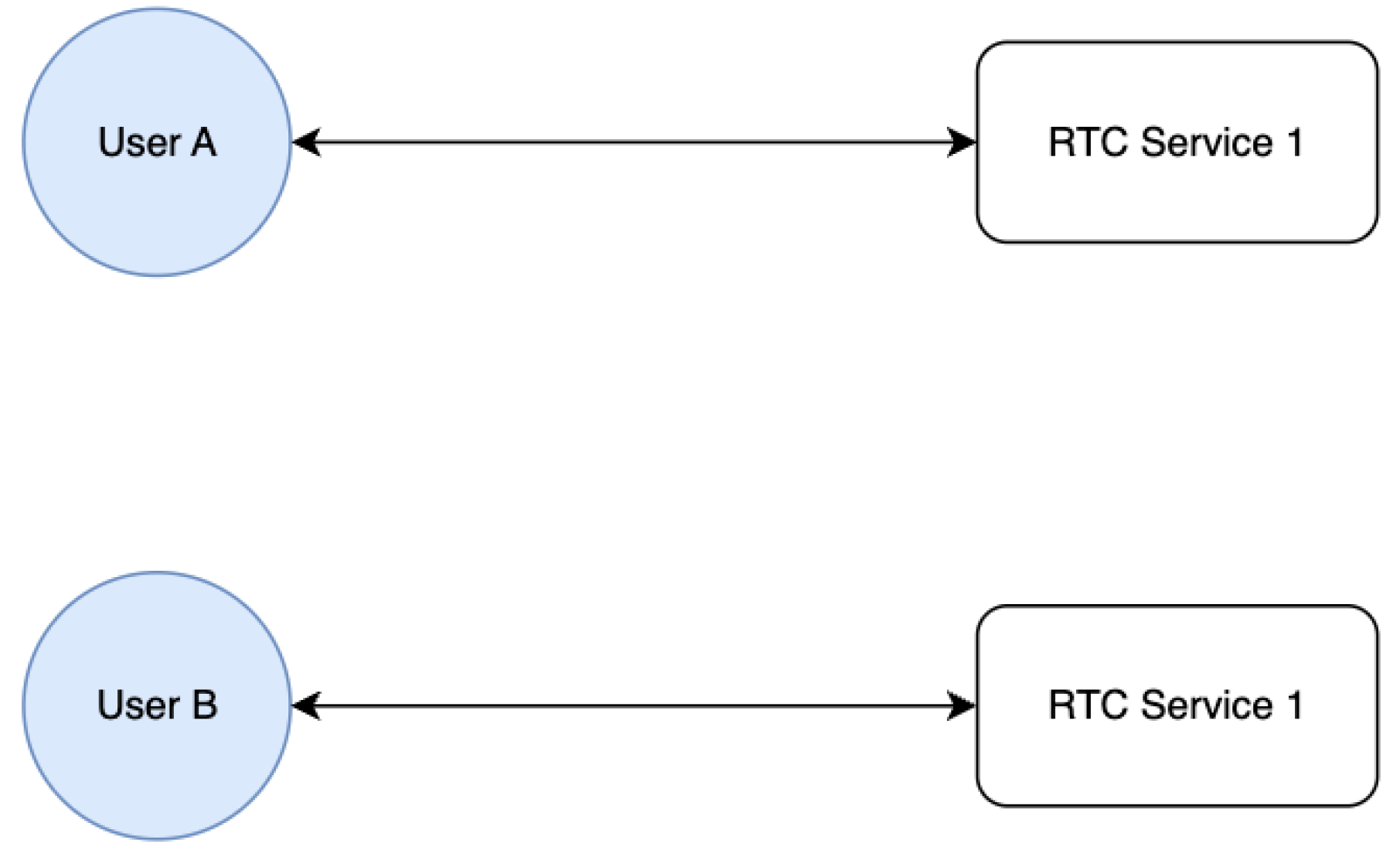
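The difference between the two patterns can be shown in a few lines. In this sketch, an in-process queue stands in for a message broker and `handle` is a hypothetical stand-in for the work done by the receiving service. Both are assumptions made for illustration.

```python
import queue
import threading

def handle(message):
    """Stand-in for the work a downstream service performs."""
    return message.upper()

def call_sync(message):
    # Synchronous: the caller blocks until the result is returned.
    return handle(message)

def start_consumer(inbox, results):
    """Consume messages from the queue until a None sentinel arrives."""
    def run():
        while True:
            message = inbox.get()
            if message is None:
                break
            results.append(handle(message))
    worker = threading.Thread(target=run)
    worker.start()
    return worker

inbox, results = queue.Queue(), []
worker = start_consumer(inbox, results)
for msg in ["created", "updated"]:
    inbox.put(msg)   # Asynchronous: the producer returns immediately.
inbox.put(None)      # Sentinel telling the consumer to stop.
worker.join()        # Only the demo waits here, to inspect the results.
```

In the asynchronous case the producer never observes the result directly. If it needs one, the result has to travel back as another message.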

3.7. Client-Server Communication

- full-duplex – used for bidirectional client-server communication. This type of communication channel is more expensive in comparison with the HTTP protocol but also provides more flexibility for live data streaming. The protocol of choice for this communication type is WebSocket [37]. The protocol provides a persistent, bidirectional communication channel over a single, long-lived connection between a client and server. This enables real-time, low-latency data exchange, making it suitable for interactive applications. Unlike traditional HTTP, WebSocket facilitates full-duplex communication, allowing both sides to send messages independently. The WebSocket protocol operates over the standard ports 80 (HTTP) and 443 (HTTPS) and is supported by most modern web browsers, servers, and frameworks, fostering efficient, real-time communication in web applications.

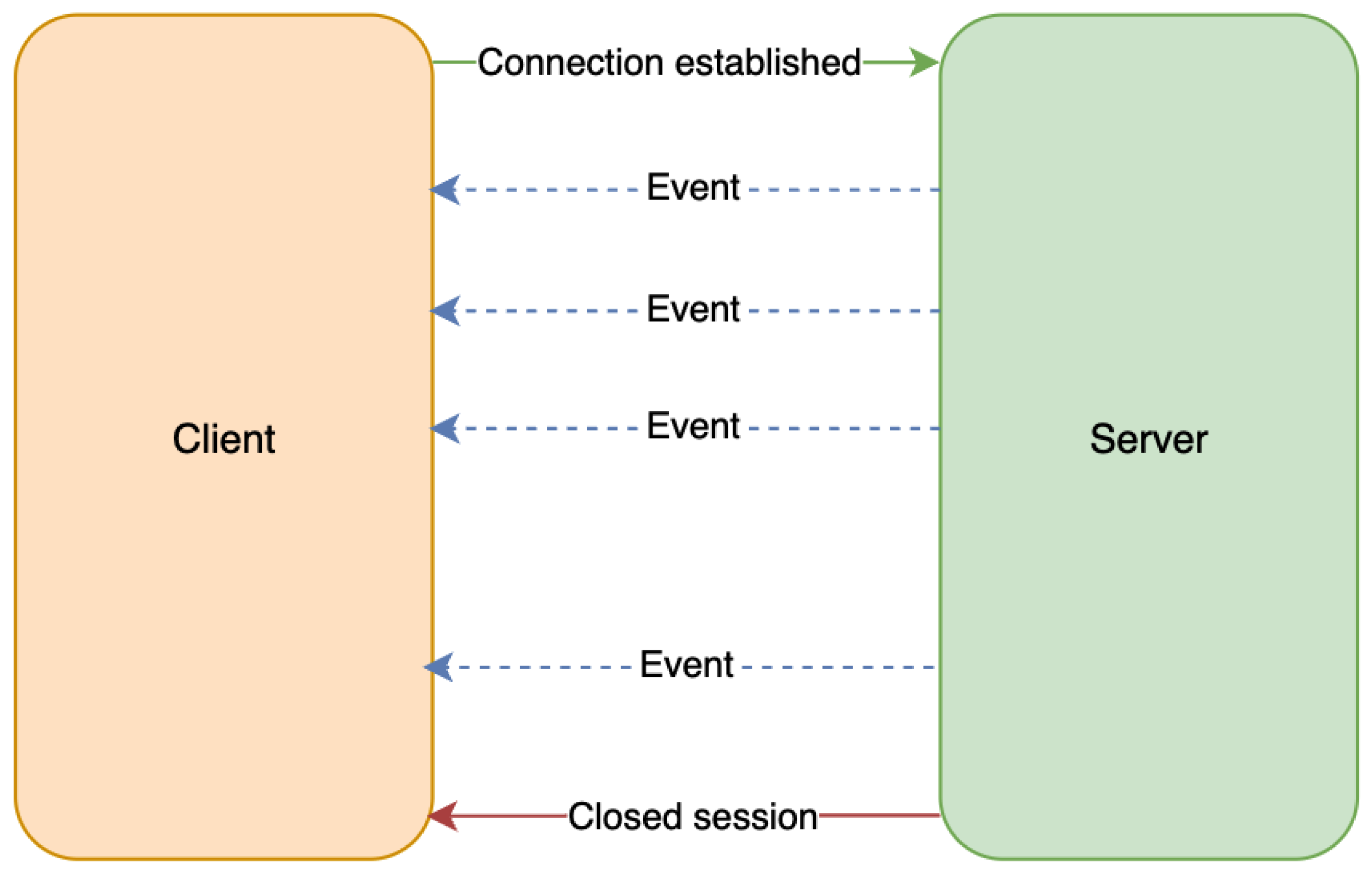

- half-duplex – used here for unidirectional (server-to-client) data streaming. This type of communication can be achieved using SSE (Server-Sent Events), a web technology that enables servers to push real-time updates to web clients over a single HTTP connection [38].
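On the wire, a Server-Sent Events stream is plain text: each event is a group of `field: value` lines terminated by a blank line. The helper below is a minimal sketch of that framing. The function name, the event name, and the payload are illustrative assumptions.

```python
def format_sse(data, event=None, event_id=None):
    """Serialize one message in the text/event-stream wire format."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    # Each line of the payload needs its own "data:" field.
    for part in data.split("\n"):
        lines.append(f"data: {part}")
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"

# Hypothetical notification payload.
frame = format_sse('{"title": "Shared with you"}', event="notification", event_id=7)
```

Because the stream flows only from server to client, any client action (for example, marking a notification as read) still travels over an ordinary HTTP request.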

4. Technologies Stack

4.1. Client-Side

4.2. Server-Side

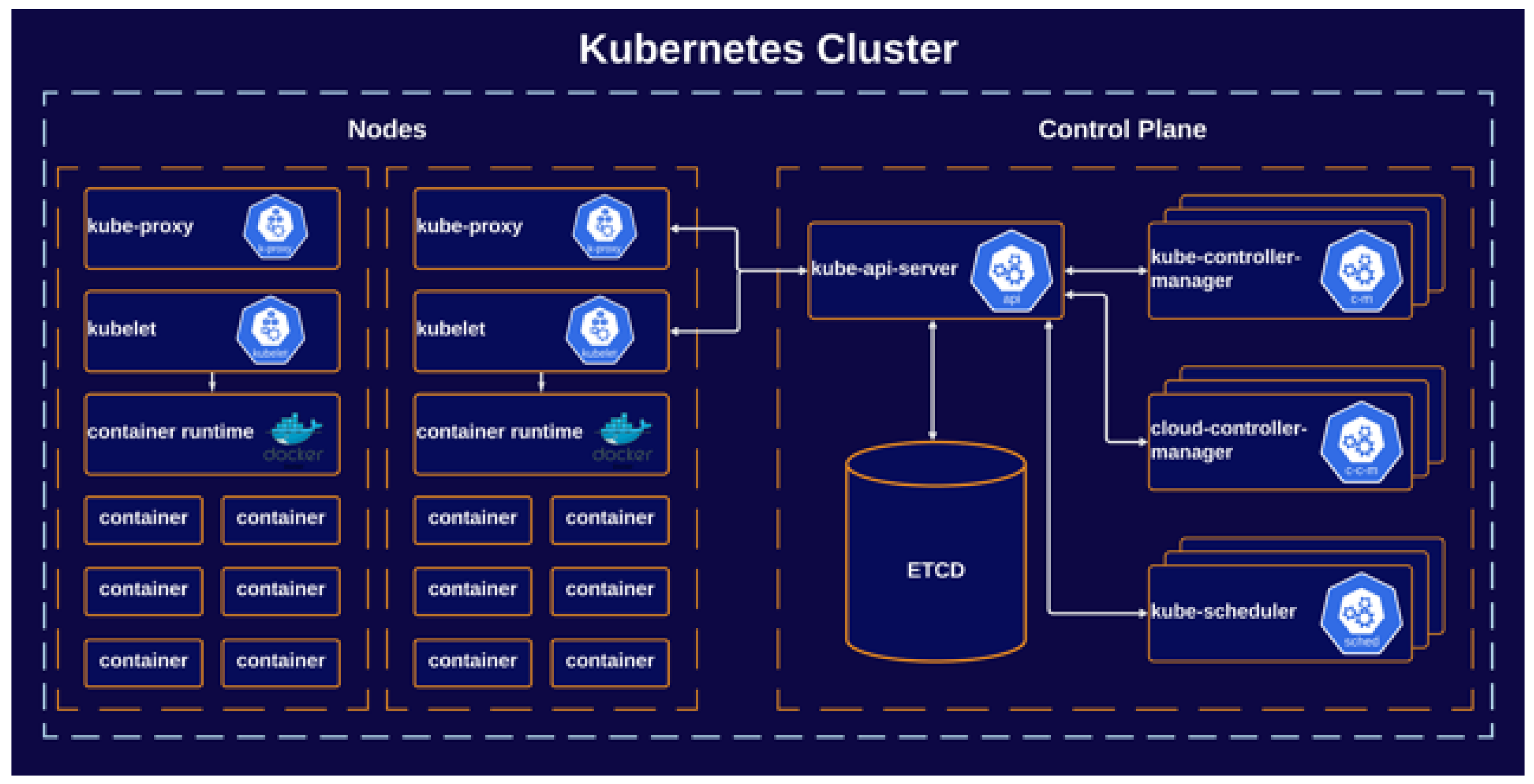

4.3. Infrastructure

- how a certain application should be executed

- how many running instances an application should have

- what should happen when an application instance is subject to increased or decreased user demand

- Auto-Configuration: Spring Boot scans the classpath for libraries and automatically configures beans based on the detected dependencies. This can lead to longer startup times, especially in large applications with numerous dependencies.

- Annotation Processing: Spring Boot heavily relies on annotations for configuration. The process of scanning and processing annotations can contribute to increased startup times, particularly in applications with extensive use of annotations.

- Reflection: Spring Boot uses reflection to dynamically inspect and instantiate classes. This introspection can impact startup performance, especially in applications with deep class hierarchies [47].

- Initialization Overhead: Spring Boot applications may have initialization overhead as they set up various components, such as the Spring Application Context, which contributes to the overall startup time.

- User accounts

- Notifications data

- Document content and metadata

5. System Development

5.1. Apache Kafka Application Setup

- Design components to be as stateless as possible. The software components of a system should serve their purpose without needing an initial setup or pre-preparation of data (e.g., loading information from look-up tables, or building an in-memory cache). Workloads are ephemeral, and the components of a system should be able to be added or removed elastically without producing any side effects.

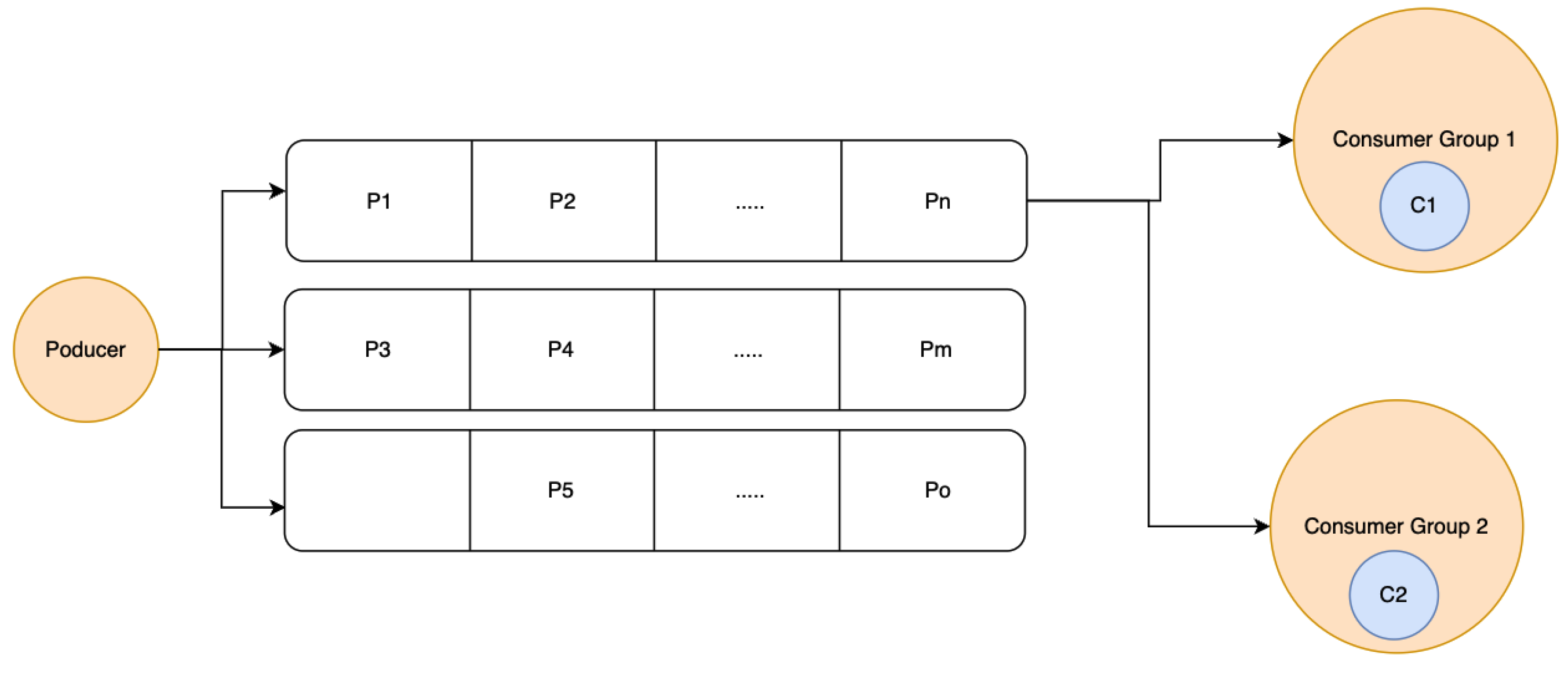

- Build with redundancy in mind. A system is only as strong as its weakest component. Each core feature should be highly available, meaning that business logic must not be delegated to a single worker instance. Core functionality should have backup instances that can handle additional load or replace an unhealthy worker instance.

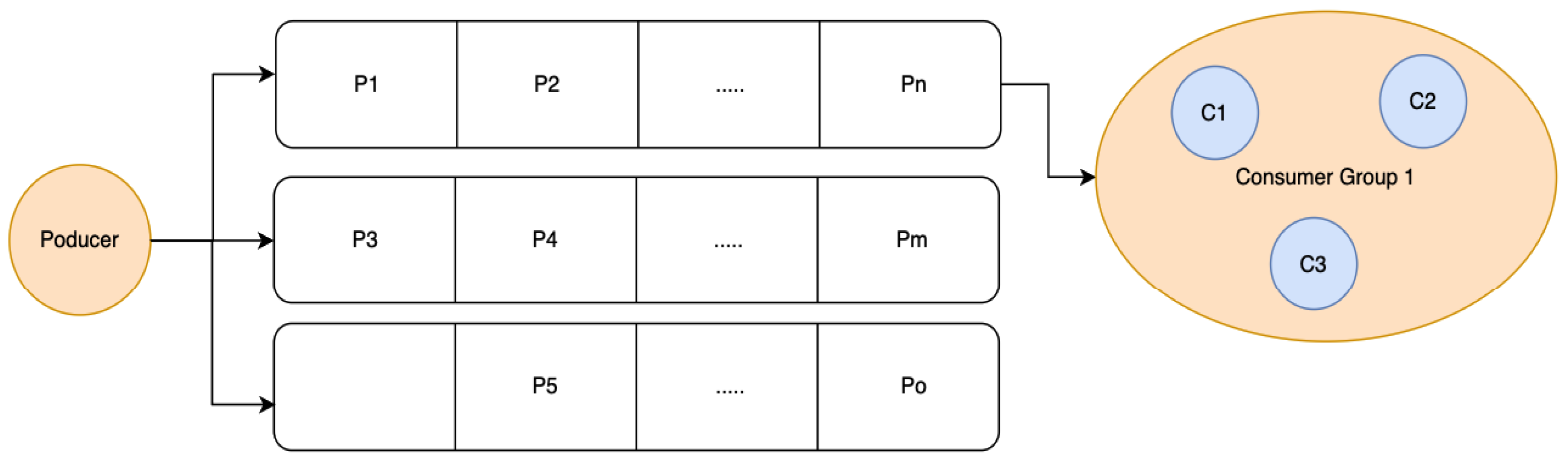

- Create reactive workflows. In high-traffic applications, especially in real-time systems, every millisecond of delay can impact the outcome of an operation. Communication between software components must be asynchronous whenever possible. Software components must be able to listen to a common stream of events and react whenever work is delegated. When a result is available, it should be queued in a stream and distributed among the available workers, thus avoiding bottlenecks and the creation of a single point of failure.

5.2. Microservices Interface

- /subscribe – The user will subscribe to an events stream to receive notifications in real-time while the user has active sessions.

- /unread – API endpoint for receiving notifications stored while the user was offline.

- /read-all – API endpoint for marking all the notifications as “read”.

- /read – API endpoint for receiving the older notifications.
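The four notification endpoints can be illustrated with an in-memory store. This is a sketch under stated assumptions, not the service's implementation: the class and method names are hypothetical, and treating a notification delivered live as already read is an assumption made for the example.

```python
from dataclasses import dataclass, field

@dataclass
class Notification:
    text: str
    read: bool = False

@dataclass
class NotificationStore:
    """In-memory stand-in for the storage behind the notification endpoints."""
    items: dict = field(default_factory=dict)        # user -> list of notifications
    subscribers: dict = field(default_factory=dict)  # user -> live delivery callback

    def subscribe(self, user, callback):             # /subscribe
        self.subscribers[user] = callback

    def publish(self, user, text):
        note = Notification(text)
        self.items.setdefault(user, []).append(note)
        if user in self.subscribers:
            self.subscribers[user](text)  # Live delivery while a session is active.
            note.read = True              # Assumption: live delivery counts as read.

    def unread(self, user):                          # /unread
        return [n.text for n in self.items.get(user, []) if not n.read]

    def read_all(self, user):                        # /read-all
        for n in self.items.get(user, []):
            n.read = True

    def read(self, user):                            # /read
        return [n.text for n in self.items.get(user, []) if n.read]

store = NotificationStore()
store.publish("ana", "doc shared")       # User is offline: stored as unread.
received = []
store.subscribe("ana", received.append)
store.publish("ana", "doc edited")       # User is subscribed: pushed live.
```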

- Creating the actual document

- Getting user-owned and shared documents

- Getting individual document details

- Deleting a document

- Adding a user as a shared user to the document access list

- Removing a user as a shared user
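The document operations listed above, together with the owner-only rule for management actions, can be sketched with an in-memory service. All names here are hypothetical, and the choice to let shared users read details but not share or delete is an assumption consistent with the owner restriction described in the technical requirements.

```python
import itertools

class DocumentService:
    """In-memory sketch of the document operations and their access rules."""

    def __init__(self):
        self._docs = {}
        self._ids = itertools.count(1)

    def create(self, owner, title):
        doc_id = next(self._ids)
        self._docs[doc_id] = {"id": doc_id, "title": title, "owner": owner, "shared": set()}
        return doc_id

    def list_for(self, user):
        """Documents the user owns or that were shared with the user."""
        return [d["id"] for d in self._docs.values()
                if d["owner"] == user or user in d["shared"]]

    def details(self, user, doc_id):
        doc = self._docs[doc_id]
        if user != doc["owner"] and user not in doc["shared"]:
            raise PermissionError("no access to this document")
        return doc

    def _owned(self, user, doc_id):
        doc = self._docs[doc_id]
        if doc["owner"] != user:
            raise PermissionError("only the owner may perform this operation")
        return doc

    def share(self, owner, doc_id, user):
        self._owned(owner, doc_id)["shared"].add(user)

    def unshare(self, owner, doc_id, user):
        self._owned(owner, doc_id)["shared"].discard(user)

    def delete(self, owner, doc_id):
        self._owned(owner, doc_id)
        del self._docs[doc_id]
```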

- provide routing to the designated microservice of a request

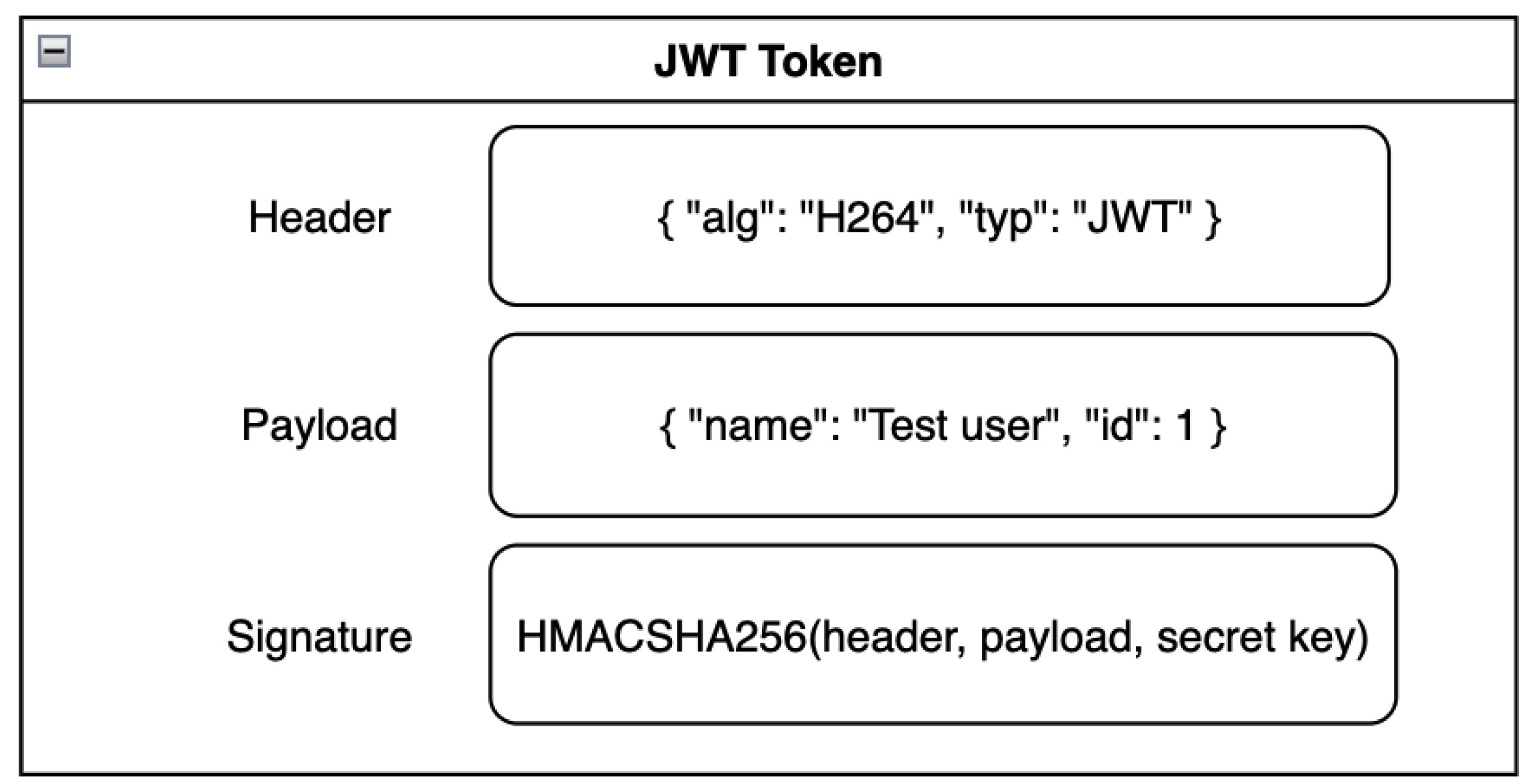

- provide a security layer with the help of the Auth microservice

- provide CORS protection

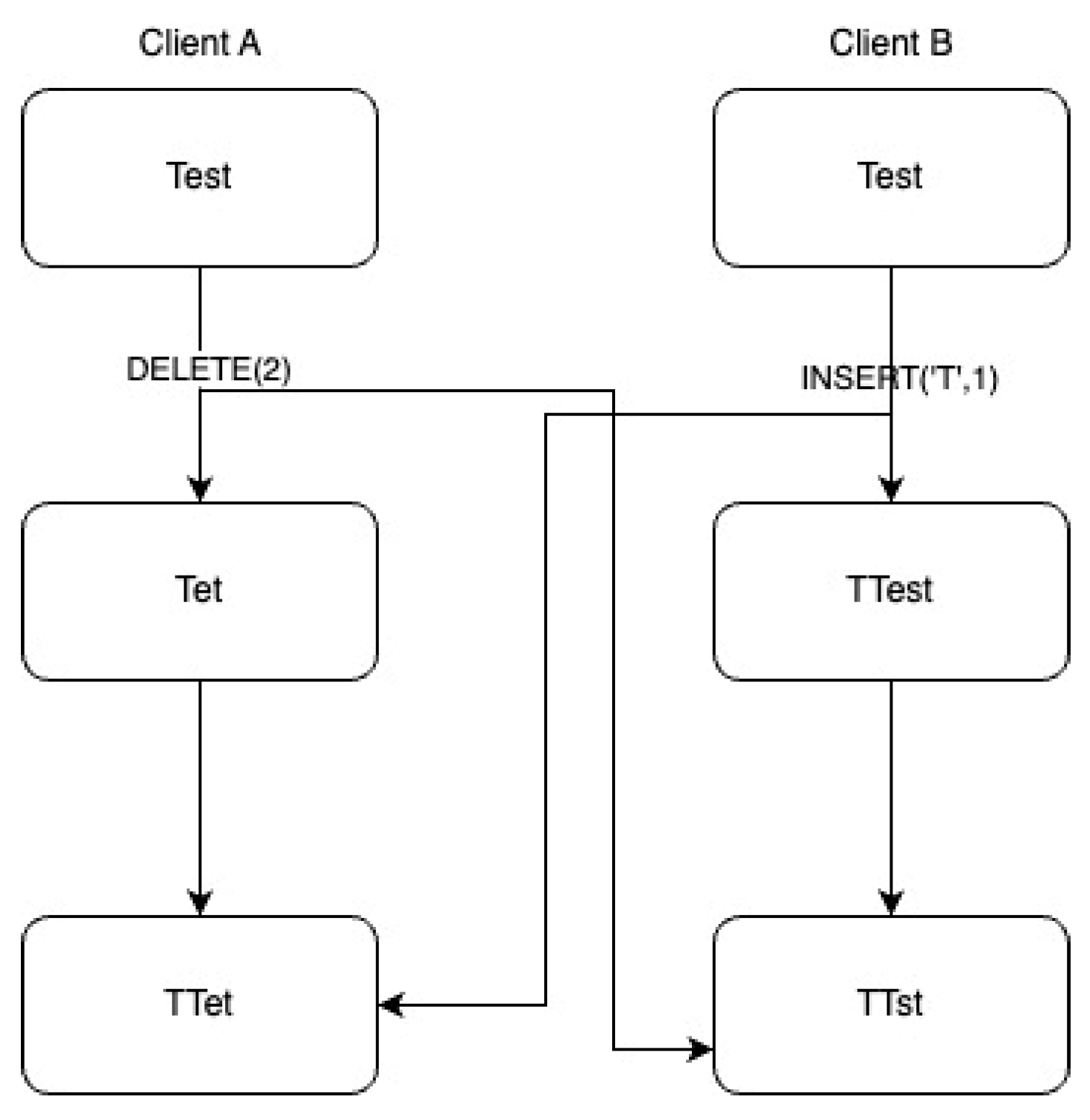

5.3. CRDT

- value: the value synchronized between the peers

- state: the metadata required by the peers to agree on the same incoming update

- merge functionality: offers a customizable way of handling the state mutation based on the remote state of the peer

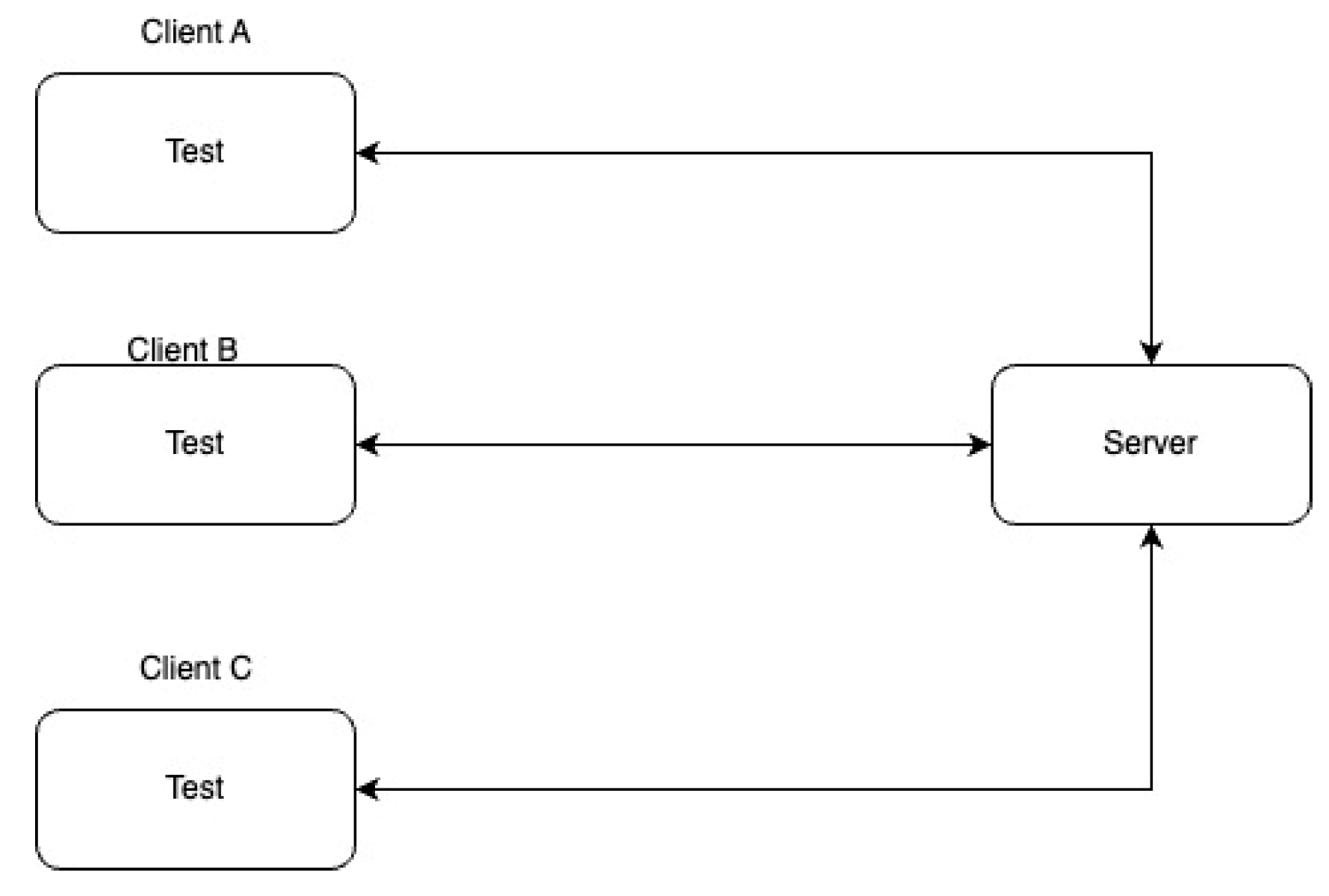
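The three parts named above (value, state, and merge) can be seen in one of the simplest CRDTs, a last-writer-wins register. The sketch below is illustrative only. It is not necessarily the CRDT used by the system, and the field names are assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LwwRegister:
    """Last-writer-wins register: a value plus the state peers use to order updates."""
    value: str
    timestamp: int   # Logical or wall-clock time of the write.
    peer_id: str     # Tie-breaker when two writes share a timestamp.

    @property
    def state(self):
        return (self.timestamp, self.peer_id)

    def merge(self, remote):
        """Keep whichever write has the greater (timestamp, peer_id) pair."""
        return remote if remote.state > self.state else self

local = LwwRegister("Hello", timestamp=5, peer_id="a")
remote = LwwRegister("Hello!", timestamp=7, peer_id="b")
merged = local.merge(remote)
```

Because `merge` is commutative, associative, and idempotent, peers converge on the same value regardless of the order in which they receive updates.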

- A client generates a change event after editing a document.

- A real-time microservice, depending on its availability, receives the change event and forwards it to the Document Content microservice for persistent storage.

- Once the data is successfully stored, the editing event is broadcast to all active instances.

- All recipient instances then push the relevant data to their connected clients through open WebSocket connections.
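The four steps above can be traced with in-memory stand-ins. In this sketch, the class names are hypothetical, a plain list plays the role of the broadcast channel between instances, and each client "socket" is just a list that collects pushed events.

```python
class ContentStore:
    """Stand-in for the Document Content microservice."""

    def __init__(self):
        self.events = []

    def persist(self, event):
        self.events.append(event)
        return True

class RealtimeInstance:
    """Stand-in for one real-time microservice instance with connected clients."""

    def __init__(self, name, store, bus):
        self.name, self.store, self.bus = name, store, bus
        self.clients = {}   # client id -> list acting as that client's socket
        bus.append(self)    # Register this instance for broadcasts.

    def connect(self, client_id):
        self.clients[client_id] = []
        return self.clients[client_id]

    def on_change(self, event):
        # Persist first; broadcast only after storage succeeded.
        if self.store.persist(event):
            for instance in self.bus:
                instance.push(event)

    def push(self, event):
        # Forward the event to every client connected to this instance.
        for socket in self.clients.values():
            socket.append(event)

store, bus = ContentStore(), []
rt_a = RealtimeInstance("rt-a", store, bus)
rt_b = RealtimeInstance("rt-b", store, bus)
alice, bob = rt_a.connect("alice"), rt_b.connect("bob")
event = {"doc": 1, "op": "insert", "char": "x"}
rt_a.on_change(event)   # Alice's edit arrives at the instance she is connected to.
```

Persisting before broadcasting means no client ever sees an edit that was not stored, at the cost of adding the storage latency to every update.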

- a timestamp for further time-based operations and checks on past events

- a way to identify the original creator of the message

- an ordinal to keep track of incremental messages

- 1 reserved bit set to 0.

- 41 bits used for the UNIX epoch representation. With 41 bits, the maximum usable epoch value is 2^41, which covers roughly 69.7 years from the starting date if the timestamp is counted in milliseconds.

- 10 bits for the running instance count. The system can support 2^10 = 1024 running nodes dispatching document editing-related messages.

- 12 bits for the ordinal, allowing 2^12 = 4096 items to be generated on each epoch for each running instance.
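The bit layout above fits in a single 64-bit integer. The sketch below packs and unpacks such an identifier. It assumes the fields are laid out from the most significant bit in the order listed (reserved, epoch, instance, ordinal), and the function names are hypothetical.

```python
TIMESTAMP_BITS, INSTANCE_BITS, ORDINAL_BITS = 41, 10, 12

def pack_id(timestamp, instance, ordinal):
    """Pack the three fields into one 64-bit integer; the top (reserved) bit stays 0."""
    if not 0 <= timestamp < (1 << TIMESTAMP_BITS):
        raise ValueError("timestamp does not fit in 41 bits")
    if not 0 <= instance < (1 << INSTANCE_BITS):
        raise ValueError("instance does not fit in 10 bits")
    if not 0 <= ordinal < (1 << ORDINAL_BITS):
        raise ValueError("ordinal does not fit in 12 bits")
    return (timestamp << (INSTANCE_BITS + ORDINAL_BITS)) | (instance << ORDINAL_BITS) | ordinal

def unpack_id(value):
    """Recover (timestamp, instance, ordinal) from a packed identifier."""
    ordinal = value & ((1 << ORDINAL_BITS) - 1)
    instance = (value >> ORDINAL_BITS) & ((1 << INSTANCE_BITS) - 1)
    timestamp = value >> (INSTANCE_BITS + ORDINAL_BITS)
    return timestamp, instance, ordinal

# Two messages from the same instance within the same epoch tick.
first = pack_id(timestamp=1000, instance=3, ordinal=0)
second = pack_id(timestamp=1000, instance=3, ordinal=1)
```

Placing the timestamp in the highest bits makes identifiers sort by creation time first, then by instance, then by ordinal, so a plain integer comparison orders the messages.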

5.4. Client-Side Implementation

6. Architecture Validation

- Storage - disaster protection

- Resource consumption

- Throughput

6.1. Storage—Disaster Protection

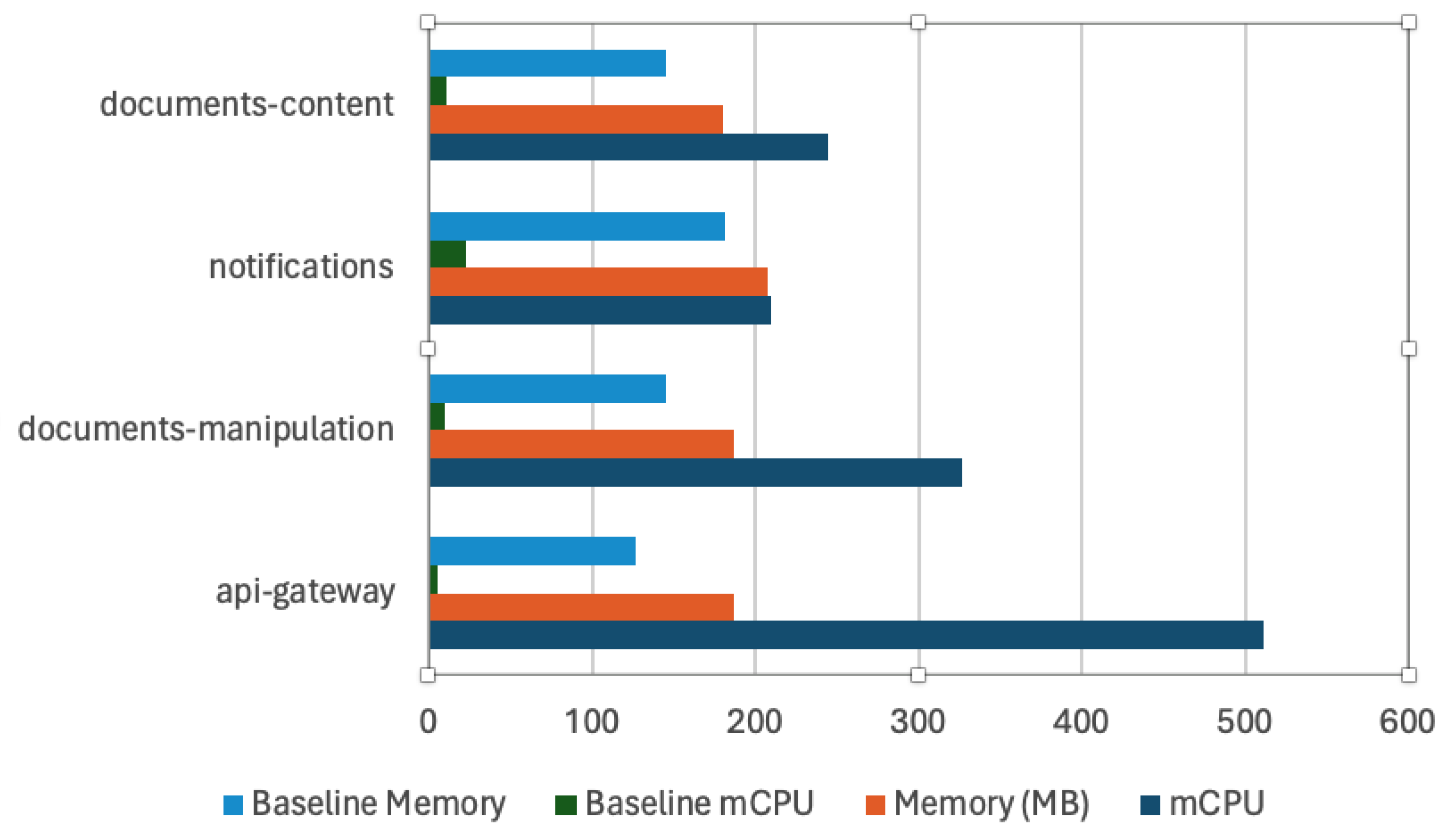

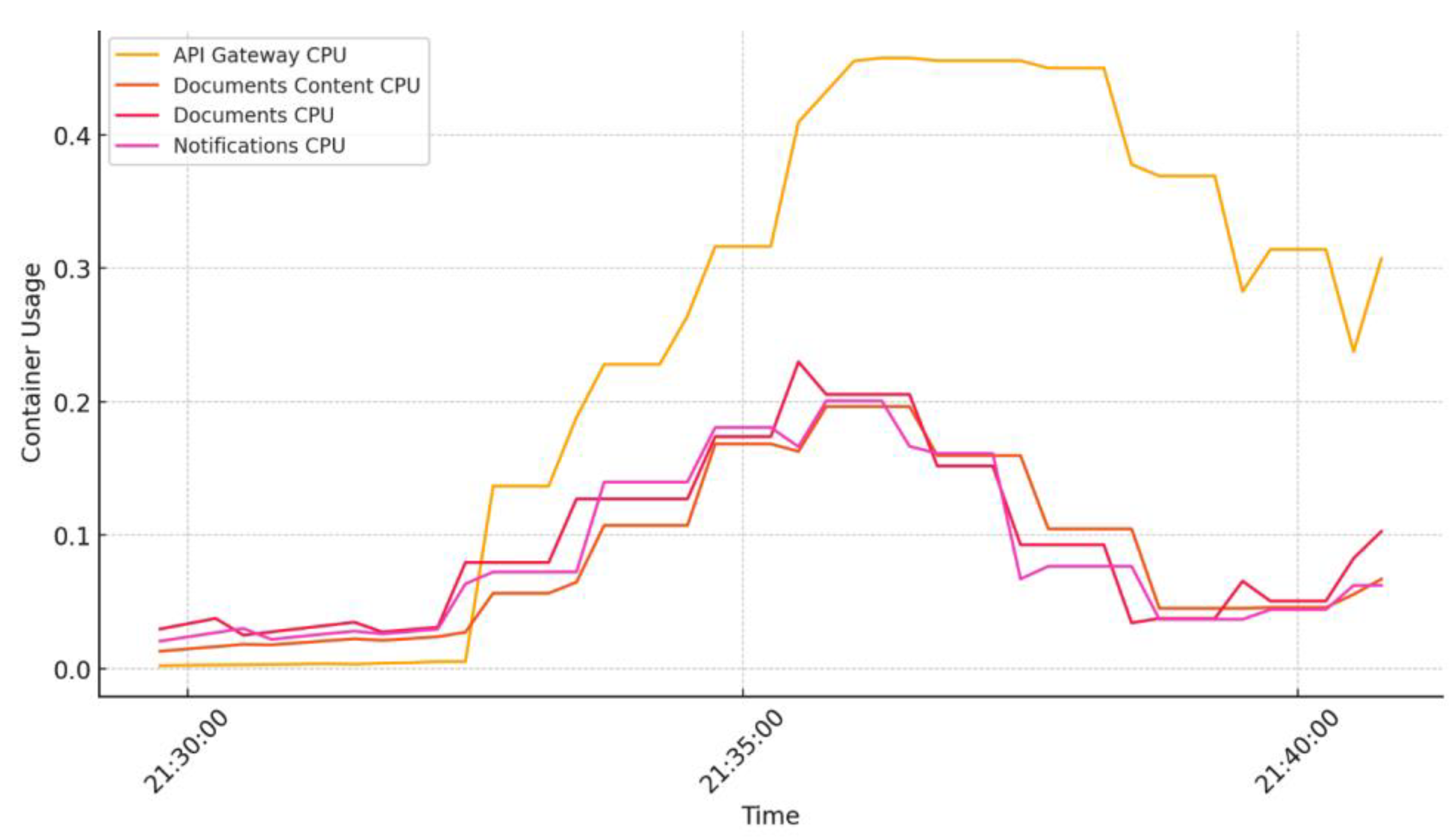

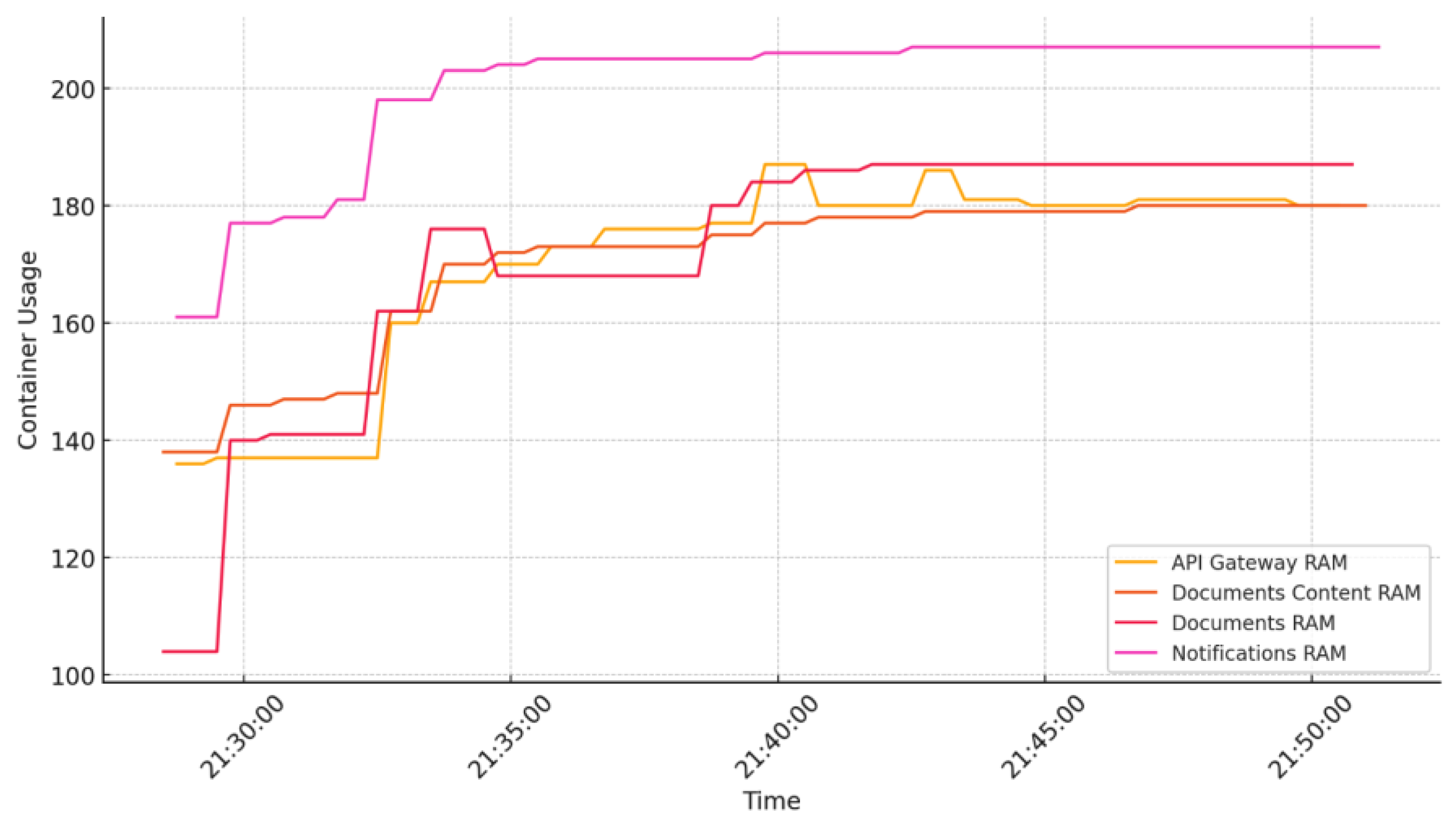

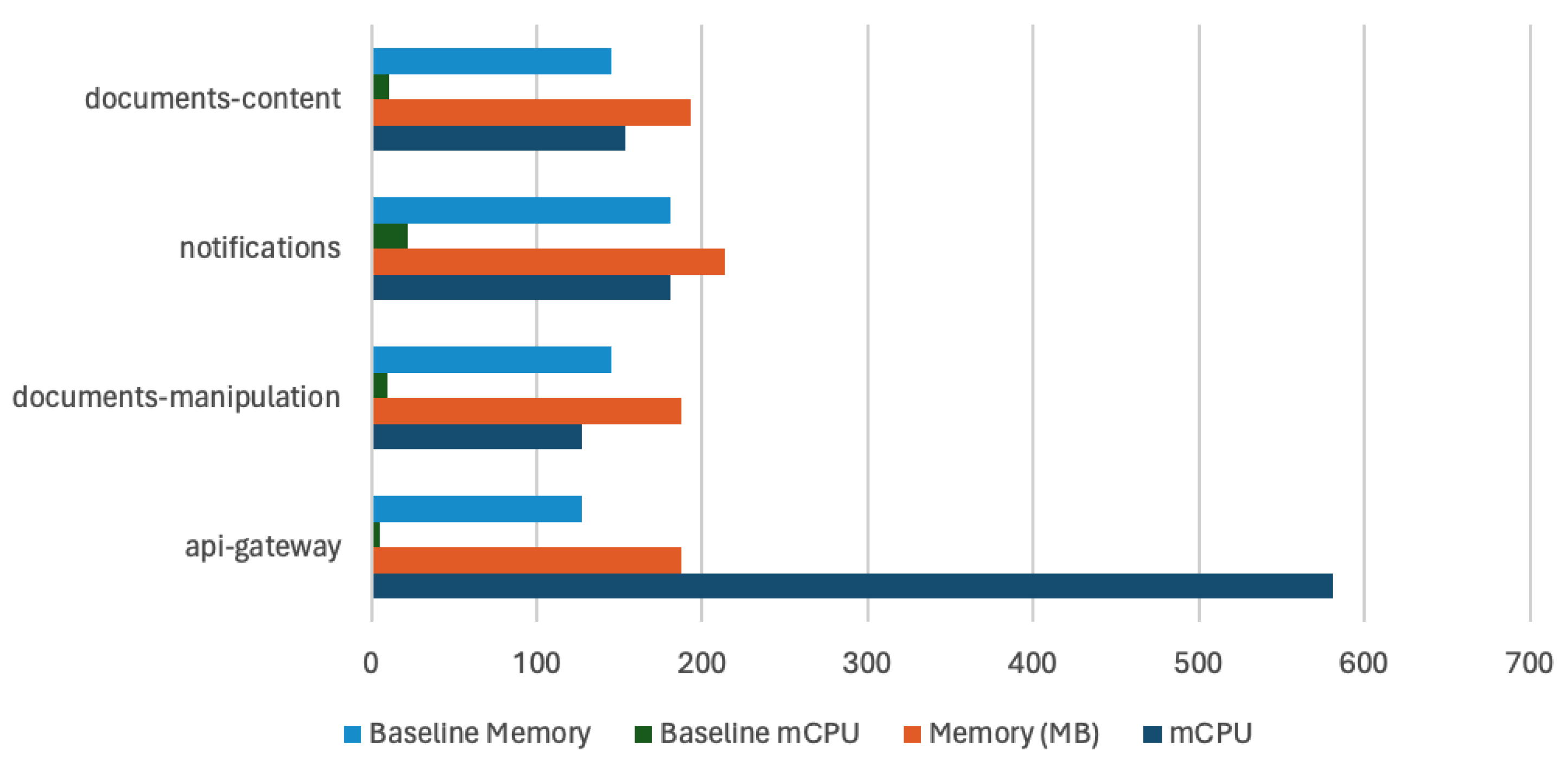

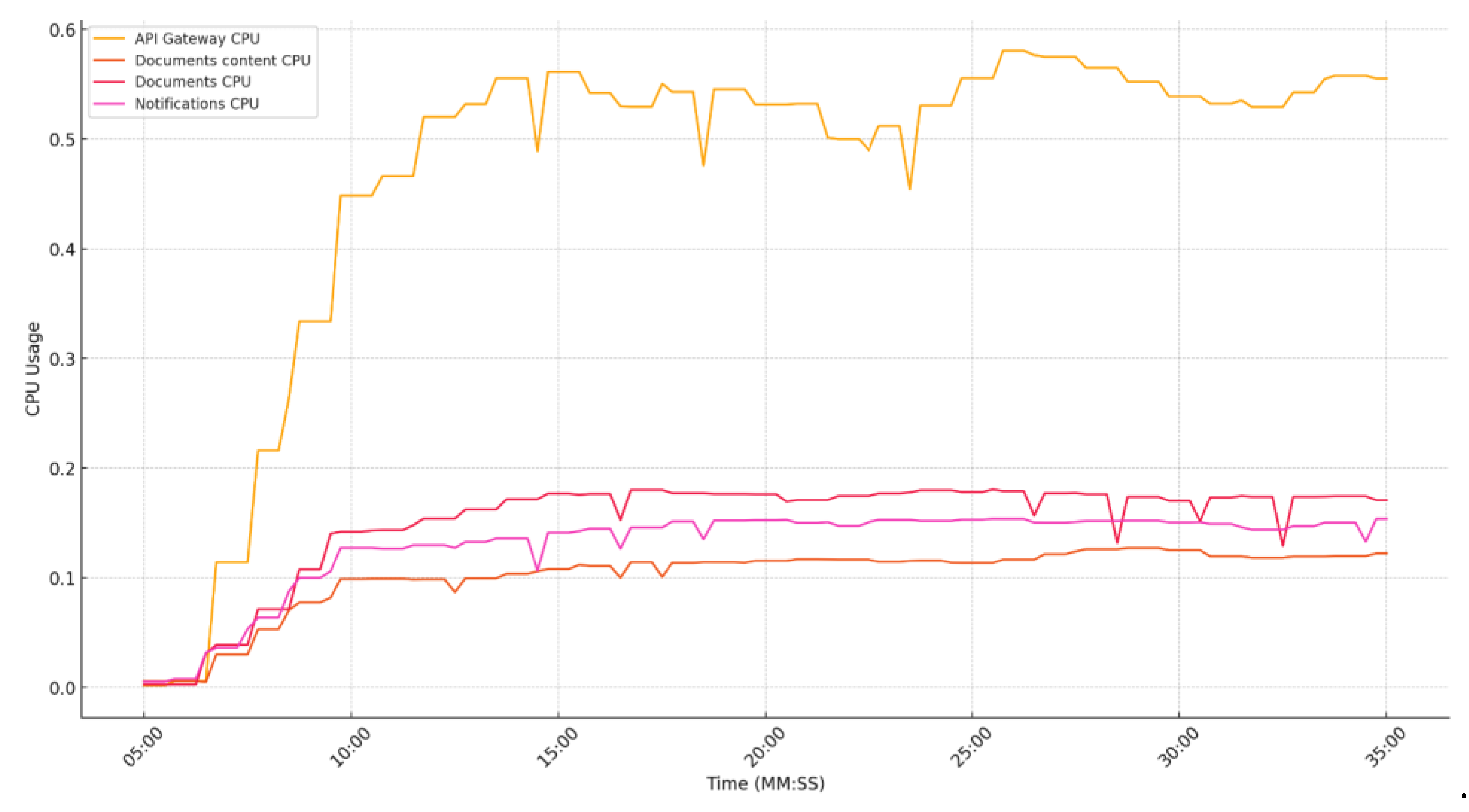

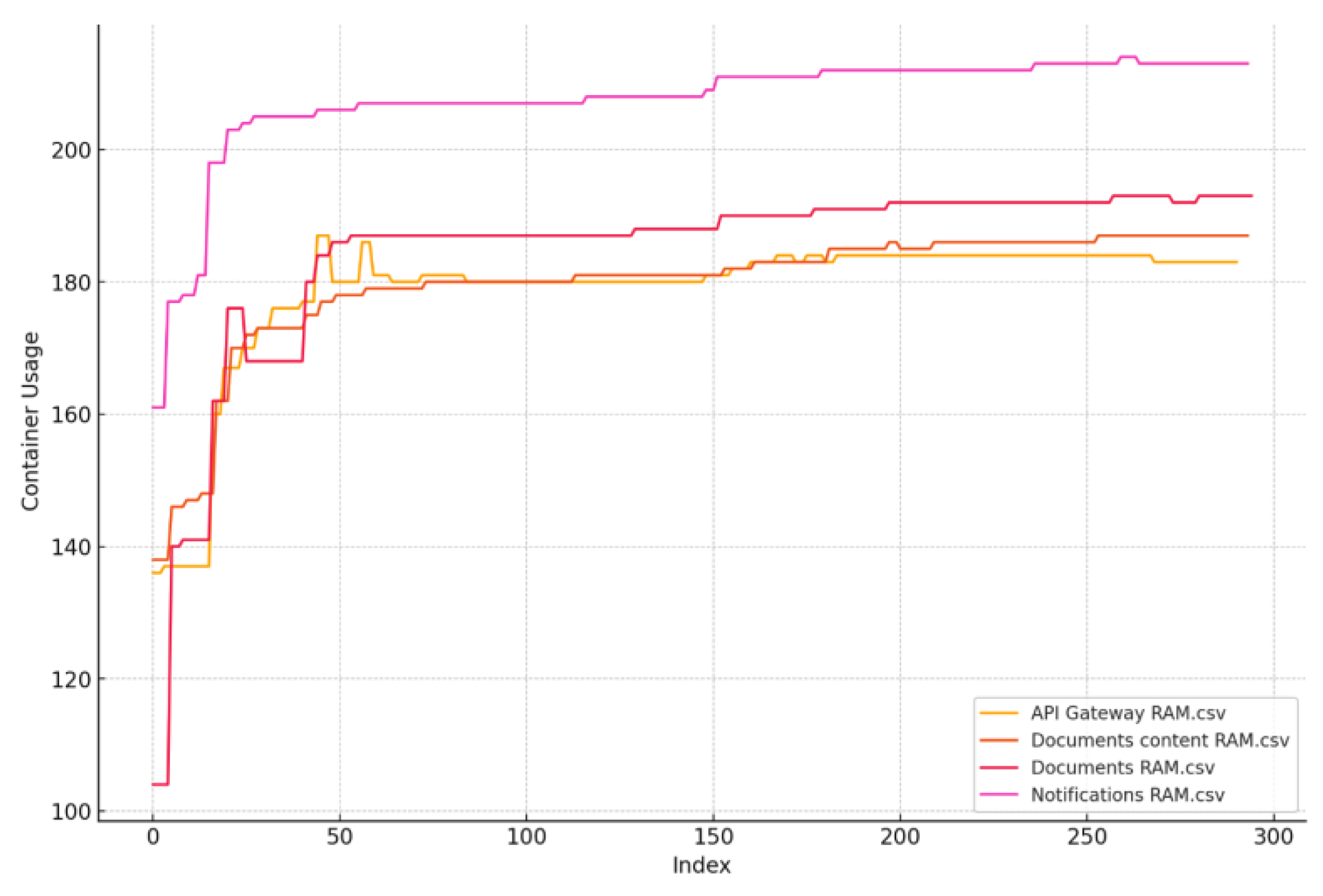

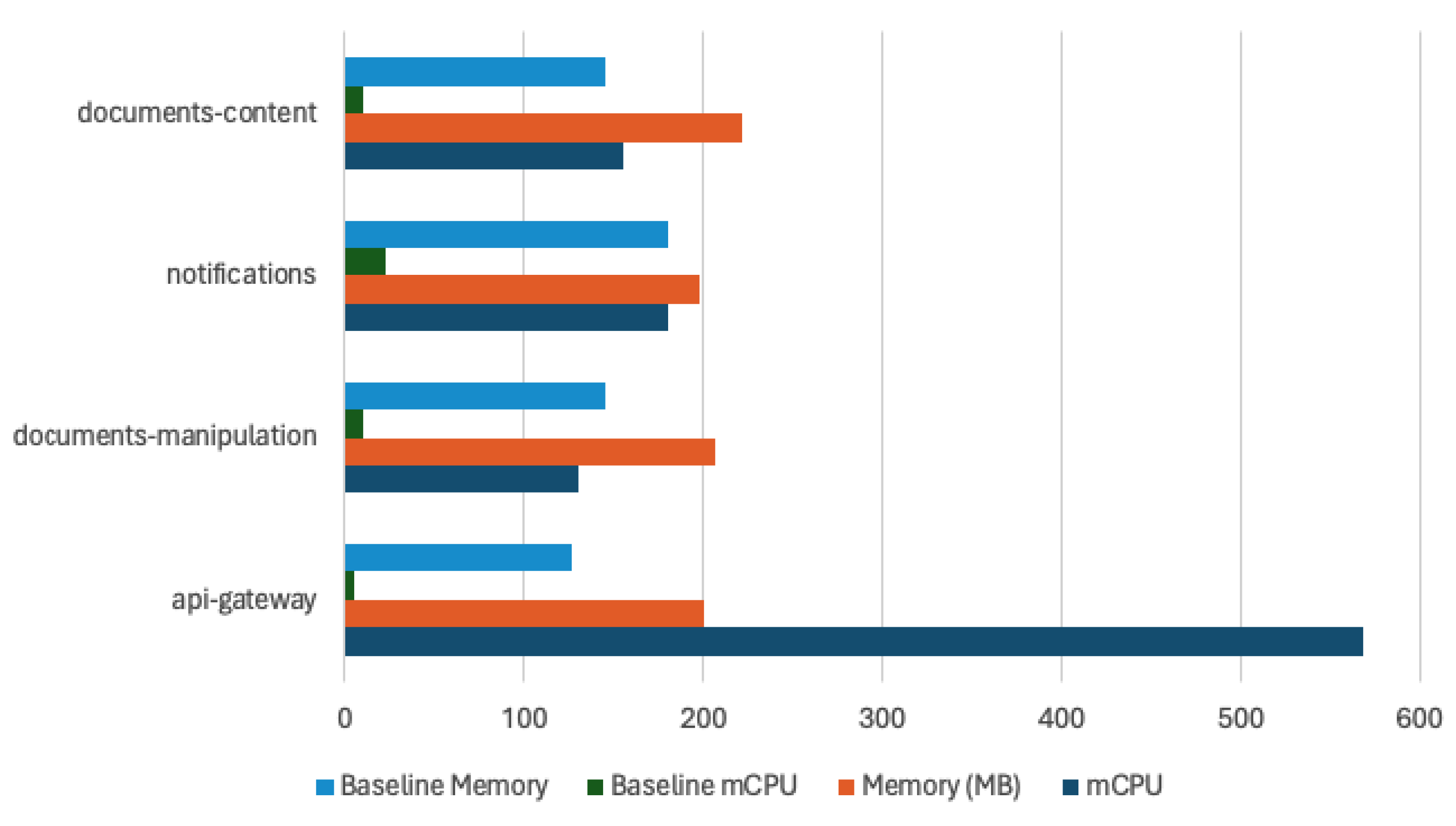

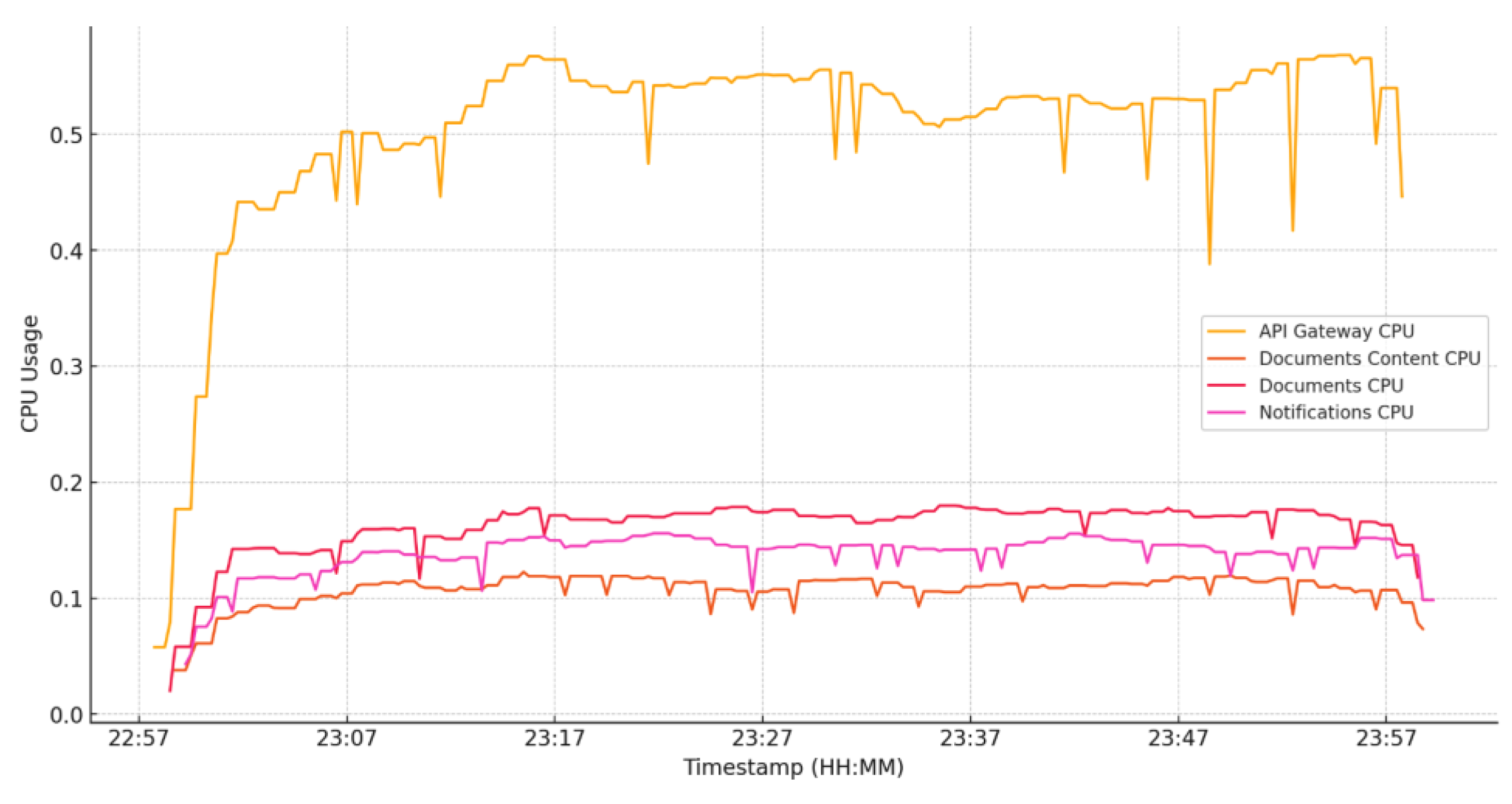

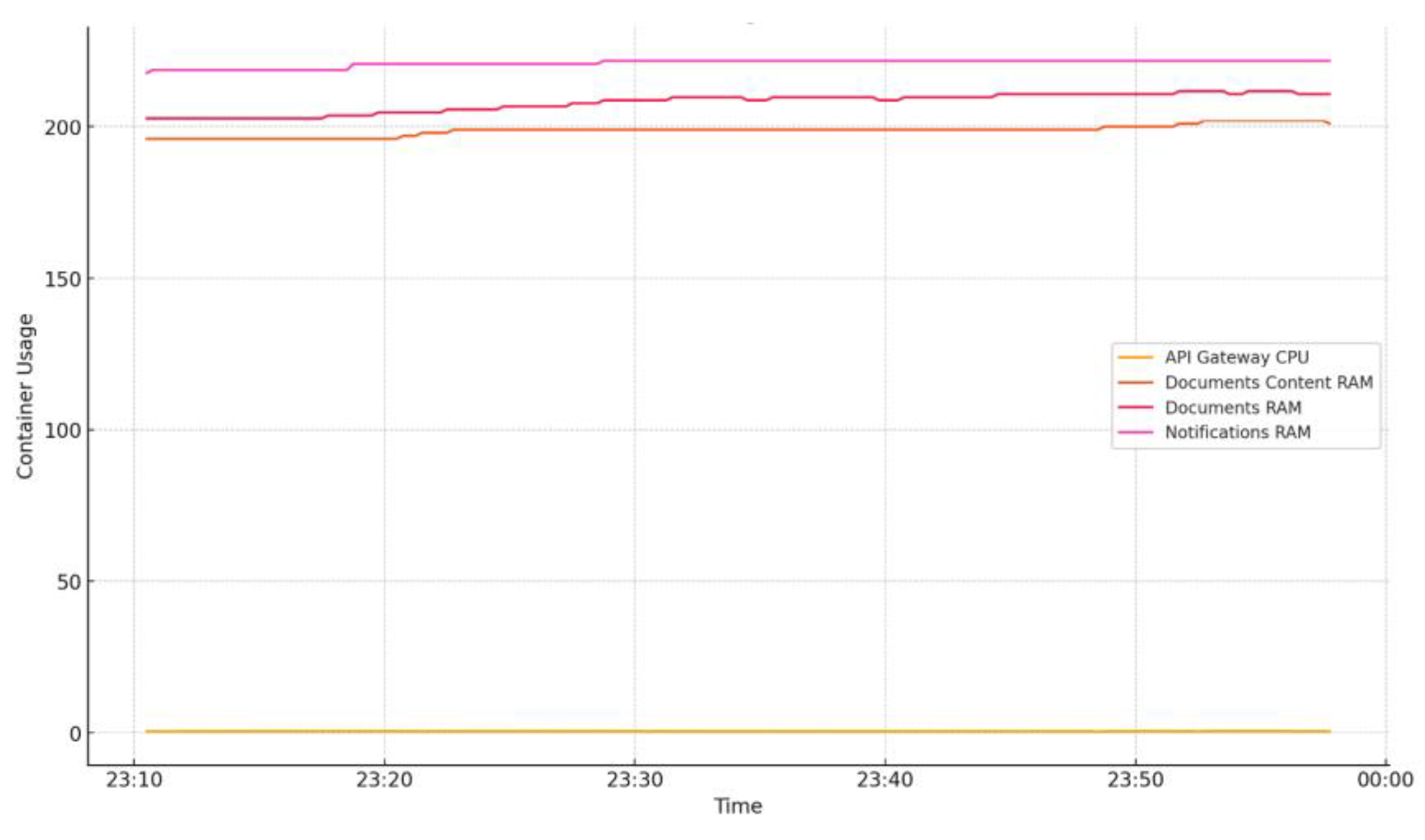

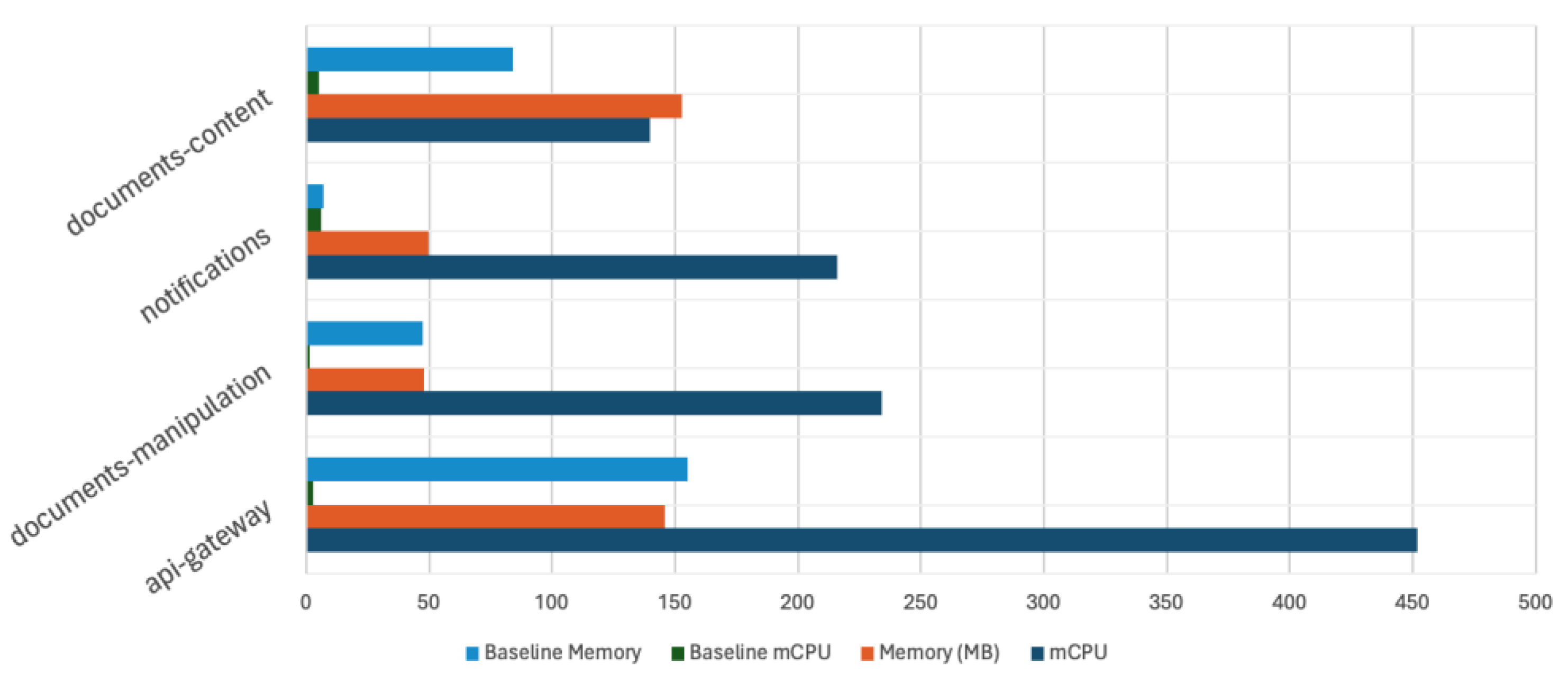

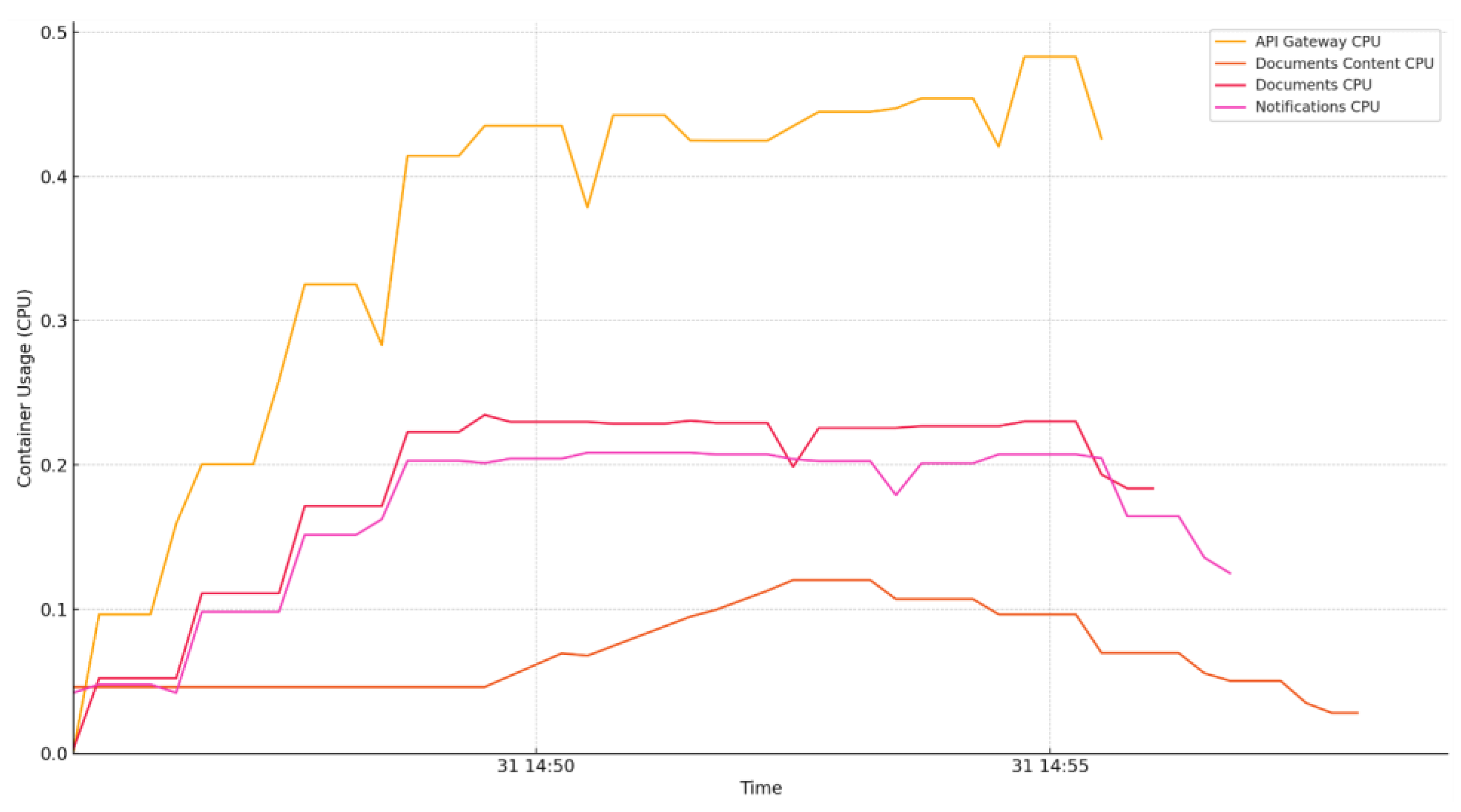

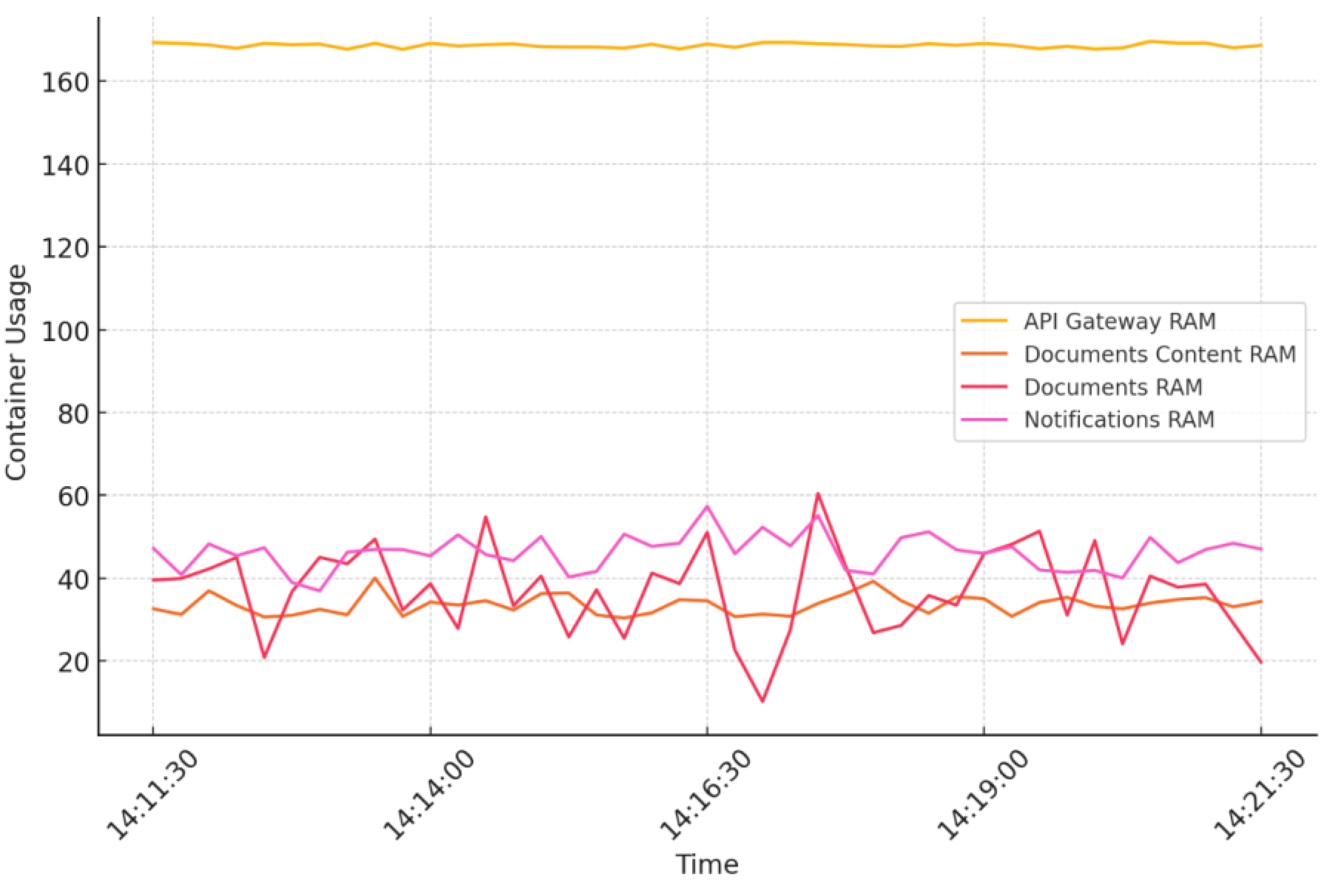

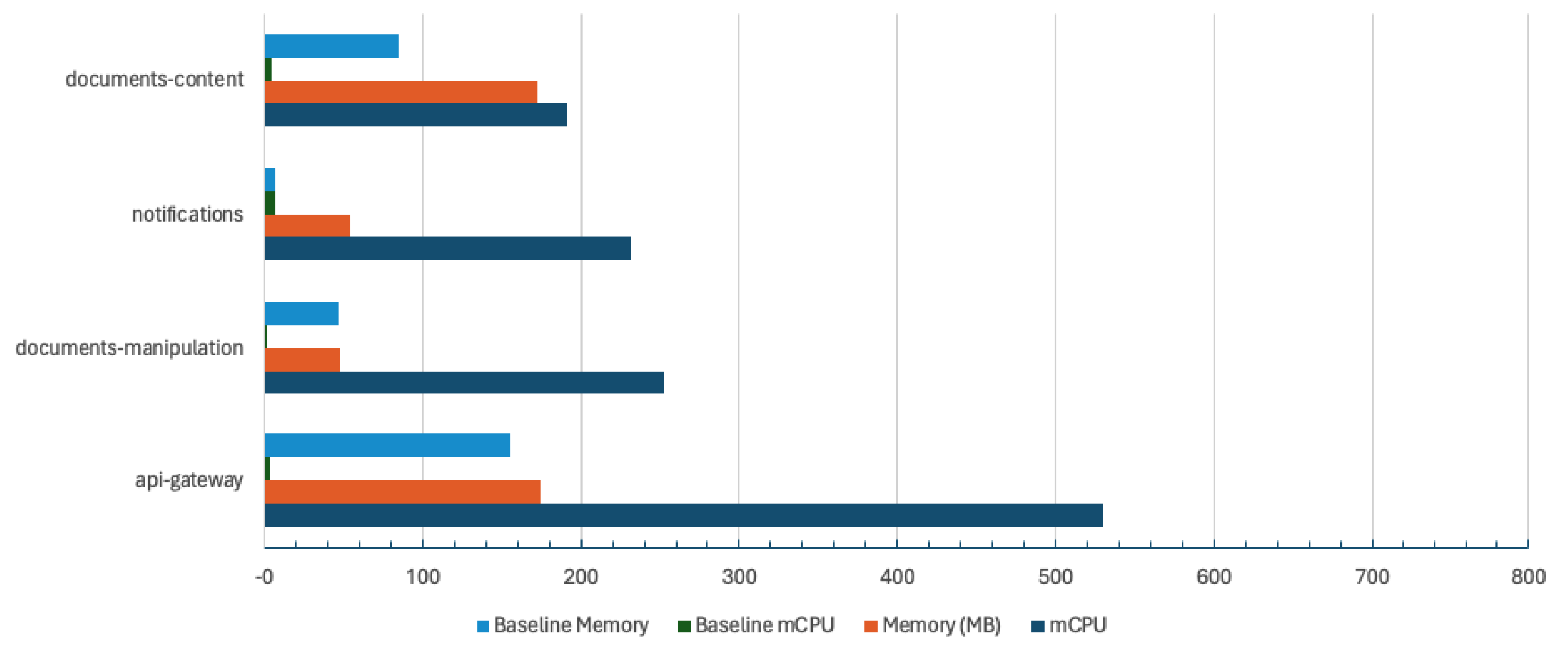

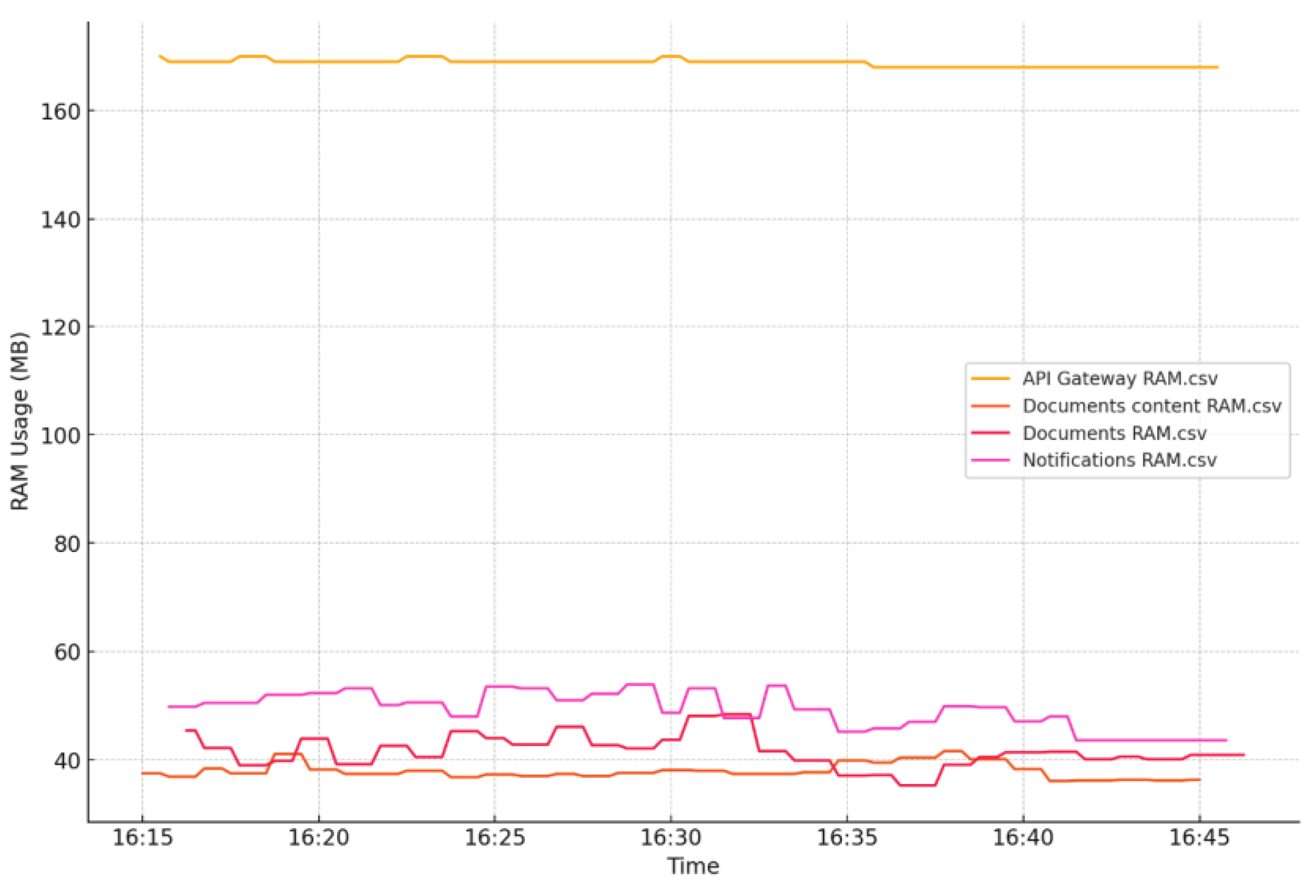

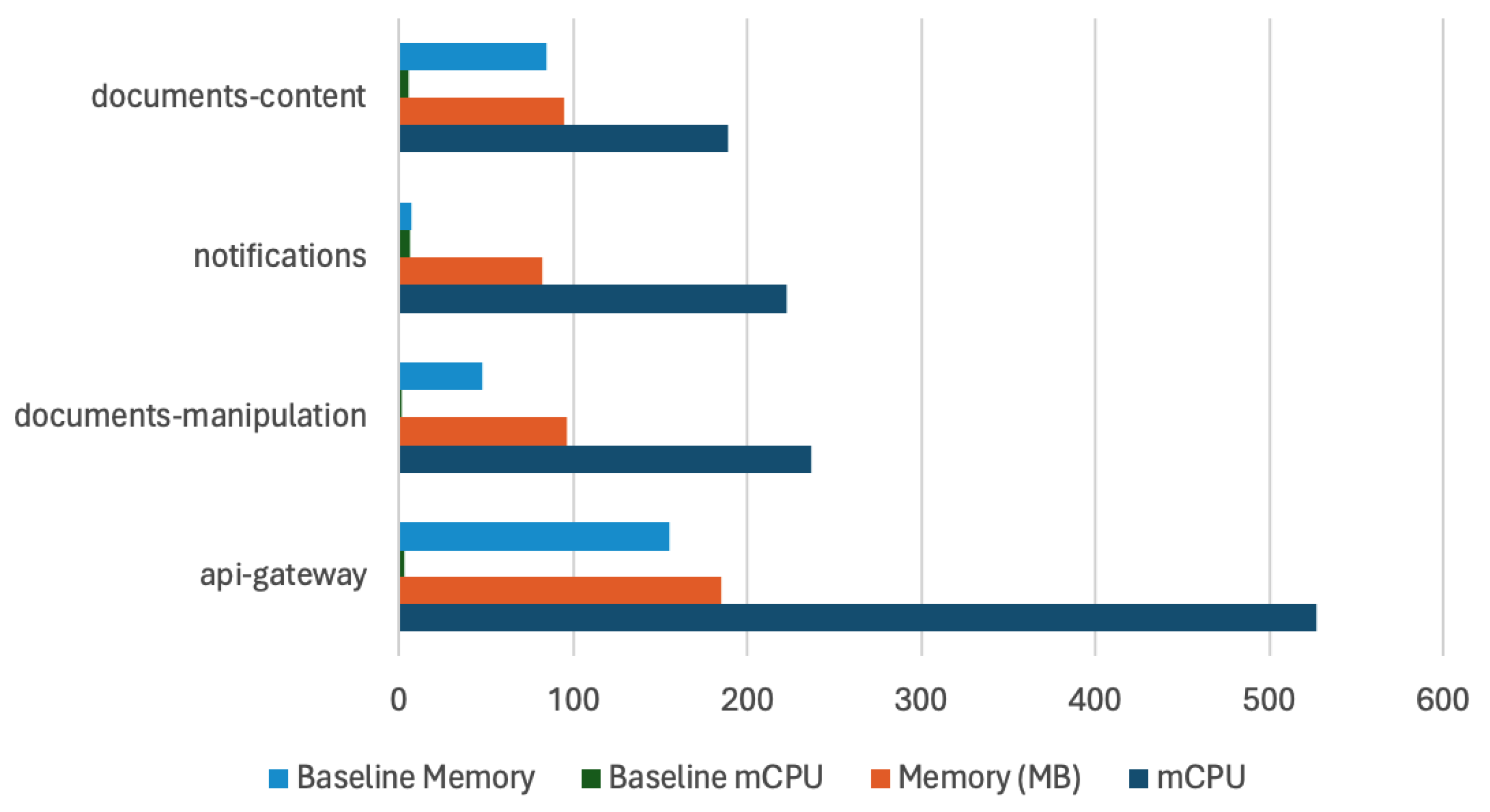

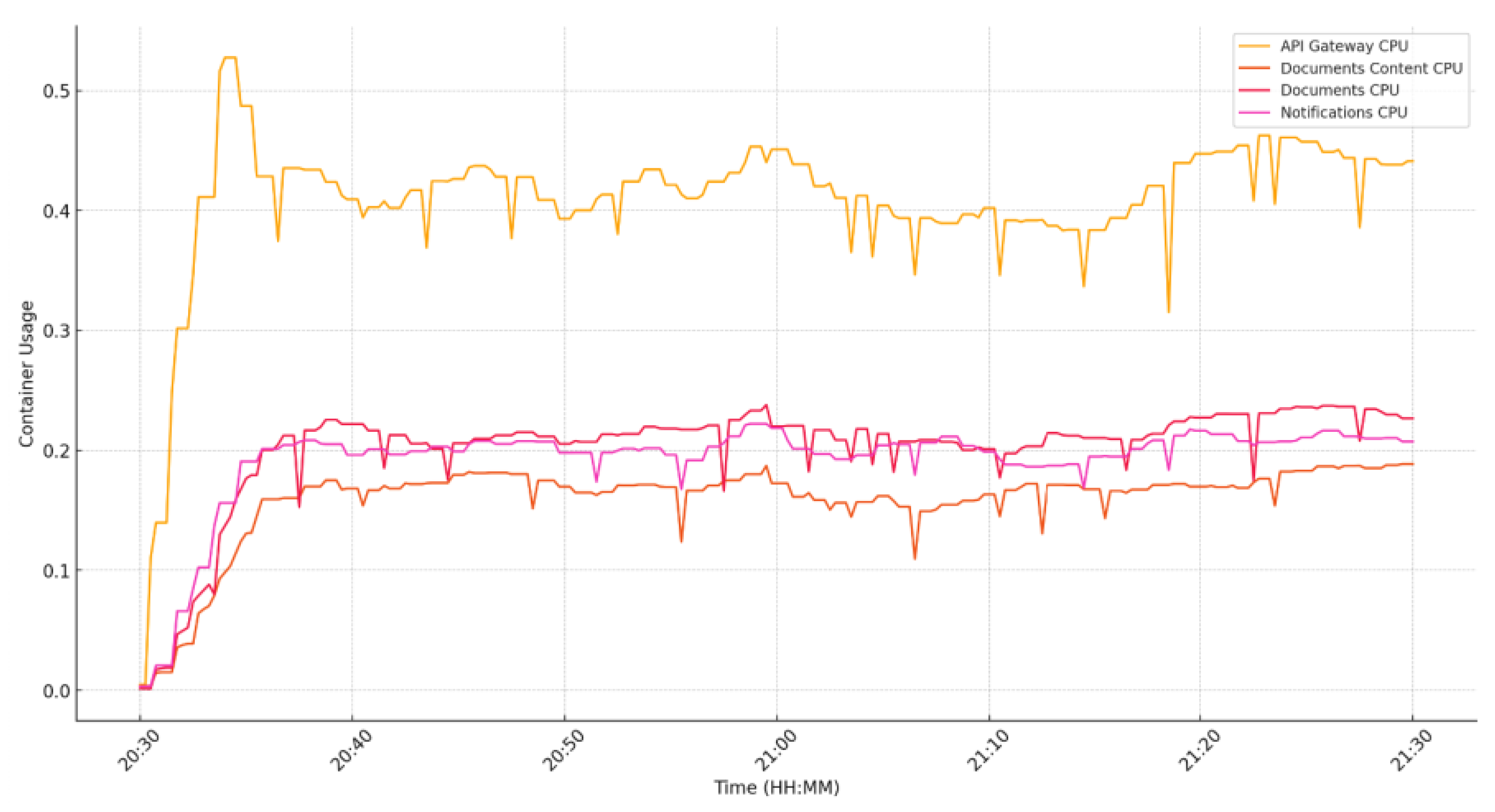

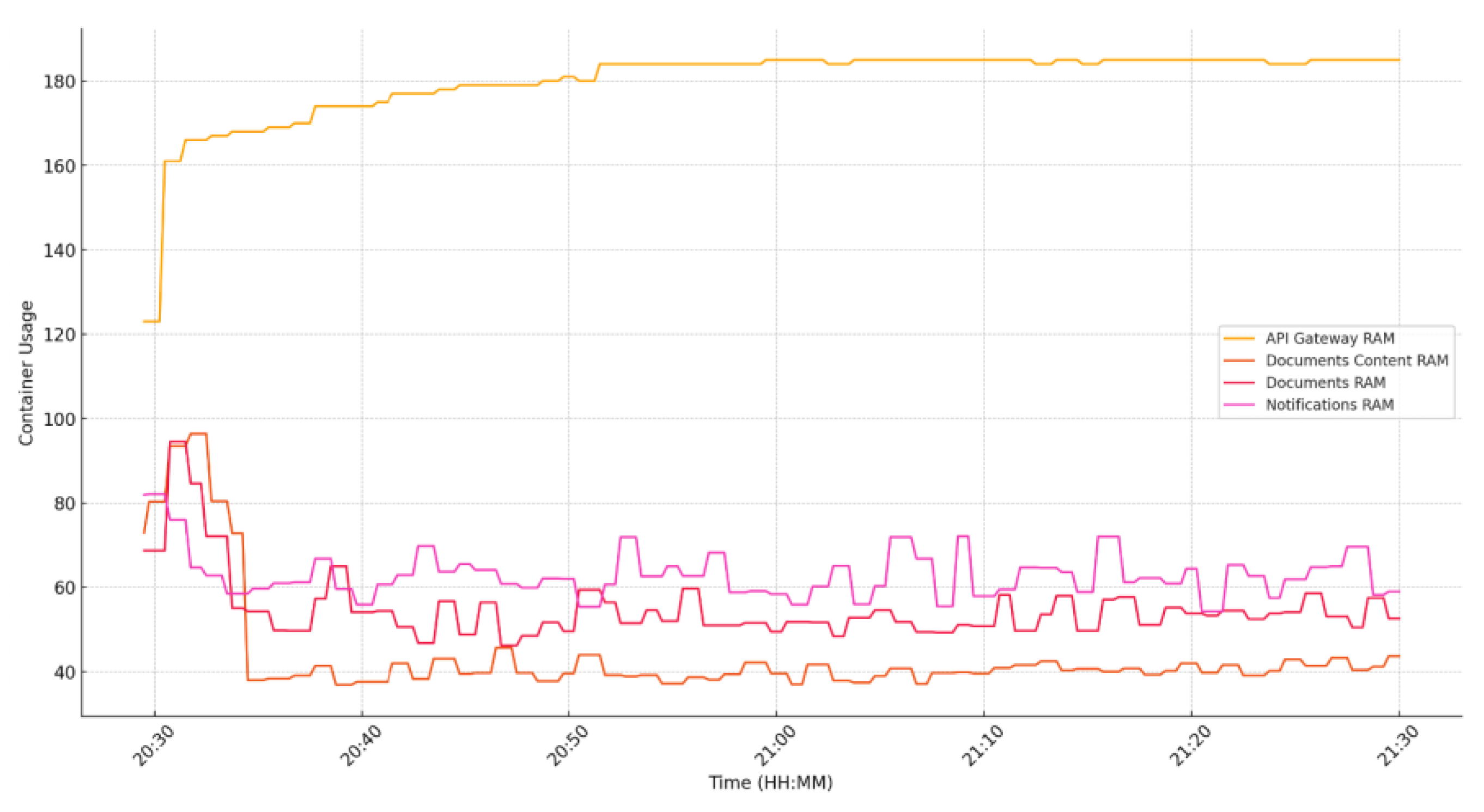

6.2. Resource Consumption

- 10 minutes of interactions between 20 users

- 30 minutes of interactions between 50 users

- 1 hour of interactions between 100 users

- synchronously, representing the read, create, update, and delete operations

- asynchronously, representing the long-lived connections exchanging actions between the client and the server

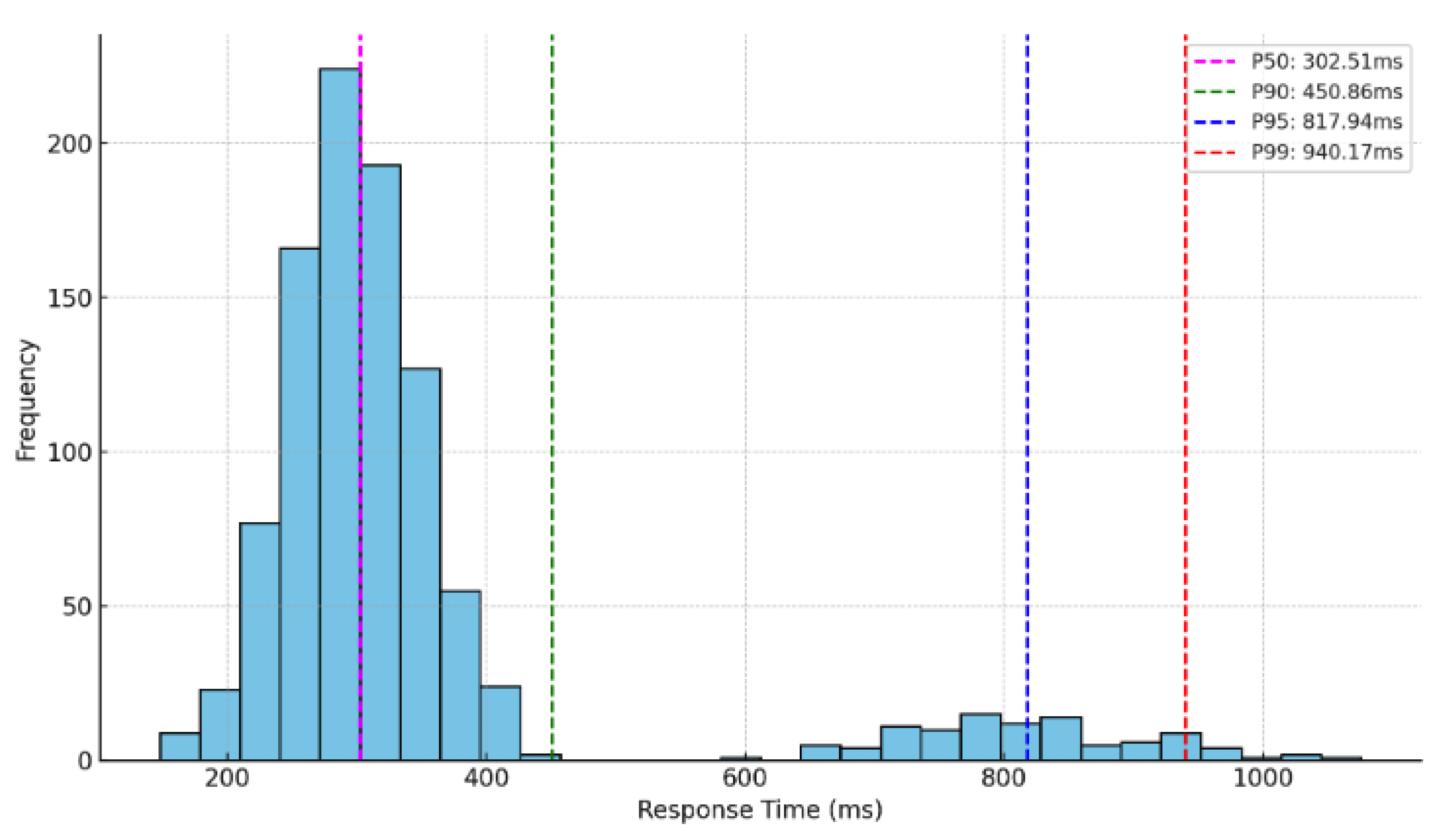

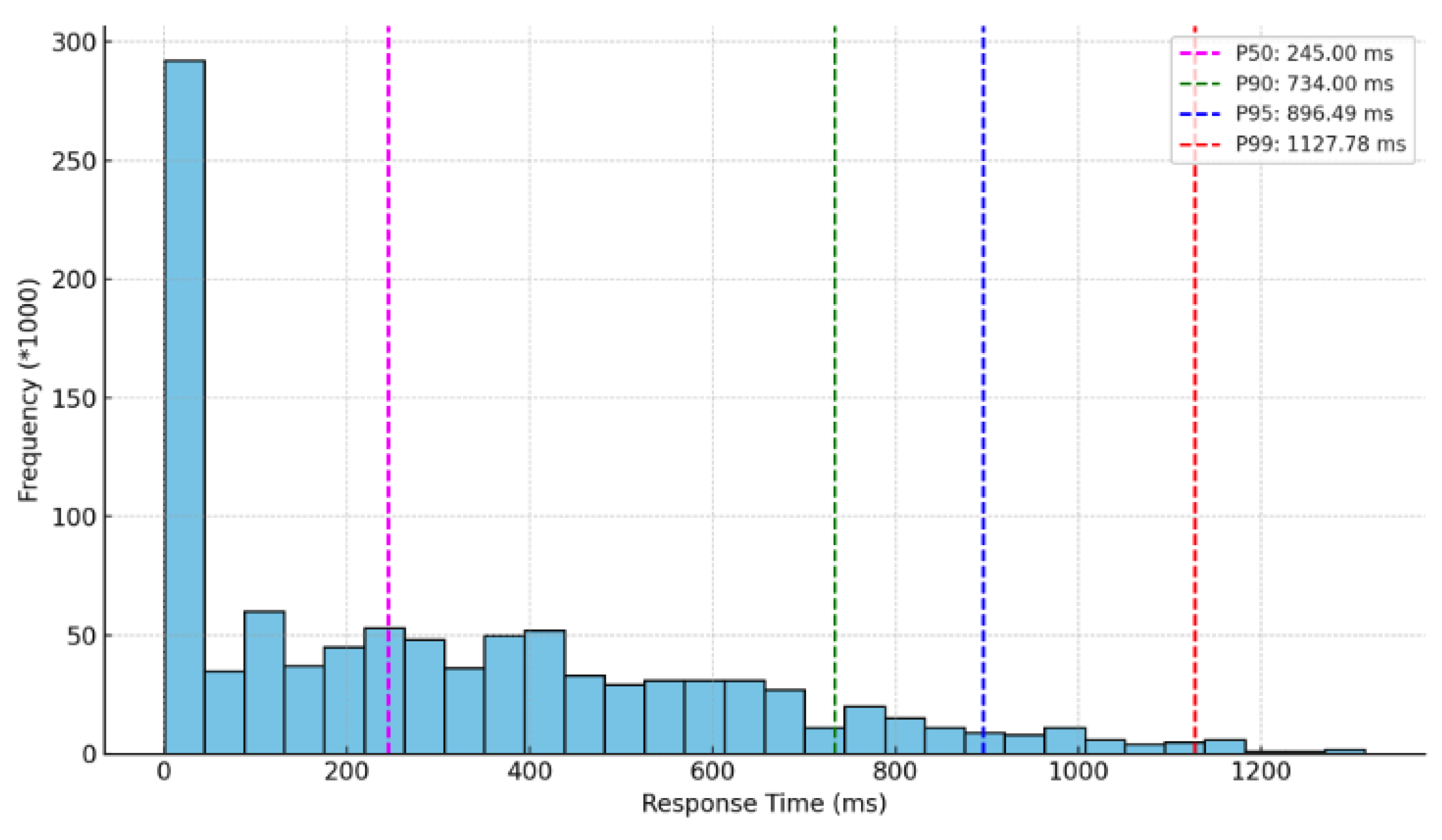

6.3. Throughput

7. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Evans, D. The Internet of Things: How the Next Evolution of the Internet Is Changing Everything, 2011. Available online: http://www.cisco.com/web/about/ac79/docs/innov/IoT_IBSG_0411FINAL.pdf.

2. Google Docs About page. Available online: https://www.google.com/docs/about/ (accessed on 20 June 2024).

3. Laaki, H.; Miche, Y.; Tammi, K. Prototyping a Digital Twin for Real Time Remote Control Over Mobile Networks: Application of Remote Surgery. IEEE Access 2019, 7, 20325–20336.

4. Wang, L.D.; Zhou, X.Q.; Hu, T.H. A New Computed Torque Control System with an Uncertain RBF Neural Network Controller for a 7-DOF Robot. Tehnicki Vjesnik-Technical Gazette 2020, 27, 1492–1500.

5. Chen, T.S.; Yabuki, N.; Fukuda, T. Mixed reality-based active Hazard prevention system for heavy machinery operators. Automation in Construction 2024, 159.

6. Son, H.; Kim, C. Integrated worker detection and tracking for the safe operation of construction machinery. Automation in Construction 2021, 126.

7. Zhang, S.T.; Yang, J.J.; Wu, X.L. A distributed Project management framework for collaborative product development. Progress of Machining Technology 2002, 972–976.

8. Doukari, O.; Kassem, M.; Greenwood, D. A Distributed Collaborative Platform for Multistakeholder Multi-Level Management of Renovation Projects. Journal of Information Technology in Construction 2024, 29, 219–246.

9. Erder, M.; Pureur, P.; Woods, E. Continuous Architecture in Practice: Software Architecture in the Age of Agility and DevOps; Addison-Wesley Professional, 2021.

10. Ciceri, C.; Farley, D.; Ford, N.; Harmel-Law, A.; Keeling, M.; Lilienthal, C. Software Architecture Metrics: Case Studies to Improve the Quality of Your Architecture; O'Reilly Media, 2022.

11. Cortellessa, V.; Eramo, R.; Tucci, M. From software architecture to analysis models and back: Model-driven refactoring aimed at availability improvement. Information and Software Technology 2020, 127.

12. Nsafoa-Yeboah, K.; Tchao, E.T.; Kommey, B.; Agbemenu, A.S.; Klogo, G.S.; Akrasi-Mensah, N.K. Flexible open network operating system architecture for implementing higher scalability using disaggregated software-defined optical networking. IET Networks 2024, 13, 221–240.

13. Gao, X.M.; Wang, B.S.; Zhang, X.Z.; Ma, S.C. A High-Elasticity Router Architecture with Software Data Plane and Flow Switching Plane Separation. China Communications 2024, 13, 37–52.

14. Fé, I.; Nguyen, T.A.; Di Mauro, M.; Postiglione, F.; Ramos, A.; Soares, A.; Choi, E.; Min, D.G.; Lee, J.W.; Silva, F.A. Energy-aware dynamic response and efficient consolidation strategies for disaster survivability of cloud microservices architecture. Computing 2024.

15. Muntean, M.; Brândas, C.; Cristescu, M.P.; Matiu, D. Improving Cloud Integration Using Design Science Research. Economic Computation and Economic Cybernetics Studies and Research 2021, 55, 201–218.

16. Goldstein, M.; Segall, I. Automatic and Continuous Software Architecture Validation. 2015 IEEE/ACM 37th IEEE International Conference on Software Engineering 2015, 2, 59–68.

17. Ronzon, T. Software Retrofit in High-Availability Systems When Uptime Matters. IEEE Software 2016, 33, 11–17.

18. Goetz, B. Java Concurrency In Practice; Pearson India, 2016.

19. Tudose, C. Java Persistence with Spring Data and Hibernate; Manning: New York, NY, USA, 2023.

20. Smith, P.N.; Guengerich, S.L. Client/Server Computing (Professional Reference Series); SAMS, 1994.

21. Saternos, C. Client-Server Web Apps with JavaScript and Java: Rich, Scalable, and RESTful; O'Reilly Media, 2014.

22. Anacleto, R.; Luz, N.; Almeida, A.; Figueiredo, L.; Novais, P. Creating and optimizing client-server; Universidade do Minho - Campus of Gualtar: Braga, Portugal, 2013.

23. Meloni, J.; Kyrnin, J. HTML, CSS, and JavaScript All in One: Covering HTML5, CSS3, and ES6; Sams Publishing, 2018.

24. Nagy, R. Simplifying Application Development with Kotlin Multiplatform Mobile: Write robust native applications for iOS and Android efficiently; Packt Publishing, 2022.

25. Siahaan, V.; Sianipar, R.H. Building Three Desktop Applications with SQLite and Java GUI; Independently published, 2019.

26. Marquez-Soto, P. Backend Developer in 30 Days: Acquire Skills on API Designing, Data Management, Application Testing, Deployment, Security and Performance Optimization; BPB Publications, 2022.

27. Hermans, K. Mastering Back-End Development: A Comprehensive Guide to Learn Back-End Development; Independently published, 2023.

28. Marinescu, D.C. Cloud Infrastructure; Cloud Computing: Theory and Practice, 2013; pp. 67–98.

29. Morris, K. Infrastructure as Code: Dynamic Systems for the Cloud Age 2nd Edition; O'Reilly Media, 2021.

30. Newman, S. Monolith to Microservices: Evolutionary Patterns to Transform Your Monolith; O'Reilly Media, 2019.

31. Vernon, V.; Tomasz, J. Strategic Monoliths and Microservices: Driving Innovation Using Purposeful Architecture; Addison-Wesley Publishing, 2022.

32. Al Qassem, L.M.; Stouraitis, T.; Damiani, E.; Elfadel, I.M. Proactive Random-Forest Autoscaler for Microservice Resource Allocation. IEEE Access 2023, 11, 2570–2585.

33. Richardson, C. Microservice Architecture Pattern. 2024. Available online: http://microservices.io/patterns/microservices.html.

34. Cao, X.M.; Zhang, H.B.; Shi, H.Y. Load Balancing Algorithm of API Gateway Based on Microservice Architecture for a Smart City. Journal of Testing and Evaluation 2024, 52, 1663–1676.

35. Zuki, S.Z.M.; Mohamad, R.; Saadon, N.A. Containerized Event-Driven Microservice Architecture. Baghdad Science Journal 2024, 21, 584–591.

36. Fielding, R.T. Architectural Styles and the Design of Network-based Software Architectures, PhD Thesis, 2000.

37. Bandruski, P. Publish WebSocket in the experience layer. 2020. Available online: https://ambassadorpatryk.com/2020/03/publish-web-socket-in-the-experience-layer/.

38. Tay, Y. Front End System Design Guidebook. 2024. Available online: https://www.greatfrontend.com/questions/system-design/news-feed-facebook.

39. VueJS Official Documentation. Available online: https://vuejs.org/guide/introduction.

40. Spring Boot Documentation. Available online: https://docs.spring.io/spring-boot/index.html.

41. Arnold, K.; Gosling, J.; Holmes, D. The Java Programming Language, 4th ed.; Addison-Wesley Professional: Glenview, IL, USA, 2005.

42. Sierra, K.; Bates, B.; Gee, T. Head First Java: A Brain-Friendly Guide, 3rd ed.; O’Reilly Media: Sebastopol, CA, USA, 2022.

43. Lukša, M. Kubernetes in Action; Manning: New York, NY, USA, 2017.

44. Nickoloff, J.; Kuenzli, S. Docker in Action, Second Edition; Manning: New York, NY, USA, 2019.

45. Fava, F.B.; Leite, L.F.L.; da Silva, L.F.A.; Costa, P.R.D.A.; Nogueira, A.G.D.; Lopes, A.F.G.; Schepke, C.; Kreutz, D.L.; Mansilha, R.B. Assessing the Performance of Docker in Docker Containers for Microservice-based Architectures. 2024 32nd Euromicro International Conference on Parallel, Distributed and Network-Based Processing, 2024, 137–142.

46. Vitale, T. Cloud Native Spring in Action With Spring Boot and Kubernetes; Manning: New York, NY, USA, 2022.

47. Tudose, C.; Odubăşteanu, C.; Radu, Ş. Java Reflection Performance Analysis Using Different Java Development. Advances In Intelligent Control Systems And Computer Science 2013, 187, 439–452.

48. Wu, H. Reliability Evaluation of the Apache Kafka Streaming System. 2019 IEEE 30th International Symposium on Software Reliability Engineering Workshops, 2019, 112–113.

49. Kim, H.; Bang, J.; Son, S.; Joo, N.; Choi, M.J.; Moon, Y.S. Message Latency-Based Load Shedding Mechanism in Apache Kafka. EURO-PAR 2019: Parallel Processing Workshops 2020, 11997, 731–736.

50. Holmes, S.D.; Harber, C. Getting MEAN with Mongo, Express, Angular, and Node, Second Edition; Manning: New York, NY, USA, 2019.

51. Vokorokos, L.; Uchnár, M.; Baláz, A. MongoDB scheme analysis. 2017 IEEE 21st International Conference on Intelligent Engineering Systems (INES), 2017, 67–70.

52. Pernas, L.D.E.; Pustulka, E. Document Versioning for MongoDB. New Trends in Database and Information Systems, ADBIS 2022, 1652, 512–524.

53. Ferrari, L.; Pirozzi, E. Learn PostgreSQL - Second Edition: Use, manage and build secure and scalable databases with PostgreSQL 16, 2nd Edition; Packt Publishing, 2023.

54. Bonteanu, A.M.; Tudose, C. Performance Analysis and Improvement for CRUD Operations in Relational Databases from Java Programs Using JPA, Hibernate, Spring Data JPA. Applied Sciences – Basel 2024, 14(7), 2743.

55. Raptis, T.P.; Passarella, A. On Efficiently Partitioning a Topic in Apache Kafka. 2022 International Conference on Computer, Information and Telecommunication Systems (CITS), Piraeus, Greece, 2022, 1–8.

56. Ellis, C.A.; Gibbs, S.J. Concurrency control in groupware systems. ACM SIGMOD Record 1989, 18, 399–407.

57. Gadea, C.; Ionescu, B.; Ionescu, D. Modeling and Simulation of an Operational Transformation Algorithm using Finite State Machines. 2018 IEEE 12th International Symposium on Applied Computational Intelligence and Informatics (SACI 2018), Timişoara, 2018, 119–124.

58. Gadea, C.; Ionescu, B.; Ionescu, D. A Control Loop-based Algorithm for Operational Transformation. 2020 IEEE 14th International Symposium on Applied Computational Intelligence and Informatics (SACI 2020), Timişoara, 2020, 247–254.

59. Shapiro, M.; Preguiça, N.; Baquero, C.; Zawirski, M. Conflict-Free Replicated Data Types. Stabilization, Safety, and Security of Distributed Systems 2011, 6976, 386.

60. Nieto, A.; Gondelman, L.; Reynaud, A.; Timany, A.; Birkedal, L. Modular Verification of Op-Based CRDTs in Separation Logic. Proceedings of the ACM on Programming Languages-PACMPL 2022, 6, 188.

61. Guidec, F.; Maheo, Y.; Noûs, C. Delta-State-Based Synchronization of CRDTs in Opportunistic Networks. Proceedings of the IEEE 46th Conference on Local Computer Networks (LCN 2021), 2021, 335–338.

62. Hupel, L. An Introduction to Conflict-Free Replicated Data Types. Available online: https://lars.hupel.info/topics/crdt/07-deletion/.

63. Centelles, R.P.; Selimi, M.; Freitag, F.; Navarro, L. A Monitoring System for Distributed Edge Infrastructures with Decentralized Coordination. Algorithmic Aspects of Cloud Computing (ALGOCLOUD 2019) 2019, 12041, 42–58.

64. Cola, G.; Mazza, M.; Tesconi, M. Twitter Newcomers: Uncovering the Behavior and Fate of New Accounts Through Early Detection and Monitoring. IEEE Access 2023, 11, 55223–55232.

65. Pinia Official Documentation. Available online: https://pinia.vuejs.org.

66. Redux Official Documentation. Available online: https://redux.js.org.

67. Tudose, C. JUnit in Action; Manning: New York, NY, USA, 2020.

| Feature | mCPU |
|---|---|
| api-gateway | 5.1 |
| auth | 16.26 |
| document-manipulation | 10.13 |
| document-content-handler | 10.39 |
| RTC | 13.64 |
| notifications | 22.28 |

| Feature | RAM (MB) |
|---|---|
| api-gateway | 126.74 |
| auth | 162.84 |
| document-manipulation | 144.53 |
| document-content-handler | 145.5 |
| RTC | 152.48 |
| notifications | 180.2 |

| Feature | mCPU |
|---|---|
| api-gateway | 3.08 |
| auth | 10.14 |
| document-manipulation | 1.62 |
| document-content-handler | 5.05 |
| RTC | 6.83 |
| notifications | 6.23 |

| Feature | RAM (MB) |
|---|---|
| api-gateway | 155.02 |
| auth | 77.61 |
| document-manipulation | 47.30 |
| document-content-handler | 84.31 |
| RTC | 29.56 |
| notifications | 49.31 |

| System type | Opened connections |
|---|---|
| JVM | 9540 |
| GraalVM | 13648 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).