Submitted: 07 August 2024

Posted: 09 August 2024

Abstract

Keywords:

1. Introduction

- Do the challenges faced by SAM in downstream tasks persist in SAM2?

- Can we replicate the success of SAM-Adapter and leverage SAM2’s more powerful pre-trained encoder and decoder to achieve new state-of-the-art (SOTA) results on these tasks?

- Generalizability: SAM2-Adapter can be directly applied to customized datasets of various tasks, enhancing performance with minimal additional data. This flexibility ensures that the model can adapt to a wide range of applications, from medical imaging to environmental monitoring.

- Composability: SAM2-Adapter supports the easy integration of multiple conditions to fine-tune SAM2, improving task-specific outcomes. This composability allows for the combination of different adaptation strategies to meet the specific requirements of diverse downstream tasks.

- First, we identify and analyze, for the first time, the limitations of the Segment Anything 2 (SAM2) model in specific downstream tasks, continuing our earlier research on SAM.

- Second, we are the first to propose an adaptation approach, SAM2-Adapter, that adapts SAM2 to downstream tasks and achieves enhanced performance. This method effectively integrates task-specific knowledge with the general knowledge learned by the large model.

- Third, although SAM2’s backbone is a plain model without structures tailored to specific downstream tasks, our extensive experiments demonstrate that SAM2-Adapter achieves SOTA results on challenging segmentation tasks, setting new benchmarks and proving its effectiveness in diverse applications.
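The Composability item above describes integrating multiple conditions to fine-tune SAM2. The following is a minimal sketch of one way such a composition could look, not the authors' implementation: several task-specific condition vectors are mixed by a weighted sum, passed through a small two-layer adapter with a GELU non-linearity, and added to features from a frozen backbone. All function names, shapes, and the weighted-sum rule are assumptions made for illustration.

```python
import math


def gelu(x):
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(vec, weights, bias):
    # Dense layer on a plain list; `weights` holds one row per output unit.
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weights, bias)]


def combine_conditions(conditions, mix_weights):
    # Hypothetical composition rule: weighted sum of equally sized
    # condition vectors (for example, two kinds of task-specific cues).
    if len(conditions) != len(mix_weights):
        raise ValueError("need one mixing weight per condition")
    dim = len(conditions[0])
    if any(len(c) != dim for c in conditions):
        raise ValueError("all conditions must have the same length")
    return [sum(w * c[k] for w, c in zip(mix_weights, conditions))
            for k in range(dim)]


def adapter_prompt(conditions, mix_weights, w_tune, b_tune, w_up, b_up):
    # Trainable part: fuse conditions, project, apply GELU, project up.
    fused = combine_conditions(conditions, mix_weights)
    hidden = [gelu(h) for h in linear(fused, w_tune, b_tune)]
    return linear(hidden, w_up, b_up)


def add_prompt(frozen_features, prompt):
    # The backbone features stay fixed; only the prompt is learned.
    return [f + p for f, p in zip(frozen_features, prompt)]


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    zero_bias = [0.0, 0.0]
    conditions = [[1.0, 2.0], [3.0, 4.0]]
    prompt = adapter_prompt(conditions, [0.5, 0.5],
                            identity, zero_bias, identity, zero_bias)
    print(add_prompt([0.1, 0.2], prompt))
```

In this sketch, adding a further condition only requires appending another vector and mixing weight, which is the sense of composability illustrated here.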

2. Related Work

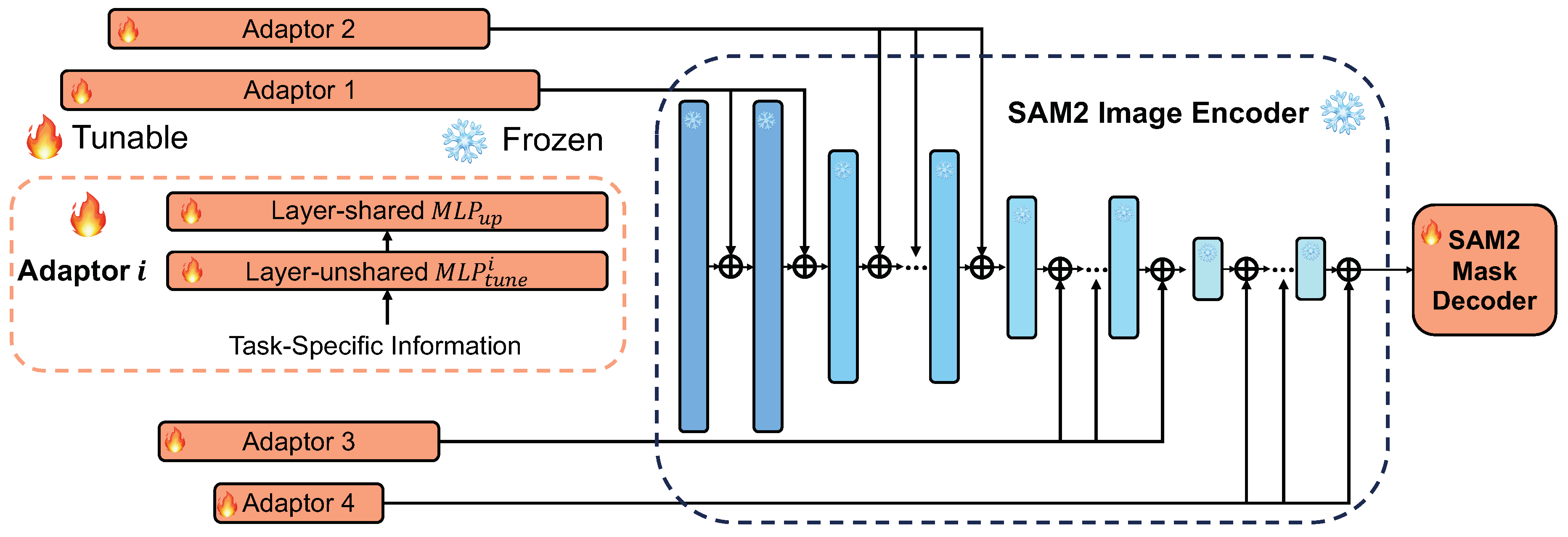

3. Method

3.1. Using SAM 2 as the Backbone

3.2. Input Task-Specific Information

4. Experiments

4.1. Tasks and Datasets

4.2. Implementation Details

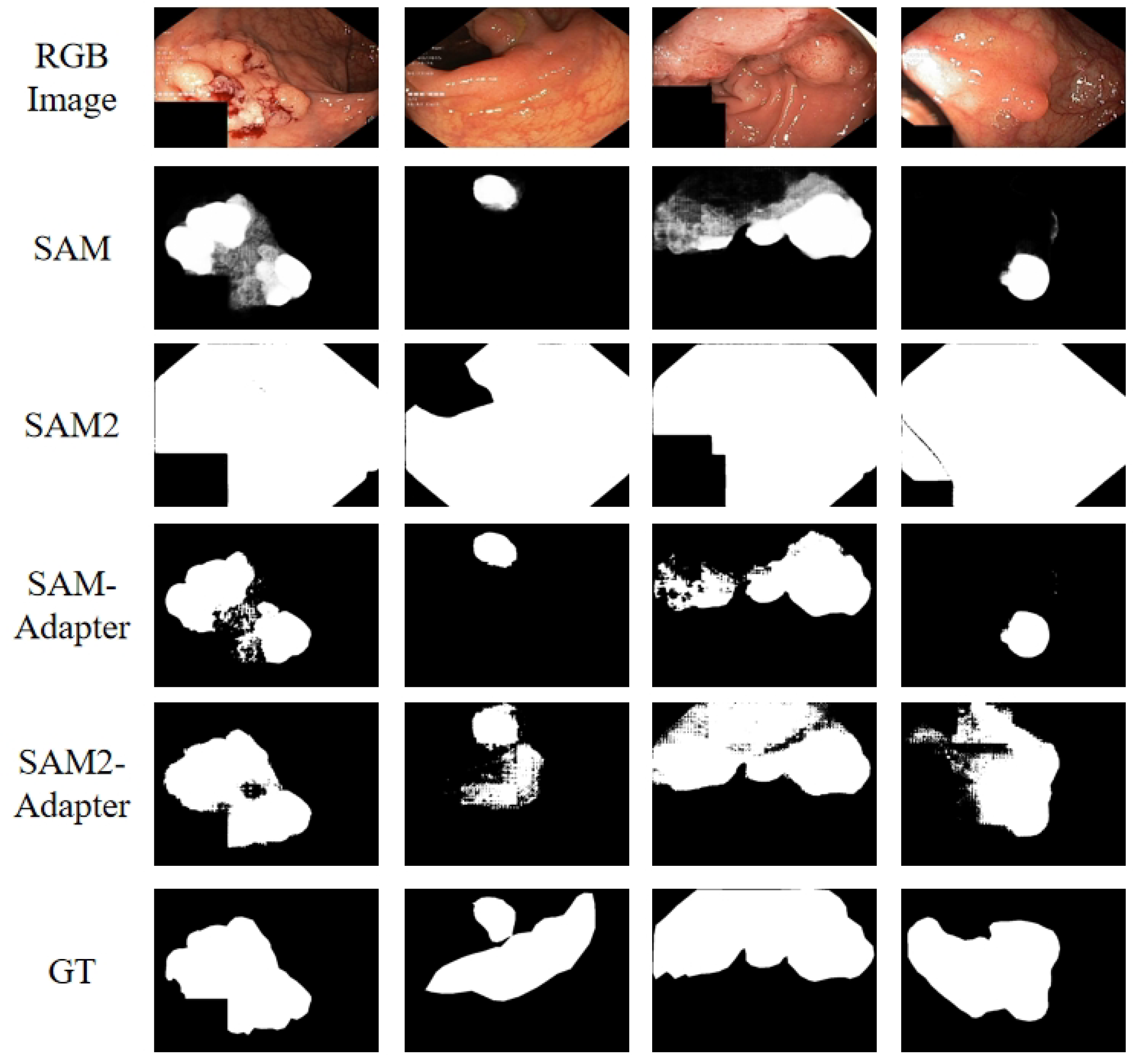

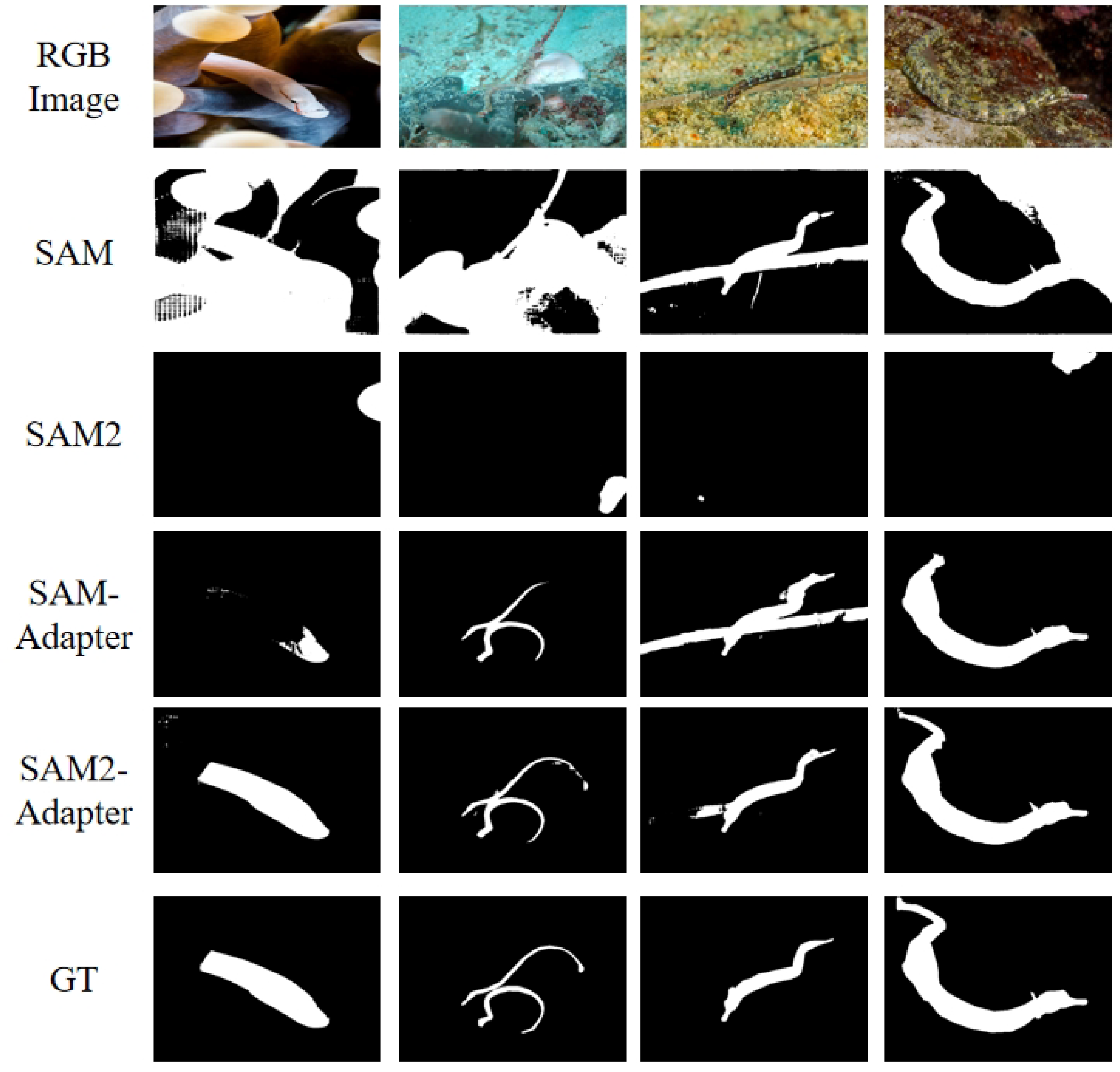

4.3. Experiments for Camouflaged Object Detection

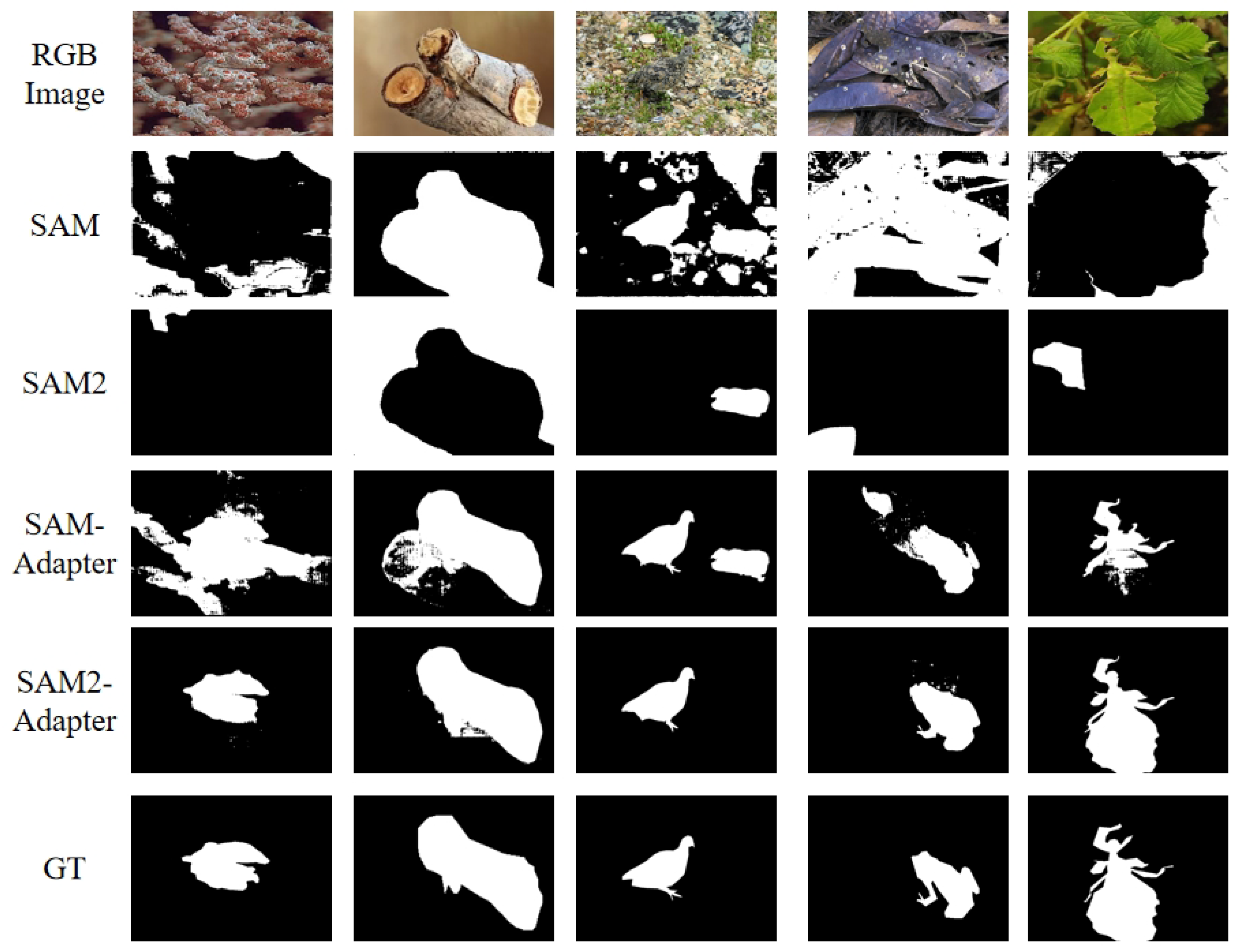

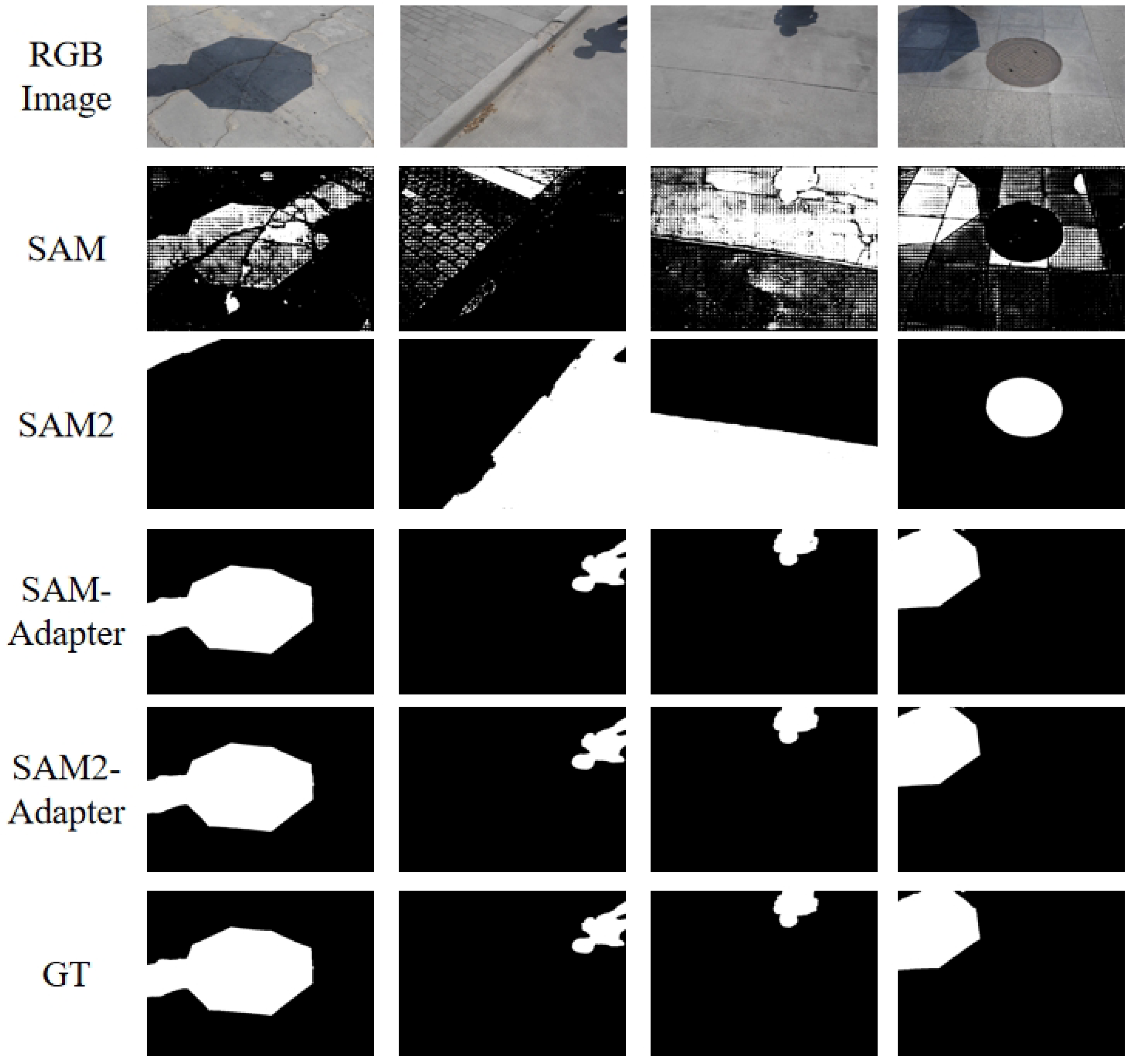

4.4. Experiments for Shadow Detection

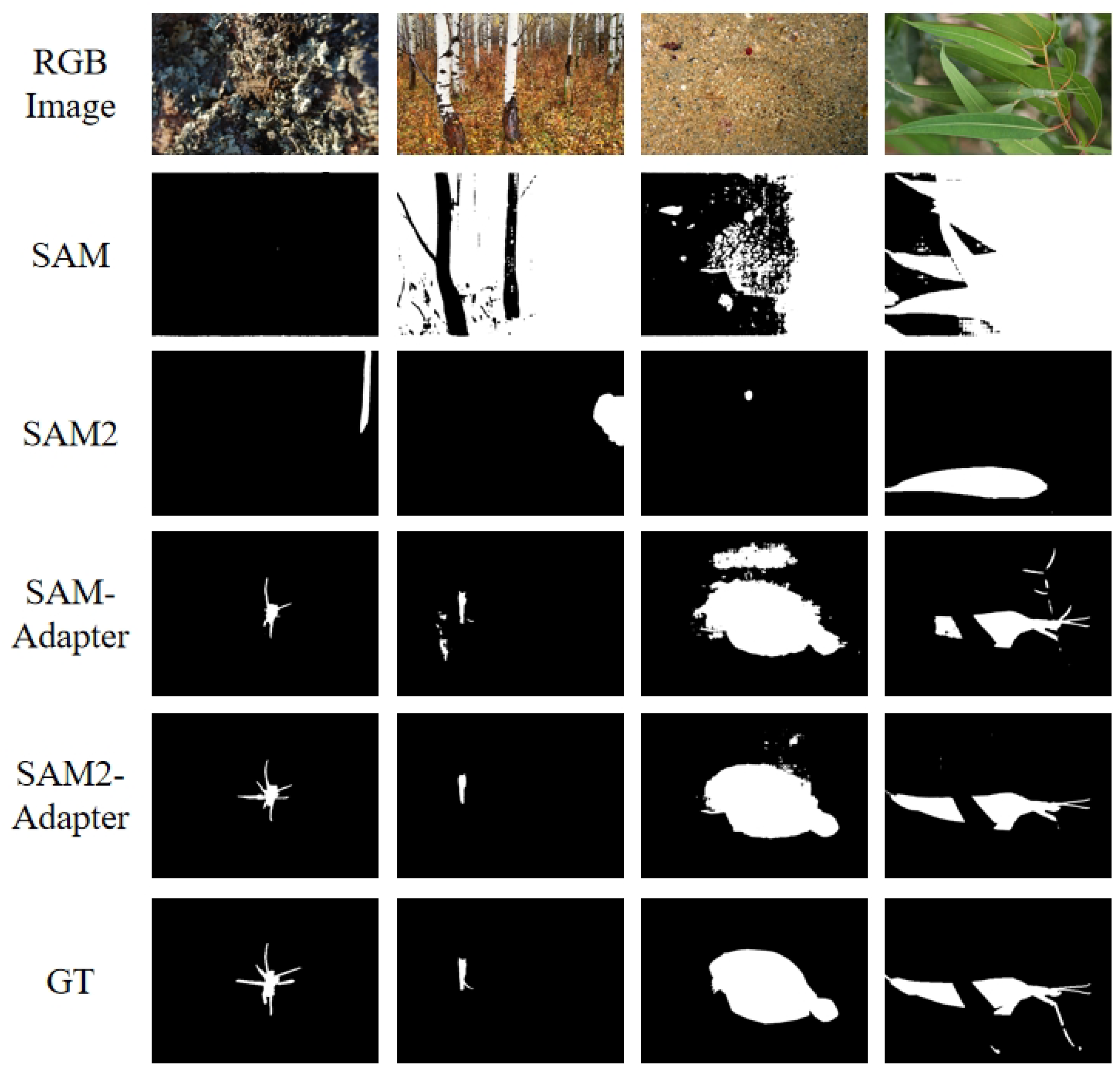

4.5. Experiments for Polyp Segmentation

5. Conclusion and Future Work

6. More Results

References

- Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; others. On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258 2021.

- Zhu, L.; Chen, T.; Ji, D.; Ye, J.; Liu, J. LLaFS: When Large Language Models Meet Few-Shot Segmentation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, pp. 3065–3075.

- Zhu, L.; Ji, D.; Chen, T.; Xu, P.; Ye, J.; Liu, J. Ibd: Alleviating hallucinations in large vision-language models via image-biased decoding. arXiv preprint arXiv:2402.18476 2024.

- Chen, T.; Yu, C.; Li, J.; Zhang, J.; Zhu, L.; Ji, D.; Zhang, Y.; Zang, Y.; Li, Z.; Sun, L. Reasoning3D–Grounding and Reasoning in 3D: Fine-Grained Zero-Shot Open-Vocabulary 3D Reasoning Part Segmentation via Large Vision-Language Models. arXiv preprint arXiv:2405.19326 2024.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; others. Segment anything. arXiv preprint arXiv:2304.02643 2023.

- Chen, T.; Zhu, L.; Ding, C.; Cao, R.; Wang, Y.; Li, Z.; Sun, L.; Mao, P.; Zang, Y. SAM Fails to Segment Anything? – SAM-Adapter: Adapting SAM in Underperformed Scenes: Camouflage, Shadow, Medical Image Segmentation, and More, 2023, [arXiv:cs.CV/2304.09148].

- Chen, T.; Zhu, L.; Deng, C.; Cao, R.; Wang, Y.; Zhang, S.; Li, Z.; Sun, L.; Zang, Y.; Mao, P. Sam-adapter: Adapting segment anything in underperformed scenes. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 3367–3375.

- Wang, J.; Li, X.; Yang, J. Stacked conditional generative adversarial networks for jointly learning shadow detection and shadow removal. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 1788–1797.

- Fan, D.P.; Ji, G.P.; Sun, G.; Cheng, M.M.; Shen, J.; Shao, L. Camouflaged object detection. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 2777–2787.

- Skurowski, P.; Abdulameer, H.; Błaszczyk, J.; Depta, T.; Kornacki, A.; Kozieł, P. Animal camouflage analysis: Chameleon database. Unpublished manuscript 2018, 2, 7.

- Le, T.N.; Nguyen, T.V.; Nie, Z.; Tran, M.T.; Sugimoto, A. Anabranch network for camouflaged object segmentation. Computer vision and image understanding 2019, 184, 45–56. [CrossRef]

- Jha, D.; Smedsrud, P.H.; Riegler, M.A.; Halvorsen, P.; de Lange, T.; Johansen, D.; Johansen, H.D. Kvasir-seg: A segmented polyp dataset. MultiMedia Modeling: 26th International Conference, MMM 2020, Daejeon, South Korea, January 5–8, 2020, Proceedings, Part II 26. Springer, 2020, pp. 451–462.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. Proceedings of the IEEE conference on computer vision and pattern recognition, 2015, pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. International Conference on Medical image computing and computer-assisted intervention. Springer, 2015, pp. 234–241.

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Bisenet: Bilateral segmentation network for real-time semantic segmentation. Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 325–341. [CrossRef]

- Fan, M.; Lai, S.; Huang, J.; Wei, X.; Chai, Z.; Luo, J.; Wei, X. Rethinking BiSeNet For Real-time Semantic Segmentation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 9716–9725.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE transactions on pattern analysis and machine intelligence 2017, 39, 2481–2495. [CrossRef]

- Li, X.; You, A.; Zhu, Z.; Zhao, H.; Yang, M.; Yang, K.; Tan, S.; Tong, Y. Semantic Flow for Fast and Accurate Scene Parsing. European Conference on Computer Vision. Springer, 2020, pp. 775–793.

- Chen, T.; Ding, C.; Zhu, L.; Xu, T.; Ji, D.; Zang, Y.; Li, Z. xLSTM-UNet can be an Effective 2D & 3D Medical Image Segmentation Backbone with Vision-LSTM (ViL) better than its Mamba Counterpart. arXiv preprint arXiv:2407.01530 2024.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic image segmentation with deep convolutional nets and fully connected crfs. arXiv preprint arXiv:1412.7062 2014.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE transactions on pattern analysis and machine intelligence 2017, 40, 834–848.

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587 2017.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. Proceedings of the European conference on computer vision (ECCV), 2018, pp. 801–818.

- Liu, Z.; Zhu, L. Label-guided attention distillation for lane segmentation. Neurocomputing 2021, 438, 312–322. [CrossRef]

- Zang, Y.; Fu, C.; Cao, R.; Zhu, D.; Zhang, M.; Hu, W.; Zhu, L.; Chen, T. Resmatch: Referring expression segmentation in a semi-supervised manner. arXiv preprint arXiv:2402.05589 2024.

- Zhu, L.; Ji, D.; Zhu, S.; Gan, W.; Wu, W.; Yan, J. Learning Statistical Texture for Semantic Segmentation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 12537–12546.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 2881–2890.

- Zhu, L.; Chen, T.; Yin, J.; See, S.; Liu, J. Continual Semantic Segmentation with Automatic Memory Sample Selection. arXiv preprint arXiv:2304.05015 2023.

- Fu, X.; Zhang, S.; Chen, T.; Lu, Y.; Zhu, L.; Zhou, X.; Geiger, A.; Liao, Y. Panoptic nerf: 3d-to-2d label transfer for panoptic urban scene segmentation. arXiv preprint arXiv:2203.15224 2022.

- Zhang, F.; Chen, Y.; Li, Z.; Hong, Z.; Liu, J.; Ma, F.; Han, J.; Ding, E. Acfnet: Attentional class feature network for semantic segmentation. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 6798–6807.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 3146–3154.

- Zhu, Z.; Xu, M.; Bai, S.; Huang, T.; Bai, X. Asymmetric non-local neural networks for semantic segmentation. Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 593–602.

- Zhu, L.; Chen, T.; Yin, J.; See, S.; Liu, J. Addressing Background Context Bias in Few-Shot Segmentation through Iterative Modulation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, pp. 3370–3379.

- Zhu, L.; Chen, T.; Yin, J.; See, S.; Liu, J. Learning gabor texture features for fine-grained recognition. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 1621–1631.

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; others. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2021, pp. 6881–6890. [CrossRef]

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Advances in Neural Information Processing Systems 2021, 34, 12077–12090.

- Strudel, R.; Garcia, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for semantic segmentation. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 7262–7272.

- Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 1290–1299.

- Ke, L.; Ye, M.; Danelljan, M.; Liu, Y.; Tai, Y.W.; Tang, C.K.; Yu, F. Segment Anything in High Quality, 2023, [arXiv:cs.CV/2306.01567].

- Xiong, Y.; Varadarajan, B.; Wu, L.; Xiang, X.; Xiao, F.; Zhu, C.; Dai, X.; Wang, D.; Sun, F.; Iandola, F.; Krishnamoorthi, R.; Chandra, V. EfficientSAM: Leveraged Masked Image Pretraining for Efficient Segment Anything, 2023, [arXiv:cs.CV/2312.00863].

- Zhang, C.; Han, D.; Qiao, Y.; Kim, J.U.; Bae, S.H.; Lee, S.; Hong, C.S. Faster Segment Anything: Towards Lightweight SAM for Mobile Applications, 2023, [arXiv:cs.CV/2306.14289].

- Zhao, X.; Ding, W.; An, Y.; Du, Y.; Yu, T.; Li, M.; Tang, M.; Wang, J. Fast Segment Anything, 2023, [arXiv:cs.CV/2306.12156].

- Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment anything in medical images. Nature Communications 2024, 15. [CrossRef]

- Deng, R.; Cui, C.; Liu, Q.; Yao, T.; Remedios, L.W.; Bao, S.; Landman, B.A.; Wheless, L.E.; Coburn, L.A.; Wilson, K.T.; Wang, Y.; Zhao, S.; Fogo, A.B.; Yang, H.; Tang, Y.; Huo, Y. Segment Anything Model (SAM) for Digital Pathology: Assess Zero-shot Segmentation on Whole Slide Imaging, 2023, [arXiv:eess.IV/2304.04155].

- Mazurowski, M.A.; Dong, H.; Gu, H.; Yang, J.; Konz, N.; Zhang, Y. Segment anything model for medical image analysis: An experimental study. Medical Image Analysis 2023, 89, 102918. doi:10.1016/j.media.2023.102918. [CrossRef]

- Wu, J.; Ji, W.; Liu, Y.; Fu, H.; Xu, M.; Xu, Y.; Jin, Y. Medical SAM Adapter: Adapting Segment Anything Model for Medical Image Segmentation, 2023, [arXiv:cs.CV/2304.12620].

- Chen, K.; Liu, C.; Chen, H.; Zhang, H.; Li, W.; Zou, Z.; Shi, Z. RSPrompter: Learning to Prompt for Remote Sensing Instance Segmentation based on Visual Foundation Model, 2023, [arXiv:cs.CV/2306.16269]. [CrossRef]

- Ren, S.; Luzi, F.; Lahrichi, S.; Kassaw, K.; Collins, L.M.; Bradbury, K.; Malof, J.M. Segment anything, from space?, 2023, [arXiv:cs.CV/2304.13000].

- Xie, J.; Yang, C.; Xie, W.; Zisserman, A. Moving Object Segmentation: All You Need Is SAM (and Flow), 2024, [arXiv:cs.CV/2404.12389].

- Tang, L.; Xiao, H.; Li, B. Can SAM Segment Anything? When SAM Meets Camouflaged Object Detection, 2023, [arXiv:cs.CV/2304.04709].

- Houlsby, N.; Giurgiu, A.; Jastrzebski, S.; Morrone, B.; De Laroussilhe, Q.; Gesmundo, A.; Attariyan, M.; Gelly, S. Parameter-efficient transfer learning for NLP. International Conference on Machine Learning. PMLR, 2019, pp. 2790–2799.

- Stickland, A.C.; Murray, I. Bert and pals: Projected attention layers for efficient adaptation in multi-task learning. International Conference on Machine Learning. PMLR, 2019, pp. 5986–5995.

- Li, Y.; Mao, H.; Girshick, R.; He, K. Exploring plain vision transformer backbones for object detection. Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part IX. Springer, 2022, pp. 280–296.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; others. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 2020.

- Chen, Z.; Duan, Y.; Wang, W.; He, J.; Lu, T.; Dai, J.; Qiao, Y. Vision transformer adapter for dense predictions. arXiv preprint arXiv:2205.08534 2022.

- Liu, W.; Shen, X.; Pun, C.M.; Cun, X. Explicit Visual Prompting for Low-Level Structure Segmentations. arXiv preprint arXiv:2303.10883 2023.

- Zhou, Y.; Wang, H.; Huo, S.; Wang, B. Full-attention based Neural Architecture Search using Context Auto-regression, 2021, [arXiv:cs.CV/2111.07139].

- Canny, J. A computational approach to edge detection. IEEE Transactions on pattern analysis and machine intelligence 1986, pp. 679–698.

- Qadir, H.A.; Shin, Y.; Solhusvik, J.; Bergsland, J.; Aabakken, L.; Balasingham, I. Toward real-time polyp detection using fully CNNs for 2D Gaussian shapes prediction. Medical Image Analysis 2021, 68, 101897. [CrossRef]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization, 2017, [arXiv:cs.LG/1412.6980].

- Murugesan, B.; Sarveswaran, K.; Shankaranarayana, S.M.; Ram, K.; Sivaprakasam, M. Psi-Net: Shape and boundary aware joint multi-task deep network for medical image segmentation, 2019, [arXiv:cs.CV/1902.04099].

- Mahmud, T.; Paul, B.; Fattah, S.A. PolypSegNet: A modified encoder-decoder architecture for automated polyp segmentation from colonoscopy images. Computers in biology and medicine 2021, 128, 104119. [CrossRef]

- Guo, X.; Chen, Z.; Liu, J.; Yuan, Y. Non-equivalent images and pixels: Confidence-aware resampling with meta-learning mixup for polyp segmentation. Medical image analysis 2022, 78, 102394. [CrossRef]

- Zhou, T.; Zhang, Y.; Zhou, Y.; Wu, Y.; Gong, C. Can SAM Segment Polyps?, 2023, [arXiv:cs.CV/2304.07583].

- Li, Y.; Hu, M.; Yang, X. Polyp-SAM: Transfer SAM for Polyp Segmentation, 2023, [arXiv:eess.IV/2305.00293].

- Roy, S.; Wald, T.; Koehler, G.; Rokuss, M.R.; Disch, N.; Holzschuh, J.; Zimmerer, D.; Maier-Hein, K.H. SAM.MD: Zero-shot medical image segmentation capabilities of the Segment Anything Model, 2023, [arXiv:eess.IV/2304.05396].

- Feng, X.; Guoying, C.; Wei, S. Camouflage texture evaluation using saliency map. Proceedings of the Fifth International Conference on Internet Multimedia Computing and Service, 2013, pp. 93–96.

- Pike, T.W. Quantifying camouflage and conspicuousness using visual salience. Methods in Ecology and Evolution 2018, 9, 1883–1895. [CrossRef]

- Hou, J.Y.Y.H.W.; Li, J. Detection of the mobile object with camouflage color under dynamic background based on optical flow. Procedia Engineering 2011, 15, 2201–2205.

- Sengottuvelan, P.; Wahi, A.; Shanmugam, A. Performance of decamouflaging through exploratory image analysis. 2008 First International Conference on Emerging Trends in Engineering and Technology. IEEE, 2008, pp. 6–10.

- Mei, H.; Ji, G.P.; Wei, Z.; Yang, X.; Wei, X.; Fan, D.P. Camouflaged object segmentation with distraction mining. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 8772–8781.

- Lin, J.; Tan, X.; Xu, K.; Ma, L.; Lau, R.W. Frequency-aware camouflaged object detection. ACM Transactions on Multimedia Computing, Communications and Applications 2023, 19, 1–16. [CrossRef]

- Karsch, K.; Hedau, V.; Forsyth, D.; Hoiem, D. Rendering synthetic objects into legacy photographs. ACM Transactions on Graphics (TOG) 2011, 30, 1–12. [CrossRef]

- Lalonde, J.F.; Efros, A.A.; Narasimhan, S.G. Estimating the natural illumination conditions from a single outdoor image. International Journal of Computer Vision 2012, 98, 123–145. [CrossRef]

- Nadimi, S.; Bhanu, B. Physical models for moving shadow and object detection in video. IEEE transactions on pattern analysis and machine intelligence 2004, 26, 1079–1087.

- Cucchiara, R.; Grana, C.; Piccardi, M.; Prati, A. Detecting moving objects, ghosts, and shadows in video streams. IEEE transactions on pattern analysis and machine intelligence 2003, 25, 1337–1342.

- Huang, X.; Hua, G.; Tumblin, J.; Williams, L. What characterizes a shadow boundary under the sun and sky? 2011 international conference on computer vision. IEEE, 2011, pp. 898–905.

- Zhu, J.; Samuel, K.G.; Masood, S.Z.; Tappen, M.F. Learning to recognize shadows in monochromatic natural images. 2010 IEEE Computer Society conference on computer vision and pattern recognition. IEEE, 2010, pp. 223–230.

- Le, H.; Vicente, T.F.Y.; Nguyen, V.; Hoai, M.; Samaras, D. A+ D Net: Training a shadow detector with adversarial shadow attenuation. Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 662–678.

- Cun, X.; Pun, C.M.; Shi, C. Towards ghost-free shadow removal via dual hierarchical aggregation network and shadow matting GAN. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, Vol. 34, pp. 10680–10687. [CrossRef]

- Zhu, L.; Deng, Z.; Hu, X.; Fu, C.W.; Xu, X.; Qin, J.; Heng, P.A. Bidirectional feature pyramid network with recurrent attention residual modules for shadow detection. Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 121–136.

- Hendrycks, D.; Gimpel, K. Gaussian error linear units (gelus). arXiv preprint arXiv:1606.08415 2016.

- Jha, D.; Hicks, S.A.; Emanuelsen, K.; Johansen, H.; Johansen, D.; de Lange, T.; Riegler, M.A.; Halvorsen, P. Medico multimedia task at mediaeval 2020: Automatic polyp segmentation. arXiv preprint arXiv:2012.15244 2020.

- Fan, D.P.; Ji, G.P.; Sun, G.; Cheng, M.M.; Shen, J.; Shao, L. Camouflaged object detection. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 2777–2787.

- Lv, Y.; Zhang, J.; Dai, Y.; Li, A.; Liu, B.; Barnes, N.; Fan, D.P. Simultaneously localize, segment and rank the camouflaged objects. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 11591–11601.

- Li, A.; Zhang, J.; Lv, Y.; Liu, B.; Zhang, T.; Dai, Y. Uncertainty-aware joint salient object and camouflaged object detection. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 10071–10081.

- Mei, H.; Ji, G.P.; Wei, Z.; Yang, X.; Wei, X.; Fan, D.P. Camouflaged object segmentation with distraction mining. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 8772–8781.

- Lin, J.; Tan, X.; Xu, K.; Ma, L.; Lau, R.W. Frequency-aware camouflaged object detection. ACM Transactions on Multimedia Computing, Communications and Applications 2023, 19, 1–16. [CrossRef]

- Ravi, N.; Gabeur, V.; Hu, Y.T.; Hu, R.; Ryali, C.; Ma, T.; Khedr, H.; Rädle, R.; Rolland, C.; Gustafson, L.; Mintun, E.; Pan, J.; Alwala, K.V.; Carion, N.; Wu, C.Y.; Girshick, R.; Dollár, P.; Feichtenhofer, C. SAM 2: Segment Anything in Images and Videos, 2024, [arXiv:cs.CV/2408.00714].

- Vicente, T.F.Y.; Hou, L.; Yu, C.P.; Hoai, M.; Samaras, D. Large-scale training of shadow detectors with noisily-annotated shadow examples. Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part VI 14. Springer, 2016, pp. 816–832.

- Zhu, L.; Deng, Z.; Hu, X.; Fu, C.W.; Xu, X.; Qin, J.; Heng, P.A. Bidirectional feature pyramid network with recurrent attention residual modules for shadow detection. Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 121–136.

- Hu, X.; Zhu, L.; Fu, C.W.; Qin, J.; Heng, P.A. Direction-aware spatial context features for shadow detection. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7454–7462.

- Zheng, Q.; Qiao, X.; Cao, Y.; Lau, R.W. Distraction-aware shadow detection. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 5167–5176.

- Zhu, L.; Xu, K.; Ke, Z.; Lau, R.W. Mitigating intensity bias in shadow detection via feature decomposition and reweighting. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 4702–4711.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Deep learning in medical image analysis and multimodal learning for clinical decision support; Springer, 2018; pp. 3–11.

- Fang, Y.; Chen, C.; Yuan, Y.; Tong, K.y. Selective feature aggregation network with area-boundary constraints for polyp segmentation. Medical Image Computing and Computer Assisted Intervention–MICCAI 2019: 22nd International Conference, Shenzhen, China, October 13–17, 2019, Proceedings, Part I 22. Springer, 2019, pp. 302–310.

| Method | CHAMELEON [10] | | | | CAMO [11] | | | | COD10K [9] | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| | S_α ↑ | E_φ ↑ | F_β^ω ↑ | MAE ↓ | S_α ↑ | E_φ ↑ | F_β^ω ↑ | MAE ↓ | S_α ↑ | E_φ ↑ | F_β^ω ↑ | MAE ↓ |
| SINet [84] | 0.869 | 0.891 | 0.740 | 0.440 | 0.751 | 0.771 | 0.606 | 0.100 | 0.771 | 0.806 | 0.551 | 0.051 |
| RankNet [85] | 0.846 | 0.913 | 0.767 | 0.045 | 0.712 | 0.791 | 0.583 | 0.104 | 0.767 | 0.861 | 0.611 | 0.045 |
| JCOD [86] | 0.870 | 0.924 | - | 0.039 | 0.792 | 0.839 | - | 0.82 | 0.800 | 0.872 | - | 0.041 |
| PFNet [87] | 0.882 | 0.942 | 0.810 | 0.330 | 0.782 | 0.852 | 0.695 | 0.085 | 0.800 | 0.868 | 0.660 | 0.040 |
| FBNet [88] | 0.888 | 0.939 | 0.828 | 0.032 | 0.783 | 0.839 | 0.702 | 0.081 | 0.809 | 0.889 | 0.684 | 0.035 |
| SAM [5] | 0.727 | 0.734 | 0.639 | 0.081 | 0.684 | 0.687 | 0.606 | 0.132 | 0.783 | 0.798 | 0.701 | 0.050 |
| SAM2 [89] | 0.359 | 0.375 | 0.115 | 0.357 | 0.350 | 0.411 | 0.079 | 0.311 | 0.429 | 0.505 | 0.115 | 0.218 |
| SAM-Adapter [6,7] | 0.896 | 0.919 | 0.824 | 0.033 | 0.847 | 0.873 | 0.765 | 0.070 | 0.883 | 0.918 | 0.801 | 0.025 |
| SAM2-Adapter (Ours) | 0.915 | 0.955 | 0.889 | 0.018 | 0.855 | 0.909 | 0.810 | 0.051 | 0.899 | 0.950 | 0.850 | 0.018 |
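The table above reports MAE (lower is better) for each dataset. As a reminder of what that column measures, here is a minimal sketch of the usual definition: the mean absolute difference between a predicted mask and the ground-truth mask, both with values in [0, 1]. The function name and the nested-list mask format are assumptions for illustration; the evaluation code behind the reported numbers is not shown in this document.

```python
def mean_absolute_error(pred, gt):
    # pred and gt are 2D masks (lists of rows) with values in [0, 1].
    if len(pred) != len(gt):
        raise ValueError("masks must have the same number of rows")
    total = 0.0
    count = 0
    for pred_row, gt_row in zip(pred, gt):
        if len(pred_row) != len(gt_row):
            raise ValueError("rows must have the same length")
        for p, g in zip(pred_row, gt_row):
            total += abs(p - g)
            count += 1
    if count == 0:
        raise ValueError("masks must not be empty")
    return total / count


if __name__ == "__main__":
    pred = [[0.0, 1.0], [0.5, 0.5]]
    gt = [[0.0, 1.0], [1.0, 0.0]]
    print(mean_absolute_error(pred, gt))  # prints 0.25
```

A dataset-level score would typically average this per-image value over all test images.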

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).