Submitted:

01 August 2024

Posted:

02 August 2024


Abstract

Keywords:

1. Introduction

- we offer a thorough examination of datasets and tasks within the field of multimodal sentiment analysis (MSA);

- we review and analyse multimodal features and multimodal fusion methods;

- we present the challenges and future research directions in MSA, addressing issues such as cross-modal interactions, context-dependent interpretations, and the prospect of constructing a knowledge graph of multimodal representations for semantic analytics.

2. Method

- Published by Springer, Elsevier, IEEE, or ACM, with Springer Nature, ScienceDirect, IEEE Xplore, or ACM Digital Library as their corresponding libraries.

- Published from 01/01/2020 to 31/03/2024.

- Written in English, with no restriction on geographical area or dataset language.

- The title, keywords, or abstract of each paper matches the query: ("Multimodal" OR "Multimedia") AND ("Sentiment Analysis" OR "Opinion Mining") AND ("Machine Learning" OR "Deep Learning" OR "Classification"). The Boolean search query is adapted to the form requirements of each library.
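The inclusion criteria above can be read as a simple filter over bibliographic records. The following is a minimal sketch under stated assumptions, not the tooling used in the review: the record fields (`title`, `keywords`, `abstract`, `publisher`, `published`, `language`) and the function names are hypothetical, and the query is matched against the three text fields joined together, which is only one possible reading of "title or keywords or abstract".

```python
from datetime import date

# Term groups from the Boolean query: groups are ANDed, terms inside a group are ORed.
TERM_GROUPS = [
    ("multimodal", "multimedia"),
    ("sentiment analysis", "opinion mining"),
    ("machine learning", "deep learning", "classification"),
]
START, END = date(2020, 1, 1), date(2024, 3, 31)  # publication window
PUBLISHERS = {"Springer", "Elsevier", "IEEE", "ACM"}


def build_query(groups=TERM_GROUPS):
    """Render the term groups as a generic Boolean query string."""
    return " AND ".join(
        "(" + " OR ".join(f'"{term}"' for term in group) + ")" for group in groups
    )


def matches_query(text, groups=TERM_GROUPS):
    """True if every group has at least one term in the text (case-insensitive)."""
    lowered = text.lower()
    return all(any(term in lowered for term in group) for group in groups)


def is_candidate(record):
    """Apply the four inclusion criteria to a hypothetical record dict."""
    # Assumption: the three text fields are searched together as one string.
    searchable = " ".join([record["title"], record["keywords"], record["abstract"]])
    return (
        record["publisher"] in PUBLISHERS
        and START <= record["published"] <= END
        and record["language"] == "English"
        and matches_query(searchable)
    )


if __name__ == "__main__":
    print(build_query())
```

Each digital library has its own query syntax, so in practice the string from `build_query` would still need to be adapted per library.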

3. Classification and Analysis

3.1. Datasets

3.1.1. CMU-MOSI

3.1.2. CMU-MOSEI

3.1.3. T4SA

3.1.4. DFMSD

3.1.5. Fakeddit

3.1.6. MuSe-CaR

3.1.7. MVSA

3.1.8. ReactionGIF

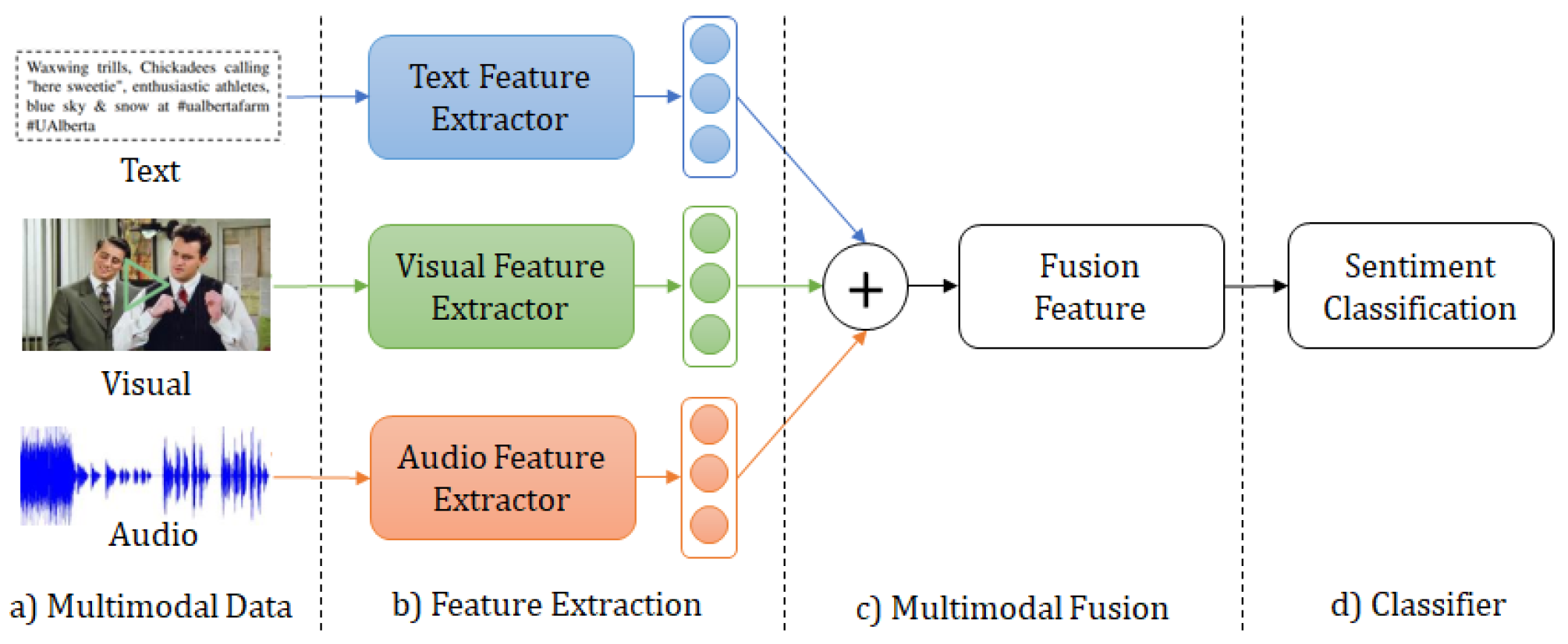

3.2. Multimodal Features

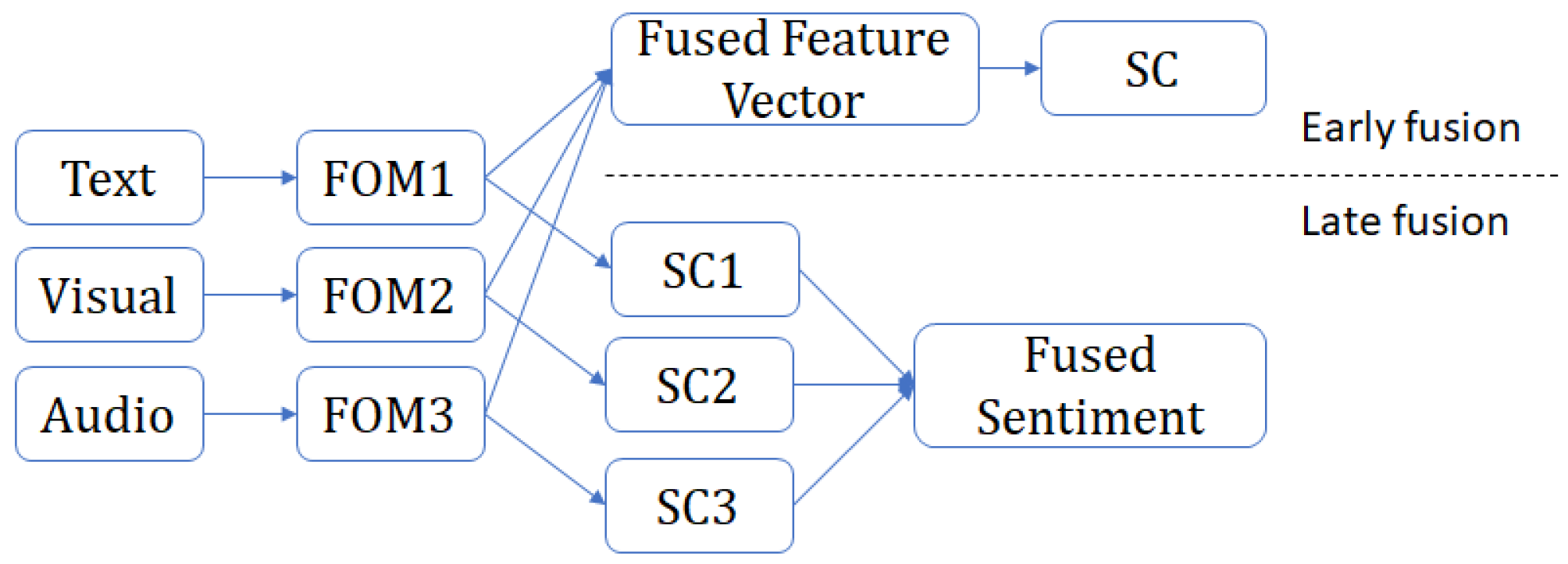

3.3. Multimodal Fusion Methods

3.3.1. Early Fusion

3.3.2. Late Fusion

3.3.3. Text and Image Fusion

3.4. MSA Frameworks and Methodologies

4. Results and Discussion

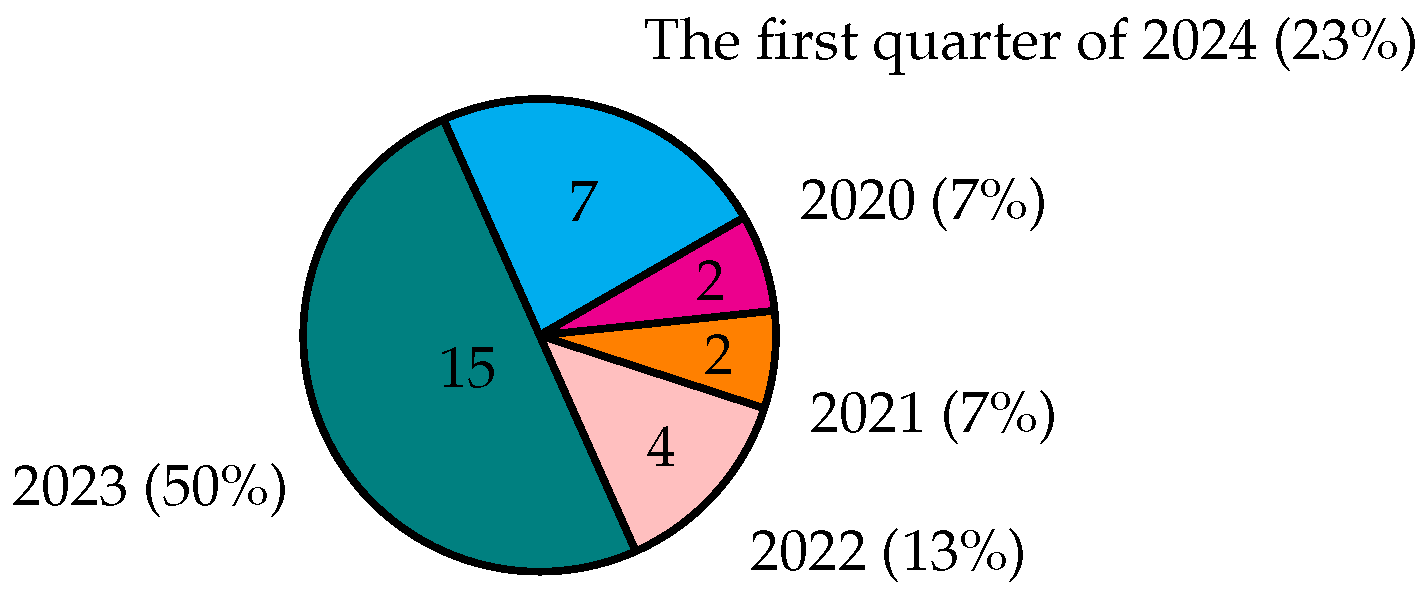

4.1. Publication Year

4.2. Datasets

4.3. Fusion Methods

4.4. Models

4.5. Future Development

5. Conclusion

Acknowledgments

References

- H. L. Nguyen, H. T. N. Pham, and V. M. Ngo, “Opinion spam recognition method for online reviews using ontological features,” CoRR, vol. abs/1807.11024, 2018. [Online]. Available: http://arxiv.org/abs/1807.11024.

- T. N. T. Tran, L. K. N. Nguyen, and V. M. Ngo, “Machine learning based English sentiment analysis,” 2019. [Online]. Available: https://arxiv.org/abs/1905.06643.

- C. N. Dang, M. N. Moreno-García, F. De la Prieta, K. V. Nguyen, and V. M. Ngo, “Sentiment analysis for vietnamese – based hybrid deep learning models,” in Hybrid Artificial Intelligent Systems, P. García Bringas, H. Pérez García, F. J. Martínez de Pisón, F. Martínez Álvarez, A. Troncoso Lora, Á. Herrero, J. L. Calvo Rolle, H. Quintián, and E. Corchado, Eds. Cham: Springer Nature Switzerland, 2023, pp. 293–303.

- L. Xudong, et al., “Multimodal sentiment analysis based on deep learning: Recent progress,” in ICEB 2021 Proceedings, 2021.

- J. V. Tembhurne and T. Diwan, “Sentiment analysis in textual, visual and multimodal inputs using recurrent neural networks,” Multimedia Tools and Applications, vol. 80, no. 5, pp. 6871–6910, Feb 2021.

- R. Das and T. D. Singh, “Multimodal sentiment analysis: A survey of methods, trends, and challenges,” ACM Comput. Surv., vol. 55(13s), 2023.

- V. M. Ngo, et al., “Investigation, detection and prevention of online child sexual abuse materials: A comprehensive survey,” in Proceedings of the 16th IEEE-RIVF, 2022, pp. 707–713.

- D. Hazarika, et al., “MISA: Modality-invariant and -specific representations for multimodal sentiment analysis,” in Proceedings of the 28th ACM International Conference on Multimedia, 2020, pp. 1122–1131.

- D. Zhang, et al., “Multi-modal sentiment classification with independent and interactive knowledge via semi-supervised learning,” IEEE Access, vol. 8, pp. 22945–22954, 2020.

- L. Sun, et al., “Multimodal emotion recognition and sentiment analysis via attention enhanced recurrent model,” in Proceedings of the 2nd on Multimodal Sentiment Analysis Challenge, 2021, pp. 15–20.

- D. Liu, et al., “Speech expression multimodal emotion recognition based on deep belief network,” J. of Grid Computing, vol. 19(2), p. 22, 2021.

- K. Vasanth, et al., “Dynamic fusion of text, video and audio models for sentiment analysis,” Procedia Computer Science, vol. 215, pp. 211–219, 2022, the 4th Int. Conf. on IDCT&A.

- J. M. Garcia-Garcia, et al., “Building a three-level multimodal emotion recognition framework,” Multimedia Tools and Applications, vol. 82, no. 1, pp. 239–269, 2022.

- B. Palani, et al., “Cb-fake: A multimodal deep learning framework for automatic fake news detection using capsule neural network and bert,” Multimedia Tools and Applications, vol. 81, no. 4, pp. 5587–5620, 2022.

- M. Jiang and S. Ji, “Cross-modality gated attention fusion for multimodal sentiment analysis,” 2022.

- J. Wu, et al., “A optimized bert for multimodal sentiment analysis,” ACM Trans. Multimedia Comput. Commun. Appl., vol. 19, no. 2s, 2023.

- A. Perti, et al., “Cognitive hybrid deep learning-based multi-modal sentiment analysis for online product reviews,” ACM Trans. Asian Low-Resour. Lang. Inf. Process., 2023.

- T. Grósz, et al., “Discovering relevant sub-spaces of bert, wav2vec 2.0, electra and vit embeddings for humor and mimicked emotion recognition with integrated gradients,” in Proceedings of the 4th on Multimodal Sentiment Analysis Challenge and Workshop: Mimicked Emotions, Humour and Personalisation, 2023, pp. 27–34.

- T. Sun, et al., “General debiasing for multimodal sentiment analysis,” in Proceedings of the 31st ACM International Conference on Multimedia, 2023, pp. 5861–5869.

- L. Bryan-Smith et al., “Real-time social media sentiment analysis for rapid impact assessment of floods,” Computers & Geosciences, vol. 178, p. 105405, 2023.

- G. Meena et al., “Sentiment analysis on images using convolutional neural networks based inception-v3 transfer learning approach,” Int. J. of Information Management Data Insights, vol. 3, no. 1, p. 100174, 2023.

- M. I. Nadeem et al., “Ssm: Stylometric and semantic similarity oriented multimodal fake news detection,” Journal of King Saud University - Computer and Information Sciences, vol. 35, no. 5, p. 101559, 2023.

- T. Zhu, et al., “Multimodal sentiment analysis with image-text interaction network,” IEEE Transactions on Multimedia, vol. 25, pp. 3375–3385, 2023.

- F. Alzamzami and A. E. Saddik, “Transformer-based feature fusion approach for multimodal visual sentiment recognition using tweets in the wild,” IEEE Access, vol. 11, pp. 47070–47079, 2023.

- S. K. Uppada, et al., “An image and text-based multimodal model for detecting fake news in osn’s,” Journal of Intelligent Information Systems, vol. 61, no. 2, pp. 367–393, Oct 2023.

- Z. Fu, et al., “Lmr-cbt: learning modality-fused representations with cb-transformer for multimodal emotion recognition from unaligned multimodal sequences,” Frontiers of Comp. Science, vol. 18, no. 4, 2023.

- R. Jain, et al., “Real time sentiment analysis of natural language using multimedia input,” Multimedia Tools and Applications, vol. 82, no. 26, pp. 41021–41036, Nov 2023.

- E. Volkanovska, et al., “The insightsnet climate change corpus (iccc),” Datenbank-Spektrum, vol. 23, no. 3, pp. 177–188, Nov 2023.

- H. Wang, et al., “Exploring multimodal sentiment analysis via cbam attention and double-layer bilstm architecture,” 2023.

- A. Aggarwal, D. Varshney, and S. Patel, “Multimodal sentiment analysis: Perceived vs induced sentiments,” 2023.

- P. Shi, et al., “Deep modular co-attention shifting network for multimodal sentiment analysis,” ACM Trans. Multimedia Comput. Commun. Appl., vol. 20, no. 4, Jan 2024.

- Y. Zheng et al., “Djmf: A discriminative joint multi-task framework for multimodal sentiment analysis based on intra- and inter-task dynamics,” Expert Systems with Applications, vol. 242, p. 122728, 2024.

- E. F. Ayetiran and Ö. Özgöbek, “An inter-modal attention-based deep learning framework using unified modality for multimodal fake news, hate speech and offensive language detection,” Information Systems, vol. 123, p. 102378, 2024.

- Q. Lu et al., “Coordinated-joint translation fusion framework with sentiment-interactive graph convolutional networks for multimodal sentiment analysis,” Information Processing & Management, vol. 61, no. 1, p. 103538, 2024.

- Y. Wang et al., “Multimodal transformer with adaptive modality weighting for multimodal sentiment analysis,” Neurocomputing, vol. 572, p. 127181, 2024.

- S. Wang, et al., “Aspect-level multimodal sentiment analysis based on co-attention fusion,” Int. J. of Data Science and Analytics, 2024.

- P. Kumar et al., “Interpretable multimodal emotion recognition using hybrid fusion of speech and image data,” Multimedia Tools and Applications, vol. 83, no. 10, pp. 28373–28394, 2024.


| Publisher with search criteria | Papers after downloading | Papers after reviewing title and abstract | Papers after reviewing full text |
|---|---|---|---|
| Springer | 955 | 40 | 10 (Res: 9, Sur: 1) |
| Elsevier | 276 | 18 | 8 (Res: 8, Sur: 0) |
| IEEE | 215 | 14 | 3 (Res: 3, Sur: 0) |
| ACM | 226 | 19 | 8 (Res: 7, Sur: 1) |
| Total | 1,672 | 91 | 29 (Res: 27, Sur: 2) |
| Others with unlimited publisher and pub. year | | | 4 (Res: 3, Sur: 1) |
| Final Review | | | 33 (Res: 30, Sur: 3) |
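The totals in the screening table can be re-derived from the per-publisher rows. The sketch below transcribes the counts from the table and sums them; the names `SCREENING`, `OTHERS`, and `funnel_totals` are hypothetical, and reading "Res" and "Sur" as research and survey papers is an assumption, since the table footnotes are not available here.

```python
# Counts transcribed from the screening table:
# (after downloading, after title/abstract review, Res after full text, Sur after full text)
SCREENING = {
    "Springer": (955, 40, 9, 1),
    "Elsevier": (276, 18, 8, 0),
    "IEEE": (215, 14, 3, 0),
    "ACM": (226, 19, 7, 1),
}
# Papers added without publisher or publication-year limits: (Res, Sur)
OTHERS = (3, 1)


def funnel_totals(screening=SCREENING, others=OTHERS):
    """Sum each screening stage across publishers and add the extra papers."""
    rows = list(screening.values())
    res = sum(row[2] for row in rows)
    sur = sum(row[3] for row in rows)
    return {
        "downloaded": sum(row[0] for row in rows),
        "after_title_abstract": sum(row[1] for row in rows),
        "after_full_text": res + sur,
        "final_res": res + others[0],
        "final_sur": sur + others[1],
        "final_total": res + sur + sum(others),
    }


if __name__ == "__main__":
    for stage, count in funnel_totals().items():
        print(f"{stage}: {count}")
```

Under these transcribed values the sums agree with the "Total" and "Final Review" rows of the table.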

| No | Study | Year | Datasets (Name) | Multimodal features (MF) | Multimodal fusion method (MFM) | Models and Accuracy |
|---|---|---|---|---|---|---|
| 1. | Hazarika et al. [8] | 2020 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+V+A | Late | MISA (Acc: 83.4, 85.5, 70.61) |
| 2. | Dong Zhang et al. [9] | 2020 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+V+A | Late | Bi-modal (Acc: 70.9, F1: 70.9), Tri-modal (Acc: 71.2, F1: 71.2) |
| 3. | Sun et al. [10] | 2021 | 3rd+O (MuSe-CaR) | T+V+A | Late | Temporal model (Acc: 0.5549) |
| 4. | Dong Liu et al. [11] | 2021 | Self+NO | V+A | Early | (SIFT, CNN) for face + LIBSVM for fusion (Acc: 90.89%) |
| 5. | K. Vasanth et al. [12] | 2022 | Self+NO | T+V+A | Early | N/A |
| 6. | Garcia-Garcia et al. [13] | 2022 | Self+NO | T+V+A | Late | HERA framework |
| 7. | Palani et al. [14] | 2022 | 3rd+O (Politifact, Gossipcop) | T+V | Early | CB-Fake (Acc: 0.93) |
| 8. | Jiang et al. [15] | 2023 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+V+A | Late | CMGA (Acc: 53.03) |
| 9. | Wu et al. [16] | 2023 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+A | Late | HG-BERT model (Acc: 83.82) |
| 10. | Perti et al. [17] | 2023 | Self+NO | T+V | Late | AUC: 0.8420 |
| 11. | Grósz et al. [18] | 2023 | 3rd+NO | T+V+A | Late | AUC: 0.8420 |
| 12. | Sun et al. [19] | 2023 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+V+A | Late | GEAR (Acc: 84.39%) |
| 13. | Bryan-Smith et al. [20] | 2023 | Self+NO | T+V | Late | CLIP-based model (Precision: 0.624, Recall: 0.607) |
| 14. | Meena et al. [21] | 2023 | 3rd+O (CK+, FER2013, JAFFE) | V | N/A | CNN-based Inception-v3 (Acc: 99.57%, 73.09%, 86%) |
| 15. | Nadeem et al. [22] | 2023 | 3rd+NO | T+V | Early | Proposed SSM (Acc: 96.90) |
| 16. | Tong Zhu et al. [23] | 2023 | Self+NO | T+V | Late | ITIN (Acc: 0.7519) |
| 17. | Alzamzami et al. [24] | 2023 | 3rd+O (T4SA, FER-2013, DFMSD) | T+V | Late | Parallel (Acc: 0.82) |
| 18. | Uppada et al. [25] | 2023 | 3rd+O (Fakeddit) | T+V | Late | Fine-tuned BERT and fine-tuned Xception (Acc: 91.94%) |
| 19. | Fu et al. [26] | 2023 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+V+A | Late | LMR-CBT |
| 20. | Jain et al. [27] | 2023 | Self+NO | T+V+A | Late | MTCNN model, NLP model, SVM model, Google API |
| 21. | Volkanovska et al. [28] | 2023 | 3rd+O | T+V | N/A | NLP tools used to enrich corpus (meta)data |
| 22. | Huiru Wang et al. [29] | 2023 | 3rd+O (MVSA-single, HFM) | T+V | Early | BERT + BiLSTM, CNN and CBAM attention |
| 23. | Aggarwal et al. [30] | 2023 | 3rd+O (ReactionGIF) | T+V | Late | BERT, OCR, VGG19 |
| 24. | Shi et al. [31] | 2024 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+V | Late | CoASN model based on CMMC and AMAG |
| 25. | Zheng et al. [32] | 2024 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+V+A | Late | DJMF framework |
| 26. | Ayetiran et al. [33] | 2024 | Self+NO | T+V+A | Early | Acc: 0.94 |
| 27. | Lu et al. [34] | 2024 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+V+A | Late | Sentiment-interactive graph (Acc: 86.5%, 86.1%) |
| 28. | Yifeng Wang et al. [35] | 2024 | 3rd+O (CMU-MOSI, CMU-MOSEI) | T+V+A | Late | MTAMW (multimodal adaptive weight matrix) |
| 29. | Wang et al. [36] | 2024 | 3rd+O (Twitter-2015, Twitter-2017) | T+V | Late | GLFFCA + BERT (Acc: 74.07%, 68.14%) |
| 30. | Kumar et al. [37] | 2024 | Self+NO | V+A | Late | ParallelNet (Acc: 89.68%) |

| Terms | Classification and percentage (papers) | | |
|---|---|---|---|
| Datasets | 3rd+NO: 7% (2p) | Self+NO: 30% (9p) | 3rd+O: 63% (19p) |
| Fusion Methods | Early: 20% (6p) | Late: 73% (22p) | N/A: 7% (2p) |
| Models | Improve: 17% (5p) | Experiment: 17% (5p) | New model: 66% (20p) |
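The dataset and fusion-method percentages in this summary can be recomputed from the per-study table of 30 papers. The sketch below is an illustration only: the `STUDIES` list is transcribed by hand from that table (dataset category and fusion method for studies 1 to 30, in order), and the names `STUDIES` and `share` are hypothetical. The "Models" row is not recomputed because the per-study table does not label each paper as Improve, Experiment, or New model.

```python
from collections import Counter

# (dataset category, fusion method) for studies 1-30, in table order.
STUDIES = [
    ("3rd+O", "Late"), ("3rd+O", "Late"), ("3rd+O", "Late"),       # 1-3
    ("Self+NO", "Early"), ("Self+NO", "Early"), ("Self+NO", "Late"),  # 4-6
    ("3rd+O", "Early"), ("3rd+O", "Late"), ("3rd+O", "Late"),      # 7-9
    ("Self+NO", "Late"), ("3rd+NO", "Late"), ("3rd+O", "Late"),    # 10-12
    ("Self+NO", "Late"), ("3rd+O", "N/A"), ("3rd+NO", "Early"),    # 13-15
    ("Self+NO", "Late"), ("3rd+O", "Late"), ("3rd+O", "Late"),     # 16-18
    ("3rd+O", "Late"), ("Self+NO", "Late"), ("3rd+O", "N/A"),      # 19-21
    ("3rd+O", "Early"), ("3rd+O", "Late"), ("3rd+O", "Late"),      # 22-24
    ("3rd+O", "Late"), ("Self+NO", "Early"), ("3rd+O", "Late"),    # 25-27
    ("3rd+O", "Late"), ("3rd+O", "Late"), ("Self+NO", "Late"),     # 28-30
]


def share(labels):
    """Map each label to (paper count, percentage rounded to a whole number)."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: (n, round(100 * n / total)) for label, n in counts.items()}


dataset_share = share(dataset for dataset, _ in STUDIES)
fusion_share = share(fusion for _, fusion in STUDIES)

if __name__ == "__main__":
    print(dataset_share)
    print(fusion_share)
```

With this transcription, the counts and rounded percentages match the "Datasets" and "Fusion Methods" rows above.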

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).