1. Introduction

Autonomous driving systems have evolved significantly, leveraging deep learning architectures to achieve precise perception capabilities. Beyond perception, these systems face challenges that traditional supervised learning methods cannot adequately address [1]. These include optimizing driving speed in urban environments as the actions of other agents change, and predicting optimal trajectories while accounting for uncertain quantities such as time to collision and lateral error. Such tasks demand robust solutions that can learn from and adapt to diverse environmental configurations, whose number grows combinatorially. Reinforcement Learning (RL) emerges as a pivotal framework, enabling agents to learn to act optimally in sequential decision-making processes [2,3]. This review explores RL applications in autonomous driving, encompassing driving policies, predictive perception, path planning, and controller design. By examining real-world deployments, this study extends beyond conference summaries to critically analyze the computational challenges and implementation risks associated with techniques such as imitation learning and deep Q-learning [4].

The contributions of this review are twofold. First, it provides a comprehensive overview of RL tailored for the automotive community, bridging gaps in understanding and application. Second, it conducts an extensive literature review, highlighting RL’s efficacy [5,6] across various autonomous driving tasks and discussing pivotal challenges and opportunities in real-world deployments. The sections that follow describe the components of autonomous driving systems, introduce foundational RL concepts, explore advanced RL extensions, and detail specific applications in autonomous driving scenarios. Challenges related to deploying RL in real-world settings are also examined, providing insights into the future of autonomous driving technologies.

2. Components of the Autonomous Driving System

Recent advances in autonomous driving systems, driven by deep learning architectures, have revolutionized perception, but dynamic urban environments still pose problems that traditional supervised learning methods handle poorly. Addressing these complexities calls for reinforcement learning (RL), in which agents optimize their actions under uncertainty in evolving environments [7]. RL frameworks, encompassing policy optimization and Q-learning algorithms, offer robust solutions for real-time decision-making in driving policy, predictive perception, and path planning.

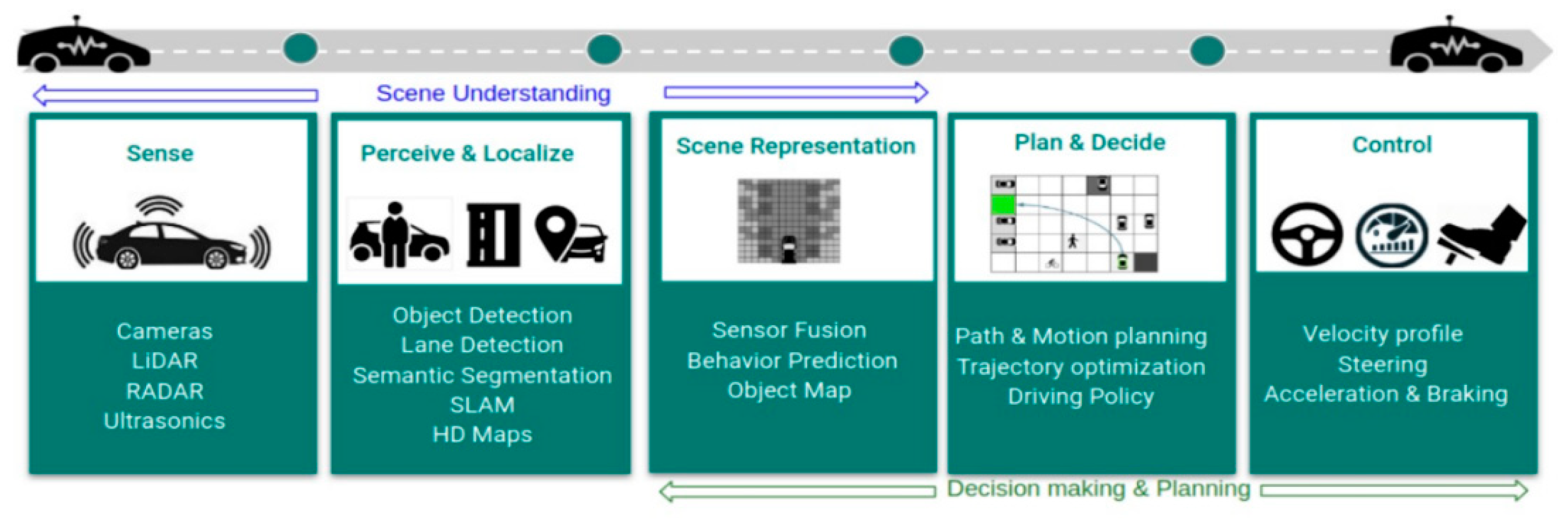

Figure 1. Standard components in a modern autonomous driving system pipeline, listing the various tasks. The critical problems addressed by these modules are Scene Understanding, Decision, and Planning.


Despite significant computational challenges and deployment risks, the application of reinforcement learning (RL) [9] in autonomous driving systems continues to expand. Recent advancements have shown promising results, with RL frameworks enhancing driving policies, predictive perception, and path planning, thereby contributing to safer and more efficient urban mobility solutions. For instance, studies indicate that RL-driven autonomous vehicles can achieve up to a 20% improvement in navigation efficiency compared to traditional supervised learning methods [10–13]. These advancements underscore RL’s potential to optimize decision-making in dynamic and uncertain environments, paving the way for enhanced road safety and operational efficiency.

Looking ahead, future research in RL aims to bolster algorithmic robustness and tackle the complexities of multi-agent interactions in autonomous driving scenarios. Ongoing efforts focus on refining RL models to handle diverse environmental conditions and dynamic traffic scenarios effectively. Research initiatives target a 30% reduction in computational overhead through optimized RL algorithms [14,15], in order to streamline real-time decision-making in urban mobility applications. By addressing these challenges, RL-driven autonomy is poised to transform transportation systems, ensuring both safety and scalability as autonomous vehicles are integrated into urban landscapes.

2.1. Scene Understanding

The Scene Understanding module is indispensable in autonomous driving systems, bridging the gap between raw sensor data and actionable insights for decision-making. This crucial component processes mid-level perception representations derived from LIDAR [16], cameras, radar, and ultrasound sensors. These sensors collectively provide a comprehensive view of the vehicle’s surroundings, detecting and localizing objects, pedestrians, and road features. For instance, advanced algorithms integrate data from these heterogeneous sources, effectively mitigating the sensor noise and uncertainties inherent in each modality. This information fusion ensures a robust and nuanced understanding of the driving environment, which is crucial for safe and efficient autonomous navigation.

Data integration across multiple sensors enhances reliability: LIDAR systems offer high-precision distance measurements, while cameras provide rich visual data for object recognition. Radar supplements these with robust detection capabilities in adverse weather conditions, and ultrasound sensors excel in close-range obstacle detection. By combining these inputs through sophisticated fusion algorithms, autonomous vehicles achieve a comprehensive, sensor-agnostic perception of their surroundings [17,18]. This approach improves object detection accuracy and facilitates adaptive decision-making in complex traffic scenarios, ensuring enhanced safety and operational efficiency on the road.
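As a rough illustration of why combining modalities can reduce uncertainty, the sketch below fuses two independent range estimates by inverse-variance weighting. This is only one simple fusion rule, chosen here for brevity; it is not claimed to be the method used by the systems surveyed above. The function name `fuse_measurements` and all sensor readings and variances are hypothetical.

```python
def fuse_measurements(estimates, variances):
    """Inverse-variance weighted fusion of independent estimates of one quantity.

    Assumes the measurement errors are independent and unbiased (an
    illustrative assumption, not something stated in the text).
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need one variance per estimate")
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    fused = sum(w * x for w, x in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight  # never larger than the smallest input variance
    return fused, fused_variance


# Hypothetical readings: distance to the lead vehicle in metres.
lidar_range, lidar_var = 20.0, 0.01   # precise sensor
radar_range, radar_var = 20.6, 0.09   # noisier sensor
fused_range, fused_var = fuse_measurements(
    [lidar_range, radar_range], [lidar_var, radar_var]
)
print(fused_range, fused_var)
```

The fused estimate lies closer to the more precise sensor, and its variance is lower than that of either input, which is the intuition behind the reliability gain described above.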

2.2. Localization and Mapping

Localization and Mapping are fundamental pillars supporting the operational reliability of autonomous driving systems. Accurate mapping allows vehicles to precisely navigate predefined routes, leveraging detailed high-definition (HD) maps [19] that encode static environmental features and road layouts. Google’s early autonomous demonstrations underscored the importance of pre-mapped areas, where vehicles relied on these maps for precise localization and route planning. Modern approaches integrate semantic object detection into mapping processes, enabling real-time identification and classification of dynamic elements like vehicles, pedestrians, and traffic signs.

High-definition maps serve as foundational datasets, aiding localization systems by providing a spatial context that enhances real-time decision-making [20,21]. These maps are continually updated to reflect changes in road infrastructure and environmental conditions, ensuring ongoing accuracy and reliability in autonomous navigation. Integrating localization and mapping technologies reduces dependency on real-time sensor data alone, offering a proactive approach to navigation that improves overall system robustness and safety [22]. By combining these advancements, autonomous vehicles can navigate complex urban environments confidently, adhering to traffic regulations and adapting effectively to dynamic surroundings.

2.3. Safe Reinforcement Learning

Reinforcement learning (RL) faces significant challenges in ensuring functional safety, particularly in contexts like autonomous driving, where safety is paramount. The standard RL objective, $\mathbb{E}[R(\bar{s})]$, which maximizes the expected reward over trajectories $\bar{s}$, introduces inherent risks when rare, high-penalty events such as accidents are involved. For instance, if $R(\bar{s}) = -r$ for trajectories leading to accidents and $R(\bar{s}) \in [-1, 1]$ otherwise, determining an appropriate value for $r$ becomes crucial. Setting $r$ too low may encourage the learning algorithm to prioritize aggressive maneuvers that maximize short-term rewards but increase the likelihood of accidents [23,24]. Conversely, a high $r$ mitigates this risk but amplifies the variance of $R(\bar{s})$, making gradient estimation in policy gradient methods challenging, since the variance grows in proportion to $p r^2$, where $p$ is the probability of an accident event.
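The scaling of the variance with $p r^2$ can be checked numerically. The sketch below is a simplified illustration, not a reproduction of any cited analysis: it assumes the non-accident reward is a single fixed value (`normal_reward`, a hypothetical parameter) rather than an arbitrary value in $[-1, 1]$, so that the variance has the closed form $p(1-p)r^2$ when that value is zero.

```python
def reward_variance(p_accident, r, normal_reward=0.0):
    """Exact variance of a two-outcome reward.

    The reward is -r with probability p_accident and normal_reward otherwise.
    Fixing the non-accident reward to one value is a simplifying assumption.
    """
    mean = p_accident * (-r) + (1.0 - p_accident) * normal_reward
    second_moment = p_accident * r**2 + (1.0 - p_accident) * normal_reward**2
    return second_moment - mean**2


p = 1e-4  # hypothetical accident probability
for r in (10.0, 100.0, 1000.0):
    var = reward_variance(p, r)
    # The ratio var / (p * r^2) stays close to 1 - p, i.e. close to 1.
    print(r, var, var / (p * r**2))
```

Under these assumptions, raising the penalty by a factor of ten raises the variance by a factor of one hundred, even though accidents remain equally rare, which is why a large $r$ makes sampled gradient estimates noisy.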

To address these challenges, a novel approach involves decomposing the policy function into learnable and non-learnable components. Here, $\pi_\theta = \pi^{(T)} \circ \pi^{(D)}_\theta$, where $\pi^{(D)}_\theta$ maps the state space to Desires, which capture strategic decisions such as lane positioning and overtaking. This learnable policy, derived from experience, aims to maximize expected rewards while ensuring driving comfort and safety [25]. The non-learnable part, $\pi^{(T)}$, transforms Desires into trajectories under strict functional safety constraints. By separating the policy into these components, the approach rigorously maintains functional safety while allowing for adaptable, reward-maximizing behavior in most driving scenarios [26]. This architectural design circumvents the pitfalls of traditional RL by embedding hard constraints outside the learning framework, thus ensuring safer and more reliable autonomous driving systems.
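The composition of a learnable Desires policy with a fixed, constraint-enforcing planner can be sketched as follows. This is a toy illustration under stated assumptions, not the design of the cited works: the `Desires` fields, the linear form of `desires_policy`, the headway rule in `trajectory_planner`, and every numeric value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Desires:
    target_speed: float    # m/s, requested by the learnable policy
    lateral_offset: float  # m, relative to the lane centre


def desires_policy(state, theta):
    """Learnable part: maps a state (gap, ego_speed) to Desires.

    A toy linear map stands in for a trained network (assumption).
    """
    gap, ego_speed = state
    return Desires(
        target_speed=theta[0] * gap + theta[1] * ego_speed,
        lateral_offset=theta[2],
    )


def trajectory_planner(state, desires, min_gap=5.0, headway=2.0, max_offset=1.0):
    """Non-learnable part: enforces hard limits whatever theta is."""
    gap, _ = state
    # Cap speed so that min_gap plus a headway-second margin is preserved.
    safe_speed = max(0.0, (gap - min_gap) / headway)
    speed = min(max(desires.target_speed, 0.0), safe_speed)
    offset = min(max(desires.lateral_offset, -max_offset), max_offset)
    return speed, offset


def policy(state, theta):
    """Composition of the planner with the learnable Desires policy."""
    return trajectory_planner(state, desires_policy(state, theta))


# An aggressive theta requests 40 m/s and a 3 m offset; the planner clamps both.
print(policy((15.0, 10.0), (2.0, 1.0, 3.0)))
```

Because the clamping lives outside the learned parameters, no value of `theta` can produce an output that violates the planner's limits, which mirrors the separation of concerns described above.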

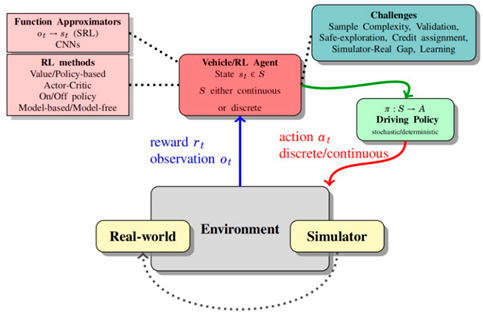

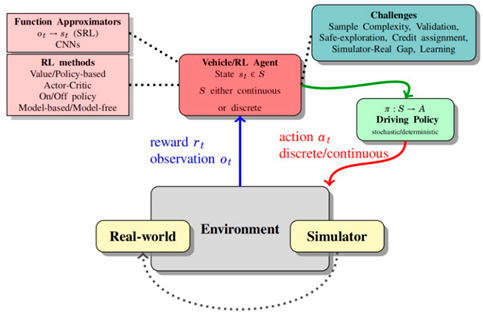

3. Reinforcement Learning

3.1. Machine Learning (ML) Encompasses Algorithms

Machine learning (ML) [27] encompasses algorithms categorized into supervised, unsupervised, and reinforcement learning (RL) [28]. Supervised learning involves training models with labeled data for tasks like classification or regression, while unsupervised learning handles unlabeled data through techniques like clustering or density estimation. In RL, an autonomous agent learns by interacting with its environment, aiming to maximize cumulative rewards based on actions chosen in response to environmental states. Unlike supervised learning, where the correct outputs are prescribed by labels [29], RL agents use a reward function to evaluate their actions, balancing exploration (trying new actions for potentially higher rewards) and exploitation (leveraging known actions for immediate reward gains).

Strategies like ε-greedy and softmax are employed to manage this trade-off, with exploration favored early in training to discover optimal actions, gradually shifting towards exploitation as the agent learns more about the environment [30]. Ongoing research focuses on refining exploration-exploitation strategies to enhance RL agent performance in dynamic and complex environments (Russell & Norvig, 2016; Sutton & Barto, 2018).
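A minimal sketch of the two action-selection strategies named above, together with a linear schedule that shifts from exploration to exploitation. The function names, the temperature parameter, and the schedule constants are illustrative assumptions rather than values taken from the cited literature.

```python
import math
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


def softmax_probabilities(q_values, temperature=1.0):
    """Boltzmann action probabilities; higher temperature means more exploration."""
    highest = max(q_values)  # subtract the max for numerical stability
    exps = [math.exp((q - highest) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def decayed_epsilon(step, start=1.0, end=0.05, decay_steps=10_000):
    """Linear decay from start to end over decay_steps, then constant."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)


q = [0.1, 0.9, 0.3]  # hypothetical action-value estimates
print(epsilon_greedy(q, epsilon=decayed_epsilon(step=5_000)))
print(softmax_probabilities(q, temperature=0.5))
```

With `epsilon` near 1 the agent acts almost uniformly at random; as the schedule decays, the greedy action dominates. The softmax variant instead explores in proportion to how promising each action currently looks.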

A) Value-based methods in reinforcement learning focus on estimating the utility of state-action pairs, with Q-learning being a prominent algorithm. Q-learning operates without requiring a model of the environment, updating Q-values [31,32,33] iteratively based on observed rewards and transitions between states. The algorithm is guaranteed to converge to the optimal state-action values provided that all state-action pairs are sufficiently explored. Deep Q-Networks (DQN) [34,35] extend Q-learning by employing deep neural networks to approximate Q-functions, enabling effective learning in high-dimensional state spaces such as Atari games [36]. DQN incorporates techniques like experience replay to improve sample efficiency and stability, and uses two networks, an online network and a periodically updated target network, so that parameter updates are decoupled from the targets they are trained against.
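The iterative update at the heart of Q-learning can be sketched in tabular form as below. The dictionary-of-lists table layout, the integer state and action indices, and the values of the learning rate `alpha` and discount `gamma` are assumptions made for illustration.

```python
def q_learning_update(q_table, state, action, reward, next_state, done,
                      alpha=0.1, gamma=0.99):
    """One model-free Q-learning step on a table q_table[state][action]."""
    # Bootstrap from the best next action unless the episode has ended.
    best_next = 0.0 if done else max(q_table[next_state])
    td_target = reward + gamma * best_next
    td_error = td_target - q_table[state][action]
    q_table[state][action] += alpha * td_error
    return q_table[state][action]


# Hypothetical two-state, two-action table.
q_table = {0: [0.0, 0.0], 1: [1.0, 2.0]}
updated = q_learning_update(q_table, state=0, action=1, reward=1.0,
                            next_state=1, done=False, alpha=0.5, gamma=0.9)
print(updated)  # target is 1.0 + 0.9 * 2.0 = 2.8, so the entry moves halfway to it
```

In a DQN the table is replaced by a neural network, and `best_next` would be computed from the separate target network rather than from the parameters being updated.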

B) Unlike value-based methods, policy-based methods directly optimize the policy rather than Q-values. These methods parameterize policies using neural networks, aiming to maximize expected rewards through gradient ascent on policy parameters. Policy gradient algorithms, like REINFORCE [37], update policy parameters based on the gradient of the expected return, enabling learning in environments with continuous action spaces. Deterministic Policy Gradient (DPG) algorithms address continuous action spaces by optimizing a deterministic policy, whose gradient does not require integrating over the action space as it does for stochastic policies. Trust Region Policy Optimization (TRPO) [38,39] and Proximal Policy Optimization (PPO) are examples of policy-based methods that improve stability and sample efficiency by constraining policy updates to prevent large deviations from prior policies, thus ensuring incremental improvements in policy performance while mitigating the risk of poor updates.
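One common way PPO constrains updates is the clipped surrogate objective, sketched below in plain Python. The sketch assumes that the probability ratios between the new and old policies and the advantage estimates have already been computed elsewhere; the function name and the example numbers are hypothetical, and `clip_eps=0.2` is a commonly used default rather than a value from the text.

```python
def ppo_clipped_objective(ratios, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective (to be maximized).

    ratios[i] is pi_new(a_i | s_i) / pi_old(a_i | s_i) and advantages[i]
    is the estimated advantage of that action; both are assumed given.
    """
    terms = []
    for ratio, advantage in zip(ratios, advantages):
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum removes any gain from pushing the ratio
        # outside the interval [1 - clip_eps, 1 + clip_eps].
        terms.append(min(ratio * advantage, clipped_ratio * advantage))
    return sum(terms) / len(terms)


# Hypothetical batch: one action made much more likely, one much less likely.
objective = ppo_clipped_objective(ratios=[1.5, 0.5], advantages=[1.0, -1.0])
print(objective)
```

Here the first sample contributes 1.2 rather than 1.5, and the second contributes -0.8 rather than -0.5, so the objective offers no reward for moving the policy far from the one that collected the data.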

4. Conclusions

Based on the comprehensive exploration of reinforcement learning (RL) applications in autonomous driving, it is evident that RL offers robust solutions to complex challenges beyond the capabilities of traditional supervised learning methods [40]. Autonomous driving systems benefit significantly from RL frameworks, particularly in driving policies, predictive perception, path planning, and controller design. These applications leverage RL’s ability to optimize decision-making in dynamic and uncertain environments, enhancing safety and efficiency on the road.

Moreover, the review underscores the critical role of scene understanding, localization, and mapping in autonomous driving systems. These components, powered by deep learning and sensor fusion technologies, provide the necessary perceptual capabilities for reliable navigation in diverse urban environments. Integrating multiple sensor modalities ensures accurate detection and localization of obstacles, pedestrians, and road features, thereby enabling adaptive and context-aware driving decisions.

Furthermore, the discussion on safe reinforcement learning highlights innovative approaches to mitigate risks associated with RL in autonomous driving. By separating policy functions into learnable and non-learnable components, autonomous systems can balance maximizing expected rewards and adhering to stringent safety constraints. This architectural design ensures that RL-driven autonomy optimizes driving behaviors and prioritizes safety, addressing deployment challenges and paving the way for future advancements in autonomous vehicle technology.

In conclusion, synthesizing RL advancements in autonomous driving systems emphasizes their transformative impact on the automotive industry. Future research directions should focus on enhancing algorithmic robustness, managing multi-agent interactions, and integrating ethical considerations, thereby advancing RL applications’ safety, reliability, and scalability in real-world autonomous driving deployments.

Acknowledgments

Acknowledgments are extended to Guo, Lingfeng et al. (2024) for their paper titled “Bank Credit Risk Early Warning Model Based on Machine Learning Decision Trees” [2]. Their research exploring the application of machine learning decision trees in banking credit risk management provided profound inspiration and valuable guidance and insights into advancements in banking business management. Their work has laid a solid foundation and methodological framework for our theoretical constructs and empirical analyses in this study.

Acknowledgments are extended to Xin, Q., Xu, Z., Guo, L., Zhao, F., & Wu, B. (2024) for their paper titled “IoT Traffic Classification and Anomaly Detection Method based on Deep Autoencoders” [3]. Their research into deep autoencoder-based methods for IoT traffic classification and anomaly detection has provided profound insights and technical support for this study. Their work not only laid important theoretical groundwork for this research but also contributed valuable empirical analysis and methodological innovations to the field of IoT security and traffic management.

References

- Zhan, X.; Shi, C.; Li, L.; Xu, K.; Zheng, H. Aspect category sentiment analysis based on multiple attention mechanisms and pre-trained models. Appl. Comput. Eng. 2024, 71, 21–26. [CrossRef]

- Guo, L.; Li, Z.; Qian, K.; Ding, W.; Chen, Z. Bank Credit Risk Early Warning Model Based on Machine Learning Decision Trees. Journal of Economic Theory and Business Management 2024, 1(3), 24–30. [CrossRef]

- Xin, Q.; Xu, Z.; Guo, L.; Zhao, F.; Wu, B. IoT traffic classification and anomaly detection method based on deep autoencoders. Appl. Comput. Eng. 2024, 69, 64–70. [CrossRef]

- Wu, B.; Gong, Y.; Zheng, H.; Zhang, Y.; Huang, J.; Xu, J. Enterprise cloud resource optimization and management based on cloud operations. Appl. Comput. Eng. 2024, 67, 8–14. [CrossRef]

- Xu, Z.; Guo, L.; Zhou, S.; Song, R.; Niu, K. Enterprise Supply Chain Risk Management and Decision Support Driven by Large Language Models. Applied Science and Engineering Journal for Advanced Research 2024, 3(4), 1–7. [CrossRef]

- Tian, J.; Li, H.; Qi, Y.; Wang, X.; Feng, Y. Intelligent medical detection and diagnosis assisted by deep learning. Appl. Comput. Eng. 2024, 64, 121–126. [CrossRef]

- Zhou, Y.; Zhan, T.; Wu, Y.; Song, B.; Shi, C. RNA secondary structure prediction using transformer-based deep learning models. 2024. arXiv preprint arXiv:2405.06655. [CrossRef]

- Liu, B.; Cai, G.; Ling, Z.; Qian, J.; Zhang, Q. Precise positioning and prediction system for autonomous driving based on generative artificial intelligence. Appl. Comput. Eng. 2024, 64, 42–49. [CrossRef]

- Cui, Z.; Lin, L.; Zong, Y.; Chen, Y.; Wang, S. Precision gene editing using deep learning: A case study of the CRISPR-Cas9 editor. Appl. Comput. Eng. 2024, 64, 134–141. [CrossRef]

- Xu, J.; Wu, B.; Huang, J.; Gong, Y.; Zhang, Y.; Liu, B. Practical applications of advanced cloud services and generative AI systems in medical image analysis. Appl. Comput. Eng. 2024, 64, 82–87. [CrossRef]

- Zhang, Y.; Liu, B.; Gong, Y.; Huang, J.; Xu, J.; Wan, W. Application of machine learning optimization in cloud computing resource scheduling and management. Appl. Comput. Eng. 2024, 64, 9–14. [CrossRef]

- Huang, J.; Zhang, Y.; Xu, J.; Wu, B.; Liu, B.; Gong, Y. Implementation of seamless assistance with Google Assistant leveraging cloud computing. Appl. Comput. Eng. 2024, 64, 169–175. [CrossRef]

- Yang, T.; Xin, Q.; Zhan, X.; Zhuang, S.; Li, H. Enhancing Financial Services Through Big Data And Ai-Driven Customer Insights And Risk Analysis. J. Knowl. Learn. Sci. Technol. Issn: 2959-6386 (online) 2024, 3, 53–62. [CrossRef]

- Wu, B.; Xu, J.; Zhang, Y.; Liu, B.; Gong, Y.; Huang, J. Integration of computer networks and artificial neural networks for an AI-based network operator. Appl. Comput. Eng. 2024, 64, 115–120. [CrossRef]

- Haowei, M.; Ebrahimi, S.; Mansouri, S.; Abdullaev, S.S.; Alsaab, H.O.; Hassan, Z.F. CRISPR/Cas-based nanobiosensors: A reinforced approach for specific and sensitive recognition of mycotoxins. Food Biosci. 2023, 56. [CrossRef]

- Liang, P.; Song, B.; Zhan, X.; Chen, Z.; Yuan, J. Automating the training and deployment of models in MLOps by integrating systems with machine learning. Appl. Comput. Eng. 2024, 67, 1–7. [CrossRef]

- Li, A.; Yang, T.; Zhan, X.; Shi, Y.; Li, H. Utilizing Data Science and AI for Customer Churn Prediction in Marketing. J. Theory Pr. Eng. Sci. 2024, 4, 72–79. [CrossRef]

- Zhan, X.; Ling, Z.; Xu, Z.; Guo, L.; Zhuang, S. Driving Efficiency and Risk Management in Finance through AI and RPA. Unique Endeavor in Business & Social Sciences 2024, 3(1), 189–197.

- Shi, Y.; Yuan, J.; Yang, P.; Wang, Y.; Chen, Z. Implementing intelligent predictive models for patient disease risk in cloud data warehousing. Appl. Comput. Eng. 2024, 67, 34–40. [CrossRef]

- Allman, R.; Mu, Y.; Dite, G.S.; Spaeth, E.; Hopper, J.L.; Rosner, B.A. Validation of a breast cancer risk prediction model based on the key risk factors: family history, mammographic density and polygenic risk. Breast Cancer Res. Treat. 2023, 198, 335–347. [CrossRef]

- Zhan, T.; Shi, C.; Shi, Y.; Li, H.; Lin, Y. Optimization techniques for sentiment analysis based on LLM (GPT-3). 2024, arXiv preprint arXiv:2405.09770. [CrossRef]

- Jiang, W.; Qian, K.; Fan, C.; Ding, W.; Li, Z. Applications of generative AI-based financial robot advisors as investment consultants. Appl. Comput. Eng. 2024, 67, 28–33. [CrossRef]

- Sha, X. Time Series Stock Price Forecasting Based on Genetic Algorithm (GA)-Long Short-Term Memory Network (LSTM) Optimization. 2024, arXiv preprint arXiv:2405.03151. [CrossRef]

- Fan, C.; Li, Z.; Ding, W.; Zhou, H.; Qian, K. Integrating artificial intelligence with SLAM technology for robotic navigation and localization in unknown environments. Appl. Comput. Eng. 2024, 67, 8–13. [CrossRef]

- Bai, X.; Zhuang, S.; Xie, H.; Guo, L. Leveraging Generative Artificial Intelligence for Financial Market Trading Data Management and Prediction. 2024. [CrossRef]

- Zhan, X.; Ling, Z.; Xu, Z.; Guo, L.; Zhuang, S. Driving Efficiency and Risk Management in Finance through AI and RPA. Unique Endeavor in Business & Social Sciences 2024, 3(1), 189–197.

- Sarkis, R.A.; Goksen, Y.; Mu, Y.; Rosner, B.; Lee, J.W. Cognitive and fatigue side effects of anti-epileptic drugs: an analysis of phase III add-on trials. J. Neurol. 2018, 265, 2137–2142. [CrossRef]

- Wang, B.; He, Y.; Shui, Z.; Xin, Q.; Lei, H. Predictive optimization of DDoS attack mitigation in distributed systems using machine learning. Appl. Comput. Eng. 2024, 64, 95–100. [CrossRef]

- Srivastava, S.; Huang, C.; Fan, W.; Yao, Z. Instance Needs More Care: Rewriting Prompts for Instances Yields Better Zero-Shot Performance. 2023, arXiv preprint arXiv:2310.02107. [CrossRef]

- Sun, Y.; Cui, Y.; Hu, J.; Jia, W. Relation classification using coarse and fine-grained networks with SDP supervised key words selection. In Knowledge Science, Engineering and Management: 11th International Conference, KSEM 2018, Changchun, China, August 17–19, 2018, Proceedings, Part I 11; Springer International Publishing, 2018; pp. 514–522. [CrossRef]

- Dhand, A.; Lang, C.E.; Luke, D.A.; Kim, A.; Li, K.; McCafferty, L.; Mu, Y.; Rosner, B.; Feske, S.K.; Lee, J.-M. Social Network Mapping and Functional Recovery Within 6 Months of Ischemic Stroke. Neurorehabilit. Neural Repair 2019, 33, 922–932. [CrossRef]

- Xin, Q.; Song, R.; Wang, Z.; Xu, Z.; Zhao, F. Enhancing Bank Credit Risk Management Using the C5.0 Decision Tree Algorithm. Journal Environmental Sciences And Technology 2024, 3(1), 960–967.

- Song, R.; Wang, Z.; Guo, L.; Zhao, F.; Xu, Z. Deep Belief Networks (DBN) for Financial Time Series Analysis and Market Trends Prediction. 2024.

- Lu, W.; Ni, C.; Wang, H.; Wu, J.; Zhang, C. Machine Learning-Based Automatic Fault Diagnosis Method for Operating Systems. 2024. [CrossRef]

- Zhong, Y.; Cheng, Q.; Qin, L.; Xu, J.; Wang, H. Hybrid Deep Learning for AI-Based Financial Time Series Prediction. Journal of Economic Theory and Business Management 2024, 1(2), 27–35. [CrossRef]

- Wang, J.; Xin, Q.; Liu, Y.; Wang, J.; Yang, T. Predicting Enterprise Marketing Decision Making with Intelligent Data-Driven Approaches. Journal of Industrial Engineering and Applied Science 2024, 2(3), 12–19. [CrossRef]

- Zheng, H.; Wu, J.; Song, R.; Guo, L.; Xu, Z. Predicting Financial Enterprise Stocks and Economic Data Trends Using Machine Learning Time Series Analysis. 2024. [CrossRef]

- Wang, B.; Lei, H.; Shui, Z.; Chen, Z.; Yang, P. Current State of Autonomous Driving Applications Based on Distributed Perception and Decision-Making. World J. Innov. Mod. Technol. 2024, 7, 15–22. [CrossRef]

- Zhang, Y.; Xie, H.; Zhuang, S.; Zhan, X. Image Processing and Optimization Using Deep Learning-Based Generative Adversarial Networks (GANs). J. Artif. Intell. Gen. Sci. (JAIGS) ISSN:3006-4023 2024, 5, 50–62. [CrossRef]

- Guo, L.; Song, R.; Wu, J.; Xu, Z.; Zhao, F. Integrating a Machine Learning-Driven Fraud Detection System Based on a Risk Management Framework. 2024. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).