Submitted: 10 July 2024

Posted: 11 July 2024


Abstract

Keywords:

1. Introduction

2. Related Work

2.1. Traditional Time Series Analysis Method

2.1.1. Advantages and Limitations

2.1.2. Visual Aids

2.2. Application of Machine Learning to Financial Forecasting

- (1) LSTM (Long Short-Term Memory)

LSTM Architecture

- (2) ARIMA model
  - AR (autoregressive): This component predicts the value at the current time step as a linear combination of observations from past time steps. The order of the AR component, p, determines how many past time steps (lags) are used.
  - I (integrated): This component differences the series, i.e., replaces each value by its change from the previous time step. Differencing removes trends and helps make the series stationary, so that the AR and MA components fit it better. The order d is the number of times the series is differenced.
  - MA (moving average): This component uses a linear combination of the error terms from past time steps to estimate the value at the current time step. The order of the MA component, q, determines how many past error terms are included.

- (3) Convolutional neural network (CNN)
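To make the three components of the ARIMA model listed under (2) concrete, the following Python sketch computes a single one-step forecast of an ARIMA(p, 1, q) model: the series is differenced once (I), the next change is predicted from the p most recent changes (AR) and the q most recent errors (MA), and the predicted change is added back to the last observed value. The function names and all coefficient values are hypothetical; in practice the coefficients are estimated from data (for example by maximum likelihood), which this sketch does not attempt.

```python
from typing import List, Sequence


def difference(series: Sequence[float]) -> List[float]:
    """I component with d = 1: the change from each time step to the next."""
    return [series[t] - series[t - 1] for t in range(1, len(series))]


def arima_one_step_forecast(
    series: Sequence[float],
    phi: Sequence[float],
    theta: Sequence[float],
    residuals: Sequence[float],
    c: float = 0.0,
) -> float:
    """One-step forecast of an ARIMA(p, 1, q) model with given (hypothetical) coefficients.

    phi       -- AR coefficients phi_1..phi_p
    theta     -- MA coefficients theta_1..theta_q (added with a plus sign here;
                 some texts use the opposite sign convention)
    residuals -- past one-step forecast errors, most recent last
    """
    diffs = difference(series)
    if len(diffs) < len(phi) or len(residuals) < len(theta):
        raise ValueError("not enough history for the requested orders")
    # AR part: weighted sum of the p most recent differenced values.
    ar = sum(phi[i] * diffs[-1 - i] for i in range(len(phi)))
    # MA part: weighted sum of the q most recent error terms.
    ma = sum(theta[j] * residuals[-1 - j] for j in range(len(theta)))
    predicted_change = c + ar + ma
    # Undo the differencing: next level = last observed level + predicted change.
    return series[-1] + predicted_change


prices = [100.0, 101.0, 103.0, 104.0]
forecast = arima_one_step_forecast(prices, phi=[0.5, 0.2], theta=[0.3], residuals=[0.1, -0.4])
print(round(forecast, 2))  # 104.78
```

Here the AR part contributes 0.5 × 1 + 0.2 × 2 = 0.9, the MA part contributes 0.3 × (−0.4) = −0.12, and the predicted change of 0.78 is added to the last price of 104.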

2.3. Time Series and Financial Forecasting

2.4. Stock Forecasting-Related Tasks

- Stock price forecasting uses time series data to predict the future values of stocks and other financial assets traded on exchanges, with the goal of generating a profit. The forecasting process is complicated by many factors, including investor psychology and both rational and irrational trading behavior, which together make stock prices dynamic and volatile.

- Portfolio management involves the strategic selection and oversight of a collection of investments to achieve financial objectives; it aims to allocate resources so as to maximize returns while minimizing risk [20].

- A trading strategy is a set of pre-established rules and criteria used to make trading decisions; it is a systematic approach to buying and selling stocks. Trading strategies range from simple to complex and may take into account factors such as investment style (e.g., value vs. growth), market capitalization, technical indicators, fundamental analysis, degree of portfolio diversification, risk tolerance, and leverage.
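As a minimal illustration of a rule-based trading strategy driven by a technical indicator, the Python sketch below implements the textbook moving-average crossover rule: emit BUY when a short simple moving average (SMA) crosses above a longer one, and SELL when it crosses below. The window lengths and function names are illustrative assumptions, not a strategy proposed in this paper, and the sketch ignores transaction costs, position sizing, and risk limits.

```python
from typing import List, Optional, Sequence


def sma(prices: Sequence[float], window: int) -> List[Optional[float]]:
    """Simple moving average; None until `window` prices are available."""
    return [
        None if t + 1 < window else sum(prices[t + 1 - window : t + 1]) / window
        for t in range(len(prices))
    ]


def crossover_signals(prices: Sequence[float], short: int = 2, long: int = 4) -> List[str]:
    """Moving-average crossover rule: BUY on an upward cross, SELL on a downward cross."""
    if not 0 < short < long:
        raise ValueError("require 0 < short < long")
    fast, slow = sma(prices, short), sma(prices, long)
    signals = []
    for t in range(len(prices)):
        # Both averages must exist at t-1 and t; the slow SMA is the last to become available.
        if t == 0 or slow[t - 1] is None:
            signals.append("HOLD")
            continue
        prev_gap, gap = fast[t - 1] - slow[t - 1], fast[t] - slow[t]
        if prev_gap <= 0 < gap:
            signals.append("BUY")
        elif prev_gap >= 0 > gap:
            signals.append("SELL")
        else:
            signals.append("HOLD")
    return signals


print(crossover_signals([10, 10, 10, 10, 10, 12, 14, 16]))
# ['HOLD', 'HOLD', 'HOLD', 'HOLD', 'HOLD', 'BUY', 'HOLD', 'HOLD']
```

The rule fires only on the step where the gap between the two averages changes sign, so a sustained uptrend produces a single BUY rather than a BUY on every day.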

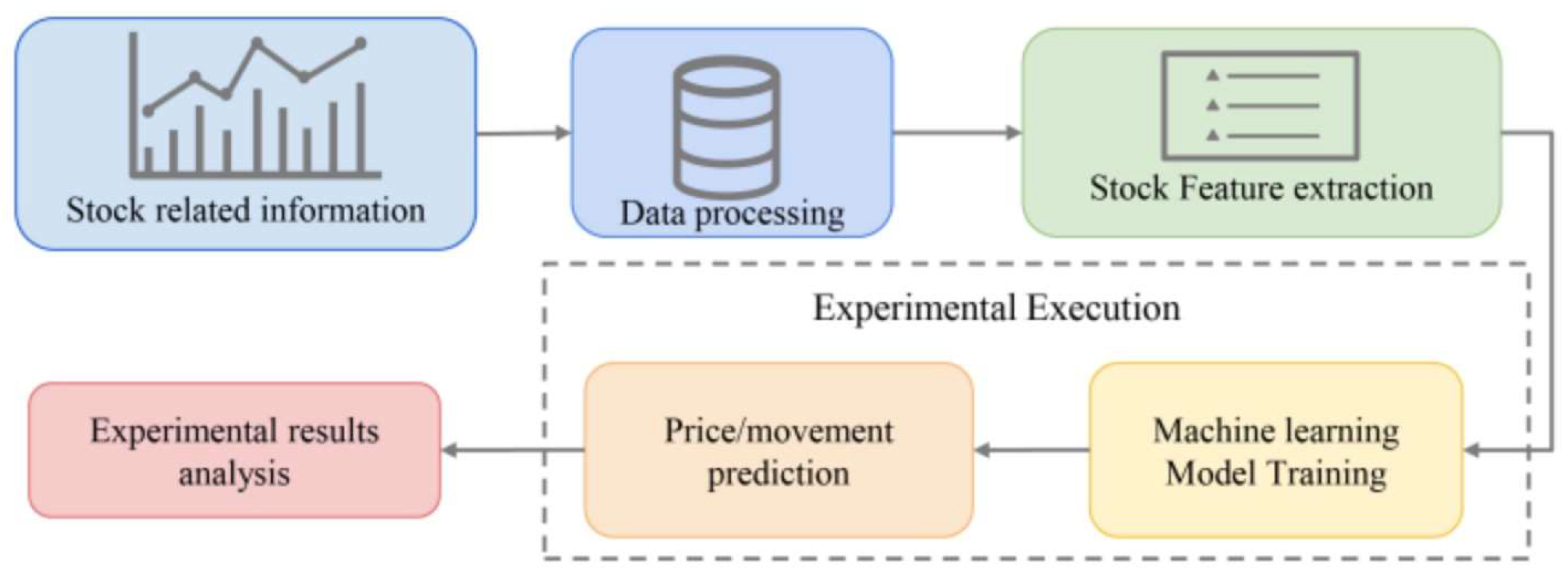

3. Methodology

3.1. Model Discussion

- Convolutional Neural Networks (CNNs):
  - CNNs are adept at learning spatial hierarchies of features through convolutional layers.
  - In the context of multivariate time series, CNNs can be applied to extract spatial patterns across different variables (e.g., multiple stock prices, economic indicators) at each time step.

- Long Short-Term Memory networks (LSTMs):
  - LSTMs are well-suited for modeling temporal dependencies by maintaining long-term memory of sequential data.
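The CNN bullets above can be made concrete with a small pure-Python sketch of one convolutional filter sliding over a multivariate time series. Each window covers k consecutive time steps and all variables at once, so every output value mixes information across the different series; a ReLU is applied afterwards. The kernel values and the two input variables are made up for illustration (in a trained network the kernel is learned), and a real model would use many filters, typically through a framework layer such as PyTorch's `torch.nn.Conv1d` with the variables treated as input channels.

```python
from typing import List, Sequence


def conv1d_filter(
    x: Sequence[Sequence[float]],
    kernel: Sequence[Sequence[float]],
    bias: float = 0.0,
) -> List[float]:
    """Apply one filter with 'valid' padding and stride 1, followed by ReLU.

    x      -- T rows (time steps) by n columns (variables)
    kernel -- k rows (time offsets) by n columns (one weight per variable)
    """
    T, k, n = len(x), len(kernel), len(x[0])
    out = []
    for t in range(T - k + 1):
        # Cross-correlation (what deep-learning libraries call "convolution"):
        # weight every variable at every time step inside the window, then sum.
        z = bias + sum(kernel[i][f] * x[t + i][f] for i in range(k) for f in range(n))
        out.append(max(0.0, z))  # ReLU keeps only positive activations
    return out


# Hypothetical input: columns are (price, volume) for 4 time steps.
series = [[1.0, 2.0], [2.0, 3.0], [3.0, 5.0], [4.0, 4.0]]
kernel = [[-1.0, 0.5], [1.0, 0.5]]  # price change plus average volume over 2 steps
print(conv1d_filter(series, kernel))  # [3.5, 5.0, 5.5]
```

With T = 4 time steps and a kernel spanning k = 2 steps, the filter produces T − k + 1 = 3 outputs; stacking many such filters yields the feature maps that a downstream recurrent layer consumes.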

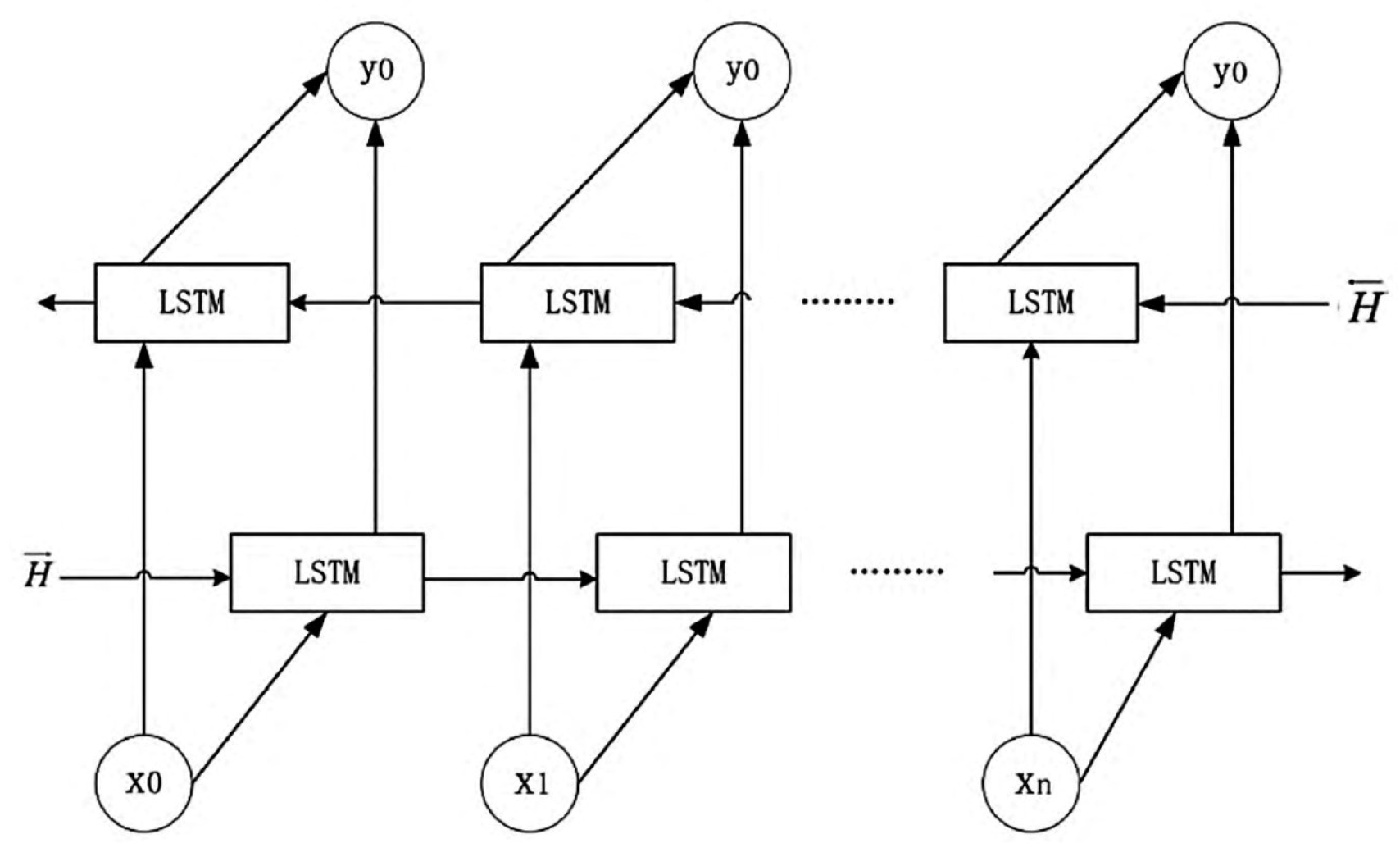

- BiLSTM: Thanks to its gated cell structure, an LSTM is well able to filter historical information and to learn chronological order. It can make selective use of past inputs to form a long-term memory of the preceding period, which avoids the problem of useful historical information being lost as new data continuously arrive. However, a standard LSTM processes a sequence in only one direction. For tasks in which later data in a sequence also help interpret earlier data, the Bidirectional Long Short-Term Memory (BiLSTM) network is introduced: it runs one LSTM forward and another backward over the input sequence, so its predictions can draw on both preceding and subsequent data within that sequence.
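The following pure-Python sketch shows the bidirectional idea on a toy LSTM with scalar input and hidden state: one LSTM reads the window from oldest to newest, a second LSTM with its own weights reads it from newest to oldest, and the two hidden states are paired at each time step. The gates and cell update follow the standard LSTM equations; the parameter names and values are hypothetical, and real models use vector states and trained weights. Note that "future" here means later positions within the input window, so the backward pass never sees data beyond the window a forecast is made from.

```python
import math
from typing import Dict, List, Sequence, Tuple

Params = Dict[str, float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: float, h: float, c: float, p: Params) -> Tuple[float, float]:
    """One step of an LSTM cell with scalar input and hidden state."""
    i = sigmoid(p["wi"] * x + p["ui"] * h + p["bi"])    # input gate: how much new info to write
    f = sigmoid(p["wf"] * x + p["uf"] * h + p["bf"])    # forget gate: how much old memory to keep
    o = sigmoid(p["wo"] * x + p["uo"] * h + p["bo"])    # output gate: how much memory to expose
    g = math.tanh(p["wg"] * x + p["ug"] * h + p["bg"])  # candidate memory content
    c = f * c + i * g                                   # long-term cell state
    h = o * math.tanh(c)                                # short-term hidden state
    return h, c


def run_lstm(seq: Sequence[float], p: Params) -> List[float]:
    """Hidden state after each input, starting from a zero state."""
    h, c, hs = 0.0, 0.0, []
    for x in seq:
        h, c = lstm_step(x, h, c, p)
        hs.append(h)
    return hs


def bilstm(seq: Sequence[float], p_fwd: Params, p_bwd: Params) -> List[Tuple[float, float]]:
    """Pair the forward state (inputs up to t) with the backward state (inputs from t onward)."""
    forward = run_lstm(seq, p_fwd)
    backward = run_lstm(list(reversed(seq)), p_bwd)[::-1]  # re-align to original time order
    return list(zip(forward, backward))


keys = ["wi", "ui", "bi", "wf", "uf", "bf", "wo", "uo", "bo", "wg", "ug", "bg"]
p_fwd = {k: 0.5 for k in keys}   # hypothetical weights
p_bwd = {k: -0.3 for k in keys}  # hypothetical weights
states = bilstm([0.1, 0.4, -0.2, 0.3], p_fwd, p_bwd)
print(len(states))  # 4: one (forward, backward) pair per time step
```

Changing the last input of the window alters the backward state at every position but leaves the forward state at the first position untouched, which is exactly the asymmetry the two directions are meant to provide.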

The first step is data preprocessing.

3.2. Data Processing

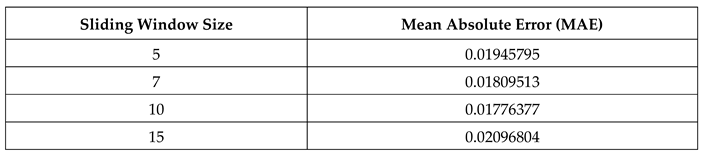

3.3. Test Data and Methods

3.4. Experimental Design

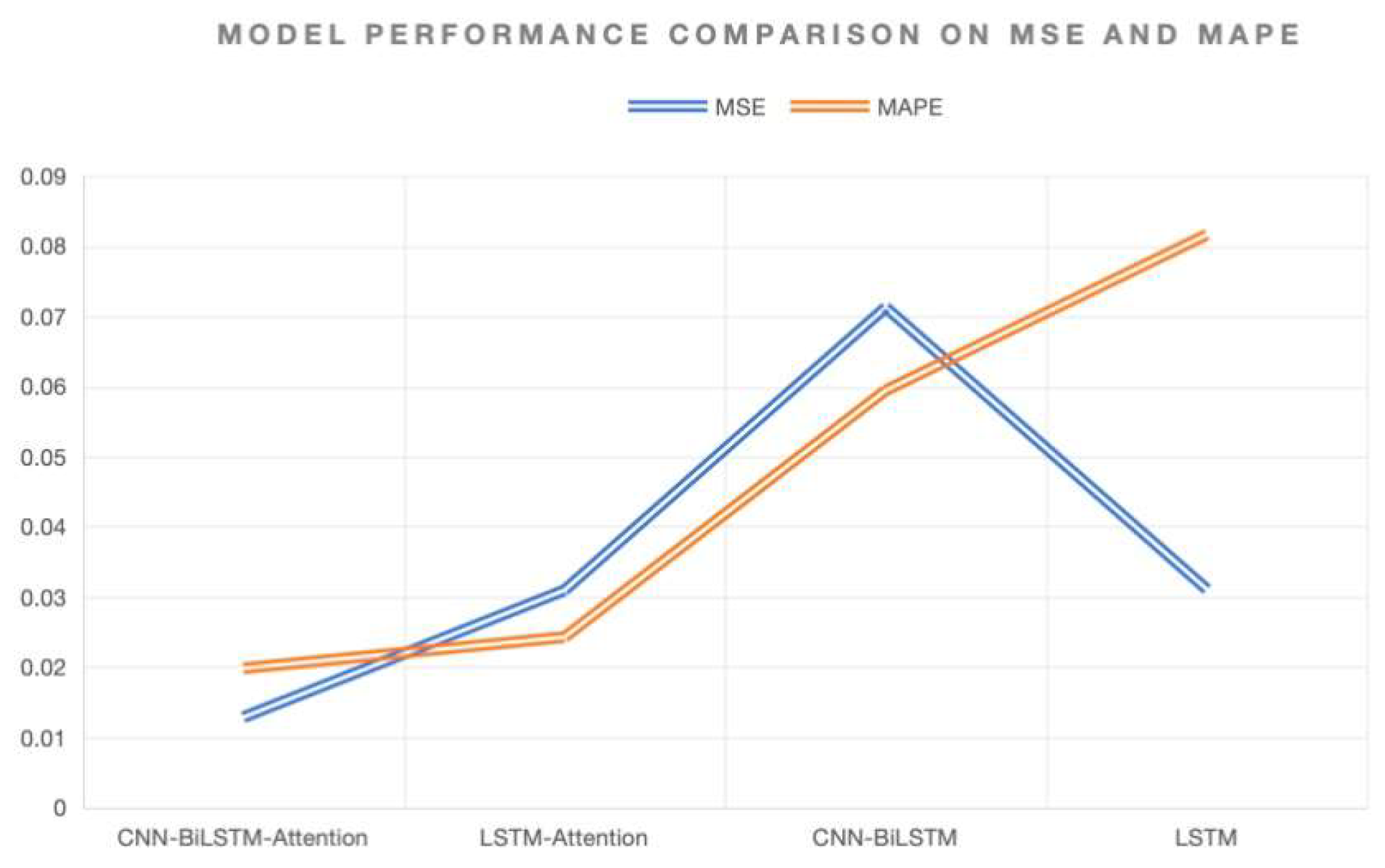

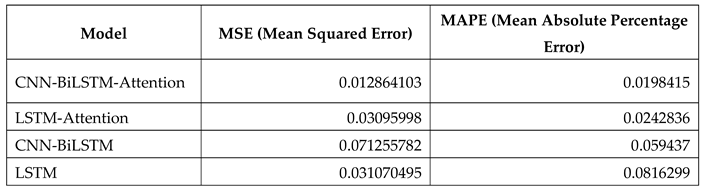

- Improved prediction accuracy: Experimental results show that the new hybrid model significantly improves the accuracy of predicting stock price changes. Compared with traditional statistical methods, the model performs better on several evaluation metrics, such as the mean squared error (MSE) and the mean absolute percentage error (MAPE).

- Effectiveness of feature extraction: Using convolutional neural networks (CNNs) for feature extraction effectively captures spatial information (patterns across the input variables), which is particularly important when analyzing multivariate time series. The extracted features improve the predictive power of the downstream BiLSTM model.

- Temporal modeling with BiLSTM: Bidirectional long short-term memory networks (BiLSTMs) perform well on time series data, capturing both long- and short-term temporal dependencies and thereby improving the robustness and accuracy of predictions.

- Addition of an attention mechanism: Introducing an attention mechanism further improves the model’s performance. Attention lets the model focus on the most informative time steps or features during learning and prediction, which improves the accuracy and stability of its predictions.

- Technical support for quantitative trading: Beyond improving prediction accuracy, the hybrid model is feasible for practical use and can provide more reliable technical support for financial applications such as quantitative trading.
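As a hedged sketch of how an attention layer can weight time steps, the Python code below implements simple dot-product attention pooling: each time step's hidden vector (for example, a concatenated BiLSTM output) is scored against a query vector, the scores are turned into weights with a softmax, and the weighted sum gives a single context vector for the prediction layer. The query vector and the function names are hypothetical, and dot-product scoring is only one common variant; this section does not specify the exact attention formulation used in the model.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(
    hidden_states: Sequence[Sequence[float]], query: Sequence[float]
) -> Tuple[List[float], List[float]]:
    """Dot-product attention over time steps.

    hidden_states -- one vector per time step (e.g., concatenated BiLSTM outputs)
    query         -- scoring vector; learned in a real model, hypothetical here
    Returns (attention weights per time step, context vector).
    """
    scores = [sum(q * h for q, h in zip(query, hs)) for hs in hidden_states]
    weights = softmax(scores)  # non-negative, sum to 1
    dim = len(hidden_states[0])
    context = [sum(w * hs[d] for w, hs in zip(weights, hidden_states)) for d in range(dim)]
    return weights, context


states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attention_pool(states, query=[2.0, 0.0])
print([round(w, 3) for w in weights])  # [0.468, 0.063, 0.468]
```

The first and third time steps receive the same, larger weight because their hidden vectors align with the query, while the second contributes little to the context vector.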

4. Conclusions

References

- Fronzetti Colladon, A.; Scettri, G. Look inside. Predicting stock prices by analyzing an enterprise intranet social network and using word co-occurrence networks. International Journal of Entrepreneurship and Small Business 2019, 36, 378–391.

- Choudhury, M.; Li, G.; Li, J.; Zhao, K.; Dong, M.; Harfoush, K. Power Efficiency in Communication Networks with Power-Proportional Devices. In 2021 IEEE Symposium on Computers and Communications (ISCC); IEEE, September 2021; pp. 1–6.

- Yang, T.; Xin, Q.; Zhan, X.; Zhuang, S.; Li, H. Enhancing Financial Services through Big Data and AI-Driven Customer Insights and Risk Analysis. J. Knowl. Learn. Sci. Technol. 2024, 3, 53–62.

- Shi, Y.; Li, L.; Li, H.; Li, A.; Lin, Y. Aspect-Level Sentiment Analysis of Customer Reviews Based on Neural Multi-task Learning. J. Theory Pr. Eng. Sci. 2024, 4, 1–8.

- Yuan, J.; Lin, Y.; Shi, Y.; Yang, T.; Li, A. Applications of Artificial Intelligence Generative Adversarial Techniques in the Financial Sector. Academic Journal of Sociology and Management 2024, 2, 59–66.

- Jiang, W.; Qian, K.; Fan, C.; Ding, W.; Li, Z. Applications of generative AI-based financial robot advisors as investment consultants. Appl. Comput. Eng. 2024, 67, 28–33.

- Ding, W.; Zhou, H.; Tan, H.; Li, Z.; Fan, C. Automated Compatibility Testing Method for Distributed Software Systems in Cloud Computing. 2024.

- Fan, C.; Li, Z.; Ding, W.; Zhou, H.; Qian, K. Integrating artificial intelligence with SLAM technology for robotic navigation and localization in unknown environments. Appl. Comput. Eng. 2024, 67, 22–27.

- Guo, L.; Li, Z.; Qian, K.; Ding, W.; Chen, Z. Bank Credit Risk Early Warning Model Based on Machine Learning Decision Trees. Journal of Economic Theory and Business Management 2024, 1, 24–30.

- Li, Z.; Fan, C.; Ding, W.; Qian, K. Robot Navigation and Map Construction Based on SLAM Technology. World J. Innov. Mod. Technol. 2024, 7, 8–14.

- Fan, C.; Ding, W.; Qian, K.; Tan, H.; Li, Z. Cueing Flight Object Trajectory and Safety Prediction Based on SLAM Technology. J. Theory Pr. Eng. Sci. 2024, 4, 1–8.

- Ding, W.; Tan, H.; Zhou, H.; Li, Z.; Fan, C. Immediate traffic flow monitoring and management based on multimodal data in cloud computing. Appl. Comput. Eng. 2024, 71, 1–6.

- Qian, K.; Fan, C.; Li, Z.; Zhou, H.; Ding, W. Implementation of Artificial Intelligence in Investment Decision-making in the Chinese A-share Market. Journal of Economic Theory and Business Management 2024, 1, 36–42.

- Lin, Y.; Li, A.; Li, H.; Shi, Y.; Zhan, X. GPU-Optimized Image Processing and Generation Based on Deep Learning and Computer Vision. J. Artif. Intell. Gen. Sci. (JAIGS) 2024, 5, 39–49.

- Shi, Y.; Yuan, J.; Yang, P.; Wang, Y.; Chen, Z. Implementing intelligent predictive models for patient disease risk in cloud data warehousing. Appl. Comput. Eng. 2024, 67, 34–40.

- Cui, Z.; Lin, L.; Zong, Y.; Chen, Y.; Wang, S. Precision gene editing using deep learning: A case study of the CRISPR-Cas9 editor. Appl. Comput. Eng. 2024, 64, 134–141.

- Haowei, M.; Ebrahimi, S.; Mansouri, S.; Abdullaev, S.S.; Alsaab, H.O.; Hassan, Z.F. CRISPR/Cas-based nanobiosensors: A reinforced approach for specific and sensitive recognition of mycotoxins. Food Biosci. 2023, 56.

- Xu, Q.; Xu, L.; Jiang, G.; He, Y. Artificial Intelligence in Risk Protection for Financial Payment Systems. In The 24th International Scientific and Practical Conference “Technologies of Scientists and Implementation of Modern Methods”, Copenhagen, Denmark, 18–21 June 2024; International Science Group, 2024; p. 344.

- Huang, S.; Diao, S.; Zhao, H.; Xu, L. The Contribution of Federated Learning to AI Development. In The 24th International Scientific and Practical Conference “Technologies of Scientists and Implementation of Modern Methods”, Copenhagen, Denmark, 18–21 June 2024; International Science Group, 2024; p. 358.

- Zhan, X.; Ling, Z.; Xu, Z.; Guo, L.; Zhuang, S. Driving Efficiency and Risk Management in Finance through AI and RPA. Unique Endeavor in Business & Social Sciences 2024, 3, 189–197.

- Bao, W.; Xiao, J.; Deng, T.; Bi, S.; Wang, J. The Challenges and Opportunities of Financial Technology Innovation to Bank Financing Business and Risk Management. Financial Eng. Risk Manag. 2024, 7, 82–88.

- Xu, L.; Gong, C.; Jiang, G.; Yang, H. A Study on Personalized Web Page Recommendation Based on Natural Language Processing and Its Impact on User Browsing Behavior. In The 24th International Scientific and Practical Conference “Technologies of Scientists and Implementation of Modern Methods”, Copenhagen, Denmark, 18–21 June 2024; International Science Group, 2024; p. 317.

- Wang, B.; He, Y.; Shui, Z.; Xin, Q.; Lei, H. Predictive optimization of DDoS attack mitigation in distributed systems using machine learning. Appl. Comput. Eng. 2024, 64, 95–100.

- Dhand, A.; Lang, C.E.; Luke, D.A.; Kim, A.; Li, K.; McCafferty, L.; Mu, Y.; Rosner, B.; Feske, S.K.; Lee, J.-M. Social Network Mapping and Functional Recovery Within 6 Months of Ischemic Stroke. Neurorehabilit. Neural Repair 2019, 33, 922–932.

- Allman, R.; Mu, Y.; Dite, G.S.; Spaeth, E.; Hopper, J.L.; Rosner, B.A. Validation of a breast cancer risk prediction model based on the key risk factors: family history, mammographic density and polygenic risk. Breast Cancer Res. Treat. 2023, 198, 335–347.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).