Submitted: 26 June 2024

Posted: 27 June 2024


Abstract

Keywords:

1. Introduction

2. Method and Materials

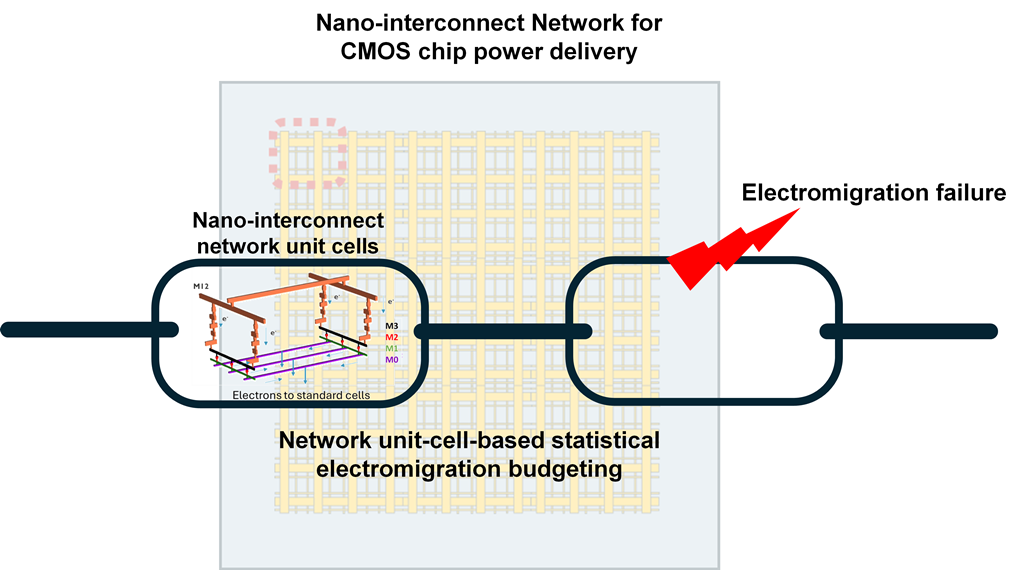

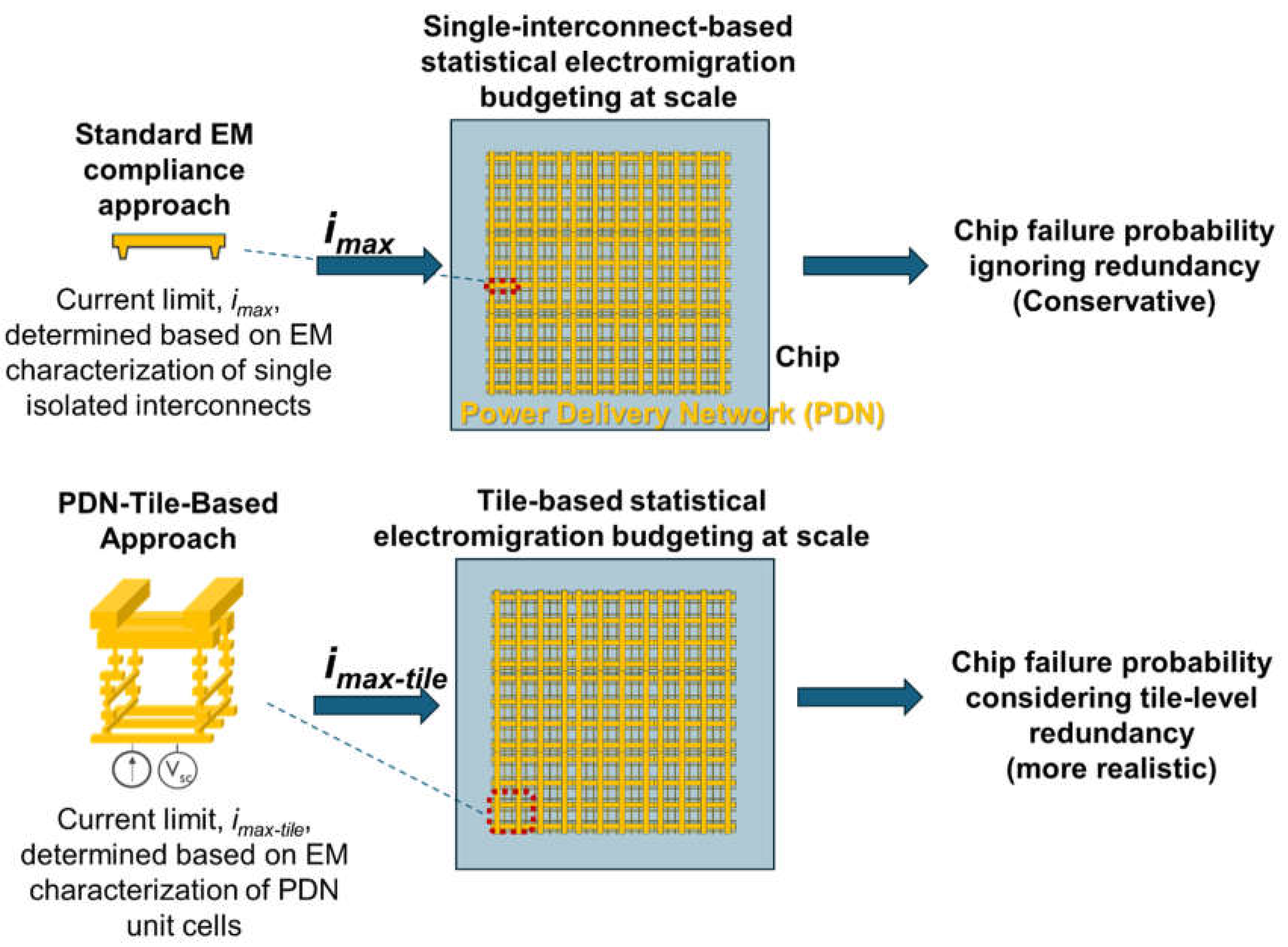

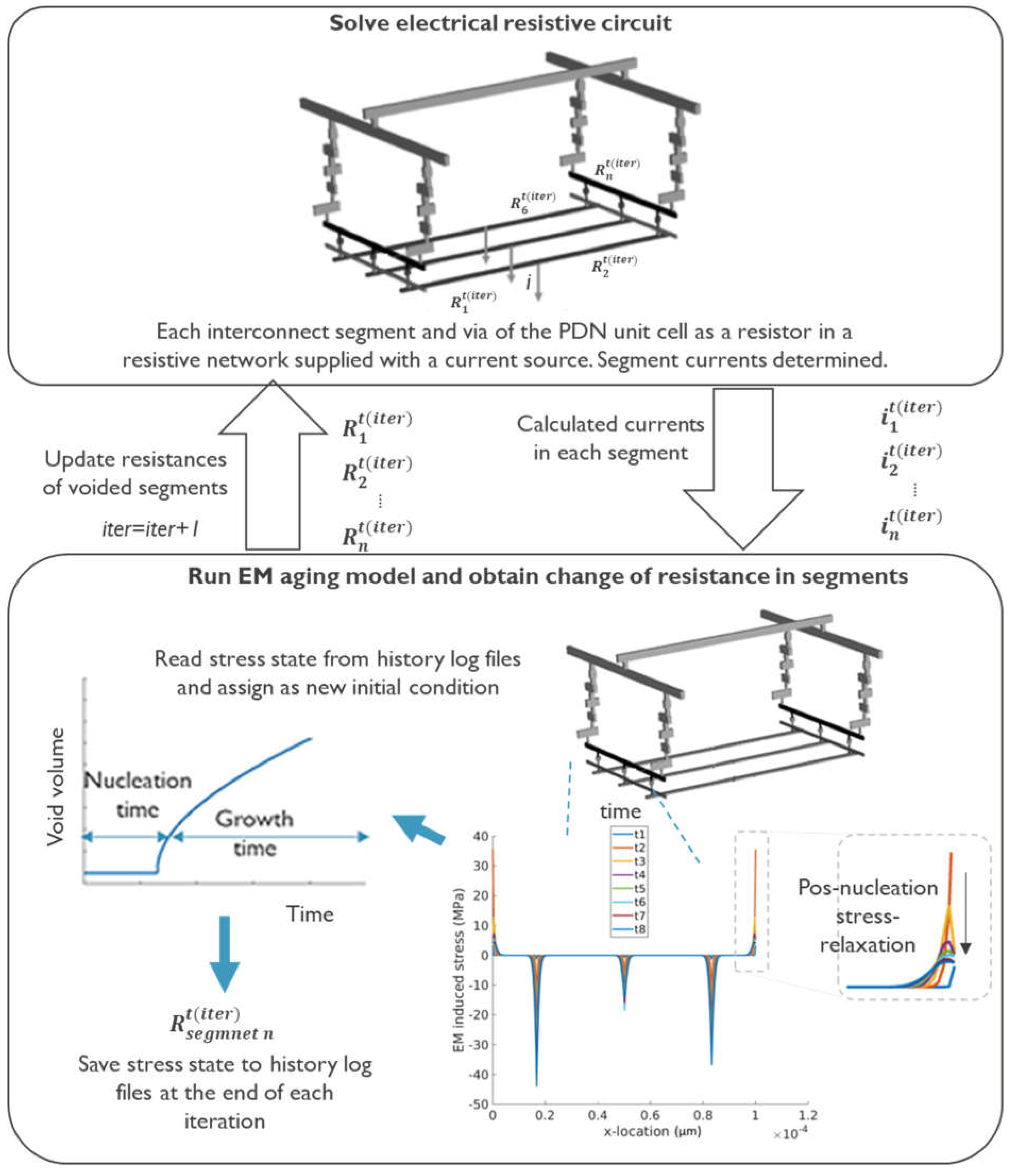

2.1. Network-Aware Modelling Framework

2.2. Electromigration Model

2.3. Variability Modelling and Assumptions

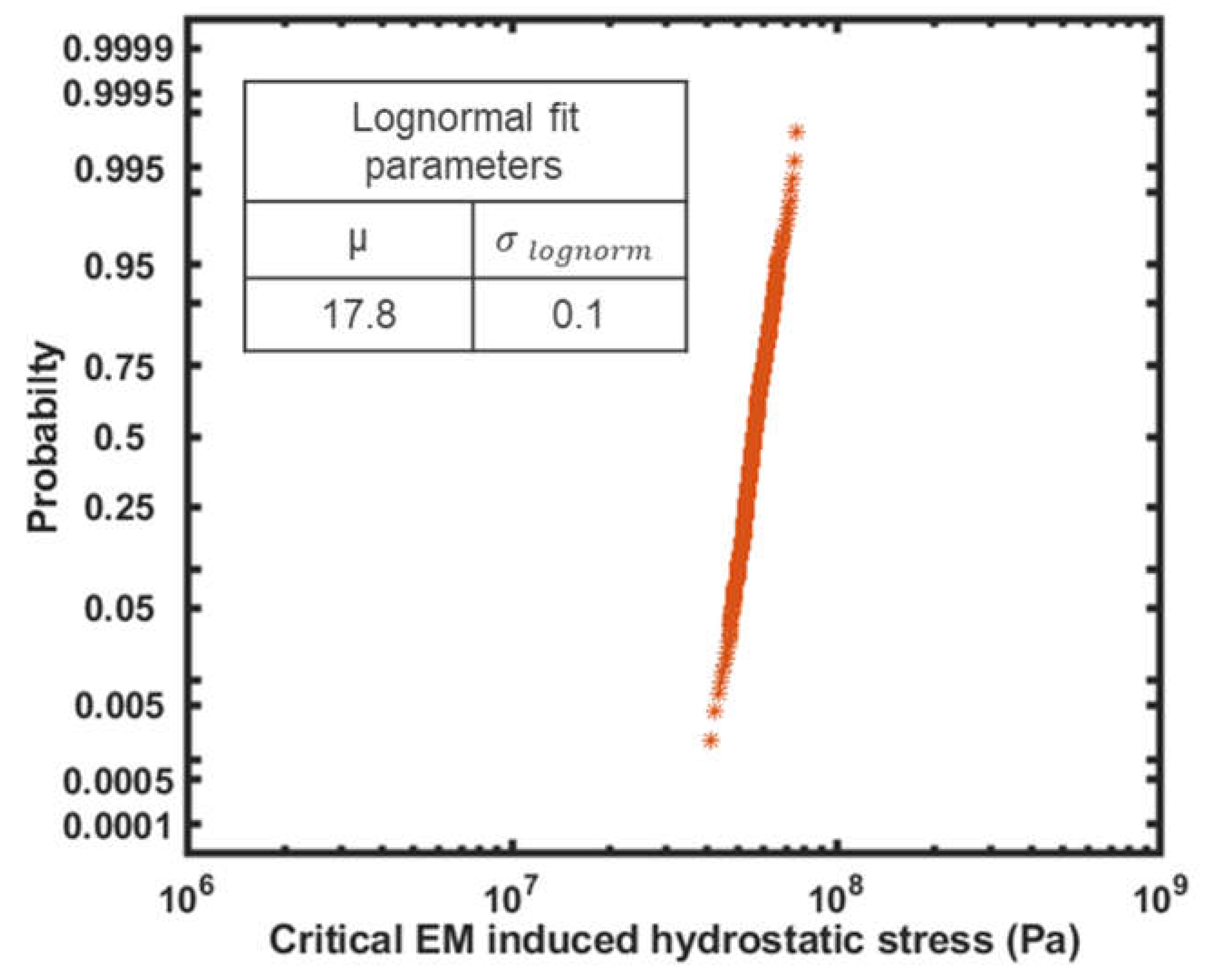

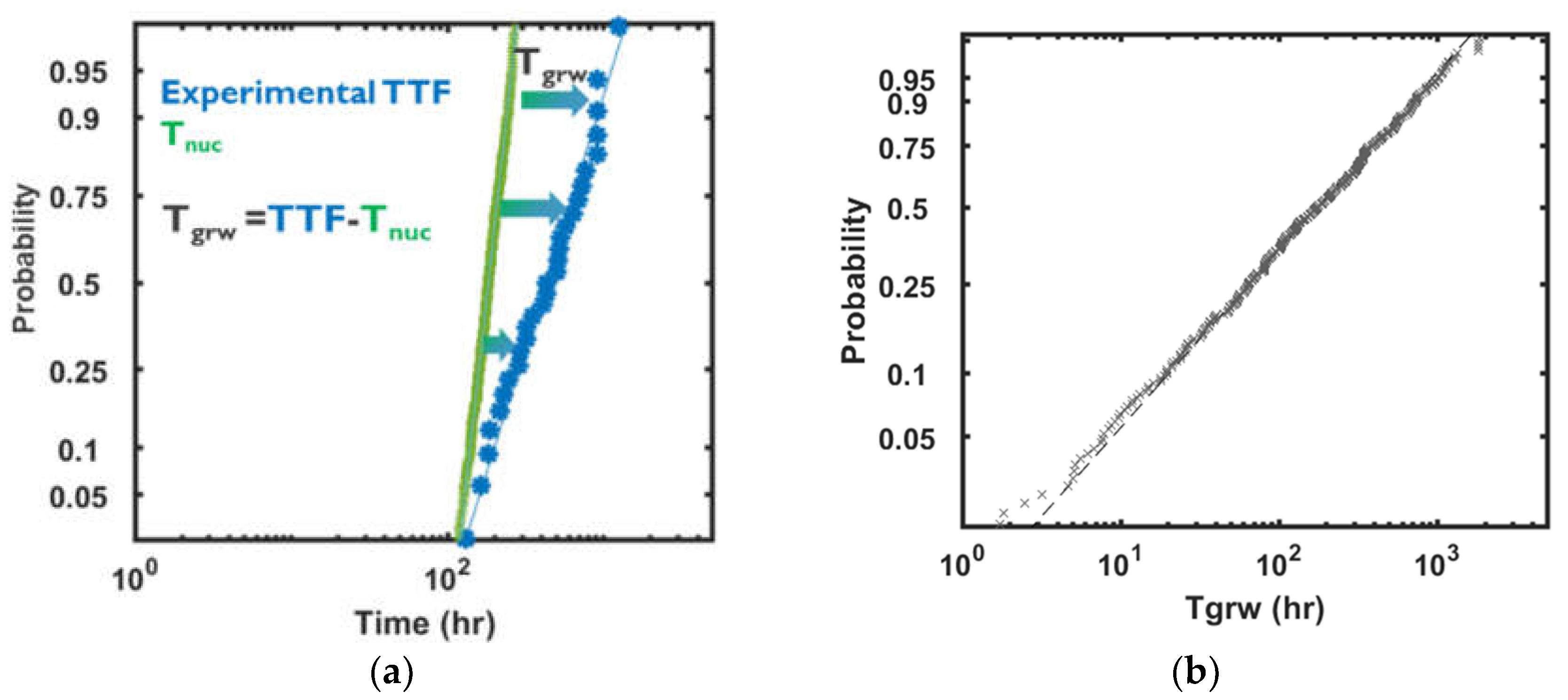

2.3.1. Variability of Time to Nucleation at Single Interconnect Level

2.3.2. Variability of Void’s Resistive Impact at Single Interconnect Level

3. Results and Discussion

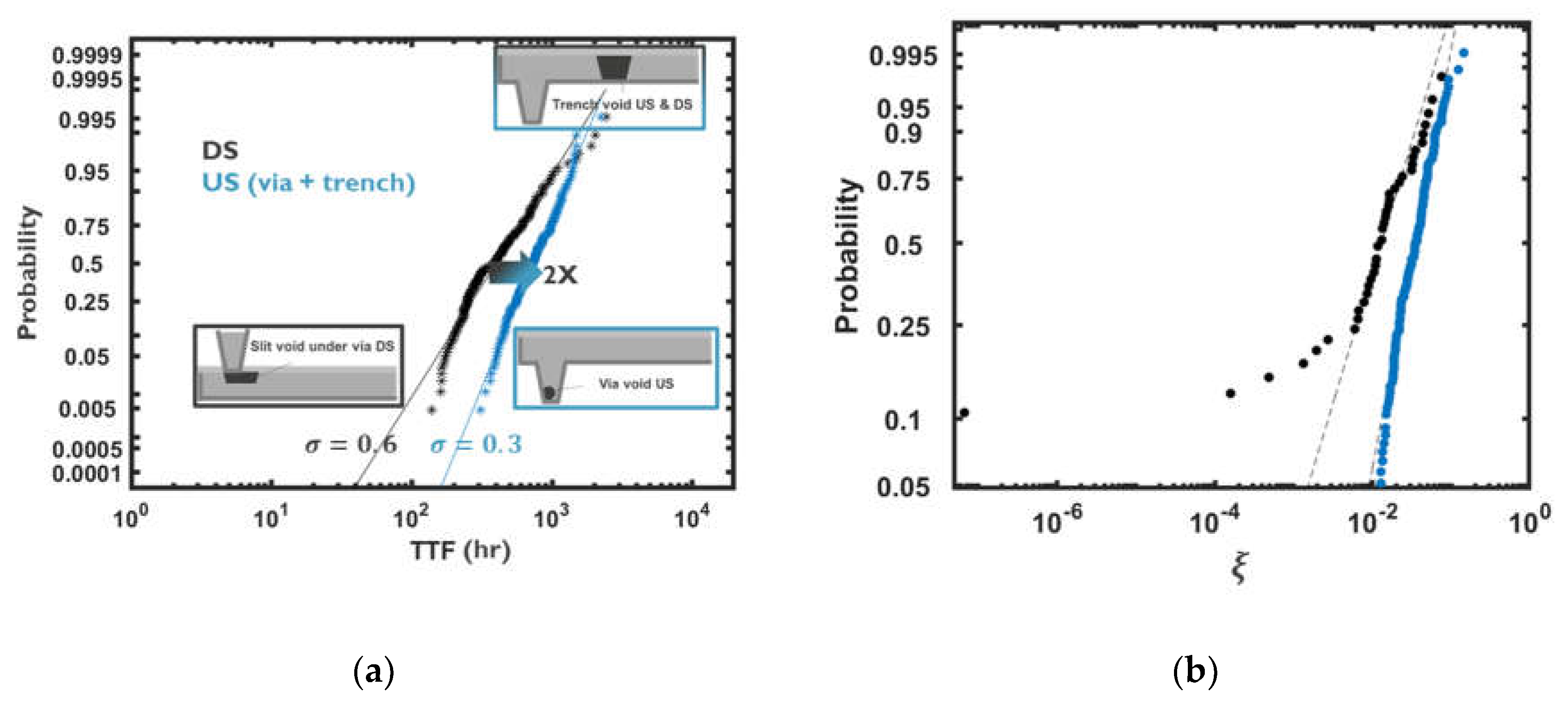

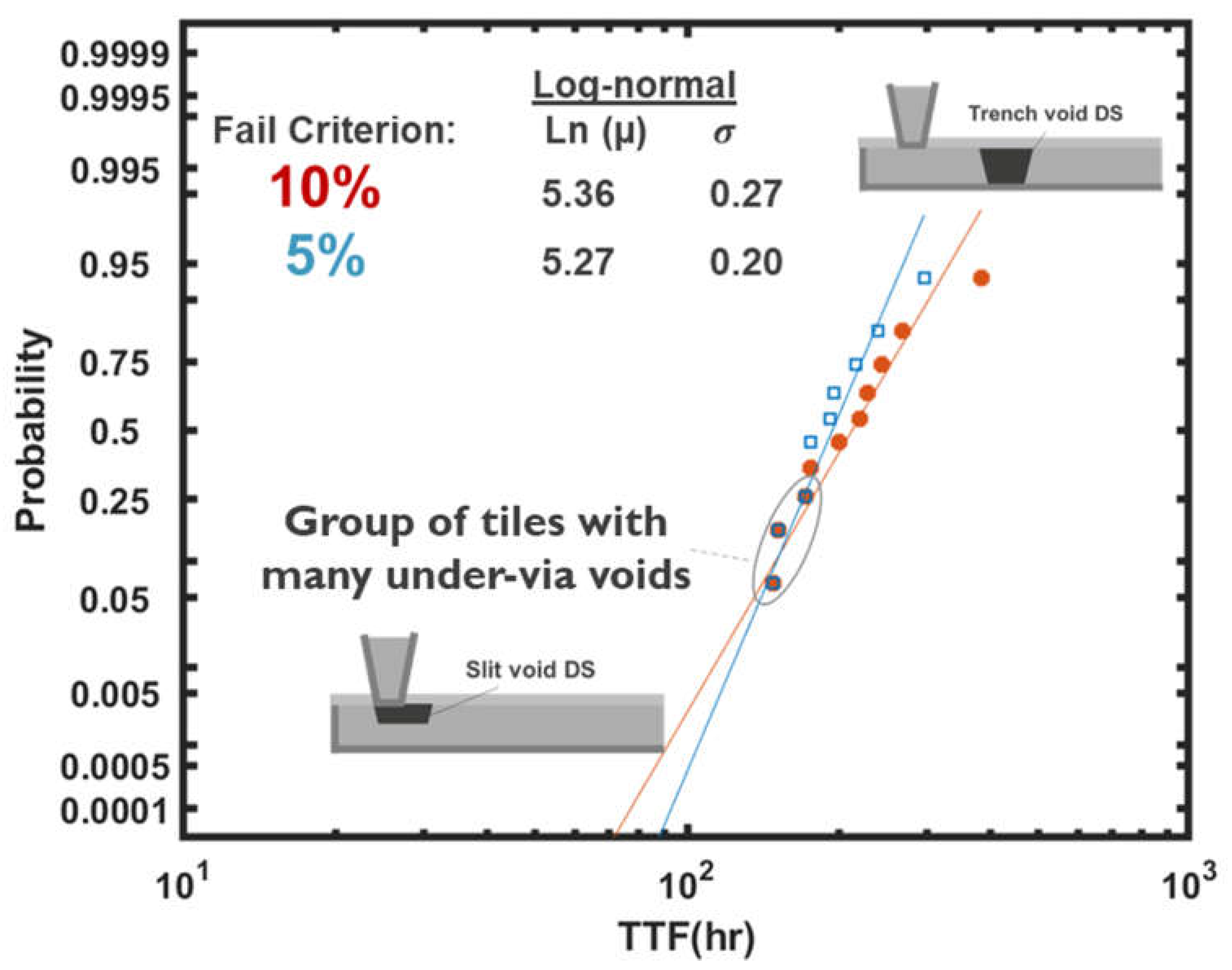

3.1. Model Corroboration

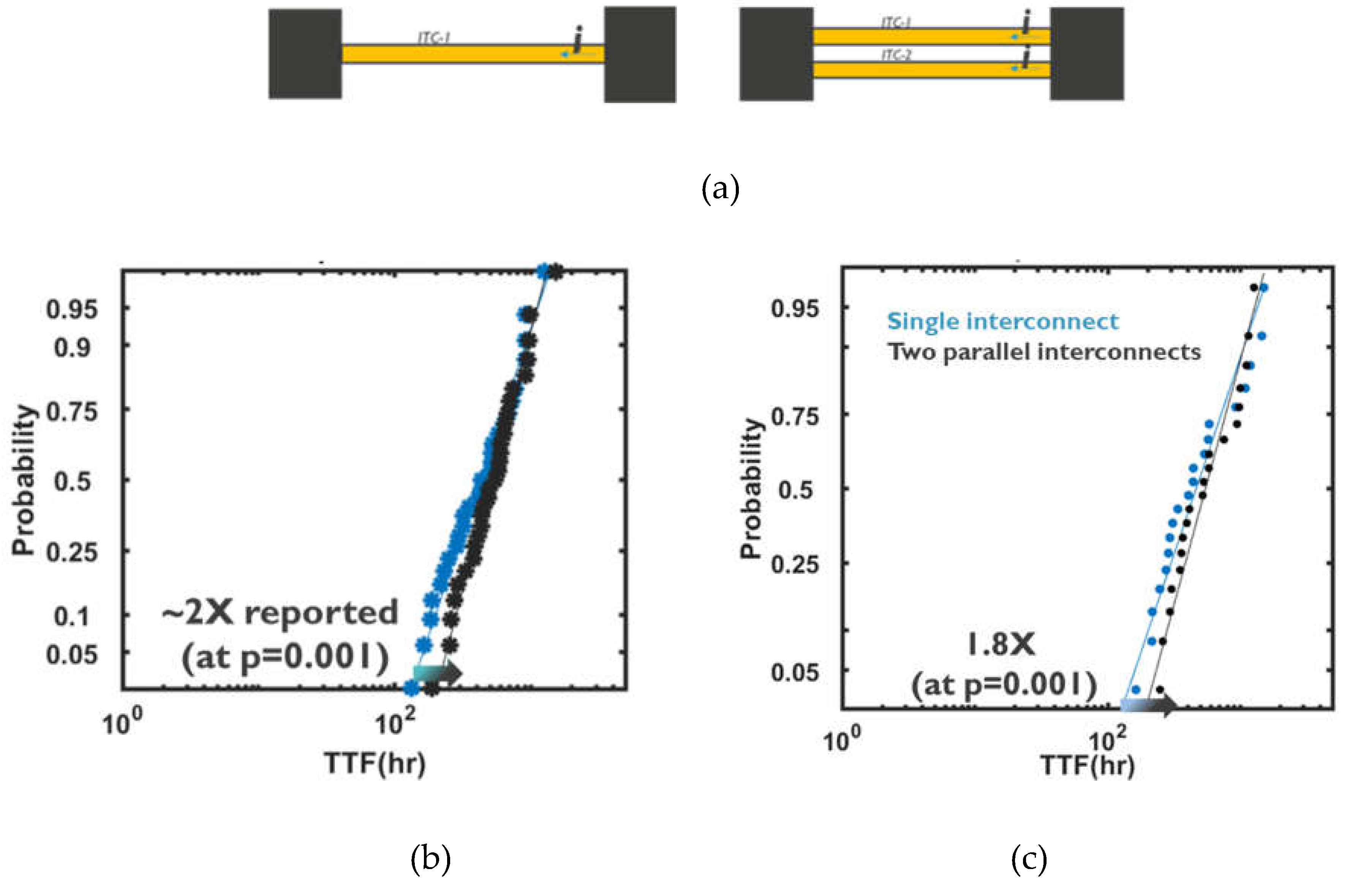

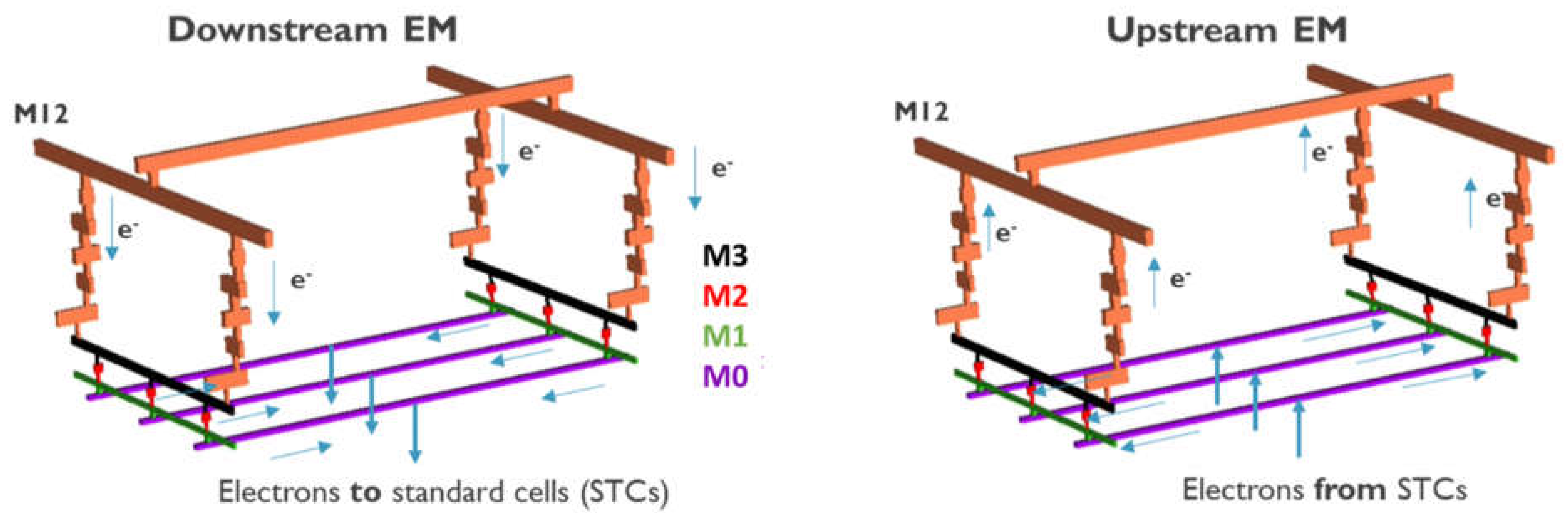

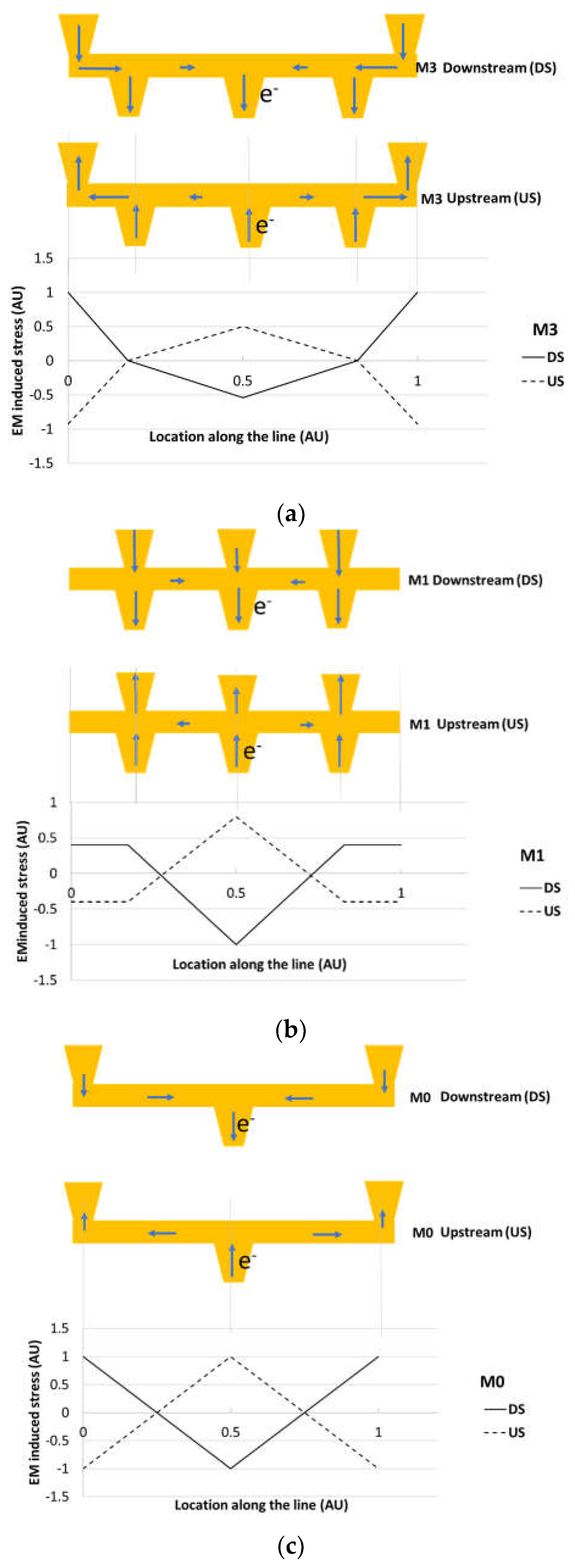

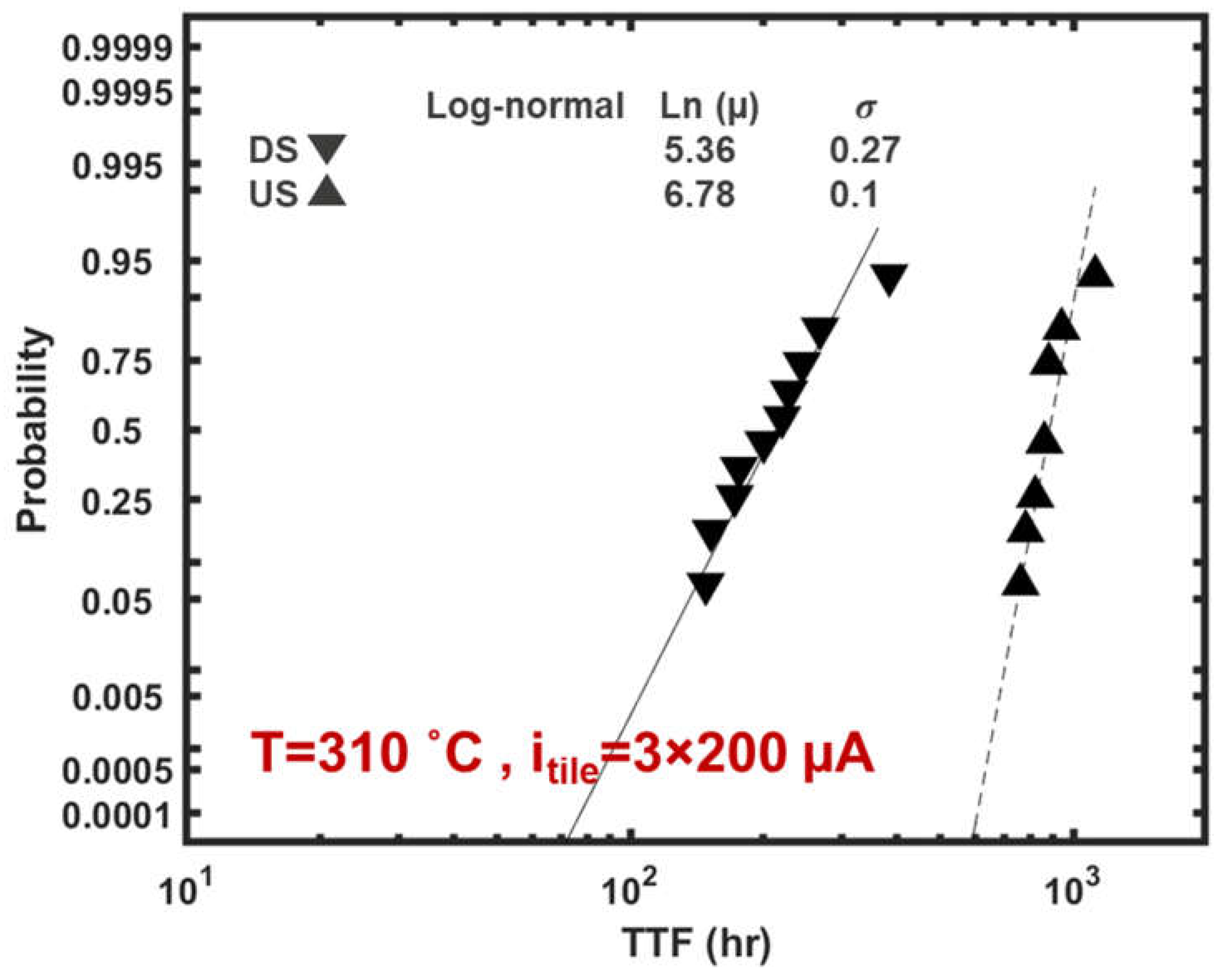

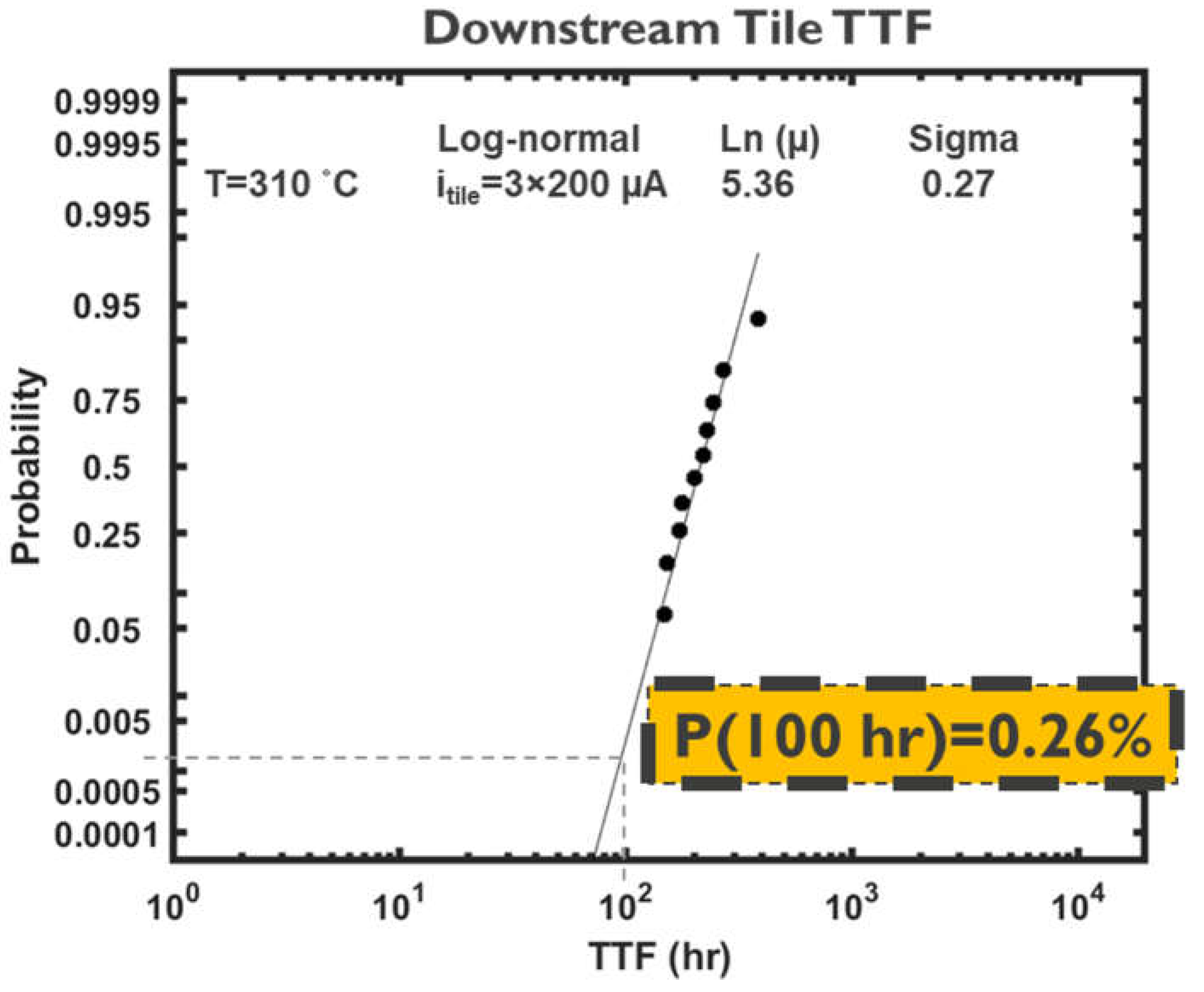

3.2. Application to PDN Unit Cells

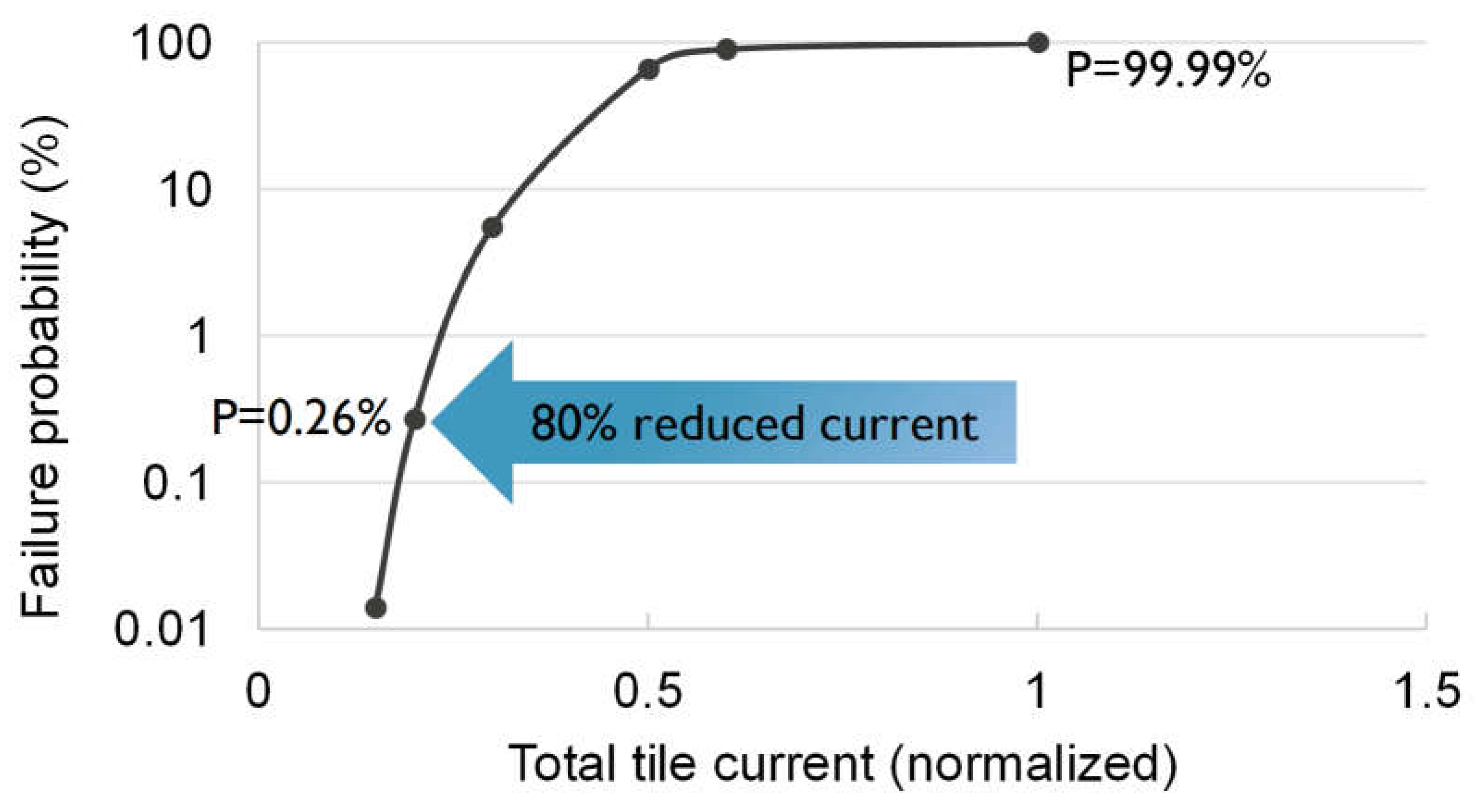

3.3. Impact on Electromigration Reliability Margins

4. Conclusions

References

- de Vries, A. The Growing Energy Footprint of Artificial Intelligence. Joule 2023, 7, 2191–2194.

- Black, J.R. Electromigration - A Brief Survey and Some Recent Results. IEEE Transactions on Electron Devices 1969, 16, 338–347.

- Hu, C.-K.; Gignac, L.; Lian, G.; Cabral, C.; Motoyama, K.; Shobha, H.; Demarest, J.; Ostrovski, Y.; Breslin, C.M.; Ali, M.; et al. Mechanisms of Electromigration Damage in Cu Interconnects. In Proceedings of the 2018 IEEE International Electron Devices Meeting; pp. 5–5.

- Shen, Z.; Jing, S.; Heng, Y.; Yao, Y.; Tu, K.N.; Liu, Y. Electromigration in Three-Dimensional Integrated Circuits. Appl. Phys. Rev. 2023, 10, 021309.

- Zhao, W.S.; Zhang, R.; Wang, D.W. Recent Progress in Physics-Based Modeling of Electromigration in Integrated Circuit Interconnects. Micromachines (Basel) 2022, 13, 883.

- Lloyd, J.R. On the Log-Normal Distribution of Electromigration Lifetimes. J. Appl. Phys. 1979, 50, 5062–5064.

- Hauschildt, M.; Gall, M.; Thrasher, S.; Justison, P.; Hernandez, R.; Kawasaki, H.; Ho, P.S. Statistical Analysis of Electromigration Lifetimes and Void Evolution. J. Appl. Phys. 2007, 101, 043523.

- Hauschildt, M.; Gall, M.; Justison, P.; Hernandez, R.; Ho, P.S. The Influence of Process Parameters on Electromigration Lifetime Statistics. J. Appl. Phys. 2008, 104, 043503.

- JEDEC/FSA Joint Publication. Foundry Process Qualification Guidelines (Wafer Fabrication Manufacturing Sites). JP001, May 2004.

- Li, B.; McLaughlin, P.S.; Bickford, J.P.; Habitz, P.; Netrabile, D.; Sullivan, T.D. Statistical Evaluation of Electromigration Reliability at Chip Level. IEEE Transactions on Device and Materials Reliability 2011, 11, 86–91.

- Hu, C.-K.; et al. Future On-Chip Interconnect Metallization and Electromigration. In Proceedings of the 2018 IEEE International Reliability Physics Symposium, Burlingame, CA, USA, 2018; pp. 4F.1-1-4F.1-6.

- Croes, K.; et al. Interconnect Metals Beyond Copper: Reliability Challenges and Opportunities. In Proceedings of the 2018 IEEE International Electron Devices Meeting, San Francisco, CA, USA, 2018; pp. 5–5.

- Lane, M.W.; Liniger, E.G.; Lloyd, J.R. Relationship Between Interfacial Adhesion and Electromigration in Cu Metallization. J. Appl. Phys. 2003, 93, 1417–1421.

- Lin, M.H.; Chang, K.P.; Su, K.C.; Wang, T. Effects of Width Scaling and Layout Variation on Dual Damascene Copper Interconnect Electromigration. Microelectronics Reliability 2007, 47, 2100–2108.

- Oates, A.S. Strategies to Ensure Electromigration Reliability of Cu/Low-k Interconnects at 10 nm. ECS Journal of Solid State Science and Technology 2015, 4, N3168–N3176.

- Ho, P.S.; Zschech, E.; Schmeisser, D.; Meyer, M.A.; Huebner, R.; Hauschildt, M.; Zhang, L.; Gall, M.; Kraatz, M. Scaling Effects on Microstructure and Reliability for Cu Interconnects. International Journal of Materials Research 2010, 101, 216–227.

- Cao, L.; Ganesh, K.J.; Zhang, L.; Aubel, O.; Hennesthal, C.; Zschech, E.; Ferreira, P.J.; Ho, P.S. Analysis of Grain Structure by Precession Electron Diffraction and Effects on Electromigration Reliability of Cu Interconnects. In Proceedings of the 2012 IEEE International Interconnect Technology Conference; pp. 1–3.

- Cao, L.; Ganesh, K.J.; Zhang, L.; Aubel, O.; Hennesthal, C.; Hauschildt, M.; Ferreira, P.J.; Ho, P.S. Grain Structure Analysis and Effect on Electromigration Reliability in Nanoscale Cu Interconnects. Appl. Phys. Lett. 2013, 102, 131907.

- Hu, C.-K.; Kelly, J.; Chen, J.H.-C.; Huang, H.; Ostrovski, Y.; Patlolla, R.; Peethala, B.; Adusumilli, P.; Spooner, T.; et al. Electromigration and Resistivity in On-Chip Cu, Co and Ru Damascene Nanowires. In Proceedings of the 2017 IEEE International Interconnect Technology Conference; pp. 1–3.

- Dielectrics on Electromigration Reliability for Cu Interconnects. Mater. Sci. Semicond. Process. 2004, 7, 157–163.

- Wei, F.L.; Gan, C.L.; Tan, T.L.; Hau-Riege, C.S.; Marathe, A.P.; Vlassak, J.J.; Thompson, C.V. Electromigration-Induced Extrusion Failures in Cu/Low-k Interconnects. J. Appl. Phys. 2008, 104, 023529.

- Zahedmanesh, H.; Vanstreels, K.; Gonzalez, M. A Numerical Study on Nano-Indentation Induced Fracture of Low Dielectric Constant Brittle Thin Films Using Cube Corner Probes. Microelectronic Engineering 156, 108–115.

- Zahedmanesh, H.; Vanstreels, K.; Le, Q.T.; Verdonck, P.; Gonzalez, M. Mechanical Integrity of Nano-Interconnects as Brittle-Matrix Nano-Composites. Theoretical and Applied Fracture Mechanics 95, 194–207.

- Hau-Riege, S.P.; Thompson, C.V. The Effects of the Mechanical Properties of the Confinement Material on Electromigration in Metallic Interconnects. J. Mater. Res. 2000, 15, 1797–1802.

- Zahedmanesh, H.; Besser, P.R.; Wilson, C.J.; Croes, K. Airgaps in Nano-Interconnects: Mechanics and Impact on Electromigration. J. Appl. Phys. 2016, 120, 095103.

- Chang, H.-H.; Su, Y.-F.; Liang, S.Y.; Chiang, K.-N. The Effect of Mechanical Stress on Electromigration Behavior. Journal of Mechanics 2015, 31, 441–448.

- Hu, C.-K.; Gignac, L.; Rosenberg, R.; Liniger, E.; Rubino, J.; Sambucetti, C.; Domenicucci, A.; Chen, X.; Stamper, A.K. Reduced Electromigration of Cu Wires by Surface Coating. Appl. Phys. Lett. 2002, 81, 1782.

- Zhang, L.; Zhou, J.P.; Im, J.; Ho, P.S.; Aubel, O.; Hennesthal, C.; Zschech, E. Effects of Cap Layer and Grain Structure on Electromigration Reliability of Cu/Low-k Interconnects for 45 nm Technology Node. In Proceedings of the 2010 IEEE International Reliability Physics Symposium; pp. 581–585.

- Motoyama, K.; van der Straten, O.; Maniscalco, J.; Cheng, K.; DeVries, S.; Hu, K.; Huang, H.; Park, K.; Kim, Y.; Hosadurga, S.; Lanzillo, N.; Simon, A.; Jiang, L.; Peethala, B.; Standaert, T.; Wu, T.; Spooner, T.; Choi, K. EM Enhancement of Cu Interconnects with Ru Liner for 7 nm Node and Beyond. In Proceedings of the 2019 IEEE International Interconnect Technology Conference.

- Barmak, K.; Cabral, C.; Rodbell, K.P.; Harper, J.M.E. On the Use of Alloying Elements for Cu Interconnect Applications. J. Vac. Sci. Technol. B 2006, 24, 2485.

- Gambino, J.P. Improved Reliability of Copper Interconnects Using Alloying. In Proceedings of the 2010 17th IEEE International Symposium on the Physical and Failure Analysis of Integrated Circuits; IEEE: Piscataway, NJ, USA, 2010; p. 1.

- Zahedmanesh, H.; Pedreira, O.V.; Tőkei, Z.; Croes, K. Electromigration Limits of Copper Nano-Interconnects. In Proceedings of the 2021 IEEE International Reliability Physics Symposium (IRPS); pp. 1–6.

- Zahedmanesh, H.; Pedreira, O.V.; Wilson, C.; Tőkei, Z.; Croes, K. Copper Electromigration; Prediction of Scaling Limits. In Proceedings of the IEEE International Interconnect Technology Conference (IITC); pp. 3–5.

- Choi, S.; Christiansen, C.; Cao, L.; Zhang, J.; Filippi, R.; Shen, T.; Yeap, K.B.; et al. Effect of Metal Line Width on Electromigration of BEOL Cu Interconnects. In Proceedings of the 2018 IEEE International Reliability Physics Symposium; pp. 4F.4-1-4F.4-6.

- International Technology Roadmap for Semiconductors 2.0 (ITRS 2.0) (2015). Available online: http://www.itrs2.net/itrs-reports.html (accessed on 1 April 2022).

- Mishra, V.; Sapatnekar, S.S. Circuit Delay Variability Due to Wire Resistance Evolution Under AC Electromigration. In Proceedings of the 2015 IEEE International Reliability Physics Symposium, Monterey, CA, USA, 19–23 April 2015; pp. 3–3.

- van der Veen, M.H.; Heylen, N.; Pedreira, O.V.; Ciofi, I.; Decoster, S.; Gonzalez, V.V.; Jourdan, N.; Struyf, H.; Croes, K.; Wilson, C.J.; Tőkei, Zs. Damascene Benchmark of Ru, Co and Cu in Scaled Dimensions. In Proceedings of the 2018 IEEE International Interconnect Technology Conference (IITC).

- Jourdain, A.; et al. Buried Power Rails and Nano-Scale TSV: Technology Boosters for Backside Power Delivery Network and 3D Heterogeneous Integration. In Proceedings of the 2022 IEEE 72nd Electronic Components and Technology Conference (ECTC), 2022; pp. 1531–1538.

- Sisto, G.; et al. IR-Drop Analysis of Hybrid Bonded 3D-ICs with Backside Power Delivery and μ- & n- TSVs. In Proceedings of the 2021 IEEE International Interconnect Technology Conference (IITC), 2021; pp. 1–3.

- Oprins, H.; et al. Package Level Thermal Analysis of Backside Power Delivery Network (BS-PDN) Configurations. In Proceedings of the 21st IEEE Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems (ITherm 2022).

- Zahedmanesh, H.; Croes, K. Modelling Stress Evolution and Voiding in Advanced Copper Nano-Interconnects Under Thermal Gradients. Microelectronics Reliability 111, 113769.

- Ding, Y.; et al. Thermomigration-Induced Void Formation in Cu-Interconnects - Assessment of Main Physical Parameters. In Proceedings of the 2023 IEEE International Reliability Physics Symposium (IRPS), Monterey, CA, USA, 2023; pp. 1–7.

- Kitchin, J. Statistical Electromigration Budgeting for Reliable Design and Verification in a 300-MHz Microprocessor. In Digest of Technical Papers, Symposium on VLSI Circuits, Kyoto, Japan, 1995; pp. 115–116.

- Li, B.; McLaughlin, P.S.; Bickford, J.P.; Habitz, P.; Netrabile, D.; Sullivan, T.D. Statistical Evaluation of Electromigration Reliability at Chip Level. IEEE Transactions on Device and Materials Reliability 2011, 11, 86–91.

- Ho, P.S.; Hu, C.-K.; Gall, M.; Sukharev, V. Assessment of Electromigration Damage in Large On-Chip Power Grids. In Electromigration in Metals: Fundamentals to Nano-Interconnects; Cambridge University Press, 2022; pp. 380–413.

- Zhou, C.; Wong, R.; Wen, S.-J.; Kim, C.H. Electromigration Effects in Power Grids Characterized Using an On-Chip Test Structure with Poly Heaters and Voltage Tapping Points. In Proceedings of the 2018 IEEE Symposium on VLSI Technology, Honolulu, HI, USA, 2018; pp. 19–20.

- Pande, N.; et al. Characterizing Electromigration Effects in a 16nm FinFET Process Using a Circuit Based Test Vehicle. In Proceedings of the 2019 IEEE International Electron Devices Meeting (IEDM), San Francisco, CA, USA, 2019; pp. 5.3.1–5.3.4.

- Lin, M.H.; Lin, C.I.; Wang, Y.C.; Wang, A. Redundancy Effect on Electromigration Failure Time in Power Grid Networks. In Proceedings of the 2022 IEEE International Reliability Physics Symposium (IRPS), Dallas, TX, USA, 2022; pp. 1–7.

- Lin, M.H.; Oates, A.S. Electromigration Failure of Circuit Interconnects. In Proceedings of the 2016 IEEE International Reliability Physics Symposium (IRPS), Pasadena, CA, USA, 2016; pp. 5–8.

- Korhonen, M.A.; Borgesen, P.; Tu, K.N.; Li, C.-Y. Stress Evolution Due to Electromigration in Confined Metal Lines. J. Appl. Phys. 1993, 73, 3790–3799.

- Sarychev, M.E.; Zhitnikov, Y.V. General Model for Mechanical Stress Evolution During Electromigration. J. Appl. Phys. 1999, 86, 3068–3075.

- Gleixner, R.J.; Nix, W.D. A Physically Based Model of Electromigration and Stress-Induced Void Formation in Microelectronic Interconnects. J. Appl. Phys. 1999, 86, 1932–1944.

- Bower, A.F.; Shankar, S. A Finite Element Model of Electromigration Induced Void Nucleation, Growth and Evolution in Interconnects. Modell. Simul. Mater. Sci. Eng. 2007, 15, 923–940.

- Ceric, H.; de Orio, R.L.; Cervenka, J.; Selberherr, S. Copper Microstructure Impact on Evolution of Electromigration Induced Voids. In Proceedings of the International Conference on Simulation of Semiconductor Processes and Devices, 2009; pp. 1–4.

- Ceric, H.; Zahedmanesh, H.; Croes, K. Analysis of Electromigration Failure of Nano-Interconnects Through a Combination of Modeling and Experimental Methods. Microelectron. Reliab. 2019, 100–101, 113362.

- Zahedmanesh, H.; Pedreira, O.V.; Tőkei, Z.; Croes, K. Investigating the Electromigration Limits of Cu Nanointerconnects Using a Novel Hybrid Physics-Based Model. J. Appl. Phys. 2019, 126, 055102.

- Kteyan, A.; Sukharev, V. Physics-Based Simulation of Stress-Induced and Electromigration-Induced Voiding and Their Interactions in On-Chip Interconnects. Microelectron. Eng. 2021, 247, 111585.

- Zahedmanesh, H.; Pedreira, O.V.; Tőkei, Z.; Croes, K. Electromigration Limits of Copper Nano-Interconnects. In Proceedings of the 2021 IEEE International Reliability Physics Symposium (IRPS), Monterey, CA, USA, 21–25 March 2021; pp. 1–6.

- Saleh, A.S.; Ceric, H.; Zahedmanesh, H. Void-Dynamics in Nano-Wires and the Role of Microstructure Investigated Via a Multi-Scale Physics-Based Model. J. Appl. Phys. 2021, 129, 125102.

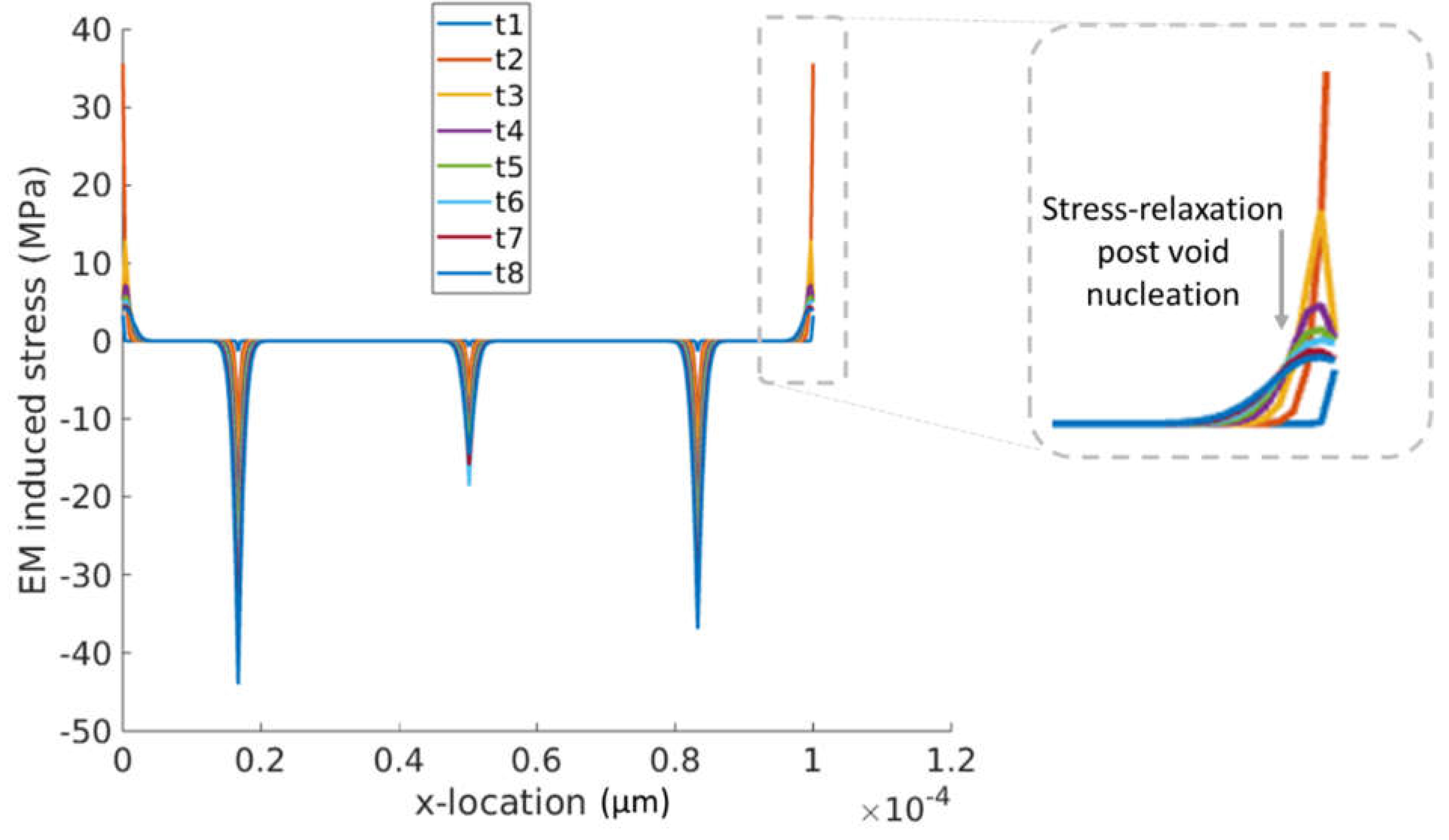

- Saleh, A.; Croes, K.; Ceric, H.; De Wolf, I.; Zahedmanesh, H. A Framework for Combined Simulations of Electromigration Induced Stress Evolution, Void Nucleation, and Its Dynamics: Application to Nano-Interconnect Reliability. J. Appl. Phys. 2023, 134, 135102.

- Saleh, A.S.; Zahedmanesh, H.; Ceric, H.; De Wolf, I.; Croes, K. Impact of Via Geometry and Line Extension on Via-Electromigration in Nano-Interconnects. In Proceedings of the 2023 IEEE International Reliability Physics Symposium (IRPS), Monterey, CA, USA, 2023; pp. 1–4.

- Chatterjee, S.; Fawaz, M.; Najm, F.N. Redundancy-Aware Power Grid Electromigration Checking Under Workload Uncertainties. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2015, 34, 1509–1522.

- Huang, X.; Kteyan, A.; Tan, S.X.D.; Sukharev, V. Physics-Based Electromigration Models and Full-Chip Assessment for Power Grid Networks. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2016, 35, 1848–1861.

- Sukharev, V.; Najm, F.N. Electromigration Check: Where the Design and Reliability Methodologies Meet. IEEE Trans. Device Mater. Reliab. 2018, 18, 498–507.

- Kteyan, A.; Sukharev, V.; Volkov, A.; Choy, J.-H.; Najm, F.; et al. Electromigration Assessment in Power Grids with Account of Redundancy and Non-Uniform Temperature Distribution. In Proceedings of the ISPD’23 - International Symposium on Physical Design, Virtual Event, USA, March 2023; p. 124.

- Zahedmanesh, H.; Roussel, P.; Ciofi, I.; Croes, K. A Pragmatic Network-Aware Paradigm for System-Level Electromigration Predictions at Scale. In Proceedings of the 2023 IEEE International Reliability Physics Symposium (IRPS), Monterey, CA, USA, 2023; pp. 1–6.

- Korhonen, M.A.; Borgesen, P.; Brown, D.D.; Li, C.-Y. Microstructure Based Statistical Model of Electromigration Damage in Confined Line Metallizations in the Presence of Thermally Induced Stresses. J. Appl. Phys. 1993, 74, 4995.

- Witt, C.; et al. Electromigration: Void Dynamics. IEEE Trans. Device Mater. Reliab. 2016, 16, 446–451.

- Hau-Riege, C.S.; Hau-Riege, S.P.; Marathe, A.P. The Effect of Interlevel Dielectric on the Critical Tensile Stress to Void Nucleation for the Reliability of Cu Interconnects. J. Appl. Phys. 2004, 96, 5792–5796.

- Sakai, T.; Nakajima, M.; Tokaji, K.; Hasegawa, N. Statistical Distribution Patterns in Mechanical and Fatigue Properties of Metallic Materials. Mater. Sci. Res. Int. 1997, 3, 63–74.

- Lin, M.H.; et al. Electromigration Lifetime Improvement of Copper Interconnect by Cap/Dielectric Interface Treatment and Geometrical Design. IEEE Trans. Electron Devices 2005, 52, 2602–2608.

- Liu, W.; et al. Study of Upstream Electromigration Bimodality and Its Improvement in Cu Low-k Interconnects. In Proceedings of the 2010 IEEE International Reliability Physics Symposium, Anaheim, CA, USA, 2010; pp. 906–910.

- Zahedmanesh, H.; Ciofi, I.; Zografos, O.; Croes, K.; Badaroglu, M. System-Level Simulation of Electromigration in a 3 nm CMOS Power Delivery Network: The Effect of Grid Redundancy, Metallization Stack and Standard-Cell Currents. In Proceedings of the 2022 IEEE International Reliability Physics Symposium (IRPS), Dallas, TX, USA, 27–31 March 2022; pp. 1–7.

- Zahedmanesh, H.; Ciofi, I.; Zografos, O.; Badaroglu, M.; Croes, K. A Novel System-Level Physics-Based Electromigration Modelling Framework: Application to the Power Delivery Network. In Proceedings of the ACM/IEEE International Workshop on System Level Interconnect Prediction (SLIP), Munich, Germany, 4 November 2021; pp. 1–7.

- Blech, I. Electromigration in Thin Aluminum Films on Titanium Nitride. J. Appl. Phys. 1976, 47, 1203–1208.

| Parameter | Value | Description |
|---|---|---|
| | | Atomic diffusivity |
| | 49 Ω·nm | Resistivity |
| | 15 GPa | Interconnect effective bulk modulus |
| w | 45 nm | Linewidth |
| h | 90 nm | Line height |
| | 3 | Effective charge |
| | 1.60218×10⁻¹⁹ C | Electron charge |
| | 1.182×10⁻²⁹ m³ | Atomic volume |
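The table parameters can be combined into a quick order-of-magnitude check. The sketch below assumes a Korhonen-type stress-evolution formulation (Korhonen et al., 1993, in the reference list), in which a line with blocking boundaries driven at current density j settles into a linear hydrostatic stress profile with gradient eZ*ρj/Ω; for a line that starts stress-free, atoms are only redistributed, so the two ends sit at ±(eZ*ρj/Ω)·L/2. The current density (1 MA/cm²) and line length (10 µm) are hypothetical inputs, not values from this study, and the function names are illustrative. Atomic diffusivity and bulk modulus are not used here because, in this formulation, they set how fast the steady state is approached rather than the steady-state profile itself.

```python
"""Order-of-magnitude EM stress estimate from the Table parameters.

Assumes a Korhonen-type model: in a line with blocking boundaries, the
steady-state hydrostatic stress gradient is G = e * Z* * rho * j / Omega.
The current density and line length used below are hypothetical inputs.
"""

E_CHARGE = 1.60218e-19  # electron charge [C]
Z_EFF = 3.0             # effective charge number [-]
RHO = 49e-9             # resistivity: 49 Ohm*nm expressed in Ohm*m
OMEGA = 1.182e-29       # atomic volume [m^3]
WIDTH = 45e-9           # line width w [m]
HEIGHT = 90e-9          # line height h [m]


def stress_gradient(j: float) -> float:
    """Steady-state stress gradient [Pa/m] for current density j [A/m^2]."""
    return E_CHARGE * Z_EFF * RHO * j / OMEGA


def line_current(j: float) -> float:
    """Total current [A] carried by the w x h cross-section at density j."""
    return j * WIDTH * HEIGHT


def steady_state_end_stress(j: float, length: float) -> float:
    """Stress magnitude [Pa] at either end of an initially stress-free line.

    The linear steady-state profile has zero mean (atoms are only
    redistributed), so the ends sit at +/- G * L / 2: tensile at the
    cathode end, compressive at the anode end.
    """
    return stress_gradient(j) * length / 2.0


if __name__ == "__main__":
    j = 1e10        # hypothetical 1 MA/cm^2, expressed in A/m^2
    length = 10e-6  # hypothetical 10 um blocked line
    print(f"stress gradient: {stress_gradient(j) / 1e12:.1f} MPa/um")
    print(f"line current:    {line_current(j) * 1e6:.1f} uA")
    print(f"end stress:      {steady_state_end_stress(j, length) / 1e6:.1f} MPa")
```

With these inputs the gradient comes out at roughly 19.9 MPa/µm, the 45 nm × 90 nm cross-section carries about 40.5 µA, and the ends of the 10 µm line reach roughly ±99.6 MPa. Because the gradient scales linearly with j, doubling the current density doubles every stress value in this estimate.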

| | 0 | 0.58 | 0.63 | 0.94 | 1.2 | 1.89 | 3.05 | 3.63 | 4.58 |
|---|---|---|---|---|---|---|---|---|---|
| Freq. | 4 | 4 | 4 | 4 | 8 | 2 | 12 | 4 | 4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).