Submitted: 10 June 2024

Posted: 11 June 2024

Abstract

Keywords:

1. Introduction

- Proposing in-text encoding of pauses and other language features (the filler words uh/um).

- Thoroughly evaluating different pause types to provide insight into how they affect model performance.

- Proposing the use of contrastive learning to improve performance.

- Combining in-text pause encoding and DualCL in a multitask manner.

2. Materials and Methods

2.1. Dataset Preparation

2.2. In-Text Pause Encoding

2.3. Modeling

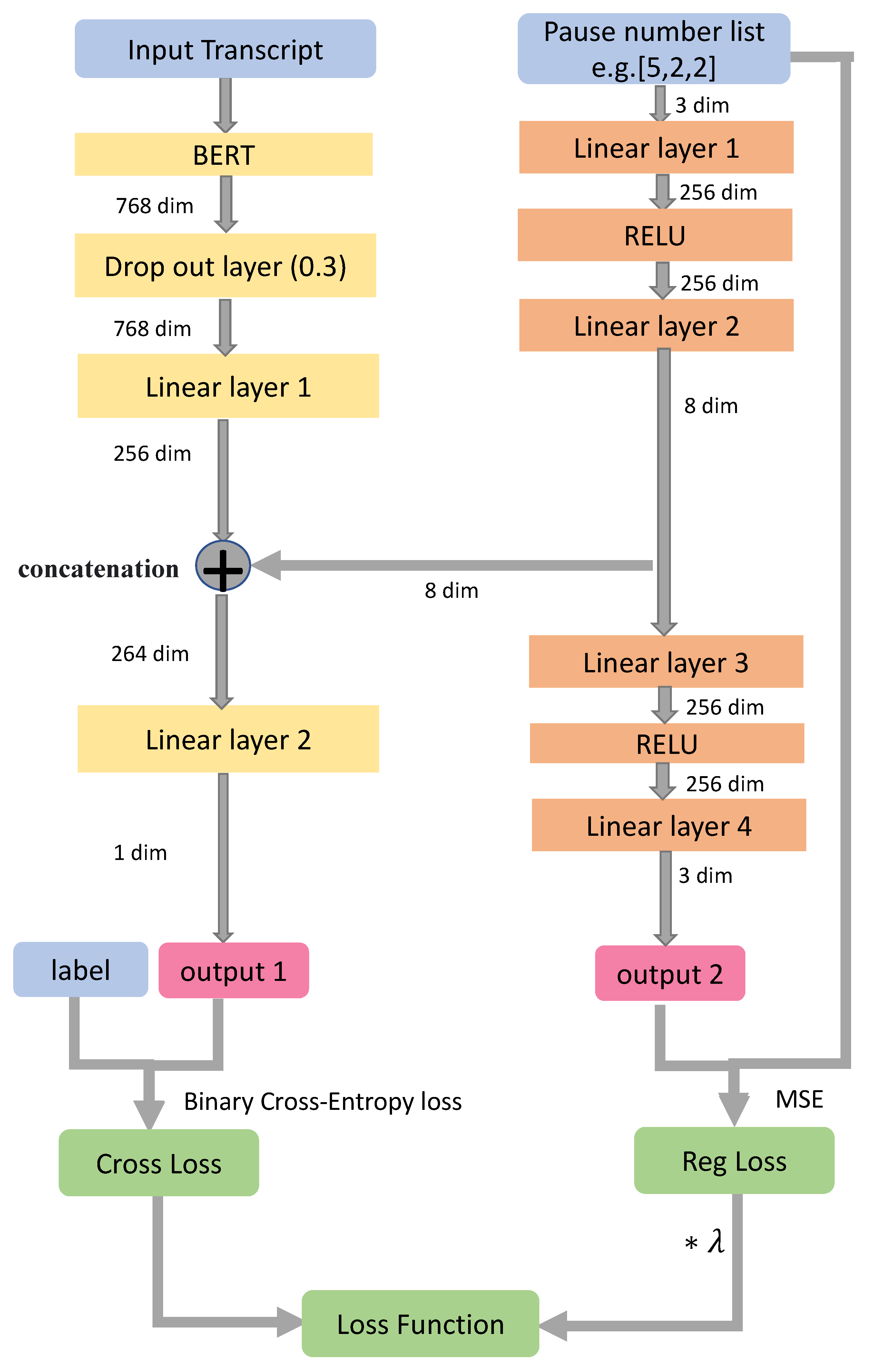

2.3.1. Model for In-Text Encoding Scheme

2.3.2. Contrastive Learning

3. Results

3.1. In-Text Pause Encoding Results

3.2. Filler Word Encoding Results

3.3. Contrastive Learning

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Effects of Pause Subsets

| Pauses Included | Acc. | Δ Acc. | F1 | Δ F1 |
|---|---|---|---|---|
| All Pauses | 0.79 | - | 0.80 | - |
| Short Removed | 0.78 | -0.01 | 0.78 | -0.02 |
| Medium Removed | 0.79 | - | 0.79 | -0.01 |
| Long Removed | 0.79 | - | 0.79 | -0.01 |
| Short Only | 0.78 | -0.01 | 0.78 | -0.02 |
| Medium Only | 0.79 | - | 0.80 | - |
| Long Only | 0.79 | - | 0.78 | -0.02 |
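The second and fourth numeric columns of the table above are consistent with differences from the All Pauses row, with a dash where nothing changes. The short Python sketch below recomputes them from the accuracy and F1 values in the table. The function name `deltas` and the dictionary layout are illustrative choices, not part of the original work.

```python
# Accuracy and F1 values copied from the Appendix A table above.
results = {
    "All Pauses": (0.79, 0.80),
    "Short Removed": (0.78, 0.78),
    "Medium Removed": (0.79, 0.79),
    "Long Removed": (0.79, 0.79),
    "Short Only": (0.78, 0.78),
    "Medium Only": (0.79, 0.80),
    "Long Only": (0.79, 0.78),
}


def deltas(table, baseline="All Pauses"):
    """Return (accuracy change, F1 change) of every row relative to the baseline row."""
    base_acc, base_f1 = table[baseline]
    return {
        name: (round(acc - base_acc, 2), round(f1 - base_f1, 2))
        for name, (acc, f1) in table.items()
        if name != baseline
    }


for name, (d_acc, d_f1) in deltas(results).items():
    print(f"{name}: d_acc={d_acc:+.2f}, d_f1={d_f1:+.2f}")
```

Rounding to two decimals matches the precision reported in the table, so a change of zero corresponds to a dash.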

| Input Type | Short Removed acc | Short Removed F1 | Medium Removed acc | Medium Removed F1 | Long Removed acc | Long Removed F1 | Short Only acc | Short Only F1 | Medium Only acc | Medium Only F1 | Long Only acc | Long Only F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 0.53 ± 0.09 | 0.39 ± 0.30 | 0.53 ± 0.08 | 0.40 ± 0.28 | 0.53 ± 0.07 | 0.40 ± 0.30 | 0.51 ± 0.09 | 0.32 ± 0.35 | 0.58 ± 0.08 | 0.56 ± 0.26 | 0.52 ± 0.07 | 0.39 ± 0.33 |
| | 0.50 ± 0.06 | 0.36 ± 0.31 | 0.53 ± 0.08 | 0.43 ± 0.20 | 0.55 ± 0.09 | 0.43 ± 0.27 | 0.51 ± 0.06 | 0.39 ± 0.30 | 0.52 ± 0.09 | 0.35 ± 0.34 | 0.52 ± 0.08 | 0.31 ± 0.31 |
| | 0.84 ± 0.07 | 0.86 ± 0.06 | 0.83 ± 0.06 | 0.84 ± 0.06 | 0.83 ± 0.07 | 0.85 ± 0.06 | 0.82 ± 0.07 | 0.84 ± 0.07 | 0.83 ± 0.06 | 0.85 ± 0.05 | 0.83 ± 0.06 | 0.85 ± 0.06 |
| | 0.82 ± 0.07 | 0.84 ± 0.06 | 0.84 ± 0.06 | 0.86 ± 0.05 | 0.81 ± 0.05 | 0.83 ± 0.05 | 0.83 ± 0.06 | 0.85 ± 0.05 | 0.82 ± 0.06 | 0.84 ± 0.05 | 0.83 ± 0.07 | 0.85 ± 0.06 |
| | 0.83 ± 0.07 | 0.85 ± 0.06 | 0.83 ± 0.05 | 0.84 ± 0.05 | 0.83 ± 0.07 | 0.85 ± 0.06 | 0.81 ± 0.08 | 0.84 ± 0.06 | 0.84 ± 0.06 | 0.85 ± 0.06 | 0.82 ± 0.06 | 0.84 ± 0.05 |
| | 0.81 ± 0.07 | 0.83 ± 0.06 | 0.83 ± 0.06 | 0.85 ± 0.06 | 0.83 ± 0.06 | 0.85 ± 0.05 | 0.84 ± 0.07 | 0.85 ± 0.07 | 0.83 ± 0.07 | 0.85 ± 0.06 | 0.81 ± 0.08 | 0.84 ± 0.07 |
| | 0.81 ± 0.05 | 0.83 ± 0.04 | 0.84 ± 0.06 | 0.85 ± 0.06 | 0.81 ± 0.08 | 0.84 ± 0.06 | 0.82 ± 0.06 | 0.85 ± 0.06 | 0.82 ± 0.07 | 0.84 ± 0.07 | 0.83 ± 0.08 | 0.85 ± 0.07 |
| | 0.81 ± 0.06 | 0.83 ± 0.05 | 0.82 ± 0.05 | 0.84 ± 0.04 | 0.83 ± 0.06 | 0.8 ± 0.05 | 0.83 ± 0.06 | 0.85 ± 0.06 | 0.82 ± 0.06 | 0.85 ± 0.05 | 0.82 ± 0.06 | 0.84 ± 0.06 |
| | 0.81 ± 0.07 | 0.84 ± 0.06 | 0.83 ± 0.06 | 0.85 ± 0.05 | 0.83 ± 0.07 | 0.85 ± 0.06 | 0.82 ± 0.08 | 0.84 ± 0.07 | 0.81 ± 0.06 | 0.84 ± 0.04 | 0.82 ± 0.08 | 0.84 ± 0.07 |
| | 0.84 ± 0.06 | 0.85 ± 0.06 | 0.82 ± 0.07 | 0.84 ± 0.06 | 0.82 ± 0.07 | 0.85 ± 0.06 | 0.82 ± 0.06 | 0.83 ± 0.05 | 0.83 ± 0.06 | 0.85 ± 0.06 | 0.83 ± 0.08 | 0.86 ± 0.07 |
| | 0.82 ± 0.07 | 0.84 ± 0.06 | 0.83 ± 0.06 | 0.84 ± 0.06 | 0.83 ± 0.07 | 0.85 ± 0.06 | 0.81 ± 0.08 | 0.83 ± 0.07 | 0.83 ± 0.06 | 0.85 ± 0.05 | 0.82 ± 0.06 | 0.84 ± 0.05 |
| | 0.82 ± 0.08 | 0.84 ± 0.07 | 0.83 ± 0.06 | 0.85 ± 0.05 | 0.83 ± 0.06 | 0.84 ± 0.06 | 0.82 ± 0.06 | 0.84 ± 0.05 | 0.82 ± 0.06 | 0.84 ± 0.05 | 0.83 ± 0.06 | 0.85 ± 0.06 |
| | 0.83 ± 0.07 | 0.85 ± 0.06 | 0.81 ± 0.05 | 0.83 ± 0.05 | 0.83 ± 0.06 | 0.84 ± 0.06 | 0.82 ± 0.06 | 0.84 ± 0.05 | 0.82 ± 0.07 | 0.84 ± 0.06 | 0.82 ± 0.08 | 0.85 ± 0.06 |
| | 0.83 ± 0.08 | 0.85 ± 0.06 | 0.83 ± 0.06 | 0.84 ± 0.05 | 0.81 ± 0.06 | 0.83 ± 0.05 | 0.81 ± 0.07 | 0.84 ± 0.05 | 0.82 ± 0.07 | 0.85 ± 0.06 | 0.83 ± 0.06 | 0.85 ± 0.05 |
| | 0.82 ± 0.07 | 0.85 ± 0.06 | 0.82 ± 0.07 | 0.84 ± 0.06 | 0.83 ± 0.07 | 0.85 ± 0.07 | 0.83 ± 0.06 | 0.84 ± 0.05 | 0.84 ± 0.06 | 0.85 ± 0.06 | 0.82 ± 0.08 | 0.84 ± 0.06 |
| | 0.82 ± 0.07 | 0.85 ± 0.06 | 0.82 ± 0.07 | 0.85 ± 0.06 | 0.81 ± 0.08 | 0.85 ± 0.07 | 0.83 ± 0.06 | 0.85 ± 0.05 | 0.84 ± 0.06 | 0.86 ± 0.06 | 0.83 ± 0.06 | 0.84 ± 0.06 |
| | 0.78 | 0.78 | 0.79 | 0.79 | 0.79 | 0.79 | 0.78 | 0.78 | 0.79 | 0.80 | 0.79 | 0.78 |

| Input Type | Example |
|---|---|
| Original | “sen1 (...) sen2 (.),sen3 , (..) …” |
| | “sen1 sen2 sen3 ...” |
| | “sen1 Lo sen2 Sh sen3 Me ...” |
| | “sen1 sen2 sen3 ... Lo Sh Me ...” |
| | “sen1, sen2, sen3 ..., #ShSh#MeMe#LoLo” |
| | “sen1, sen2, sen3 ...,#Sh#Me#Lo” |
| | “sen1 Lo sen2 Sh sen3 Me ..., Lo Sh Me ...” |
| | “sen1 Lo sen2 Sh sen3 Me ..., #ShSh#MeMe#LoLo ...” |
| | “sen1 Lo sen2 Sh sen3 Me ..., #Sh#Me#Lo” |
| | “sen1, sen2, sen3 ..., Lo Sh Me ..., #ShSh#MeMe#LoLo ...” |
| | “sen1, sen2, sen3 ..., Lo Sh Me ..., #Sh#Me#Lo” |
| | “sen1, sen2, sen3 , ..., #ShSh#MeMe#LoLo ..., #Sh#Me#Lo” |
| | “sen1 Lo sen2 Sh sen3 Me ..., Lo Sh Me ..., #ShSh#MeMe#LoLo...” |
| | “sen1 Lo sen2 Sh sen3 Me ..., Lo Sh Me ..., #Sh#Me#Lo” |
| | “sen1 Lo sen2 Sh sen3 Me ..., #ShSh#MeMe#LoLo ..., #Sh#Me#Lo” |
| | “sen1, sen2, sen3 ..., Lo Sh Me ..., #ShSh#MeMe#LoLo ..., #Sh#Me#Lo” |
| | “sen1 Lo sen2 Sh sen3 Me ..., Lo Sh Me ..., #ShSh#MeMe#LoLo ...,#Sh#Me#Lo” |
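The examples above suggest that the pause markers `(.)`, `(..)`, and `(...)` in the original transcript map to the tokens `Sh`, `Me`, and `Lo`. The following Python sketch illustrates three of the simpler variants under that assumption: pauses removed, pauses replaced in place, and the pause sequence appended at the end of the text. The function names, the regular expression, and the whitespace handling are illustrative assumptions rather than the authors' implementation, and the `#`-prefixed variants are not covered.

```python
import re

# Assumed mapping, read off the "Original" row and the in-place encoded row:
# (.) -> Sh (short), (..) -> Me (medium), (...) -> Lo (long).
PAUSE_TOKENS = {"(.)": "Sh", "(..)": "Me", "(...)": "Lo"}
PAUSE_RE = re.compile(r"\(\.{1,3}\)")


def _tidy(text):
    """Collapse the extra whitespace left after markers are replaced or removed."""
    return re.sub(r"\s+", " ", text).strip()


def strip_pauses(transcript):
    """Drop every pause marker, keeping only the words."""
    return _tidy(PAUSE_RE.sub(" ", transcript))


def encode_in_place(transcript):
    """Replace each pause marker with its token at the position where it occurs."""
    return _tidy(PAUSE_RE.sub(lambda m: f" {PAUSE_TOKENS[m.group(0)]} ", transcript))


def encode_at_end(transcript):
    """Remove the markers from the text and append the pause tokens in order."""
    tokens = [PAUSE_TOKENS[m.group(0)] for m in PAUSE_RE.finditer(transcript)]
    return _tidy(strip_pauses(transcript) + " " + " ".join(tokens))


# Hypothetical transcript with placeholder sentences, as in the table.
sample = "sen1 (...) sen2 (.) sen3 (..)"
print(strip_pauses(sample))
print(encode_in_place(sample))
print(encode_at_end(sample))
```

Combined variants in the table could be built by concatenating the outputs of these functions, although the exact separators used by the authors are not specified here.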

| Input Type | Acc. | F1 |
|---|---|---|
| | 0.58 ± 0.11 | 0.56 ± 0.24 |
| | 0.50 ± 0.07 | 0.46 ± 0.25 |
| | 0.83 ± 0.06 | 0.85 ± 0.05 |
| | 0.83 ± 0.06 | 0.84 ± 0.06 |
| | 0.81 ± 0.08 | 0.84 ± 0.06 |
| | 0.83 ± 0.07 | 0.84 ± 0.07 |
| | 0.84 ± 0.06 | 0.85 ± 0.06 |
| | 0.82 ± 0.06 | 0.83 ± 0.06 |
| | 0.83 ± 0.06 | 0.84 ± 0.05 |
| | 0.82 ± 0.06 | 0.84 ± 0.05 |
| | 0.82 ± 0.06 | 0.84 ± 0.06 |
| | 0.83 ± 0.07 | 0.85 ± 0.06 |
| | 0.83 ± 0.06 | 0.85 ± 0.05 |
| | 0.83 ± 0.05 | 0.85 ± 0.04 |
| | 0.82 ± 0.07 | 0.84 ± 0.06 |
| | 0.83 ± 0.08 | 0.85 ± 0.07 |
| | 0.79 | 0.80 |
| | 0.56 ± 0.11 | 0.42 ± 0.27 |

| Input Type | Phase 1 Acc. | Phase 1 F1 | Phase 2 Acc. | Phase 2 F1 | Phase 3 Acc. | Phase 3 F1 |
|---|---|---|---|---|---|---|
| | 0.53 | 0.41 | 0.83 | 0.85 | 0.84 | 0.85 |
| | 0.57 | 0.46 | 0.83 | 0.85 | 0.84 | 0.86 |
| | 0.82 | 0.85 | 0.84 | 0.86 | 0.83 | 0.85 |
| | 0.82 | 0.84 | 0.83 | 0.85 | 0.83 | 0.86 |
| | 0.84 | 0.86 | 0.84 | 0.85 | 0.82 | 0.84 |
| | 0.84 | 0.86 | 0.84 | 0.85 | 0.84 | 0.86 |
| | 0.84 | 0.86 | 0.84 | 0.85 | 0.83 | 0.84 |
| | 0.84 | 0.86 | 0.84 | 0.85 | 0.83 | 0.85 |
| | 0.83 | 0.85 | 0.81 | 0.84 | 0.82 | 0.84 |
| | 0.83 | 0.85 | 0.83 | 0.85 | 0.83 | 0.85 |
| | 0.82 | 0.84 | 0.83 | 0.85 | 0.83 | 0.85 |
| | 0.82 | 0.84 | 0.82 | 0.84 | 0.84 | 0.85 |
| | 0.83 | 0.85 | 0.84 | 0.86 | 0.82 | 0.84 |
| | 0.82 | 0.84 | 0.82 | 0.84 | 0.85 | 0.86 |
| | 0.84 | 0.85 | 0.81 | 0.84 | 0.82 | 0.84 |
| | 0.83 | 0.86 | 0.84 | 0.85 | 0.83 | 0.84 |
| | 0.79 | 0.80 | 0.83 | 0.85 | 0.83 | 0.85 |

| Input Type | Acc. | F1 |
|---|---|---|
| | 0.56 ± 0.11 | 0.42 ± 0.27 |
| + CL | 0.85 ± 0.04 | 0.84 ± 0.04 |
| with Fillers + CL | 0.86 ± 0.04 | 0.85 ± 0.04 |
| + CL + Aug | 0.87 ± 0.05 | 0.85 ± 0.05 |
| with Fillers + CL + Aug | 0.85 ± 0.05 | 0.84 ± 0.05 |
| | 0.85 ± 0.06 | 0.86 ± 0.06 |
| + CL | 0.87 ± 0.08 | 0.86 ± 0.09 |

| Input Type | Acc. | F1 |
|---|---|---|
| | 0.83 ± 0.08 | 0.82 ± 0.09 |
| | 0.85 ± 0.08 | 0.84 ± 0.10 |
| | 0.84 ± 0.08 | 0.81 ± 0.11 |
| | 0.82 ± 0.08 | 0.80 ± 0.07 |
| | 0.85 ± 0.08 | 0.83 ± 0.11 |
| | 0.84 ± 0.09 | 0.82 ± 0.11 |
| | 0.80 ± 0.10 | 0.78 ± 0.11 |
| | 0.81 ± 0.09 | 0.79 ± 0.11 |
| | 0.78 ± 0.08 | 0.79 ± 0.09 |
| | 0.84 ± 0.09 | 0.82 ± 0.10 |
| | 0.82 ± 0.08 | 0.80 ± 0.10 |
| | 0.79 ± 0.08 | 0.77 ± 0.13 |
| | 0.77 ± 0.09 | 0.72 ± 0.15 |
| | 0.79 ± 0.09 | 0.77 ± 0.12 |
| | 0.80 ± 0.09 | 0.79 ± 0.14 |
| | 0.83 ± 0.07 | 0.81 ± 0.09 |
| | 0.82 | 0.80 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).