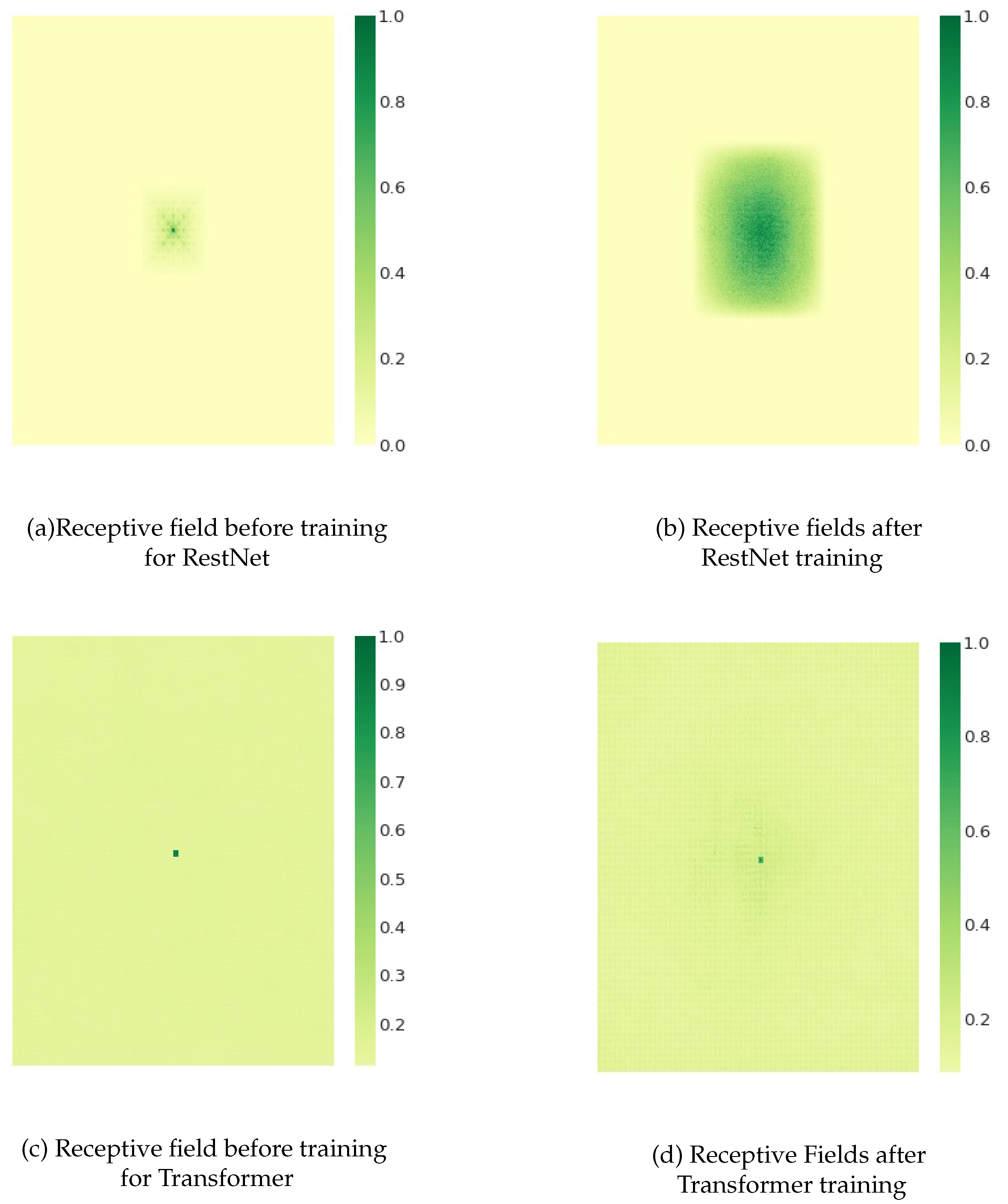

In this section, we describe the experimental procedure in detail. It comprises three parts: the experimental setup, the qualitative analysis, and the quantitative analysis of the results. We introduce each part in turn below.

4.1. Introduction of Experiments

This part introduces the preparation work before model training. To evaluate the performance of the proposed model objectively, we used the same datasets as Rezanezhad et al. [63] for training. Specifically, we used the recent DIBCO datasets [8,9,10,11,12,13,14,15,16], the Bali Palm Leaf dataset [82] and the PHIBC dataset [83] of the Persian Heritage document image Binarization Competition.

For the comparative experimental analysis, we selected DIBCO2012 [11], DIBCO2017 [15] and DIBCO2018 [16] as validation datasets. The rest of the data serves as the training set, which is consistent with the setup of Rezanezhad et al. [63] and of the other models [49,59,64,69,81].

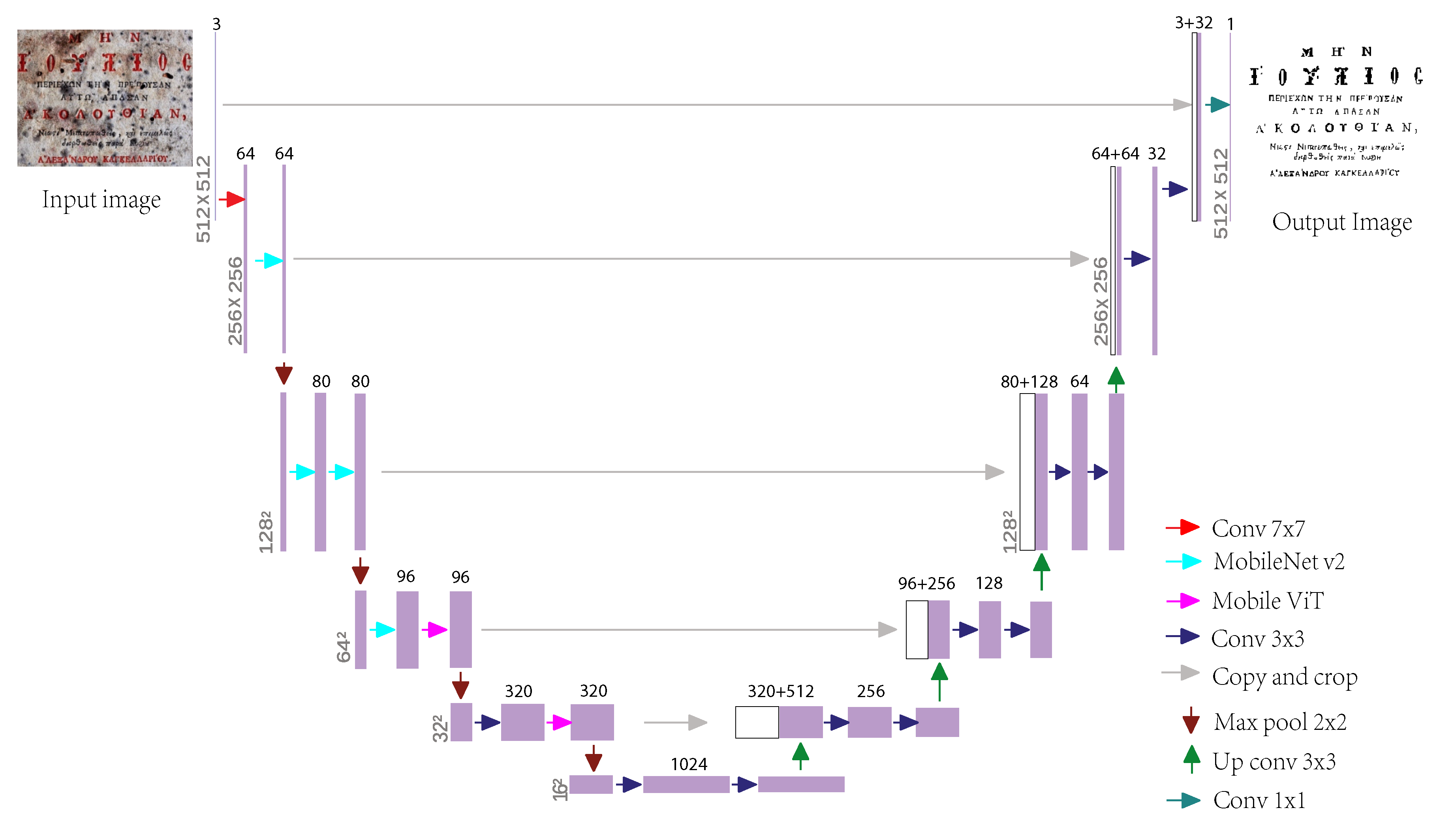

The training image size, learning rate, and number of epochs for our document image binarization model were all tuned through extensive experiments. Training was divided into two stages. In the first stage, the training images were not cropped, so that the model learned the overall structural features from complete images after data augmentation; the number of epochs was set to 30. The second stage focuses on learning local detail. In this stage, the original document images are diced into patches, which increases the amount of training data to more than four times that of the first stage, so the number of epochs is set to 20.
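The dicing step of the second stage can be pictured with a minimal sketch. The function name `tile_image`, the patch size, and the choice of non-overlapping patches are assumptions made for illustration; the text does not state how the images are actually cut.

```python
def tile_image(image, patch_h, patch_w):
    """Split a 2D image (a list of rows) into non-overlapping patches.

    Edge patches are simply smaller when the image size is not a multiple
    of the patch size; a real pipeline might pad or overlap instead
    (this behaviour is an assumption, not taken from the paper).
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch_h):
        for left in range(0, width, patch_w):
            patch = [row[left:left + patch_w] for row in image[top:top + patch_h]]
            patches.append(patch)
    return patches


# A toy 4x6 "image" whose pixel values encode their own position.
image = [[10 * r + c for c in range(6)] for r in range(4)]
patches = tile_image(image, patch_h=2, patch_w=3)
print(len(patches), patches[0])
```

Cutting every image into a 2 by 2 grid, as in this toy call, yields four patches per image; any finer or overlapping grid yields more than four.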

The loss function for training document image binarization models is often based on the F-measure (FM), as described in [19,63]. The F-measure (FM) of an image is defined in formula (5):

$$\mathrm{FM} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \qquad (5)$$

where $\mathrm{Precision} = \frac{TP}{TP + FP}$, $\mathrm{Recall} = \frac{TP}{TP + FN}$, and the three quantities $TP$, $FP$, and $FN$ denote, respectively, the true positives (foreground text pixels correctly classified as text), the false positives (background pixels incorrectly classified as text foreground), and the false negatives (text foreground pixels incorrectly classified as background). During training, we set the foreground pixel value to 1 ("positive") and the background pixel value to 0 ("negative") in the true binary label (GT). Substituting the expressions for $\mathrm{Precision}$ and $\mathrm{Recall}$ into formula (5) gives the simplified objective function:

$$\mathrm{FM} = \frac{2\,TP}{2\,TP + FP + FN} \qquad (6)$$
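One common way to turn the simplified F-measure into a differentiable training loss is to replace the hard counts with soft counts computed from predicted probabilities and to minimize one minus FM. The sketch below illustrates that idea in plain Python; the function name, the `eps` smoothing term, and the choice of minimizing `1 - FM` are assumptions, since the text does not give the exact form used in training.

```python
def soft_f_measure_loss(pred, gt, eps=1e-7):
    """Return 1 - FM computed from soft counts.

    pred: predicted foreground probabilities in [0, 1], flattened.
    gt:   ground-truth labels, 1 for text and 0 for background.
    eps:  small constant that avoids division by zero (an assumption).
    """
    tp = sum(p * g for p, g in zip(pred, gt))        # soft true positives
    fp = sum(p * (1 - g) for p, g in zip(pred, gt))  # soft false positives
    fn = sum((1 - p) * g for p, g in zip(pred, gt))  # soft false negatives
    fm = (2 * tp + eps) / (2 * tp + fp + fn + eps)
    return 1.0 - fm


gt = [1, 1, 0, 0]
perfect = soft_f_measure_loss([1.0, 1.0, 0.0, 0.0], gt)
noisy = soft_f_measure_loss([0.8, 0.6, 0.3, 0.1], gt)
print(perfect, noisy)
```

A perfect prediction gives a loss of zero, and the loss grows as probability mass moves onto background pixels or away from text pixels.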

An important problem in model training is the insufficient amount of training data. The recent DIBCO datasets [8,9,10,11,12,13,14,15,16,17] comprise only 11 datasets containing a total of 146 pairs of original document images and their true binarized label images (GT). Together with the 50 pairs of the Bali Palm Leaf dataset [82] and the 16 pairs of the PHIBC dataset [83], there are only 212 valid pairs of images and labels in total. If the evaluation target is the DIBCO2017 dataset [15], only 172 pairs of images and their GT can actually be used for training (excluding the 20 pairs of DIBCO2017 [15] and the 20 pairs of DIBCO2019 [17]). The 20 pairs of DIBCO2019 [17] are excluded because the document images of this dataset are more complex, and their degradation types and text characteristics differ greatly from those of the other datasets. The other models used for comparison [49,59,63,64,69,81] were likewise trained without any data from [15,17]. This amount of data is not sufficient for training a neural network, so, similar to Rezanezhad et al. [63], we applied data augmentation to the existing datasets [60,84], including image rotation, scaling, brightness adjustment, and image chunking. In the actual training process, we trained a model for each of the three evaluation targets, DIBCO2012 [11], DIBCO2017 [15] and DIBCO2018 [16]. An input image size of

was chosen. The training process is divided into two stages. The first stage uses a learning rate of 1e-3 or 8e-4 (depending on the training curve and the results). The second stage uses a learning rate scheduler that halves the learning rate every five iterations, starting from the same initial learning rate as the first stage.
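The second-stage schedule amounts to a simple step decay. The following sketch computes the resulting learning rates; the function name is hypothetical, and treating one "iteration" as one of the 20 second-stage epochs is an assumption.

```python
def stage_two_learning_rate(initial_lr, step, halve_every=5):
    """Learning rate after `step` completed iterations, halved every `halve_every`."""
    return initial_lr * 0.5 ** (step // halve_every)


# Learning rates over 20 second-stage steps, starting from 1e-3.
schedule = [stage_two_learning_rate(1e-3, step) for step in range(20)]
print(schedule[0], schedule[5], schedule[10], schedule[19])
```

With an initial rate of 1e-3, the rate falls to 5e-4 after five steps, 2.5e-4 after ten, and 1.25e-4 for the last five steps.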

In summary, the comparative analysis of the experimental results shows that the document image binarization model proposed in this paper obtains better results than Rezanezhad et al. [63] on the DIBCO2012 [11] and DIBCO2017 [15] datasets. That is, it performs better on the four indicators of document image binarization. The results on the DIBCO2018 [16] dataset are weaker, and we analyze the reasons. In addition, this paper conducts verification and comparison experiments on the DIBCO2019 [17] dataset, whose images are severely damaged and whose image features differ from those of the training data. There, the proposed model outperforms the model of [63] with a comparable number of parameters as well as the traditional methods. In the following, we analyze and discuss the experimental results from the qualitative and quantitative perspectives.

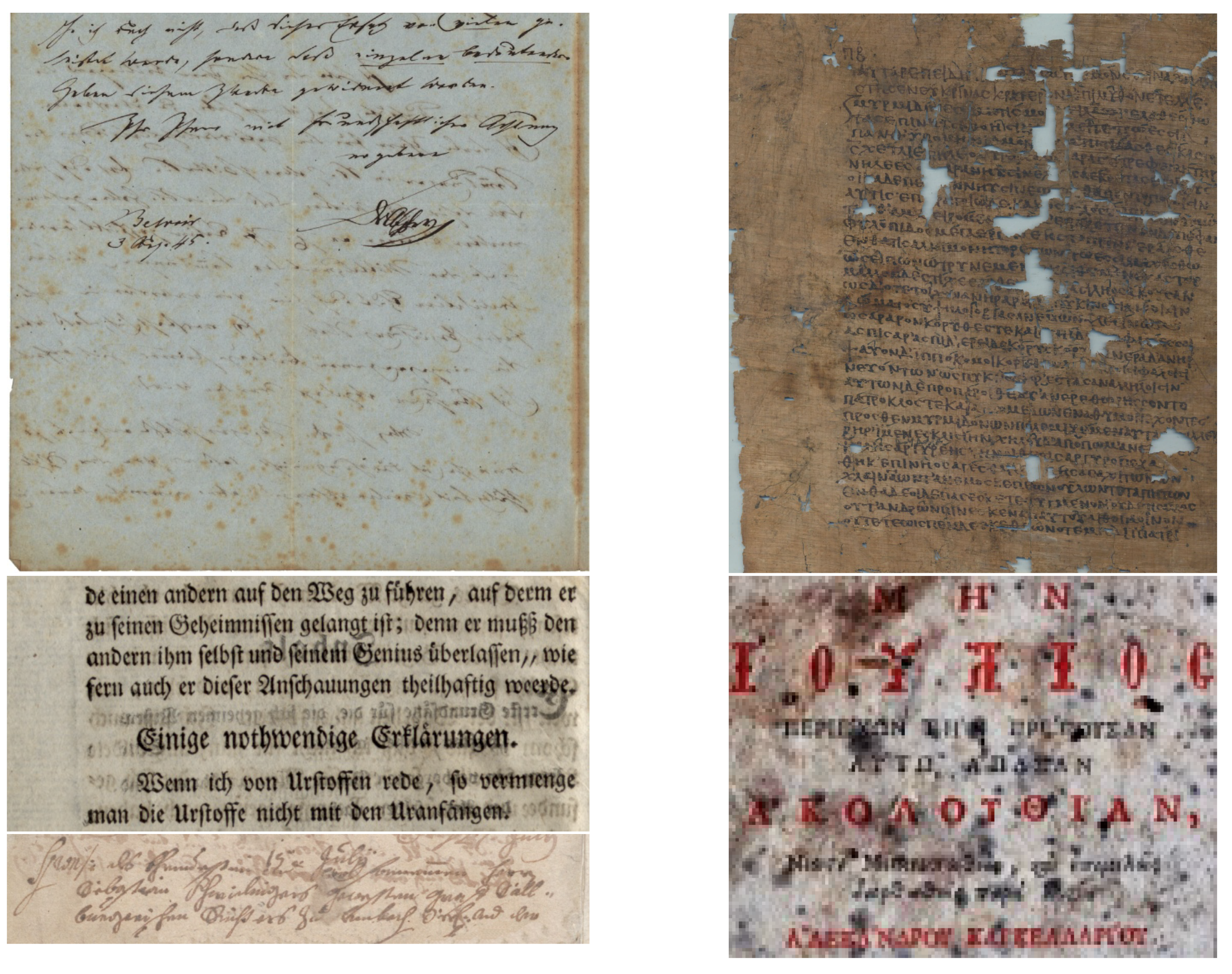

4.2. Qualitative Evaluation

The experiments in this paper are compared and analyzed on DIBCO2012 [

11], DIBCO2017 [

15] and DIBCO2018 [

16] datasets respectively. We compare the document image binarization model proposed in this paper with the deep learning-based model [

49,

59,

63,

64,

69,

81] and the classic traditional method [

20,

22,

46] are compared on the three datasets [

11,

15,

16]. By enumerating the visual comparison maps of the binarization results and calculating the relevant evaluation indicators, the comparative analysis is carried out. First, we show the visual comparison of different methods on different datasets in the

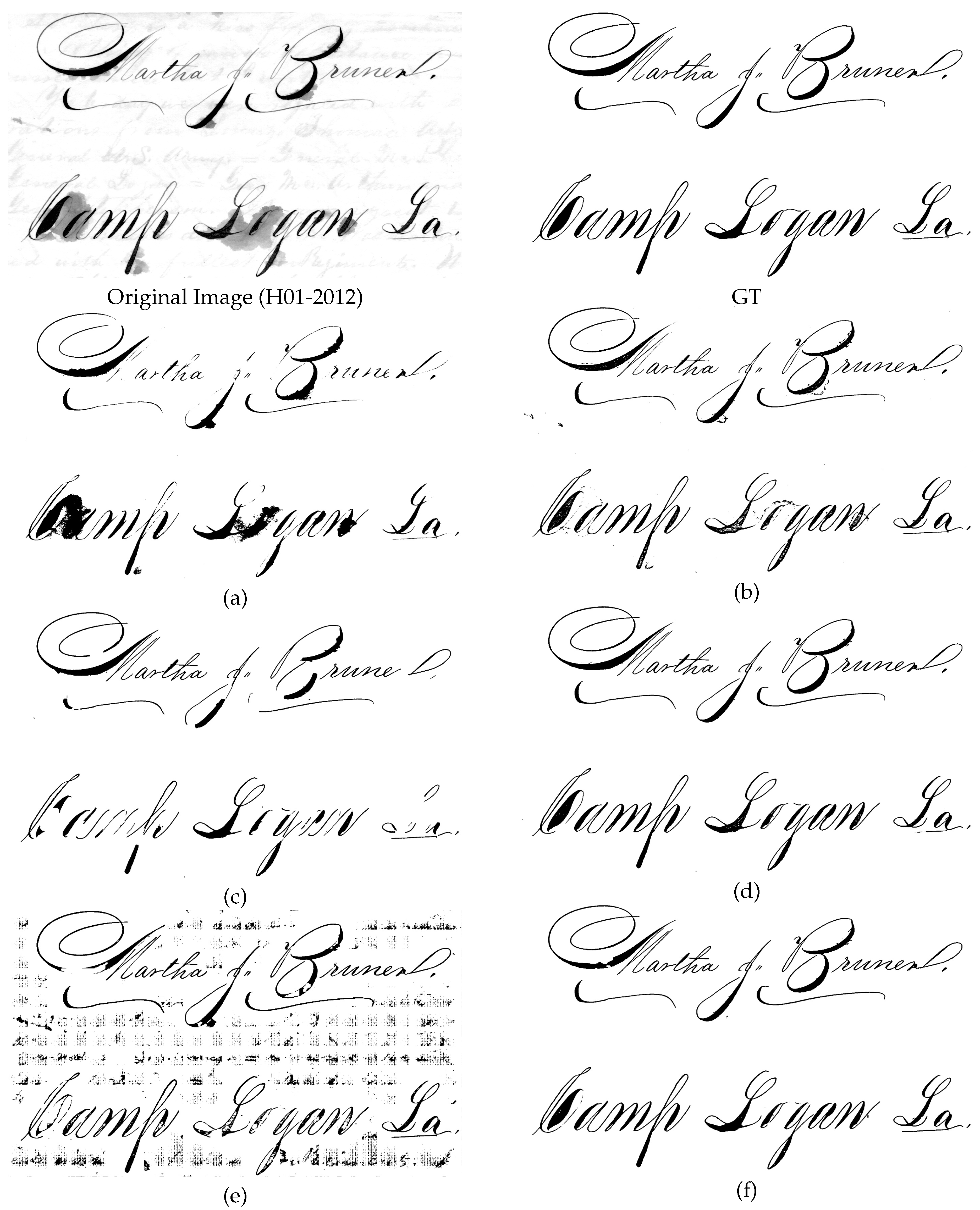

Figure 7,

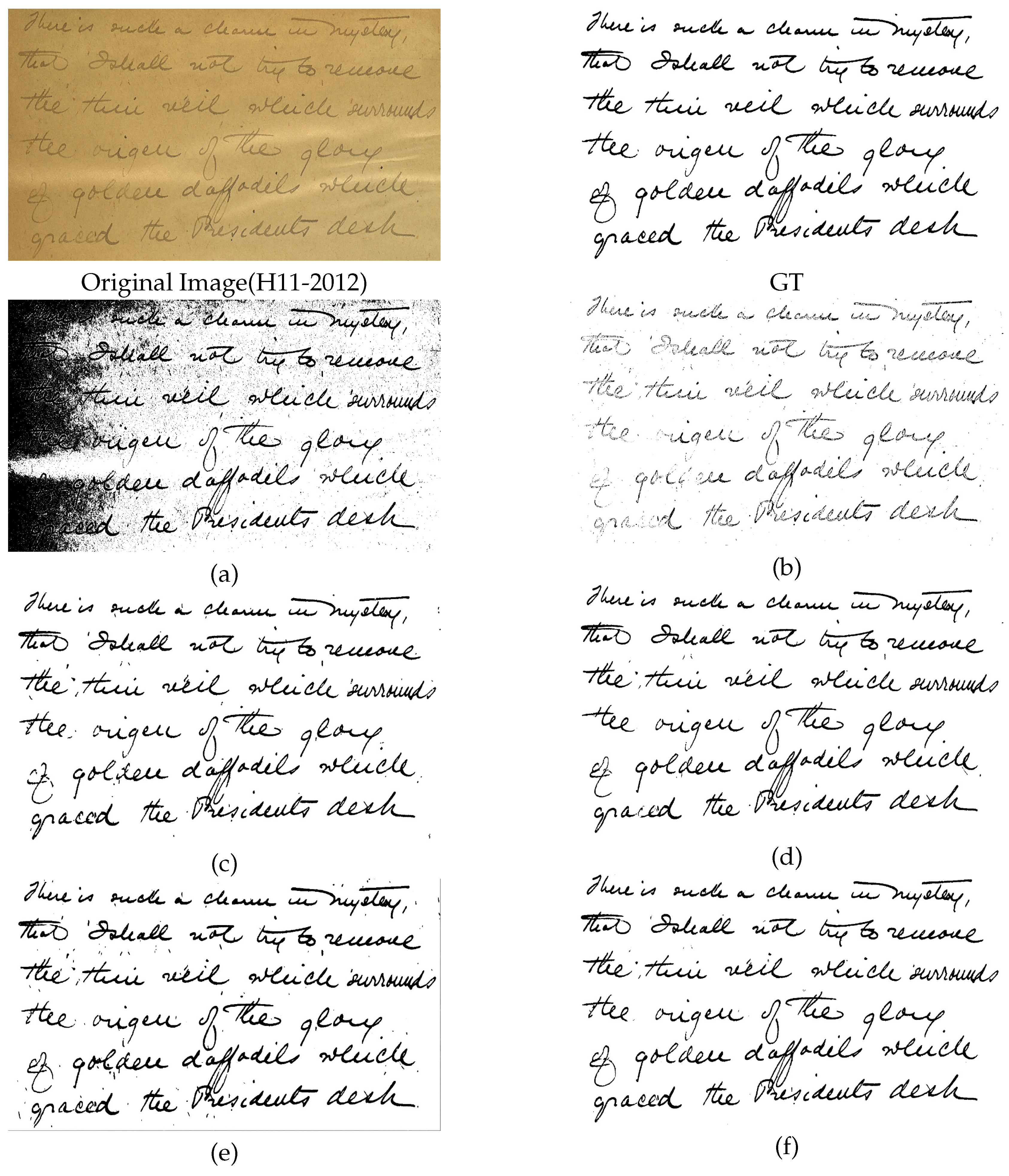

Figure 8,

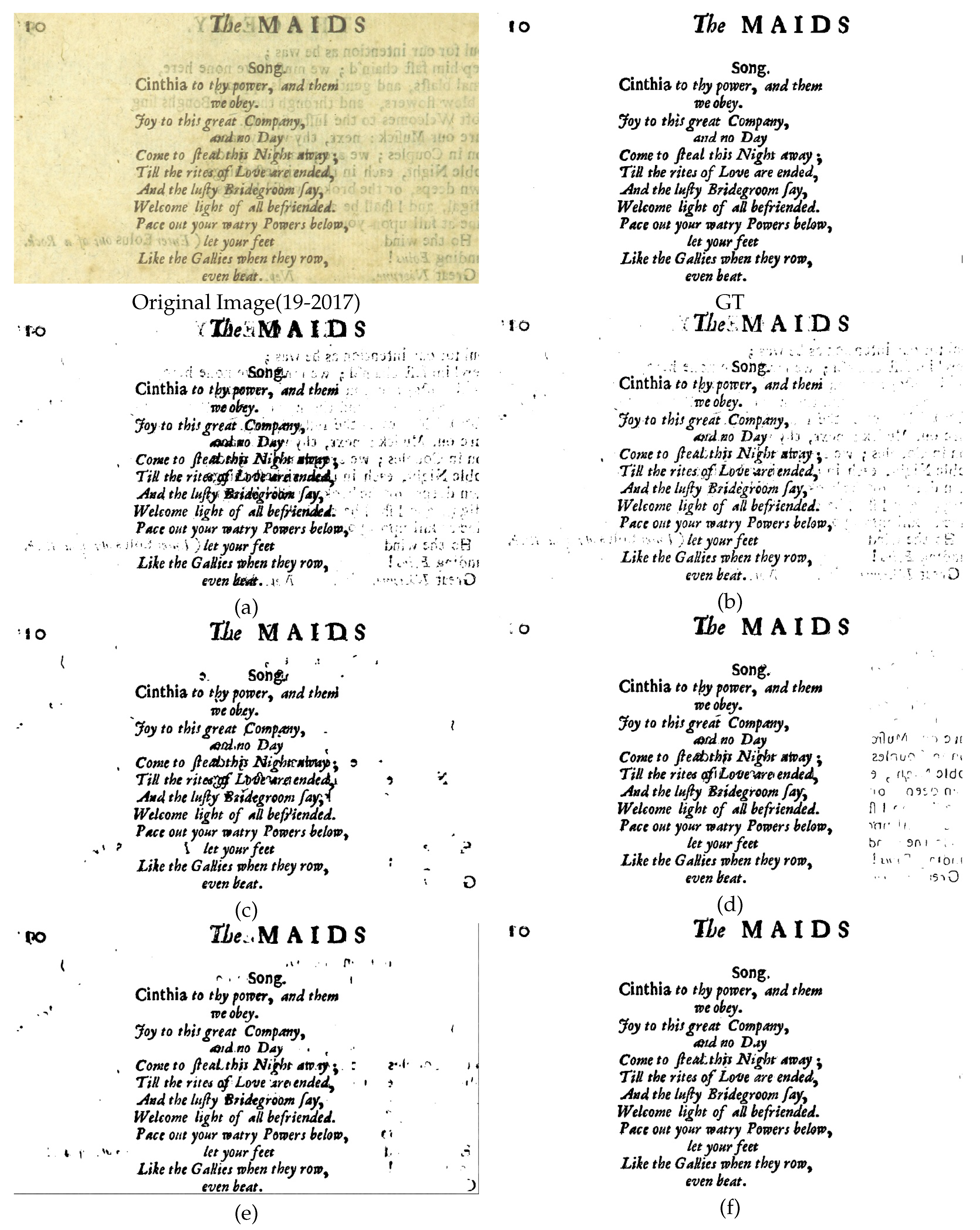

Figure 9,

Figure 10,

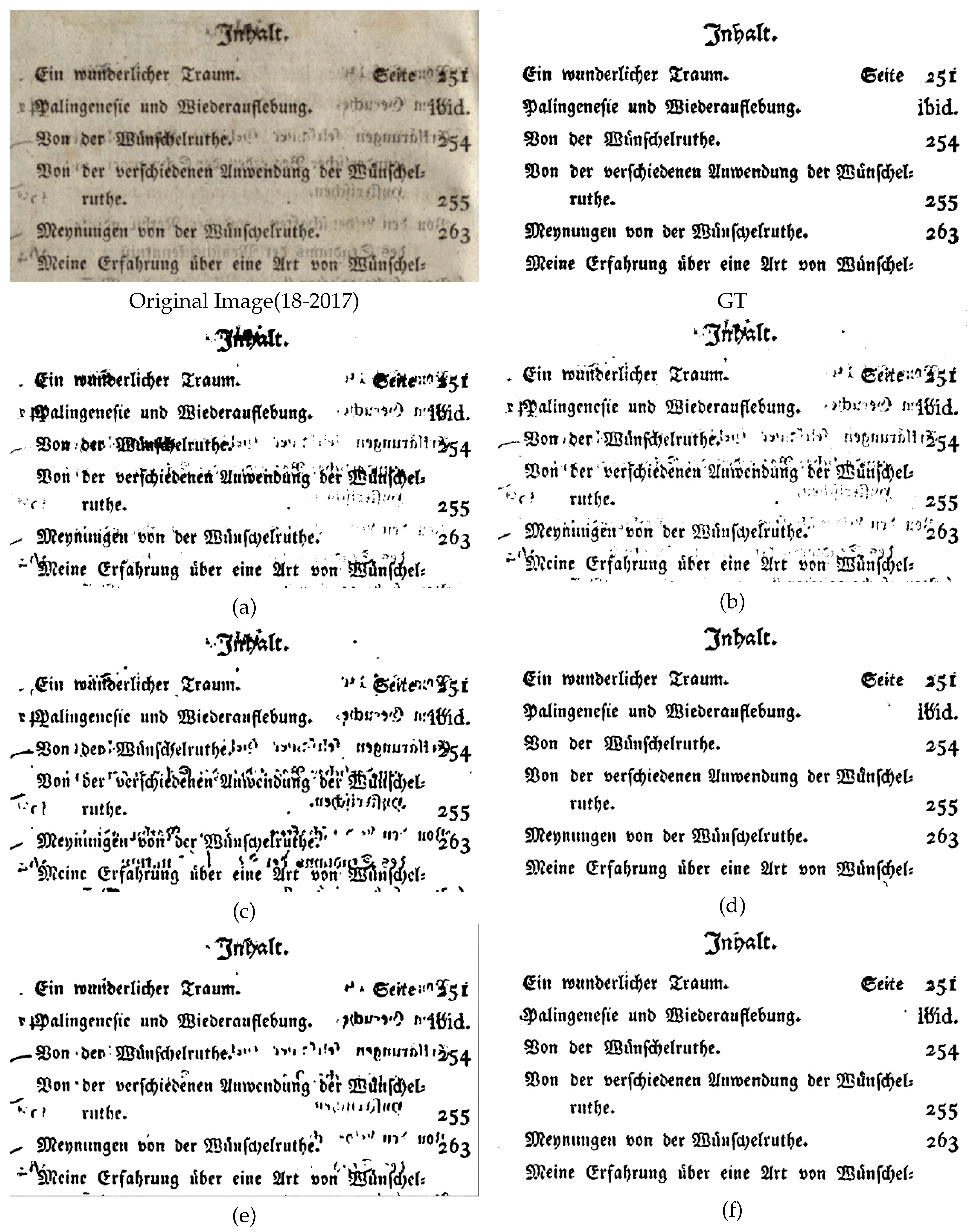

Figure 11 and

Figure 12.

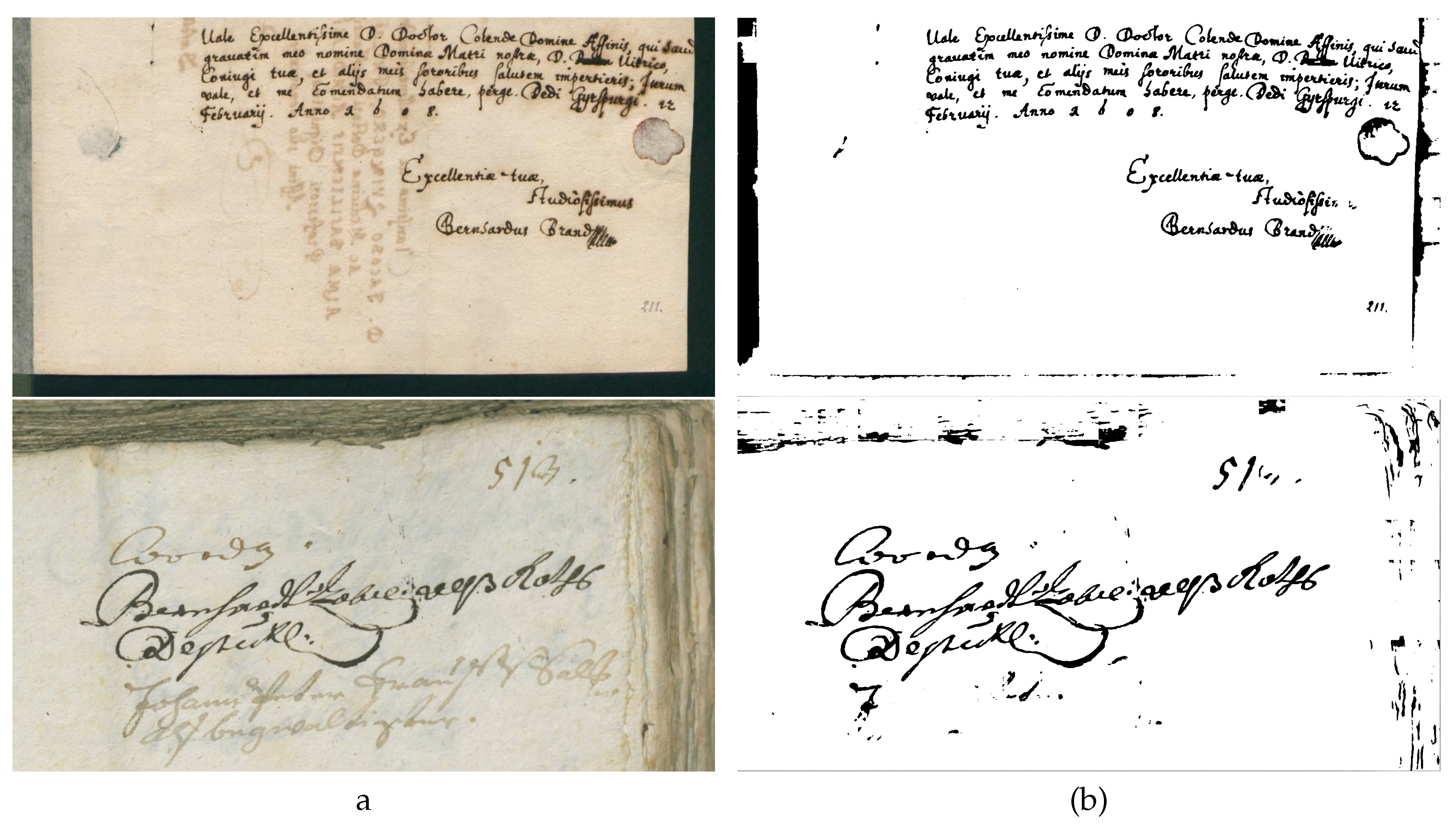

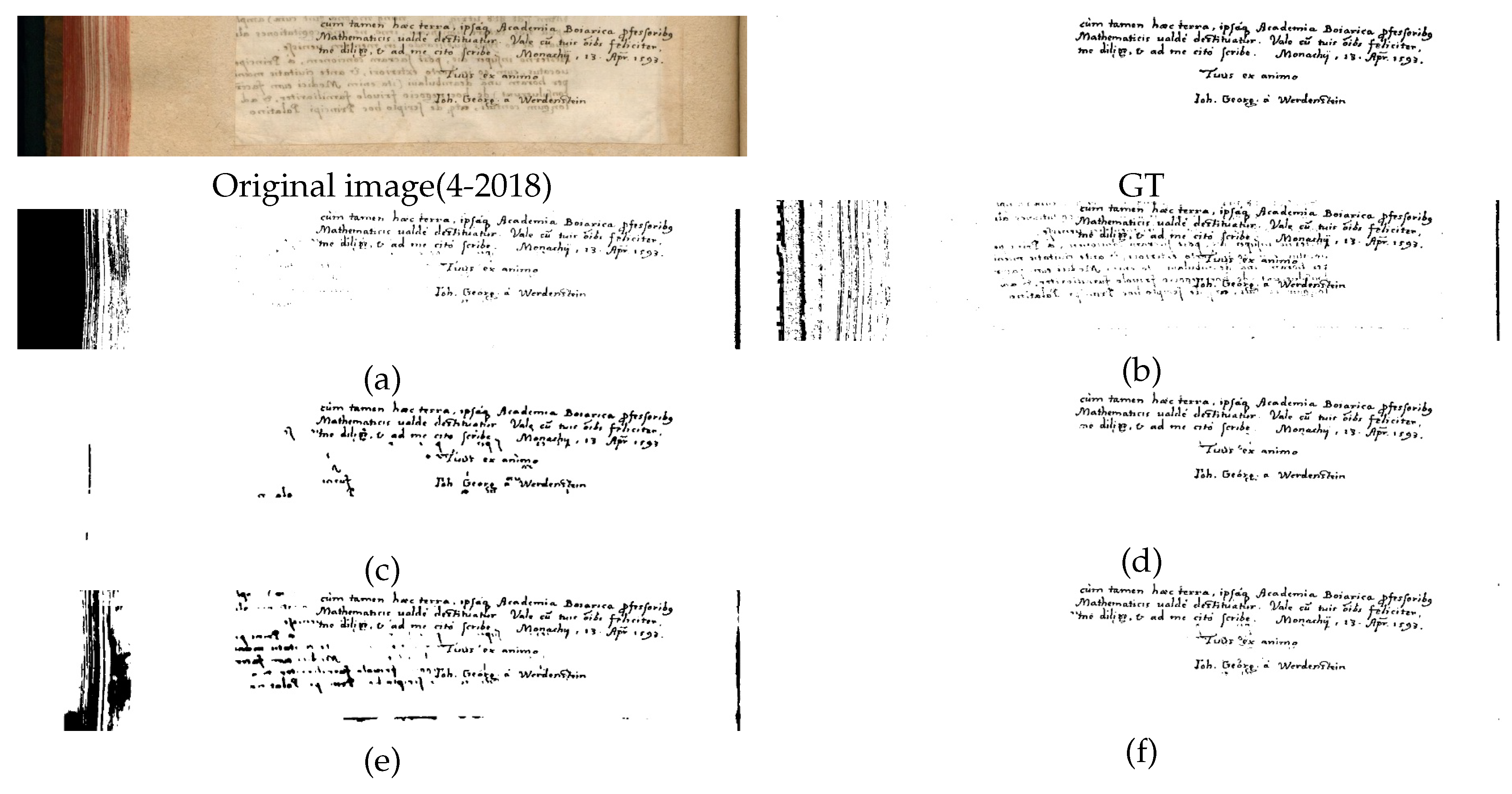

From Figure 7, we can clearly see that Otsu [20] cannot reliably distinguish the stain in the second line of the original image from the text content, mainly because the method is based on a single global threshold. In contrast, the traditional method of Sauvola [22], which is based on local thresholds, can largely separate the stained background from the text. The method of Xiong et al. [46], the winning algorithm of DIBCO2018 [16], also cannot perfectly segment the text in regions with thick strokes, which illustrates the limitations of traditional binarization methods. The model of Calvo and Gallego [19] is a simple cascaded convolutional network. Its binarization result does not distinguish well the background features far from the text area, so the result map contains a lot of noise. This suggests that a purely convolutional model is strong mainly at learning local features. The result of Rezanezhad et al. [63] misses only some of the thinner strokes and the trailing strokes of the last two letters; the rest of the text is almost completely separated from the background content.
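The difference between the two traditional approaches discussed above can be made concrete with a short sketch. Otsu's method picks one threshold for the whole image by maximizing the between-class variance of the gray-level histogram, whereas Sauvola's method computes a separate threshold for each pixel from the local mean $m$ and standard deviation $s$ as $T = m\,(1 + k\,(s/R - 1))$. The code is a simplified illustration in plain Python rather than the implementations used in the experiments, and the values `k=0.2` and `r=128.0` are illustrative assumptions.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    pixels: iterable of integer gray values in [0, levels).
    Pixels with value <= t form one class, the remaining pixels the other.
    """
    hist = [0] * levels
    for value in pixels:
        hist[value] += 1
    total = sum(hist)
    total_sum = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the lower class
    sum0 = 0  # sum of gray values in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mean0 = sum0 / w0
        mean1 = (total_sum - sum0) / w1
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def sauvola_threshold(local_mean, local_std, k=0.2, r=128.0):
    """Per-pixel Sauvola threshold from the statistics of a local window."""
    return local_mean * (1.0 + k * (local_std / r - 1.0))


# Toy histogram: dark text pixels around 20-30, bright background around 200-220.
pixels = [20] * 30 + [30] * 10 + [200] * 50 + [220] * 10
global_t = otsu_threshold(pixels)
flat_region_t = sauvola_threshold(local_mean=100.0, local_std=0.0)
print(global_t, flat_region_t)
```

Because the Sauvola threshold drops below the local mean in flat regions (zero local deviation), a uniformly stained patch tends to be classified as background, while a single global threshold has no way to adapt to it.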

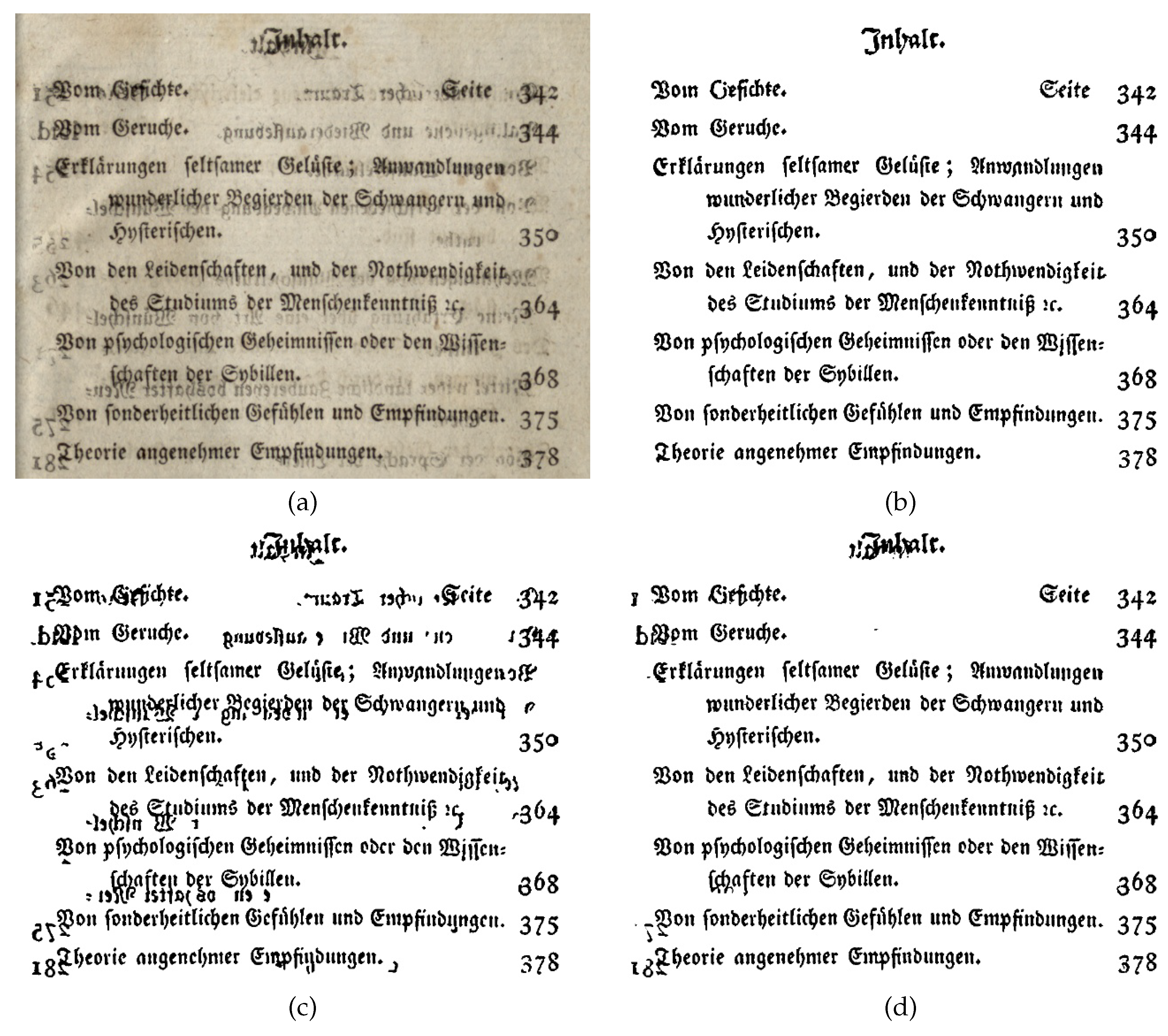

Figure 8 shows that the traditional methods based solely on a global or a local threshold, Otsu [20] and Sauvola [22], do not give satisfactory binarization results for a document image with uneven illumination. However, because the method of Xiong et al. [46] estimates the overall background of the image and uses a variety of processing techniques, it obtains better binarization results for this kind of unevenly illuminated document image. Here, the deep learning-based models, namely the model of Calvo and Gallego [19] (which only fails to separate the background content completely cleanly), the model of Rezanezhad et al. [63] and our model, all achieve satisfactory binarization results.

In Figure 9, only the proposed method obtains fully satisfactory binarization results. The results of the traditional methods (Otsu [20], Sauvola [22] and Xiong et al. [46]) in Figure 9 show that these methods have difficulty classifying as background the shadows that resemble text. Compared with Calvo and Gallego [19], the result of Rezanezhad et al. [63] segments the background content near the text well, but it cannot correctly segment the photocopy-contaminated area far from the text. This is because the model of Rezanezhad et al. [63] has limited ability to learn features from the global information of document images. The advantage of the proposed model is that its lightweight Mobile ViT block effectively fuses global information into the local features extracted by convolution.

In Figure 10, the binarization results of Rezanezhad et al. [63] and of the proposed model are both basically satisfactory. This is because the model of Rezanezhad et al. [63] uses the Transformer structure on local regions at the bottom of the U-Net architecture, and because this document image has lighter background photocopy pollution than the document image in Figure 9. As a result, the model of Rezanezhad et al. [63] separates the background from the text content well.

As in Figure 10, the binarization results of Rezanezhad et al. [63] and of the proposed model in Figure 11 are both relatively satisfactory. Figure 11 also shows that the result of Otsu [20] is significantly better than that of Sauvola [22], because the photocopied text in the background is lighter in color than the text in the foreground. For the same reason, the Transformer that Rezanezhad et al. [63] apply to small regions of the model separates the background content from the real text content well. In addition, training for the DIBCO2018 [16] dataset uses 10 more valid image pairs (6.2%) than training for DIBCO2017 [15], which plays an important role in improving the performance of the model.

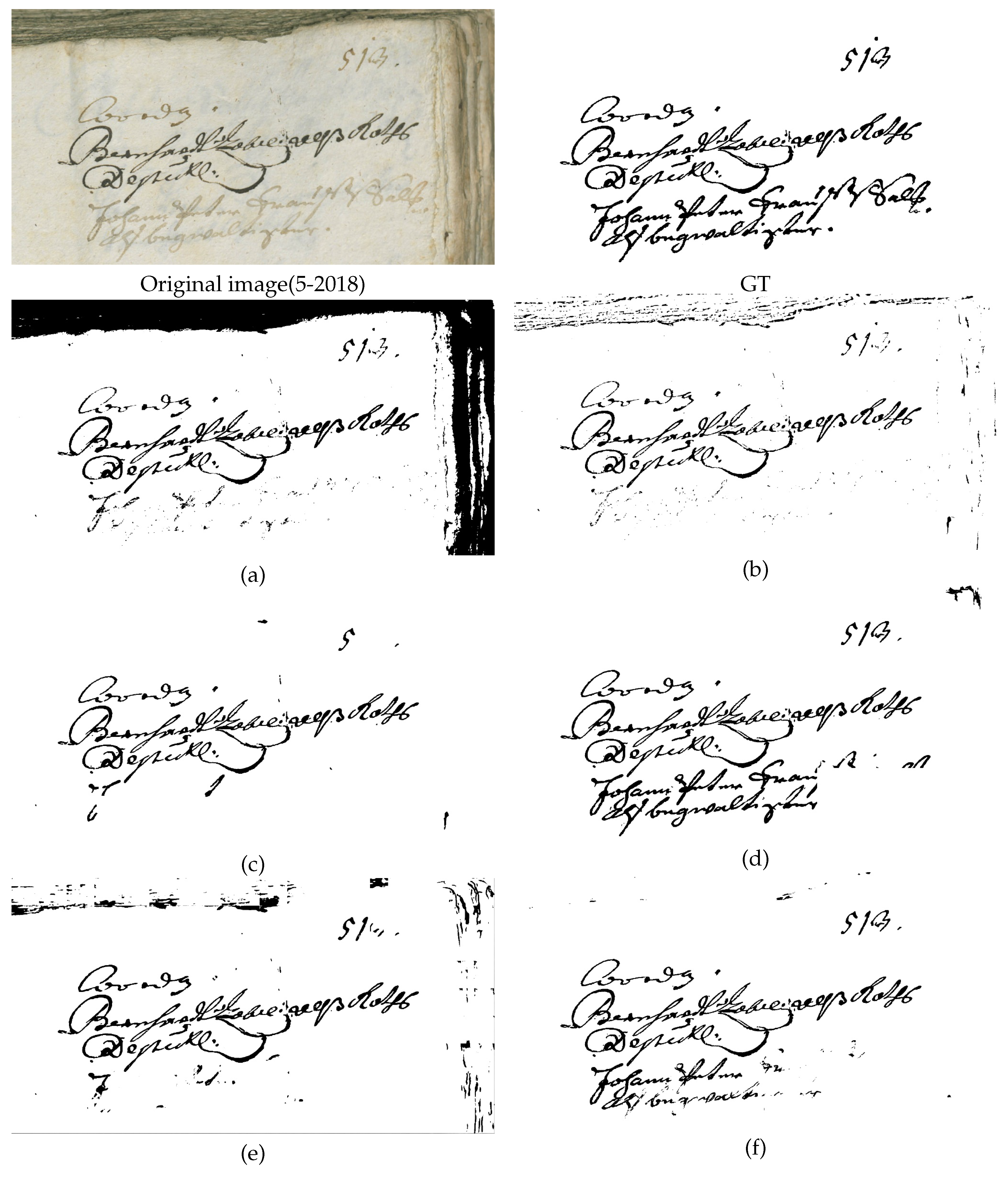

In Figure 12, the binarization results of all methods differ visibly from the true result (GT). The three traditional methods [20,22,46] fail to preserve the faint text of the last two lines, and the purely convolutional method [19] has the same problem. Rezanezhad et al. [63] show strong performance; in particular, their result for the last two lines of text is closer to the real label. This may be because the model has a large number of parameters (more than four times that of the proposed model) and is an ensemble of multiple models. A more specific analysis is given in the model robustness study in the quantitative experiments below. It is therefore undeniable that the model of Rezanezhad et al. [63] exhibits strong performance.

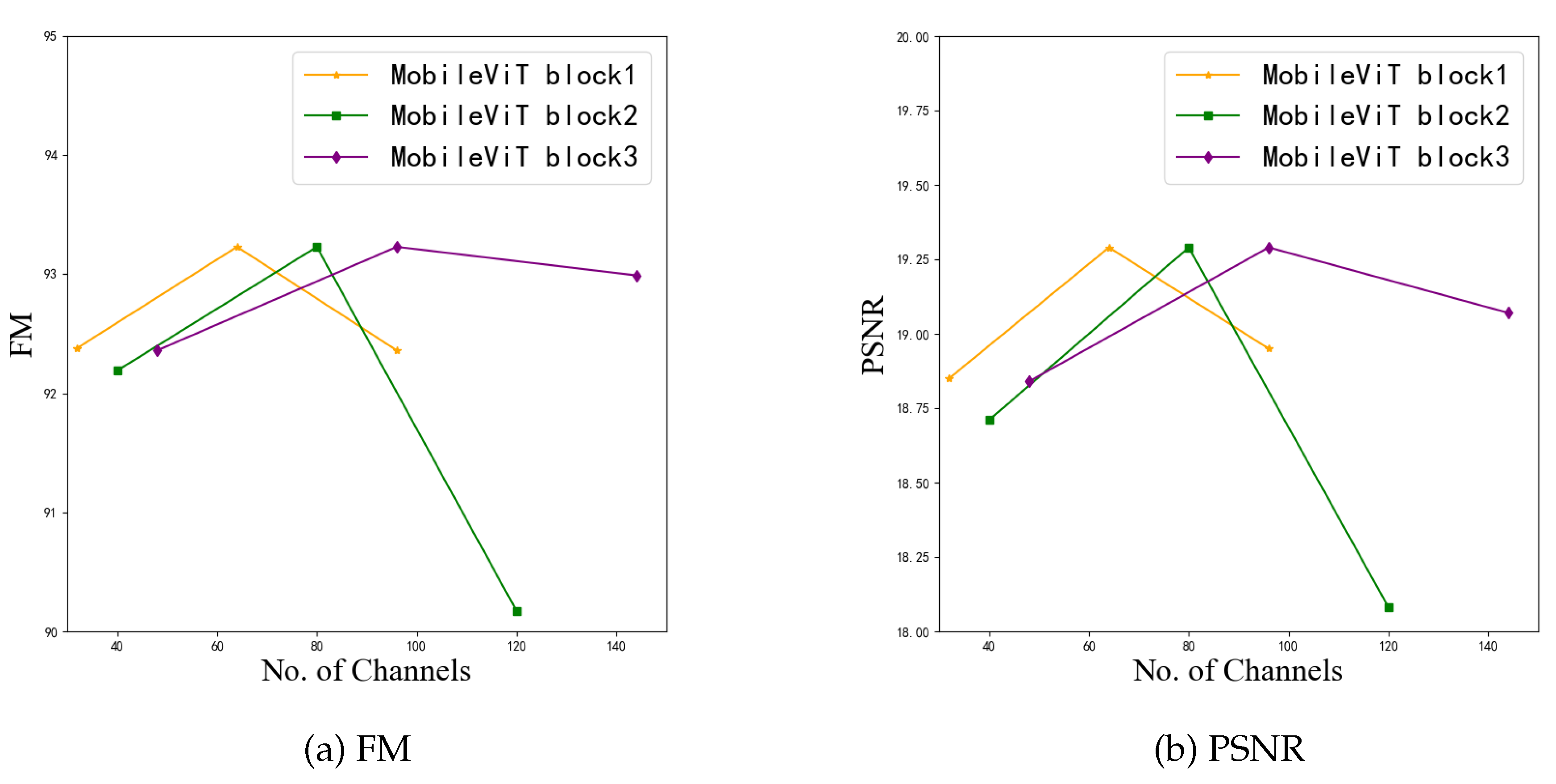

4.3. Quantitative Evaluation

Four evaluation indicators are currently in common use for the quantitative evaluation of document image binarization results: the F-measure (FM), the pseudo F-measure (pFM) [83], the peak signal-to-noise ratio (PSNR) and the distance reciprocal distortion (DRD) [85]. These four metrics assess the quality of the binarized image from different aspects, and they are more informative than simple Accuracy.

(1) FM

Before giving the definitions of FM and pFM, we first introduce four quantities: the true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). The relationship between these four quantities is shown in Table 1.

Table 1. Predicted Values and Ground Truth.

| Predicted Values\Ground Truth | Positive (1) | Negative (0) |
| --- | --- | --- |
| Positive (1) | TP | FP |
| Negative (0) | FN | TN |

The usual definition of Accuracy is:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (7)$$

The F-measure (FM) of an image is defined as in equation (8):

$$\mathrm{FM} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \qquad (8)$$

where $\mathrm{Precision} = \frac{TP}{TP + FP}$ and $\mathrm{Recall} = \frac{TP}{TP + FN}$. In the binarization process, Precision is the fraction of pixels predicted as 1 that are truly 1, and Recall is the fraction of pixels that are truly 1 and are also predicted as 1. We can see from formula (7) and formula (5) that FM is more reasonable than Accuracy for the evaluation of document image binarization. Because text pixels make up a much smaller proportion of a binarized document than blank background pixels, the FM metric is more meaningful than the pixel Accuracy of the whole image.
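A small worked example shows why Accuracy is misleading when text pixels are rare. The page size and pixel counts below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def accuracy(tp, fp, tn, fn):
    """Fraction of all pixels that are classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def f_measure(tp, fp, fn):
    """Simplified F-measure, 2TP / (2TP + FP + FN)."""
    return 2 * tp / (2 * tp + fp + fn)


# Hypothetical 100x100 page with 500 text pixels. The prediction recovers
# only half of the text and produces no false positives.
tp, fp, fn = 250, 0, 250
tn = 100 * 100 - tp - fp - fn

acc = accuracy(tp, fp, tn, fn)
fm = f_measure(tp, fp, fn)
print(acc, fm)
```

Here Accuracy is 0.975 even though half of the text is lost, while FM is about 0.667, which reflects the missing text much more directly.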

(2) pFM

The pseudo F-measure (pFM) of an image is defined as:

$$\mathrm{pFM} = \frac{2 \times \mathrm{pRecall} \times \mathrm{Precision}}{\mathrm{pRecall} + \mathrm{Precision}}$$

where $\mathrm{Precision}$ is the same as defined in FM, and $\mathrm{pRecall}$ is the percentage of the character structure of the standard (GT) image that is present in the binarized image. From the above definitions of FM and pFM (59), we can clearly see that both indicators are positively correlated with the quality of the document image after binarization.

(3) PSNR

The peak signal-to-noise ratio (PSNR) is an image quality indicator based on the mean squared error. It is an objective evaluation index that expresses the difference between the result of image processing and the real image. In this paper, it is used to evaluate the difference between the binarization result of a document image and its real binarized image, and it is calculated as follows:

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{(2^{n} - 1)^{2}}{\mathrm{MSE}}\right)$$

where $\mathrm{MSE}$ is the mean squared error between the binarized image and the real binary image. For the general 8-bit image representation, $n = 8$ and the peak value is $2^{n} - 1 = 255$. The unit of PSNR is dB. The larger the value, the more similar the binarization result is to the real binarized document image. PSNR is a widely used objective index for evaluating image quality.
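The PSNR computation can be sketched in a few lines of plain Python. The function name and the representation of images as lists of rows with values 0 or 255 are assumptions for illustration.

```python
import math


def psnr(result, gt, peak=255.0):
    """PSNR in dB between two equally sized images given as lists of rows."""
    squared_error = 0.0
    count = 0
    for row_r, row_g in zip(result, gt):
        for r, g in zip(row_r, row_g):
            squared_error += (r - g) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# 2x5 toy images with values 0 or 255; exactly one of ten pixels differs.
gt = [[255, 255, 0, 0, 0], [255, 0, 0, 0, 0]]
result = [[255, 255, 0, 0, 0], [0, 0, 0, 0, 0]]
value = psnr(result, gt)
print(value)
```

With one wrong pixel in ten, the MSE is one tenth of the squared peak value, so the PSNR is 10 dB; fewer wrong pixels give a higher PSNR.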

(4) DRD

The distance reciprocal distortion measure (DRD) [85] objectively expresses the visually perceived distortion of a binarized document image through the distance between pixels. It is defined as follows:

$$\mathrm{DRD} = \frac{\sum_{k=1}^{S} \mathrm{DRD}_{k}}{\mathrm{NUBN}}$$

where $\mathrm{DRD}_{k}$ is the distortion of the $k$-th flipped pixel, $S$ is the number of flipped pixels, and $\mathrm{NUBN}$ is the number of non-uniform blocks (blocks that are not all black or all white) in the real binarized image. The larger the value of DRD, the greater the visually perceived distortion of the binarization result. Therefore, the smaller the value of DRD, the better the binarization result.
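The following sketch shows one way to compute DRD for small binary images with pixel values 0 and 1. It assumes a 5x5 reciprocal-distance weight window and 8x8 blocks for counting non-uniform blocks, which are the settings commonly used with this measure; skipping neighbours that fall outside the image and guarding against a fully uniform GT are further assumptions of this sketch.

```python
import math


def drd(result, gt, block=8, radius=2):
    """Distance reciprocal distortion between binary images (lists of rows)."""
    height, width = len(gt), len(gt[0])

    # Reciprocal-distance weights around the centre pixel (centre weight is 0).
    weights = {}
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dy or dx:
                weights[(dy, dx)] = 1.0 / math.hypot(dy, dx)
    norm = sum(weights.values())  # normalisation so the weights sum to 1

    # Sum the distortion DRD_k over every flipped pixel k.
    total = 0.0
    for y in range(height):
        for x in range(width):
            if result[y][x] == gt[y][x]:
                continue
            for (dy, dx), w in weights.items():
                ny, nx = y + dy, x + dx
                if 0 <= ny < height and 0 <= nx < width:
                    total += abs(gt[ny][nx] - result[y][x]) * w / norm

    # Count the non-uniform blocks of the ground truth (NUBN).
    nubn = 0
    for top in range(0, height, block):
        for left in range(0, width, block):
            values = {gt[r][c]
                      for r in range(top, min(top + block, height))
                      for c in range(left, min(left + block, width))}
            if len(values) > 1:
                nubn += 1
    return total / max(nubn, 1)  # guard for a uniform GT (an assumption)


# 8x8 ground truth: five text columns (1) followed by three background columns (0).
gt = [[1] * 5 + [0] * 3 for _ in range(8)]
flipped = [row[:] for row in gt]
flipped[3][2] = 0  # one text pixel wrongly turned into background
score = drd(flipped, gt)
print(score)
```

In this toy case the flipped pixel sits in the middle of a text region, so every neighbour in its window disagrees with the wrong value and the distortion of that pixel reaches its maximum of 1.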

Below, we list the average values of the four indicators obtained on the DIBCO2012 [11], DIBCO2017 [15] and DIBCO2018 [16] datasets by the methods [20,22,46,49,59,63,64,69,81] and by our method. The results are shown in Table 2, Table 3, and Table 4.

From Table 2 and Table 3, we can clearly see that our simple end-to-end model outperforms the traditional methods [20,22,46] and the deep learning models trained on the same dataset [49,59,63,64,69,81] in the mean of the four common document image binarization indicators. Of particular note, our single model also exceeds the ensemble model of Rezanezhad et al. [63], which shows a clear advantage in performance. However, Table 4 shows that our model did not exceed the models of Jemni et al. [69] and Rezanezhad et al. [63] on the DIBCO2018 dataset, and we analyze this result below.

As Table 4 shows, our model performed worse than the model [16] on the four metrics on the DIBCO2018 [16] dataset. To find out why, we list in Table 5 the four evaluation indexes of the binarization results that our model obtained for the ten document images of the DIBCO2018 [16] dataset.

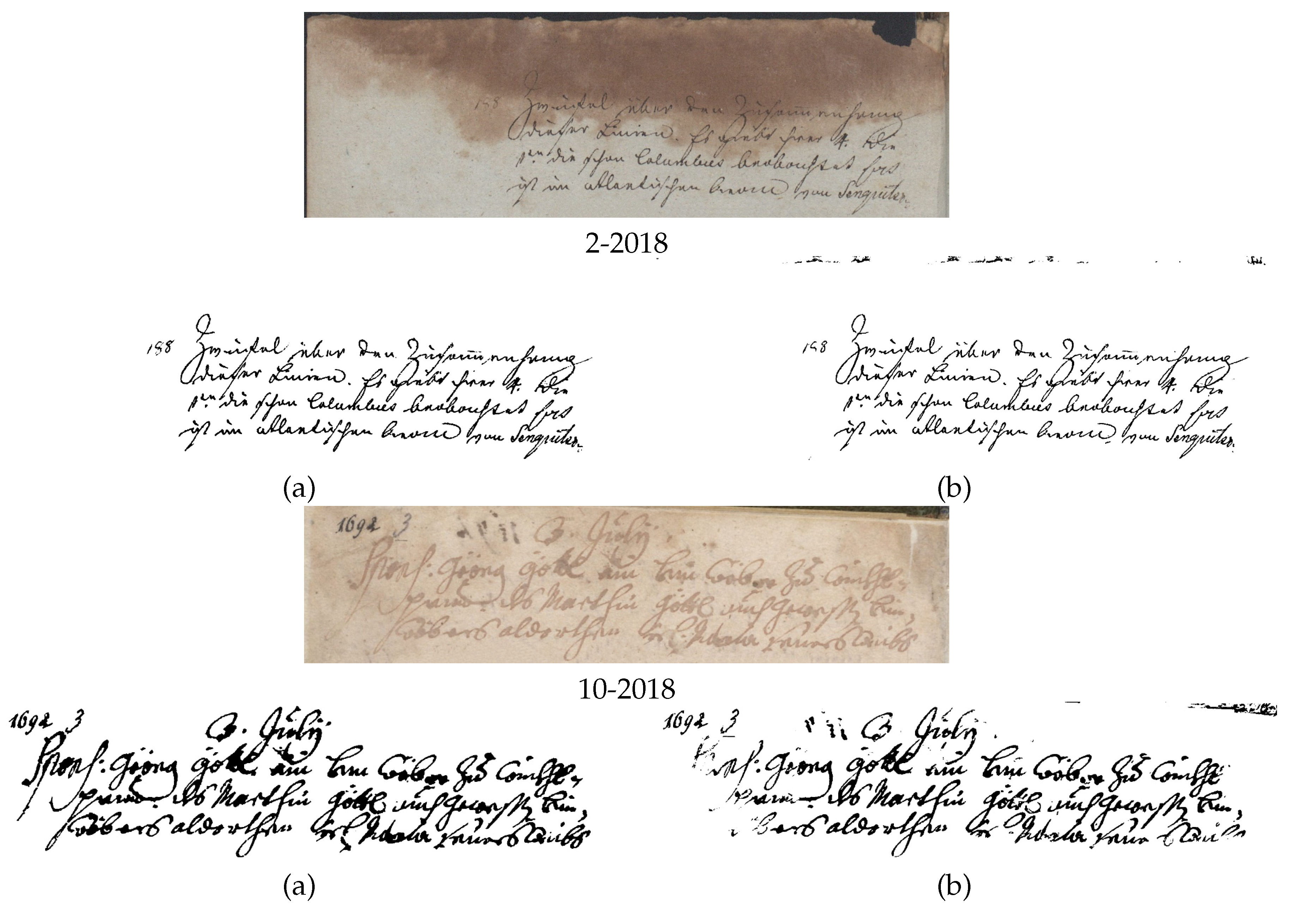

The values in Table 5 show that the binarization results of images 2-2018 and 10-2018 have lower PSNR and FM values. For these two images, Figure 13 compares the binarization results of our model with the original document images and the GT.

From Figure 13(b), we can see that the difference between the binarization results of our model and the real binarization results is mainly due to the black area at the top of the image. This is especially clear for image 2-2018: the text part of our result is almost the same as the GT, but the black area at the top is not segmented as background by our model. Our model is therefore weak at learning large black areas far from the text, which leads to its poor results on the DIBCO2018 [16] dataset. The main reason for this phenomenon is that the training data contains few original document images with large dark areas.

Next, we compare the robustness of our model with that of other methods [20,22,46,63]. The experiment is carried out on the DIBCO2019 [17] dataset, whose images are severely damaged. The deep learning models used here are those trained for the DIBCO2017 [15] dataset, because they are trained with the smallest number of training images (162 pairs of document images and their real binarization results). We again use the means of the four binarization metrics PSNR, FM, pFM and DRD over the twenty images of the DIBCO2019 [17] dataset for quantitative comparison. The means for the various methods are shown in Table 6.

To measure the robustness of the model of [63] and of our model more objectively and fairly, Table 6 reports two variants of the model of [63]. This is because the model provided by Rezanezhad et al. [63] is an ensemble of several trained models with about 37.0M parameters, whereas our model has about 8.9M parameters, a more than fourfold difference. The literature [86] shows through detailed experiments that scaling the depth of the network accordingly can maintain the good performance of the original model. Therefore, we use the method proposed in [86] (the study in (d) of [86], that is, reducing the number of parameters in depth) to adjust the parameter setting of the model of Rezanezhad et al. [63]. The adjusted model has about 9.0M parameters, which is on the same order of magnitude as the proposed model. The adjusted model is then trained with all other training conditions unchanged.

From

Table 6 it is clear that the Otsu [

20] method has the worst numerical performance in the binarized metrics PSNR and DRD. Although Xiong et al. [

46] won the champion algorithm of the year on the DIBCO2018 [

16] dataset, However, the FM and pFM numerical results on the DIBCO2019 [

17] dataset are the worst, indicating that the robustness of the method is not very good. The model [

63]-37.0M is optimal in

Table 6 for the four indicators of image averaging in the DIBCO2019 [

17] dataset. The main reason for this is that the model has a large number of parameters, which makes it the most robust. The model in this paper ranks second in the mean value of PSNR, FM and DRD for all images of the DIBCO2019 [

17] dataset, and only the pFM index is inferior to the model [

63]-9.0M. It can be said that the model in this paper is superior to the model of Rezanezhad et al. [

63] in the same number of parameters. For the binarization results of DIBCO2019 [

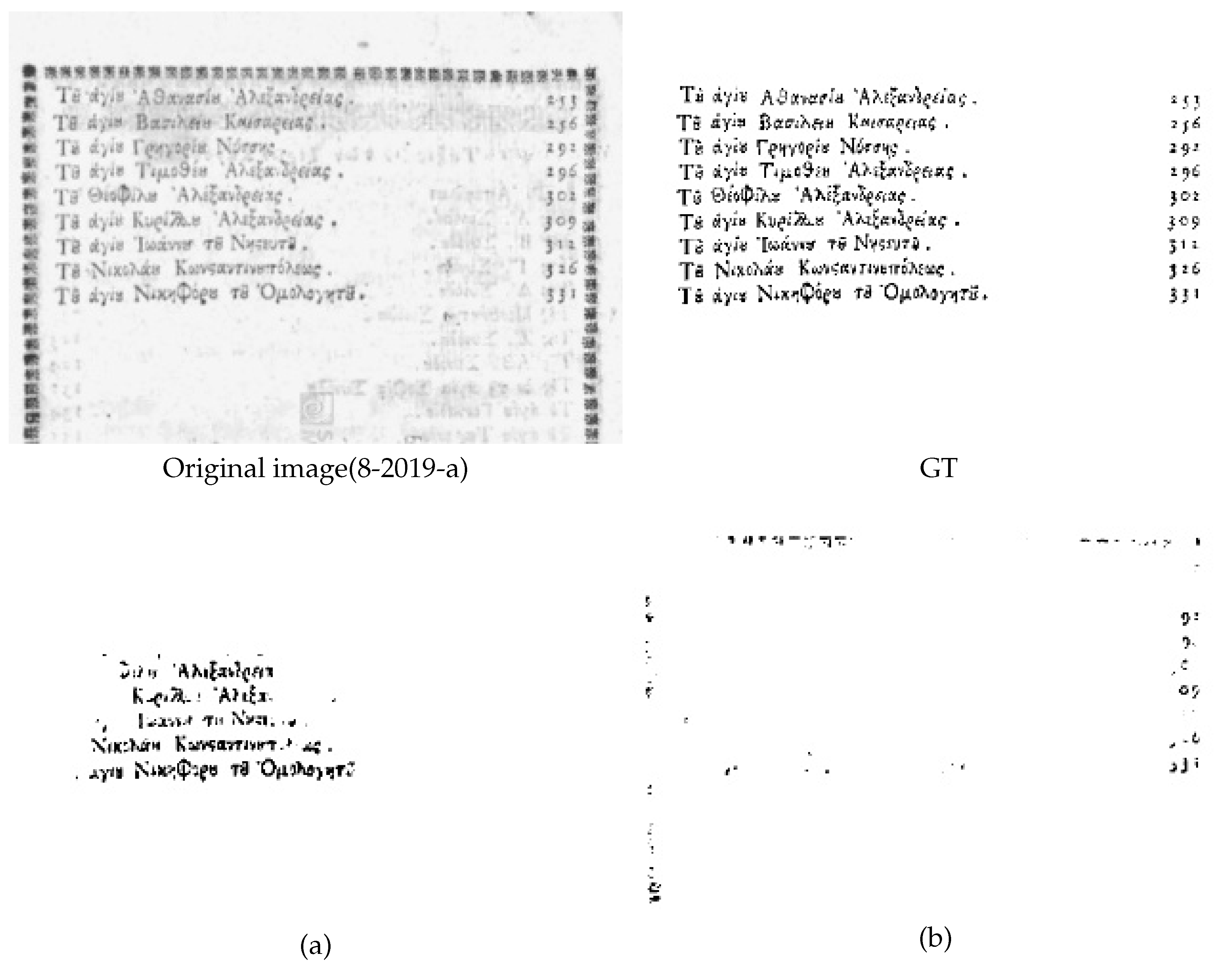

17] obtained by the model of this paper, we observe that there is an image (8-2019-a), the binarization obtained by the model of this paper hardly sees any real text information, and the evaluation index value is particularly low. See

Figure 14.

As Figure 14 shows, our binarization model performs poorly on the image with a light font color (8-2019-a), resulting in a pFM value about 33 lower than that of [63]-9.0M. This directly leads to the lower pFM index of the proposed model on the DIBCO2019 [17] dataset.