Submitted: 09 April 2024

Posted: 09 April 2024


Abstract

Keywords:

1. Introduction

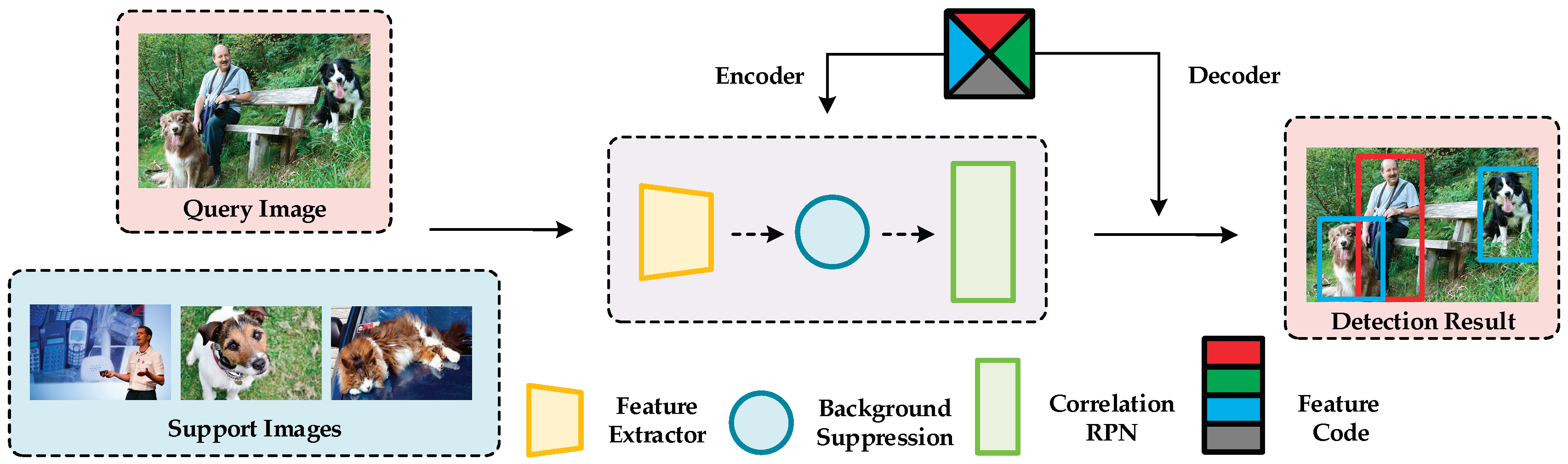

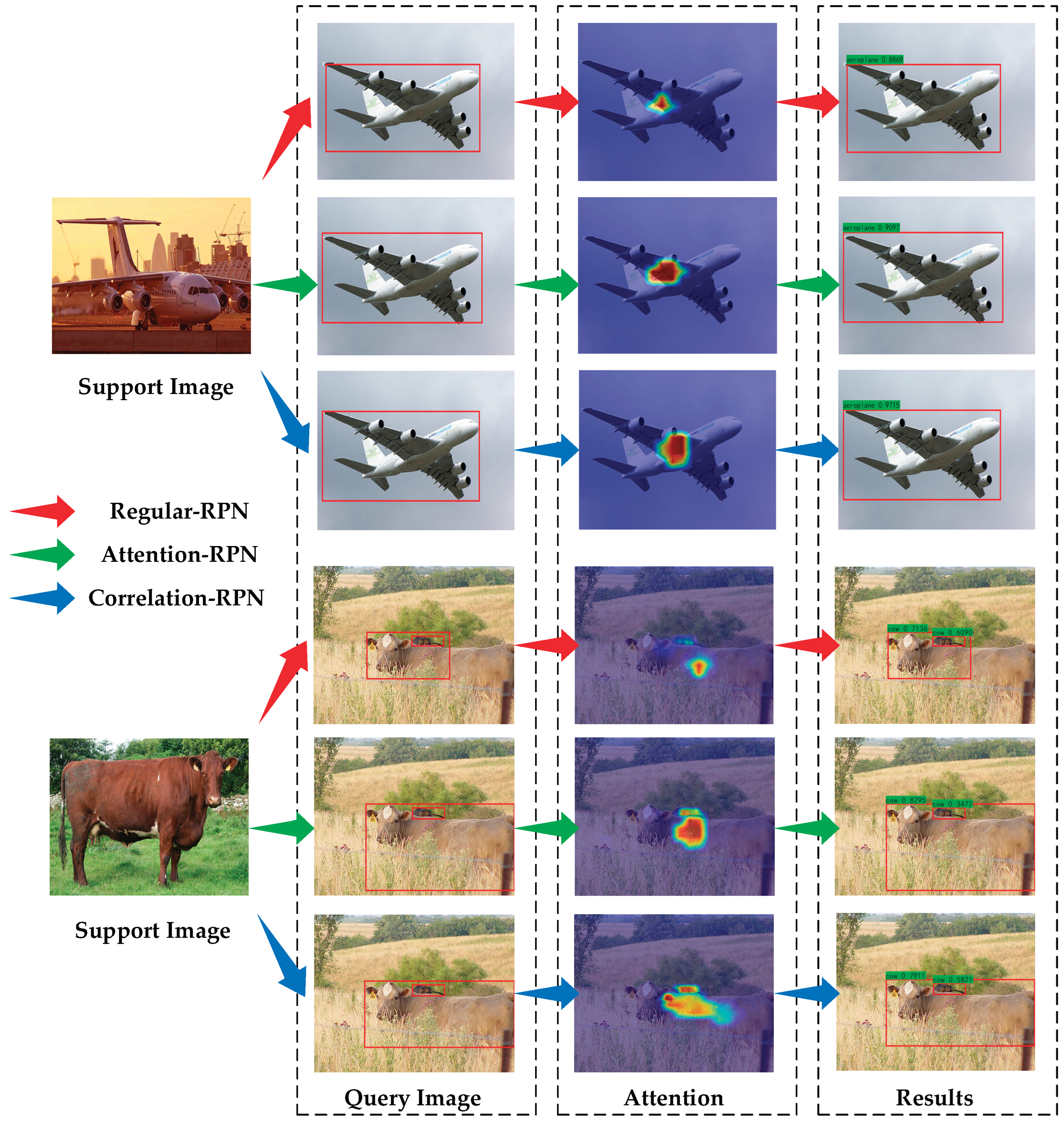

- We propose a novel correlation-aware region proposal network, called Correlation-RPN, and migrate it to object detectors, improving their capacity for object localization and generalization;

- We design a new feature encoding mechanism and integrally migrate the transformer encoder-decoder into our model to effectively learn support-query feature similarity representations;

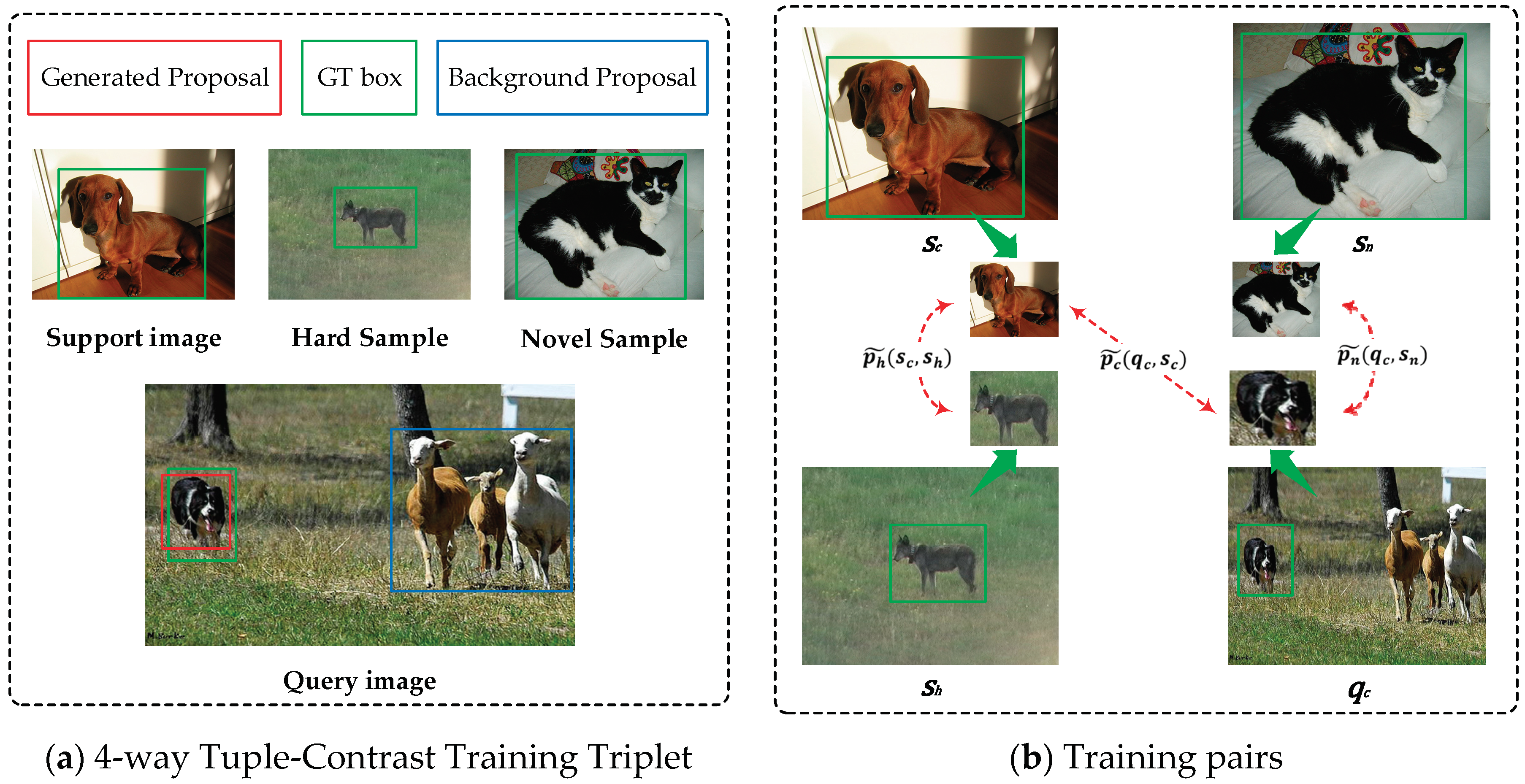

- With the proposed 4-way tuple-contrast training strategy, CRTED achieves performance comparable to most representative few-shot object detection methods without further fine-tuning.
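The contributions above name a correlation-aware RPN but do not spell out the matching operation at this point. As a rough illustration only, the sketch below shows depth-wise cross-correlation, the support-query matching step used in Siamese trackers such as SiamRPN++ and in Attention-RPN. Whether Correlation-RPN uses exactly this form is an assumption, and the function name `depthwise_correlation` and the toy feature maps are hypothetical.

```python
from typing import List

# A feature map is indexed as [channel][row][col].
Feature = List[List[List[float]]]


def depthwise_correlation(query: Feature, support: Feature) -> Feature:
    """Slide each support channel over the matching query channel.

    Hypothetical helper: "valid" mode, stride 1, no padding. This is an
    assumption for illustration, not the paper's confirmed operator.
    """
    if len(query) != len(support):
        raise ValueError("query and support need the same number of channels")
    out: Feature = []
    for q_ch, s_ch in zip(query, support):
        kh, kw = len(s_ch), len(s_ch[0])
        oh, ow = len(q_ch) - kh + 1, len(q_ch[0]) - kw + 1
        if oh <= 0 or ow <= 0:
            raise ValueError("support kernel is larger than the query map")
        # Each output cell is the dot product of the kernel and one window.
        out.append([
            [
                sum(
                    q_ch[i + di][j + dj] * s_ch[di][dj]
                    for di in range(kh)
                    for dj in range(kw)
                )
                for j in range(ow)
            ]
            for i in range(oh)
        ])
    return out


# Toy single-channel example: 3x3 query map, 2x2 support kernel.
query = [[[1.0, 2.0, 3.0],
          [4.0, 5.0, 6.0],
          [7.0, 8.0, 9.0]]]
support = [[[1.0, 0.0],
            [0.0, 1.0]]]
response = depthwise_correlation(query, support)
print(response)  # [[[6.0, 8.0], [12.0, 14.0]]]
```

Because each channel is correlated independently, the response keeps the channel count of the query features, so a proposal head could consume it in place of the raw query map.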

2. Related Work

2.1. General Object Detection

2.2. Few-Shot Object Detection

2.3. Transformer Encoder-Decoder

3. Approach

3.1. Preliminaries

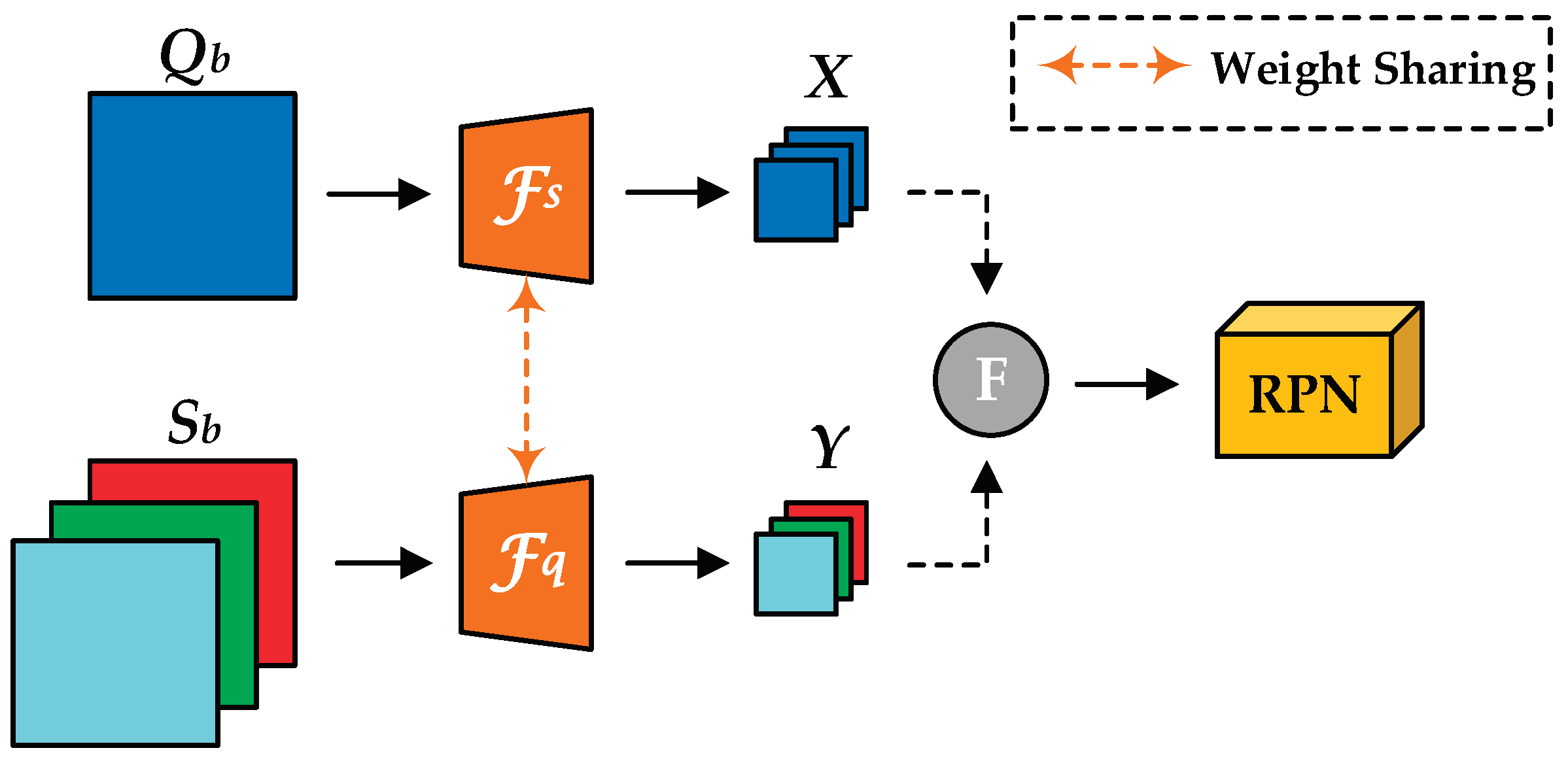

3.2. Architecture

3.3. Training Procedure

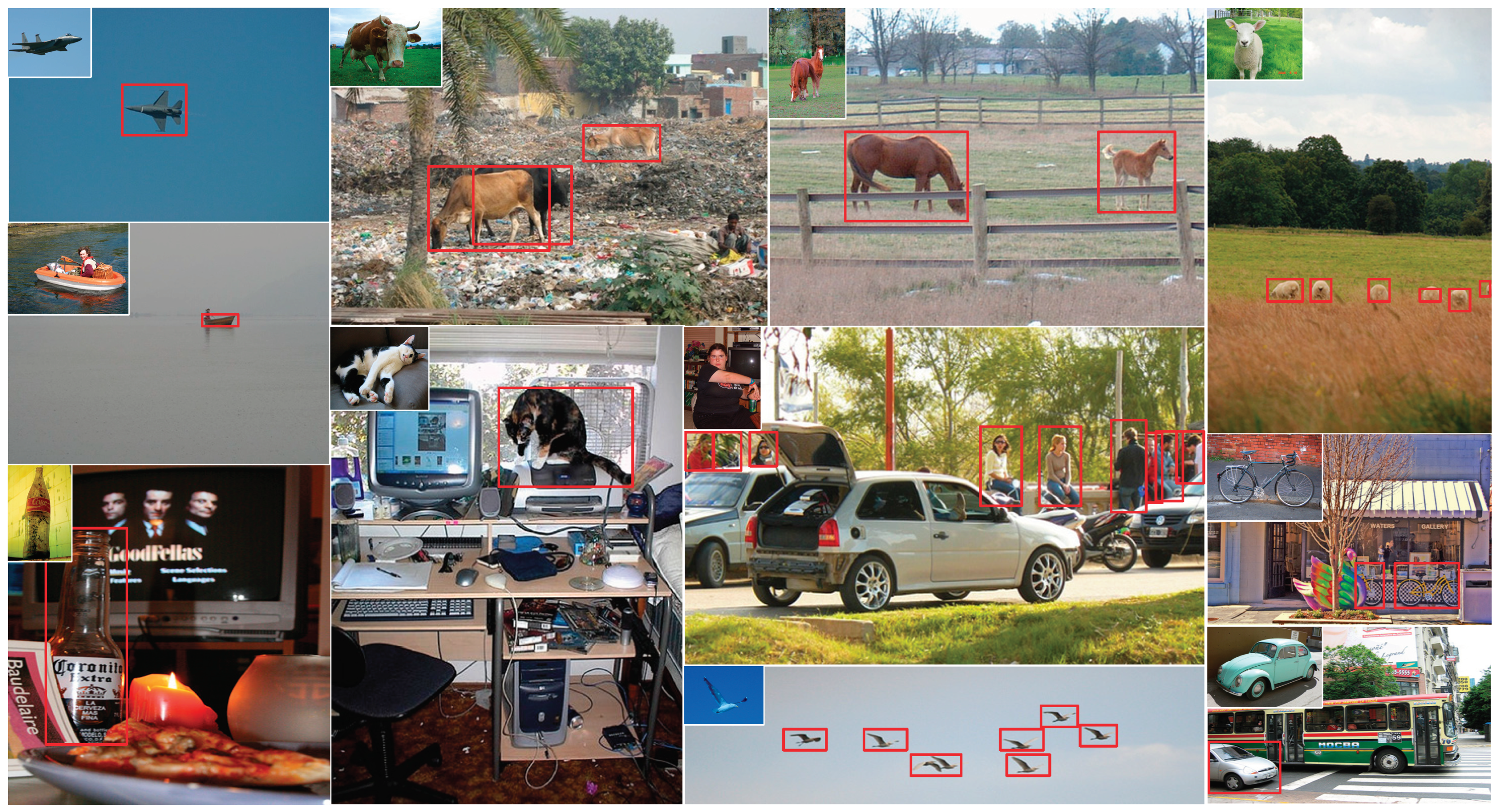

4. Experiments

4.1. Few-Shot Object Detection Benchmarks

4.2. Implementation Details

4.3. Ablation Studies

4.4. Comparison with State-of-the-Art Methods

5. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov, and Sergey Zagoruyko. End-to-end object detection with transformers. In European Conference on Computer Vision, pages 213–229. Springer, 2020.

- [2] Guangxing Han, Xuan Zhang, and Chongrong Li. Semi-supervised DFF: Decoupling detection and feature flow for video object detectors. In Proceedings of the 26th ACM International Conference on Multimedia, pages 1811–1819, 2018.

- [3] Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 779–788, 2016.

- [4] Peize Sun, Rufeng Zhang, Yi Jiang, Tao Kong, et al. Sparse R-CNN: End-to-end object detection with learnable proposals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 14454–14463, 2021.

- [5] Hao Zhang, Feng Li, Shilong Liu, Lei Zhang, Hang Su, Jun Zhu, Lionel M. Ni, and Heung-Yeung Shum. DINO: DETR with improved denoising anchor boxes for end-to-end object detection. arXiv preprint arXiv:2203.03605, 2022.

- [6] Jake Snell, Kevin Swersky, and Richard Zemel. Prototypical networks for few-shot learning. In NeurIPS, 2017.

- [7] Sachin Ravi and Hugo Larochelle. Optimization as a model for few-shot learning. In ICLR, 2017.

- [8] Chelsea Finn, Pieter Abbeel, and Sergey Levine. Model-agnostic meta-learning for fast adaptation of deep networks. In ICML, 2017.

- [9] Qi Cai, Yingwei Pan, Ting Yao, Chenggang Yan, and Tao Mei. Memory matching networks for one-shot image recognition. In CVPR, 2018.

- [10] Spyros Gidaris and Nikos Komodakis. Dynamic few-shot visual learning without forgetting. In CVPR, 2018.

- [11] Hao Chen, Yali Wang, Guoyou Wang, and Yu Qiao. LSTD: A low-shot transfer detector for object detection. In AAAI, 2018.

- [12] Bingyi Kang, Zhuang Liu, Xin Wang, Fisher Yu, Jiashi Feng, and Trevor Darrell. Few-shot object detection via feature reweighting. In ICCV, 2019.

- [13] Xiaopeng Yan, Ziliang Chen, Anni Xu, Xiaoxi Wang, Xiaodan Liang, and Liang Lin. Meta R-CNN: Towards general solver for instance-level low-shot learning. In ICCV, 2019.

- [14] Ross Girshick, Jeff Donahue, Trevor Darrell, and Jitendra Malik. Rich feature hierarchies for accurate object detection and semantic segmentation. In CVPR, 2014.

- [15] R. Girshick. Fast R-CNN. In ICCV, 2015.

- [16] S. Ren, K. He, R. Girshick, and J. Sun. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(6):1137–1149, 2016.

- [17] K. He, X. Zhang, S. Ren, et al. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 37(9):1904–1916, 2015.

- [18] Z. Ge, S. Liu, F. Wang, et al. YOLOX: Exceeding YOLO series in 2021. arXiv preprint arXiv:2107.08430, 2021.

- [19] Glenn Jocher et al. YOLOv5. https://github.com/ultralytics/yolov5, 2021.

- [20] C. Y. Wang, A. Bochkovskiy, and H. Y. M. Liao. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7464–7475, 2023.

- [21] Qi Fan, Wei Zhuo, Chi-Keung Tang, and Yu-Wing Tai. Few-shot object detection with Attention-RPN and multi-relation detector. In CVPR, 2020.

- [22] J. Wu, S. Liu, D. Huang, and Y. Wang. Multi-scale positive sample refinement for few-shot object detection. In Proceedings of the European Conference on Computer Vision (ECCV), pages 456–472, 2020.

- [23] Xin Wang, Thomas Huang, Joseph Gonzalez, Trevor Darrell, and Fisher Yu. Frustratingly simple few-shot object detection. In International Conference on Machine Learning, pages 9919–9928. PMLR, 2020.

- [24] N. Wojke and A. Bewley. Deep cosine metric learning for person re-identification. In 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 748–756. IEEE, 2018.

- [25] L. Karlinsky, J. Shtok, S. Harary, et al. RepMet: Representative-based metric learning for classification and few-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5197–5206, 2019.

- [26] H. Song, D. Sun, S. Chun, et al. ViDT: An efficient and effective fully transformer-based object detector. arXiv preprint arXiv:2110.03921, 2021.

- [27] Y. Li, H. Mao, R. Girshick, et al. Exploring plain vision transformer backbones for object detection. In European Conference on Computer Vision, pages 280–296. Springer Nature Switzerland, 2022.

- [28] Y. Fang, S. Yang, S. Wang, et al. Unleashing vanilla vision transformer with masked image modeling for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 6244–6253, 2023.

- [29] F. Liu, X. Zhang, Z. Peng, et al. Integrally migrating pre-trained transformer encoder-decoders for visual object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 6825–6834, 2023.

- [30] Xizhou Zhu, Weijie Su, Lewei Lu, Bin Li, Xiaogang Wang, and Jifeng Dai. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv preprint arXiv:2010.04159, 2020.

- [31] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin. Attention is all you need. In NeurIPS, 2017.

- [32] Luca Bertinetto, Jack Valmadre, Joao F. Henriques, Andrea Vedaldi, and Philip H. S. Torr. Fully-convolutional Siamese networks for object tracking. In ECCV, 2016.

- [33] Erika Lu, Weidi Xie, and Andrew Zisserman. Class-agnostic counting. In ACCV, 2018.

- [34] Bo Li, Wei Wu, Qiang Wang, Fangyi Zhang, Junliang Xing, and Junjie Yan. SiamRPN++: Evolution of Siamese visual tracking with very deep networks. In CVPR, 2019.

- [35] Yi Sun, Yuheng Chen, Xiaogang Wang, and Xiaoou Tang. Deep learning face representation by joint identification-verification. In Advances in Neural Information Processing Systems, pages 1988–1996, 2014.

- [36] Prannay Khosla, Piotr Teterwak, Chen Wang, Aaron Sarna, Yonglong Tian, Phillip Isola, Aaron Maschinot, Ce Liu, and Dilip Krishnan. Supervised contrastive learning. Advances in Neural Information Processing Systems, 33, 2020.

- [37] Aaron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive predictive coding. Advances in Neural Information Processing Systems, 31, 2018.

- [38] M. Everingham, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman. The PASCAL visual object classes (VOC) challenge. IJCV, 2010.

- [39] Yang Xiao and Renaud Marlet. Few-shot object detection and viewpoint estimation for objects in the wild. In European Conference on Computer Vision (ECCV), 2020.

- [40] Yu-Xiong Wang, Deva Ramanan, and Martial Hebert. Meta-learning to detect rare objects. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pages 9924–9933. IEEE, 2019.

- [41] T. Y. Lin, M. Maire, S. Belongie, L. Bourdev, R. Girshick, J. Hays, P. Perona, D. Ramanan, C. L. Zitnick, and P. Dollár. Microsoft COCO: Common objects in context. In ECCV, 2014.

- [42] B. Li, C. Wang, P. Reddy, et al. AirDet: Few-shot detection without fine-tuning for autonomous exploration. In European Conference on Computer Vision, pages 427–444. Springer Nature Switzerland, 2022.

- [43] J. Ma, Y. Niu, J. Xu, et al. DiGeo: Discriminative geometry-aware learning for generalized few-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3208–3218, 2023.

- [44] W. Zhang and Y. X. Wang. Hallucination improves few-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 13008–13017, 2021.

- [45] B. Sun, B. Li, S. Cai, et al. FSCE: Few-shot object detection via contrastive proposal encoding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7352–7362, 2021.

- [46] Y. Cao, J. Wang, Y. Jin, et al. Few-shot object detection via association and discrimination. Advances in Neural Information Processing Systems, 34:16570–16581, 2021.

| Method | Precision | Recall | AP | ABO |
|---|---|---|---|---|
| Regular-RPN | 0.7923 | 0.8804 | 54.5 | 0.7127 |
| Attention-RPN | 0.8345 | 0.9130 | 56.9 | 0.7282 |
| Correlation-RPN | 0.8509 | 0.9214 | 57.1 | 0.7335 |

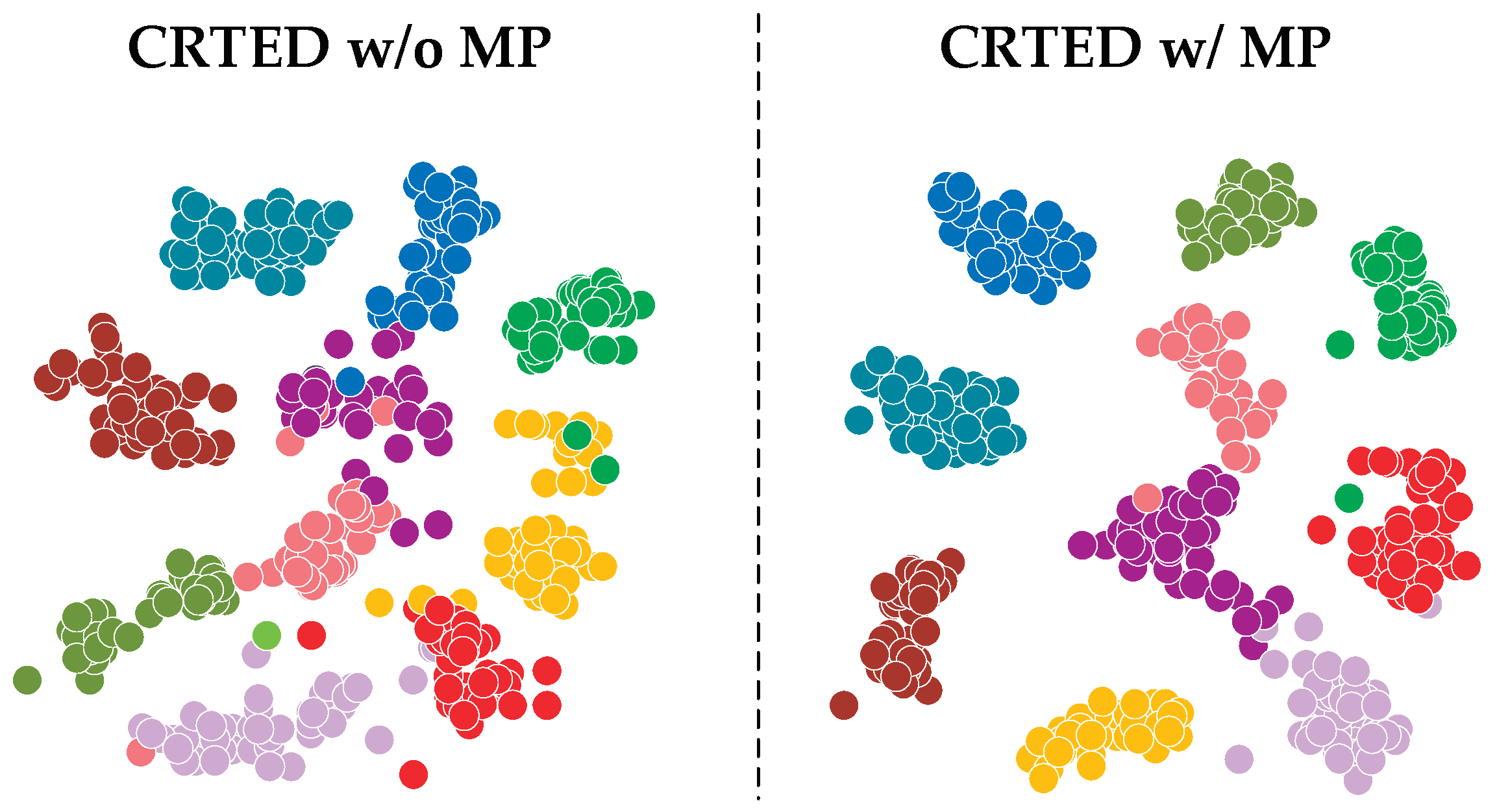

Novel mAP (IoU = 0.5) for each number of shots:

| Method | MP | C | 1-shot | 2-shot | 3-shot | 5-shot | 10-shot |
|---|---|---|---|---|---|---|---|
| CRTED | | 1 | 28.2 | 43.3 | 51.6 | 54.0 | 60.3 |
| | √ | 1 | 31.3 | 45.2 | 53.1 | 56.8 | 63.0 |
| | | 5 | 33.7 | 46.5 | 52.4 | 57.1 | 61.8 |
| | √ | 5 | 37.3 | 51.1 | 54.5 | 58.2 | 63.3 |

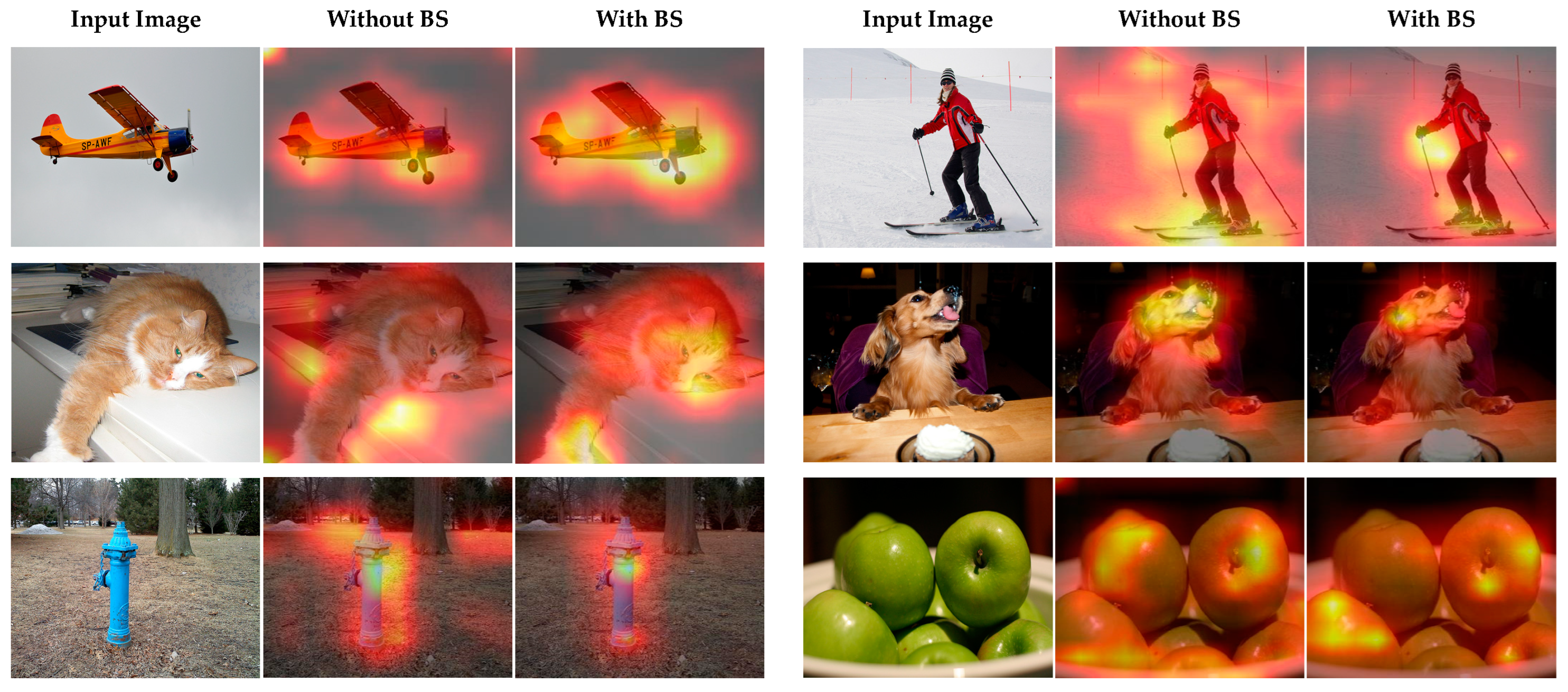

| Split | Method | 1-shot | 2-shot | 3-shot | 5-shot | 10-shot |
|---|---|---|---|---|---|---|
| 1 | CRTED BL | 68.7 | 69.4 | 70.8 | 73.6 | 75.5 |
| 1 | CRTED BL+BS | 69.8 | 70.2 | 72.0 | 75.4 | 76.5 |
| 2 | CRTED BL | 65.5 | 66.8 | 69.9 | 71.3 | 73.3 |
| 2 | CRTED BL+BS | 67.7 | 68.0 | 71.3 | 71.8 | 73.7 |
| 3 | CRTED BL | 67.7 | 68.7 | 71.4 | 72.7 | 74.8 |
| 3 | CRTED BL+BS | 68.6 | 70.4 | 72.7 | 73.9 | 75.1 |

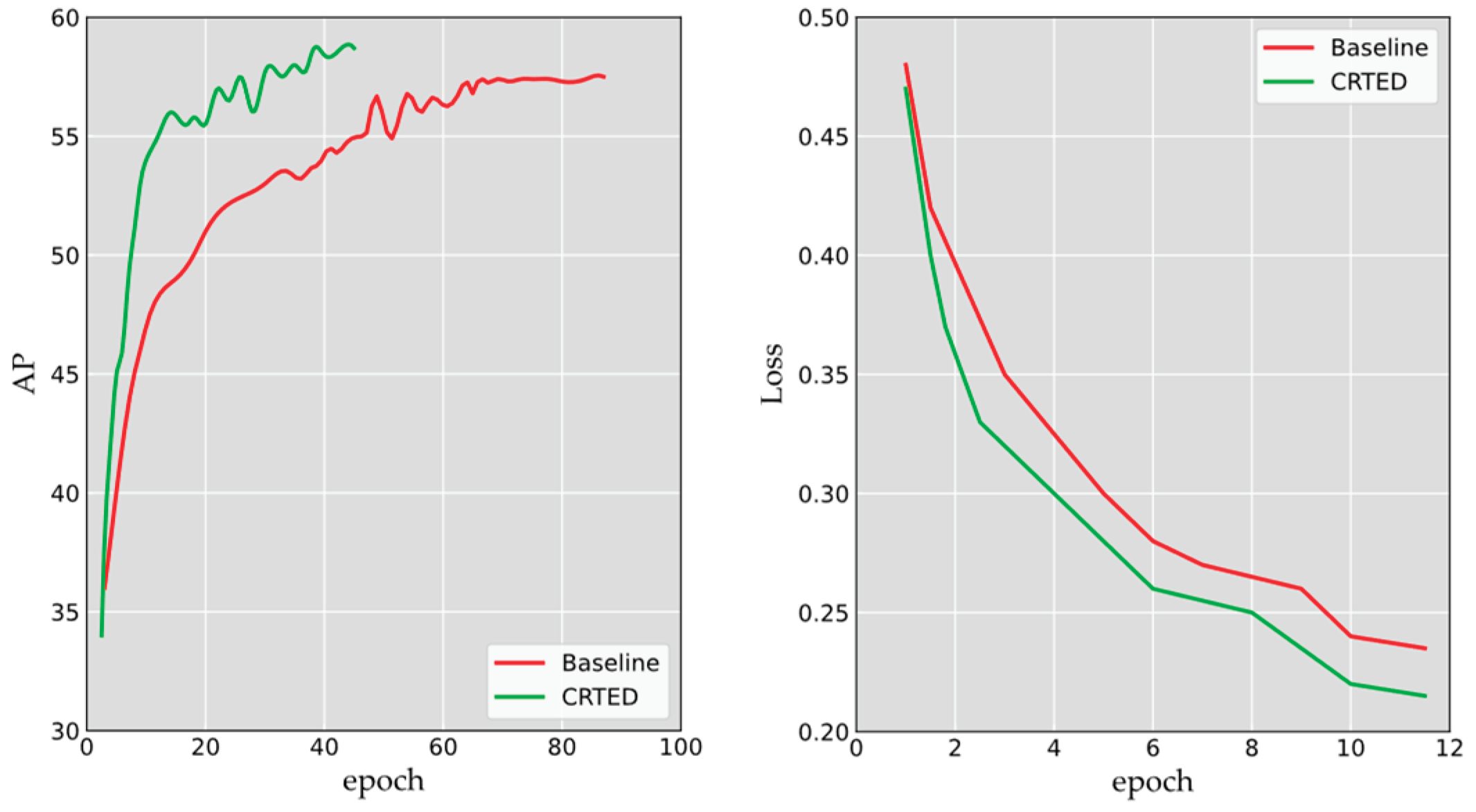

| Training strategy | AP | AP50 | AP75 |
|---|---|---|---|
| 1-shot | 48.5 | 55.9 | 41.1 |
| 1-shot | 48.7 | 56.2 | 41.2 |
| 5-shot | 57.7 | 68.1 | 47.3 |
| 5-shot | 58.1 | 68.4 | 47.7 |
| 10-shot | 58.8 | 69.2 | 48.4 |
| 10-shot | 59.7 | 70.5 | 48.8 |

nAP50, averaged over splits, for each number of shots:

| Method | Fine-tune | 1-shot | 2-shot | 3-shot | 5-shot | 10-shot |
|---|---|---|---|---|---|---|
| FSRW [12] | √ | 16.6 | 17.5 | 25.0 | 34.9 | 42.6 |
| Meta R-CNN [13] | √ | 11.2 | 15.3 | 20.5 | 29.8 | 37.0 |
| TFA w/ fc [23] | √ | 27.6 | 30.6 | 39.8 | 46.6 | 48.7 |
| TFA w/ cos [23] | √ | 31.4 | 32.6 | 40.5 | 46.8 | 48.3 |
| FSDetView [39] | √ | 26.9 | 20.4 | 29.9 | 31.6 | 37.7 |
| A-RPN [21] | × | 18.1 | 22.6 | 24.0 | 25.0 | - |
| AirDet [42] | × | 21.3 | 26.8 | 28.6 | 29.8 | - |
| DiGeo [43] | √ | 31.6 | 36.1 | 45.8 | 51.2 | 55.1 |
| CRTED (Ours) | × | 20.7 | 25.6 | 28.6 | 30.0 | 34.4 |
| CRTED (Ours) | √ | 31.8 | 32.8 | 34.0 | 45.0 | 48.9 |

| Method | Venue | Fine-tune | 1-shot | 2-shot | 3-shot | 5-shot | 10-shot | 30-shot |
|---|---|---|---|---|---|---|---|---|
| FSRW [12] | ICCV 2019 | √ | - | - | - | - | 5.6 | 9.2 |
| Meta R-CNN [13] | ICCV 2019 | √ | - | - | - | - | 8.7 | 12.4 |
| TFA w/ fc [23] | ICML 2020 | √ | 2.8 | 4.1 | 6.3 | 7.9 | 9.1 | - |
| TFA w/ cos [23] | ICML 2020 | √ | 3.1 | 4.2 | 6.1 | 7.6 | 9.1 | 12.1 |
| FSDetView [39] | ECCV 2020 | √ | 2.2 | 3.4 | 5.2 | 8.2 | 12.5 | - |
| MPSR [22] | ECCV 2020 | √ | 3.3 | 5.4 | 5.7 | 7.2 | 9.8 | - |
| A-RPN [21] | CVPR 2020 | × | 4.3 | 4.7 | 5.3 | 6.1 | 7.4 | - |
| W. Zhang et al. [44] | CVPR 2021 | √ | 4.4 | 5.6 | 7.2 | - | - | - |
| FSCE [45] | CVPR 2021 | √ | - | - | - | - | 11.1 | 15.3 |
| FADI [46] | NeurIPS 2021 | √ | 5.7 | 7.0 | 8.6 | 10.1 | 12.2 | - |
| AirDet [42] | ECCV 2022 | × | 6.0 | 6.6 | 7.0 | 7.8 | 8.7 | 12.1 |
| CRTED | Ours | × | 5.8 | 6.2 | 7.2 | 7.4 | 8.6 | 12.4 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).