I. Introduction

Navigating the vast and mysterious underwater world is no easy feat, especially when faced with challenges like electromagnetic wave attenuation and limited visibility [1]. How can we improve the precision and reliability of underwater navigation? This research paper delves into integrating multiple sensors and deep learning techniques to enhance underwater navigation and perception, offering innovative solutions to longstanding obstacles.

i) Background Context

The Earth's oceans cover about 71% of the planet's surface, holding immense value for resources, scientific exploration, and environmental understanding [2,3]. Unmanned Underwater Vehicles (UUVs) [4,5] are instrumental in various applications, from marine mining to pipeline inspection, but their effectiveness is hindered by the limitations of traditional navigation methods [6,7]. These methods, such as inertial sensors and acoustic beacons, struggle in challenging underwater conditions due to cumulative errors, limited range, and environmental interference [7,8]. Optical obstacles unique to water, such as low lighting, turbidity scattering, and wavelength absorption, degrade the quality and reliability of visual data. Moreover, the absence of access to global positioning systems (GPS) [9,10,11] complicates precise localization and data collection.

Underwater navigation therefore poses formidable challenges: limited visibility, high pressure, harsh conditions, complex terrain, limited communication, sensor integration issues, and the absence of reliable navigation references like GPS. These challenges render traditional SLAM algorithms inadequate for subsea navigation, as they struggle with sensor unsuitability, environmental variability, feature scarcity, and communication constraints. Despite these challenges, the quest for more reliable and accurate underwater navigation has led to exploring deep learning techniques, particularly in visual Simultaneous Localization and Mapping (SLAM), as a promising avenue for improvement along with sensor fusion.

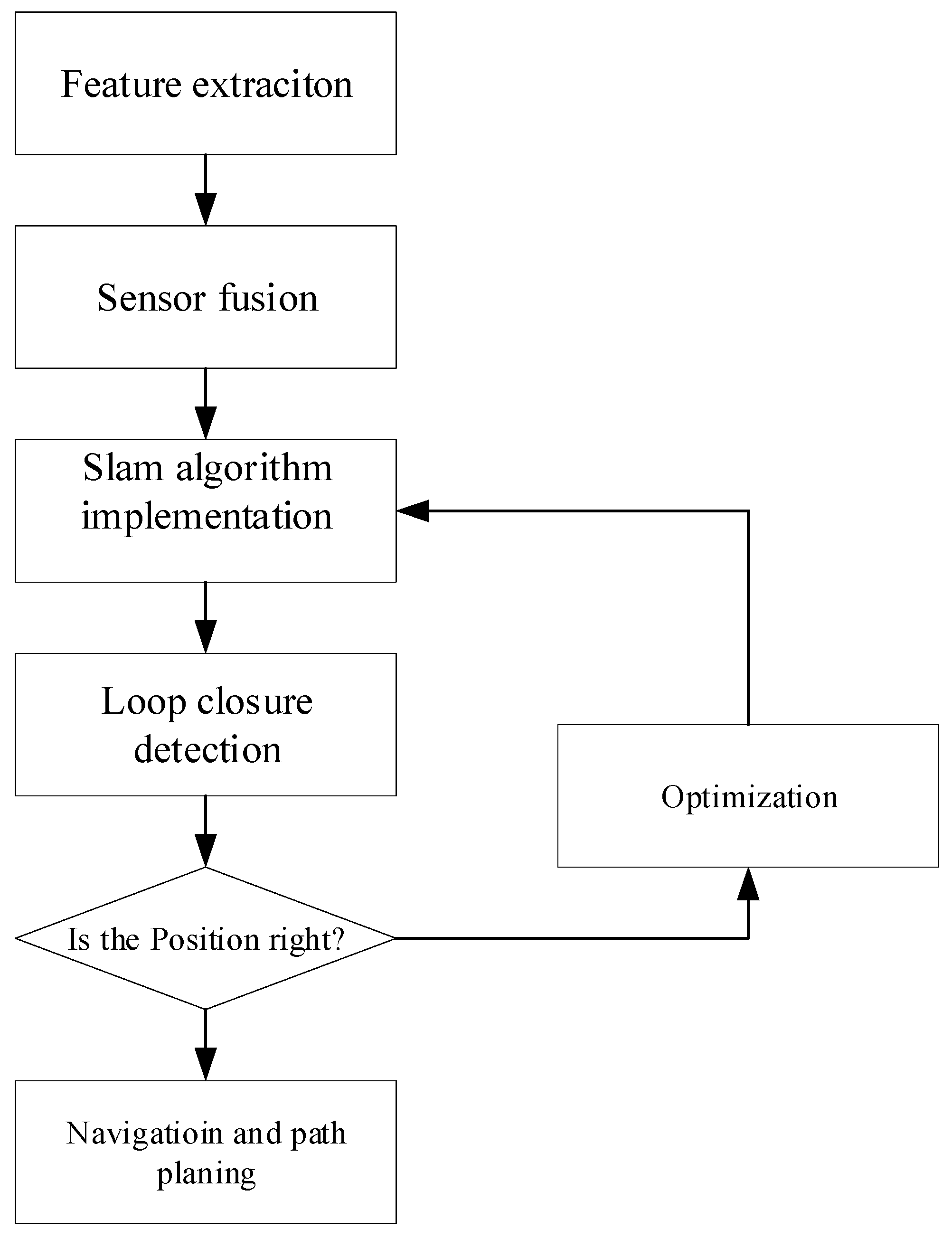

Figure 1. Underwater SLAM process.

ii) Introduction of standard sensors and methodologies for underwater navigation and perception

Underwater sensors are specialized electronic devices that measure physical and environmental parameters in the ocean, enabling data collection for navigation, research, and monitoring in challenging underwater conditions. Different sensor types serve different tasks. Underwater vehicle sensors are classified into proprioceptive sensors and exteroceptive sensors, the latter including visual sensors.

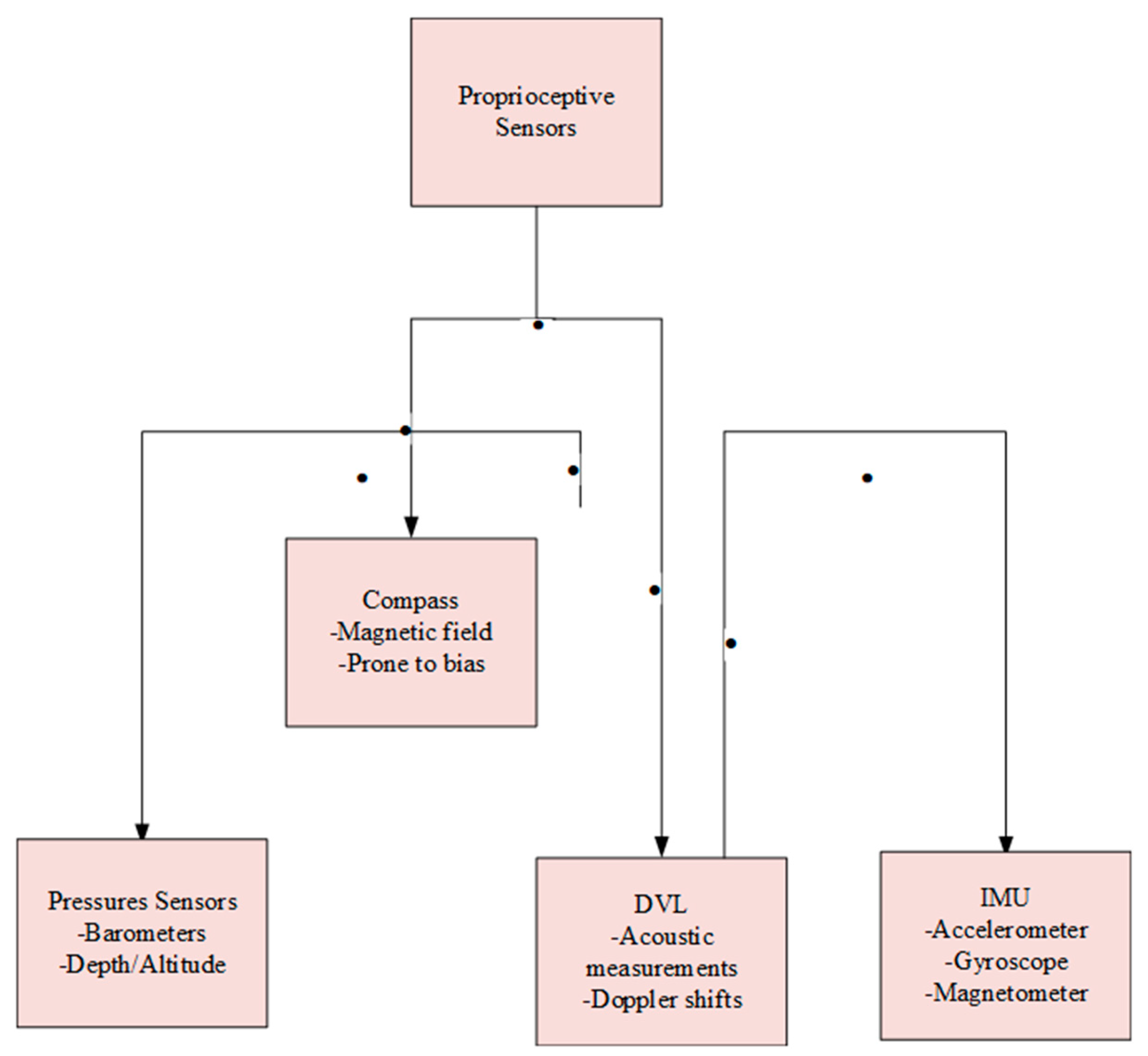

ii.1 Proprioceptive sensors

Proprioceptive sensors, including accelerometers, gyroscopes, and sometimes magnetometers, provide critical insights into a system's internal state and motion, measuring parameters like position, velocity, acceleration, and orientation. Widely applied in robotics and navigation, these sensors bolster awareness of the device's movements, enabling precise control. Commonly used proprioceptive sensors, such as the depth sensor, Doppler velocity log, inertial measurement unit, and compass, rely on various technologies, including acoustic measurements and magnetic field detection [3,12,13]. Together, this sensor suite measures depth, velocity, acceleration, and orientation.

Compass:

The magnetic compass relies on the Earth's magnetic field but is prone to bias. The gyrocompass relies on a fast-spinning rotor and is unaffected by nearby metal, but it is more expensive.

Pressure Sensors:

Barometers or pressure sensors can be used for depth measurements. They provide essential information for underwater vehicles to determine their depth, aiding navigation, control, and safety.
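As a minimal sketch of how a pressure reading maps to depth, the hydrostatic relation P = P_atm + ρ·g·h can be inverted for h. The seawater density, gravity, and atmospheric pressure below are nominal assumptions, and the function name is hypothetical rather than taken from any cited system.

```python
# Hydrostatic depth estimate: depth = (P - P_atm) / (rho * g).
# All constants are nominal assumptions for illustration only.
SEAWATER_DENSITY = 1025.0        # kg/m^3, typical seawater (assumed)
GRAVITY = 9.80665                # m/s^2, standard gravity
ATMOSPHERIC_PRESSURE = 101325.0  # Pa, standard atmosphere


def depth_from_pressure(absolute_pressure_pa: float) -> float:
    """Return depth in metres for an absolute pressure reading in pascals."""
    gauge_pressure = absolute_pressure_pa - ATMOSPHERIC_PRESSURE
    # Clamp at the surface so sensor noise cannot yield a negative depth.
    return max(gauge_pressure, 0.0) / (SEAWATER_DENSITY * GRAVITY)
```

Under these assumptions, an absolute reading of about two atmospheres corresponds to roughly 10 m of seawater. A deployed system would typically also correct for local density variations with temperature and salinity.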

DVL (Doppler Velocity Log):

Employs acoustic measurements to track the seafloor and calculate velocity. It captures the surge, sway, and heave velocities of an Autonomous Underwater Vehicle (AUV) by transmitting acoustic pulses and gauging the Doppler shifts of the seabed returns [14,15].
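The Doppler principle described above can be sketched as follows. The two-way Doppler relation v = c·Δf / (2·f0) is standard; the nominal sound speed, the fore/aft beam geometry, the sign convention, and both function names are assumptions made for illustration and do not describe a specific instrument.

```python
import math

# Nominal sound speed in seawater (assumed; it varies with temperature,
# salinity, and depth).
SOUND_SPEED_WATER = 1500.0  # m/s


def beam_velocity(doppler_shift_hz: float, carrier_hz: float,
                  sound_speed: float = SOUND_SPEED_WATER) -> float:
    """Along-beam velocity from a two-way Doppler shift: v = c * df / (2 * f0)."""
    return sound_speed * doppler_shift_hz / (2.0 * carrier_hz)


def surge_and_heave(v_fore: float, v_aft: float, beam_angle_rad: float):
    """Recover surge (u) and heave (w) from a fore/aft beam pair.

    Assumed convention: both beams are tilted by beam_angle from vertical, so
    v_fore = u*sin(a) + w*cos(a) and v_aft = -u*sin(a) + w*cos(a).
    """
    u = (v_fore - v_aft) / (2.0 * math.sin(beam_angle_rad))
    w = (v_fore + v_aft) / (2.0 * math.cos(beam_angle_rad))
    return u, w
```

A second, orthogonal beam pair would recover sway in the same way, which is why multi-beam configurations can report all three velocity components.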

IMU (Inertial Measurement Unit) Sensors:

Initially developed for aircraft navigation by Ford, IMU sensors now have broad applications, such as in mobile phones and pedometers. The industry standard is MEMS-based IMUs, including those from manufacturers like Analog Devices, EMCORE, Honeywell, and Collins Aerospace. IMUs offer fast data collection and sensitivity but are prone to cumulative errors and have limited runtime. In SLAM, IMUs are often combined with visual and laser sensors, which mitigate these errors by estimating the IMU zero bias [16]. An IMU combines accelerometers and gyroscopes (and sometimes magnetometers) to calculate a vehicle's orientation, velocity, and gravitational forces.
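To illustrate why uncorrected IMU errors accumulate, the sketch below double-integrates a constant accelerometer bias with no true motion. The bias value, time step, and function name are hypothetical; the point is only that position error grows roughly as 0.5·b·t².

```python
def position_drift(bias_mps2: float, duration_s: float, dt: float = 0.01) -> float:
    """Position error (m) from double-integrating a constant acceleration bias."""
    velocity = 0.0
    position = 0.0
    steps = int(round(duration_s / dt))
    for _ in range(steps):
        velocity += bias_mps2 * dt  # first integration: bias becomes velocity error
        position += velocity * dt   # second integration: velocity error becomes drift
    return position
```

With an assumed bias of 0.01 m/s², the drift is about 18 m after one minute and about four times that after two minutes, which is why an external reference that estimates the bias is needed.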

Gyroscope:

Measures angular rates using either Ring Laser/Fiber Optic or MEMS technology. The Ring Laser employs mirrors or fiber optic cables to detect angular rates by observing changes in light. MEMS uses an oscillating mass in a spring system, where gyroscope rotation causes a perpendicular Coriolis force on the mass to calculate the angular rate.
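For reference, the Coriolis relation behind the MEMS principle can be written as below. The symbols are introduced here for illustration: $m$ is the oscillating proof mass, $\mathbf{v}$ its driven velocity in the sensor frame, and $\boldsymbol{\Omega}$ the angular rate being measured.

```latex
\mathbf{F}_c = -2m\,\boldsymbol{\Omega}\times\mathbf{v},
\qquad
\lvert\mathbf{F}_c\rvert = 2m\,\Omega\,v \ \text{ when } \boldsymbol{\Omega}\perp\mathbf{v}
\;\Longrightarrow\;
\Omega = \frac{\lvert\mathbf{F}_c\rvert}{2mv}
```

Because the force is perpendicular to both the drive direction and the rotation axis, sensing the displacement along that perpendicular axis yields the angular rate.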

Accelerometer:

It measures the force needed to accelerate a proof mass and comes in various designs, such as pendulum, Micro-Electro-Mechanical Systems (MEMS), and vibrating-beam accelerometers [17].

Figure 2. Proprioceptive Sensors Overview.

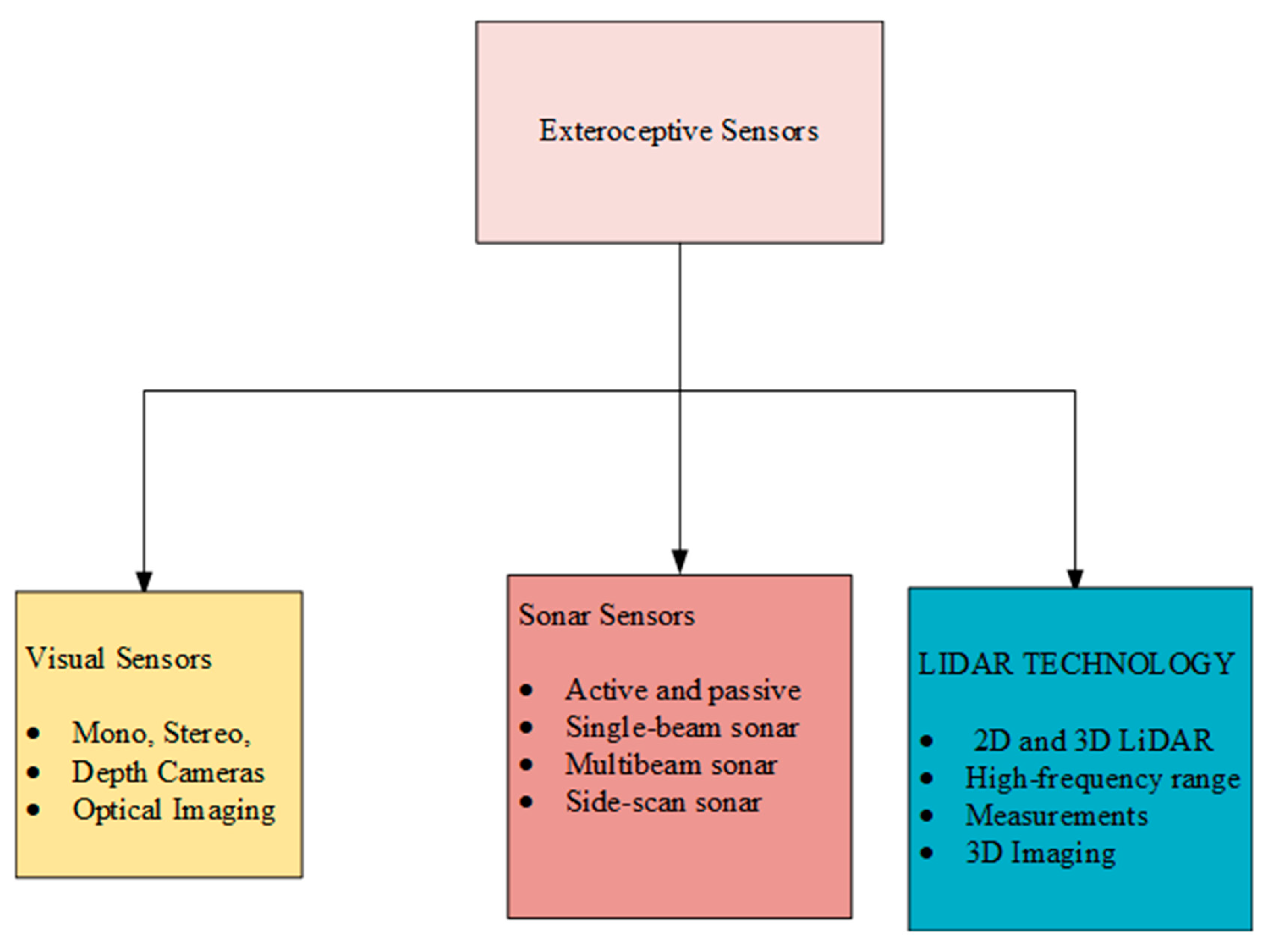

ii.2 Exteroceptive Sensors

ii.2.1 Visual sensors

Visual SLAM primarily depends on cameras as exteroceptive sensors to perceive external environmental information; monocular, stereo, and depth cameras are used [18,19]. A camera works on optical imaging principles, capturing images with photoreceptors. SLAM algorithms based on visual inputs are accordingly classified into monocular, stereo, and RGB-D categories, contingent on the type of camera employed [20]. Furthermore, specific algorithms, such as ORB-SLAM3, can be used with both pinhole and fisheye cameras, broadening their applicability in visual SLAM scenarios.

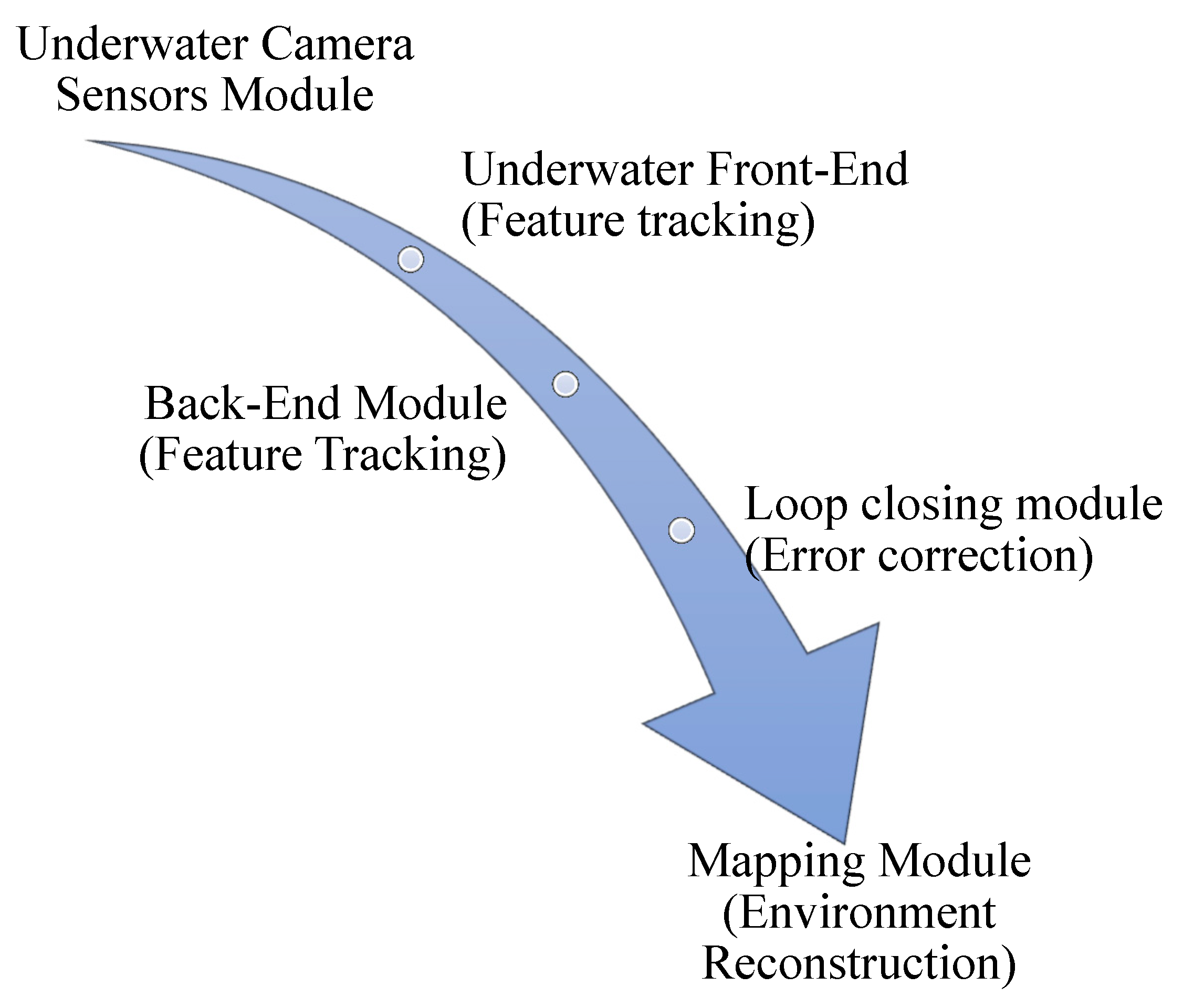

Figure 3. The typical architecture of a visual SLAM system.

Monocular or single-lens camera:

Single-lens (monocular) cameras provide economical and straightforward imaging solutions. MonoSLAM pioneered real-time monocular visual SLAM [21,22]. In underwater research, Hidalgo et al. investigated ORB-SLAM through controlled experiments featuring diverse conditions. Their study confirmed ORB-SLAM's effectiveness under adequate illumination, minimal flicker, and abundant scene features. Monocular Visual Odometry (VO) computes relative motion and 3D structure from 2D bearing data. Because the absolute scale is unknown, the distance between the first two camera poses is set to one. As subsequent images are processed, the relative scale and camera pose with respect to the initial frames are inferred using either 3D structure information or the trifocal tensor [23].

Stereo Camera

Stereo cameras calculate depth using parallax, with the baseline affecting the measurement range [24]. Unlike monocular cameras, which lack depth information, they offer a promising solution for accurate underwater robot localization and proximity operations. Researchers have developed innovative methods utilizing stereo cameras [8,25,26,27], such as a relative SLAM approach that employs a topological metric representation for real-time processing. Additionally, some methods fuse visual and inertial data to eliminate noise and achieve precise underwater robot localization, with typical localization errors below 3%. These advancements demonstrate the potential of stereo cameras in enhancing underwater robotics [28].
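The parallax relation mentioned above can be sketched for a rectified stereo pair as Z = f·B / d. The focal length, baseline, and disparity values used in any call are hypothetical, and the sketch assumes the cameras were calibrated in water so that refraction is already absorbed into the focal length.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m: float, focal_px: float, baseline_m: float,
                disparity_error_px: float) -> float:
    """First-order depth uncertainty: dZ = Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px
```

The second function shows why the baseline limits the useful range: for a fixed disparity error, depth uncertainty grows with the square of the distance and shrinks as the baseline increases.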

Depth Camera

Depth cameras, also known as RGB-D cameras or depth sensors, capture color (RGB) and depth information, creating a 3D representation of the environment by measuring the distance between the camera and objects with structured light or time-of-flight mechanisms. These physical methods reduce computational demands compared to the software-based distance estimation used by binocular cameras. However, depth cameras face challenges, including limited measurement ranges, high noise levels, restricted fields of view, susceptibility to sunlight interference, and difficulty in measuring translucent materials due to the characteristics of reflected light [18]. Underwater, RGB-D cameras such as Kinect v1 and v2 are further limited because their infrared light is weakened in water. Modern RGB-D sensors use active stereo technology for robust depth acquisition, which is suitable for various applications. Remaining challenges include sensor errors and semiconductor performance, especially in underwater environments [29,30].

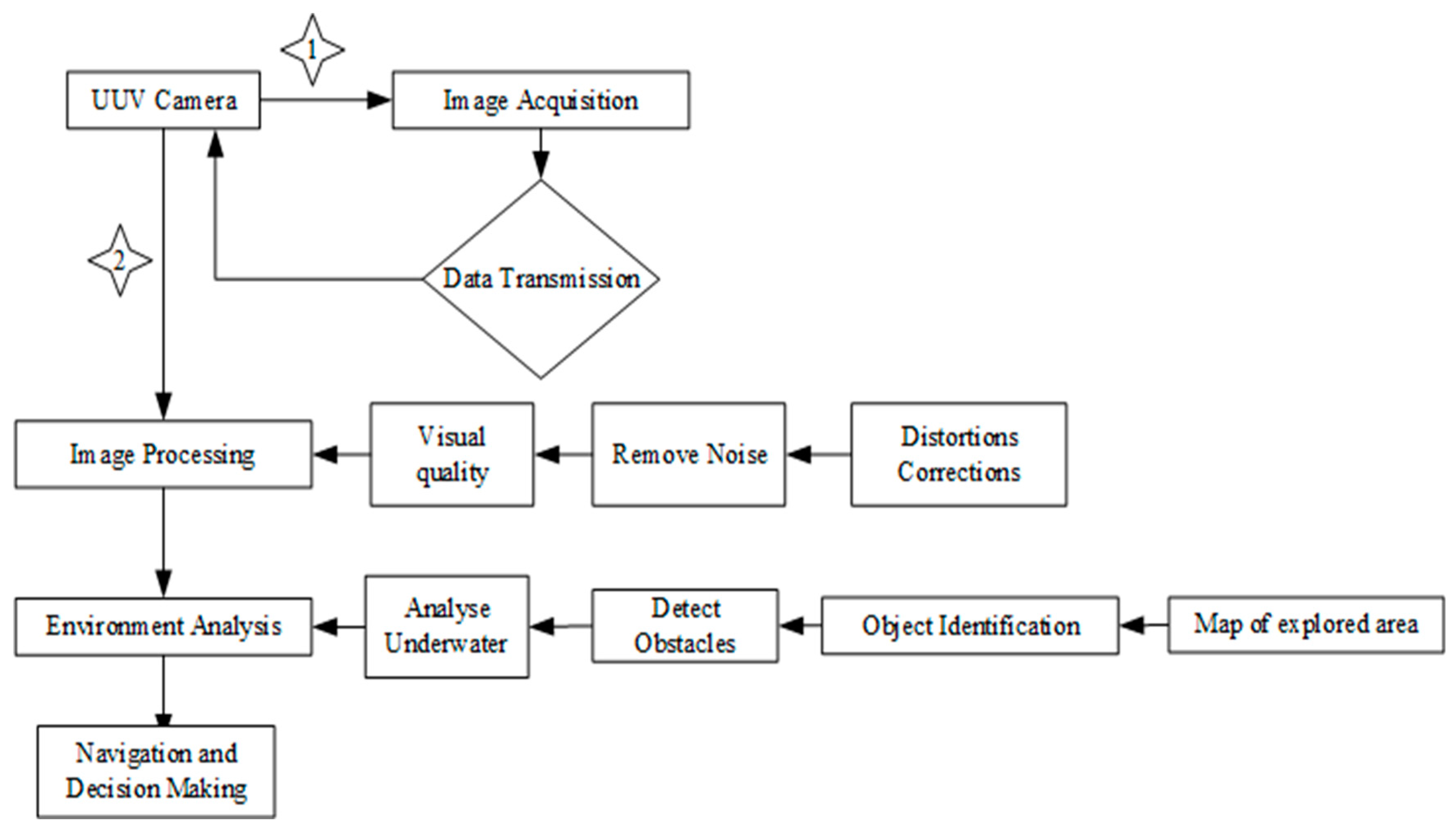

Figure 4. Process of Camera in Underwater Positioning System.

ii.2.2 Sonar Sensors

Sonar sensors, categorized as active and passive, use sound waves for underwater detection. Table 1 summarizes sonar sensor types and their applications in underwater environments.

Table 1. Sonar Types and Applications.

| Sonar Type | Description | Reference |
|---|---|---|
| Active Sonar | Employed for search and positioning in underwater environments. | [16] |
| Passive Sonar | Tracks target distance in underwater settings. | [16] |
| Single-beam Sonar | A single-beam scanning sonar for imaging in low-visibility conditions offers distance information over several meters and is immune to water turbidity. | [31,32] |
| Multibeam Sonar | Utilizes multiple beams to measure seafloor depth and characteristics rapidly and accurately. Ideal for high-resolution 3D mapping in various underwater applications. | [33,34] |
| Side-scan / forward-looking Sonar | Widely used for detecting underwater objects like wrecks and mines, providing high-resolution acoustic images of seafloor morphology. | [17,35] |

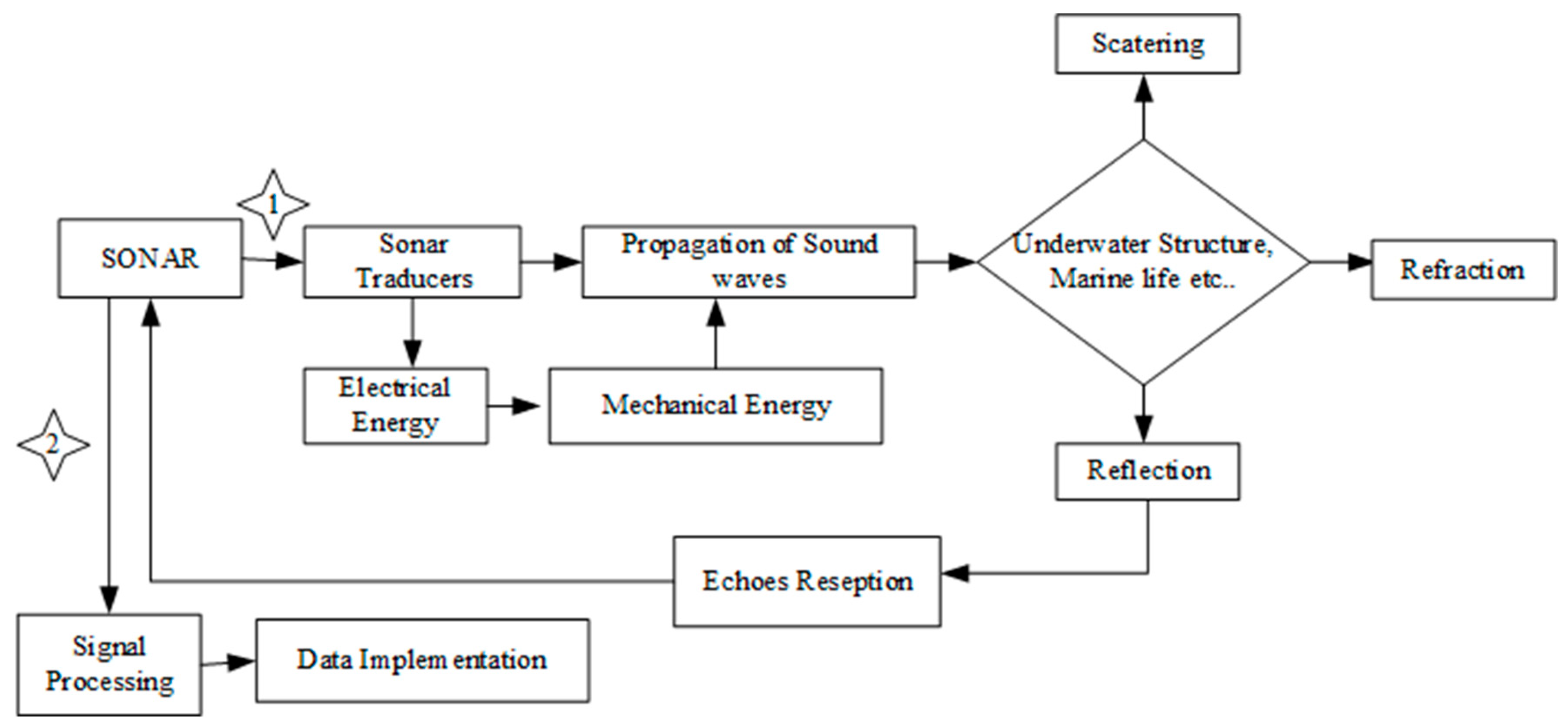

Figure 5. Sonar system process in Underwater navigation.

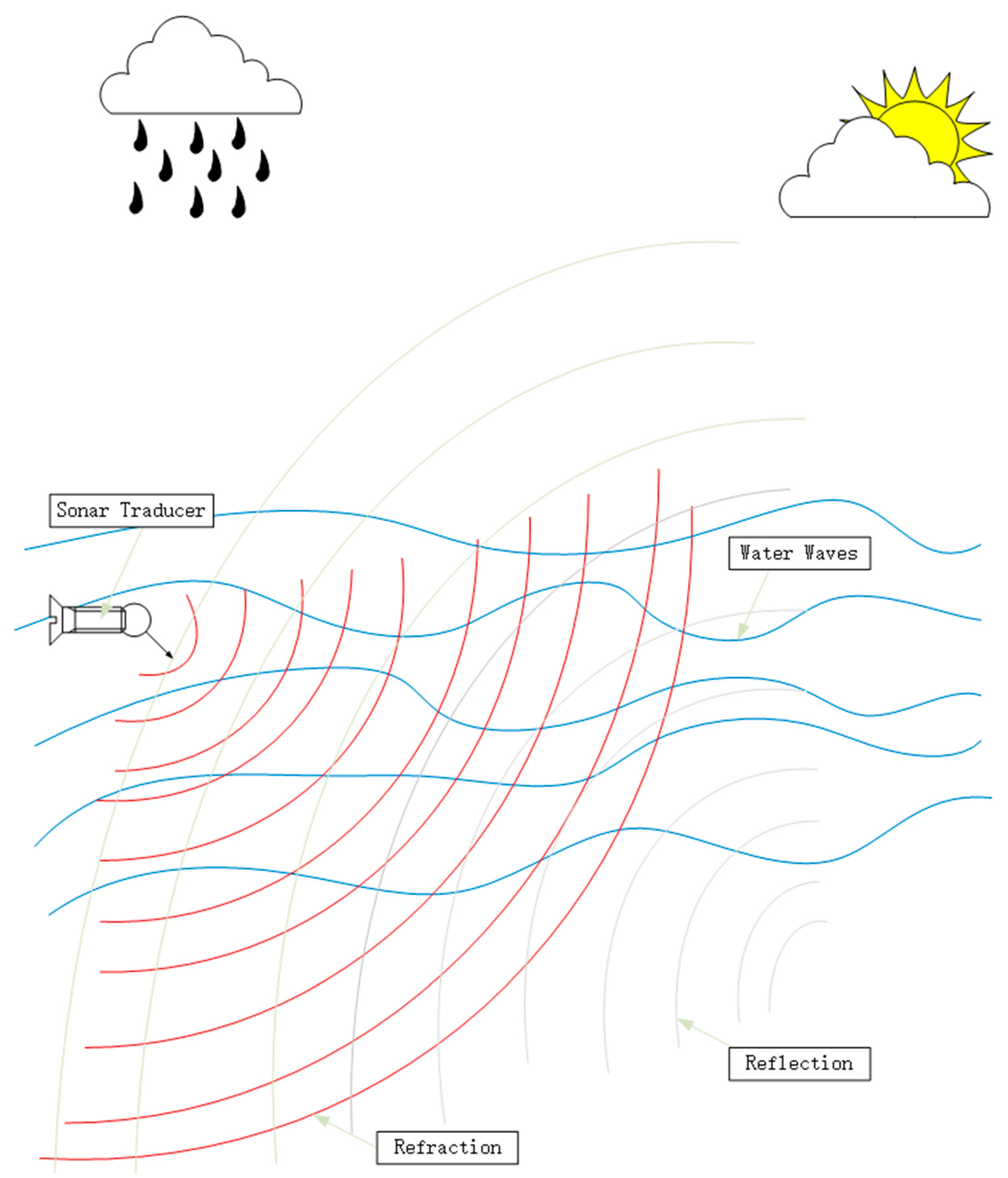

Figure 6. Sonar system underwater.

ii.2.3 LiDAR Technology for Underwater Mapping and Navigation

LiDAR sensors provide accurate, high-frequency range measurements, even in challenging underwater conditions [6,36]. They offer superior 3D data resolution in texture-limited underwater scenes, contributing valuable point cloud data for SLAM systems. LiDAR aids in precise seafloor mapping [37], creating detailed 3D models, and detecting objects to enhance navigational maps. Laser SLAM, widely used for mapping and navigation, employs 2D or 3D LiDAR sensors. 2D LiDAR provides real-time obstacle scanning in a single plane, while 3D LiDAR offers high accuracy, comprehensive coverage, and 3D imaging for dynamic and static environments. The critical difference is that 2D LiDAR lacks height information and imaging capabilities, whereas 3D LiDAR generates three-dimensional real-time images and reconstructs spatial data [16].

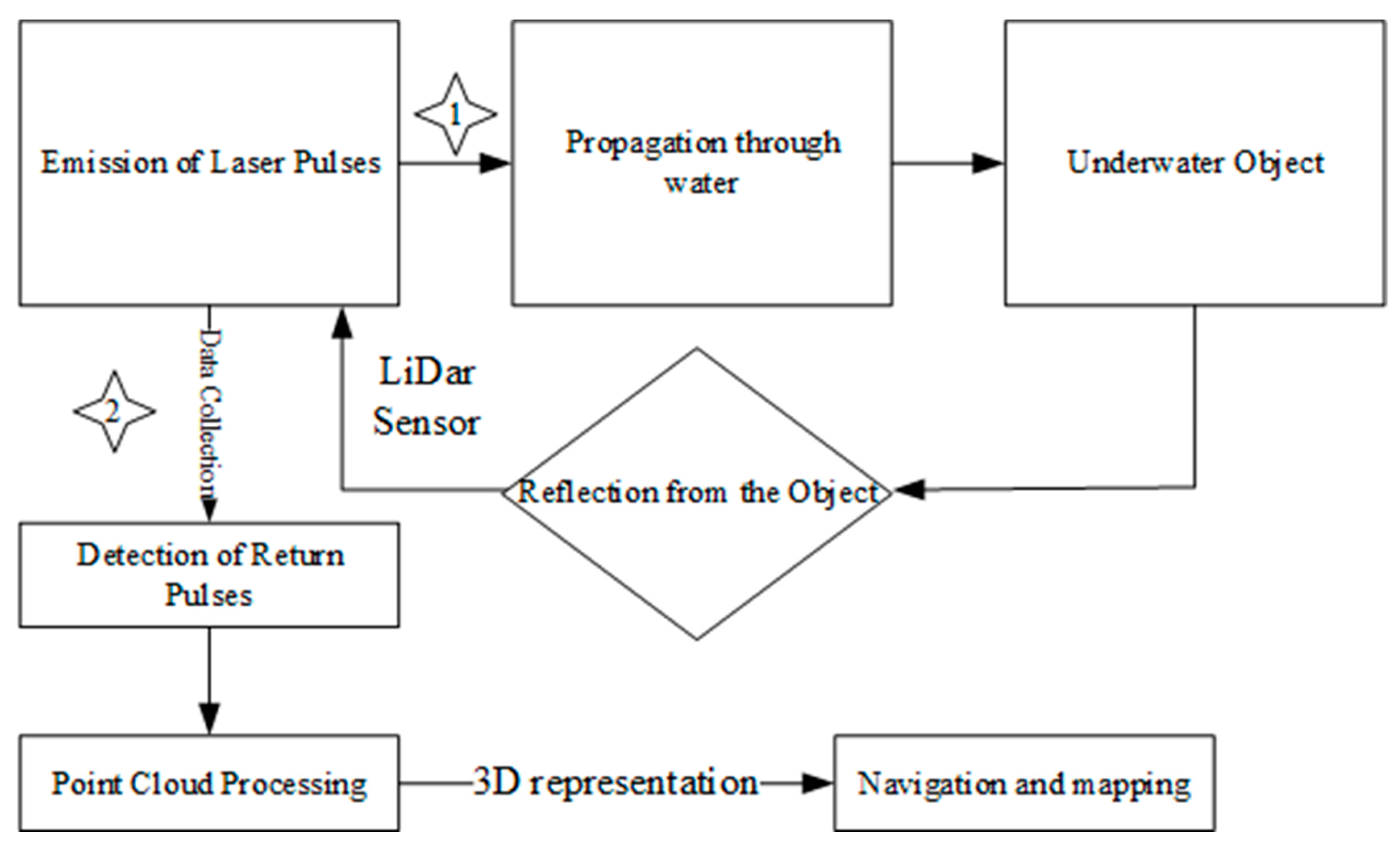

Figure 7. Process of LiDAR in Underwater navigation and mapping.

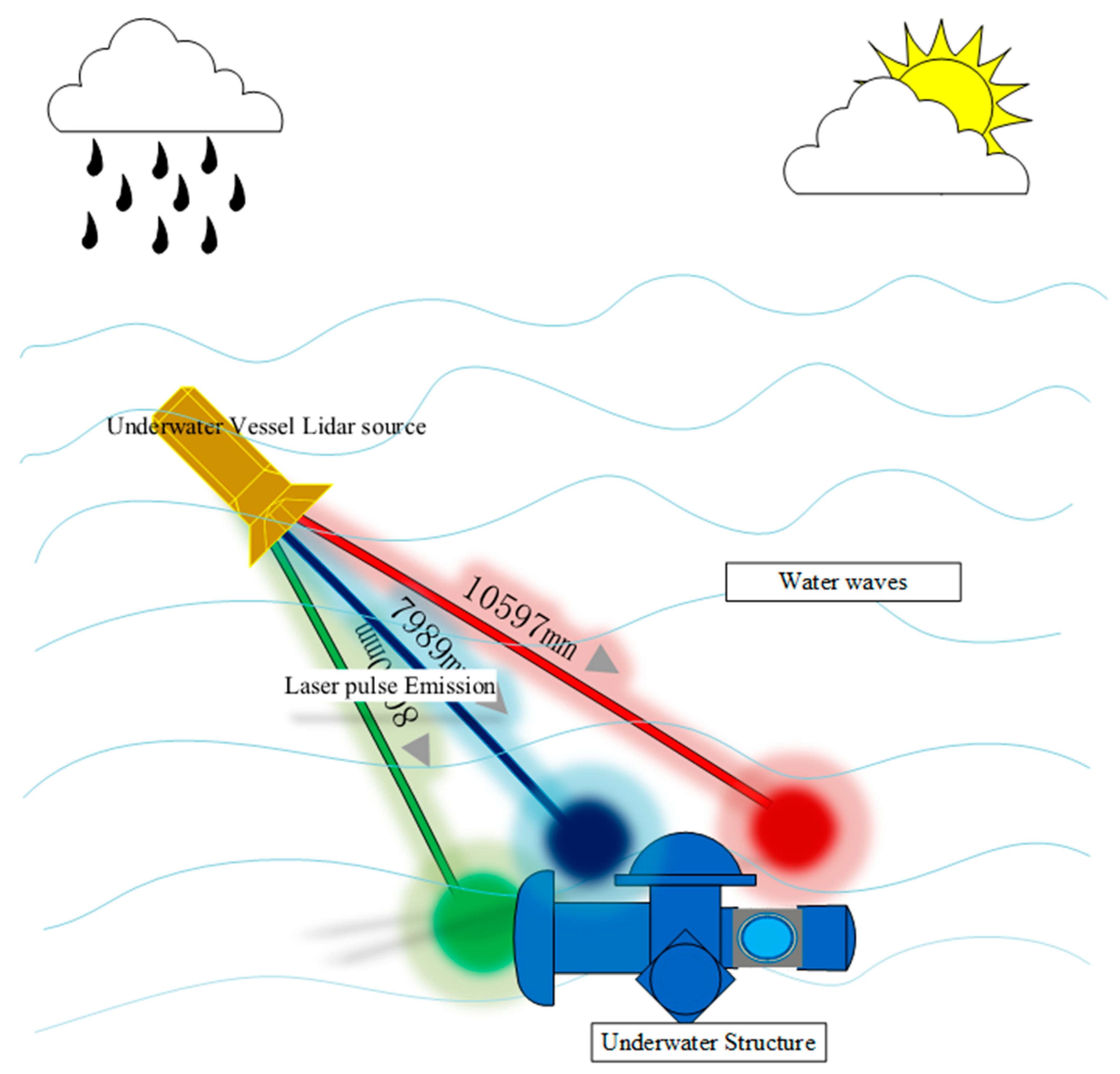

Figure 8. LiDAR in underwater navigation.

Figure 9. Exteroceptive Sensors Overview.

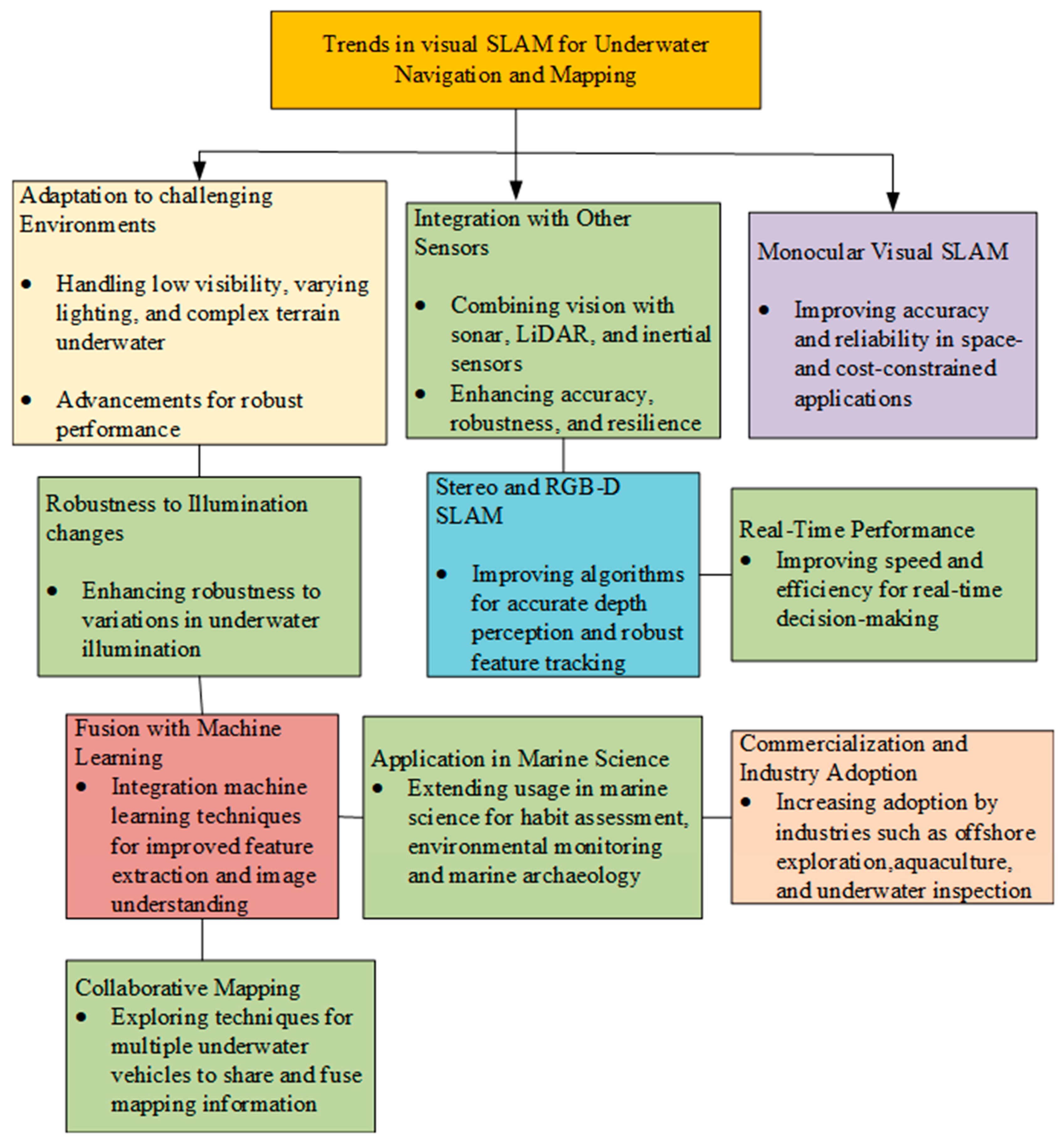

iii) Trends of visual SLAM for underwater navigation and mapping

Current developments include integrating visual and inertial sensors to enhance accuracy, applying deep learning for robust feature extraction in challenging environments, increasing use of 3D vision methodologies for richer depth information, and incorporating adaptive algorithms capable of adjusting to varying underwater conditions. The integration of SLAM in Autonomous Underwater Vehicles (AUVs) for autonomous navigation, the fusion of visual SLAM with underwater LiDAR for comprehensive mapping, and the rising adoption of open-source SLAM frameworks are prominent trends. Additionally, real-time processing, edge computing, collaborative SLAM strategies, and the emergence of standardized benchmarks and datasets contribute to the ongoing efforts to advance the capabilities of underwater SLAM systems, shaping the landscape of autonomous underwater exploration and research.

Figure 10. Trends in visual SLAM for UUV.

iv) Objective and contribution of our paper

The primary objective of our paper is to investigate the innovative aspects of underwater Simultaneous Localization and Mapping (SLAM) or odometry, focusing on integrating multiple sensors and applying deep learning techniques. Specifically, we aim to identify and assess the advancements in SLAM and odometry methodologies that result from integrating various sensors, emphasizing the synergy among them to enhance accuracy and robustness. Additionally, our research delves into the role of deep learning in these underwater systems, evaluating how it contributes to feature extraction, mapping, and navigation in challenging underwater environments. By highlighting these novel approaches, we seek to contribute to the evolving field of underwater robotics and exploration, providing valuable insights for researchers and practitioners working on underwater SLAM and odometry systems.

The paper is organized as follows. Section 1 gives a comprehensive description of the performance of standard visual SLAM algorithms in underwater applications. Section 2 summarises papers that involve multiple sensor integration in SLAM and odometry, highlighting their strengths and weaknesses. Shifting the focus to deep learning techniques, Section 3 summarises the applications of deep learning in underwater image processing, navigation, and perception, with a detailed analysis of their performance and future potential. Section 4 summarises papers involving deep learning-based underwater SLAM or odometry navigation, presenting their respective strengths and weaknesses. Section 5 compiles a list of commonly used datasets for evaluating underwater SLAM algorithms. Lastly, Section 6 presents our predicted development directions based on the content above.

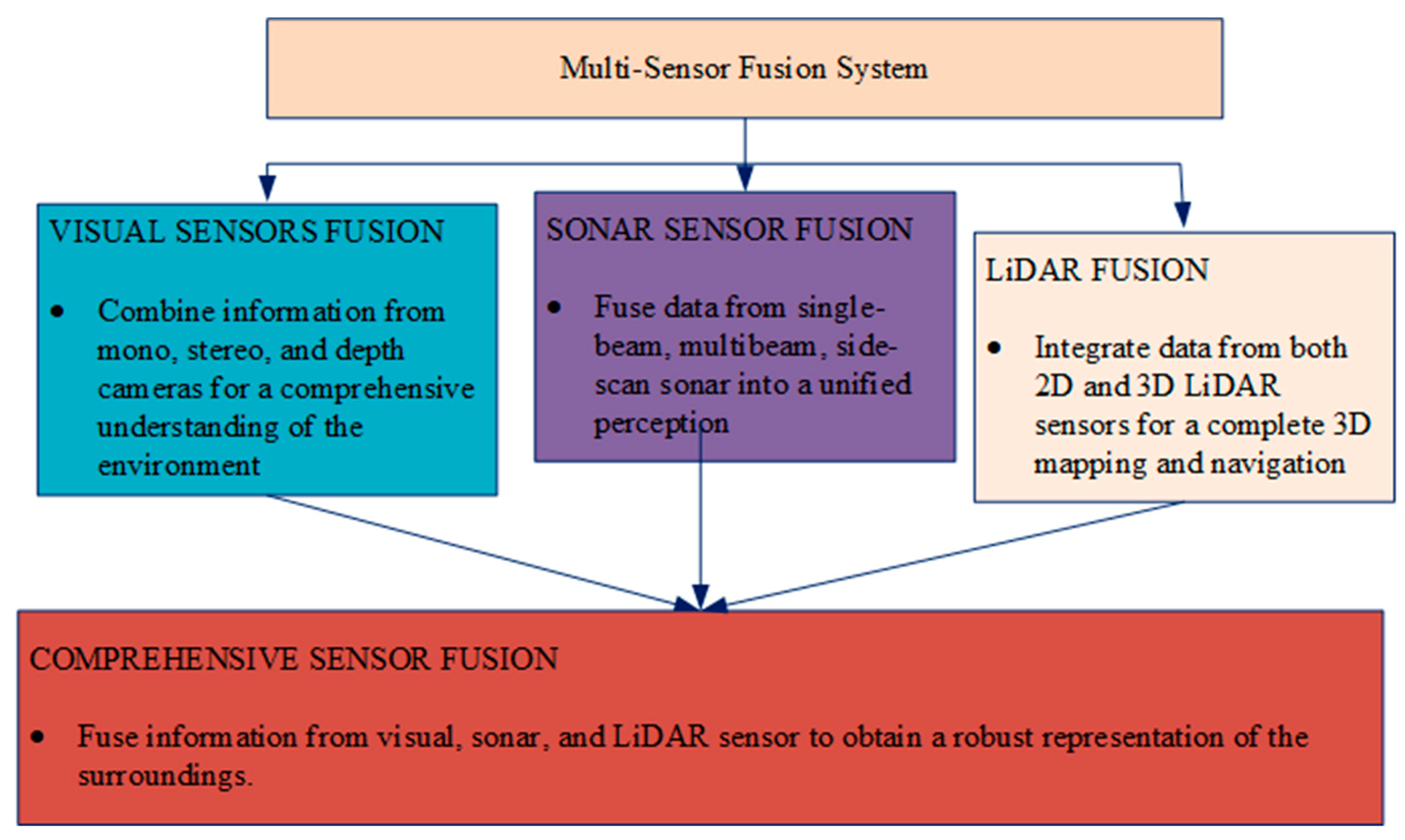

III. Multiple Sensor Integration in SLAM/Odometry: Strengths and Weaknesses

Sensor fusion techniques for improving the accuracy and resilience of underwater SLAM systems encompass vision-inertial SLAM, laser-vision SLAM, and multisensor SLAM [37,38]. Multisensor fusion is classified into data layer, feature layer, and decision layer fusion [39,40].

To enhance the accuracy and robustness of underwater SLAM systems, researchers often combine multiple sensors through sensor fusion techniques, which yields a more precise and resilient underwater SLAM. Standard fusion methods include vision-inertial SLAM (utilizing vision and IMU), laser-vision SLAM (combining laser and vision), and multisensor SLAM (incorporating sonar, IMU, vision, etc.). Multisensor fusion can be categorized by fusion level into data, feature, and decision layer fusion. By coupling complexity, it can further be divided into loosely coupled, tightly coupled, and ultra-tightly coupled systems [28]. Visual SLAM algorithms have advanced significantly but struggle with low-quality images caused by rapid camera movements and varying light conditions. IMU-assisted sensors offer improved angular velocity and local position accuracy compared to odometers. The two complement each other: the IMU maintains motion estimates during fast camera movements, when images are degraded, and the camera corrects the IMU's cumulative errors during slower movements. This combination enhances SLAM performance and is cost-effective. Visual-inertial fusion methods can be loosely coupled, in which IMU and camera motions are estimated separately and then fused, or tightly coupled, which involves jointly constructing the motion and observation equations before state estimation [41].
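As a minimal sketch of the loosely coupled idea, two independently produced estimates of the same scalar state (for example, a position from visual odometry and one from IMU integration) can be fused by inverse-variance weighting, which is the scalar form of a Kalman update. The function name and the numbers in the usage note are hypothetical and do not reproduce any cited system.

```python
def fuse_estimates(est_a: float, var_a: float, est_b: float, var_b: float):
    """Fuse two independent scalar estimates by inverse-variance weighting.

    Returns the fused estimate and its variance. This is the scalar Kalman
    update with estimate A as the prior and estimate B as the measurement.
    """
    gain = var_a / (var_a + var_b)          # trust B more when A is uncertain
    fused = est_a + gain * (est_b - est_a)  # move A toward B by the gain
    fused_var = (1.0 - gain) * var_a        # never larger than either input
    return fused, fused_var
```

For instance, fusing an estimate of 10.0 m (variance 4.0) with one of 12.0 m (variance 1.0) gives a result closer to the more certain estimate, with a variance below both inputs. A tightly coupled design would instead place the raw camera and IMU measurement models in a single estimator rather than fusing two finished estimates.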

A novel crack assessment technique combines multisensor fusion SLAM and image super-resolution [42]. A modality prediction approach is explored using LiDAR point cloud prediction from 3D acoustic ultrasonic sensor data [39]. Fusion SLAM algorithms also combine 2D LiDAR and RGB-D SLAM, offering a comprehensive visual representation [43]. Considerations in sensor fusion systems include the fusion objective and sensor constraints [44]. An innovative self-localization system uses low-cost sensors and an Extended Kalman Filter [74]. Multi-beam sonar is employed for underwater landmark detection, and an AUV utilizes tightly coupled LiDAR-visual-inertial SLAM [33].

Figure 11. A multisensor fusion overview.

Visual SLAM faces challenges with low-quality images, while IMU-assisted sensors improve accuracy. Visual-inertial fusion methods can be loosely or tightly coupled [41]. Incorporating modalities such as sonar or radar poses a challenge since existing methods are often specialized for conventional sensors.

Da Bin Jeon et al., in "Sensor Fusion for Underwater Vehicle Navigation Compensating Misalignment Using Lie Theory", present a Lie theory approach for unmanned underwater vehicle navigation that addresses misalignment issues through Lie algebra operations. It enhances estimation accuracy, stability, and convergence of covariance. However, potential weaknesses may include challenges in real-world implementation, computational complexity, and the need for thorough validation in diverse operational scenarios. Ongoing research aims to refine and validate the method for broader applicability and to address any identified limitations.

SVIn2 is an advanced underwater SLAM system that integrates diverse sensors (sonar, visual, inertial, and water-pressure information) for robust performance in challenging environments. The real-time framework overcomes traditional weaknesses, demonstrating exceptional accuracy and reliability on benchmark datasets and in real-world scenarios. However, potential sensor dependencies and generalization across diverse underwater settings remain considerations for further exploration [45].

Chunying Li et al. introduce an innovative Multi-Source Information Fusion (MSIF) model for Spherical Underwater Robots (SURs), enhancing precision and addressing critical issues in Autonomous Underwater Vehicles (AUVs). However, reliance on low-cost sensors may impact accuracy, and performance could vary with environmental conditions. Further refinement is needed for robustness in diverse scenarios and adaptability to varying sensor qualities [46].

Researchers proposed a cost-efficient and precise solution for underwater pipeline inspection using an AUV. Successfully navigating the pipeline with minimal sensors, the system exhibits robust performance under varying current velocities, incorporating fuzzy logic for enhanced stability. The ROS/Gazebo-based simulation environment facilitates efficient development and testing. However, visibility variations and obstacles remain challenging, suggesting potential enhancements through expanding the sensor fusion framework and integrating adaptive parameters in image processing. Future research directions include addressing dynamic surface wave effects through real-world experiments, with consideration given to a down-scaled AUV for pool testing [40].

Di Wang et al. introduce a multisensor fusion method for underwater integrated navigation systems, focusing on SINS/DVL/USBL. It addresses frame system inconsistencies due to velocity errors, demonstrating enhanced accuracy, especially in scenarios with long-distance USBL signal challenges. However, further validation in diverse underwater environments is needed to establish its broader applicability and reliability [47].

V. Deep Learning-Based Underwater SLAM and Odometry Navigation: Strengths and Weaknesses

Jayashree Rajesh et al. propose a system to recognize and classify underwater life and objects in underwater images. It provides a new way to identify and categorize many classes, broadening the model's uses. The study uses statistical, hardware, and software solutions, focusing on deep learning approaches like YOLOv4 and CNNs to accurately classify underwater objects [48]. Anwar Khan et al. review recent underwater target detection algorithms for wireless sensor networks. The review categorizes and assesses these algorithms, discussing their applications, strengths, and weaknesses, and provides a comparative analysis and trend evaluation over the last decade [49].

Ali Khandouzi et al. combine deep learning and classical image processing to enhance underwater images. Their three-module framework comprises a lightweight colour retrieval network, an updated histogram equalization for contrast improvement, and an attention module for synergistic integration. The approach addresses underwater image problems with minimal computational load. However, potential drawbacks concern image augmentation, dataset-specific effectiveness, algorithm complexity, generalization across various contexts, and overfitting to specific conditions [50]. According to Laura A. Martinho et al. (2024), a CNN combined with intensity changes improves underwater image quality in two steps. The approach performs well on various datasets, including a new Amazon dataset. Its strengths are effective deep learning-based enhancement and dataset development; its weaknesses are a lack of extensive comparative insights and of explicit future research objectives [51]. The Combining Attention and Brightness Adjustment Network (CABA-Net), a deep learning model for underwater image restoration, mitigates colour cast, low brightness, and low contrast. Ablation studies confirm the contribution of individual network components, and the approach adapts to underwater settings, boosting image contrast and color in line with human visual system properties. However, the study lacks discussion of constraints, computing efficiency, and broader applicability [52].

A unique integrated system for underwater object and temporal signal detection employing 3D integral imaging in degraded settings is proposed. Deep learning improves 2D imaging performance, and 3D integral imaging improves image reconstruction and segmentation, enhancing detection accuracy. The method's weaknesses include unexamined computational complexity and color distortion. Future directions include research on optimal configurations and on resolving issues in increasingly complicated underwater environments [53]. Researchers use convolutional neural networks (CNNs) and recurrent CNNs to estimate ego-motion from the forward-looking sonar (FLS) of autonomous underwater robots. Both models can learn from synthetic and field data, but the recurrent model predicts synthetic data better. According to the study, FLS sensor configurations and imaged terrains need further study, and larger field datasets and more diverse sensor features are advised to improve model performance [54]. Yelena Randall et al. present unique forward-looking underwater stereo-vision and visual-inertial datasets for testing autonomous systems and algorithms in challenging underwater conditions. The datasets cover various scenarios, providing synchronized images, ground truth depth maps, calibrations, and known object measurements [55].

An autonomous underwater vehicle (AUV) with an intelligence system recognizes and tracks underwater objects. Semi-Global Block Matching (SGBM) estimates depth maps, a Deep Q-Network (DQN) localizes objects in the disparity map, and a Faster Region-based Convolutional Neural Network (Faster R-CNN) detects objects. Notable findings include DQN optimization of SGBM parameters, the use of 3D point cloud images to calculate object information, the convergence of object 3D information with increasing learning episodes, and the effect of wave height on object size estimation and AUV maneuvering performance [56]. A new neural network uses an autoencoder architecture and SIFT-based descriptors to detect underwater visual loops quickly and reliably. Its unsupervised training method outperforms others, making it suited to AUVs with limited computational resources [57].

Zhichao Xin et al. developed ULL-SLAM, an underwater visual simultaneous localization and mapping (VSLAM) system that handles low-light problems. The model's end-to-end design includes a low-light enhancement branch with a non-reference loss function, allowing image enhancement without paired low-light data. A self-supervised feature point detector and descriptor extraction branch improves matching without pseudo-ground truth. The method aims to ensure trajectory continuity, stability, and accurate state estimation in demanding underwater environments. The paper reports better feature point extraction in low-light conditions but does not examine limitations, computing efficiency, or the approach's generalizability to varied underwater exploration settings [58].

Researchers suggest computer vision-based AUV position estimation to alleviate navigation errors. The method uses deep learning and computer vision to match real-time environmental images to a Digital Surface Model map. The approach lowers positioning errors (30–60 m) and works with incomplete land representations. It can extract land features accurately, reduce dead reckoning errors, and adapt to difficult sea conditions. The technique could enable fully autonomous AUV navigation in GNSS-denied conditions, improving low-cost AUV technology [59]. Can Wang et al. advance AUV navigation with a comprehensive dataset from a controllable AUV equipped with high-precision fiber-optic inertial sensors, a Doppler Velocity Log (DVL), and depth sensors. The dataset includes numerous natural scenarios from multiple locations and times, both beneath and on the surface, to address the lack of publicly accessible data for training machine learning algorithms in underwater navigation. Rigorous testing and algorithmic comparisons of real and calculated positions demonstrate the dataset's usefulness. The study does not discuss the dataset's limitations and use cases, and its influence on autonomous exploration in limited underwater environments needs additional study [60].
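One of the enhancement pipelines surveyed above builds on histogram equalization for contrast improvement. The sketch below shows only the classical global form on a flat list of 8-bit intensities, as a point of reference; it is not the updated variant from [50], and the function name is hypothetical.

```python
def equalize_histogram(pixels, levels=256):
    """Classical global histogram equalization for integer intensities."""
    if not pixels:
        return []
    # Count how often each intensity occurs.
    histogram = [0] * levels
    for value in pixels:
        histogram[value] += 1
    # Cumulative distribution: number of pixels at or below each intensity.
    cdf = []
    running = 0
    for count in histogram:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:
        return list(pixels)  # flat image: nothing to stretch
    scale = (levels - 1) / (total - cdf_min)
    # Map each intensity through the normalised CDF to spread the range.
    return [round((cdf[value] - cdf_min) * scale) for value in pixels]
```

On a low-contrast input whose values occupy a narrow band, the output spans the full intensity range while preserving the ordering of intensities, which is the contrast stretch that turbid underwater images typically need.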

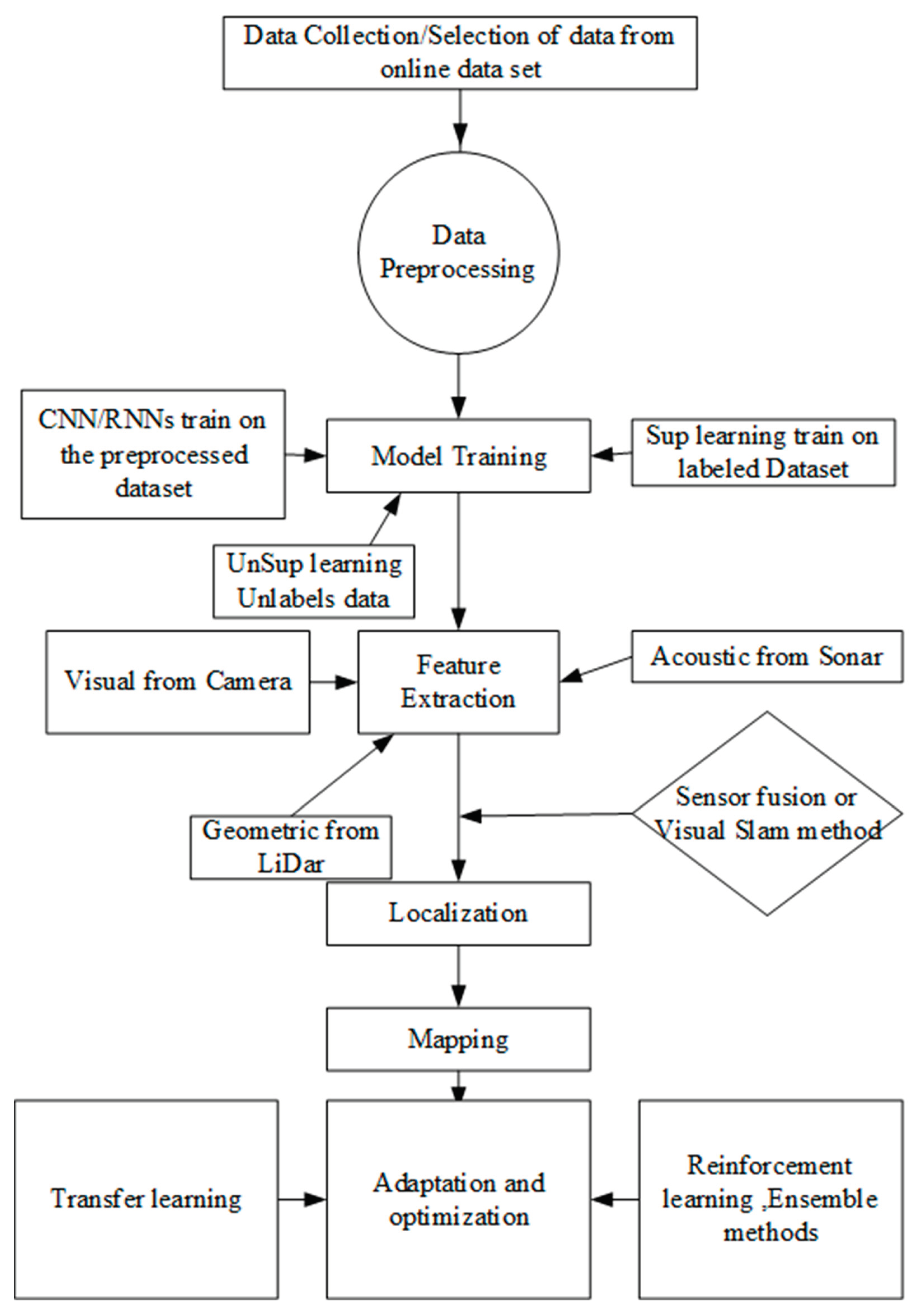

Figure 12. Process of deep learning in underwater SLAM.

Semantic SLAM, an improved visual-inertial odometry system, uses semantic features from an RGB-D sensor to improve camera localization in Visual Simultaneous Localization and Mapping (VSLAM). It excels in indoor conditions with little camera input and is scene-agnostic. A convolutional long short-term memory (ConvLSTM) network refines the semantic map, improving pose estimation by 17% over VSLAM. The semantic map provides interpretable information for robot navigation tasks, including path planning and obstacle avoidance. The public code shows that semantic features in SLAM systems are feasible [65]. SplaTAM, a pioneering SLAM system for a single unposed monocular RGB-D camera, uses a 3D Gaussian Splatting radiance field for map representation. It suggests ways for more advanced and efficient SLAM systems to analyze scenes [61]. GO-SLAM, a real-time dense visual SLAM system, optimizes camera poses and 3D reconstruction using neural implicit representations. It outperforms state-of-the-art algorithms in robust pose estimation, loop closing, and online full bundle adjustment. The versatile approach supports monocular, stereo, and RGB-D inputs and dynamically updates the continuous surface representation for global consistency. It excels on varied datasets of long monocular trajectories without depth information [67]. UncLe-SLAM, an uncertainty learning method for dense neural SLAM, estimates pixel-wise depth uncertainties without ground truth data, improving mapping and tracking accuracy. The method outperforms alternatives on many datasets, demonstrating its flexibility with multisensor input [62]. Researchers introduced NICE-SLAM, a dense visual SLAM system that improves scalability, efficiency, and resilience by combining neural implicit representations with a hierarchical grid-based scene representation. The method enhances mapping detail, tracking accuracy, and speed with less processing. NICE-SLAM outperforms other neural implicit SLAM methods in mapping and tracking on challenging datasets without over-smoothing [63]. Point-SLAM, a dense neural SLAM system for monocular RGB-D input, uses a dynamically generated neural point cloud to adapt density to the input information. It performs better than existing tracking, mapping, and rendering algorithms on numerous datasets, improving resource utilization and 3D scene representation accuracy [64].

Table 2. Deep Learning-Based Underwater SLAM Strengths and Weaknesses.

| Methods | Strength | Weaknesses/limitations | Framework | Code available | Year |
|---|---|---|---|---|---|
| Semantic SLAM | The SemanticSLAM system introduces innovation with scene-agnostic functionality across diverse environments, constructing a semantic map for interpretability and utilizing a ConvLSTM network to correct errors and enhance pose estimation. | The paper exhibits limitations, including a restricted performance evaluation, sparse details on system implementation, unclear generalization to outdoor environments, and a lack of concrete plans to address identified limitations in future work. | PyTorch | Yes | 2024 |
| SplaTAM | SplaTAM demonstrates remarkable performance, achieving up to 2× state-of-the-art results in camera pose estimation and scene reconstruction, leveraging an innovative 3D Gaussian Splatting representation for fast rendering, optimization, and explicit spatial awareness in a single unposed monocular RGB-D camera setup with structured map expansion capabilities. | The paper lacks comprehensive insights into the system's generalization across diverse environments, fails to address the computational requirements for real-time applications thoroughly, relies on assumptions about the universal suitability of Gaussian Splatting, and would benefit from a more in-depth comparative analysis with existing SLAM methods to enhance credibility. | PyTorch | Yes | 2023 |
| GO-SLAM | GO-SLAM introduces global optimization for camera poses and 3D reconstruction, ensuring improved tracking and accuracy across versatile inputs, including monocular, stereo, and RGB-D setups, while maintaining real-time performance for dynamic environments and continuous adaptation for global consistency. | GO-SLAM exhibits potential concerns, including the risk of error accumulation over time in complex scenarios, challenges on resource-constrained devices due to computational demands, potential hindrance in understanding and implementation due to algorithmic complexity, and the need for further investigation into its performance under highly variable real-world conditions. | PyTorch | Yes | 2023 |
| UncLe-SLAM | Innovative uncertainty learning for dense neural SLAM, demonstrated performance improvement, adaptability to multisensor inputs. | Limited depth sensor comparison, reliance on self-supervised training. | PyTorch | | 2023 |
| NICE-SLAM | NICE-SLAM excels with a hierarchical scene representation, ensuring detailed reconstruction and scalability. It achieves efficiency, competitive mapping, and tracking quality, overcoming over-smoothing challenges. The model adeptly fills small holes, extrapolates scene geometry, and benefits from geometric priors for enhanced reconstruction in large indoor scenes. | The method's predictive capability is confined to the scale of the coarse representation, and loop closures are not currently incorporated. Exploring loop closures presents an intriguing avenue for future research. While traditional methods lack certain features, a gap remains to be bridged with learning-based approaches. | Anaconda | Yes | 2022 |

Table 3.

Commonly used datasets for evaluating underwater SLAM.

| Dataset | Description | Source | Related work |
|---|---|---|---|
| MARAS Dataset | Collected in the Mediterranean Sea, providing acoustic, visual, and inertial sensing data. | MARAS Dataset | MARAS: A Dataset for Marine Robot Assistance Systems |
| UW-ETH-ASL Dataset | Captured in various environments, this dataset from ETH Zurich includes RGB-D data and ground truth for benchmarking visual and inertial SLAM algorithms. | UW-ETH-ASL Dataset | NICE-SLAM |
| SAUVC Dataset | The Singapore AUV Challenge (SAUVC) dataset, collected in swimming pool conditions, includes visual and inertial sensor data. | SAUVC Dataset | SLAM-Based Navigation for an AUV in Indoor Pools |
| URB Dataset | Developed by the UUST (Underwater Robotics and Imaging) group, URB provides datasets for visual and acoustic SLAM under challenging conditions. | URB Dataset | Multi-Modal Underwater Simultaneous Localization and Mapping |
| LIU-UW Dataset | The Linköping University Underwater (LIU-UW) dataset includes data from various underwater environments, providing visual and inertial measurements. | LIU-UW Dataset | GraphSLAM for Underwater 3D Reconstruction with Stereo Camera and Inertial Measurement Unit |
| UW3D Dataset | A benchmark dataset for underwater 3D reconstruction, UW3D includes RGB-D images and is designed to evaluate SLAM algorithms. | UW3D Dataset | An Underwater Stereo Camera System for 3D Reconstruction and Object Identification |
| ScanNet | A dataset of annotated 3D reconstructions of indoor scenes, used for research in scene understanding and robotics. | ScanNet Dataset | GO-SLAM, NICE-SLAM |
| Replica | A dataset of photorealistic 3D reconstructions of indoor scenes, commonly used for research in computer vision and virtual reality. | Replica dataset | UncLe-SLAM, NICE-SLAM |
| TUM-RGBD | A dataset of synchronized RGB and depth images captured in indoor scenes, often used for research on visual SLAM, 3D reconstruction, and scene understanding. | | Point-SLAM |

VI. Advantage of Deep Learning Relative to the Conventional Method

Deep learning, a transformative paradigm in artificial intelligence, brings several advantages to underwater Simultaneous Localization and Mapping (SLAM) navigation compared to conventional methods. One significant strength is its ability to comprehend complex underwater scenes, as neural networks excel at discerning intricate patterns within underwater data, providing a nuanced understanding of the environment, crucial for navigating challenging conditions characterized by low visibility or uneven terrain [

65,

66]. Matias Valdenegro-Toro introduces a CNN-based approach for accurate sonar image matching in AUV applications that outperforms traditional methods. The study anticipates further improvements with larger, more diverse datasets. Despite its constraints, the proposed method holds promise for enhancing AUV perception, and future work aims to develop unsupervised learning of sonar image similarity functions [

67]. Researchers introduce an underwater loop-closure detection method using an unsupervised UVAE network, achieving a 92.31% recall rate in dynamic underwater scenarios. It addresses challenges like viewpoint changes, textureless images, and fast-moving objects. The system includes semantic object segmentation and an image description scheme for efficient information access. Real-world testing demonstrates robustness and real-time performance. Future work aims to enhance accuracy in complex underwater environments and explore decentralized visual SLAM for multiple AUVs in larger-scale scenarios [

68]. Bryan Pedraza and Dimah Dera present a Bayesian Advantage Actor-Critic (A2C) reinforcement learning approach for robust simultaneous localization and mapping (SLAM) in noisy environments. Leveraging Bayesian inference, the model generates robot actions while quantifying uncertainty. The proposed framework has broad applications in underwater robots, biomedical devices, micro-robots, and drones, emphasizing its adaptability and reliability in uncertain environments [

69]. Another study assesses visual odometry (VO) in challenging underwater conditions, comparing classical and deep learning methods. Traditional systems struggle with initialization and tracking, while deep learning architectures exhibit superior performance, providing continuous pose estimation in complex scenarios. The study emphasizes the potential of data-driven approaches for robust underwater robot navigation [

70]. Zhengyu Xing et al. introduce an improved underwater image enhancement model based on ShallowUWnet, utilizing convolutional blocks, batch normalization, and LeakyReLU activation. The model, which incorporates several loss functions, outperforms advanced methods on evaluation metrics and generalizes well. Practical testing on engineering cases highlights its effectiveness, offering a faster alternative to deeper neural network methods for underwater image enhancement [

71]. In the dynamic realm of underwater navigation, deep learning's holistic approach stands out. Traditional SLAM systems often involve separate modules for localization and mapping, requiring intricate integration. Deep learning models employ end-to-end learning, enabling the system to grasp its location and construct a map simultaneously, simplifying the navigation process for more efficient underwater exploration [

72,

73]. Another advantage is the flexibility of deep learning in handling different sensors commonly used in underwater navigation, such as sonar, LiDAR, and cameras. Deep learning models can seamlessly integrate information from these diverse sensors, learning to interpret varied data sources coherently. This adaptability contrasts with traditional SLAM systems, which may require complex calibration and synchronization processes when dealing with multiple sensors [

74,

75]. Because neural networks can model nonlinear relationships and adapt to dynamic changes, deep learning models are well suited to unpredictable underwater scenarios, where traditional methods may struggle without sophisticated filtering techniques [

76]. Transfer learning, a key feature of deep learning, introduces another layer of advantage. Pre-trained models can be adapted for underwater navigation, where obtaining large labeled datasets can be challenging. This capability significantly accelerates the training process, allowing for quicker deployment of models in real-world underwater exploration scenarios [

77]. Moreover, the ability to effectively fuse information from different sensors is a distinctive strength of deep learning [

74]. Deep learning models can harmoniously integrate these disparate data sources in underwater environments, where a combination of sonar, LiDAR, and optical sensors is common. Traditional SLAM systems may face challenges in achieving such seamless integration, requiring intricate adjustments and coordination. While acknowledging these advantages, it's essential to consider practical factors such as computational requirements and interpretability. Deep learning's computational demands can be significant, and the 'black-box' nature of neural networks may raise interpretability concerns. Nevertheless, the suite of advantages presented by deep learning positions it as a transformative force in advancing the capabilities of underwater SLAM navigation, offering a promising avenue for further exploration and research in this dynamic field. Integrating Deep Learning methods into underwater navigation represents a revolutionary stride in enhancing the capabilities of Unmanned Underwater Vehicles (UUVs). Under the umbrella of Artificial Intelligence (AI), these methods enable UUVs to delve deeper into the intricacies of underwater environments through sophisticated data processing. Deep Learning-based SLAM algorithms [

78] empower UUVs with advanced cognitive abilities to make real-time decisions, adapt to dynamic underwater conditions, and navigate with unparalleled accuracy [

39]. Deep Learning algorithms excel at extracting intricate patterns and representations from sensor data, encompassing visual, inertial, and acoustic inputs. This enables UUVs to construct highly detailed maps of their surroundings and concurrently estimate their precise positions within the underwater landscape. The adaptive learning capabilities of Deep Learning methods empower UUVs to continually refine their navigation strategies based on accumulated experiences [

8]. The fusion of Deep Learning methodologies with UUVs elevates the accuracy and reliability of underwater operations. It propels these vehicles to new frontiers of exploration and research in the marine domain [

4]. It positions UUVs as intelligent entities capable of autonomously navigating through challenging underwater terrains, leveraging the power of advanced neural network architectures for unparalleled adaptability and performance. Researchers have demonstrated the application of deep neural networks [

78] to predict interframe poses, replacing traditional visual odometry. A keypoint rejection system is used to supervise neural network training, improving the reliability of visual ego-motion estimation by filtering out unsuitable keypoints [

43]. Dr J. Priscilla Sasi et al. explore Convolutional Neural Networks (CNNs) in autonomous underwater robot navigation, emphasizing their effectiveness in overcoming challenges like low visibility and object detection. Case studies showcase CNNs' potential for transforming underwater robotics, highlighting the need for ongoing research to enhance adaptability in challenging environments [

79]. Olaya Álvarez-Tuñón et al. survey the landscape of visual simultaneous localization and mapping (SLAM) algorithms in geometry-based and learning-based frameworks. Their work introduces a comprehensive SLAM pipeline formulation, categorizes implementations, and evaluates their performance under varying environmental challenges. The study emphasizes the shift towards end-to-end pipelines driven by deep learning, addressing efficiency limitations and the need for generalizability in diverse deployment conditions. The findings highlight the potential of merging geometry and learning-based approaches for future advancements in visual SLAM [

80]. Self-organizing maps (SOMs), another neural network approach, are employed for multi-robot SLAM, offering unsupervised training capabilities [

17]. A refined super-resolution reconstruction method enhances and recovers underwater images by decomposing RGB attenuation to calculate transmission maps and improve image quality [

78]. Together, these methods illustrate how deep learning is being applied to underwater SLAM. Below are some practical examples implemented in the domain, starting with a proposed deep-learning sensor fusion algorithm. The research introduces a novel FIDCE algorithm for precise biofouling identification in underwater images alongside the MFONet model for pixel-level segmentation. FIDCE enhances image quality and accurately identifies biofouling, which is vital for ship maintenance. MFONet outperforms classical algorithms, offering superior speed and accuracy, enabling automated cleaning and maintenance planning for underwater vehicles [

81]. Laura A. Martinho et al. propose a learning-based approach for enhancing the quality of underwater images. It involves two main steps. First, a Convolutional Neural Network (CNN) regression model learns optimal parameters for enhancing different types of underwater images. Second, intensity transformation techniques are applied to raw underwater images to compensate for the loss of visual information [

51].
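The two-step scheme described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the per-channel gains and the gamma value stand in for the parameters that a CNN regression model would predict, and the specific transformation (gain followed by gamma correction) is an assumption chosen for simplicity.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B), each channel in [0, 1]


def enhance_pixel(pixel: Pixel, gains: Sequence[float], gamma: float) -> Pixel:
    """Apply a per-channel gain, clip to [0, 1], then gamma-correct."""
    out = []
    for value, gain in zip(pixel, gains):
        scaled = min(max(value * gain, 0.0), 1.0)
        out.append(scaled ** gamma)
    return (out[0], out[1], out[2])


def enhance_image(
    image: List[List[Pixel]], gains: Sequence[float], gamma: float
) -> List[List[Pixel]]:
    """Apply the same intensity transformation to every pixel."""
    return [[enhance_pixel(p, gains, gamma) for p in row] for row in image]


# Hypothetical parameters: in the described approach these would come from
# the CNN regression model rather than being fixed by hand.
PREDICTED_GAINS = (1.8, 1.1, 1.0)  # boost red, which water absorbs most
PREDICTED_GAMMA = 0.8              # gamma < 1 brightens dark regions

raw = [[(0.10, 0.40, 0.50), (0.20, 0.50, 0.60)]]  # a bluish 1x2 test image
enhanced = enhance_image(raw, PREDICTED_GAINS, PREDICTED_GAMMA)
print(enhanced)
```

Keeping the transformation separate from the parameter prediction means the same enhancement code can be reused with different parameter sets, one per type of underwater image.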

VII. Predictions about Future Development Directions Based on the Above Content

This study establishes a strong foundation for advancing Unmanned Underwater Vehicle (UUV) navigation, focusing on refining AI-SLAM algorithms, particularly those driven by deep learning. Future research endeavors will involve optimizing multiple sensor fusion techniques, incorporating technologies like multi-beam sonar, stereo cameras, LiDAR, IMU (INS), and methods such as SBL/USBL and DVL to enhance UUV navigation accuracy in complex underwater environments. Exploring the integration of emerging technologies, such as machine learning and advanced computer vision, holds promise for developing even more robust UUV navigation systems. Scalability for different UUV types and mission requirements is a crucial consideration, and collaborative efforts among researchers, industry experts, and policymakers are essential for standardizing and implementing these advancements in practical applications. As technology evolves, the future of underwater navigation and Simultaneous Localization and Mapping (SLAM) is poised for significant growth, driven by deep learning applications. Anticipated developments include refining model architectures, addressing domain adaptation challenges, optimizing algorithms for real-time processing efficiency, expanding application scopes, integrating deep learning with sensor advancements, exploring unsupervised learning methods, and fostering interdisciplinary collaborations. These efforts aim to propel the field towards more precise, adaptable, and robust underwater navigation systems, harnessing the transformative capabilities of deep learning in navigating complex and dynamic underwater environments. Future research may also explore other Deep Reinforcement Learning (DRL) algorithms, such as Deep Deterministic Policy Gradient (DDPG), Soft Actor-Critic (SAC), and Proximal Policy Optimization (PPO), to further optimize 3D model reconstruction from images.