1. Introduction

Augmented reality (AR) uses computers to add perceptual information to the real world, and this technology has had a positive impact on education. Various educational levels, academic subjects, and learning situations can benefit from AR [1]. AR allows learners to interact with artificial 3D objects to enhance their learning. Through the use of 3D synthetic objects, AR can enhance the visual impression of target systems and environments. Students can view 3D objects from a wide range of perspectives to improve their interpretation of them [2].

AR has been enhanced by various technologies, including machine learning (ML), although this combination is still in its infancy. Despite this, it is used for various educational and training purposes, for example, medical education [3]. Following the periods of inference, knowledge, and ML, deep learning (DL) [4,5] represents the next phase in artificial intelligence (AI). DL includes several representative models, among them convolutional neural networks (CNNs). ML and AR are important in medical education and learning [3]. Furthermore, they are used in plant education for precision farming [6]. Surgical education should produce surgeons, clinicians, researchers, and teachers [7,8,9]. Surgical training and education are becoming increasingly computer-based as the field evolves. ML algorithms are also used to classify the states of patients based on their records [10,11]. When the AR module is activated, digital information is displayed first. Based on the data set previously provided to the system [12], an ML algorithm is then used to distinguish the affected tissue from the rest.

In sports education, rock climbing and basketball offer the most promising frameworks for AR development. Incorporating AR into basketball practitioners’ environments and spectators’ viewing experiences may be beneficial. The small area of bordering surfaces and the calibration of fixed holds make rock climbing a technical sport. New AR developments can also enhance baseball and soccer games, for example by displaying ball directions [13]. AR is more effective than traditional media for performing or preparing a task. As a result of the AR experience, students are more likely to transfer their knowledge to the use of actual equipment.

Content learned from AR experiences is remembered more vividly than content learned from non-AR experiences, such as paper or video media, even several weeks after the fact [14]. AI education [15,16] that uses AR can be applied to non-engineering majors, and adult learners can progress effectively with interest and continually adapt to society and technology [17]. AR can be used in the educational system to improve traditional education while reducing long-standing problems. Additionally, it facilitates collaboration between teachers and students. AR offers considerable potential for educational applications. Given ongoing technological advancements, educational domains need to be improved using efficient methods. One study found that AR may engage, trigger, and stimulate students to consider course materials from a variety of angles [18].

The main contributions of this survey can be summarized as follows.

ML techniques in AR applications are discussed concerning several areas of education.

An analysis of related works is presented in detail.

We discuss ML models for AR applications such as support vector machine (SVM), CNN, artificial neural network (ANN), etc.

We provide a detailed analysis of ML models in the context of AR.

We present a set of challenges and possible solutions.

Research gaps and future directions are discussed in several fields of education involving ML-based AR frameworks.

We identify and analyze emerging trends and developments in the use of ML and AR in educational settings.

We provide insights into areas that need more research or improvement.

We offer suggestions to help guide future research and development activities in the sector.

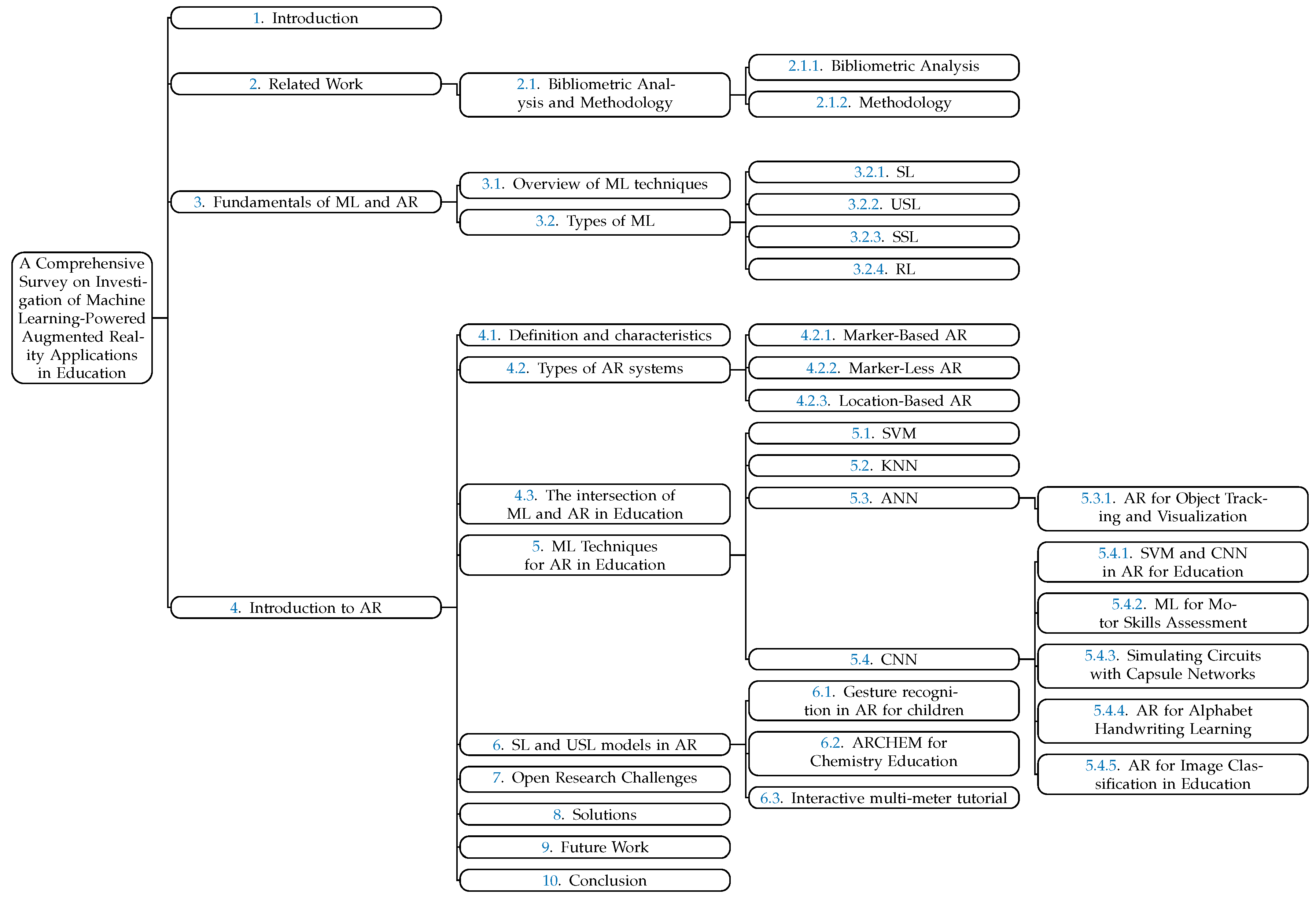

The remainder of this article is organized as follows. Section 2 presents the related work. Section 3 explains the fundamentals of ML and AR, including ML techniques and the types of ML. Section 4 introduces AR and its types and discusses the intersection of ML and AR in education. Section 5 explains ML techniques for AR in education, their types, and their uses. Section 6 presents supervised (SL) and unsupervised (USL) learning models in AR and their applications. Section 7 discusses open research challenges. Section 8 briefly explains solutions, and lastly, Section 9 concludes the survey.

Figure 1 shows the organization of the survey.

Table 1 shows the list of acronyms used in this article.

2. Related Work

Medical education and learning are the most common applications of ML-based AR. In [1], the authors explore which students benefit most from AR systems and analyze the impact of these systems on student learning. However, this study did not focus on ML models for AR in education.

Ren et al. [3] concentrated on CNN, ANN, and SVM in AR, explaining how AR and DL can be employed in healthcare. However, this study did not cover all ML models.

A survey of AR applications in plant education is presented in [6], with a focus on agriculture, particularly livestock and crops. Notably, the discussion in that paper centered on conventional methods rather than ML models.

In [19], the researchers explore the application of AR in education, providing an overview and describing the three generations of AR in education. However, this study did not delve into ML methods.

Khandelwal et al. [12] introduced a surgical training system that combines AR, AI, and ML. Throughout that survey, SVM, KNN, and ANN models are discussed along with their applications.

In the domain of e-learning research, Hierarchical Linear Modeling (HLM) was employed as a multilevel modeling technique [20]. Nonetheless, the authors did not provide a detailed explanation of how ML was used.

In [21], the focus was on AR technologies and their limitations as an educational tool for neurosurgical training. However, the researchers did not elaborate on the use of ML in their survey.

The ongoing clinical applications of AR in education and surgery are reviewed in [22]. However, the researchers did not mention the use of ML models.

In [23], the authors discuss the impact of AR on programming education, the challenges and issues it presents, and how it benefits student learning. However, this study did not address the use of ML.

Real-time data collection, ML-aided processing, and visualization are anticipated goals for using AR technologies in the healthcare sector. The study in [24] focuses on the potential future application of AR in breast surgery education, describing two prospective applications (surgical remote telementoring and impalpable breast cancer localization using AR) as well as the technical requirements to make them viable.

The research in [25] investigates the impact of AR on student attitudes, engagement, and knowledge of mechanical engineering principles. Its contribution is an AR app for mobile devices that aids the comprehension of planar mechanisms by exhibiting models of basic machines. The AR simulation provides a three-dimensional representation of planar mechanisms, as well as a variety of interactions, charts, and calculations. Students used the smartphone app to complete a basic task, and a questionnaire was used to collect their thoughts on using AR in their mechanical engineering lessons. The evaluation of the exercise, as well as the answers to the questionnaire, revealed that the students had a favorable opinion of the use of AR in the classroom. In addition, AR increased their involvement and their grasp of the process components.

"LeARn" [26] is a novel network-based collaborative learning environment that uses AR to transform a real-world surface into a virtual lab. The system helps replace a face-to-face learning environment with an enhanced collaborative setting. A scenario with a virtual chemistry lab is used to showcase the concept. In the demo, any real-world surface is supplemented with the virtual lab equipment used in a chemistry experiment. The instructor hosts the virtual lab, and all students can access it using only their mobile phones or tablets. Each participant can interact with the lab equipment, and the instructor and fellow students can view these interactions in real time. The system enables real-time communication, creating a truly collaborative atmosphere. The resulting solution demonstrates that a sophisticated lab experiment can be carried out from a personalized location while retaining collaborative characteristics. The system was implemented and evaluated in an uncontrolled user study, and the results demonstrate the effectiveness of an AR-based interactive and collaborative learning environment.

The study in [27] described an ML-augmented, wearable, self-powered, and long-lasting human-machine interface (HMI) sensor for human hand motion and virtual tasks. The triboelectric friction between the moving object and a specific electrode array was used to generate a unique and stable electrical signal that regulated the programmable output curve of the instantaneous parameters. The study established that the motion of a movable object can be tracked and correctly recreated from the output signal by decoupling it into various motion patterns. Furthermore, the ML method can identify fast and slow finger actions with clear visualization performance. The study also indicated that multiple linear regression (MLR) and PCA combined with K-means clustering performed well in terms of grouping, visualization, and motion speed interference. This study not only established the viability of designing self-powered HMI sensors but also demonstrated a way to identify ML-augmented motion patterns.

The authors in [28] present the findings of a survey of touchless interaction studies in educational applications and propose the use of ML agents to achieve real-time touchless hand interaction in kinesthetic learning. This study shows the design of two AR applications with real-time hand interaction and ML agents, enabling engaged kinesthetic learning as an alternative learning interface.

The paper in [29] includes a review of the present literature, an investigation of the problems, the identification of prospective study areas, and a report on the construction of two case studies that highlight the initial steps needed to address these research areas. The findings of this study reveal the research gap that must be closed to enable real-time touchless hand interaction, kinesthetic learning, and ML agents in a remote learning methodology.

The research in [30] presents an improved ML approach for evaluating Extended Reality (XR)-based simulators. Healthcare simulators are being developed for the training and instruction of medical residents and students. Many researchers have used ML to evaluate medical simulators. However, there is no standard for conducting such an evaluation. Some researchers have also looked into using ML to standardize the assessment process, but they have only considered virtual reality (VR). The goal of this study is to create an enhanced framework that includes assessment techniques for virtual, mixed, and augmented reality simulators as well as multiple ML models.

Following are our research questions:

How advanced are augmented reality applications in education today?

How is machine learning being integrated into the educational augmented reality applications?

In comparison with conventional approaches, how successful and efficient are machine learning-powered augmented reality applications in increasing learning outcomes?

What are the primary elements influencing student and instructor user experiences with machine learning-powered augmented reality in education?

What technical challenges arise when combining machine learning and augmented reality in educational settings?

What emerging trends in the development and deployment of machine learning-powered augmented reality applications in education are anticipated?

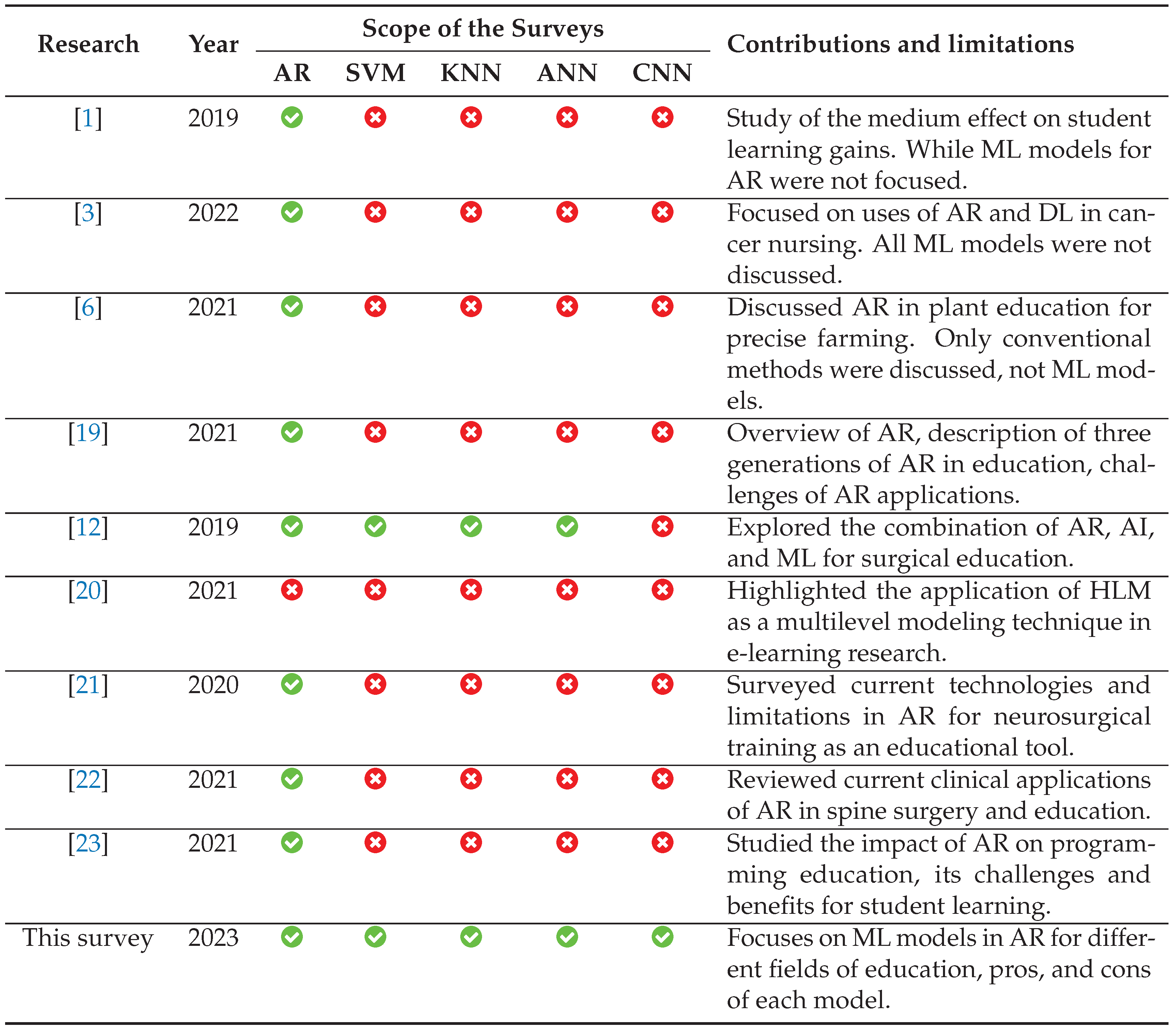

Based on our analysis of related work, there is a clear need for a comprehensive survey of ML models, including SVM, KNN, ANN, and CNN, for AR in various educational fields. As a result, this survey focuses on ML models in AR for education, providing an in-depth analysis of each model’s advantages, disadvantages, challenges, and limitations. Table 2 shows the summary of existing surveys.

2.1. Bibliometric Analysis and Methodology

Several databases were employed to select papers, including Google Scholar, Web of Science (WoS), IEEE Xplore, and ScienceDirect.

2.1.1. Bibliometric Analysis

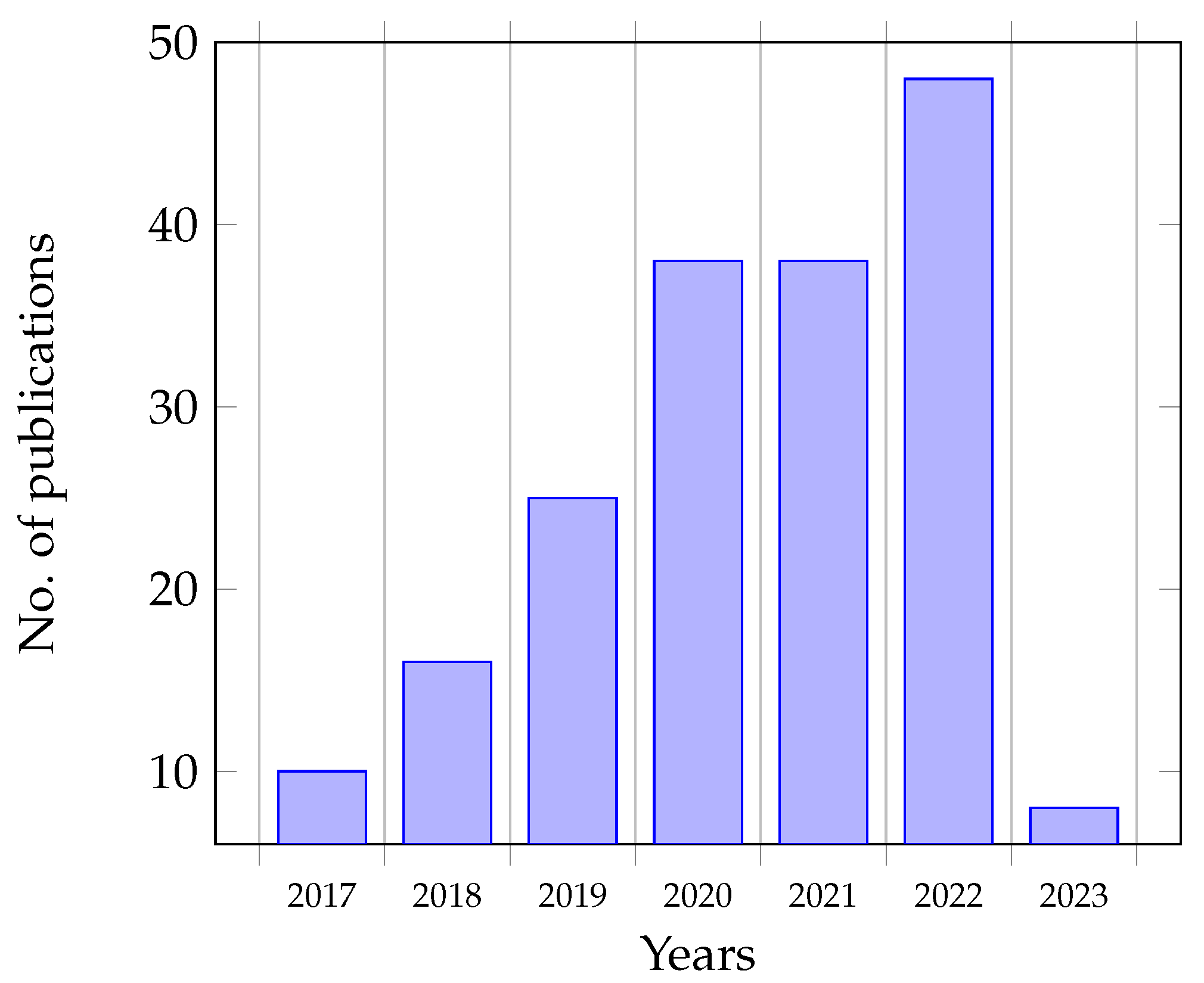

The papers under consideration were published between 2017 and 2023. Ten articles related to ML-assisted AR in education were published in 2017, 16 in 2018, and 25 in 2019. Additionally, 38, 38, 48, and 8 papers were published in 2020, 2021, 2022, and 2023, respectively.

Figure 2 illustrates the number of publications per year.
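As a quick arithmetic check, the yearly counts quoted above can be tallied in a few lines of Python. The dictionary name `papers_per_year` is introduced here only for illustration; the numbers are the ones stated in the text.

```python
# Yearly publication counts exactly as reported in the text above.
papers_per_year = {2017: 10, 2018: 16, 2019: 25, 2020: 38, 2021: 38, 2022: 48, 2023: 8}

# Sum over all years and find the year with the most publications.
total = sum(papers_per_year.values())
peak_year = max(papers_per_year, key=papers_per_year.get)

print(f"Total papers 2017-2023: {total}")
print(f"Peak year: {peak_year} ({papers_per_year[peak_year]} papers)")
```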

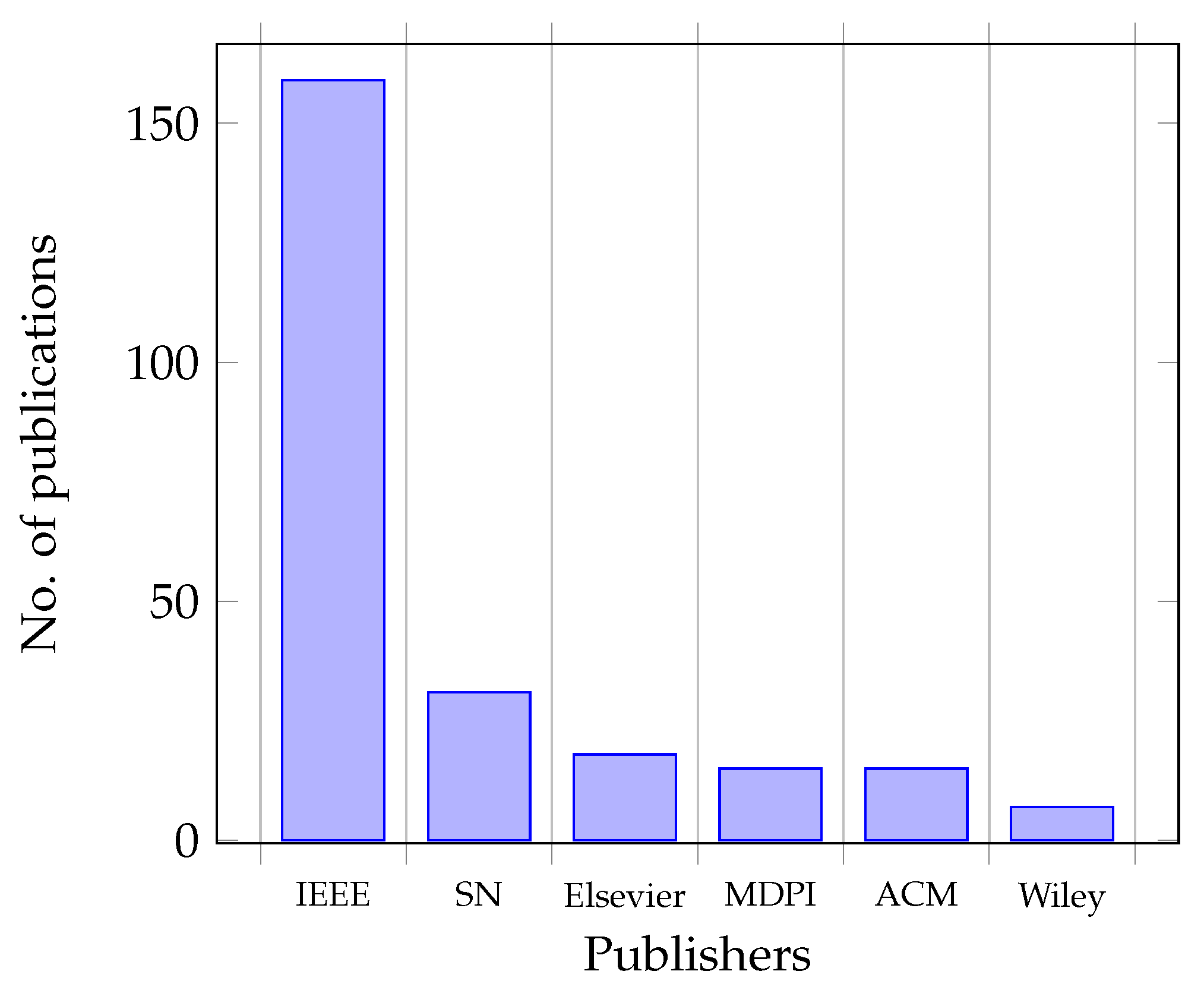

A total of 159 IEEE publications have been published on ML-assisted AR in education. There were 31 papers published by the Association for Computing Machinery (ACM), Springer Nature (SN), Multidisciplinary Digital Publishing Institute (MDPI), Elsevier, and Wiley, respectively. Wiley has published the fewest publications on this topic.

Figure 3 displays the number of publications by different publishers between 2017 and 2023.

We present a world map indicating the countries that are most active in working on this topic. Between 2017 and 2023, the United States of America (USA) published 34 articles, making it the most active country. Germany, Italy, and China published 22, 16, and 15 papers, respectively, during the same period.

Figure 4 depicts the leading countries in ML-assisted AR applications in education.

2.1.2. Methodology

Based on the selection criteria outlined in Algorithm 1, we selected 50 papers for analysis:

Algorithm 1 Article Selection Criteria

Require: Search on databases
Ensure: Article from 2017 to 2023
while keyword = "Augmented Reality Machine Learning Education" do
    if Discuss ML-assisted AR application | Evaluate performance | Analyze application in education then
        Consider for analysis
    else if Does not discuss ML then
        Exclude from the analysis
    end if
end while
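The selection procedure in Algorithm 1 can be sketched in Python as follows. This is only an illustration under stated assumptions: the `Article` record, its boolean fields, and the `select_articles` function are hypothetical names introduced here, the `|` in the algorithm is read as a logical OR, and articles that match neither branch are simply left out.

```python
from dataclasses import dataclass


@dataclass
class Article:
    """Hypothetical record for one search result (fields are assumptions)."""
    title: str
    year: int
    discusses_ml_assisted_ar: bool = False
    evaluates_performance: bool = False
    analyzes_education_use: bool = False
    discusses_ml: bool = True


def select_articles(candidates, first_year=2017, last_year=2023):
    """Apply the inclusion and exclusion branches of Algorithm 1 in order."""
    selected = []
    for article in candidates:
        # "Ensure" condition: only articles from 2017 to 2023 are considered.
        if not (first_year <= article.year <= last_year):
            continue
        if (article.discusses_ml_assisted_ar
                or article.evaluates_performance
                or article.analyzes_education_use):
            selected.append(article)   # consider for analysis
        elif not article.discusses_ml:
            continue                   # exclude from the analysis
    return selected


# Made-up search results, used only to exercise the sketch.
candidates = [
    Article("a", 2019, discusses_ml_assisted_ar=True),
    Article("b", 2015, discusses_ml_assisted_ar=True),   # outside the year range
    Article("c", 2021, discusses_ml=False),              # does not discuss ML
    Article("d", 2022, analyzes_education_use=True),
]
print([a.title for a in select_articles(candidates)])
```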

3. Fundamentals of ML and AR

3.1. Overview of ML Techniques

ML has gained immense popularity and plays a crucial role in modern technology. Teaching ML in high school is essential to empower students with responsible and innovative skills. ML is a subset of AI [31]. At its core, ML automates the process of creating and solving analytical models based on training data [32]. It has become an integral component of various applications, such as image recognition [33], speech recognition [34], intelligent assistants [35], autonomous vehicles [36], and many others.

In ML, real-world problems are approached through learning rather than explicit programming. The system learns typical patterns, such as word combinations, from data. For example, in the context of social media analysis, ML systems can learn to identify words or phrases in tweets that indicate customer needs, leading to need classification [37]. ML professionals leverage various open-source ML frameworks available in the market to develop new projects and create impactful ML systems [38].

3.2. Types of ML

ML comprises four primary types: Supervised Learning (SL), Unsupervised Learning (UL), Semi-Supervised Learning (SSL), and Reinforcement Learning (RL) [39,40]. Each type is described below.

3.2.1. SL

SL is a paradigm in which a model learns from labeled examples to map a set of inputs to specific target outputs [41,42]. SL tackles both regression problems, which involve predicting continuous values, and classification problems, which involve categorizing data into distinct classes. In classification, the output variable is divided into groups or categories, such as ’red’ or ’green’, or ’car’ or ’cycle’. An example of a regression problem is predicting cardiovascular disease risk. Common algorithms employed in SL include Logistic Regression, Deep Neural Networks (DNN), SVM, Decision Trees (DT), k-Nearest Neighbors (KNN), and ANN [43].

3.2.2. UL

UL is characterized by its data-driven approach, requiring no human-labeled data. UL techniques excel at identifying underlying trends and structures and at performing exploratory analysis [44]. Tasks within UL encompass density estimation, clustering, association rule mining, feature learning, and anomaly detection. Common algorithms in UL include Self-Organizing Maps (SOM), Generative Adversarial Networks (GAN), and Deep Belief Networks (DBN) [45].

3.2.3. SSL

SSL learns from a blend of labeled and unlabeled data. It is particularly advantageous when extracting relevant patterns from data is challenging and labeling examples is time-consuming. SSL techniques find utility in labeling data, fraud detection, text translation, and text classification [46].

3.2.4. RL

RL stands in contrast to SL, as agents in RL learn by trial and error rather than from labeled data [47]. In RL, agents determine how to behave within an environment by interacting with it and observing the outcomes. It is particularly relevant in scenarios where agents need to make sequential decisions and learn optimal strategies.

RL is applied in computer-controlled board games, robotic hands, robotic mazes, and autonomous vehicles. Several RL algorithms are used, including Q-learning, R-learning, Deep Reinforcement Learning (DRL), Actor-Critic, Deep Adversarial Networks (DAN), Temporal Difference Algorithms (TDA), and the SARSA algorithm [48].
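To make the trial-and-error idea concrete, the following is a minimal sketch of tabular Q-learning on a toy problem. The five-state corridor environment, the reward of 1.0 at the goal, and all hyperparameter values are assumptions chosen for illustration and are not taken from any of the surveyed works.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the goal (toy assumption)
ACTIONS = (0, 1)      # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic toy environment: reward 1.0 only when the goal is reached."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    """Learn action values by interacting with the environment."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy choice: explore sometimes, otherwise act greedily
            # (ties between equally good actions are broken at random).
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[state])
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            nxt, reward, done = step(state, action)
            # Q-learning update: move the estimate toward reward + discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q_table = q_learning()
# Greedy policy for the non-terminal states (1 means "move right").
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

The agent is never told that moving right is correct; it discovers this from the rewards it observes, which is the contrast with SL drawn above.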

4. Introduction to AR

4.1. Definition and Characteristics

AR seamlessly intertwines the real and virtual worlds [49,50]. By overlaying digital information onto the physical world, AR creates the illusion of digital content being an integral part of the real environment. One of AR’s key strengths is its ability to immerse users without isolating them from their physical surroundings [51]. AR experiences are easily accessible through devices like tablets and smartphones equipped with AR applications [52]. These applications can be operated in handheld mode or leveraged with accessories like Google Cardboard to provide immersive 3D experiences. Additionally, there are free applications available, enabling students to create AR content and engage with AR without the need for costly equipment [53]. AR spans a range of viewing devices, from AR headsets like Microsoft HoloLens to VR and gaming headsets such as HTC Vive and Samsung Gear.

AR finds applications across various educational levels, from primary [54] to university education [55]. It caters to diverse learner groups, encompassing K-12 students, kindergarteners, elderly individuals, adult learners, students in vocational and technical higher education [56], and those with special needs [57]. The integration of AR into education necessitates the development of suitable methods and applications, presenting valuable research opportunities [58]. AR technology empowers users to experience scientific phenomena that would be inaccessible in the real world, such as visualizing complex chemical reactions, providing access to previously unattainable knowledge [2,59]. AR enables users to interact with virtual objects and observe phenomena that may be challenging to visualize in reality, enhancing understanding of abstract or unobservable concepts [2].

4.2. Types of AR Systems

AR systems are categorized into three primary types: Marker-Based AR, Marker-Less AR, and Location-Based AR [60,61,62].

4.2.1. Marker-Based AR

Marker-Based AR relies on markers, which can take the form of QR codes, 2D barcodes, or distinctive, highly visible images. When a device captures an image with its camera, the AR software identifies the marker, determines the camera’s position and orientation, and overlays virtual objects onto the screen [63]. This method has proven to be robust and accurate, and virtually all AR software development kits (SDKs) support marker-based tracking techniques. Marker-Based AR provides precise information about the marker’s position in the camera’s coordinate system, enabling the identification of sequences of markers and their utilization for various control functions [64].

4.2.2. Marker-Less AR

Marker-Less AR, also known as markerless tracking, is concerned with determining an object’s position and orientation in relation to its surroundings. This capability is crucial in VR and AR, as it allows the virtual world to adapt to the user’s perspective and field of view, ensuring that AR content aligns seamlessly with the physical environment [65]. Unlike marker-based approaches, marker-less tracking does not require specialized optical markers, offering greater flexibility. It eliminates the need for predefined environments with fiducial markers, allowing users to move freely in various settings while receiving precise positional feedback [66].

4.2.3. Location-Based AR

Location-based AR applications deliver digital content to users as they arrive at specific physical locations or move through the real world. Typically, these applications are presented on mobile devices like smartphones or tablets, and they utilize Global Positioning System (GPS) or wireless network data to track the user’s location [67]. While location-based AR has been around for some time, it gained widespread popularity with games like Ingress and Pokemon Go. Another term often used to describe such applications is "location-aware AR." Numerous industries, including tourism, entertainment, marketing, and education, have embraced location-based AR applications. These apps serve multiple purposes, such as entertaining and educating tourists while simultaneously achieving marketing objectives in the tourism sector [68].
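The core check behind location-based content delivery, deciding whether the user is close enough to a point of interest, can be sketched with the haversine great-circle distance. The point-of-interest names, the coordinates, and the 50-meter trigger radius below are hypothetical values used only for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def content_in_range(user, points_of_interest, radius_m=50.0):
    """Return the names of all points of interest within radius_m of the user."""
    return [
        name
        for name, (lat, lon) in points_of_interest.items()
        if haversine_m(user[0], user[1], lat, lon) <= radius_m
    ]


# Hypothetical campus locations (latitude, longitude) and a user GPS fix.
points_of_interest = {
    "library_exhibit": (48.0002, 11.0000),
    "botanic_garden": (48.0100, 11.0000),
}
user_position = (48.0000, 11.0000)
print(content_in_range(user_position, points_of_interest))
```

A real application would also have to cope with GPS noise, for example by smoothing positions over time, which this sketch leaves out.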

4.3. The Intersection of ML and AR in Education

ML models are integrated with AR to enhance educational experiences. This survey explores the utilization of ML models, including SVM, KNN, ANN, CNN, and more, within AR for diverse educational purposes.

5. ML Techniques for AR in Education

In the realm of AR for education, various ML techniques play a pivotal role. These techniques empower AR applications to deliver engaging educational experiences.

5.1. SVM

SVM, an SL algorithm, is used to classify data for AR in education. SVM constructs a hyperplane that separates the classes and partitions the feature space. Among all separating hyperplanes, the algorithm chooses the one that maximizes the margin between classes, which reduces generalization error. In the educational context, SVM in AR enhances students’ comprehension, and the combination of ML and AR yields impressive results [69].
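The notion of margin can be illustrated without training a full SVM. The sketch below computes the geometric margin of two candidate hyperplanes on a tiny hand-made data set; the points, labels, and hyperplane parameters are assumptions chosen so the result is easy to verify by hand, and an actual SVM solver would search for the maximizing hyperplane itself.

```python
import math


def geometric_margin(w, b, points, labels):
    """Smallest signed distance of any point to the hyperplane w.x + b = 0.

    A positive value means every point lies on the correct side.
    """
    norm = math.hypot(*w)
    return min(
        y * (w[0] * x[0] + w[1] * x[1] + b) / norm
        for x, y in zip(points, labels)
    )


# Toy 2D data: class -1 sits on the line x = 0, class +1 on the line x = 2.
points = [(0.0, 0.0), (0.0, 1.0), (2.0, 0.0), (2.0, 1.0)]
labels = [-1, -1, 1, 1]

# Two separating hyperplanes: x = 1 (centered) and x = 0.5 (off-center).
margin_centered = geometric_margin((1.0, 0.0), -1.0, points, labels)
margin_off_center = geometric_margin((1.0, 0.0), -0.5, points, labels)

print(margin_centered, margin_off_center)
```

Both hyperplanes separate the data, but the centered one leaves a larger margin, so it is the one a maximum-margin classifier would prefer.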

5.2. KNN

KNN, another ML method, classifies an unseen example by comparing it with the labeled examples stored in a database and assigning it the majority class among its k nearest neighbors. It is a versatile technique, widely used not only in education but also in fields such as nephropathy prediction in children, fault classification, intrusion detection systems, and AI applications [70,71].
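A minimal sketch of KNN classification follows. The two-dimensional training points and the labels "A" and "B" are made up for illustration; Euclidean distance and simple majority voting are common choices rather than requirements.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training examples.

    `train` is a list of (feature_vector, label) pairs.
    """
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical stored examples: two well-separated clusters.
train = [
    ((1.0, 1.0), "A"), ((1.0, 2.0), "A"), ((2.0, 1.0), "A"),
    ((6.0, 6.0), "B"), ((6.0, 7.0), "B"), ((7.0, 6.0), "B"),
]

print(knn_predict(train, (1.5, 1.5)))
print(knn_predict(train, (6.5, 6.5)))
```

KNN has no training phase: all the work happens at query time, which is why the stored examples are often described as a database.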

5.3. ANN

ANNs mimic the human brain’s learning process, excelling in solving non-linear problems. In the brain, interconnected neurons handle complex tasks. In ANNs, artificial neurons, akin to biological neurons, process information through interconnected nodes. This technology finds utility in addressing intricate problems that defy linear solutions [72].
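XOR is a classic example of a problem that no single linear unit can solve but a small network of interconnected neurons can. The sketch below wires up such a network by hand; the weights and biases are fixed values chosen for illustration, whereas a real ANN would learn them from data.

```python
def step(z):
    """Threshold activation: the neuron fires (1) when its input is positive."""
    return 1 if z > 0 else 0


def layer(inputs, weights, biases):
    """One fully connected layer: each neuron computes a weighted sum plus bias."""
    return [
        step(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def xor_net(x1, x2):
    """Two hidden neurons (OR and AND) feed one output neuron (OR and not AND)."""
    hidden = layer([x1, x2], weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    return layer(hidden, weights=[[1, -1]], biases=[-0.5])[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```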

5.3.1. AR for Object Tracking and Visualization

The paper in [73] introduces IVM-CNN, which combines the best features of recurrent neural networks (RNNs) and CNNs for object tracking and machine vision tasks. It outperforms previous models on the M2CAI 2016 contest datasets, with a mean average precision (mAP) of 97.1 for device diagnosis and a mean rate of 96.9. It also runs at 50 frames per second (FPS), ten times faster than region-based CNNs. The paper describes the use of Mask R-CNN in which the region proposal network (RPN) is replaced with a region proposal module (RPM) to generate more accurate bounding boxes while requiring less labeling. This improves the model’s reliability and effectiveness. The paper also reports the development of Microsoft HoloLens software, which provides an AR-based approach to clinical education and assistance. This technology enhances the visualization and understanding of medical data, thus improving healthcare practices.

5.4. CNN

CNNs are pivotal in AR for education. They possess the ability to identify relevant features without human supervision, making them indispensable in various domains, including speech recognition, face recognition, computer vision, and AR applications [74]. The weight-sharing feature in CNNs reduces overfitting and enhances generalization, setting them apart from conventional neural networks.
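Weight sharing means that the same small kernel is applied at every image position. The sketch below implements a plain "valid" 2D convolution (strictly, cross-correlation, as used in most DL libraries) and compares the number of shared kernel weights with the number a fully connected layer would need for the same input and output sizes. The 4x4 image and the 2x2 edge kernel are assumptions chosen for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy image with a vertical edge between the second and third columns.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Kernel that responds to a dark-to-bright transition from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d_valid(image, kernel)
print(feature_map)

# Parameter counts: one shared kernel versus one weight per input-output pair.
shared_weights = len(kernel) * len(kernel[0])
dense_weights = (len(image) * len(image[0])) * (len(feature_map) * len(feature_map[0]))
print(shared_weights, dense_weights)
```

The feature map responds only where the edge is, and it does so with four weights instead of the 144 a dense layer would use, which is the source of the reduced overfitting mentioned above.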

5.4.1. SVM and CNN in AR for Education

A study in 2018 explored the use of SVM and CNN in AR to detect English alphabets as markers, enhancing learning experiences for students. The CNN model achieved an impressive accuracy of 96.5%, while SVM reached 92.5%. The research involved the creation of a custom dataset for training and validation, contributing significantly to marker-based AR systems in education [74].

5.4.2. ML for Motor Skills Assessment

In 2022, researchers delved into assessing the motor skills of early education students using SVM, KNN, DT, and CNN image recognition methods. Among these, the CNN model outperformed the others, achieving an accuracy of 82%. This study is a testament to the potential of ML in evaluating students’ abilities in various educational contexts [75].

5.4.3. Simulating Circuits with Capsule Networks

The field of electrical engineering education witnessed innovation in 2021 with the introduction of a system that enables students to simulate circuits on mobile devices using image recognition. Capsule networks, a form of DL, played a vital role in recognizing and classifying characters within circuit diagrams. With a remarkable 96% accuracy, capsule networks outperformed traditional CNNs, making circuit simulation more accessible and engaging for students [76].

5.4.4. AR for Alphabet Handwriting Learning

In 2022, a novel AR application named "Learn2Write" emerged, designed to aid children in learning alphabet handwriting. Leveraging ML techniques, including several CNN models like DenseNet, BornoNet, Xception, EkushNet, and MobileNetV2, the application empowers children to practice and perfect their handwriting skills. Among the models, EkushNet stood out due to its efficiency, achieving a test accuracy of 96.71%. The app not only assists with handwriting but also offers a promising avenue for enhancing early education through AR and ML [77].

5.4.5. AR for Image Classification in Education

The authors in [78] describe a technique for automatically generating various 3D views of textbook pages to create a large dataset, which is then used to train CNNs such as AlexNet, GoogLeNet, VGG, or ResNet. The system stores the trained model and returns it to the client for classification in a web browser with TensorFlow.js, allowing book page recognition. It also enables the display of 3D graphics on top of recognized book pages, providing an AR marker generation method that preserves the original images of the books while increasing detection accuracy. The research offers a promising and low-cost AR approach that can be applied in a variety of settings, including education and training.

In the context of chemical experiments, the paper in [79] addresses the use of a transformer-based object detection model called detection transformer (DETR) for object identification in images and its integration into an AR mobile application. Together, AR and computer vision techniques present a viable method for improving the user experience of learning applications. The method consists of two steps: first, the DETR model is built and trained on a customized dataset; next, it is integrated into the AR application, which uses a multi-class classification approach to predict the experiment name and detect objects.

6. SL and USL Models in AR

6.1. Gesture Recognition in AR for Children

In 2019, researchers ventured into the realm of gesture recognition in children’s education through AR. They used SVM for static gestures and Hidden Markov Models (HMM) for dynamic gestures, fostering a tangible connection between physical gestures and virtual learning experiences. While static gestures were well modeled, there is room for improvement in handling dynamic gestures in AR for education [80].

6.2. ARChem for Chemistry Education

In 2022, ARChem, a cutting-edge mobile application, emerged to revolutionize chemistry education. This app combines AR, AI, and ML to assist students in their chemistry studies. It excels in chemical equation identification and correction, image processing, text summarization, and even sentiment analysis through a Chatbot. The fusion of mobile development techniques, ML, and DL makes ARChem a game-changer in the realm of virtual education, aiming to alleviate the challenges students face in comprehending and applying complex chemistry concepts [81].

6.3. Interactive Multi-Meter Tutorial

Another 2022 innovation came in the form of an interactive multi-meter tutorial using AR and DL. By amalgamating TensorFlow’s object detection API with Unity 3D and AR Foundation, this project empowers students to learn how to use multi-meters. DL models facilitated real-time recognition of meter components, providing step-by-step guidance. Such applications demonstrate the potential of ML and AR in technical education, simplifying complex topics for students [82].

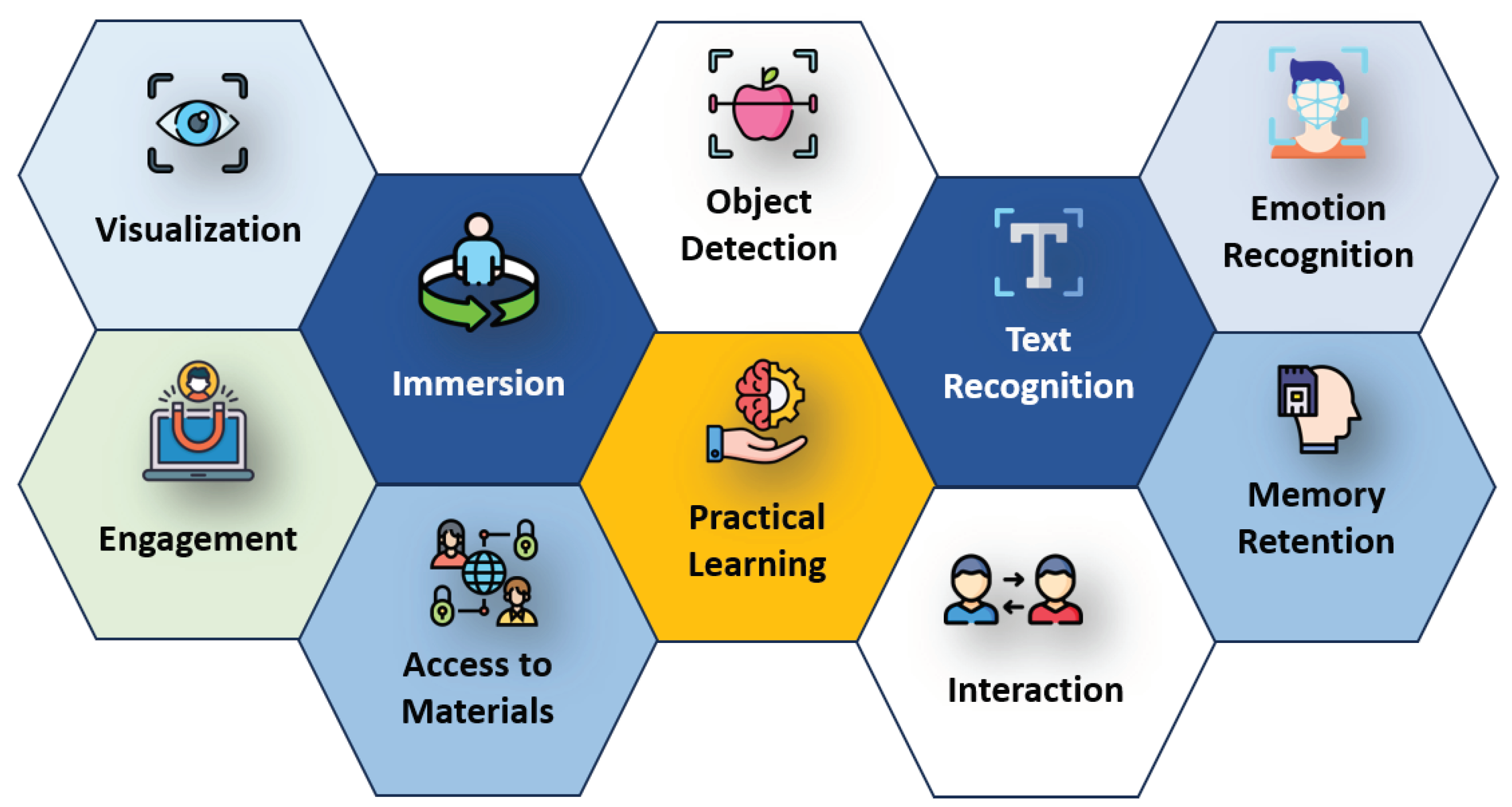

Figure 5 shows the advantages of ML-driven AR in education as discussed earlier.

7. Open Research Challenges

In the realm of using ML and DL techniques to enhance the educational experience with AR, several research challenges have emerged. While these methods offer numerous advantages, there are still gaps in our understanding and implementation. As this is an emerging technology, researchers often have to create their own datasets because relevant datasets are scarce [74].

One significant challenge is the removal of multi-media noise from the immersive AR environment, a process that can be time-consuming [75]. Additionally, researchers need to conduct experiments with kindergarten students to test the AR devices they have developed. This requires not only technological proficiency but also effective training for young learners on how to use AR devices [74].

Another critical challenge in the combination of ML and AR is the accuracy and speed of object recognition within complex diagrams [76]. Aligning AR objects seamlessly with real-world scenes and training models with a large amount of data are also formidable tasks [76]. To ensure a comfortable visual experience with Head-Mounted Displays (HMDs), frame rates should ideally reach around 60 frames per second, which leaves about 16.67 milliseconds per frame. However, edge-based approaches in low-resolution video transmission can lead to latencies exceeding 16.67 milliseconds [83].
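The 16.67 millisecond figure is simply the time available per frame at 60 frames per second (1000 ms / 60). The sketch below computes this budget and checks a pipeline against it. The stage names and latency values are hypothetical, and the check assumes each frame must be fully processed within one frame interval, which ignores pipelining across frames.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def meets_budget(stage_latencies_ms, fps=60):
    """True if the summed stage latencies fit within one frame interval."""
    return sum(stage_latencies_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame latencies for an edge-assisted AR pipeline (ms).
edge_pipeline = {
    "camera_capture": 4.0,
    "uplink_to_edge": 6.0,
    "ml_inference": 8.0,
    "render_overlay": 3.0,
}

print(round(frame_budget_ms(60), 2))
print(sum(edge_pipeline.values()), meets_budget(edge_pipeline))
```

With these illustrative numbers the pipeline needs 21 ms per frame, so it would miss the 60 FPS target unless stages are shortened or overlapped.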

Privacy and data security pose additional challenges. AR devices often transmit data about the user’s surroundings to the edge for processing. Depending on the context, this information may need to remain confidential or private, necessitating robust data encryption. Furthermore, in industrial settings, the accuracy of information delivered to users via AR applications must be unquestionable [84].

Moreover, AR devices are underutilized in many fields, and both teachers and students may need training to use them to their full potential. Implementing AR in educational settings may also require additional resources and equipment [77]. ML models integrated into AR systems sometimes lack precision, hindering effective education. Recognizing objects accurately from several meters away remains a challenge [82]. Moving images also pose difficulties for AR applications, which mostly perform best with static images.

8. Solutions

Researchers have been actively addressing these challenges:

9. Future Work

While this survey provides insight into the current set of ML-powered AR applications in education, several avenues for research and development remain untapped. The study’s limitations, together with emerging trends, open the way for further integration of ML and AR in educational contexts. Although our study gives a comprehensive overview of the existing landscape, more in-depth assessments of subject-specific applications are needed, particularly in fields such as mathematics, physics, and language acquisition. Using ML techniques to incorporate real-time feedback mechanisms into AR applications is a promising route for improving the learning experience.

Addressing the ethical issues of using ML in educational AR environments, such as developing effective privacy safeguards and mitigating algorithmic biases, is also critical for ensuring responsible and fair technology use. Real-world ML-powered AR applications should be deployed in educational institutions, and their influence on student engagement, learning outcomes, and teacher effectiveness should be assessed to give educators and policymakers essential information. Future studies should also focus on enhancing the overall user experience of these applications, investigating pedagogical approaches to customization, and examining the generalizability of findings across various educational settings. Interdisciplinary collaboration between ML scientists, educators, and psychologists is encouraged to build a holistic understanding of the factors that influence the success of AR applications in education.

In summary, exploring these future research topics has the potential to advance our understanding of the interaction of ML and AR in education. By addressing these research gaps, we can all work together to create more effective and ethical educational tools.

10. Conclusion

In today’s modern world, technology, including AR, has profoundly impacted various aspects of life, most notably education. Traditional teaching methods are gradually giving way to more immersive and interactive alternatives like AR, which offer a deeper understanding of educational content.

This survey delved into the integration of ML models into AR applications for education, exploring the diverse ML techniques used in this context. CNNs emerged as a popular choice due to their high accuracy. Throughout our exploration, we found numerous applications developed by researchers to provide students with immersive learning experiences, fostering a comprehensive understanding of their subjects and improving overall learning efficiency.

AR technology has found applications across multiple educational domains, and we explored how AR models are implemented using SDKs and platforms. These tools play pivotal roles in the creation and deployment of AR solutions in educational settings.

Finally, we discussed several open research challenges and future directions that warrant further investigation. These directions have been derived from our comprehensive discussions and insights, pointing toward exciting opportunities for future advancements in the field of ML-enhanced AR education.

Funding

This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2023-RS-2022-00156354) supervised by the IITP (Institute for Information & Communications Technology Planning & Evaluation). It was also supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) under the metaverse support program to nurture the best talents (IITP-2023-RS-2023-00254529) grant funded by the Korea government (MSIT).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| ANN | Artificial neural network |
| AR | Augmented reality |
| CNN | Convolutional neural network |
| DL | Deep learning |
| KNN | K-nearest neighbors |
| ML | Machine learning |
| SVM | Support vector machine |
| SL | Supervised learning |
| UL | Unsupervised learning |
| RL | Reinforcement learning |
| SSL | Semi-supervised learning |
| VR | Virtual reality |
| DT | Decision tree |
| LSTM | Long short-term memory |
| SDK | Software development kit |
| SMILES | Simplified Molecular Input Line Entry System |
| SOM | Self-organizing maps |
| GAN | Generative adversarial networks |
| DBN | Deep belief networks |
| EEG | Electroencephalogram |
| DAN | Deep adversarial networks |
| TDA | Temporal difference algorithms |
| DRL | Deep reinforcement learning |

References

- Garzón, J.; Acevedo, J. Meta-analysis of the impact of Augmented Reality on students’ learning gains. Educational Research Review 2019, 27, 244–260.

- Wu, H.K.; Lee, S.W.Y.; Chang, H.Y.; Liang, J.C. Current status, opportunities and challenges of augmented reality in education. Computers & education 2013, 62, 41–49.

- Ren, Y.; Yang, Y.; Chen, J.; Zhou, Y.; Li, J.; Xia, R.; Yang, Y.; Wang, Q.; Su, X. A scoping review of deep learning in cancer nursing combined with augmented reality: The era of intelligent nursing is coming. Asia-Pacific Journal of Oncology Nursing 2022, p. 100135.

- Menghani, G. Efficient deep learning: A survey on making deep learning models smaller, faster, and better. ACM Computing Surveys 2023, 55, 1–37.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. nature 2015, 521, 436–444.

- Hurst, W.; Mendoza, F.R.; Tekinerdogan, B. Augmented reality in precision farming: Concepts and applications. Smart Cities 2021, 4, 1454–1468.

- Kovoor, J.G.; Gupta, A.K.; Gladman, M.A. Validity and effectiveness of augmented reality in surgical education: A systematic review. Surgery 2021, 170, 88–98.

- Lu, J.; Cuff, R.F.; Mansour, M.A. Simulation in surgical education. The American Journal of Surgery 2021, 221, 509–514.

- Keller, D.S.; Grossman, R.C.; Winter, D.C. Choosing the new normal for surgical education using alternative platforms. Surgery (Oxford) 2020, 38, 617–622.

- Zheng, T.; Xie, W.; Xu, L.; He, X.; Zhang, Y.; You, M.; Yang, G.; Chen, Y. A machine learning-based framework to identify type 2 diabetes through electronic health records. International journal of medical informatics 2017, 97, 120–127.

- Salari, N.; Hosseinian-Far, A.; Mohammadi, M.; Ghasemi, H.; Khazaie, H.; Daneshkhah, A.; Ahmadi, A. Detection of sleep apnea using Machine learning algorithms based on ECG Signals: A comprehensive systematic review. Expert Systems with Applications 2022, 187, 115950.

- Khandelwal, P.; Srinivasan, K.; Roy, S.S. Surgical education using artificial intelligence, augmented reality and machine learning: A review. 2019 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW). IEEE, 2019, pp. 1–2.

- Soltani, P.; Morice, A.H. Augmented reality tools for sports education and training. Computers & Education 2020, 155, 103923.

- Radu, I. Why should my students use AR? A comparative review of the educational impacts of augmented-reality. 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR). IEEE, 2012, pp. 313–314.

- Burgsteiner, H.; Kandlhofer, M.; Steinbauer, G. Irobot: Teaching the basics of artificial intelligence in high schools. Proceedings of the AAAI conference on artificial intelligence, 2016, Vol. 30.

- Chiu, T.K. A holistic approach to the design of artificial intelligence (AI) education for K-12 schools. TechTrends 2021, 65, 796–807.

- Kim, J.; Shim, J. Development of an AR-based AI education app for non-majors. IEEE Access 2022, 10, 14149–14156.

- Bistaman, I.N.M.; Idrus, S.Z.S.; Abd Rashid, S. The use of augmented reality technology for primary school education in Perlis, Malaysia. Journal of Physics: Conference Series. IOP Publishing, 2018, Vol. 1019, p. 012064.

- Garzón, J. An overview of twenty-five years of augmented reality in education. Multimodal Technologies and Interaction 2021, 5, 37.

- Lin, H.M.; Wu, J.Y.; Liang, J.C.; Lee, Y.H.; Huang, P.C.; Kwok, O.M.; Tsai, C.C. A review of using multilevel modeling in e-learning research. Computers & Education 2023, 198, 104762.

- Cho, J.; Rahimpour, S.; Cutler, A.; Goodwin, C.R.; Lad, S.P.; Codd, P. Enhancing reality: A systematic review of augmented reality in neuronavigation and education. World neurosurgery 2020, 139, 186–195.

- Fourman, M.S.; Ghaednia, H.; Lans, A.; Lloyd, S.; Sweeney, A.; Detels, K.; Dijkstra, H.; Oosterhoff, J.H.; Ramsey, D.C.; Do, S.; others. Applications of augmented and virtual reality in spine surgery and education: A review. Seminars in Spine Surgery. Elsevier, 2021, Vol. 33, p. 100875.

- Theodoropoulos, A.; Lepouras, G. Augmented Reality and programming education: A systematic review. International Journal of Child-Computer Interaction 2021, 30, 100335.

- Gouveia, P.F.; Luna, R.; Fontes, F.; Pinto, D.; Mavioso, C.; Anacleto, J.; Timóteo, R.; Santinha, J.; Marques, T.; Cardoso, F.; others. Augmented Reality in Breast Surgery Education. Breast Care 2023, pp. 1–5.

- Urbina Coronado, P.D.; Demeneghi, J.A.A.; Ahuett-Garza, H.; Orta Castañon, P.; Martínez, M.M. Representation of machines and mechanisms in augmented reality for educative use. International Journal on Interactive Design and Manufacturing (IJIDeM) 2022, 16, 643–656.

- Ahmed, N.; Lataifeh, M.; Alhamarna, A.F.; Alnahdi, M.M.; Almansori, S.T. LeARn: A Collaborative Learning Environment using Augmented Reality. 2021 IEEE 2nd International Conference on Human-Machine Systems (ICHMS). IEEE, 2021, pp. 1–4.

- Zhu, J.; Ji, S.; Yu, J.; Shao, H.; Wen, H.; Zhang, H.; Xia, Z.; Zhang, Z.; Lee, C. Machine learning-augmented wearable triboelectric human-machine interface in motion identification and virtual reality. Nano Energy 2022, 103, 107766.

- Iqbal, M.Z.; Mangina, E.; Campbell, A.G. Exploring the real-time touchless hand interaction and intelligent agents in augmented reality learning applications. 2021 7th International Conference of the Immersive Learning Research Network (iLRN). IEEE, 2021, pp. 1–8.

- Iqbal, M.Z.; Mangina, E.; Campbell, A.G. Current challenges and future research directions in augmented reality for education. Multimodal Technologies and Interaction 2022, 6, 75.

- Gupta, A.; Nisar, H. An Improved Framework to Assess the Evaluation of Extended Reality Healthcare Simulators using Machine Learning. 2022 IEEE/ACM Conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE). IEEE, 2022, pp. 188–192.

- Martins, R.M.; Gresse Von Wangenheim, C. Findings on Teaching Machine Learning in High School: A Ten-Year Systematic Literature Review. Informatics in Education 2022.

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electronic Markets 2021, 31, 685–695.

- Lou, G.; Shi, H. Face image recognition based on convolutional neural network. China communications 2020, 17, 117–124.

- William, P.; Gade, R.; esh Chaudhari, R.; Pawar, A.; Jawale, M. Machine Learning based Automatic Hate Speech Recognition System. 2022 International Conference on Sustainable Computing and Data Communication Systems (ICSCDS). IEEE, 2022, pp. 315–318.

- Polyakov, E.; Mazhanov, M.; Rolich, A.; Voskov, L.; Kachalova, M.; Polyakov, S. Investigation and development of the intelligent voice assistant for the Internet of Things using machine learning. 2018 Moscow Workshop on Electronic and Networking Technologies (MWENT). IEEE, 2018, pp. 1–5.

- Tuncali, C.E.; Fainekos, G.; Ito, H.; Kapinski, J. Simulation-based adversarial test generation for autonomous vehicles with machine learning components. 2018 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2018, pp. 1555–1562.

- Kühl, N.; Schemmer, M.; Goutier, M.; Satzger, G. Artificial intelligence and machine learning. Electronic Markets 2022, pp. 1–10.

- Shinde, P.P.; Shah, S. A review of machine learning and deep learning applications. 2018 Fourth international conference on computing communication control and automation (ICCUBEA). IEEE, 2018, pp. 1–6.

- Ray, S. A quick review of machine learning algorithms. 2019 International conference on machine learning, big data, cloud and parallel computing (COMITCon). IEEE, 2019, pp. 35–39.

- Jamil, S.; Piran, M.J.; Rahman, M.; Kwon, O.J. Learning-driven lossy image compression: A comprehensive survey. Engineering Applications of Artificial Intelligence 2023, 123, 106361.

- Sarker, I.H.; Kayes, A.; Badsha, S.; Alqahtani, H.; Watters, P.; Ng, A. Cybersecurity data science: An overview from machine learning perspective. Journal of Big data 2020, 7, 1–29.

- Uddin, S.; Khan, A.; Hossain, M.E.; Moni, M.A. Comparing different supervised machine learning algorithms for disease prediction. BMC medical informatics and decision making 2019, 19, 1–16.

- Saravanan, R.; Sujatha, P. A state of art techniques on machine learning algorithms: A perspective of supervised learning approaches in data classification. 2018 Second international conference on intelligent computing and control systems (ICICCS). IEEE, 2018, pp. 945–949.

- Sarker, I.H. Machine learning: Algorithms, real-world applications and research directions. SN computer science 2021, 2, 160.

- Dike, H.U.; Zhou, Y.; Deveerasetty, K.K.; Wu, Q. Unsupervised learning based on artificial neural network: A review. 2018 IEEE International Conference on Cyborg and Bionic Systems (CBS). IEEE, 2018, pp. 322–327.

- Van Engelen, J.E.; Hoos, H.H. A survey on semi-supervised learning. Machine learning 2020, 109, 373–440.

- Aradi, S. Survey of deep reinforcement learning for motion planning of autonomous vehicles. IEEE Transactions on Intelligent Transportation Systems 2020, 23, 740–759.

- Qiang, W.; Zhongli, Z. Reinforcement learning model, algorithms and its application. 2011 International Conference on Mechatronic Science, Electric Engineering and Computer (MEC). IEEE, 2011, pp. 1143–1146.

- Khan, T.; Johnston, K.; Ophoff, J. The impact of an augmented reality application on learning motivation of students. Advances in human-computer interaction 2019, 2019.

- Sirakaya, M.; Alsancak Sirakaya, D. Trends in educational augmented reality studies: A systematic review. Malaysian Online Journal of Educational Technology 2018, 6, 60–74.

- Tzima, S.; Styliaras, G.; Bassounas, A. Augmented reality applications in education: Teachers point of view. Education Sciences 2019, 9, 99.

- Wei, X.; Weng, D.; Liu, Y.; Wang, Y. Teaching based on augmented reality for a technical creative design course. Computers & Education 2015, 81, 221–234.

- Holley, D.; Hobbs, M. Augmented reality for education. Encyclopedia of educational innovation 2019.

- Koutromanos, G.; Sofos, A.; Avraamidou, L. The use of augmented reality games in education: A review of the literature. Educational Media International 2015, 52, 253–271.

- Scrivner, O.; Madewell, J.; Buckley, C.; Perez, N. Augmented reality digital technologies (ARDT) for foreign language teaching and learning. 2016 future technologies conference (FTC). IEEE, 2016, pp. 395–398.

- Radosavljevic, S.; Radosavljevic, V.; Grgurovic, B. The potential of implementing augmented reality into vocational higher education through mobile learning. Interactive Learning Environments 2020, 28, 404–418.

- Akçayır, M.; Akçayır, G. Advantages and challenges associated with augmented reality for education: A systematic review of the literature. Educational research review 2017, 20, 1–11.

- Pochtoviuk, S.; Vakaliuk, T.; Pikilnyak, A. Possibilities of application of augmented reality in different branches of education. Available at SSRN 3719845 2020.

- Akçayır, M.; Akçayır, G.; Pektas, H.; Ocak, M. AR in science laboratories: The effects of AR on university students’ laboratory skills and attitudes toward science laboratories. Computers in Human Behavior 2016, 57, 334–342.

- Martin Sagayam, K.; Ho, C.C.; Henesey, L.; Bestak, R. 3D scenery learning on solar system by using marker based augmented reality. 4th International Conference of the Virtual and Augmented Reality in Education, VARE 2018; Budapest. Dime University of Genoa, 2018, pp. 139–143.

- Brito, P.Q.; Stoyanova, J. Marker versus markerless augmented reality. Which has more impact on users? International Journal of Human–Computer Interaction 2018, 34, 819–833.

- Yu, J.; Denham, A.R.; Searight, E. A systematic review of augmented reality game-based Learning in STEM education. Educational technology research and development 2022, 70, 1169–1194.

- Bouaziz, R.; Alhejaili, M.; Al-Saedi, R.; Mihdhar, A.; Alsarrani, J. Using Marker Based Augmented Reality to teach autistic eating skills. 2020 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), 2020, pp. 239–242.

- Liu, B.; Tanaka, J. Virtual marker technique to enhance user interactions in a marker-based AR system. Applied Sciences 2021, 11, 4379.

- Sharma, S.; Kaikini, Y.; Bhodia, P.; Vaidya, S. Markerless augmented reality based interior designing system. 2018 International Conference on Smart City and Emerging Technology (ICSCET). IEEE, 2018, pp. 1–5.

- Hui, J. Approach to the interior design using augmented reality technology. 2015 Sixth International Conference on Intelligent Systems Design and Engineering Applications (ISDEA). IEEE, 2015, pp. 163–166.

- Georgiou, Y.; Kyza, E.A. The development and validation of the ARI questionnaire: An instrument for measuring immersion in location-based augmented reality settings. International Journal of Human-Computer Studies 2017, 98, 24–37.

- Kleftodimos, A.; Moustaka, M.; Evagelou, A. Location-Based Augmented Reality for Cultural Heritage Education: Creating Educational, Gamified Location-Based AR Applications for the Prehistoric Lake Settlement of Dispilio. Digital 2023, 3, 18–45.

- Burman, I.; Som, S. Predicting students academic performance using support vector machine. 2019 Amity international conference on artificial intelligence (AICAI). IEEE, 2019, pp. 756–759.

- Mohamed, A.E. Comparative study of four supervised machine learning techniques for classification. International Journal of Applied 2017, 7, 1–15.

- Lopez-Bernal, D.; Balderas, D.; Ponce, P.; Molina, A. Education 4.0: Teaching the basics of KNN, LDA and simple perceptron algorithms for binary classification problems. Future Internet 2021, 13, 193.

- Chen, C.H.; Wu, C.L.; Lo, C.C.; Hwang, F.J. An augmented reality question answering system based on ensemble neural networks. IEEE Access 2017, 5, 17425–17435.

- K, P.; N, B.; D, M.; S, H.; M, K.; Kumar, V. Artificial Neural Networks in Healthcare for Augmented Reality. 2022 Fourth International Conference on Cognitive Computing and Information Processing (CCIP), 2022, pp. 1–5.

- Dash, A.K.; Behera, S.K.; Dogra, D.P.; Roy, P.P. Designing of marker-based augmented reality learning environment for kids using convolutional neural network architecture. Displays 2018, 55, 46–54.

- Rodríguez, A.O.R.; Riaño, M.A.; Gaona-García, P.A.; Montenegro-Marín, C.E.; Sarría, Í. Image Classification Methods Applied in Immersive Environments for Fine Motor Skills Training in Early Education. International Journal of Interactive Multimedia & Artificial Intelligence 2019, 5.

- Alhalabi, M.; Ghazal, M.; Haneefa, F.; Yousaf, J.; El-Baz, A. Smartphone Handwritten Circuits Solver Using Augmented Reality and Capsule Deep Networks for Engineering Education. Education Sciences 2021, 11, 661.

- Opu, M.; Islam, N.; Islam, M.; Kabir, M.A.; Hossain, M.; Islam, M.M.; others. Learn2Write: Augmented Reality and Machine Learning-Based Mobile App to Learn Writing. Computers 2022, 11, 4.

- Le, H.; Nguyen, M.; Nguyen, Q.; Nguyen, H.; Yan, W.Q. Automatic Data Generation for Deep Learning Model Training of Image Classification used for Augmented Reality on Pre-school Books. 2020 International Conference on Multimedia Analysis and Pattern Recognition (MAPR). IEEE, 2020, pp. 1–5.

- Hanafi, A.; Elaachak, L.; Bouhorma, M. Machine learning based augmented reality for improved learning application through object detection algorithms. International Journal of Electrical and Computer Engineering (IJECE) 2023, 13, 1724–1733.

- Sun, M.; Wu, X.; Fan, Z.; Dong, L. Augmented reality-based educational design for children. International Journal of Emerging Technologies in Learning (Online) 2019, 14, 51.

- Menikrama, M.; Liyanagunawardhana, C.; Amarasekara, H.; Ramasinghe, M.; Weerasinghe, L.; Weerasinghe, I. ARChem: Augmented Reality Chemistry Lab. 2021 IEEE 12th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON). IEEE, 2021, pp. 0276–0280.

- Estrada, J.; Paheding, S.; Yang, X.; Niyaz, Q. Deep-Learning-Incorporated Augmented Reality Application for Engineering Lab Training. Applied Sciences 2022, 12, 5159.

- Salman, S.M.; Sitompul, T.A.; Papadopoulos, A.V.; Nolte, T. Fog Computing for Augmented Reality: Trends, Challenges and Opportunities. 2020 IEEE International Conference on Fog Computing (ICFC), 2020, pp. 56–63.

- Langfinger, M.; Schneider, M.; Stricker, D.; Schotten, H.D. Addressing security challenges in industrial augmented reality systems. 2017 IEEE 15th international conference on industrial informatics (INDIN). IEEE, 2017, pp. 299–304.

- Amara, K.; Aouf, A.; Kerdjidj, O.; Kennouche, H.; Djekoune, O.; Guerroudji, M.A.; Zenati, N.; Aouam, D. Augmented Reality for COVID-19 Aid Diagnosis: Ct-Scan segmentation based Deep Learning. 2022 7th International Conference on Image and Signal Processing and their Applications (ISPA). IEEE, 2022, pp. 1–6.

- Supruniuk, K.; Andrunyk, V.; Chyrun, L. AR Interface for Teaching Students with Special Needs. COLINS, 2020, pp. 1295–1308.

- Sakshuwong, S.; Weir, H.; Raucci, U.; Martínez, T.J. Bringing chemical structures to life with augmented reality, machine learning, and quantum chemistry. The Journal of Chemical Physics 2022, 156, 204801.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).