1. Introduction

As this Bloomberg news headline [1] and others like it show, wind turbine failure is an expensive challenge facing the wind energy industry across the US and Europe. While research on predictive maintenance of wind turbines has gained traction, standardizing the application of technologies such as Digital Twins offers benefits that could improve the management of manufacturing assets. This work builds on existing research on predictive maintenance by providing a distributed DT framework to improve predictive maintenance of manufacturing assets.

Since the emergence of Industry 4.0, Digital Twins (DT) have become one of the leading technologies for digital transformation, especially in Manufacturing 4.0. Although there have been several attempts to define DT, there is still no standardized and widely accepted definition. A description of DT precise enough to dispel the usual misconceptions is given in [2], in which the authors distinguish a DT by its two-way automatic data flow between the physical and digital object, in contrast to the manual or semi-automatic data flows that characterize a digital model or a digital shadow. Clarifying misconceptions such as labelling a DT as merely a simulation (i.e., a digital model) shows researchers that DTs can be used beyond a limited application domain and leveraged to extend other technologies. This is why DT frameworks and standards such as the one in this work are relevant. The description of DT in [2] highlights the two-way automated feedback necessary for “intelligent DTs”. An intelligent DT, in this context, is a Digital Twin solution that monitors an asset in real time, predicts its future behavior, and reacts to potential issues by determining the best operational control mechanisms to handle a potential failure or at least reduce its impact. Researchers in [3,4] have shown that more than 85% of DTs in industrial sectors are for manufacturing assets, and [4] further highlights that, from 2019 onwards, more than 90% of DT applications are in maintenance, followed by Prognostics and Health Management (PHM) and then other process optimizations. Within maintenance, predictive maintenance is the type preferred by industry [4], because of its potential to save cost, time, and resources by anticipating downtimes and avoiding them well ahead of time.

A concise systematic literature review on predictive maintenance was carried out in [5], where the authors answered key research questions on using DTs for predictive maintenance. Reviewing 42 primary studies against 10 research questions, that work identified computational burden and the lack of reference architectures as challenges in using DTs for predictive maintenance. This work builds on these gaps, challenges, and existing work to address the need for a digital twin framework that can improve predictive maintenance of an Industrial Internet of Things (IIoT) asset. The literature review section analyses this in detail.

While wind turbines are generally considered part of the energy sector, and specifically the renewable energy sub-sector, some of their key components, such as the gearbox and generator, are considered products of the manufacturing sector. Because of their complex engineering design and their onshore or offshore locations, wind turbines are expensive to maintain. This makes Operation and Maintenance (O&M), as well as data gathering, connectivity, and remote monitoring, intensive tasks. Whether in a small wind farm with a few wind turbines or a large wind farm with a few thousand wind turbines generating gigawatts of electricity, maintenance is key to the success of wind energy.

This paper explores the requirements for developing a predictive maintenance DT using a distributed architecture, to address limitations of existing DT implementations in the literature with regard to standards and reference architectures, computational latency, accuracy, and the prediction feedback loop in real-time scenarios. The proposed framework adopts software engineering approaches such as Object-Oriented concepts and the Software Development Life Cycle (SDLC) [6], together with a distributed cloud computing paradigm, Fog Computing [7]. The ISO 23247 standard for digital twins in manufacturing [8] is used to guide the development of the framework. Overall, the framework contributes improved real-time monitoring and accuracy by applying a distributed architecture to enhance the effectiveness of a PHM solution.

The remainder of this paper is organized as follows.

Section 2 discusses the literature review on DT and predictive maintenance along with DT architectures in the context of this work.

Section 3 establishes the theoretical framework of our proposed system architectural framework.

Section 4 explains the methodology of implementing the key technology aspects considered in the framework.

Section 5 describes the experimental setup, the dataset, and the selection of key components of the wind turbines.

Section 6 discusses the results, and Section 7 concludes the paper with a highlight of future work.

2. Literature Review

In this section, we review key papers to identify the research gap. The use and application of DTs for predictive maintenance is a broad area to cover, because many works in the literature address the topic only in part. We therefore focus on research outputs that have addressed a “predictive maintenance digital twin” or implemented the technologies associated with digital twins and predictive maintenance. The key question we attempt to answer is how a distributed digital twin framework can improve the efficiency of predictive maintenance of a manufacturing asset. To break this question down, we aim to answer the following:

How can digital twins support real-time predictive maintenance?

What benefits does a digital twin framework based on a standard offer to a predictive maintenance solution in IIoT?

How can this proposed digital twin framework be extended to act as an intelligent digital twin with a prediction feedback loop?

This section reviews literature and the key technologies towards answering these questions.

2.1. Digital Twins and Predictive Maintenance

The two-way automatic data flow of Digital Twins [4] makes them suitable for Predictive Maintenance (PdM). Several authors adopted the DT term from its initial introduction by Michael Grieves [9], along with NASA’s broad definition and adoption of the term [10], which describes a DT as “an integrated multiphysics, multiscale, probabilistic simulation of an as-built vehicle or system that uses the best available physical models, sensor updates, fleet history, etc., to mirror the life of its corresponding flying twin”. However, as with NASA’s adoption of DT, many authors derive a definition from the specific use case their work covers. From the literature review in [11], a DT can be simply put as a replication of a physical object in the digital space, with a connection that links the two through data synchronization and status updates. As mentioned earlier, in this work we perceive a DT as a copy of the physical asset that has access to its operating data and is hosted on a computational platform that can use the data for PdM (utilizing data, algorithms, and a platform).

Predictive maintenance, on the other hand, was described in [4] as a prognosis that uses all the information surrounding a system to predict its remaining life or when it is likely to fail. A predictive maintenance model can follow a model-driven approach based on analytical, physical, or numerical models, or a data-driven approach based on data obtained from sensors. Both PdM approaches have been widely explored in Manufacturing 4.0, and both are generally agreed to be computationally expensive [4]. The data-driven approach relies on the Internet of Things (IoT) to gather sensor readings from assets for use in the digital space (the computational platform). This work leverages the Industrial Internet of Things (IIoT) concept and therefore uses the data-driven approach to implement the proposed distributed digital twin PdM solution based on sensor data acquired from wind turbines. However, the digital twin framework presented here can also serve as a basis for physics-based, model-driven approaches, since its system architecture provides a computational platform capable of handling simulations.

The authors in [12] developed a predictive digital twin for offshore wind turbines in which they used the Prophet algorithm as a time series prediction model. The DT in [12] was developed in Unity3D to give a visual sense of the operating conditions of the wind turbines, using OPC Unified Architecture (OPC UA) as the data communication protocol that streams live data to the DT. The authors of [12] used vibration and temperature data for their model and chose Prophet to account for seasonality. The Root Mean Square (RMS) error achieved showed that they were able to predict failure before it occurred. In [12], the DT feedback relied on the ability of a user to forecast future failure from the DT. Unlike the DT implemented in that work, our framework supports an “intelligent DT” that enhances two-way feedback.

The work in [13] implemented a PdM solution based on SCADA data from the generator and gearbox of wind turbines using three algorithms: XGBoost, Long Short-Term Memory (LSTM), and Multiple Linear Regression (MLR). The authors evaluated algorithm performance using R-squared, RMSE, MAE, and MAPE, and used Statistical Process Control (SPC) charts to detect anomalous behavior. In the results of [13], the models predicted failure up to six days before its occurrence, with LSTM outperforming XGBoost for the generator and vice versa for the gearbox. There was no DT or feedback mechanism in [13]. Another work [14] applied a data-driven approach (decision trees), focusing on data pre-processing and hyper-parameter tuning, to detect failures in five components of a wind turbine, namely the generator, hydraulic system, generator bearing, transformer, and gearbox. They showed how a good pre-processing strategy in data-driven models can outperform a model-driven approach for PdM.
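The evaluation in [13] combines standard regression error metrics with a control chart on prediction residuals. As a minimal sketch of how these quantities are usually computed (not the code of [13]), the following uses the common textbook definitions of R-squared, RMSE, MAE, and MAPE, plus Shewhart-style limits of mean ± 3σ estimated from residuals of a period assumed to be healthy. The function names and the 3σ choice are illustrative assumptions.

```python
import math
from statistics import mean, stdev
from typing import Dict, List, Sequence, Tuple


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """R-squared, RMSE, MAE and MAPE (percent) for paired observations."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)
    y_bar = mean(y_true)
    ss_tot = sum((t - y_bar) ** 2 for t in y_true)
    return {
        # R^2 is undefined when the observations have zero variance.
        "r2": 1.0 - ss_res / ss_tot if ss_tot else float("nan"),
        "rmse": math.sqrt(ss_res / n),
        "mae": sum(abs(r) for r in residuals) / n,
        # MAPE assumes no true value is zero (it divides by each observation).
        "mape": 100.0 * sum(abs(r / t) for r, t in zip(residuals, y_true)) / n,
    }


def control_limits(baseline_residuals: Sequence[float], k: float = 3.0) -> Tuple[float, float]:
    """Shewhart-style limits (mean +/- k standard deviations) from healthy-period residuals."""
    mu, sigma = mean(baseline_residuals), stdev(baseline_residuals)
    return mu - k * sigma, mu + k * sigma


def out_of_control(residuals: Sequence[float], limits: Tuple[float, float]) -> List[int]:
    """Indices of residuals outside the control limits (candidate anomalies)."""
    lo, hi = limits
    return [i for i, r in enumerate(residuals) if r < lo or r > hi]


if __name__ == "__main__":
    m = regression_metrics([50.0, 52.0, 54.0, 56.0], [51.0, 52.0, 53.0, 56.0])
    print(m)  # r2 = 0.9, rmse ~ 0.707, mae = 0.5
    limits = control_limits([-1.0, 0.0, 1.0, 0.0])
    print(out_of_control([0.5, 3.0, -2.0, -2.6], limits))  # [1, 3]
```

In a residual-based scheme like this, a run of points outside the limits, rather than a single excursion, is typically what triggers an alarm.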

The work in [15] introduced a cyber-physical system (CPS) architecture for PdM with several modules for condition monitoring, data augmentation, and ML/DL, supporting an intelligent decision-making strategy for fault prediction with good KPIs. The architecture in [15] used MQTT as a communication protocol and OPC UA for industrial automation.

On the distributed Digital Twin concept, [16] presented “IoTwins”, in which the authors argue that the best strategy for implementing DTs is to deploy them close to the data sources, leveraging IoT gateways on edge nodes, and to use the cloud for heavier computational tasks such as machine learning model training. In their reference architecture, the authors outline how the edge-fog-cloud paradigm allows a distributed DT to leverage the layers of computing and interfaces suitable for real-time applications. A similar concept was introduced in our earlier work [17].

Considering reference architectures for DT, work by [18] used ISO 23247 to develop a DT for additive manufacturing that resolved interoperability and integration issues in real-time decision making and control. Work by [18] utilized ISO 23247 to develop a data mapping approach called “EXPRESS Schema” that uses the edge for data modelling of a DT of a machine tool.

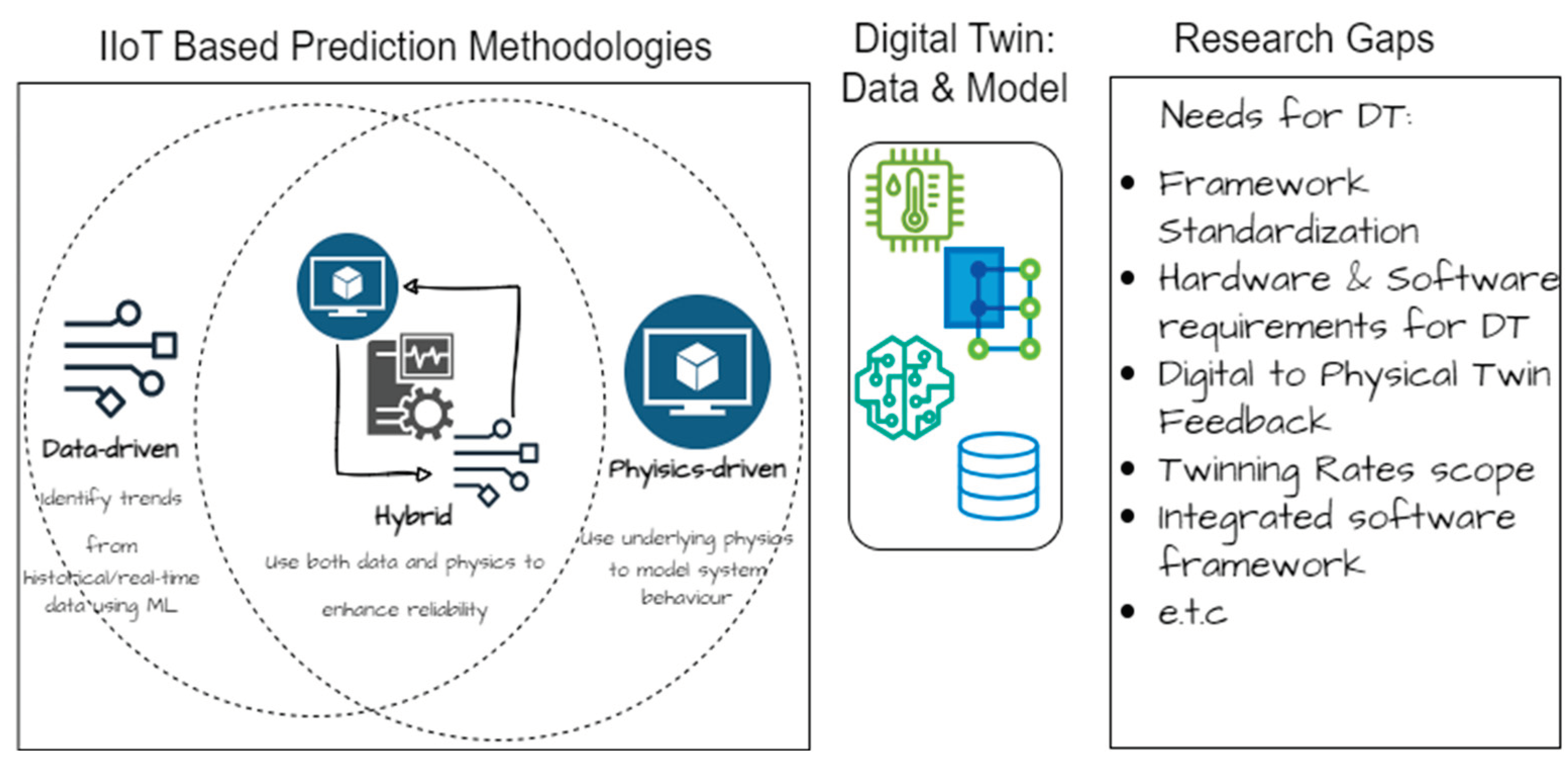

A further review in [19] of unit-level DTs for manufacturing components explored the current state of research into the application of DTs at the unit level to foster real-time control. The output of [19] is an extensive analysis that clearly shows gaps in adopting standards, in handling hardware and software requirements, and in providing a mechanism for feedback from the DT to the Physical Twin (PT). An abstract of [19] is shown in Figure 1. The highlighted gaps directly align with the output of this paper.

Leading cloud technology providers such as IBM, Microsoft, and AWS have outlined DT reference architectures, owing to the significant connectivity and computing power needed to run and manage DTs at scale [20].

From the reviewed works, we observe that few attempts have been made to showcase DT implementation for predictive maintenance as a manufacturing industry solution. This work therefore presents a DT framework that attempts to cover the gaps identified in [19,21], summarized in Figure 1.

2.2. Computing Infrastructure for Digital Twins

2.2.1. Cloud, Fog, and Edge Computing

Cloud computing refers to the on-demand delivery of computing services over the internet [22,23,24]. These services, which range from computing, storage, and networking to other tools, make the cloud a suitable platform for deploying DTs.

Fog computing is an extension of the cloud, introduced by Cisco in 2012 [7] as a concept that brings computing power closer to the data sources, thereby reducing latency and providing other computational benefits.

Edge computing is similar to fog computing. It refers to the ability of Internet of Things (IoT) devices distributed in remote locations to process data at the “edge” of the network [25].

To highlight the difference, the term edge computing is usually used when this processing is done by billions of IoT devices, whereas fog computing is used when millions of dedicated local servers are involved [25]. Cisco [7] describes the fog-edge computing architecture as “decentralizing a computing infrastructure by extending the cloud through the placement of nodes strategically between the cloud and edge devices.”

As discussed in the distributed DT paper “IoTwins” [16], a DT implementation can use the edge-fog-cloud paradigm to leverage both hardware and software services when deploying DTs of manufacturing assets, enabling seamless and efficient monitoring and the application of data-driven ML solutions or model-driven simulations of physical assets. In this section, we primarily look at related attempts and technologies for the key aspects of the overall edge-fog-cloud distributed DT architecture.

An earlier approach using fog computing for real-time DT applications [26] showed the benefit of reduced response times when the DT is deployed on a fog node rather than in the cloud. Another paper [27] introduces a fog computing extension to the MQTT IoT communication protocol for Industry 4.0 applications. By placing the MQTT broker at the fog layer, the approach enhanced data processing efficiency and reduced communication demands. In [27], the fog layer serves for prediction, acts as a gateway, and offloads complex processing tasks from the cloud to minimize latency and operational costs. The authors of [27] validated the architecture through energy consumption analysis and simulations, demonstrating its benefits over the traditional MQTT scheme in handling the real-time data challenges posed by constrained IoT devices.

2.2.2. IIoT Protocols and Middleware

Digital Twins require a middleware protocol that serves as the connection between the physical entity and the digital entity. Many IoT applications use such IoT protocols to communicate with other systems locally or remotely. ISO 23247 outlines this under Part 4 (Networking View), which handles information exchange and protocols [8] [28].

From the literature, we identified the most used IIoT protocols for Digital Twins [5] or fog computing architectures to be MQTT, OPC UA, AMQP, and CoAP, among others such as DDS, MTConnect, and MODBUS. We reviewed these protocols in our experiments and adopted MQTT for its lightweight design and easy setup.
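MQTT routes messages by hierarchical topic names, and subscribers use topic filters with the `+` (single-level) and `#` (multi-level) wildcards defined in the MQTT specification. The sketch below implements those matching rules in plain Python to show how a fog-level broker could fan readings out to unit, system, and system-of-systems DT modules. The `farm/<turbine>/<component>/<sensor>` topic layout and the subscriber names are hypothetical, not the topic scheme used in this work; in practice the broker (for example Mosquitto) performs this matching itself.

```python
from typing import Dict, List


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Match an MQTT topic name against a filter using '+' and '#' wildcards."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Filters starting with a wildcard must not match '$'-prefixed topics such as $SYS/...
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            # '#' is only valid as the last level; it matches the parent level and everything below.
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


def route(topic: str, subscriptions: Dict[str, str]) -> List[str]:
    """Return the subscribers (name -> filter) that should receive a message on `topic`."""
    return [name for name, flt in subscriptions.items() if topic_matches(flt, topic)]


if __name__ == "__main__":
    subs = {
        "gearbox_system_dt": "farm/+/gearbox/#",          # hypothetical system-level DT
        "wt01_generator_unit_dt": "farm/wt01/generator/+",  # hypothetical unit-level DT
        "farm_sos_dt": "farm/#",                            # hypothetical wind-farm DT
    }
    print(route("farm/wt01/gearbox/oil_temp", subs))
    # ['gearbox_system_dt', 'farm_sos_dt']
```

A topic hierarchy that mirrors the unit/system/farm levels lets each DT layer subscribe to exactly the scope it models with a single filter.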

2.2.3. Microservices and DT Platforms

In terms of the software stack for digital twin deployment, microservices are an important consideration with cloud computing platforms. For this work, we explored microservices and middleware deployment platforms suitable for our distributed DT framework, focusing on open-source technologies. We observed that few researchers have explored this area. However, [29] documented microservices, middleware, and technologies suitable for DTs in smart manufacturing.

The major consideration for using such microservices platforms is the ability to containerize applications or modules of the DT in a virtualized environment. While the typical fog computing architecture uses physical servers close to the data sources, the cloud or local hardware can also serve as a platform that splits the DT into modules packaged in layers within virtualized environments known as containers.

Table 1. Microservices for DT Architecture.

| Concept | Description | Tools |
|---|---|---|
| Containerization | Utilizing microservices architecture for loosely coupled, capabilities-oriented, packaged deployment of software. | [29] Docker [30], Kubernetes |
| DT Middleware | Platforms supporting connectivity middleware, device, and data integration. | [29] Eclipse Kapua, Eclipse Kura, Eclipse Ditto [31] |
| Real-time/batch stream processing | Technologies supporting data processing with compute capabilities in batch or real time. | [29] Apache Kafka, Apache Flink, Apache Spark [31], Apache Hadoop |

Docker was chosen from these options because, given the nature of the project and experiments, it made deployment of the DT modules easier.

2.3. Digital Twin Architecture

Existing DT architectures mostly adopt a centralized deployment strategy, although some works show the importance of edge and fog computing in DT deployments. For instance, [17] and [26] showed that using the edge or fog improves response time, while [18] used edge computing to enhance data modelling for a machine tool DT. In this work, we approach the distributed DT for the predictive maintenance case study with a hybrid architecture that uses edge, fog, and cloud depending on the requirements of each layer. The closest approach to ours was found in [16]. Another distributed DT was presented in [32], where the authors align the activities of the production shop floor with a physical layer and an edge-cloud collaboration layer, with local and global DT tasks handling real-time manufacturing data. That work [32] also recommended microservices to support modular development.

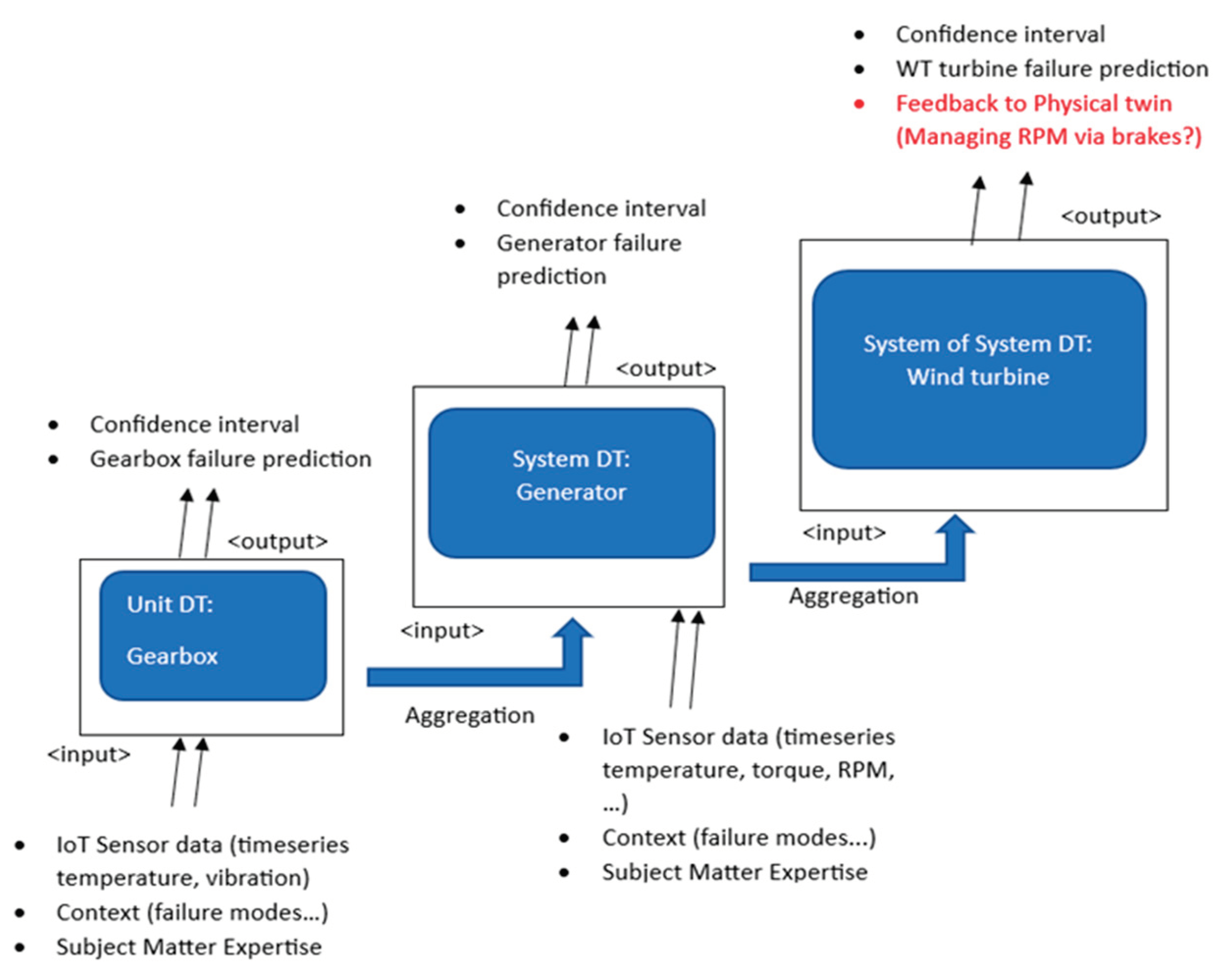

The key advantage of distributing a DT is the ability to classify a smart manufacturing system into unit, system, or system-of-systems levels [6,19], which are similarly labelled local, system, and global DTs [32]. This framework is designed to accommodate data collection from the lower levels (sub-components of the manufacturing system), preprocessing and processing of all data from a component or production line, and modelling of the overall manufacturing system or shop floor in the global DT. Different deployment approaches are usually adopted depending on the requirements of the system. Following the methodology described in [6] and extended here for the context of PdM, the relationship between the three DT layers is summarized in Figure 2.
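To make the unit, system, and system-of-systems relationship concrete, the following sketch models it with Python dataclasses. It assumes, purely for illustration, that each unit DT exposes a health index between 0 and 1 and that a system is only as healthy as its weakest unit; neither the health index nor the aggregation rule is prescribed by [6], [19], or [32], and the component names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class UnitDT:
    """Unit-level (local) DT of a sub-component, e.g. a gearbox bearing."""
    name: str
    health: float  # hypothetical health index: 1.0 = healthy, 0.0 = failed


@dataclass
class SystemDT:
    """System-level DT of a component such as the gearbox or generator of one turbine."""
    name: str
    units: List[UnitDT] = field(default_factory=list)

    def health(self) -> float:
        # Weakest-link assumption; a system with no units reports no degradation.
        return min((u.health for u in self.units), default=1.0)


@dataclass
class SystemOfSystemsDT:
    """Global DT, e.g. the whole wind farm, aggregating system-level DTs."""
    name: str
    systems: List[SystemDT] = field(default_factory=list)

    def at_risk(self, threshold: float) -> List[str]:
        """Names of systems whose health falls below `threshold`."""
        return [s.name for s in self.systems if s.health() < threshold]


if __name__ == "__main__":
    farm = SystemOfSystemsDT("wind_farm", [
        SystemDT("WT01.gearbox", [UnitDT("hs_bearing", 0.4), UnitDT("oil", 0.9)]),
        SystemDT("WT01.generator", [UnitDT("winding", 0.8)]),
    ])
    print(farm.at_risk(threshold=0.5))  # ['WT01.gearbox']
```

Keeping aggregation in the parent level means unit DTs stay small enough for edge devices, while the farm-level view only handles summarized health values.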

The design of a DT architecture can adopt typical SDLC approaches. For instance, [33] used ISO/IEC 1588/42010 to guide the development stages and to establish the concept of components and their relationships. As a PHM tool, a DT needs to solve some of the challenges of implementing PHM, such as the lack of real-time assessment of Remaining Useful Life (RUL) in an interoperable and decoupled manner [34]. To achieve lightweight and seamless integration, an edge digital twin was explored in [35].

As introduced in our earlier work [17], this framework of splitting a DT by components aligns directly with the edge-fog-cloud architecture. The work in [36] presents a framework with local and global nodes to enhance manufacturing decision-making. Local nodes include digital twins of the equipment and a machine-learning-based predictive model that supports improved decisions. Various tools enable condition-based maintenance and fault detection. The local DT, enriched with machine learning, contributes to overall prognostics and health management. The global node aggregates data, interacts with Manufacturing Execution Systems (MES) for accuracy, and facilitates scheduling and optimization based on data from the global DT, the MES, and performance indices. While some of these approaches show good results, [37] evaluated such architectures systematically and showed that most neglect modularity in terms of plug and play, as well as other non-functional qualities outlined in ISO 23247 [8].

In terms of using the distributed DT concept to improve prognostics in PdM applications, it is ideal to design a framework that considers the hardware and software requirements, standards, and the prediction feedback loop [4,17]. The solution architecture introduced in [17] is leveraged in this work to improve prognostics of wind turbines.

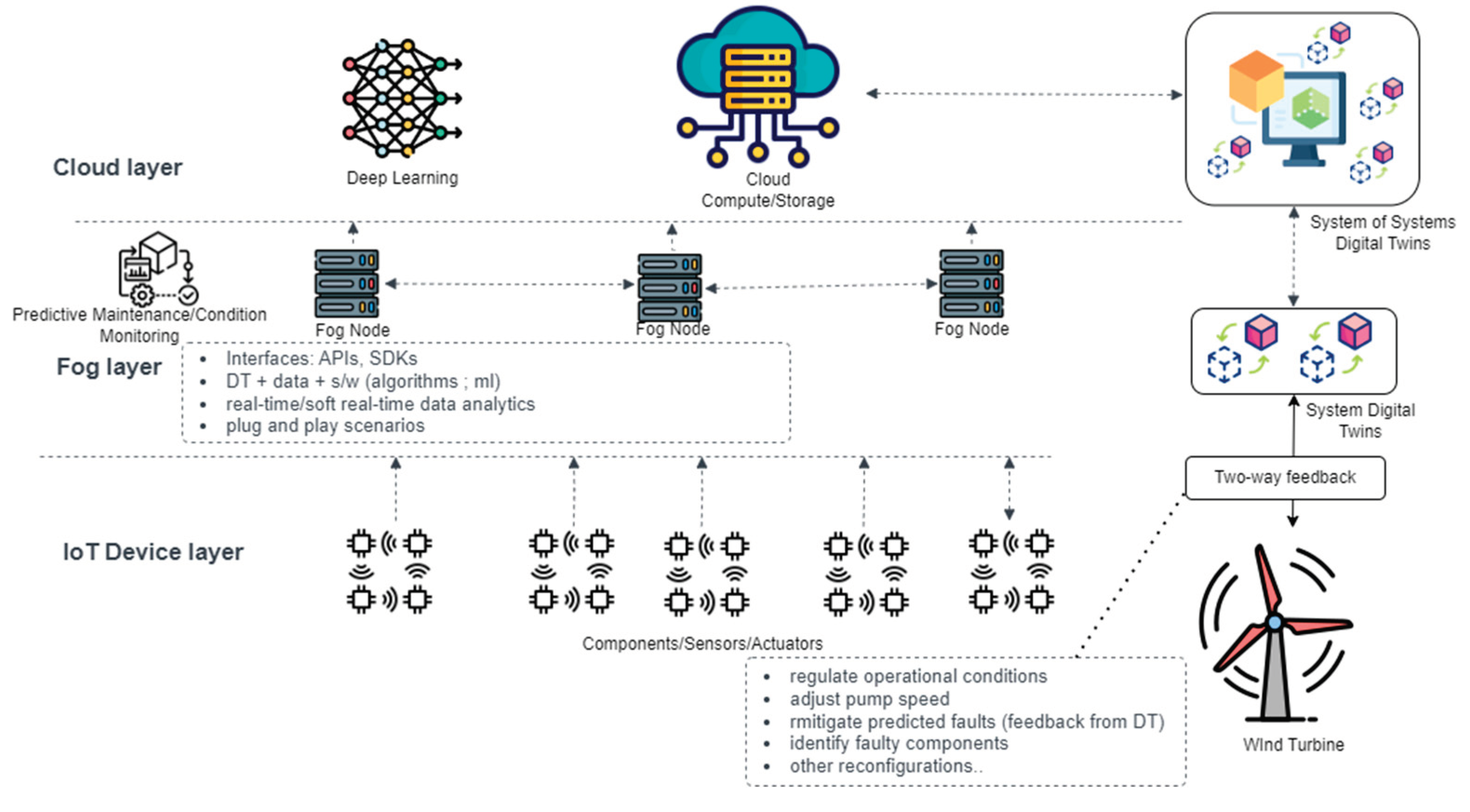

The major contribution envisioned by adopting this hybrid architecture is summarized below.

Edge/IoT Device Layer: The lower layer deals with the unit-level DT, acquiring data from individual components such as the gearbox, pre-processing it through data cleaning, and transmitting it to the upper layer.

Fog Layer: The middle layer handles the system level monitoring and feedback mechanism on prediction from the ML algorithms in real-time. This is the layer where the middleware and microservices of the DT are also utilized.

Cloud Layer: This layer handles global-level (system-of-systems) monitoring, for example of the whole wind farm in our case study, as well as training and retraining models on historical data.
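The fog layer's feedback role can be sketched as a rule that turns a prediction into an optional command for the physical twin. In the minimal version below, the `Prediction` and `Command` types, the temperature-based rule, the 80 °C and 95 °C thresholds, and the `alert`/`derate` actions are all hypothetical placeholders; the paper does not specify its feedback rules, and a real deployment would take limits from the turbine manufacturer.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    turbine_id: str
    component: str
    predicted_temp_c: float  # model output, e.g. predicted bearing temperature


@dataclass
class Command:
    turbine_id: str
    action: str  # hypothetical actions: "alert" or "derate"
    reason: str


def feedback(pred: Prediction, alert_c: float = 80.0, derate_c: float = 95.0) -> Optional[Command]:
    """Map a prediction to a DT-to-PT command; None means no action is needed."""
    if pred.predicted_temp_c >= derate_c:
        action, limit = "derate", derate_c
    elif pred.predicted_temp_c >= alert_c:
        action, limit = "alert", alert_c
    else:
        return None
    reason = f"{pred.component} predicted {pred.predicted_temp_c:.1f} degC >= {limit:.1f} degC"
    return Command(pred.turbine_id, action, reason)


if __name__ == "__main__":
    for temp in (60.0, 85.0, 97.0):
        print(feedback(Prediction("WT01", "gearbox", temp)))
```

In the layered design described here, such a command would be sent back down to the edge device that drives the relevant actuator, closing the DT-to-PT loop.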

In Section 3, the theoretical framework of the layers is discussed in detail.

Figure 3.

Distributed DT Architecture.


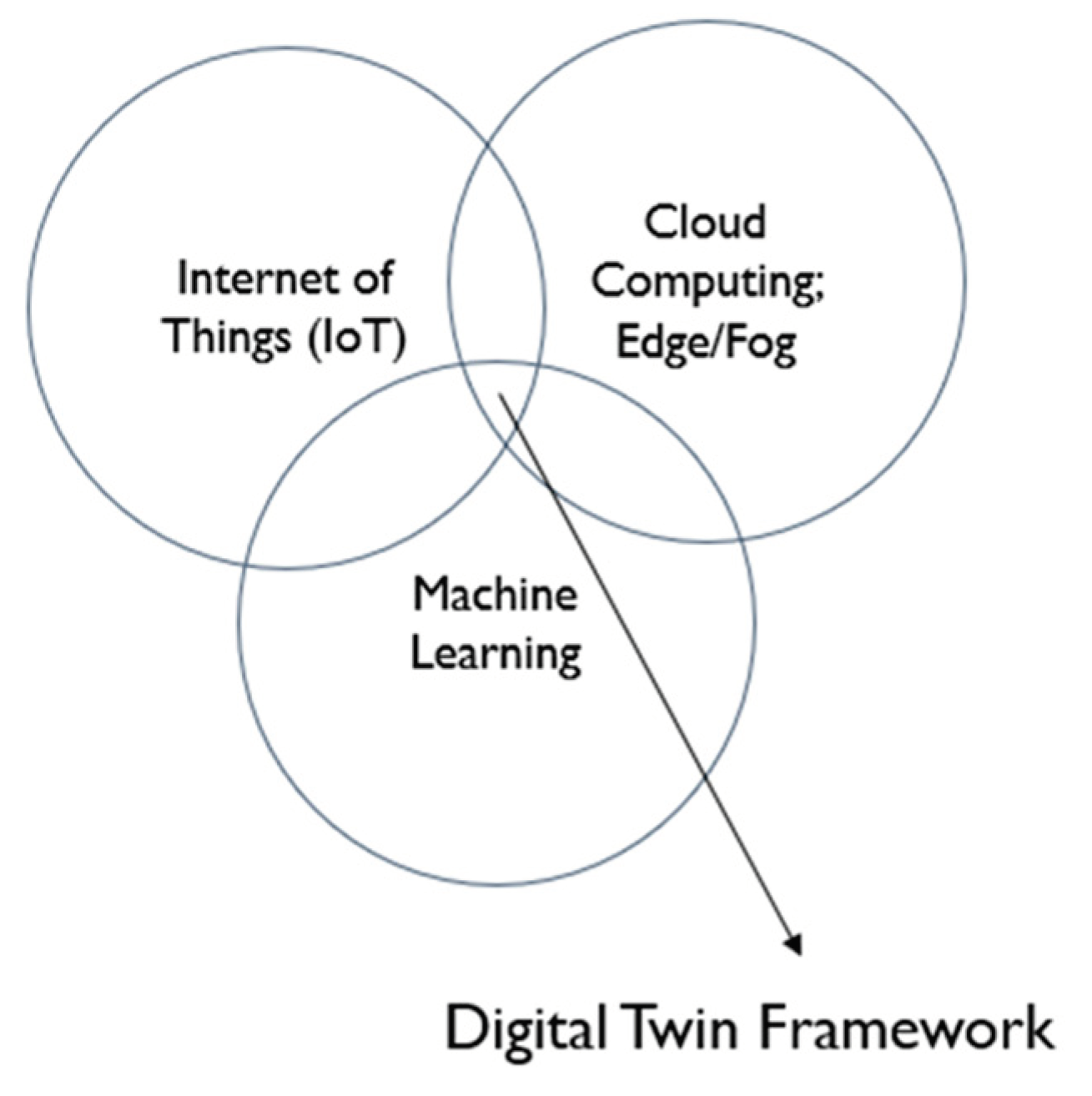

2.4. Research Gap

The literature review showed that a comprehensive Digital Twin for predictive maintenance in IIoT requires the key technologies shown in Figure 4, as well as key metrics to perform effectively. The relevant metrics depend mostly on the application. However, our proposed solution aims to cover the following metrics and to derive a framework from the key technologies needed for the predictive DT solution based on ISO 23247 [8].

Performance Metrics:

This framework also seeks to showcase the benefits of the proposed solution architecture in the scenario of handling multiple components, in our use-case, multiple wind turbines in a wind farm. Each wind turbine has a collection of components that are key to its performance and whose health will be monitored in real time.

Another contribution of this framework is its validation under a scaling constraint (multiple wind turbines), which has not been explored in the literature.

3. Theoretical Framework

The theoretical framework of the proposed predictive maintenance DT builds on the concept of distributed DT discussed above for enhanced asset management using the edge-fog-cloud deployment strategy. It is validated with the experiments and results presented in Sections 5 and 6.

3.1. Hypothesis

The proposed digital twin framework, which integrates cloud computing, fog, and edge computing, as well as relevant middleware and IIoT protocols, will demonstrate superior performance in terms of computational cost, response time, and accuracy compared to traditional centralized cloud-based DT architectures.

This hypothesis assumes that the proposed framework will outperform traditional centralized cloud-based DT architectures, which have certain limitations in terms of computational cost and response time due to their reliance on a centralized infrastructure. The hypothesis also assumes that the proposed framework will provide accurate predictions for the maintenance needs of industrial components by leveraging IIoT protocols and edge computing capabilities with the constraint of handling multiple assets in real-time.

3.2. Architectural Framework

3.2.1. Layer 1: Edge Devices Layer

The first layer is the physical asset layer, which includes the industrial asset, sensors, and data acquisition devices. The sensors collect data from the physical asset and send it to the second layer.

Data Acquisition Sub-Layer: This layer is responsible for collecting and pre-processing real-time data from sensors and IoT devices.

Sensors and Actuators Sub-Layer: Along with sensors that collect data, the actuators that will receive control commands from the upper layers are also in this layer.
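A minimal sketch of the edge pre-processing step follows: it discards missing, NaN, and physically implausible readings, then averages the remaining samples over fixed-size, non-overlapping windows to reduce the volume sent to the fog layer. The valid range and window size are hypothetical parameters, not values from this work, and averaging by sample count assumes a roughly constant sampling rate.

```python
import math
from typing import List, Optional, Sequence, Tuple


def clean(samples: Sequence[Optional[float]], valid_range: Tuple[float, float]) -> List[float]:
    """Drop missing, NaN, and out-of-range readings."""
    lo, hi = valid_range
    return [s for s in samples
            if s is not None and not math.isnan(s) and lo <= s <= hi]


def window_means(samples: Sequence[float], window: int) -> List[float]:
    """Mean of each full, non-overlapping window; a trailing partial window is dropped."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [sum(samples[i:i + window]) / window
            for i in range(0, len(samples) - window + 1, window)]


if __name__ == "__main__":
    raw = [60.1, None, 61.0, float("nan"), 999.0, 60.5, 60.4]  # hypothetical bearing temps (degC)
    valid = clean(raw, valid_range=(-40.0, 150.0))
    print(valid)                   # [60.1, 61.0, 60.5, 60.4]
    print(window_means(valid, 2))  # approximately [60.55, 60.45]
```

Doing this on the edge device keeps obviously bad readings out of the fog-layer store and reduces network traffic, at the cost of losing per-sample detail that some models may need.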

3.2.2. Layer 2: Fog Computing Layer

The second layer is the data processing layer, which includes the fog computing nodes. This layer processes the data collected from the sensors, stores and processes large volumes of data, and generates insights into the condition of the physical asset. The fog computing nodes process data at the edge of the network, close to the physical asset, in real time, providing fast responses to the physical asset.

Data Storage Layer: This layer is responsible for storing the pre-processed data in a distributed data store such as the Hadoop Distributed File System (HDFS), Cassandra, MongoDB, or InfluxDB, as they all support distributed processing. We selected InfluxDB because it handles real-time data seamlessly.

Data Processing Layer: This layer is responsible for processing the pre-processed data to generate insights that can be used to train the predictive maintenance model. This layer can be implemented using technologies such as Apache Spark, Flink, or Hadoop MapReduce.

Feedback Loop Layer: This layer is responsible for capturing feedback from the DT to the physical twin and using it to improve the predictive maintenance model. This layer can be implemented using technologies such as Apache NiFi or StreamSets.
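Since InfluxDB is the selected store, the sketch below shows how a single sensor reading could be serialized into InfluxDB line protocol (`measurement,tag_set field_set timestamp`), the text format that clients and Telegraf write to InfluxDB. It follows the documented escaping rules for measurement names, tag keys and values, and field keys, the `i` suffix for integers, and quoted string fields. The measurement, tag, and field names are hypothetical, since the paper does not publish its schema; in practice Telegraf or an official client library would normally do this serialization.

```python
import math
from typing import Dict, Optional, Union

FieldValue = Union[bool, int, float, str]


def _escape(text: str, special: str) -> str:
    """Backslash-escape each character in `special` (commas, spaces, equals signs)."""
    for ch in special:
        text = text.replace(ch, "\\" + ch)
    return text


def _field_value(value: FieldValue) -> str:
    if isinstance(value, bool):  # check bool before int: bool is an int subclass
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # integer fields carry an 'i' suffix
    if isinstance(value, float):
        if not math.isfinite(value):
            raise ValueError("NaN/inf cannot be written as a field value")
        return repr(value)
    if isinstance(value, str):
        return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'
    raise TypeError(f"unsupported field type: {type(value).__name__}")


def to_line_protocol(measurement: str, tags: Dict[str, str],
                     fields: Dict[str, FieldValue],
                     timestamp_ns: Optional[int] = None) -> str:
    """Serialize one point; tags are sorted by key, as InfluxDB recommends."""
    if not fields:
        raise ValueError("a point needs at least one field")
    line = _escape(measurement, ", ")
    for key in sorted(tags):
        line += f",{_escape(key, ',= ')}={_escape(str(tags[key]), ',= ')}"
    line += " " + ",".join(f"{_escape(k, ',= ')}={_field_value(v)}"
                           for k, v in fields.items())
    if timestamp_ns is not None:
        line += f" {timestamp_ns}"  # nanosecond precision by default
    return line


if __name__ == "__main__":
    print(to_line_protocol(
        "gearbox",  # hypothetical measurement
        {"turbine": "WT01", "farm": "site A"},
        {"oil_temp": 72.5, "rpm": 1500, "alarm": False},
        1700000000000000000,
    ))
    # gearbox,farm=site\ A,turbine=WT01 oil_temp=72.5,rpm=1500i,alarm=false 1700000000000000000
```

Putting turbine and farm identifiers in tags (indexed) and sensor values in fields (not indexed) is the usual InfluxDB schema choice for per-asset time-series queries.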

3.2.3. Layer 3: Cloud Computing Layer

The third layer is the application layer, which includes the digital twin and the predictive maintenance algorithms. The digital twin is a virtual replica of the physical asset, which is used to simulate its behavior and predict its performance. The predictive maintenance algorithms use the data collected from the physical asset and the digital twin to predict when maintenance is required and to optimize the maintenance schedule.

Machine Learning Layer: This layer is responsible for training the predictive maintenance model using the insights generated by the data processing layer. This layer can be implemented using technologies such as Scikit-learn, TensorFlow and PyTorch. Depending on the ML model implemented, these ML packages were used for the predictive maintenance algorithms.

Model Deployment Layer: This layer is responsible for deploying the trained model in a distributed environment to make real-time predictions. It can be implemented using technologies such as Kubernetes, Docker Swarm, or Apache Mesos. To support our architectural framework and experimental platform, we used Docker at the fog and cloud layers to deploy the ML models.
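One common data-driven pattern that fits this split is normal-behaviour modelling: a regression model is trained in the cloud on historical data from healthy operation, its parameters are shipped to the fog containers, and live residuals (observed minus predicted) are monitored. The sketch below uses a single-predictor ordinary least squares fit in plain Python as a deliberately simple stand-in for the Scikit-learn, TensorFlow, or PyTorch models mentioned above; using active power to predict gearbox temperature is a hypothetical example, not the model used in this work.

```python
from statistics import mean
from typing import Sequence, Tuple

Model = Tuple[float, float]  # (intercept, slope)


def fit_line(x: Sequence[float], y: Sequence[float]) -> Model:
    """Cloud side: ordinary least squares fit of y = a + b * x on healthy history."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired samples")
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("predictor has zero variance")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def residual(model: Model, x: float, y_observed: float) -> float:
    """Fog side: observed minus expected; persistently large residuals suggest degradation."""
    intercept, slope = model
    return y_observed - (intercept + slope * x)


if __name__ == "__main__":
    power_mw = [0.5, 1.0, 1.5, 2.0]  # hypothetical healthy-period history
    gearbox_temp_c = [45.0, 50.0, 55.0, 60.0]
    model = fit_line(power_mw, gearbox_temp_c)
    print(model)                       # (40.0, 10.0)
    print(residual(model, 1.0, 58.0))  # 8.0
```

Because the deployed artifact here is just two numbers, retraining in the cloud and pushing updated parameters to fog containers is cheap; heavier models follow the same split but ship a serialized model instead.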

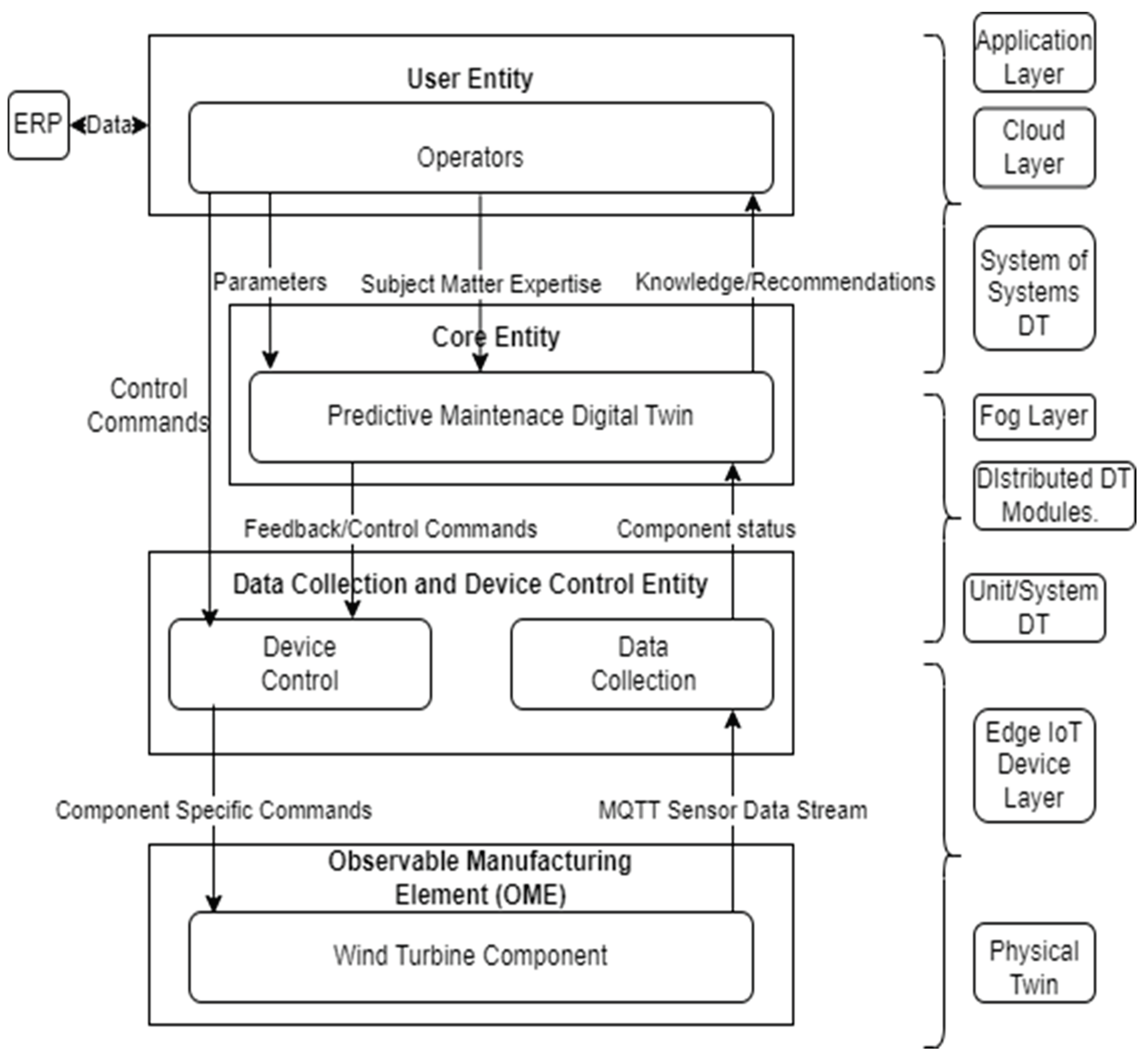

3.3. Framework Standardization

ISO 23247 is a four-part standard that outlines a framework for digital twins in manufacturing. This work explored the relevance of using ISO 23247 [8] to standardize the framework. In a sequence of steps, all components and layers of the architectural framework were implemented using ISO 23247 as a guide, adopting and extending similar approaches from [6] [18] [28]. ISO 23247-2 presents the reference architecture in four domains, which decompose and link to all other parts of the ISO 23247 framework.

Observable Manufacturing Element (OME) domain: Context for the physical twin (each wind turbine component) which is the basis of the DT. This interacts with DT interfaces for data collection and device control – feedback mechanism.

Data Collection and Device Control Domain: This connects the physical twin (OME) to its unit DT through sensor data collection, synchronization, and actuating feedback to regulate operational conditions with decisions from the DT.

Core Domain: This domain handles all DT services from analytics and simulations to feedback and user interaction.

User Domain: This is the application layer through which users access the DT and see results through visualizations and other functionalities.

Figure 5 describes a mapping of the ISO 23247 reference architecture [8] [28] to the presented case study of a distributed predictive maintenance DT for wind turbines, and how it links to the layers of the framework. This excerpt, drawn from the more detailed functional view of the ISO 23247 reference model for manufacturing, guides the framework proposed in this study.

The procedure adopted in the overall utilization of the standards outlined in the ISO 23247 can be described as a bottom-up approach as follows:

Selection of Standards for sensor interfaces, data collection and processing

Selection of interfaces for device control and handling of feedback from DT to PT.

Selection of Communication protocols and middleware

Selection of technology stack for representation of DTs such as JSON, DTDL and other software implementation frameworks.

Selection of deployment platforms based on specific data and processing requirements.

Selection of a functional services platform for visualization and interaction with users via ERP, CAD, CAM, or other systems.

4. Methodology

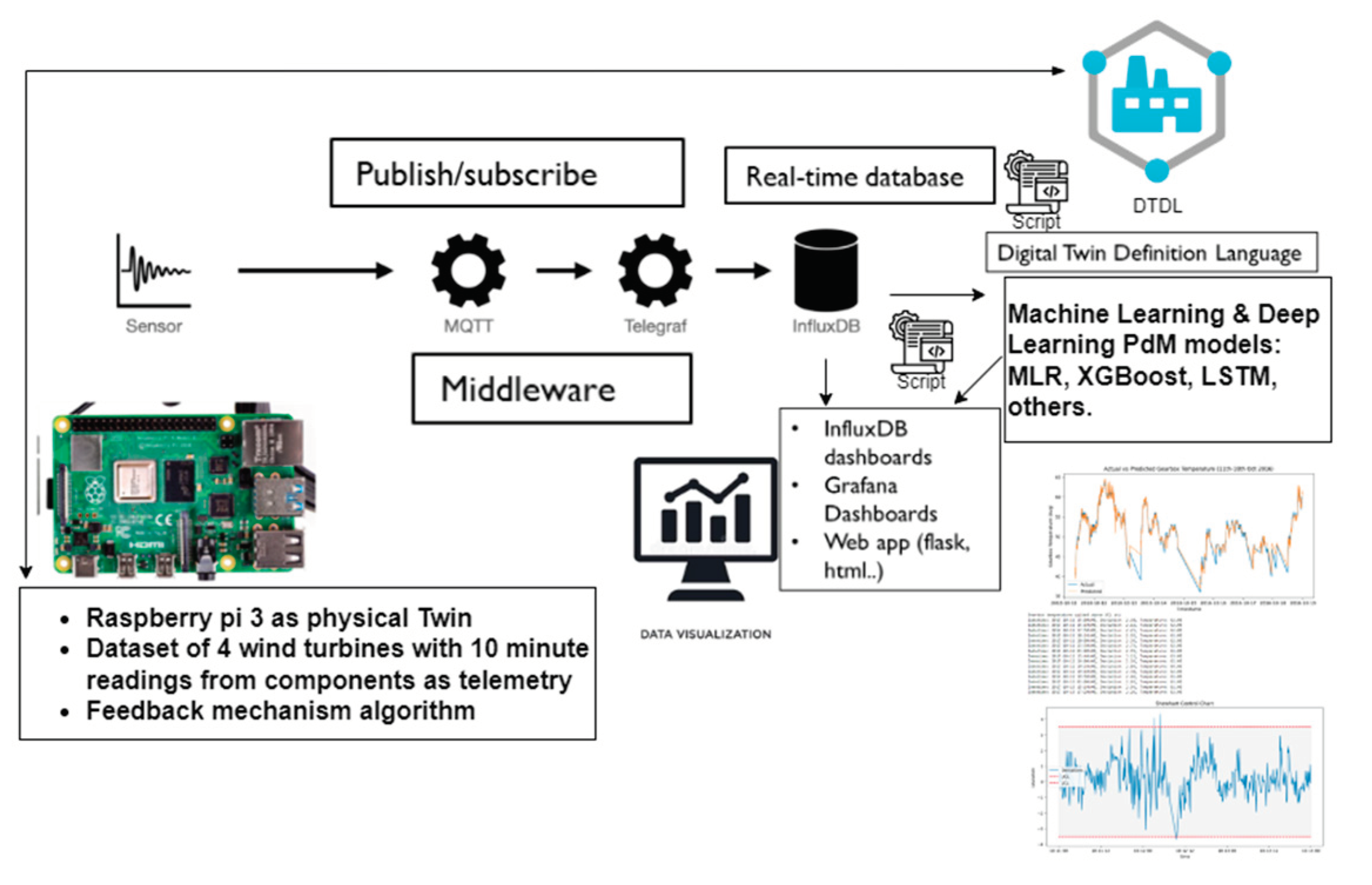

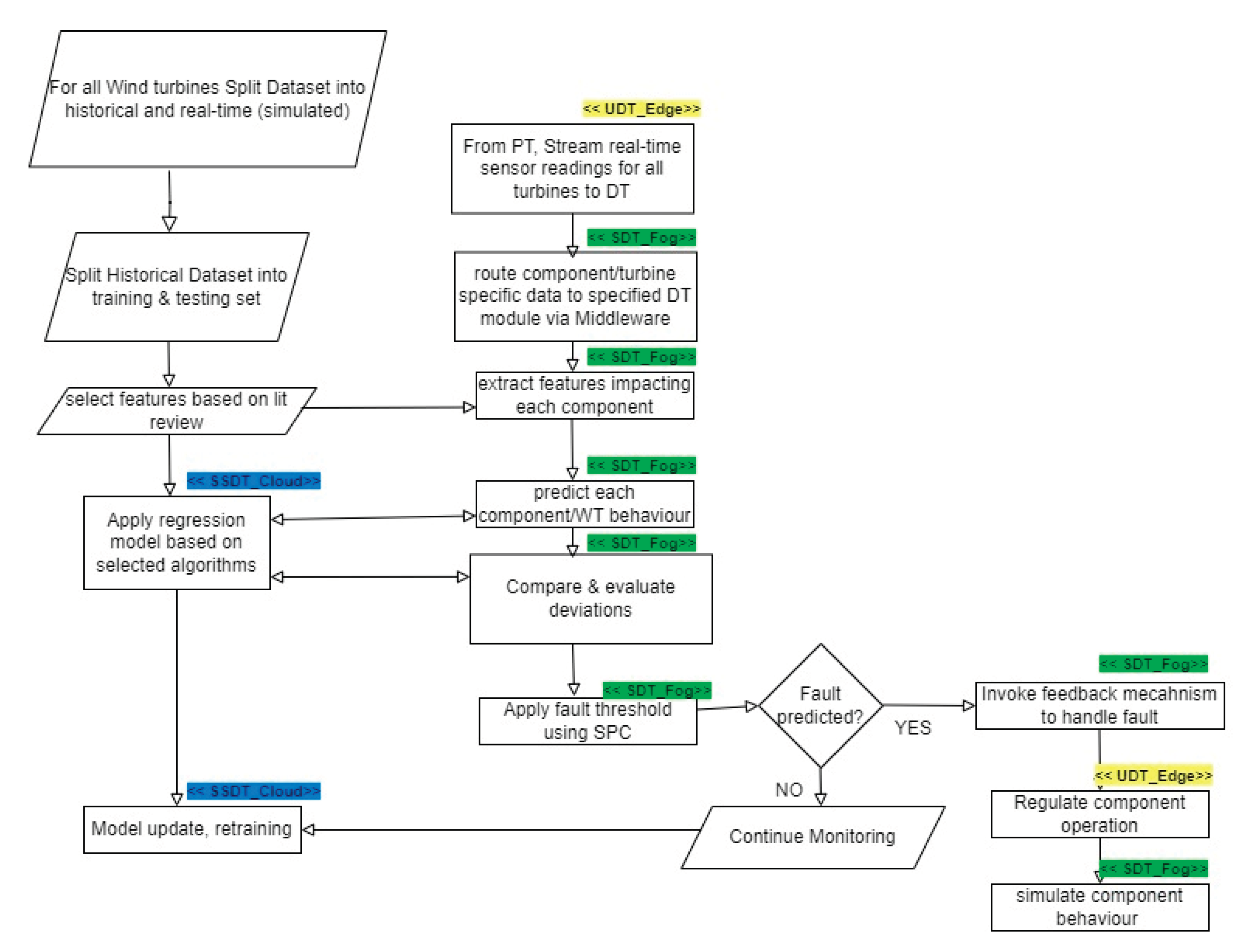

This section breaks down the methodology used to achieve the framework. In summary, Figures 5 and 6 give an overview of the methodology used to implement the DT solution. In Figure 6, “SS_Cloud” (highlighted in blue) denotes operations performed in the cloud layer by the System of Systems DT module, “S_Fog” (highlighted in green) denotes operations performed in the fog layer by the System DT modules, and “U_Edge” (highlighted in yellow) denotes operations performed at the edge/IoT device layer by the Unit DT module.

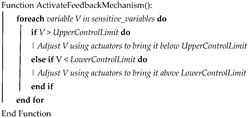

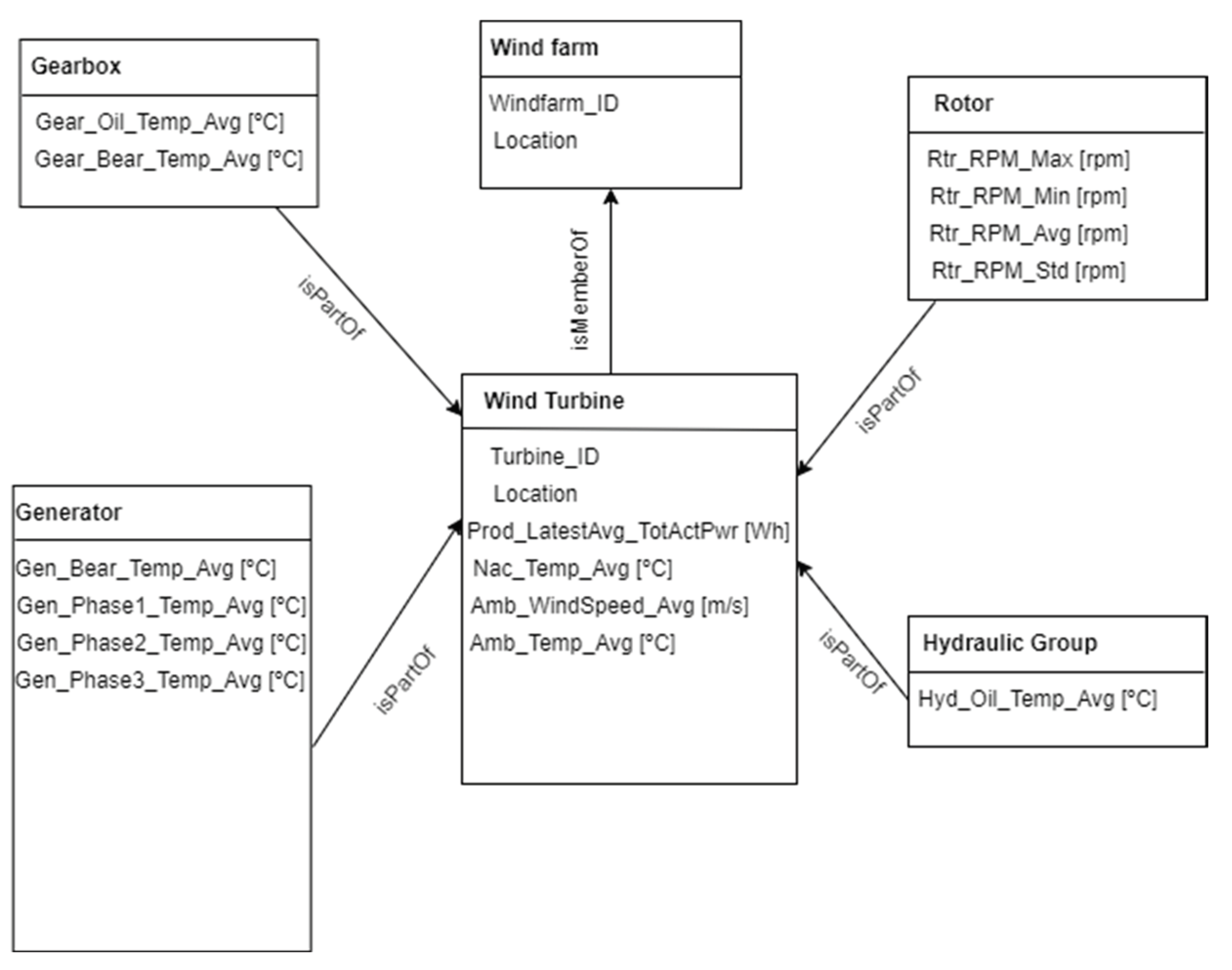

4.1. System Architecture

The proposed architecture uses a distributed paradigm with edge/fog nodes implemented on Raspberry Pi devices. The Raspberry Pi is connected via Wi-Fi to a “Cloud” PC.

- I.

Components: The components were selected based on a review by NREL showing the most failure prone components of a wind turbine. This was used to select two of the components for this work. These are the Generator as system DT and its sub-components as unit DTs and Gearbox as system DT and its sub-components as unit DTs.

- II.

Software Architecture: The Digital Twin was developed using the Digital Twin Definition Language (DTDL) and C# Object Oriented Programming concepts to replicate the relationship between entities, and the operations of the DT such as the Predictive Maintenance model, alerts, and fault classification algorithms for feedback operations.

Figure 7 describes the modelling approach [38].
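To illustrate how the System/Unit hierarchy above might be expressed in DTDL, the sketch below builds DTDL v2 interface documents as Python dictionaries and serializes them with the standard `json` module. It is a hypothetical example, not the authors' models (which were written with DTDL and C#): the DTMI identifiers under `dtmi:example:windturbine`, the telemetry names, and the `hasBearing`/`hasOil` relationships are all assumptions for illustration.

```python
import json
from typing import List, Tuple

CONTEXT = "dtmi:dtdl:context;2"  # DTDL v2 context identifier


def unit_interface(dtmi: str, name: str, telemetry: List[str]) -> dict:
    """Hypothetical Unit DT: one double-valued Telemetry entry per sensor."""
    return {
        "@context": CONTEXT,
        "@id": dtmi,
        "@type": "Interface",
        "displayName": name,
        "contents": [{"@type": "Telemetry", "name": t, "schema": "double"}
                     for t in telemetry],
    }


def system_interface(dtmi: str, name: str,
                     units: List[Tuple[str, str]]) -> dict:
    """Hypothetical System DT: one Relationship per (name, target Unit DTMI)."""
    return {
        "@context": CONTEXT,
        "@id": dtmi,
        "@type": "Interface",
        "displayName": name,
        "contents": [{"@type": "Relationship", "name": rel, "target": target}
                     for rel, target in units],
    }


# Hypothetical gearbox System DT linked to two Unit DTs.
bearing = unit_interface("dtmi:example:windturbine:GearBearing;1",
                         "Gear Bearing", ["bearingTemperature"])
oil = unit_interface("dtmi:example:windturbine:GearOil;1",
                     "Gear Oil", ["oilTemperature"])
gearbox = system_interface("dtmi:example:windturbine:Gearbox;1", "Gearbox",
                           [("hasBearing", bearing["@id"]), ("hasOil", oil["@id"])])
print(json.dumps(gearbox, indent=2))
```

Keeping each Unit DT as its own interface mirrors the modular split between unit and system DTs: a new sub-component can be modelled and linked without editing the unit interfaces that already exist.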

4.2. Metrics

These are the key metrics evaluated to demonstrate the performance and benefits of the system. It should be emphasized that the proposed framework aims to provide a solution that enhances the deployment of digital twins in IIoT, thereby improving the efficiency of asset management. The key metrics recorded in the experiments are:

- I. Accuracy of the model in supporting PHM with respect to real-time turbine operating scenarios.

- II. Prediction feedback loop (DT to PT): how the computational platform, whether edge or cloud, supports the overall aim of the framework, namely data collection, pre-processing, and prediction feedback.

- III. Computational latency: the time taken for a model run and its feedback.
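Computational latency as defined above can be measured by timing one model run plus the feedback step with a monotonic clock. The sketch below uses Python's `time.perf_counter`; `predict` and `send_feedback` are hypothetical placeholders for whatever model call and DT-to-PT feedback action a given layer executes, and the 95th-percentile index is a simple approximation.

```python
import time
from typing import Callable, Dict, Sequence


def measure_latency(predict: Callable[[Sequence[float]], float],
                    send_feedback: Callable[[float], None],
                    sample: Sequence[float],
                    repeats: int = 100) -> Dict[str, float]:
    """Time `repeats` predict+feedback cycles and summarize them in milliseconds."""
    latencies_ms = []
    for _ in range(repeats):
        start = time.perf_counter()
        prediction = predict(sample)   # model run
        send_feedback(prediction)      # DT-to-PT feedback step
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()
    return {
        "mean_ms": sum(latencies_ms) / len(latencies_ms),
        "p95_ms": latencies_ms[int(0.95 * (len(latencies_ms) - 1))],  # approximate
        "max_ms": latencies_ms[-1],
    }


# Trivial linear "model" and a no-op feedback sink, both hypothetical.
stats = measure_latency(lambda x: 0.5 * x[0] + 0.2 * x[1], lambda y: None, [60.0, 14.0])
print(stats)
```

Running the same harness on the edge, fog, and cloud machines gives directly comparable numbers, since only the hardware changes between runs.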

For the above metrics, the implemented predictive maintenance algorithms were monitored to evaluate their performance in the DT environment. The insights gained aim to answer the question: “How can Digital Twins best be developed to achieve higher efficiency?”

4.3. Software

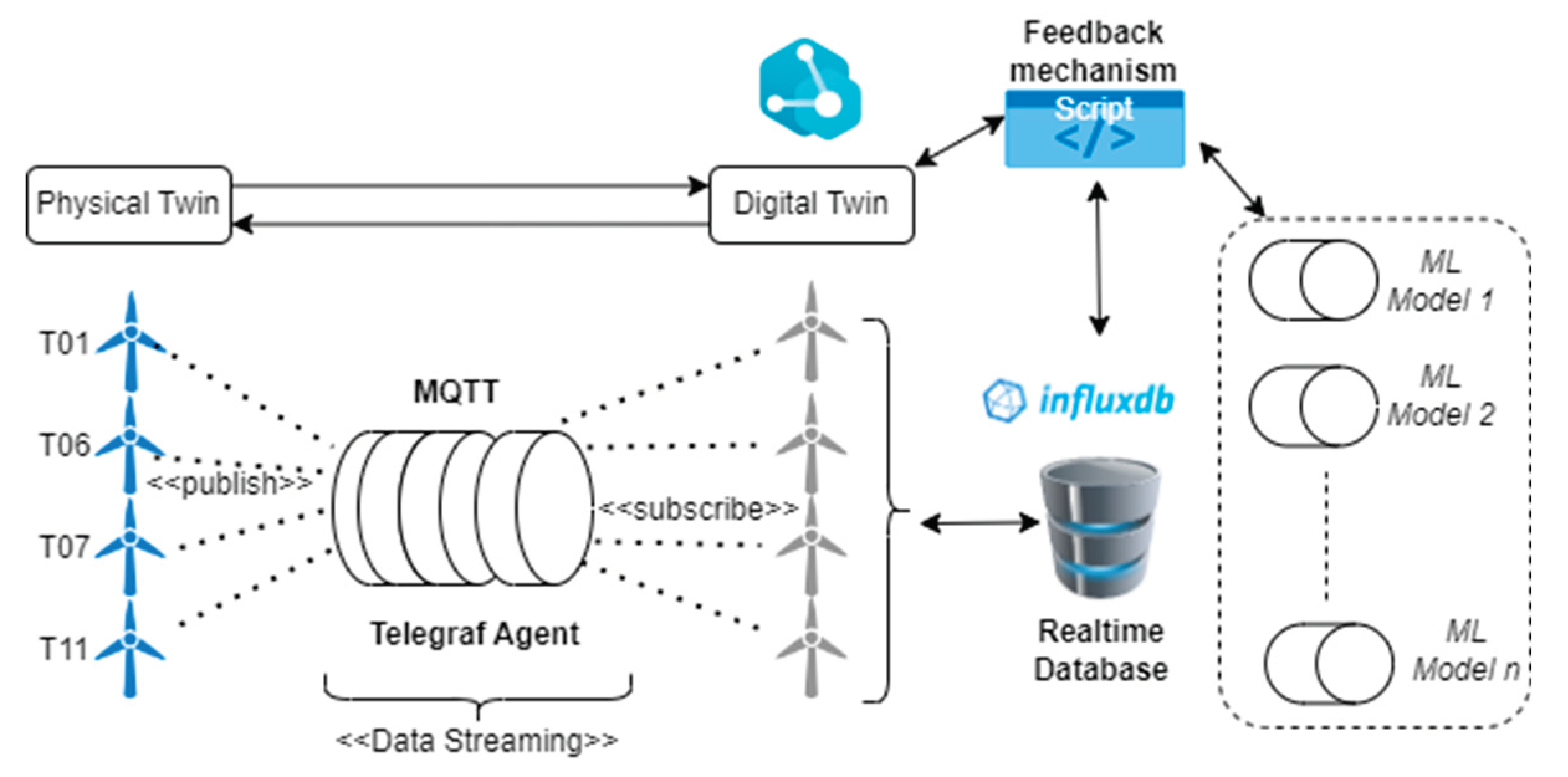

The relevant predictive maintenance models identified in the literature were implemented and used as a benchmark, and further algorithms are being developed. The DT software uses DTDL, with communication interfaces that serve as middleware; these were implemented using TCP/IP, Telegraf, and the Mosquitto MQTT broker. The script for the feedback mechanism is implemented in Python. The software simulation of the WT components is based on the acquisition of sensor data, publish/subscribe messaging over MQTT, and data queries from the real-time database, InfluxDB. This follows the model described in Figure 8.

4.3.1. Machine Learning Algorithms

Some of the machine learning algorithms identified in the literature for the data-driven predictive maintenance approach, which have shown good results [13], were implemented. For all algorithms, the input and output variables were adopted from the literature [13], as described in Table 2.

The algorithms are described below.

Multiple Linear Regression (MLR): This algorithm, implemented with scikit-learn, models the relationship between the inputs and the output by fitting a linear equation.

Long Short-Term Memory (LSTM): This is a type of Recurrent Neural Network (RNN) that uses the order of sequential data to learn long-term dependencies, which makes it suitable for IoT time-series prediction.

XGBoost: This algorithm performs gradient boosting with decision trees, combining weak learners into a stronger learner. It is widely regarded as one of the strongest algorithms for time-series prediction, which makes it suitable for IoT data.
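As a compact illustration of MLR, the sketch below fits a linear equation with an intercept by ordinary least squares using NumPy's `numpy.linalg.lstsq`. The authors used scikit-learn, whose `LinearRegression().fit(X, y)` solves the same least-squares problem; NumPy is used here only to keep the example minimal. The synthetic, noise-free data exist purely to check that the fit recovers known coefficients.

```python
import numpy as np


def fit_mlr(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Return [b0, b1, ..., bn] minimizing ||y - (b0 + X @ b)||^2."""
    design = np.column_stack([np.ones(len(X)), X])  # prepend an intercept column
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def predict_mlr(coef: np.ndarray, X: np.ndarray) -> np.ndarray:
    return coef[0] + X @ coef[1:]


# Synthetic check: y = 5 + 2*x1 - 0.5*x2 with no noise.
rng = np.random.default_rng(0)
X = rng.uniform(0, 100, size=(200, 2))
y = 5 + 2 * X[:, 0] - 0.5 * X[:, 1]
coef = fit_mlr(X, y)
print(np.round(coef, 6))  # approximately [5, 2, -0.5]
```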

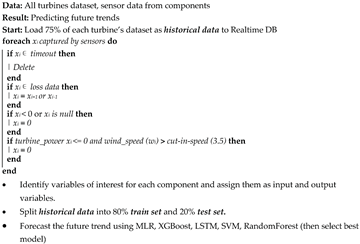

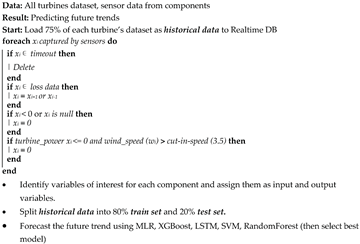

The model pre-processing and processing steps, which use the Digital Twin data, are outlined in Algorithm 1.

Algorithm 1.

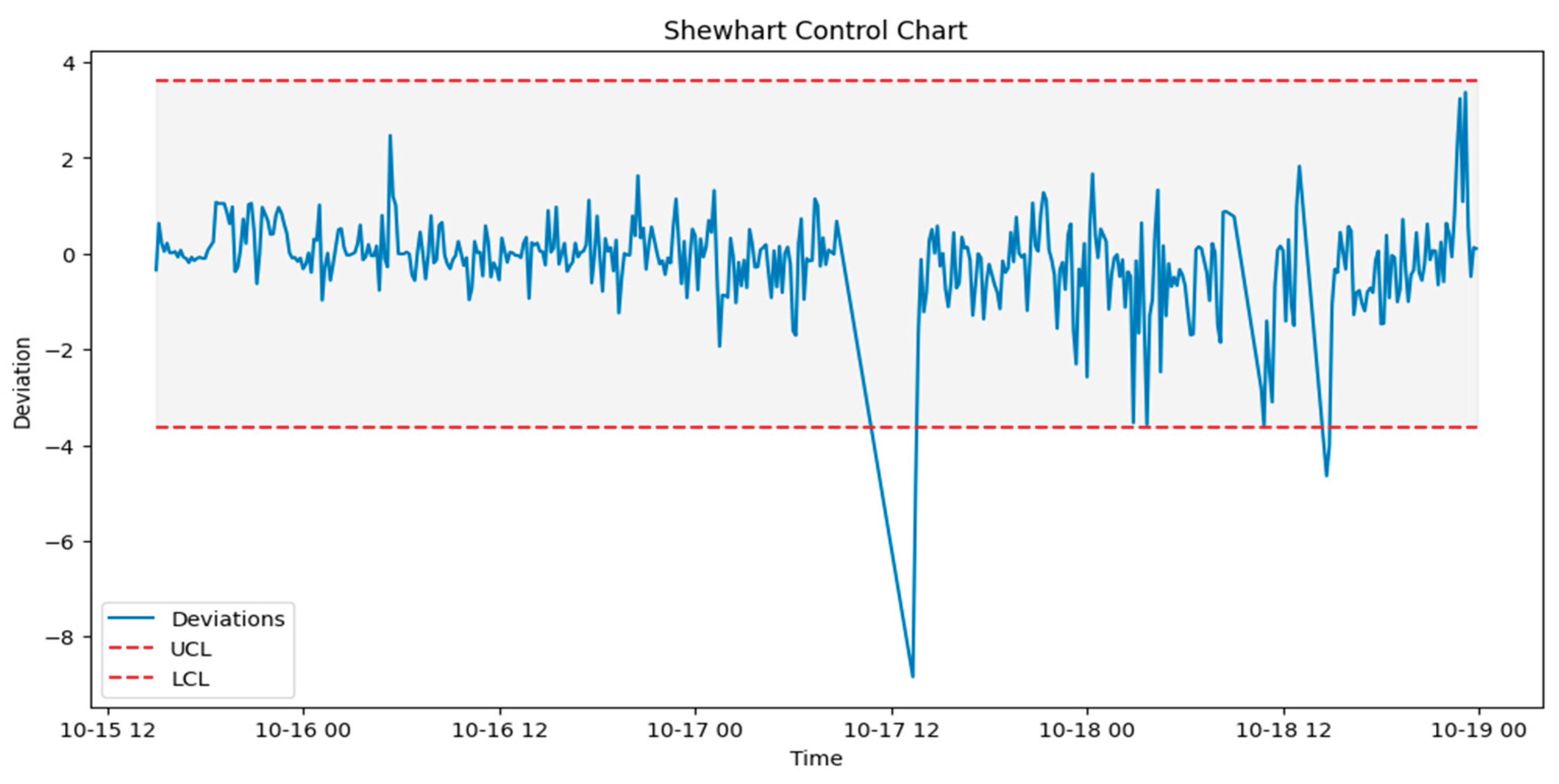
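Algorithm 1 is only available as an image, so the following is a hypothetical sketch of a typical cleaning step of the kind described in Section 6.1 (removing missing values and outliers), not a transcription of the authors' algorithm. It drops records with missing or NaN values and then removes outliers with the interquartile-range (IQR) rule; the IQR rule and its conventional 1.5 × IQR fence are assumptions.

```python
import math
import statistics
from typing import Dict, List, Tuple

Record = Dict[str, float]


def iqr_bounds(values: List[float], k: float = 1.5) -> Tuple[float, float]:
    """Lower/upper fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def clean(records: List[Record], columns: List[str], k: float = 1.5) -> List[Record]:
    """Drop records with missing/NaN values, then drop IQR outliers in any column."""
    complete = [r for r in records
                if all(c in r and r[c] is not None and not math.isnan(r[c]) for c in columns)]
    bounds = {c: iqr_bounds([r[c] for r in complete], k) for c in columns}
    return [r for r in complete
            if all(bounds[c][0] <= r[c] <= bounds[c][1] for c in columns)]


# Illustrative data: eight plausible readings, one spike and one missing value.
data = [{"gear_temp": t, "rpm": 14.0} for t in [60, 61, 62, 61, 60, 63, 62, 61]]
data.append({"gear_temp": 250.0, "rpm": 14.0})           # implausible spike
data.append({"gear_temp": float("nan"), "rpm": 14.0})    # missing reading
cleaned = clean(data, ["gear_temp", "rpm"])
print(len(cleaned))
```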

Post-processing uses Statistical Process Control (SPC) to detect deviations that indicate an anomaly in normal wind turbine operation. This is described in Algorithm 2.

Algorithm 2.

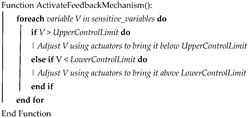
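Algorithm 2 is likewise only shown as an image. A common way to implement SPC-based anomaly detection, assumed here, is a Shewhart individuals chart on the prediction residuals: the centre line and control limits (mean ± 3 standard deviations) are estimated from a period of normal operation, and later points outside the limits raise alerts. The ±3σ width, the use of residuals (actual minus predicted), and all numbers below are illustrative assumptions.

```python
import statistics
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class ControlLimits:
    centre: float
    lcl: float  # lower control limit
    ucl: float  # upper control limit


def fit_limits(baseline: Sequence[float], width: float = 3.0) -> ControlLimits:
    """Estimate centre line and +/- width*sigma limits from normal-operation data."""
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)
    return ControlLimits(mu, mu - width * sigma, mu + width * sigma)


def alerts(actual: Sequence[float], predicted: Sequence[float],
           limits: ControlLimits) -> List[int]:
    """Indices where the residual (actual - predicted) falls outside the limits."""
    return [i for i, (a, p) in enumerate(zip(actual, predicted))
            if not limits.lcl <= a - p <= limits.ucl]


baseline_residuals = [0.2, -0.1, 0.0, 0.3, -0.2, 0.1, -0.3, 0.0]
limits = fit_limits(baseline_residuals)           # mean 0, sigma 0.2, limits +/-0.6
actual = [61.0, 61.2, 62.0, 66.5, 67.0]
predicted = [61.1, 61.0, 61.9, 62.0, 62.1]
print(alerts(actual, predicted, limits))
```

Component-specific behavior, such as the higher limits used for the generator, fits this scheme naturally by fitting a separate `ControlLimits` per component.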

4.3.2. Prediction Feedback

The prediction feedback script coordinates the response once a failure is predicted. The relevant sub-components are regulated through simulations to counter the effect of their current behavior on the health of the component monitored by the Digital Twin. These components are identified by the sensitivity analysis described in Section 4.3.1. The procedure is described in Algorithm 3.

Algorithm 3.

4.4. Data Platform

The datasets serve as the data sources for the components. InfluxDB is used as the real-time database that streams the data, with one bucket for each of the four turbines. In one configuration, these buckets hold 75% of the data (18 months of 10-minute readings), and the remaining 25% is used for real-time streaming and prediction by the DT. This split was reconfigured across experiment instances to model and understand the system's behavior. Because of time constraints, each 10-minute reading is streamed once per second to speed up the analysis.
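The replay arithmetic implied by this configuration can be checked quickly. Assuming 365-day years and two years of data per turbine (2016 and 2017), so that 75% corresponds to the 18 months mentioned above, the remaining 25% (about six months) contains 26,280 ten-minute readings, and replaying one reading per second compresses that period by a factor of 600 into about 7.3 hours. The 365-day year is a simplifying assumption.

```python
READING_INTERVAL_S = 10 * 60   # one SCADA reading every 10 minutes
REPLAY_INTERVAL_S = 1          # each reading is streamed once per second
DAYS_PER_YEAR = 365            # simplifying assumption (ignores leap years)

readings_per_day = 24 * 60 * 60 // READING_INTERVAL_S      # 144
total_readings = 2 * DAYS_PER_YEAR * readings_per_day      # 2016 + 2017, one turbine
historical = int(0.75 * total_readings)                    # ~18 months held in the bucket
streamed = total_readings - historical                     # ~6 months replayed "live"
speedup = READING_INTERVAL_S // REPLAY_INTERVAL_S          # 600x faster than real time
replay_hours = streamed * REPLAY_INTERVAL_S / 3600

print(readings_per_day, total_readings, historical, streamed, speedup, round(replay_hours, 2))
```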

4.5. Connectivity Middleware

The experimental setup uses Wi-Fi as the network connection between the Raspberry Pi devices at the edge and the fog/cloud PCs, with MQTT and Telegraf as the connectivity middleware.
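With MQTT as middleware, the fog node can subscribe to many edge publishers at once through topic-filter wildcards: `+` matches exactly one topic level, and `#` matches the remaining levels, including the parent level itself. The sketch below implements a simplified version of that matching rule, assuming a hypothetical `windfarm/<turbine>/<component>` topic layout. Brokers such as Mosquitto perform this matching themselves; the function only shows what a fog-node subscription such as `windfarm/+/gearbox` would receive.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Simplified MQTT topic-filter matching ('+' = one level, '#' = all remaining)."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Wildcards in the first level do not match topics starting with '$' (e.g. $SYS).
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # '#' is last: matches this level and everything below
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


print(topic_matches("windfarm/+/gearbox", "windfarm/T06/gearbox"))
```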

4.6. Functional Requirement

This is the final phase of the experimental setup; it provides an interface to the Digital Twin that presents its performance through a simple application such as a web platform. The functional services related to the User Entity of ISO 23247 are beyond the scope of this work. The Digital Twin proposed here also serves as the Core Entity of the ISO 23247 reference architecture, handling the management and monitoring of the predictive maintenance DT. How the DT handles the machine learning model and the simulations is summarized in Algorithm 4.

Algorithm 4.

5. Experiment Set Up

The experiment was carried out using the hardware and software described below, together with a dataset from EDP [39]. The overall setup is described in Figure 9, which implements the solution architecture introduced in Figure 3 [17].

- I. Edge IoT Devices: This layer is equipped with Raspberry Pi 3 devices (64-bit @ 1.4 GHz, 1 GB SDRAM) that simulate “sensors”: they publish real-time sensor data from each wind turbine component via MQTT and receive feedback from the DTs in the upper layers.

- II. Fog Nodes Layer: This layer is equipped with a fog node server/PC (Lenovo IdeaCentre Mini PC, 1.5 GHz, 4 GB RAM, 128 GB SSD). It aggregates the components of each turbine using containerized microservices (Docker) that push the real-time sensor readings to InfluxDB buckets in the fog for short-term storage and to the cloud for longer-term storage. This layer also hosts the system DTs (one DT per turbine) and the script described in Algorithm 3, which regulates the components' behavior once faults are predicted. Both batch and real-time data collected from the sensors are processed in this layer, and all the activities described later in the feedback section relate to this layer.

- III. Cloud Layer: This layer has higher computational capacity, using a PC (Intel Core i5 CPU @ 3.30 GHz, 8 GB RAM, 64-bit OS). It hosts the Global DT, performs the training and testing of all the ML models for each component, and periodically retrains the models using the longer-term historical data in InfluxDB as more data and faults are identified over time.
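The division of storage between the fog (short-term) and cloud (long-term) layers can be sketched as a bounded in-memory window per turbine plus batches that are periodically handed off for long-term storage. The `FogAggregator` class below is a hypothetical illustration built on `collections.deque`; in the actual setup both stores are InfluxDB buckets written through Dockerized microservices, and the window and batch sizes here are arbitrary assumptions.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple

Reading = Tuple[int, float]  # (timestamp, value)


class FogAggregator:
    """Keep a short-term window per turbine and forward full batches upstream."""

    def __init__(self, window: int, batch_size: int,
                 forward: Callable[[str, List[Reading]], None]) -> None:
        self.short_term: Dict[str, Deque[Reading]] = defaultdict(lambda: deque(maxlen=window))
        self.pending: Dict[str, List[Reading]] = defaultdict(list)
        self.batch_size = batch_size
        self.forward = forward  # e.g. a writer to the cloud's long-term store

    def ingest(self, turbine: str, reading: Reading) -> None:
        self.short_term[turbine].append(reading)   # oldest readings drop off automatically
        self.pending[turbine].append(reading)
        if len(self.pending[turbine]) >= self.batch_size:
            self.forward(turbine, self.pending[turbine])
            self.pending[turbine] = []


sent: List[Tuple[str, int]] = []
agg = FogAggregator(window=3, batch_size=4, forward=lambda t, b: sent.append((t, len(b))))
for ts in range(10):
    agg.ingest("T06", (ts, 60.0 + ts))
print(list(agg.short_term["T06"]), sent)
```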

Figure 9.

Experiment Setup based on solution architecture.


A closer look at the overall workflow of the experiment is given in Figure 9.

Figure 10.

Functional Application of Experiment.


6. Result

The results of this work use earlier work in the literature as benchmarks [13] [12] [15]. The aim is to demonstrate the relevance of the distributed digital twin framework, and of applying standards, to improving asset management in manufacturing systems, specifically the predictive maintenance of wind turbines in a wind farm. This section first presents the failure logs from the dataset [39] used for the experiments, in Table 3. This is followed by an analysis of the performance of the implemented models in two scenarios: (1) a centralized cloud strategy and (2) the distributed DT framework as a strategy that enhances real-time feedback. Finally, the impact of the distributed framework on enhancing two-way feedback via the fog layer is presented.

6.1. Model Pre-Processing

For all the machine learning models outlined in Section 4.3, the first stage, after loading the historical data of each wind turbine from its InfluxDB bucket hosted on the cloud PC, is to pre-process the data by cleaning it and removing outliers according to Algorithm 1. The specific inputs and output needed for each component are then identified, as outlined in Table 2.

Once the data has been cleaned and made consistent with a realistic WT operating scenario, it is split into training and test sets: 70% for training and 30% for testing, for each of the years 2016 and 2017 separately.
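The text does not state whether the 70/30 split is random or time-ordered. For time-series sensor data, a chronological split is a common choice because it keeps future readings out of the training set; the sketch below assumes that, splitting each calendar year separately as described. The `(timestamp, value)` row layout is hypothetical.

```python
from datetime import datetime
from typing import Dict, List, Tuple

Row = Tuple[datetime, float]


def split_by_year(rows: List[Row], train_frac: float = 0.7) -> Dict[int, Tuple[List[Row], List[Row]]]:
    """Chronological train/test split performed separately for each calendar year."""
    by_year: Dict[int, List[Row]] = {}
    for row in rows:
        by_year.setdefault(row[0].year, []).append(row)
    splits = {}
    for year, year_rows in sorted(by_year.items()):
        year_rows.sort(key=lambda r: r[0])          # oldest readings first
        cut = round(len(year_rows) * train_frac)
        splits[year] = (year_rows[:cut], year_rows[cut:])
    return splits


rows = [(datetime(y, 1, 1, h), float(h)) for y in (2016, 2017) for h in range(10)]
splits = split_by_year(rows)
print({y: (len(tr), len(te)) for y, (tr, te) in splits.items()})
```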

6.2. Model Processing

The pre-processing stage was followed by the processing of each model for each component. In the processing stage, the parameters of each model are configured before training. For instance, the architecture of the LSTM model for the gearbox differs from that for the Generator. Accommodating each component and model according to its specific requirements is one of the functions of the DT. While all the implementations are similar, this feature is important because it lets the DT handle each component in more detail, with a dedicated model/DT for enhanced accuracy.

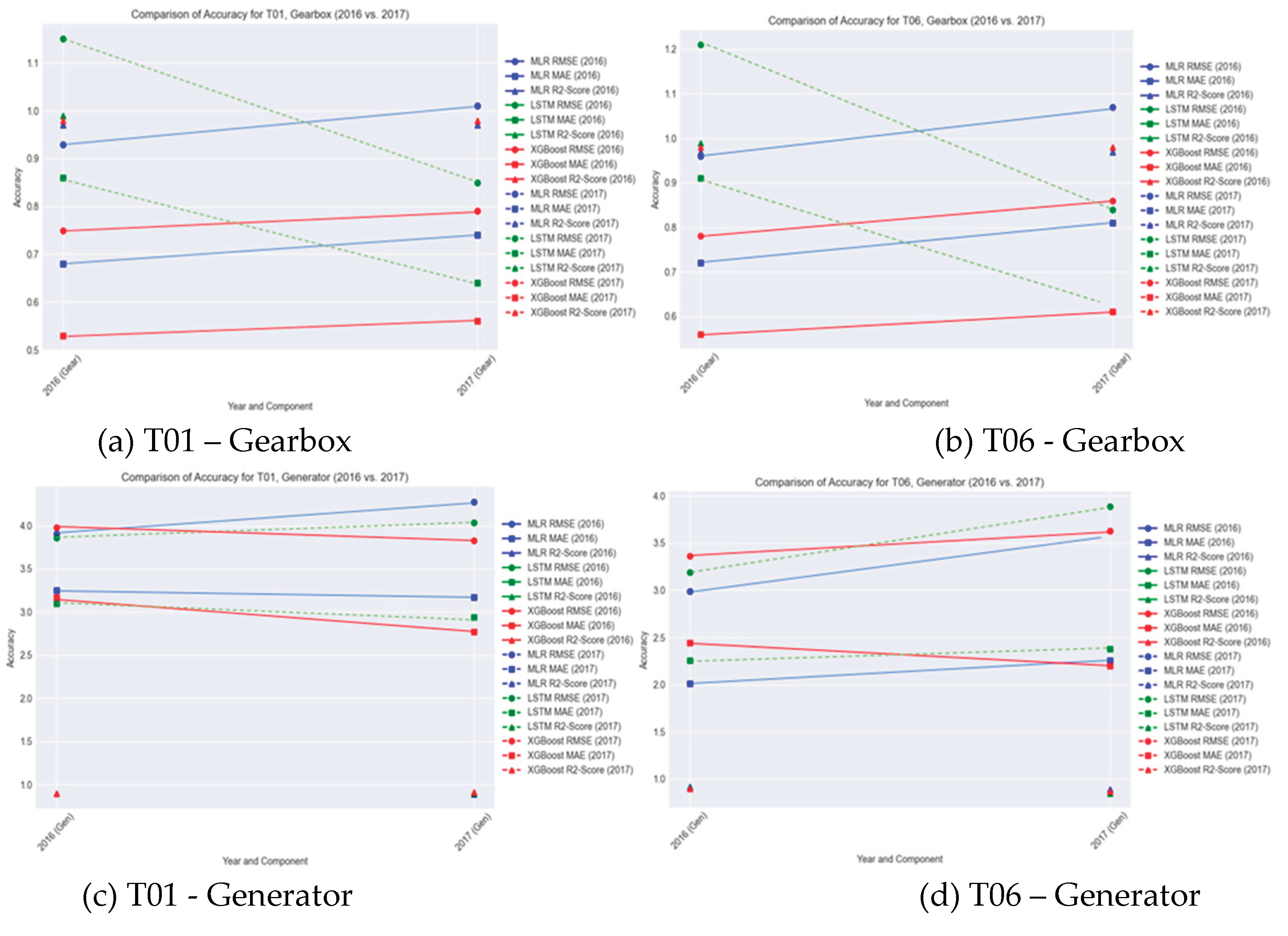

Table 4 summarizes the accuracy of the models for all components, categorized by turbine, year of operation, component, and algorithm.

6.2.1. Gearbox

Looking more closely at the initial accuracy metrics for the gearbox component, with training and testing performed for each year individually, the models for Turbines T01 and T06 (taken as examples) behaved similarly: RMSE and MAE increased from the 2016 to the 2017 data, and in both cases MLR and XGBoost outperformed LSTM. Interestingly, however, LSTM showed a decrease in RMSE and MAE between 2016 and 2017, performing significantly better in 2017. This highlights the relevance of LSTM as a deep learning approach that captures long-term dependencies, and suggests that seasonality, in terms of the four quarters/seasons of the year, needs to be considered in this case study. This is attributed to atmospheric and weather conditions that affect temperature, wind, and other environmental factors.

This finding suggests that the split between training and testing data need not follow a fixed ratio in all cases; the distributed DT, with short-term storage in the fog layer and long-term storage in the cloud layer, can accommodate this requirement.

6.2.2. Generator

For the Generator bearing component, the results show a different pattern. First, the RMSE and MAE, which indicate how close the predictions are to the actual Generator bearing temperature, are higher because the Generator bearing operates at a higher temperature than the Gearbox bearing; the DT also identified this readily. In terms of accuracy, LSTM and XGBoost outperformed MLR for T01, while XGBoost and MLR outperformed LSTM for T06.

6.2.3. Further Analysis

Analyzing the behavior of all the turbines (T01, T06, T07, and T11) across both years and all models showed that the gearbox is more susceptible to seasonality than the Generator. This is attributed to the fact that, in a geared wind turbine such as those in this study, the gearbox is closer to the inputs and transfers the mechanical energy to the generator, which produces the output [40]. For RMSE and MAE, lower values indicate better accuracy. The R-squared score, by contrast, lies between 0 and 1 for any model that predicts better than the mean of the data; it remained within a similar range for all components, and its high values (close to 1) indicate that the models performed well.
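For reference, the standard definitions of the three accuracy metrics are given below, for n test samples with actual values y_i, predictions ŷ_i, and mean actual value ȳ. The ratio in the R² expression shows why R² drops below 0 only when a model's squared error exceeds that of always predicting ȳ.

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}, \qquad \mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|, \qquad R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$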

Figure 11.

T01 and T06 - 2016 vs 2017 Accuracy Comparison by Component.


Additionally, an algorithm that performs well for one component may be outperformed by another algorithm for a different component, as can be seen in plots (a), (b), and (c). This supports this work's argument that the distributed DT framework is a suitable tool for experimentation and PHM fine-tuning, as discussed further in Section 7.

6.3. Model Post-processing

Once the models are trained and tested, the next stage of our methodology is to select the best-performing algorithm. As mentioned in the previous section, while all the algorithms performed well, the best choice depends on the component and often on the training period, owing to the seasonality observed in the wind turbines' behavior. Taking all of this into account, XGBoost performed best in most cases in these experiments. XGBoost was therefore adopted for the post-processing stage (failure prediction and feedback).
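The selection step can be expressed as choosing, for each component, the algorithm with the lowest test error. The sketch below ranks by RMSE and breaks ties by MAE; this ranking rule is an assumption, and the metric values are placeholders rather than the results reported in Table 4.

```python
from typing import Dict, Tuple

Metrics = Dict[str, float]  # {"rmse": ..., "mae": ..., "r2": ...}


def best_algorithm(results: Dict[str, Metrics]) -> Tuple[str, Metrics]:
    """Return (algorithm, metrics) with the lowest RMSE, ties broken by lower MAE."""
    name = min(results, key=lambda algo: (results[algo]["rmse"], results[algo]["mae"]))
    return name, results[name]


def select_per_component(table: Dict[str, Dict[str, Metrics]]) -> Dict[str, str]:
    """Map each component to its best algorithm."""
    return {component: best_algorithm(results)[0] for component, results in table.items()}


# Placeholder numbers for illustration only.
table = {
    "gearbox": {"MLR": {"rmse": 1.2, "mae": 0.9, "r2": 0.95},
                "XGBoost": {"rmse": 1.1, "mae": 0.8, "r2": 0.96},
                "LSTM": {"rmse": 1.6, "mae": 1.2, "r2": 0.91}},
    "generator": {"MLR": {"rmse": 3.0, "mae": 2.5, "r2": 0.88},
                  "XGBoost": {"rmse": 3.0, "mae": 2.2, "r2": 0.89},   # RMSE tie, lower MAE
                  "LSTM": {"rmse": 3.4, "mae": 2.9, "r2": 0.85}},
}
print(select_per_component(table))
```

Keeping the choice per component, rather than global, matches the observation that the best algorithm varies by component and training period.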

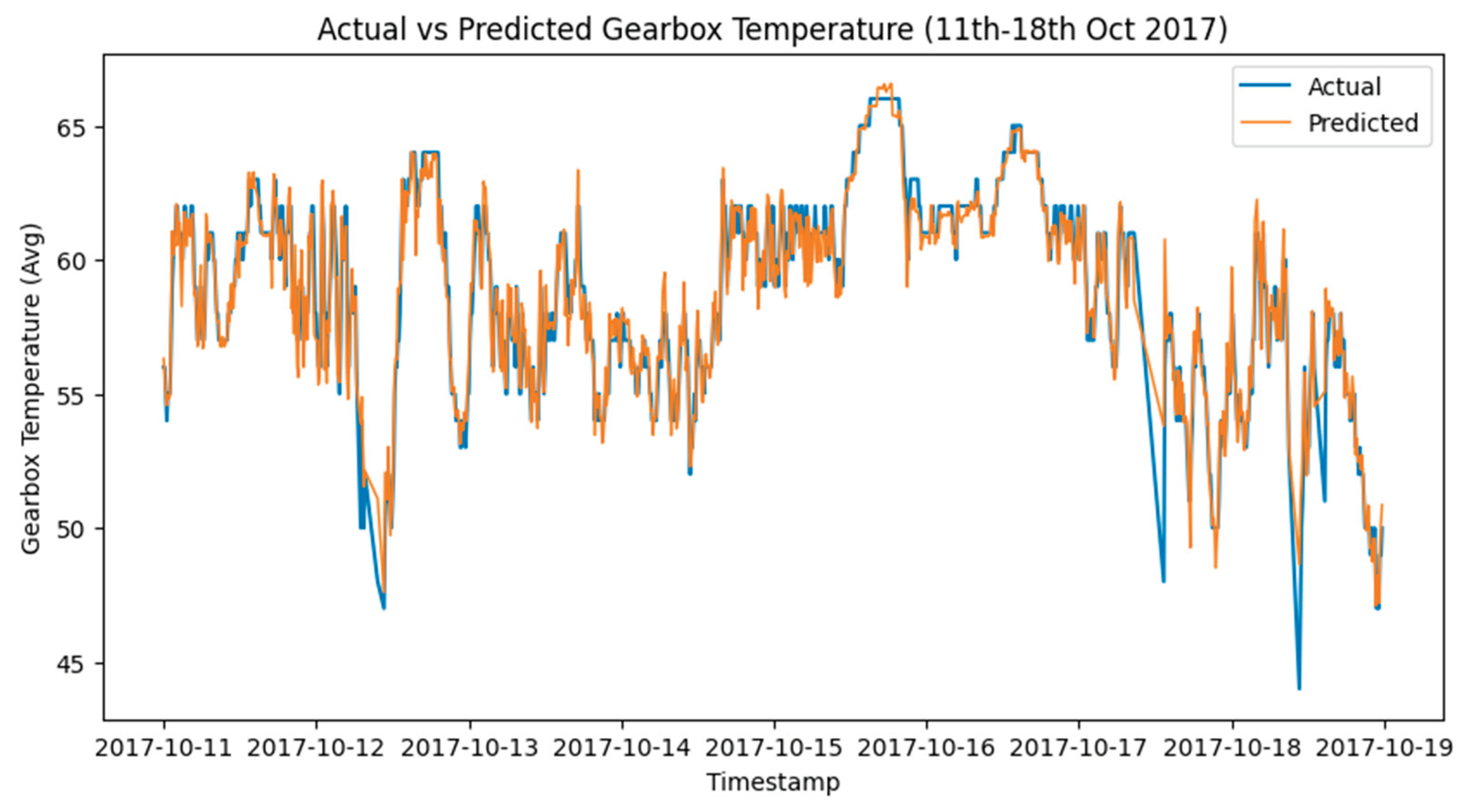

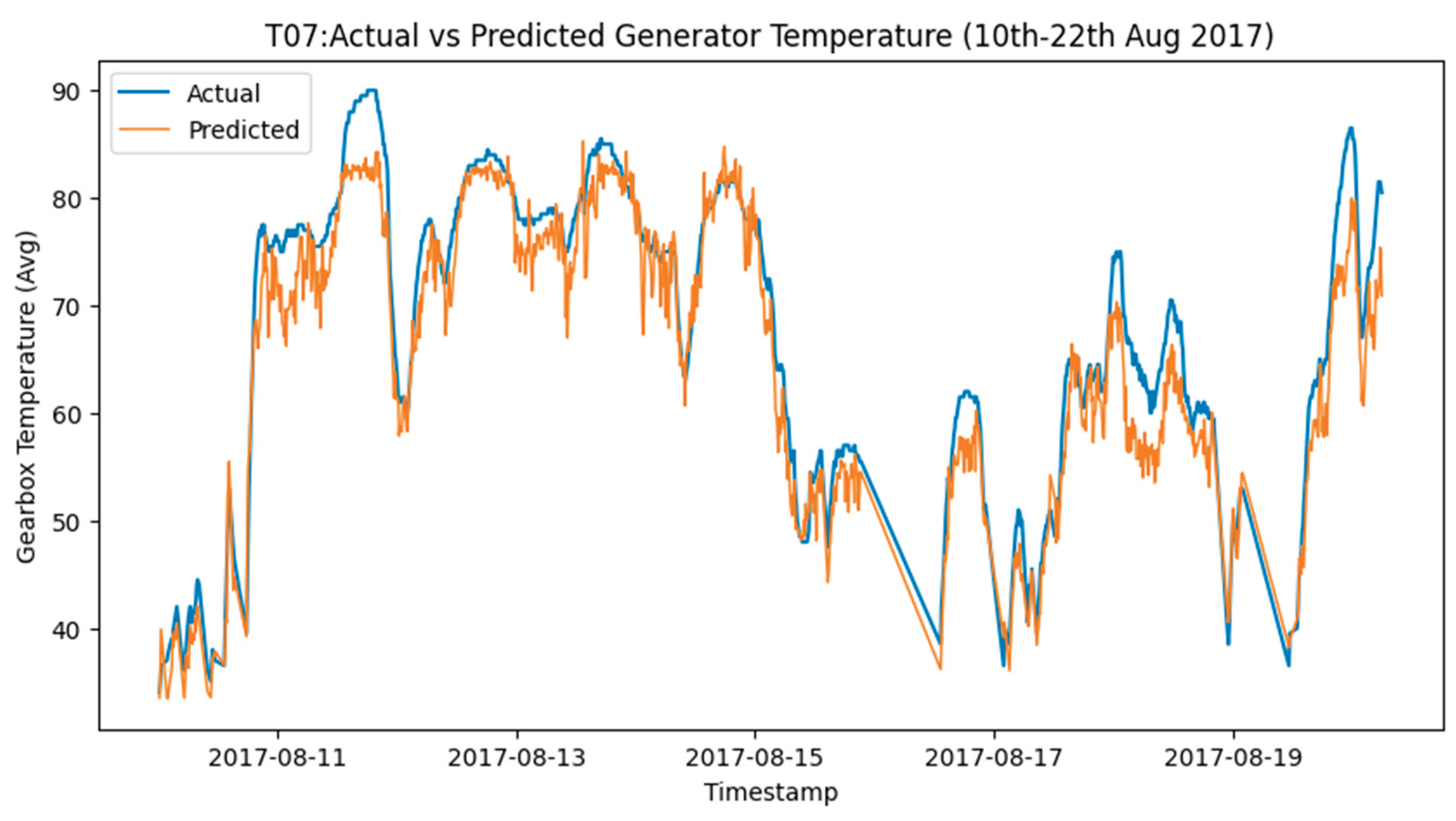

Figures 12 and 13 show the actual versus predicted values for the T06 Gearbox and the T07 Generator, respectively, in the period leading up to the failure of each component.

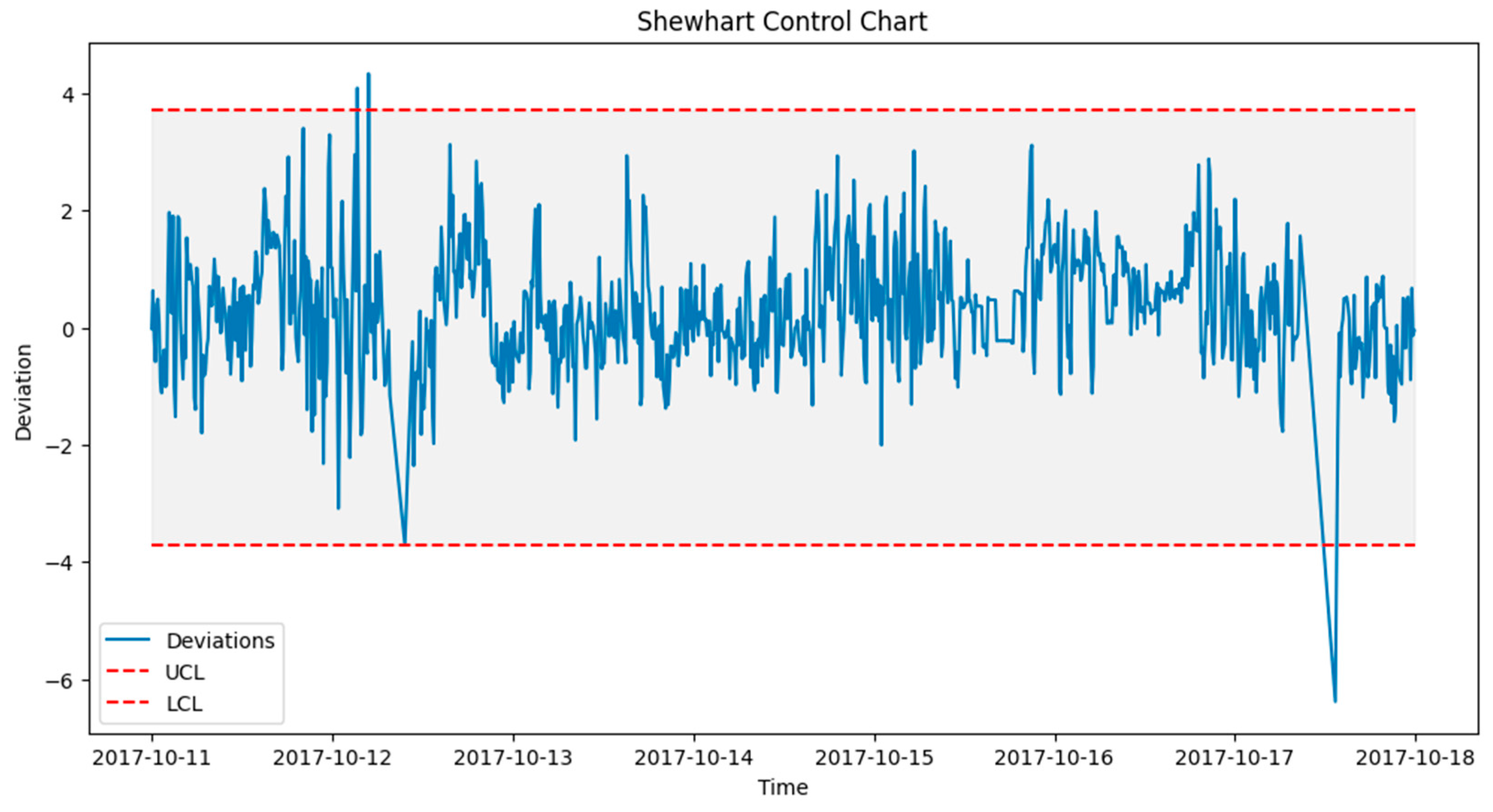

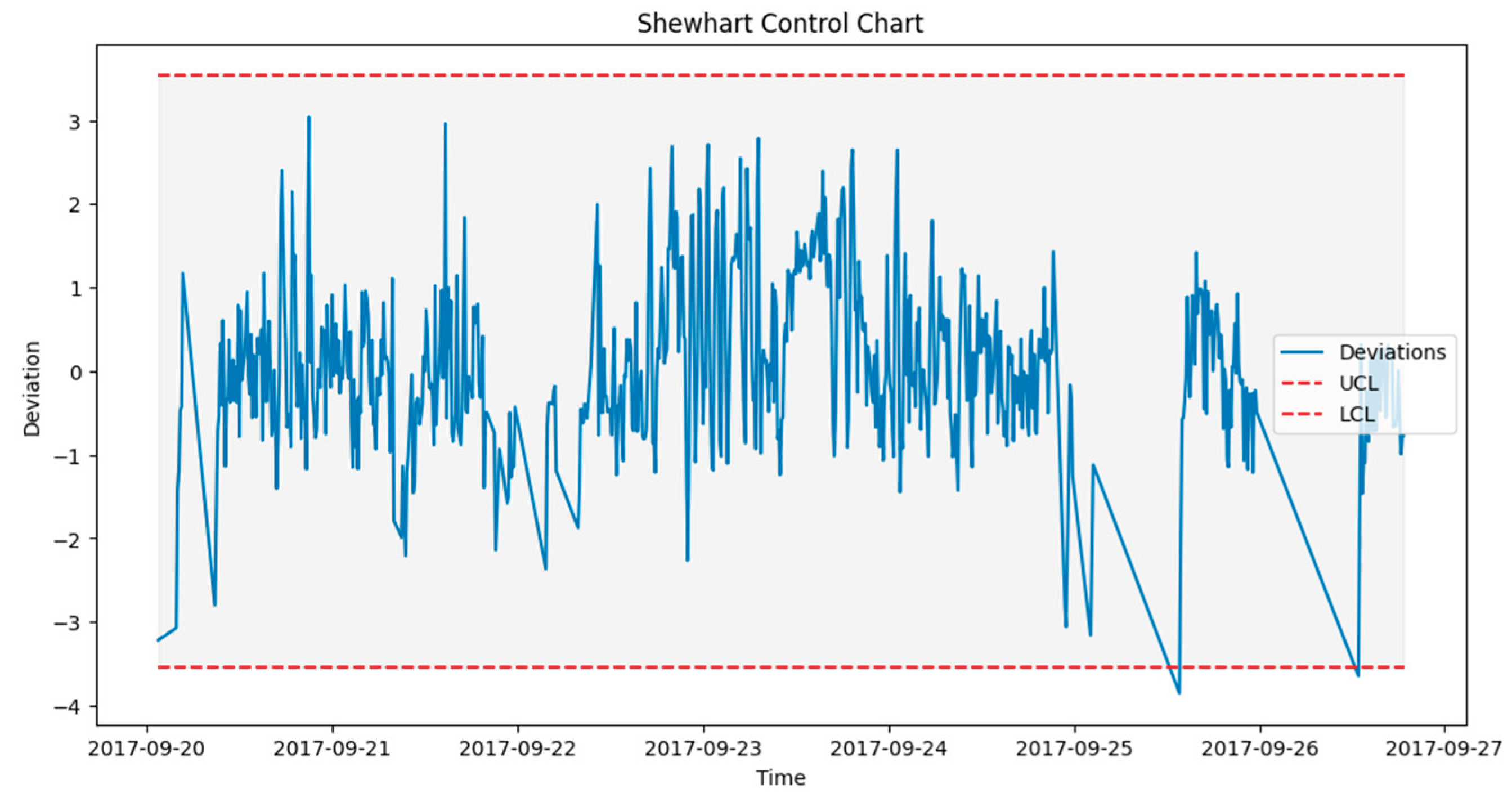

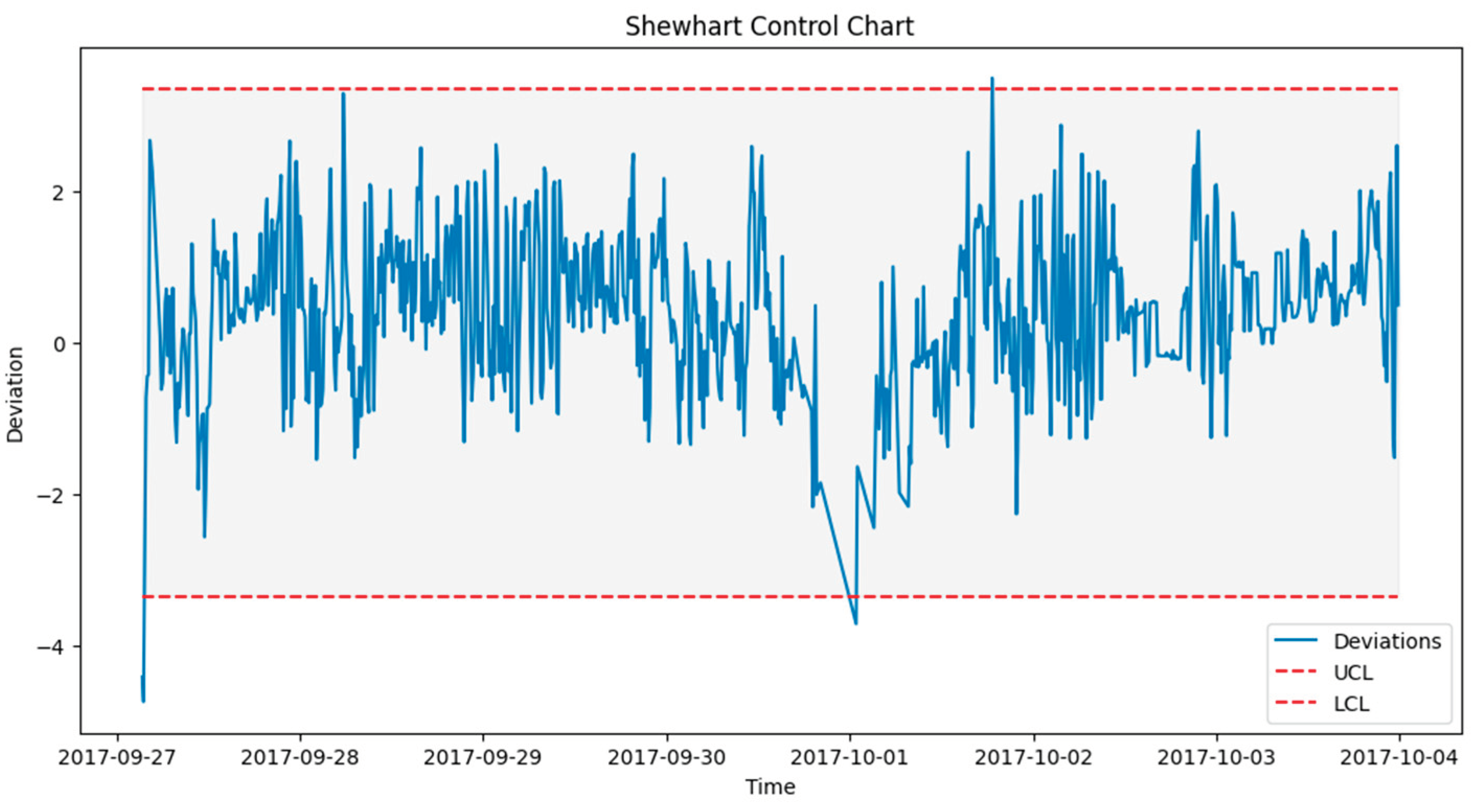

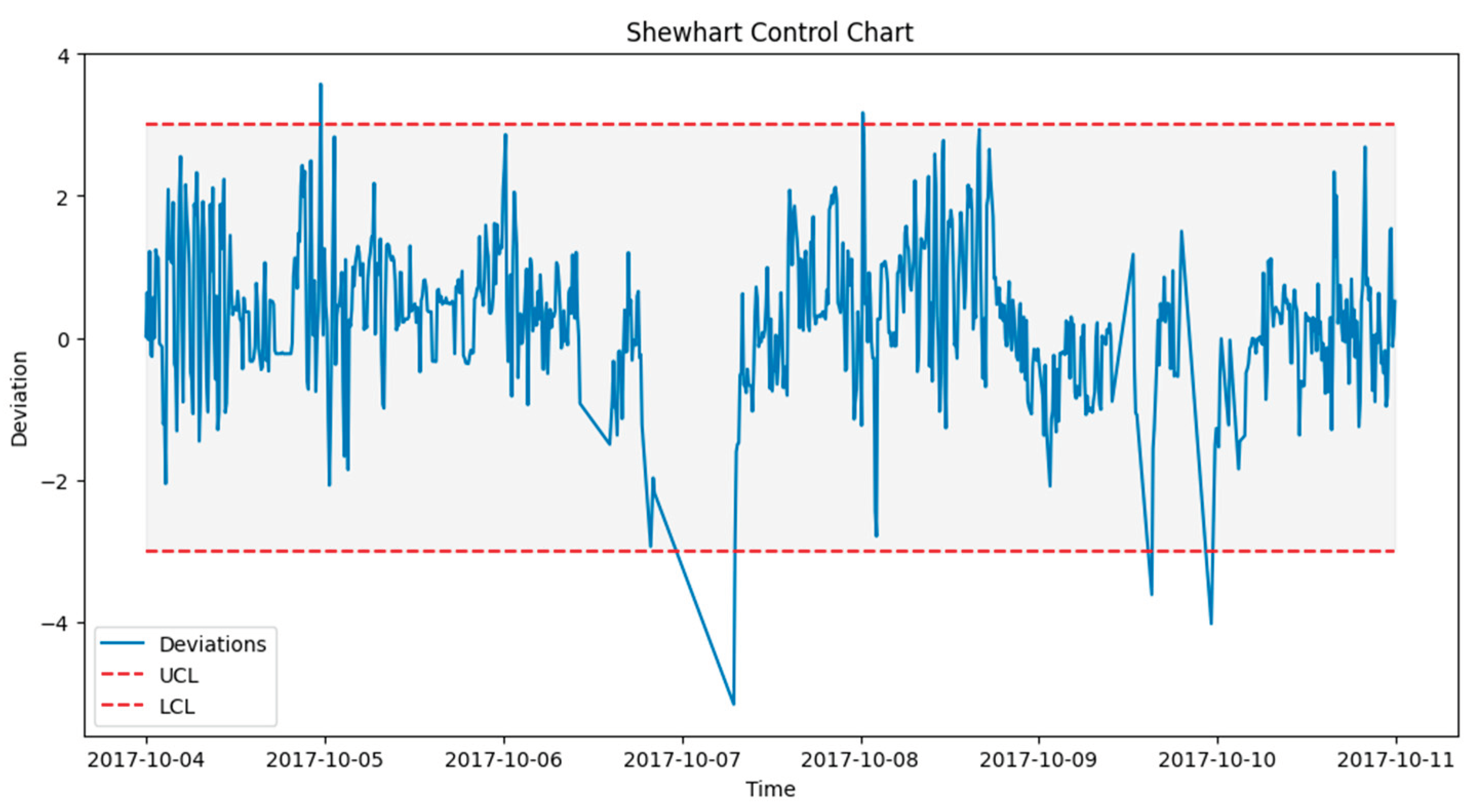

6.3.1. Failure Prediction - Gearbox

Once predictions of acceptable accuracy had been obtained with the selected algorithm, the next stage was to apply SPC as outlined in Algorithm 2. The failure data in Table 3 was used to look for failures in the wind turbine operation around the recorded failure date of each component. An alert was configured in the DT to monitor the control thresholds and flag deviations, which mark the point of failure prediction.

Figure 14 shows how a failure in the T06 gearbox, which occurred at 2017-10-17 08:38, was picked up about a week earlier, from 2017-10-11 00:30.
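The lead time of this early warning follows directly from the two timestamps: the first alert precedes the recorded failure by 6 days, 8 hours, and 8 minutes, i.e. roughly a week. A quick check with Python's `datetime`:

```python
from datetime import datetime

failure = datetime(2017, 10, 17, 8, 38)       # T06 gearbox failure (Table 3)
first_alert = datetime(2017, 10, 11, 0, 30)   # first SPC alert (Figure 14)

lead_time = failure - first_alert
print(lead_time)                                          # 6 days, 8:08:00
print(round(lead_time.total_seconds() / 86400, 2), "days")  # 6.34 days
```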

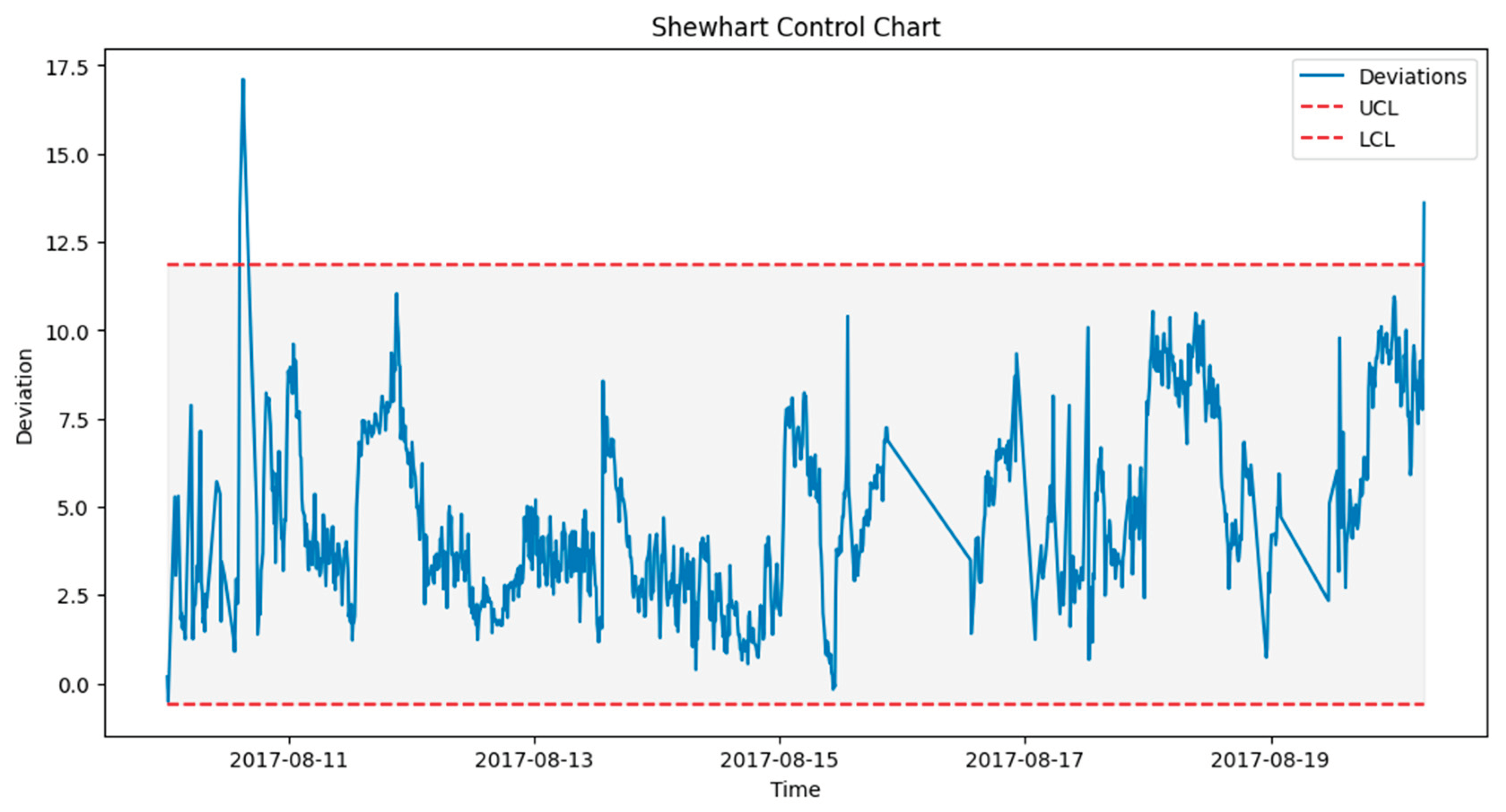

6.3.2. Failure Prediction - Generator

Similarly, once the generator's operation has been predicted, SPC is applied to detect deviations and predict failure. However, the generator uses higher lower and upper control limits because it operates at higher temperatures. The DT predicted the failure from 2017-08-10 00:00 onward, with multiple alerts, almost two weeks before the actual failure recorded in Table 3.

Figure 14.

SPC for T06 Gearbox Failure Prediction alerts


Figure 15.

SPC for T07 Generator Failure Prediction alerts


6.4. Prediction Feedback Loop

This section describes the implementation of the prediction feedback loop from the Digital Twin to the Physical Twin (PT). The main aim of this metric in our framework is to demonstrate how the Distributed DT accommodates the requirements of a modular DT that handles feedback from the moment the Global model in the cloud layer predicts a failure. The DT node specific to the component then activates a model that evaluates how a change in the component's operation can extend its remaining performance (time) before failure. This is envisioned to optimize the utilization of the asset.

Figure 20 shows how the Distributed DT handled the failures of T06 and T07 identified in Sections 6.3.1 and 6.3.2.

6.4.1. Pre-Failure Assessment

To achieve the prediction feedback loop, the DT module handling each component must triage the deviations from the moment of failure prediction and perform predictive modelling on them. In our case study using the EDP dataset [39], taking the T06 2017 gearbox as an example, the prediction shown in Figure 14 was made on 12 October 2017 at 08:38. However, actual deviations indicating a potential issue with the gearbox had started about two weeks before the failure, on 5 October 2017 at 12:07.

Figures 16, 17, and 18 show the behavior of the gearbox temperature in weekly snapshots covering the month before failure. Within this month, the first two weeks (20 September to 4 October 2017) appear normal, with SPC values within the acceptable range, while the last two weeks (4 to 18 October 2017) show the deviations that predicted the failure.

Figure 14 in Section 6.3.1 shows the behavior from 11 to 18 October, the week in which the actual failure was recorded, on 17 October at 08:38.

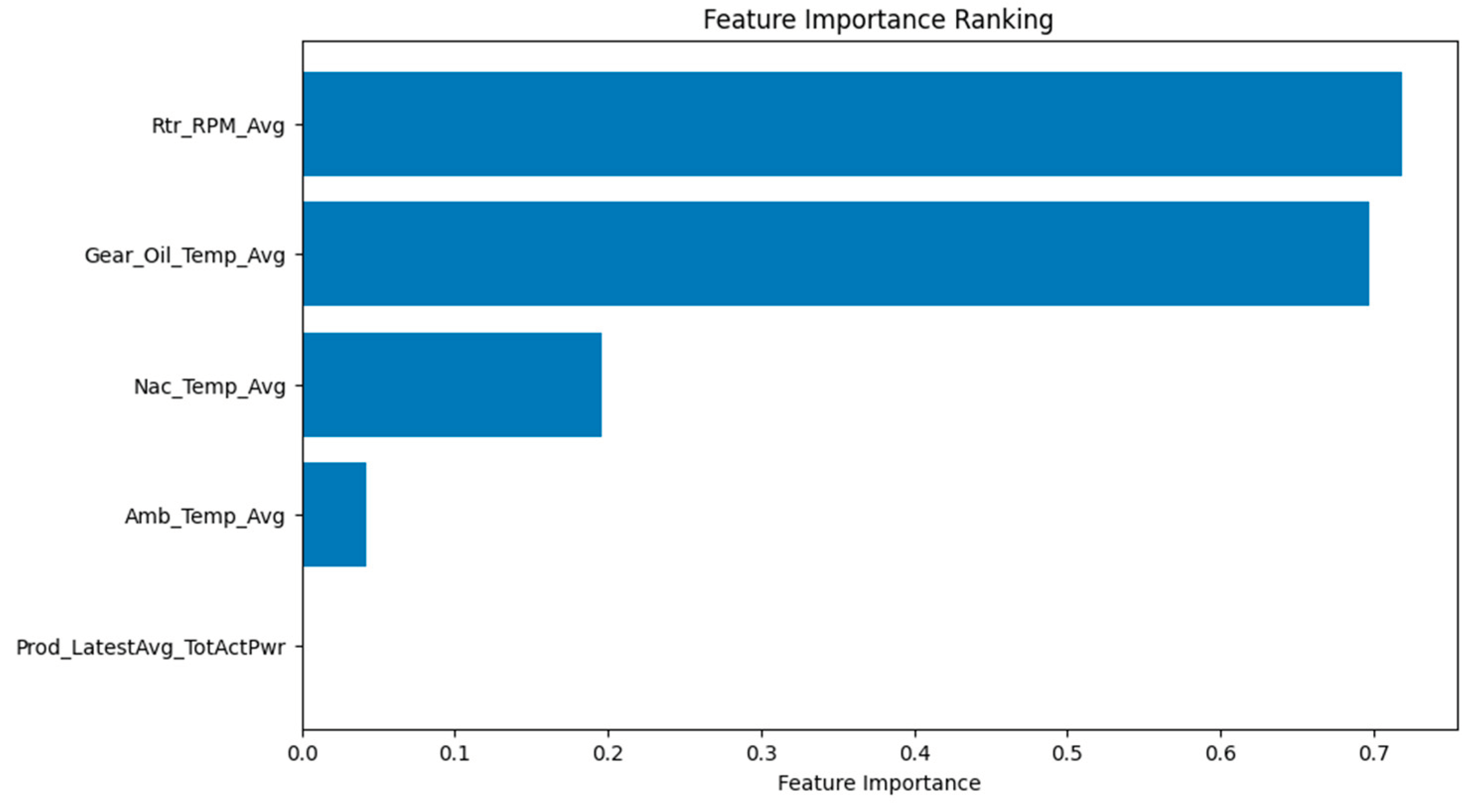

6.4.2. Feedback

Having established that our model detected deviations from normal operation from 5 October 2017 at 12:07, we now show how the feedback mechanism described in Algorithm 3 simulated the two-way DT-to-PT feedback by modelling the regulation of selected components.

To perform the feedback simulation, the DT module first had to identify the most important features from the latest model run.

Figure 19 shows that gearbox oil temperature, rotor RPM, and nacelle temperature had the most influence on the gearbox temperature prediction. These were identified simply by selecting the variables with the highest absolute coefficients in the regression model, whose output is given by

$$y = b_0 + b_1 x_1 + b_2 x_2 + \cdots + b_n x_n$$

where $y$ is the predicted output (gearbox temperature), $b_0$ is the intercept, and $b_1, b_2, \dots, b_n$ are the coefficients associated with the input features $x_1, x_2, \dots, x_n$ (e.g., gearbox oil temperature and rotor RPM).
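The ranking step can be sketched as sorting the fitted coefficients b1 … bn by absolute value and keeping the top k. One caveat, offered as general good practice rather than as a description of the authors' code: raw coefficient magnitudes are only comparable when the inputs share a common scale, so the sketch optionally weights each |b_j| by the standard deviation of its feature. The feature names and coefficient values are hypothetical.

```python
from typing import Dict, List, Optional


def top_k_features(coefs: Dict[str, float], k: int = 3,
                   feature_std: Optional[Dict[str, float]] = None) -> List[str]:
    """Rank features by |b_j| (or |b_j| * std(x_j) if stds are given); return the top k."""
    def importance(name: str) -> float:
        scale = feature_std[name] if feature_std else 1.0
        return abs(coefs[name]) * scale
    return sorted(coefs, key=importance, reverse=True)[:k]


# Hypothetical coefficients of a gearbox-temperature regression model.
coefs = {"gear_oil_temp": 0.62, "rotor_rpm": 0.35, "nacelle_temp": -0.28,
         "wind_speed": 0.05, "ambient_temp": 0.02}
print(top_k_features(coefs))   # ['gear_oil_temp', 'rotor_rpm', 'nacelle_temp']
```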

Based on this, the three features to which the gearbox behavior is most sensitive were selected, and feedback simulations were implemented on them.

Figure 20 shows the behavior of the gearbox after the simulation, in which the feedback loop handled the failure by identifying the inputs to which the original failure-prediction model was most sensitive and reducing them by 30%.
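A minimal sketch of this what-if simulation, assuming a fitted linear model y = b0 + b1·x1 + … + bn·xn: the selected inputs are scaled by (1 − 0.3), and the model is re-evaluated to check whether the predicted gearbox temperature falls back below the upper control limit. The coefficients, inputs, and limit value are hypothetical; in the paper, the re-evaluation is performed with the trained models inside the DT.

```python
from typing import Dict, Iterable


def predict_linear(b0: float, coefs: Dict[str, float], x: Dict[str, float]) -> float:
    return b0 + sum(coefs[name] * x[name] for name in coefs)


def simulate_reduction(b0: float, coefs: Dict[str, float], x: Dict[str, float],
                       features: Iterable[str], reduction: float = 0.30) -> float:
    """Scale the selected inputs by (1 - reduction) and return the new prediction."""
    selected = set(features)
    adjusted = {n: v * (1 - reduction) if n in selected else v for n, v in x.items()}
    return predict_linear(b0, coefs, adjusted)


b0 = 10.0
coefs = {"gear_oil_temp": 0.6, "rotor_rpm": 1.5, "nacelle_temp": 0.3}
x = {"gear_oil_temp": 70.0, "rotor_rpm": 16.0, "nacelle_temp": 40.0}
ucl = 70.0  # hypothetical upper control limit for gearbox temperature

before = predict_linear(b0, coefs, x)
after = simulate_reduction(b0, coefs, x, ["gear_oil_temp", "rotor_rpm", "nacelle_temp"])
print(round(before, 2), round(after, 2), after <= ucl)   # 88.0 64.6 True
```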

Figure 20.

SPC Chart Controlled Operations using DT Feedback Mechanism – 30% Reduction Example.


6.4.3. Accuracy

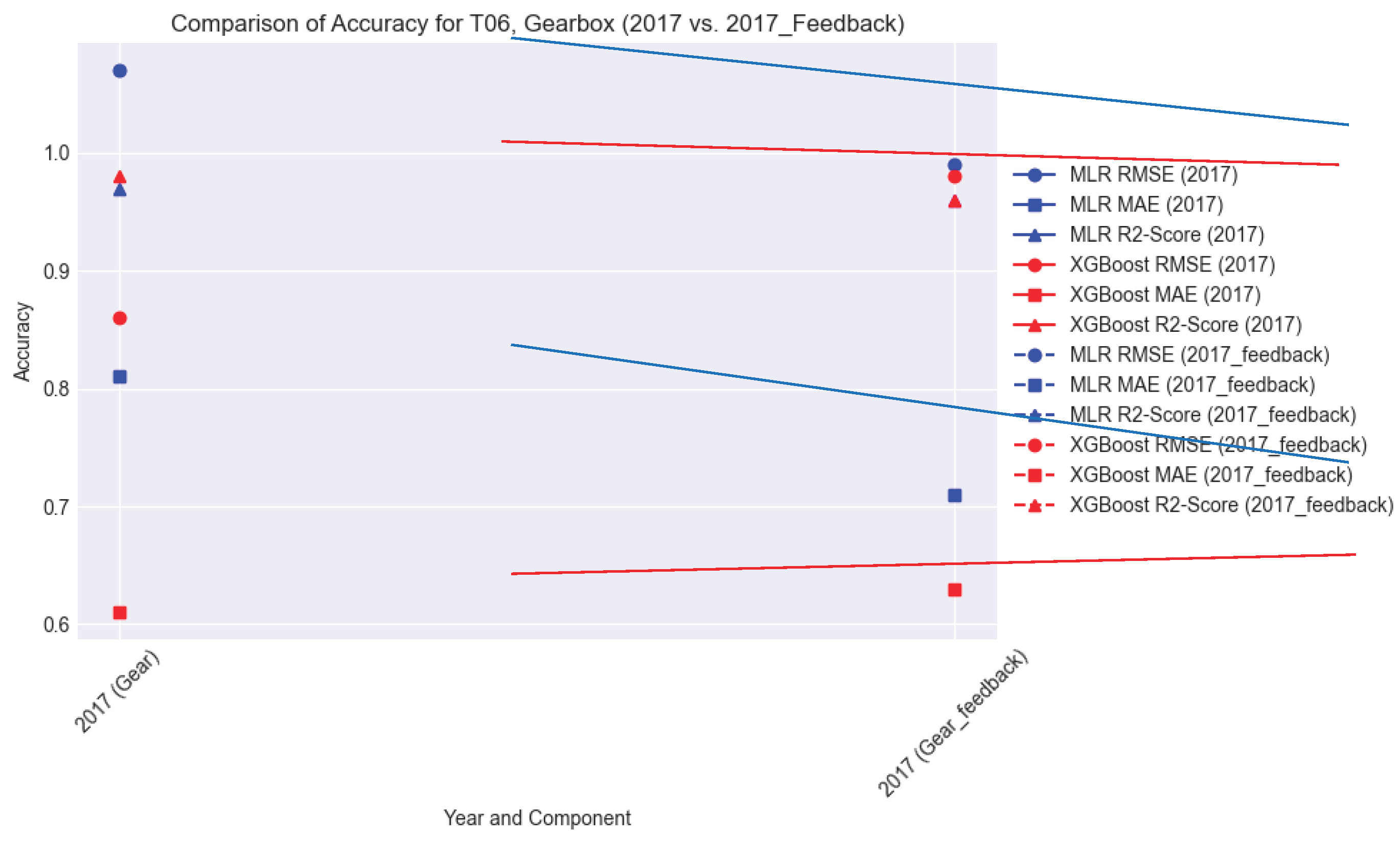

Using the performance metrics recorded during the feedback runs, we compare the original T06_2017_Gear performance with that of the feedback run in terms of RMSE, MAE, and R-squared.

Table 5.

Accuracy of Model during feedback run.


| Turbine | Year (Component) | MLR RMSE | MLR MAE | MLR R2-Score | XGBoost RMSE | XGBoost MAE | XGBoost R2-Score |
|---|---|---|---|---|---|---|---|
| T06 | 2017 (Gear) | 0.99 | 0.71 | 0.96 | 0.98 | 0.63 | 0.96 |
| T07 | 2017 (Gen) | 3.29 | 3.02 | 0.87 | 3.64 | 2.43 | 0.89 |

Figure 21.

Accuracy of Model before and during feedback run.


This comparison shows that, despite splitting the DT so that each module handles a single component in the fog under constrained real-time requirements, the accuracy achieved when handling the failure is acceptable and offers an improvement.

7. Discussion

This work presented a framework that supports the implementation of a distributed digital twin capable of improving PHM in an IIoT-enabled manufacturing setting such as wind turbines. The architectural framework, methodology, and results indicate that the presented DT framework can support both real-life PHM solutions and experimentation. In the framework, the operational readings of different components were collected in real time using simulated sensors; different machine learning techniques were then applied to historical and real-time data from the DT to predict potential issues and component failures. The architecture also proved versatile, supporting, for example, the evaluation of which algorithm is most suitable for each component and which model best handles seasonality.

For instance, when LSTM was applied to the gearboxes and generators of the turbines, the two components responded differently, in terms of prediction accuracy, when the parameters of the LSTM architecture were fine-tuned differently.

A potential benefit of adopting this framework is that the DT allows researchers, engineers, and operations teams to simulate and apply the best model and parameter fitting, whether for model training, testing, or real-time operation, since the framework supports the prediction feedback loop. The Intelligent DT concept in this framework suggests that the DT could automatically evaluate these reconfigurations and the resulting feedback in real time, based on the behavior of the component being managed.

Architecturally, while the Global DT handles model training and retraining in the cloud layer, the feedback-handling model and PHM solution configurations are handled in the fog and edge layers. This shows the relevance of the distributed DT framework presented in this study.

Finally, with failures predicted up to about two weeks in advance, the DT predicted the behavior of the gearbox temperature from selected variables, and the feedback mechanism attempted to handle the predicted failure by regulating the deviations and controlling the identified components to stay within threshold, potentially extending the asset's usage before failure. This shows that the proposed architecture supports our objective of handling PHM issues in real time. As with PHM generally, applying Subject Matter Expertise (SME) to evaluate, monitor, and improve the models will be an iterative process. The feedback loop in the fog layer could therefore automatically simulate several scenarios in real time (e.g., running many variations of the sliced model) and select the best control strategy, for example reducing RPM by 20% instead of 30%, or choosing which component to regulate based on the sensitivity analysis.
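The scenario search suggested here could, for example, sweep candidate reductions and keep the smallest one that brings the predicted value back within the upper control limit, so that the asset is throttled no more than necessary. The sketch below does this for a single hypothetical linear model; the candidate set, coefficients, inputs, and limit are all assumptions, and a real deployment would call the DT's trained model in place of `model`.

```python
from typing import Callable, Dict, Iterable, Optional


def smallest_sufficient_reduction(
        predict: Callable[[Dict[str, float]], float],
        x: Dict[str, float], features: Iterable[str], ucl: float,
        candidates: Iterable[float] = (0.1, 0.2, 0.3, 0.4)) -> Optional[float]:
    """Return the smallest reduction r whose scaled inputs give predict(x) <= ucl."""
    selected = set(features)
    for r in sorted(candidates):
        trial = {n: v * (1 - r) if n in selected else v for n, v in x.items()}
        if predict(trial) <= ucl:
            return r
    return None  # no candidate suffices; escalate, e.g. to an operator


def model(v: Dict[str, float]) -> float:
    # Hypothetical linear gearbox-temperature model.
    return 10.0 + 1.5 * v["rotor_rpm"] + 0.6 * v["gear_oil_temp"]


x = {"rotor_rpm": 16.0, "gear_oil_temp": 70.0}
print(smallest_sufficient_reduction(model, x, ["rotor_rpm"], ucl=72.0))   # 0.2
```

Here a 10% RPM reduction still leaves the prediction at 73.6, above the limit, while 20% brings it to 71.2, so the sweep stops there instead of applying the larger 30% cut.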

8. Conclusion and Future Work

This study presented a distributed digital twin framework for improving the asset management of wind turbines, as a case study for applying a more standardized framework within IIoT, where predictive maintenance and DTs can be key technologies for achieving improved business outcomes. The framework demonstrated the use of the distributed DT architecture to apply a prediction feedback loop from the DT to the PT; to improve accuracy by predicting failures as early as possible and remediating them with higher model performance; and to provide a computational platform with lower latency that satisfies the real-time requirements of the solution. While these concepts show how the strategy achieves this, further study is needed to explore the performance characteristics of machine learning techniques for predictive maintenance.

Future work will investigate the relevance and benefits of the framework in supporting transfer learning for integrating newer components into an existing IIoT infrastructure, among other important ML techniques that support asset management in IIoT.

Author Contributions

IA conducted the experiments as part of research supervised by SL and MS, who guided the paper design and the methodology of the experiments.

Funding

This research received funding from the Petroleum Technology Development Fund (PTDF).

Data Availability Statement

The data used in this research are from EDP, as referenced in the text.

Acknowledgments

This work acknowledges support from the Cranfield University – Digital Aviation Research and Technology Center (DARTeC) and the Integrated Vehicle Health Management Center (IVHM).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bloomberg UK, "Wind Turbines Taller Than the Statue of Liberty Are Falling Over," Bloomberg, Oregon, 2023.

- A. Fuller, Z. Fan, C. Day and C. Barlow, "Digital Twin: Enabling Technologies, Challenges and Open Research," IEEE Access, 2020. [CrossRef]

- Y. Lu, C. Liu, K. I.-K. Wang, H. Huang and X. Xu, "Digital Twin-driven smart manufacturing: Connotation, reference model, applications and research issues," Robotics and Computer Integrated Manufacturing, 2020. [CrossRef]

- L. Errandonea, S. Beltran and S. Arrizabalaga, "Digital Twin for maintenance: A literature review," Computers in Industry, 2020. [CrossRef]

- R. v. Dinter, B. Tekinerdogan and C. Catal, "Predictive maintenance using digital twins: A systematic literature review," Information and Software Technology, 2022. [CrossRef]

- Y. Qamsane, J. Moyne, M. Toothman, I. Kovalenko, E. C. Balta, J. Faris, D. M. Tilbury and K. Barton, "A Methodology to Develop and Implement Digital Twin Solutions for Manufacturing Systems," IEEE Access, 2021. [CrossRef]

- Cisco, "What Is Edge Computing?," Cisco, 31 12 2012 [Online]. Available online: https://www.cisco.com/c/en/us/solutions/computing/what-is-edge-computing.html (accessed on 12 July 2022).

- "ISO 23247 - Digital twin framework for manufacturing," ISO (International Organization for Standardization), Geneva, 2021.

- M. Grieves and J. Vickers, "Digital Twin: Mitigating Unpredictable, Undesirable Emergent Behavior in Complex Systems," in Transdisciplinary Perspectives on Complex Systems, Springer Link, 2016, pp. 85-113.

- E. H. Glaessgen and D. Stargel, "The Digital Twin Paradigm for Future NASA and U.S. Air Force Vehicles," in 53rd Structures, Structural Dynamics, and Materials Conference, Honolulu, Hawaii, 2012.

- J. Moyne, Y. Qamsane, M. Toothman, I. Kovalenko, E. C. Balta and J. Faris, "A Requirements Driven Digital Twin Framework: Specification and Opportunities," IEEE Access, 2020. [CrossRef]

- A. Haghshenas, A. Hasan, O. Osen and E. T. Mikalsen, "Predictive digital twin for offshore wind farms," Energy Informatics, 2023. [CrossRef]

- W. Udo and Y. Muhammad, "Data-Driven Predictive Maintenance of Wind Turbine Based on SCADA Data," IEEE Access, 2021. [CrossRef]

- M. Garan, K. Tidriri and I. Kovalenko, "A Data-Centric Machine Learning Methodology: Application on Predictive Maintenance of Wind Turbines," Energies - MDPI, 2022. [CrossRef]

- E. Cinar, S. Kalay and I. Saricicek, "A Predictive Maintenance System Design and Implementation for Intelligent Manufacturing," Machines - MDPI, 2022. [CrossRef]

- L. Costantini, G. D. Modica, J. C. Ahouangonou, D. C. Duma, B. Martelli, M. Galletti, M. Antonacci, D. Nehls, P. Bellavista, C. Delamarre and D. Cesini, "IoTwins: Toward Implementation of Distributed Digital Twins in Industry 4.0 Settings," Computers - MDPI, 2022. [CrossRef]

- L. Abdullahi, S. Perinpanayagam and I. Hamidu, "A Fog Computing Based Approach Towards Improving Asset Management and Performance of Wind Turbine Plants Using Digital Twins," in 2022 27th International Conference on Automation and Computing (ICAC), Bristol, UK, 2022. [CrossRef]

- H. Huang and X. Xu, "Edge Computing Enhanced Digital Twins for Smart Manufacturing," in ASME 2021 16th International Manufacturing Science and Engineering Conference MSEC2021, Virtual, 2021. [CrossRef]

- T. Böttjer, D. Tola, F. Kakavandi, C. R. Wewer, D. Ramanujan, C. Gomes, P. G. Larsen and A. Iosifidis, "A review of unit level digital twin applications in the manufacturing industry," CIRP Journal of Manufacturing Science and Technology, vol. 45, pp. 162-189, 2023. [CrossRef]

- D. McKee, "Platform Stack Architectural Framework: An Introductory Guide," Digital Twin Consortium, 2023.

- Bofill, M. Abisado, J. Villaverde and G. A. Sampedro, "Exploring Digital Twin-Based Fault Monitoring: Challenges and Opportunities," Sensors - MDPI, 2023. [CrossRef]

- Amazon Web Services, "What is cloud computing?," Amazon. 2023. Available online: https://aws.amazon.com/what-is-cloud-computing/ (accessed on 29 July 2023).

- Microsoft Azure, "What is Cloud Computing?," Microsoft. 2023. Available online: https://azure.microsoft.com/en-au/resources/cloud-computing-dictionary/what-is-cloud-computing (accessed on 29 July 2023).

- IBM, "What is cloud computing?," IBM. 2023. Available online: https://www.ibm.com/topics/cloud-computing (accessed on 29 July 2023).

- Microsoft Azure, "Cloud computing vs. edge computing vs. fog computing," Microsoft. 2023. Available online: https://azure.microsoft.com/en-gb/resources/cloud-computing-dictionary/what-is-edge-computing/ (accessed on 29 July 2023).

- F. P. Knebel, J. A. Wickboldt and E. P. d. Freitas, "A Cloud-Fog Computing Architecture for Real-Time Digital Twins," Journal of Internet Services and Applications, 2021. [CrossRef]

- G. Peralta, M. Iglesias-Urkia, M. Barcelo, R. Gomez, A. Moran and J. Bilbao, "Fog computing based efficient IoT scheme for the Industry 4.0," in 2017 IEEE International Workshop of Electronics, Control, Measurement, Signals and their Application to Mechatronics (ECMSM), Donostia, Spain, 2017.

- G. Shao, "Use Case Scenarios for Digital Twin Implementation Based on ISO 23247," National Institute of Standards and Technology, 2021.

- V. Damjanovic-Behrendt and W. Behrendt, "An open source approach to the design and implementation of Digital Twins for Smart Manufacturing," International Journal of Computer Integrated Manufacturing, pp. 366-384, 2019. [CrossRef]

- Docker, "Docker," [Online]. Available: Docker. [Accessed 12 August 2023].

- V. Kamath, J. Morgan and M. I. Ali, "Industrial IoT and Digital Twins for a Smart Factory: An open source toolkit for application design and benchmarking," in 2020 Global Internet of Things Summit (GIoTS), Dublin, Ireland, 2020.

- N. Ouahabi, A. Chebak, M. Zegrari, O. Kamach and M. Berquedich, "A Distributed Digital Twin Architecture for Shop Floor Monitoring Based on Edge Cloud Collaboration," in 2021 Third International Conference on Transportation and Smart Technologies (TST), Tangier, Morocco, 2021.

- B. Tekinerdogan and C. Verdouw, "Systems Architecture Design Pattern Catalog for Developing Digital Twins," Sensors, 2020. [CrossRef]

- S. Sundaram and A. Zeid, "Smart Prognostics and Health Management (SPHM) in Smart Manufacturing: An Interoperable Framework," MDPI Sensors 2021. [CrossRef]

- M. Picone, M. Mamei and F. Zambonelli, "A Flexible and Modular Architecture for Edge Digital Twin: Implementation and Evaluation," ACM Transactions on Internet of Things, 2023. [CrossRef]

- A. Villalonga, E. Negri, L. Fumagalli, M. Macchi, F. Castaño and R. Haber, "Local Decision Making based on Distributed Digital Twin Framework," International Federation of Automatic Control, 2020, 53, 10568–10573. [CrossRef]

- E. Ferko, A. Bucaioni, P. Pelliccione and M. Behnam, "Standardisation in Digital Twin Architectures in Manufacturing," in 2023 IEEE 20th International Conference on Software Architecture (ICSA), L'Aquila, Italy, 2023. [CrossRef]

- S. V. Nath and P. V. Schalkwyk, Building Industrial Digital Twins, Packt Publishing, 2021.

- EDP Group, "EDP Open Data," 2022.

- Office of Energy Efficiency and Renewable Energy, "How Do Wind Turbines Work?," US Department of Energy, [Online]. Available online: https://www.energy.gov/eere/wind/how-do-wind-turbines-work (accessed on 17 September 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).