1. Introduction

Surgical data science is emerging as a novel domain within healthcare, particularly in the field of surgery. This discipline holds the promise of significant advancements in areas such as virtual coaching, assessment of surgeon proficiency, and learning complex surgical tasks through robotic systems [1]. Additionally, it contributes to the field of gesture recognition [2], [3]. Understanding surgical scenes has become pivotal in the development of intelligent systems that can effectively collaborate with surgeons during live procedures [4]. The availability of extensive datasets related to the execution of surgical tasks using robotic systems would drive these advancements forward, providing detailed information on the surgeon’s movements through both kinematic and dynamic data, complemented by video recordings. Furthermore, public datasets facilitate the comparison of different algorithms proposed in the scientific literature. Rivas-Blanco et al. [5] provide a list of 13 publicly accessible datasets within the surgical domain, such as Cholec80 or M2CAI16.

While most of these datasets feature video data [6][7], only two of them incorporate kinematic data [8][9], which is fundamental for analyzing metrics associated with tool motion. Kinematic data is provided by research platforms such as the da Vinci Research Kit (dVRK). The dVRK is a robotic platform based on the first-generation commercial da Vinci Surgical System (by Intuitive Surgical, Inc., Sunnyvale, CA). This platform offers a software package that records kinematic and dynamic data of both the master tool manipulators and the patient side manipulators. The JIGSAWS dataset [8] and the UCL dVRK dataset [9] were acquired using this platform. JIGSAWS stands out as one of the most renowned datasets in surgical robotics. It encompasses 76-dimensional kinematic data in conjunction with video data for 101 trials of three fundamental surgical tasks (suturing, knot-tying, and needle-passing) performed by six surgeons. The UCL dVRK dataset comprises 14 videos recorded using the dVRK across five distinct types of animal tissue. Each video frame is associated with an image of the virtual tools produced using a dVRK simulator.

Common annotations in surgical robotics datasets are related to tool detection and gesture recognition. These annotations are the basis for developing new strategies to advance the field of intelligent surgical robots and surgical scene understanding. One of the most studied applications in this field is surgical image analysis, and there are many promising works on object recognition based on surgical images. Most works perform surgical instrument classification [10][11], instrument segmentation [12][13], and tool detection [7][14]. Al-Hajj et al. [15] propose a network that combines several CNN layers, which extract visual features from the images, with RNNs that analyze temporal dependencies. With this approach, they are able to classify seven different tools in cholecystectomy surgeries with a performance of around 98%. Sarikaya et al. [7] applied a region proposal network together with a multimodal convolutional network for instrument detection, achieving a mean average precision of 90%. Besides surgical instruments, anatomical structures are an essential part of the surgical scene. Thus, organ segmentation provides rich information for understanding surgical procedures. Liver segmentation has been addressed by Nazir et al. [16] and Fu et al. [17] with promising results.

Another important application in surgical data science is surgical task analysis, as the basis for developing context-aware systems or autonomous surgical robots. In this domain, the recognition of surgical phases has been extensively studied, as it enables computer-assisted systems to track the progression of a procedure. This task involves breaking down a procedure into distinct phases and training the system to identify which phase corresponds to a given image. Petscharnig and Schöffmann [18] explored phase classification on gynecologic videos annotated with 14 semantic classes. Twinanda et al. [19] introduced a novel CNN architecture, EndoNet, which performs phase recognition and tool presence detection concurrently, relying solely on visual information. They demonstrated the generalization of their work with two different datasets. Other researchers delve deeper into surgical tasks by analyzing surgical gestures instead of phases. Here, tasks such as suturing are decomposed into a series of gestures [20]. This is a more challenging problem, as gestures are more similar to each other than broader phases are. Gesture segmentation has so far focused primarily on the suturing task [21][22][23], with most attempts conducted in in-vitro environments and yielding promising results. Only one study [24] has explored live suturing gesture segmentation. Trajectory segmentation offers another avenue for a detailed analysis of surgical instrument motion [25][26]. It involves breaking trajectories into sub-trajectories, which facilitates learning from demonstrations, skill assessment, and phase recognition, among other applications. Authors leverage the kinematic information provided by surgical robots, which, when combined with video data, yields improved accuracy [27][28].

In a previous work [29], we presented the Robotic Surgical Maneuvers (ROSMA) dataset. This dataset contains kinematic and video data for 206 trials of three common surgical training tasks performed with the dVRK. That work [29] gave a detailed description of the data and the recording methodology, along with the protocol of the three tasks performed. This first version of ROSMA did not include annotations. In the present work, we incorporate manual annotations for surgical tool detection and gesture segmentation. The main novelty of the annotation methodology is that we have annotated the two instruments handled by the surgeon independently, i.e., we provide separate bounding box labels for the tool handled with the right master tool manipulator and for the one handled with the left one. We believe that this distinction provides useful information for supervisory or autonomous systems, making it possible to pay attention to one particular tool, e.g., we may be interested in the position of the main tool or in the position of the support tool, even if they are both the same type of instrument.

In most state-of-the-art works, phases, such as suturing, or gestures, such as pulling suture or needle orientation, are considered actions that involve both tools. However, the effectiveness of these methods relies heavily on achieving a consensus regarding the surgical procedure, which limits their applicability. Consequently, the generalization to other procedures remains poor. For this reason, the approach of treating both tools independently is also followed for the gesture annotations presented in this work. We have annotated each video frame with a gesture label for the right-handed tool and a gesture label for the left-handed tool. This form of annotating gestures makes special sense for tasks that may be performed with either the right or the left hand, as is the case in the ROSMA dataset, in which half of the trials of each task are performed with the right hand and the other half with the left hand. Being able to detect the basic action each tool is performing would allow the identification of general gestures or phases for either right-handed or left-handed surgeons.

In summary, the main contributions of this letter are:

This work completes the ROSMA dataset with surgical tools bounding box annotations on 24 videos, and gesture annotations on 40 videos.

Unlike previous work, annotations are performed on the right tool and on the left tool independently.

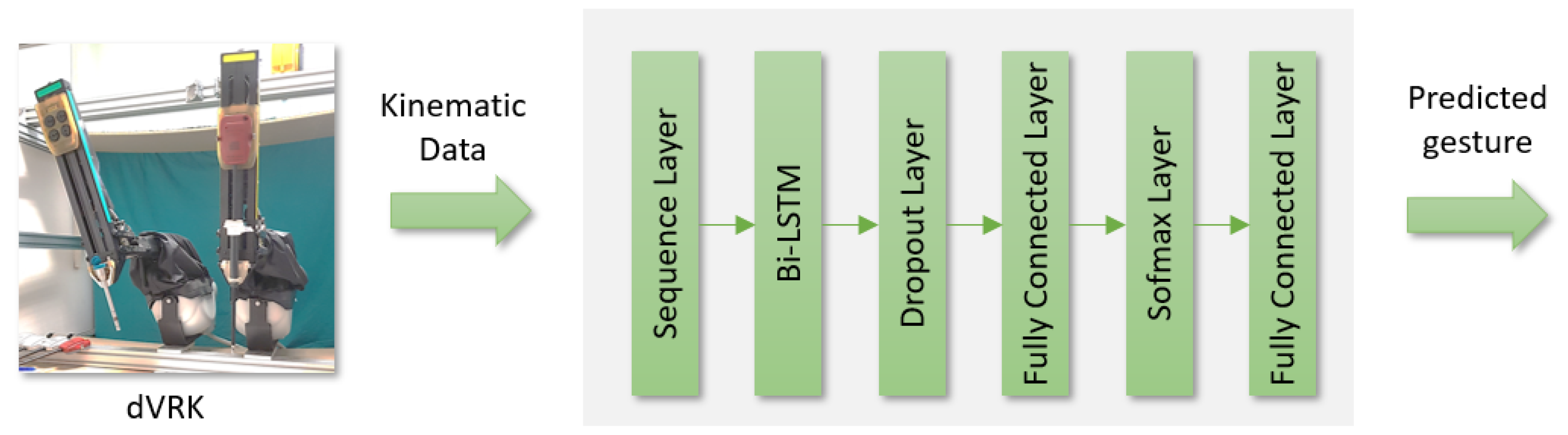

Annotations for gesture recognition have been evaluated using a recurrent neural network based on a bi-directional long short-term memory layer, using an experimental setup with four cross-validation schemes.

Annotations for surgical tool detection have been evaluated with a YOLOv4 network using two cross-validation schemes.

4. Discussion

This work explores the approach of studying the motion of the surgical instruments separately from each other. Instead of considering a gesture or maneuver as part of an action that involves the coordinated motion of the two tools the surgeon is handling, we define gestures as the actions each tool is performing independently, whether or not it is interacting with the other tool. We consider that this approach would facilitate the generalization of the recognition methods to procedures that do not follow rigid protocols. To train the proposed recurrent neural network, we have used kinematic data without Cartesian position to allow the experiments to be reproduced on different robotic platforms. We have decided not to use images as input to the network so that the results can be extrapolated to different scenarios, i.e., the idea is that the network learns behavioral patterns of the motion of the tools, whether they are picking colored sleeves, peas, rings, or any other object.

Results reveal a high dependency of the model on the user skills, with precision ranging widely from 39% to 64.9% mAP depending on the user left out in the LOUO cross-validation scheme. In future work, we will investigate the model performance when it is trained with a greater variety of users. The model also has a high dependency on the task used for training but shows high robustness to changes in the tool. The mean accuracy of the model is over 65% when the model has been trained using a particular tool as the dominant one to complete the task but tested on trials with a different dominant tool. These are promising results for generalizing the recognition method to right-handed or left-handed surgeons.

When the system is trained with a wide variety of trials, comprising different users, tasks, and dominant tools, the performance of the gesture prediction reaches 77.3% mAP. This result is comparable to other works that perform gesture segmentation using kinematic data. Luongo et al. [37] achieve 71% mAP using only kinematic data as input to an RNN on the JIGSAWS dataset. Other works that include images as input data report results of 70.6% mAP [26] and 79.1% mAP [27]. Thus, we consider that we achieved a good result, especially taking into account that recognition is performed on tasks that do not follow a specific order in any of the attempts. We believe that the annotations provided in ROSMAG40 are a good basis for advancing the generalization of gesture and phase recognition methodologies to procedures with a non-rigid protocol. Moreover, the annotations presented in this work could be merged with the traditional way of annotating surgical phases to provide low-level information on the performance of each tool, which could improve high-level phase recognition.

The annotations provided in ROSMAT24 for tool detection can be used as a complement to the gesture segmentation methods to focus the attention on a particular tool. This is important because surgeons usually perform the tasks that require accuracy with their dominant hand and the support tasks with the non-dominant one. Thus, being able to detect each tool independently can provide useful information. We have demonstrated that the YOLOv4 network provides high precision in instrument detection, reaching 97% mAP. This result is comparable to other works on surgical instrument detection, such as Sarikaya et al. [7], who reported 90% mAP, or Zhao et al. [38], with 91.6% mAP. We also demonstrated the capability of the network to detect the instruments in an unseen scenario through the LOSO experimental setup.

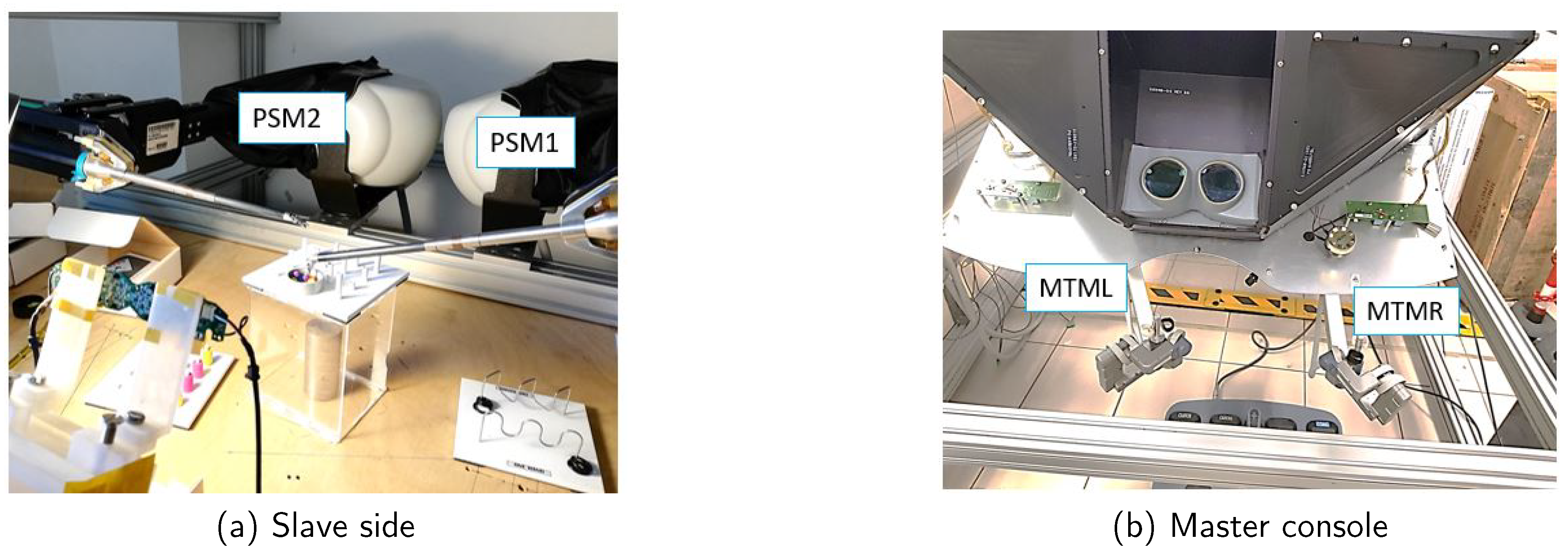

Figure 1.

da Vinci Research Kit (dVRK) platform used to collect the ROSMA dataset. (a) The slave side has two Patient Side Manipulators (PSM1 and PSM2), two commercial webcams to provide stereo vision and to record the images, and the training task board. (b) The master console has two Master Tool Manipulators (MTML and MTMR) and a stereo vision system.

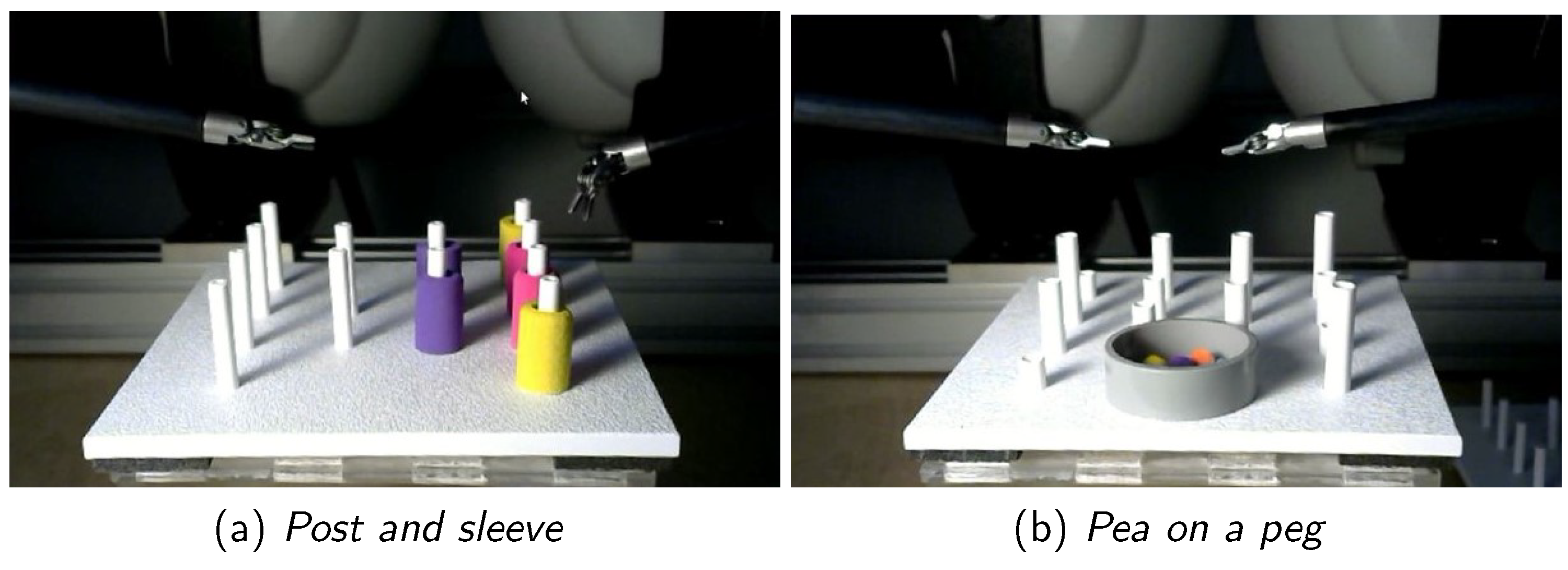

Figure 2.

Experimental board scenario for the ROSMA dataset tasks: (a) Post and sleeve and (b) Pea on a peg.

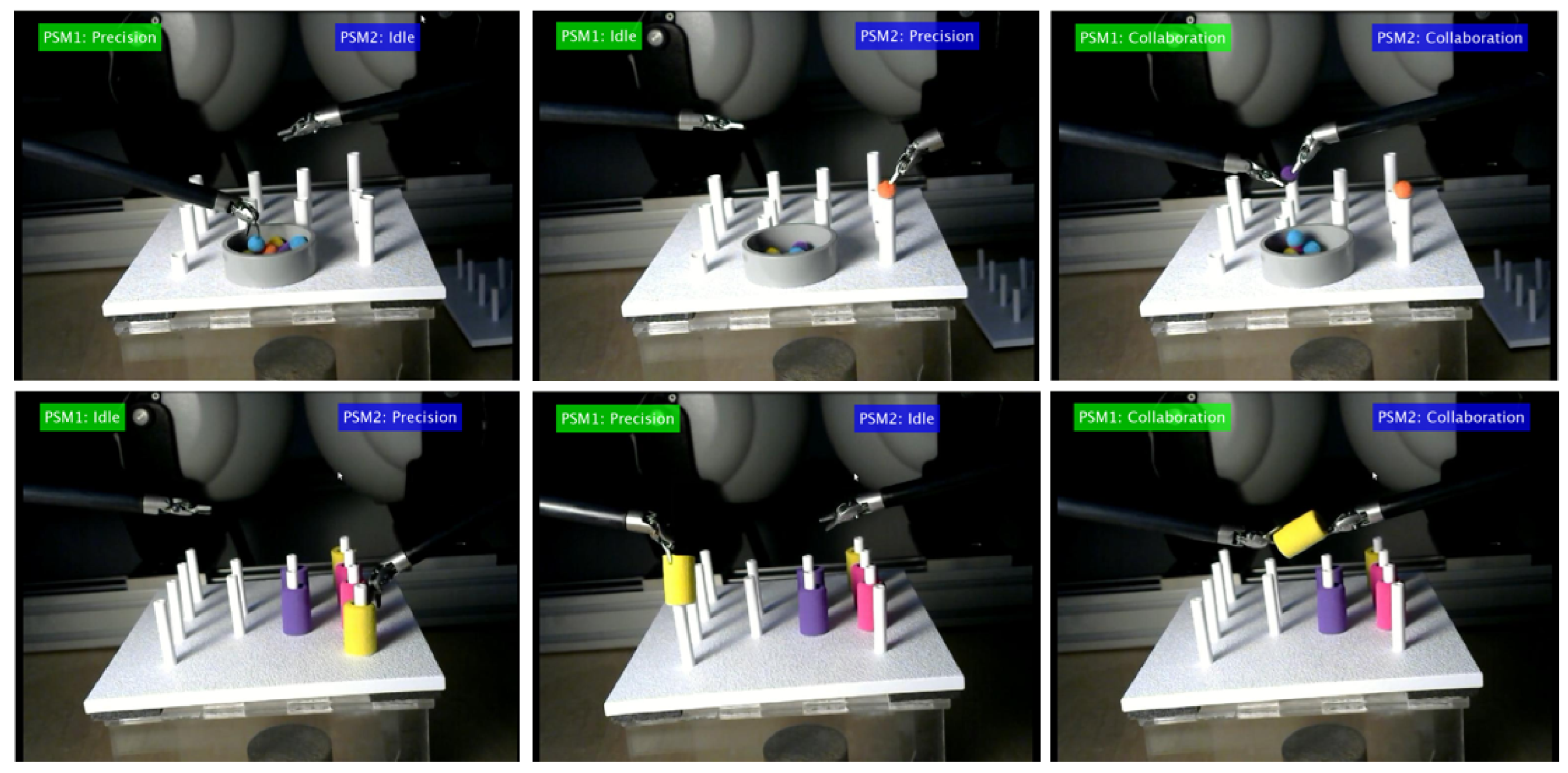

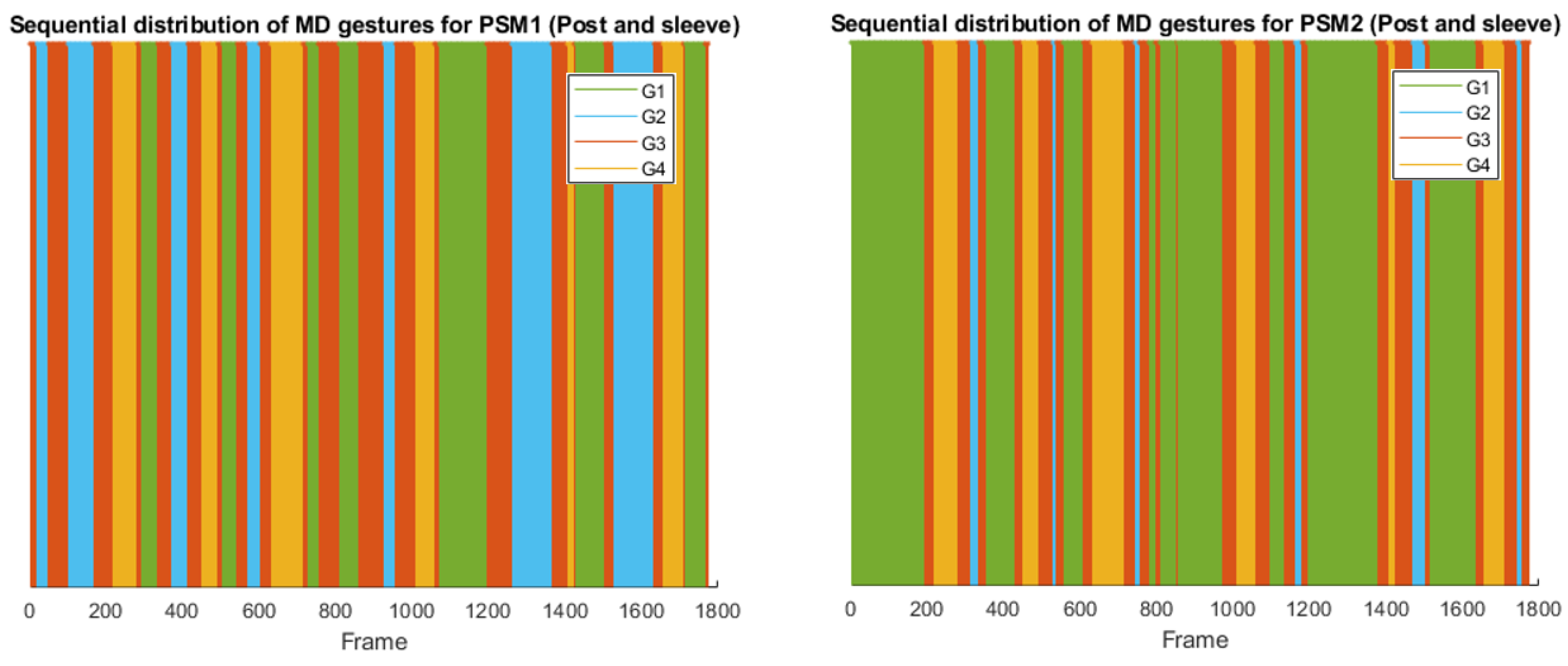

Figure 3.

Examples of MD gesture annotations

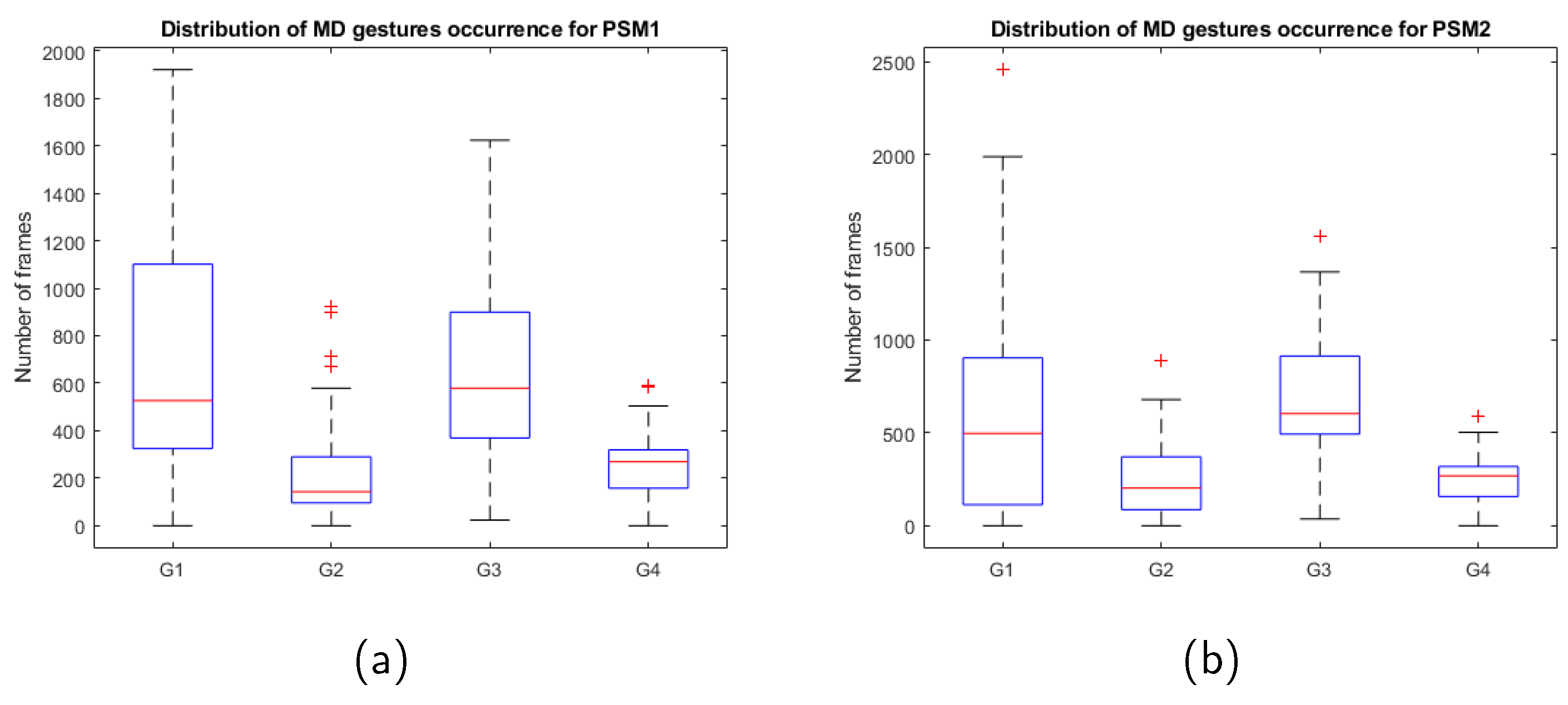

Figure 4.

Distribution of the gestures occurrence for the MD gestures for (a) PSM1 and (b) PSM2.

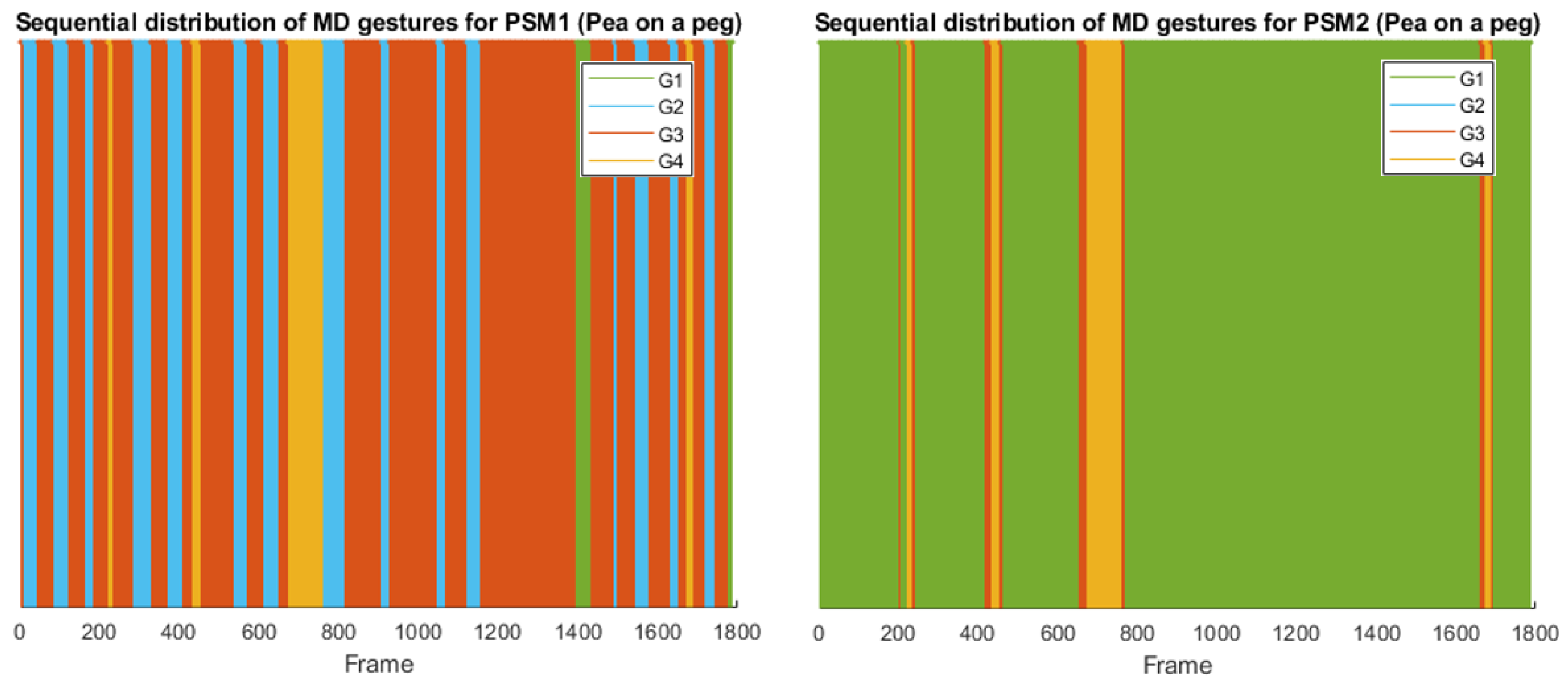

Figure 5.

Sequential distribution of the MD gestures along a complete trial of a pea on a peg task for PSM1 (left) and PSM2 (right)

Figure 6.

Sequential distribution of the MD gestures along a complete trial of post and sleeve task for PSM1 (left) and PSM2 (right)

Figure 7.

Examples of FGD gesture annotations

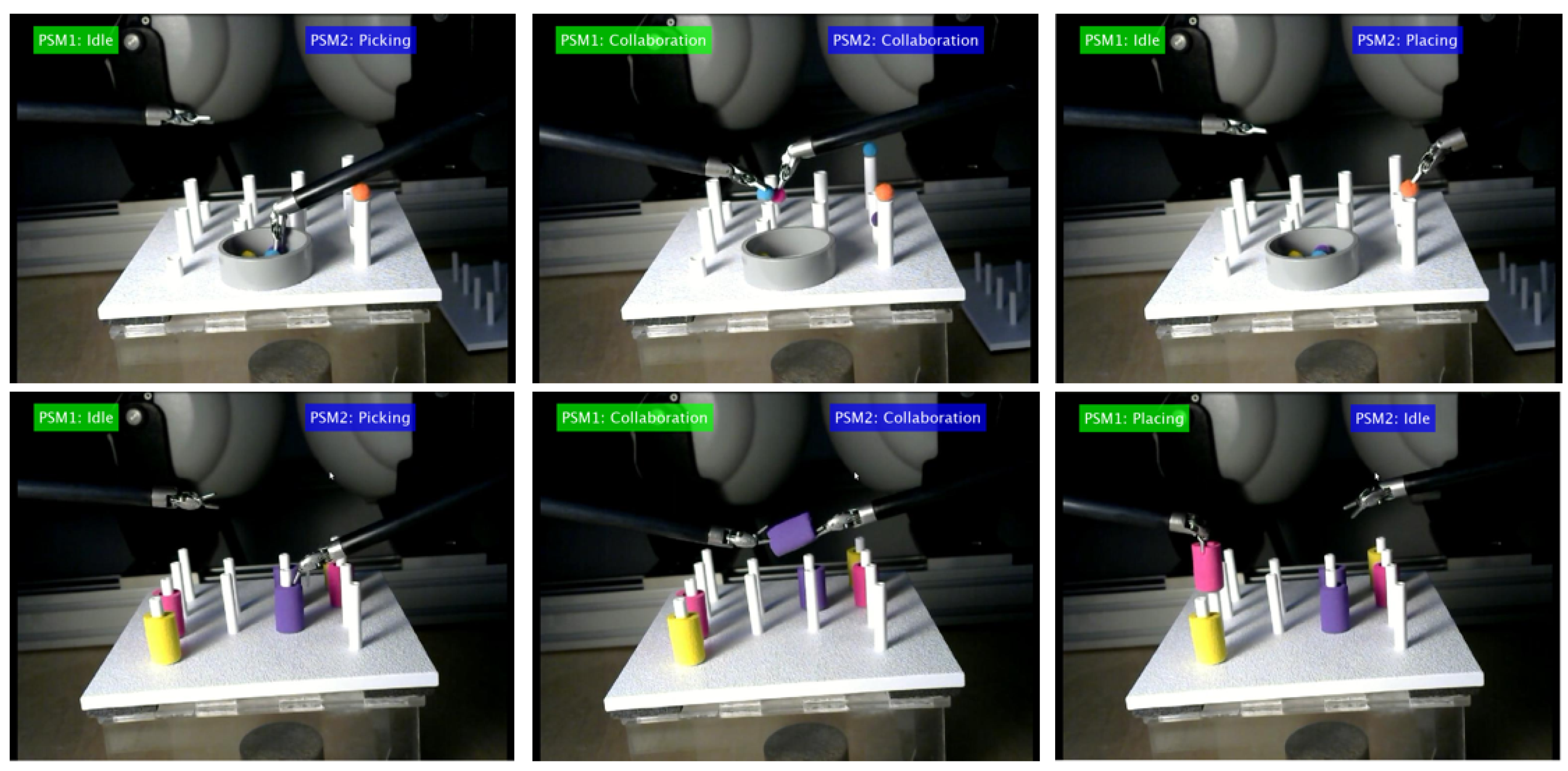

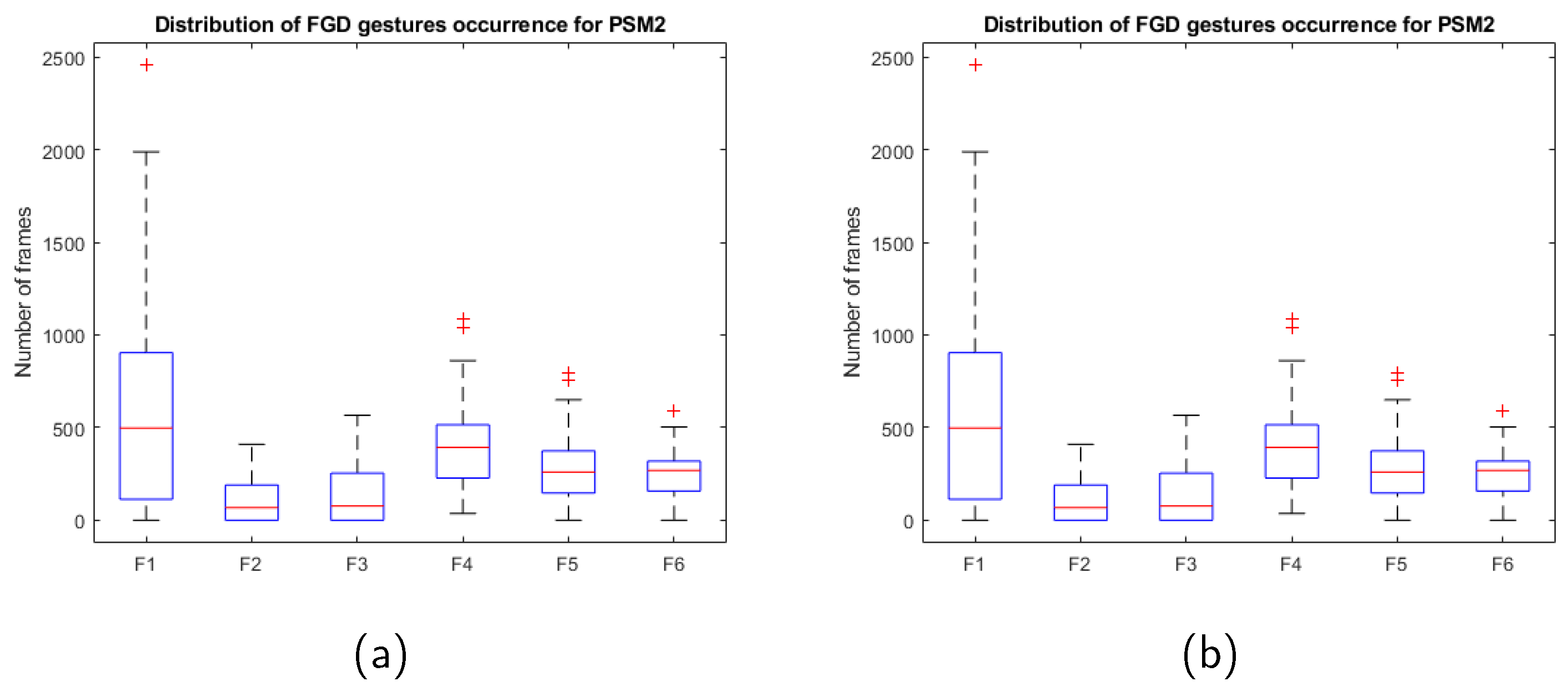

Figure 8.

Distribution of the occurrence for the FGD gestures for (a) PSM1 and (b) PSM2.

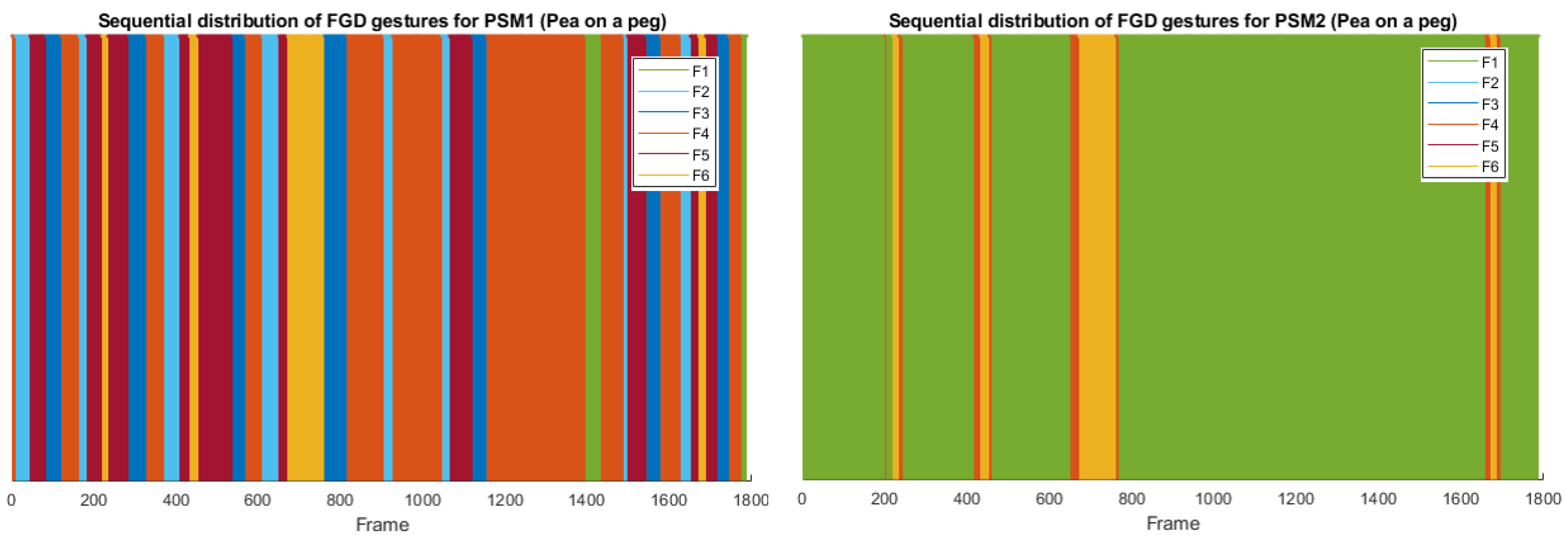

Figure 9.

Sequential distribution of the FGD gestures along a complete trial of a pea on a peg task for PSM1 (left) and PSM2 (right)

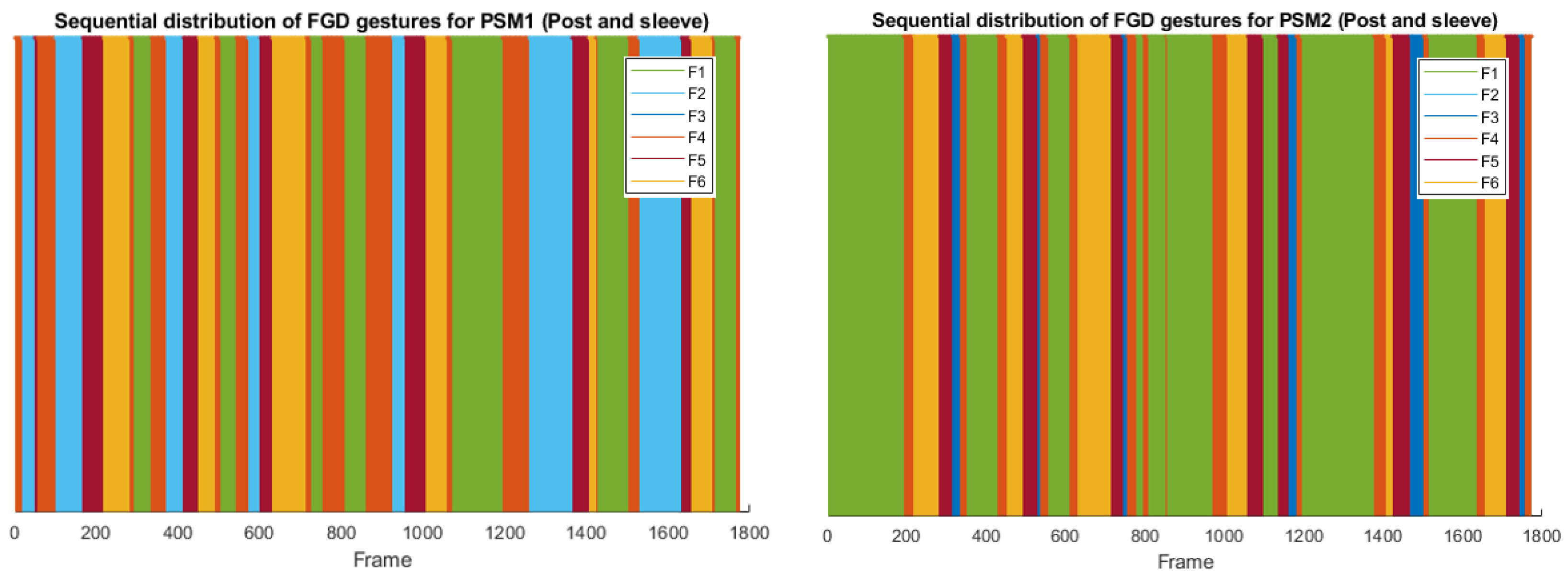

Figure 10.

Sequential distribution of the FGD gestures along a complete trial of post and sleeve task for PSM1 (left) and PSM2 (right)

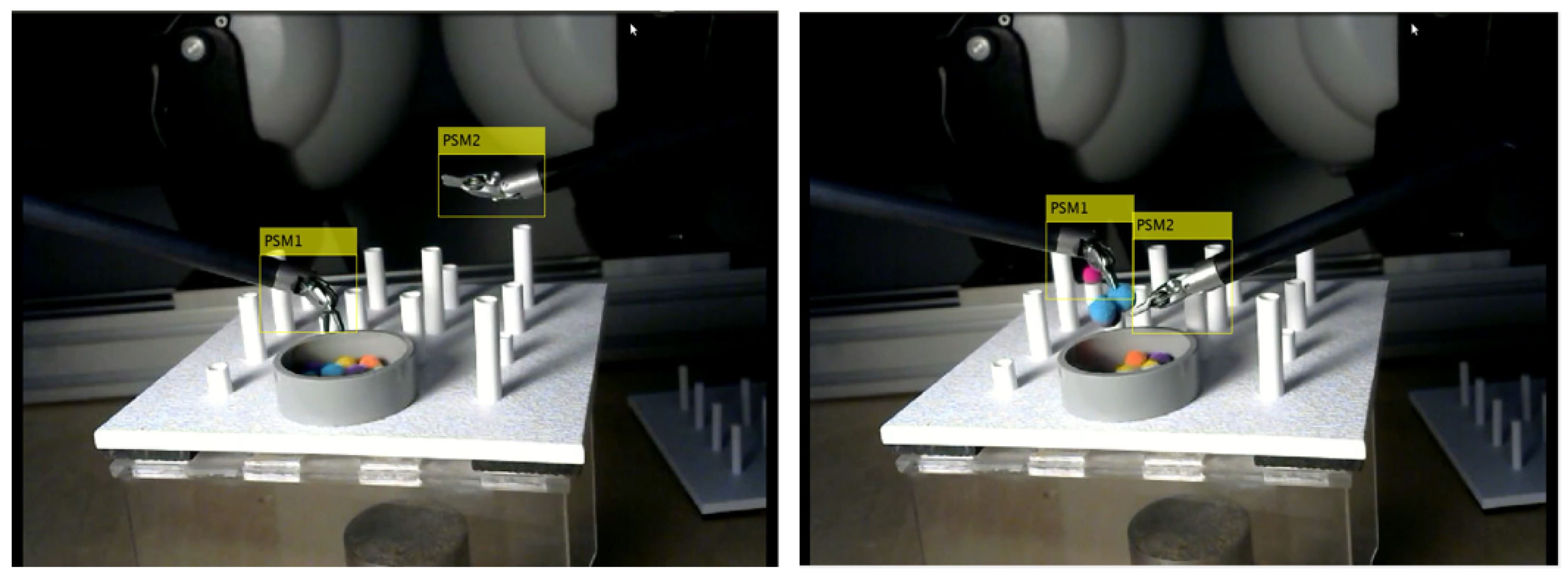

Figure 11.

Examples of the instruments bounding boxes annotations of ROSMAT24.

Figure 12.

RNN model based on a bi-directional LSTM network for gesture segmentation

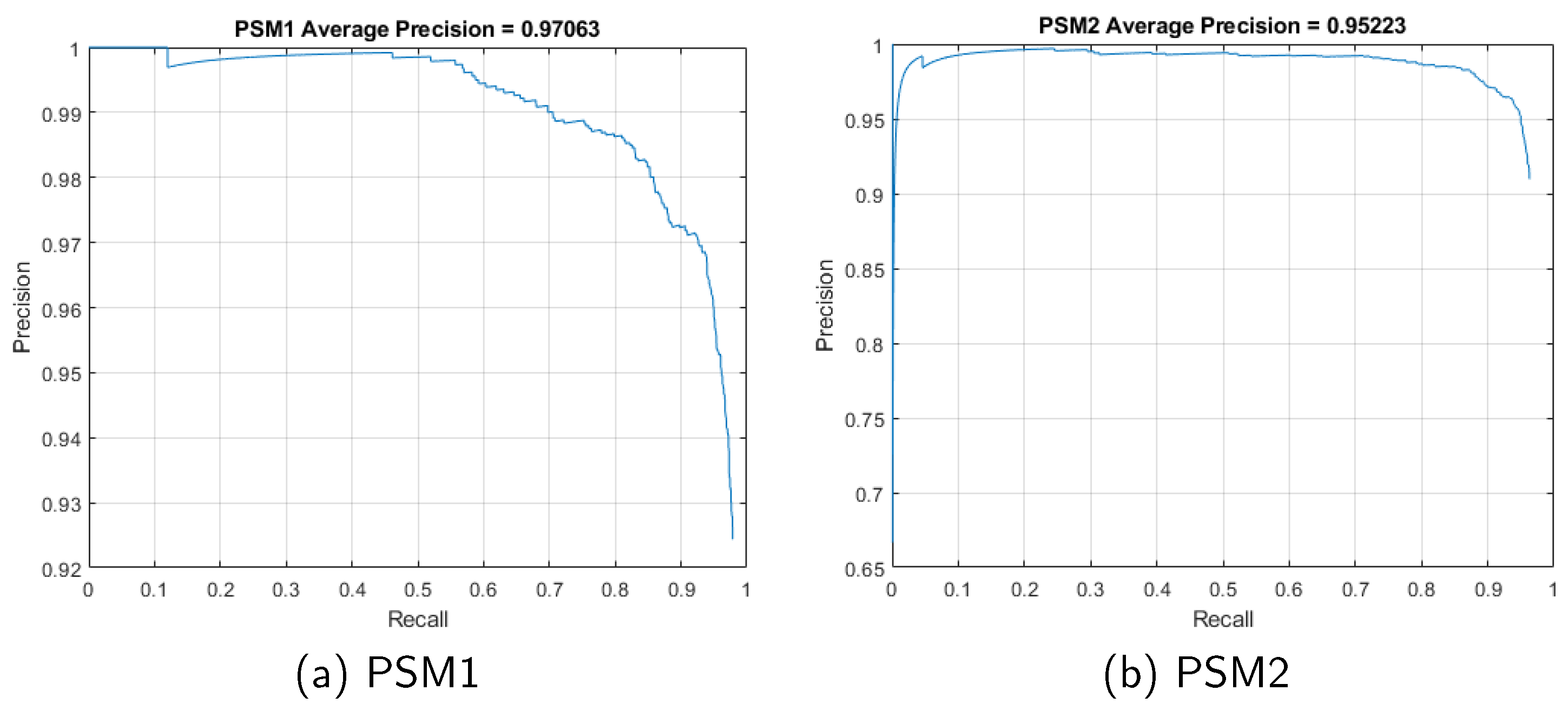

Figure 13.

Precision-recall curve for the LOTO cross-validation scheme using CSPDarknet53 architecture

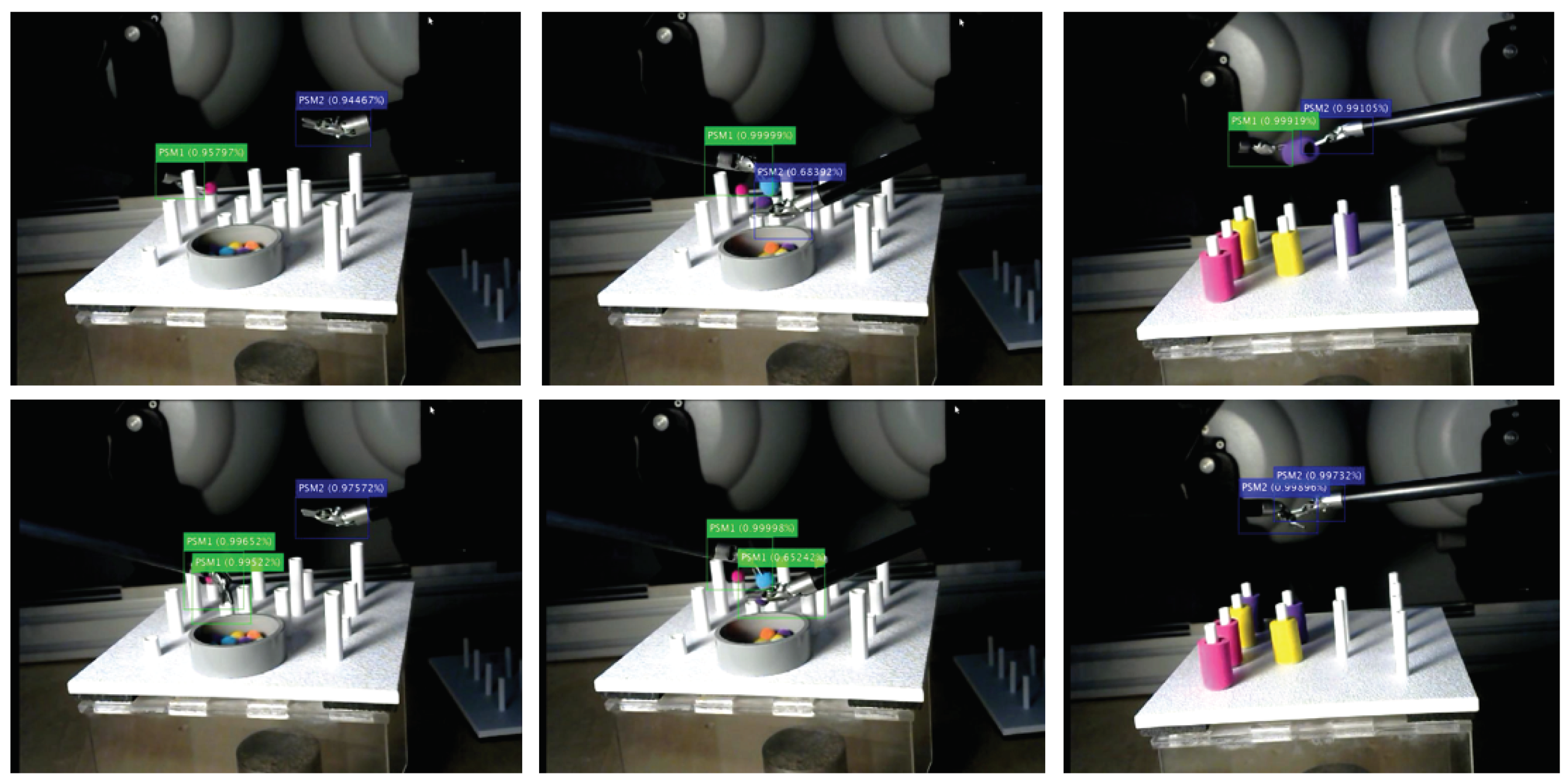

Figure 14.

Examples of correct detections (top images) and incorrect detections (bottom images)

Table 1.

Protocol of the task pea on a peg and post and sleeve of the ROSMA dataset

| | Post and sleeve | Pea on a peg |
| --- | --- | --- |
| Goal | To move the colored sleeves from side to side of the board. | To put the beads on the 14 pegs of the board. |
| Starting position | The board is placed with the peg rows in a vertical position (from left to right: 4-2-2-4). The six sleeves are positioned over the 6 pegs on one of the sides of the board. | All beads are on the cup. |
| Procedure | The subject has to take a sleeve with one hand, pass it to the other hand, and place it over a peg on the opposite side of the board. If a sleeve is dropped, it is considered a penalty and it cannot be taken back. | The subject has to take the beads one by one out of the cup and place them on top of the pegs. For the trials performed with the right hand, the beads are placed on the right side of the board, and vice versa. If a bead is dropped, it is considered a penalty and it cannot be taken back. |
| Repetitions | Six trials: three from right to left, and other three from left to right. | Six trials: three placing the beads on the pegs of the right side of the board, and other three on the left side. |

Table 2.

Description of the ROSMAG40 dataset distribution

| User ID | Task | Dominant tool | Annotated videos |
| --- | --- | --- | --- |
| X01 | Pea on a Peg | PSM1 | 2 |
| X01 | Pea on a Peg | PSM2 | 2 |
| X01 | Post and sleeve | PSM1 | 2 |
| X01 | Post and sleeve | PSM2 | 2 |
| X02 | Pea on a Peg | PSM1 | 2 |
| X02 | Pea on a Peg | PSM2 | 2 |
| X02 | Post and sleeve | PSM1 | 2 |
| X02 | Post and sleeve | PSM2 | 2 |
| X06 | Pea on a Peg | PSM1 | 2 |
| X06 | Pea on a Peg | PSM2 | 2 |
| X06 | Post and sleeve | PSM1 | 2 |
| X06 | Post and sleeve | PSM2 | 2 |
| X07 | Pea on a Peg | PSM1 | 2 |
| X07 | Pea on a Peg | PSM2 | 2 |
| X07 | Post and sleeve | PSM1 | 2 |
| X07 | Post and sleeve | PSM2 | 2 |
| X08 | Pea on a Peg | PSM1 | 2 |
| X08 | Pea on a Peg | PSM2 | 2 |
| X08 | Post and sleeve | PSM1 | 2 |
| X08 | Post and sleeve | PSM2 | 2 |
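The distribution in Table 2 is the basis for the leave-one-out schemes reported in the results tables. As a minimal sketch, assuming each annotated video is described by a (user, task, dominant tool) record, the LOUO, LOSO, and LOPO folds can be generated by holding out one value of the corresponding field at a time. The `Trial` type and the `leave_one_out` helper are hypothetical names introduced for illustration and are not part of ROSMA. Treating each task as one supertrial follows the row labels of the LOSO results table.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Trial:
    user: str      # e.g. "X01"
    task: str      # "Pea on a Peg" or "Post and sleeve"
    dominant: str  # "PSM1" or "PSM2"
    index: int     # 1 or 2: two annotated videos per combination


# Rebuild the 40 annotated videos listed in Table 2.
USERS = ["X01", "X02", "X06", "X07", "X08"]
TASKS = ["Pea on a Peg", "Post and sleeve"]
TOOLS = ["PSM1", "PSM2"]
TRIALS = [Trial(u, t, p, i) for u, t, p, i in product(USERS, TASKS, TOOLS, (1, 2))]


def leave_one_out(trials, field):
    """Yield (held_out_value, train, test) for each distinct value of `field`."""
    values = sorted({getattr(t, field) for t in trials})
    for held_out in values:
        train = [t for t in trials if getattr(t, field) != held_out]
        test = [t for t in trials if getattr(t, field) == held_out]
        yield held_out, train, test


louo = list(leave_one_out(TRIALS, "user"))      # one fold per user
loso = list(leave_one_out(TRIALS, "task"))      # one fold per task (supertrial)
lopo = list(leave_one_out(TRIALS, "dominant"))  # one fold per dominant PSM
```

Because the train and test lists of a fold never share a value of the held-out field, each scheme measures generalization to an unseen user, task, or dominant tool.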

Table 3.

Description of ROSMAG40 annotations for MD gestures

| Gesture ID | Gesture label | Gesture description | No. frames PSM1 | No. frames PSM2 |
| --- | --- | --- | --- | --- |
| G1 | Idle | The instrument is in a resting position | 28395 (38.98%) | 2583 (35.46%) |
| G2 | Precision | The instrument is performing an action that requires an accurate motion of the tip. | 9062 (12.43%) | 9630 (13.1%) |
| G3 | Displacement | The instrument is moving with or without an object on the tip | 24871 (34.14%) | 26865 (36.42%) |
| G4 | Collaboration | Both instruments are collaborating on the same task. | 10515 (14.53%) | 10515 (14.53%) |
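The frame counts and percentages in Table 3 come from counting one gesture label per frame and per tool. The following is a minimal sketch of that bookkeeping, assuming the annotations for one tool are available as a plain list of gesture IDs. The `gesture_distribution` helper and the toy label sequences are hypothetical and are not taken from ROSMAG40.

```python
from collections import Counter

# MD gesture IDs and labels as listed in Table 3.
MD_LABELS = {"G1": "Idle", "G2": "Precision", "G3": "Displacement", "G4": "Collaboration"}


def gesture_distribution(frame_labels):
    """Return {gesture_id: (frame_count, percentage)} for one tool's label sequence."""
    if not frame_labels:
        raise ValueError("frame_labels must contain at least one frame")
    counts = Counter(frame_labels)
    total = len(frame_labels)
    return {g: (counts[g], round(100.0 * counts[g] / total, 2)) for g in MD_LABELS}


# Toy per-frame annotations (not real data): index i is the same video frame in both lists.
psm1 = ["G1", "G1", "G3", "G2", "G4", "G4", "G3", "G1"]
psm2 = ["G3", "G3", "G2", "G1", "G4", "G4", "G1", "G1"]

dist_psm1 = gesture_distribution(psm1)
dist_psm2 = gesture_distribution(psm2)
```

In the toy sequences both tools carry G4 on the same frames, which mirrors the identical Collaboration counts reported for PSM1 and PSM2, while all other gestures are free to differ between the two tools.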

Table 4.

Description of ROSMAG40 annotations for FGD gestures

| Gesture ID | Gesture label | Gesture description | No. frames PSM1 | No. frames PSM2 |
| --- | --- | --- | --- | --- |
| F1 | Idle | The instrument is in a resting position | 28395 (38.98%) | 2583 (35.46%) |
| F2 | Picking | The instrument is picking an object. | 3499 (4.8%) | 4287 (5.8%) |
| F3 | Placing | The instrument is placing an object on a peg. | 5563 (7.63%) | 5343 (7.3%) |
| F4 | Free motion | The instrument is moving without carrying anything at the tool tip. | 15813 (21.71%) | 16019 (21.99%) |
| F5 | Load motion | The instrument is moving holding an object. | 9058 (12.43%) | 10846 (14.43%) |
| F6 | Collaboration | Both instruments are collaborating on the same task. | 10515 (14.53%) | 10515 (14.53%) |

Table 5.

Description of the ROSMAT24 dataset annotations

| Video | No. frames | Video | No. frames |
| --- | --- | --- | --- |
| X01 Pea on a Peg 01 | 1856 | X03 Pea on a Peg 01 | 1909 |
| X01 Pea on a Peg 02 | 1532 | X03 Pea on a Peg 02 | 1691 |
| X01 Pea on a Peg 03 | 1748 | X03 Pea on a Peg 03 | 1899 |
| X01 Pea on a Peg 04 | 1407 | X03 Pea on a Peg 04 | 2631 |
| X01 Pea on a Peg 05 | 1778 | X03 Pea on a Peg 05 | 1587 |
| X01 Pea on a Peg 06 | 2040 | X03 Pea on a Peg 06 | 2303 |
| X02 Pea on a Peg 01 | 2250 | X04 Pea on a Peg 01 | 2892 |
| X02 Pea on a Peg 02 | 2151 | X04 Pea on a Peg 02 | 1858 |
| X02 Pea on a Peg 03 | 1733 | X04 Pea on a Peg 03 | 2905 |
| X02 Pea on a Peg 04 | 2640 | X04 Pea on a Peg 04 | 2265 |
| X02 Pea on a Peg 05 | 1615 | X01 Post and Sleeve 01 | 1911 |
| X02 Pea on a Peg 06 | 2328 | X11 Post and Sleeve 04 | 1990 |

Table 6.

Kinematic data variables from the dVRK used as input to the RNN for gesture segmentation

| Kinematic variable | PSM | No. features |
| --- | --- | --- |
| Tool orientation (x, y, z, w) | PSM1 | 4 |
| Tool orientation (x, y, z, w) | PSM2 | 4 |
| Linear velocity (x, y, z) | PSM1 | 3 |
| Linear velocity (x, y, z) | PSM2 | 3 |
| Angular velocity (x, y, z) | PSM1 | 3 |
| Angular velocity (x, y, z) | PSM2 | 3 |
| Wrench force (x, y, z) | PSM1 | 3 |
| Wrench force (x, y, z) | PSM2 | 3 |
| Wrench torque (x, y, z) | PSM1 | 3 |
| Wrench torque (x, y, z) | PSM2 | 3 |
| Distance between tools | - | 1 |
| Angle between tools | - | 1 |
| Total number of input features | - | 34 |
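The feature count in Table 6 can be checked by assembling one input sample. The sketch below is illustrative only and rests on two assumptions that the text does not confirm: the distance between tools is taken as the Euclidean distance between the tool-tip positions (which are used only to derive that scalar and are not fed to the network), and the angle between tools is taken as the angle of the relative rotation between the two orientation quaternions. All function names and dictionary keys are hypothetical.

```python
import math


def distance_between_tools(p1, p2):
    """Euclidean distance between the two tool-tip positions (x, y, z)."""
    return math.dist(p1, p2)


def angle_between_tools(q1, q2):
    """Angle (rad) of the relative rotation between two unit quaternions (x, y, z, w)."""
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return 2.0 * math.acos(min(1.0, dot))  # clamp guards against rounding above 1


def build_features(psm1, psm2):
    """Concatenate the Table 6 variables into one 34-element list.

    Each argument is a dict with keys: orientation (4), linear_velocity (3),
    angular_velocity (3), force (3), torque (3), and position (3).
    """
    keys = ["orientation", "linear_velocity", "angular_velocity", "force", "torque"]
    features = []
    for key in keys:  # same order as Table 6: PSM1 first, then PSM2
        features += list(psm1[key]) + list(psm2[key])
    features.append(distance_between_tools(psm1["position"], psm2["position"]))
    features.append(angle_between_tools(psm1["orientation"], psm2["orientation"]))
    return features


# Made-up sample values, used only to exercise the functions.
zero3 = (0.0, 0.0, 0.0)
example_psm1 = {"orientation": (0.0, 0.0, 0.0, 1.0), "linear_velocity": zero3,
                "angular_velocity": zero3, "force": zero3, "torque": zero3,
                "position": (0.0, 0.0, 0.0)}
example_psm2 = {"orientation": (0.0, 0.0, 1.0, 0.0), "linear_velocity": zero3,
                "angular_velocity": zero3, "force": zero3, "torque": zero3,
                "position": (3.0, 4.0, 0.0)}
features = build_features(example_psm1, example_psm2)
```

The resulting list has 2 × (4 + 3 + 3 + 3 + 3) + 2 = 34 entries, matching the total in Table 6.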

Table 7.

Results for Leave-One-User-Out (LOUO) cross-validation scheme

| User left out ID | PSM1 mAP (MD) | PSM2 mAP (MD) | PSM1 mAP (FGD) | PSM2 mAP (FGD) |
| --- | --- | --- | --- | --- |
| X1 | 48.9% | 39% | 46.26% | 23.36% |
| X2 | 58.8% | 64.9% | 48.16% | 51.39% |
| X3 | 50.4% | 64.2% | 39.71% | 51.24% |
| X4 | 63.0% | 61.2% | 54.05% | 49.08% |
| X5 | 54.6% | 53.6% | 52.34% | 52.7% |
| Mean | 55.14% | 56.58% | 48.01% | 45.55% |
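The Mean row is the arithmetic mean over the five folds. As a small worked example for the two MD columns, using only values copied from Table 7, the mean and the fold-to-fold spread can be computed as follows. The variable names are illustrative.

```python
from statistics import fmean

# Per-fold mAP (%) for the MD gestures, copied from Table 7 (users X1 to X5).
louo_md = {
    "PSM1": [48.9, 58.8, 50.4, 63.0, 54.6],
    "PSM2": [39.0, 64.9, 64.2, 61.2, 53.6],
}

# Mean over folds, rounded to two decimals as in the table.
mean_md = {tool: round(fmean(scores), 2) for tool, scores in louo_md.items()}

# Difference between the best and worst fold, a simple measure of user dependency.
spread_md = {tool: round(max(scores) - min(scores), 1) for tool, scores in louo_md.items()}
```

The spread for PSM2 corresponds to the range from 39% to 64.9% mAP between left-out users.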

Table 8.

Results for Leave-One-Supertrial-Out (LOSO) cross-validation scheme

| Supertrial left out | PSM1 mAP (MD) | PSM2 mAP (MD) | PSM1 mAP (FGD) | PSM2 mAP (FGD) |
| --- | --- | --- | --- | --- |
| Pea on a peg | 56.15% | 56% | 46.36% | 46.67% |
| Post and sleeve | 52.2% | 51.9% | 39.06% | 43.38% |

Table 9.

Results for Leave-One-PSM-Out (LOPO) cross-validation scheme

| Dominant PSM | PSM1 mAP (MD) | PSM2 mAP (MD) | PSM1 mAP (FGD) | PSM2 mAP (FGD) |
| --- | --- | --- | --- | --- |
| PSM1 | 56.53% | 67% | 24.11% | 37.33% |
| PSM2 | 65.7% | 67.5% | 52.47% | 54.68% |

Table 10.

Results for Leave-One-Trial-Out (LOTO) cross-validation scheme

| Dominant PSM | PSM1 mAP (MD) | PSM2 mAP (MD) | PSM1 mAP (FGD) | PSM2 mAP (FGD) |
| --- | --- | --- | --- | --- |
| Test data 1 | 64.65% | 77.35% | 56.46% | 58.99% |
| Test data 2 | 63.8% | 62.8% | 30.43% | 70.58% |
| Test data 3 | 53.26% | 55.58% | 53.26% | 61.6% |
| Test data 4 | 48.72% | 60.62% | 58.3% | 55.58% |
| Test data 5 | 60.51% | 66.84% | 71.39% | 46.67% |

Table 11.

Results for LOSO experimental setup

| Architecture | Test data | PSM1 mAP | PSM2 mAP |
| --- | --- | --- | --- |
| CSPDarknet53 | Post and sleeve | 70.92% | 84.66% |
| YOLOv4-tiny | Post and sleeve | 83.64% | 73.45% |
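Detection mAP values such as those in Table 11 depend on deciding when a predicted bounding box counts as a correct detection, which is usually done through the intersection over union (IoU) with the annotated box. The sketch below shows that matching step under stated assumptions: boxes are given as (x_min, y_min, x_max, y_max) in pixels, and the 0.5 threshold is a common default rather than a value stated in the text. The function names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, pred_tool, gt_box, gt_tool, threshold=0.5):
    """A detection counts only if it names the right tool and overlaps enough.

    `threshold` is an assumed value; the evaluation threshold is not given in the text.
    """
    return pred_tool == gt_tool and iou(pred_box, gt_box) >= threshold
```

Because the two instruments are annotated as separate classes, a box that is well placed but assigned to the wrong tool is rejected by `is_true_positive`, which is what makes the per-tool mAP columns meaningful.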

Table 12.

Results for LOTO experimental setup

| Architecture | Left tool mAP | Right tool mAP | Detection time |
| --- | --- | --- | --- |
| CSPDarknet53 | 97.06% | 95.22% | 0.0335 s (30 fps) |
| YOLOv4-tiny | 93.63% | 95.8% | 0.02 s (50 fps) |
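The frame rates in the last column of Table 12 follow directly from the per-frame detection times, since the rate is the reciprocal of the time per frame. A short worked check, with an illustrative helper name:

```python
def frames_per_second(detection_time_s):
    """Convert a per-frame detection time in seconds to a frame rate."""
    if detection_time_s <= 0:
        raise ValueError("detection time must be positive")
    return 1.0 / detection_time_s


fps_cspdarknet53 = frames_per_second(0.0335)  # about 29.85, reported as 30 fps
fps_yolov4_tiny = frames_per_second(0.02)     # reported as 50 fps
```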