Submitted: 17 February 2024

Posted: 19 February 2024


Abstract

Keywords:

1. Introduction

2. What is knowledge to us?

2.1. Considerations

“Many complex decision problems in petroleum exploration and production involve multiple conflicting objectives. … An effective way to express uncertainty is to formulate a range of values, with confidence levels assigned to numbers comprising the range. … Asset managers in the oil and gas industry are looking to new techniques such as portfolio management to determine the optimum diversified portfolio that will increase company value and reduce risk.”
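The second sentence of this quotation describes a common practice in petroleum risk analysis: summarizing an uncertain outcome as a range with confidence levels attached, often reported as P90/P50/P10. The following is a minimal Python sketch of that practice, assuming a hypothetical project whose undiscounted value is simulated by Monte Carlo. The function names, distributions, and parameter values are all illustrative assumptions and are not taken from the quoted source.

```python
import random
import statistics


def simulate_project_value(n_trials: int = 10_000, seed: int = 1) -> list[float]:
    """Monte Carlo draws of a hypothetical project's undiscounted value in $MM.

    Every distribution and parameter below is an illustrative assumption.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    outcomes = []
    for _ in range(n_trials):
        volume = rng.lognormvariate(3.0, 0.5)        # recoverable volume, MMbbl (assumed)
        margin = rng.uniform(15.0, 35.0)             # net margin, $/bbl (assumed)
        capex = rng.triangular(300.0, 600.0, 400.0)  # cost, $MM: low, high, mode (assumed)
        outcomes.append(volume * margin - capex)
    return outcomes


def confidence_range(values: list[float]) -> dict[str, float]:
    """Summarize outcomes as P90/P50/P10 in the exceedance convention."""
    deciles = statistics.quantiles(values, n=10)  # 9 cut points: 10th ... 90th percentiles
    return {
        "P90": deciles[0],  # 90% chance the outcome is at least this large
        "P50": deciles[4],  # median
        "P10": deciles[8],  # only a 10% chance the outcome exceeds this
    }


if __name__ == "__main__":
    for label, value in confidence_range(simulate_project_value()).items():
        print(f"{label}: {value:8.1f} $MM")
```

Here P90 is read in the exceedance sense used in much of the petroleum literature (a 90% chance that the outcome is at least this large), which is why it maps to the 10th percentile of the simulated values.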

“A portfolio is said to be efficient if no other portfolio has more value while having less or equal risk, and if no other portfolio has less risk while having equal or greater value. … a portfolio can be worth more or less than the sum of its component projects and there is not one best portfolio, but a family of optimal portfolios that achieve a balance between risk and value.”
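The definition of an efficient portfolio quoted above is a Pareto (non-dominance) condition on two criteria, and it can be checked mechanically. The sketch below assumes that each candidate portfolio is already summarized by one expected-value figure and one risk figure (for example, a standard deviation); the `Portfolio` type, its fields, and the sample numbers are hypothetical. It returns every portfolio that no other candidate beats, that is, the “family of optimal portfolios” the quotation describes rather than a single best choice.

```python
from typing import NamedTuple


class Portfolio(NamedTuple):
    name: str
    value: float  # expected value; higher is better (assumed scalar summary)
    risk: float   # e.g., standard deviation of value; lower is better (assumed)


def dominates(a: Portfolio, b: Portfolio) -> bool:
    """True if a has at least b's value at no more risk, and is strictly better in one."""
    no_worse = a.value >= b.value and a.risk <= b.risk
    strictly_better = a.value > b.value or a.risk < b.risk
    return no_worse and strictly_better


def efficient_portfolios(candidates: list[Portfolio]) -> list[Portfolio]:
    """Keep each candidate that no other candidate dominates (the efficient set)."""
    return [p for p in candidates if not any(dominates(q, p) for q in candidates)]


if __name__ == "__main__":
    candidates = [
        Portfolio("A", value=10.0, risk=2.0),
        Portfolio("B", value=12.0, risk=3.0),
        Portfolio("C", value=9.0, risk=3.0),   # A has more value and less risk
        Portfolio("D", value=12.0, risk=4.0),  # B has equal value and less risk
    ]
    print([p.name for p in efficient_portfolios(candidates)])  # ['A', 'B']
```

The pairwise check costs O(n²) comparisons, which is adequate for a handful of candidates. The quotation's further point, that a portfolio can be worth more or less than the sum of its projects, would require a model of interactions between projects, which this sketch does not attempt.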

“how to achieve the maximum benefit and minimum risk of dynamic multi-period portfolio is a worthy study problem in the future. … How to choose the preference function will be also a valuable research topic. … [and how] to evaluate the effectiveness of the established PO model.”

“[T]he grand aim of all science … is to cover the greatest possible number of empirical facts by logical deductions from the smallest possible number of hypotheses or axioms” (quoting Einstein, in [16], p. 173).

“It can scarcely be denied that the supreme goal of all theory is to make the irreducible basic elements as simple and as few as possible without having to surrender the adequate representation of a single datum of experience.”

“Black hole entropy is a concept with geometric root but with many physical consequences. … a black hole can be said to hide information. In ordinary physics entropy is a measure of missing information.”
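For reference, the quantities behind this quotation can be written out in their standard textbook forms; they are added here as background, not as part of the authors' argument. The Bekenstein–Hawking formula (see Bekenstein's Scholarpedia entry in the references) makes black hole entropy proportional to the horizon area A, and the Gibbs form of thermodynamic entropy differs from Shannon's measure of missing information H, in bits, only by the constant factor k_B ln 2.

```latex
\begin{aligned}
S_{\mathrm{BH}} &= \frac{k_B c^{3} A}{4 G \hbar} = k_B \frac{A}{4 \ell_P^{2}},
  & \ell_P^{2} &= \frac{G \hbar}{c^{3}}, \\
H &= -\sum_i p_i \log_2 p_i \ \text{(bits)},
  & S &= -k_B \sum_i p_i \ln p_i = (k_B \ln 2)\, H .
\end{aligned}
```

Here ℓ_P is the Planck length, G the gravitational constant, ħ the reduced Planck constant, c the speed of light, k_B Boltzmann's constant, and p_i the probabilities of the microstates.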

3. A case study of knowledge across selected disciplines

3.1. What is knowledge to Systems Engineering?

3.2. What is knowledge to Philosophers?

3.3. What is knowledge to Social Scientists?

3.3.1. What is knowledge to citizens making recommendations to authorities?

3.3.2. What is knowledge under Autocrats?

3.3.3. What is knowledge to consciousness?

3.4. What is knowledge to Information Theorists?

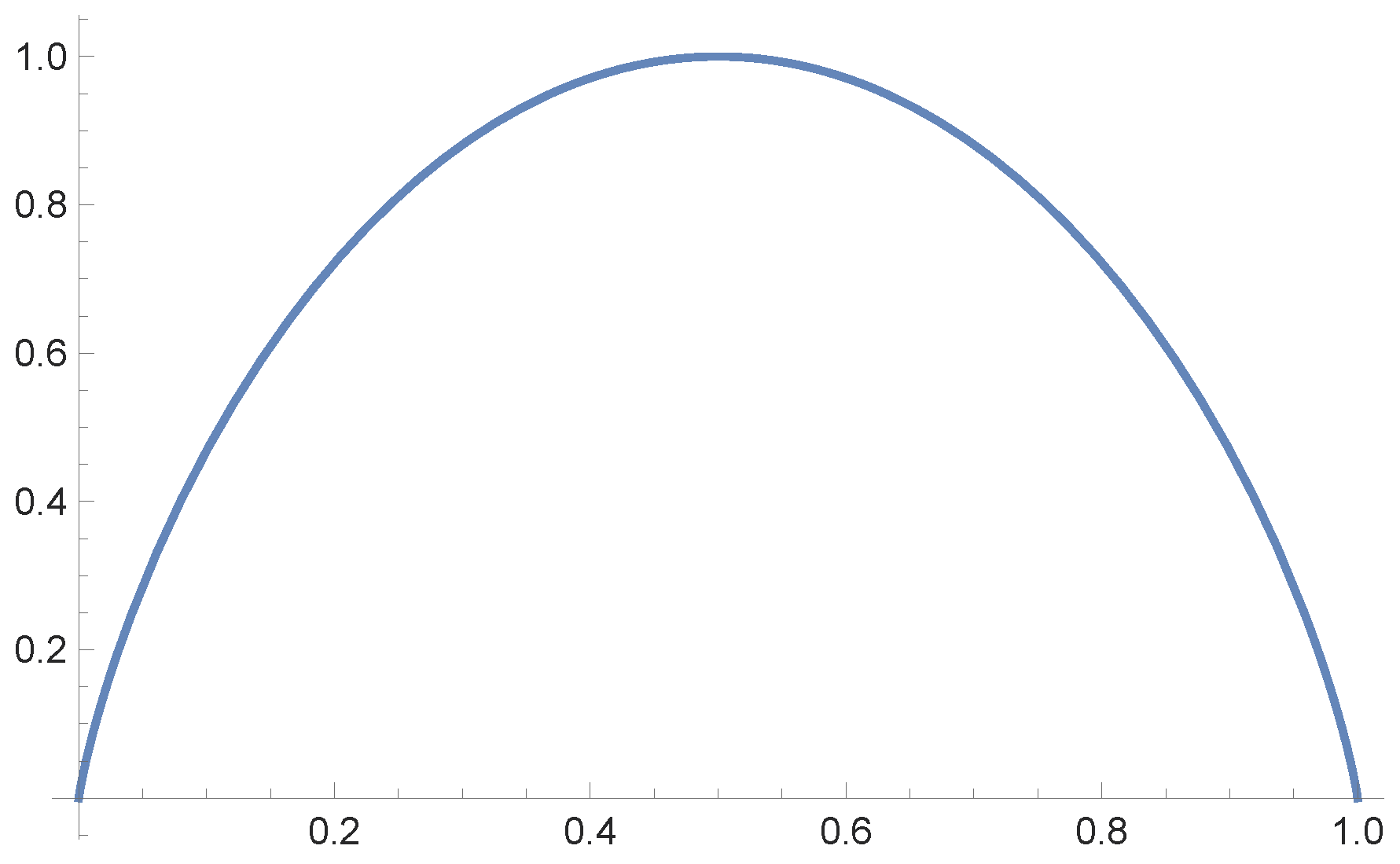

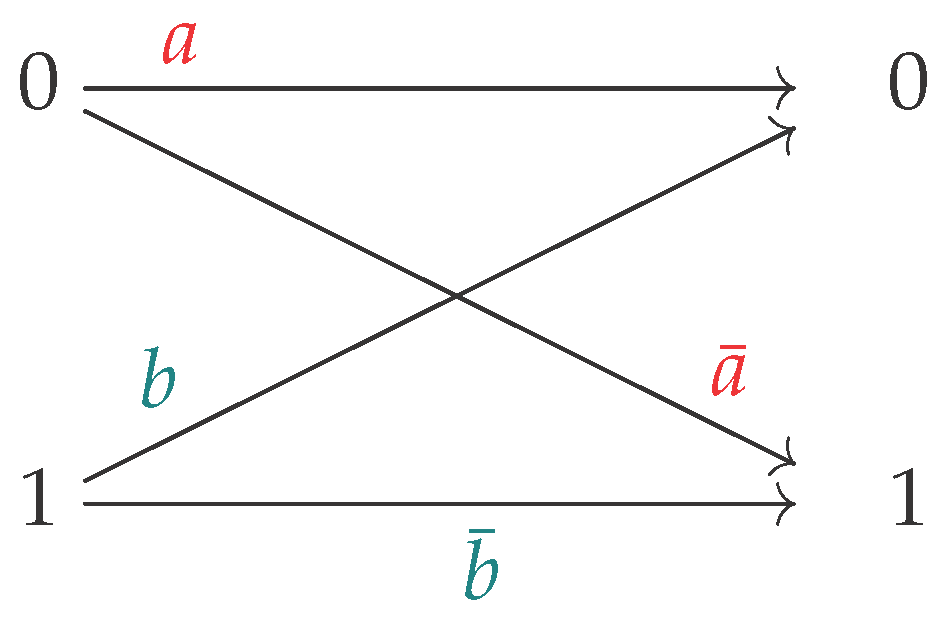

3.4.1. Intuition

3.4.2. Details

3.4.3. Information Theory is a natural theory

3.5. What is knowledge to Physicists?

4. Discussion

4.1. Systems Engineering

4.2. Philosophy

4.3. Social Science

4.3.1. Citizen recommendations to government agencies

4.3.2. Businesses

4.4. Consciousness

4.4.1. Authoritarianism

4.5. Information theory

4.6. Physics

4.7. Shannon holes

5. Conclusions

“Interdependence means that important behaviors will be highly correlated. However, the evidence for complementarity is scarce.”

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ALPS | Advanced Liquid Processing System |
| APA | American Psychological Association |
| BERT | Bidirectional Encoder Representations from Transformers |
| CAB | Citizens Advisory Board |
| DOE | Department of Energy |
| IT | Information Theory |
| MLM | Masked Language Modeling |
| NIH | National Institutes of Health |
| NRL | Naval Research Laboratory |
| NSF | National Science Foundation |
| NSP | Next Sentence Prediction |
| ONR | Office of Naval Research |
| SE | Systems Engineering |
| SRS | Savannah River Site |
| TEPCO | Tokyo Electric Power Company |

References

- Lawless, W.F.; Moskowitz, I.S.; Doctor, K.Z. A Quantum-like Model of Interdependence for Embodied Human–Machine Teams: Reviewing the Path to Autonomy Facing Complexity and Uncertainty, Entropy, 2023, 25, 1323.

- Lawless, W.F. Interdependent Autonomous Human-Machine Systems: The Complementarity of Fitness, Vulnerability & Evolution, Entropy, 2022, 24(9): 1308, doi:10.3390/e24091308.

- Cooke, N.J. & Lawless, W.F. Effective Human-Artificial Intelligence Teaming, in Systems Engineering and Artificial Intelligence, Lawless, W.F.; Mittu, R.; Sofge, D.A.; Shortell, T.; McDermott, T.A. (Editors), Springer, 2021.

- Conant, R.C. Laws of information which govern systems. IEEE Trans. Syst. Man Cybern., 1976, 6, 240–255.

- Kuhn, T. The essential tension, University of Chicago Press, 1977.

- Thornton, S. Karl Popper, The Stanford Encyclopedia of Philosophy, Edward N. Zalta & Uri Nodelman (eds.), retrieved 8/5/2023 from https://plato.stanford.edu, 2023.

- Ioannidis, J.P.A. Most psychotherapies do not really work, but those that might work should be assessed in biased studies, Epidemiology and Psychiatric Sciences, 2016, 25(5): 436–438, retrieved 10/10/2023 from doi:10.1017/S2045796015000888.

- Suslick, S.B. & Schiozer, D.J. Risk analysis applied to petroleum exploration and production: an overview, Journal of Petroleum Science and Engineering, 2004, 44(1–2): 1–9, ISSN 0920-4105.

- Markowitz, H.M. Portfolio Selection, The Journal of Finance, 1952, 7(1): 77–91.

- Hays, W.L. Statistics, 4th Ed., Holt, Rinehart and Winston, Inc., 1988.

- DoD. Pentagon Press Secretary John F. Kirby and Air Force Lt. Gen. Sami D. Said Hold a Press Briefing, 11/3/2021, https://www.defense.gov/News/Article/2832634, 2021.

- Chen, Y. et al. Swarm Intelligence Algorithms for Portfolio Optimization Problems: Overview and Recent Advances, Mobile Information Systems, 2022.

- Baker, A. Simplicity, The Stanford Encyclopedia of Philosophy, Summer 2022 Edition, Edward N. Zalta, ed., https://plato.stanford.edu/archives/sum2022/entries/simplicity, 2022.

- Isaacson, W. How Einstein Reinvented Reality, Scientific American, 2015, 313(3): 38–45, doi:10.1038/scientificamerican0915-38.

- Faraoni, V. and Giusti, A. Why Einstein must be wrong: In search of the theory of gravity, Phys.org, republished from The Conversation, https://phys.org/news/2023-09-einstein-wrong-theory-gravity.html, 2023.

- Nash, L. The Nature of the Natural Sciences, Little, Brown, 1963.

- Robinson, A. Did Einstein really say that? As the physicist’s collected papers reach volume 15, Andrew Robinson sifts through the quotes attributed to him, Nature, 2018, 557, 30.

- Cho, A. Antimatter falls down, just like ordinary matter. Test confirms that gravity pulls the same on hydrogen and anti-hydrogen, Science: Physics News, 2023.

- Bekenstein, J.D. Bekenstein-Hawking entropy, Scholarpedia, 2008, 3(10):7375.

- Systems Engineering Glossary, Systems Engineering, https://sebokwiki.org/wiki/Systems_Engineering_(glossary), 2023.

- Checkland, P. Systems Thinking, Systems Practice, New York, NY, USA, John Wiley & Sons, 1999.

- Senge, P.M. The fifth discipline: The Art & Practice of the Learning Organization, New York, NY, USA, Doubleday Business, 1990.

- Steup, M. & Neta, R. Epistemology, The Stanford Encyclopedia of Philosophy, Edward N. Zalta (ed.), https://plato.stanford.edu/archives/fall2020/entries/epistemolog, 2020.

- Bednar, R.L. & Peterson, S.R. Self-esteem: Paradoxes and innovations in clinical practice, 2nd edition, Washington, DC: American Psychological Association (APA), 1995.

- Baumeister, R.F. & Vohs, K.D. Self-Regulation, Ego Depletion, and Motivation, Social and Personality Psychology Compass, 2007, 1(1): 115–128.

- Greenwald, A.G. et al. Measuring individual differences in implicit cognition: The implicit association test, Journal of Personality and Social Psychology, 1998, 74(6), 1464–1480.

- Shu, L.L.; Mazar, N.; Gino, F.; Ariely, D.; Bazerman, M.H. Signing at the Beginning Makes Ethics Salient and Decreases Dishonest Self-Reports in Comparison to Signing at the End, Proceedings of the National Academy of Sciences, 2012, 109: 15197–15200.

- Baumeister, R.F. et al. Exploding the self-esteem myth, Scientific American, 2005, 292(1): 84–91.

- Blanton, H. et al. Strong Claims and Weak Evidence: Reassessing the Predictive Validity of the IAT, Journal of Applied Psychology, 2009, 94(3): 567–582.

- Hagger, M.S. et al. A Multilab Preregistered Replication of the Ego-Depletion Effect, Perspectives on Psychological Science, 2016, 11(4).

- Berenbaum, M.R. Retraction for Shu et al., Signing at the beginning makes ethics salient and decreases dishonest self-reports in comparison to signing at the end, PNAS, 2021, 118(38), e2115397118.

- Dobbin, F. & Kalev, A. Why Diversity Programs Fail, Harvard Business Review, https://hbr.org/2016/07/why-diversity-programs-fail, 2016.

- Paluck, E.L. et al. Prejudice Reduction: Progress and Challenges, Annual Review of Psychology, 2021, 72: 533–560, https://www.annualreviews.org/doi/pdf/10.1146/annurev-psych-071620-030619.

- Tetlock, P.E. & Gardner, D. Superforecasting: The Art and Science of Prediction, Crown, 2015.

- Beck, U. Risk society: towards a new modernity, Sage Publications, 1992.

- MacKenzie, D. Trading at the Speed of Light: How Ultrafast Algorithms Are Transforming Financial Markets, Princeton University Press, 2021.

- Barron, J. Visiting New York? Make Sure A.I. Didn’t Write Your Guidebook, The New York Times, 2023.

- Nogrady, B. Is Fukushima wastewater release safe? What the science says, Nature, 2023, 618: 894–895.

- Buesseler, K.O. Opening the floodgates at Fukushima, Science, 2020, 369: 621–622.

- Ministry of Economy, Trade and Industry (METI). Reactor Decommissioning, Contaminated Water and Treated Water (in Japanese), https://www.meti.go.jp/earthquake/nuclear, 2023.

- Mabon, L. & Kawabe, M. Bring voices from the coast into the Fukushima treated water debate, Proceedings of the National Academy of Sciences, 2022, 119, e2205431119.

- Brown, A. Just Like That, Tons of Radioactive Waste Is Heading for the Ocean, The New York Times, retrieved 9/14/2023 from https://www.nytimes.com/2023/08/22/opinion/japan-fukushima-radioactive-water-dumping, 2023.

- Smith, J.; Marks, N.; Irwin, T. The risks of radioactive wastewater release. The wastewater release from the Fukushima Daiichi nuclear plant is expected to have negligible effects on people and the ocean, Science (Perspectives), 2023, 382(6666): 31–33.

- Japan Fisheries Co-operative. Japan Fisheries Co-operative Has Met Prime Minister Kishida Over the Handling of Treated Water by ALPS (in Japanese), retrieved 9/14/2023 from https://www.zengyoren.or.jp/news, press item 20230821, 2023.

- Takenaka, K.; Pollard, M.Q. Japan complains of harassment calls from China over Fukushima water release, Reuters, retrieved 9/14/2023 from https://www.reuters.com/world/asia-pacific/japan-says-harassment-calls-china-regarding-fukushima-water-release-extremely-2023-08-28/, 2023.

- Katayama, N. Outraged with the Government and TEPCO, Residents of Tokyo and Five Prefectures File a Lawsuit Seeking an Injunction on Treated Water Release (in Japanese), Tokyo Shinbun, retrieved 9/14/2023 from https://www.tokyo-np.co.jp/article/275965, 2023.

- Harris, M. History and Significance of the Emic/Etic Distinction, Annual Review of Anthropology, 1976, 5: 329–350.

- Evans-Pritchard, E.E. Witchcraft, oracles and magic among the Azande, Clarendon Press, 1937.

- Zucker, L.G. The role of institutionalization in cultural persistence, American Sociological Review, 1977, 726–743.

- Skillcate AI. BERT for Dummies: State-of-the-art Model from Google, Medium, google-42639953e769, 2022.

- Pfeffer, J. The Role of the General Manager in the New Economy: Can We Save People from Technology Dysfunctions?, in The Future of Management in an AI World. Redefining Purpose and Strategy in the Fourth Industrial Revolution, Canals, J. & Heukamp, F. (Editors), Springer International Publishing, 2020, 67–92.

- Luttwak, E. The clue China is preparing for war. Xi is laying the groundwork while the West looks away, Unherd, retrieved 7/23/2023 from https://unherd.com/2023/07/the-clue-china-is-preparing-for-war/, 2023, 7/19.

- Finkel, E. If AI becomes conscious, how will we know? Scientists and philosophers are proposing a checklist based on theories of human consciousness, Science, News, 2023, 381(6660).

- Butlin, P. et al. Consciousness in Artificial Intelligence: Insights from the Science of Consciousness, arXiv, 2308.08708 [cs.AI], 2023.

- Naddaf, M. Europe spent €600 million to recreate the human brain in a computer. How did it go? The Human Brain Project wraps up in September after a decade. Nature examines its achievements and its troubled past, Nature News, 2023, 620: 718-720.

- Shannon, C.E. & Weaver, W. The Mathematical Theory of Communication, University of Illinois Press, 1949, 1–117.

- Brillouin, L. Science and Information Theory; Academic Press: Cambridge, MA, USA, 1956.

- Egginton, W. The Rigor of Angels: Borges, Heisenberg, Kant, and the Ultimate Nature of Reality, Pantheon Books, 2023.

- Sliwa, J. Toward collective animal neuroscience, Science, 2021, 374(6566): 397–398, doi:10.1126/science.abm3060.

- Hartley, R.V.L. Transmission of Information, Bell System Technical Journal, 1928, 7(3): 535–563.

- Ash, R.B. Information Theory, Dover, 1965.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory, 2nd ed., Wiley, 2006.

- Moskowitz, I.S.; Miller, A.R. Simple Timing Channels, Proc. 1994 IEEE Computer Society Symposium on Research in Security and Privacy, 1994.

- Verdú, S. On Channel Capacity per Unit Cost, IEEE Transactions on Information Theory, 1990, 36(5): 1019–1030.

- Moskowitz, I.S.; Miller, A.R. The Channel Capacity of a Certain Noisy Timing Channel, IEEE Transactions on Information Theory, 1992, 38(4): 1339–1344.

- Silverman, R.A. On Binary Channels and Their Cascades, IRE Transactions on Information Theory, December 1955, 1(3): 19–27.

- Martin, K.; Moskowitz, I.S. Noisy Timing Channels with Binary Inputs and Outputs, in Information Hiding, Camenisch, J. et al. (Editors), LNCS 4437, Springer, 2006, 124–144.

- Majani, E.E. A Model for the Study of Very Noisy Channels and Applications, PhD dissertation, California Institute of Technology, 1988.

- Golomb, S.W. The Limiting Behavior of the Z-Channel, IEEE Transactions on Information Theory, 1980, 26(3): 372.

- Physics, Encyclopedia Britannica, www.britannica.com/science/physics-science, 2023.

- Einstein, A. Albert Einstein on Space-Time, Encyclopedia Britannica, 13th Ed., Albert-Einstein-on-Space-Time-1987141, 1926.

- Frank, A.; Gleiser, M. The Story of Our Universe May Be Starting to Unravel, New York Times, https://www.nytimes.com/2023/09/02/opinion/cosmology-crisis-webb-telescope.html, 2023.

- Rickless, S. Plato’s Parmenides, The Stanford Encyclopedia of Philosophy, E.N. Zalta, editor, https://plato.stanford.edu/archives/spr2020/entries/plato-parmenides, 2020.

- Mann, R.P. Collective decision making by rational individuals, PNAS, 2018, 115(44): E10387–E10396.

- Schölkopf, B. et al. Towards Causal Representation Learning, arXiv, 2102.11107, 2021.

- Sen, A. The Formulation of Rational Choice, The American Economic Review, 1994, 84(2): 385–390.

- Lucas, R. Monetary Neutrality, Nobel Prize Lecture, 1995.

- Simon, H.A. Bounded rationality and organizational learning, Technical Report AIP 107, CMU, Pittsburgh, PA, 1989.

- Lawless, W.F.; Sofge, D.A.; Lofaro, D.; Mittu, R. Editorial: Interdisciplinary Approaches to the Structure and Performance of Interdependent Autonomous Human Machine Teams and Systems, Frontiers in Physics, Frontiers eBook, retrieved 3/1/2023 from https://www.frontiersin.org/articles/10.3389/fphy.2023.1150796/full, 2023.

- U.S. Supreme Court. California v. Green, 399 U.S. 149, No. 387, argued April 20, 1970, decided June 23, 1970.

- Ginsburg, R.B. American Electric Power Co. et al. v. Connecticut et al., 10-174, http://www.supremecourt.gov/opinions/10pdf/10-174.pdf, 2011.

- Nosek, B. Estimating the reproducibility of psychological science, Science, 2015, 349(6251): 943, www.science.org/doi/10.1126/science.aac4716.

- Nash, J.F. Equilibrium points in n-person games, PNAS, 1950, 36(1), 48-49, doi.org/10.1073.

- Chomsky, N.; Roberts, I.; Watumull J. The False Promise of ChatGPT, New York Times, retrieved 3/8/2023 from https://www.nytimes.com/2023/03/08/opinion/noam-chomsky-chatgpt-ai.html, 2023.

- Drucker, P.F. What we can learn from Japanese Management, Harvard Business Review.

- Akiyoshi, M.; Whitton, J.; Charnley-Parry, I.; Lawless, W.F. Effective Decision Rules for Systems of Public Engagement in Radioactive Waste Disposal: Evidence from the United States, the United Kingdom, and Japan, in Systems Engineering and Artificial Intelligence, Lawless, W.F.; Mittu, R.; Sofge, D.A.; Shortell, T.; McDermott, T.A. (Editors), Springer, Chapter 24: 509–533, 2021.

- Bradbury, J.A.; Branch, K.M.; Malone, E.L. An evaluation of DOE-EM Public Participation Programs, PNNL-14200, Richland, WA, Pacific Northwest National Laboratory, 2003.

- Lawless, W.F.; Bergman, M.; Feltovich, N. Consensus-seeking versus truth-seeking, ASCE Practice Periodical of Hazardous, Toxic, and Radioactive Waste Management, 2005, 9(1), 59-70.

- Mui, C. How Kodak Failed, Forbes, retrieved 9/11/2023, 2012/01/18/how-kodak-failed, 2012.

- Barabba, V. The decision loom: A design for interactive decision-making in Organizations, Triarchy Press Ltd, 2011.

- Hudson, A. The Rise & Fall of Kodak. A Brief History of The Eastman Kodak Company, 1880 to 2012, Photo Secrets, https://www.photosecrets.com/the-rise-and-fall-of-kodak, 2012.

- Endsley, M. Human-AI Teaming: State of the Art and Research Needs; National Research Council, National Academies Press: Washington, DC, USA, 2021.

- Wong, M.L. et al. On the roles of function and selection in evolving systems, PNAS, 2023, 120(43), e2310223120.

- Wang, Y. China’s Social Media Interference Shows Urgent Need for Rules, The Canberra Times, https://www.hrw.org/news/2023/08/14/chinas-social-media-interference-shows-urgent-need-rules, retrieved on 9/9/2023, 2023, 8/14.

- Zumbrun, J. Should the U.S. worry that China is closing in on its lead in research and development? Amid a productivity slump, the IMF sees benefits from Chinese and South Korean innovation, Wall Street Journal, https://blogs.wsj.com/economics/2018/04/10/should-the-us-worry-about-china-rd/, 2018.

- Taplin, N. Can China’s red capital really innovate? U.S. technology theft from Britain helped kick-start the industrial revolution on American shores. Will China be able to replicate that success?, Wall Street Journal, retrieved May 14, 2018 from https://www.wsj.com/articles/can-chinas-red-capital-really-innovate-1526299173, 2018.

- Wickens, C.D. Engineering psychology and human performance (second edition), Merrill, 1992.

- Brown, B. Human Machine Teaming using Large Language Models, in Lawless, W.F.; Mittu, R.; Sofge, D.A.; Fouad, H. (Editors), forthcoming, Interdependent human-machine teams. The path to autonomy, Elsevier, Chapter 3, 2024.

- Cooke, N.; Hilton, M.E. Enhancing the Effectiveness of Team Science; National Research Council, National Academies Press: Washington, DC, USA, 2015.

- Berscheid, E.; Reis, H. Attraction and close relationships. In The Handbook of Social Psychology, 4th ed.; Lawrence Erlbaum: Mahwah, NJ, USA, 1998; Volume 1.

- Wang, B.H. Entanglement-Separability Boundary Within a Quantum State. arXiv, 2020, arXiv:2003.00607.

- Lewin, K. Field Theory in Social Science. Selected Theoretical Papers; Harper and Brothers: Manhattan, NY, USA, 1951.

- IT Information theory, cs.stanford.edu/people/eroberts/courses/soco/projects/1999-00/information-theory/noise, 2023.

- Wootters, W.; Zurek, W. The no-cloning theorem. Physics Today, 2009, 62: 76–77.

- Marshall, S.M.; Mathis, C.; Carrick, E.; Keenan, G.; Cooper, G.J.T.; Graham, H.; Craven, M.; Gromski, P.S.; Moore, D.G.; Walker, S.I.; et al. Identifying molecules as biosignatures with assembly theory and mass spectrometry. Nature Communications, 2021, 12, 3033.

- Bettex, D.A.; Prêtre, R.; Chassot, P. Is our heart a well-designed pump? The heart along animal evolution. Eur. Heart J., 2014, 35, 2322–2332.

- Von Neumann, J. Theory of Self-Reproducing Automata; University of Illinois Press, Champaign, IL, USA, 1966.

- Cummings, J. Team Science Successes and Challenges; NSF Workshop Fundamentals of Team Science and the Science of Team Science: Bethesda, MD, USA, 2015.

- Moskowitz, I.S. A Cost Metric for Team Efficiency. Front. Phys. 2022, 10, 861633.

| No. | Note |
|---|---|
| 1 | Oxford Dictionary of English, 2nd edition; see https://www.oed.com |
| 2 | Oxford Languages, https://languages.oup.com/google-dictionary-en/ |
| 3 | e.g., the Pew poll, “Deep Divisions in Americans’ Views of Nation’s Racial History–and How To Address It”; https://www.pewresearch.org/politics/2021/08/12/deep-divisions-in-americans-views-of-nations-racial-history-and-how-to-address-it/ |
| 4 | |
| 5 | NIH, 2021, Scientific Workforce Diversity Seminar Series Seminar Proceedings: “Is Implicit Bias Training Effective?”; see Proceedings: “Implicit Bias”; https://diversity.nih.gov/sites/coswd/files/images |
| 6 | Reference: |
| 7 | Frank practices astrophysics at the University of Rochester; Gleiser practices theoretical physics at Dartmouth. |
| 8 | The James Webb Space Telescope; see https://webb.nasa.gov |
| 9 | See the URL for the “Hanford Vit Plant,” accessed on 9/8/2023 |
| 10 | See the URL for “Transuranic Waste Retrieval and Certification at Hanford,” accessed on 9/8/2023 |
| 11 | See the URL for “Defense Waste Processing Facility Reaches 25 Years of Successful Operations at SRS,” 2021, accessed on 9/8/2023 |
| 12 | Coles, I.; Simmons, A.M. (2023, 6/9), “Ukraine Probes Russian Defenses for Weak Points After Kicking Off Counteroffensive,” Wall Street Journal, https://www.wsj.com/articles/ukraine-probes-russian-defenses-for-weak-points-after-kicking-off-counteroffensive-26296960 |
| 13 | Britannica, The Editors of Encyclopaedia. “Aldrich Ames”. Encyclopedia Britannica, 19 Oct. 2023, https://www.britannica.com/biography/Aldrich-Ames |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).