1. Introduction

Processing end-of-life (EoL) lithium-ion batteries (LiBs) from electric vehicles (EVs) has become a pressing issue because of the enormous number of EVs that are expected to be retired [1]. Due to the nature of waste electrical and electronic equipment, landfilling LiBs is not an option, as it would cause severe environmental pollution from toxic and corrosive electrolytes and metals [2]. Stockpiling EoL LiBs has also been reported to cause fire-related hazards [3]. On the other hand, if LiBs are properly processed, not only can these downsides be avoided, but critical materials that contain elements such as lithium, cobalt, nickel, and magnesium can be recycled, or even the majority of the intellectual and processing value added during the manufacturing of LiBs can be recovered [4].

The typical EoL processing strategies of EV-LiBs can be broadly categorised into recycling, repurposing, and remanufacturing. Recent studies suggest that the status-quo methods of recycling EoL EV-LiBs, such as pyrometallurgical methods, hydrometallurgical methods, and shredding, arguably produce more greenhouse gas emissions than the production of a new battery. Mechanical dismantling of EV-LiBs has been proposed as probably the most promising direction for sustainable recycling of EoL EV-LiBs under the current circumstances [5]. Thompsons et al. compared the present recycling methods with disassembly and concluded that disassembly could lead to cost savings of up to 80% [6].

Hierarchical repurposing of EoL EV-LiBs is a more eco-friendly and straightforward solution [7]. However, repurposing retired EV-LiBs also requires a disassembly process down to the module or cell level for inspection and replacement. Regarding the remanufacturing of EoL EV-LiBs, Kampker et al. examined 196 cylindrical battery cells from used LiBs and found that 89% of the cells functioned normally, providing strong evidence that remanufacturing EoL EV-LiBs is feasible if the LiBs are correctly disassembled [4].

Thus, regardless of the EoL processing strategy, more efficient and reliable disassembly of EoL EV-LiBs is crucial. Nevertheless, the mechanical disassembly of EV-LiBs currently relies heavily on manual operations [5]. Compared with assembly automation, a fully autonomous disassembly system is challenging to develop because of the uncertain shapes, sizes, and conditions of EoL products [8]. However, with recent developments in many related fields, such as robotics, sensors, and informatics, employing robots to achieve autonomous disassembly of EoL EV-LiBs is a promising development direction [9].

Robotic disassembly as a field of study can be broadly categorised into disassembly planning research [10,11], fundamental disassembly operation studies [12,13,14], and disassembly system development [15,16,17]. Specifically, for EV-LiB disassembly, planning problems, such as disassembly sequence planning and task allocation, have been studied by many researchers [18,19,20,21]. Hellmuth et al. [22] proposed a scoring system to assess the automation potential of each operation in EV-LiB disassembly. Furthermore, conceptual robotic disassembly system designs have been proposed [23,24]. However, none of the work mentioned above has been validated in real robotic disassembly systems. Some researchers have proposed or even demonstrated robotic disassembly cells for EV-LiBs with human-robot collaboration (HRC) [25,26,27]. However, having humans and robots work closely together in a cell does not protect humans from the risks of short circuits, electric shock, and electrolyte leakage [3].

This paper discusses using robots to automate the pack-to-module disassembly process of a plug-in hybrid-electric-vehicle (PHEV) LiB with prismatic cells and presents a disassembly platform that focuses on non-destructive operations [28]. Although a PHEV-LiB is smaller than an EV-LiB, it includes very similar components and connections. Thus, the findings obtained from this paper also apply to the disassembly of full-size EV-LiBs with similar architectures.

This paper highlights fundamental operations, such as unscrewing and visual localisation, in robotic EV battery (EVB) disassembly systems. Previous implementations have often been incompatible with the proposed system. For example, Chen et al. [29] developed a mechanism to change the nutrunner adapters. However, an extension bar is often needed to solve accessibility issues, and the mechanism cannot change adapters when the extension bar is fitted. Additionally, a complex holder needs to be made for each adapter. Other researchers implemented computer vision systems [30] for angle alignment and localisation for EVBs. However, these methods usually require training on large datasets [25,31,32]. In contrast, this paper presents a more straightforward and efficient way to localise parts based on edge detection.

The main achievements of this study are: (1) building a robotic platform that aims to fully automate the non-destructive pack-to-module disassembly of a PHEV-LiB, (2) investigating the critical operations performed in the disassembly platform, in particular, the vision-based unfastening process, and (3) identifying critical challenges for the development of fully autonomous EV-LiB disassembly.

This paper is structured as follows:

Section 2 introduces the process of disassembling the LiB pack;

Section 3 details the robotic disassembly platform;

Section 4 shows the experimental results, process demonstration and discussions about the limitations of the platform; and

Section 5 concludes the paper.

4. Results and Discussions

4.1. Validation of the unscrewing system

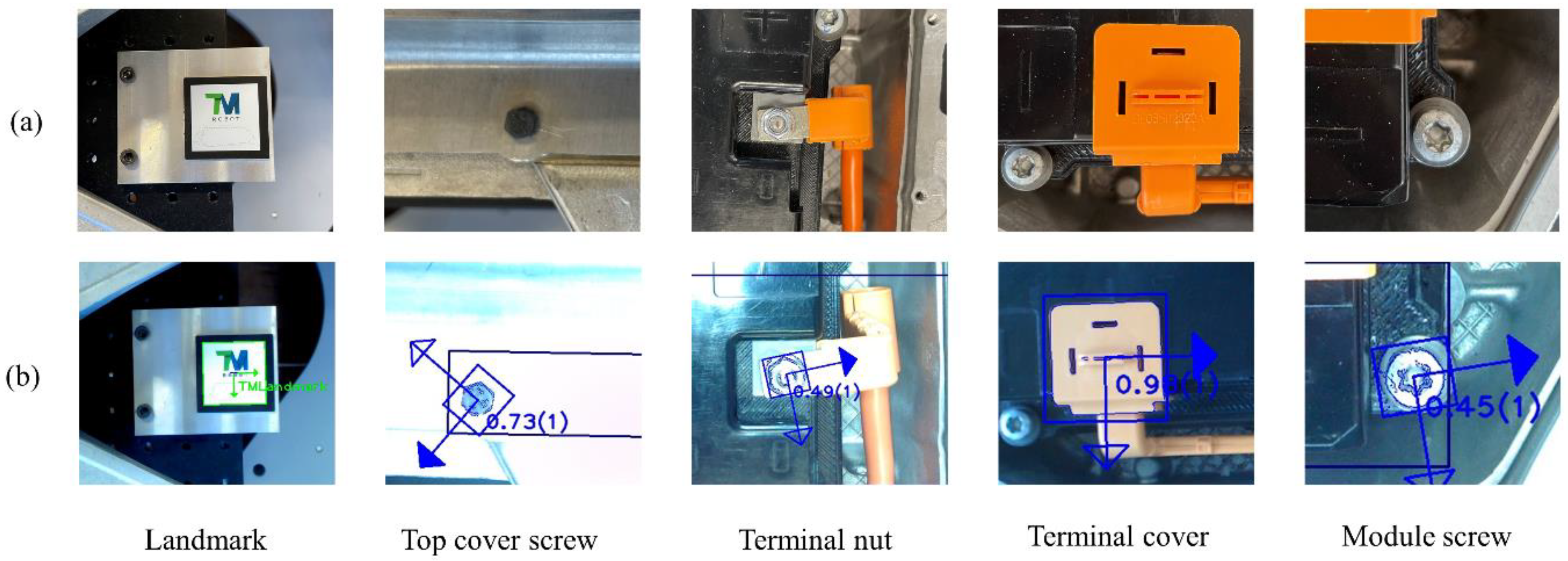

Figure 9 shows some of the parts being localised in this case study, including the landmark, 35 M5x10mm screws connecting the top cover and the lower tray (Symbol A in Table 1), 14 nuts fixing the busbars/cables to the battery module terminals (Symbol I in Table 1), 14 terminal covers (Symbol H in Table 1), and 28 M6x95mm bolts (Symbol L in Table 1). The parts can be successfully recognised with the matching scores, orientations, and numbers of found parts provided by TM14's operating system.

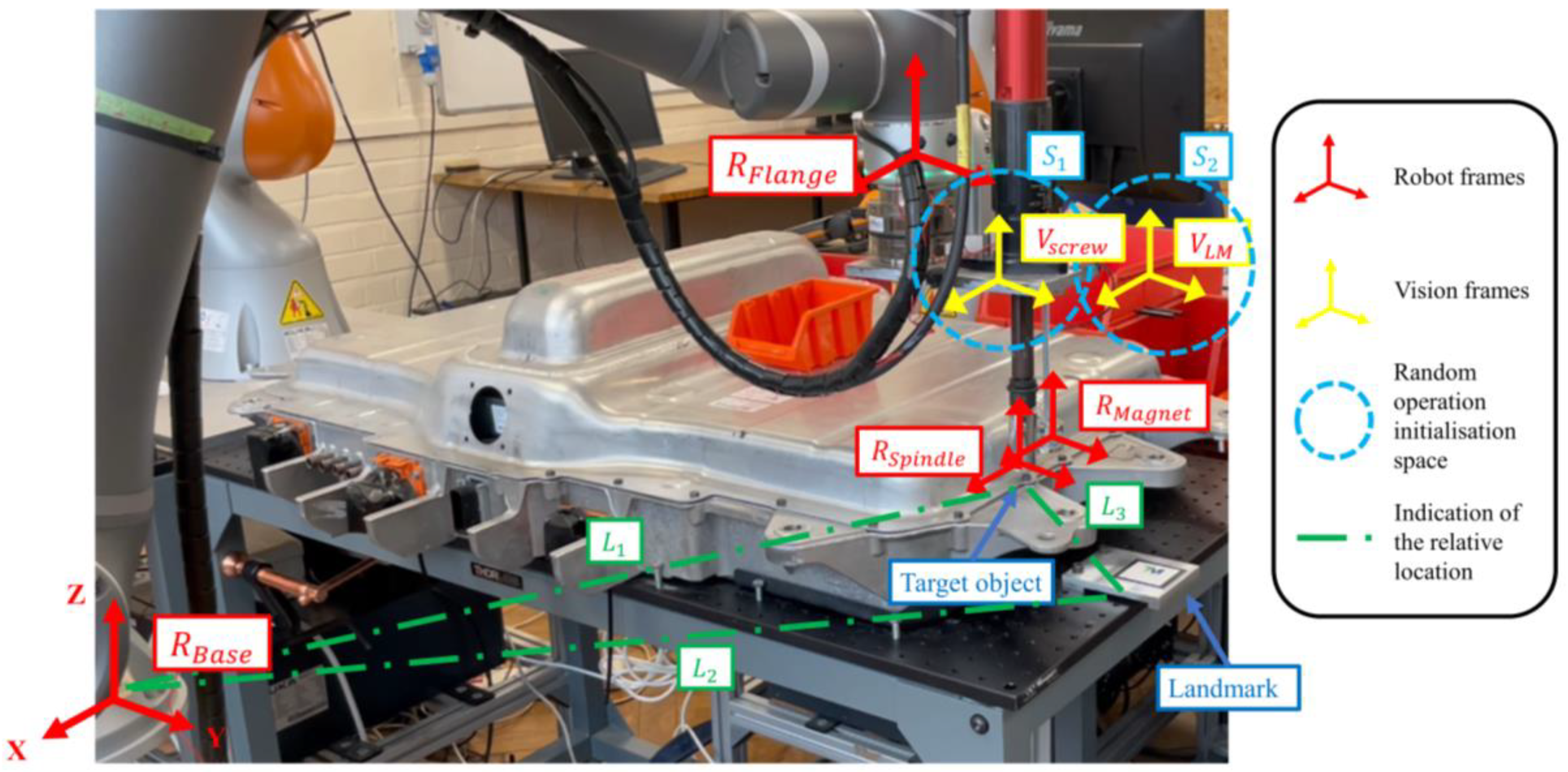

A set of experiments is designed and performed to evaluate the robustness of the proposed localisation method against positional errors. As discussed in Section 3.2.1, the main purpose of using a vision system is to maintain a high success rate even when positional uncertainties are present in the disassembly processes. Regardless of their source, the errors all change the robot's starting location relative to the target object. Therefore, to mimic the relative positional errors between the robot and the objects, the robot is set to initialise the unscrewing operations from random starting locations, as indicated by the circles in Figure 6. Note that initialising the operation in an arbitrary space is equivalent to placing the target object randomly in a space of the same size.

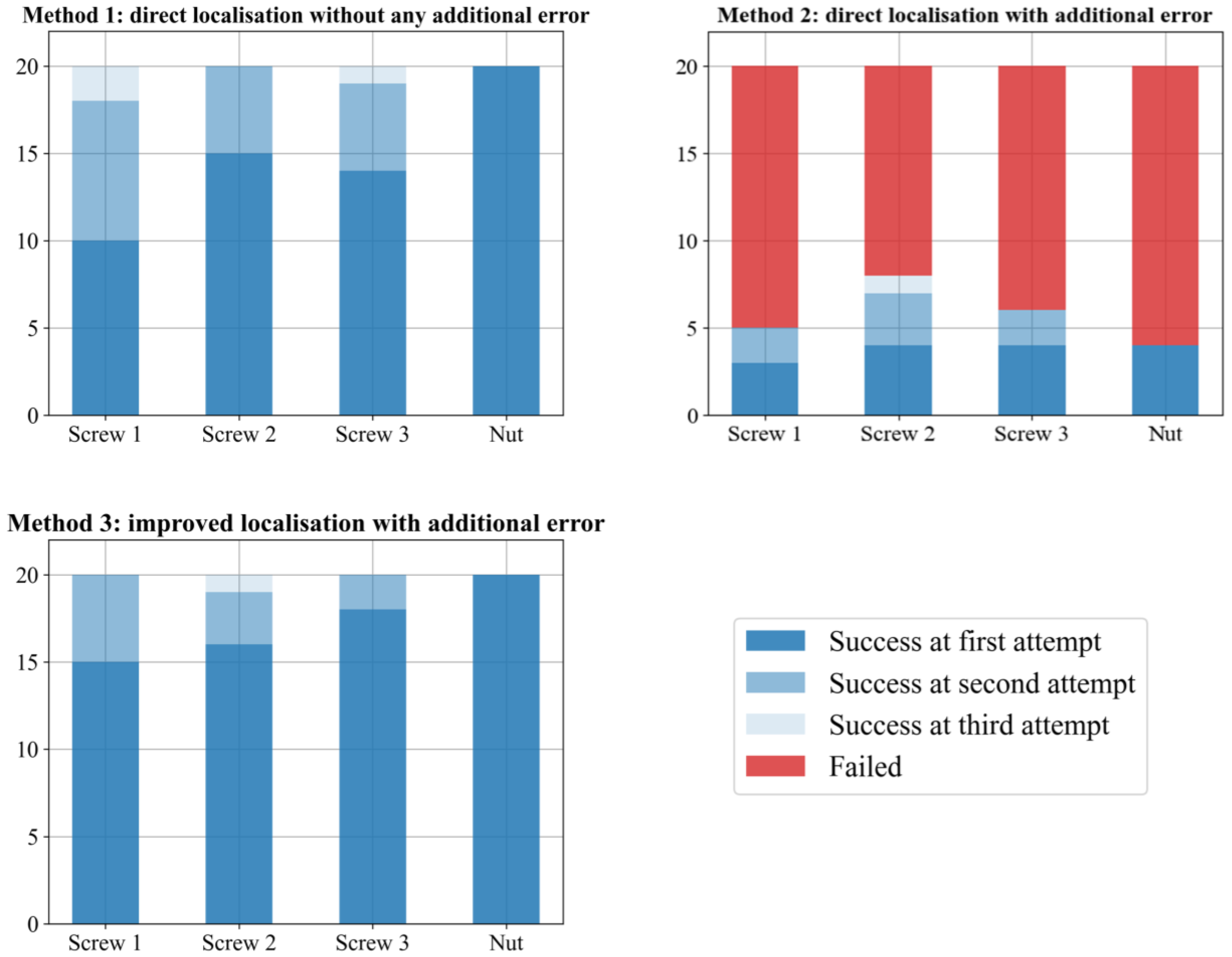

Three methods to initialise the unscrewing operation are compared in this experiment. In Method 1, the robot initialises the unscrewing process from a fixed, recorded location and searches for the fasteners directly, without any additional positional error, to mimic an ideal situation. In Method 2, the robot initialises the operation from a random frame in a space that represents a relative positional error (as marked by a dashed circle in Figure 6), and it searches for the parts directly instead of first calibrating with the landmark as in the proposed method described in Section 3.2.1. In Method 3, the robot starts the operation from a space of the same size as in Method 2 (as marked by a dashed circle in Figure 6) and uses the proposed two-step localisation method to find the parts.
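The idea behind the two-step localisation in Method 3 can be illustrated with a minimal planar sketch. It assumes that the landmark calibration yields the landmark pose relative to the camera and that the pose of each part relative to the landmark is fixed by the pack geometry. The `Pose2D` class, the function name, and the restriction to 2D are illustrative assumptions, not the platform's actual implementation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: translation (x, y) and rotation theta in radians."""
    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Express `other`, given in this pose's frame, in this pose's parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(
            self.x + c * other.x - s * other.y,
            self.y + s * other.x + c * other.y,
            self.theta + other.theta,
        )

    def inverse(self) -> "Pose2D":
        """Return the pose that undoes this one."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-(c * self.x + s * self.y), s * self.x - c * self.y, -self.theta)


def part_vision_frame(camera_pose: Pose2D, landmark_seen: Pose2D,
                      part_in_landmark: Pose2D) -> Pose2D:
    """Step 1: locate the landmark in the robot base frame from the camera reading.
    Step 2: derive where to search for the part from its known offset to the landmark."""
    landmark_in_base = camera_pose.compose(landmark_seen)
    return landmark_in_base.compose(part_in_landmark)
```

Because the part frame is derived from the measured landmark pose rather than from a recorded absolute position, an unknown displacement of the pack cancels out as long as the landmark remains in the camera's field of view.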

If the target object is found by any method, the robot starts the unfastening and picking process. The operation fails if the object is not found or picked up within three attempts. Each method is repeated 20 times on each of three different screws on the top cover (step A in Table 1) and one nut (step I in Table 1) on the battery module terminals. For comparison, the search range, patterns, and criteria are kept the same for all methods.
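The three-attempt rule and the tallying of outcomes can be sketched as follows. The function names and the `attempt_fn` callback are hypothetical stand-ins for the robot's localise, unfasten, and pick routine, which is not reproduced here.

```python
from collections import Counter

MAX_ATTEMPTS = 3  # the operation is labelled a failure after three attempts


def run_trial(attempt_fn, max_attempts=MAX_ATTEMPTS):
    """Return the 1-based attempt number that succeeded, or None on failure.

    `attempt_fn(attempt)` is a hypothetical callback returning True when the
    part was both found and picked up on that attempt.
    """
    for attempt in range(1, max_attempts + 1):
        if attempt_fn(attempt):
            return attempt
    return None


def summarise(outcomes):
    """Count outcomes per attempt number and compute the overall success rate."""
    counts = Counter("failure" if o is None else f"attempt_{o}" for o in outcomes)
    successes = sum(1 for o in outcomes if o is not None)
    rate = successes / len(outcomes) if outcomes else 0.0
    return counts, rate
```

With 20 repetitions on each of four fasteners, `summarise` would receive 80 outcomes per method.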

Initialising the operations from a random position is achieved by adding a uniformly generated noise to the initialisation frames of the operations. In other words, noise is added to for Method 2 and for Method 3. The range of the noise, which regulates the size of the random starting space, is set to be from to for all translational axes and from to for all rotational axes. In comparison,

As shown in Figure 12, the unscrewing process starting with Method 3 achieved the same success rate as the one without any additional positional errors over 80 repetitions, demonstrating the effectiveness of the proposed method.
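The random initialisation used for Methods 2 and 3 can be sketched as adding uniformly distributed noise to a six-dimensional frame. The numeric ranges below are placeholders chosen only for illustration, since the values used in the experiment are not reproduced here, and the frame layout `(x, y, z, rx, ry, rz)` is likewise an assumption.

```python
import random

# Placeholder ranges (assumptions for illustration only).
TRANSLATION_RANGE = (-5.0, 5.0)  # applied to x, y, z
ROTATION_RANGE = (-3.0, 3.0)     # applied to rx, ry, rz


def perturb_frame(frame, rng, trans_range=TRANSLATION_RANGE, rot_range=ROTATION_RANGE):
    """Add independent uniform noise to each axis of a (x, y, z, rx, ry, rz) frame."""
    translation = [v + rng.uniform(*trans_range) for v in frame[:3]]
    rotation = [v + rng.uniform(*rot_range) for v in frame[3:]]
    return tuple(translation + rotation)


# Usage: a seeded generator makes a batch of random starting frames repeatable.
rng = random.Random(0)
start_frames = [perturb_frame((400.0, 0.0, 300.0, 180.0, 0.0, 90.0), rng) for _ in range(20)]
```

Using the same ranges for both methods keeps the two random starting spaces the same size, which is what makes the comparison between Methods 2 and 3 fair.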

Figure 12.

Comparison of the unscrewing success rates when initialising with three localisation methods. Blue blocks indicate successful unscrewing operations with different numbers of attempts. If the localisation process does not find the part or the robot fails to pick up the component within three attempts, it is labelled a failure.

4.2. Comparison between robotic and human disassembly

The whole disassembly process is demonstrated in Figure 13.

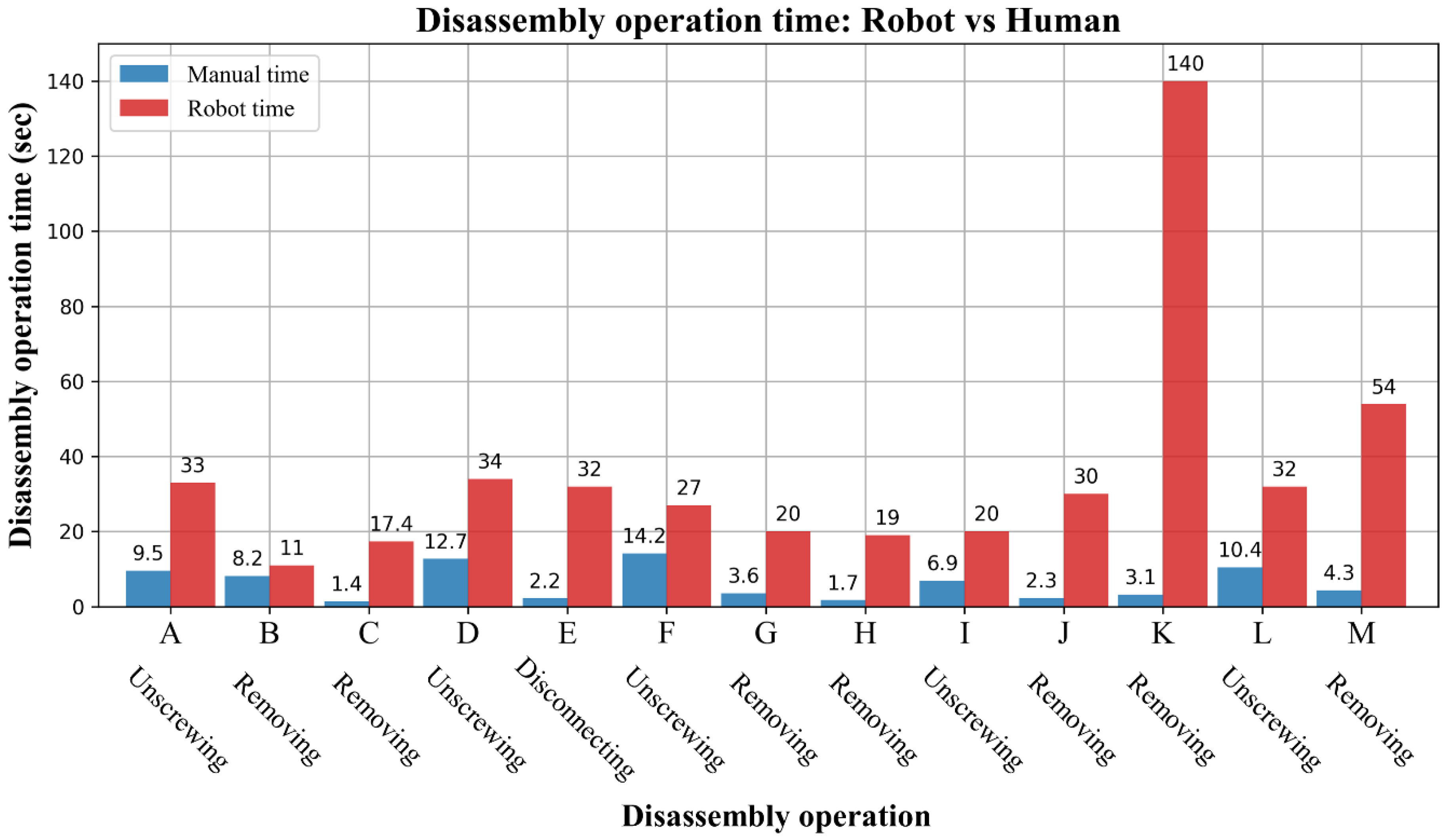

The operation times of the human operators and the robot platform are compared in Figure 14. The manual disassembly time is obtained by averaging over three operators who repeated the task at least six times. For robot operations in which the required time is not fixed, such as the unscrewing operation, where multiple attempts might occur, the operation time is the average running time over ten repetitions.
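The averaging described above can be sketched as follows. Pooling all repetitions from all operators before averaging is an assumption, as is every name in the block; no measured values are included.

```python
from statistics import mean


def manual_time(times_by_operator):
    """Average manual operation time, pooling every repetition from every operator."""
    pooled = [t for runs in times_by_operator.values() for t in runs]
    if not pooled:
        raise ValueError("no manual timings recorded")
    return mean(pooled)


def robot_time(run_times):
    """Average robot operation time over repeated runs (ten in this case study)."""
    if not run_times:
        raise ValueError("no robot timings recorded")
    return mean(run_times)


def slowdown(robot_seconds, manual_seconds):
    """Ratio of robot time to manual time; values above 1 mean the human is faster."""
    return robot_seconds / manual_seconds
```

If operators contribute different numbers of repetitions, pooling weights each repetition equally, whereas averaging the per-operator means would weight each operator equally; the two only coincide when the repetition counts match.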

For each of the operations, the human was faster than the robot. This is mainly because the platform developed at this stage only focuses on completing the operations instead of optimising them. Most of the operations were running at 20 to 30% of the maximum overall speed of the robots, which are and for TM14 and KUKA iiwa, respectively. The robotic operation time also depends on the speed of the nutrunner and the perception tasks, such as visually checking whether the fastener or the terminal cover has been removed. Thus, to increase the productivity of the platform, the limit of the operating speed needs to be tested, and the operations need to be optimised.

A remarkable speed difference can also be observed for Step K – removing the high-voltage cables from the battery modules. This is because the high-voltage cables are flexible components, and their terminals are connected to the battery modules by threaded cylindrical connectors that might cause the terminals to become stuck on the connectors. This process involves a complex rule-based decision flow based on visual, force, and positional feedback, so it takes a remarkably long time compared with human operators. The same integration of all three kinds of information can be performed easily by human operators, who can remove the cable with both hands. Therefore, it is suggested that the manipulation of flexible components should be further developed as a crucial step to increase the platform's efficiency.

4.3. Limitations of the platform

The scope of this case study is to investigate the non-destructive operations in EVB robotic disassembly. However, non-destructive operations alone cannot achieve fully autonomous pack-to-module disassembly. Thus, dismantling steps that are not easily achieved by non-destructive operations have been simplified in this case study and are identified as challenges to guide future research.

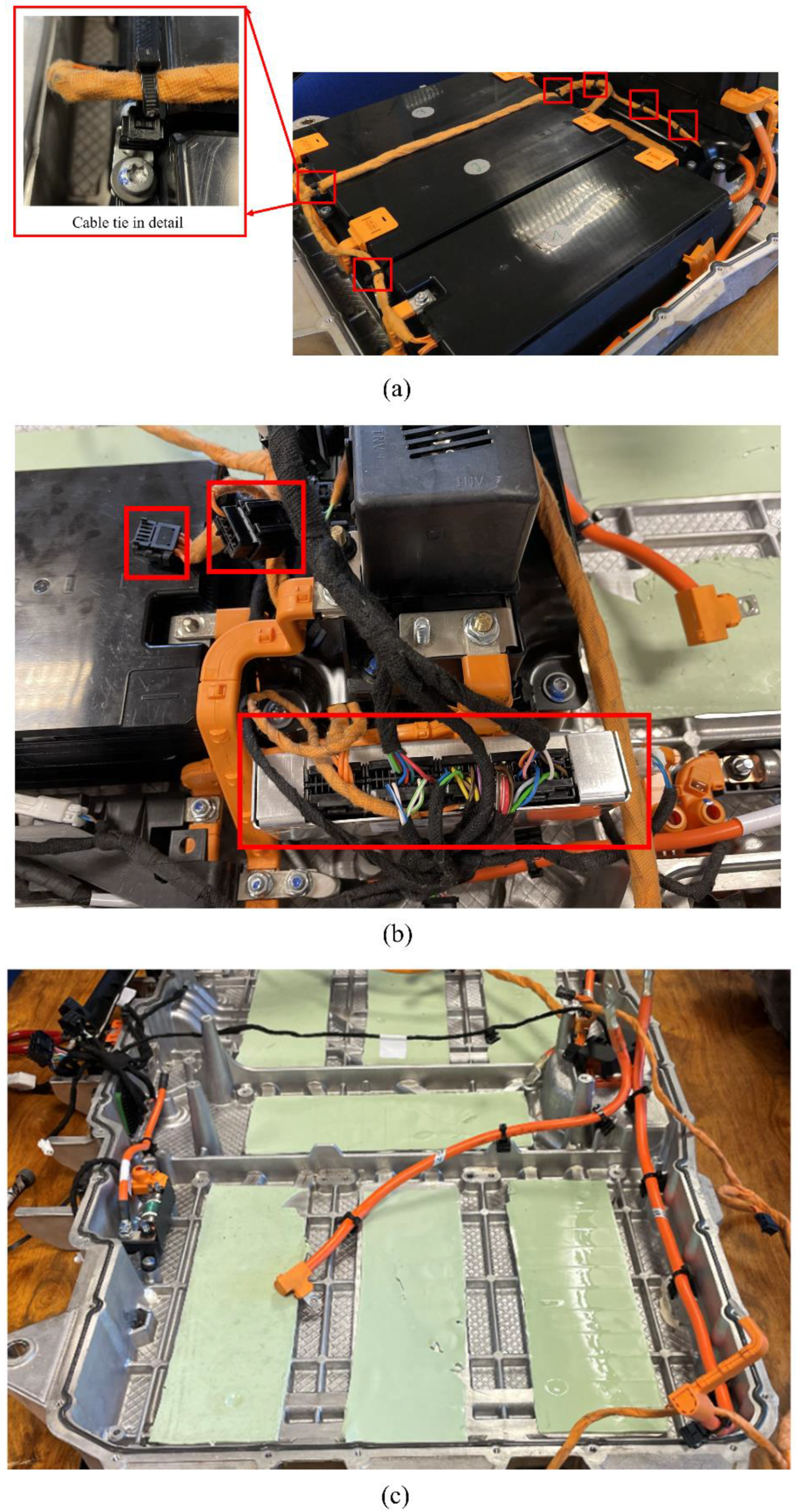

First, cable ties have been widely used in EVB packs, as shown in Figure 15(a). They are mainly used to secure the cables relative to the modules but are hard to remove non-destructively.

Second, as shown in Figure 15(b), cable harnesses are also widely used to connect the signalling cables. To remove the whole cable assembly, the robot needs to be able to disconnect snap-fitted harnesses, or the signalling cables need to be separated destructively from the harnesses by cutting.

Third, adhesive coolant pads placed between the modules and the lower tray of the battery pack, as shown in Figure 15(c), have increased the force required when removing the modules from the pack. Since the force required is generally higher than the payload of the lightweight robots, the coolant pads are not considered in this case study.

Thus, more robotic disassembly tools are still required to solve these challenges and achieve fully autonomous pack-to-module disassembly of an EVB pack. Tool changer systems, as shown in Figure 3, can be used to maintain platform expandability by including more tools for the robots. Also, as the disassembly sequence is regulated by the central PC while the operations are stored locally in the robots' controllers, the disassembly sequence of the platform can be reconfigured easily to maintain flexibility.
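The separation between a centrally held sequence and locally stored operations can be illustrated with a small sketch. The class, the operation names, and the assignment of steps to robots are hypothetical, and the stub operations simply return `True` in place of real robot programs.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Operation = Callable[[], bool]


@dataclass
class RobotController:
    """Stands in for a robot controller that stores its operations locally."""
    name: str
    operations: Dict[str, Operation] = field(default_factory=dict)

    def run(self, op_name: str) -> bool:
        return self.operations[op_name]()


def run_sequence(sequence: List[Tuple[str, str, str]],
                 robots: Dict[str, RobotController]) -> List[Tuple[str, str, bool]]:
    """Central dispatcher: trigger each (step, robot, operation) in order, stop on failure."""
    log = []
    for step, robot_name, op_name in sequence:
        ok = robots[robot_name].run(op_name)
        log.append((step, robot_name, ok))
        if not ok:
            break
    return log


# Hypothetical stubs for the first two steps of the sequence.
robots = {
    "C": RobotController("C", {"unscrew_top_cover": lambda: True}),
    "A": RobotController("A", {"remove_top_cover": lambda: True}),
}
sequence = [("A", "C", "unscrew_top_cover"), ("B", "A", "remove_top_cover")]
```

In this arrangement, reconfiguring the disassembly sequence only means editing the `sequence` list held by the dispatcher; the operations registered on each controller stay unchanged.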

5. Conclusions

This paper proposes a robotic disassembly platform for dismantling a PHEV battery pack with seven modules consisting of prismatic cells. The platform focuses on non-destructive operations to obtain all seven battery modules from the pack. The battery pack disassembly plan, the platform architecture, and the disassembly processes have been proposed and validated. Design principles, such as using tool changers, the nutrunner adapter changing method, and a central PC to regulate the disassembly sequence, are proposed to ensure the expandability and flexibility of the proposed system.

Experimental results show that a two-step object localisation method based on 2D camera images improves the success rate of operations under positional uncertainties. Furthermore, the localisation method is integrated into the proposed unscrewing system that can: 1) unscrew and handle threaded fasteners, 2) change the nutrunner adapters, 3) localise the fasteners under positional uncertainty, and 4) deal with the situations in which the unfastened screws are jammed in the holes.

The disassembly time was compared with that taken by human operators. Although humans are faster, the robots were only operating at 20 to 30% of the maximum overall speed. Finally, limitations preventing the proposed platform from achieving fully autonomous pack-to-module disassembly, such as removing cable ties and disconnecting cable harnesses, are identified as promising future research directions.

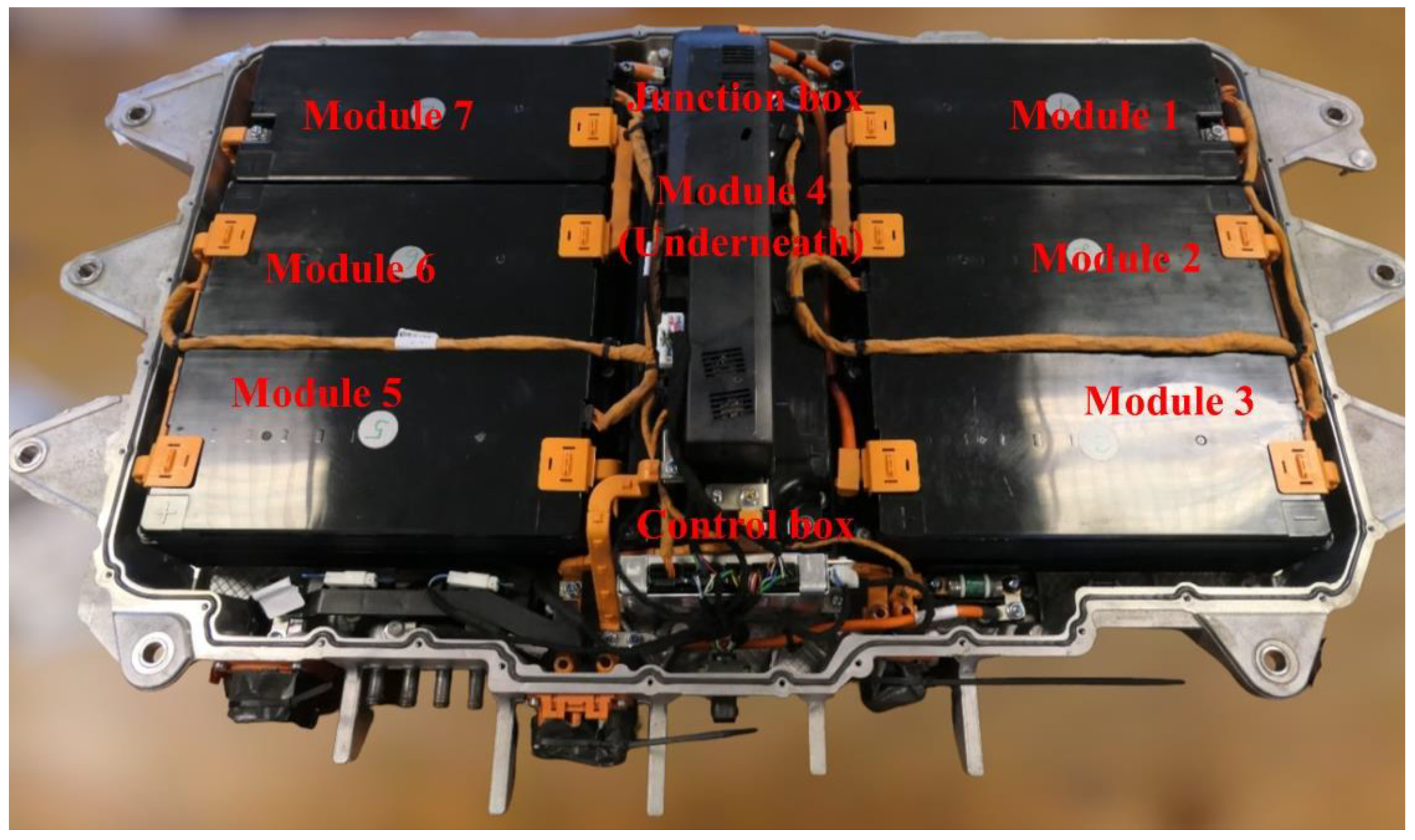

Figure 1.

Inner structure of the dummy PHEV-LiB pack. The fourth battery module is located underneath the junction box. All battery modules are replaced by dummies for safety reasons.

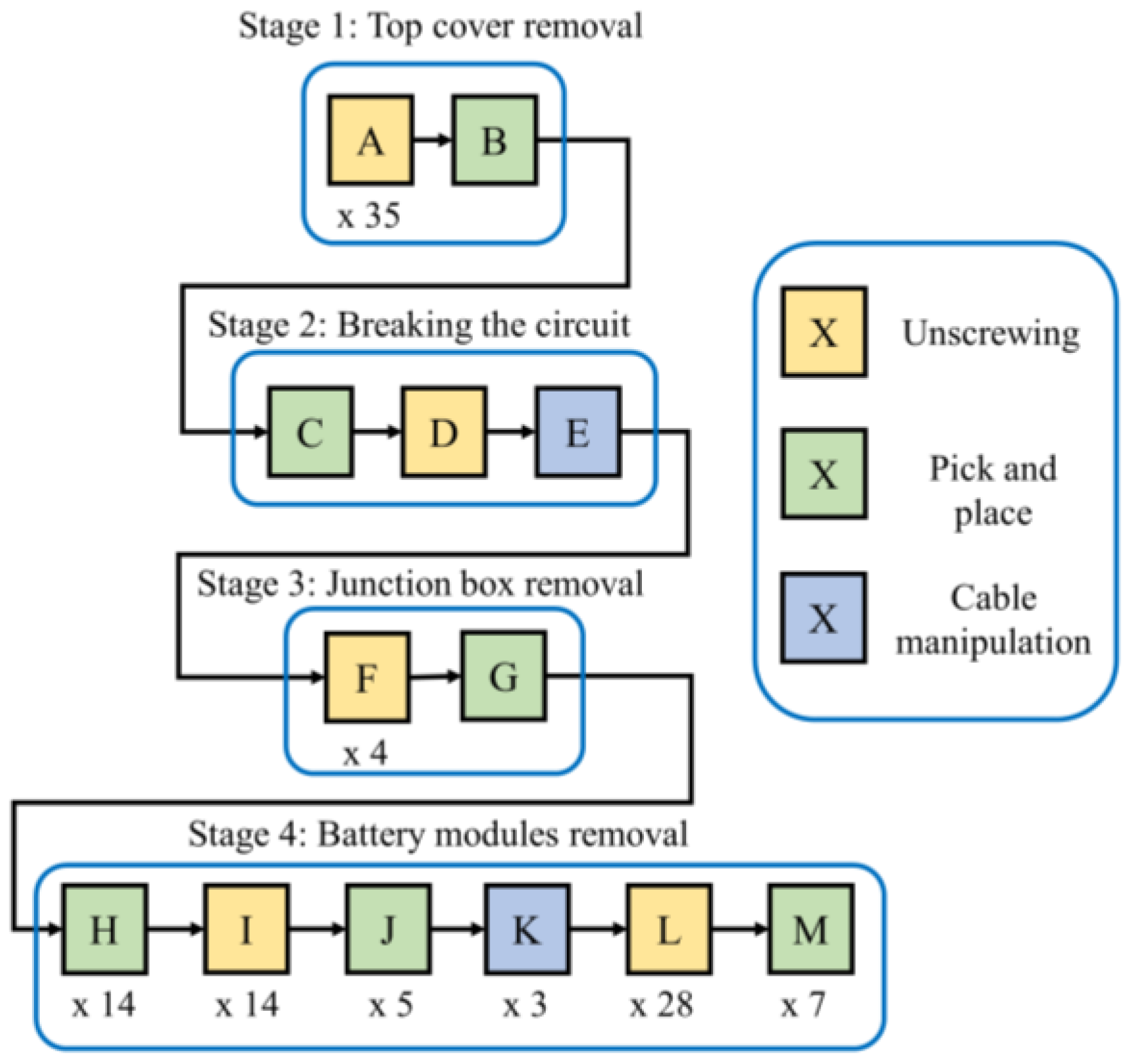

Figure 2.

The PHEV-LiB disassembly sequence developed for this case study. The sequence is generated based on the intuitive steps to disassemble the pack. It can also be observed that most of the repetitive operations are unscrewing.

Figure 3.

(a) Photo and (b) simulation model of the proposed four-robot disassembly platform in operation. The cell deploys four lightweight industrial robots, one on each side of the battery pack, to ensure accessibility. In this case study, two KUKA LBR iiwa 14 robots are used as Robots A and B, and two Techman TM14 robots are used as Robots C and D. The simulation model is built with the RoboDK software.

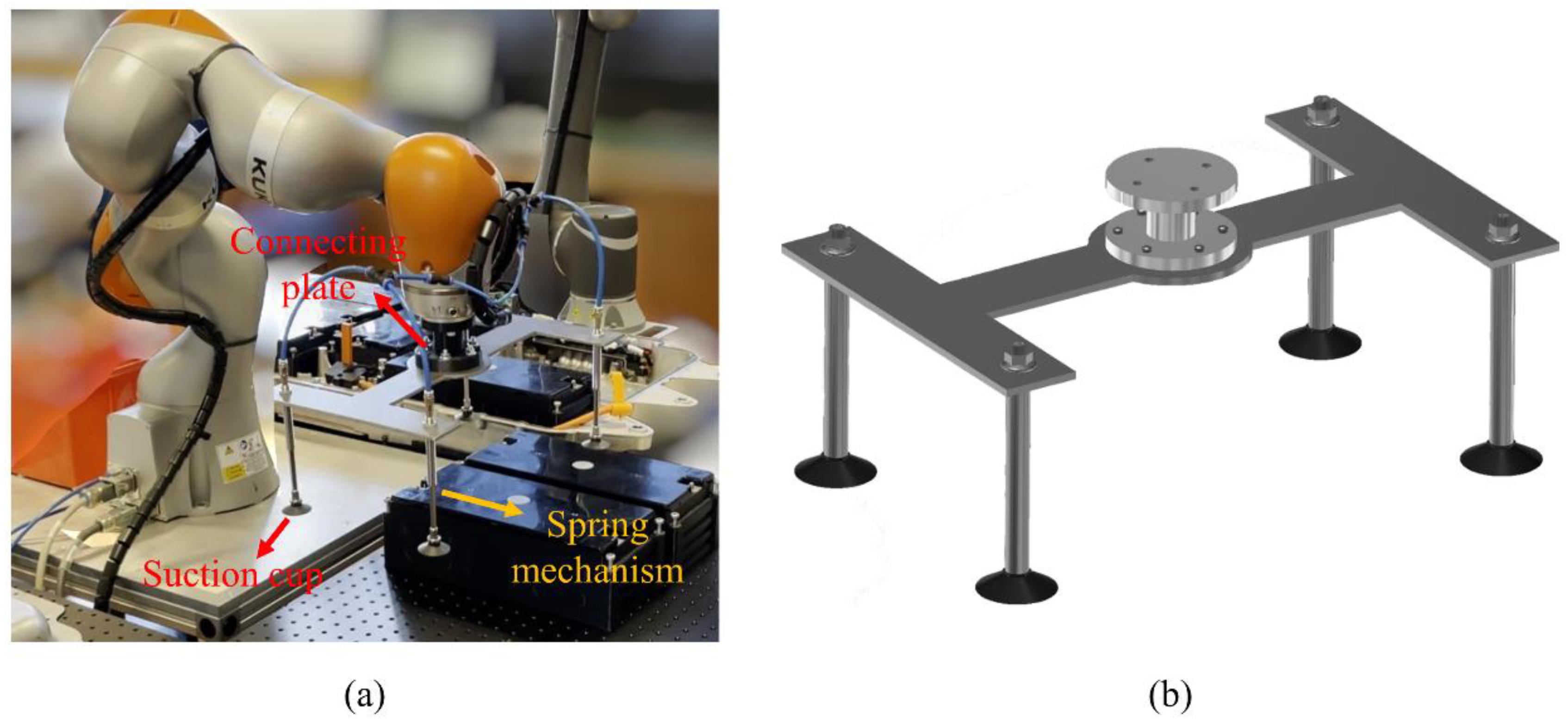

Figure 4.

An in-house built vacuum gripper attached to Robot A, shown in (a) photo, (b) CAD model. The vacuum gripper is used to handle bulky components, such as the battery modules, which cannot be handled by commonly-seen electrical grippers.

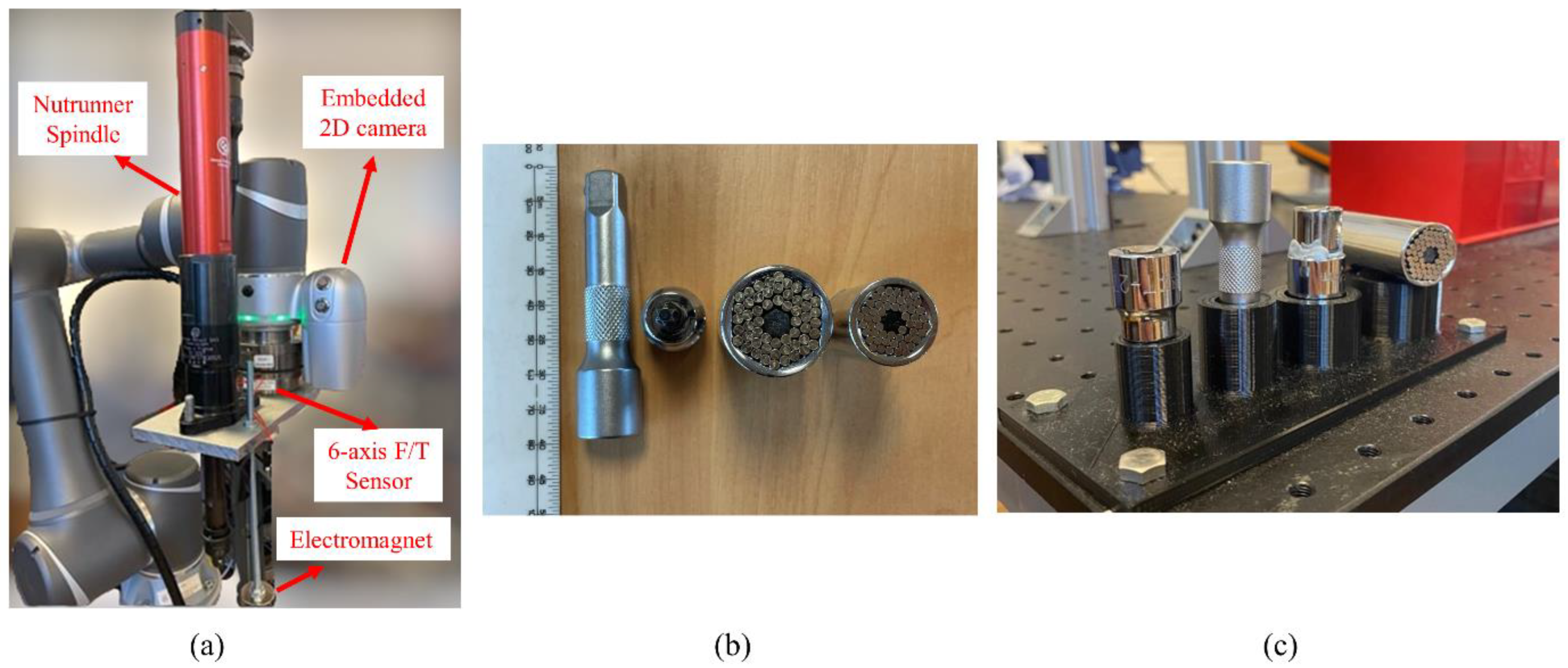

Figure 5.

The nutrunner system, which consists of a) the electric nutrunner equipped on Robot C, b) nutrunner adapters, from left to right: an extension bar, an M6 Torx socket, a 3/8 inch gripper socket, and a 1/4 inch gripper socket, and c) an adapter holder.

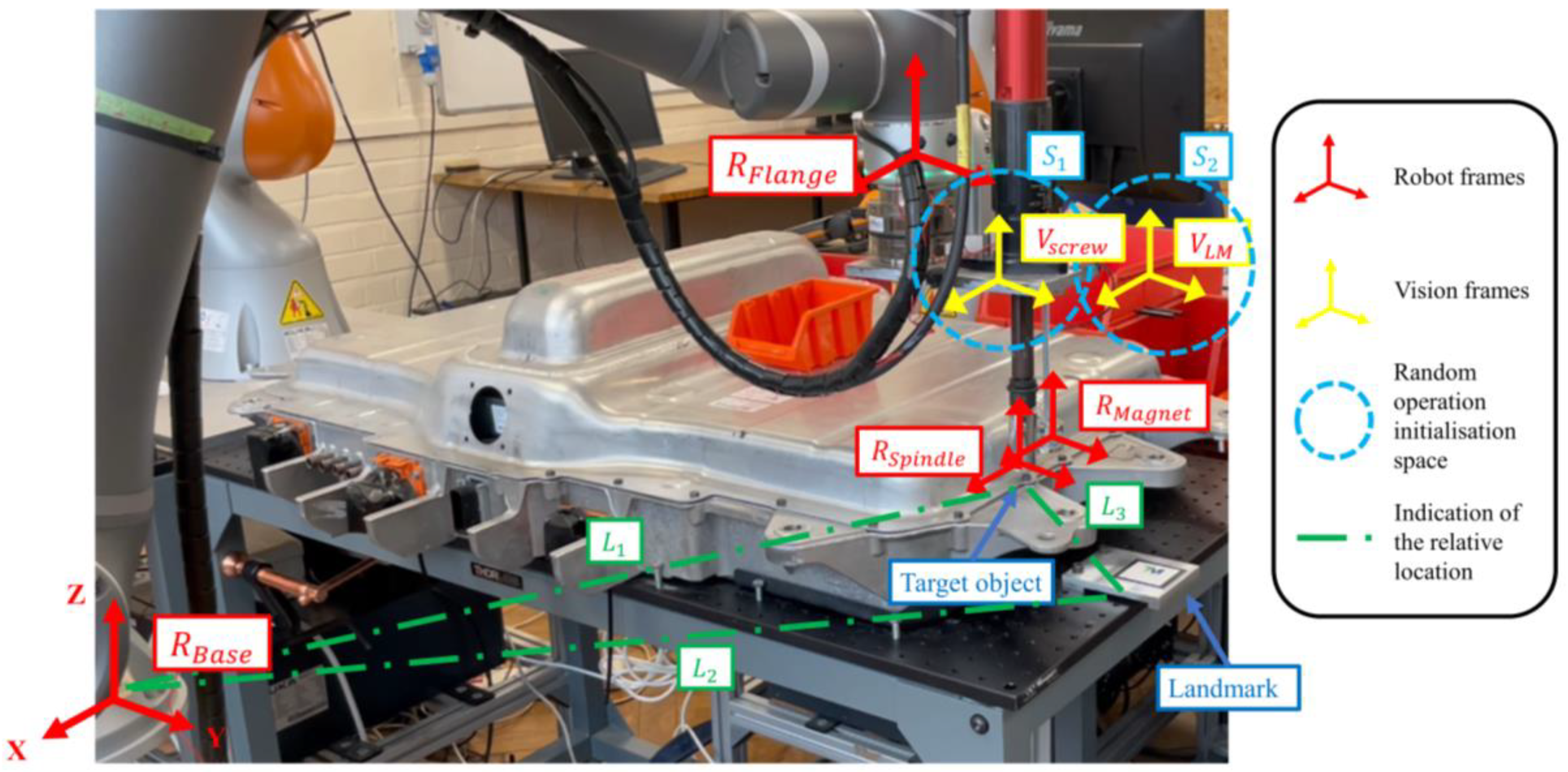

Figure 6.

Information about the robotic unscrewing operation. A frame describes the six-dimensional pose (translation and rotation) of any spatial point in a robotic system. In this system, the spindle and the electromagnet are fixed to the robot flange, and the offsets of their frames from the flange frame are used to compute the robot joint movements by inverse kinematics. Vision frames are locations at which the robot flange initialises the localisation processes. The dashed straight lines indicate the relative positions of different parts of the system. To assist in describing the experiment in Section 4.1, the dashed circles indicate spaces where the localisation jobs could initialise to mimic the positional errors. Note that initialising the operation in a random space is equivalent to placing the target object randomly in a space of the same size, since localisation aims to obtain a relative displacement between the vision frame and the target object. Additionally, note that the sizes of the circles do not reflect the actual sizes of the random spaces. They do, however, indicate that the two spaces are the same size.

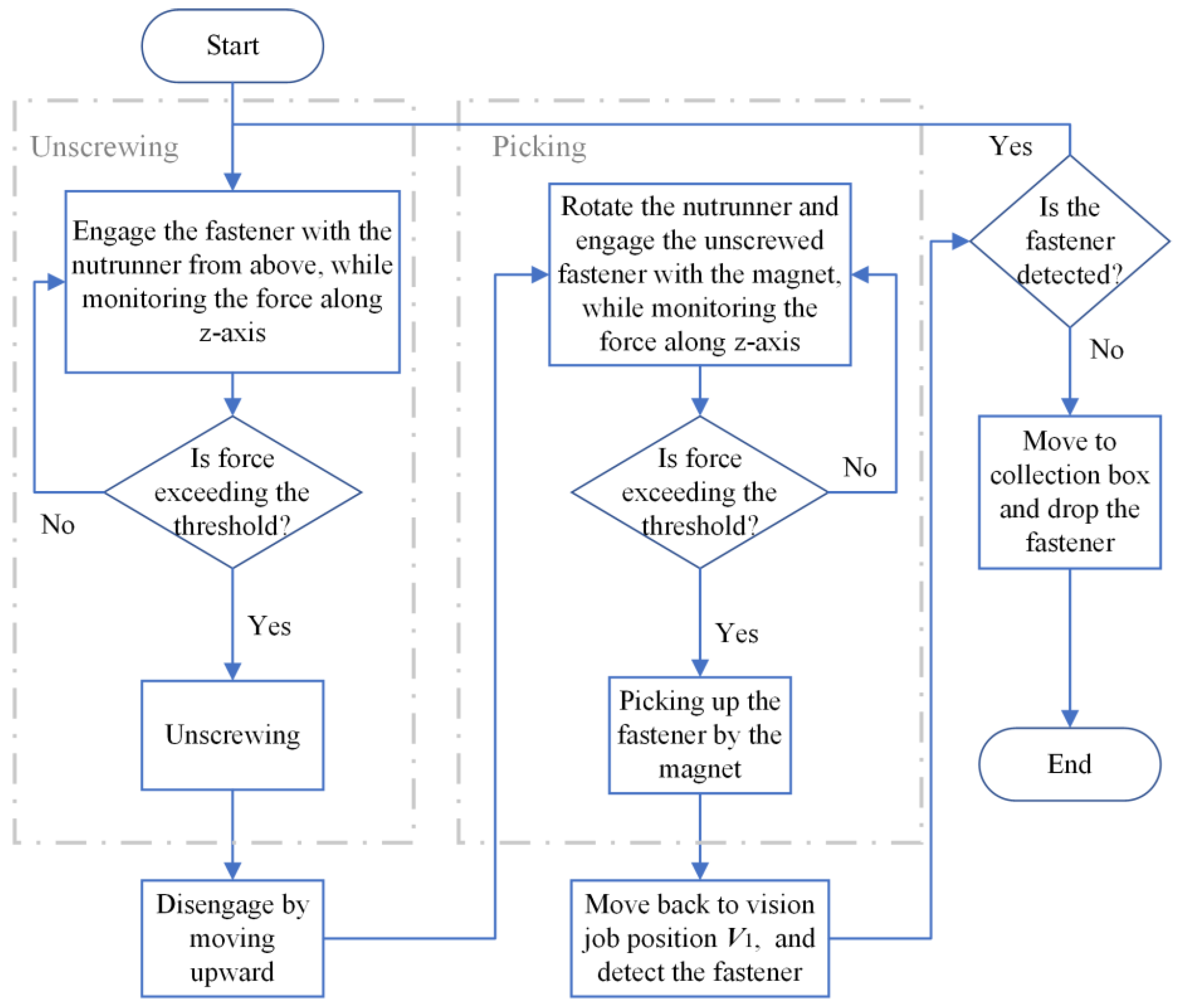

Figure 7.

Flow chart of the unscrewing and picking process.

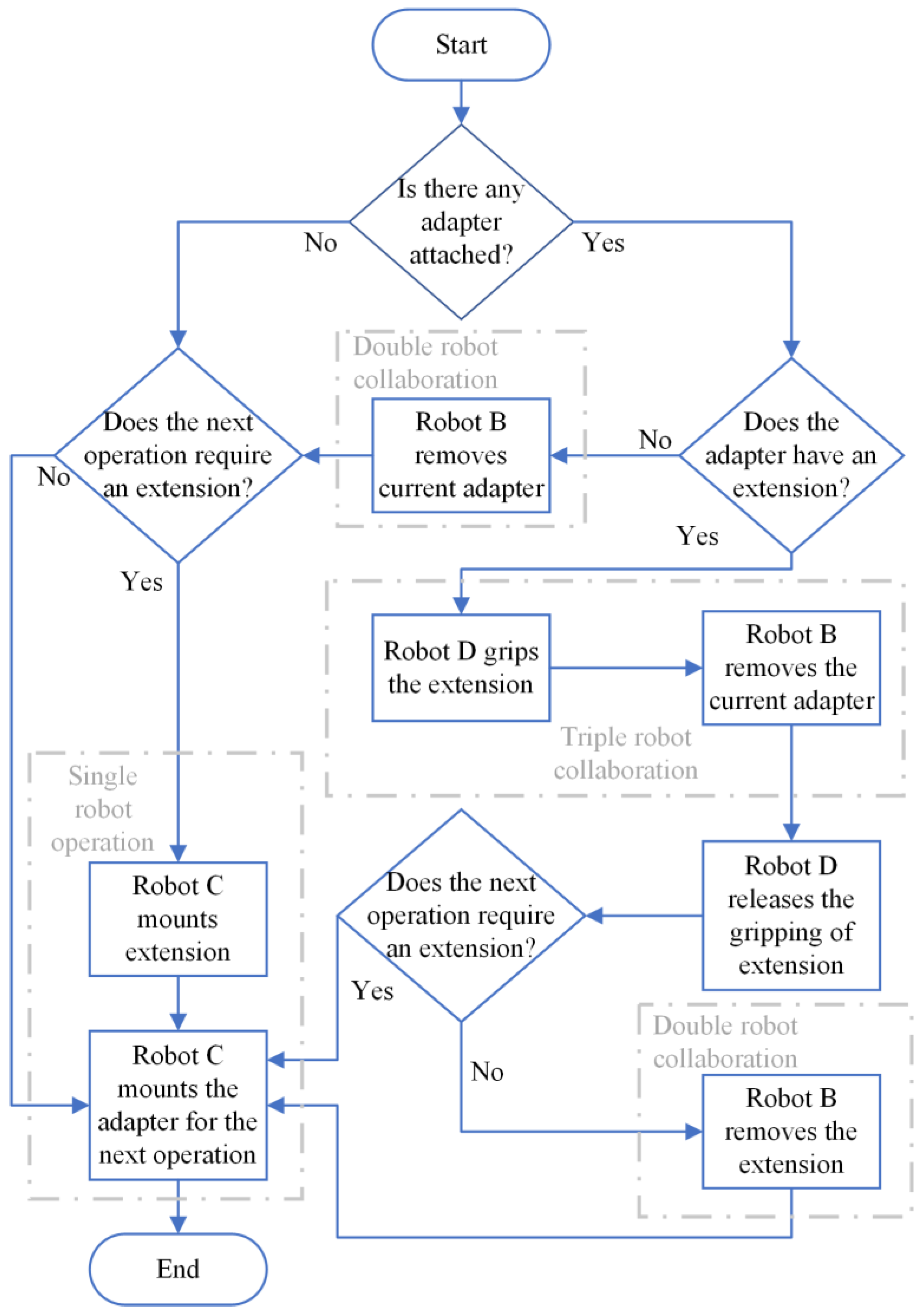

Figure 8.

Decision flow of the adapter changing system.

Figure 9.

Demonstration of parts being recognised and localised (a) in reality and (b) by the embedded on-wrist camera of the TM14 robot and its operating system, TMflow. The arrows represent the 2D orientations relative to the vision job frames. The numbers outside the brackets are the matching scores compared to the training images in percentage, and the integers inside the brackets denote how many objects have been detected. To avoid unexpected movements, each vision job is limited to recognising just one component by limiting the search range inside of the image.

Figure 10.

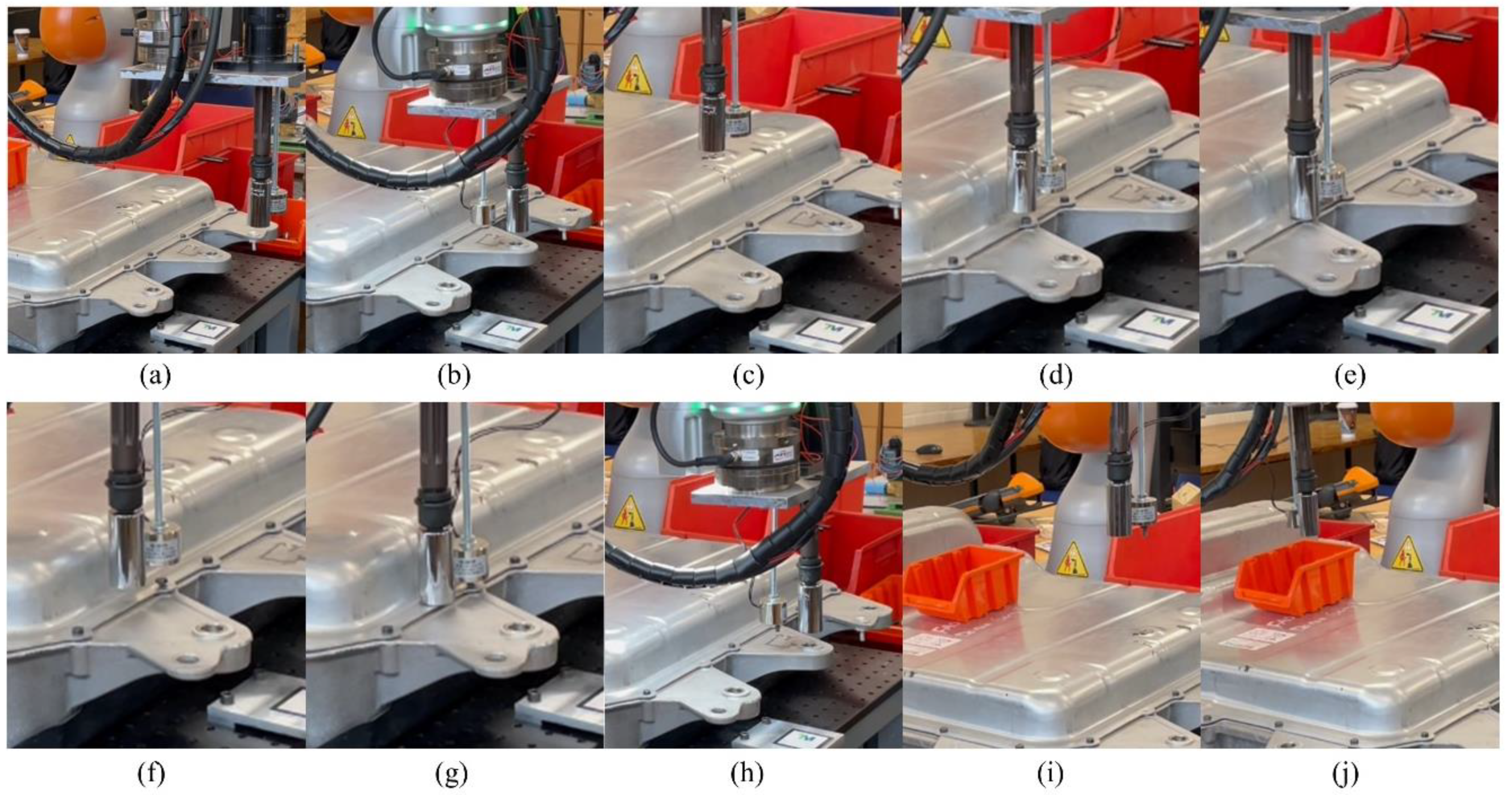

Demonstration of the whole unscrewing process in steps of (a) calibration with the landmark, (b) opening the camera to localise the fastener, (c) translating the nutrunner over the fastener, (d) engaging with the nutrunner until reaching a force threshold, (e) unscrewing, (f) translating the electromagnet over the fastener, (g) engaging with the electromagnet until reaching a force threshold, (h) opening the camera and making sure the fastener is successfully picked up, (i) moving to the collection bin, and (j) turning off the electromagnet to release the fastener.

Figure 11.

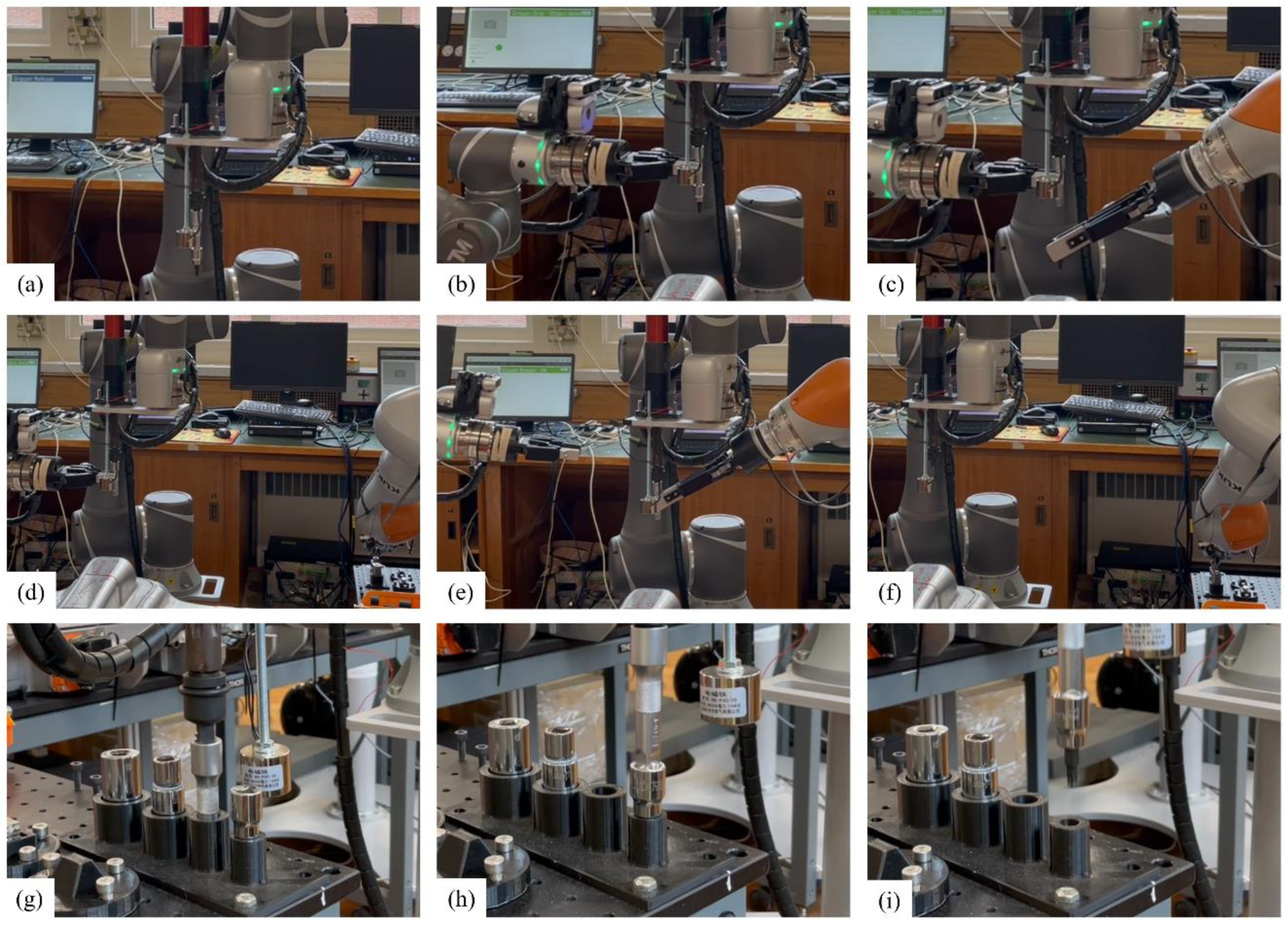

Demonstration of the three-robot-collaboration adapter changing process in steps of (a) robot C moving to a changing position, (b) robot D gripping the extension adapter, (c) robot B removing the Torx head adapter, (d) robot B placing the Torx head adapter into the adapter holder, (e) robot D releasing its grip and robot B gripping the extension adapter, (f) robot B placing the extension adapter into the adapter holder, (g) robot C mounting the extension adapter, (h) robot C mounting the next adapter, and (i) moving to the next operation.

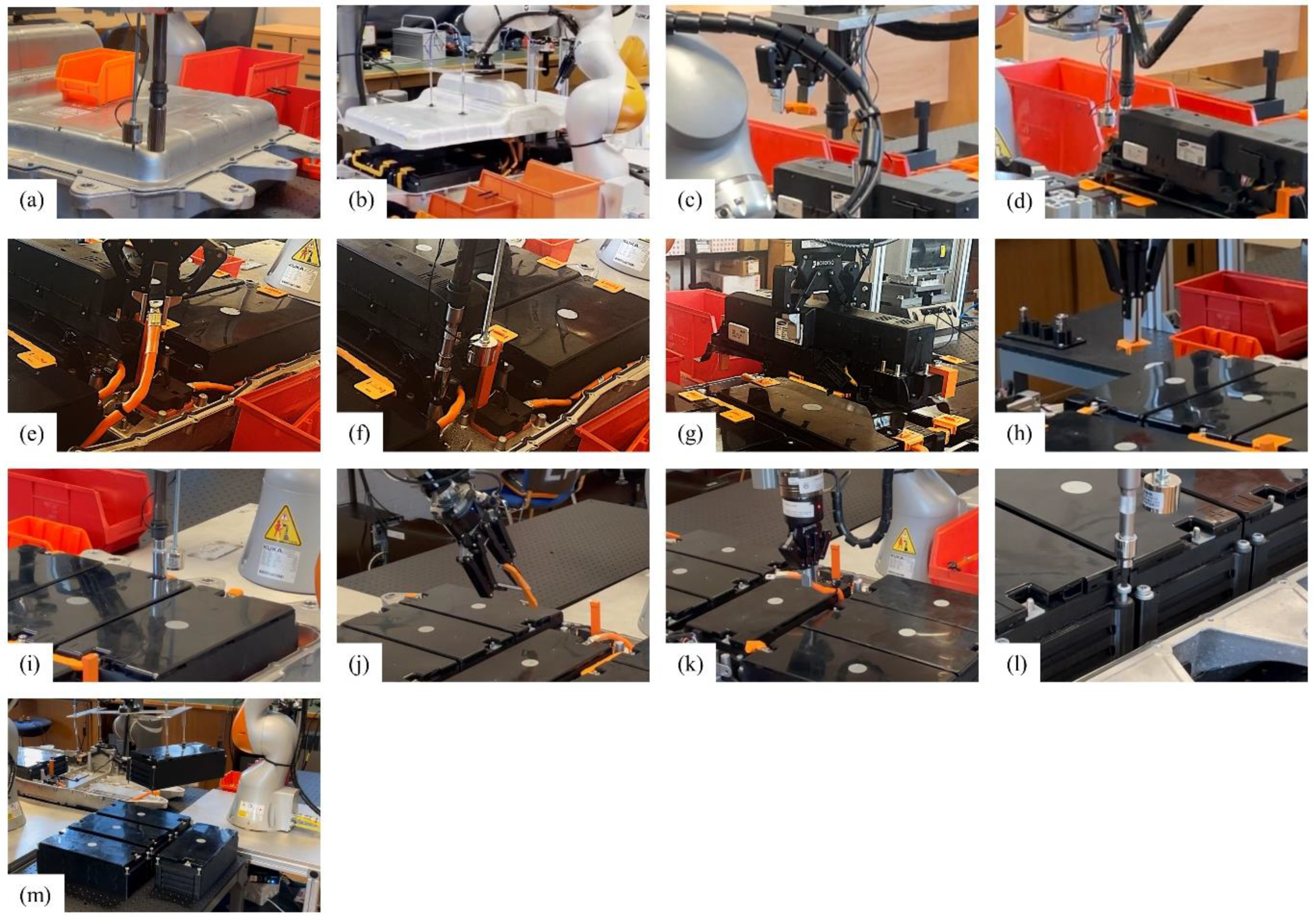

Figure 13.

Demonstration of the whole robotic disassembly process in the sequence corresponding to Figure 2 and Table 1.

Figure 14.

Comparison of each disassembly operation between the human operators and the robot platform. The operation time of the human operators starts to count when the operator has fully equipped the correct tool and begins to engage the parts, and it ends when the operator places the dismantled parts into the designated collection bins. In other words, the time to change tools and to move around the working area is not counted. The robot operation time is measured in the same way.

Figure 15.

Examples of challenges for robotic disassembly operations identified in this case study. (a) Example locations and detailed view of the cable ties. They are mainly used to secure the middle sections of the signalling cables to the battery modules. The cable ties need to be removed before the signalling cables can be removed. (b) Example usage of cable harnesses in the battery pack. The cable harnesses need to be disconnected for the robots to remove the signalling cables. (c) Coolant pads between the battery modules and the pack are used for heat dissipation and damping. However, they increase the adhesion between the module and the lower tray, resulting in a large separation force.

Table 1.

Components removed or disconnected at each stage.

| Disassembly Stages | Disassembly Step Symbols | Disassembly Process | Repetition | Component Removed or Disconnected |
|---|---|---|---|---|
| Stage 1: top cover removal | A | Unscrew | 35 | M5x10mm screw |
| Stage 1: top cover removal | B | Remove | 1 | Top cover |
| Stage 2: circuit breaking | C | Remove | 1 | L-shape cover |
| Stage 2: circuit breaking | D | Unscrew | 1 | M6 nut |
| Stage 2: circuit breaking | E | Disconnect | 1 | High-voltage cable |
| Stage 3: junction box removal | F | Unscrew | 4 | M6x16mm bolt |
| Stage 3: junction box removal | G | Remove | 1 | Junction box |
| Stage 4: battery module removal | H | Remove | 14 | Terminal cover |
| Stage 4: battery module removal | I | Unscrew | 14 | Nuts |
| Stage 4: battery module removal | J | Remove | 5 | Busbar |
| Stage 4: battery module removal | K | Remove | 3 | High-voltage cable |
| Stage 4: battery module removal | L | Unscrew | 28 | M6x95mm bolt |
| Stage 4: battery module removal | M | Remove | 7 | Battery module |
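As a quick check of how the repetitions in Table 1 are distributed across process types, the rows can be tallied as in the sketch below. The data are copied from the table; the variable and function names are illustrative.

```python
from collections import defaultdict

# (step symbol, process, repetitions) copied from Table 1.
TABLE_1 = [
    ("A", "Unscrew", 35), ("B", "Remove", 1), ("C", "Remove", 1),
    ("D", "Unscrew", 1), ("E", "Disconnect", 1), ("F", "Unscrew", 4),
    ("G", "Remove", 1), ("H", "Remove", 14), ("I", "Unscrew", 14),
    ("J", "Remove", 5), ("K", "Remove", 3), ("L", "Unscrew", 28),
    ("M", "Remove", 7),
]


def repetitions_by_process(rows):
    """Sum the repetition counts for each process type."""
    totals = defaultdict(int)
    for _step, process, repetitions in rows:
        totals[process] += repetitions
    return dict(totals)


totals = repetitions_by_process(TABLE_1)
unscrew_share = totals["Unscrew"] / sum(totals.values())
```

The tally gives 82 unscrewing repetitions out of 115 in total, which is consistent with the observation that most of the repetitive operations are unscrewing.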

Table 2.

Main End-Effectors and Duties Allocated for Each Robot.

| Robot | Main End-Effectors | Main Duty |
|---|---|---|
| Robot A | Vacuum gripper | Handling bulky objects |
| Robot B | Two-finger gripper | Handling small objects |
| Robot C | Nutrunner | Unscrewing |
| Robot D | Two-finger gripper | Handling small objects |

Table 3.

Fasteners being unscrewed and the corresponding adapters.

| Disassembly Step | Fastener Type | Size | Head Type | Used Adapter | With Extension | Repetitions |
|---|---|---|---|---|---|---|
| A | Screw | M5x10mm | Hexagonal | 3/8-inch gripper socket | No | 35 |
| D | Nut | M6 | Hexagonal | 1/4-inch gripper socket | No | 1 |
| F | Screw | M6x16mm | Hexagonal | 1/4-inch gripper socket | Yes | 4 |
| I | Nut | M6 | Hexagonal | 1/4-inch gripper socket | No | 14 |
| L | Screw | M6x95mm | Torx | M6 Torx-shape socket | Yes | 28 |
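Table 3 can also be read as a lookup from disassembly step to nutrunner setup. The sketch below encodes it that way and counts how often the setup differs between consecutive unscrewing steps. Treating any difference in socket or extension as one change is an assumption made for illustration, and the names are hypothetical.

```python
from typing import NamedTuple


class Setup(NamedTuple):
    adapter: str
    extension: bool


# Step symbol -> nutrunner setup, copied from Table 3.
ADAPTERS = {
    "A": Setup("3/8-inch gripper socket", False),
    "D": Setup("1/4-inch gripper socket", False),
    "F": Setup("1/4-inch gripper socket", True),
    "I": Setup("1/4-inch gripper socket", False),
    "L": Setup("M6 Torx-shape socket", True),
}


def count_setup_changes(steps):
    """Count transitions between consecutive steps whose setups differ."""
    changes = 0
    previous = None
    for step in steps:
        setup = ADAPTERS[step]
        if previous is not None and setup != previous:
            changes += 1
        previous = setup
    return changes
```

For the step order A, D, F, I, L, every consecutive pair differs in either the socket or the extension, so the function counts four setup changes.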