1. Introduction

The CSV files are a special kind of tabulated plain text data container widely used in data exchange, currently there is no defined standard for CSV file’s structure and a multitude of implementations and variants. Notwithstanding the foregoing, there are specifications such as RFC-4180 that define the basic structure of these files, while a useful addendum to this is defined in the specifications of the USA Library of Congress (LOC) [

1]. According to the LOC specifications the CSV simple format is intended for representing a rectangular array (matrix) of numeric and textual values. “It is a delimited data format that has

fields/columns separated by the comma character %x2C (Hex 2C) and

records/rows/lines separated by characters indicating a line break. RFC 4180 stipulates the use of CRLF pairs to denote line breaks, where CR is %x0D (Hex 0D) and LF is %x0A (Hex 0A). Each line should contain the same number of fields. Fields that contain a special character (comma, CR, LF, or double quote), must be “escaped” by enclosing them in double quotes (Hex 22). An optional header line may appear as the first line of the file with the same format as normal record lines. This header will contain names corresponding to the fields in the file and should contain the same number of fields as the records in the rest of the file. CSV commonly employs US-ASCII as character set, but other character sets are permitted” [

2]. Furter more, so far to the specifications, in a file may exist: commented or empty records; the tab character (\t) or semicolon (;) as field delimiter; one or more, in exceptional cases, of the characters CRLF, CR, and LF as a record delimiter; quote character escaped by preceding it with a backslash (Unix style).

Given that many public administration portals use CSV files to share information of public interest

1, coupled with the reality that the process of manipulating the information contained in them requires structuring the data in tables and correcting data quality errors, it is necessary to automate tasks as much as possible to reduce the time and effort required to deal with messy CSV data [

3,

4]. The automation problem is to determine the delimiters (also called dialect sniffing) of a given file. Dialect sniffing requires that the field delimiter, record delimiter and escape character be determined [

5].

This problem seems straightforward, but it is by no means simple. If one opts to implement a simple field delimiter counter to choose the one with the most occurrences in the entire file, it is very likely that disambiguation will become impossible if the algorithm is confronted with data that have two or more delimiters with the same number of matches.

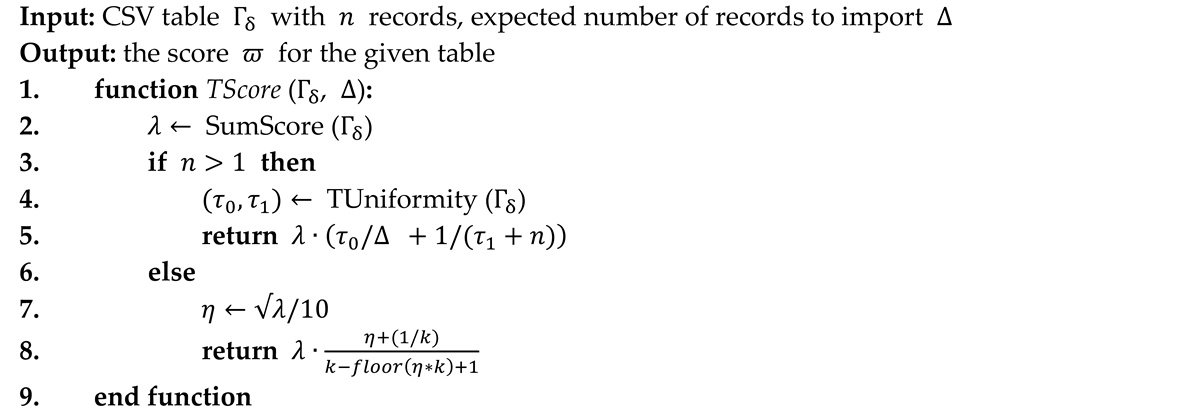

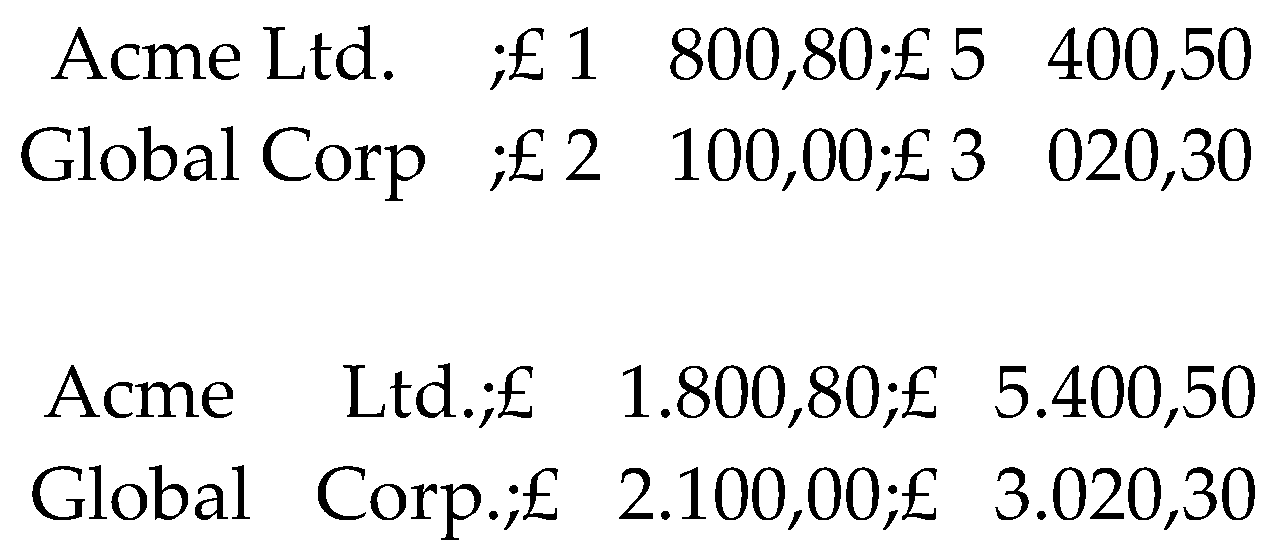

A CSV file with a structure as shown in

Figure 1 is at risk of being misinterpreted, this is illustrated in [

6]. If delimiters are counted, the period or space will be selected as field delimiters because of their three constant occurrences, generating four fields, in the records, as opposed to the two occurrences and three fields generated by the comma and semicolon. Although a well-defined file should have a header row, there are many files on the Internet that do not. [

4].

It is a fact that systems that work with CSV files may require the user to set the configuration with which they want the file to be processed, however, when the intention is to analyze data coming from different sources, it is very beneficial to implement a methodology that allows to automatically infer CSV dialects with minimal user intervention.

In this sense, CSV file dialect inference is a fundamental part of data mining, data wrangling and data cleansing environments [

3]. Moreover, dialect detection has the potential to be embedded in systems designed for the new paradigm with the NoDB philosophy, under which it is proposed to make databases systems more accessible to users [

7,

8]. These trends suggest that the traditional practice of considering CSV files outside of database systems is tending to change [

9].

2. Related Work

The detection of dialects in CSV files is a little studied field, and there are few sources on the subject. In 2017, T. Döhmen proposed the ranking decision method based on quality hypotheses for parsing CSV files. A similar method is implemented in the DuckDB system [

10]. A similar treatment, based on the discovery of the table structures once the information is loaded into the RAM, is addressed by C. Christodoulakis et. al. [

11]. In the latter, the methodology is based on the classification of the records present in the CSV files, to which a specific heuristic is applied to discover and interpret each line of data.

In 2019, G. van den Burg et al., developed the CleverCSV system as a culmination of his research, in which he demonstrated that his methodology significantly improved the accuracy with which dialects were determined compared to tools such as Python’s

csv module, or the intrinsic functions of the

Pandas package, also in the Python programming language. The methodology implemented in CleverCSV is based on the detection of patterns in the structure of the CSV records, in addition to the inference of the data types of the fields that compose each record. In this way, the utility applies the necessary heuristics to infer the potential dialect for a given CSV file through mathematical and logical operations devised to discern between possible dialects [

5].

In 2023, Leonardo Hübscher et al., presented a research project that led to the development of a software application capable of detecting tables in text files. This research considers the dialect determination of CSV files as a subproblem to be solved in order to obtain the dialect that produces the best table [

12].

3. Problem Formulation

In order to properly formulate the dialect detection problem, it is necessary to establish some basic definitions.

Definition 3.1 (CSV content). Given a CSV file its content is defined as , where , and represents a character set encoded using a given encoder.

As per the CSV content definition, there is a real possibility that a single CSV file may contain characters encoded in more than one encoder. For the purpose of this document, it is assumed that all characters share the same encoder.

Given that each file originates from a table to which a format function and the helper function have been applied to produce and write a sequence of human readable characters separated by lines; then from each CSV content is possible to obtain a table so that we can verify that .

Definition 3.2 (CSV table). The table is defined as a set of records composed of a given set of fields, which share the data typology between corresponding fields across their records. This table can be represented as a data array of fields and records. Thus, its records are defined as ; i.e., a set of fields . Then, the table can be expressed as ; i.e., a set of records .

The function is in charge of reading the content of the file , while the function is in charge of parsing and transforming the CSV content into a table . The process of parsing and transformation is clearly out of the scope of this study, so in the following it is assumed that the selected implementation is able to process the tables obtained by parsing a CSV file with the selected tool.

Definition 3.3 (CSV dialect). Let be the data table from which the content of file is generated, the dialect is defined as the formatting rule to be applied to produce the output data stream.

So that, by the dialect definition, it is verified that

Definition 3.4 (CSV dialect determination). Given a CSV file , determining the dialect involves identifying the dialect that satisfies the following statement .

Thus, it can be concluded that for a CSV file , created using a dialect , there exists a dialect that verifies the condition . Therefore, it is verifiable that the content of a CSV file is a function of its dialect.

1.1.1. Potential Dialect Boundaries

It should be noted that multiples potential dialects can produce similar tables outputs that are equal or approximately equal to the source table . Furthermore, shares the same character set as the contents for the CSV file . That is, can be practically any character within domain. Thus, it is necessary to narrow down the range of candidate characters involved in dialect detection to streamline the process.

For the purposes of this research, the potential dialect is restricted to

4. Table Uniformity

The table uniformity approach is proposed to solve the problem of dialect determination. The method is based on the measurement of the consistency of the table, which has been obtained through a dialect , and the dispersion of records along with the inference of the raw data types from the records.

Definition 4.1 (Table consistency). Let be a table , generated when reading a CSV file using a dialect , the table consistency, denoted by , is a ratio that describes how uniform a table is across its fields and its records.

Definition 4.2 (Records dispersion). Let be the sets of records from table , generated when reading a CSV file using a dialect , the records dispersion, denoted by , is a measure describing the magnitude of the change in the records composition throughout the table.

These definitions are based on the fact that tables, in general, have a defined structure with persistent fields in its records.

The two measurements that define the table uniformity parameter

are related to the structure of the records

from the table

. Where

is a direct function of the standard deviation of fields, and

being a function measuring the weighted dispersion in records structures as a factor of the statistical segmented mode

2.

Where, for a given table

,

is the standard deviation of the number of fields across records;

represents the count of times the number of fields changes between records;

is the statistical range of the number of fields over records;

is the segmented mode, describing the largest number of times the record structure is sequentially preserved within the table, and

is the records variability factor.

The definitions provided propose a concept diametrically opposed to that used in most solutions, since it discourages data dispersion, i.e., records with a higher number of fields/columns are only favored if their record structure is uniform. The parameter indicates the degree of consistency of the records in the table, while is a fine-grained measure of the dispersion and inconsistency within the records. This quality allows the new method to discern between data tables by inferring uniformity in two senses: consistent records and invariant records with little dispersion in their structure. The parameter ranges from , being for those tables with consistent records; while ranges from , being for those tables with invariant record structure and without dispersion.

5. Type Detection

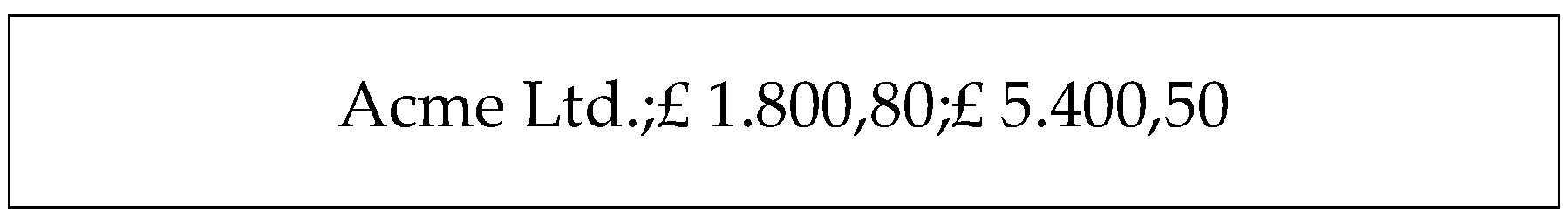

Data type detection is the core basis of the methodology implemented. Recognition of the types of data fields from each record allows us to collect information about the contents of a given table. In this context, the records scoring,

, is defined as

Where

is the score for ith field

in

from the table

. If the type of the ith field

is known

,

otherwise.

For the purposes of this paper, the following field types are generally considered to be known:

Time and date: matching regular dates and time format, as well stamped ones like MM/DD/YYYY[YYYY/MM/DD] HH:MM: SS +/- HH:MM

Numeric: matching all numeric data supported by the implementation language selected.

Percentage.

Alphanumeric: matching numbers, ASCII letters and underscore.

Currency

Especial data: like “n/a” or empty strings

Email.

System paths.

Structured scripts data types: matching JSON arrays and data delimited by parentheses, curly and square brackets.

Numeric lists: matching fields with numeric values delimited with common separator character.

URLs.

IPv4.

Al other fields will be scored as unknown type.

6. Table Scoring

Once the table uniformity

for the records

contained in table

, which has been generated by reading a CSV file

using a dialect

, and the score

are computed, the table score

is defined as

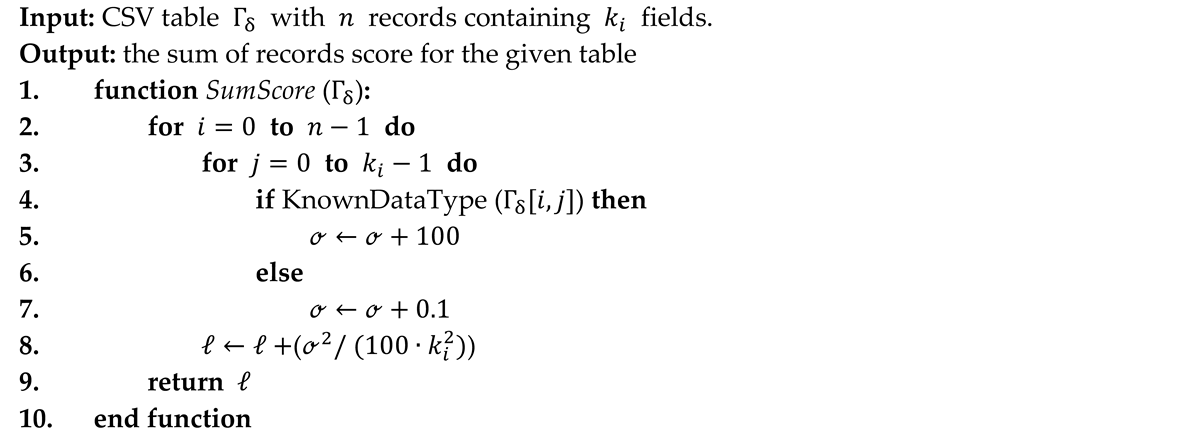

Where

is a threshold used for indicate the expected number of records to be imported from the CSV file

which contains a number of records

. For

, and an appropriate selection of

,

will generate a table in with

; therefore, by the definition stated, the table score is in the range

.

In the case

we have

Where

is a discriminant to ensure the exclusion of false positives with a single record.

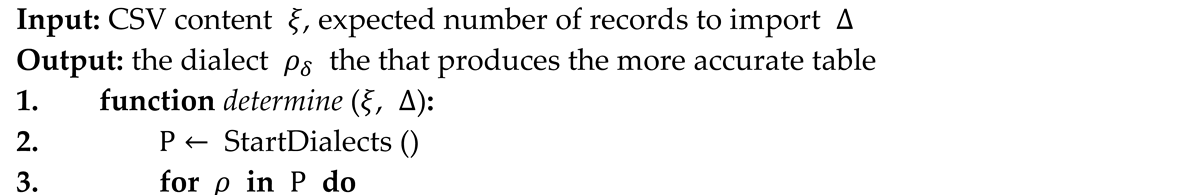

7. Determining CSV File Dialects

In order to develop a reliable methodology for determining the dialects of CSV files, the cases that produced failures in famous dialect detectors were used as a basis. This is because the dialect in well-structured files is easily determined with any simple heuristic.

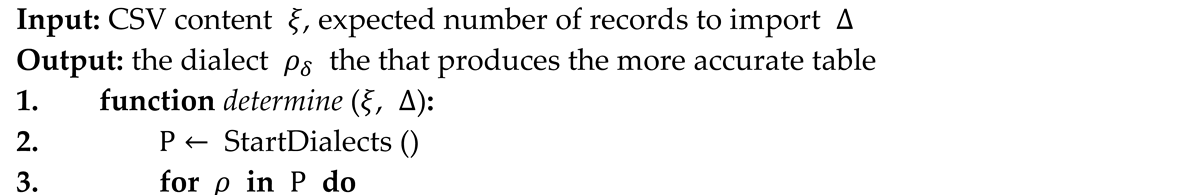

The main pseudocode for dialect determination is described in

Algorithm 1. At line 2 the set of predefined dialects are initialized; then, in line 4, a table

is created by parsing the CSV content

with each dialect.

|

Algorithm 1: Dialect Determination |

|

At this point, it becomes clear that the selection of a robust parser is of utmost importance in order to obtain the best results even on messy files. In line 5, the output table is scored and this result is saved within the current dialect in the collection . At line 6, the dialect that produce the highest scored table is selected.

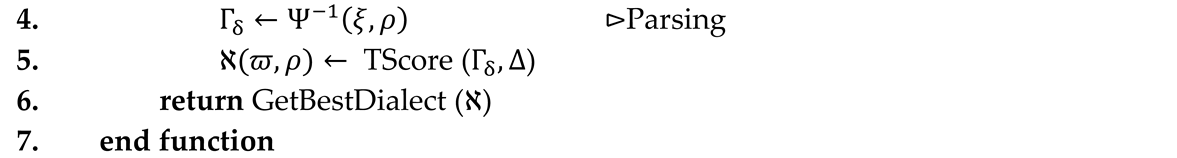

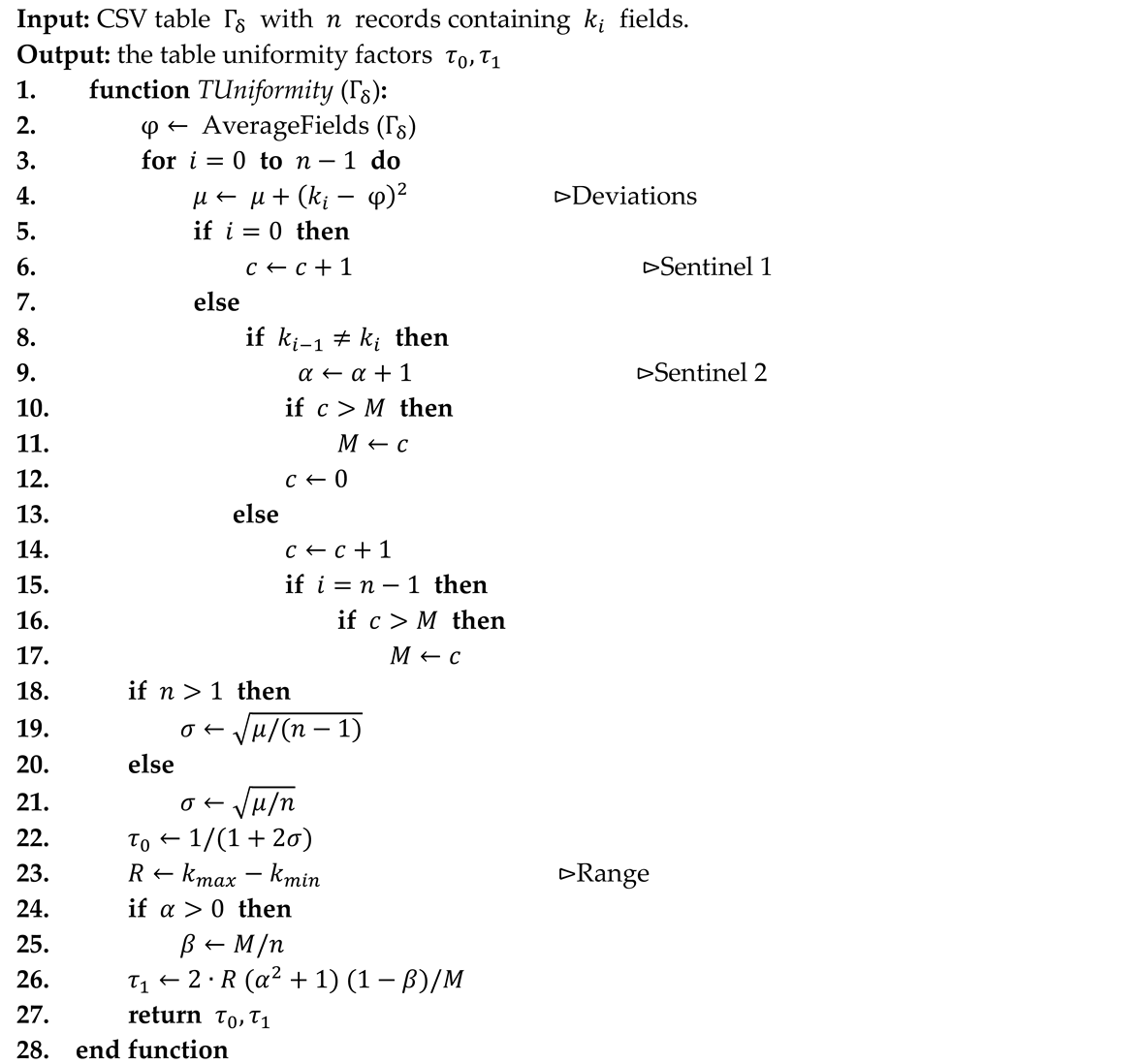

The table uniformity pseudocode is outlined in

Algorithm 4. The method uses a set of sentinels to measure table inconsistency through monitoring table changes through the parsed records.

|

Algorithm 4: Table Uniformity |

|

The parameter

is derived from the standard deviation that indicates how uniformly the fields count are grouped around the average number of fields contained in the parsed records, resulting in an appropriate measure to qualify the structure of a table [

13]. However, when there are two or more dialects with a small variance, the

parameter is not decisive. It is in this situation where the

parameter provides support by penalizing tables with variations in its records structures, and whose structure resembles sparse data that do not maintain consistency.

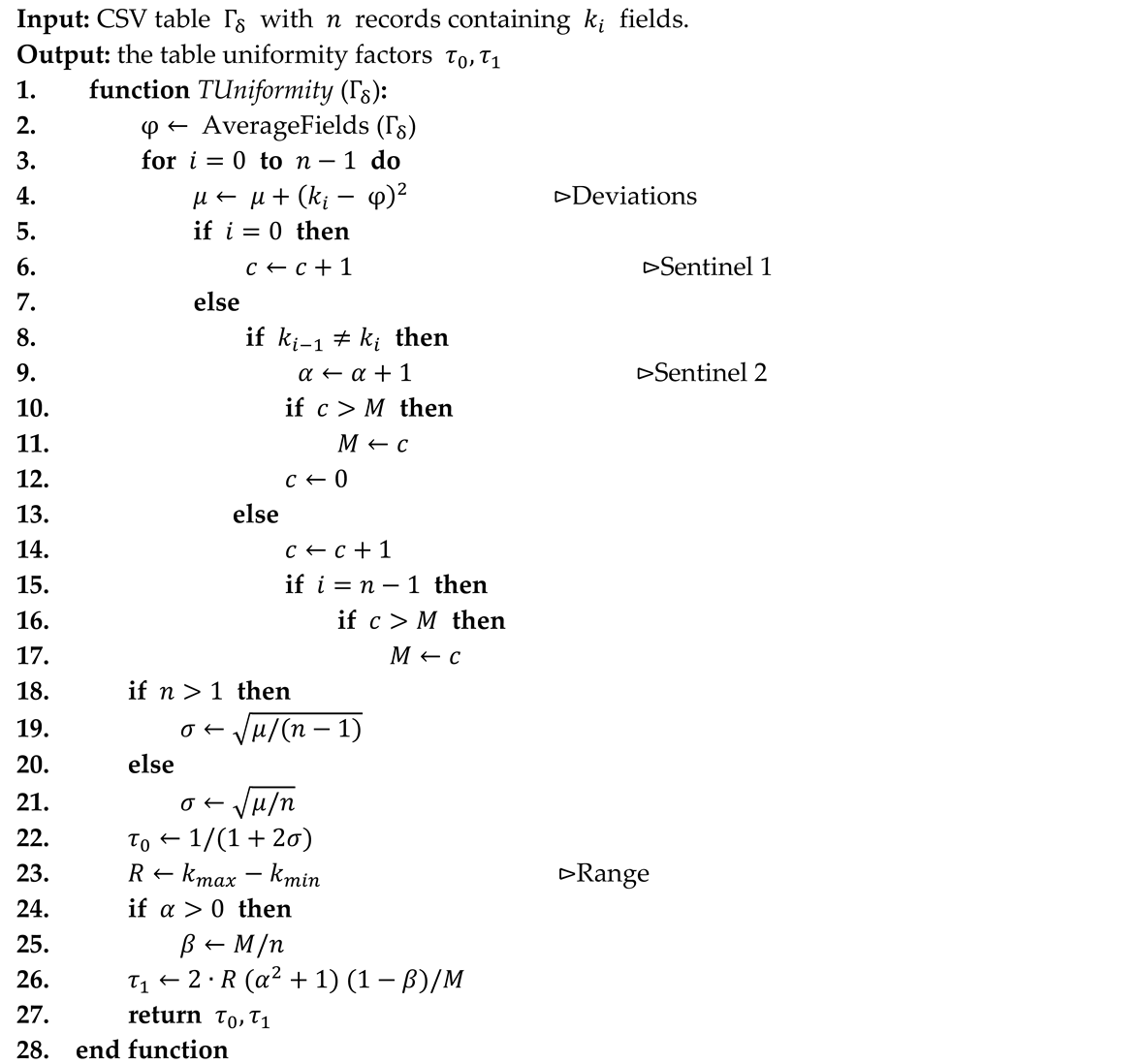

The following illustration shows the preview from the modified content of one of the files used during the testing phase. It was published in the CleverCSV repository on GitHub

3.

The star character has been replaced by the vertical bar “|” to include in the detection a potential

dialect with this character. As the author points out, the CSV file is comma delimited, using double quotes as the quote and escape character, then the file is compliant with RFC-4180 specifications. When running dialect detection, CleverCSV gets the vertical bar “|” as the delimiter as this field pattern gets a

score vs a

from the patterns with the “,” character as delimiter. This behavior is due to the fact that the logic used strongly weights the count of delimiters over the detected data types. This behavior is due to the fact that the logic used strongly weights the delimiter count over the types of data detected, where dialects containing the comma as delimiter obtain a type score of

against the type score of

obtained by dialects with the vertical bar as delimiter.

By executing the algorithms presented in this research, the following is obtained for dialects with the vertical bar as the delimiter , , , and . For the comma the results are , , , and . Then the comma “,” character is selected as delimiter.

8. Experiments

It was decided to code the new method and integrate it with CSV Interface

4, a VBA CSV file parser. Thus, the new CSV dialect determination method will be available in a widespread programming language without overinvesting efforts.

The new solution was tested on a dataset provided in the research by Gerardo Vitagliano et al. One or two polluted CSV file per pollution case are used for testing, all the 99 survey having at least one pollution case as described in the aforementioned study (excluding empty ones by the fact infinite dialects can be produce no payload files [

14]). In addition, data from the OpenRefine

5 testing, CleverCSV failure cases and other files used at development phase serves as testing samples. In total, the solution was tested against 148 CSV files (104 MB of data). In this way the proposed solution will be tested against real-world and programming like files with pollutions that make dialect detection difficult.

The solution presented in this research is compared head to head in the CSV dialect detection field with the alternative method CleverCSV [

5], a tool showing an accuracy of close to 97% [

15].

8.1. Dialect Detection Accuracy

To measure the accuracy of dialect determination, the dialect for each of the files in the testing set provided was manually verified and a CSV file containing the filename along with the corresponding dialect was produced. When executing the implemented algorithms, it is determined whether the dialect has been properly determined by comparing the result returned with the expected result, accumulating the total number of files for which the dialect was correctly detected by the implemented heuristic.

The results obtained in the test runs are shown in the

Table 1. It is noticeable the perfect score of the new method proposed in this paper.

When using tables of ten or twenty-five records (10R, 25R) for dialect determination, the proposed method was not able to determine the dialect of one of the files present in the testing dataset. This file has been sectioned to show the variation of certainty as the size of the table considered in the computations increases.

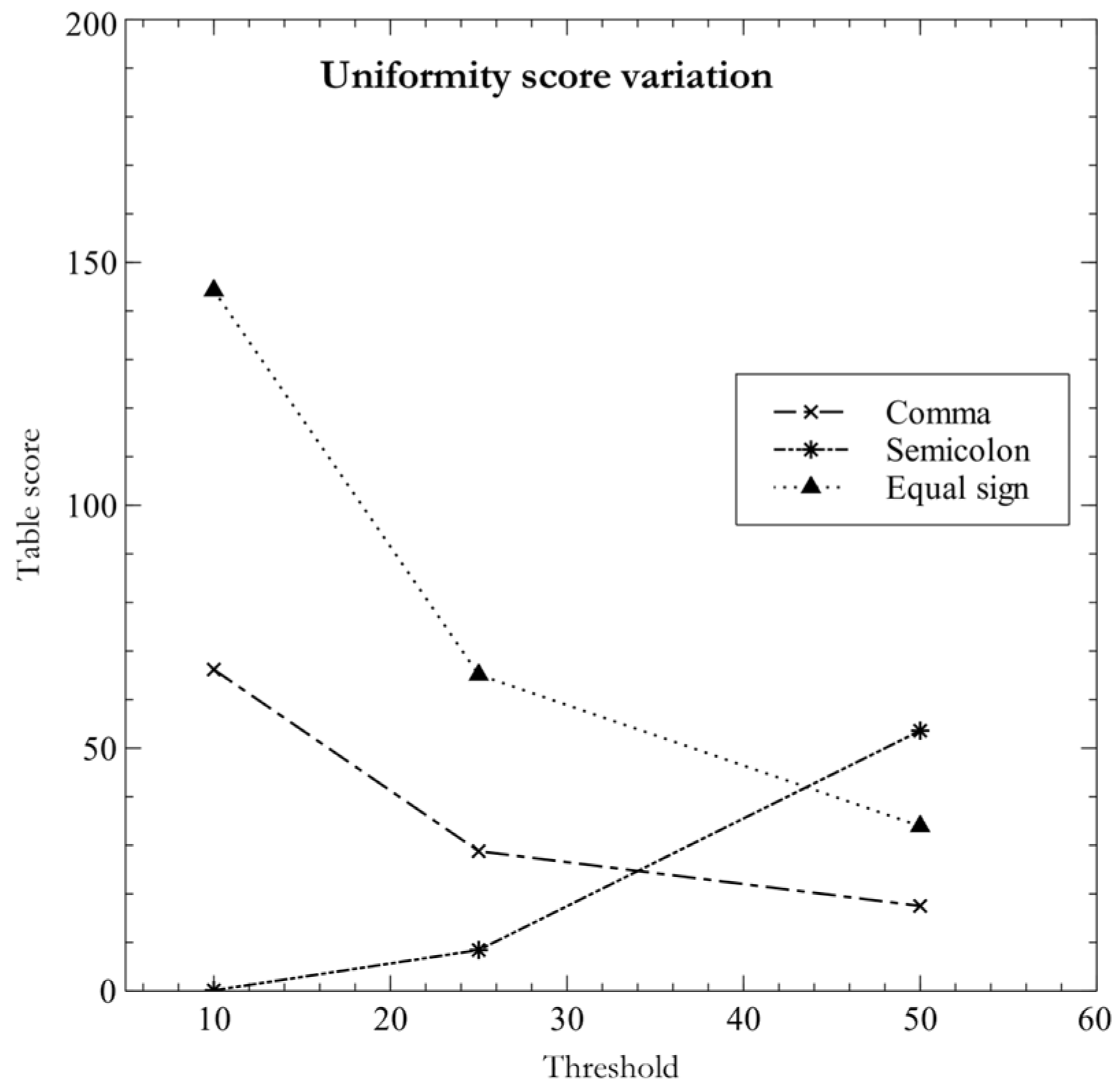

As can be seen in the illustration above, the file contains a twelve-row header with no comment identifier. When the stated heuristic is applied, it is concluded that the delimiter is the equal sign “=“, since the dialects containing it divide each record into known data types: an alphanumeric field/column and a field with structured data delimited by square brackets. By increasing the table size to twenty-five (25R) the heuristic begins to highlight the semicolon “;” as a possible field delimiter character. Finally, the semicolon is correctly detected as a delimiter when the threshold of fifty records (50R) in the table is stated. This behavior demonstrates that the proposed methodology is strongly related to the changes in the structure of the tables used in dialect inference and it is illustrated in the

Figure 3.

9. Discussion

The results obtained by the table uniformity method proposed in this research have their genesis in two aspects: the type of heuristics used, the behavior of the CSV file analyzer while producing tables using a certain dialect.

9.1. Heuristic

In contrast to CleverCSV, in whose heuristic the detection of data types serves as a factor to scale down the score obtained by a certain pattern; the table consistency method uses data detection as a base score to be narrowed using the table consistency and data dispersion parameters.

Since the detection of data types is the foundation of the method, a wide range of typologies is required to be recognized. According to Mitlohner’s research [

4], with a base of 104,826 CSV files, the vast majority of data commonly stored in this type of files are numeric, tokens (words separated by spaces), entities, URLs, dates, alphanumeric fields and general text, so these data types must be recognized. Additionally, in the field of programming, there are other types of data frequently dumped in CSV files, namely: structured data with the Regex pattern

, numerical lists, tuples, arrays among others.

It is worth mentioning that dialect detection is prone to failure when the CSV file is composed of unknown data types. In these cases, the table uniformity tends to select dialects that produce registers with a single field. When reviewing the cases where CleverCSV was not able to determine the dialect, it has been observed that the common denominator has been the high count of a potential delimiter with more occurrences than the expected delimiter.

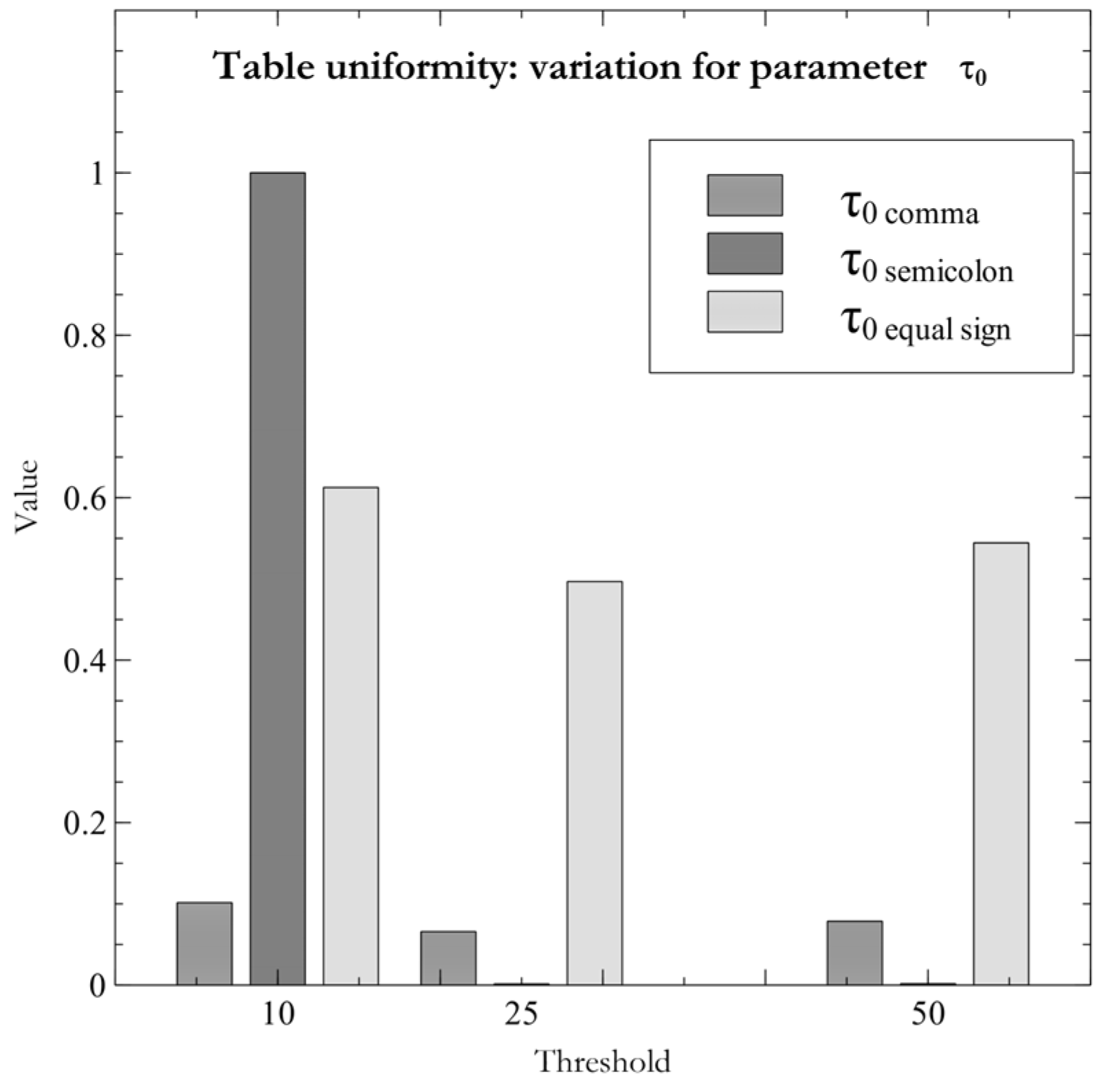

As pointed out earlier, the table uniformity method prefers grouped data over those that appear to be sparse data. In these cases, detection tends to depend exclusively on the data types detected in the records. This fact is evidenced by plotting the values of the uniformity parameter .

Looking at

Figure 4, it can be seen that, even though the score obtained by the semicolon dialect is very close to zero, the value of

is maximum. In contrast, this value fluctuates to almost zero for the dialect containing the semicolon; it remains almost unchanged among the dialects containing the other characters. In these cases, the dialect determination is relegated to data type detection and fine-grained monitoring of changes in table structures through the

parameter. The above serves as a basis for reaffirming that the table uniformity method helps to properly adjust the metrics obtained by inferring the data types.

9.2. CSV Parser Basis

The accuracy of dialect determination is intimately related to the way CSV parsers behave when confronted with atypical situations. This is because heuristics use these results to infer the configuration that returns the most suitable data structures.

One of the capabilities required for dialect determination is the recovery of data after the occurrence of a critical error. This is the case for the import of CSV files where there is no balance between quotation marks. This situation breaks the RFC-4180 specifications and causes an import error in all solutions intended to work with CSV files. In this sense, the recovery of this error should include a specific message after which the loading of information should continue until the whole file is processed.

Since the determination of dialects can be done with a few records received from a CSV file, there is a probability that some of the parameters that compose the dialect cannot be determined properly. Given this reality, it is preferable that CSV parsers be able to convert between one escaping mechanism and another instead of making the escape character mutually exclusive as established in the most relevant proposals on these topics [

16]. This results in the correct interpretation of escape sequences that use the backslash “\” in those files in which a quote character has been detected as part of their dialect.

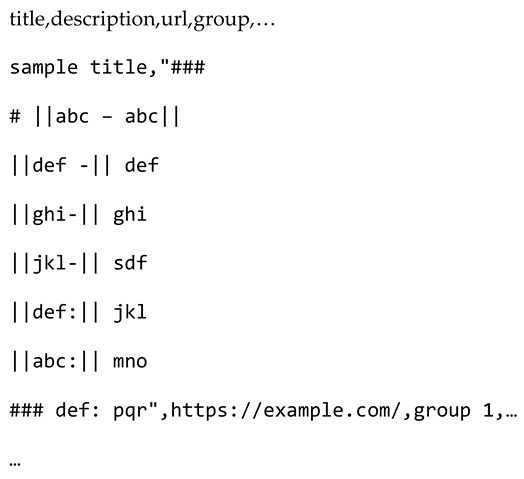

10. Appendix: Algorithms Pseudocode

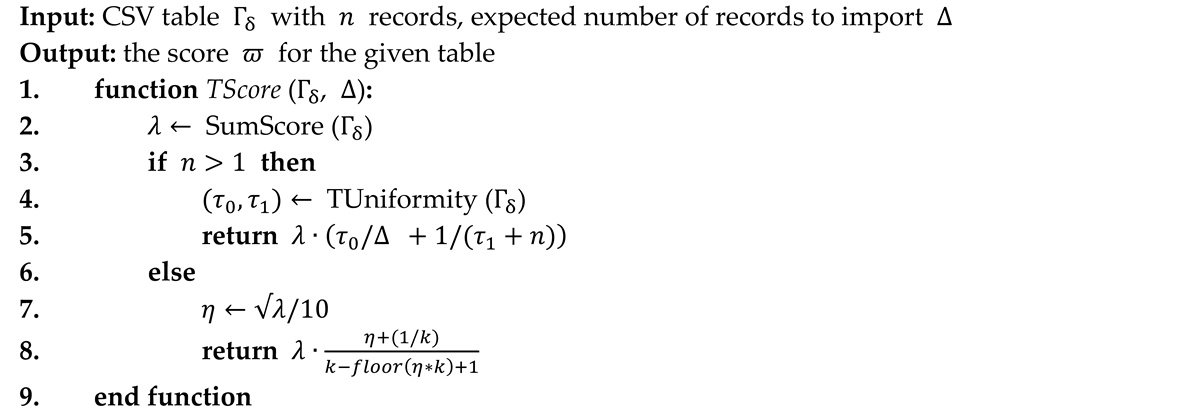

|

Algorithm 2: Table Score |

|

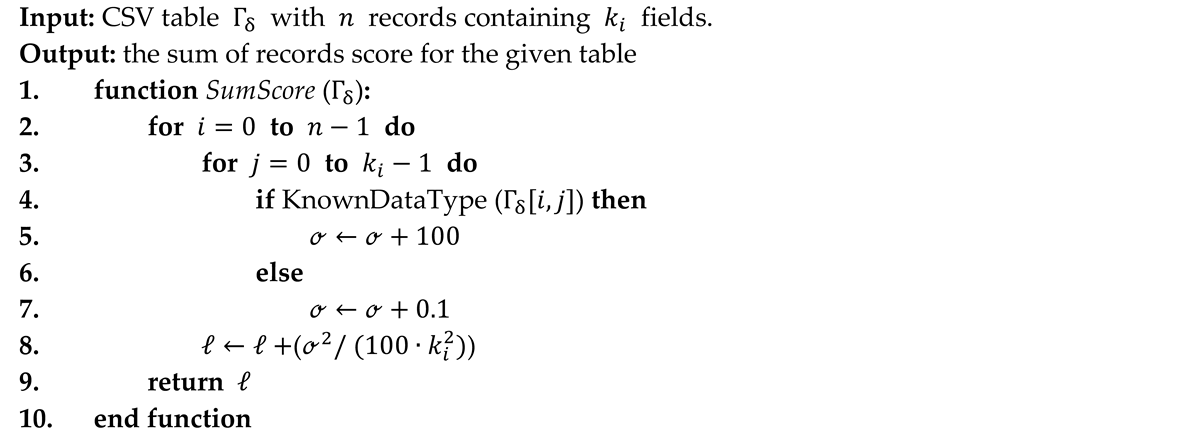

|

Algorithm 3: Sum of Records Score |

|

Notes

| 1 |

An analysis of a 413 GB data body found CSV files available for download on 232 portals [ 4]. |

| 2 |

Segmented mode refers to the use of segments of the sample, which are defined as the data undergoes dispersion. |

| 3 |

|

| 4 |

|

| 5 |

|

References

- Y. Shafranovich, “Common Format and MIME Type for Comma-Separated Values (CSV) Files,” IETF. Accessed: Jul. 23, 2021. Available online: https://datatracker.ietf.org/doc/rfc4180/.

- Library of Congress, “CSV, Comma Separated Values (RFC 4180),” LOC. Available online: https://www.loc.gov/preservation/digital/formats/fdd/fdd000323.shtml.

- C. Sutton, T. Hobson, J. Geddes, and R. Caruana, “Data Diff: Interpretable, Executable Summaries of Changes in Distributions for Data Wrangling,” presented at the Knowledge Discovery and Data Mining Conference, London, United Kingdom, Aug. 2018. Accessed: Jul. 23, 2021. Available online: https://www.turing.ac.uk/research/publications/data-diff-interpretable-executable-summaries-changes-distributions-data.

- J. Mitlohner, S. Neumaier, J. Umbrich, and A. Polleres, “Characteristics of Open Data CSV Files,” in 2016 2nd International Conference on Open and Big Data (OBD), Vienna: IEEE, Aug. 2016, pp. 72–79. [CrossRef]

- G. J. J. van den Burg, A. Nazábal, and C. Sutton, “Wrangling messy CSV files by detecting row and type patterns,” Data Min. Knowl. Discov., vol. 33, no. 6, pp. 1799–1820, Nov. 2019. [CrossRef]

- T. Döhmen, H. Mühleisen, and P. Boncz, “Multi-Hypothesis CSV Parsing,” in Proceedings of the 29th International Conference on Scientific and Statistical Database Management, Chicago IL USA: ACM, Jun. 2017, pp. 1–12. [CrossRef]

- Alagiannis, R. Borovica-Gajic, M. Branco, S. Idreos, and A. Ailamaki, “NoDB: efficient query execution on raw data files,” Commun. ACM, vol. 58, no. 12, pp. 112–121, Nov. 2015. [CrossRef]

- M. Karpathiotakis, M. Branco, I. Alagiannis, and A. Ailamaki, “Adaptive query processing on RAW data,” Proc. VLDB Endow., vol. 7, no. 12, pp. 1119–1130, Aug. 2014. [CrossRef]

- S. Idreos, I. Alagiannis, R. Johnson, and A. Ailamaki, “Here are my data files. Here are my queries. Where are my results?,” in Proceedings of 5th Biennial Conference on Innovative Data Systems Research, Asilomar, California, USA, Jan. 2011, pp. 57–68. Accessed: Jul. 24, 2021. Available online: https://www.cidrdb.org/cidr2011/Papers/CIDR11_Paper7.pdf.

- Dutch Stichting DuckDB Foundation, “DUCKDB.” Dutch Stichting DuckDB Foundation, Amsterdam NL, 13 2023. Accessed: Feb. 04, 2024. Available online: https://duckdb.org/docs/archive/0.9.2/.

- C. Christodoulakis, E. B. Munson, M. Gabel, A. D. Brown, and R. J. Miller, “Pytheas: pattern-based table discovery in CSV files,” Proc. VLDB Endow., vol. 13, no. 12, pp. 2075–2089, Aug. 2020. [CrossRef]

- L. Hübscher, L. Jiang, and F. Naumann, “ExtracTable: Extracting Tables from Raw Data Files,” 2023. [CrossRef]

- M. F. Al-Saleh and A. E. Yousif, “Properties of the Standard Deviation that are Rarely Mentioned in Classrooms,” Austrian J. Stat., vol. 38, no. 3, Apr. 2016. [CrossRef]

- G. Vitagliano, M. Hameed, L. Jiang, L. Reisener, E. Wu, and F. Naumann, “Pollock: A Data Loading Benchmark,” Proc. VLDB Endow., vol. 16, no. 8, pp. 1870–1882, Apr. 2023. [CrossRef]

- T. Petricek, G. J. J. V. D. Burg, A. Nazábal, T. Ceritli, E. Jiménez-Ruiz, and C. K. I. Williams, “AI Assistants: A Framework for Semi-Automated Data Wrangling,” IEEE Trans. Knowl. Data Eng., vol. 35, no. 9, pp. 9295–9306, Sep. 2023. [CrossRef]

- Rufus Pollock, “Data Package (v1),” CSV Dialect. Accessed: May 10, 2023. Available online: https://specs.frictionlessdata.io/csv-dialect/.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).