2. Evolution of AI Project Leadership

A historical perspective offers valuable insights into the evolution of AI project leadership [6]. Early leaders and visionaries in AI, often overlooked, laid the groundwork for contemporary practices [7]. Understanding milestones in the field's history provides executives with lessons that can shape modern AI leadership [1].

In the current landscape, AI project leadership has embraced several trends. Agile leadership in AI development emphasizes adaptability and responsiveness to rapid technological change [8]. Collaborative leadership in cross-functional teams acknowledges the interdisciplinary nature of AI projects, fostering teamwork and communication across diverse skill sets [3,8]. However, these contemporary models bring their own challenges, ranging from technological disruption to complex ethical and regulatory landscapes [6,9].

2.1. Defining Leadership in the AI Context

Before examining the significance of federal directives, it is crucial to understand the evolving nature of leadership in the AI context [4]. AI projects demand leaders who not only possess traditional managerial acumen but can also navigate the unique challenges posed by integrating cutting-edge technologies [3]. The interplay of technical expertise and visionary leadership is essential to steering AI initiatives toward success [10].

Executives overseeing AI projects bear the responsibility of setting the strategic vision and mission for these initiatives [10]. Beyond the technical realm, their role extends into the ethical dimensions of AI development [10,11]. Fostering a culture of responsible AI involves ensuring that ethical considerations are integral to decision-making processes [5]. This dual responsibility emphasizes the multifaceted nature of AI project leadership [1].

2.1.1. Scope of the Executive Order

It is crucial to note that the impact of President Joe Biden's executive order on AI falls predominantly on federal initiatives [12]. The order directs various government agencies to create regulations overseeing AI, set new standards, and administer governmental guidelines [10]. However, the executive order itself is explicitly not a law, and there are currently no plans for Congress to pass similar legislation [13]. The directives and regulations outlined in the order therefore pertain primarily to the federal government's own approach to AI development and deployment [12].

While the executive order is tailored for federal initiatives, it can serve as a directional guide for private entities involved in AI [12]. The emphasis on creating regulations, standards, and guidelines sends a clear message to the private sector about the areas where increased regulation might be expected [12,13]. This includes considerations for safeguarding data privacy, enhancing cybersecurity, and strengthening national security, among other aspects [14].

Additionally, the order's commitment of funding for research into privacy-preserving AI technologies is noted as a significant contribution [12]. Private entities, particularly those heavily invested in AI development, can glean insights from the order's focus on privacy, equity, and civil rights in AI [15]. Although the order does not impose immediate regulatory constraints on private entities, it sets a precedent for potential future regulatory developments and emphasizes the importance of responsible and ethical AI practices [16].

In essence, while the executive order's primary impact is on federal initiatives, private entities are encouraged to pay attention to its directives and align their practices with the broader principles outlined [12,25]. The order acts as a precursor, signaling a growing emphasis on regulatory considerations in the AI space, even though it does not directly impose regulations on private entities at this stage [12,17].

2.1.2. Impact on Government Contracts Generally

Dominique Shelton Leipzig, a partner and cybersecurity and data privacy leader with Mayer Brown, highlights the far-reaching implications of the executive order for the vast landscape of government contracts [12,17,18]. The federal government, being the largest procurer of vendor services in the U.S., exercises considerable influence over a myriad of sectors, including IT, life sciences, defense, and more [12].

Leipzig emphasizes that the executive order, signed by President Joe Biden, will directly impact trillions of dollars in government contracts [17]. Its influence extends well beyond IT services, encompassing critical infrastructure areas such as health, finance, energy, and the food supply. To illustrate the scale of its impact, Leipzig notes that the government spent $2 trillion on IT services alone in the previous year [18,19].

In light of these numbers, Leipzig advises that companies falling into certain categories need to pay close attention [17]. If a company is a recipient of federal funds, part of critical infrastructure, a government contractor, or a supplier to any of these entities, its leaders are urged to be vigilant [25]. The executive order's implications are not confined to a specific sector; instead, they permeate the many industries with ties to government contracts, requiring careful consideration and adaptation as the regulatory landscape evolves [12,20].

2.2. Understanding Executive Order 14110 and Executive Order 14091

Executive Order 14110, issued on October 30, 2023, emphasizes the safe, secure, and trustworthy development and use of AI [12,20]. It delineates provisions for ensuring the ethical deployment of AI technologies, placing a significant compliance burden on executives leading AI projects [22].

Simultaneously, Executive Order 14091, dated February 16, 2023, places a strong emphasis on advancing racial equity, alongside considerations of gender, physical condition, and age, and on supporting underserved communities through federal initiatives [12,21].

These federal directives play a pivotal role in shaping the ethical standards that govern AI development. Compliance with these directives ensures that AI projects prioritize safety, security, and trustworthiness [12]. Executives must be well-versed in the specific provisions of these orders to integrate them seamlessly into the fabric of their AI initiatives [12,17,20].

Furthermore, the directives accentuate the commitment to inclusive and responsible AI development. Executives must align their strategies with the objectives outlined in Executive Order 14091, ensuring that AI projects actively contribute to advancing racial equity and supporting underserved communities [12,21]. This entails not only a re-evaluation of AI algorithms for potential biases but also fostering diversity and inclusion within the AI teams [2].

2.2.1. Executive Order 14110

A close reading of Executive Order 14110, issued on October 30, 2023, begins with the key provisions that shape the landscape of AI development [11,12]. This executive directive, signed by President Joe Biden, articulates a comprehensive framework for the safe, secure, and trustworthy development and use of artificial intelligence (AI) technologies [12]. The essence of these provisions lies in ensuring that AI advancements align with ethical considerations, prioritize user safety, and foster public trust [12,15].

The primary focus of Executive Order 14110 is on promoting the safety of AI development [12,25]. This provision implies that executives leading AI projects should prioritize the identification and mitigation of potential risks associated with AI technologies. From algorithmic biases to unintended consequences, the directive highlights the need for robust measures to ensure that AI systems are developed with close attention to safety standards [23]. This involves rigorous testing, validation procedures, and continuous monitoring to identify and rectify potential safety issues throughout the development life cycle [24].

Security stands as a cornerstone of the Executive Order's provisions, which emphasize the need for secure AI implementation [12,25]. In an era of prevalent cyber threats, the directive calls for executives to integrate robust cybersecurity measures into AI systems [25]. This involves safeguarding data integrity, ensuring user privacy, and protecting against malicious attacks that could compromise the security of AI applications [25]. Secure AI implementation not only fortifies the technology against external threats but also nurtures user confidence in the reliability of AI systems [12].

The directive extends beyond the development and implementation phases to emphasize the importance of trustworthy AI use [25]. Executives are tasked with ensuring that AI technologies are deployed in a manner that engenders trust among users, stakeholders, and the general public. This provision requires transparency in how AI systems operate, with a commitment to clear communication regarding their intended use, limitations, and potential societal impacts [12]. By prioritizing trustworthy AI use, executives contribute to building a positive narrative around AI technologies and allaying concerns about their ethical implications [12].

2.2.2. Executive Order 14091

2.2.2.1. A Commitment to Racial Equity

In tandem with Executive Order 14110, Executive Order 14091, issued on February 16, 2023, adds another layer of responsibility for executives leading AI projects [12]. This directive, signed by President Joe Biden, underscores a resolute commitment to advancing racial equity and providing support to underserved communities through federal government initiatives [26].

The central thrust of Executive Order 14091 lies in its key objectives for addressing racial disparities and promoting equity in various spheres, including technology and artificial intelligence [12]. Executives leading AI projects must align their strategies with these objectives, recognizing that AI technologies can either perpetuate or mitigate existing racial disparities [1,25].

Key objectives may include initiatives to eliminate biases in AI algorithms that may disproportionately impact certain racial or ethnic groups [12,27]. It could also involve promoting diversity and inclusion within the AI industry to ensure that the development and deployment of AI technologies consider the perspectives and experiences of individuals from diverse backgrounds [22]. The directive may further call for targeted efforts to bridge the digital divide and enhance access to AI-related opportunities for historically marginalized communities [12,25].

2.2.2.2. Focus on Underserved Communities

A crucial aspect of Executive Order 14091 is its explicit focus on underserved communities [12]. Executives leading AI projects are tasked with understanding and addressing the unique challenges faced by these communities in the realm of technology and AI [2]. This involves a comprehensive approach that goes beyond mere representation to actively engaging with these communities, understanding their specific needs, and tailoring AI initiatives to address those needs [12,28].

In the context of AI, focusing on underserved communities may involve initiatives such as providing access to AI education and training programs, creating pathways for employment in the AI industry, and ensuring that AI technologies are designed with the specific needs of these communities in mind [25]. By prioritizing these communities, executives contribute to a more inclusive and equitable AI landscape, where the benefits of technological advancements are distributed more equitably across society [20].

In essence, Executive Order 14091 places a distinct emphasis on the pursuit of racial equity in the development and deployment of AI technologies [25]. Executives leading AI projects are instrumental in translating the key objectives of this directive into tangible actions that actively contribute to dismantling barriers and fostering inclusivity within the AI industry [12]. This commitment not only aligns with societal values but also reflects a broader recognition of the potential of AI to be a force for positive change in addressing systemic inequities [12,17,25].

Another key facet of integrating racial equity into AI projects is the promotion of diversity and inclusion within AI teams [12,25]. Executive Order 14091 recognizes that diverse perspectives are essential in crafting AI technologies that are sensitive to the needs of a broad spectrum of users [25]. AI project leaders are thus tasked with fostering a workplace culture that actively encourages diversity, ensuring that teams reflect a variety of backgrounds, experiences, and perspectives [20].

This involves intentional recruitment efforts to attract talent from underrepresented groups in the AI industry. Furthermore, it requires creating an inclusive environment where diverse voices are not only heard but actively contribute to decision-making processes [12,28]. By promoting diversity and inclusion within AI teams, project leaders not only adhere to the directive's objectives but also enhance the creativity and effectiveness of their teams in addressing the complex challenges associated with AI development [28].

The integration of racial equity in AI projects, as outlined in Executive Order 14091, necessitates a holistic approach [12,17]. AI project leaders must continually assess the impact of their initiatives on underserved communities, actively engaging with these communities to understand their unique needs [22]. Simultaneously, fostering diversity and inclusion within AI teams is paramount to ensuring that the development of AI technologies is representative and considerate of the diverse array of individuals who interact with these technologies [1,20,29]. This commitment not only aligns with the directive's objectives but also contributes to the creation of a more equitable and inclusive AI landscape [30].

2.2.2.3. Intersectionality

While Executive Order 14091 primarily emphasizes "Advancing Racial Equity," its commitment to fostering an inclusive society acknowledges the concept of intersectionality [12,25]. Intersectionality recognizes that individuals may embody multiple dimensions of diversity simultaneously, including but not limited to race, gender, age, and physical conditions [31]. By understanding and addressing the interconnected nature of these various identities, the order aims to create policies that consider the unique experiences and challenges faced by individuals with overlapping characteristics [31,32].

To address intersectionality, the order encourages federal agencies to undertake comprehensive reviews of their programs and policies [25], recognizing the intersecting factors that contribute to disparities and hinder equal opportunities. This approach aims to identify and eliminate systemic barriers that impact individuals with multiple dimensions of diversity, promoting policies that are inclusive and responsive to the complex needs of diverse communities [12,31].

Furthermore, the order emphasizes the significance of data collection and analysis that considers intersectionality [12,20]. This approach allows agencies to tailor their strategies, taking into account the specific challenges faced by individuals with overlapping identities [12,31]. By understanding the broad perspectives and requirements of individuals with intersecting characteristics, federal agencies can formulate policies that acknowledge and address the complexity of diverse experiences [12,32].
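As an illustration of what intersectional disaggregation can look like in practice, the Python sketch below computes an outcome rate for every combination of attribute values rather than for one attribute at a time. The function name `rates_by_subgroup`, the field names, and the toy records are hypothetical; neither the executive order nor the cited sources prescribe this code.

```python
from collections import defaultdict
from typing import Iterable, Mapping


def rates_by_subgroup(records: Iterable[Mapping], attrs: tuple, outcome: str) -> dict:
    """Mean of a binary outcome for every combination of the given attributes."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        # Key on the *combination* of attribute values, e.g. ("B", "F").
        key = tuple(record[a] for a in attrs)
        totals[key] += 1
        positives[key] += int(bool(record[outcome]))
    return {key: positives[key] / totals[key] for key in totals}


# Hypothetical toy data: two demographic attributes and one binary outcome.
records = [
    {"race": "A", "gender": "F", "approved": 1},
    {"race": "A", "gender": "F", "approved": 0},
    {"race": "A", "gender": "M", "approved": 1},
    {"race": "B", "gender": "F", "approved": 0},
    {"race": "B", "gender": "M", "approved": 1},
    {"race": "B", "gender": "M", "approved": 1},
]

by_race = rates_by_subgroup(records, ("race",), "approved")
by_race_gender = rates_by_subgroup(records, ("race", "gender"), "approved")
print(by_race)
print(by_race_gender)
```

In this toy data both racial groups have the same approval rate (2/3), yet the intersectional view shows that the ("B", "F") subgroup was never approved. A real analysis would also enforce a minimum subgroup size, since very small cells produce unstable rates.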

In summary, while the executive order explicitly focuses on advancing racial equity, its principles can be extended to embrace intersectionality [25,31]. By recognizing the interconnected nature of diverse identities, promoting inclusive policies, leveraging data-driven insights, and engaging with diverse communities, the order sets a foundation for fostering an environment where individuals with intersecting characteristics have equal access to opportunities and are treated with fairness and respect [12,25].

2.3. Integration of Robust Safety Measures

At the core of aligning AI projects with federal directives is the integration of robust safety measures [12,17,25]. The mandates of Executive Order 14110, which emphasize safe AI development, necessitate a strategic approach to identify, assess, and mitigate potential risks throughout the AI project lifecycle [20,25]. AI project leaders must implement comprehensive safety protocols that encompass data security, system reliability, and user protection [25].

This involves conducting rigorous risk assessments, implementing fail-safe mechanisms, and establishing protocols for incident response [20,25]. The goal is to create a safety-centric framework that not only meets regulatory requirements but also instills confidence among users, stakeholders, and the wider public [12]. By prioritizing safety measures, AI project leaders contribute to the overarching objective of ensuring the safe and secure development and use of AI technologies as outlined in the federal directives [12,25].
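One common way to make a risk assessment concrete is a risk register scored as likelihood times impact. The sketch below assumes 1 to 5 scales and two illustrative thresholds (`escalate_at=15`, `mitigate_at=8`); these values, the `Risk` and `triage` names, and the example risks are assumptions for illustration, not requirements taken from Executive Order 14110.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (negligible) to 5 (severe)

    def __post_init__(self):
        # Reject scores outside the assumed 1..5 scale.
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be in 1..5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def triage(risks, escalate_at=15, mitigate_at=8):
    """Rank risks by score (highest first) and label each with an action tier."""
    def tier(score):
        if score >= escalate_at:
            return "escalate"
        if score >= mitigate_at:
            return "mitigate"
        return "monitor"

    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, tier(r.score)) for r in ranked]


# Hypothetical register entries.
register = [
    Risk("training-data privacy leak", likelihood=2, impact=5),
    Risk("model outage without fallback", likelihood=3, impact=4),
    Risk("biased outcomes for a subgroup", likelihood=4, impact=4),
    Risk("stale documentation", likelihood=3, impact=2),
]

for name, score, action in triage(register):
    print(f"{score:>2}  {action:<8}  {name}")
```

Ranking by score gives a documented order for mitigation work; the thresholds themselves are a policy choice each organization would need to set and justify.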

2.4. Addressing Racial Bias in AI Algorithms

In tandem with the commitments outlined in Executive Order 14091, AI project leaders must address and mitigate racial bias in AI algorithms [12,20]. This necessitates a proactive approach to identify and rectify biases that may inadvertently perpetuate existing disparities [25,31]. Addressing racial bias involves comprehensive assessments of AI algorithms, scrutinizing training data for potential biases, and implementing measures to ensure fair and equitable outcomes for users from diverse racial backgrounds [12,31].

To achieve this, AI project leaders may employ techniques such as algorithmic auditing, involving thorough examinations of decision-making processes, to uncover and rectify biases [25,32]. Additionally, fostering diversity within AI development teams plays a crucial role in identifying and addressing biases, as diverse perspectives contribute to a more comprehensive understanding of potential biases within algorithms [32].
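One simple statistic used in algorithmic audits is the ratio of each group's selection rate to that of a reference group, sometimes checked against the "four-fifths" heuristic from US employment-selection guidance. The sketch below assumes binary decisions and a chosen reference group; the function names and the 0.8 threshold are illustrative, and neither executive order prescribes this particular metric.

```python
from collections import Counter


def selection_rates(groups, decisions):
    """Share of positive decisions per group (decisions are 0/1 or bool)."""
    totals = Counter(groups)
    selected = Counter(g for g, d in zip(groups, decisions) if d)
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratios(groups, decisions, reference):
    """Each group's selection rate divided by the reference group's rate."""
    rates = selection_rates(groups, decisions)
    ref_rate = rates[reference]
    if ref_rate == 0:
        raise ValueError("reference group has a zero selection rate")
    return {g: rate / ref_rate for g, rate in rates.items()}


def flag_groups(groups, decisions, reference, threshold=0.8):
    """Groups whose impact ratio falls below the (illustrative) threshold."""
    ratios = impact_ratios(groups, decisions, reference)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit sample: 10 applicants per group.
groups = ["A"] * 10 + ["B"] * 10
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

ratios = impact_ratios(groups, decisions, reference="A")
flagged = flag_groups(groups, decisions, reference="A")
print(ratios, flagged)
```

A ratio below the threshold is a prompt for investigation, not proof of bias; a fuller audit would also compare error rates across groups and examine the training data itself.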

By actively addressing racial bias in AI algorithms, project leaders not only align their initiatives with the federal directives but also contribute to the broader goal of advancing racial equity in the deployment of AI technologies [12]. This dual commitment ensures that AI projects not only meet regulatory standards but also actively contribute to a more inclusive and equitable technological landscape [25].

2.5. Developing a Comprehensive Strategy: Aligning AI Projects with Federal Directives

In the multifaceted process of aligning AI projects with federal directives, effective stakeholder engagement and communication emerge as critical components. Directives such as Executive Order 14110 set forth stringent compliance requirements [12,25], which makes sustained engagement with the relevant authorities essential.

Establishing an ongoing dialogue with relevant authorities ensures that AI projects align with evolving regulatory standards [12,20]. Regular consultations with regulatory bodies provide a platform for clarifying ambiguities, seeking guidance, and staying abreast of emerging compliance expectations [25].

This collaborative approach extends beyond mere compliance [20]. AI project leaders should actively contribute to the dialogue around regulatory frameworks, sharing insights from the field and providing feedback on the practical implications of regulatory measures. By fostering a collaborative relationship with regulatory bodies, project leaders contribute to the development of regulations that are both effective and feasible within the dynamic realm of AI technology [12,17,25].

Transparent communication is paramount to building trust and fostering public confidence in AI projects. AI project leaders are tasked with clearly communicating the impact, objectives, and ethical considerations of their initiatives to the public [9]. This involves adopting a proactive communication strategy that transcends legal requirements and emphasizes openness and clarity [29,32].

Communicating the impact of AI projects to the public requires a comprehensive understanding of the societal implications of the technology [29]. Project leaders must distill complex technical concepts into accessible information, enabling the public to comprehend the benefits, risks, and ethical considerations associated with AI projects [32]. This transparency not only satisfies regulatory expectations but also positions AI projects as accountable and responsible contributors to societal well-being [12,17].

2.6. Incorporating Ethical Considerations into AI Technologies

Ensuring the ethical development and deployment of AI technologies is a paramount responsibility for AI project leaders [11].

As stewards of AI initiatives, project leaders must establish and adhere to robust ethical AI frameworks. This involves the development and implementation of clear ethical guidelines that govern the entire lifecycle of AI projects [33]. These guidelines should address a spectrum of ethical considerations, encompassing fairness, transparency, accountability, and privacy [34].

To operationalize ethical guidelines, AI project leaders may collaborate with ethicists, legal experts, and stakeholders to identify potential ethical challenges and establish best practices [35]. By embedding ethical considerations into the fabric of AI development processes, project leaders ensure that their initiatives align with societal values and adhere to the principles outlined in Federal Directives [20,25].

Ethical considerations in AI are dynamic and require ongoing vigilance. AI project leaders must institute mechanisms for continuous monitoring and evaluation of ethical implications throughout the project lifecycle [33]. This involves regular audits, assessments, and evaluations to identify and rectify any ethical issues that may arise [20,33].

Continuous monitoring extends beyond the technical aspects of AI projects to include the impact on diverse user groups [12,25]. AI project leaders should actively seek feedback from users, particularly those from underserved communities, to ensure that the technology is not inadvertently causing harm or perpetuating biases [25]. By adopting a proactive stance towards continuous monitoring, project leaders demonstrate a commitment to ethical AI development that evolves with technology and societal needs [12].
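Continuous monitoring of impact on diverse user groups can start with something as simple as comparing each group's recent error (or complaint) rate against a baseline. The sketch below is a minimal version under stated assumptions: rates are already computed per group, and a fixed tolerance of 0.05 triggers an alert. The `monitor` function, the group labels, and the tolerance are hypothetical.

```python
def monitor(baseline, current, tolerance=0.05):
    """Compare per-group error rates in a recent window against a baseline.

    Returns {group: reason} for groups that worsened by more than
    `tolerance`, that have no recent data, or that have no baseline.
    """
    alerts = {}
    for group, base_rate in baseline.items():
        if group not in current:
            # Silence from a group is itself worth reviewing.
            alerts[group] = "no recent data"
        elif current[group] - base_rate > tolerance:
            alerts[group] = f"error rate rose from {base_rate:.2f} to {current[group]:.2f}"
    for group in current.keys() - baseline.keys():
        alerts[group] = "no baseline"
    return alerts


# Hypothetical per-group error rates.
baseline = {"A": 0.10, "B": 0.12, "C": 0.11}
current = {"A": 0.11, "B": 0.20}

alerts = monitor(baseline, current)
for group, reason in sorted(alerts.items()):
    print(group, reason)
```

Flagging missing and new groups, not just degraded ones, keeps the check from silently ignoring users who stop appearing in the data.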

2.7. Ensuring Accountability in AI Decision-Making

A cornerstone of accountability in AI decision-making is the establishment of transparent processes. AI project leaders must ensure that decision-making workflows, algorithms, and models are comprehensible and accessible to stakeholders [34]. Transparency builds trust among users, regulators, and the wider public, fostering confidence in the ethical governance of AI technologies [15,30].

Establishing transparent processes involves documenting and communicating how decisions are made at every stage of the AI project lifecycle [37]. This includes disclosing the criteria used for data selection, model training, and decision outputs [30]. By demystifying the decision-making process, AI project leaders empower stakeholders to scrutinize and understand the technology, contributing to an environment of accountability and ethical responsibility [37].
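Documentation of this kind is easier to keep consistent when each decision is captured as a structured record. The Python sketch below assumes three stages taken from the text (data selection, model training, decision outputs) and hypothetical field names; it shows one possible shape for a decision log, not a prescribed format.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

# Stages named in the text; a real project might track more.
STAGES = {"data_selection", "model_training", "decision_output"}


@dataclass
class DecisionRecord:
    stage: str
    decision: str
    rationale: str
    owner: str
    # UTC timestamp recorded when the entry is created.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class DecisionLog:
    """Append-only, in-memory log of documented project decisions."""

    def __init__(self):
        self._records = []

    def record(self, rec):
        if rec.stage not in STAGES:
            raise ValueError(f"unknown stage: {rec.stage!r}")
        if not rec.rationale.strip():
            raise ValueError("every decision needs a stated rationale")
        self._records.append(rec)

    def by_stage(self, stage):
        return [r for r in self._records if r.stage == stage]

    def to_json_lines(self):
        """One JSON object per line, convenient for sharing with reviewers."""
        return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in self._records)


log = DecisionLog()
log.record(DecisionRecord(
    stage="data_selection",
    decision="Exclude records with missing consent flags",
    rationale="Consent cannot be verified for these records",
    owner="data-governance",
))
log.record(DecisionRecord(
    stage="model_training",
    decision="Use an interpretable baseline model first",
    rationale="Reviewers need to trace which inputs drive outcomes",
    owner="ml-team",
))
print(log.to_json_lines())
```

Requiring a non-empty rationale at write time is the design choice that matters here: it makes the "why" of each decision part of the record rather than something reconstructed later.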

In the dynamic landscape of AI, where uncertainties and unforeseen challenges are inevitable, learning from mistakes becomes a pivotal aspect of ensuring accountability [12]. AI project leaders must cultivate a culture that acknowledges and learns from errors, promoting a proactive approach to rectifying and adapting strategies [37].

When mistakes occur, AI project leaders need to conduct thorough post-mortem analyses, identify the root causes of issues, and implement corrective measures [38]. Transparency in acknowledging and addressing mistakes is key to maintaining accountability. Additionally, the insights gained from mistakes should inform the adaptation of strategies and the enhancement of ethical frameworks to prevent similar issues in the future [12,31].

Fostering a culture of continuous improvement ensures that AI project teams are resilient in the face of evolving challenges [12]. By learning from mistakes, adapting strategies, and openly communicating these processes, AI project leaders not only reinforce accountability but also contribute to the ethical evolution of their projects [25].

2.8. A Suggested Guide for Documenting Successful Case Studies

- Introduction: Provide context for the AI project, including its goals, objectives, and the Federal Directives it aims to align with, and briefly introduce the organization leading the project and the broader regulatory landscape.

- Project Overview: Detail the specific AI project, outlining its scope, purpose, and key features. Highlight the connection between the project's goals and the relevant Federal Directives, such as Executive Order 14110 or Executive Order 14091.

- Compliance Measures: Clearly articulate the steps taken to ensure compliance with federal directives. Specify how the project addresses the key provisions outlined in the relevant Executive Orders, and provide insights into the development of safety measures, security protocols, and ethical frameworks.

- Technological Implementation: Describe the technological aspects of the AI project, including the algorithms, models, and technologies employed. Emphasize how the technology contributes to the goals of the Federal Directives, such as ensuring safe, secure, and trustworthy AI development or promoting racial equity.

- Positive Impact on Communities: Showcase the tangible positive impact of the AI project on communities and organizations. Provide examples or data illustrating the benefits the project has brought about and how they align with the broader societal goals outlined in the Federal Directives.

- Challenges Faced: Acknowledge any challenges or hurdles encountered during the implementation of the AI project.

- Continuous Improvement: Highlight any measures taken to continuously improve the AI project, and discuss how the project remains adaptable to changing regulations, technological advancements, and ethical standards.

- Results and Metrics: Present measurable outcomes and metrics associated with the project, including data related to compliance, community impact, and any relevant key performance indicators.

- Lessons Learned: Reflect on the lessons learned throughout the project, and discuss, from the perspective of the executive overseeing the project, how these lessons inform future AI initiatives and contribute to the organization's overall approach to AI governance.

- Conclusion: Summarize the key points of the case study, emphasizing alignment with the Federal Directives, the positive impact on communities, and the commitment to continuous improvement, and close with a clear statement of the project's significance within the regulatory framework.

- Recommendations and Best Practices: Offer recommendations and best practices based on the lessons learned from the case study, providing insights for other organizations embarking on similar AI projects under federal directives.

- Visuals and Testimonials: Include visuals such as charts, graphs, and images to enhance understanding. If possible, incorporate testimonials or quotes from stakeholders involved in the project.

By following this guide, case studies can effectively communicate the success of an AI project in alignment with federal directives.
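For teams drafting several case studies, the guide above can also be applied as a simple completeness check. The sketch assumes a draft stored as a mapping from section title to text; `REQUIRED_SECTIONS` mirrors the headings listed in the guide, while `missing_sections` and the sample draft are hypothetical.

```python
# Section titles taken from the guide above, in the same order.
REQUIRED_SECTIONS = (
    "Introduction",
    "Project Overview",
    "Compliance Measures",
    "Technological Implementation",
    "Positive Impact on Communities",
    "Challenges Faced",
    "Continuous Improvement",
    "Results and Metrics",
    "Lessons Learned",
    "Conclusion",
    "Recommendations and Best Practices",
    "Visuals and Testimonials",
)


def missing_sections(draft):
    """Return guide sections that are absent or blank in a draft, in guide order."""
    return [s for s in REQUIRED_SECTIONS if not draft.get(s, "").strip()]


# Hypothetical partial draft.
draft = {
    "Introduction": "Context, goals, and the directives the project aligns with.",
    "Project Overview": "   ",  # present but blank, so still reported as missing
    "Compliance Measures": "Steps taken to address Executive Order 14110.",
}
print(missing_sections(draft))
```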

Figure 1.

Steps for Documenting Successful Case Studies.


2.9. Overcoming Challenges and Mitigating Risks

Navigating the legal and regulatory landscape poses a significant challenge for AI project leaders [12,25]. Compliance with federal directives, such as Executive Order 14110 and Executive Order 14091, necessitates a thorough understanding of evolving standards and mandates [12]. Legal challenges may arise from ambiguities in the directives, conflicts with existing regulations, or uncertainties surrounding emerging issues in AI governance [25].

AI project leaders must actively engage with legal experts, regulatory bodies, and industry associations to stay informed about the evolving legal landscape [9,25]. Establishing a legal framework within the project that aligns with federal directives is crucial [12]. This involves conducting regular legal assessments, ensuring that the project remains compliant with the latest regulations, and adapting strategies to address any legal hurdles that may emerge during the project [9].

The dynamic nature of AI technologies introduces technological and ethical dilemmas that AI project leaders must proactively navigate [35]. Technological challenges may include issues related to the robustness of AI algorithms, bias in machine learning models, or the ethical implications of deploying AI in specific contexts [37].

To overcome technological challenges, AI project leaders must invest in ongoing research and development, stay abreast of technological advancements, and collaborate with experts in AI ethics and algorithmic fairness [9,37]. Integrating continuous monitoring and auditing mechanisms into the project can help identify and rectify technological dilemmas as they arise [35].

Ethical dilemmas, on the other hand, may stem from conflicting values, biases in decision-making processes, or unintended societal consequences of AI applications [36]. AI project leaders should foster a culture of ethical awareness within their teams, encouraging open dialogue and ethical considerations at every stage of the project [8]. Establishing ethical AI frameworks and conducting regular ethical reviews contribute to mitigating ethical challenges and ensuring responsible AI development [22].

2.10. Future Outlook and Continuous Improvement

Anticipating future federal directives in the dynamic realm of AI regulation is essential for proactive compliance [12,25]. Several strategies support this. Regular environmental scanning is crucial: a systematic process of staying informed about emerging trends, discussions, and legislative initiatives related to AI globally [17,25]. Active participation in industry forums, conferences, and discussions dedicated to AI regulation is also vital, since engagement with policymakers and regulatory bodies provides insight into potential directions for future directives [17,18].

Fostering ongoing collaboration with regulatory bodies is equally important. This means establishing a direct line of communication, sharing insights from AI projects, participating in regulatory consultations, and contributing feedback for the development of future directives [19].

Scenario planning exercises are also essential for envisaging potential regulatory landscapes. They involve considering various legislative possibilities and formulating adaptive strategies that allow the AI project to navigate different regulatory frameworks smoothly [12,32].

In summary, anticipating future Federal Directives requires a proactive and multifaceted approach, including continuous environmental scanning, active participation in industry dialogues, collaboration with regulatory bodies, and scenario planning exercises, collectively ensuring that AI initiatives stay ahead of regulatory developments and maintain proactive compliance [25].

2.10.1. Adapting to Changing Ethical Standards

Regular ethical reviews should be seamlessly integrated into the AI project lifecycle [29]. This process entails a comprehensive assessment of the project's alignment with ethical principles, the identification of potential ethical challenges, and the adaptation of strategies to address emerging ethical standards.

Fostering diversity within AI project teams is essential for promoting a variety of perspectives in ethical decision-making [12]. Diverse teams are better equipped to navigate complex ethical dilemmas and contribute to the development of technologies that consider various societal values [25].

Developing dynamic ethical frameworks within the AI project is crucial for adapting to changing ethical standards [34]. This involves establishing a culture of ethical awareness and continuous improvement where ethical considerations are seamlessly embedded into the fabric of decision-making processes [5,34]. In doing so, the project ensures a proactive approach to evolving ethical standards in the dynamic field of AI development.

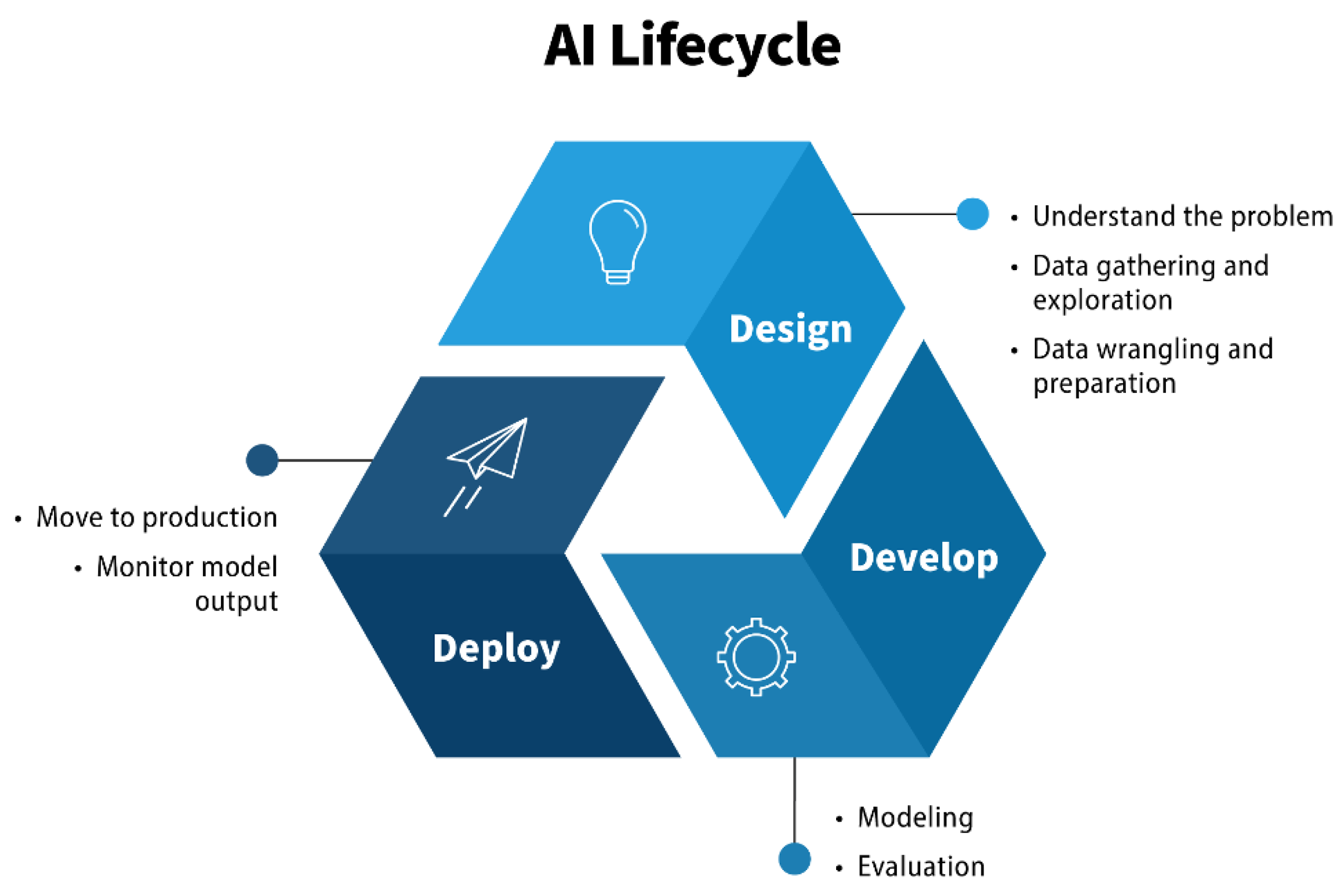

Figure 2.

AI Lifecycle [39].


2.11. Proactive Measures for Enhanced AI Governance

AI project leaders are advised to proactively implement measures for enhanced AI governance, integrating mechanisms that contribute to the responsible and ethical development of AI technologies [12,25]. Several strategies support these proactive measures.

Firstly, establishing an AI governance framework is paramount. This involves developing and refining a comprehensive framework that outlines ethical considerations, regulatory compliance measures, and strategies for continuous improvement. The framework should be dynamic, capable of adapting to evolving standards, and provide a solid foundation for ethical AI development [21,25].

Secondly, investing in ongoing training and education for AI project teams is essential. This includes keeping team members updated on the latest ethical guidelines, regulatory requirements, and technological advancements through various means such as workshops, seminars, and online courses. This ensures that the project teams are well-equipped to navigate the evolving landscape of AI governance [18,25].

Thirdly, incorporating feedback loops within the project structure is a valuable strategy. These loops enable the gathering of insights from users, stakeholders, and team members [2,3]. Actively seeking feedback on ethical considerations, user experiences, and potential areas for improvement informs continuous enhancement, fostering a culture of responsiveness and adaptability [3].

In addition, engaging in scenario-planning exercises to anticipate future challenges is crucial. This proactive approach ensures that the project is well-prepared to navigate potential obstacles and adapt to changing circumstances [4]. By foreseeing challenges and developing strategies for addressing them, AI project leaders enhance the project's resilience and ability to navigate the complexities of AI governance [2].

Lastly, by promoting a culture of continuous improvement, AI project leaders instill a commitment to excellence, innovation, and ethical responsibility within their teams [22]. This commitment not only contributes to the ongoing success of AI projects but also aligns with the broader objectives of responsible and ethical AI development in the ever-evolving technological landscape [21].

Figure 3.

Proactive Measures for Enhanced AI Governance.
