Submitted: 06 February 2024

Posted: 07 February 2024


Abstract

Keywords:

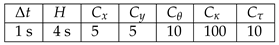

1. INTRODUCTION

- A hybrid software architecture for autonomous vehicles, combining modular perception and control modules with data-driven path planning;

- A comprehensive comparison between modular and hybrid software architectures through the simulation of urban scenarios;

- Evaluation of autonomous driving performance in diverse and hazardous traffic events within urban environments.

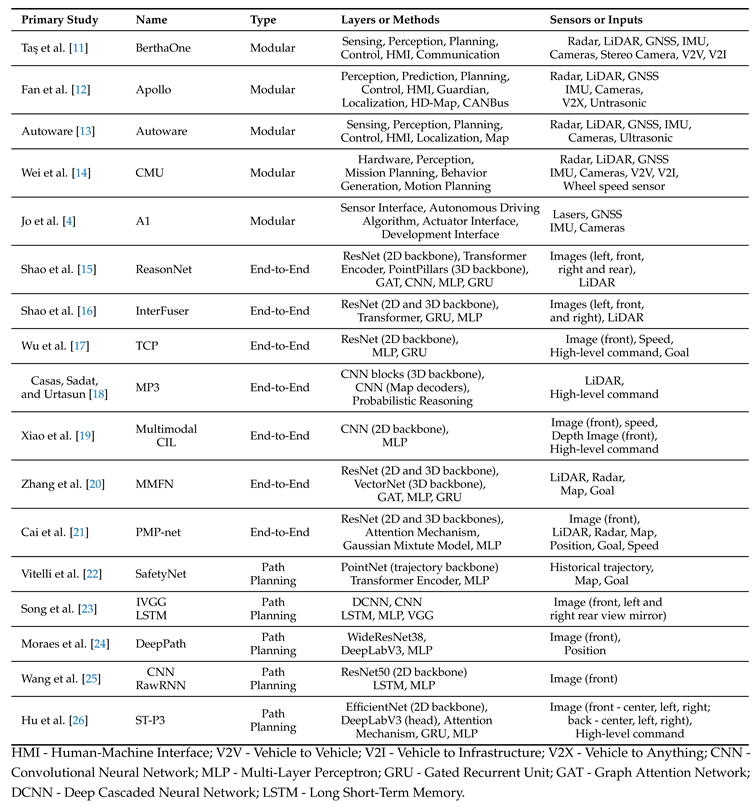

2. RELATED WORKS


2.1. Modular Navigation Architecture

2.2. End-to-End Autonomous Driving

2.3. Data-driven Path Planning

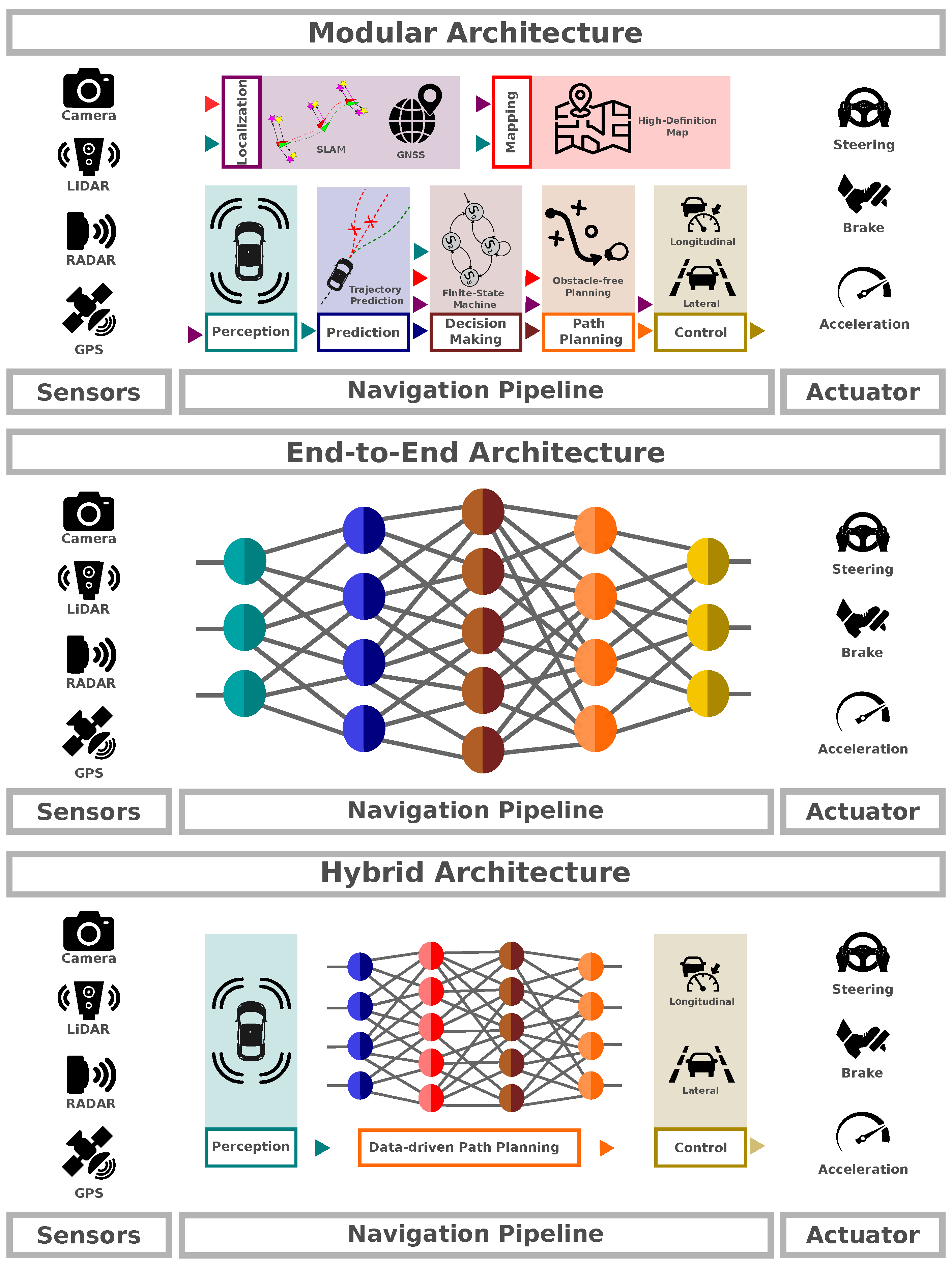

3. PROPOSED MODULAR PIPELINE

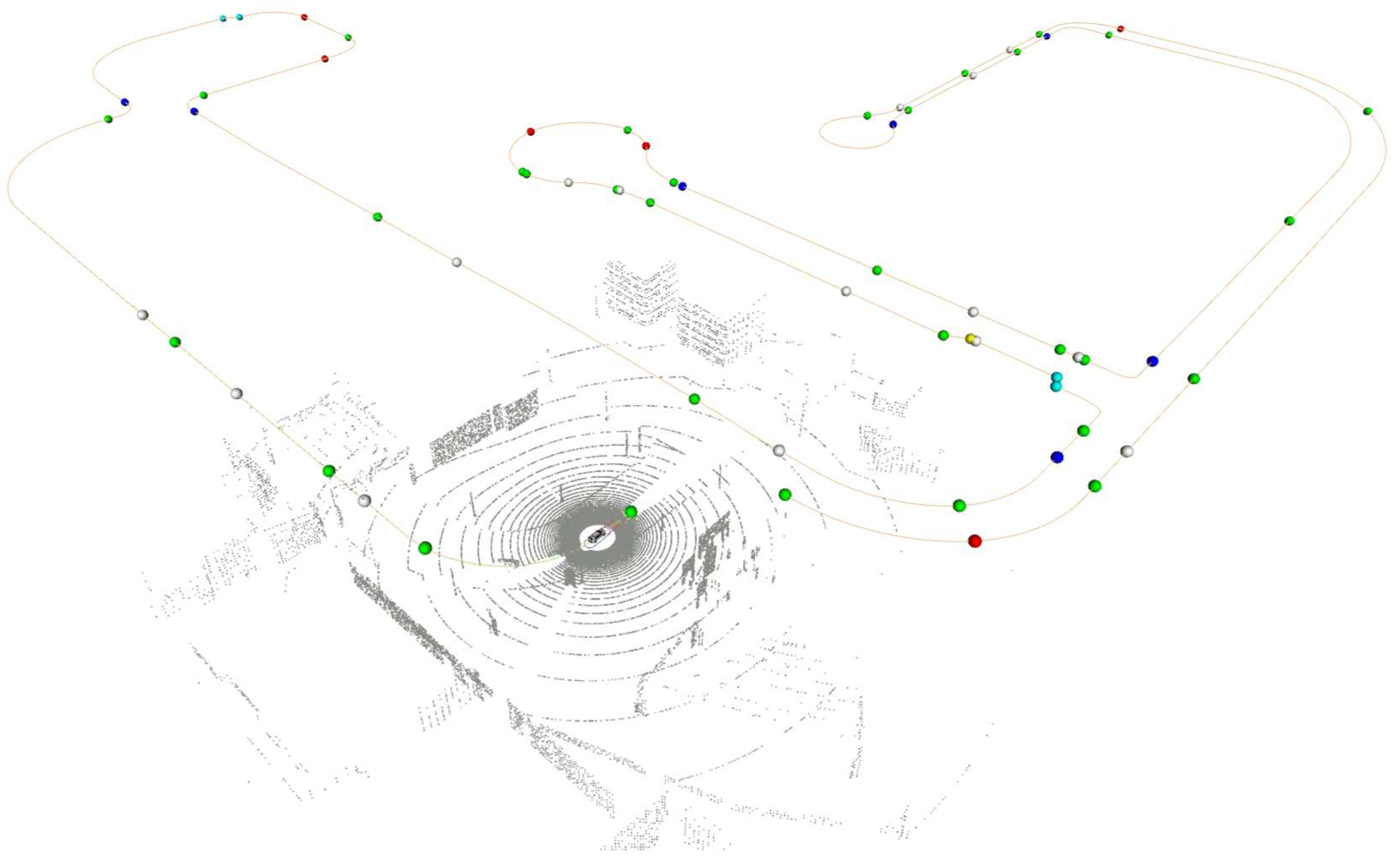

3.1. Mapping and Path Planning

3.1.1. OpenDRIVE

3.1.2. Path Planning

3.2. Perception

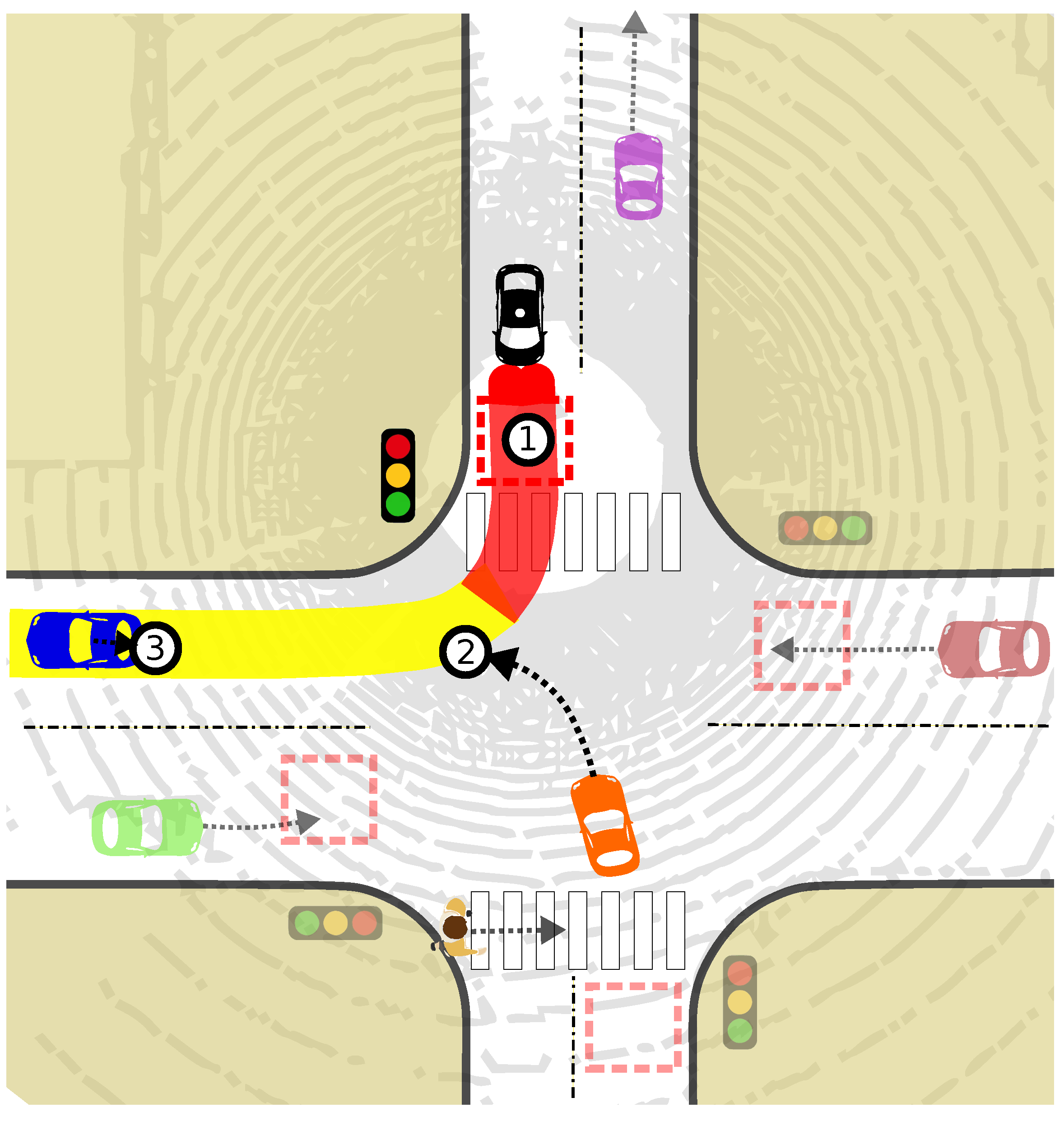

3.3. Risk assessment

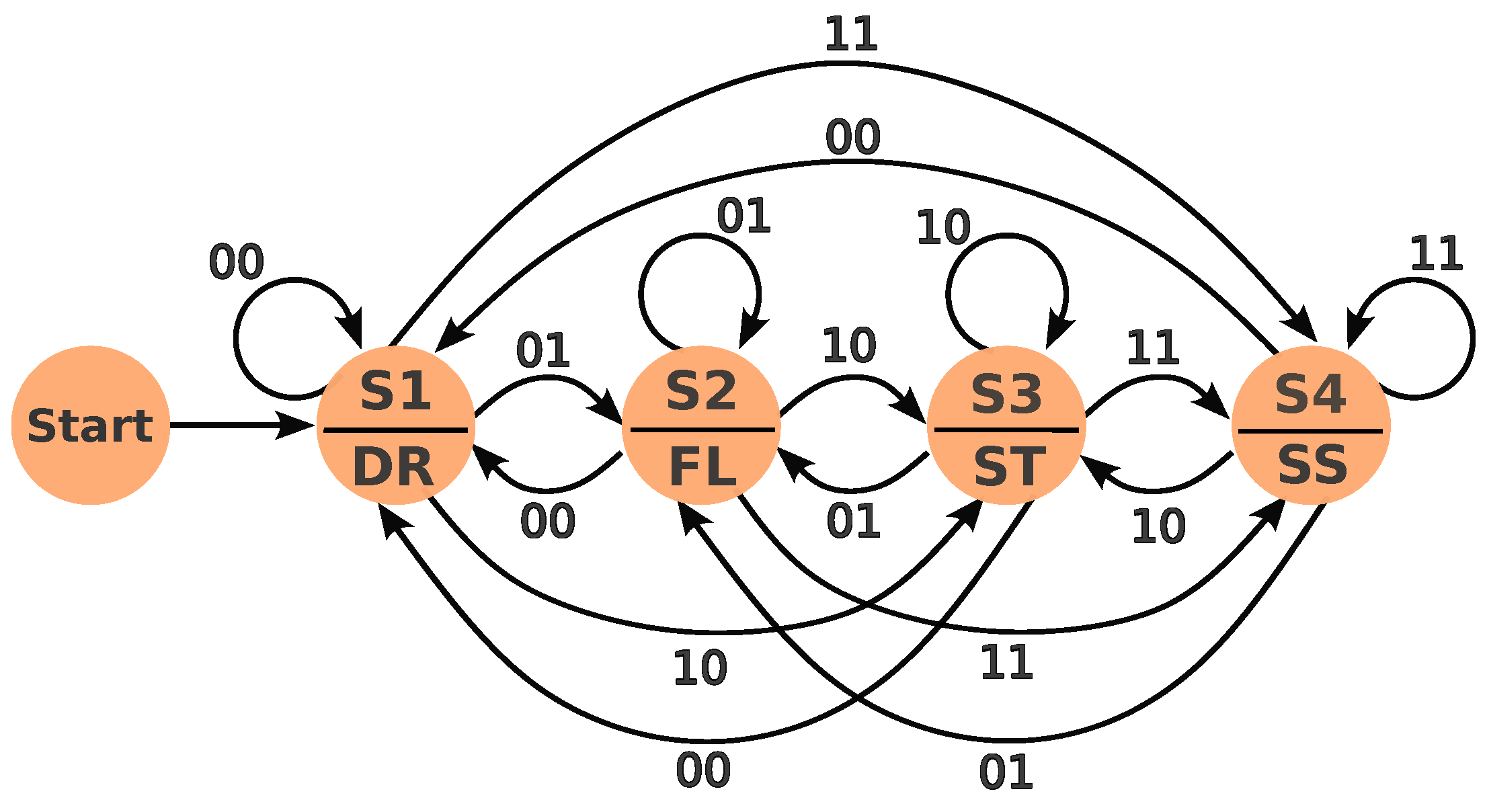

3.4. Decision making

3.5. Control

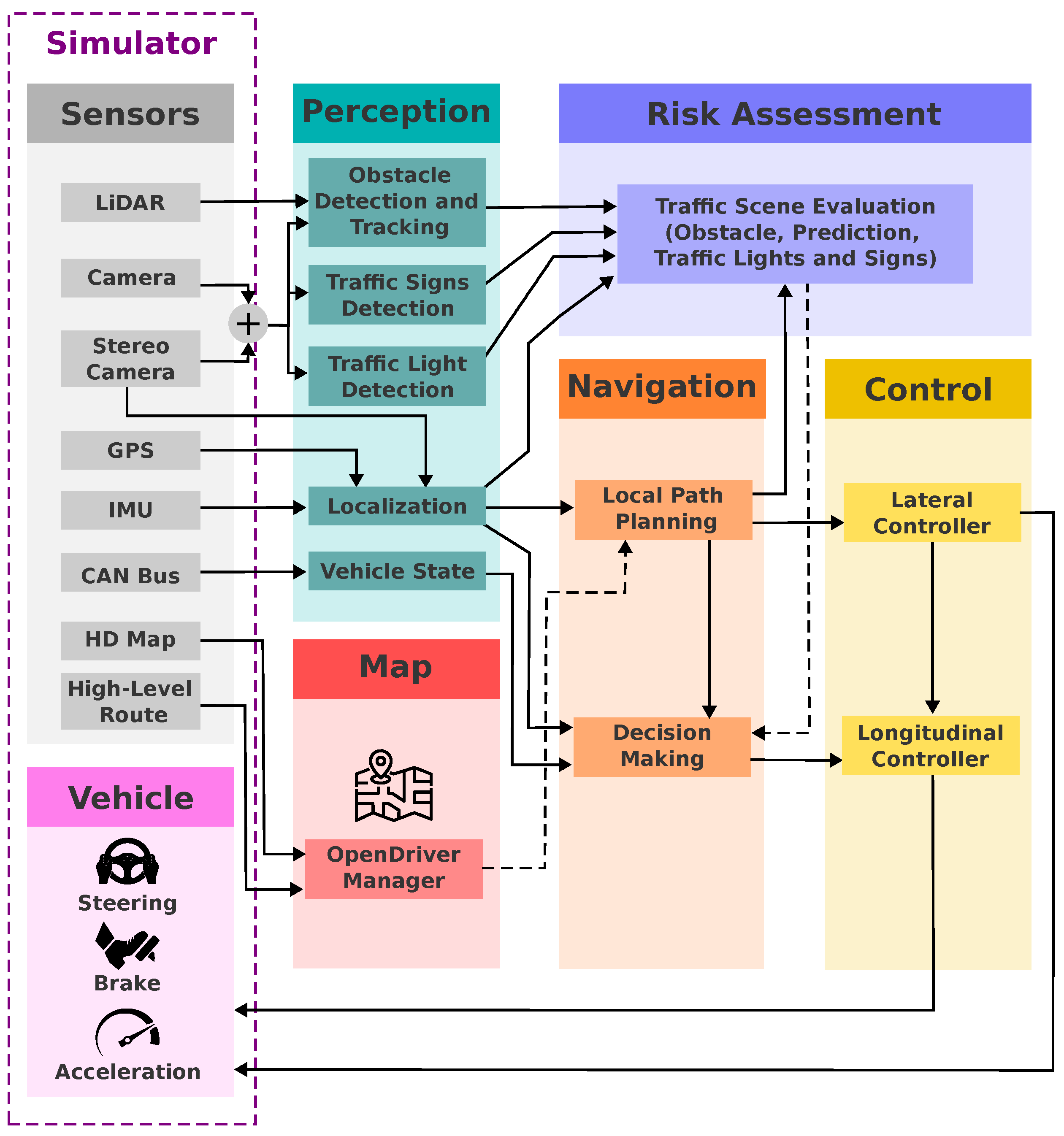

3.5.1. Lateral Control (MPC)


3.6. Localization

4. Hybrid Architecture for Mapless Autonomous Driving

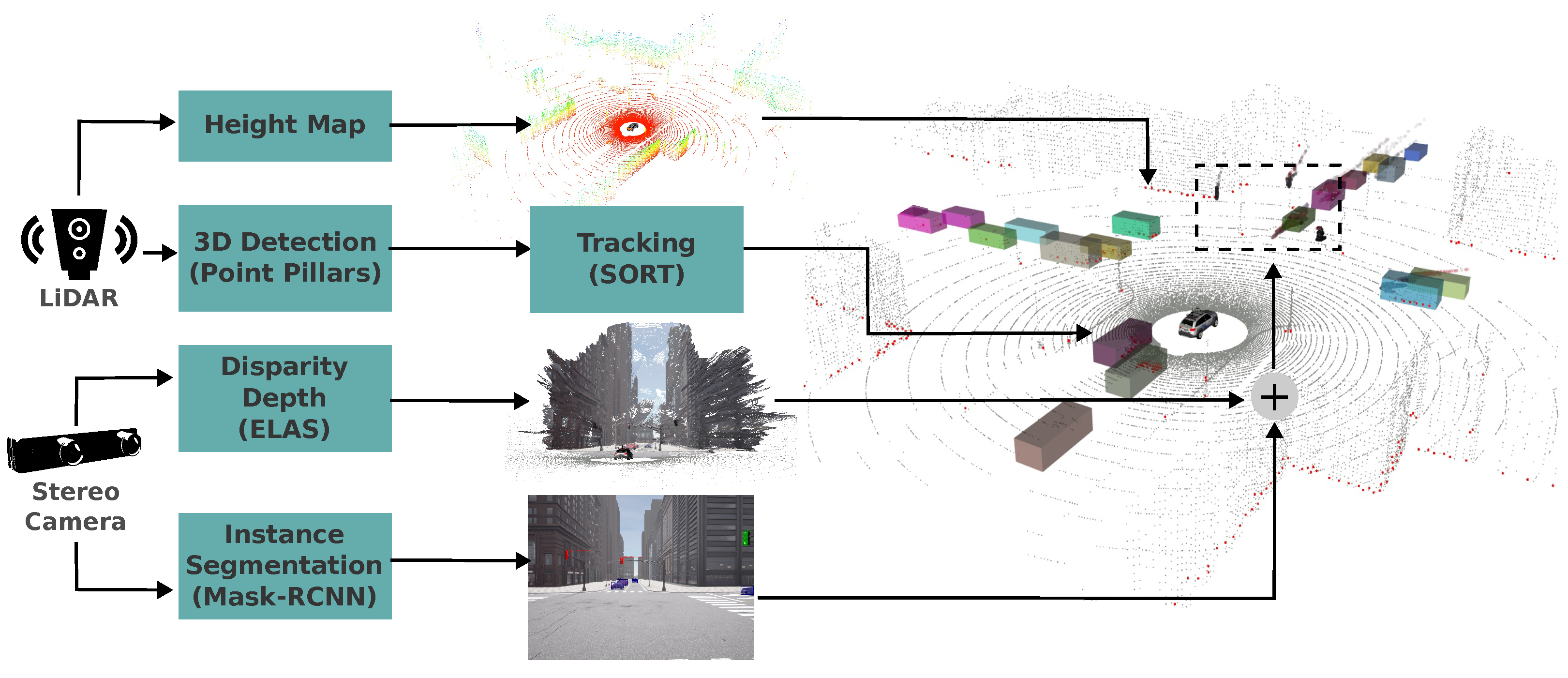

- Stereo Camera: We use a pair of cameras with a specific field of view, resolution, and baseline. Disparity maps are computed with the ELAS algorithm and projected into a point cloud, which is then transformed from the camera coordinate system to the BEV coordinate system using the corresponding transformation matrix.

- LiDAR: The LiDAR point cloud is transformed directly to the BEV coordinate system using its transformation matrix. The LiDAR points are then rasterized into an RGB image in which a colormap encodes height (blue for the ground, yellow for points above the sensor). Empty pixels are filled with black.

- High-Level Commands: Global plan commands are converted from the world frame to the BEV frame using the corresponding world-to-BEV transformation and rasterized as colored dots in BEV space (blue for turn right, red for turn left, white for straight, and green for lane follow). Additionally, we rasterize a straight line connecting each pair of adjacent high-level commands. These connections encode the order of the points within the raster, which makes the sequence easier to interpret and gives the CNN additional information.
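The rasterization steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the 4 × 4 homogeneous transform `T`, the 0.1 m/pixel resolution, the ego-centred image layout, the ground and sensor heights used to scale the colormap, the gray line colour and the square dot markers are all hypothetical. Only the 700 × 700 image size (Section 5.3) and the colour codes come from the text.

```python
import numpy as np

GRID = 700                       # BEV image size in pixels (700 x 700, as in Section 5.3)
RES = 0.1                        # hypothetical resolution: metres per pixel
Z_GROUND, Z_SENSOR = -1.8, 0.0   # hypothetical heights (BEV origin at the sensor)

BLUE = np.array([0.0, 0.0, 255.0])
YELLOW = np.array([255.0, 255.0, 0.0])
COMMAND_COLORS = {               # RGB colour code for high-level commands
    "right": (0, 0, 255), "left": (255, 0, 0),
    "straight": (255, 255, 255), "lane_follow": (0, 255, 0),
}
LINE_COLOR = (128, 128, 128)     # hypothetical: the line colour is not specified


def to_bev(points, T):
    """Apply a 4x4 homogeneous transform T to an (N, 3) array of points."""
    homo = np.hstack([points, np.ones((len(points), 1))])
    return (homo @ T.T)[:, :3]


def to_pixels(xy):
    """Map metric BEV coordinates (x forward, y left) to (row, col), ego at the centre."""
    rows = np.floor(GRID / 2 - xy[:, 0] / RES).astype(int)
    cols = np.floor(GRID / 2 - xy[:, 1] / RES).astype(int)
    return rows, cols


def rasterize_points(points_bev):
    """Height-coded RGB raster: blue at ground level, yellow at or above the sensor."""
    rows, cols = to_pixels(points_bev[:, :2])
    inside = (rows >= 0) & (rows < GRID) & (cols >= 0) & (cols < GRID)
    # Keep the highest point per pixel so the result does not depend on point order.
    top = np.full((GRID, GRID), -np.inf)
    np.maximum.at(top, (rows[inside], cols[inside]), points_bev[inside, 2])
    occupied = np.isfinite(top)
    t = np.clip((top[occupied] - Z_GROUND) / (Z_SENSOR - Z_GROUND), 0.0, 1.0)
    img = np.zeros((GRID, GRID, 3), dtype=np.uint8)   # empty pixels stay black
    img[occupied] = ((1.0 - t)[:, None] * BLUE + t[:, None] * YELLOW).astype(np.uint8)
    return img


def _paint(img, xy, color, radius=0):
    """Paint a (2*radius+1)-pixel square at each metric BEV position in xy."""
    rows, cols = to_pixels(xy)
    for r, c in zip(rows, cols):
        r0, r1 = max(r - radius, 0), min(r + radius + 1, GRID)
        c0, c1 = max(c - radius, 0), min(c + radius + 1, GRID)
        if r0 < r1 and c0 < c1:
            img[r0:r1, c0:c1] = color


def draw_commands(img, commands):
    """commands: ordered list of (x, y, kind) tuples already in the BEV frame."""
    xy = np.array([[x, y] for x, y, _ in commands], dtype=float).reshape(-1, 2)
    # Straight line between each pair of adjacent commands, sampled every RES metres.
    for a, b in zip(xy[:-1], xy[1:]):
        n = int(np.ceil(np.linalg.norm(b - a) / RES)) + 1
        _paint(img, np.linspace(a, b, n), LINE_COLOR)
    # Colour-coded dots drawn last so they stay visible on top of the lines.
    for x, y, kind in commands:
        _paint(img, np.array([[x, y]]), COMMAND_COLORS[kind], radius=2)
    return img
```

Keeping the highest point per pixel (via `np.maximum.at`) is one reasonable way to resolve several points falling into the same cell; other choices (lowest point, mean height) would be equally valid for this illustration.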

5. Experiments and results

5.1. Experimental setup

- Stereo camera: Two monocular cameras, each with a 71° field of view (FOV), are combined into a stereo camera for 3D perception, producing a pair of rectified images with dimensions of pixels. The stereo baseline is 0.24 m. We use the ELAS algorithm [44] to generate 3D point clouds from the stereo images.

- LiDAR sensor: 64 channels, 45° vertical field of view, 180° horizontal field of view, 50 m range. Our system uses a simulated LiDAR that collects around one million points per scan across its 64 vertical layers. The LiDAR and the stereo camera are centered in the x-y plane of the ego car and mounted at a height of 1.8 m.

- GPS and IMU: For localization and ego-motion estimation.

- CANBus: Provides vehicle internal state information such as speed and steering angle.
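For reference, the geometry that turns a rectified disparity map into the stereo point cloud can be sketched with the standard pinhole triangulation relation Z = f·B/d, using the 71° field of view, 0.24 m baseline and 1.8 m mounting height given above. This sketch does not reproduce ELAS itself (ELAS only produces the disparity map). It assumes square pixels, a principal point at the image centre, a horizontal FOV and a hypothetical minimum-disparity threshold; since the image resolution is not stated here, the width is taken from the input array.

```python
import numpy as np

FOV_DEG = 71.0      # field of view of each camera (from the text; assumed horizontal)
BASELINE = 0.24     # stereo baseline in metres (from the text)
MOUNT_HEIGHT = 1.8  # camera height above the ground in metres (from the text)


def focal_length_px(width_px, fov_deg=FOV_DEG):
    """Pinhole focal length in pixels for a given image width and horizontal FOV."""
    return (width_px / 2.0) / np.tan(np.radians(fov_deg) / 2.0)


def disparity_to_points(disp, min_disp=0.5):
    """Back-project a disparity map (H, W) into an (N, 3) point cloud.

    Assumes rectified images, square pixels and the principal point at the image
    centre. Returns x forward, y left, z up, with z measured from the ground.
    min_disp is a hypothetical threshold that drops invalid or tiny disparities.
    """
    h, w = disp.shape
    f = focal_length_px(w)
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    v, u = np.nonzero(disp > min_disp)   # pixel rows (v) and columns (u)
    d = disp[v, u]
    z_cam = f * BASELINE / d             # depth along the optical axis
    x_cam = (u - cx) * z_cam / f         # camera x: right
    y_cam = (v - cy) * z_cam / f         # camera y: down
    # Camera frame (x right, y down, z forward) -> vehicle-like frame (x forward, y left, z up).
    return np.stack([z_cam, -x_cam, MOUNT_HEIGHT - y_cam], axis=1)
```

With a hypothetical 800-pixel-wide image, f ≈ 561 px, so a 10-pixel disparity corresponds to a depth of roughly 13.5 m. Because depth is inversely proportional to disparity, depth resolution degrades quickly with distance for a 0.24 m baseline.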

5.2. Metrics

- Control loss without prior action.

- Obstacle avoidance for unexpected obstacles.

- Negotiation at roundabouts and unmarked intersections.

- Following the lead vehicle’s sudden braking.

- Crossing intersections with a traffic-light-disobeying vehicle.

- Leaderboard 2 expands these scenarios, adding:

  - Lane changes to avoid obstacles blocking lanes.

  - Yielding to emergency vehicles.

  - Door obstacles (e.g., an opened car door).

  - Avoiding vehicles invading the lane on bends.

  - Handling parking cut-ins and parking exits.

To evaluate agent performance in each simulated scenario, the CARLA Leaderboards employ a set of quantitative metrics that capture not only route completion but also adherence to traffic rules and safe driving practices. These metrics assess the performance of the entire system rather than mere point-to-destination navigation: they account for traffic rules, passenger and pedestrian safety, and the ability to handle both common and unexpected situations (e.g., occluded obstacles and loss of vehicle control). The infractions considered, with their penalty coefficients, are:

- Collisions with pedestrians (CP): 0.50.

- Collisions with other vehicles (CV): 0.60.

- Collisions with the layout (CL): 0.65.

- Running a red light (RLI): 0.70.

- Stop sign infraction (SSI): 0.80.

- Off-road infraction (ORI): the percentage of the route driven off-road is not counted toward route completion.

- Scenario timeout (ST): 0.70.

- Failure to maintain minimum speed (MinSI): 0.70.

- Failure to yield to emergency vehicle (YEI): 0.70.

Under certain circumstances, the simulation is terminated automatically, preventing the agent from making further progress on the current route. These events include:

- Route deviations (RD)

- Route timeouts (RT)

- Agent blocked (AB)

After all routes are completed, the global metrics are calculated as the average of the individual route metrics. The global driving score is the primary metric for ranking agents against competitors. Comprehensive evaluation frameworks such as the CARLA Leaderboards give researchers and developers insight into the strengths and weaknesses of their autonomous driving systems as a whole, not just as a collection of individual components, which supports the development of safer and more robust vehicles. Further details on the evaluation and metrics are available on the leaderboard website¹⁰.
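The scoring described above can be summarized as follows: for each route i, the driving score is DS_i = RC_i × IP_i, where the infraction penalty IP_i is the product of the coefficients of all infractions committed on that route (IP_i = ∏_j p_j^(n_ij), with n_ij the number of infractions of type j), and the global scores are averages over routes. The Python sketch below uses the coefficients listed above; the route data are made-up values for illustration only, and off-road driving is assumed to be already excluded from the route-completion input.

```python
from math import prod
from statistics import mean

# Penalty coefficients listed above; each infraction multiplies the penalty once.
PENALTIES = {
    "CP": 0.50, "CV": 0.60, "CL": 0.65, "RLI": 0.70, "SSI": 0.80,
    "ST": 0.70, "MinSI": 0.70, "YEI": 0.70,
}


def infraction_penalty(counts):
    """IP = prod_j p_j ** n_j for a dict {infraction code: count}."""
    return float(prod(PENALTIES[code] ** n for code, n in counts.items()))


def route_scores(route_completion, counts):
    """Return (DS, RC, IP) for one route; route_completion is a percentage
    that is assumed to already exclude any off-road portion."""
    ip = infraction_penalty(counts)
    return route_completion * ip, route_completion, ip


def global_scores(routes):
    """Average each per-route metric over all routes."""
    per_route = [route_scores(rc, counts) for rc, counts in routes]
    return tuple(mean(values) for values in zip(*per_route))


# Made-up example: three routes with different outcomes.
routes = [
    (100.0, {}),             # clean run: DS = 100
    (50.0, {"CV": 1}),       # half the route, one vehicle collision: DS = 30
    (100.0, {"CP": 1}),      # full route, one pedestrian collision: DS = 50
]
ds, rc, ip = global_scores(routes)
```

In this example the averaged DS is 60, whereas the product of the averaged RC (about 83.3) and IP (0.7) is about 58.3. Because the global DS is a mean of per-route products, it generally differs from the product of the global RC and IP, which is consistent with the leaderboard tables (e.g., the CaRINA agent's DS of 41.56 is not equal to 86.03 × 0.52).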

5.3. Datasets

- Instance Segmentation Dataset: We constructed a dataset of 20,000 RGB images with variable resolutions ranging from to pixels. These images cover seven object classes: car, bicycle, pedestrian, red traffic light, yellow traffic light, green traffic light, and stop sign. For labeling, we used a semi-automatic approach for cars, bicycles, pedestrians, and stop signs, leveraging the instance information provided by the CARLA simulator sensors. Traffic lights and stencil stop signs, however, required manual annotation for greater accuracy. All annotations were stored in the COCO format. Finally, we trained a Mask R-CNN model implemented in mmdetection¹¹ for object detection and segmentation. Figure 9 shows examples of detections obtained with our trained model. Our Instance Segmentation Dataset is available online¹².

- 3D Object Detection Dataset: This dataset comprises 5,000 point clouds annotated with the pose (relative to the ego car), height, length, width, and orientation of all cars, bicycles, and pedestrians. We used the privileged sensor objects within the simulator to perform this annotation automatically. The data were saved in the KITTI format for compatibility with popular object detection algorithms. Using this dataset, we trained a PointPillars model adapted to our needs, implemented in the mmdetection3d framework¹³.

- Path Planner Training Dataset: To train the path planner, we used a privileged agent and the sensors described above to collect approximately 300,000 frames. This agent had access to ground-truth path information and received error-free GPS and IMU data, enabling precise navigation. The point clouds from the LiDAR and stereo cameras were projected and rasterized into 700 × 700 RGB images in the bird’s-eye view space. High-level commands ("left", "right", "straight", and "lane follow") were transformed to the ego coordinate system using the command pose and rasterized in the bird’s-eye view image in the same way as the point clouds, but as color-coded points (red for left, blue for right, white for straight, and green for lane follow). The ground-truth road path consisted of 200 waypoints spaced 20 cm apart, starting at the center of the ego car. To simulate navigation errors and help the planner learn to recover from them, we added Gaussian noise to the steering wheel inputs in 50% of the routes used for dataset collection.
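Two details of the path planner dataset lend themselves to a short sketch: sampling the ground-truth path as 200 waypoints spaced 20 cm apart (a horizon of 199 × 0.2 = 39.8 m from the ego car's center), and perturbing the steering in half of the routes. The Python/NumPy code below is a hypothetical illustration: the arc-length interpolation, the noise standard deviation `sigma`, the clipping of steering to [-1, 1] (the range of CARLA's normalized steering command) and the random selection of noisy routes are assumptions, not details given in the text.

```python
import numpy as np

N_WAYPOINTS = 200   # waypoints per ground-truth path (from the text)
SPACING = 0.20      # metres between consecutive waypoints (from the text)


def resample_path(polyline, n=N_WAYPOINTS, spacing=SPACING):
    """Resample an (M, 2) ego-frame polyline that starts at the ego centre into
    n points spaced `spacing` metres apart along its arc length.
    Assumes no repeated consecutive vertices (arc length must be increasing)."""
    seg = np.linalg.norm(np.diff(polyline, axis=0), axis=1)
    s = np.concatenate([[0.0], np.cumsum(seg)])   # arc length at each vertex
    targets = np.arange(n) * spacing               # 0.0, 0.2, ..., 39.8 m
    if targets[-1] > s[-1]:
        raise ValueError("reference path is shorter than the waypoint horizon")
    x = np.interp(targets, s, polyline[:, 0])
    y = np.interp(targets, s, polyline[:, 1])
    return np.stack([x, y], axis=1)


def pick_noisy_routes(n_routes, rng):
    """Hypothetical selection scheme: randomly mark half of the routes for noise."""
    mask = np.zeros(n_routes, dtype=bool)
    mask[rng.permutation(n_routes)[: n_routes // 2]] = True
    return mask


def noisy_steer(steer, route_is_noisy, rng, sigma=0.1):
    """Add zero-mean Gaussian noise (sigma is hypothetical) to the steering
    command on noisy routes only, clipped to CARLA's [-1, 1] steering range."""
    if not route_is_noisy:
        return steer
    return float(np.clip(steer + rng.normal(0.0, sigma), -1.0, 1.0))


rng = np.random.default_rng(0)
path = resample_path(np.array([[0.0, 0.0], [20.0, 0.0], [20.0, 30.0]]))
noisy = pick_noisy_routes(10, rng)
```

Interpolating by arc length keeps the 20 cm spacing constant along the path itself, including around corners, rather than in a fixed Cartesian direction.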

5.4. Results on CARLA Leaderboards

5.5. Analysis and Discussion

5.5.1. Modular Architecture

5.5.2. Hybrid Architecture

5.5.3. Comparison and Final Remarks

6. Conclusions

6.1. Challenges and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Chib, P.S.; Singh, P. Recent advancements in end-to-end autonomous driving using deep learning: A survey. IEEE Transactions on Intelligent Vehicles 2023.

- Teng, S.; Hu, X.; Deng, P.; Li, B.; Li, Y.; Ai, Y.; Yang, D.; Li, L.; Xuanyuan, Z.; Zhu, F.; others. Motion planning for autonomous driving: The state of the art and future perspectives. IEEE Transactions on Intelligent Vehicles 2023.

- Tampuu, A.; Matiisen, T.; Semikin, M.; Fishman, D.; Muhammad, N. A survey of end-to-end driving: Architectures and training methods. IEEE Transactions on Neural Networks and Learning Systems 2020, 33, 1364–1384.

- Jo, K.; Kim, J.; Kim, D.; Jang, C.; Sunwoo, M. Development of autonomous car—Part II: A case study on the implementation of an autonomous driving system based on distributed architecture. IEEE Transactions on Industrial Electronics 2015, 62, 5119–5132.

- Liu, S.; Li, L.; Tang, J.; Wu, S.; Gaudiot, J.L. Creating Autonomous Vehicle Systems; Vol. 6, Morgan & Claypool Publishers, 2017; pp. i–186.

- Chen, L.; Wu, P.; Chitta, K.; Jaeger, B.; Geiger, A.; Li, H. End-to-end autonomous driving: Challenges and frontiers. arXiv preprint arXiv:2306.16927 2023.

- Kalra, N.; Paddock, S.M. Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transportation Research Part A: Policy and Practice 2016, 94, 182–193.

- Koopman, P.; Wagner, M. Challenges in autonomous vehicle testing and validation. SAE International Journal of Transportation Safety 2016, 4, 15–24.

- Huang, W.; Wang, K.; Lv, Y.; Zhu, F. Autonomous vehicles testing methods review. 2016 IEEE 19th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2016, pp. 163–168.

- Rosero, L.A.; Gomes, I.P.; da Silva, J.A.R.; Santos, T.C.d.; Nakamura, A.T.M.; Amaro, J.; Wolf, D.F.; Osório, F.S. A software architecture for autonomous vehicles: Team lrm-b entry in the first carla autonomous driving challenge. arXiv preprint arXiv:2010.12598 2020.

- Taş, Ö.Ş.; Salscheider, N.O.; Poggenhans, F.; Wirges, S.; Bandera, C.; Zofka, M.R.; Strauss, T.; Zöllner, J.M.; Stiller, C. Making Bertha Cooperate–Team AnnieWAY’s Entry to the 2016 Grand Cooperative Driving Challenge. IEEE Transactions on Intelligent Transportation Systems 2018, 19, 1262–1276.

- Fan, H.; Zhu, F.; Liu, C.; Zhang, L.; Zhuang, L.; Li, D.; Zhu, W.; Hu, J.; Li, H.; Kong, Q. Baidu apollo em motion planner. arXiv preprint arXiv:1807.08048 2018.

- Autoware. Architecture overview, 2024. Accessed: 2023-01-23.

- Wei, J.; Snider, J.M.; Kim, J.; Dolan, J.M.; Rajkumar, R.; Litkouhi, B. Towards a viable autonomous driving research platform. Intelligent Vehicles Symposium (IV), 2013 IEEE. IEEE, 2013, pp. 763–770.

- Shao, H.; Wang, L.; Chen, R.; Waslander, S.L.; Li, H.; Liu, Y. ReasonNet: End-to-End Driving With Temporal and Global Reasoning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 13723–13733.

- Shao, H.; Wang, L.; Chen, R.; Li, H.; Liu, Y. Safety-Enhanced Autonomous Driving Using Interpretable Sensor Fusion Transformer. arXiv preprint arXiv:2207.14024 2022.

- Wu, P.; Jia, X.; Chen, L.; Yan, J.; Li, H.; Qiao, Y. Trajectory-guided Control Prediction for End-to-end Autonomous Driving: A Simple yet Strong Baseline. NeurIPS, 2022.

- Casas, S.; Sadat, A.; Urtasun, R. Mp3: A unified model to map, perceive, predict and plan. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 14403–14412.

- Xiao, Y.; Codevilla, F.; Gurram, A.; Urfalioglu, O.; López, A.M. Multimodal End-to-End Autonomous Driving. IEEE Transactions on Intelligent Transportation Systems 2022, 23, 537–547.

- Zhang, Q.; Tang, M.; Geng, R.; Chen, F.; Xin, R.; Wang, L. MMFN: Multi-Modal-Fusion-Net for End-to-End Driving. 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022, pp. 8638–8643.

- Cai, P.; Wang, S.; Sun, Y.; Liu, M. Probabilistic end-to-end vehicle navigation in complex dynamic environments with multimodal sensor fusion. IEEE Robotics and Automation Letters 2020, 5, 4218–4224.

- Vitelli, M.; Chang, Y.; Ye, Y.; Ferreira, A.; Wołczyk, M.; Osiński, B.; Niendorf, M.; Grimmett, H.; Huang, Q.; Jain, A.; others. Safetynet: Safe planning for real-world self-driving vehicles using machine-learned policies. 2022 International Conference on Robotics and Automation (ICRA). IEEE, 2022, pp. 897–904.

- Song, S.; Hu, X.; Yu, J.; Bai, L.; Chen, L. Learning a deep motion planning model for autonomous driving. 2018 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2018, pp. 1137–1142.

- Moraes, G.; Mozart, A.; Azevedo, P.; Piumbini, M.; Cardoso, V.B.; Oliveira-Santos, T.; De Souza, A.F.; Badue, C. Image-Based Real-Time Path Generation Using Deep Neural Networks. 2020 International Joint Conference on Neural Networks (IJCNN). IEEE, 2020, pp. 1–8.

- Wang, D.; Wang, C.; Wang, Y.; Wang, H.; Pei, F. An autonomous driving approach based on trajectory learning using deep neural networks. International journal of automotive technology 2021, 22, 1517–1528.

- Hu, S.; Chen, L.; Wu, P.; Li, H.; Yan, J.; Tao, D. St-p3: End-to-end vision-based autonomous driving via spatial-temporal feature learning. European Conference on Computer Vision. Springer, 2022, pp. 533–549.

- Paden, B.; Čáp, M.; Yong, S.Z.; Yershov, D.; Frazzoli, E. A survey of motion planning and control techniques for self-driving urban vehicles. IEEE Transactions on intelligent vehicles 2016, 1, 33–55.

- Katrakazas, C.; Quddus, M.; Chen, W.H.; Deka, L. Real-time motion planning methods for autonomous on-road driving: State-of-the-art and future research directions. Transportation Research Part C: Emerging Technologies 2015, 60, 416–442.

- Xu, Z.; Xiao, X.; Warnell, G.; Nair, A.; Stone, P. Machine learning methods for local motion planning: A study of end-to-end vs. parameter learning. 2021 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR). IEEE, 2021, pp. 217–222.

- Reda, M.; Onsy, A.; Ghanbari, A.; Haikal, A.Y. Path planning algorithms in the autonomous driving system: A comprehensive review. Robotics and Autonomous Systems 2024, p. 104630.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: an open-source Robot Operating System. ICRA workshop on open source software. Kobe, Japan, 2009, Vol. 3, p. 5.

- Van Brummelen, J.; O’Brien, M.; Gruyer, D.; Najjaran, H. Autonomous vehicle perception: The technology of today and tomorrow. Transportation research part C: emerging technologies 2018.

- OpenDRIVE. ASAM OpenDRIVE 1.8.0, 2023. Accessed: 2023-01-27.

- Diaz-Diaz, A.; Ocaña, M.; Llamazares, Á.; Gómez-Huélamo, C.; Revenga, P.; Bergasa, L.M. Hd maps: Exploiting opendrive potential for path planning and map monitoring. 2022 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2022, pp. 1211–1217.

- Dupuis, M.; Strobl, M.; Grezlikowski, H. OpenDRIVE 2010 and Beyond–Status and Future of the de facto Standard for the Description of Road Networks. Proc. of the Driving Simulation Conference Europe, 2010, pp. 231–242.

- Thrun, S.; Montemerlo, M.; Dahlkamp, H.; Stavens, D.; Aron, A.; Diebel, J.; Fong, P.; Gale, J.; Halpenny, M.; Hoffmann, G.; Lau, K.; Oakley, C.; Palatucci, M.; Pratt, V.; Stang, P.; Strohband, S.; Dupont, C.; Jendrossek, L.E.; Koelen, C.; Markey, C.; Rummel, C.; van Niekerk, J.; Jensen, E.; Alessandrini, P.; Bradski, G.; Davies, B.; Ettinger, S.; Kaehler, A.; Nefian, A.; Mahoney, P. Stanley: The Robot That Won the DARPA Grand Challenge. In The 2005 DARPA Grand Challenge: The Great Robot Race; Buehler, M.; Iagnemma, K.; Singh, S., Eds.; Springer Berlin Heidelberg: Berlin, Heidelberg, 2007; pp. 1–43.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2017.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection From Point Clouds. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple Online and Realtime Tracking. CoRR 2016, abs/1602.00763, [1602.00763].

- Lima, P.F.; Trincavelli, M.; Mårtensson, J.; Wahlberg, B. Clothoid-based model predictive control for autonomous driving. Control Conference (ECC), 2015 European. IEEE, 2015, pp. 2983–2990.

- Fraichard, T.; Scheuer, A. From Reeds and Shepp’s to continuous-curvature paths. IEEE Transactions on Robotics 2004, 20, 1025–1035.

- Obayashi, M.; Uto, K.; Takano, G. Appropriate overtaking motion generating method using predictive control with suitable car dynamics. 2016 IEEE 55th Conference on Decision and Control (CDC). IEEE, 2016, pp. 4992–4997.

- Zhu, H. Software design methodology: From principles to architectural styles; Elsevier, 2005.

- Geiger, A.; Roser, M.; Urtasun, R. Efficient Large-Scale Stereo Matching. Asian Conference on Computer Vision (ACCV), 2010.

- Hossin, M.; Sulaiman, M. A review on evaluation metrics for data classification evaluations. International Journal of Data Mining & Knowledge Management Process 2015, 5, 1.

- Tharwat, A. Classification assessment methods. Applied Computing and Informatics 2018.

- Jorgensen, P.C. Software testing: a craftsman’s approach; CRC press, 2018.

- Lewis, W.E. Software testing and continuous quality improvement; CRC press, 2017.

- National Highway Traffic Safety Administration of the United States (NHTSA). Pre-Crash Scenario Typology for Crash Avoidance Research, 2007. Accessed: 2023-01-30.

- Chekroun, R.; Toromanoff, M.; Hornauer, S.; Moutarde, F. GRI: General Reinforced Imitation and Its Application to Vision-Based Autonomous Driving. Robotics 2023, 12.

- Gómez-Huélamo, C.; Diaz-Diaz, A.; Araluce, J.; Ortiz, M.E.; Gutiérrez, R.; Arango, F.; Llamazares, Á.; Bergasa, L.M. How to build and validate a safe and reliable Autonomous Driving stack? A ROS based software modular architecture baseline. 2022 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2022, pp. 1282–1289.

- Gog, I.; Kalra, S.; Schafhalter, P.; Wright, M.A.; Gonzalez, J.E.; Stoica, I. Pylot: A modular platform for exploring latency-accuracy tradeoffs in autonomous vehicles. 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 8806–8813.

- Jaeger, B.; Chitta, K.; Geiger, A. Hidden Biases of End-to-End Driving Models. Proc. of the IEEE International Conf. on Computer Vision (ICCV), 2023.

- Chen, D.; Krähenbühl, P. Learning from all vehicles. CVPR, 2022.

- Chitta, K.; Prakash, A.; Jaeger, B.; Yu, Z.; Renz, K.; Geiger, A. TransFuser: Imitation with Transformer-Based Sensor Fusion for Autonomous Driving. Pattern Analysis and Machine Intelligence (PAMI) 2023.

- Prakash, A.; Chitta, K.; Geiger, A. Multi-Modal Fusion Transformer for End-to-End Autonomous Driving. Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

- Chekroun, R.; Toromanoff, M.; Hornauer, S.; Moutarde, F. GRI: General Reinforced Imitation and its Application to Vision-Based Autonomous Driving. CoRR 2021, abs/2111.08575, [2111.08575].

- Chen, D.; Koltun, V.; Krähenbühl, P. Learning to drive from a world on rails. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 15590–15599.

- Toromanoff, M.; Wirbel, E.; Moutarde, F. End-to-End Model-Free Reinforcement Learning for Urban Driving Using Implicit Affordances. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- Chitta, K.; Prakash, A.; Geiger, A. NEAT: Neural Attention Fields for End-to-End Autonomous Driving. Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2021, pp. 15793–15803.

- Prakash, A.; Chitta, K.; Geiger, A. Multi-Modal Fusion Transformer for End-to-End Autonomous Driving. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 7077–7087.

- Rosero, L.; Silva, J.; Wolf, D.; Osório, F. CNN-Planner: A neural path planner based on sensor fusion in the bird’s eye view representation space for mapless autonomous driving. 2022 Latin American Robotics Symposium (LARS), 2022 Brazilian Symposium on Robotics (SBR), and 2022 Workshop on Robotics in Education (WRE), 2022, pp. 181–186.

- Chen, D.; Zhou, B.; Koltun, V.; Krähenbühl, P. Learning by Cheating. Proceedings of the Conference on Robot Learning; Kaelbling, L.P.; Kragic, D.; Sugiura, K., Eds. PMLR, 2020, Vol. 100, Proceedings of Machine Learning Research, pp. 66–75.

- Codevilla, F.; Santana, E.; Lopez, A.M.; Gaidon, A. Exploring the Limitations of Behavior Cloning for Autonomous Driving. Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2019.

| Input | Description |
|---|---|
| 00 | No obstacles detected, indicating a clear path ahead. |
| 01 | An obstacle is being tracked, requiring speed adjustments to maintain safe following distances. |
| 10 | A red traffic light is ahead, necessitating a controlled stop. |
| 11 | A stop sign is detected, also demanding a full stop. |
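The four input codes in the table above can be represented as a two-bit value. The Python sketch below is a hypothetical illustration: the enum names and the priority used when several conditions hold at once (stop sign over red light over tracked obstacle) are assumptions that the table does not state. It also makes one property of the encoding explicit: both codes that demand a stop (10 and 11) have the most significant bit set.

```python
from enum import IntEnum


class DecisionInput(IntEnum):
    """Two-bit decision-making inputs from the table (names are hypothetical)."""
    CLEAR = 0b00              # no obstacles detected, clear path ahead
    TRACKED_OBSTACLE = 0b01   # adjust speed to keep a safe following distance
    RED_LIGHT = 0b10          # red traffic light ahead: controlled stop
    STOP_SIGN = 0b11          # stop sign detected: full stop


def encode_input(obstacle_tracked, red_light_ahead, stop_sign_ahead):
    """Collapse perception flags into one code (hypothetical priority order)."""
    if stop_sign_ahead:
        return DecisionInput.STOP_SIGN
    if red_light_ahead:
        return DecisionInput.RED_LIGHT
    if obstacle_tracked:
        return DecisionInput.TRACKED_OBSTACLE
    return DecisionInput.CLEAR


def requires_stop(code):
    """Codes 10 and 11 both demand a stop, i.e. the high bit is set."""
    return bool(code & 0b10)
```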

| Team | Method | DS | RC | IP | CP | CV | CL | RLI | SSI | ORI | RD | RT | AB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Anonymous | Map TF++ | 61.17 | 81.81 | 0.70 | 0.01 | 0.99 | 0.00 | 0.08 | 0.00 | 0.00 | 0.00 | 0.00 | 0.55 |
| mmfn | MMFN+(TPlanner)¹⁴ | 59.85 | 82.81 | 0.71 | 0.01 | 0.59 | 0.00 | 0.51 | 0.00 | 0.00 | 0.00 | 0.62 | 0.06 |
| LRM 2023 | CaRINA agent | 41.56 | 86.03 | 0.52 | 0.08 | 0.38 | 0.13 | 1.6 | 0.03 | 0.00 | 0.04 | 0.05 | 1.29 |
| RaphaeL | GRI-based DRL [50] | 33.78 | 57.44 | 0.57 | 0.00 | 3.36 | 0.50 | 0.52 | 0.00 | 1.52 | 1.47 | 0.23 | 0.80 |
| mmfn | MMFN [20] | 22.80 | 47.22 | 0.63 | 0.09 | 0.67 | 0.05 | 1.07 | 0.00 | 0.45 | 0.00 | 0.00 | 1003.88 |
| RobeSafe research group | Techs4AgeCar+ [51] | 18.75 | 75.11 | 0.28 | 1.52 | 2.37 | 1.27 | 1.22 | 0.00 | 0.59 | 0.17 | 0.01 | 1.28 |
| ERDOS | Pylot [52] | 16.70 | 48.63 | 0.50 | 1.18 | 0.79 | 0.01 | 0.95 | 0.00 | 0.01 | 0.44 | 0.10 | 3.30 |
| LRM 2019 | CaRINA [10] | 15.55 | 40.63 | 0.47 | 1.06 | 3.35 | 1.79 | 0.28 | 0.00 | 3.28 | 0.34 | 0.00 | 7.26 |

| Team | Method | DS | RC | IP | CP | CV | CL | RLI | SSI | ORI | RD | RT | AB | YEI | ST | MinSI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Kyber-E2E | Kyber-E2E | 3.11 | 5.28 | 0.67 | 0.36 | 0.63 | 0.27 | 0.09 | 0.09 | 0.01 | 0.00 | 0.09 | 0.09 | 0.00 | 0.54 | 0.00 |
| LRM 2023 | CaRINA agent | 1.14 | 3.65 | 0.46 | 0.00 | 2.89 | 1.31 | 0.00 | 0.53 | 0.00 | 0.13 | 1.31 | 1.18 | 0.00 | 2.10 | 0.00 |

| Team | Method | DS | RC | IP | CP | CV | CL | RLI | SSI | ORI | RD | RT | AB |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Interfuser | ReasonNet [15] | 79.95 | 89.89 | 0.89 | 0.02 | 0.13 | 0.01 | 0.08 | 0.00 | 0.04 | 0.00 | 0.01 | 0.33 |
| Interfuser | InterFuser [16] | 76.18 | 88.23 | 0.84 | 0.04 | 0.37 | 0.14 | 0.22 | 0.00 | 0.13 | 0.00 | 0.01 | 0.43 |
| PPX | TCP [17] | 75.14 | 85.63 | 0.87 | 0.00 | 0.32 | 0.00 | 0.09 | 0.00 | 0.04 | 0.00 | 0.00 | 0.54 |
| DP | TF++ WP Ensemble [53] | 66.32 | 78.57 | 0.84 | 0.00 | 0.50 | 0.00 | 0.01 | 0.00 | 0.12 | 0.00 | 0.00 | 0.71 |
| WOR | LAV [54] | 61.85 | 94.46 | 0.64 | 0.04 | 0.70 | 0.02 | 0.17 | 0.00 | 0.25 | 0.09 | 0.04 | 0.10 |
| Attention Fields | TF++ WP [53] | 61.57 | 77.66 | 0.81 | 0.02 | 0.41 | 0.00 | 0.03 | 0.00 | 0.08 | 0.00 | 0.00 | 0.71 |
| DP | TransFuser [55,56] | 61.18 | 86.69 | 0.71 | 0.04 | 0.81 | 0.01 | 0.05 | 0.00 | 0.23 | 0.00 | 0.01 | 0.43 |
| Attention Fields | Latent TransFuser [55,56] | 45.20 | 66.31 | 0.72 | 0.02 | 1.11 | 0.02 | 0.05 | 0.00 | 0.16 | 0.00 | 0.04 | 1.82 |
| RaphaeL | GRIAD [57] | 36.79 | 61.85 | 0.60 | 0.00 | 2.77 | 0.41 | 0.48 | 0.00 | 1.39 | 1.11 | 0.34 | 0.84 |
| LRM 2023 | CaRINA hybrid | 35.36 | 85.01 | 0.45 | 0.02 | 4.95 | 0.22 | 1.67 | 0.12 | 0.45 | 1.54 | 0.02 | 0.45 |
| WOR | World on Rails [58] | 31.37 | 57.65 | 0.56 | 0.61 | 1.35 | 1.02 | 0.79 | 0.00 | 0.96 | 1.69 | 0.00 | 0.47 |
| MaRLn | MaRLn [59] | 24.98 | 46.97 | 0.52 | 0.00 | 2.33 | 2.47 | 0.55 | 0.00 | 1.82 | 1.44 | 0.79 | 0.94 |
| Attention Fields | NEAT [60] | 21.83 | 41.71 | 0.65 | 0.04 | 0.74 | 0.62 | 0.70 | 0.00 | 2.68 | 0.00 | 0.00 | 5.22 |
| SDV | AIM-MT [60] | 19.38 | 67.02 | 0.39 | 0.18 | 1.53 | 0.12 | 1.55 | 0.00 | 0.35 | 0.00 | 0.01 | 2.11 |
| SDV | TransFuser (CVPR 2021) [61] | 16.93 | 51.82 | 0.42 | 0.91 | 1.09 | 0.19 | 1.26 | 0.00 | 0.57 | 0.00 | 0.01 | 1.96 |
| LRM-B | CNN-Planner [62] | 15.40 | 50.05 | 0.41 | 0.08 | 4.67 | 0.42 | 0.35 | 0.00 | 2.78 | 0.12 | 0.00 | 4.63 |
| LBC | Learning by Cheating [63] | 8.94 | 17.54 | 0.73 | 0.00 | 0.40 | 1.16 | 0.71 | 0.00 | 1.52 | 0.03 | 0.00 | 4.69 |
| MaRLn | MaRLn [59] | 5.56 | 24.72 | 0.36 | 0.77 | 3.25 | 13.23 | 0.85 | 0.00 | 10.73 | 2.97 | 0.06 | 11.41 |
| Attention Fields | CILRS [64] | 5.37 | 14.40 | 0.55 | 2.69 | 1.48 | 2.35 | 1.62 | 0.00 | 4.55 | 4.14 | 0.00 | 4.28 |
| LRM 2019 | CaRINA [10] | 4.56 | 23.80 | 0.41 | 0.01 | 7.56 | 51.52 | 20.64 | 0.00 | 14.32 | 0.00 | 0.00 | 10055.99 |

| Team | Method | DS | RC | IP | CP | CV | CL | RLI | SSI | ORI | RD | RT | AB | YEI | ST | MinSI |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LRM 2023 | CaRINA hybrid | 1.23 | 9.55 | 0.31 | 0.25 | 1.64 | 0.25 | 0.25 | 0.40 | 0.43 | 0.10 | 0.30 | 0.60 | 0.10 | 1.20 | 0.15 |
| Tuebingen_AI | Zero-shot TF++ [53] | 0.58 | 8.53 | 0.38 | 0.17 | 1.80 | 0.51 | 0.00 | 3.76 | 0.35 | 0.06 | 0.56 | 0.51 | 0.00 | 2.19 | 0.17 |
| CARLA | baseline | 0.25 | 15.20 | 0.10 | 1.23 | 2.49 | 0.79 | 0.03 | 0.94 | 0.47 | 0.50 | 0.00 | 0.13 | 0.13 | 0.69 | 0.19 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).