1. Introduction

Recognition and analysis of human actions is a critical subfield in computer vision and deep learning, with the primary goal of automatically detecting and classifying human actions or gestures from video data. Sophisticated algorithms and models that can understand and interpret the dynamics of human movements are required for this purpose. The recognition and interpretation of human actions play a crucial role in various practical applications such as video surveillance footage, healthcare systems, robotics field, human-computer interaction, etc. Extracting meaningful information from video sequences enables machines to understand and respond to human actions, thereby enhancing the efficiency and safety of many domains.

This emerging field leverages the capabilities of deep learning techniques, to capture temporal and spatial features in video data. Recent advancements in the use of 3D graph convolutional neural networks (3D GCNs) have further improved the accuracy of action recognition models. Notable datasets such as NTU RGB+D [

1], NTU RGB+D 120 [

2], NW-UCLA, and Kinetics [

3], have become benchmarks for evaluating and analyzing the performance of these techniques, driving further research and innovation in the field.

1.1. Introduction to HAR based on skeleton data

The aim of using action prediction algorithms is to anticipate the classification label of a continuous action based on a partial observation along the temporal axis. Predicting human activities prior to their full execution is regarded as a subfield within the wider scientific area of human activity analysis. This field has garnered significant scholarly interest owing to its extensive array of applications in the domains of security surveillance, observing human-machine interactions, and medical monitoring. [

4].

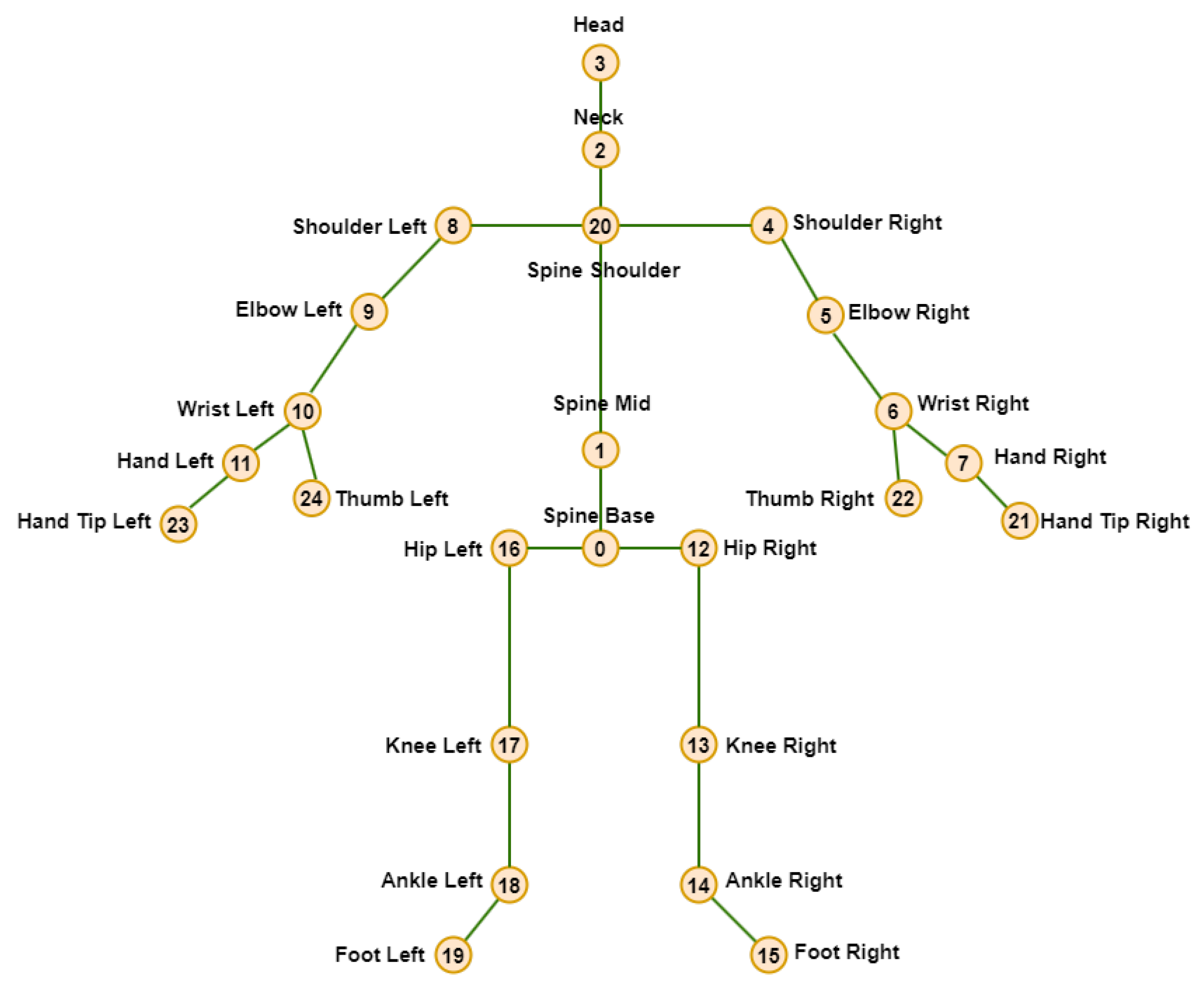

According to biological researches [

5], human skeleton data as depicted in

Figure 1, are sufficiently informative to represent human behavior, despite the absence of appearance information. Human activities are inherently conducted within a three-dimensional spatial context, making three-dimensional skeletal data an appropriate means of capturing human activity. The acquisition of 3D skeletal information can be efficiently and conveniently achieved in real-time through the utilization of affordable depth sensors, such as Microsoft Kinect and Asus Xtion. Consequently, the utilization of 3D skeleton data for activity analysis has become known prominent area of scholarly studies [

6,

7,

8]. Among the advantages of this type of activity analysis are its conciseness, sophisticated representation, and resilience to differences in views, illumination, and surrounding visual distractions.

1.2. Description of the Work

In this study, the focus is only on HAR. Thus, the goal was to detect the motion of a person. Surveillance cameras can be found in almost all sensitive locations. However, the resulting number of video streams is not only difficult to monitor but also costly to transmit and store.

We performed the experiments using a well-known action recognition dataset named NTU RGB+D 120 [

2], which is an extension of NTU RGB+D [

1] and provides the extracted skeletal motion of 120 motion classes.

The objective of this study was to develop a novel and highly accurate human motion detection algorithm. For this purpose, we focused on special practical scenarios and utilized the latest deep learning (DL) technologies (e.g. graph convolutional networks, autoencoders, and one-class classifiers) to develop a highly accurate HAR algorithm (for example, abnormal behavior of customers in shopping places can be detected by analyzing their motion based on 3D skeletal data).

1.3. Graph Autoencoders

Graph autoencoders (GAEs) are a class of neural network models designed for learning low-dimensional representations of graph-structured data. In recent years, they have gained a notable focus due to their ability to perform tasks in a range of applications, including node classification, link anticipation, and community discovery. GAEs use the power of autoencoders to encode graph nodes into lower-dimensional latent representations and decode them back to the original graph structure. This process involves capturing both the topological structure and node attributes, making them powerful tools for graph representation learning. Notable works in this field include the GraphSAGE model by Hamilton et al. [

9] and the variational graph autoencoder (VGAE) proposed by Kipf and Welling [

10]. These models provide valuable insights into the development of graph autoencoders for various graph-related tasks.

In addition to these studies, there is an expanding body of research exploring variations and applications of graph autoencoders. These have been adapted for semi-supervised learning, recommendation systems, and anomaly detection. The field of graph autoencoders continues to evolve, offering promising avenues for further research and development.

1.4. The Basic Description of the Graph Autoencoder Skeleton-based HAR Algorithm

GAEs refer to a class of neural network models which are specifically designed for unsupervised learning jobs, such as clustering and link prediction, on graph-structured data. GAEs are based on GCNs. We adopted the architecture of CTR-GCN that used a refinement way to learns channel-wise topologies which proposed by Chen et al. [

11] as the base unit and trained a graph auto-encoder to automatically learn spatial as well as temporal patterns from data.

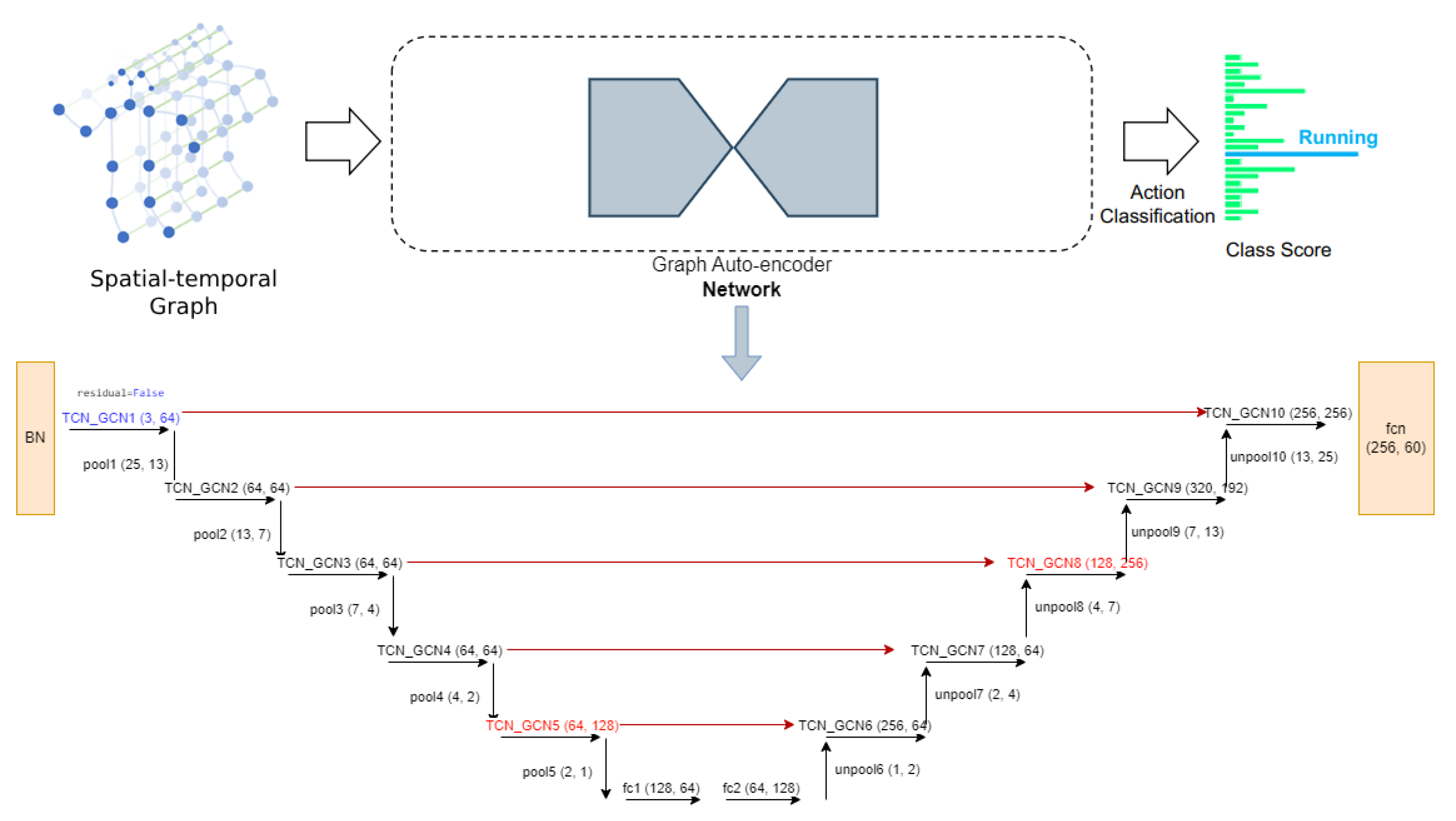

Figure 2 illustrates the proposed network architecture. The input to the network is a spatial temporal graph based on skeletal sequences which can be generated as described by Cai et al. [

12]. This graph is fed to the autoencoder for reconstruction. Finally, the verification process involves the application of thresholding to the reconstruction loss [

13].

The main contributions of this study are listed below:

2. Related Work

2.1. GCNs

CNN-based methods have achieved great results in RGB-based processing compared to skeleton-based representations. However, GCN-based methods overcome CNN weaknesses for processing skeleton data. Spectral techniques perform convolution within the spectral domain [

14,

15,

16]. Their application relies on Laplacian eigenbasis. Consequently, these methods are primarily suitable for graphs that share consistent structural configurations.

Convolutions are defined by spatial methods directly on a graph [

17,

18,

19]. Handling various neighborhood sizes is one of the difficulties associated with spatial approaches. GCN proposed by Kipf et al. [

16] is one of many GCN versions available, and it is widely adapted for diverse purposes because of its simplicity. Feature update rule of GCN comprises of transformation of features into abstract representations step and feature aggregation step based on the analysis of the graph topology. The same rules were used in this study for feature updates.

2.2. GCN-based Skeleton Action Recognition

Kipf et al. [

16] proposed a feature update rule, which is often followed by GCNs, has been successfully adapted for recognition of skeleton-based action [

20,

21,

22,

23,

24,

25,

26]. Numerous GCN-based techniques place a strong emphasis on skeleton graph modeling due to the significance of graphs in GCNs. Based on topological variations, GCN-based techniques can be categorized into the following categories:

Static and dynamic techniques: In static techniques, the topologies of GCNs remain constant throughout the inference process, whereas they are dynamically inferred throughout the inference process for dynamic techniques.

topology shared and topology non-shared techniques: Topologies are shared across all channels in topology shared techniques, whereas various topologies are employed in various channels or channel groups in topology non-shared techniques.

In the context of static approaches, Yan et al. [

24] proposed a ST-GCN that predefined topology that is fixed during the training and testing steps in accordance with the human body structure. Multi-scale graph topologies were incorporated into GCNs by Liu et al. [

20] and Huang et al. [

27] to facilitate the modeling of joint relationships across many ranges.

In the context of dynamic approaches, Li et al. [

6] suggested an A-links inference component that records correlations related to actions. Self-attention methods enhanced topology learning by modeling the correlation between each pair of joints [

21,

28]. These techniques use regional information to infer the relationships between two joints. A dynamic GCN method for learning correlations between joint pairs was proposed by Ye et al. [

25] by incorporating contextual data from joints. Dynamic methods offer greater generalization capabilities than static methods because of their dynamic topologies.

Methods with and without a common topology. Static and dynamic topologies are shared across all channels in topology sharing procedures. These strategies impose limitations on the performance of models by compelling GCNs to aggregate features across channels that possess identical topology. The majority of GCN-based techniques, including the aforementioned static methods [

20,

24,

27] and dynamic methods [

6,

21,

25,

28], operate in a topology shared manner.

Topology non-shared techniques naturally overcome the drawbacks of topology shared techniques by employing various topologies in various channels or channel groups. Decoupling graph convolutional network (DC-GCN) used various graph representations for various channels [

29]. DC-GCN encounters optimization challenges when constructing channel-wise topologies caused by too many parameters. In skeleton-based HAR, topology non-shared graph convolutions have rarely been investigated. Chen et al. [

11] were the pioneers in developing dynamic channel-wise topologies. We adopted their basic idea and implemented a graph auto-encoder network and proposed a GA-GCN model.

3. Materials and Methods

3.1. Datasets

In this study, an existing action recognition dataset named NTU RGB+D 120 [

2], which extends NTU RGB+D [

1] was used.

NTU RGB+D [

1] is a sizable dataset containing 56,880 skeletal action sequences, which can be used for the recognition of human actions. 40 volunteers performed the samples, which were classified into 60 categories. Every video sample has only one action and is ensured to have a maximum of two participants. The actions of volunteers were simultaneously recorded by three cameras of type Microsoft Kinect v2 from various views. The dataset authors suggested two standards as follows:

The subject samples are divided into two halves: 20 individuals provide training samples, while the remaining 20 provide testing samples. This standard is named cross-subject (x-sub).

The testing samples are derived from the views of camera 1, while the training samples are derived from the views of cameras 2 and 3. This standard is named cross-view (x-view).

NTU RGB+D 120 [

2] expands NTU RGB+D by adding 57,367 skeletal sequences spanning 60 new action classes to become the largest collection of 3D joint annotations specifically designed for HAR. 106 participants performed a total of 113,945 action sequences in 120 classes, which were recorded using three cameras. This dataset has 32 distinct configurations, each of which corresponds to a certain environment and background.The dataset authors suggested two standards as follows:

The subject samples are divided into two halves: 53 individuals provide training data, and the remaining 53 provide testing data. This standard is named cross-subject (c-sub).

The 32 setups were separated into two halves: sequences with even-numbered setup numbers provide training samples, and the remaining sequences with odd-numbered setup numbers provide testing samples. This standard is named cross-setup (x-setup).

3.2. Preliminaries

In this study, a graph is used to represent the human skeleton data. Joints and bones represent the graph’s vertices and edges, respectively. An adjacency matrix denoted as

is used to represent the graph data, where

is the set of

N vertices (joints) and

E denotes the set of edges (edges). The adjacency matrix models the strength of the relationship between

and

. The input features of

N vertices are denoted as

X and represented in a matrix of size

and

’s feature is denoted as

. The following formula is used to obtain the graph convolution:

Equation (

1) defines the output of the relevant features

based on the weight

W and adjacency matrix

A.

The graph autoencoder network consists of two parts, i.e. the encoder and the decoder. Equation (

2) defines the input of the layers

X and pooling function

of the encoder.

Equation (

3) defines the input of the layers

X, Unpooling function

, and the decode function

of the decoder.

Data related to skeletons can be gathered using devices to capture the motion or pose estimation techniques from recorded videos. Video data are often presented as a series of frames, with every frame containing the coordinates of a set of joints. A spatiotemporal graph was constructed by representing the joints as graph vertices and utilizing the inherent relationships in the human body structure and time as graph edges using 2D or 3D coordinate sequences for body joints representation. The inputs to GA-GCN are the coordinate vectors of joints located at the graph nodes. This can be compared to image-based CNNs, in which the input is composed of pixel intensity vectors that are located on a 2D image matrix. The input data were subjected to several spatiotemporal graph convolution layers, resulting in the generation of more advanced feature mappings on the graph. The basic SoftMax classifier was subsequently allocated to the matching action class. Our proposed model was trained using an end-to-end method via back propagation.

Figure 2 demonstrates our proposed pipeline GA-GCN.

3.3. Spatiotemporal Graph Autoencoder Network for Skeleton-based HAR Algorithm

In this study, a potent spatiotemporal graph autoencoder network named GA-GCN was built for HAR based on skeleton data. Previous research has shown that using the graph is more efficient for this task [

21,

30] so we chose to use the graph to represent the human skeleton as the nature of each joint. The autoencoder network is composed of 10 fundamental blocks divided into decoder and encoder parts. Afterwards, a global average pooling layer and a softmax classifier are used in order to predict action labels. A pooling layer was added after each encoder block to minimize the overall number of joints by half. Each block of the decoder is preceded by an unpooling layer to double the joints. The number of input channels and joints in the autoencoder blocks are (64,25)-(64,13)-(64,7)-(64,4)-(128,2)-(64,2)-(64,4)-(256,7)-(192,13)-(256,25). Strided temporal convolution reduced the temporal dimensions by half in the fifth and eighth blocks.

Figure 2 demonstrates our proposed network pipeline, GA-GCN.

3.4. Spatiotemporal Input Representations

Spatiotemporal representations typically refer to data or information that reflects the spatial and temporal dimensions.

The input of spatiotemporal representations consists of video data represented by skeletal sequences. Resizing process was applied to each sample, resulting in a total of 64 frames.

3.5. Modalities of GA-GCN

The data from eight different modalities: joint, joint motion, bone, bone motion, joint fast motion, joint motion fast motion, bone fast motion, and bone motion fast motion were combined.

Table 1 lists the configuration used for each modality. Basically, we change the values of the three variables to obtain eight different modalities in the following order: bone, vel, and fast-motion.

Firstly, the data are the same as the values of joints in a frame for all the frames in the dataset; then, if the bone flag is true, then the values of data for joints are updated to reflect the difference between the values of bone pairs in each frame. Then, if the vel flag is true, the values of data for joints in the current frame are updated to reflect the difference between the values of joints in the next frame and the current frame. Finally, if the fast-motion flag is true the values of the data for joints are updated to the average of the values from the previous, current, and next frame.

4. Results

This section discusses implementation details and the experimental findings.

4.1. Implementation Details

We carried out our experiments using the PyTorch framework and executed them on a single NVIDIA A100 Tensor Core GPU. Our models were trained using Stochastic Gradient Descent (SGD) which has a momentum value of 0.9 and value of 0.0004 for weight decay. To improve the training process’s stability, a warming strategy was implemented during the initial five epochs as outlined in the study carried out by He et al. [

31]. Additionally, we trained our model with 65 epochs, and 0.1 for learning rate decreased by 0.1 at epochs 35 and 55. The resizing of each sample to 64 frames was conducted for both NTU RGB+D and NTU RGB+D 120 datasets. Additionally, the data pre-processing method described by Zhang et al. [

28] was employed.

4.2. Experimental results

The summary of the experimental findings of our GA-GCN model on the NTU RGB+D dataset with cross-subject and cross-view is shown in

Table 3. The summary of the experimental results of our GA-GCN model on the NTU RGB+D 120 dataset with cross-subject and cross-set is shown in

Table 4. The improvement after adding four more modalities and ensemble the results when conducting the experiment on NTU RGB+D is shown in

Table 2 using cross-view standard.

5. Discussion

This section presents the details of the carried out ablation studies to show the performance of the proposed spatiotemporal graph autoencoder convolutional network GA-GCN are described. Then the GA-GCN proposed in this study is compared with other cutting-edge methods based on evaluation using two datasets.

The efficacy of the GA-GCN was assessed using ST-GCN [

24] as the baseline method that falls under static topology shared graph convolution. To ensure a fair comparison, residual connections were incorporated into ST-GCN as the fundamental building units and using the module of temporal modeling as outlined in

Section 3.

5.1. Comparison of GA-GCN Modalities

Table 2 shows how the accuracy increased when the modalities’s results of joint, joint motion, bone, and bone motion were ensembled when compared to the accuracy of a single modality. Subsequently, four more modalities were added and it was noted that the accuracy further increased compared to the accuracy when just four modalities are used. The eight modalities are as follows: joint, joint motion, bone, bone motion, joint fast motion, joint motion fast motion, bone fast motion, and bone motion fast motion. The experiments’ findings indicate that the accuracy of our model increased by 1.0% when the results of four more modalities involving fast motion were ensembled compared with the accuracy obtained with the original four modalities.

5.2. Comparison with the State-of-the-Art

Multimodality fusion frameworks have been used by many state-of-the-art techniques. In order to ensure a fair comparison, the same structure as in [

25,

36] were used for comparison. In particular, data from eight different modalities: joint, joint motion, bone, bone motion, joint fast motion, joint motion fast motion, bone fast motion, and bone motion fast motion were combined for comparison purposes. In

Table 3 and

Table 4, the model developed in this study is compared to cutting-edge techniques evaluated based using the NTU RGB+D and NTU RGB+D 120 datasets, respectively. Our technique outperforms most of the existing state-of-the-art methods when evaluated based on two common datasets.

6. Conclusions and Future Work

In this work, we proposed a novel skeleton-based HAR algorithm, named GA-GCN. Our algorithm utilizes the power of the spatiotemporal graph autoencoder network in order to accomplish high accuracy. In comparison to other graph convolutions, GA-GCN has a greater representation capability. Moreover, we added four input modalities to enhance the performance even further; see

Section 4. The GA-GCN was evaluated on two common datasets NTU RGB+D and NTU RGB+D 120 and outperformed most of the existing state-of-the-art methods. Additional experiments on more datasets can be considered as potential future work. Furthermore, extra graph edges can be added between significant nodes for specific actions to improve HAR performance.

Author Contributions

Conceptualization, H.A. and A.E.; methodology, H.A., A.E., A.M. and F.A.; software, H.A.; validation, H.A.; formal analysis, H.A., A.E., A.M. and F.A.; investigation, H.A., A.E., A.M. and F.A.; resources, A.E, A.M. and F.A.; data curation, H.A.; writing—original draft preparation, H.A.; writing—review and editing, H.A., A.E., A.M. and F.A.; supervision, A.E., A.M. and F.A.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shahroudy, A.; Liu, J.; Ng, T.T.; Wang, G. NTU RGB+D: A Large Scale Dataset for 3D Human Activity Analysis. IEEE Conference on Computer Vision and Pattern Recognition, 2016.

- Liu, J.; Shahroudy, A.; Perez, M.; Wang, G.; Duan, L.Y.; Kot, A.C. NTU RGB+D 120: A Large-Scale Benchmark for 3D Human Activity Understanding. IEEE Transactions on Pattern Analysis and Machine Intelligence 2019. [Google Scholar] [CrossRef]

- Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Viola, F.; Green, T.; Back, T.; Natsev, P. ; others. The kinetics human action video dataset. arXiv preprint arXiv:1705.06950, 2017.

- Liu, J.; Shahroudy, A.; Wang, G.; Duan, L.Y.; Kot, A.C. Skeleton-based online action prediction using scale selection network. IEEE transactions on pattern analysis and machine intelligence 2019, 42, 1453–1467. [Google Scholar] [CrossRef] [PubMed]

- Johansson, G. Visual perception of biological motion and a model for its analysis. Perception & psychophysics 1973, 14, 201–211. [Google Scholar]

- Li, M.; Chen, S.; Chen, X.; Zhang, Y.; Wang, Y.; Tian, Q. Actional-structural graph convolutional networks for skeleton-based action recognition. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 3595–3603.

- Liu, J.; Shahroudy, A.; Xu, D.; Kot, A.C.; Wang, G. Skeleton-based action recognition using spatio-temporal LSTM network with trust gates. IEEE transactions on pattern analysis and machine intelligence 2017, 40, 3007–3021. [Google Scholar] [CrossRef] [PubMed]

- Liu, J.; Wang, G.; Duan, L.Y.; Abdiyeva, K.; Kot, A.C. Skeleton-based human action recognition with global context-aware attention LSTM networks. IEEE Transactions on Image Processing 2017, 27, 1586–1599. [Google Scholar] [CrossRef] [PubMed]

- Hamilton, W.; Ying, Z.; Leskovec, J. Inductive representation learning on large graphs. Advances in neural information processing systems 2017, 30. [Google Scholar]

- Kipf, T.N.; Welling, M. Variational graph auto-encoders. arXiv preprint arXiv:1611.07308, 2016.

- Chen, Y.; Zhang, Z.; Yuan, C.; Li, B.; Deng, Y.; Hu, W. Channel-wise topology refinement graph convolution for skeleton-based action recognition. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 13359–13368.

- Cai, Y.; Ge, L.; Liu, J.; Cai, J.; Cham, T.J.; Yuan, J.; Thalmann, N.M. Exploiting spatial-temporal relationships for 3d pose estimation via graph convolutional networks. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 2272–2281.

- Malik, J.; Elhayek, A.; Guha, S.; Ahmed, S.; Gillani, A.; Stricker, D. DeepAirSig: End-to-End Deep Learning Based in-Air Signature Verification. IEEE Access 2020, 8, 195832–195843. [Google Scholar] [CrossRef]

- Bruna, J.; Zaremba, W.; Szlam, A.; Lecun, Y. Spectral Networks and Locally Connected Networks on Graphs. International Conference on Learning Representations (ICLR); Apr 14–16; Banff, AB, Canada, 2014.

- Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. Advances in neural information processing systems 2016, 29. [Google Scholar]

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. International Conference on Learning Representations (ICLR), 2017.

- Duvenaud, D.K.; Maclaurin, D.; Iparraguirre, J.; Bombarell, R.; Hirzel, T.; Aspuru-Guzik, A.; Adams, R.P. Convolutional networks on graphs for learning molecular fingerprints. Advances in neural information processing systems 2015, 28. [Google Scholar]

- Niepert, M.; Ahmed, M.; Kutzkov, K. Learning convolutional neural networks for graphs. International conference on machine learning. PMLR, 2016, pp. 2014–2023.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. International Conference on Learning Representations (ICLR), 2018.

- Liu, Z.; Zhang, H.; Chen, Z.; Wang, Z.; Ouyang, W. Disentangling and unifying graph convolutions for skeleton-based action recognition. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 143–152.

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Two-stream adaptive graph convolutional networks for skeleton-based action recognition. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 12026–12035.

- Tang, Y.; Tian, Y.; Lu, J.; Li, P.; Zhou, J. Deep progressive reinforcement learning for skeleton-based action recognition. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 5323–5332.

- Veeriah, V.; Zhuang, N.; Qi, G.J. Differential recurrent neural networks for action recognition. Proceedings of the IEEE international conference on computer vision, 2015, pp. 4041–4049.

- Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. Thirty-second AAAI conference on artificial intelligence, 2018.

- Ye, F.; Pu, S.; Zhong, Q.; Li, C.; Xie, D.; Tang, H. Dynamic gcn: Context-enriched topology learning for skeleton-based action recognition. Proceedings of the 28th ACM International Conference on Multimedia, 2020, pp. 55–63.

- Zhao, R.; Wang, K.; Su, H.; Ji, Q. Bayesian graph convolution lstm for skeleton based action recognition. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 6882–6892.

- Huang, Z.; Shen, X.; Tian, X.; Li, H.; Huang, J.; Hua, X.S. Spatio-temporal inception graph convolutional networks for skeleton-based action recognition. Proceedings of the 28th ACM International Conference on Multimedia, 2020, pp. 2122–2130.

- Zhang, P.; Lan, C.; Zeng, W.; Xing, J.; Xue, J.; Zheng, N. Semantics-guided neural networks for efficient skeleton-based human action recognition. proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 1112–1121.

- Cheng, K.; Zhang, Y.; Cao, C.; Shi, L.; Cheng, J.; Lu, H. Decoupling gcn with dropgraph module for skeleton-based action recognition. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, –28, 2020, Proceedings, Part XXIV 16. Springer, 2020, pp. 536–553. 23 August.

- Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 11030–11039.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- Li, S.; Li, W.; Cook, C.; Zhu, C.; Gao, Y. Independently recurrent neural network (indrnn): Building a longer and deeper rnn. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 5457–5466.

- Li, C.; Zhong, Q.; Xie, D.; Pu, S. Co-occurrence feature learning from skeleton data for action recognition and detection with hierarchical aggregation. arXiv preprint arXiv:1804.06055, 2018.

- Si, C.; Chen, W.; Wang, W.; Wang, L.; Tan, T. An attention enhanced graph convolutional lstm network for skeleton-based action recognition. proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 1227–1236.

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Skeleton-based action recognition with directed graph neural networks. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 7912–7921.

- Cheng, K.; Zhang, Y.; He, X.; Chen, W.; Cheng, J.; Lu, H. Skeleton-based action recognition with shift graph convolutional network. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 183–192.

- Song, Y.F.; Zhang, Z.; Shan, C.; Wang, L. Stronger, faster and more explainable: A graph convolutional baseline for skeleton-based action recognition. proceedings of the 28th ACM international conference on multimedia, 2020, pp. 1625–1633.

- Korban, M.; Li, X. Ddgcn: A dynamic directed graph convolutional network for action recognition. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XX 16. Springer, 2020, pp. 761–776.

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Decoupled spatial-temporal attention network for skeleton-based action-gesture recognition. Proceedings of the Asian Conference on Computer Vision, 2020.

- Plizzari, C.; Cannici, M.; Matteucci, M. Skeleton-based action recognition via spatial and temporal transformer networks. Computer Vision and Image Understanding 2021, 208, 103219. [Google Scholar] [CrossRef]

- Chen, Z.; Li, S.; Yang, B.; Li, Q.; Liu, H. Multi-scale spatial temporal graph convolutional network for skeleton-based action recognition. Proceedings of the AAAI conference on artificial intelligence, 2021, pp. 1113–1122.

- Trivedi, N.; Sarvadevabhatla, R.K. PSUMNet: Unified Modality Part Streams are All You Need for Efficient Pose-based Action Recognition. Computer Vision–ECCV 2022 Workshops: Proceedings, Part V. Springer, 2023, pp. 211–227.

- Liu, J.; Shahroudy, A.; Xu, D.; Wang, G. Spatio-temporal lstm with trust gates for 3d human action recognition. Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part III 14. Springer, 2016, pp. 816–833.

- Ke, Q.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F. Learning clip representations for skeleton-based 3d action recognition. IEEE Transactions on Image Processing 2018, 27, 2842–2855. [Google Scholar] [CrossRef] [PubMed]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).