Submitted: 11 January 2024

Posted: 11 January 2024

Abstract

Keywords:

1. Introduction

2. Materials and Methods

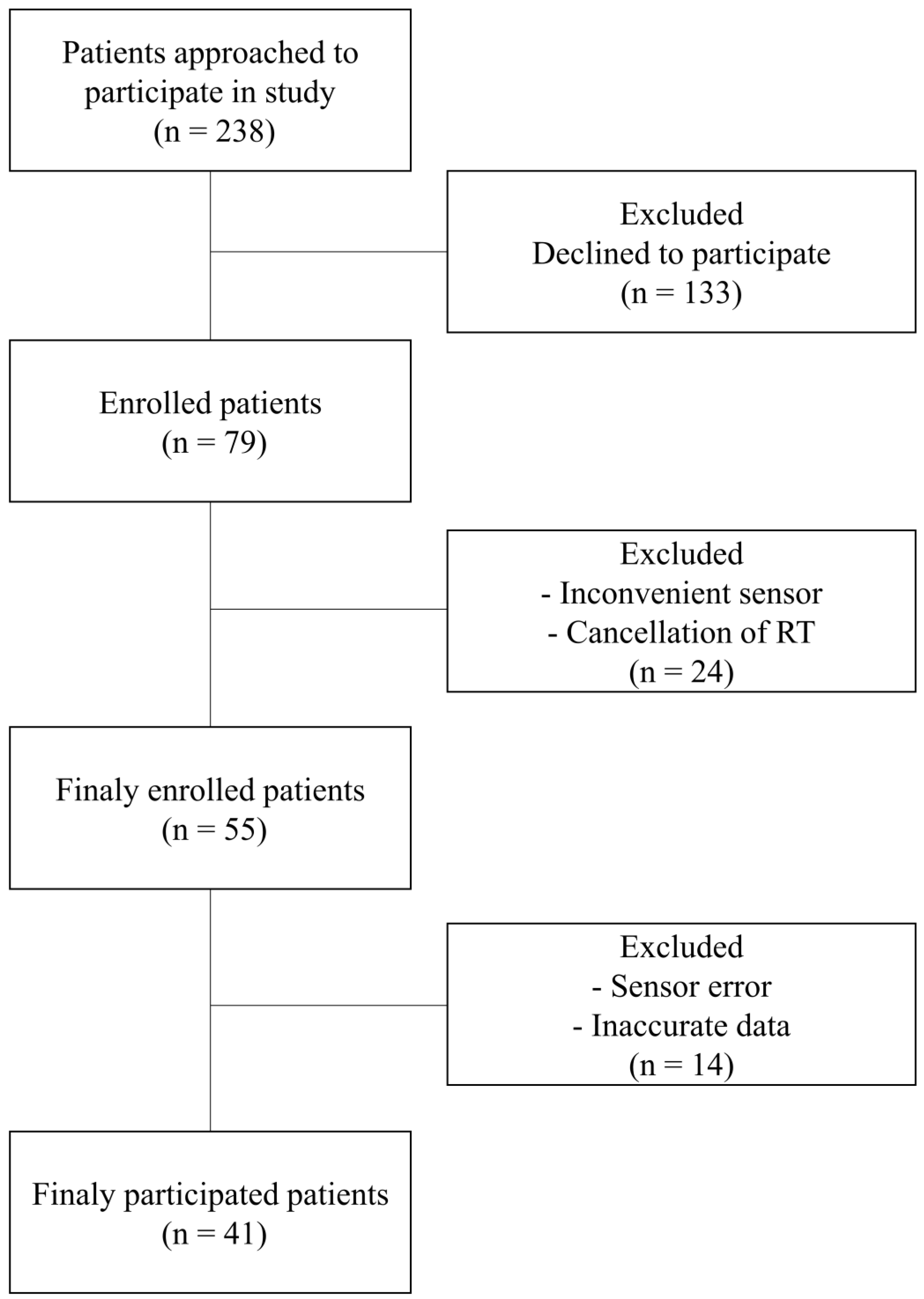

2.1. Patients

2.2. Data acquisition and processing

2.3. Stress features

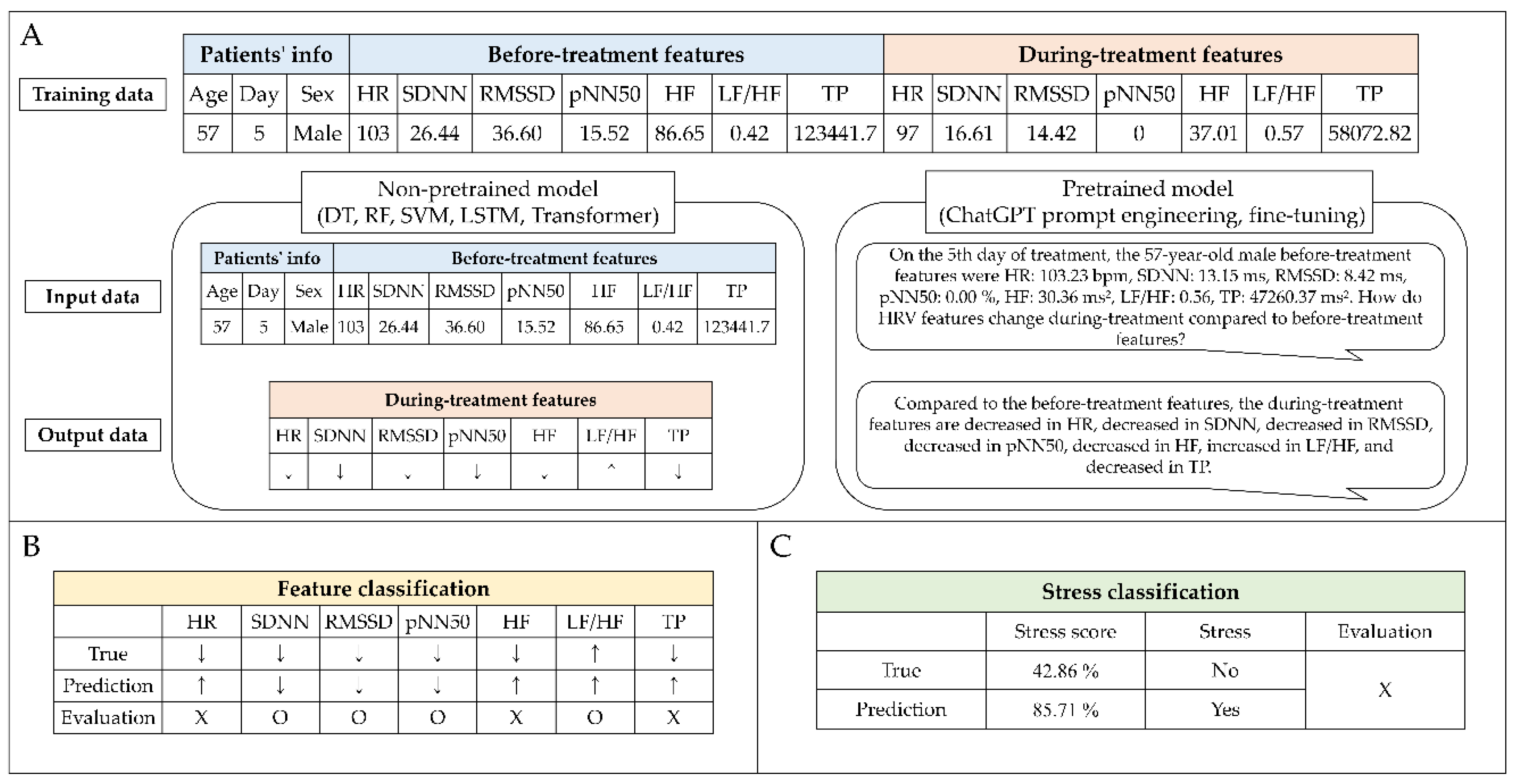

2.4. Stress prediction

2.5. Evaluation

3. Results

3.1. Patient characteristics

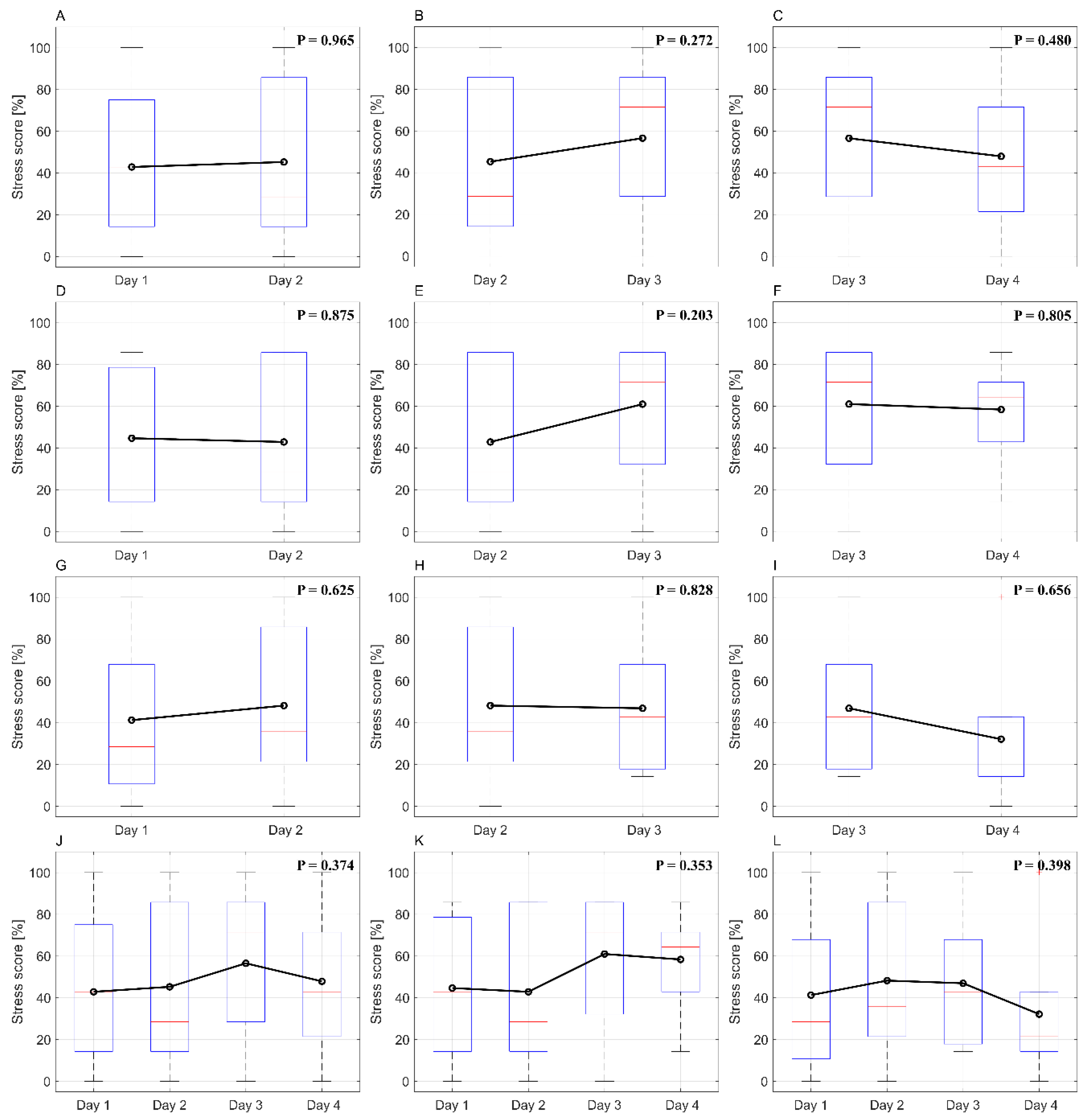

3.2. Stress score changes as treatment progresses

3.3. Non-pretrained model features classification

3.4. Pretrained model features classification

3.5. Stress classification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Feature | Unit | Description | Change under stress |
|---|---|---|---|
| HR | bpm | Average number of heartbeats per minute | Increase |
| SDNN | ms | Standard deviation of NN intervals | Decrease |
| RMSSD | ms | Square root of the mean of the squared differences between successive NN intervals | Decrease |
| pNN50 | % | Percentage of successive NN intervals differing by more than 50 ms | Decrease |
| HF | ms² | Power in the high-frequency range (0.15 – 0.4 Hz) | Decrease |
| LF/HF | - | Ratio of low-frequency to high-frequency power | Increase |
| TP | ms² | Total power in the frequency range (0.004 – 0.4 Hz) | Decrease |
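
The time-domain definitions in the feature table can be illustrated with a short sketch. It assumes the NN intervals are already available in milliseconds, estimates HR from the mean NN interval, and uses the sample standard deviation for SDNN; the function name `time_domain_hrv` and the example intervals are hypothetical and not taken from the study.

```python
import numpy as np

def time_domain_hrv(nn_ms):
    """Return HR, SDNN, RMSSD and pNN50 for a sequence of NN intervals in ms."""
    nn = np.asarray(nn_ms, dtype=float)
    diffs = np.diff(nn)  # differences between successive NN intervals
    return {
        # ASSUMPTION: HR is estimated from the mean NN interval (60000 ms per minute).
        "HR": 60000.0 / nn.mean(),
        # ASSUMPTION: sample standard deviation (ddof=1).
        "SDNN": nn.std(ddof=1),
        "RMSSD": np.sqrt(np.mean(diffs ** 2)),
        "pNN50": 100.0 * np.mean(np.abs(diffs) > 50.0),
    }

# Hypothetical NN intervals (ms), for illustration only.
features = time_domain_hrv([800, 810, 790, 860, 800])
```

The frequency-domain features (HF, LF/HF, TP) additionally require a power spectral density estimate of the NN series and are left out of this sketch.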

| Characteristic | N | (%) |
|---|---|---|
| All patients | 41 | (100) |
| Sex | | |
| Male | 27 | (65.85) |
| Female | 14 | (34.15) |
| Age | | |
| All | Mean, 67.15 | (Range, 47 – 80) |
| Male | Mean, 66.56 | (Range, 47 – 80) |
| Female | Mean, 68.29 | (Range, 57 – 80) |
| Stress case | | |
| All | 123 | (100) |
| Male | 81 | (65.85) |
| Female | 42 | (34.15) |
| Stress score | | |
| 0 % | 12 | (9.76) |
| 14.29 % | 18 | (14.63) |
| 28.57 % | 18 | (14.63) |
| 42.86 % | 17 | (13.82) |
| 57.14 % | 6 | (4.88) |
| 71.43 % | 17 | (13.82) |
| 85.71 % | 26 | (21.14) |
| 100 % | 9 | (7.32) |
| Stress case day | | |
| 1 | 17 | (13.82) |
| 2 | 18 | (14.63) |
| 3 | 22 | (17.89) |
| 4 | 20 | (16.26) |
| 5 – 14 | 46 | (37.40) |
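
The eight stress-score levels in the table are the multiples of 1/7 expressed as percentages. That would be consistent with a score that counts how many of the seven HRV features moved in the stressful direction, although this section does not state how the score is computed. The sketch below follows that reading as an explicit assumption; `stress_score` and the example observation are hypothetical.

```python
# Direction each feature moves under stress, as listed in the feature table.
STRESS_DIRECTION = {
    "HR": "increase", "SDNN": "decrease", "RMSSD": "decrease", "pNN50": "decrease",
    "HF": "decrease", "LF/HF": "increase", "TP": "decrease",
}

def stress_score(observed):
    """ASSUMPTION: score = percentage of the seven features that moved in the stressful direction."""
    hits = sum(observed[name] == direction for name, direction in STRESS_DIRECTION.items())
    return round(100.0 * hits / len(STRESS_DIRECTION), 2)

# The eight possible levels under this assumption, matching the table rows.
levels = [round(100.0 * k / 7, 2) for k in range(8)]

# Hypothetical observation: only HR and SDNN moved in the stressful direction.
example = {"HR": "increase", "SDNN": "decrease", "RMSSD": "increase", "pNN50": "increase",
           "HF": "increase", "LF/HF": "decrease", "TP": "increase"}
example_score = stress_score(example)
```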

| | Day 1 | Day 2 | Day 3 | Day 4 |
|---|---|---|---|---|
| Male | 44.69 ± 33.70 % | 42.90 ± 35.67 % | 61.01 ± 29.83 % | 58.39 ± 22.37 % |
| Female | 41.31 ± 39.45 % | 48.26 ± 37.40 % | 46.99 ± 31.67 % | 32.17 ± 31.28 % |
| P-value | 0.8707 | 0.6691 | 0.2978 | 0.0384 |

| Dataset | Model | EMR | Accuracy | Recall | Precision | F1 score |
|---|---|---|---|---|---|---|
| Type 1 | DT | 0.147 | 0.638 | 0.638 | 0.683 | 0.639 |
| | RF | 0.163 | 0.646 | 0.654 | 0.645 | 0.625 |
| | SVM | 0.108 | 0.599 | 0.593 | 0.671 | 0.606 |
| | LSTM | 0.115 | 0.669 | 0.665 | 0.487 | 0.539 |
| | Transformer | 0.138 | 0.628 | 0.528 | 0.390 | 0.412 |
| Type 2 | DT | 0.123 | 0.637 | 0.643 | 0.669 | 0.632 |
| | RF | 0.165 | 0.645 | 0.673 | 0.616 | 0.615 |
| | SVM | 0.074 | 0.580 | 0.577 | 0.677 | 0.599 |
| | LSTM | 0.156 | 0.679 | 0.764 | 0.537 | 0.598 |
| | Transformer | 0.113 | 0.631 | 0.571 | 0.347 | 0.394 |
| Type 3 | DT | 0.148 | 0.618 | 0.609 | 0.677 | 0.620 |
| | RF | 0.165 | 0.645 | 0.656 | 0.612 | 0.616 |
| | SVM | 0.106 | 0.571 | 0.559 | 0.649 | 0.576 |
| | LSTM | 0.156 | 0.689 | 0.700 | 0.501 | 0.567 |
| | Transformer | 0.131 | 0.617 | 0.456 | 0.287 | 0.323 |
| Type 4 | DT | 0.115 | 0.611 | 0.617 | 0.665 | 0.615 |
| | RF | 0.164 | 0.656 | 0.673 | 0.640 | 0.635 |
| | SVM | 0.083 | 0.573 | 0.575 | 0.624 | 0.572 |
| | LSTM | 0.132 | 0.662 | 0.719 | 0.499 | 0.565 |
| | Transformer | 0.122 | 0.624 | 0.491 | 0.323 | 0.362 |
| Type 5 | DT | 0.106 | 0.644 | 0.643 | 0.663 | 0.632 |
| | RF | 0.147 | 0.653 | 0.670 | 0.641 | 0.632 |
| | SVM | 0.074 | 0.557 | 0.542 | 0.631 | 0.560 |
| | LSTM | 0.124 | 0.678 | 0.698 | 0.496 | 0.559 |
| | Transformer | 0.130 | 0.610 | 0.461 | 0.373 | 0.394 |
| Type 6 | DT | 0.107 | 0.620 | 0.611 | 0.679 | 0.621 |
| | RF | 0.147 | 0.649 | 0.673 | 0.604 | 0.612 |
| | SVM | 0.091 | 0.566 | 0.566 | 0.644 | 0.573 |
| | LSTM | 0.115 | 0.689 | 0.793 | 0.517 | 0.604 |
| | Transformer | 0.114 | 0.609 | 0.486 | 0.319 | 0.361 |
| Type 7 | DT | 0.140 | 0.615 | 0.614 | 0.661 | 0.614 |
| | RF | 0.164 | 0.651 | 0.675 | 0.640 | 0.631 |
| | SVM | 0.090 | 0.573 | 0.574 | 0.645 | 0.578 |
| | LSTM | 0.139 | 0.699 | 0.776 | 0.549 | 0.615 |
| | Transformer | 0.131 | 0.611 | 0.370 | 0.348 | 0.336 |
| Type 8 | DT | 0.100 | 0.621 | 0.612 | 0.662 | 0.614 |
| | RF | 0.163 | 0.641 | 0.656 | 0.612 | 0.609 |
| | SVM | 0.082 | 0.554 | 0.546 | 0.627 | 0.558 |
| | LSTM | 0.172 | 0.680 | 0.708 | 0.487 | 0.551 |
| | Transformer | 0.073 | 0.611 | 0.404 | 0.355 | 0.344 |
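
The EMR column is not defined in this section. Assuming it denotes the exact match ratio used in multi-label classification (the fraction of samples for which every label is predicted correctly), it can be computed alongside the element-wise metrics as sketched below. The micro-averaging of precision, recall and F1 is an assumption made here, since the averaging scheme is not stated, and `multilabel_metrics` and the toy labels are hypothetical.

```python
import numpy as np

def multilabel_metrics(y_true, y_pred):
    """Exact match ratio plus micro-averaged metrics for binary label matrices."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = int(np.sum(y_true & y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))
    # ASSUMPTION: micro-averaging over all labels; zero when a denominator is empty.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        # A sample counts as a match only if all of its labels are correct.
        "EMR": float(np.mean(np.all(y_true == y_pred, axis=1))),
        # Element-wise accuracy over every (sample, label) pair.
        "accuracy": float(np.mean(y_true == y_pred)),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Toy labels (3 samples x 3 labels), for illustration only.
metrics = multilabel_metrics(
    [[1, 0, 1], [0, 1, 0], [1, 1, 0]],
    [[1, 0, 1], [0, 0, 0], [1, 1, 1]],
)
```

Under this definition a single wrong label makes the whole sample a miss, which is why EMR can stay low even when element-wise accuracy is well above 0.5.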

| Dataset | Model | EMR | Accuracy | Recall | Precision | F1 score |
|---|---|---|---|---|---|---|
| Type 6 | DT | 0.000 | 0.637 | 0.727 | 0.635 | 0.672 |
| | RF | 0.077 | 0.637 | 0.777 | 0.611 | 0.669 |
| | SVM | 0.077 | 0.670 | 0.799 | 0.646 | 0.701 |
| | LSTM | 0.154 | 0.681 | 0.895 | 0.552 | 0.655 |
| | Transformer | 0.077 | 0.505 | 0.552 | 0.332 | 0.360 |
| | GPT3.5 (P) | 0.077 | 0.440 | 0.610 | 0.330 | 0.397 |
| | GPT4.0 (P) | 0.000 | 0.615 | 0.746 | 0.665 | 0.674 |
| | GPT3.5-turbo-1106 (F) | 0.154 | 0.527 | 0.726 | 0.412 | 0.503 |
| Type 7 | DT | 0.077 | 0.582 | 0.688 | 0.593 | 0.632 |
| | RF | 0.154 | 0.626 | 0.768 | 0.585 | 0.649 |
| | SVM | 0.154 | 0.637 | 0.783 | 0.581 | 0.646 |
| | LSTM | 0.154 | 0.703 | 0.907 | 0.587 | 0.707 |
| | Transformer | 0.000 | 0.560 | 0.453 | 0.563 | 0.492 |
| | GPT3.5 (P) | 0.077 | 0.396 | 0.538 | 0.358 | 0.416 |
| | GPT4.0 (P) | 0.077 | 0.560 | 0.667 | 0.629 | 0.638 |
| | GPT3.5-turbo-1106 (F) | 0.000 | 0.484 | 0.593 | 0.512 | 0.538 |
| Type 8 | DT | 0.000 | 0.637 | 0.727 | 0.635 | 0.672 |
| | RF | 0.154 | 0.626 | 0.768 | 0.585 | 0.649 |
| | SVM | 0.077 | 0.648 | 0.780 | 0.599 | 0.660 |
| | LSTM | 0.231 | 0.692 | 0.781 | 0.635 | 0.685 |
| | Transformer | 0.000 | 0.560 | 0.375 | 0.571 | 0.451 |
| | GPT3.5 (P) | 0.077 | 0.407 | 0.369 | 0.278 | 0.246 |
| | GPT4.0 (P) | 0.231 | 0.659 | 0.742 | 0.731 | 0.723 |
| | GPT3.5-turbo-1106 (F) | 0.077 | 0.615 | 0.714 | 0.613 | 0.646 |

| Dataset | Model | Accuracy | Recall | Precision | F1 score |
|---|---|---|---|---|---|
| Type 6 | DT | 0.615 | 0.375 | 0.429 | 0.400 |
| | RF | 0.769 | 0.400 | 0.571 | 0.471 |
| | SVM | 0.769 | 0.400 | 0.571 | 0.471 |
| | LSTM | 0.692 | 0.444 | 0.500 | 0.471 |
| | Transformer | 0.538 | 0.571 | 0.400 | 0.471 |
| | GPT3.5 (P) | 0.385 | 0.600 | 0.300 | 0.400 |
| | GPT4.0 (P) | 0.615 | 0.500 | 0.444 | 0.471 |
| | GPT3.5-turbo-1106 (F) | 0.615 | 0.375 | 0.429 | 0.400 |
| Type 7 | DT | 0.615 | 0.250 | 0.400 | 0.308 |
| | RF | 0.769 | 0.400 | 0.571 | 0.471 |
| | SVM | 0.769 | 0.400 | 0.571 | 0.471 |
| | LSTM | 0.846 | 0.364 | 0.667 | 0.471 |
| | Transformer | 0.538 | 0.571 | 0.400 | 0.471 |
| | GPT3.5 (P) | 0.462 | 0.500 | 0.333 | 0.400 |
| | GPT4.0 (P) | 0.385 | 0.200 | 0.167 | 0.182 |
| | GPT3.5-turbo-1106 (F) | 0.462 | 0.167 | 0.200 | 0.182 |
| Type 8 | DT | 0.692 | 0.333 | 0.500 | 0.400 |
| | RF | 0.769 | 0.400 | 0.571 | 0.471 |
| | SVM | 0.769 | 0.400 | 0.571 | 0.471 |
| | LSTM | 0.769 | 0.400 | 0.571 | 0.471 |
| | Transformer | 0.692 | 0.000 | 0.000 | 0.000 |
| | GPT3.5 (P) | 0.385 | 0.800 | 0.333 | 0.471 |
| | GPT4.0 (P) | 0.769 | 0.300 | 0.600 | 0.400 |
| | GPT3.5-turbo-1106 (F) | 0.615 | 0.250 | 0.400 | 0.308 |
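
The F1 scores in the stress classification table can be checked against the reported precision and recall, since F1 is their harmonic mean. As a worked example, the Type 6 DT row (precision P = 0.429, recall R = 0.375) gives:

$$
F_1 = \frac{2PR}{P + R} = \frac{2 \times 0.429 \times 0.375}{0.429 + 0.375} = \frac{0.32175}{0.804} \approx 0.400
$$

This agrees with the tabulated value of 0.400 for that row.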

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).