1. Introduction

Energy harvesting is the process of capturing otherwise wasted energy from ambient sources in the surrounding environment and using it for recharging purposes. Over the past few years, there has been growing demand for the deployment of wireless sensors in many engineering applications, including the Internet of Things [1,2]. Because such devices are connected wirelessly, the sensors need to be self-powered [3]. In principle, the design of electromagnetic energy harvesting systems can follow one of three approaches: analytical/empirical formulations, full-wave numerical simulations, or experience-guided trial and error. Unfortunately, all three approaches can be time-consuming and computationally expensive when searching for an optimal design. It is therefore important to consider more efficient alternatives that aid the design process, among which is the integration of intelligent algorithms into the design workflow.

In the last two decades, metamaterials and their 2D counterparts, metasurfaces, have attracted considerable attention from academia and industry due to their unique properties, including unusual constitutive-parameter responses that are not realizable in most natural materials, and their potential applications. Such properties are realized by subwavelength resonant engineered inclusions assembled periodically or aperiodically in a host medium. The recent evolution of metasurfaces and metamaterials marks a transformative shift, driven primarily by the demand for advanced capabilities in the optical and electromagnetic domains [4]. These engineered materials have the potential to revolutionize diverse applications, offering miniaturized optics, unparalleled control over wavefronts, and tailored wave manipulation. Such advances have begun to reshape communication, sensing, and optical technologies [5,6,7]. However, the path to these innovations has been fraught with challenges: designing and synthesizing such materials requires extensive simulations, substantial computational resources, and iterative design procedures [8,9,10,11,12,13].

Various deep learning models have also been used in many engineering applications, including the design of microwave and photonic structures. The multilayer perceptron (MLP) is one such model, used especially for feature extraction in large-data problems such as image processing [14]. With the advancement of machine learning algorithms, a bidirectional MLP was adopted to design photonic structures even at the nanoscale [15]. Although MLPs can be applied to inverse photonic design problems, they remain limited, especially for problems that involve a challenging parameterization process. Convolutional neural networks (CNNs) and transposed CNNs are therefore powerful tools for extracting image features and compressing large image data, such as an image of a photonic structure, into low-dimensional feature vectors [16]. The image of the structure is first pixelated and then convolved with a feature-extraction matrix (filter) to form a feature map. A pooling operation (maximum, sum, or average) is then applied to the feature map to produce a pooled feature map. Finally, the pooled feature map is flattened and serves as the input to a deep neural network, as illustrated in [17]. A CNN was used in [18] to design an anisotropic digital coding metasurface, in which a unit cell with a metallic layer is coded as '1' and one without a metallic layer is coded as '0'; such coding can be used to design a metallic reflector. In the same study, the machine learning method achieved a high level of precision compared with the numerical analysis method. In [19], a model was trained to learn the relationship between the geometry of metamaterials and their absorption properties, demonstrating the effectiveness of machine learning in the design of metamaterials for high-temperature applications. Together, these studies show the power of machine learning algorithms to reduce the time required to design a metamaterial or metasurface structure for a desired application.
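The convolution, pooling, and flattening steps described above can be sketched in plain Python. This is a minimal illustration, not the model of [16], [17], or [18]: the 5 × 5 binary "pixelated unit cell" (1 = metal, 0 = no metal, echoing the digital coding idea) and the 2 × 2 filter are made-up examples. As in most deep learning libraries, the "convolution" is computed as a cross-correlation (the filter is not flipped), with no padding and unit stride.

```python
"""Minimal sketch of CNN feature extraction: convolve, pool, flatten.

The image and filter are hypothetical examples, not data from the study.
"""


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


def pool2d(feature_map, size=2, mode="max"):
    """Non-overlapping pooling with a size x size window (max, sum, or average)."""
    reducers = {"max": max, "sum": sum, "average": lambda v: sum(v) / len(v)}
    reduce_window = reducers[mode]
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [reduce_window([feature_map[i + a][j + b] for a in range(size) for b in range(size)])
         for j in range(0, cols - size + 1, size)]
        for i in range(0, rows - size + 1, size)
    ]


def flatten(feature_map):
    """Row-major flattening into the 1-D vector fed to the dense layers."""
    return [value for row in feature_map for value in row]


# Hypothetical 5x5 pixelated unit cell: a metal ring (1) around a metal patch.
image = [
    [1, 1, 1, 1, 1],
    [1, 0, 0, 0, 1],
    [1, 0, 1, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 1, 1, 1, 1],
]
kernel = [[1, 1], [1, 1]]  # counts metal pixels in each 2x2 window

feature_map = conv2d_valid(image, kernel)   # 4x4 feature map
pooled = pool2d(feature_map, mode="max")    # 2x2 pooled feature map
features = flatten(pooled)                  # length-4 feature vector
print(features)  # [3, 3, 3, 3]
```

In a real CNN the filter weights are learned during training rather than fixed by hand, and many filters are applied in parallel to produce several feature maps.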

Different optimization techniques have been employed to design metasurfaces, and their impact on performance has been studied [18,20,21,22,23,24]. State-of-the-art multi-objective optimization algorithms have been used to realize metasurfaces that meet multiple design goals and achieve high performance in terms of reflection, transmission, polarization, angular, and frequency-dependent properties [25]. Custom multi-objective and surrogate-assisted optimization algorithms have been employed to explore the solution space and design metasurface topologies that satisfy an arbitrary number of user-specified performance criteria [26]. Statistical learning optimization has been used to obtain highly efficient and robust metasurface designs, including single- and multi-functional devices, while also accounting for manufacturing imperfections [27]. The choice of optimizer affects convergence and the efficiency of finding the optimum, with gradient-based optimizers generally requiring fewer function evaluations than population-based methods [28].

In light of this background, and to alleviate some of the challenges in the design of metasurface absorbers by extending the work in [29], the main contributions of this research are as follows:

Optimal Dataset Size Determination: we thoroughly investigated the dataset size required to attain an acceptable level of accuracy in deep neural network (DNN) models for metasurface design. Our numerical experiments reveal that a dataset of 4000 samples is adequate to establish a robust DNN model for the rapid design and synthesis of metasurface absorbers with an accuracy above 90%.

Sparse Data Handling with Cascaded DNN: we addressed the challenge of handling datasets with a high prevalence of sparse data and examined the effectiveness of cascaded DNN models in refining prediction values. Our findings indicate that, while cascaded DNNs are effective, careful tuning of the optimizer hyperparameters is essential to mitigate numerical instability. Furthermore, we found that a two-layer cascaded neural network is sufficient to achieve the desired accuracy in the design of multi-resonant metasurface absorbers. The impact of two other data sorting and selection techniques, namely ascending data sorting and the bootstrap method, was also investigated and compared with the proposed adaptive descending data sorting method.

Dataset Arrangement Impact Analysis: we conducted a systematic investigation of the impact of different dataset arrangements on prediction accuracy, a question that, to the best of our knowledge, has not been thoroughly explored. Our study demonstrates that there is relatively limited influence on prediction accuracy when datasets are randomly organized or arranged using an alternative method, which we refer to here as the Adaptive Cascaded DL (ACDL) model. This approach aggregates the response values of each case and then arranges the cases in descending order, contributing to the understanding of dataset arrangement strategies for AI-driven metasurface design.
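The text does not specify exactly how the cascaded DNN layers are wired. One plausible arrangement, assumed here purely for illustration, feeds each stage the design vector concatenated with the previous stage's predicted response, so that later stages can refine it. The sketch below shows only that forward data flow; the `stages` are placeholder callables standing in for trained networks (for example, a wrapper around a trained model's predict method), and the toy stages in the usage example are hypothetical.

```python
from typing import Callable, List, Sequence

# A stage maps a flat feature vector to a predicted response vector.
Stage = Callable[[List[float]], List[float]]


def cascade_forward(stages: Sequence[Stage], design: Sequence[float]) -> List[List[float]]:
    """Run a cascade where stage k receives [design parameters + stage k-1 prediction].

    Returns the prediction of every stage so that each layer's output can be
    compared with the full-wave reference response.
    """
    predictions: List[List[float]] = []
    previous: List[float] = []            # the first stage sees only the design vector
    for stage in stages:
        features = list(design) + previous
        previous = stage(features)
        predictions.append(previous)
    return predictions


# Hypothetical toy stages standing in for trained DNNs (3-point "responses").
def coarse_stage(x: List[float]) -> List[float]:
    return [x[0], x[0], x[0]]             # crude guess from the first design value


def refine_stage(x: List[float]) -> List[float]:
    design, prev = x[:2], x[2:]           # assumes a 2-parameter design vector
    return [0.5 * p + 0.5 * design[1] for p in prev]


outputs = cascade_forward([coarse_stage, refine_stage], [1.0, 3.0])
print(outputs)  # [[1.0, 1.0, 1.0], [2.0, 2.0, 2.0]]
```

Returning every stage's output, rather than only the last one, makes it easy to compare the DNN1, DNN2, and DNN3 predictions against the simulated response, as is done layer by layer in the results.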

The remainder of this paper is organized as follows. Section 2 outlines the contribution of this work through the proposed adaptive cascaded deep learning model. Section 3 presents a rotational concentric split-ring resonator (R-SRR) unit cell as the main building block of the electromagnetic metasurface absorber; the dataset is then generated through full-wave electromagnetic simulations. The results are presented and discussed in Section 4. Finally, Section 5 concludes the paper with a summary of the findings.

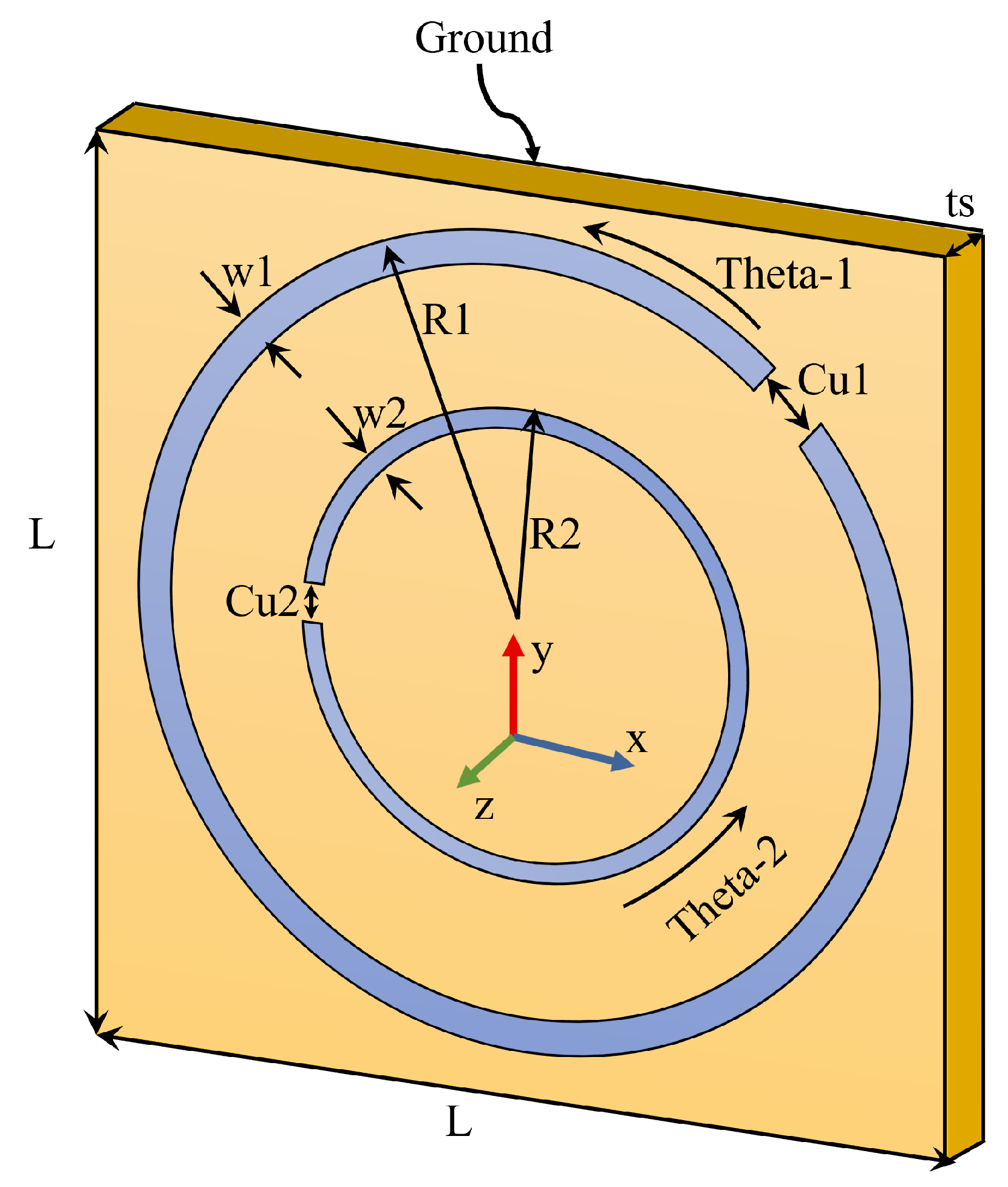

3. Metasurface Absorber Structure Model

Figure 4 shows the proposed metasurface unit cell, which uses two split-ring resonators (SRRs) as part of the absorbing structure. It consists of two edge-coupled concentric circular metallic rings with two gap cuts (slits). The square-shaped unit cell is mounted on a Rogers substrate (εr = 2.2, tan δ = 0.0009) with a thickness of 1.57 mm and is backed by a metallic ground layer. The metallic parts of the structure are made of copper. Importantly, the proposed metasurface structure differs from classical edge-coupled SRRs because the two opposite cuts depend on their angular positions. In other words, the two concentric rings are rotational: the location of each cut is set by an angle. The resulting unit cell is therefore an asymmetric SRR structure, which, to the best of our knowledge, has not been explored in deep learning studies of such metasurface absorbers.

In the 3D numerical model, periodic (unit cell) boundary conditions are enforced in the x and y directions, while open-space boundary conditions are applied to the boundaries along the z direction. To excite the metasurface absorber, Floquet ports are assigned at the two walls normal to the z axis (±z).

This metasurface structure was used to generate the training and testing dataset through a set of simulation tasks covering the parameters of interest. The design variables are: (a) the unit cell period, L, in the x and y directions; (b) the outer and inner radii of the inner and outer rings, respectively; (c) the widths of the two rings; (d) the sizes of the two slits; and (e) the angular positions of the two slits. Thus, a total of 10 design variables are considered in this research to generate 4000 random samples of the reflection coefficient and absorbance responses, each with 1001 data points, which are then used as the input to the DL model. In a second case study, the dataset was enlarged to 7000 samples by additionally varying the lattice size, L, in order to assess the effect of a larger dataset on the precision of the DL model's predictions.
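The dataset-generation step above amounts to drawing random design vectors and simulating each one. A minimal sketch of the sampling half follows, assuming independent uniform sampling of each parameter. The parameter names (`r1`, `w1`, `phi1`, and so on) and all bounds are hypothetical placeholders, since the ranges used in the study are not stated. Each sampled design would then be passed to the full-wave solver to obtain its 1001-point reflection coefficient and absorbance responses, which is outside the scope of this sketch.

```python
import random

# Hypothetical names and bounds (lengths in mm, angles in degrees); NOT from the study.
BOUNDS = {
    "L": (30.0, 34.0),      # unit-cell period
    "r1": (12.0, 14.0),     # outer-ring radius
    "r2": (6.0, 9.5),       # inner-ring radius
    "w1": (0.1, 1.0),       # outer-ring width
    "w2": (0.1, 1.0),       # inner-ring width
    "g1": (0.5, 2.0),       # slit size, outer ring
    "g2": (0.5, 2.0),       # slit size, inner ring
    "phi1": (0.0, 360.0),   # angular position of the outer-ring slit
    "phi2": (0.0, 360.0),   # angular position of the inner-ring slit
}


def sample_designs(bounds, n_samples, seed=0):
    """Draw n_samples design vectors, each parameter uniform within its bounds."""
    rng = random.Random(seed)  # fixed seed -> reproducible dataset
    return [
        {name: rng.uniform(low, high) for name, (low, high) in bounds.items()}
        for _ in range(n_samples)
    ]


designs = sample_designs(BOUNDS, 4000)
print(len(designs))  # 4000
```

A production generator would typically also reject geometrically invalid combinations (for example, overlapping rings) before launching the simulations; the sketch does not do this.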

4. Results and Discussions

From the design perspective of metasurface absorbers, two responses are required in the dataset generation: the reflection coefficient, $S_{11}$, and the absorbance, $A$. The absorbance is expressed as

$$A = 1 - |S_{11}|^2 - |S_{21}|^2,$$

where $S_{21}$ is the transmission coefficient and $A$ is the absorbance of the metasurface absorber. Since the proposed absorbing structure is backed by a metallic ground layer, no power is transmitted ($S_{21} = 0$), so $A = 1 - |S_{11}|^2$. Thus, there is a direct relationship between $S_{11}$ and the absorbance response.
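As a quick numerical check of this relation, the helper below converts a reflection coefficient given in dB (20 log10|S11|, so |S11|² = 10^(S11_dB/10)) to absorbance, taking S21 = 0 for the metal-backed structure unless a transmission value is supplied. The function names are illustrative. The second helper flags non-physical values (A < 0 or A > 1), which arise, for example, when a predicted S11 exceeds 0 dB.

```python
import math


def absorbance_from_s11_db(s11_db, s21_db=None):
    """Absorbance A = 1 - |S11|^2 - |S21|^2 from scattering parameters in dB.

    For a metal-backed absorber S21 is taken as zero (s21_db=None).
    """
    reflected = 10 ** (s11_db / 10)                                  # |S11|^2
    transmitted = 0.0 if s21_db is None else 10 ** (s21_db / 10)     # |S21|^2
    return 1.0 - reflected - transmitted


def is_physical(absorbance, tol=1e-9):
    """A passive absorber must satisfy 0 <= A <= 1."""
    return -tol <= absorbance <= 1.0 + tol


print(absorbance_from_s11_db(-10.0))  # about 0.9 (90% of the power absorbed)
print(is_physical(absorbance_from_s11_db(0.5)))  # False: S11 above 0 dB
```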

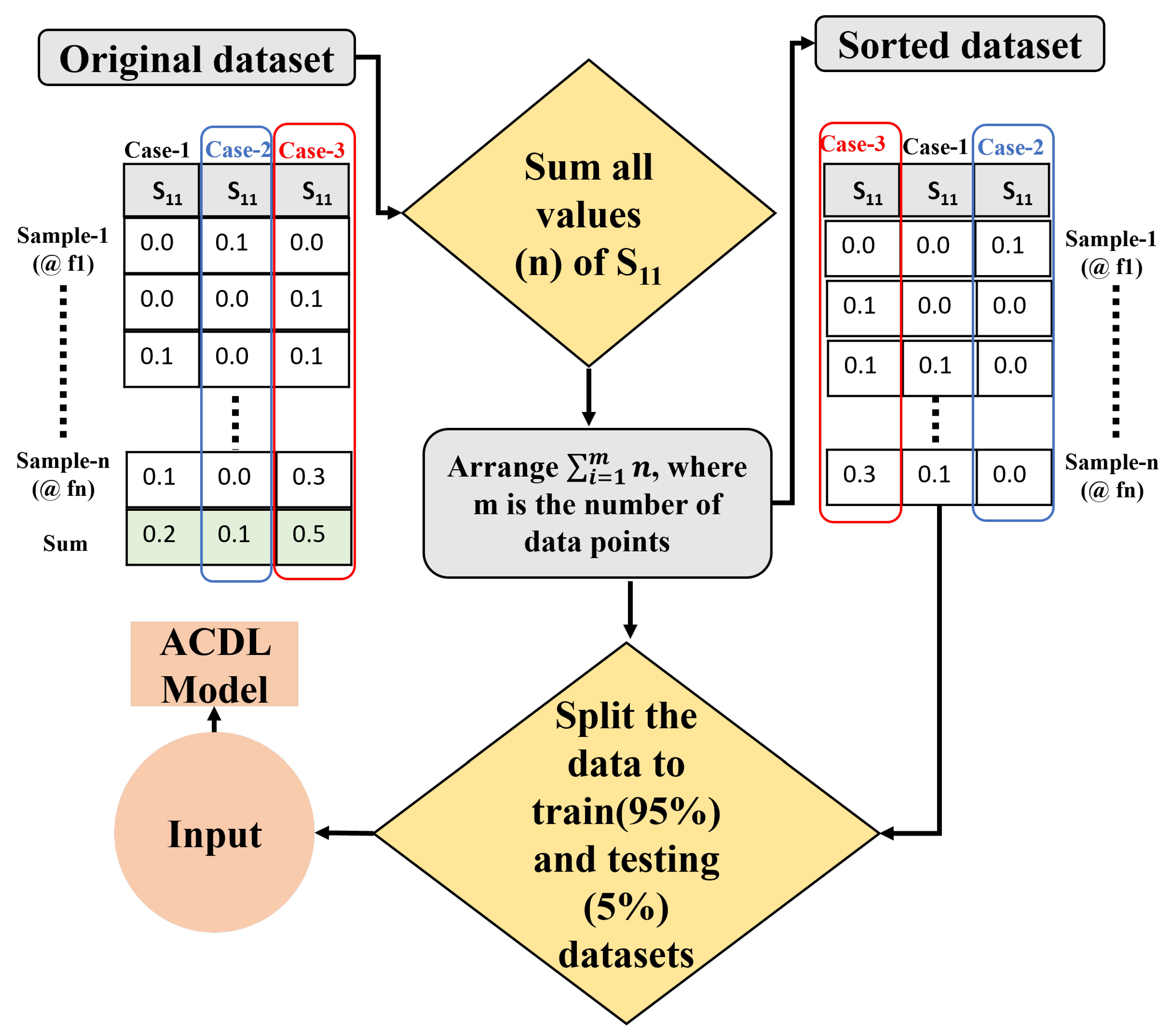

In this section, we present the results from the trained ACDL model. As a baseline, the initial dataset was arranged in uniformly random order. To partition this dataset into training and testing subsets, we employ the Keras split function with a predefined ratio of 95% for training and 5% for testing. This baseline shows the effect of random dataset organization on model performance. The second dataset follows our proposed adaptive data splitting mechanism, which is tailored to the specific challenges posed by sparse datasets. Within this framework, we aggregate the scattering-parameter response values of each distinct geometrical design and arrange the designs in descending order of response strength, as illustrated in Figure 5.
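A minimal sketch of the adaptive sorting step follows, based on the Figure 5 note that cases are ranked by the sum of all sample values of each case. How the sorted cases are then divided into training and testing subsets is not spelled out, so the sketch assumes the last 5% of the sorted list is held out. This mirrors how Keras `Model.fit(validation_split=...)` takes its validation data from the end of the arrays before shuffling. The function names and the `(design, response)` pair layout are hypothetical; `descending=False` gives the ascending-order variant, and `random_split` is the shuffled baseline.

```python
import random


def sort_and_split(cases, val_fraction=0.05, descending=True):
    """Rank (design, response) cases by total response, then split contiguously.

    Assumption: the last `val_fraction` of the ranked list is held out for
    validation, mirroring the behaviour of Keras' `validation_split`.
    """
    ranked = sorted(cases, key=lambda case: sum(case[1]), reverse=descending)
    n_val = round(len(ranked) * val_fraction)
    n_train = len(ranked) - n_val
    return ranked[:n_train], ranked[n_train:]


def random_split(cases, val_fraction=0.05, seed=0):
    """Baseline: shuffle the cases uniformly at random, then split contiguously."""
    shuffled = list(cases)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) - round(len(shuffled) * val_fraction)
    return shuffled[:n_train], shuffled[n_train:]


# Toy usage: four cases with 3-point responses (real responses have 1001 points).
cases = [("a", [0.1, 0.2, 0.1]), ("b", [0.9, 0.8, 0.7]),
         ("c", [0.5, 0.4, 0.6]), ("d", [0.2, 0.3, 0.2])]
train, val = sort_and_split(cases, val_fraction=0.25)
print([name for name, _ in train], [name for name, _ in val])  # ['b', 'c', 'd'] ['a']
```

Under this assumption the descending arrangement places the weakest-response cases in the held-out set, whereas the ascending arrangement places the strongest ones there.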

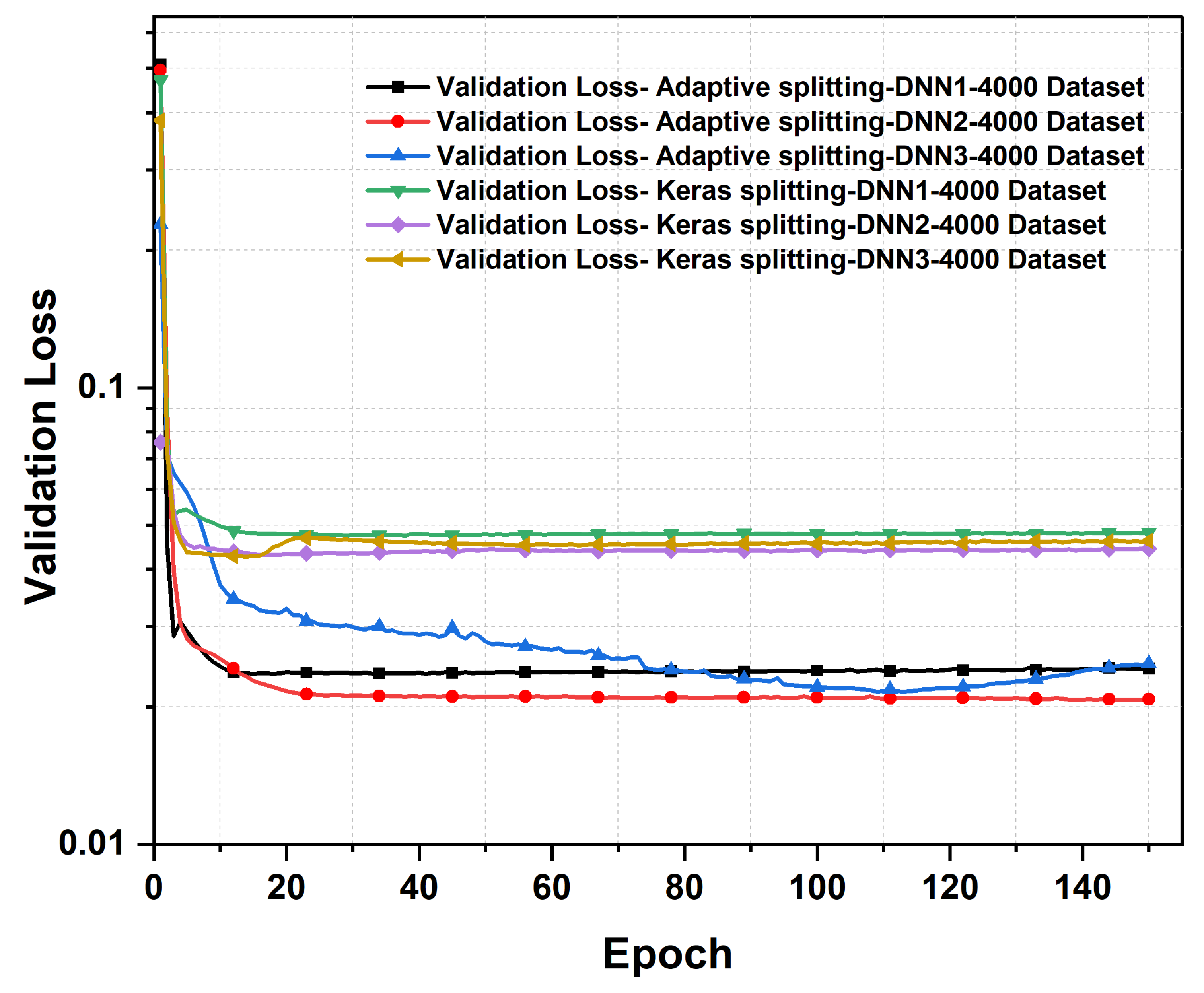

Figure 6 shows the effect of the proposed adaptive splitting method in this analysis. A key observation is that the adaptive method yields lower validation errors than the Keras method for the first two cascaded layers. As listed in Table 1, DNN1 and DNN2 achieve validation losses of 0.024 and 0.020, respectively, with the adaptive method, compared with 0.049 and 0.044 with the Keras method. For the third layer, however, the adaptive method's validation loss (0.25) is higher than that of the Keras method (0.046). The reduction in validation error for the first two layers indicates that the adaptive method improves the reliability and precision of the DNN predictions. The training loss under the adaptive method is slightly higher for DNN1 and DNN2 (0.037 and 0.039 versus 0.031 and 0.033) and considerably higher for DNN3 (0.15 versus 0.032 with the Keras method), as shown in Table 1. However, given the importance of validation accuracy in real-world applications, the small increase in training loss for the first two layers is outweighed by the gain in validation performance. This emphasizes the importance of weighing such trade-offs when selecting a dataset splitting method. From these results, cascaded neural networks with only two layers appear to be the best choice for designing multi-resonant metasurface absorbers, offering a balance between performance and efficiency. This finding motivates the investigation below, in which the dataset is increased from 4000 to 7000 samples to further explore the scalability and robustness of the approach.
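The Table 1 caption reports training losses as MSLE and validation losses as MSE, so the two columns are on different scales and are not directly comparable. The sketch below uses the standard definitions of both metrics on made-up absorbance values. Negative values are clipped at zero before the logarithm so that log(1 + y) stays defined; this clipping is an assumption of the sketch, and library implementations may handle it slightly differently.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def msle(y_true, y_pred):
    """Mean squared logarithmic error: mean of (log(1+t) - log(1+p))^2.

    Values are clipped at zero so that log1p stays defined (an assumption).
    """
    return sum(
        (math.log1p(max(t, 0.0)) - math.log1p(max(p, 0.0))) ** 2
        for t, p in zip(y_true, y_pred)
    ) / len(y_true)


# Made-up absorbance samples: identical absolute errors, different loss magnitudes.
actual = [0.9, 0.1]
predicted = [0.8, 0.2]
print(round(mse(actual, predicted), 4), round(msle(actual, predicted), 4))  # 0.01 0.0052
```

Because the logarithm compresses large values, MSLE penalizes an error near A = 0.9 less than the same error near A = 0.1, which is one reason a training MSLE and a validation MSE should not be read as the same quantity.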

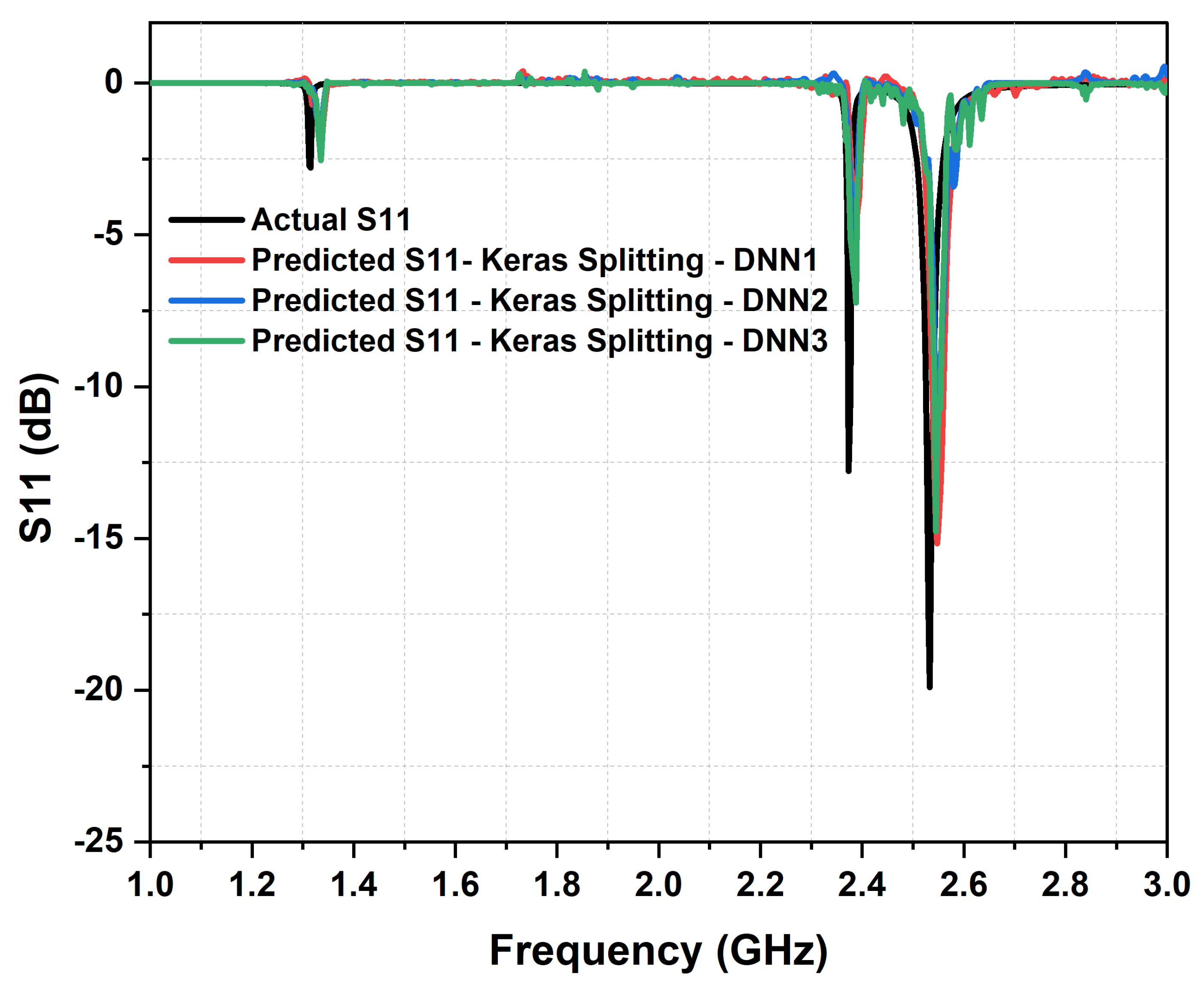

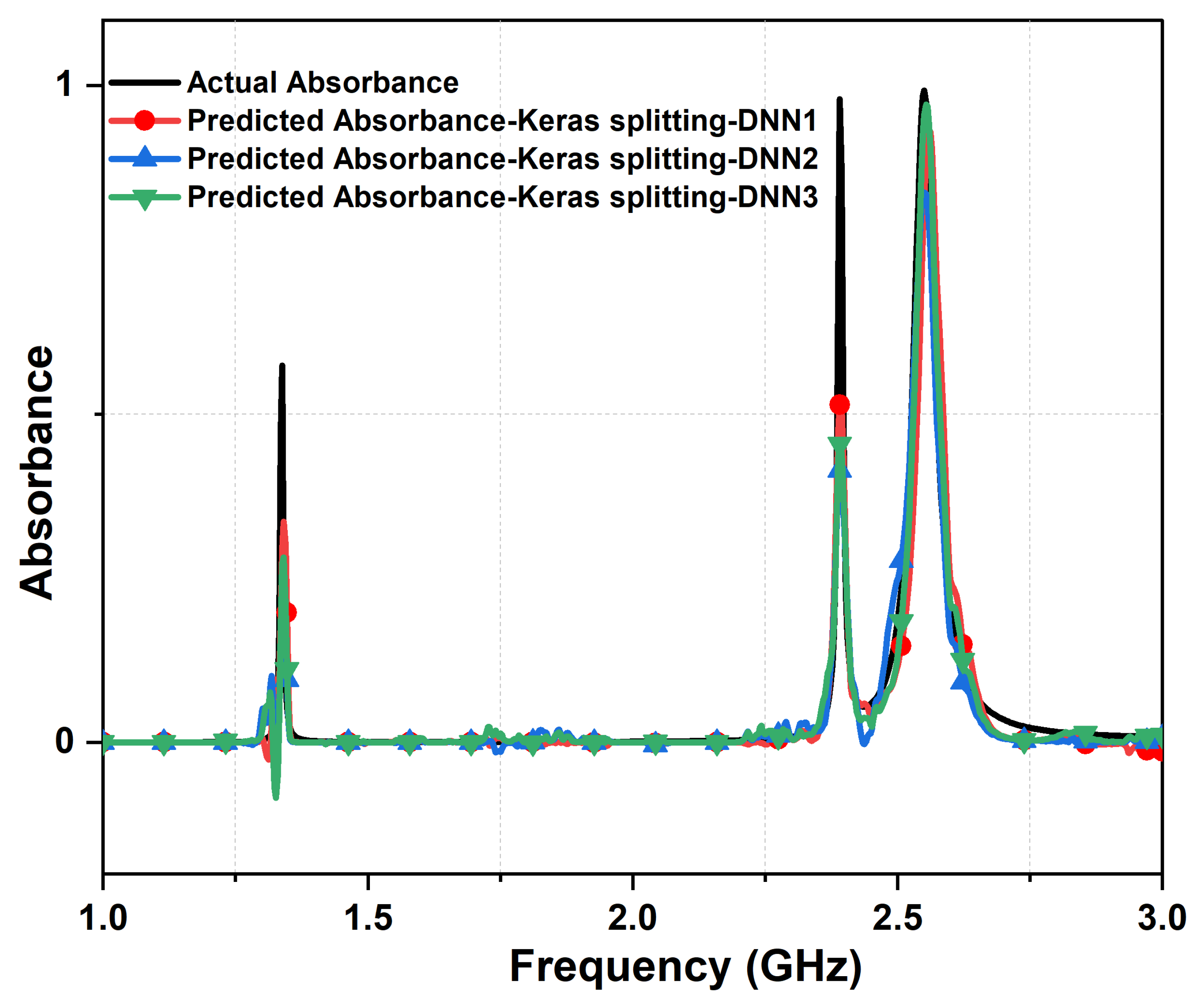

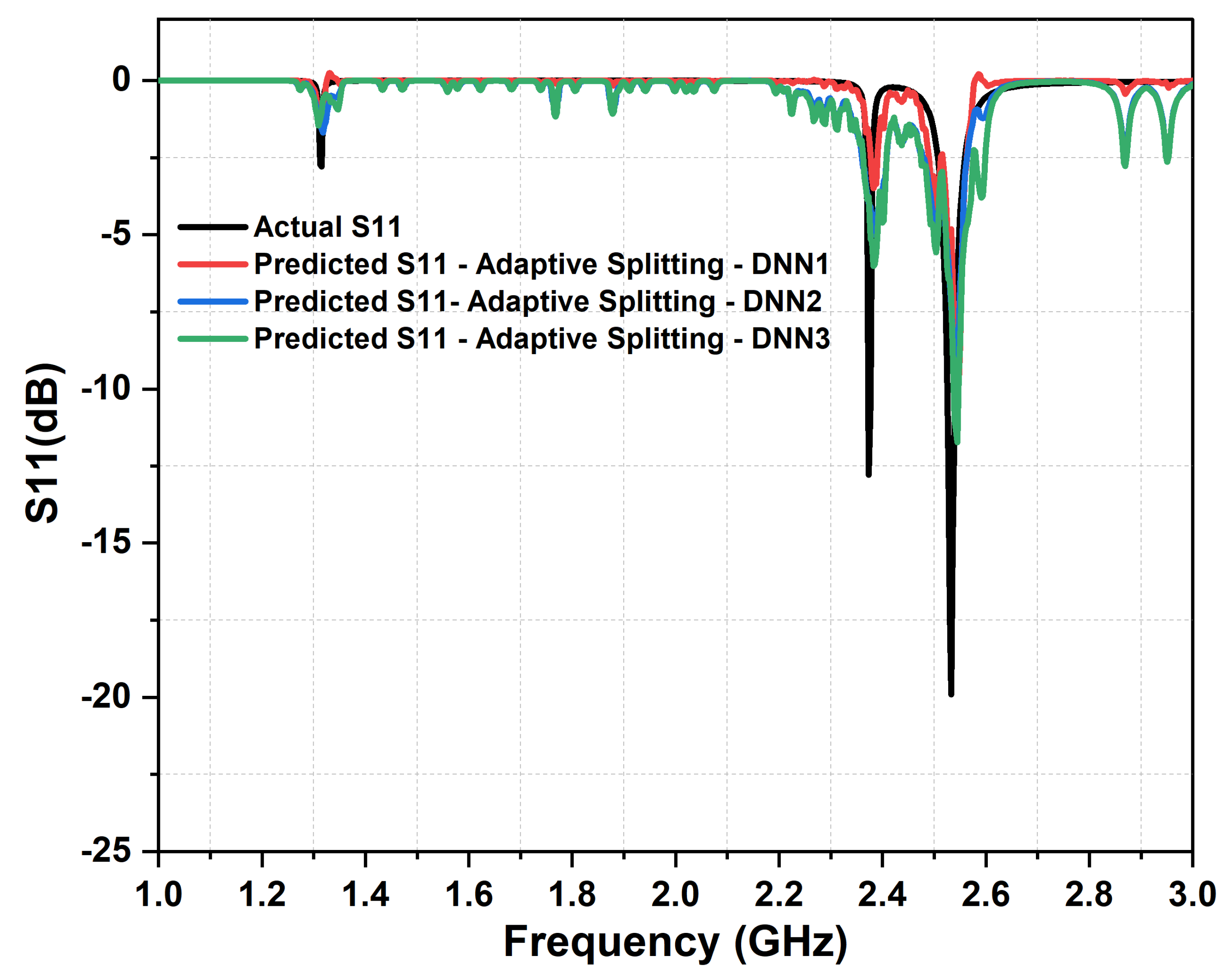

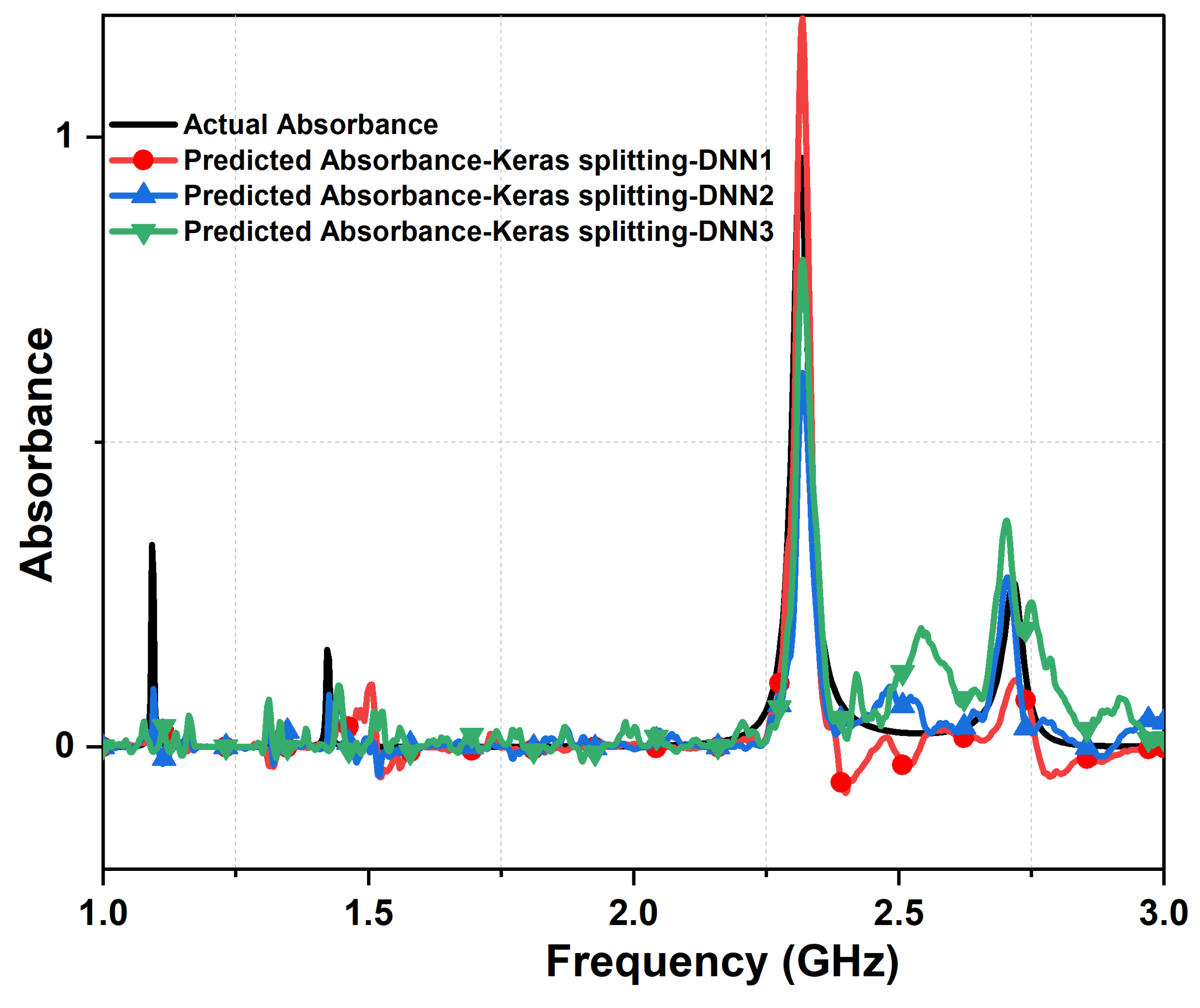

Figure 7 and Figure 8 compare the actual design responses with the predicted responses from each layer for the Keras splitting method, in terms of the reflection coefficient and the absorbance, respectively. A significant amount of noise is generated by the first layer of the DNN model with Keras splitting. This noise was reduced by refining the data in the second layer, owing to the change in the data input to the model and the improved gradient calculation. Because the gradient vector takes small values at the second DNN layer, the Adam hyperparameter β1 for this layer was changed from 0.9 to 0.95 to make the model more numerically stable.
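Assuming the hyperparameter changed from 0.9 to 0.95 is Adam's β1 (whose common default is 0.9), the sketch below shows what that change does. It implements the textbook Adam update for a single scalar parameter, with bias correction as in Kingma and Ba's formulation; library implementations may place ε slightly differently. β1 is the decay rate of the running gradient mean m, so raising it averages over more past steps and damps step-to-step fluctuations. The class and helper names are illustrative, and the alternating ±1 gradient sequence is a synthetic stand-in for a noisy gradient, not data from the study.

```python
import math


class Adam1D:
    """Minimal Adam optimizer for one scalar parameter (illustrative only)."""

    def __init__(self, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-7):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = 0.0  # running mean of the gradient (first moment)
        self.v = 0.0  # running mean of the squared gradient (second moment)
        self.t = 0    # step counter used for bias correction

    def step(self, theta, grad):
        """Return the updated parameter after one Adam step."""
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad * grad
        m_hat = self.m / (1 - self.beta1 ** self.t)  # bias-corrected first moment
        v_hat = self.v / (1 - self.beta2 ** self.t)  # bias-corrected second moment
        return theta - self.lr * m_hat / (math.sqrt(v_hat) + self.eps)


def first_moment_swing(beta1, grads):
    """Largest |m| over the second half of a gradient sequence."""
    opt = Adam1D(beta1=beta1)
    theta, trace = 0.0, []
    for g in grads:
        theta = opt.step(theta, g)
        trace.append(abs(opt.m))
    return max(trace[len(trace) // 2:])


# Synthetic noisy gradient: the sign flips at every step.
grads = [1.0 if k % 2 == 0 else -1.0 for k in range(200)]
for beta1 in (0.9, 0.95):
    print(beta1, first_moment_swing(beta1, grads))
```

For this alternating sequence, m settles into an oscillation of amplitude (1 − β1)/(1 + β1), about 0.053 for β1 = 0.9 and about 0.026 for β1 = 0.95, so the larger β1 halves the swing of the momentum term.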

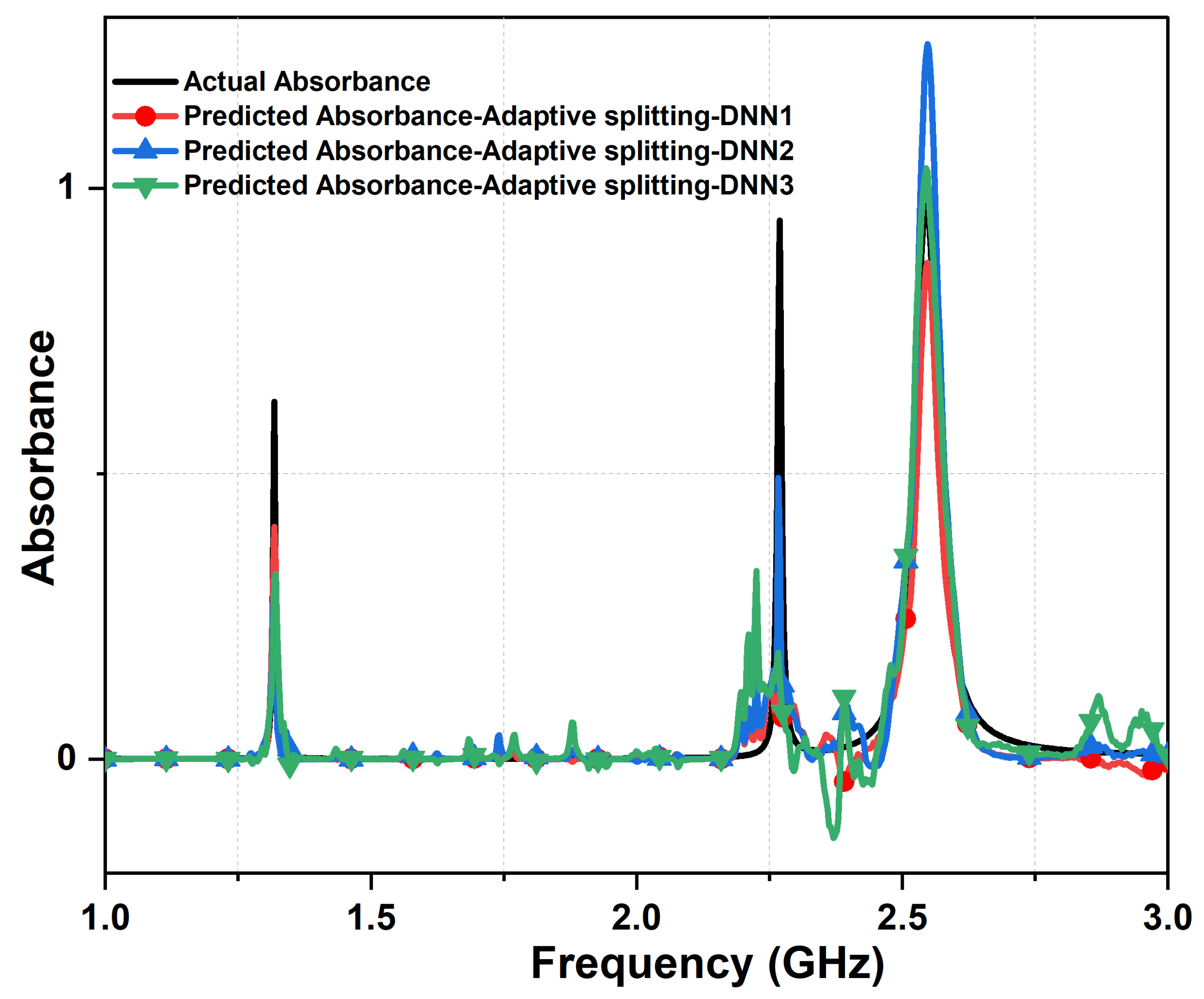

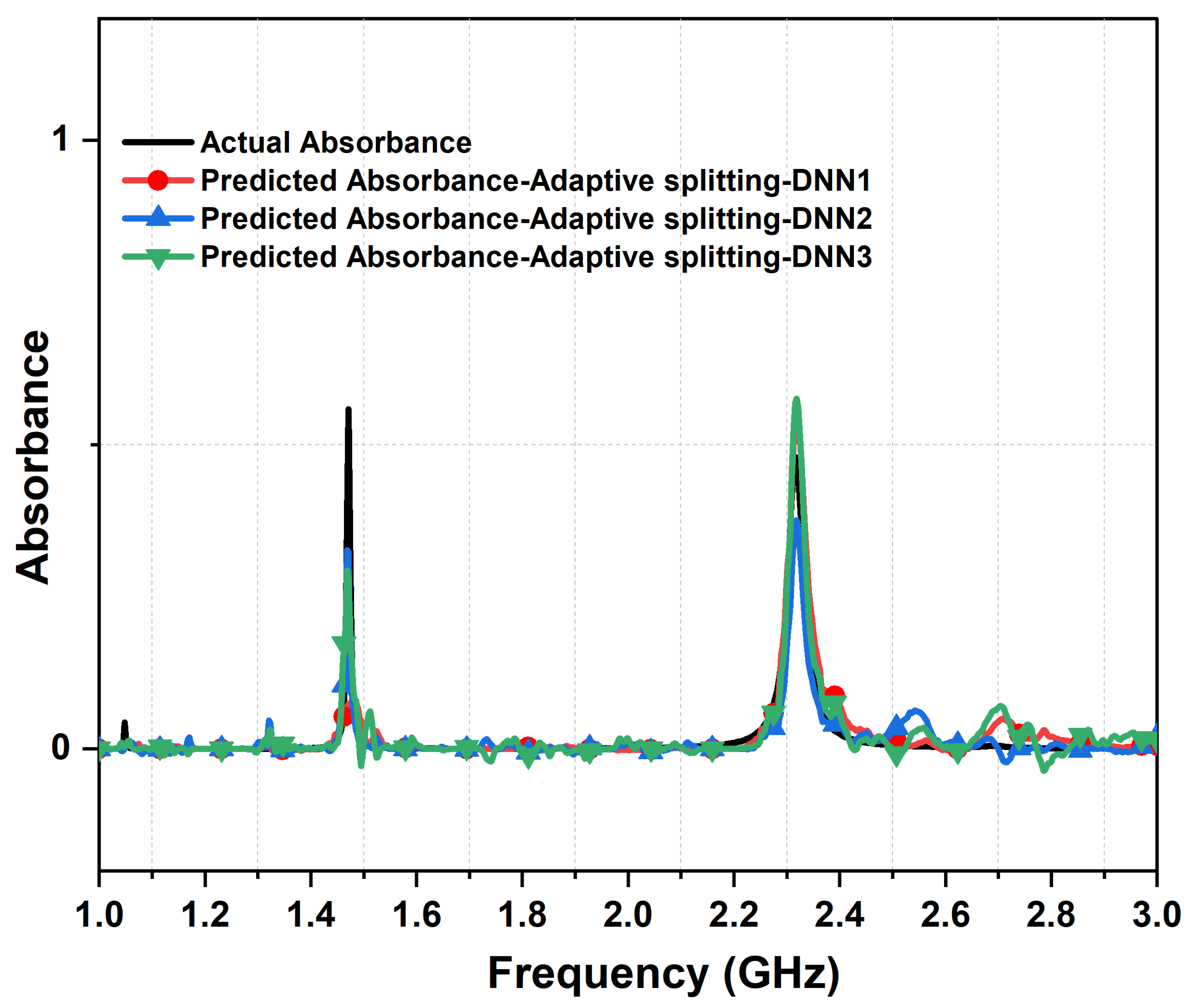

Figure 9 and Figure 10 compare the actual design responses with the predicted responses from each layer for the proposed splitting method, in terms of the reflection coefficient and the absorbance. The predictions agree much more closely with the actual absorption response and contain less noise, particularly with two cascaded DNN layers.

A careful examination of the prediction samples (Figure 8 and Figure 10) reveals a clear difference in prediction quality. Specifically, when predictive accuracy is assessed in terms of the generated noise, where predicted values exceeding 0 dB are of particular significance because a passive structure cannot reflect more power than it receives, the dataset organized with the adaptive data sorting method exhibits a slight advantage. This advantage becomes notably pronounced with two cascaded DNN layers. In essence, the adaptive data sorting method provides better predictive stability and response accuracy in scenarios where minimizing prediction noise is a crucial criterion.

We next investigate the impact of dataset size by increasing the dataset from 4000 to 7000 samples. As shown in Figure 15 and Table 2, the adaptive splitting method significantly reduces the validation errors of the first two DNN layers compared with the Keras method. From Table 2, the validation losses with the adaptive method are 0.0067 and 0.006 for DNN1 and DNN2, respectively, whereas the Keras method yields higher values of 0.077 and 0.0073. For DNN3, the Keras method gives the lower validation loss (0.0095 versus 0.081). These results show that the proposed splitting mechanism maintains higher precision and accuracy as the dataset grows. Moreover, using only two layers in the cascaded DNN model provides the best performance in terms of training and validation losses for a dataset size of 7000 as well as 4000.

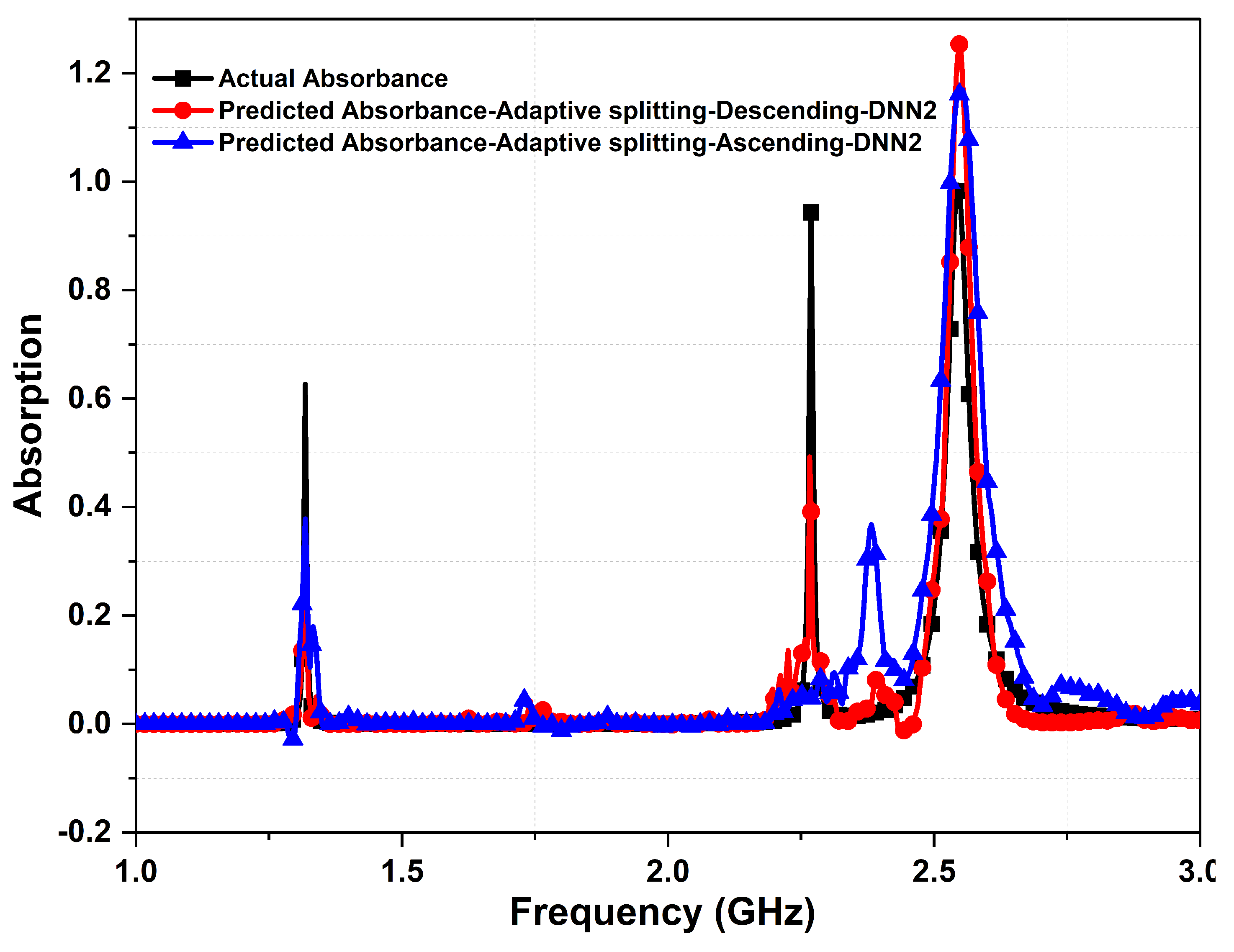

To verify the benefit of sorting the data in descending order, we compare it with sorting in ascending order, using the same dataset of 4000 cases. Figure 11 compares the absorbance predicted with descending-order and ascending-order data sorting. Ascending-order sorting is clearly less accurate than the proposed descending-order sorting, since not all resonance peaks of the absorber are detected. This is expected, because ascending order places the large pool of sparse data first. In contrast, all absorbance peaks predicted with descending-order sorting are comparable to the actual absorbance response (red curve). Another important metric is the prediction accuracy of the model under ascending and descending data sorting.

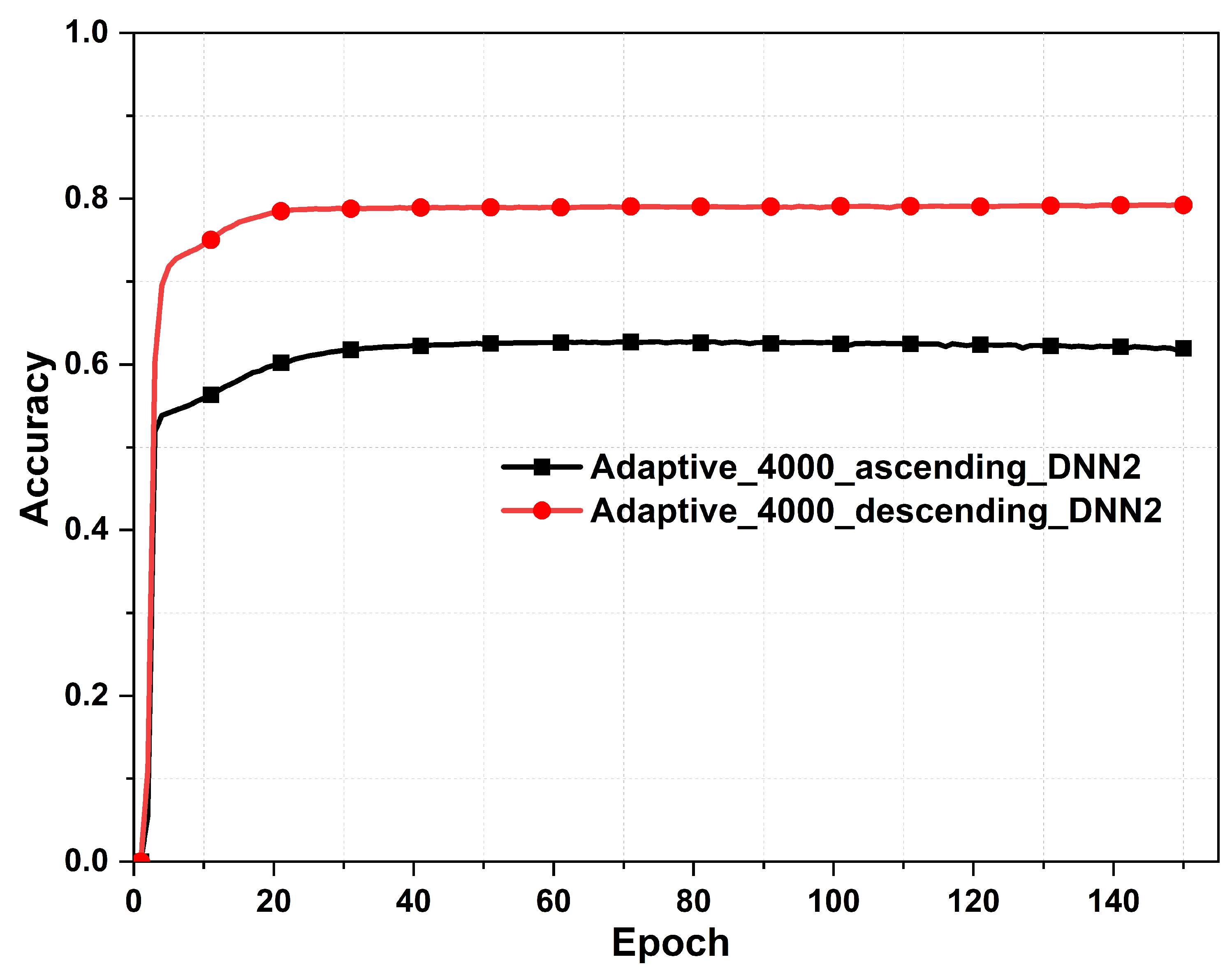

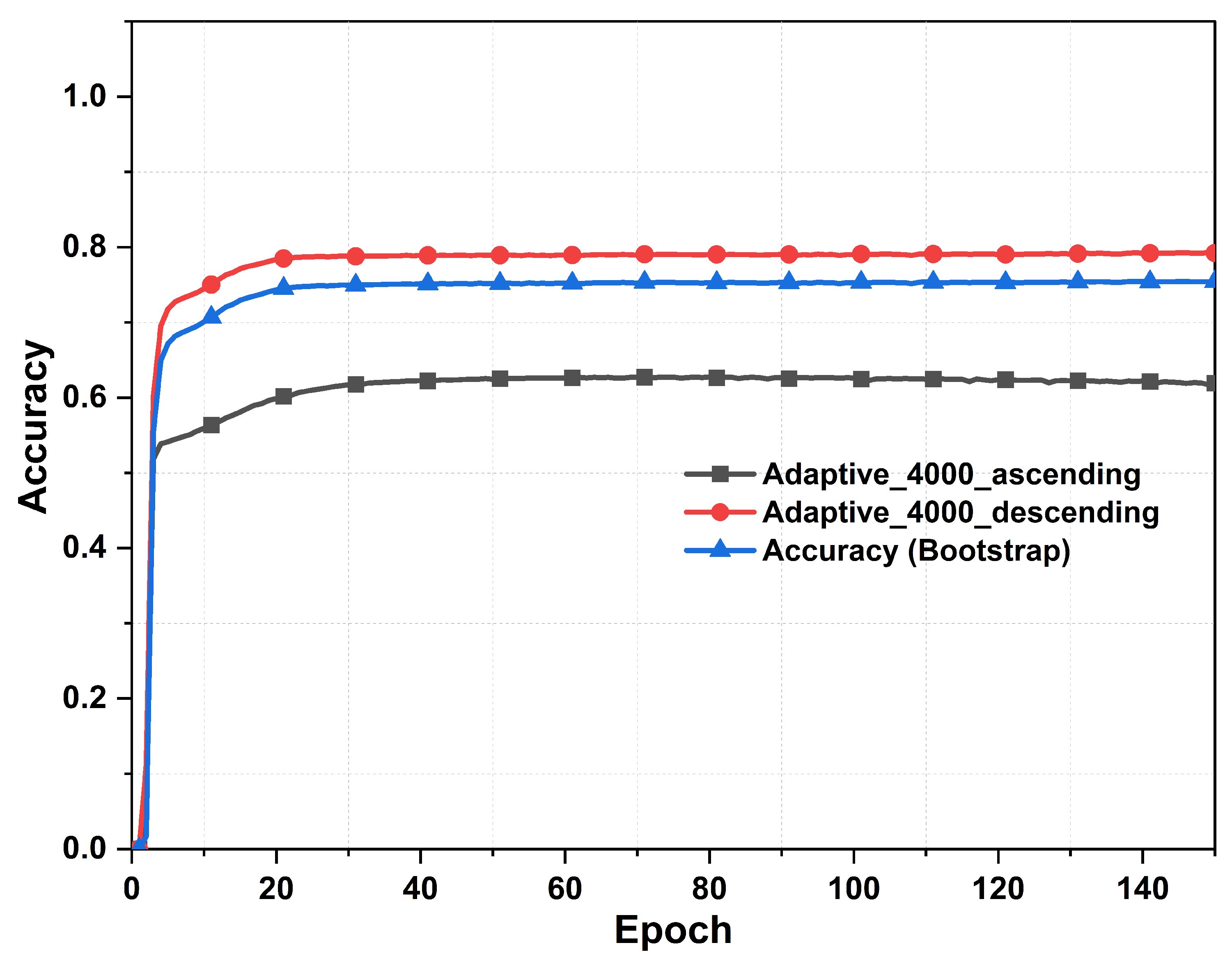

Figure 12 shows that sorting the data in ascending order reduces the prediction accuracy of the trained DL model by almost 20% compared with descending order.

Next, we investigate the prediction performance and accuracy of the developed DL model with another splitting technique, the bootstrap method.

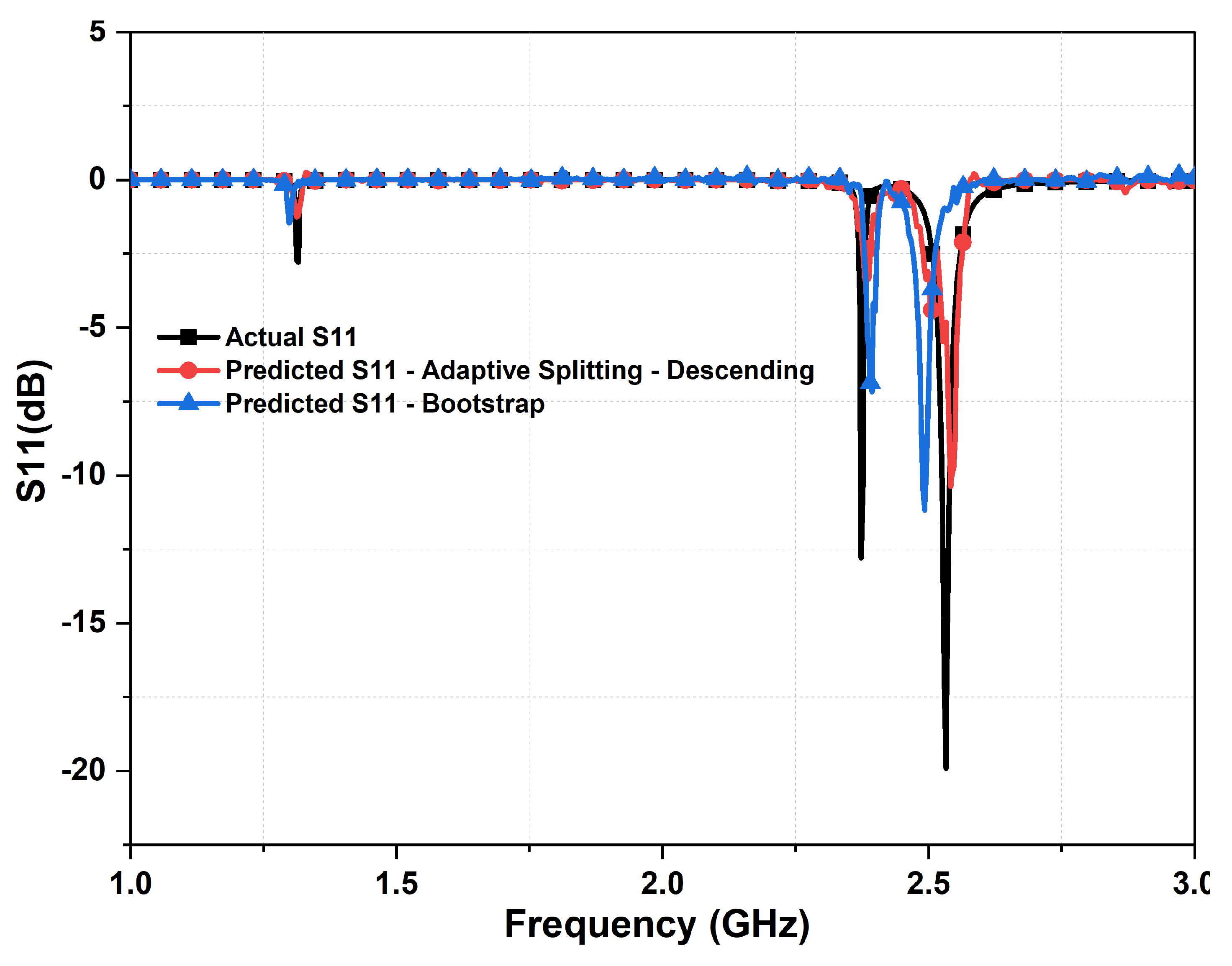

Figure 13 compares the responses predicted with the bootstrap method and with our proposed descending data sorting against the actual response of the absorber. The bootstrap prediction is notably robust, and the absorber's resonances are well predicted. Figure 14 compares the accuracy of the DL model trained with the bootstrap method and with our proposed data sorting: the bootstrap method yields almost 5% lower prediction accuracy than the proposed data sorting technique, but almost 15% higher accuracy than the ascending data order approach.
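The text does not detail how the bootstrap split was configured. A common form, assumed here, draws a training set of the same size as the dataset with replacement and uses the out-of-bag cases (those never drawn, roughly 1/e ≈ 36.8% of them for large datasets) for validation. The function name and seed are illustrative.

```python
import random


def bootstrap_split(n_cases, seed=0):
    """Bootstrap resampling: train on n draws with replacement, validate out-of-bag.

    Returns (train_indices, oob_indices). Training indices may repeat; the
    out-of-bag indices are the cases that were never drawn.
    """
    rng = random.Random(seed)
    train_idx = [rng.randrange(n_cases) for _ in range(n_cases)]
    drawn = set(train_idx)
    oob_idx = [i for i in range(n_cases) if i not in drawn]
    return train_idx, oob_idx


train_idx, oob_idx = bootstrap_split(4000)
print(len(train_idx), len(set(train_idx)), len(oob_idx))
```

Note that the out-of-bag set is far larger than the 5% hold-out used with the other splitting methods; a variant that first reserves a fixed 5% test set and bootstraps only the remainder would keep the comparison closer.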

To assess the performance of the cascaded DNN model trained on the 7000-sample dataset, we performed a series of numerical experiments using the two data-splitting methods and generated test samples to evaluate the effectiveness of each method.

Figure 15.

Validation loss for the 7000-sample dataset, with data split using the Keras split function and our proposed adaptive data merging technique.

After enlarging the dataset from 4000 to 7000 samples, we evaluate the predicted absorbance using the same data partitioning methods and the three-layer cascaded neural network. Figure 16 and Figure 17 show the actual absorbance alongside the absorbance predicted by each of the three cascaded layers. The Keras method yields unrealistic absorbance values, both above one and below zero (see Figure 16), unlike our proposed adaptive splitting (Figure 17). Moreover, two DNN layers combined with adaptive splitting give the best prediction of the absorbance response.

Lastly, we present the prediction accuracy of the proposed DL model with adaptive data splitting and compared against the case with Keras splitting from the second

layer, as shown in

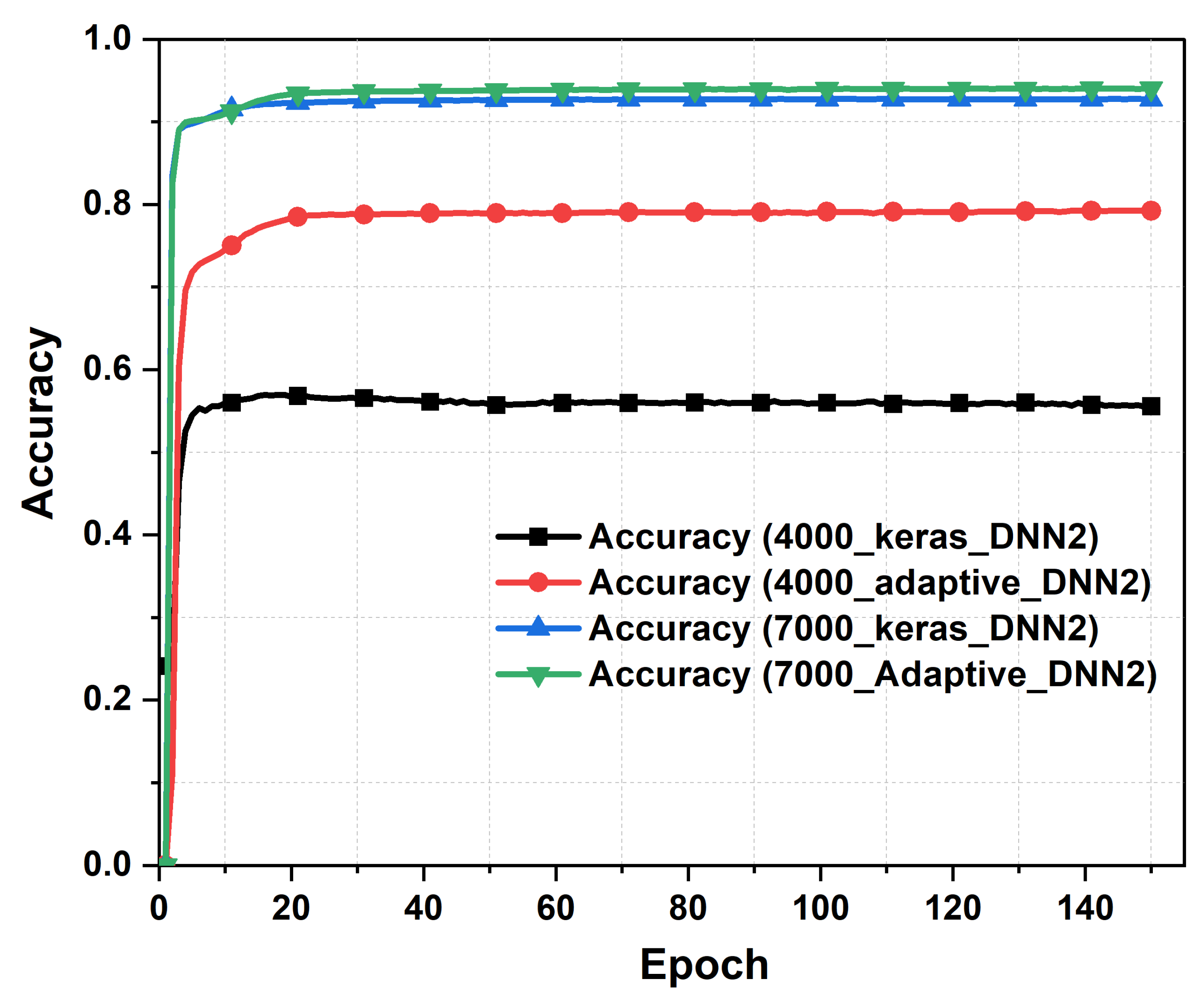

Figure 18. We can observe that the proposed model reached a prediction accuracy of 79% while the accuracy with the Keras data splitting mechanism was around 54% for dataset population of 4000. Moreover, the prediction accuracy has improved further when increasing the size of the dataset to 7000, where the accuracy reached 94% for our proposed model as compared to 92% when Keras splitting is considered. Hence, the proposed adaptive splitting method shows better prediction accuracy as compared against the model with Keras splitting feature.

Table 3 compares various state-of-the-art artificial intelligence based models for the design and synthesis of metasurface absorbers in different frequency regimes. In terms of geometry, the proposed structure has not been explored in earlier studies: the generated dataset depends on the angular positions of the SRRs' split gaps. Moreover, our model introduces a data pre-processing step through the adaptive data splitting mechanism illustrated in Figure 5. This technique achieves good convergence on the training and validation data with only two deep neural network layers (Table 1 and Table 2), and the proposed DL model with adaptive data splitting and sorting reaches an accuracy of 94% for the 7000-sample dataset (Figure 18).

5. Conclusions

In this paper, we proposed an adaptive cascaded deep learning (ACDL) model for the design and prediction of metasurface absorbers and explored the key factors influencing the precision of metasurface response predictions. First, a deep neural network model with a novel data splitting technique, termed here the ACDL model, was developed and compared against the conventional Keras split function with a random dataset distribution. The results demonstrate that the proposed data splitting method substantially improves the quality of metasurface response predictions, as shown by both the graphical and numerical results.

Second, the paper investigated the impact of dataset size on multi-resonance metasurface design and prediction. A dataset of 4000 samples was found to strike an optimal balance, achieving a high degree of accuracy in metasurface response predictions. Moreover, the findings indicate that enlarging the dataset may introduce unwanted noise into predictions due to the nature of the multi-resonance metasurface absorber response.

Third, cascaded neural networks were explored for improving the predicted scattering and absorption responses. Although a valuable enhancement, cascading demands particular attention because small gradients can lead to numerical instability. This study emphasizes the necessity of fine-tuning optimizer hyperparameters, as evidenced by the adjustments to the learning rate and to β1 within the Adam optimizer. Moreover, the impact of sparse data on the trained DL model was investigated by comparing the proposed data sorting with ascending data sorting and with the bootstrap method. The proposed descending data sorting achieved prediction accuracy approximately 20% and 5% higher than ascending-order data sorting and the bootstrap method, respectively.

Lastly, the prediction accuracy of the developed ACDL model reached 94% for a sufficient dataset of 7000 samples. We therefore believe that the proposed data splitting technique can be integrated into many artificial intelligence models to aid the design process and improve the prediction of metasurface absorber performance.

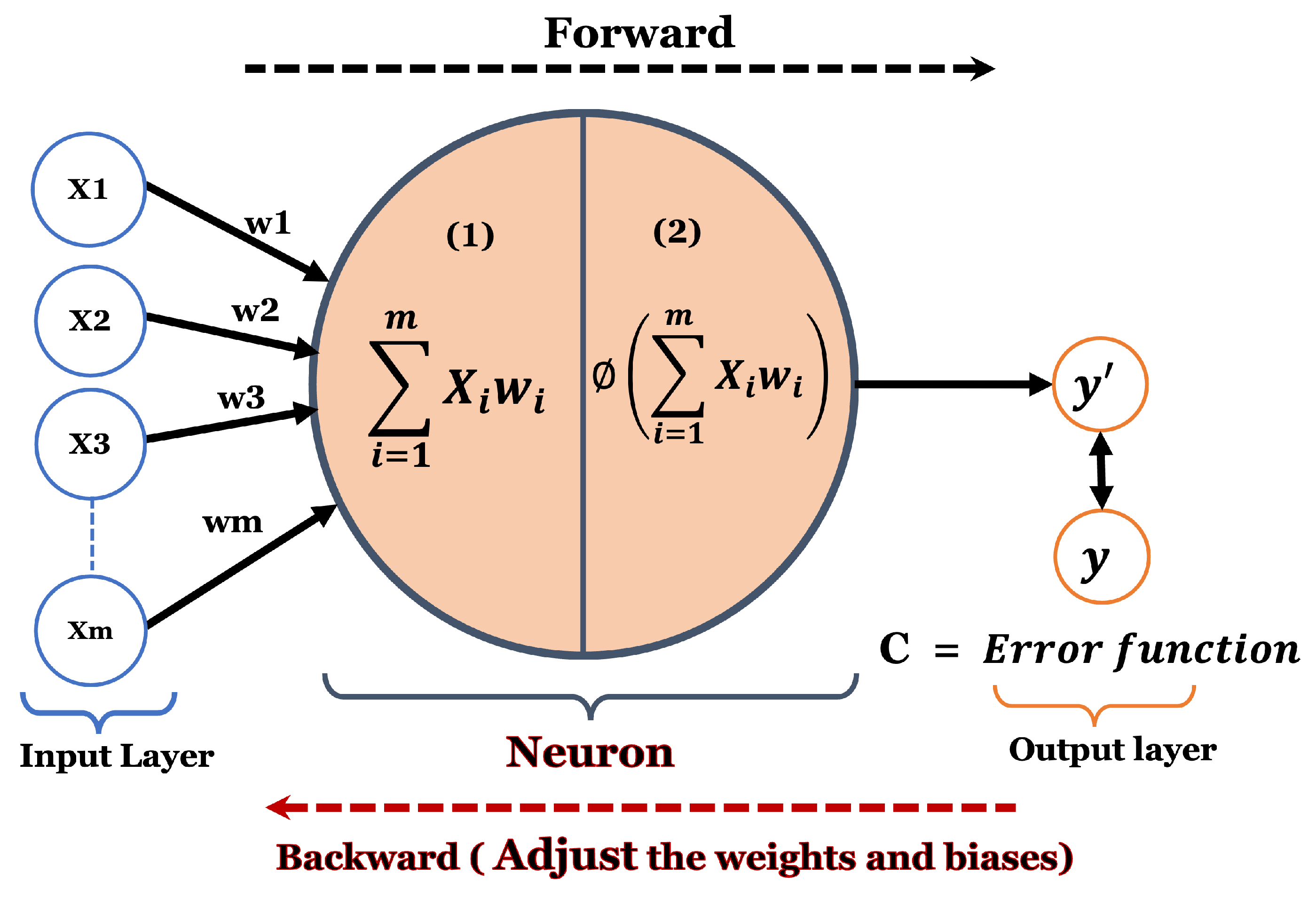

Figure 1.

Feed-forward principle of a neural network with a single neuron.

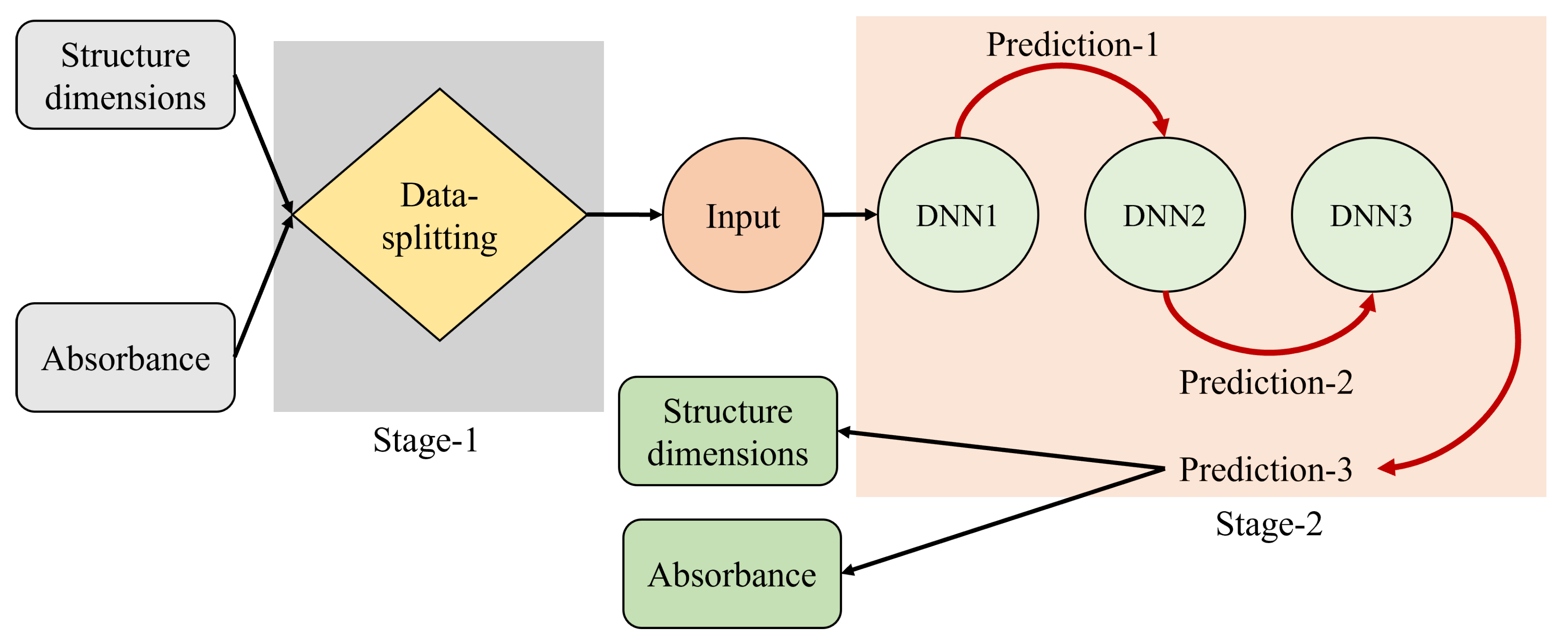

Figure 2.

A flowchart diagram of the proposed adaptive cascaded deep learning model.

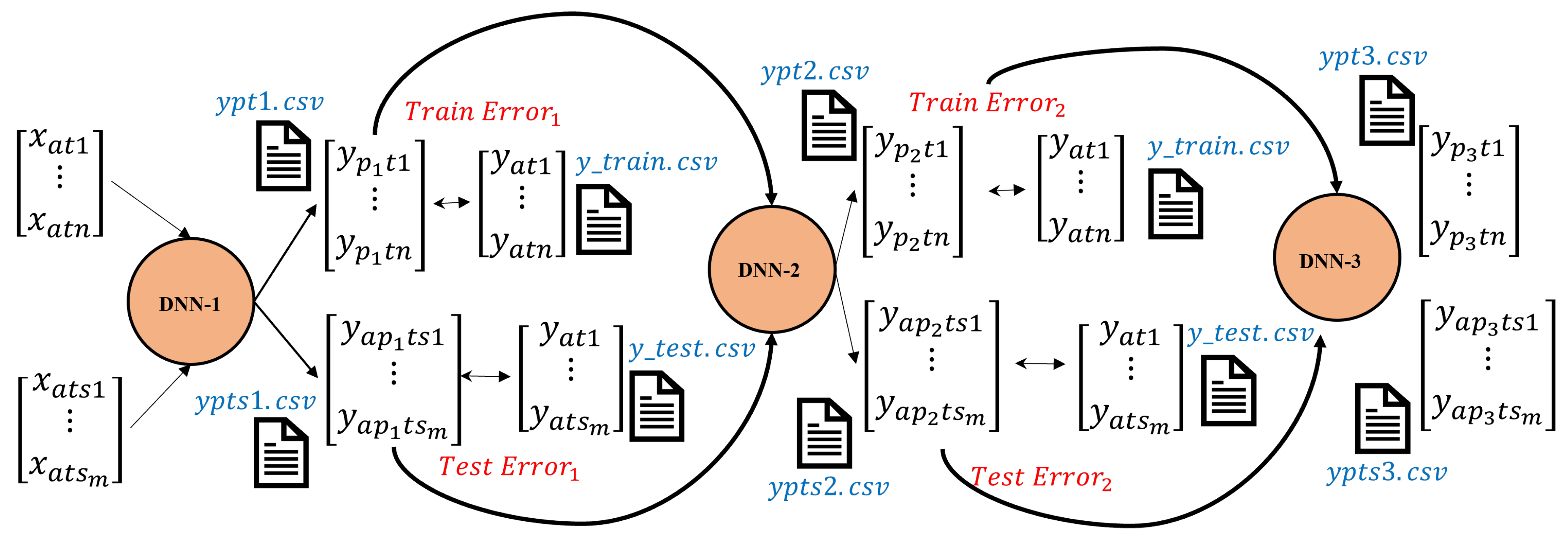

Figure 3.

Flowchart of the forward flow of the cascaded neural network under study after the data splitting step.

Figure 4.

The proposed metasurface energy absorber unit cell structure under study with its geometrical parameters. Note that light blue areas represent metallization layers.

Figure 5.

Proposed adaptive data splitting technique. Note that the cases are sorted solely by the sum of all sample values of each case, starting from the maximum sum.

Figure 6.

Validation loss for the 4000-sample dataset, with data split using the Keras split function and our proposed adaptive data merging technique.

Figure 7.

Comparison of the actual and predicted reflection coefficient responses from the three cascaded DNNs with the Keras split function and the 4000-sample dataset. The actual metasurface absorber design parameters are: 1.4 mm, = 6.02, = 46.0, 0.13 mm, 0.9 mm, 14 mm, 8 mm, and L = 30 mm.

Figure 8.

Comparison of the actual and predicted absorbance from the three cascaded DNNs with the Keras split function and the 4000-sample dataset. The actual metasurface absorber design parameters are: 1.4 mm, = , = , 0.13 mm, 0.9 mm, 14 mm, 8 mm, and L = 30 mm.

Figure 9.

Comparison of the actual and predicted reflection coefficient responses from the three cascaded DNNs with our proposed adaptive data merging technique and the 4000-sample dataset. The actual metasurface absorber design parameters are: 1.4 mm, = , = , 0.6 mm, 0.2 mm, 14 mm, 8 mm, and L = 30 mm.

Figure 10.

Comparison of the actual and predicted absorbance from the three cascaded DNNs with the adaptive split function and the 4000-sample dataset. The actual metasurface absorber design parameters are: 1.4 mm, = , = , 0.13 mm, 0.9 mm, 14 mm, 8 mm, and L = 30 mm.

Figure 11.

Comparing the actual absorbance and predicted absorbance between descending and ascending order, from the second DNN. The actual metasurface absorber design parameters are: 1.4 mm, = , = , 0.13 mm, 0.9 mm, 14 mm, 8 mm, and L = 30 mm.

Figure 12.

Comparing the prediction accuracy between the trained DL model with descending and ascending order data sorting.

Figure 13.

Comparing the actual absorbance and predicted absorbance between descending order data sorting and bootstrap method, from the second DNN. The actual metasurface absorber design parameters are: 1.4 mm, = , = , 0.13 mm, 0.9 mm, 14 mm, 8 mm, and L = 30 mm.

Figure 14.

Comparing the prediction accuracy between the trained DL model with descending order data sorting and bootstrap method.

Figure 16.

Comparison of the actual and predicted absorbance from the three cascaded DNNs with the Keras split function and the 7000-sample dataset. The actual metasurface absorber design parameters are: 1.33 mm, 1.47 mm, = , = , 0.63 mm, 0.92 mm, 15.71 mm, 13.71 mm, and L = 30 mm.

Figure 17.

Comparison of the actual and predicted absorbance from the three cascaded DNNs with our proposed adaptive data merging technique and the 7000-sample dataset. The actual metasurface absorber design parameters are: 1.33 mm, 1.47 mm, = , = , 0.63 mm, 0.92 mm, 15.71 mm, 13.71 mm, and L = 30 mm.

Figure 18.

Accuracy of the cascaded neural network with two layers as a function of epochs, with and without the adaptive data splitting.

Table 1.

Training (MSLE) and validation (MSE) losses with the 4000-sample dataset using the proposed ACDL model after 150 epochs.

| Dataset splitting method | Training loss, DNN1 | Training loss, DNN2 | Training loss, DNN3 | Validation loss, DNN1 | Validation loss, DNN2 | Validation loss, DNN3 |
|---|---|---|---|---|---|---|
| Keras | 0.031 | 0.033 | 0.032 | 0.049 | 0.044 | 0.046 |
| Adaptive | 0.037 | 0.039 | 0.15 | 0.024 | 0.020 | 0.25 |

Table 2.

Training (MSLE) and validation (MSE) losses with the 7000-sample dataset using the proposed ACDL model after 150 epochs.

| Dataset splitting method | Training loss, DNN1 | Training loss, DNN2 | Training loss, DNN3 | Validation loss, DNN1 | Validation loss, DNN2 | Validation loss, DNN3 |
|---|---|---|---|---|---|---|
| Keras | 0.051 | 0.49 | 0.047 | 0.077 | 0.0073 | 0.0095 |
| Adaptive | 0.049 | 0.045 | 0.05 | 0.0067 | 0.006 | 0.081 |

Table 3.

Comparison of state-of-the-art artificial intelligence models for metasurface absorber design.

| Reference | Structure | Machine learning model | Accuracy | Model complexity |
|---|---|---|---|---|
| [20] | Different shapes | GAN | 95% | Complex structure; complex dataset preparation (based on GAN); large dataset requirement |
| [21] | Acoustic metasurface | CNN | - | Complex dataset preparation (based on CNN) |
| [18] | Pixelated metasurface | CNN | 90.5% | Complex structure; complex dataset preparation (based on CNN) |
| [22] | Pixelated metasurface | CNN | 90% | Requires significant data preprocessing |
| [23] | 8-ring pattern metasurface | CNN | 90% | Complex structure; complex dataset preparation (based on CNN) |
| [24] | Dipole antenna based on metasurfaces | GAN | - | Complex structure; complex dataset preparation (based on GAN) |
| Proposed model | Edge-coupled SRR with automated cut gap position | DNN | 94% (7000 dataset) | Straightforward dataset management mechanism; ease of integration with post-processing data from EM simulators; simple design structure to implement |