Submitted: 12 December 2023

Posted: 12 December 2023

Abstract

Keywords:

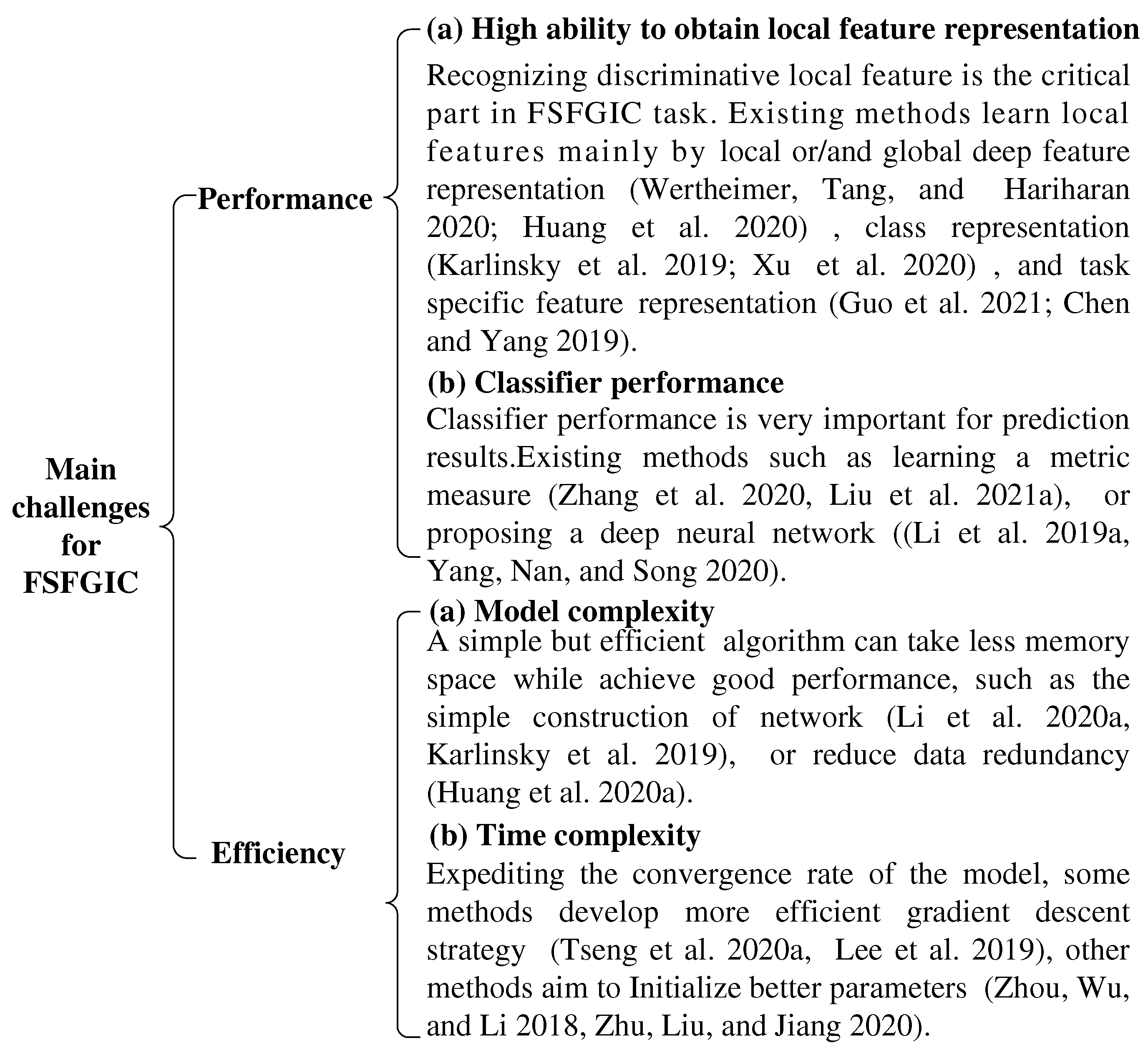

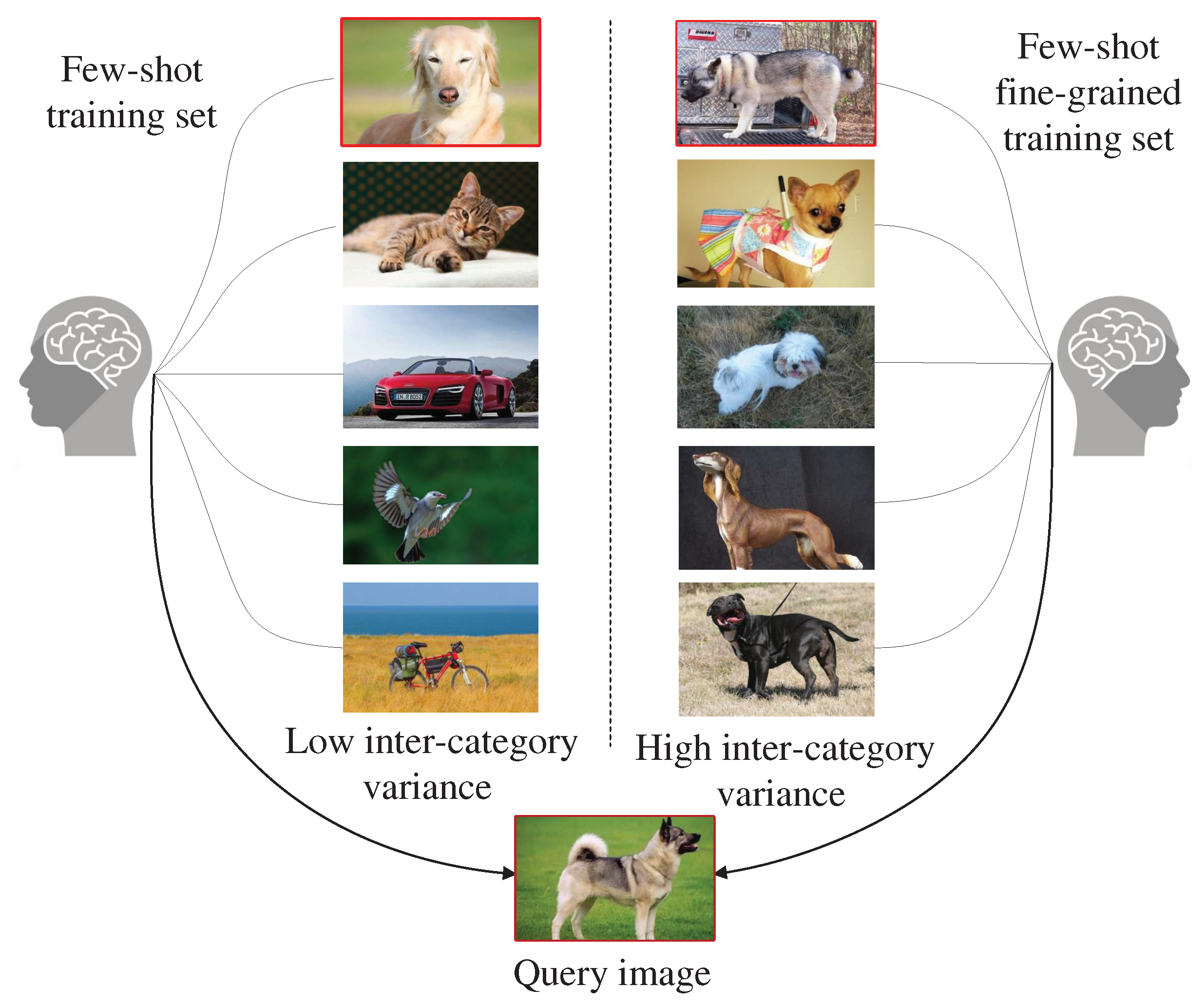

1. Introduction

2. Problem, datasets, and categorization of FSFGIC methods

2.1. Problem formulation

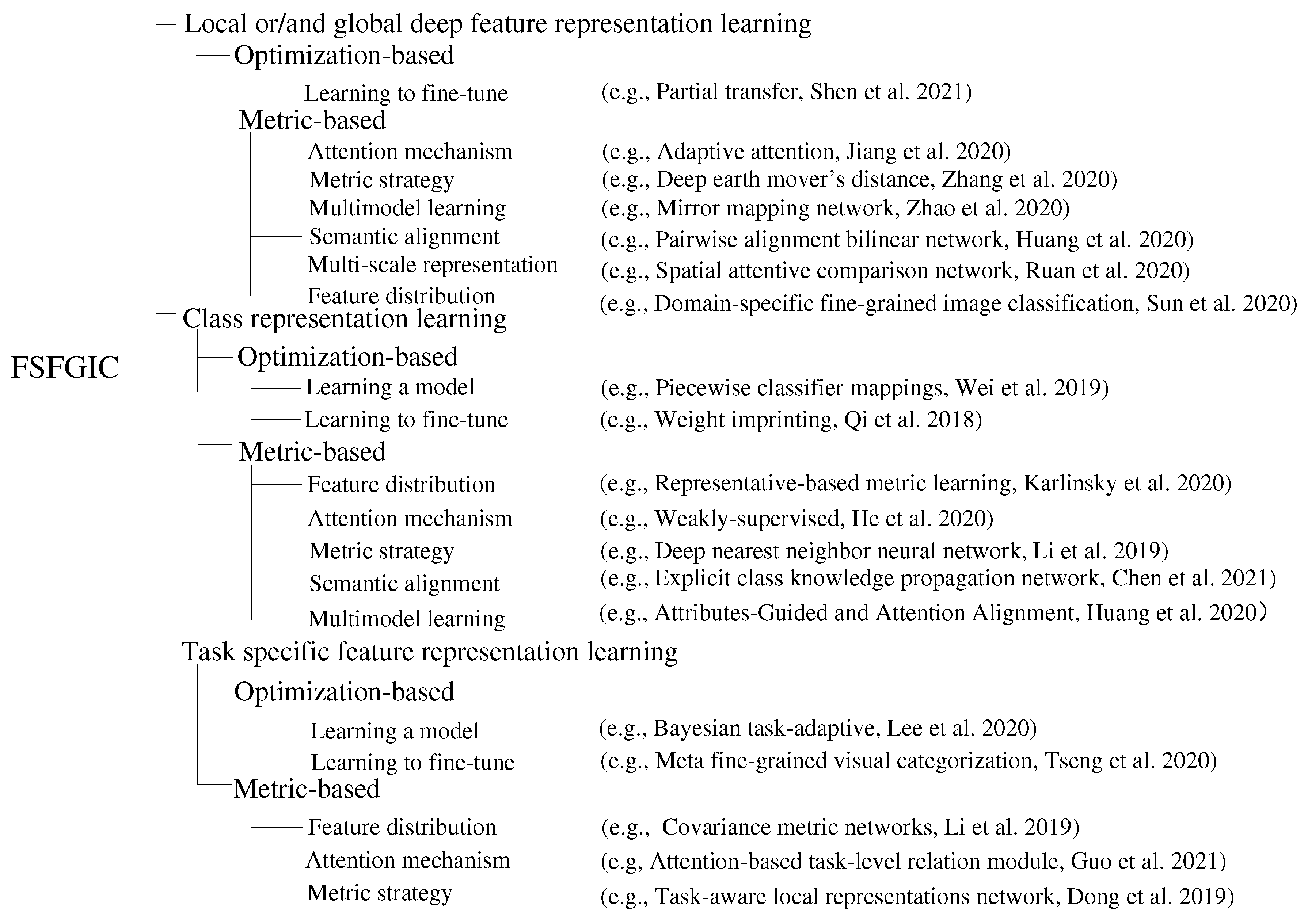

2.2. A taxonomy of the existing feature representation learning for FSFGIC

2.3. Benchmark datasets

3. Methods on FSFGIC

3.1. Data augmentation techniques for FSFGIC

3.2. Local and/or global deep feature representation learning based FSFGIC methods

3.2.1. Optimization-based local and/or global deep feature representation learning

3.2.2. Metric-based local and/or global deep feature representation learning

3.3. Class representation learning based FSFGIC methods

3.3.1. Optimization-based class representation learning

3.3.2. Metric-based class representation learning

3.4. Task specific feature representation learning based FSFGIC methods

3.4.1. Optimization-based task specific feature representation learning

3.4.2. Metric-based task specific feature representation learning

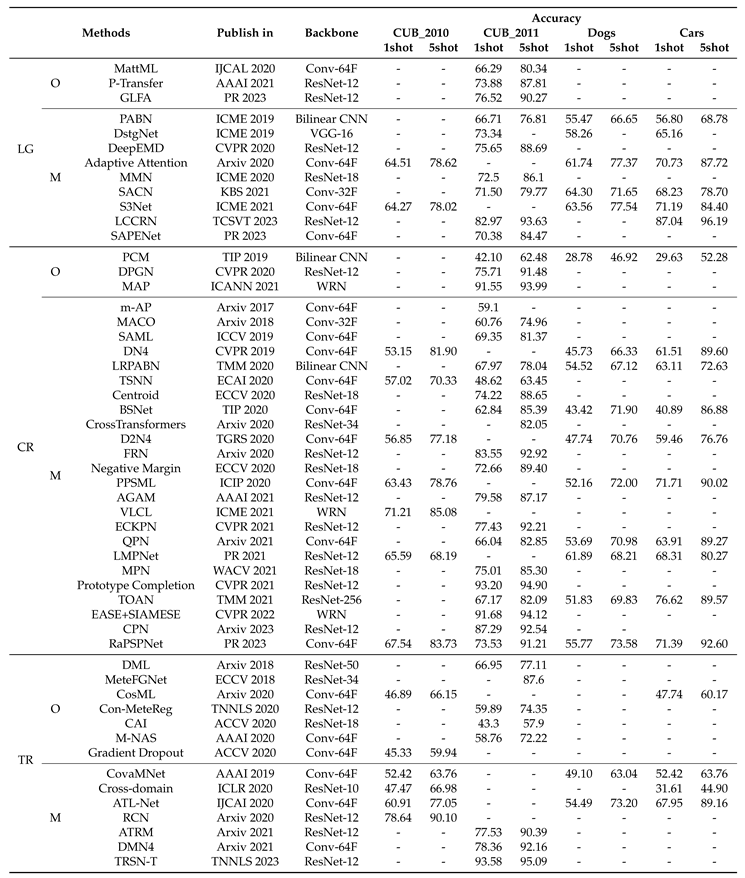

4. Summary and discussions

5. Conclusion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhang, Y.; Tang, H.; Jia, K. Fine-grained visual categorization using meta-learning optimization with sample selection of auxiliary data. In Proceedings of the European Conference on Computer Vision, 2018, pp. 233–248.

- Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 dataset 2011.

- Nilsback, M.E.; Zisserman, A. Automated flower classification over a large number of classes. In Proceedings of the Indian conference on computer vision, graphics & image processing, 2008, pp. 722–729.

- Maji, S.; Rahtu, E.; Kannala, J.; Blaschko, M.; Vedaldi, A. Fine-grained visual classification of aircraft. arXiv preprint arXiv:1306.5151 2013.

- Smith, L.B.; Slone, L.K. A developmental approach to machine learning? Frontiers in psychology 2017, 8, 2124. [CrossRef]

- Zhu, Y.; Liu, C.; Jiang, S. Multi-attention Meta Learning for Few-shot Fine-grained Image Recognition. In Proceedings of the International Joint Conference on Artificial Intelligence, 2020, pp. 1090–1096. [CrossRef]

- Li, W.; Wang, L.; Xu, J.; Huo, J.; Gao, Y.; Luo, J. Revisiting local descriptor based image-to-class measure for few-shot learning. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2019, pp. 7260–7268. [CrossRef]

- Dong, C.; Li, W.; Huo, J.; Gu, Z.; Gao, Y. Learning task-aware local representations for few-shot learning. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, 2021, pp. 716–722. [CrossRef]

- Zhang, W.; Liu, X.; Xue, Z.; Gao, Y.; Sun, C. NDPNet: A novel non-linear data projection network for few-shot fine-grained image classification. arXiv preprint arXiv:2106.06988 2021. [CrossRef]

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Wierstra, D.; et al. Matching networks for one shot learning. Advances in neural information processing systems 2016, 29.

- Cao, S.; Wang, W.; Zhang, J.; Zheng, M.; Li, Q. A few-shot fine-grained image classification method leveraging global and local structures. International Journal of Machine Learning and Cybernetics 2022, 13, 2273–2281. [CrossRef]

- Abdelaziz, M.; Zhang, Z. Learn to aggregate global and local representations for few-shot learning. Multimedia Tools and Applications 2023, pp. 1–24. [CrossRef]

- Zhu, H.; Koniusz, P. EASE: Unsupervised discriminant subspace learning for transductive few-shot learning. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2022, pp. 9078–9088.

- Li, Y.; Bian, C.; Chen, H. Generalized ridge regression-based channelwise feature map weighted reconstruction network for fine-grained few-shot ship classification. IEEE Transactions on Geoscience and Remote Sensing 2023, 61, 1–10. [CrossRef]

- Hu, Z.; Shen, L.; Lai, S.; Yuan, C. Task-adaptive Feature Disentanglement and Hallucination for Few-shot Classification. IEEE Transactions on Circuits and Systems for Video Technology 2023. [CrossRef]

- Zhou, Z.; Luo, L.; Zhou, S.; Li, W.; Yang, X.; Liu, X.; Zhu, E. Task-Related Saliency for Few-Shot Image Classification. IEEE Transactions on Neural Networks and Learning Systems 2023. [CrossRef]

- Chen, C.; Yang, X.; Xu, C.; Huang, X.; Ma, Z. Eckpn: Explicit class knowledge propagation network for transductive few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 6596–6605.

- Guo, Y.; Ma, Z.; Li, X.; Dong, Y. Atrm: Attention-based task-level relation module for gnn-based few-shot learning. arXiv preprint arXiv 2021, 2101.

- Khosla, A.; Jayadevaprakash, N.; Yao, B.; Li, F.F. Novel dataset for fine-grained image categorization: Stanford dogs. In Proceedings of the CVPR Workshop on Fine-Grained Visual Categorization. Citeseer, 2011, Vol. 2.

- Krause, J.; Stark, M.; Deng, J.; Fei-Fei, L. 3D object representations for fine-grained categorization. In Proceedings of the IEEE international conference on computer vision workshops, 2013, pp. 554–561. [CrossRef]

- Van Horn, G.; Branson, S.; Farrell, R.; Haber, S.; Barry, J.; Ipeirotis, P.; Perona, P.; Belongie, S. Building a bird recognition APP and large scale dataset with citizen scientists: The fine print in fine-grained dataset collection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015, pp. 595–604. [CrossRef]

- Xiao, J.; Hays, J.; Ehinger, K.A.; Oliva, A.; Torralba, A. Sun database: Large-scale scene recognition from abbey to zoo. In Proceedings of the IEEE computer society conference on computer vision and pattern recognition. IEEE, 2010, pp. 3485–3492. [CrossRef]

- Yu, X.; Zhao, Y.; Gao, Y.; Xiong, S.; Yuan, X. Patchy image structure classification using multi-orientation region transform. In Proceedings of the AAAI Conference on Artificial Intelligence, 2020, Vol. 34, pp. 12741–12748. [CrossRef]

- Huang, S.; Zhang, M.; Kang, Y.; Wang, D. Attributes-guided and pure-visual attention alignment for few-shot recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, 2021, Vol. 35, pp. 7840–7847. [CrossRef]

- Afrasiyabi, A.; Lalonde, J.F.; Gagné, C. Associative alignment for few-shot image classification. In Proceedings of the European Conference on Computer Vision, 2020, pp. 18–35. [CrossRef]

- Li, X.; Wu, J.; Sun, Z.; Ma, Z.; Cao, J.; Xue, J.H. BSNet: Bi-similarity network for few-shot fine-grained image classification. IEEE Transactions on Image Processing 2020, 30, 1318–1331. [CrossRef]

- Wertheimer, D.; Tang, L.; Hariharan, B. Few-shot classification with feature map reconstruction networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2021, pp. 8012–8021.

- Hilliard, N.; Phillips, L.; Howland, S.; Yankov, A.; Corley, C.D.; Hodas, N.O. Few-shot learning with metric-agnostic conditional embeddings. arXiv preprint arXiv:1802.04376 2018.

- Zhang, M.; Wang, D.; Gai, S. Knowledge distillation for model-agnostic meta-learning. In Proceedings of the European Conference on Artificial Intelligence, 2020, pp. 1355–1362.

- Perrett, T.; Masullo, A.; Burghardt, T.; Mirmehdi, M.; Damen, D. Meta-learning with context-agnostic initialisations. In Proceedings of the Asian Conference on Computer Vision, 2020.

- Pahde, F.; Nabi, M.; Klein, T.; Jähnichen, P. Discriminative hallucination for multi-modal few-shot learning. In Proceedings of the IEEE International Conference on Image Processing, 2018, pp. 156–160. [CrossRef]

- Xian, Y.; Sharma, S.; Schiele, B.; Akata, Z. f-vaegan-d2: A feature generating framework for any-shot learning. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2019, pp. 10275–10284. [CrossRef]

- Xu, J.; Le, H.; Huang, M.; Athar, S.; Samaras, D. Variational feature disentangling for fine-grained few-shot classification. In Proceedings of the IEEE International Conference on Computer Vision, 2021, pp. 8812–8821. [CrossRef]

- Park, S.J.; Han, S.; Baek, J.W.; Kim, I.; Song, J.; Lee, H.B.; Han, J.J.; Hwang, S.J. Meta variance transfer: Learning to augment from the others. In Proceedings of the International Conference on Machine Learning, 2020, pp. 7510–7520.

- Luo, Q.; Wang, L.; Lv, J.; Xiang, S.; Pan, C. Few-shot learning via feature hallucination with variational inference. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, 2021, pp. 3963–3972.

- Tsutsui, S.; Fu, Y.; Crandall, D. Meta-reinforced synthetic data for one-shot fine-grained visual recognition. Advances in Neural Information Processing Systems 2019, 32.

- Pahde, F.; Jähnichen, P.; Klein, T.; Nabi, M. Cross-modal hallucination for few-shot fine-grained recognition. arXiv preprint arXiv:1806.05147 2018.

- Zhu, P.; Gu, M.; Li, W.; Zhang, C.; Hu, Q. Progressive point to set metric learning for semi-supervised few-shot classification. In Proceedings of the IEEE International Conference on Image Processing, 2020, pp. 196–200.

- Wang, Y.; Xu, C.; Liu, C.; Zhang, L.; Fu, Y. Instance credibility inference for few-shot learning. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2020, pp. 12836–12845.

- Huang, H.; Zhang, J.; Zhang, J.; Wu, Q.; Xu, J. Compare more nuanced: Pairwise alignment bilinear network for few-shot fine-grained learning. In Proceedings of the IEEE International Conference on Multimedia and Expo, 2019, pp. 91–96.

- Chen, M.; Fang, Y.; Wang, X.; Luo, H.; Geng, Y.; Zhang, X.; Huang, C.; Liu, W.; Wang, B. Diversity transfer network for few-shot learning. In Proceedings of the AAAI Conference on Artificial Intelligence, 2020, Vol. 34, pp. 10559–10566. [CrossRef]

- Schwartz, E.; Karlinsky, L.; Shtok, J.; Harary, S.; Marder, M.; Kumar, A.; Feris, R.; Giryes, R.; Bronstein, A. Delta-encoder: An effective sample synthesis method for few-shot object recognition. Advances in Neural Information Processing Systems 2018, 31.

- Wang, C.; Song, S.; Yang, Q.; Li, X.; Huang, G. Fine-grained few shot learning with foreground object transformation. Neurocomputing 2021, 466, 16–26. [CrossRef]

- Tokmakov, P.; Wang, Y.X.; Hebert, M. Learning compositional representations for few-shot recognition. In Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 6372–6381.

- Huynh, D.; Elhamifar, E. Compositional fine-grained low-shot learning. arXiv preprint arXiv:2105.10438 2021.

- Shen, Z.; Liu, Z.; Qin, J.; Savvides, M.; Cheng, K.T. Partial is better than all: revisiting fine-tuning strategy for few-shot learning. In Proceedings of the AAAI Conference on Artificial Intelligence, 2021, Vol. 35, pp. 9594–9602. [CrossRef]

- Chen, W.Y.; Liu, Y.C.; Kira, Z.; Wang, Y.C.F.; Huang, J.B. A closer look at few-shot classification. arXiv preprint arXiv:1904.04232 2019.

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. Advances in neural information processing systems 2017, 30.

- Shi, B.; Li, W.; Huo, J.; Zhu, P.; Wang, L.; Gao, Y. Global-and local-aware feature augmentation with semantic orthogonality for few-shot image classification. Pattern Recognition 2023, 142, 109702. [CrossRef]

- Hao, F.; He, F.; Cheng, J.; Wang, L.; Cao, J.; Tao, D. Collect and select: Semantic alignment metric learning for few-shot learning. In Proceedings of the IEEE international Conference on Computer Vision, 2019, pp. 8460–8469.

- Flores, C.F.; Gonzalez-Garcia, A.; van de Weijer, J.; Raducanu, B. Saliency for fine-grained object recognition in domains with scarce training data. Pattern Recognition 2019, 94, 62–73. [CrossRef]

- Tavakoli, H.R.; Borji, A.; Laaksonen, J.; Rahtu, E. Exploiting inter-image similarity and ensemble of extreme learners for fixation prediction using deep features. Neurocomputing 2017, 244, 10–18. [CrossRef]

- Zhang, X.; Wei, Y.; Feng, J.; Yang, Y.; Huang, T.S. Adversarial complementary learning for weakly supervised object localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 1325–1334. [CrossRef]

- Jiang, Z.; Kang, B.; Zhou, K.; Feng, J. Few-shot classification via adaptive attention. arXiv preprint arXiv:2008.02465 2020.

- Wu, H.; Zhao, Y.; Li, J. Selective, structural, subtle: Trilinear spatial-awareness for few-shot fine-grained visual recognition. In Proceedings of the IEEE International Conference on Multimedia and Expo, 2021, pp. 1–6.

- Ruan, X.; Lin, G.; Long, C.; Lu, S. Few-shot fine-grained classification with spatial attentive comparison. Knowledge-Based Systems 2021, 218, 106840. [CrossRef]

- Song, H.; Deng, B.; Pound, M.; Özcan, E.; Triguero, I. A fusion spatial attention approach for few-shot learning. Information Fusion 2022, 81, 187–202. [CrossRef]

- Huang, X.; Choi, S.H. Sapenet: Self-attention based prototype enhancement network for few-shot learning. Pattern Recognition 2023, 135, 109170. [CrossRef]

- Zhang, C.; Cai, Y.; Lin, G.; Shen, C. Deepemd: Few-shot image classification with differentiable earth mover’s distance and structured classifiers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 12203–12213.

- Chen, Q.; Yang, R. Learning to distinguish: A general method to improve compare-based one-shot learning frameworks for similar classes. In Proceedings of the IEEE International Conference on Multimedia and Expo, 2019, pp. 952–957.

- Liu, Y.; Zhu, L.; Wang, X.; Yamada, M.; Yang, Y. Bilaterally normalized scale-consistent sinkhorn distance for few-shot image classification. IEEE Transactions on Neural Networks and Learning Systems 2023. [CrossRef]

- Zhao, J.; Lin, X.; Zhou, J.; Yang, J.; He, L.; Yang, Z. Knowledge-based fine-grained classification for few-shot learning. In Proceedings of the IEEE International Conference on Multimedia and Expo, 2020, pp. 1–6. [CrossRef]

- Sun, X.; Xv, H.; Dong, J.; Zhou, H.; Chen, C.; Li, Q. Few-shot learning for domain-specific fine-grained image classification. IEEE Transactions on Industrial Electronics 2020, 68, 3588–3598. [CrossRef]

- Zheng, Z.; Feng, X.; Yu, H.; Li, X.; Gao, M. BDLA: Bi-directional local alignment for few-shot learning. Applied Intelligence 2023, 53, 769–785. [CrossRef]

- Zhang, W.; Sun, C. Corner detection using second-order generalized Gaussian directional derivative representations. IEEE Transactions on Pattern Analysis and Machine Intelligence 2019, 43, 1213–1224. [CrossRef]

- Zhang, W.; Sun, C.; Gao, Y. Image intensity variation information for interest point detection. IEEE Transactions on Pattern Analysis and Machine Intelligence 2023, 45, 9883–9894. [CrossRef]

- Jing, J.; Liu, S.; Wang, G.; Zhang, W.; Sun, C. Recent advances on image edge detection: A comprehensive review. Neurocomputing 2022. [CrossRef]

- Zhang, W.; Zhao, Y.; Breckon, T.P.; Chen, L. Noise robust image edge detection based upon the automatic anisotropic Gaussian kernels. Pattern Recognition 2017, 63, 193–205. [CrossRef]

- Jing, J.; Gao, T.; Zhang, W.; Gao, Y.; Sun, C. Image feature information extraction for interest point detection: A comprehensive review. IEEE Transactions on Pattern Analysis and Machine Intelligence 2023, 45, 4694–4712. [CrossRef]

- Zhang, W.; Sun, C.; Breckon, T.; Alshammari, N. Discrete curvature representations for noise robust image corner detection. IEEE Transactions on Image Processing 2019, 28, 4444–4459. [CrossRef]

- Zhang, W.; Sun, C. Corner detection using multi-directional structure tensor with multiple scales. International Journal of Computer Vision 2020, 128, 438–459. [CrossRef]

- Shui, P.L.; Zhang, W.C. Corner detection and classification using anisotropic directional derivative representations. IEEE Transactions on Image Processing 2013, 22, 3204–3218. [CrossRef]

- Zhang, H.; Torr, P.; Koniusz, P. Few-shot learning with multi-scale self-supervision. arXiv preprint arXiv:2001.01600 2020.

- Chen, Y.; Zheng, Y.; Xu, Z.; Tang, T.; Tang, Z.; Chen, J.; Liu, Y. Cross-domain few-shot classification based on lightweight Res2Net and flexible GNN. Knowledge-Based Systems 2022, 247, 108623. [CrossRef]

- Wei, X.S.; Wang, P.; Liu, L.; Shen, C.; Wu, J. Piecewise classifier mappings: Learning fine-grained learners for novel categories with few examples. IEEE Transactions on Image Processing 2019, 28, 6116–6125. [CrossRef]

- Lin, T.Y.; RoyChowdhury, A.; Maji, S. Bilinear CNN models for fine-grained visual recognition. In Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 1449–1457. [CrossRef]

- Yang, L.; Li, L.; Zhang, Z.; Zhou, X.; Zhou, E.; Liu, Y. DPGN: Distribution propagation graph network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 13390–13399.

- He, J.; Kortylewski, A.; Yuille, A. CORL: Compositional representation learning for few-shot classification. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, 2023, pp. 3890–3899.

- Qi, H.; Brown, M.; Lowe, D.G. Low-shot learning with imprinted weights. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 5822–5830.

- Hu, Y.; Gripon, V.; Pateux, S. Leveraging the feature distribution in transfer-based few-shot learning. In Proceedings of the International Conference on Artificial Neural Networks, 2021, pp. 487–499.

- Liu, X.; Zhou, K.; Yang, P.; Jing, L.; Yu, J. Adaptive distribution calibration for few-shot learning via optimal transport. Information Sciences 2022, 611, 1–17. [CrossRef]

- Arjovsky, M.; Bottou, L. Towards principled methods for training generative adversarial networks. arXiv preprint arXiv:1701.04862 2017.

- Xian, Y.; Lorenz, T.; Schiele, B.; Akata, Z. Feature generating networks for zero-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 5542–5551. [CrossRef]

- Verma, V.K.; Arora, G.; Mishra, A.; Rai, P. Generalized zero-shot learning via synthesized examples. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 4281–4289.

- Karlinsky, L.; Shtok, J.; Harary, S.; Schwartz, E.; Aides, A.; Feris, R.; Giryes, R.; Bronstein, A.M. Repmet: Representative-based metric learning for classification and few-shot object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 5197–5206. [CrossRef]

- Zhang, W.; Zhao, Y.; Gao, Y.; Sun, C. Re-abstraction and perturbing support pair network for few-shot fine-grained image classification. Pattern Recognition 2023, p. 110158. [CrossRef]

- Das, D.; Moon, J.; George Lee, C. Few-shot image recognition with manifolds. In Proceedings of the Advances in Visual Computing: International Symposium, 2020, pp. 3–14. [CrossRef]

- Lyu, Q.; Wang, W. Compositional Prototypical Networks for Few-Shot Classification. arXiv preprint arXiv:2306.06584 2023. [CrossRef]

- Luo, X.; Chen, Y.; Wen, L.; Pan, L.; Xu, Z. Boosting few-shot classification with view-learnable contrastive learning. In Proceedings of the IEEE International Conference on Multimedia and Expo, 2021, pp. 1–6.

- Chen, X.; Wang, G. Few-shot learning by integrating spatial and frequency representation. In Proceedings of the Conference on Robots and Vision, 2021, pp. 49–56.

- Ji, Z.; Chai, X.; Yu, Y.; Pang, Y.; Zhang, Z. Improved prototypical networks for few-shot learning. Pattern Recognition Letters 2020, 140, 81–87. [CrossRef]

- Hu, Y.; Pateux, S.; Gripon, V. Squeezing backbone feature distributions to the max for efficient few-shot learning. Algorithms 2022, 15, 147. [CrossRef]

- Chobola, T.; Vašata, D.; Kordík, P. Transfer learning based few-shot classification using optimal transport mapping from preprocessed latent space of backbone neural network. In Proceedings of the AAAI Workshop on Meta-Learning and MetaDL Challenge, 2021, pp. 29–37.

- Zagoruyko, S.; Komodakis, N. Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. arXiv preprint arXiv:1612.03928 2016.

- He, X.; Lin, J.; Shen, J. Weakly-supervised Object Localization for Few-shot Learning and Fine-grained Few-shot Learning. arXiv preprint arXiv:2003.00874 2020.

- Li, Y.; Li, H.; Chen, H.; Chen, C. Hierarchical representation based query-specific prototypical network for few-shot image classification. arXiv preprint arXiv:2103.11384 2021.

- Doersch, C.; Gupta, A.; Zisserman, A. Crosstransformers: spatially-aware few-shot transfer. Advances in Neural Information Processing Systems 2020, 33, 21981–21993.

- Huang, H.; Wu, Z.; Li, W.; Huo, J.; Gao, Y. Local descriptor-based multi-prototype network for few-shot learning. Pattern Recognition 2021, 116, 107935. [CrossRef]

- Yang, X.; Nan, X.; Song, B. D2N4: A discriminative deep nearest neighbor neural network for few-shot space target recognition. IEEE Transactions on Geoscience and Remote Sensing 2020, 58, 3667–3676. [CrossRef]

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the European Conference on Computer Vision, 2016, pp. 499–515. [CrossRef]

- Simon, C.; Koniusz, P.; Nock, R.; Harandi, M. Adaptive subspaces for few-shot learning. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2020, pp. 4136–4145. [CrossRef]

- Triantafillou, E.; Zemel, R.; Urtasun, R. Few-shot learning through an information retrieval lens. Advances in Neural Information Processing Systems 2017, 30.

- Liu, B.; Cao, Y.; Lin, Y.; Li, Q.; Zhang, Z.; Long, M.; Hu, H. Negative margin matters: Understanding margin in few-shot classification. In Proceedings of the European Conference on Computer Vision, 2020, pp. 438–455. [CrossRef]

- Huang, H.; Zhang, J.; Zhang, J.; Xu, J.; Wu, Q. Low-rank pairwise alignment bilinear network for few-shot fine-grained image classification. IEEE Transactions on Multimedia 2020, 23, 1666–1680. [CrossRef]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, 2018, pp. 3–19.

- Pahde, F.; Puscas, M.; Klein, T.; Nabi, M. Multimodal prototypical networks for few-shot learning. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, 2021, pp. 2644–2653. [CrossRef]

- Wang, R.; Zheng, H.; Duan, X.; Liu, J.; Lu, Y.; Wang, T.; Xu, S.; Zhang, B. Few-Shot Learning with Visual Distribution Calibration and Cross-Modal Distribution Alignment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2023, pp. 23445–23454.

- Gu, Q.; Luo, Z.; Zhu, Y. A Two-Stream Network with Image-to-Class Deep Metric for Few-Shot Classification. In Proceedings of the European Conference on Artificial Intelligence, 2020, pp. 2704–2711.

- Zhang, B.; Li, X.; Ye, Y.; Huang, Z.; Zhang, L. Prototype completion with primitive knowledge for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 3754–3762. [CrossRef]

- Achille, A.; Lam, M.; Tewari, R.; Ravichandran, A.; Maji, S.; Fowlkes, C.C.; Soatto, S.; Perona, P. Task2vec: Task embedding for meta-learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 6430–6439.

- Jaakkola, T.; Haussler, D. Exploiting generative models in discriminative classifiers. Advances in Neural Information Processing Systems 1998, 11.

- Lee, H.B.; Lee, H.; Na, D.; Kim, S.; Park, M.; Yang, E.; Hwang, S.J. Learning to balance: Bayesian meta-learning for imbalanced and out-of-distribution tasks. arXiv preprint arXiv:1905.12917 2019.

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning, 2017, pp. 1126–1135.

- Wang, J.; Wu, J.; Bai, H.; Cheng, J. M-nas: Meta neural architecture search. In Proceedings of the AAAI Conference on Artificial Intelligence, 2020, Vol. 34, pp. 6186–6193.

- Tseng, H.Y.; Chen, Y.W.; Tsai, Y.H.; Liu, S.; Lin, Y.Y.; Yang, M.H. Regularizing meta-learning via gradient dropout. In Proceedings of the Asian Conference on Computer Vision, 2020.

- He, Y.; Liang, W.; Zhao, D.; Zhou, H.Y.; Ge, W.; Yu, Y.; Zhang, W. Attribute surrogates learning and spectral tokens pooling in transformers for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022, pp. 9119–9129.

- Zhou, F.; Wu, B.; Li, Z. Deep meta-learning: Learning to learn in the concept space. arXiv preprint arXiv:1802.03596 2018.

- Tian, P.; Li, W.; Gao, Y. Consistent meta-regularization for better meta-knowledge in few-shot learning. IEEE Transactions on Neural Networks and Learning Systems 2021, 33, 7277–7288. [CrossRef]

- Antoniou, A.; Storkey, A.J. Learning to learn by self-critique. Advances in Neural Information Processing Systems 2019, 32.

- Peng, S.; Song, W.; Ester, M. Combining domain-specific meta-learners in the parameter space for cross-domain few-shot classification. arXiv preprint arXiv:2011.00179 2020.

- Li, W.; Xu, J.; Huo, J.; Wang, L.; Gao, Y.; Luo, J. Distribution consistency based covariance metric networks for few-shot learning. In Proceedings of the AAAI conference on artificial intelligence, 2019, Vol. 33, pp. 8642–8649. [CrossRef]

- Tseng, H.Y.; Lee, H.Y.; Huang, J.B.; Yang, M.H. Cross-domain few-shot classification via learned feature-wise transformation. arXiv preprint arXiv:2001.08735 2020.

- Lee, D.H.; Chung, S.Y. Unsupervised embedding adaptation via early-stage feature reconstruction for few-shot classification. In Proceedings of the International Conference on Machine Learning, 2021, pp. 6098–6108.

- Xue, Z.; Duan, L.; Li, W.; Chen, L.; Luo, J. Region comparison network for interpretable few-shot image classification. arXiv preprint arXiv:2009.03558 2020.

- Liu, Y.; Zheng, T.; Song, J.; Cai, D.; He, X. Dmn4: Few-shot learning via discriminative mutual nearest neighbor neural network. In Proceedings of the AAAI Conference on Artificial Intelligence, 2022, Vol. 36, pp. 1828–1836. [CrossRef]

- Gowda, K.; Krishna, G. The condensed nearest neighbor rule using the concept of mutual nearest neighborhood. IEEE Transactions on Information Theory 1979, 25, 488–490. [CrossRef]

- Ye, M.; Guo, Y. Deep triplet ranking networks for one-shot recognition. arXiv preprint arXiv:1804.07275 2018.

- Li, X.; Yu, L.; Fu, C.W.; Fang, M.; Heng, P.A. Revisiting metric learning for few-shot image classification. Neurocomputing 2020, 406, 49–58. [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778. [CrossRef]

| Dataset name | Class | Images | Categories |
|---|---|---|---|
| SUN397 | Scenes | 130,519 | 397 |
| Oxford 102 Flowers | Flowers | 8,189 | 102 |
| CUB200-2011 | Birds | 11,788 | 200 |
| Stanford Dogs | Dogs | 20,580 | 120 |
| Stanford Cars | Cars | 16,185 | 196 |
| FGVC-Aircraft | Aircraft | 10,000 | 100 |
| NABirds | Birds | 48,562 | 555 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).