Submitted: 01 December 2023

Posted: 01 December 2023


Abstract

Keywords:

1. Introduction

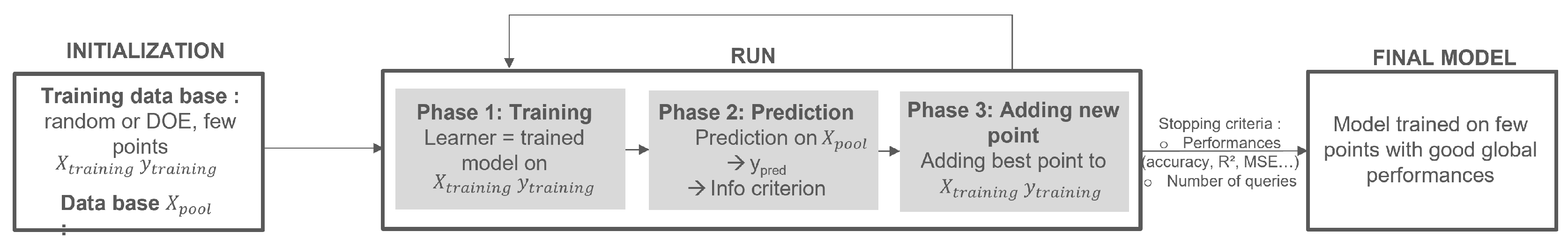

2. Methodology

2.1. Active Learning

2.1.1. Scenarios

- (i) Membership Query Synthesis [11]: Here, the model can ask for any sample in the input space, including queries generated de novo rather than sampled from an underlying natural distribution. This method has been particularly effective for problems confined to a finite domain [12]. Initially developed for classification models, it can also be extended to regression models [13]. However, it may leave too much freedom to the algorithm, which can then request samples without any physical meaning.

- (ii) Stream-Based Selective Sampling [14]: Here, the initial assumption is that obtaining an unlabelled sample is inexpensive. The samples are therefore presented one at a time, and the model decides for each of them whether to add it to the training data. This approach is sometimes also called Stream-Based or Sequential Active Learning, because the samples are considered one by one and the model chooses for each one whether to keep it or not.

- (iii) Pool-Based Sampling [15]: Here, a small set L of labelled data and a large set U of available unlabelled data are considered. The query is made according to an information criterion, which evaluates the relevance of each sample of U in comparison to the others. The best sample according to this criterion is then chosen and added to the training set. The difference with the previous scenario is that, in stream-based sampling, the decision regarding a sample is taken individually, whereas in pool-based sampling the other samples still available are taken into account. Pool-based sampling is the most widely used scenario in real applications.
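To make the difference between the three scenarios concrete, the sketch below applies one and the same criterion in each of them. It is only an illustration under assumptions that are not taken from this work: a 1-D cubic polynomial fitted by least squares stands in for the metamodel, the criterion is the unscaled predictive variance $\phi(x)^T(\Phi^T\Phi)^{-1}\phi(x)$ (with $\phi$ the polynomial features and $\Phi$ the feature matrix of L), and the input domain of the membership query synthesis is discretized by a fine grid. The function names `synthesize_query`, `stream_select` and `pool_select` are hypothetical.

```python
import numpy as np

DEGREE = 3  # assumed model: cubic polynomial fitted by least squares


def features(x):
    """Polynomial features [1, x, ..., x^DEGREE] for 1-D inputs."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    return np.vander(x, DEGREE + 1, increasing=True)


def predictive_variance(x_train, x_cand, ridge=1e-8):
    """Unscaled least-squares prediction variance phi(x)^T (Phi^T Phi)^-1 phi(x).
    For a linear-in-parameters model it depends on the inputs only, not on labels."""
    phi = features(x_train)
    a_inv = np.linalg.inv(phi.T @ phi + ridge * np.eye(DEGREE + 1))
    f = features(x_cand)
    return np.einsum("ij,jk,ik->i", f, a_inv, f)


def synthesize_query(x_train, lo, hi, n=1001):
    """(i) Membership query synthesis: any point of the domain [lo, hi] may be requested."""
    grid = np.linspace(lo, hi, n)
    return grid[np.argmax(predictive_variance(x_train, grid))]


def stream_select(x_train, stream, threshold):
    """(ii) Stream-based: each incoming sample is judged on its own against a fixed threshold."""
    x_train = list(x_train)
    kept = []
    for x in stream:
        if predictive_variance(x_train, [x])[0] > threshold:
            x_train.append(x)  # the oracle would be queried for the label here
            kept.append(x)
    return kept


def pool_select(x_train, pool):
    """(iii) Pool-based: all candidates of U are ranked and the best one is moved to L."""
    pool = np.asarray(pool, dtype=float)
    i = int(np.argmax(predictive_variance(x_train, pool)))
    return pool[i], np.delete(pool, i)


if __name__ == "__main__":
    x_train = [-1.0, -0.5, 0.5, 1.0]
    print(synthesize_query(x_train, -2.0, 2.0))
    print(stream_select(x_train, [0.0, 1.9, 0.1], threshold=2.0))
    print(pool_select(x_train, [0.0, 0.25, 1.8]))
```

With the four training points above, the pool-based call selects 1.8, the only candidate outside the sampled interval, and the stream-based call keeps only 1.9 for a threshold of 2.0. The stream-based rule needs a threshold fixed in advance, whereas the pool-based rule only compares candidates with one another.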

2.1.2. Query strategies

- Query by Committee: a committee of models, each representing a different hypothesis trained on the set L, is defined. The members then vote on the candidate samples, and the sample that generates the most disagreement is selected and added to L [19]. There are different ways to measure the level of disagreement and to make the final vote; the two most used are the vote entropy described in [20] and the Kullback-Leibler (KL) divergence in [21].

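- Expected model change: for a given model and a given sample, the impact the sample would have if added to the training database L is estimated through a gradient calculation. The sample inducing the biggest change is the most relevant one and is added to the training set [22,23]: $x^{*}_{EGL} = \arg\max_{x} \sum_{i} P_{\theta}(y_i \mid x)\, \left\| \nabla \ell_{\theta}\left(L \cup \langle x, y_i \rangle\right) \right\|$, where $\nabla \ell_{\theta}(L \cup \langle x, y_i \rangle)$ is the gradient of the objective function $\ell$ with respect to the model parameters $\theta$, evaluated on L augmented with the tuple $\langle x, y_i \rangle$. Since the true label of x is unknown, the gradient length is averaged over the possible labels $y_i$, weighted by their probability $P_{\theta}(y_i \mid x)$ under the current model.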

- Variance reduction: the most informative sample, which will be added to the training set, is the one minimizing the output variance, i.e. reducing the generalization error through its variance component [24]: $x^{*}_{VR} = \arg\min_{x} \langle \tilde{\sigma}^{2}_{\hat{y}} \rangle^{+x}$, where $\langle \tilde{\sigma}^{2}_{\hat{y}} \rangle^{+x}$ is the estimated mean output variance across the input distribution after the model has been re-trained on the query x and its corresponding label.
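The three query strategies can be sketched as scoring functions over a set of candidates. The sketch is illustrative only and rests on assumptions of its own: vote entropy and the KL-based disagreement follow their usual definitions for a committee of classifiers; the expected gradient length is written for a binary logistic regression with log-loss, assuming (as is common) that the gradient over L is close to zero at the current optimum so that only the new tuple contributes; and variance reduction is written for a linear-in-parameters least-squares model, for which the re-trained variance does not depend on the unknown label. All function names are hypothetical.

```python
import numpy as np


def vote_entropy(votes, n_classes):
    """Query by committee: votes is a (C, N) array holding the label predicted
    by each of the C members for each of the N candidates."""
    votes = np.asarray(votes)
    frac = np.stack([(votes == k).mean(axis=0) for k in range(n_classes)])  # (K, N)
    with np.errstate(divide="ignore", invalid="ignore"):
        terms = np.where(frac > 0, frac * np.log(frac), 0.0)
    return -terms.sum(axis=0)  # select the argmax


def kl_disagreement(probs, eps=1e-12):
    """Query by committee: probs is (C, N, K); mean KL divergence between
    each member's class distribution and the committee consensus."""
    probs = np.asarray(probs, dtype=float)
    consensus = probs.mean(axis=0)  # (N, K)
    kl = (probs * np.log((probs + eps) / (consensus + eps))).sum(axis=2)  # (C, N)
    return kl.mean(axis=0)  # select the argmax


def expected_gradient_length(w, x_cand):
    """Expected model change for binary logistic regression: the log-loss
    gradient of a single tuple <x, y> is (p - y) x, with p = sigmoid(w . x)."""
    x_cand = np.asarray(x_cand, dtype=float)
    p = 1.0 / (1.0 + np.exp(-x_cand @ w))
    norms = np.linalg.norm(x_cand, axis=1)
    # y = 1 (probability p) gives |p - 1| ||x||; y = 0 (probability 1 - p) gives p ||x||
    return p * (1.0 - p) * norms + (1.0 - p) * p * norms  # select the argmax


def variance_reduction(x_train, x_cand, x_ref, ridge=1e-8):
    """Mean predictive variance over reference inputs x_ref (a sample of the
    input distribution) after adding each candidate to a linear model."""
    x_train, x_cand, x_ref = (np.asarray(v, dtype=float) for v in (x_train, x_cand, x_ref))
    a = x_train.T @ x_train + ridge * np.eye(x_train.shape[1])
    scores = []
    for x in x_cand:
        a_inv = np.linalg.inv(a + np.outer(x, x))
        scores.append(np.einsum("ij,jk,ik->i", x_ref, a_inv, x_ref).mean())
    return np.array(scores)  # select the argmin
```

The first three scores are maximized and the last one is minimized, matching the argmax and argmin in the definitions above.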

2.2. s-PGD equations

2.3. Fisher Matrix

2.4. Computation of a new information criterion

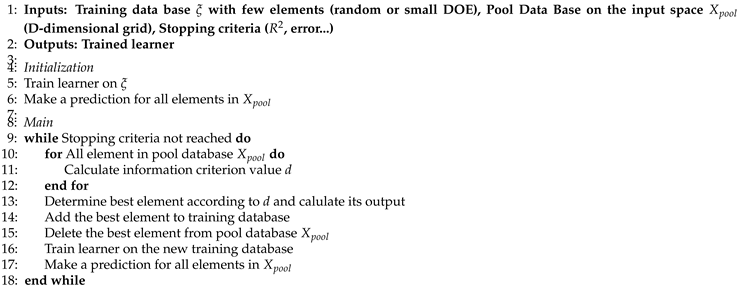

Algorithm 1. Active Learning: Matrix criterion

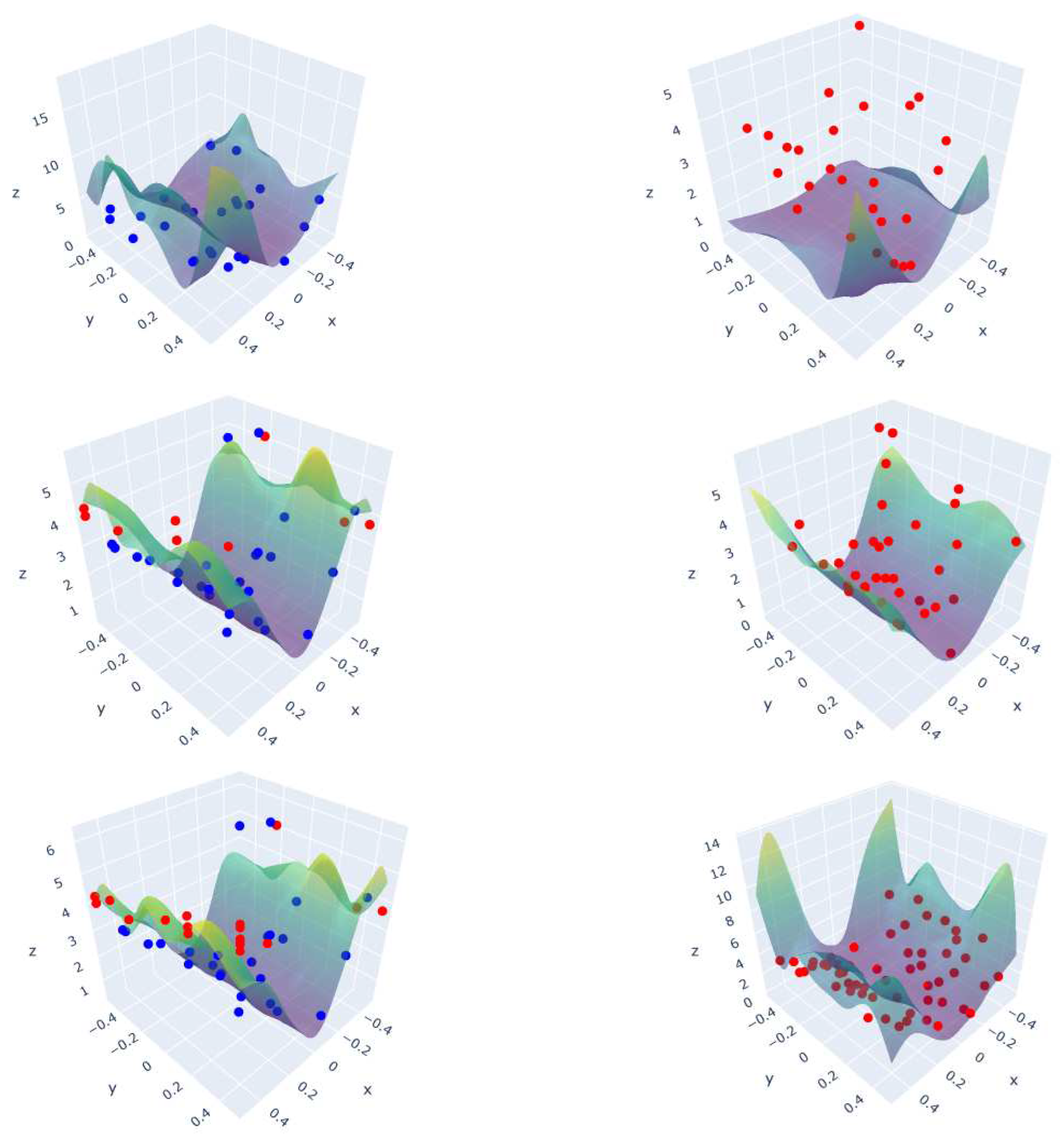

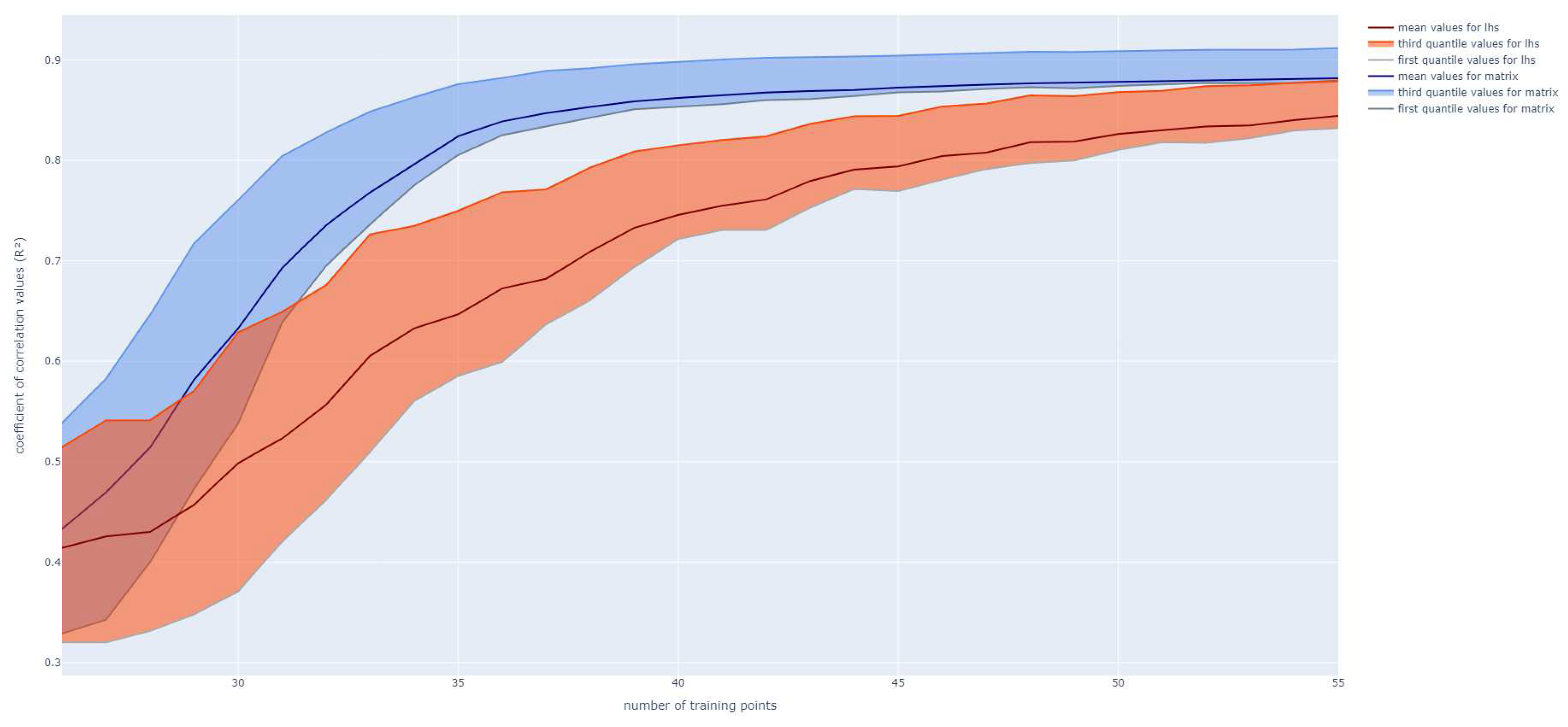

3. Tests and results

3.1. Polynomial function

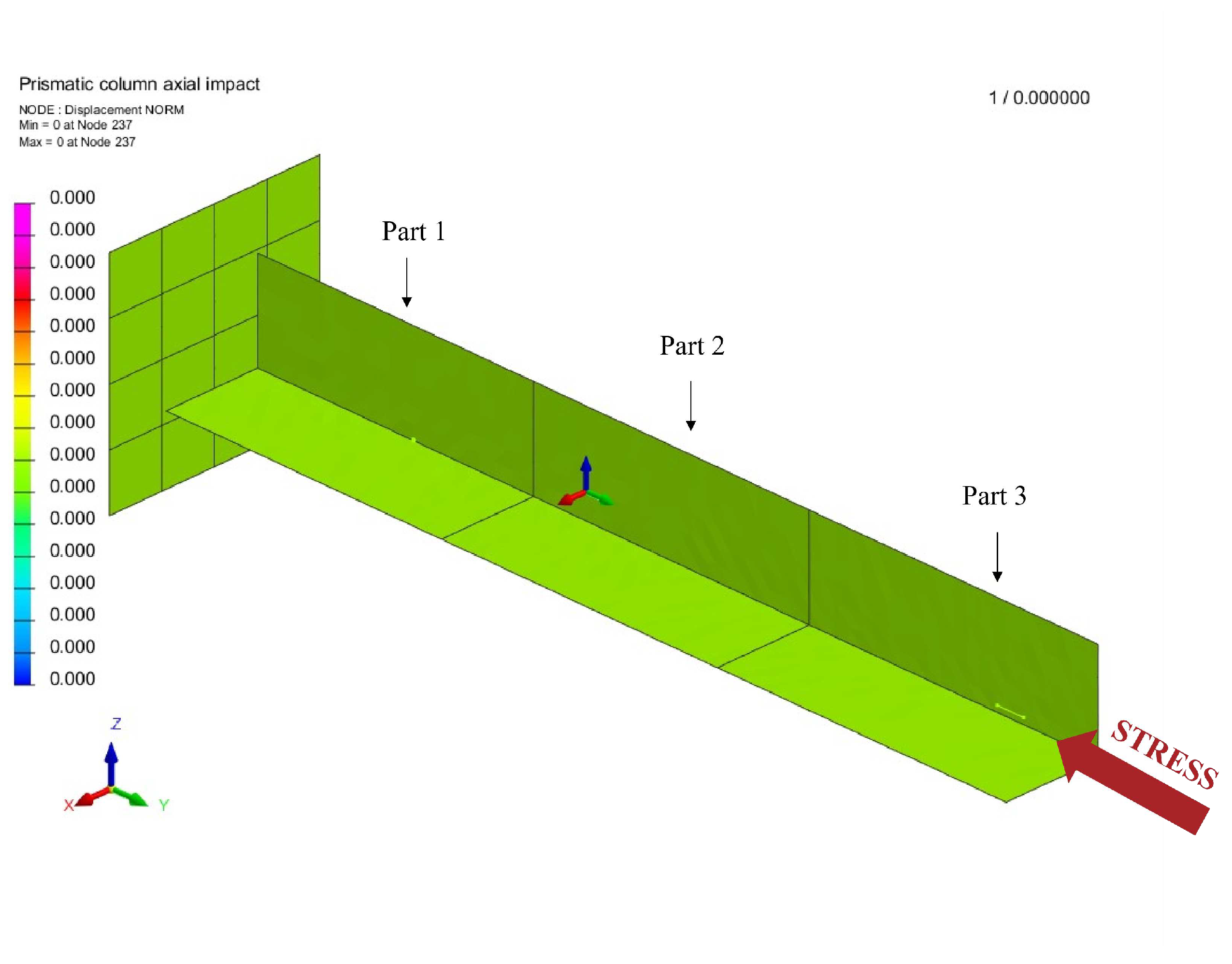

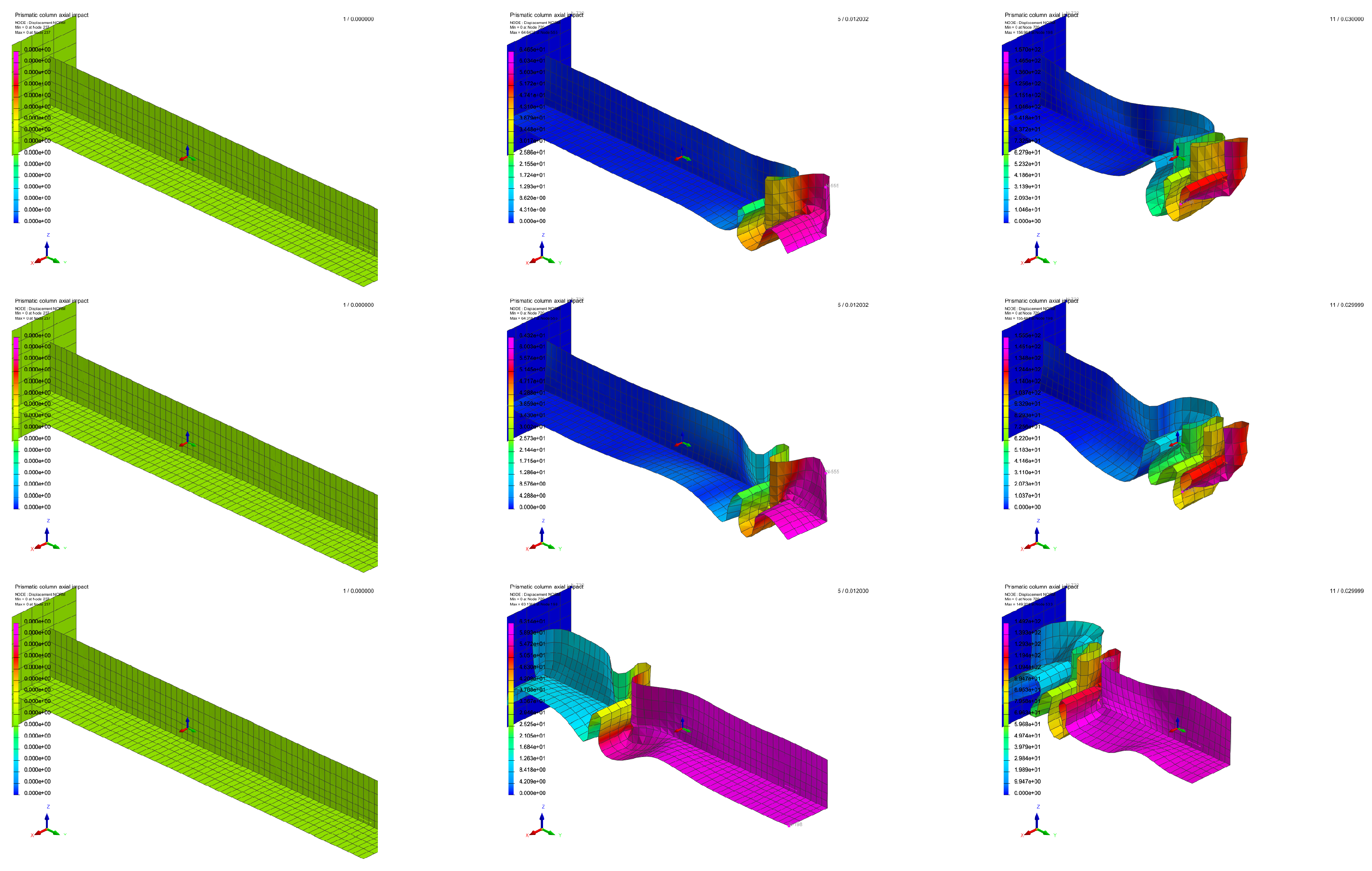

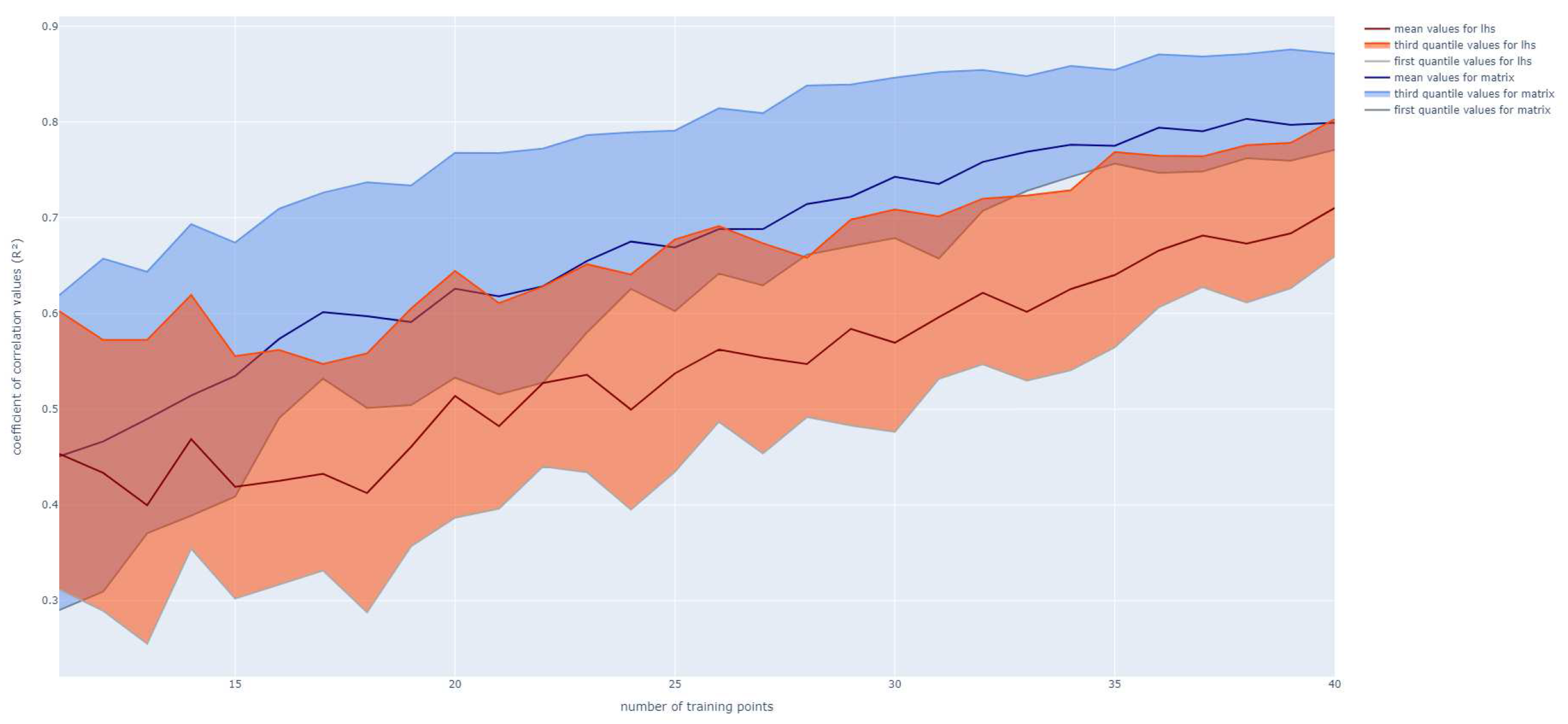

3.2. Application to a box-beam crash absorber

4. Conclusion and future work

- First, in this study the samples are added one by one, but it could be interesting to add them in groups. Indeed, the algorithm seems to need to select several points in the same area to estimate it before moving to other areas. This behaviour can be explained by the fact that the information criterion used aims to minimize the global output variance. Adding points in groups could be a way to address this issue, and further studies should investigate how, and how many, points to add. Moreover, when the real output value (given by the “oracle”) comes from experiments, it is more practical to run several points simultaneously, which allows a better organization of the experimental campaign.
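One possible way to add points in groups, sketched here under assumptions that are not from this work, is greedy batch construction: for a linear-in-parameters model the information matrix $A = \sum_i \phi(x_i)\phi(x_i)^T$ grows by a rank-one term for each selected point whatever its label, so several points can be chosen before any oracle call, each pick already accounting for the previous ones. The function `greedy_batch` and the mean-variance criterion it uses are illustrative choices, not the matrix criterion of this paper.

```python
import numpy as np


def mean_variance(a, x_ref):
    """Mean of phi^T A^-1 phi over the reference inputs (rows of x_ref)."""
    a_inv = np.linalg.inv(a)
    return np.einsum("ij,jk,ik->i", x_ref, a_inv, x_ref).mean()


def greedy_batch(x_train, x_cand, x_ref, batch_size, ridge=1e-8):
    """Select batch_size candidate indices one at a time. After each pick the
    information matrix is updated, so the next pick accounts for it. No labels
    are needed because the variance of a linear model depends only on inputs."""
    x_train, x_cand, x_ref = (np.asarray(v, dtype=float) for v in (x_train, x_cand, x_ref))
    a = x_train.T @ x_train + ridge * np.eye(x_train.shape[1])
    available = list(range(len(x_cand)))
    batch = []
    for _ in range(min(batch_size, len(available))):
        scores = [mean_variance(a + np.outer(x_cand[i], x_cand[i]), x_ref) for i in available]
        best = available[int(np.argmin(scores))]
        batch.append(best)
        available.remove(best)
        a += np.outer(x_cand[best], x_cand[best])  # rank-one update of the information
    return batch


if __name__ == "__main__":
    x_train = [[1.0, 0.0], [0.0, 1.0]]
    x_cand = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # indices 0 and 1 are duplicates
    x_ref = [[1.0, 0.0], [0.0, 1.0]]
    print(greedy_batch(x_train, x_cand, x_ref, batch_size=2))
```

In this small example the two duplicated candidates are never both selected: once one of them is in the batch, the variance in that direction has already been reduced and the remaining candidate elsewhere becomes more informative.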

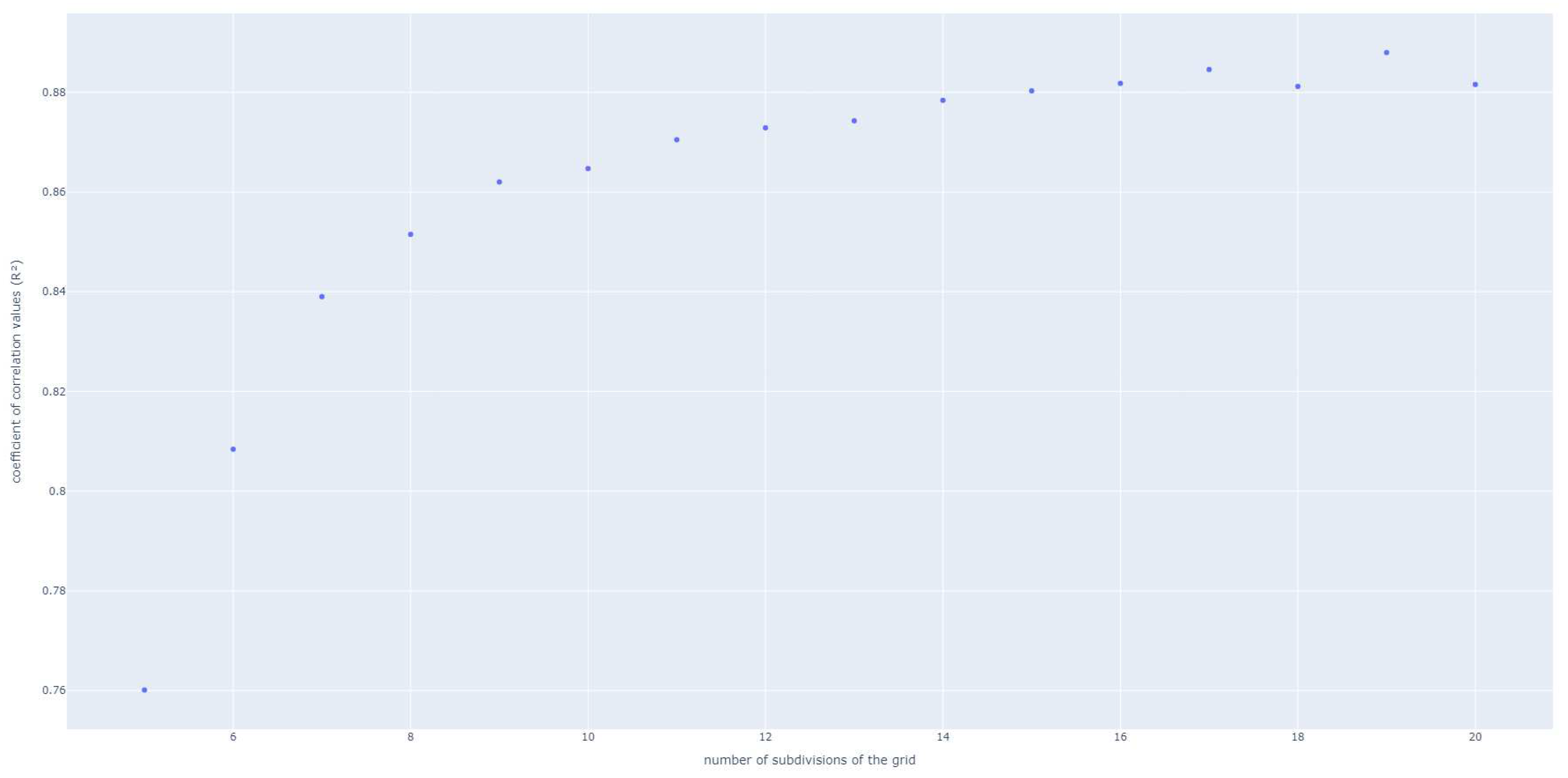

- Another point that could be improved is the search for the optimum value of the criterion. Currently, a simple search over a refined grid is used. Using an optimization method to find the optimum (such as gradient descent) or an adaptive grid that refines itself near the interesting areas could be an option. This would also reduce the computational cost of the algorithm: searching precisely without generating a huge grid would be a significant improvement.

- In terms of practical use, developing an algorithm to determine, and possibly adapt throughout the active learning process, the s-PGD parameters (number of modes, function degrees) could also improve the speed of convergence.

- Finally, combining the criterion with a more specific cost function is also being considered to improve the results, as was studied in the preliminary steps of the method's development.
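The adaptive grid mentioned among these directions can be sketched as a coarse-to-fine search: evaluate the criterion on a small grid, re-centre a grid of the same size on the best node with a half-width of one grid step, and repeat. This is a minimal 1-D sketch under assumptions of its own (the function name `refine_grid_search` and its parameters are hypothetical, and the criterion is assumed to be vectorized); like any local refinement, it can miss a narrow optimum lying between the nodes of the first coarse grid.

```python
import numpy as np


def refine_grid_search(criterion, lo, hi, n=11, levels=6):
    """Maximize a vectorized 1-D criterion on [lo, hi] with coarse-to-fine grids.
    Each level evaluates n points, then zooms onto +/- one grid step around the
    best node, clipped to the original bounds."""
    lo0, hi0 = lo, hi
    best = lo
    for _ in range(levels):
        grid = np.linspace(lo, hi, n)
        best = grid[np.argmax(np.asarray(criterion(grid)))]
        step = (hi - lo) / (n - 1)
        lo, hi = max(lo0, best - step), min(hi0, best + step)
    return best


if __name__ == "__main__":
    x_opt = refine_grid_search(lambda x: -(x - 0.3) ** 2, -1.0, 1.0)
    print(x_opt)  # 66 criterion evaluations in total
```

With n = 11 and six levels on [-1, 1], the sketch evaluates the criterion 66 times and ends with a grid step of about 6.4 × 10^-5, whereas a uniform grid with the same step would need about 31,000 points.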

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Detailed definition of the matrix method criterion

References

- Mitchell, T. Machine Learning; McGraw-Hill, 1997.

- Laughlin, R.B.; Pines, D. The theory of everything. Proceedings of the National Academy of Sciences of the United States of America 2000.

- Goupy, J.; Creighton, L. Introduction to Design of Experiments; Dunod/L’Usine nouvelle, 2006.

- Settles, B. Active Learning Literature Survey. Computer Sciences Technical Report 1648, University of Wisconsin-Madison 2009.

- Frieden, B.R.; Gatenby, R.A. Principle of maximum Fisher information from Hardy’s axioms applied to statistical systems. Physical Review E 2013.

- Ibáñez, R.; Abisset-Chavanne, E. A Multidimensional Data-Driven Sparse Identification Technique: The Sparse Proper Generalized Decomposition. Hindawi 2018.

- Fisher, R. The Arrangement of Field Experiments. Journal of the Ministry of Agriculture of Great Britain 1926.

- Box, G.E.P.; Hunter, J.S.; Hunter, W.G. Statistics for Experimenters: Design, Innovation, and Discovery; Wiley, 2005.

- Plackett, R.L.; Burman, J.P. The Design of Optimum Multifactorial Experiments. Biometrika 1946.

- McKay, M.D.; Beckman, R.J.; Conover, W.J. A Comparison of Three Methods for Selecting Values of Input Variables in the Analysis of Output from a Computer Code. Technometrics, American Statistical Association 1979.

- Angluin, D. Queries and concept learning. Machine Learning 1988.

- Angluin, D. Queries revisited; Springer-Verlag, 2001.

- Cohn, D.; Ghahramani, Z.; Jordan, M. Active learning with statistical models. Journal of Artificial Intelligence Research 1996.

- Cohn, D.; Atlas, L.; Ladner, R. Training connectionist networks with queries and selective sampling. Advances in Neural Information Processing Systems (NIPS) 1990.

- Lewis, D.; Gale, W. A sequential algorithm for training text classifiers. Proceedings of the ACM SIGIR Conference on Research and Development in Information Retrieval 1994.

- Lewis, D.; Catlett, J. Heterogeneous uncertainty sampling for supervised learning. Proceedings of the International Conference on Machine Learning (ICML) 1994.

- Scheffer, T.; Decomain, C.; Wrobel, S. Active hidden Markov models for information extraction. Proceedings of the International Conference on Advances in Intelligent Data Analysis (CAIDA) 2001.

- Shannon, C. A mathematical theory of communication. Bell System Technical Journal 1948.

- Seung, H.S.; Opper, M.; Sompolinsky, H. Query by committee. Proceedings of the ACM Workshop on Computational Learning Theory 1992.

- Dagan, I.; Engelson, S. Committee-based sampling for training probabilistic classifiers. Proceedings of the International Conference on Machine Learning (ICML) 1995.

- McCallum, A.; Nigam, K. Employing EM in pool-based active learning for text classification. Proceedings of the International Conference on Machine Learning (ICML) 1998.

- Settles, B.; Craven, M.; Ray, S. Multiple-instance active learning. Advances in Neural Information Processing Systems (NIPS) 2008.

- Settles, B.; Craven, M.; Friedland, L. Active learning with real annotation costs. Proceedings of the NIPS Workshop on Cost-Sensitive Learning 2008.

- MacKay, D. Information-based objective functions for active data selection. Neural Computation 1992.

- Chinesta, F.; Huerta, A.; Rozza, G.; Willcox, K. Encyclopedia of Computational Mechanics, Model Order Reduction; John Wiley and Sons, 2015.

- Berkooz, G.; Holmes, P.; Lumley, J.L. The Proper Orthogonal Decomposition in the Analysis of Turbulent Flows. Annual Review of Fluid Mechanics 1993.

- Jolliffe, I.T.; Cadima, J. Principal component analysis: a review and recent developments. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 2016.

- Sancarlos, A.; Champaney, V.; Duval, J.L.; Chinesta, F. PGD-based Advanced Nonlinear Multiparametric Regression for Constructing Metamodels at the scarce data limit 2021.

- Ibáñez, R. Advanced physics-based and data-driven strategies. PhD thesis, École centrale de Nantes 2019.

- Sancarlos, A.; Cueto, E.; Chinesta, F.; Duval, J.L. A novel sparse reduced order formulation for modeling electromagnetic forces in electric motors. SN Applied Sciences 2021.

- Sancarlos, A.; Cameron, M.; Abel, A.; Cueto, E.; Duval, J.L.; Chinesta, F. A novel sparse reduced order formulation for modeling electromagnetic forces in electric motors. Archives of Computational Methods in Engineering 2020.

- Argerich, C. Study and development of new acoustic technologies for nacelle products. PhD thesis, Universitat Politècnica de Catalunya 2020.

- Fisher, R.A. On the mathematical foundations of theoretical statistics. Philosophical Transactions of the Royal Society of London. Series A, Containing Papers of a Mathematical or Physical Character 1922.

- Kiefer, J.; Wolfowitz, J. The equivalence of two extremum problems. Canadian Journal of Mathematics 1960.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).