1. Introduction

The prevalence of osteoporosis has been on the rise, in part due to the ageing population [1]. This condition is characterised by reduced bone mineral density (BMD) and mechanical strength, which increases the risk of pathological osteoporotic fractures [2]. Vertebral fractures are the most common osteoporotic fractures because the spine is rich in trabecular bone, which is more prone to microarchitectural deterioration than cortical bone [3,4]. Post-menopausal women are at a higher risk of osteoporotic vertebral fractures (OVFs), given the high prevalence of osteoporosis and osteopenia in this population [5,6].

OVFs are a significant burden to healthcare due to reduced quality of life associated with pain, morbidity, and mortality [2,6]. These fractures can limit mobility, interfere with activities of daily living and reduce pulmonary capacity due to OVF-related hyperkyphosis [3]. Fortunately, many of these negative effects can be avoided by prompt pharmacological management, non-pharmacological treatment and lifestyle changes [6,7,8]. Therefore, timely diagnosis of OVFs is essential to aid their early treatment and management [2].

Osteoporotic fractures predict an increased risk of future fractures, and it is therefore important to diagnose OVFs and commence effective treatments to reduce that risk [4]. Consequently, using OVF status as a more definitive biomarker for osteoporosis has gained attention [4,7,10]. OVFs can also predict the risk of more severe fractures, such as those of the hip and pelvis, as OVFs tend to precede these critical and potentially life-altering fractures [3,10,11]. However, OVFs are challenging to diagnose because their common symptom, back pain, is non-specific, which can delay detection. Furthermore, up to two-thirds of OVFs are clinically silent but may, in time, progress to more disruptive clinical features [3].

Chest radiography is a widely used imaging modality in clinical practice, which makes it a convenient and opportunistic screening tool for OVFs [11,12]. Nevertheless, OVFs remain underdiagnosed on chest radiographs [7]. This is because radiologists tend to focus on the cardiopulmonary anatomy that predominantly forms the basis of the clinical inquiry, whilst overlooking the vertebral column [11]. Additionally, mild vertebral fractures can be subtle and require closer inspection, often leading to missed diagnoses even when the radiograph is requested for spinal analysis [13].

Artificial intelligence (AI) is at the forefront of healthcare and is garnering significant interest for its application in medical imaging practice [14]. AI applies trained models and algorithms that use pattern recognition and experiential learning to process, analyse and create solutions to problems [15,16]. Recently, Xiao et al. [11] developed an AI software program called "Ofeye 1.0" that detects OVFs on lateral chest radiographs with 93.9% accuracy, 86% sensitivity, and 97.1% specificity. Despite the promising results reported in their study, this model was validated solely on an Asian population, and its generalisability to other populations, such as Caucasians, is unclear. Its performance may differ when applied to Caucasians due to differences in lifestyle, cultural factors, osteoporotic trends and susceptibility to OVFs [9]. It is pertinent to validate this software tool so that it can reliably be implemented in more clinics to support the timely, efficient, and accurate diagnosis of OVFs. Ofeye 1.0 can process up to 100 radiographs in a single operation, which can help manage the high demand for reporting while reducing the likelihood of missing OVFs [11]. This motivated the present study, which explores the diagnostic value of the newly developed AI tool Ofeye 1.0 in the automatic detection of OVFs on lateral chest radiographs.

This study aims to test the performance of Ofeye 1.0 for detecting OVFs on lateral chest radiographs in a Caucasian population by assessing its sensitivity, specificity and accuracy. We hypothesised that this AI tool could serve as a complementary tool to routine diagnostic radiology practice when reporting chest radiographs in elderly women by improving detection of missed OVFs in patients not referred for spinal disorders. By reducing the risk of missed diagnoses and streamlining radiologists’ workload, it is expected that patient outcomes will be improved.

2. Materials and Methods

2.1. Study design and data collection

This is a cross-sectional study with data collected from three clinical sites. Two of the sites were Australian, one a private practice and the other a public hospital, while the third was a public hospital in Switzerland. These sites were selected through a convenience sampling method, and the cases were chosen by searching the Picture Archiving and Communication System (PACS) using the search terms “X-RAY CHEST” and “at least 60 years” and “female”. The date range covered approximately the six months before the time of the search.

Lateral chest radiographs were manually extracted from the dataset. Some sites retrieved the most recent X-rays from the search, while other sites occasionally selected previous cases with the aim of randomising the sample. All images were anonymised before being processed and analysed by the Ofeye 1.0 tool and read by observers.

Inclusion criteria were as follows: lateral chest radiographs from women over 60 years old with acceptable image quality. The exclusion criteria were: antero-posterior chest radiographs, lumbar spine radiographs, repeat examinations of the same patient, and images that were illegible due to poor image quality or because the vertebrae were obscured by marked lung pathology. Images were also excluded if the original radiologist reports were not available.

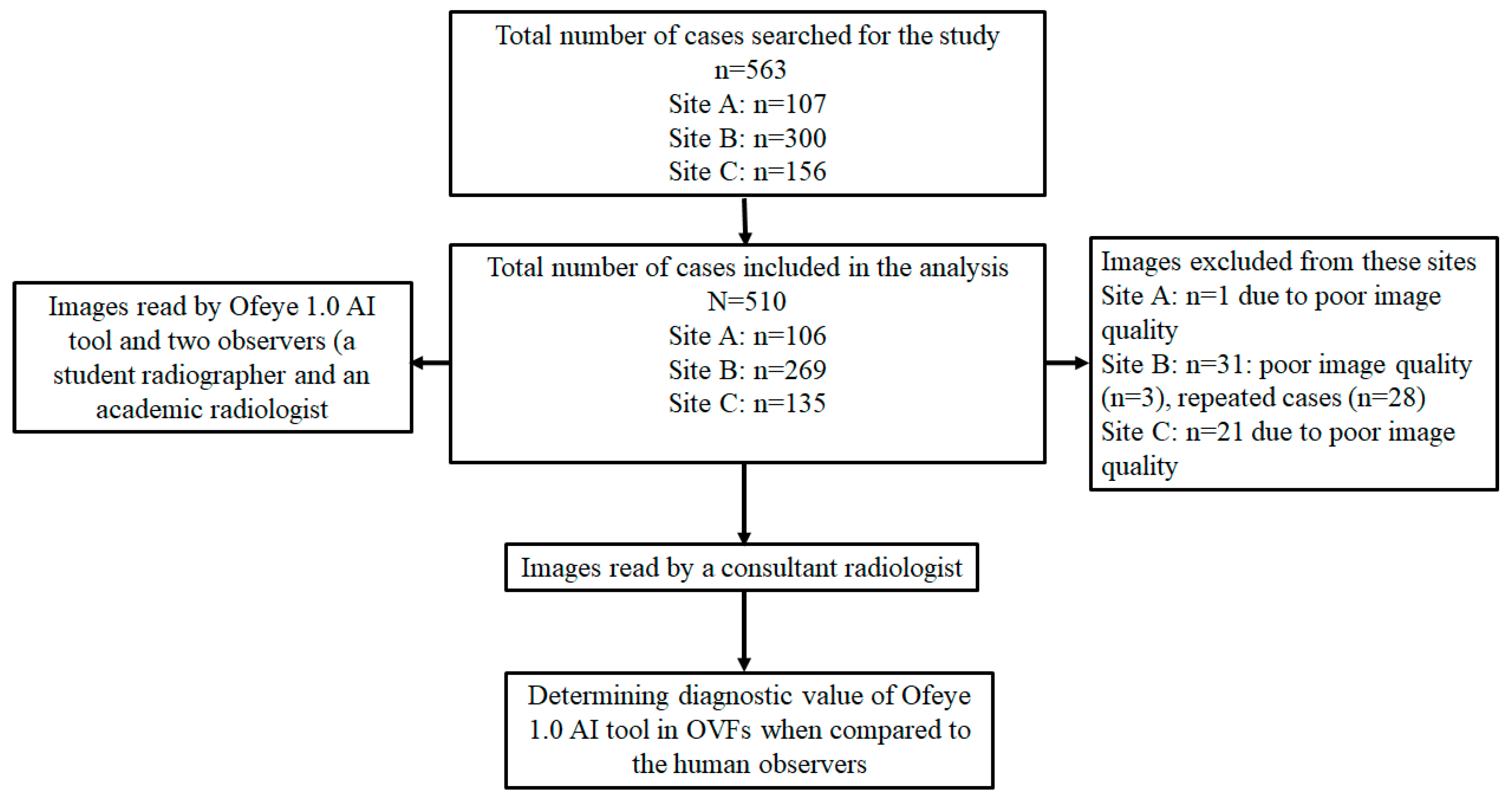

We collected 563 lateral chest radiographs of post-menopausal women and their corresponding radiological reports from three radiology clinics. A total of 510 studies were included, and 53 studies were excluded (Figure 1). Ethics approval was obtained from all of these clinical sites, as well as from the Human Research Ethics Committee, Curtin University.

Figure 1 is a flow chart showing data collection from three clinical sites.

2.2. Image analysis by Ofeye 1.0 for automatic detection of OVF

The accuracy and reliability of Ofeye 1.0 AI algorithm has been validated by Xiao et al [

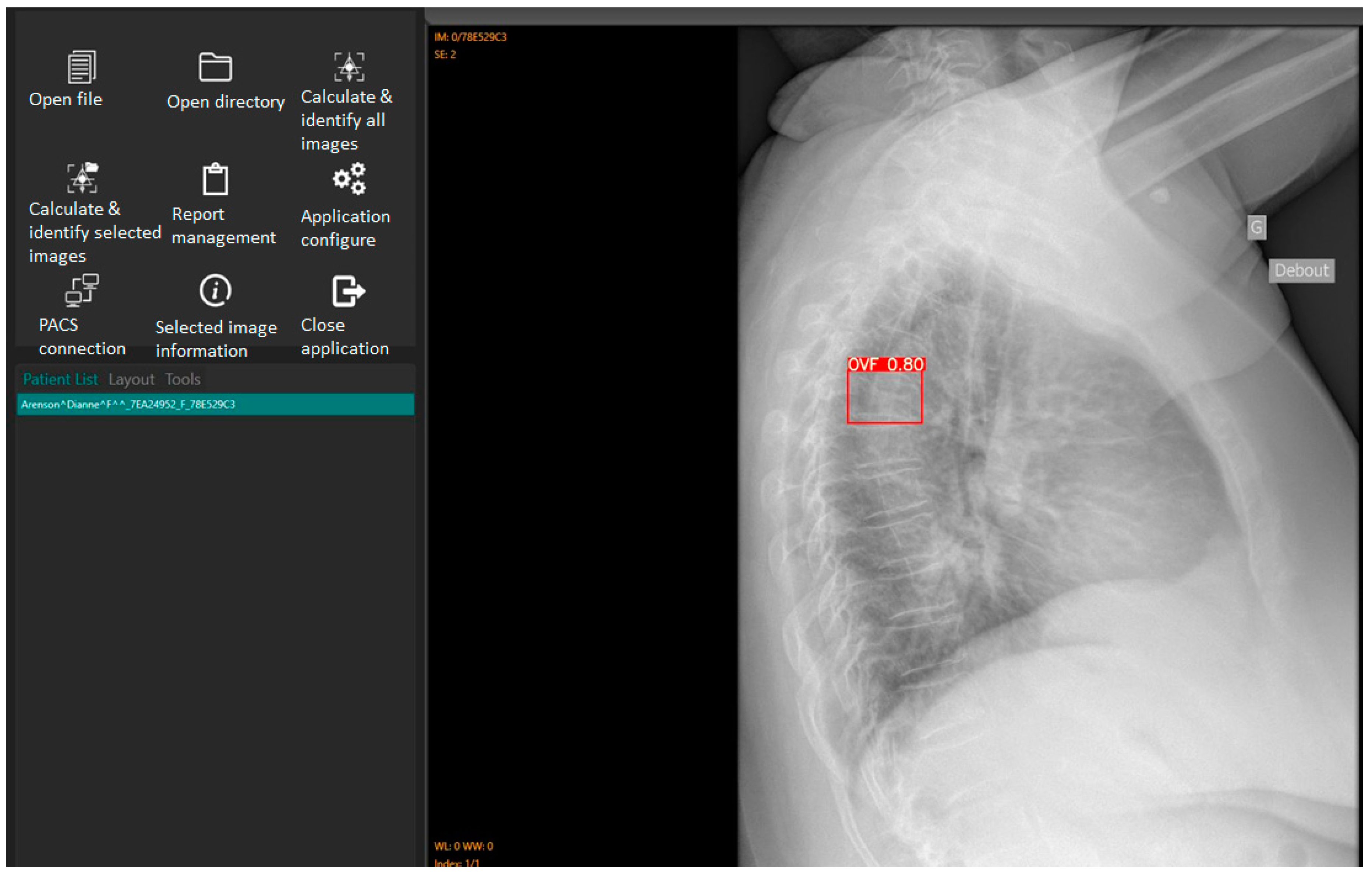

11]. As explained before, the primary application of this AI tool is to assist rapid and efficient identification of OVFs on chest radiographs for routine examinations and not for spinal disorders. Digital Imaging and Communication in Medicine (DICOM) images can be opened using either the “open file” or “open directory” icons. The “Calculate and identify all images” icon batch processes up to 100 images in less than 3 minutes [

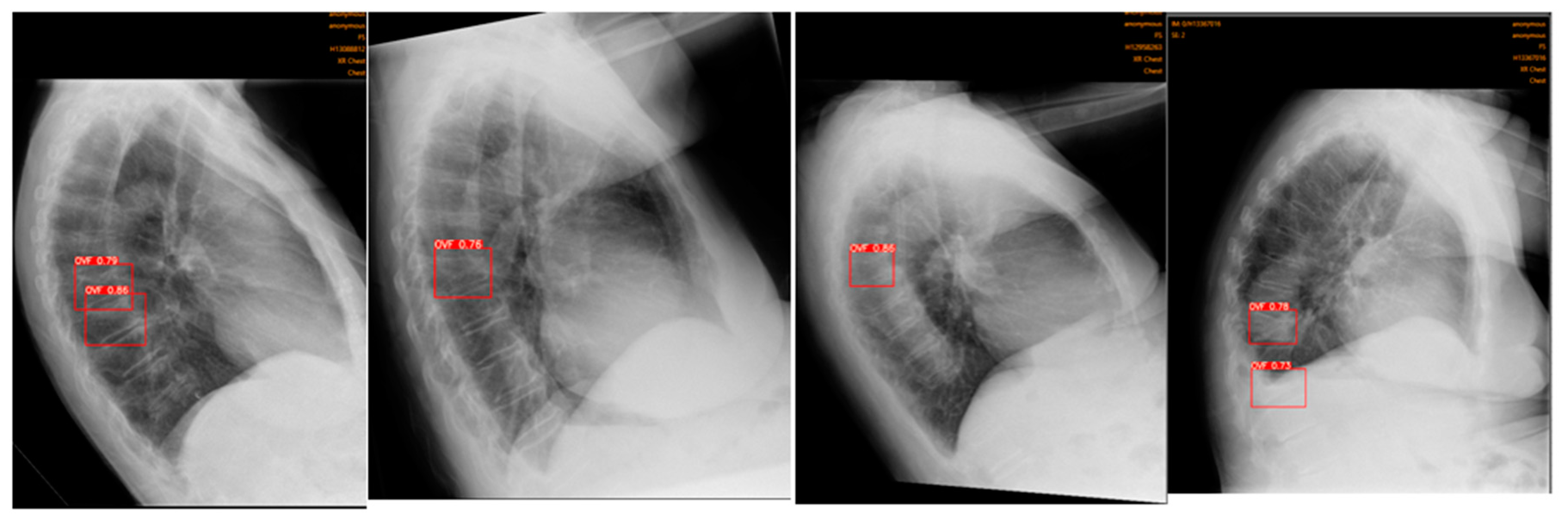

11], outputting a red box around identified OVFs with a percentage indicating the likelihood of a true fracture (

Figure 2). Ofeye1.0 only displays an identified fracture if the percentage likelihood is at least 60% [

11].
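The display rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Detection` structure, the function names and the example likelihoods are hypothetical and do not reflect the actual output format or internals of Ofeye 1.0. The only value taken from the text is the 60% display threshold. The sketch also assumes that a radiograph is counted as AI-positive when at least one box would be displayed.

```python
from dataclasses import dataclass

# Taken from the text: a fracture is only displayed at a likelihood of at least 60%.
DISPLAY_THRESHOLD = 0.60


@dataclass
class Detection:
    """One candidate fracture box (hypothetical structure, not Ofeye 1.0 output)."""
    vertebra: str      # illustrative label such as "T11"
    likelihood: float  # likelihood of a true fracture, in the range 0..1


def displayed_detections(detections, threshold=DISPLAY_THRESHOLD):
    """Keep only candidates whose likelihood reaches the display threshold."""
    return [d for d in detections if d.likelihood >= threshold]


def case_is_positive(detections, threshold=DISPLAY_THRESHOLD):
    """Assumption: a radiograph is AI-positive if at least one box is displayed."""
    return len(displayed_detections(detections, threshold)) > 0


# Illustrative values only.
candidates = [Detection("T7", 0.42), Detection("T11", 0.60), Detection("L1", 0.87)]
shown = displayed_detections(candidates)
print([d.vertebra for d in shown])   # ['T11', 'L1']
print(case_is_positive(candidates))  # True
```

Reducing each radiograph to a single positive or negative label in this way is what allows the AI output to be compared case by case with a human reader.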


2.3. Image assessment by human observers

A consultant radiologist with more than 20 years’ experience in interpreting chest radiographs and thoracic CT images analysed the included images for OVFs, with results documented as either the presence or absence of a fracture.

A fracture was deemed present if it met either of the following criteria [17]:

1. A reduction of at least 20% in the anterior or middle vertebral height compared to the posterior height.

2. A reduction of at least 20% in any of the anterior, middle, or posterior vertebral heights, relative to the vertebra immediately above or below it.
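The two criteria above can be expressed as a short Python sketch. It is illustrative only: in this study the assessment was made visually by human observers, not by software. The class and function names are hypothetical, the heights are assumed to be measured in a common unit, criterion 1 is assumed to use the posterior height of the same vertebra, and criterion 2 is assumed to compare like with like (anterior with anterior, and so on).

```python
from dataclasses import dataclass

HEIGHT_LOSS_THRESHOLD = 0.20  # "at least 20%" in both criteria


@dataclass
class VertebralHeights:
    """Anterior, middle and posterior heights of one vertebral body (same unit)."""
    anterior: float
    middle: float
    posterior: float


def relative_loss(height, reference):
    """Fractional reduction of `height` relative to `reference`."""
    return (reference - height) / reference


def meets_criterion_1(v):
    """Anterior or middle height reduced by >= 20% versus the posterior height."""
    return any(
        relative_loss(h, v.posterior) >= HEIGHT_LOSS_THRESHOLD
        for h in (v.anterior, v.middle)
    )


def meets_criterion_2(v, neighbour):
    """Any height reduced by >= 20% versus the same height of an adjacent vertebra."""
    pairs = [
        (v.anterior, neighbour.anterior),
        (v.middle, neighbour.middle),
        (v.posterior, neighbour.posterior),
    ]
    return any(relative_loss(h, ref) >= HEIGHT_LOSS_THRESHOLD for h, ref in pairs)


def is_fracture(v, above=None, below=None):
    """A fracture is deemed present if either criterion is met."""
    if meets_criterion_1(v):
        return True
    return any(meets_criterion_2(v, n) for n in (above, below) if n is not None)


# Illustrative measurements only.
reference = VertebralHeights(20.0, 20.0, 20.0)
wedge = VertebralHeights(15.0, 18.0, 20.0)   # anterior loss of 25% vs posterior
crush = VertebralHeights(15.0, 15.0, 15.5)   # uniform loss, seen only vs a neighbour
print(is_fracture(wedge))                    # True
print(is_fracture(crush))                    # False
print(is_fracture(crush, above=reference))   # True
```

The last two calls show why the second criterion is needed: a uniformly compressed vertebra keeps a near-normal anterior to posterior ratio and is only flagged when compared with an adjacent vertebra.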

In evaluating the chest radiographs, consideration was given to the normal physiologic wedging that typically occurs at the thoraco-lumbar junction, where wedging of up to 10 degrees is considered within the normal range [18].

The consultant radiologist was blinded to the findings of the AI software and to the original radiologist reports, whose findings were similarly recorded as fracture detected or not detected. The consultant radiologist’s observations served as the gold standard against which the performance of both the AI system and the original radiologist reports was evaluated. In addition, a student radiographer (with 3 years’ experience in medical imaging) and an academic radiologist (with more than 20 years’ experience in interpreting chest radiographs and CT images) assessed these images separately using the same criteria as stated above. Their assessments were then collated, with disagreements resolved by re-assessing the images. This allowed us to compare the diagnostic performance of the AI with the consultant radiologist’s findings.

2.4. Statistical Analysis

Data were entered into MS Excel for analysis. The numbers of true positive (TP), true negative (TN), false positive (FP) and false negative (FN) results of the AI relative to the gold standard were calculated. The sensitivity, specificity, positive predictive value (PPV) and negative predictive value (NPV) of the AI tool, with 95% confidence intervals, were then calculated using the statistical software MedCalc® (v20.305, Ostend, Belgium). The percentage of all OVFs present that were detected by the AI but missed in the original radiologist reports was also calculated.
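For readers who wish to reproduce this type of analysis without MedCalc, the following Python sketch computes the same measures from a 2x2 table. It is a minimal sketch, not the procedure used in this study: the function names are hypothetical, the counts in the usage example are illustrative, and the Wilson score interval is an assumption chosen because it needs only the standard library. The confidence intervals it produces may therefore differ slightly from those reported by MedCalc.

```python
import math


def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives about 95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half, centre + half


def diagnostic_metrics(tp, tn, fp, fn):
    """Return {name: (estimate, ci_low, ci_high)} from the four 2x2 counts."""
    ratios = {
        "sensitivity": (tp, tp + fn),  # detected fractures / all fractures
        "specificity": (tn, tn + fp),  # correct normals / all normals
        "ppv": (tp, tp + fp),          # true fractures / all AI-positive cases
        "npv": (tn, tn + fn),          # true normals / all AI-negative cases
        "accuracy": (tp + tn, tp + tn + fp + fn),
    }
    return {name: (k / n, *wilson_interval(k, n)) for name, (k, n) in ratios.items()}


# Illustrative counts only, not study data.
for name, (est, low, high) in diagnostic_metrics(tp=40, tn=90, fp=10, fn=20).items():
    print(f"{name}: {est:.1%} (95% CI: {low:.1%}, {high:.1%})")
```

Sensitivity and specificity are conditioned on the gold standard, whereas PPV and NPV are conditioned on the AI result and therefore depend on how common fractures are in the sample.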

3. Results

Compared with the consultant radiologist, Ofeye 1.0 showed high specificity for detecting OVFs, exceeding 92% at each individual site and across all sites combined, which supports its reliability as a screening tool (Table 1). In contrast, the sensitivity was relatively low, ranging between 33.3% and 58%.

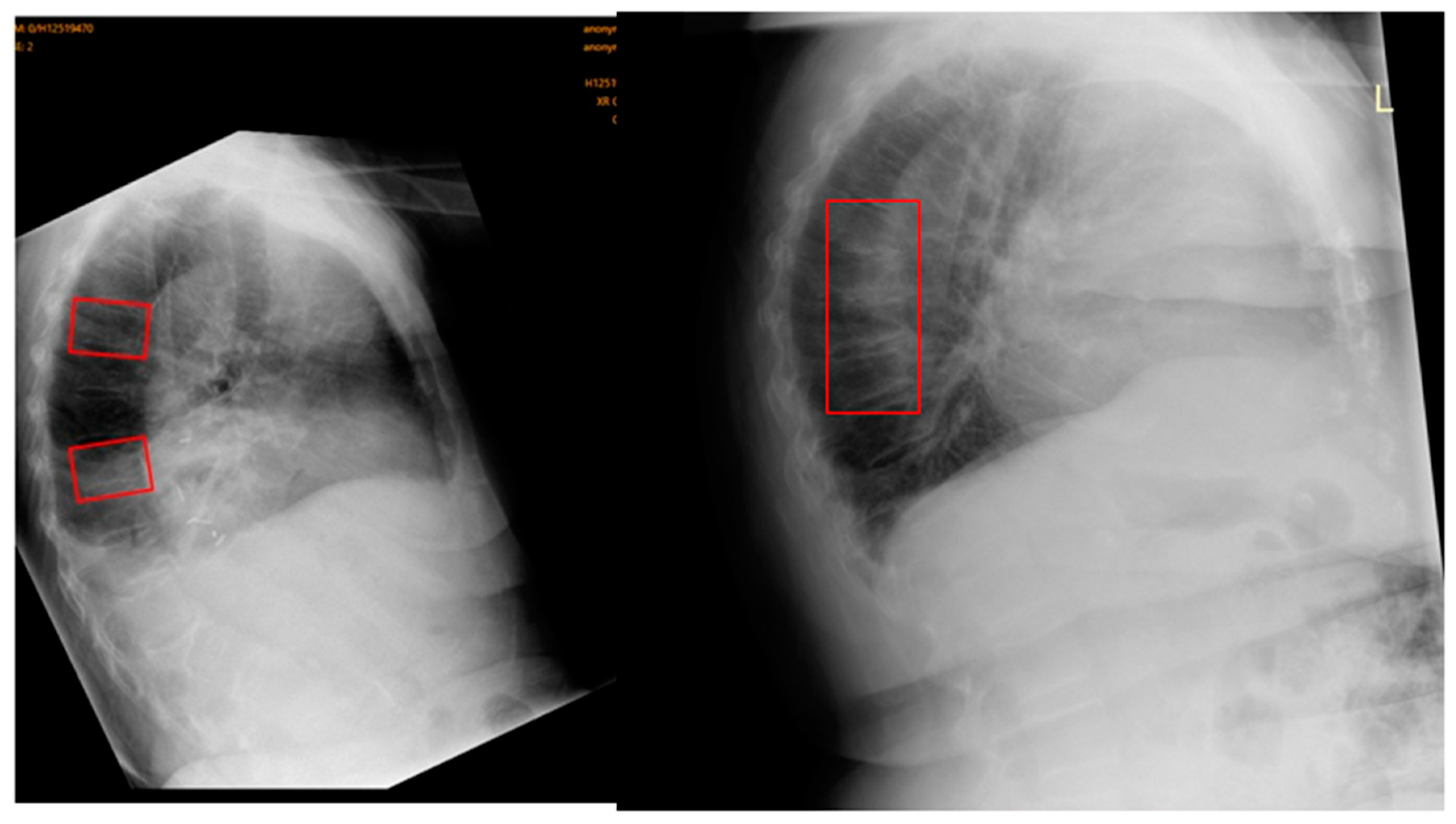

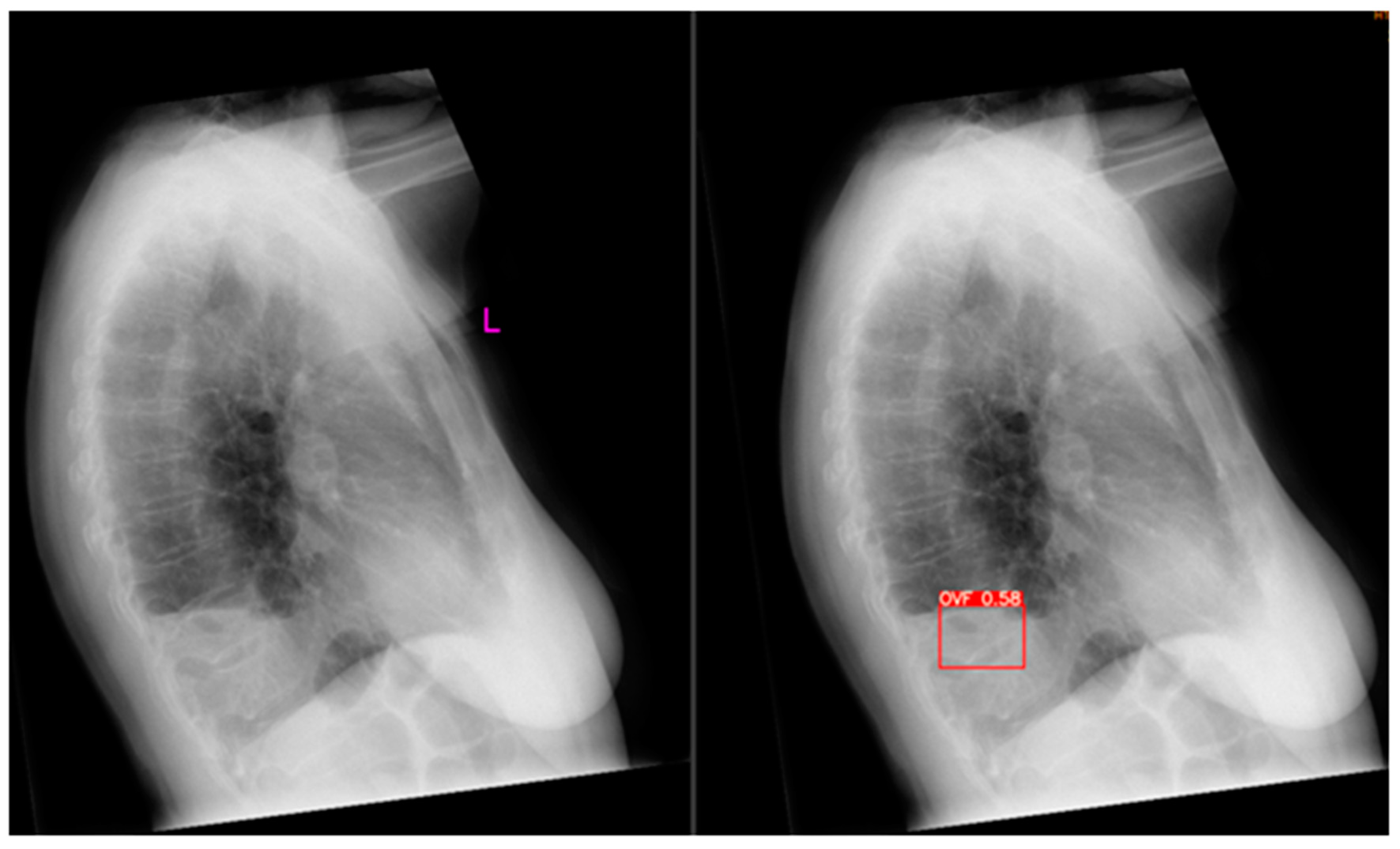

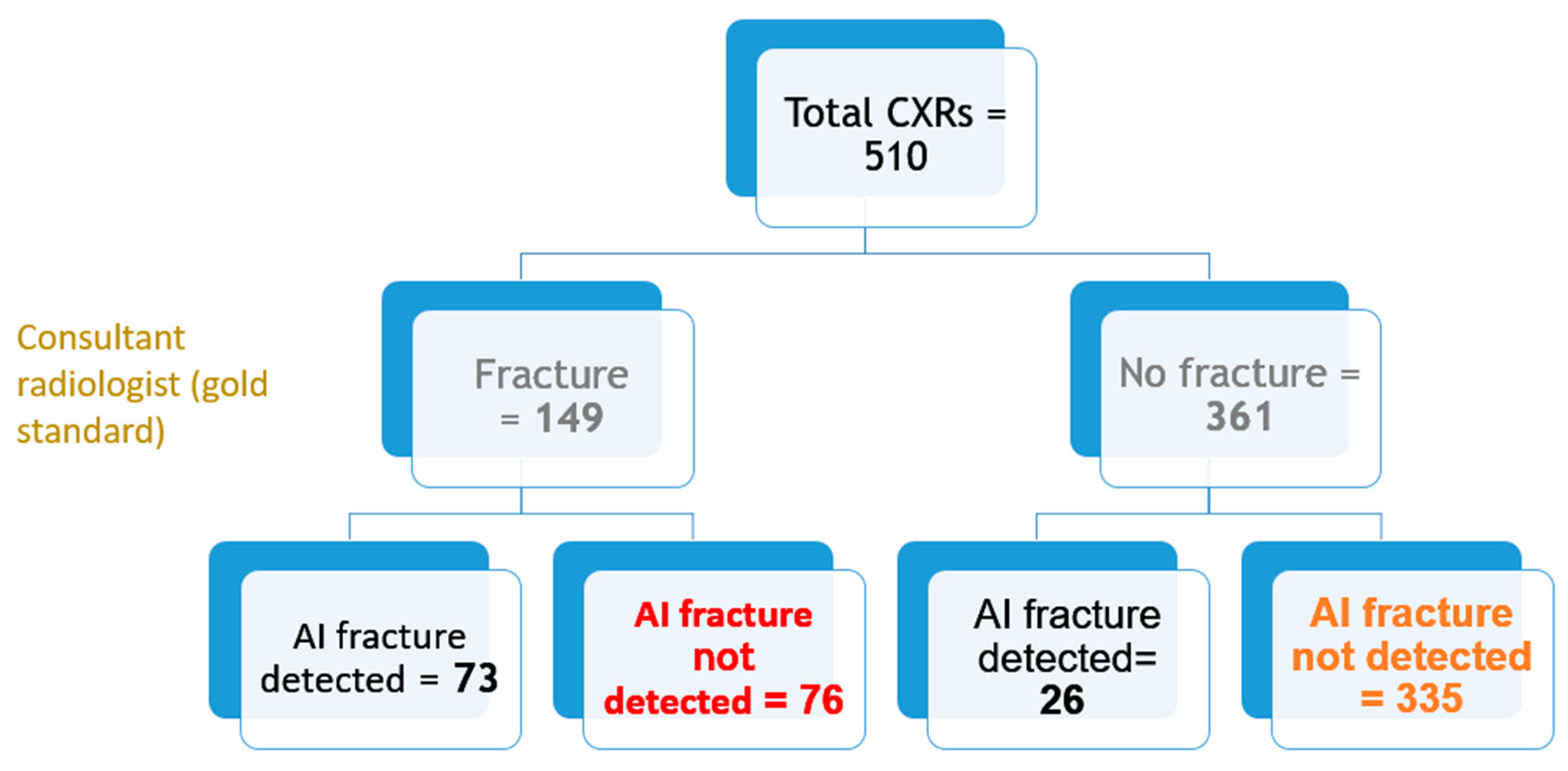

Across all 510 lateral chest radiographs, the AI software correctly detected 73 fractures and missed 76 (Figure 3), resulting in a sensitivity of 49% (95% CI: 40.7, 57.3%). The specificity was 92.8% (95% CI: 89.6, 95.2%), with 335 of the 510 radiographs correctly labelled as fracture-free, whilst 26 normal radiographs were erroneously labelled as having OVFs (Figure 4). The PPV and NPV were 73.7% (95% CI: 65.2, 80.8%) and 81.5% (95% CI: 79, 83.8%), respectively, and the overall accuracy was 80% (95% CI: 76.3, 83.4%).
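As a consistency check, the point estimates above can be reproduced from the four reported counts (TP = 73, FN = 76, TN = 335, FP = 26), assuming the standard 2x2 definitions of each measure. The confidence intervals are not reproduced here because they depend on the interval method used by the statistical software.

$$
\begin{aligned}
\text{Sensitivity} &= \frac{TP}{TP+FN} = \frac{73}{73+76} = \frac{73}{149} \approx 49.0\% \\
\text{Specificity} &= \frac{TN}{TN+FP} = \frac{335}{335+26} = \frac{335}{361} \approx 92.8\% \\
\text{PPV} &= \frac{TP}{TP+FP} = \frac{73}{73+26} = \frac{73}{99} \approx 73.7\% \\
\text{NPV} &= \frac{TN}{TN+FN} = \frac{335}{335+76} = \frac{335}{411} \approx 81.5\% \\
\text{Accuracy} &= \frac{TP+TN}{TP+TN+FP+FN} = \frac{73+335}{510} = \frac{408}{510} = 80.0\%
\end{aligned}
$$

The four counts sum to 510, matching the number of included radiographs.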

Figure 5 is a flowchart summarising the number of cases with or without fractures.

Fifty-two fractures that were missed in the original radiologist reports were detected by the AI; thus, the AI software resulted in a 35% improvement in detection rate (52 of the 149 fractures present; Figure 6).

4. Discussion

This study aimed to assess the validity of the new Ofeye 1.0 software in detecting OVFs on lateral chest radiographs in post-menopausal Caucasian women based on analysis of 510 examinations from three clinical sites. The results revealed a high specificity of 92.8% (95% CI: 89.6, 95.2%), indicating the AI tool’s accuracy in identifying normal chest radiographs when OVFs are absent. This suggests the potential of using the developed AI as a valuable screening tool. However, the sensitivity was found to be low at 49% (95% CI: 40.7, 57.3%), indicating its limitations in reliably serving as a diagnostic tool due to its lower ability to detect mild OVFs when present. Nonetheless, it is worth noting that the AI outperformed original radiologist reports by 35% in OVF detection. As such, integrating the findings of the AI tool into the clinical workflow of radiologists may offer advantages in improving diagnostic outcomes.

This study builds on the work of Xiao et al. [11] by validating their AI tool, Ofeye 1.0. They used nearly 4000 spine radiographs and 2000 chest radiographs from 16 clinical sites to train the AI model, of which 1404 cases had OVFs. They retrieved 542 chest radiographs and 162 spine radiographs from four clinical sites to test the AI model, of which 215 cases had OVFs. Our findings are consistent with those of Xiao et al. in terms of relatively high specificity for detecting OVFs on lateral chest radiographs, although ours was slightly lower (92.8% compared to 97.3%). In contrast, our study demonstrated a substantially lower sensitivity (49% compared to 86%), with a higher false negative rate of 14.9% (76 of 510 radiographs) compared to Xiao et al.’s 7%. The discrepancy between our study and theirs may be due to several reasons. Firstly, Xiao et al. [11] analysed this AI tool in an Asian population, in which vertebral fractures may present differently [4,9,10]. For example, it is possible that fractures appeared more obvious in their sample than the subtle changes noted in our study sample. This would make them easier to detect, leading to fewer false negatives and hence a higher sensitivity. Hence, variations in population characteristics underlie the necessity for further testing of AI on a wide variety of populations before it is implemented for routine clinical use [19]. Furthermore, the current OVF assessment criteria have been critiqued for being too subjective, especially for mild OVFs [3,4]. Hence, it is possible that our study’s observers were more stringent than those of Xiao et al., leading to the large variation between our findings and theirs. Secondly, their study relied on a single reader to act as the gold standard, and no information was provided regarding the reader’s level of expertise in reading spinal radiographs or whether the reader was blinded to the results of Ofeye 1.0. In contrast, in our data analysis, we compared the AI performance with the original diagnostic reports to determine the rate of missed diagnoses in the original reports. Further, our images were assessed initially by two observers, followed by a consultant radiologist whose reading was used as the gold standard. Despite the use of different reading approaches by these observers, our results were consistent across observers, supporting the reliability of our data analysis. The high false negative rate in our study is most likely due to early or less obvious OVFs that were classified as normal by Ofeye 1.0. This will need to be addressed by further studies, for example by having these images assessed by additional radiologists, to allow more robust conclusions to be drawn.

Previous studies have demonstrated the validity of deep-learning models for assessing OVFs on dedicated spinal X-rays, CT scans and DEXA scans [20,21,22]. Burns et al. [22] validated their computer-aided detection (CAD) system for automated detection of thoracic and lumbar vertebral body compression fractures on CT images, with sensitivity, specificity and accuracy of 98.7%, 77.3% and 95%, respectively. Their specificity is lower than ours; however, their sensitivity exceeds that of the current study. These discrepancies are expected, as CT enables enhanced visualisation without interference from overlying anatomy, unlike planar radiography [23]. Chen et al. [24] validated a deep-learning algorithm for detection of OVFs on plain frontal abdominal radiographs, reporting 73.02% specificity, 73.81% sensitivity and 73.59% accuracy. Likewise, Shen et al. [25] recently validated their AI tool for detection of OVFs on dedicated thoracic and lumbar radiographs, reporting 97.25% specificity, 97.41% sensitivity and 84.08% accuracy. Their software demonstrated better validity than was observed in this study; however, a focused spinal radiograph generates less scatter radiation than a chest examination, enabling better image quality with improved contrast resolution and reduced noise for enhanced diagnostics [26]. Furthermore, spinal radiograph exposures are optimised for visualisation of the vertebral bodies, whereas chest radiograph exposures are optimised for pulmonary visualisation rather than spinal anatomy [27].

Compared to these prior studies, this study uniquely contributes to the validation of AI for detecting OVFs on lateral chest radiographs, which are not primarily indicated for spinal analysis. This expands the clinical capacity for widespread, improved detection of OVFs because chest radiographs are a high-yield examination. Considering that the vertebrae are visualised almost in their entirety, lateral chest radiographs provide a unique opportunity for early OVF detection in women not referred for spinal disorders. Additionally, incidental OVF findings are often missed by reporting radiologists, as they tend to confine their attention to the cardiopulmonary anatomy that was the primary focus of the examination [11]. In a busy clinical environment, where radiologists have less than 15 seconds [28] to assess a radiograph, there is an even higher likelihood of missing OVFs on these images. Thus, Ofeye 1.0 may serve as a complementary screening tool for improved detection of OVFs that may otherwise be overlooked. The 35% improvement in OVF detection over the original radiologist reports suggested by our findings demonstrates the benefit of using this AI tool as an aid to radiologist reading. The lives of the patients whose OVFs would otherwise have been missed may be positively impacted by delivering the pertinent treatment and environmental or lifestyle adaptations to address the detected OVFs, improving their functional capacity and preventing the cascade of fractures that tends to ensue after initial mild OVFs. Thus, patients may be spared the incapacitating chronic pain and immobility associated with the advanced stages of OVFs.

A limitation of our study is that we relied on a single consultant radiologist as the gold standard, although with cross-checking by another radiologist and a student radiographer. Ideally, three consultant radiologists would serve as the reference standard to optimise reliability. Furthermore, the criteria used to classify minimal and mild grade OVFs have been criticised for being subjective [29], and the presence of mild OVFs was determined at the sole discretion of this individual consultant. Additionally, we did not categorise the OVFs according to severity, such as minimal, mild, moderate, or severe, which would have enabled a more comprehensive understanding of Ofeye 1.0’s performance in detecting different grades of fractures. It is worth noting that Ofeye 1.0 has also been critiqued for not labelling the type of OVF, instead providing only a probability or confidence that a fracture is present [11].

Our large sample size of 510 lateral chest radiographs across multiple sites constitutes a strength of this study. However, this presented challenges in recruiting multiple consultant radiologists to read these images due to the extensive time required for analysis. Furthermore, there was no standardisation or oversight of the time allocated for the consultant radiologist to read the images, the time of day, or the total time per sitting. Thus, it is possible that the consultant radiologist experienced fatigue if adequate rest was not taken between cases. Despite that, the consultant radiologist remained blinded to the findings of Ofeye 1.0, avoiding any bias or unintentional influence on the consultant radiologist’s decisions.

5. Conclusions

We have validated the use of Ofeye 1.0 as a screening tool for OVFs in Caucasian post-menopausal women. The AI tool exhibits a relatively high specificity of 92.8% (95% CI: 89.6, 95.2%) with a low false positive rate of 5.1% (26 of 510 radiographs). Therefore, this AI tool may complement radiologists to enhance OVF detection rates and diagnostic accuracy. However, its low sensitivity of 49% (95% CI: 40.7, 57.3%) suggests that radiologists should not rely solely on this software for diagnostic purposes and must make the final decision about the diagnosis.

Author Contributions

Conceptualization, Z.S and C.W., and A.G.; methodology, Z.S.; software, Z.S., C.W., and J.S.; validation, J.S., Z.S., and A.G.; formal analysis, A.G.; investigation, J.S., C.W., and Z.S.; resources, A.G., H.S., S.S.G.; data curation, Z.S.; writing—original draft preparation, J.S., C.W; writing—review and editing, J.S., Z.S, and C.R; visualization, J.S., A.G., and Z.S.; supervision, Z.S.; project administration, Z.S.; funding acquisition, A.G., H.S., and Z.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by RPH Small Imaging Grant (SGA0020622) and Faculty of Health Sciences Summer Scholarship, Curtin University (2022-2023).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Curtin University Human Research Ethics Committee (HRE2022-0395).

Informed Consent Statement

Patient consent was waived due to the retrospective nature of the study.

Data Availability Statement

Data are not available due to ethics restrictions.

Acknowledgments

We would like to thank Dr Rene Forsyth for her assistance in collecting some data for inclusion in the analysis, and Dr Brad Zhang for his advice on statistical analysis.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Huang, S.; Zhu, X.; Xiao, D.; Zhuang, J.; Liang, G.; Liang, C.; Zheng, X.; Ke, Y.; Chang, Y. Therapeutic effect of percutaneous kyphoplasty combined with anti-osteoporosis drug on postmenopausal women with osteoporotic vertebral compression fracture and analysis of postoperative bone cement leakage risk factors: a retrospective cohort study. J. Orthop. Surg. Res. 2019, 14(1), 452. [CrossRef]

- Wáng, Y.X.J.; Deng, M.; He, L.C.; Che-Nordin, N.; Santiago, F.R. Osteoporotic vertebral endplate and cortex fractures: A pictorial review. J. Orthop. Translat. 2018,15(1),35-49. [CrossRef]

- Griffith, J.F. Identifying osteoporotic vertebral fracture. Quant. Imaging. Med. Surg. 2015,5(4),592-602. [CrossRef]

- Wáng, Y.X.J. An update of our understanding of radiographic diagnostics for prevalent osteoporotic vertebral fracture in elderly women. Quant. Imaging. Med. Surg. 2022,12(7),3495-3514. [CrossRef]

- Ji, C.; Rong, Y.; Wang, J.; Yu, S.; Yin, G.; Fan, J.; et al. Risk factors for refracture following primary osteoporotic vertebral compression fractures. Pain. Physician. 2021, 24(3),E335-E340. https://www.proquest.com/docview/2656012762?pq-origsite=primo.

- Patel, D.; Liu, J.; Ebraheim, N.A. Managements of osteoporotic vertebral compression fractures: A narrative review. World. J. Orthop. 2022,13(6),564-573. [CrossRef]

- Lenchik, L.; Rogers, L.F.; Delmas, P.D.; Genant, H.K. Diagnosis of Osteoporotic Vertebral Fractures: Importance of Recognition and Description by Radiologists. AJR Am. J. Roentgenol. 2004, 183(4), 949-958. [CrossRef]

- Howe, T.E.; Shea, B.; Dawson, L.J.; Downie, F.; Murray, A.; Ross, C.; et al. Exercise for preventing and treating osteoporosis in postmenopausal women. Cochrane. Database. Syst. Rev. 2011, 7, CD000333. [CrossRef]

- Wáng, Y.X.J. The definition of spine bone mineral density (BMD)-classified osteoporosis and the much inflated prevalence of spine osteoporosis in older Chinese women when using the conventional cutpoint T-score of -2.5. Ann. Transl. Med. 2022, 10(24), 1421. doi:10.21037/atm-22-669. [CrossRef]

- Wáng, Y.X.J.; Lu, Z.H.; Leung, J.C.; Fang, Z.Y.; Kwok, T.C. Osteoporotic-like vertebral fracture with less than 20% height loss is associated with increased further vertebral fracture risk in older women: the MrOS and MsOS (Hong Kong) year-18 follow-up radiograph results. Quant. Imaging. Med. Surg. 2023, 13, 1115-1125. [CrossRef]

- Xiao, B.H.; Zhu, M.S.Y.; Du, E.Z.; Liu, W.H.; Ma, J.B.; Huang, H.; et al. A software program for automated compressive vertebral fracture detection on elderly women’s lateral chest radiograph: Ofeye 1.0. Quant. Imaging. Med. Surg. 2022, 12(8),4259-4271. [CrossRef]

- Gündel, S.; Grbic, S.; Georgescu, B.; Liu, S.; Maier, A.; Comaniciu, D. Learning to Recognize Abnormalities in Chest X-Rays with Location-Aware Dense Networks. In Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications; Vera-Rodriguez, R.; Fierrez, J.; Morales, A. Ed.; Springer International Publishing, Spain, 2019; Vol 11401, p 757–765. [CrossRef]

- Du, M.M.; Che-Nordin, N.; Ye, P.P.; Qiu, S.W.; Yan, Z.H.; Wang, Y.X.J. Underreporting characteristics of osteoporotic vertebral fracture in back pain clinic patients of a tertiary hospital in China. J. Orthop. Translat. 2020, 23,152-158. doi:10.1016/j.jot.2019.10.007. [CrossRef]

- Kelly, B.S.; Judge, C.; Bollard, S.M.; Clifford, S.M.; Healy, G.M.; Aziz, A.; et al. Radiology artificial intelligence: a systematic review and evaluation of methods (RAISE). European Radiology. 2022, 32(11),7998-8007. doi:10.1007/s00330-022-08784-6. [CrossRef]

- Kumar, K.; Thakur, G.S.M. Advanced applications of neural networks and artificial intelligence: A review. I.J. Inform. Technol. Comput. Sci. 2012, 4(6), 57. [CrossRef]

- Nazar, M.; Alam, M.M.; Yafi, E.; Su’ud, M.M. A Systematic Review of Human–Computer Interaction and Explainable Artificial Intelligence in Healthcare With Artificial Intelligence Techniques. IEEE Access. 2021, 9, 153316-153348. [CrossRef]

- Matsumoto, M.; Okada, E.; Kaneko, Y.; Ichihara, D.; Watanabe, K.; Chiba, K.; et al. Wedging of vertebral bodies at the thoracolumbar junction in asymptomatic healthy subjects on magnetic resonance imaging. Surg. Radiol. Anat. 2011, 33(3),223-228. [CrossRef]

- Crawford, M.B.; Toms, A.P.; Shepstone, L. Defining Normal Vertebral Angulation at the Thoracolumbar Junction. AJR. Am. J. Roentgenol. 2009, 193(1),W33-W37. doi:10.2214/AJR.08.2026. [CrossRef]

- Ranschaert, E. Artificial Intelligence in Radiology: Hype or Hope? J. Belg. Soc. Radiol. 2018, 102(1). [CrossRef]

- Kim, D.H.; Jeong, J.G.; Kim, Y.J.; Kim, K.G.; Jeon, J.Y. Automated Vertebral Segmentation and Measurement of Vertebral Compression Ratio Based on Deep Learning in X-Ray Images. J. Digit. Imaging. 2021, 34(4),853-861. [CrossRef]

- Mehta, S.D.; Sebro, R. Computer-Aided Detection of Incidental Lumbar Spine Fractures from Routine Dual-Energy X-Ray Absorptiometry (DEXA) Studies Using a Support Vector Machine (SVM) Classifier. J. Digit. Imaging. 2020, 33(1),204-210. [CrossRef]

- Burns, J.E.; Yao, J.; Summers, R.M. Vertebral body compression fractures and bone density: automated detection and classification in CT. Radiology. 2017, 284, 788-797.

- Kim, K.C.; Cho, H.C.; Jang, T.J.; Choi, J.M.; Seo, J.K. Automatic detection and segmentation of lumbar vertebrae from X-ray images for compression fracture evaluation. Comput. Methods. Programs. Biomed. 2021, 200, 105833. [CrossRef]

- Chen, H.Y.; Hsu, B.W.Y.; Yin, Y.K.; Lin, F.H.; Yang, T.H.; Yang, R.S.; Lee, C.K.; Tseng, V.S. Application of deep learning algorithm to detect and visualize vertebral fractures on plain frontal radiographs. PloS. One. 2021,16(1),e0245992. [CrossRef]

- Shen, L.; Gao, C.; Hu, S.; Kang, D.; Zhang, Z.; Xia, D.; et al. Using Artificial Intelligence to Diagnose Osteoporotic Vertebral Fractures on Plain Radiographs. J. Bone. Miner Res. 2023, 38(9),1278-1287. [CrossRef]

- Lampignano, J.P.; Kendrick, L.E. Bontrager’s Textbook of Radiographic Positioning and Related Anatomy. 10th ed. Elsevier;2021.

- Delrue, L.; Gosselin, R.; Ilsen, B.; Van Landeghem, A.; de Mey, J.; Duyck, P. Difficulties in the Interpretation of Chest Radiography. Springer: Berlin Heidelberg, 2011; pp 27-49. Medical Radiology. [CrossRef]

- Shin, H.J.; Han, K.; Ryu, L.; Kim, E.K. The impact of artificial intelligence on the reading times of radiologists for chest radiographs. NPJ. Digit. Med. 2023, 6(1), 82. [CrossRef]

- Lentle, B.; Koromani, F.; Brown, J.P.; et al. The Radiology of osteoporotic vertebral fractures revisited. J. Bone. Miner Res. 2019, 34(3), 409-418. [CrossRef]

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).