Submitted: 27 October 2023

Posted: 27 October 2023


Abstract

Keywords:

1. Introduction

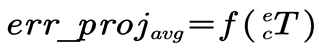

2. Coordinate system definitions and hand-eye calibration equation

2.1. Coordinate system definitions

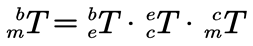

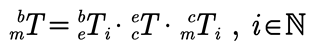

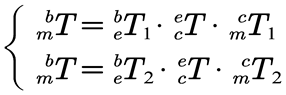

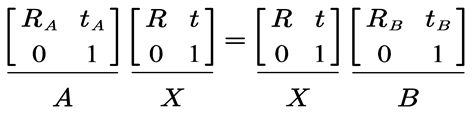

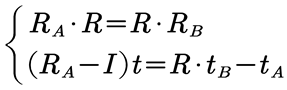

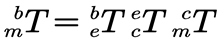

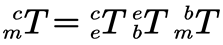

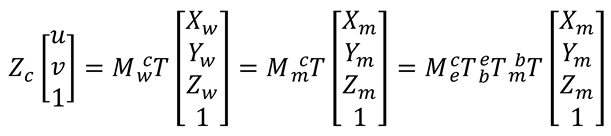

2.2. Hand-eye calibration equation

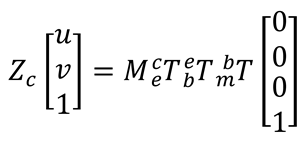

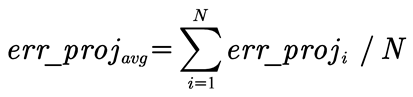

3. Reprojection error analysis method of calibration algorithms

3.1. Hand-eye calibration algorithm simulation experiments

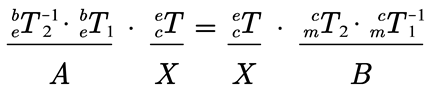

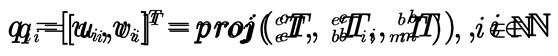

3.2. Heuristic error analysis

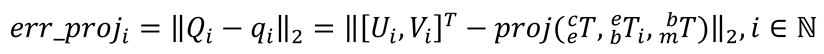

3.3. Reprojection error analysis

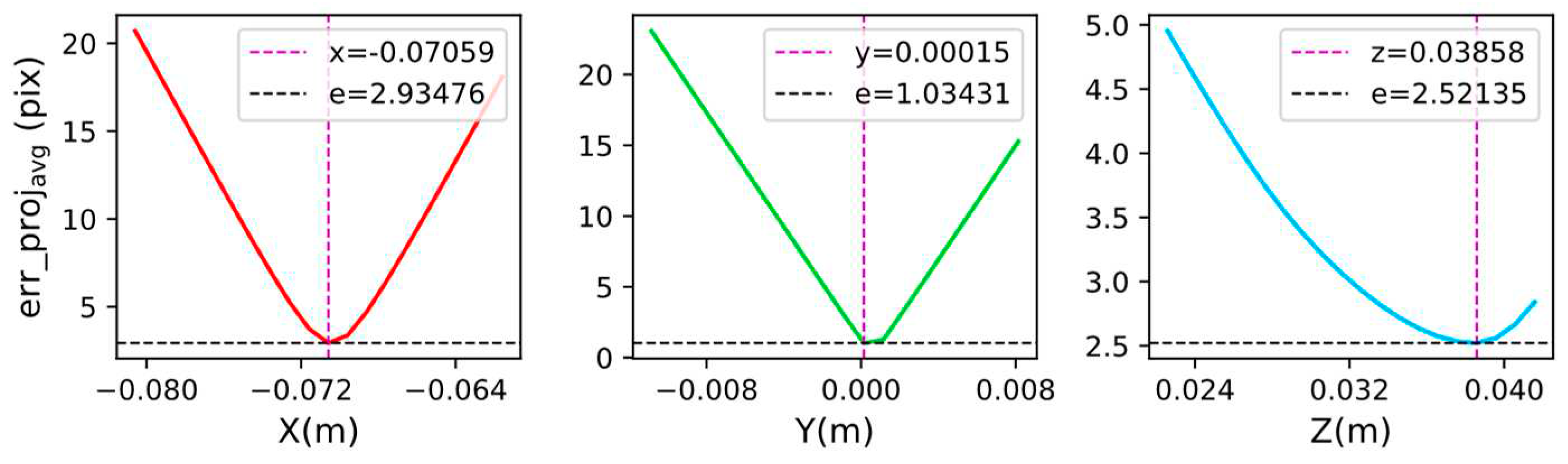

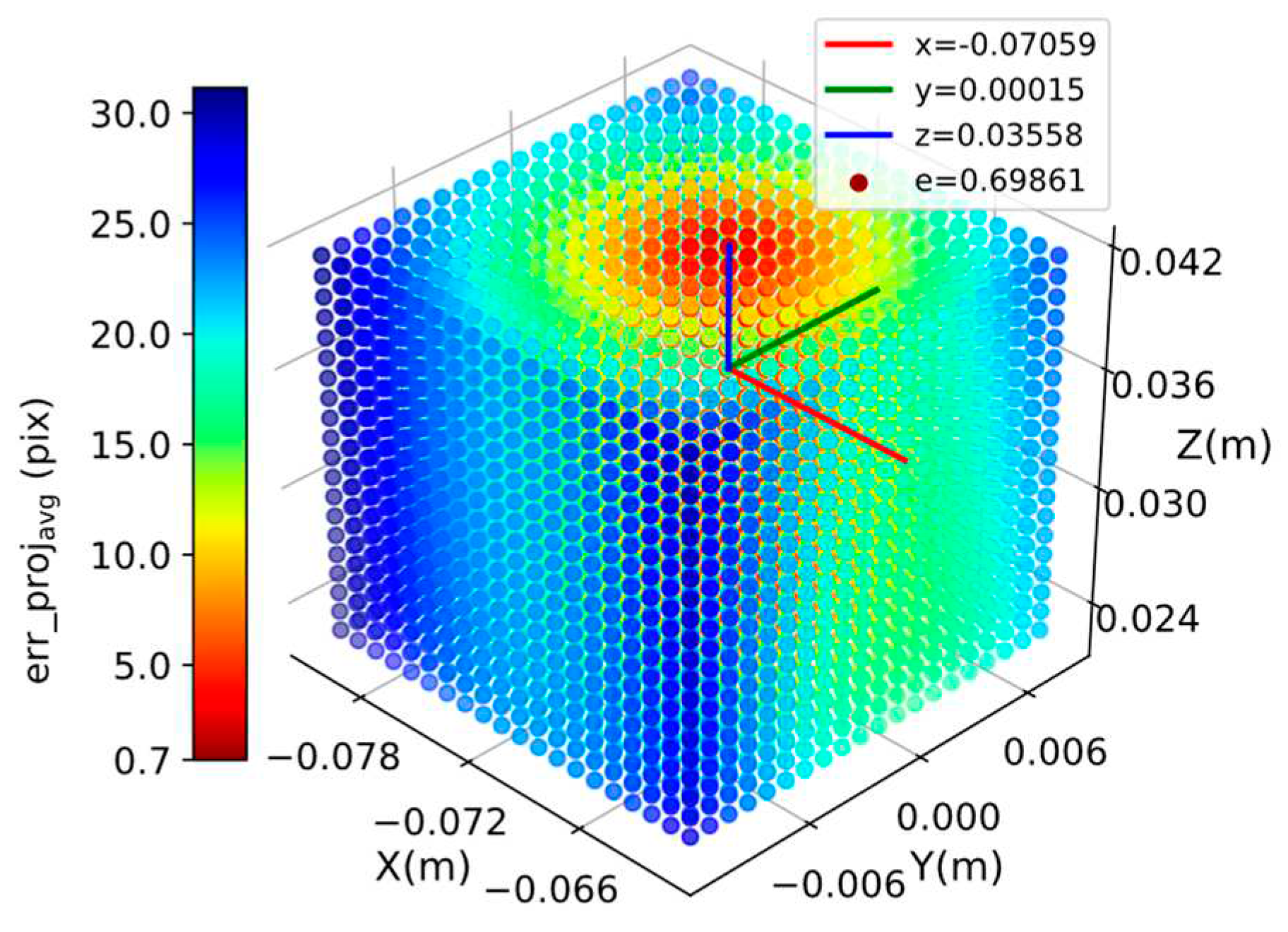

3.4. Optimizing the calibration algorithm by minimizing the reprojection error

4. Hand-eye calibration algorithm experiment

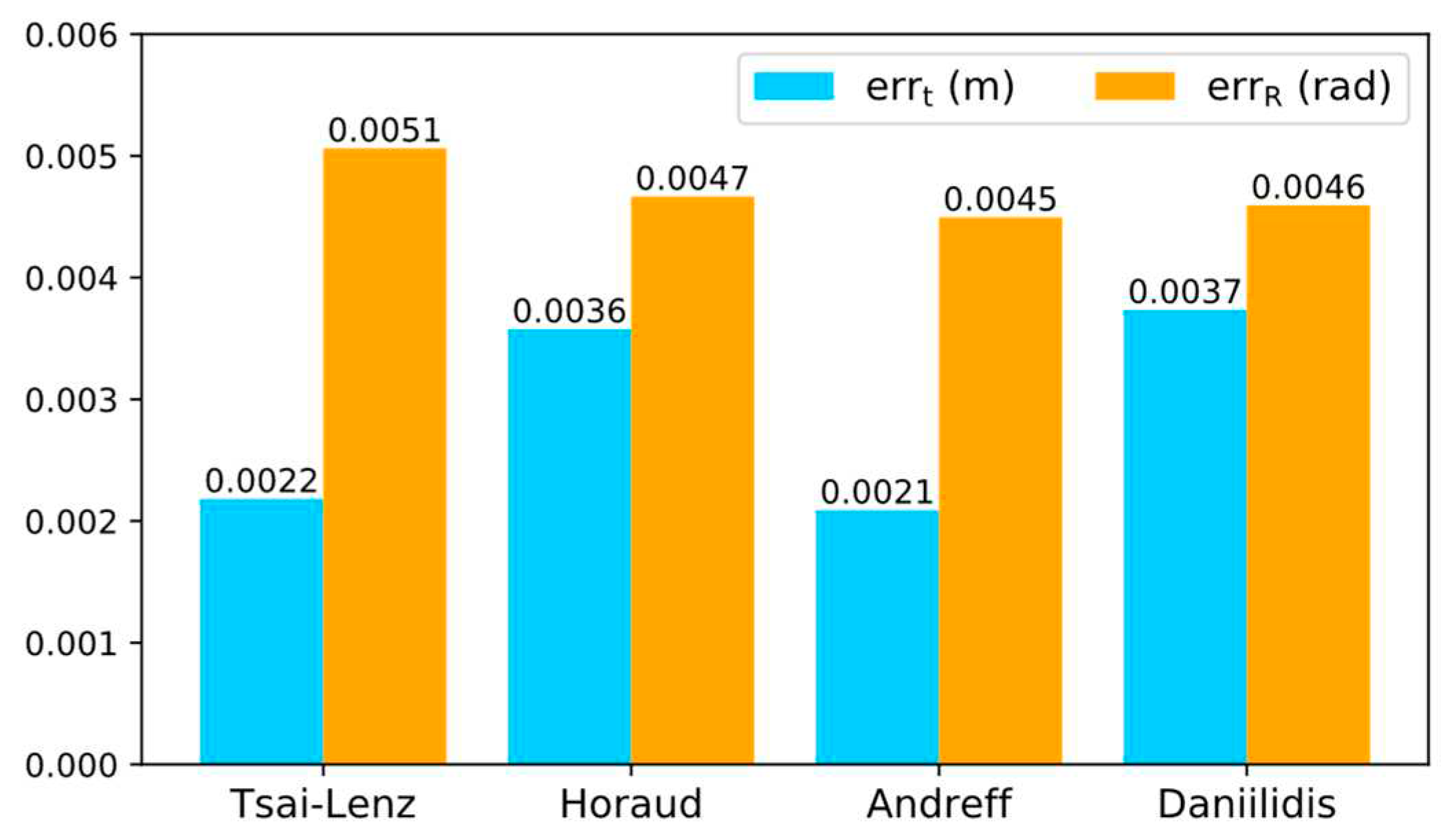

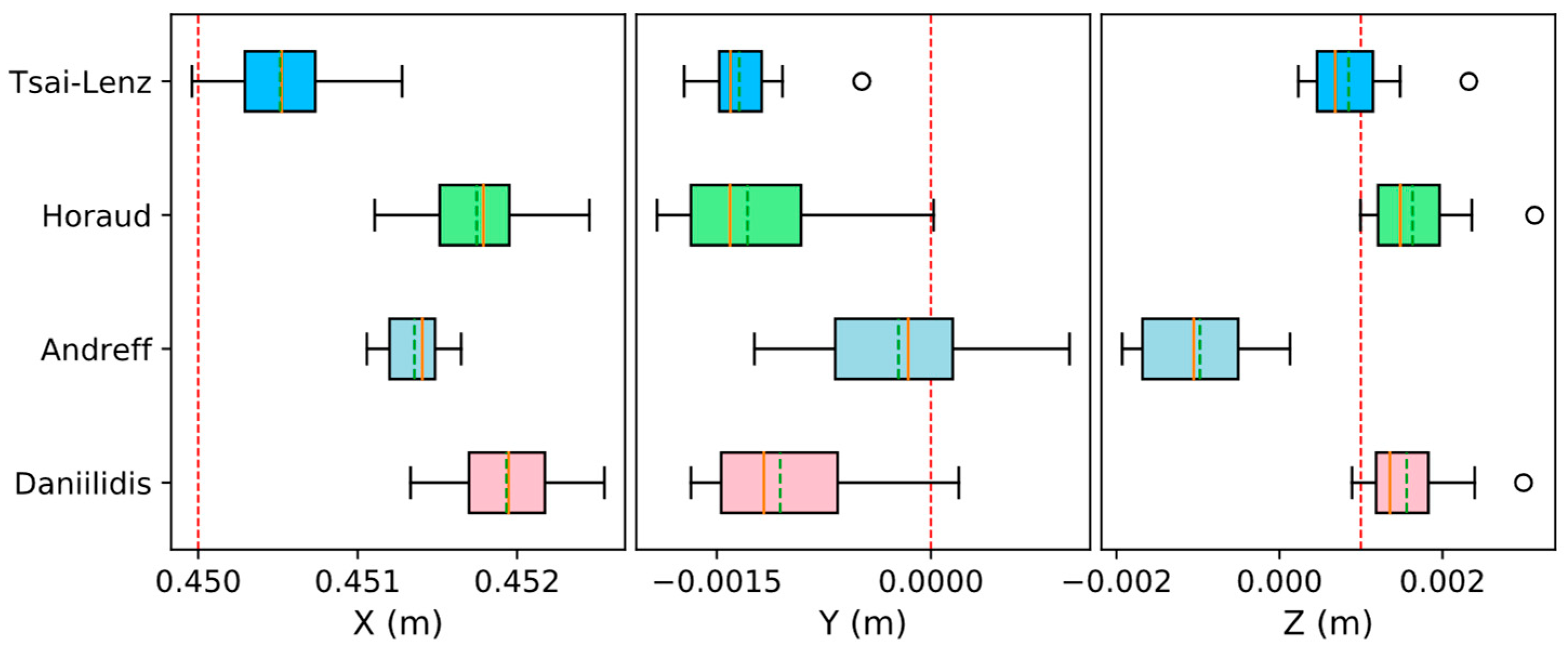

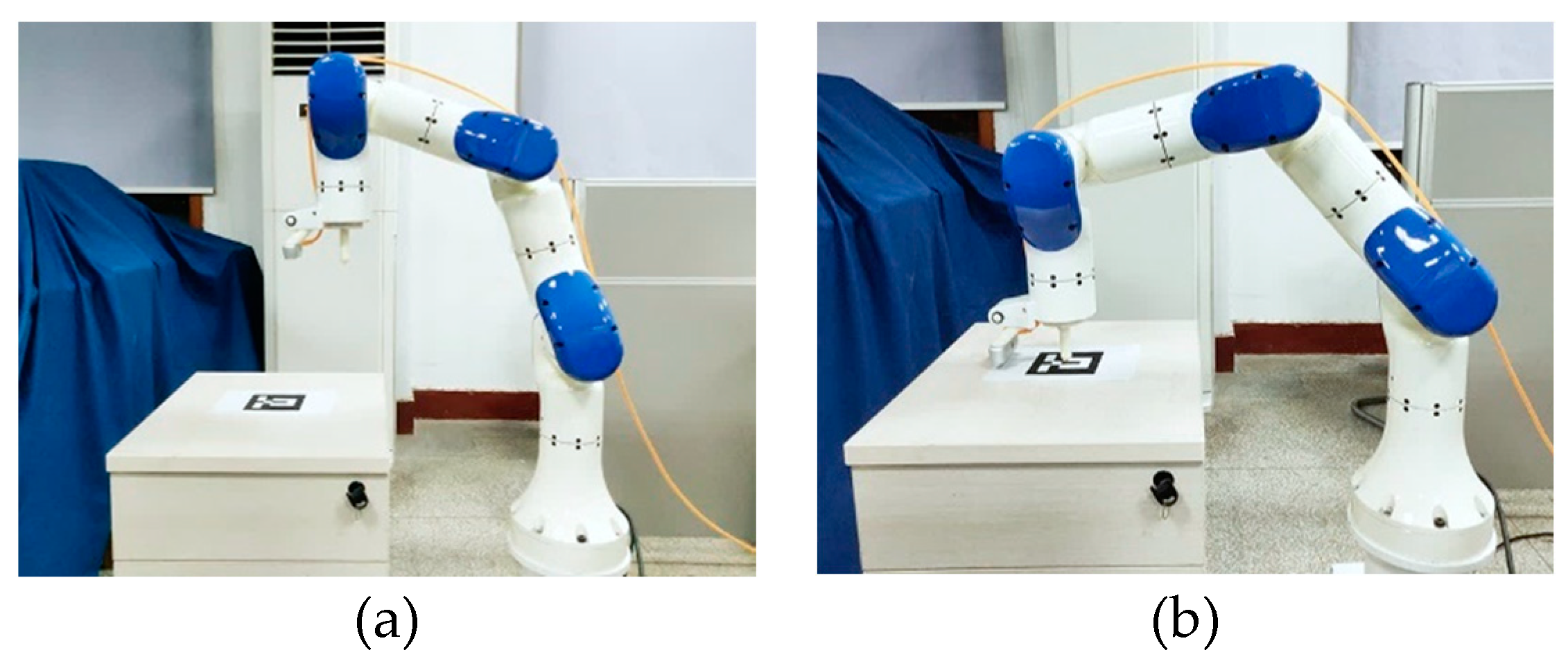

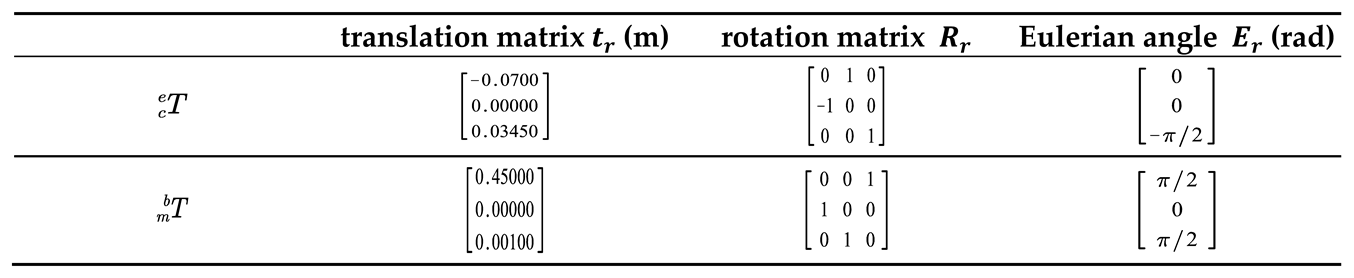

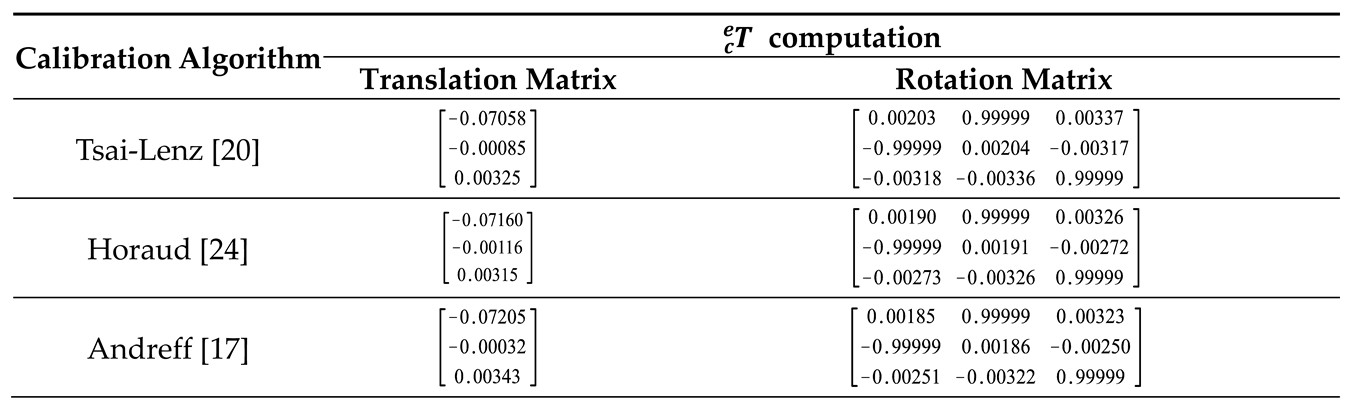

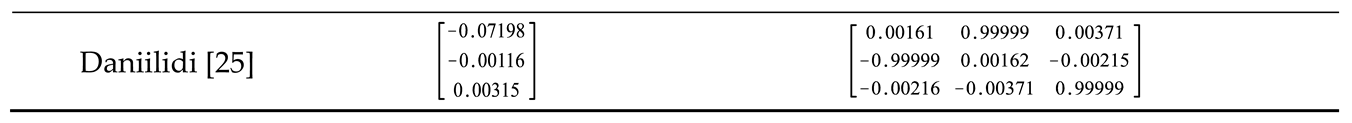

4.1. Calibration process and results

- Using the auxiliary calibration tool, the real translation matrix of the AR marker coordinate system relative to the base coordinate system of the manipulator is obtained; the position of the AR marker is then kept unchanged.

- The manipulator is controlled to move to 20 different states where the corner information of the AR marker can be detected, and the corresponding 20 groups of coordinate system transformation data are collected and recorded.

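- The mean of the AR-marker-to-base transformation matrix is calculated from the coordinate transformation data of each group, and its translation part is replaced by the real translation matrix obtained in the first step; the resulting matrix is used to calculate the reprojection error.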

- The Tsai-Lenz algorithm is used to calculate the initial value of the hand-eye transformation matrix, and its translation parameters are then automatically adjusted to minimize the average reprojection error, which yields the optimized hand-eye transformation matrix.
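
The last step of the procedure above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes an eye-in-hand setup, an ideal pinhole camera without lens distortion, and a simple pattern search standing in for whatever optimizer the paper actually uses. The rotation of the hand-eye matrix is held fixed at its initial (for example Tsai-Lenz) value and only the three translation parameters are adjusted. All function and parameter names (`base_to_camera`, `mean_reprojection_error`, `refine_translation`, `intrinsics`, and so on) are hypothetical.

```python
import math


def mat_t_vec(R, v):
    """Return R^T v for a 3x3 rotation R (list of rows) and a 3-vector v."""
    return [sum(R[k][j] * v[k] for k in range(3)) for j in range(3)]


def base_to_camera(p_base, pose, hand_eye):
    """Map a base-frame point into the camera frame (eye-in-hand assumption).

    pose     = (R_i, t_i): end-effector pose in the base frame
    hand_eye = (R_x, t_x): camera pose in the end-effector frame
    """
    (R_i, t_i), (R_x, t_x) = pose, hand_eye
    p_end = mat_t_vec(R_i, [p_base[k] - t_i[k] for k in range(3)])
    return mat_t_vec(R_x, [p_end[k] - t_x[k] for k in range(3)])


def project(p_cam, intrinsics):
    """Ideal pinhole projection; intrinsics = (fx, fy, cx, cy)."""
    fx, fy, cx, cy = intrinsics
    return (fx * p_cam[0] / p_cam[2] + cx, fy * p_cam[1] / p_cam[2] + cy)


def mean_reprojection_error(t_x, R_x, poses, corners_base, detections, intrinsics):
    """Average pixel distance between projected and detected marker corners."""
    total, count = 0.0, 0
    for pose, detected in zip(poses, detections):
        for p_base, (u, v) in zip(corners_base, detected):
            p_cam = base_to_camera(p_base, pose, (R_x, t_x))
            u_hat, v_hat = project(p_cam, intrinsics)
            total += math.hypot(u_hat - u, v_hat - v)
            count += 1
    return total / count


def refine_translation(t_init, R_x, poses, corners_base, detections, intrinsics,
                       step=0.01, tol=1e-6):
    """Pattern search over the three translation parameters only.

    Tries +/- step along each axis, keeps any move that lowers the mean
    reprojection error, and halves the step when no move helps.
    """
    args = (R_x, poses, corners_base, detections, intrinsics)
    t_best = list(t_init)
    e_best = mean_reprojection_error(t_best, *args)
    while step > tol:
        improved = False
        for axis in range(3):
            for sign in (1.0, -1.0):
                t_try = list(t_best)
                t_try[axis] += sign * step
                e_try = mean_reprojection_error(t_try, *args)
                if e_try < e_best:
                    t_best, e_best, improved = t_try, e_try, True
        if not improved:
            step *= 0.5
    return t_best, e_best
```

Here `corners_base` would hold the AR marker corners expressed in the manipulator base frame, and `detections` the corner pixels detected in each of the recorded robot states. A derivative-free search is used only to keep the sketch dependency-free; a nonlinear least-squares routine such as `scipy.optimize.least_squares` would be a natural substitute.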

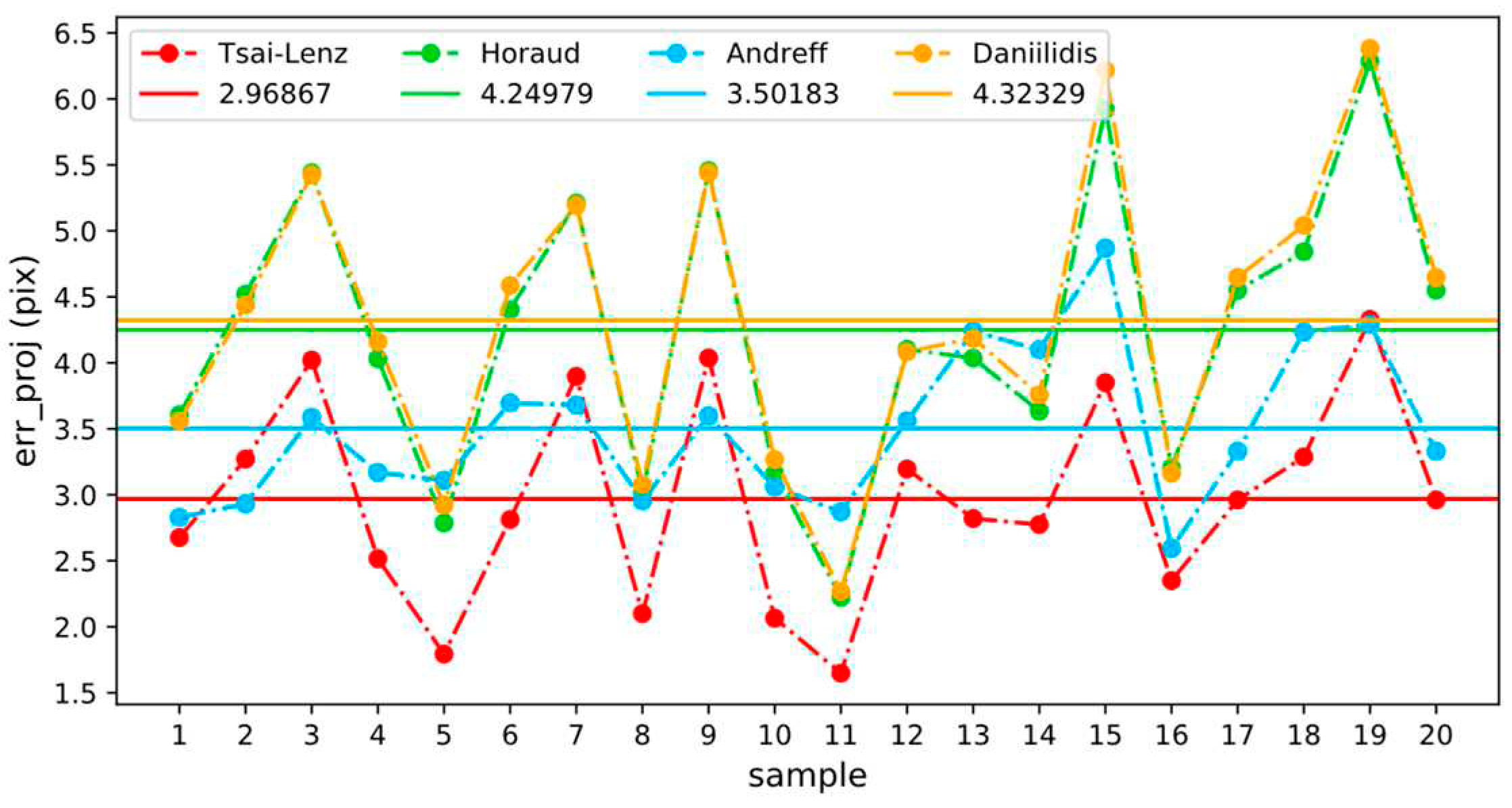

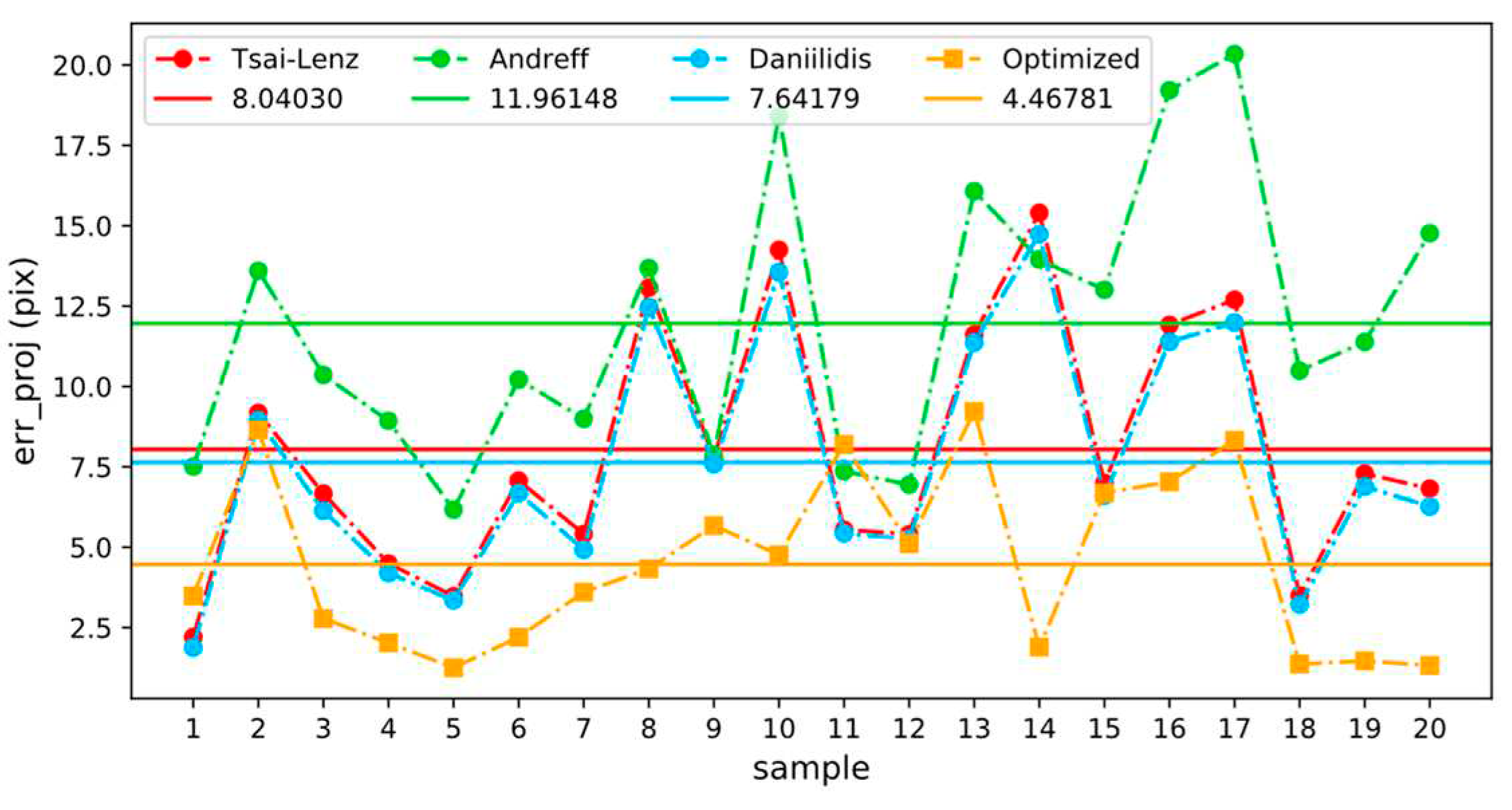

4.2. Reprojection error analysis

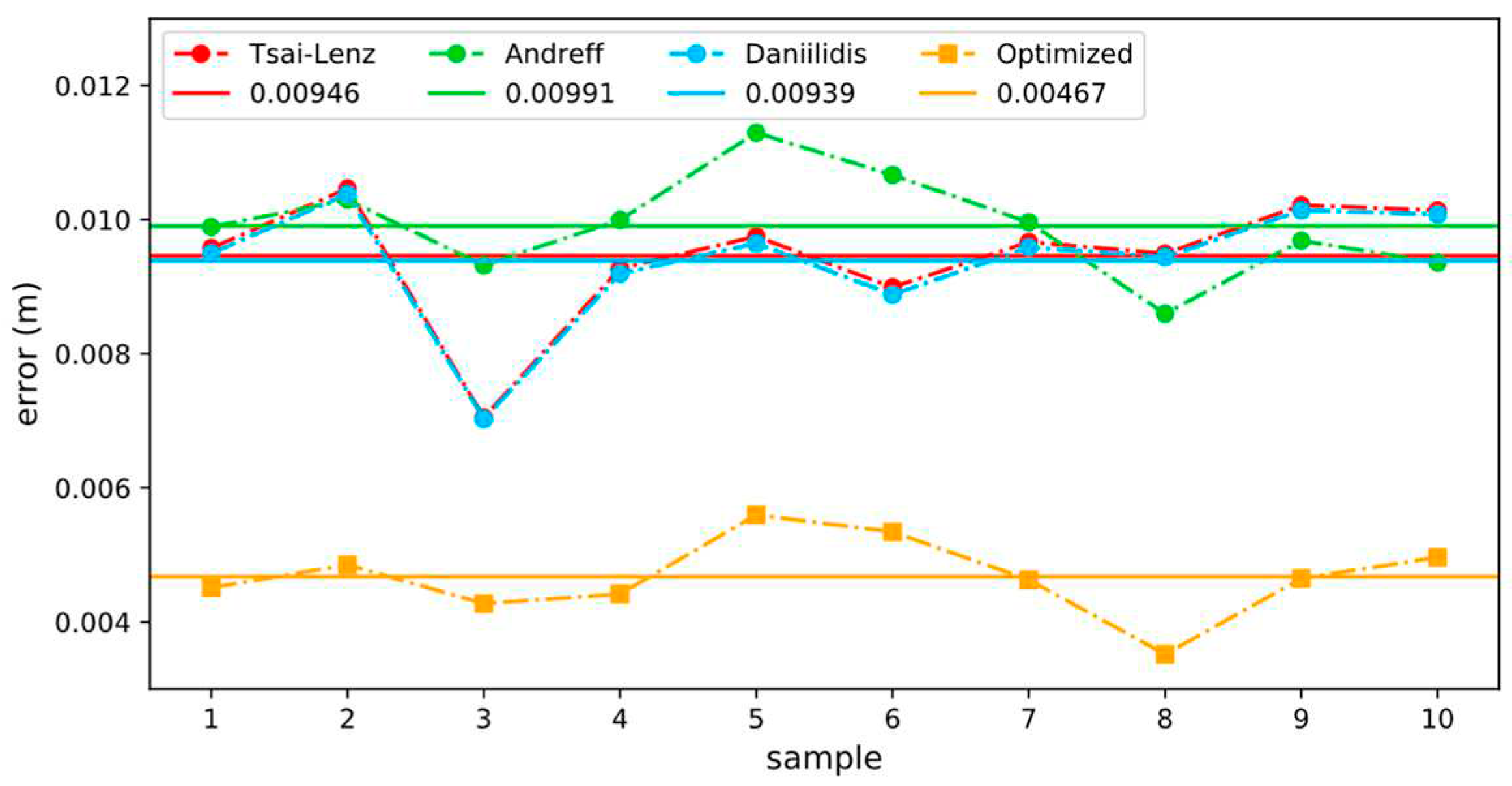

4.3. Visual positioning error analysis

5. Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ge, Pengxiang, et al. "Multivision Sensor Extrinsic Calibration Method With Non-Overlapping Fields of View Using Encoded 1D Target." IEEE Sensors Journal 22.13 (2022): 13519-13528.

- Bu, Lingbin, et al. "Concentric circle grids for camera calibration with considering lens distortion." Optics and Lasers in Engineering 140 (2021): 106527.

- Chen, Xiangcheng, et al. "Camera calibration with global LBP-coded phase-shifting wedge grating arrays." Optics and Lasers in Engineering 136 (2021): 106314.

- Zhang, Zhengyou. "A flexible new technique for camera calibration." IEEE Transactions on Pattern Analysis and Machine Intelligence 22.11 (2000): 1330-1334.

- Hartley, Richard I. "Self-calibration of stationary cameras." International Journal of Computer Vision 22 (1997): 5-23.

- Li, Mengxiang. "Camera calibration of a head-eye system for active vision." Computer Vision—ECCV'94: Third European Conference on Computer Vision Stockholm, Sweden, May 2–6, 1994 Proceedings, Volume I 3. Springer Berlin Heidelberg, 1994.

- Hartley, Richard I. "Projective reconstruction and invariants from multiple images." IEEE Transactions on Pattern Analysis and Machine Intelligence 16.10 (1994): 1036-1041.

- Pollefeys, Marc, Luc Van Gool, and Andre Oosterlinck. "The modulus constraint: a new constraint self-calibration." Proceedings of 13th International Conference on Pattern Recognition. Vol. 1. IEEE, 1996.

- Triggs, Bill. "Autocalibration and the absolute quadric." Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition. IEEE, 1997.

- Heyden, Anders, and K. Astrom. "Flexible calibration: Minimal cases for auto-calibration." Proceedings of the Seventh IEEE International Conference on Computer Vision. Vol. 1. IEEE, 1999.

- Pollefeys, Marc, Reinhard Koch, and Luc Van Gool. "Self-calibration and metric reconstruction inspite of varying and unknown intrinsic camera parameters." International Journal of Computer Vision 32.1 (1999): 7-25.

- Pollefeys, Marc. Self-calibration and metric 3D reconstruction from uncalibrated image sequences. Diss. PhD thesis, ESAT-PSI, KU Leuven, 1999.

- Hartley, Richard I., et al. "Camera calibration and the search for infinity." Proceedings of the Seventh IEEE International Conference on Computer Vision. Vol. 1. IEEE, 1999.

- Chen, Wenyu, et al. "A Noise-Tolerant Algorithm for Robot-Sensor Calibration Using a Planar Disk of Arbitrary 3-D Orientation." IEEE Transactions on Automation Science and Engineering 15.1 (2018): 251-263.

- Li, Mingyang, et al. "A robot hand-eye calibration method of line laser sensor based on 3D reconstruction." Robotics and Computer-Integrated Manufacturing 71 (2021): 102136.

- Zhang, Yuan, Zhicheng Qiu, and Xianmin Zhang. "Calibration method for hand-eye system with rotation and translation couplings." Applied Optics 58.20 (2019): 5375-5387.

- Andreff, Nicolas, Radu Horaud, and Bernard Espiau. "On-line hand-eye calibration." Second International Conference on 3-D Digital Imaging and Modeling (Cat. No. PR00062). IEEE, 1999.

- Tabb, Amy, and Khalil M. Ahmad Yousef. "Solving the robot-world hand-eye (s) calibration problem with iterative methods." Machine Vision and Applications 28.5-6 (2017): 569-590.

- Jiang, Jianfeng, et al. "Hand-Eye Calibration of EOD Robot by Solving the AXB = YCZD Problem." IEEE Access 10 (2022): 3415-3429.

- Tsai, Roger Y., and Reimar K. Lenz. "A new technique for fully autonomous and efficient 3D robotics hand/eye calibration." IEEE Transactions on Robotics and Automation 5.3 (1989): 345-358.

- Liu, Yan, Qinglin Wang, and Yuan Li. "A method for hand-eye calibration of a robot vision measuring system." 2015 10th Asian Control Conference (ASCC), Kota Kinabalu, Malaysia, 2015, pp. 1-6.

- Zou, Yanbiao, and Xiangzhi Chen. "Hand–eye calibration of arc welding robot and laser vision sensor through semidefinite programming." Industrial Robot 45.5 (2019): 597-610.

- Deng, Shichao, et al. "Research on the Hand-eye calibration Method Based on Monocular Robot." Journal of Physics: Conference Series, vol. 1820, 2021 International Conference on Mechanical Engineering, Intelligent Manufacturing and Automation Technology (MEMAT), January 15-17, 2021, Guilin, China.

- Horaud, Radu, and Fadi Dornaika. "Hand-eye calibration." The International Journal of Robotics Research 14.3 (1995): 195-210.

- Daniilidis, Konstantinos. "Hand-eye calibration using dual quaternions." The International Journal of Robotics Research 18.3 (1999): 286-298.

Short Biography of Authors

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).