Submitted: 17 October 2023

Posted: 27 October 2023


Abstract

Keywords:

1. Introduction

- (1) We propose an information quantification and grading strategy that evaluates the amount of information contained at different positions within the image. This allows us to assess the significance and relevance of individual image regions.

- (2) We introduce a novel intra-layer rich-scale feature extraction module designed for targets with large scale variations. By adaptively extracting features at different scales, it captures the intricate details and variations present in the image, which improves segmentation performance.

- (3) We propose an intra- and inter-layer feature aggregation module that refines feature maps and aggregates low-level features, such as edge features, with high-level semantic features.

- (4) Building on the intra-layer rich-scale feature extraction module and the intra- and inter-layer feature aggregation module, we construct a network specifically designed for the semantic segmentation of high-resolution remote sensing images.

2. Related Work

2.1. Remote Sensing Image Semantic Segmentation

2.2. Multi-scale Context Feature

3. Method

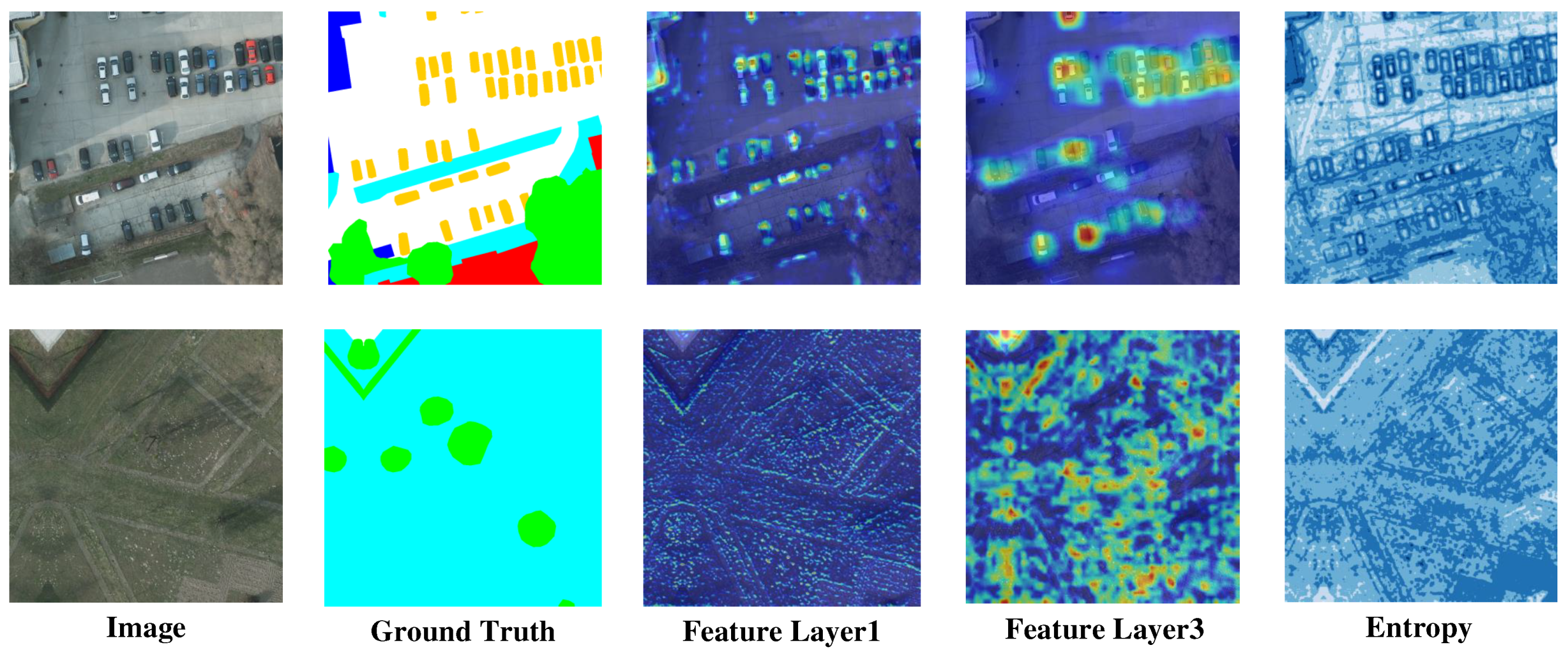

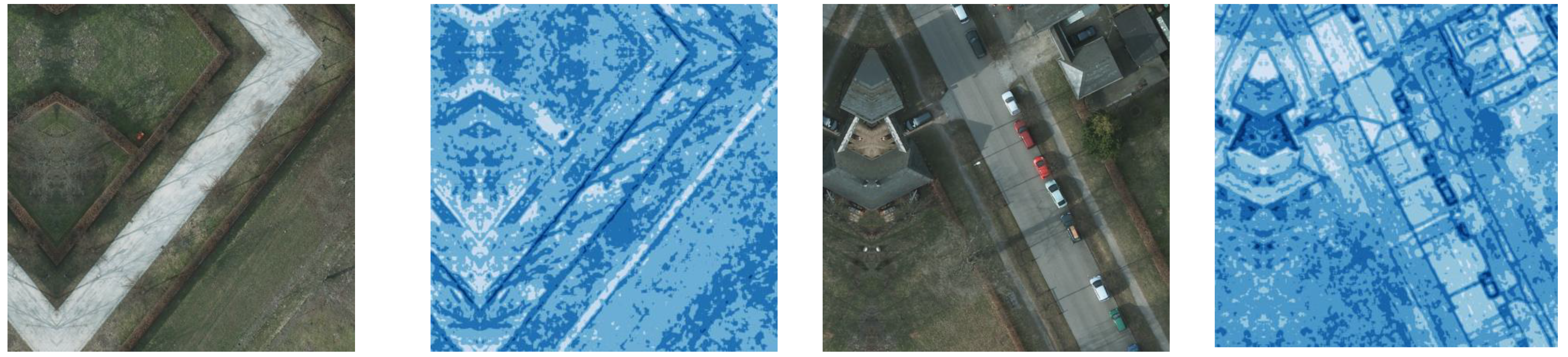

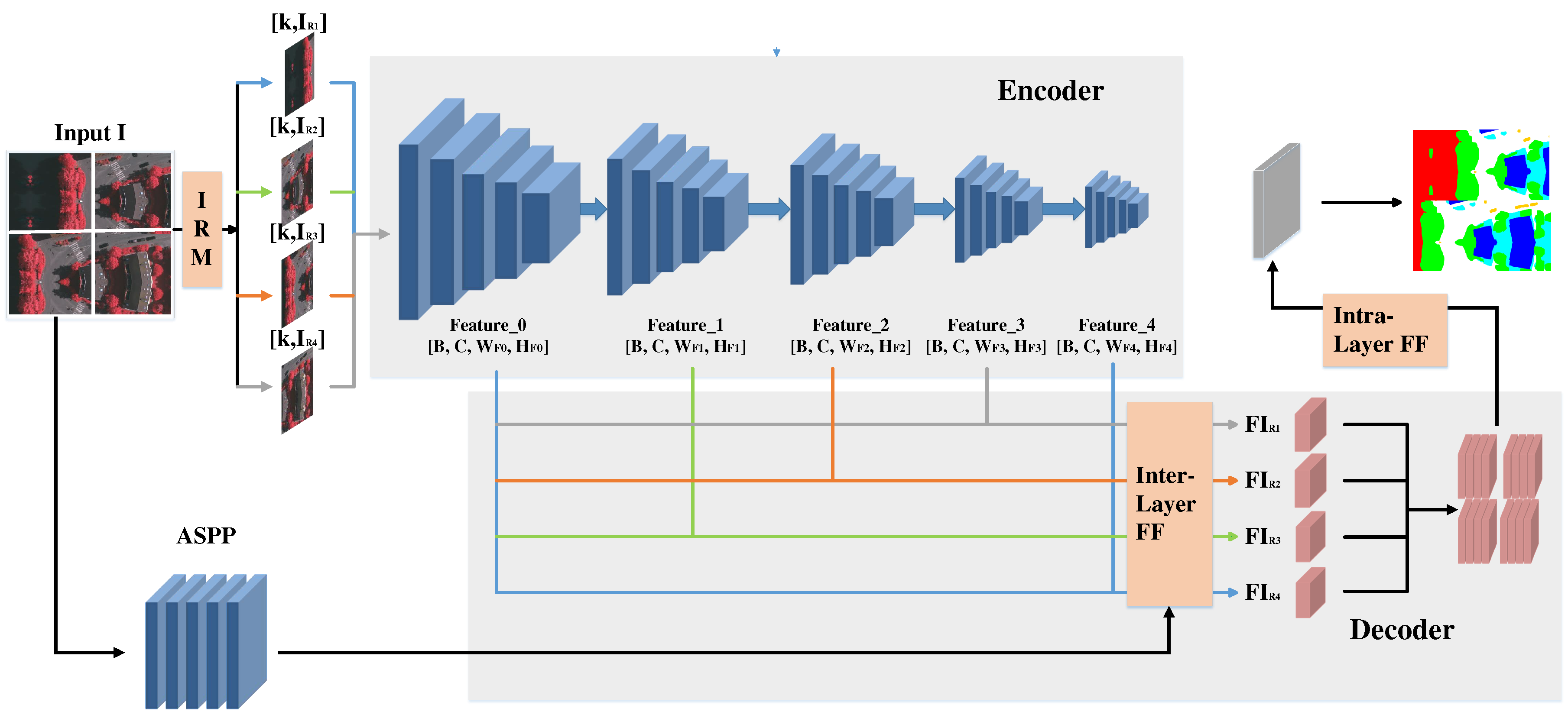

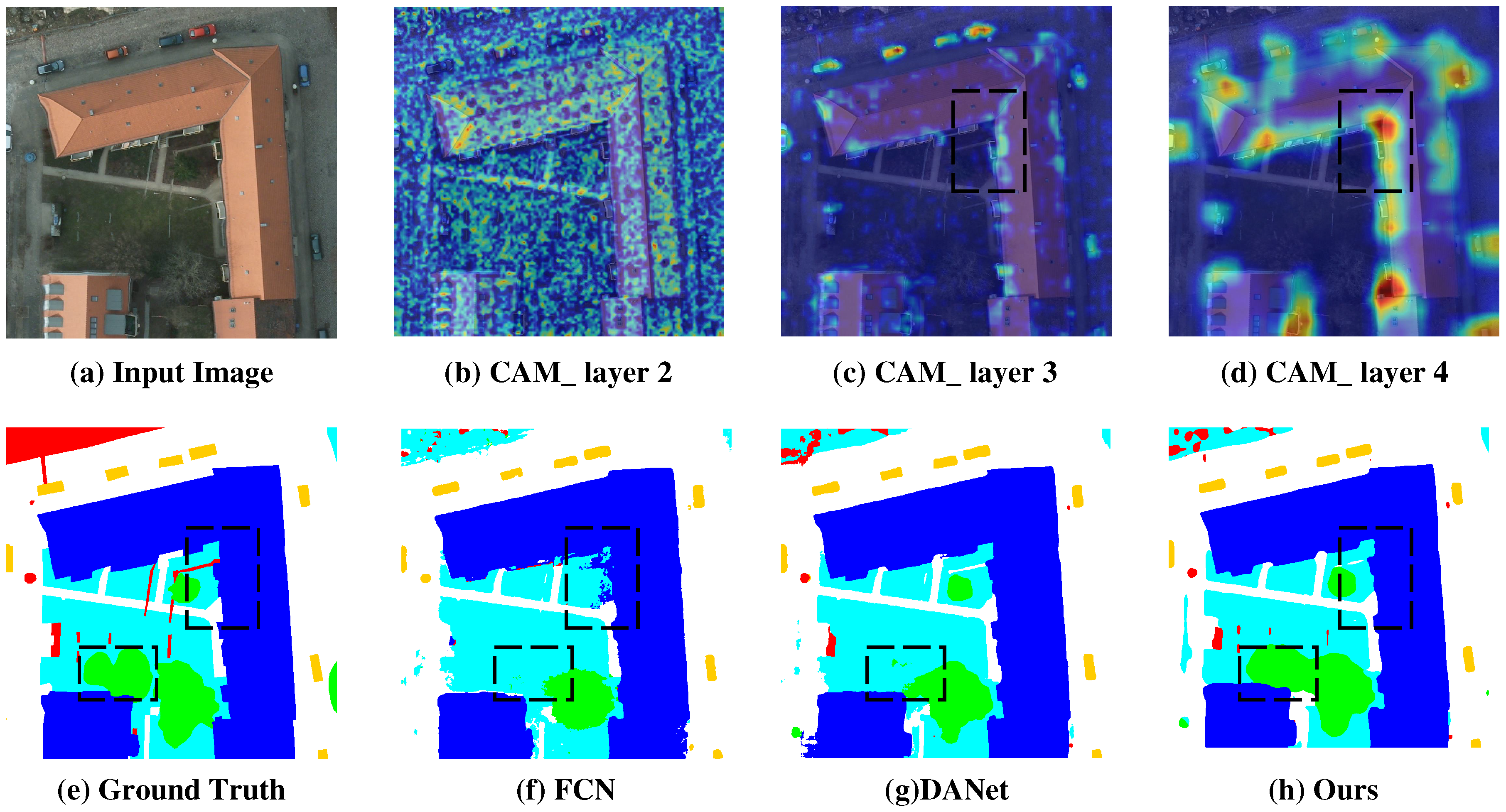

3.1. Information Rating Module
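The text of this section is not part of this extract, so the exact rating rule is not shown. As a minimal sketch of the idea stated in the Introduction (quantify the information at each image position, then grade it), the snippet below assumes Shannon entropy of the gray-level histogram as the information measure and uniform binning into grades. Both choices, and the names `patch_entropy` and `rate_information`, are illustrative assumptions, not the authors' module.

```python
import math
from collections import Counter


def patch_entropy(patch):
    """Shannon entropy (in bits) of the gray-level histogram of a 2D patch."""
    values = [v for row in patch for v in row]
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())


def rate_information(image, patch_size, num_grades):
    """Score non-overlapping patches by entropy, then bin the scores uniformly
    into integer grades 0 .. num_grades - 1 (higher = more information).
    Border pixels that do not fill a whole patch are ignored."""
    h, w = len(image), len(image[0])
    scores = []
    for top in range(0, h - patch_size + 1, patch_size):
        row_scores = []
        for left in range(0, w - patch_size + 1, patch_size):
            patch = [r[left:left + patch_size] for r in image[top:top + patch_size]]
            row_scores.append(patch_entropy(patch))
        scores.append(row_scores)

    flat = [s for row in scores for s in row]
    lo, span = min(flat), max(flat) - min(flat)
    grades = [
        [0 if span == 0 else min(int((s - lo) / span * num_grades), num_grades - 1)
         for s in row]
        for row in scores
    ]
    return scores, grades


# Hypothetical 4x4 gray image: flat left half, textured right half.
image = [
    [0, 0, 0, 255],
    [0, 0, 255, 0],
    [0, 0, 10, 20],
    [0, 0, 30, 40],
]
scores, grades = rate_information(image, patch_size=2, num_grades=3)
print(grades)  # [[0, 1], [0, 2]]
```

Flat patches receive the lowest grade and the patch with four distinct gray levels the highest; a learned network would replace the hand-crafted entropy score with features of its own.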

3.2. Intra-layer Rich-scale Feature Enhancement

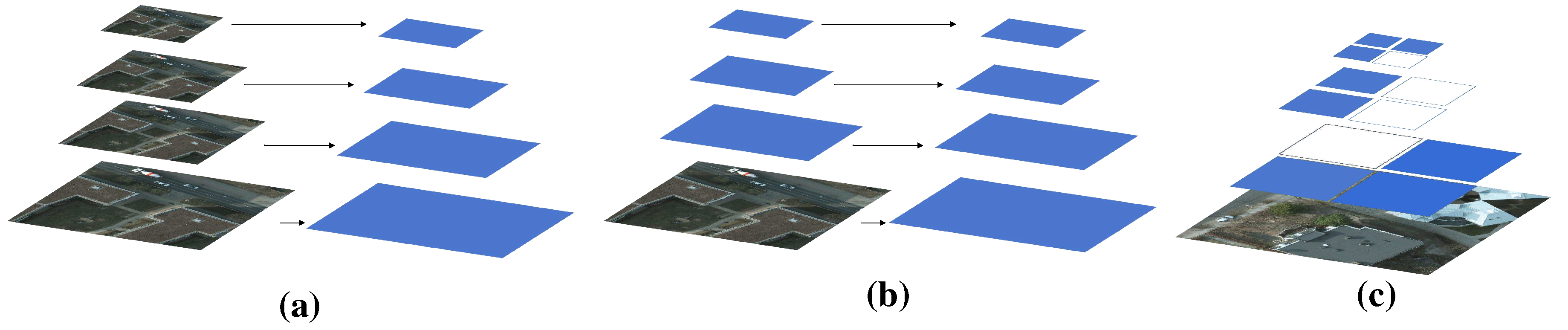

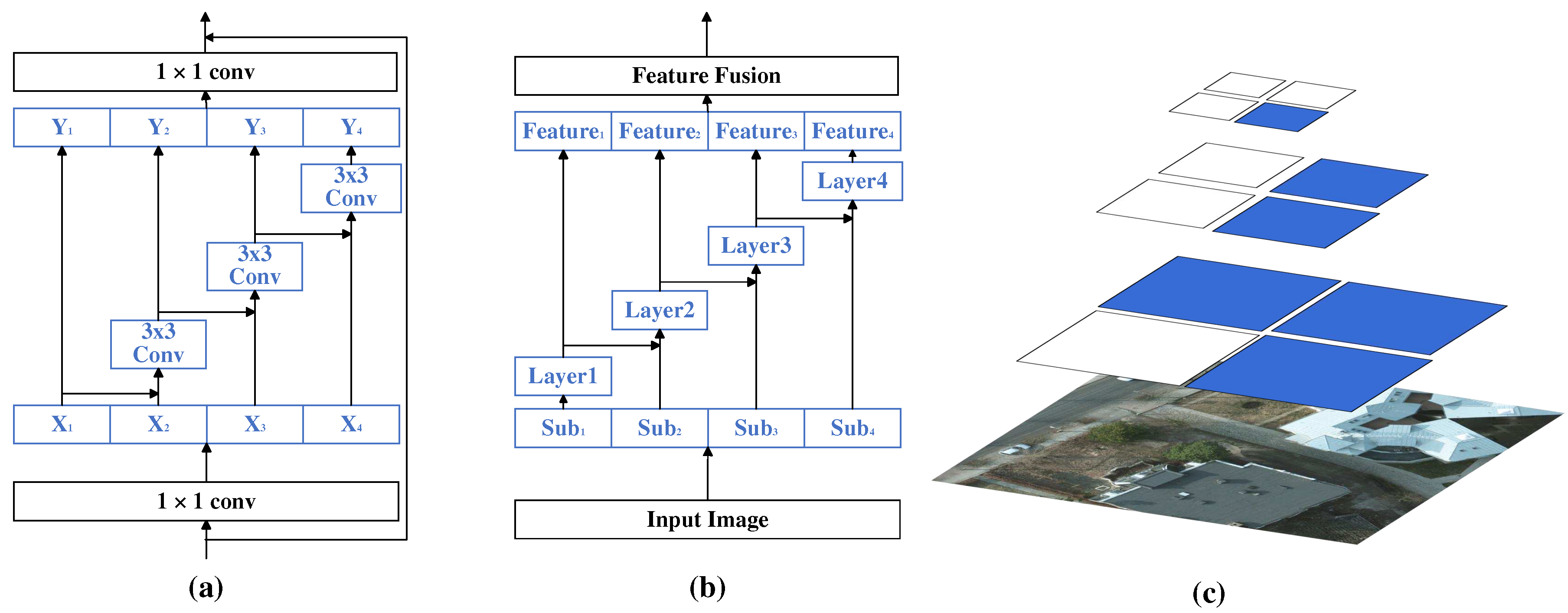

3.2.1. Intra-layer Rich-scale Feature Extraction
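The module's internals appear only in the figures. The hypothetical sketch below shows one common way to extract features at several scales within a single layer and combine them adaptively: parallel branches with different receptive fields, fused with softmax-normalised weights. Fixed box filters stand in for learned convolutions, and `rich_scale_features`, `radii` and `logits` are names invented for illustration; the authors' module may be built differently.

```python
import math


def box_filter(img, radius):
    """Mean over a (2r+1) x (2r+1) window; at borders only in-bounds pixels are averaged."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [img[y][x]
                      for y in range(max(0, i - radius), min(h, i + radius + 1))
                      for x in range(max(0, j - radius), min(w, j + radius + 1))]
            out[i][j] = sum(window) / len(window)
    return out


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rich_scale_features(img, radii, logits):
    """One branch per receptive-field radius, fused by softmax weights.
    In a trained network the logits would be predicted from the input; here they are given."""
    branches = [box_filter(img, r) for r in radii]
    weights = softmax(logits)
    h, w = len(img), len(img[0])
    fused = [[sum(wt * b[i][j] for wt, b in zip(weights, branches)) for j in range(w)]
             for i in range(h)]
    return fused, weights


img = [[0, 0, 0, 0], [0, 9, 9, 0], [0, 9, 9, 0], [0, 0, 0, 0]]
fused, weights = rich_scale_features(img, radii=[0, 1, 2], logits=[2.0, 1.0, 0.0])
print([round(wgt, 3) for wgt in weights])
```

Because the weights sum to one, the fused map stays on the same scale as each branch while the logits decide how much small- and large-receptive-field context contributes.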

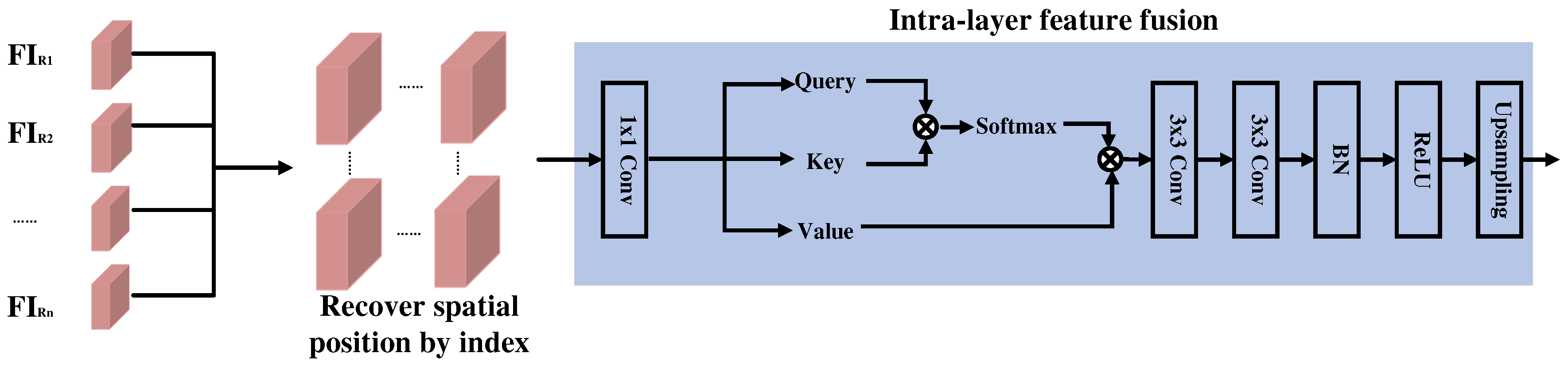

3.2.2. Inter- and Intra-layer Feature Fusion
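As an assumption-labelled illustration of aggregating low-level edge features with high-level semantic features, the sketch below uses the top-down fusion popularised by feature pyramid networks: upsample the coarse semantic map with nearest-neighbour interpolation and add it element-wise to the finer map. Real implementations usually apply a 1x1 convolution to the lateral input first; that step, multiple channels and any intra-layer refinement are omitted, and `top_down_fuse` is a hypothetical name.

```python
def upsample_nearest(feat, factor):
    """Nearest-neighbour upsampling: repeat every value `factor` times along each axis."""
    return [[v for v in row for _ in range(factor)] for row in feat for _ in range(factor)]


def top_down_fuse(low, high, factor=2, alpha=1.0):
    """Add an upsampled high-level (semantic) map to a low-level (detail/edge) map."""
    up = upsample_nearest(high, factor)
    if len(up) != len(low) or len(up[0]) != len(low[0]):
        raise ValueError("upsampled high-level map must match the low-level map size")
    return [[x_low + alpha * x_up for x_low, x_up in zip(low_row, up_row)]
            for low_row, up_row in zip(low, up)]


edges = [[1, 0, 0, 0],
         [0, 1, 0, 0],
         [0, 0, 1, 0],
         [0, 0, 0, 1]]
semantic = [[10, 20],
            [30, 40]]
for row in top_down_fuse(edges, semantic):
    print(row)
```

The fused map keeps the fine diagonal edge from the low-level input while every pixel also carries the coarse semantic value of its region.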

3.3. Combination with SOTA Methods

3.3.1. Review of Res2Net
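Res2Net [49] builds multi-scale features inside a single block: after a 1x1 convolution the channels are split into groups x_1, ..., x_s; the first group is passed through, the second goes through its own 3x3 convolution K_2, and from the third group onward each group is added to the previous group's output before its convolution, y_i = K_i(x_i + y_{i-1}). The pure-Python sketch below traces this hierarchy on 1D signals. A fixed 3-tap summing filter stands in for each learned K_i and the surrounding 1x1 convolutions are omitted; these simplifications, and the function names, are assumptions for illustration.

```python
def conv3(signal, kernel=(1.0, 1.0, 1.0)):
    """3-tap 1D cross-correlation (what deep-learning 'conv' layers compute),
    zero-padded so the output has the same length as the input."""
    padded = [0.0] + list(signal) + [0.0]
    return [sum(k * padded[i + t] for t, k in enumerate(kernel)) for i in range(len(signal))]


def res2net_hierarchy(groups):
    """Res2Net-style hierarchical residual connections over channel groups:
    y1 = x1, y2 = K2(x2), yi = Ki(xi + y_{i-1}) for i > 2."""
    outputs = []
    for i, x in enumerate(groups):
        if i == 0:
            y = list(x)                                        # first group passes through
        elif i == 1:
            y = conv3(x)                                       # second group: one filter
        else:
            y = conv3([a + b for a, b in zip(x, outputs[-1])])  # reuse previous group's output
        outputs.append(y)
    return outputs


# Put a unit impulse in the second group and trace how far it spreads.
impulse = [0.0] * 9
impulse[4] = 1.0
zeros = [0.0] * 9
outs = res2net_hierarchy([zeros, impulse, zeros, zeros])
for y in outs:
    print(sum(1 for v in y if v != 0), y)
```

The nonzero support grows from 3 to 5 to 7 positions across groups, which is how a single Res2Net block mixes several receptive-field sizes before the groups are concatenated.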

3.3.2. Overall Network Architecture

4. Experiments

4.1. Dataset

4.2. Evaluation Metrics
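The metric definitions are not included in this extract. The sketch below computes the quantities reported in the tables (per-class IoU, mIoU, F1-score and overall accuracy, OA) from a pixel confusion matrix using the standard definitions IoU = TP/(TP+FP+FN), F1 = 2TP/(2TP+FP+FN) and OA = correctly classified pixels / all pixels. That the paper uses exactly these forms, and reports F1 averaged over classes, is an assumption; `segmentation_metrics` is a hypothetical helper.

```python
def segmentation_metrics(conf):
    """Per-class IoU, mIoU, mean F1 and OA from a confusion matrix.

    conf[i][j] counts pixels whose ground-truth class is i and predicted class is j.
    """
    k = len(conf)
    total = sum(sum(row) for row in conf)
    iou, f1 = [], []
    for c in range(k):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                        # class-c pixels predicted as another class
        fp = sum(conf[r][c] for r in range(k)) - tp   # other pixels predicted as class c
        denom = tp + fp + fn
        iou.append(tp / denom if denom else 0.0)
        f1.append(2 * tp / (2 * tp + fp + fn) if denom else 0.0)
    oa = sum(conf[c][c] for c in range(k)) / total     # fraction of correctly labelled pixels
    return iou, sum(iou) / k, sum(f1) / k, oa


conf = [[3, 1],
        [0, 4]]
iou, miou, mean_f1, oa = segmentation_metrics(conf)
print([round(v, 3) for v in iou], round(miou, 3), oa)  # [0.75, 0.8] 0.775 0.875
```

mIoU here averages over whichever classes are present in `conf`; to exclude a class such as Clutter, drop its row and column or average a subset of `iou`.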

4.3. Experimental Settings and Implementation Details

4.4. Results and Analysis

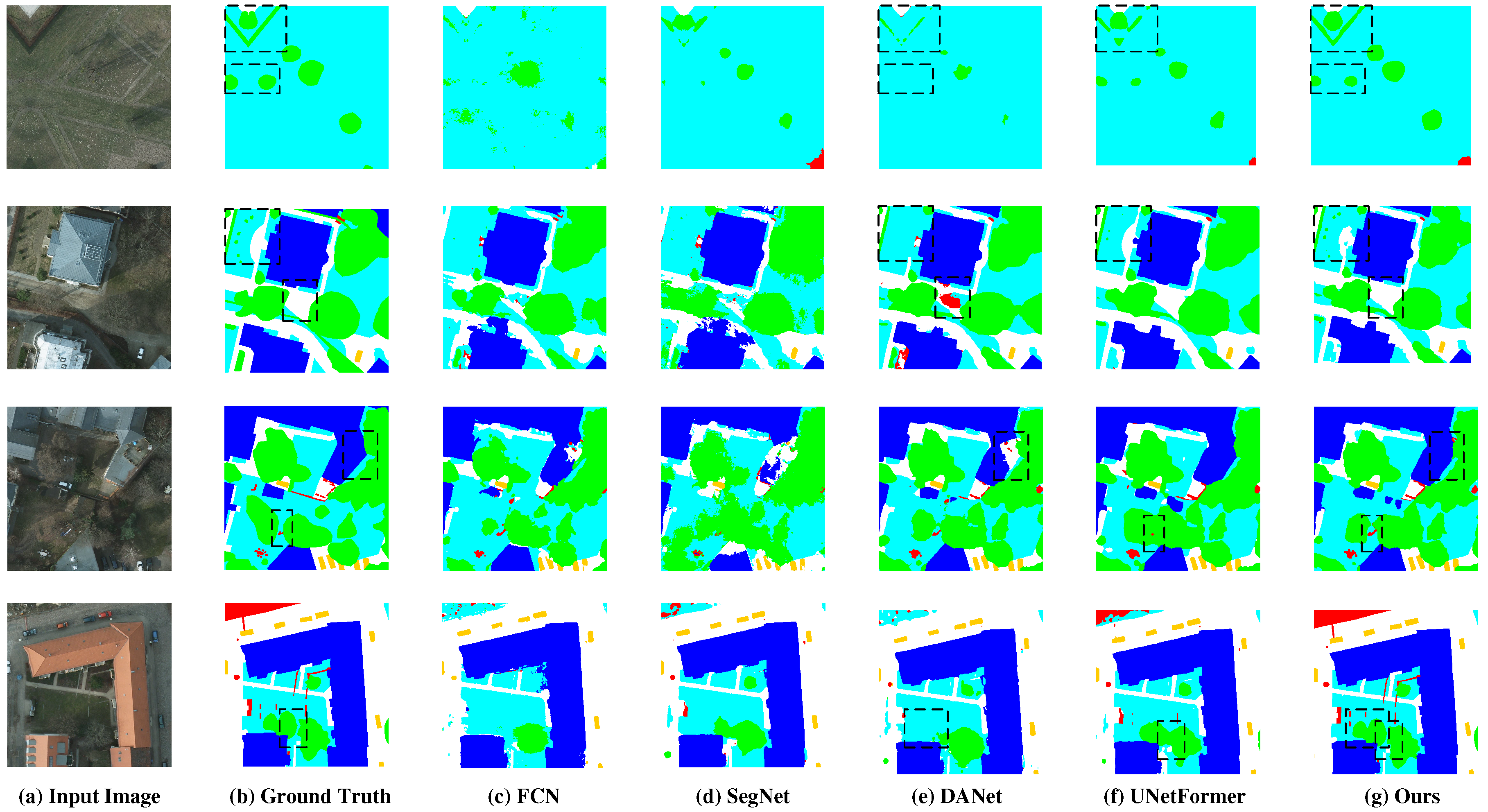

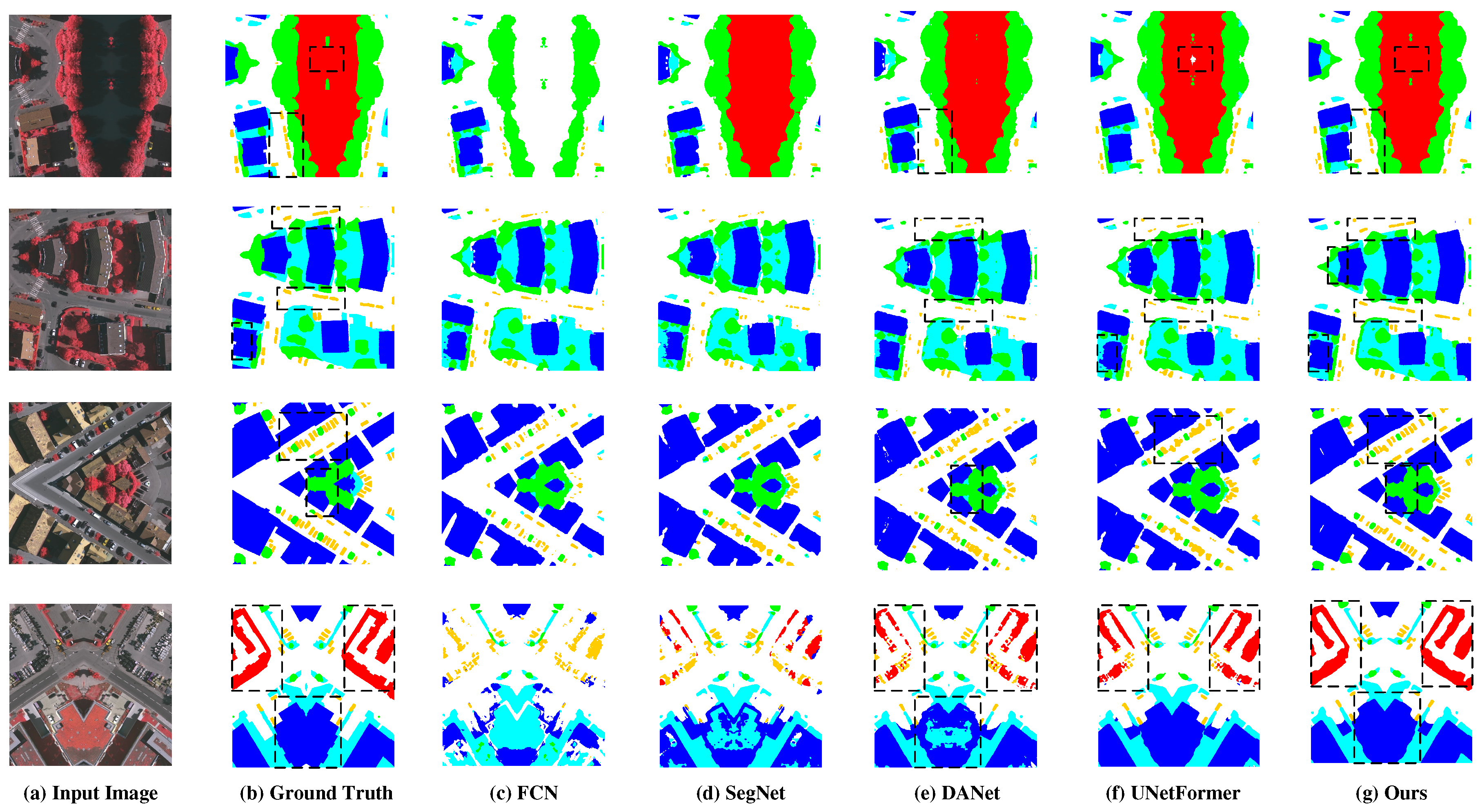

4.4.1. Comparison with State-of-the-art Methods

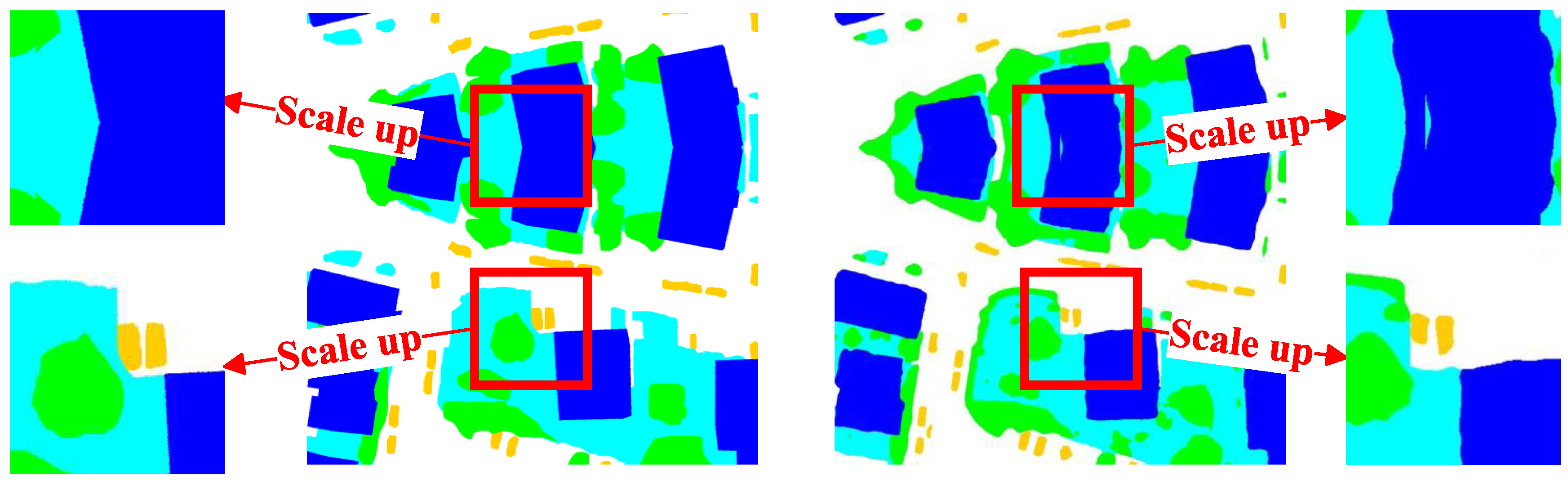

4.4.2. Ablation Experiments and Analysis

5. Conclusion

Conflicts of Interest

References

- [1] Xu, H.; Song, J.; Zhu, Y. Evaluation and Comparison of Semantic Segmentation Networks for Rice Identification Based on Sentinel-2 Imagery. Remote Sensing 2023, 15, 1499.

- [2] Hao, X.; Yin, L.; Li, X.; Zhang, L.; Yang, R. A Multi-Objective Semantic Segmentation Algorithm Based on Improved U-Net Networks. Remote Sensing 2023, 15, 1838.

- [3] Zhang, Y.; Lu, H.; Ma, G.; Zhao, H.; Xie, D.; Geng, S.; Tian, W.; Lim Kam Sian, K.T.C. MU-Net: Embedding MixFormer into Unet to Extract Water Bodies from Remote Sensing Images. Remote Sensing 2023, 15, 3559.

- [4] He, C.; Liu, Y.; Wang, D.; Liu, S.; Yu, L.; Ren, Y. Automatic Extraction of Bare Soil Land from High-Resolution Remote Sensing Images Based on Semantic Segmentation with Deep Learning. Remote Sensing 2023, 15, 1646.

- [5] Ju, Y.; Xu, Q.; Jin, S.; Li, W.; Su, Y.; Dong, X.; Guo, Q. Loess Landslide Detection Using Object Detection Algorithms in Northwest China. Remote Sensing 2022, 14, 1182.

- [6] Fu, X.; Shen, F.; Du, X.; Li, Z. Bag of Tricks for “Vision Meet Alage” Object Detection Challenge. 2022 6th International Conference on Universal Village (UV). IEEE, 2022, pp. 1–4.

- [7] Maulik, U.; Saha, I. Automatic Fuzzy Clustering Using Modified Differential Evolution for Image Classification. IEEE Transactions on Geoscience and Remote Sensing 2010, 48, 3503–3510.

- [8] Yang, M.D.; Huang, K.S.; Kuo, Y.H.; Tsai, H.P.; Lin, L.M. Spatial and Spectral Hybrid Image Classification for Rice Lodging Assessment through UAV Imagery. Remote Sensing 2017, 9, 583.

- [9] Guo, Y.; Jia, X.; Paull, D. Effective Sequential Classifier Training for SVM-Based Multitemporal Remote Sensing Image Classification. IEEE Transactions on Image Processing 2018, 27, 3036–3048.

- [10] Huang, X.; Zhang, L. An SVM Ensemble Approach Combining Spectral, Structural, and Semantic Features for the Classification of High-Resolution Remotely Sensed Imagery. IEEE Transactions on Geoscience and Remote Sensing 2012, 51, 257–272.

- [11] Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015, pp. 3431–3440.

- [12] Sun, W.; Wang, R. Fully Convolutional Networks for Semantic Segmentation of Very High Resolution Remotely Sensed Images Combined with DSM. IEEE Geoscience and Remote Sensing Letters 2018, 15, 474–478.

- [13] Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv preprint arXiv:2010.11929, 2020.

- [14] Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. arXiv preprint arXiv:1412.7062, 2014.

- [15] Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv preprint arXiv:1511.07122, 2015.

- [16] Peng, C.; Zhang, X.; Yu, G.; Luo, G.; Sun, J. Large Kernel Matters–Improve Semantic Segmentation by Global Convolutional Network. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 4353–4361.

- [17] Kirillov, A.; Girshick, R.; He, K.; Dollár, P. Panoptic Feature Pyramid Networks. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 6399–6408.

- [18] Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-Local Neural Networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 7794–7803.

- [19] Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 3146–3154.

- [20] Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 10012–10022.

- [21] Strudel, R.; Garcia, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for Semantic Segmentation. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 7262–7272.

- [22] Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015, Proceedings, Part III. Springer, 2015, pp. 234–241.

- [23] Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Transactions on Pattern Analysis and Machine Intelligence 2017, 40, 834–848.

- [24] Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv preprint arXiv:1706.05587, 2017.

- [25] Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 801–818.

- [26] Long, J.; Li, M.; Wang, X. Integrating Spatial Details With Long-Range Contexts for Semantic Segmentation of Very High-Resolution Remote-Sensing Images. IEEE Geoscience and Remote Sensing Letters 2023, 20, 1–5.

- [27] Shen, F.; Lin, L.; Wei, M.; Liu, J.; Zhu, J.; Zeng, H.; Cai, C.; Zheng, L. A Large Benchmark for Fabric Image Retrieval. 2019 IEEE 4th International Conference on Image, Vision and Computing (ICIVC). IEEE, 2019, pp. 247–251.

- [28] Jin, H.; Bao, Z.; Chang, X.; Zhang, T.; Chen, C. Semantic Segmentation of Remote Sensing Images Based on Dilated Convolution and Spatial-Channel Attention Mechanism. Journal of Applied Remote Sensing 2023, 17, 016518.

- [29] Tan, X.; Xiao, Z.; Zhang, Y.; Wang, Z.; Qi, X.; Li, D. Context-Driven Feature-Focusing Network for Semantic Segmentation of High-Resolution Remote Sensing Images. Remote Sensing 2023, 15, 1348.

- [30] Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like Transformer for Efficient Semantic Segmentation of Remote Sensing Urban Scene Imagery. ISPRS Journal of Photogrammetry and Remote Sensing 2022, 190, 196–214.

- [31] Xu, R.; Shen, F.; Wu, H.; Zhu, J.; Zeng, H. Dual Modal Meta Metric Learning for Attribute-Image Person Re-identification. 2021 IEEE International Conference on Networking, Sensing and Control (ICNSC). IEEE, 2021, Vol. 1, pp. 1–6.

- [32] Liu, J.; Shen, F.; Wei, M.; Zhang, Y.; Zeng, H.; Zhu, J.; Cai, C. A Large-Scale Benchmark for Vehicle Logo Recognition. 2019 IEEE 4th International Conference on Image, Vision and Computing (ICIVC). IEEE, 2019, pp. 479–483.

- [33] Yang, Y.; Dong, J.; Wang, Y.; Yu, B.; Yang, Z. DMAU-Net: An Attention-Based Multiscale Max-Pooling Dense Network for the Semantic Segmentation in VHR Remote-Sensing Images. Remote Sensing 2023, 15, 1328.

- [34] Zhang, Y.; Zhao, H.; Ma, G.; Xie, D.; Geng, S.; Lu, H.; Tian, W.; Lim Kam Sian, K.T.C. MAAFEU-Net: A Novel Land Use Classification Model Based on Mixed Attention Module and Adjustable Feature Enhancement Layer in Remote Sensing Images. ISPRS International Journal of Geo-Information 2023, 12, 206.

- [35] Xiao, R.; Zhong, C.; Zeng, W.; Cheng, M.; Wang, C. Novel Convolutions for Semantic Segmentation of Remote Sensing Images. IEEE Transactions on Geoscience and Remote Sensing 2023.

- [36] Shen, F.; Zhu, J.; Zhu, X.; Huang, J.; Zeng, H.; Lei, Z.; Cai, C. An Efficient Multiresolution Network for Vehicle Reidentification. IEEE Internet of Things Journal 2021, 9, 9049–9059.

- [37] Shen, F.; Peng, X.; Wang, L.; Zhang, X.; Shu, M.; Wang, Y. HSGM: A Hierarchical Similarity Graph Module for Object Re-identification. 2022 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 2022, pp. 1–6.

- [38] Shen, F.; Wei, M.; Ren, J. HSGNet: Object Re-identification with Hierarchical Similarity Graph Network. arXiv preprint arXiv:2211.05486, 2022.

- [39] Wu, H.; Shen, F.; Zhu, J.; Zeng, H.; Zhu, X.; Lei, Z. A Sample-Proxy Dual Triplet Loss Function for Object Re-identification. IET Image Processing 2022, 16, 3781–3789.

- [40] Li, R.; Duan, C.; Zheng, S.; Zhang, C.; Atkinson, P.M. MACU-Net for Semantic Segmentation of Fine-Resolution Remotely Sensed Images. IEEE Geoscience and Remote Sensing Letters 2022, 19, 1–5.

- [41] Xie, Y.; Shen, F.; Zhu, J.; Zeng, H. Viewpoint Robust Knowledge Distillation for Accelerating Vehicle Re-identification. EURASIP Journal on Advances in Signal Processing 2021, 2021, 1–13.

- [42] Xu, R.; Wang, C.; Zhang, J.; Xu, S.; Meng, W.; Zhang, X. RSSFormer: Foreground Saliency Enhancement for Remote Sensing Land-Cover Segmentation. IEEE Transactions on Image Processing 2023, 32, 1052–1064.

- [43] Qiao, C.; Shen, F.; Wang, X.; Wang, R.; Cao, F.; Zhao, S.; Li, C. A Novel Multi-Frequency Coordinated Module for SAR Ship Detection. 2022 IEEE 34th International Conference on Tools with Artificial Intelligence (ICTAI). IEEE, 2022, pp. 804–811.

- [44] Shen, F.; Shu, X.; Du, X.; Tang, J. Pedestrian-specific Bipartite-aware Similarity Learning for Text-based Person Retrieval. Proceedings of the 31st ACM International Conference on Multimedia, 2023.

- [45] Shen, F.; Xie, Y.; Zhu, J.; Zhu, X.; Zeng, H. GiT: Graph Interactive Transformer for Vehicle Re-identification. IEEE Transactions on Image Processing 2023.

- [46] Li, M.; Wei, M.; He, X.; Shen, F. Enhancing Part Features via Contrastive Attention Module for Vehicle Re-identification. 2022 IEEE International Conference on Image Processing (ICIP). IEEE, 2022, pp. 1816–1820.

- [47] Shen, F.; Zhu, J.; Zhu, X.; Xie, Y.; Huang, J. Exploring Spatial Significance via Hybrid Pyramidal Graph Network for Vehicle Re-identification. IEEE Transactions on Intelligent Transportation Systems 2021, 23, 8793–8804.

- [48] He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin Transformer Embedding UNet for Remote Sensing Image Semantic Segmentation. IEEE Transactions on Geoscience and Remote Sensing 2022, 60, 1–15.

- [49] Gao, S.H.; Cheng, M.M.; Zhao, K.; Zhang, X.Y.; Yang, M.H.; Torr, P. Res2Net: A New Multi-Scale Backbone Architecture. IEEE Transactions on Pattern Analysis and Machine Intelligence 2019, 43, 652–662.

- [50] Shen, F.; He, X.; Wei, M.; Xie, Y. A Competitive Method to VIPriors Object Detection Challenge. arXiv preprint arXiv:2104.09059, 2021.

- [51] Shen, F.; Wang, Z.; Wang, Z.; Fu, X.; Chen, J.; Du, X.; Tang, J. A Competitive Method for Dog Nose-print Re-identification. arXiv preprint arXiv:2205.15934, 2022.

- [52] Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence 2017, 39, 2481–2495.

- [53] Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 2881–2890.

- [54] Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 20 September 2018, Proceedings. Springer, 2018, pp. 3–11.

- [55] Yuan, Y.; Chen, X.; Wang, J. Object-Contextual Representations for Semantic Segmentation. Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part VI. Springer, 2020, pp. 173–190.

- [56] Li, S.; Han, K.; Costain, T.W.; Howard-Jenkins, H.; Prisacariu, V. Correspondence Networks with Adaptive Neighbourhood Consensus. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 10196–10205.

- [57] Li, R.; Zheng, S.; Duan, C.; Su, J.; Zhang, C. Multistage Attention ResU-Net for Semantic Segmentation of Fine-Resolution Remote Sensing Images. IEEE Geoscience and Remote Sensing Letters 2021, 19, 1–5.

- [58] Zheng, Z.; Zhong, Y.; Wang, J.; Ma, A. Foreground-Aware Relation Network for Geospatial Object Segmentation in High Spatial Resolution Remote Sensing Imagery. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 4096–4105.

- [59] Ding, L.; Tang, H.; Bruzzone, L. LANet: Local Attention Embedding to Improve the Semantic Segmentation of Remote Sensing Images. IEEE Transactions on Geoscience and Remote Sensing 2020, 59, 426–435.

| Method | Imp. surf. IoU | Building IoU | Low veg. IoU | Tree IoU | Car IoU | mIoU | F1-score | OA |
|---|---|---|---|---|---|---|---|---|
| SegNet [52] | 71.69 | 75.64 | 61.71 | 55.40 | 76.51 | 68.19 | 80.79 | 88.94 |
| FCN [11] | 81.64 | 89.11 | 71.36 | 73.34 | 79.32 | 71.44 | 81.85 | 87.17 |
| PSPNet [53] | 82.68 | 90.17 | 72.72 | 74.00 | 80.56 | 72.67 | 82.75 | 87.90 |
| DeepLab v3+ [25] | 79.80 | 86.86 | 69.73 | 68.10 | 83.08 | 77.51 | 87.14 | 85.67 |
| UNet++ [54] | 83.25 | 83.87 | 74.38 | 78.33 | 73.27 | 80.56 | - | - |
| OCRNet [55] | 85.17 | 90.22 | 75.31 | 76.96 | 89.83 | 83.50 | - | - |
| MACUNet [40] | 86.64 | 90.36 | 73.37 | 76.58 | 80.69 | 84.76 | - | - |
| ANCNet [56] | 86.25 | 92.17 | 76.26 | 74.83 | 83.16 | 85.17 | - | - |
| DMAUNet [33] | 87.72 | 92.03 | 75.46 | 78.52 | 87.91 | 85.68 | - | - |
| HRFNet (Ours) | 87.21 | 94.09 | 77.92 | 80.38 | 92.75 | 86.47 | 92.62 | 91.14 |

| Method | Imp. surf. IoU | Building IoU | Low veg. IoU | Tree IoU | Car IoU | mIoU | F1-score | OA |
|---|---|---|---|---|---|---|---|---|
| FCN [11] | 78.11 | 84.82 | 63.78 | 75.08 | 53.38 | 70.95 | 77.98 | 85.86 |
| SegNet [52] | 81.76 | 83.79 | 79.98 | 48.19 | 68.73 | 72.49 | 83.31 | 87.72 |
| DeepLab v3+ [25] | 78.62 | 86.07 | 64.47 | 75.43 | 58.69 | 72.66 | 79.41 | 86.27 |
| PSPNet [53] | 79.16 | 85.90 | 64.36 | 74.94 | 60.93 | 73.06 | 79.75 | 86.26 |
| MAResU-Net [57] | 79.58 | 86.05 | 64.31 | 75.69 | 59.68 | 73.06 | 79.51 | 86.52 |
| FarSeg [58] | 78.94 | 86.14 | 64.48 | 75.51 | 61.72 | 73.36 | 79.68 | 86.46 |
| LANet [59] | 79.41 | 86.17 | 64.47 | 75.87 | 64.29 | 74.04 | 79.84 | 86.59 |
| UNet [22] | 82.02 | 86.63 | 80.72 | 52.51 | 70.34 | 74.44 | 84.75 | 88.43 |
| DANet [19] | 82.27 | 89.15 | 71.77 | 73.70 | 81.72 | 79.72 | - | - |
| UNetFormer [30] | 86.45 | 90.91 | 73.83 | 82.61 | 79.44 | 82.64 | 90.18 | 90.76 |
| HRFNet (Ours) | 87.30 | 91.69 | 82.72 | 81.13 | 73.70 | 83.31 | 90.77 | 91.21 |

| Rn | Feature-map layers | Imp. surf. IoU | Building IoU | Tree IoU | Car IoU | Low veg. IoU | Clutter IoU | mIoU |
|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 85.87 | 90.98 | 81.45 | 76.60 | 71.90 | 39.83 | 81.36 |
| 1 | 2 | 86.76 | 91.59 | 82.33 | 78.92 | 72.81 | 43.60 | 82.48 |
| 2 | 2 | 86.56 | 91.61 | 82.27 | 79.55 | 73.04 | 43.74 | 82.61 |
| 1 | 3 | 86.75 | 91.36 | 82.83 | 78.73 | 73.80 | 46.41 | 82.69 |
| 3 | 3 | 86.89 | 91.63 | 82.35 | 79.47 | 73.22 | 46.67 | 82.71 |
| 1 | 4 | 86.64 | 91.72 | 82.45 | 79.19 | 73.62 | 46.26 | 82.72 |
| 2 | 4 | 87.18 | 92.16 | 82.84 | 78.72 | 74.00 | 45.74 | 82.98 |
| 4 | 4 | 87.30 | 91.69 | 82.72 | 81.13 | 73.70 | 49.49 | 83.31 |

| Rn | Feature maps | Imp. surf. IoU | Building IoU | Tree IoU | Car IoU | Low veg. IoU | Clutter IoU | mIoU |
|---|---|---|---|---|---|---|---|---|
| 2 | 1,2 | 86.49 | 91.05 | 81.88 | 76.78 | 73.00 | 45.54 | 81.84 |
| 2 | 2,3 | 87.05 | 91.94 | 82.22 | 78.99 | 73.59 | 45.23 | 82.76 |
| 2 | 2,4 | 87.05 | 91.80 | 82.61 | 79.26 | 73.48 | 47.68 | 82.84 |
| 2 | 3,4 | 87.18 | 92.16 | 82.84 | 78.72 | 74.00 | 45.74 | 82.98 |
| 4 | 1,2,3,4 | 87.30 | 91.69 | 82.72 | 81.13 | 73.70 | 49.49 | 83.31 |

| Inter-layer feature fusion | Imp. surf. IoU | Building IoU | Tree IoU | Car IoU | Low veg. IoU | Clutter IoU | mIoU |
|---|---|---|---|---|---|---|---|
| w/o | 86.77 | 92.01 | 81.92 | 78.81 | 73.02 | 54.11 | 82.51 |
| Edge smoothing | 87.05 | 91.80 | 82.61 | 79.25 | 73.48 | 47.68 | 82.84 |
| w/ | 87.30 | 91.69 | 82.72 | 81.13 | 73.70 | 49.49 | 83.31 |
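The tables in this extract do not state which classes enter the mIoU. Averaging the per-class IoUs of the full-model row shows that the reported 83.31 equals the mean of the five classes other than Clutter rather than all six, and the same holds for the "w/o" row (82.51). The quick check below covers only the rows it reproduces; `mean` is a local helper, not a library call.

```python
def mean(values):
    return sum(values) / len(values)


# Per-class IoU (%) of the full model ("w/" row) in the table above.
full_model = {"Imp.surf.": 87.30, "Building": 91.69, "Tree": 82.72,
              "Car": 81.13, "Lowveg": 73.70, "Clutter": 49.49}
# The five non-Clutter IoUs of the "w/o" row.
baseline = mean([86.77, 92.01, 81.92, 78.81, 73.02])

without_clutter = mean([v for name, v in full_model.items() if name != "Clutter"])
with_clutter = mean(list(full_model.values()))
print(f"{without_clutter:.2f} {with_clutter:.2f} {baseline:.2f}")  # 83.31 77.67 82.51
```

Including Clutter would lower the full model's figure to 77.67, so the choice of averaged classes matters when comparing against results from other papers.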

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).