Submitted: 22 October 2023

Posted: 23 October 2023

Abstract

Keywords:

1. Introduction

1.1. Research Background

1.2. Related work

1.3. The contribution of the work in this paper

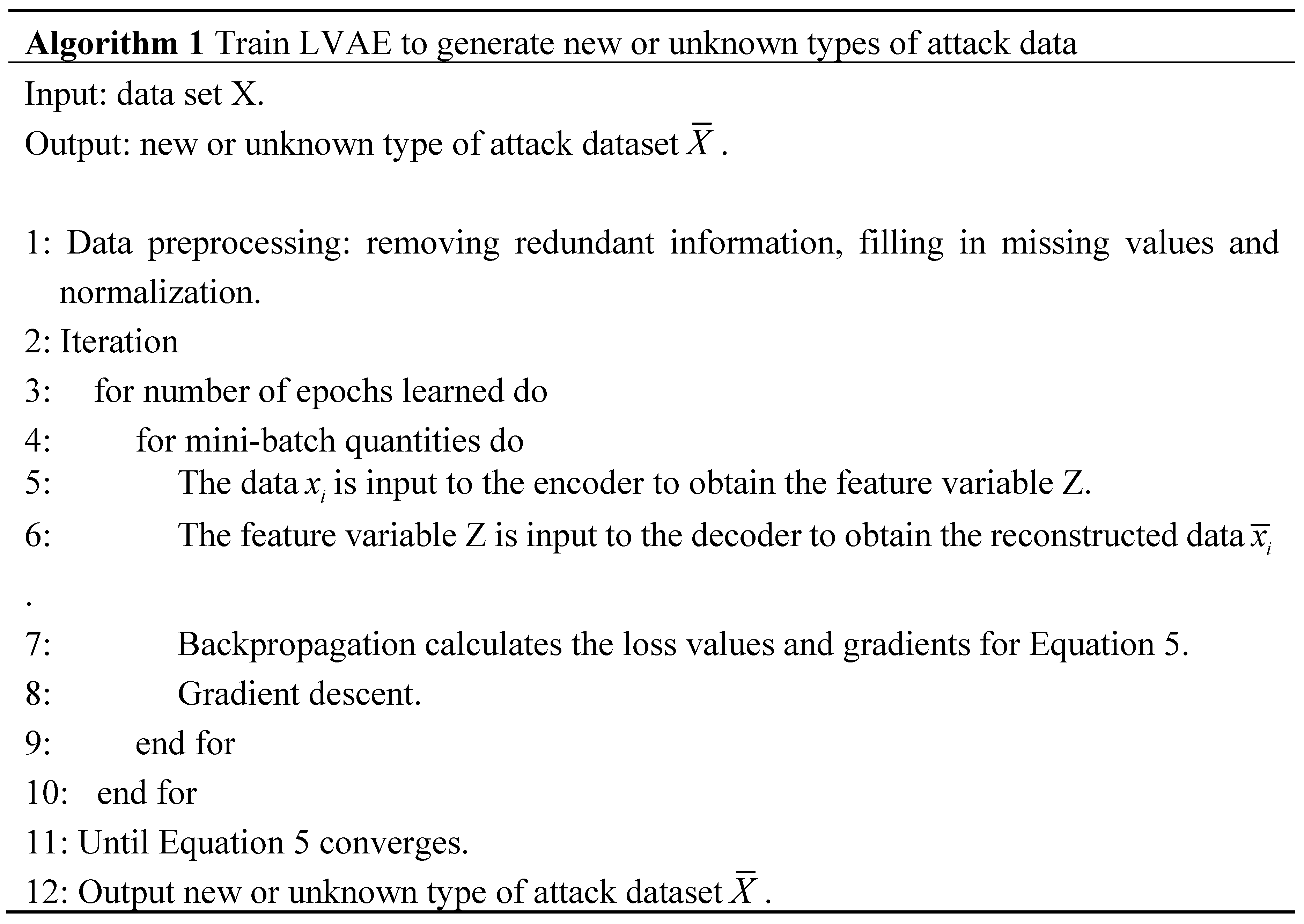

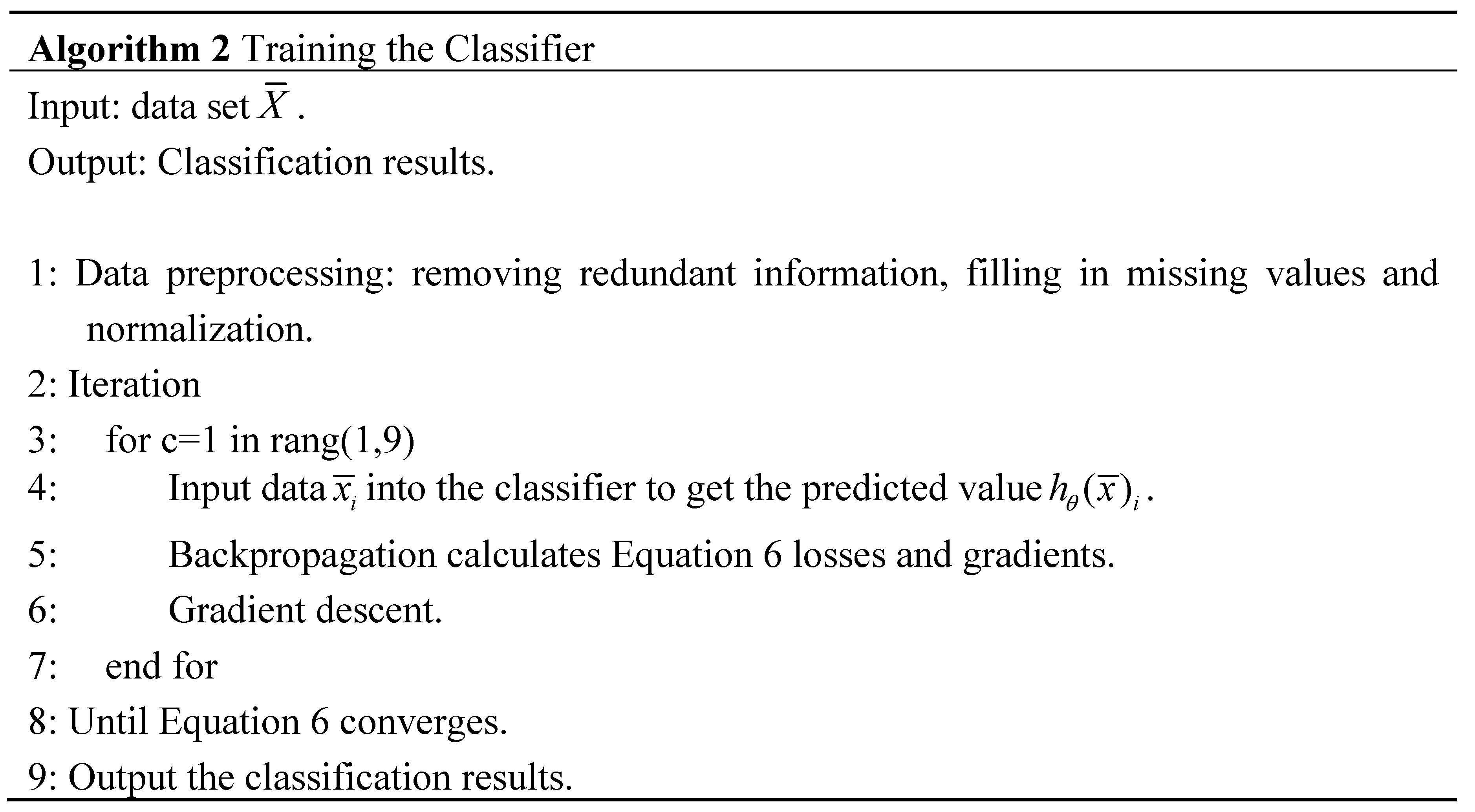

- We introduce a novel approach for identifying unknown attacks, called LVAE, built around an effective reconstruction loss term based on the logarithmic hyperbolic cosine (log-cosh) function. This function can accurately capture the intricate distribution of real attack data, which allows discrete features to be modeled and simulated so that novel, unknown attacks can be generated;

- We utilize eight different techniques for feature extraction and data classification and select the most accurate one, to ensure strong performance in identifying unknown attacks;

- We trained our model on the CICIDS2017 dataset, incorporating varying numbers of real and unknown-type attacks. Compared with several state-of-the-art methods, our LVAE approach achieved higher accuracy and F1 scores, significantly improving the detection rate of unknown-type attacks.
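
The log-cosh reconstruction term named in the first contribution can be illustrated with a small sketch. The exact form of the LVAE loss is not given in this section, so the version below is an assumption: it uses the plain `log(cosh(x))` error averaged over features, plus the usual VAE KL term against a standard normal prior. The function names and the `kl_weight` parameter are hypothetical and chosen only for illustration.

```python
import math


def log_cosh(x: float) -> float:
    """Numerically stable log(cosh(x)).

    Uses log(cosh(x)) = |x| + log(1 + exp(-2|x|)) - log(2), which avoids
    the overflow that cosh(x) itself would hit for large |x|.
    """
    a = abs(x)
    return a + math.log1p(math.exp(-2.0 * a)) - math.log(2.0)


def log_cosh_reconstruction_loss(x, x_hat):
    """Mean log-cosh error between an input vector and its reconstruction."""
    if len(x) != len(x_hat):
        raise ValueError("x and x_hat must have the same length")
    return sum(log_cosh(r - t) for t, r in zip(x, x_hat)) / len(x)


def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, diag(exp(log_var))) and N(0, I)."""
    return -0.5 * sum(
        1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var)
    )


def lvae_style_loss(x, x_hat, mu, log_var, kl_weight=1.0):
    """Assumed total loss: log-cosh reconstruction plus weighted KL term."""
    reconstruction = log_cosh_reconstruction_loss(x, x_hat)
    return reconstruction + kl_weight * kl_to_standard_normal(mu, log_var)
```

For small residuals log-cosh behaves like half the squared error, and for large residuals like the absolute error minus a constant, which is one common motivation for preferring it over a pure squared-error term when the data contain outliers.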

2. Materials and Methods

2.1. Log-cosh Variational Auto Encoder

2.2. Classification stage

3. Experiments

3.1. Description of the dataset

3.2. Assessment of indicators
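
The result tables in this paper report accuracy and F1. As a minimal sketch, the two indicators can be computed from binary confusion counts as shown below. This assumes the standard definitions and treats the attack class as the positive label (`positive=1`); neither assumption is confirmed by the text of this section, and the helper names are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary labelling."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    """Fraction of all samples that were classified correctly."""
    total = tp + fp + tn + fn
    return (tp + tn) / total if total else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall, written as 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Usage: five samples, attack = 1, benign = 0.
tp, fp, tn, fn = confusion_counts([1, 1, 1, 0, 0], [1, 1, 0, 0, 1])
example_accuracy = accuracy(tp, fp, tn, fn)
example_f1 = f1_score(tp, fp, fn)
```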

3.3. Setting of model hyperparameters

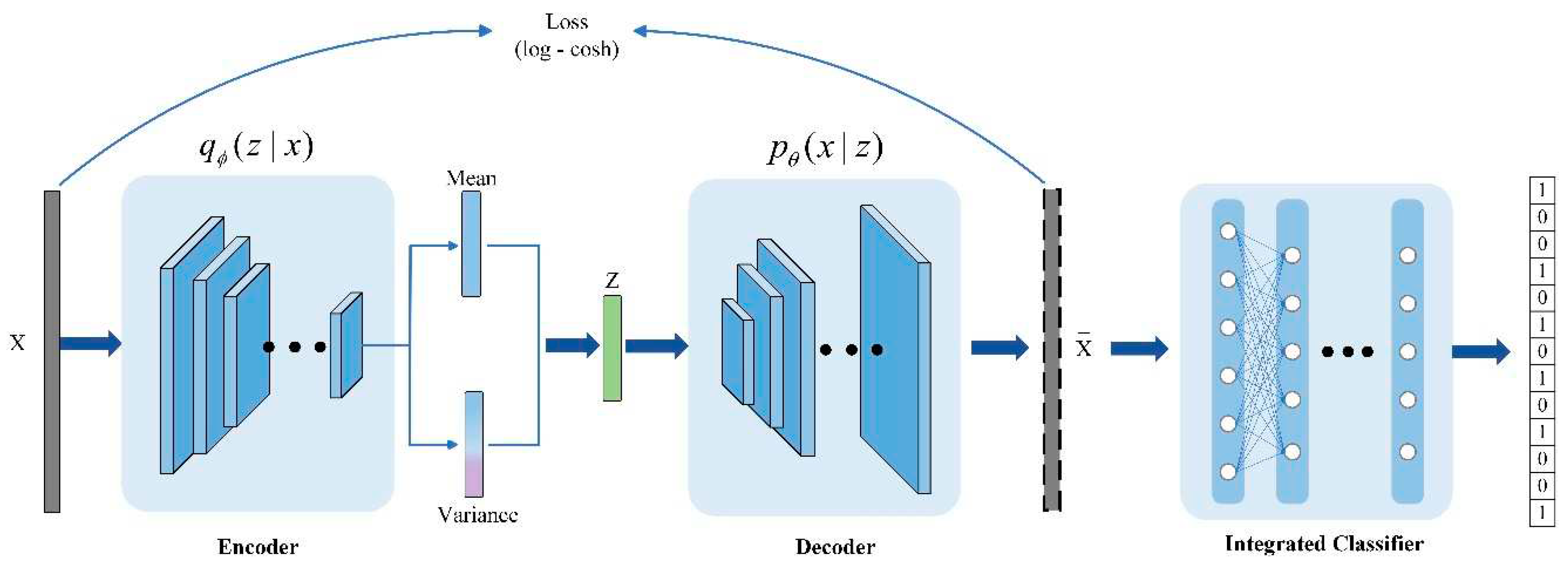

- Multilayer Perceptron (MLP): The hidden layer consists of 80 nodes with ReLU activation. The model is trained for 5 epochs with a batch size of 5, and the Adam optimizer is used for loss optimization;

- Gaussian-based Naive Bayes (GaussianNB): No specific priors are set;

- Decision Tree (DT): The criterion is set to entropy, and the maximum tree depth is set to 4. The remaining parameters use default values;

- Random Forest (RF): The number of estimators is set to 100, while the other parameters use default values;

- Support Vector Machine (SVM): The gamma parameter is set to scale, and C is set to 1;

- Logistic Regression (LR): The penalty is set to L2, C is set to 1, and the maximum number of iterations is set to 1,200,000;

- Gradient Boost (GB): The random_state is set to 0;

- Gated Recurrent Unit (GRU): The hidden layer consists of 80 nodes with a sigmoid activation function. Dropout is set to 0.2. The output layer utilizes the softmax activation function. The model is trained for 5 epochs with a batch size of 10, and the Adam optimizer is used for loss optimization.
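
The settings listed above can be collected into a single configuration, sketched below. The keys for the classical models mirror scikit-learn constructor arguments, which is an assumption: the text does not name the library used. The dictionary names, the key names for the two neural models, and the `build_sklearn_classifiers` helper are hypothetical. The helper imports scikit-learn lazily, so the configuration itself has no third-party dependency.

```python
# Classical models: keys follow scikit-learn constructor argument names
# (assumed library; parameters not listed here keep their defaults).
CLASSIFIER_PARAMS = {
    "GaussianNB": {"priors": None},  # no priors specified
    "DT": {"criterion": "entropy", "max_depth": 4},
    "RF": {"n_estimators": 100},
    "SVM": {"gamma": "scale", "C": 1.0},
    "LR": {"penalty": "l2", "C": 1.0, "max_iter": 1_200_000},
    "GB": {"random_state": 0},
}

# Neural models: framework-neutral description of the stated settings.
NEURAL_PARAMS = {
    "MLP": {
        "hidden_units": 80,
        "activation": "relu",
        "epochs": 5,
        "batch_size": 5,
        "optimizer": "adam",
    },
    "GRU": {
        "hidden_units": 80,
        "activation": "sigmoid",
        "dropout": 0.2,
        "output_activation": "softmax",
        "epochs": 5,
        "batch_size": 10,
        "optimizer": "adam",
    },
}


def build_sklearn_classifiers(params=CLASSIFIER_PARAMS):
    """Instantiate the classical models; requires scikit-learn to be installed."""
    from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
    from sklearn.linear_model import LogisticRegression
    from sklearn.naive_bayes import GaussianNB
    from sklearn.svm import SVC
    from sklearn.tree import DecisionTreeClassifier

    constructors = {
        "GaussianNB": GaussianNB,
        "DT": DecisionTreeClassifier,
        "RF": RandomForestClassifier,
        "SVM": SVC,
        "LR": LogisticRegression,
        "GB": GradientBoostingClassifier,
    }
    return {name: constructors[name](**kwargs) for name, kwargs in params.items()}
```

Keeping the settings in plain dictionaries makes it easy to log them next to each result row and to rerun a single classifier with one value changed.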

4. Results and discussion

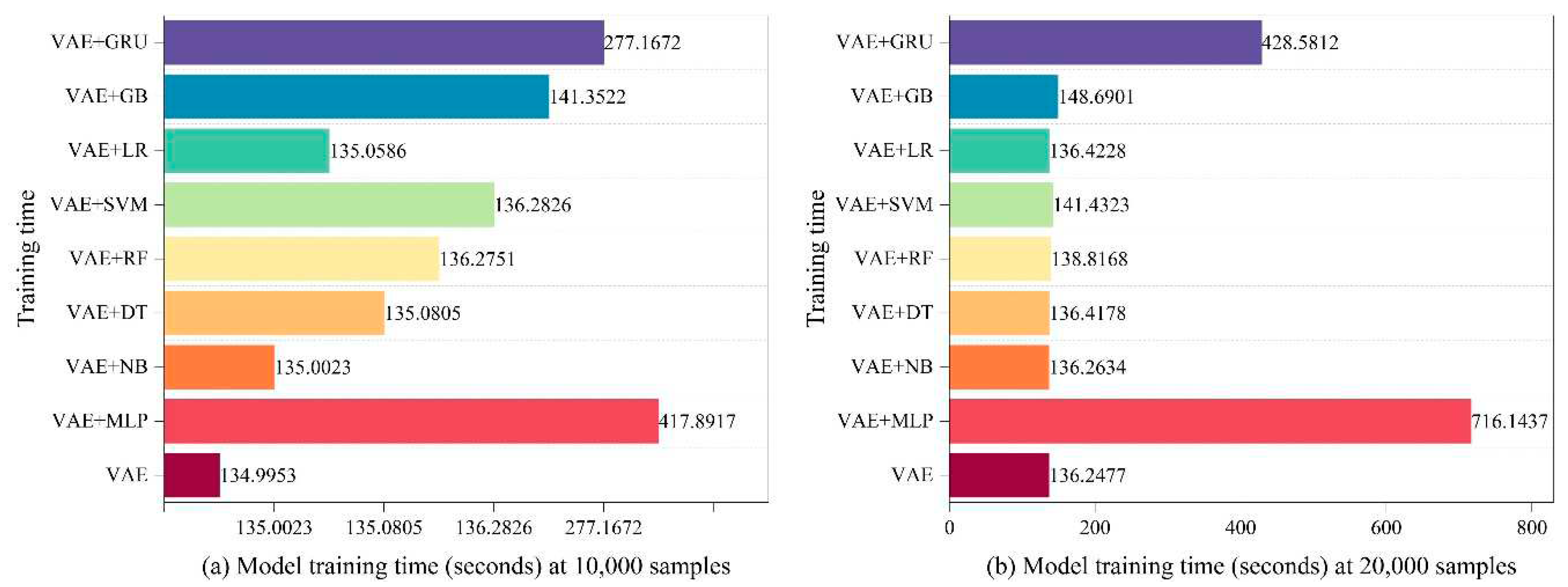

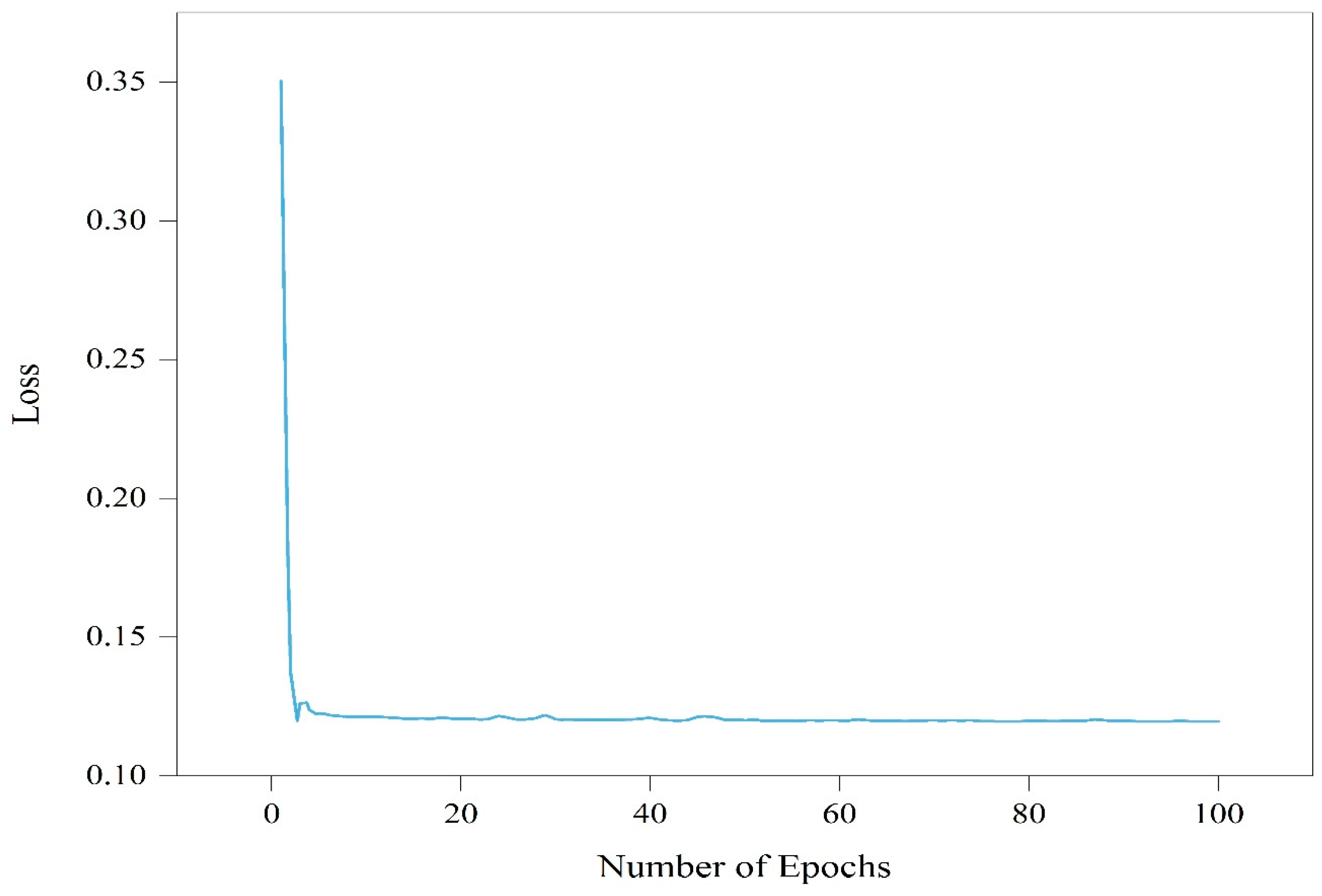

- Experimental Outcomes and Analysis: To assess the effectiveness of our LVAE technique, we performed a series of experiments. The results show that our approach accurately detects attacks of unknown types. We selected and fine-tuned the model's parameters with care, determining the hyperparameters experimentally, which further improved overall performance against new threats;

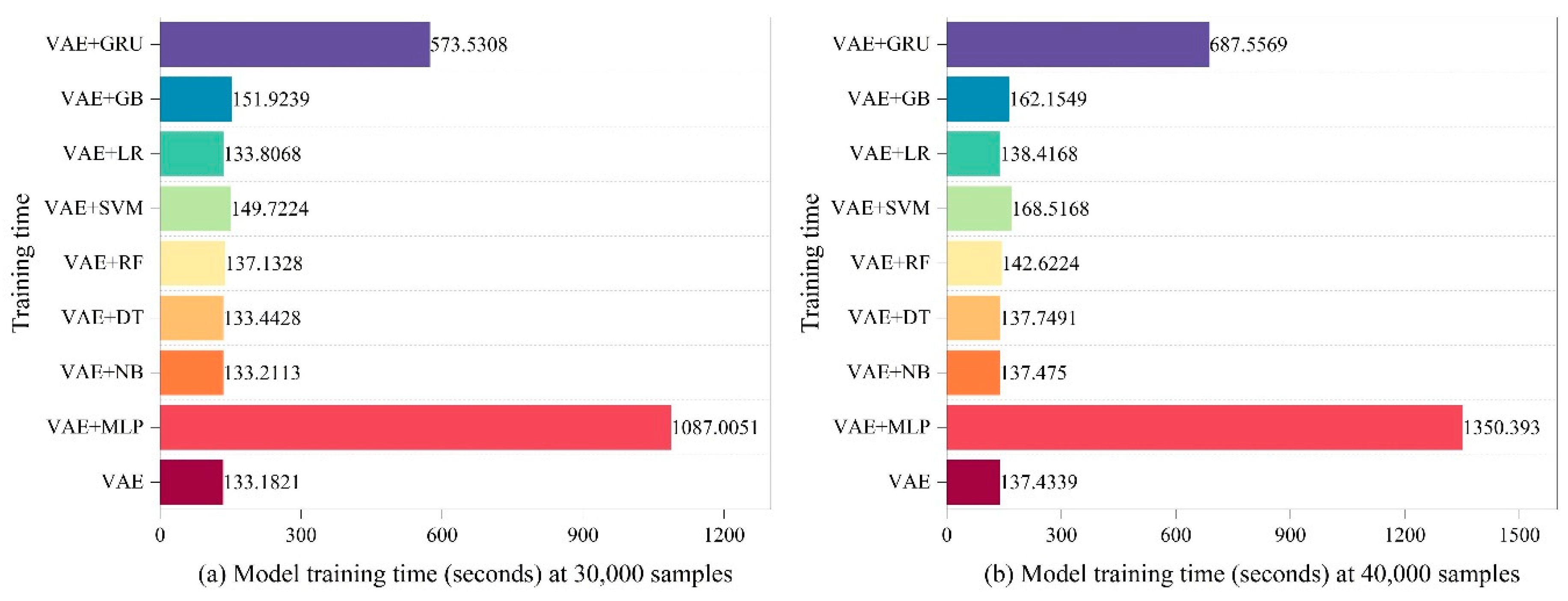

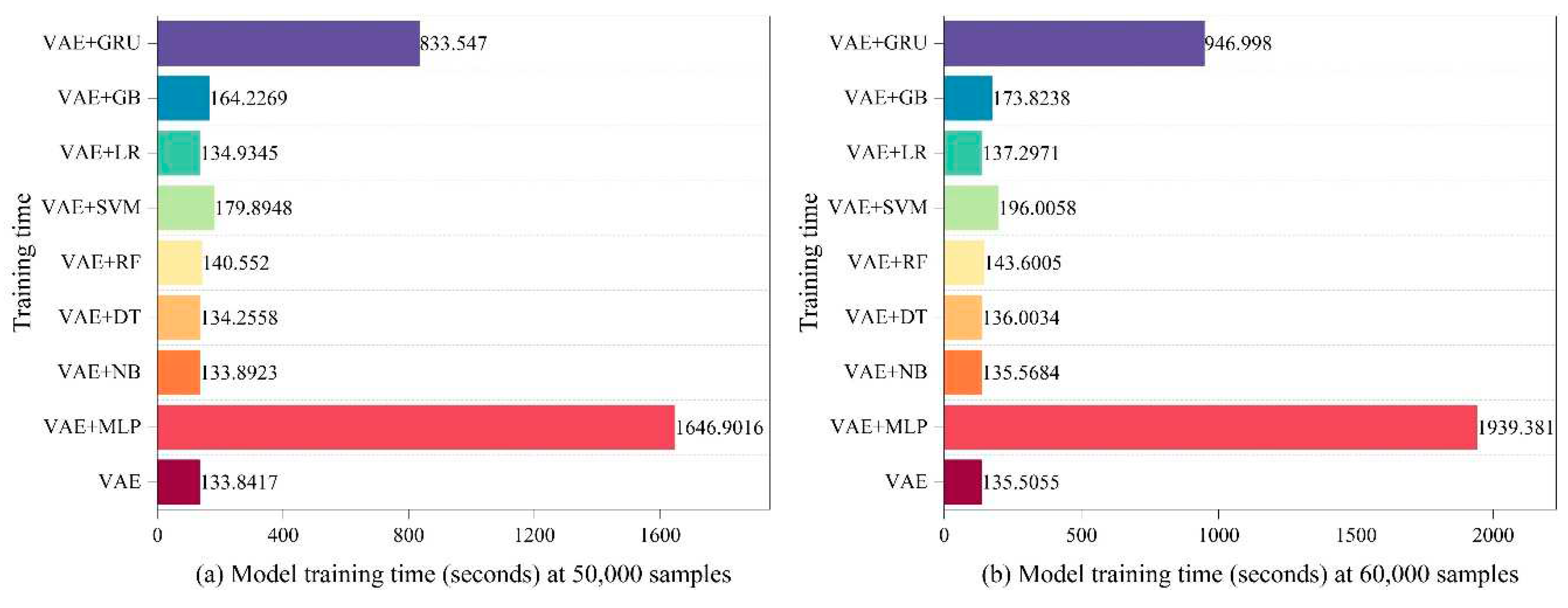

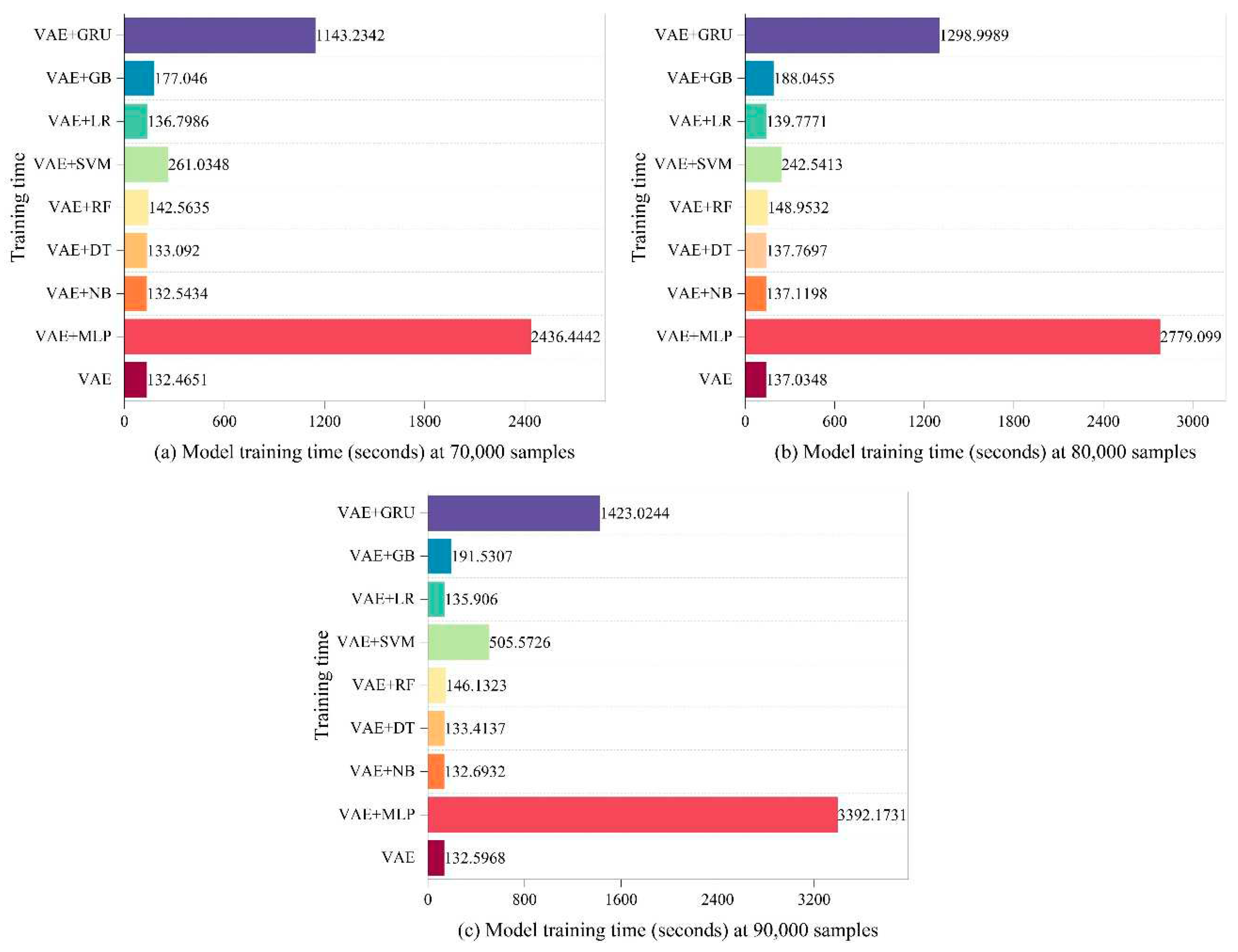

- Computational Cost Analysis: To identify novel or unknown attacks, the classification stage integrates eight different techniques, each with parameters chosen to maximize performance. The computational cost differs from one technique to another, but we took steps to keep the pipeline efficient without sacrificing accuracy.
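
One simple way to compare the computational cost of the individual techniques is to time each training or prediction call. The sketch below is an illustration only and does not reproduce the measurement procedure used in this paper, which is not described in this section. The helper names `time_call` and `compare_costs` are hypothetical.

```python
import time


def time_call(fn, *args, repeats=3, **kwargs):
    """Call fn `repeats` times; return (best wall-clock seconds, last result)."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    best = float("inf")
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        best = min(best, time.perf_counter() - start)
    return best, result


def compare_costs(tasks, repeats=3):
    """Time each zero-argument callable in `tasks`; return (name, seconds) pairs, fastest first."""
    timings = {name: time_call(fn, repeats=repeats)[0] for name, fn in tasks.items()}
    return sorted(timings.items(), key=lambda item: item[1])


# Usage with stand-in workloads; in practice each callable would wrap
# something like `lambda: model.fit(X_train, y_train)`.
ranking = compare_costs({
    "small": lambda: sum(range(1_000)),
    "large": lambda: sum(range(100_000)),
})
```

Taking the best of several repeats reduces the influence of unrelated system load on the comparison.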

4.1. Analysis of experimental results

4.2. Calculated cost analysis of the various components of the LVAE methodology

5. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Dong, S.; Xia, Y.; Peng, T. Network Abnormal Traffic Detection Model Based on Semi-Supervised Deep Reinforcement Learning. IEEE Trans. Netw. Serv. Manag. 2021, 18, 4197–4212.

- Tian, Y.; Mirzabagheri, M.; Bamakan, S.M.H.; Wang, H.; Qu, Q. Ramp loss one-class support vector machine; A robust and effective approach to anomaly detection problems. Neurocomputing 2018, 310, 223–235.

- Kamarudin, M.H.; Maple, C.; Watson, T.; Safa, N.S. A LogitBoost-Based Algorithm for Detecting Known and Unknown Web Attacks. IEEE Access 2017, 5, 26190–26200.

- Ahmad, R.; Alsmadi, I.; Alhamdani, W.; Tawalbeh, L.a. A Deep Learning Ensemble Approach to Detecting Unknown Network Attacks. J. Inf. Secur. Appl. 2022, 67, 103196.

- Liu, Y.; Chen, K.; Liao, X.; Zhang, W. A genetic clustering method for intrusion detection. Pattern Recognit. 2004, 37, 927–942.

- Xu, X.; Shen, F.; Yang, Y.; Shen, H.T.; Li, X. Learning Discriminative Binary Codes for Large-scale Cross-modal Retrieval. IEEE Trans. Image Process. 2017, 26, 2494–2507.

- Luo, Y.; Yang, Y.; Shen, F.; Huang, Z.; Zhou, P.; Shen, H.T. Robust discrete code modeling for supervised hashing. Pattern Recognit. 2018, 75, 128–135.

- Hu, M.; Yang, Y.; Shen, F.; Xie, N.; Shen, H.T. Hashing with Angular Reconstructive Embeddings. IEEE Trans. Image Process. 2018, 27, 545–555.

- Xu, X.; Lu, H.; Song, J.; Yang, Y.; Shen, H.T.; Li, X. Ternary Adversarial Networks with Self-Supervision for Zero-Shot Cross-Modal Retrieval. IEEE Trans. Cybern. 2020, 50, 2400–2413.

- Lee, J.-S.; Chen, Y.-C.; Chew, C.-J.; Chen, C.-L.; Huynh, T.-N.; Kuo, C.-W. CoNN-IDS: Intrusion detection system based on collaborative neural networks and agile training. Comput. Secur. 2022, 122, 102908.

- Lopez-Martin, M.; Sanchez-Esguevillas, A.; Arribas, J.I.; Carro, B. Contrastive Learning Over Random Fourier Features for IoT Network Intrusion Detection. IEEE Internet Things J. 2023, 10, 8505–8513.

- Singh, A.; Chatterjee, K.; Satapathy, S.C. An edge based hybrid intrusion detection framework for mobile edge computing. Complex Intell. Syst. 2022, 8, 3719–3746.

- Zoppi, T.; Ceccarelli, A.; Puccetti, T.; Bondavalli, A. Which algorithm can detect unknown attacks? Comparison of supervised, unsupervised and meta-learning algorithms for intrusion detection. Comput. Secur. 2023, 127, 103107.

- Boukela, L.; Zhang, G.; Yacoub, M.; Bouzefrane, S. A near-autonomous and incremental intrusion detection system through active learning of known and unknown attacks. In Proceedings of the 2021 International Conference on Security, Pattern Analysis, and Cybernetics (SPAC), 18–20 June 2021; pp. 374–379.

- Soltani, M.; Ousat, B.; Jafari Siavoshani, M.; Jahangir, A.H. An adaptable deep learning-based intrusion detection system to zero-day attacks. J. Inf. Secur. Appl. 2023, 76, 103516.

- Mahdavi, E.; Fanian, A.; Mirzaei, A.; Taghiyarrenani, Z. ITL-IDS: Incremental Transfer Learning for Intrusion Detection Systems. Knowl.-Based Syst. 2022, 253, 109542.

- Mananayaka, A.K.; Chung, S.S. Network Intrusion Detection with Two-Phased Hybrid Ensemble Learning and Automatic Feature Selection. IEEE Access 2023, 11, 45154–45167.

- Zhou, X.; Liang, W.; Li, W.; Yan, K.; Shimizu, S.; Wang, K.I.K. Hierarchical Adversarial Attacks Against Graph-Neural-Network-Based IoT Network Intrusion Detection System. IEEE Internet Things J. 2022, 9, 9310–9319.

- Kumar, V.; Sinha, D. A robust intelligent zero-day cyber-attack detection technique. Complex Intell. Syst. 2021, 7, 2211–2234.

- Sarhan, M.; Layeghy, S.; Gallagher, M.; Portmann, M. From zero-shot machine learning to zero-day attack detection. Int. J. Inf. Secur. 2023, 22, 947–959.

- Sheng, C.; Yao, Y.; Li, W.; Yang, W.; Liu, Y. Unknown Attack Traffic Classification in SCADA Network Using Heuristic Clustering Technique. IEEE Trans. Netw. Serv. Manag. 2023, 1.

- Hairab, B.I.; Elsayed, M.S.; Jurcut, A.D.; Azer, M.A. Anomaly Detection Based on CNN and Regularization Techniques Against Zero-Day Attacks in IoT Networks. IEEE Access 2022, 10, 98427–98440.

- Araujo-Filho, P.F.d.; Naili, M.; Kaddoum, G.; Fapi, E.T.; Zhu, Z. Unsupervised GAN-Based Intrusion Detection System Using Temporal Convolutional Networks and Self-Attention. IEEE Trans. Netw. Serv. Manag. 2023, 1.

- Verkerken, M.; D’hooge, L.; Sudyana, D.; Lin, Y.D.; Wauters, T.; Volckaert, B.; Turck, F.D. A Novel Multi-Stage Approach for Hierarchical Intrusion Detection. IEEE Trans. Netw. Serv. Manag. 2023, 1.

- Sohi, S.M.; Seifert, J.-P.; Ganji, F. RNNIDS: Enhancing network intrusion detection systems through deep learning. Comput. Secur. 2021, 102, 102151.

- Moustafa, N.; Keshk, M.; Choo, K.-K.R.; Lynar, T.; Camtepe, S.; Whitty, M. DAD: A Distributed Anomaly Detection system using ensemble one-class statistical learning in edge networks. Future Gener. Comput. Syst. 2021, 118, 240–251.

- Debicha, I.; Bauwens, R.; Debatty, T.; Dricot, J.-M.; Kenaza, T.; Mees, W. TAD: Transfer learning-based multi-adversarial detection of evasion attacks against network intrusion detection systems. Future Gener. Comput. Syst. 2023, 138, 185–197.

- Dina, A.S.; Manivannan, D. Intrusion detection based on Machine Learning techniques in computer networks. Internet Things 2021, 16, 100462.

- Lai, Y.C.; Sudyana, D.; Lin, Y.D.; Verkerken, M.; D’hooge, L.; Wauters, T.; Volckaert, B.; Turck, F.D. Task Assignment and Capacity Allocation for ML-Based Intrusion Detection as a Service in a Multi-Tier Architecture. IEEE Trans. Netw. Serv. Manag. 2023, 20, 672–683.

- Sabeel, U.; Heydari, S.S.; El-Khatib, K.; Elgazzar, K. Unknown, Atypical and Polymorphic Network Intrusion Detection: A Systematic Survey. IEEE Trans. Netw. Serv. Manag. 2023, 1–1.

- Rani, S.V.J.; Ioannou, I.; Nagaradjane, P.; Christophorou, C.; Vassiliou, V.; Yarramsetti, H.; Shridhar, S.; Balaji, L.M.; Pitsillides, A. A Novel Deep Hierarchical Machine Learning Approach for Identification of Known and Unknown Multiple Security Attacks in a D2D Communications Network. IEEE Access 2023, 1–1.

- Lu, C.; Wang, X.; Yang, A.; Liu, Y.; Dong, Z. A Few-shot Based Model-Agnostic Meta-Learning for Intrusion Detection in Security of Internet of Things. IEEE Internet Things J. 2023, 1.

- Shin, G.Y.; Kim, D.W.; Han, M.M. Data Discretization and Decision Boundary Data Point Analysis for Unknown Attack Detection. IEEE Access 2022, 10, 114008–114015.

- Lan, J.; Liu, X.; Li, B.; Zhao, J. A novel hierarchical attention-based triplet network with unsupervised domain adaptation for network intrusion detection. Appl. Intell. 2023, 53, 11705–11726.

- Zavrak, S.; İskefiyeli, M. Anomaly-Based Intrusion Detection from Network Flow Features Using Variational Autoencoder. IEEE Access 2020, 8, 108346–108358.

- Vu, L.; Nguyen, Q.U.; Nguyen, D.N.; Hoang, D.T.; Dutkiewicz, E. Deep Generative Learning Models for Cloud Intrusion Detection Systems. IEEE Trans. Cybern. 2023, 53, 565–577.

- Long, C.; Xiao, J.; Wei, J.; Zhao, J.; Wan, W.; Du, G. Autoencoder ensembles for network intrusion detection. In Proceedings of the 2022 24th International Conference on Advanced Communication Technology (ICACT), 13–16 February 2022; pp. 323–333.

- Yang, J.; Chen, X.; Chen, S.; Jiang, X.; Tan, X. Conditional Variational Auto-Encoder and Extreme Value Theory Aided Two-Stage Learning Approach for Intelligent Fine-Grained Known/Unknown Intrusion Detection. IEEE Trans. Inf. Forensics Secur. 2021, 16, 3538–3553.

- Abdalgawad, N.; Sajun, A.; Kaddoura, Y.; Zualkernan, I.A.; Aloul, F. Generative Deep Learning to Detect Cyberattacks for the IoT-23 Dataset. IEEE Access 2022, 10, 6430–6441.

- Jin, D.; Chen, S.; He, H.; Jiang, X.; Cheng, S.; Yang, J. Federated Incremental Learning based Evolvable Intrusion Detection System for Zero-Day Attacks. IEEE Netw. 2023, 37, 125–132.

- Yang, L.; Song, Y.; Gao, S.; Hu, A.; Xiao, B. Griffin: Real-Time Network Intrusion Detection System via Ensemble of Autoencoder in SDN. IEEE Trans. Netw. Serv. Manag. 2022, 19, 2269–2281.

- Zahoora, U.; Rajarajan, M.; Pan, Z.; Khan, A. Zero-day Ransomware Attack Detection using Deep Contractive Autoencoder and Voting based Ensemble Classifier. Appl. Intell. 2022, 52, 13941–13960.

- Boppana, T.K.; Bagade, P. GAN-AE: An unsupervised intrusion detection system for MQTT networks. Eng. Appl. Artif. Intell. 2023, 119, 105805.

- Kim, C.; Chang, S.Y.; Kim, J.; Lee, D.; Kim, J. Automated, Reliable Zero-day Malware Detection based on Autoencoding Architecture. IEEE Trans. Netw. Serv. Manag. 2023, 1.

- Li, R.; Li, Q.; Zhou, J.; Jiang, Y. ADRIoT: An Edge-Assisted Anomaly Detection Framework Against IoT-Based Network Attacks. IEEE Internet Things J. 2022, 9, 10576–10587.

- Li, Z.; Chen, S.; Dai, H.; Xu, D.; Chu, C.K.; Xiao, B. Abnormal Traffic Detection: Traffic Feature Extraction and DAE-GAN With Efficient Data Augmentation. IEEE Trans. Reliab. 2023, 72, 498–510.

- Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. ICISSP 2018, 1, 108–116.

- Xu, C.; Shen, J.; Du, X. A Method of Few-Shot Network Intrusion Detection Based on Meta-Learning Framework. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3540–3552.

| Traffic Class | Label | Numbers | Ratio |
|---|---|---|---|
| Benign | Benign | 2,273,097 | 80.30% |
| DDoS | DDoS | 128,027 | 4.52% |
| DoS | DoS Hulk | 231,073 | 8.16% |
| | DoS GoldenEye | 10,293 | 0.36% |
| | DoS slowloris | 5,796 | 0.20% |
| | DoS Slowhttptest | 5,499 | 0.19% |
| Port Scan | Port Scan | 158,930 | 5.61% |
| Botnet | Bot | 1,966 | 0.07% |
| Brute Force | FTP-Patator | 7,938 | 0.28% |
| | SSH-Patator | 5,897 | 0.20% |
| Web Attack | Web Attack – Brute Force | 1,507 | 0.05% |
| | Web Attack – Sql Injection | 21 | 0.001% |
| | Web Attack – XSS | 652 | 0.02% |
| Infiltration | Infiltration | 36 | 0.002% |
| Heartbleed | Heartbleed | 11 | 0.001% |
| Total | N | 2,830,743 | 100% |

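| Training set distribution | Training dataset | Test set distribution | Test dataset |
|---|---|---|---|
| Benign (50%), Attack (50%) | 10,000 | Benign 100, Attack 1,900 | 2,000 + 8,000 (generated) |
| Benign (50%), Attack (50%) | 20,000 | Benign 100, Attack 1,900 | 2,000 + 18,000 (generated) |
| Benign (50%), Attack (50%) | 30,000 | Benign 100, Attack 1,900 | 2,000 + 28,000 (generated) |
| Benign (50%), Attack (50%) | 40,000 | Benign 100, Attack 1,900 | 2,000 + 38,000 (generated) |
| Benign (50%), Attack (50%) | 50,000 | Benign 100, Attack 1,900 | 2,000 + 48,000 (generated) |
| Benign (50%), Attack (50%) | 60,000 | Benign 100, Attack 1,900 | 2,000 + 58,000 (generated) |
| Benign (50%), Attack (50%) | 70,000 | Benign 100, Attack 1,900 | 2,000 + 68,000 (generated) |
| Benign (50%), Attack (50%) | 80,000 | Benign 100, Attack 1,900 | 2,000 + 78,000 (generated) |
| Benign (50%), Attack (50%) | 90,000 | Benign 100, Attack 1,900 | 2,000 + 88,000 (generated) |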

| Number of samples | Methods | Train (accuracy) | Test (accuracy) | F1 |
|---|---|---|---|---|
| Train (10,000) Test (10,000) | MLP | 95.44% | 89.65% | 93.67% |
| | NB | 80.84% | 97.82% | 97.98% |
| | DT | 88.29% | 98.95% | 98.49% |
| | RF | 99.95% | 98.87% | 98.45% |
| | SVM | 93.36% | 94.07% | 96.03% |
| | LR | 85.09% | 96.40% | 97.21% |
| | GB | 99.65% | 98.88% | 98.46% |
| | GRU | 80.97% | 99.01% | 98.52% |
| Train (20,000) Test (20,000) | MLP | 95.19% | 98.63% | 98.83% |
| | NB | 63.60% | 94.37% | 96.68% |
| | DT | 91.57% | 97.56% | 98.31% |
| | RF | 99.98% | 99.47% | 99.24% |
| | SVM | 92.95% | 97.12% | 98.07% |
| | LR | 83.35% | 97.23% | 98.12% |
| | GB | 99.24% | 97.55% | 98.27% |
| | GRU | 87.30% | 99.50% | 99.25% |
| Train (30,000) Test (30,000) | MLP | 96.13% | 99.02% | 99.26% |
| | NB | 79.27% | 3.88% | 7.46% |
| | DT | 91.13% | 98.37% | 98.87% |
| | RF | 99.93% | 99.66% | 99.50% |
| | SVM | 93.62% | 98.08% | 98.71% |
| | LR | 84.39% | 98.17% | 98.76% |
| | GB | 99.09% | 98.91% | 99.13% |
| | GRU | 88.04% | 99.67% | 99.50% |

| Number of samples | Methods | Train (accuracy) | Test (accuracy) | F1 |
|---|---|---|---|---|
| Train (40,000) Test (40,000) | MLP | 96.90% | 99.64% | 99.61% |
| | NB | 60.49% | 98.65% | 99.10% |
| | DT | 91.73% | 98.77% | 99.15% |
| | RF | 99.97% | 99.73% | 99.62% |
| | SVM | 94.44% | 98.98% | 99.25% |
| | LR | 86.85% | 98.63% | 99.07% |
| | GB | 99.21% | 99.74% | 99.62% |
| | GRU | 91.13% | 99.75% | 99.63% |
| Train (50,000) Test (50,000) | MLP | 97.33% | 98.62% | 99.15% |
| | NB | 77.73% | 98.94% | 99.29% |
| | DT | 92.07% | 99.03% | 99.33% |
| | RF | 99.94% | 99.80% | 99.70% |
| | SVM | 94.94% | 98.19% | 98.90% |
| | LR | 88.29% | 98.90% | 99.25% |
| | GB | 99.17% | 99.78% | 99.69% |
| | GRU | 93.26% | 99.80% | 99.70% |
| Train (60,000) Test (60,000) | MLP | 97.41% | 99.06% | 99.04% |
| | NB | 77.71% | 99.13% | 99.42% |
| | DT | 92.56% | 99.18% | 99.43% |
| | RF | 99.99% | 99.83% | 99.75% |
| | SVM | 94.28% | 98.97% | 99.32% |
| | LR | 88.52% | 99.09% | 99.38% |
| | GB | 99.21% | 99.83% | 99.75% |
| | GRU | 93.54% | 99.82% | 99.74% |

| Number of samples | Methods | Train (accuracy) | Test (accuracy) | F1 |
|---|---|---|---|---|
| Train (70,000) Test (70,000) | MLP | 97.28% | 99.85% | 99.81% |
| | NB | 59.99% | 99.85% | 99.79% |
| | DT | 91.84% | 99.29% | 99.52% |
| | RF | 99.98% | 99.86% | 99.79% |
| | SVM | 92.07% | 97.71% | 98.77% |
| | LR | 87.63% | 99.21% | 99.47% |
| | GB | 99.16% | 99.84% | 99.79% |
| | GRU | 91.12% | 99.86% | 99.79% |
| Train (80,000) Test (80,000) | MLP | 97.68% | 99.88% | 99.81% |
| | NB | 63.57% | 99.38% | 99.39% |
| | DT | 92.67% | 99.37% | 99.57% |
| | RF | 99.99% | 99.87% | 99.81% |
| | SVM | 95.52% | 98.85% | 99.31% |
| | LR | 89.10% | 99.31% | 99.54% |
| | GB | 99.09% | 99.86% | 99.81% |
| | GRU | 92.97% | 99.87% | 99.81% |
| Train (90,000) Test (90,000) | MLP | 97.71% | 99.32% | 99.55% |
| | NB | 65.17% | 99.45% | 99.63% |
| | DT | 92.85% | 99.44% | 99.62% |
| | RF | 99.99% | 99.87% | 99.83% |
| | SVM | 95.80% | 99.31% | 99.55% |
| | LR | 89.75% | 99.38% | 99.59% |
| | GB | 99.07% | 99.88% | 99.84% |
| | GRU | 95.32% | 99.89% | 99.83% |

| Research | Approach | Dataset | Accuracy | F1 |
|---|---|---|---|---|
| [10] | AE, DNN | CICIDS2017 | 97.28% | 72.81% |
| [38] | CVAE, Extreme value theory. | CICIDS2017 | 92.10% | 97.15% |
| [24] | AE, one-class SVM, RF, neural network. | CICIDS2017 | 98.77% | 98.97% |
| [48] | CNN. | CICIDS2017 | 94.64% | 99.62% |
| proposed | Improved VAE, A. | CICIDS2017 | 99.89% | 99.83% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).