Submitted: 05 June 2025

Posted: 06 June 2025

Abstract

Keywords:

1. Introduction

2. The Original Cognitive Architecture

3. Related Work

3.1. State of the Art in AI

- Symbolic interpretation: AI struggles to assign semantic significance to symbols, instead focusing on shallow comparisons like symmetry checks.

- Compositional reasoning: AI falters when it needs to apply multiple interacting rules simultaneously.

- Contextual rule application: Systems fail to apply rules differently based on complex contexts, often fixating on surface-level patterns.

3.2. Alternative Models

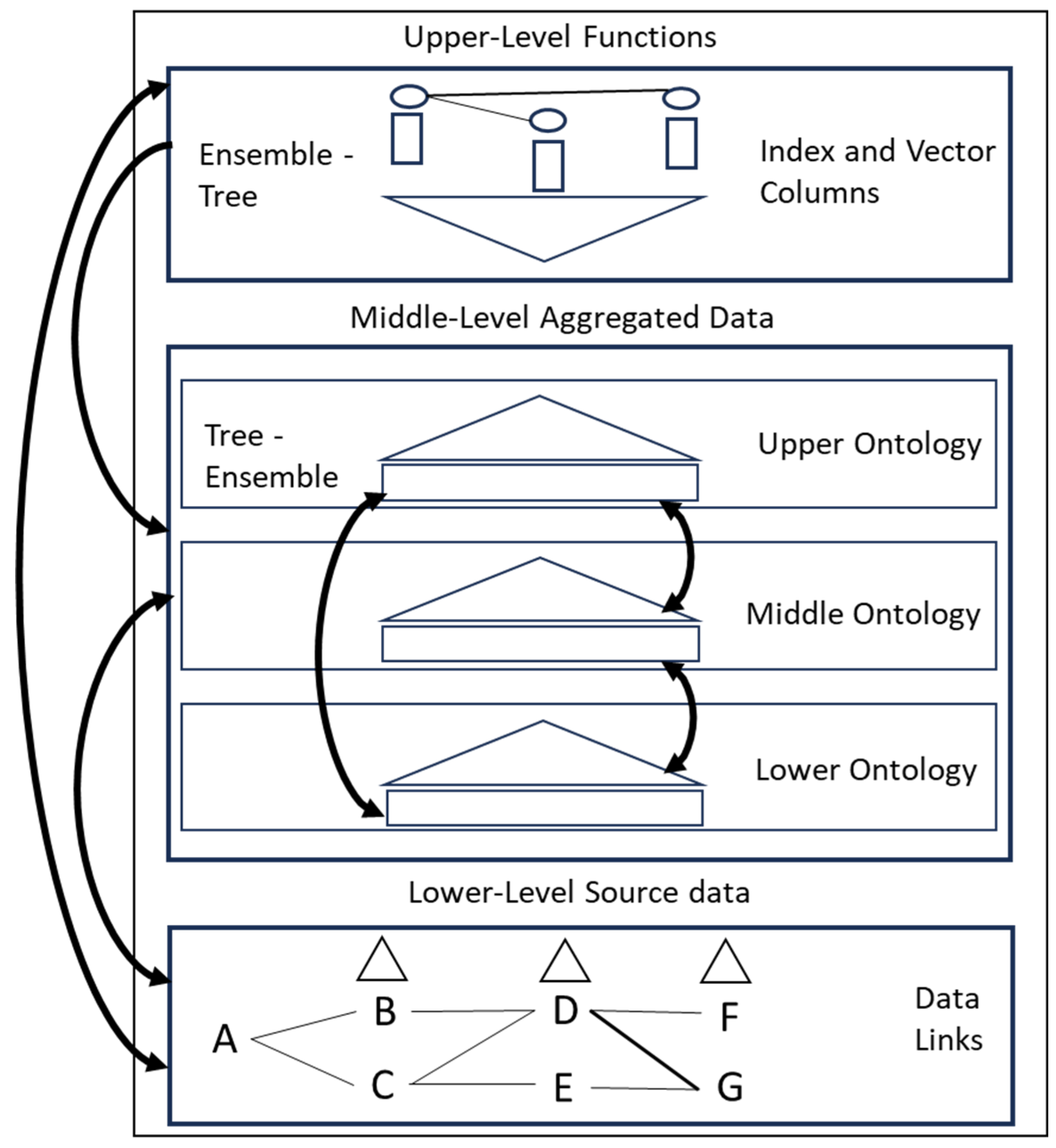

4. The Memory Model

4.1. Memory Model Levels

- (1) The lowest level is an n-gram structure consisting only of sets of links between every source concept that has been stored. The links describe every possible route through the source concept sequences, but they are unweighted.

- (2) The middle level is an ontology that aggregates the source data through 3 phases, converting it from set-based sequences into type-based clusters.

- (3) The upper level is a combination of the functional properties of the brain, with whatever input and resulting conversions they produce being stored in the same memory substrate.

- Experience to knowledge.

- Knowledge to knowledge.

- Knowledge to experience.
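The lowest memory level described above, an unweighted link structure over stored concept sequences, can be illustrated with a small sketch. It assumes that links join adjacent concepts only (n = 2), which the text does not state, and the names `build_links` and `has_route` are hypothetical. The stored sentences are borrowed from the Gestalt examples in Section 8.1.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def build_links(sequences: Iterable[List[str]]) -> Dict[str, Set[str]]:
    """Store an unweighted link from each concept to every concept that follows it.

    Assumption: only adjacent concepts are linked (a bigram reading of "n-gram").
    """
    links: Dict[str, Set[str]] = defaultdict(set)
    for seq in sequences:
        for source, target in zip(seq, seq[1:]):
            links[source].add(target)  # a set, so repeated links carry no weight
    return dict(links)


def has_route(links: Dict[str, Set[str]], sequence: List[str]) -> bool:
    """Return True if every adjacent pair in the sequence follows a stored link."""
    return all(b in links.get(a, set()) for a, b in zip(sequence, sequence[1:]))


stored = [
    "the cat sat on the mat and drank some milk".split(),
    "the dog barked at the moon and chased its tail".split(),
]
links = build_links(stored)

# A route that mixes the two stored sentences is still possible,
# because the links are unweighted and shared across sequences.
mixed = "the dog barked at the moon and drank some milk".split()
print(has_route(links, mixed))                     # True
print(has_route(links, "the cat barked".split()))  # False
```

Because the links carry no weights, the structure can only say whether a route is possible, not which route is more likely.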

4.2. Lower Memory Level

4.3. Middle Ontology Level

4.4. Unit of Work

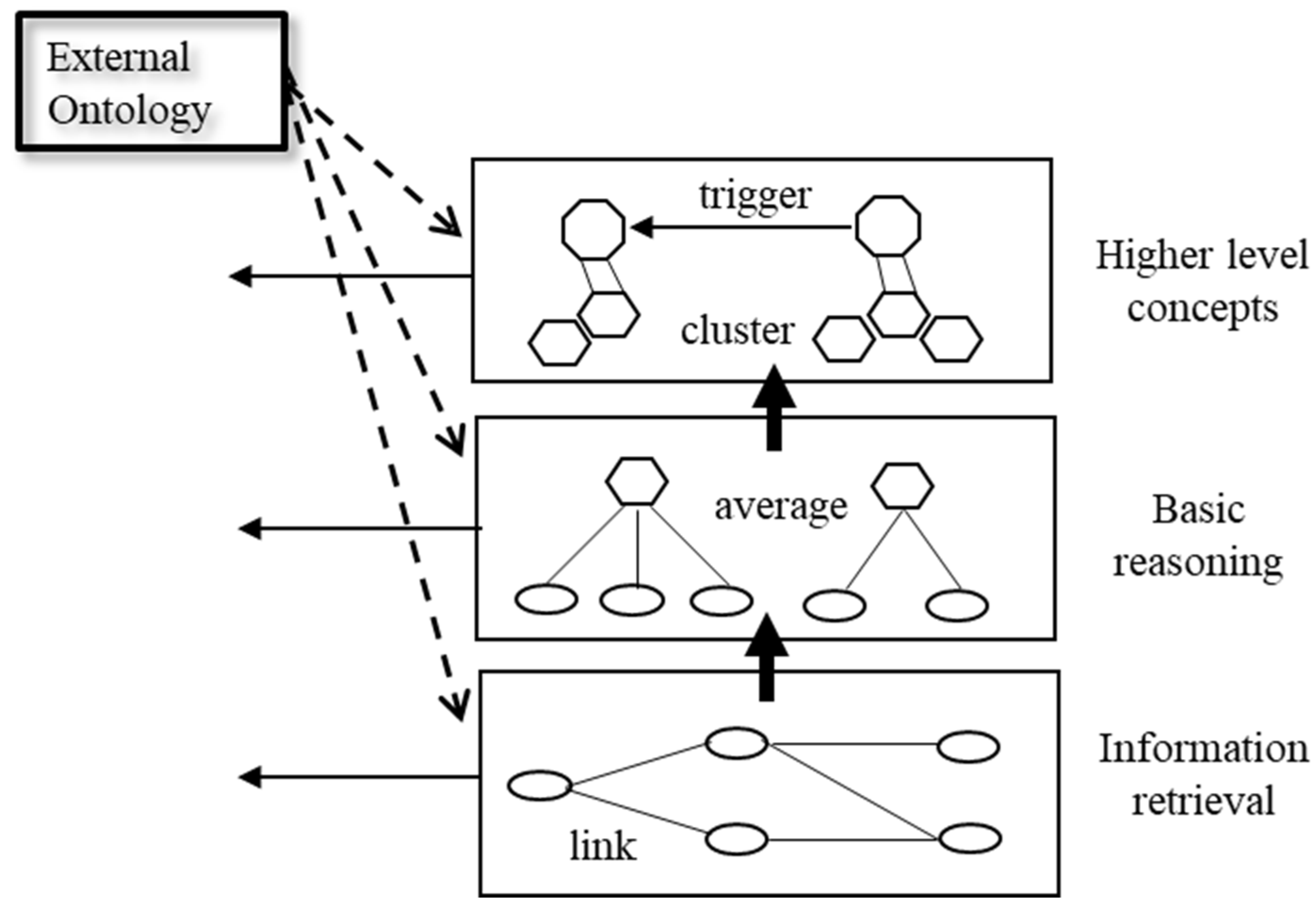

5. The Neural Level

5.1. Function Identity

5.2. Function Structure

5.3. Index Types

- Unipolar Type: this has a list of index terms that is generally a bit longer and is unordered. It can be matched with any sequence in the input set, but with only 1 sequence.

- Bipolar Type: this has a list of index terms and a related feature set. The index terms should match only 1 sequence, and some of the feature values should also match that sequence. This matching must be ordered, however: the order of the values in the feature set should be repeated in the sequence. The rest of the feature values can then match any other sequence, in any order.

- Pyramidal Type: this has a list of index terms and a related feature set. The index terms, however, are split over 2 specific sequences. Both the index terms and the related feature set should match those 2 sequences, and the matching should be ordered in both.
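One possible reading of the unipolar and bipolar matching rules is sketched below. This is an interpretation of the descriptions above, not the author's implementation: the function names are hypothetical, and "match" is assumed to mean exact token equality. The pyramidal type is omitted because the text does not say how the index terms are divided between the 2 sequences.

```python
from typing import List, Optional


def is_ordered_subsequence(terms: List[str], sequence: List[str]) -> bool:
    """True if the terms occur in the sequence in the same relative order."""
    it = iter(sequence)
    return all(term in it for term in terms)  # each lookup consumes the iterator


def match_unipolar(index_terms: List[str], sequences: List[List[str]]) -> Optional[int]:
    """Return the position of the first sequence holding all index terms, in any order."""
    for i, seq in enumerate(sequences):
        if set(index_terms) <= set(seq):
            return i
    return None


def match_bipolar(index_terms: List[str], features: List[str],
                  sequences: List[List[str]]) -> Optional[int]:
    """Index terms and some ordered features match one sequence; the rest match elsewhere."""
    for i, seq in enumerate(sequences):
        if not set(index_terms) <= set(seq):
            continue
        # Features found in the indexed sequence must repeat the feature order.
        in_seq = [f for f in features if f in seq]
        if not in_seq or not is_ordered_subsequence(in_seq, seq):
            continue
        # Remaining features may appear in any other sequence, in any order.
        others = {w for j, other in enumerate(sequences) if j != i for w in other}
        if all(f in others for f in features if f not in seq):
            return i
    return None


seqs = [
    ["place", "the", "eggs", "into", "a", "saucepan"],
    ["bring", "the", "water", "to", "boil"],
]
print(match_unipolar(["saucepan", "eggs"], seqs))
print(match_bipolar(["eggs"], ["place", "saucepan", "boil"], seqs))
print(match_bipolar(["eggs"], ["saucepan", "place", "boil"], seqs))
```

In the last call the features `saucepan` and `place` are in the wrong order for the indexed sequence, so under this reading the bipolar match fails.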

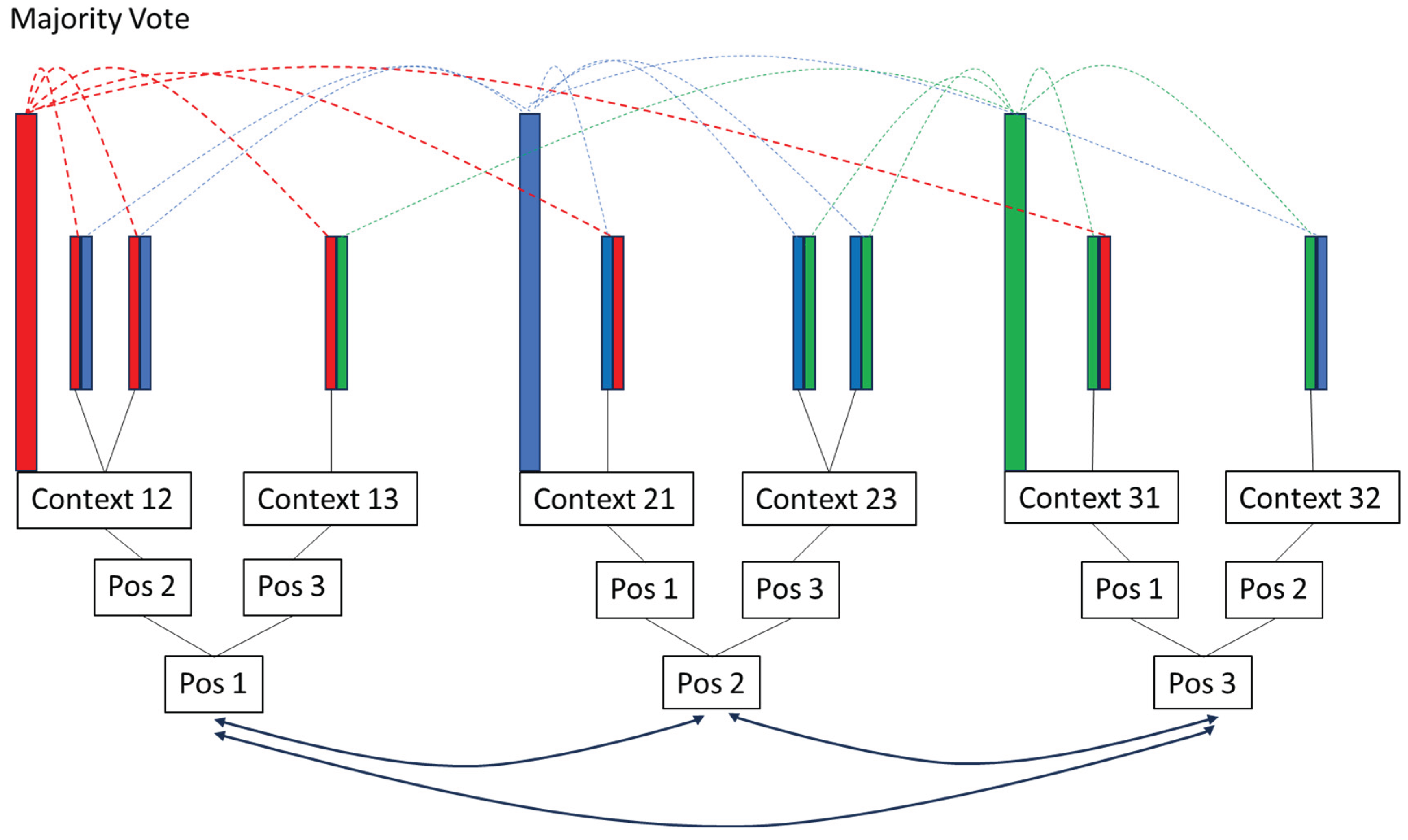

6. Ordinal Learning

7. Algorithm Testing

7.1. Ontology Tests

7.1.1. Statistical Counting

7.1.2. Classic Texts

7.2. Ordinal Tests

8. Some Biological Comparisons

8.1. Gestalt Psychology

- The cat sat on the mat and drank some milk.

- The dog barked at the moon and chased its tail.

- The dog barked at the moon and chased its tail, or

- The dog barked at the moon and drank some milk.

8.1.1. Numbers in the Gestalt Process

8.2. Cortical Columns

8.3. Animal Brain Function

8.3.1. Theory of Small Changes

9. Conclusions and Future Work

Appendix A. Upper Ontology Trees for Book Texts

| Clusters |
| --- |
| dorothy |
| scarecrow |
| lion, woodman |
| great, oz |
| city, emerald |
| asked, came, see |
| little, out, tin |
| again, answered, away, before, down, made, now, shall, toto, up |
| back, come, girl, go, green, head, heart, man, one, over, upon, very, witch |

| Clusters |
| --- |
| romeo, shall |
| thou |
| love, o, thy |
| death, eye, hath |
| come, thee |
| go, good, here, ill, night, now |
| day, give, lady, make, one, out, up, well |
| man, more, tybalt |

| Clusters |
| --- |
| back, before, came |
| down, know |
| more, room, think, well |
| day, eye, face, found, matter, tell |
| upon |
| holmes, very |
| little, man, now |
| one |
| away, case, good, heard, house, much, nothing, quite, street, such, through, two, ye |
| go, here |
| come, hand, over, shall, time |
| asked, never |
| door, saw |
| mr, see |
| out, up |
| made, way |

| Clusters |
| --- |
| answer, computer, man, question, think |
| machine |
| one |
| such |

Appendix B. Documents and Test Results for the Neural-Level Sorting

| Train File – Hard-Boiled Egg |
| --- |
| Place eggs at the bottom of a pot and cover them with cold water. Bring the water to a boil, then remove the pot from the heat. Let the eggs sit in the hot water until hard-boiled. Remove the eggs from the pot and crack them against the counter and peel them with your fingers. |

| Train File – Panna Cotta |
| --- |
| For the panna cotta, soak the gelatine leaves in a little cold water until soft. Place the milk, cream, vanilla pod and seeds and sugar into a pan and bring to a simmer. Remove the vanilla pod and discard. Squeeze the water out of the gelatine leaves, then add to the pan and take off the heat. Stir until the gelatine has dissolved. Divide the mixture among four ramekins and leave to cool. Place into the fridge for at least an hour, until set. For the sauce, place the sugar, water and cherry liqueur into a pan and bring to the boil. Reduce the heat and simmer until the sugar has dissolved. Take the pan off the heat and add half the raspberries. Using a hand blender, blend the sauce until smooth. Pass the sauce through a sieve into a bowl and stir in the remaining fruit. To serve, turn each panna cotta out onto a serving plate. Spoon over the sauce and garnish with a sprig of mint. Dust with icing sugar. |

| Test File – Hard-Boiled Egg and Panna Cotta |
| --- |
| Remove the vanilla pod and discard. For the panna cotta, soak the gelatine leaves in a little cold water until soft. As soon as they are cooked drain off the hot water, then leave them in cold water until they are cool enough to handle. Squeeze the water out of the gelatine leaves, then add to the pan and take off the heat. Spoon over the sauce and garnish with a sprig of mint. Stir until the gelatine has dissolved. Place the eggs into a saucepan and add enough cold water to cover them by about 1cm. Pass the sauce through a sieve into a bowl and stir in the remaining fruit. Divide the mixture among four ramekins and leave to cool. Place into the fridge for at least an hour, until set. To peel them crack the shells all over on a hard surface, then peel the shell off starting at the wide end. For the sauce, place the sugar, water and cherry liqueur into a pan and bring to the boil. Place the milk, cream, vanilla pod and seeds and sugar into a pan and bring to a simmer. Reduce the heat and simmer until the sugar has dissolved. Take the pan off the heat and add half the raspberries. Using a hand blender, blend the sauce until smooth. Bring the water up to boil then turn to a simmer. To serve, turn each panna cotta out onto a serving plate. Dust with icing sugar. |

| Selected Sequences from the Hard-Boiled Egg Function |
| --- |
| [place, the, eggs, into, a, saucepan, and, add, enough, cold, water, to, cover, them, by, about] [bring, the, water, up, to, boil, then, turn, to, a, simmer] [as, soon, as, they, are, cooked, drain, off, the, hot, water, then, leave, them, in, cold, water, until, they, are, cool, enough, to, handle] [to, peel, them, crack, the, shells, all, over, on, a, hard, surface, then, peel, the, shell, off, starting, at, the, wide, end] |

| Selected Sequences from the Panna Cotta Function |
| --- |
| [for, the, panna, cotta, soak, the, gelatine, leaves, in, a, little, cold, water, until, soft] [place, the, milk, cream, vanilla, pod, and, seeds, and, sugar, into, a, pan, and, bring, to, the, boil] [remove, the, vanilla, pod, and, discard] [squeeze, the, water, out, of, the, gelatine, leaves, then, add, to, the, pan, and, take, off, the, heat] [stir, until, the, gelatine, has, dissolved] [divide, the, mixture, among, four, ramekins, and, leave, to, cool] [place, into, the, fridge, for, at, least, an, hour, until, set] [for, the, sauce, place, the, sugar, water, and, cherry, liqueur, into, a, pan, and, bring, to, the, boil] [reduce, the, heat, and, simmer, until, the, sugar, has, dissolved] [take, the, pan, off, the, heat, and, add, half, the, raspberries] [using, a, hand, blender, blend, the, sauce, until, smooth] [pass, the, sauce, through, a, sieve, into, a, bowl, and, stir, in, the, remaining, fruit] [to, serve, turn, each, panna, cotta, out, onto, a, serving, plate] [spoon, over, the, sauce, and, garnish, with, a, sprig, of, mint] [dust, with, icing, sugar] |

| 1 | In statistics, a pivotal quantity is a function of observations and unobservable parameters. It can be used in normalisation, to allow data from different data sets to be compared. |

| 2 |

| E-Sense Typing: Type Clusters |
| --- |
| dorothy |
| asked, came, see |
| city, emerald |
| great, oz |

| Word2Vec: Type Word | Associations |
| --- | --- |
| dorothy | dorothy, back, over, scarecrow, girl |
| asked | asked, cowardly, sorrowfully, promised, courage |
| came | came, next, morning, flew, carried |
| see | see, lived, away, many, people |
| city | city, emerald, brick, streets, led |
| emerald | emerald, brick, city, streets, gates |
| great | great, room, throne, head, terrible |
| oz | oz, throne, room, heart, tell |

| E-Sense Typing: Type Clusters |
| --- |
| love, o, thy |
| romeo, shall |
| death, eye, hath |

| Word2Vec: Type Word | Associations |
| --- | --- |
| thou | thou, art, wilt, thy, hast |
| love | love, art, wit, thou, fortunes |
| thy | thy, thou, tybalt, dead, husband |
| romeo | romeo, slain, thou, hes, art |
| shall | shall, thou, till, art, such |
| death | death, romeo, slain, tybalt, thy |
| eye | eye, hoar, sometime, dreams, ladies |
| hath | hath, husband, slain, tybalt, dead |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).