Submitted: 31 July 2023

Posted: 02 August 2023

Abstract

Keywords:

1. Introduction

- Pose restrictions: sheep are often photographed in fixed postures intended to increase the consistency of facial features.

- Obstruction removal: sheep are sometimes cleaned before data collection to remove dirt and other materials that obscure the face.

- Extensive pre-processing: some techniques require the manual selection or cropping of images to identify facial features.

- Limited sample size: the steps listed above are tedious and time-consuming, which typically limits datasets to a few hundred samples.

2. Materials and Methods

2.1. Dataset

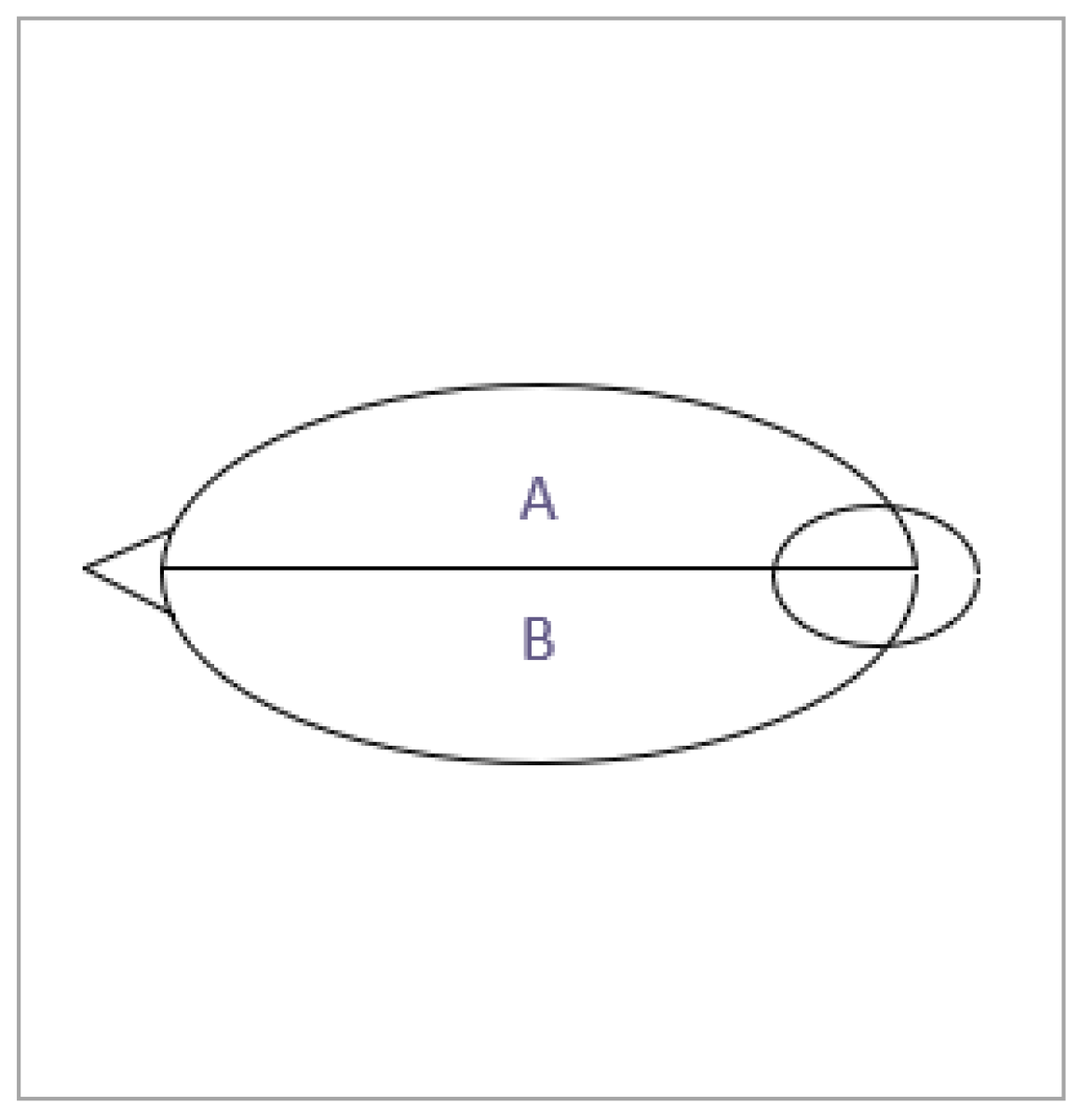

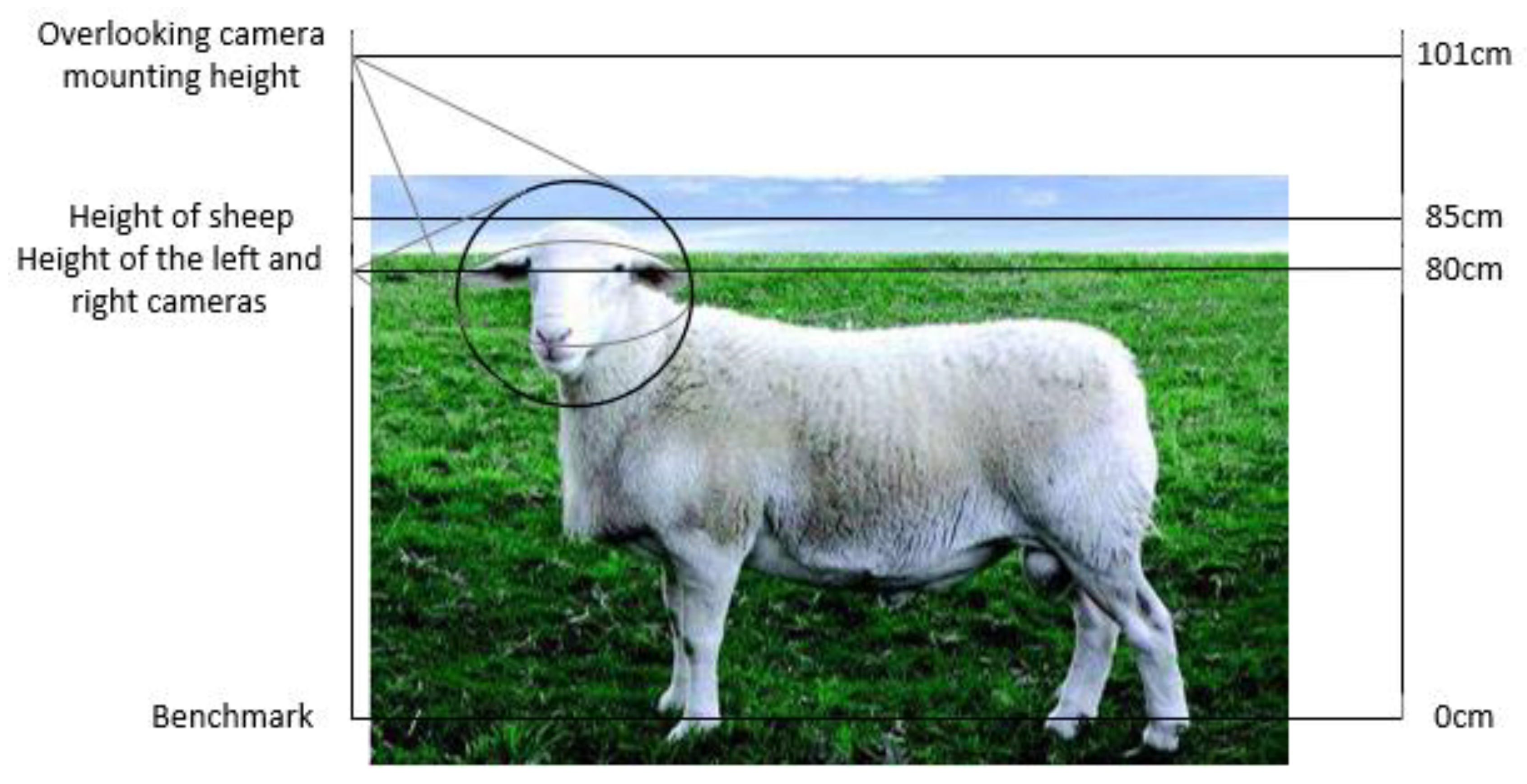

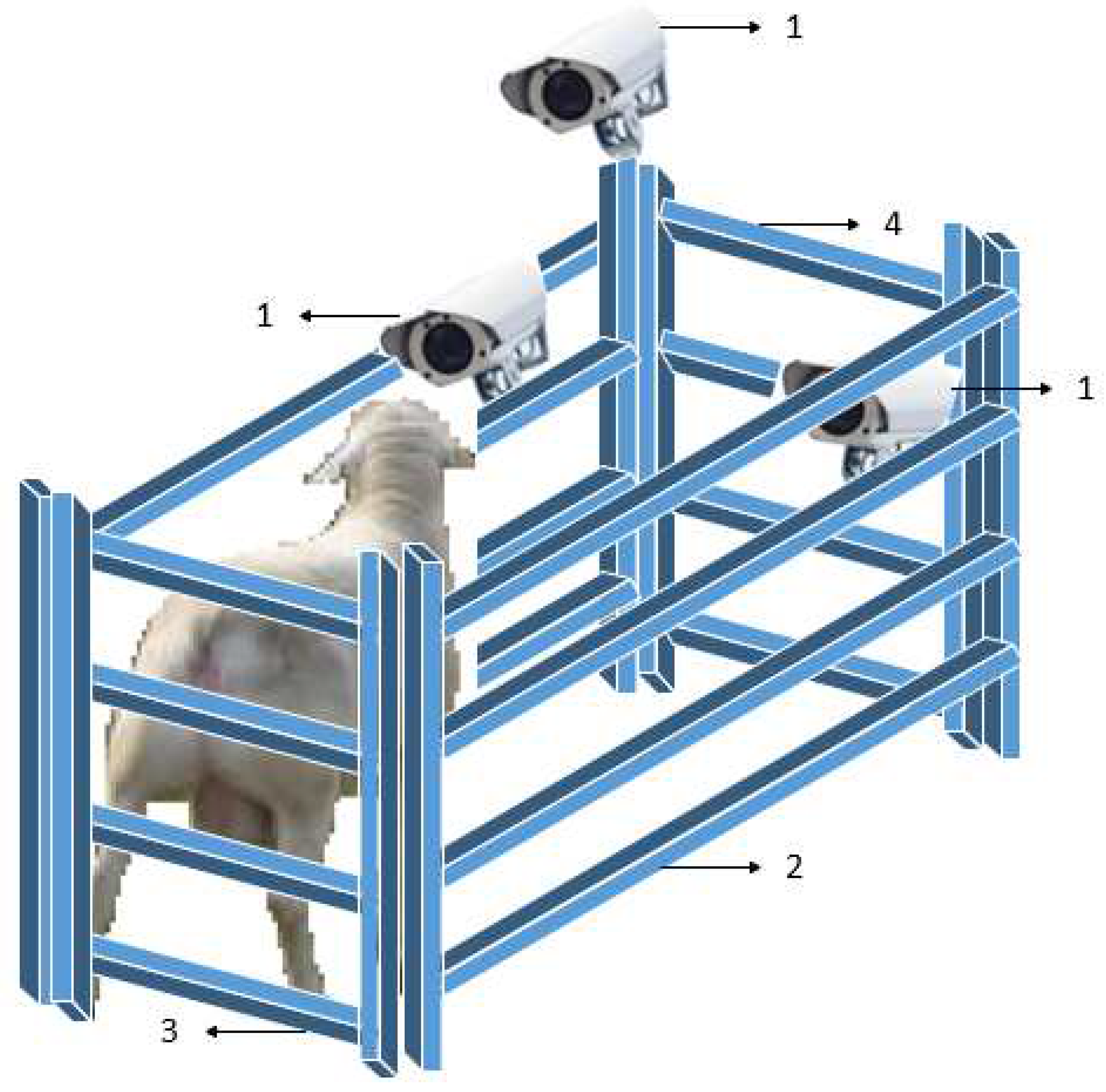

2.1.1. Dataset Collection

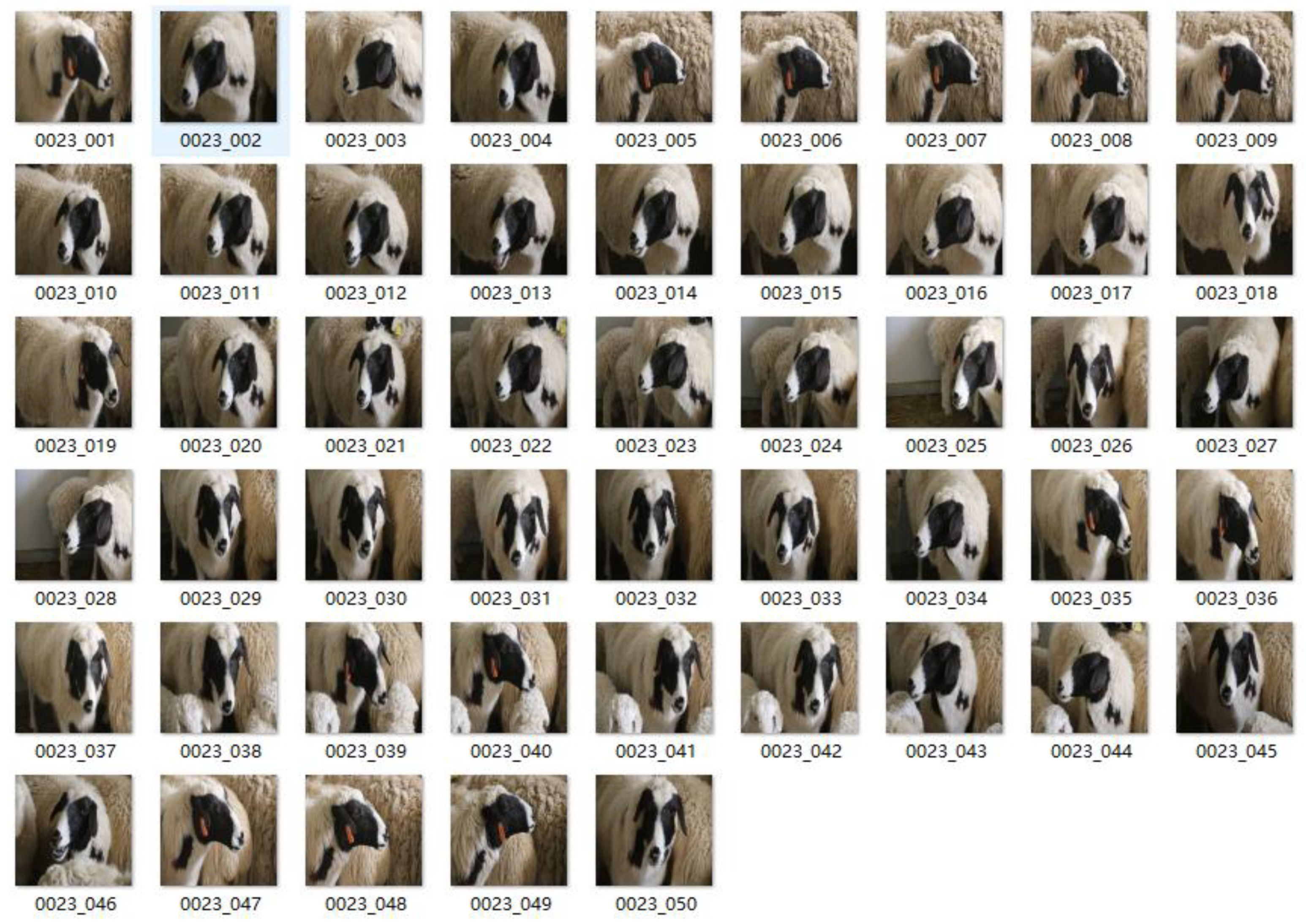

2.1.2. Dataset Construction

2.1.3. Dataset Annotation

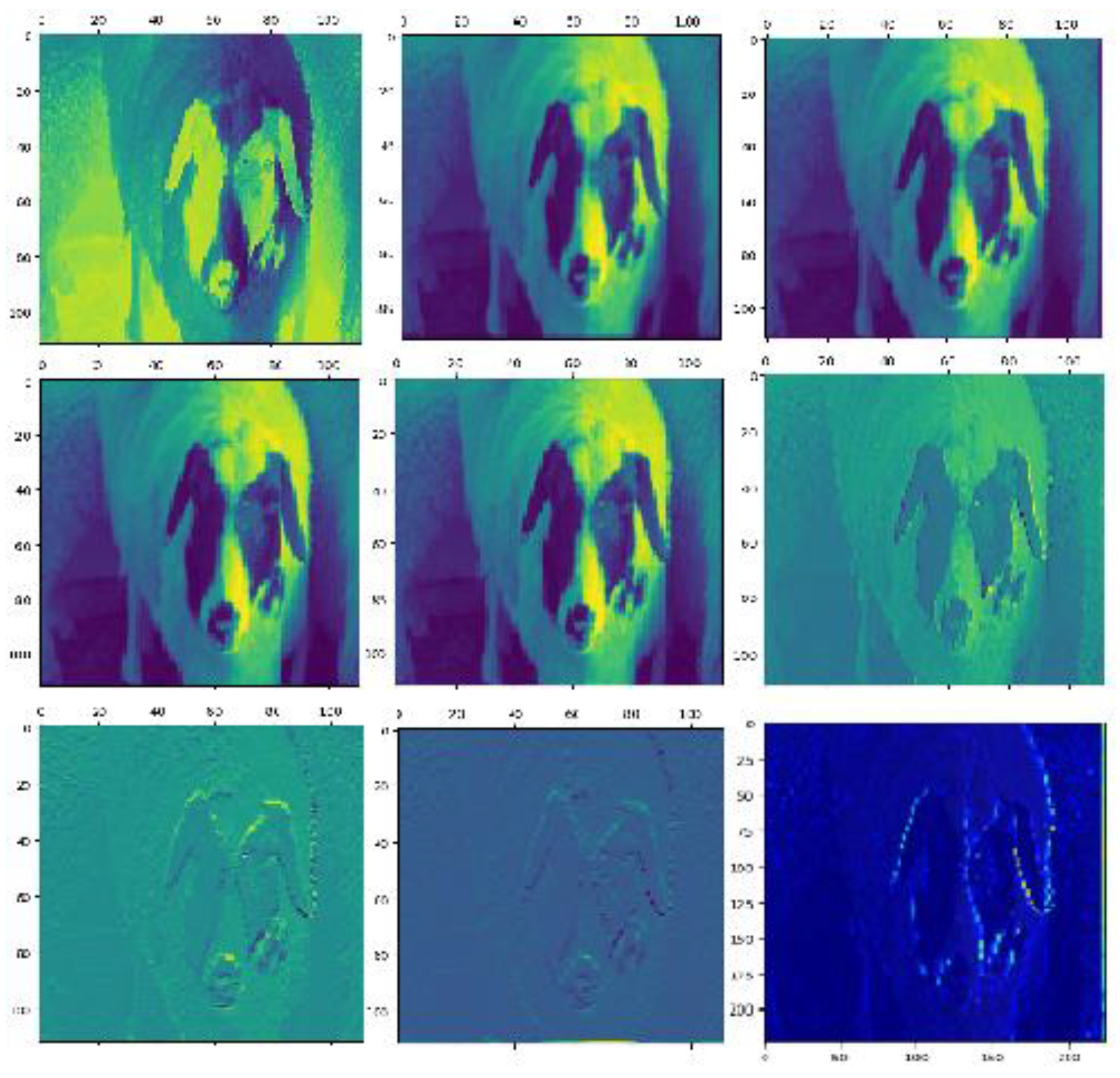

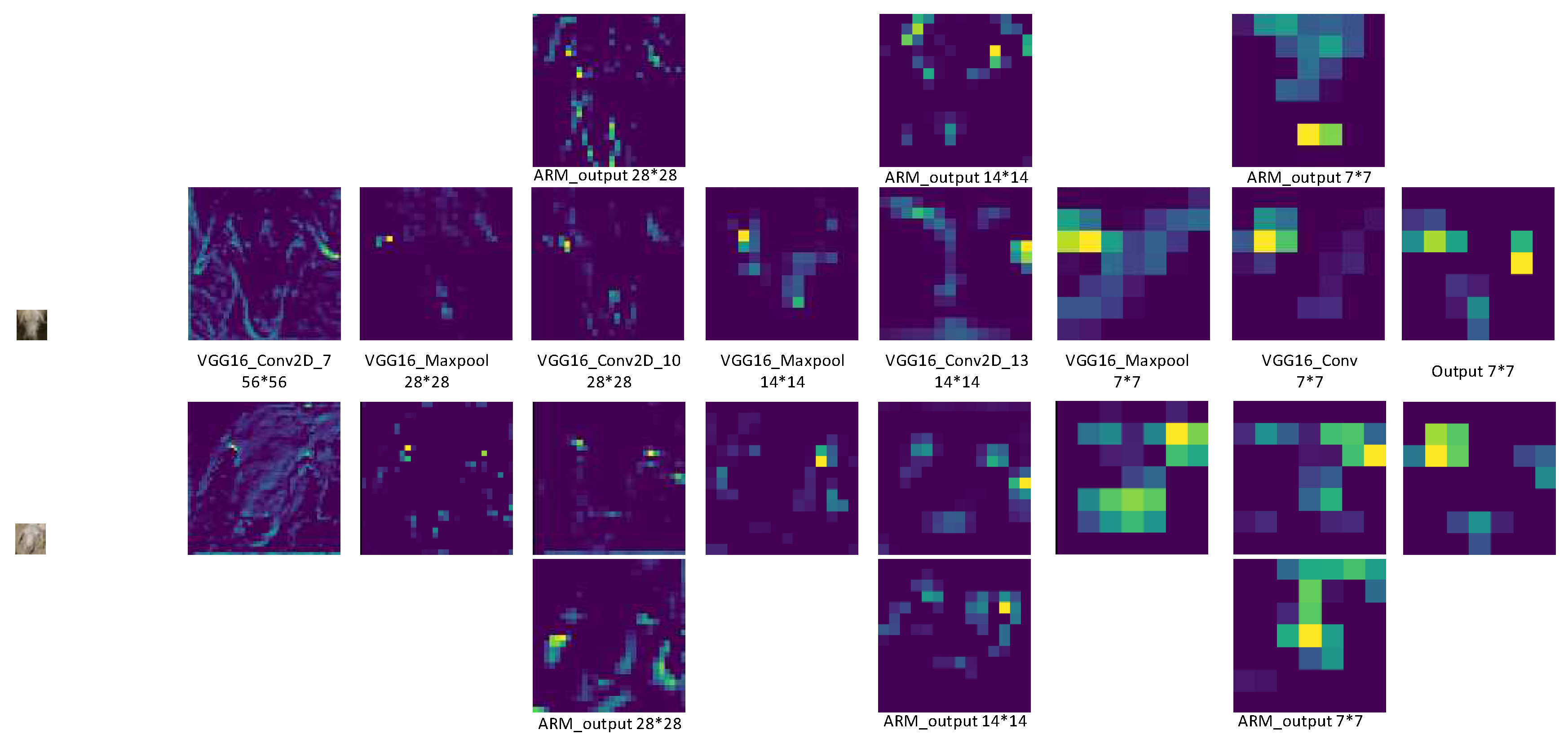

2.2. Backbone Networks

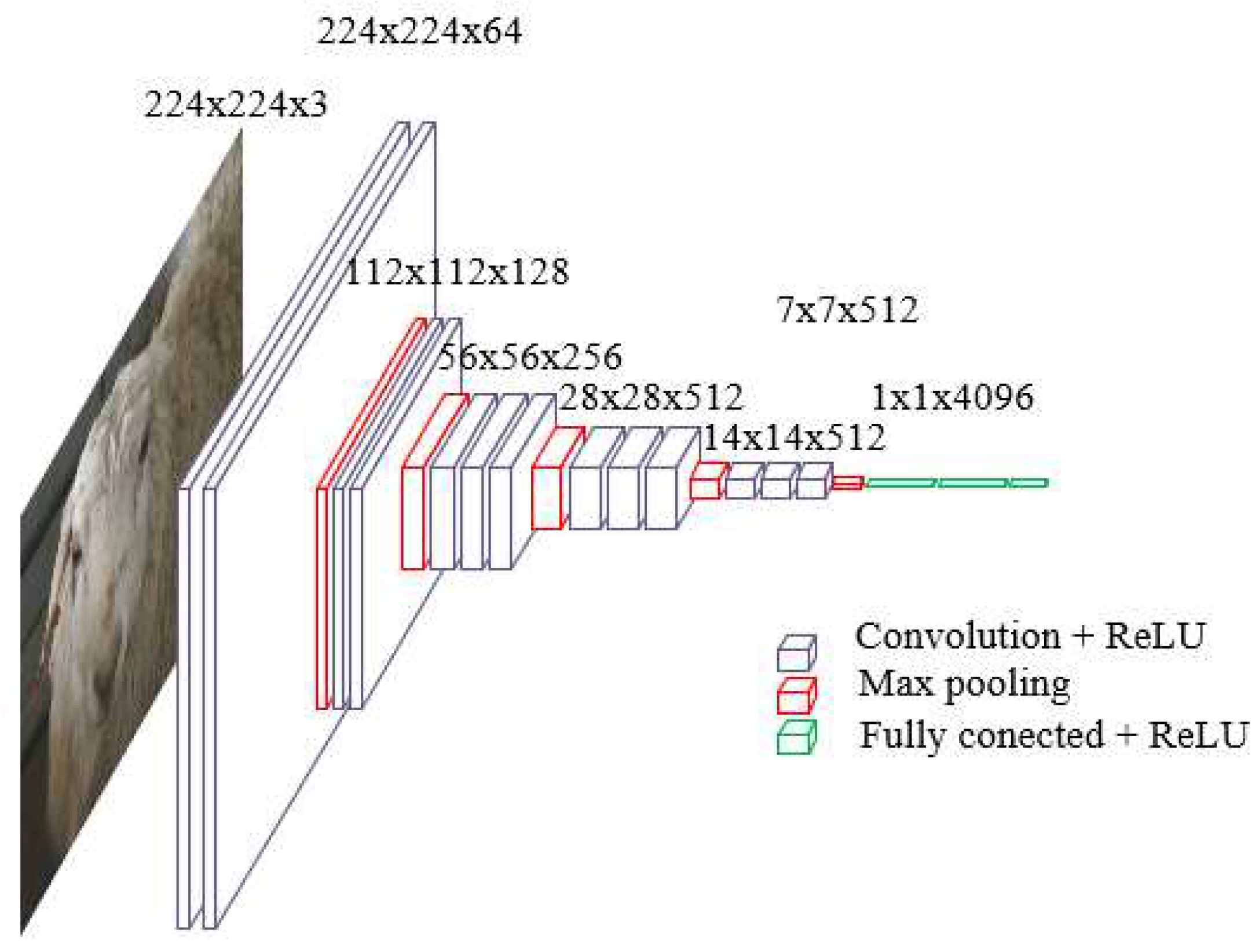

2.2.1. VGG16

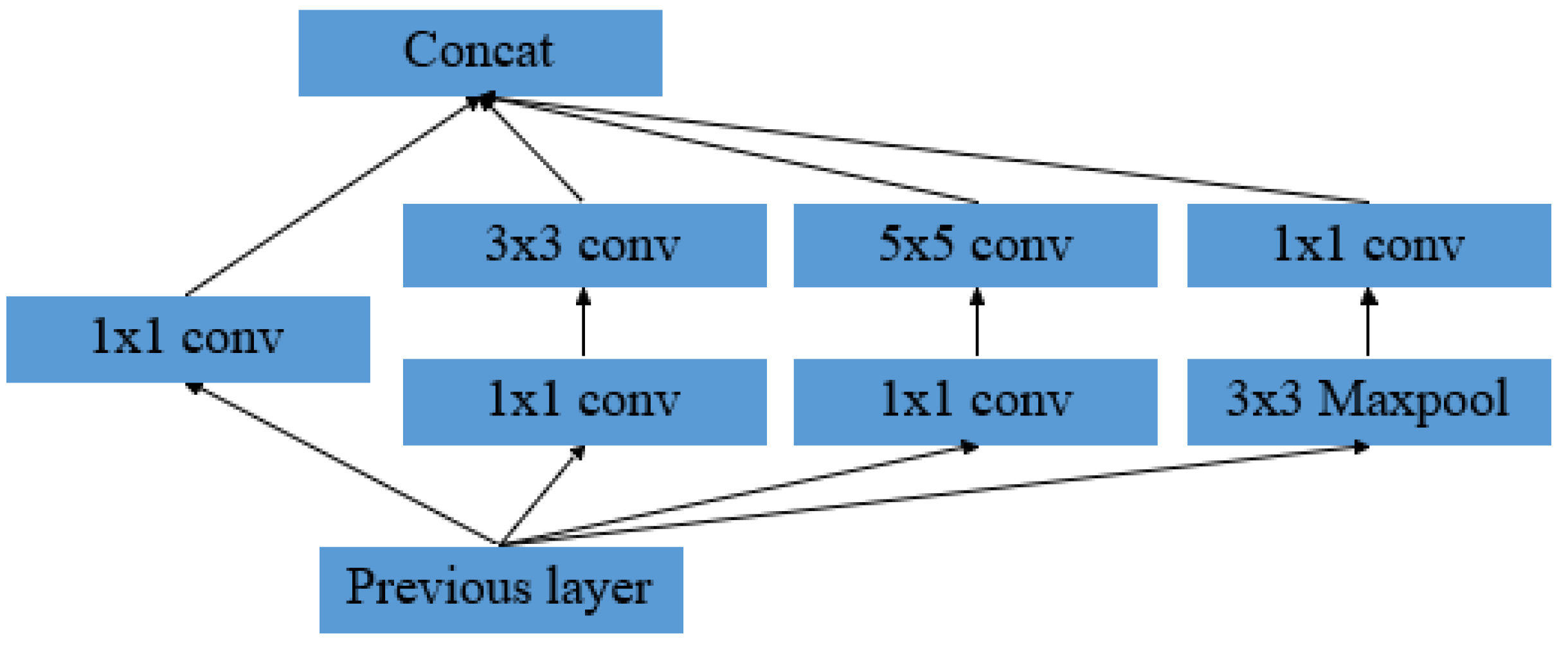

2.2.2. GoogLeNet

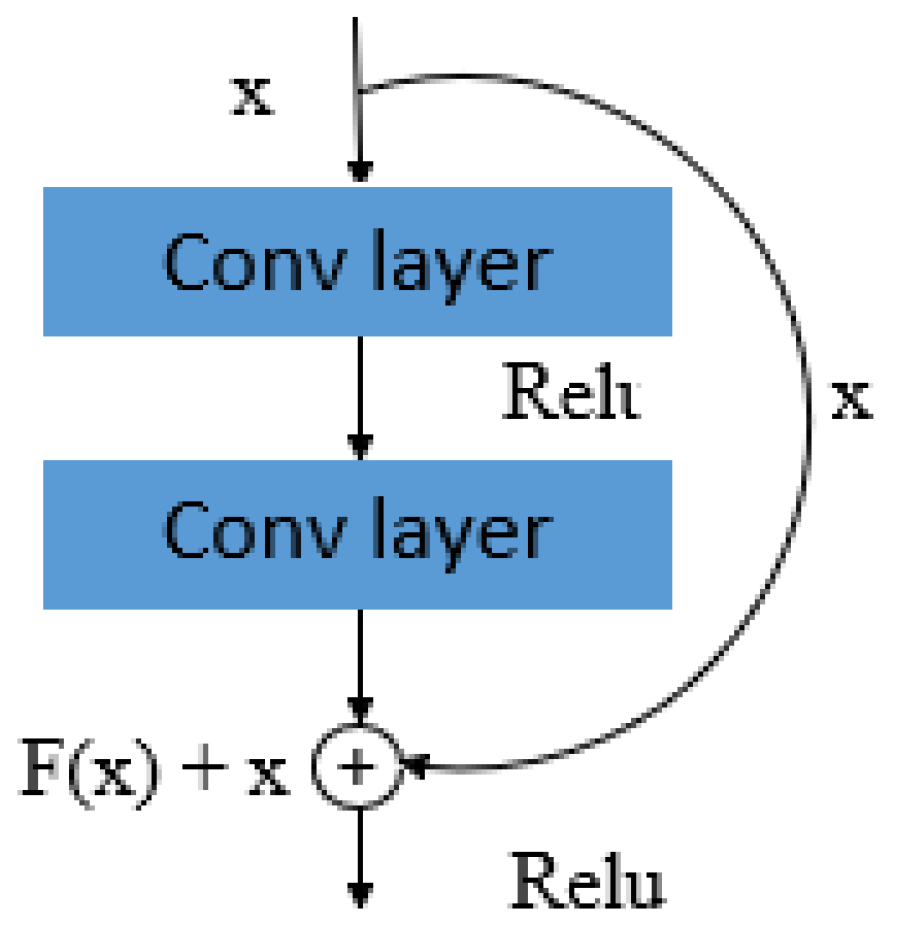

2.2.3. ResNet50

2.3. Activation Functions

2.4. Loss Functions

2.5. Evaluation Metrics

3. Evaluation and Analysis

3.1. Test Configuration

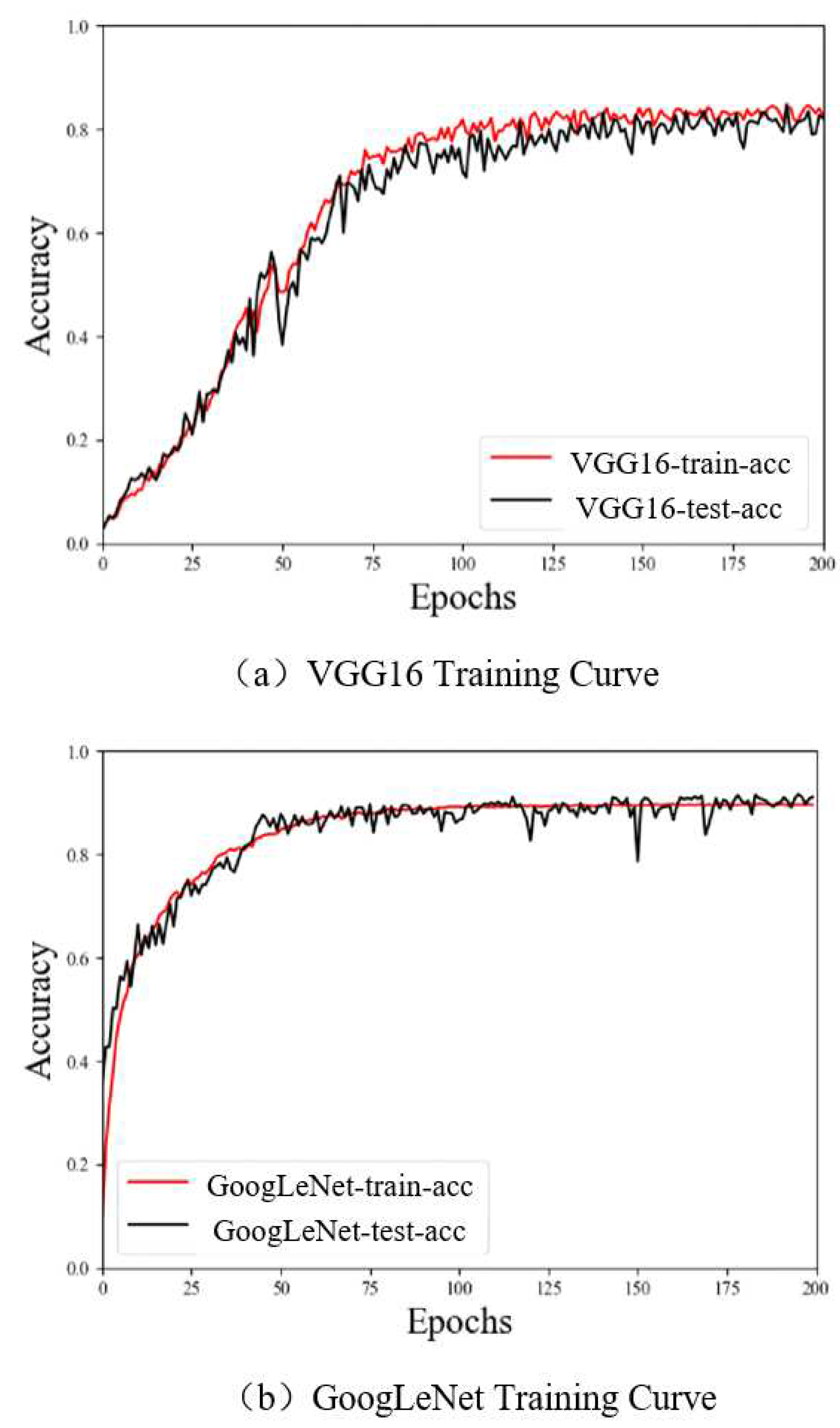

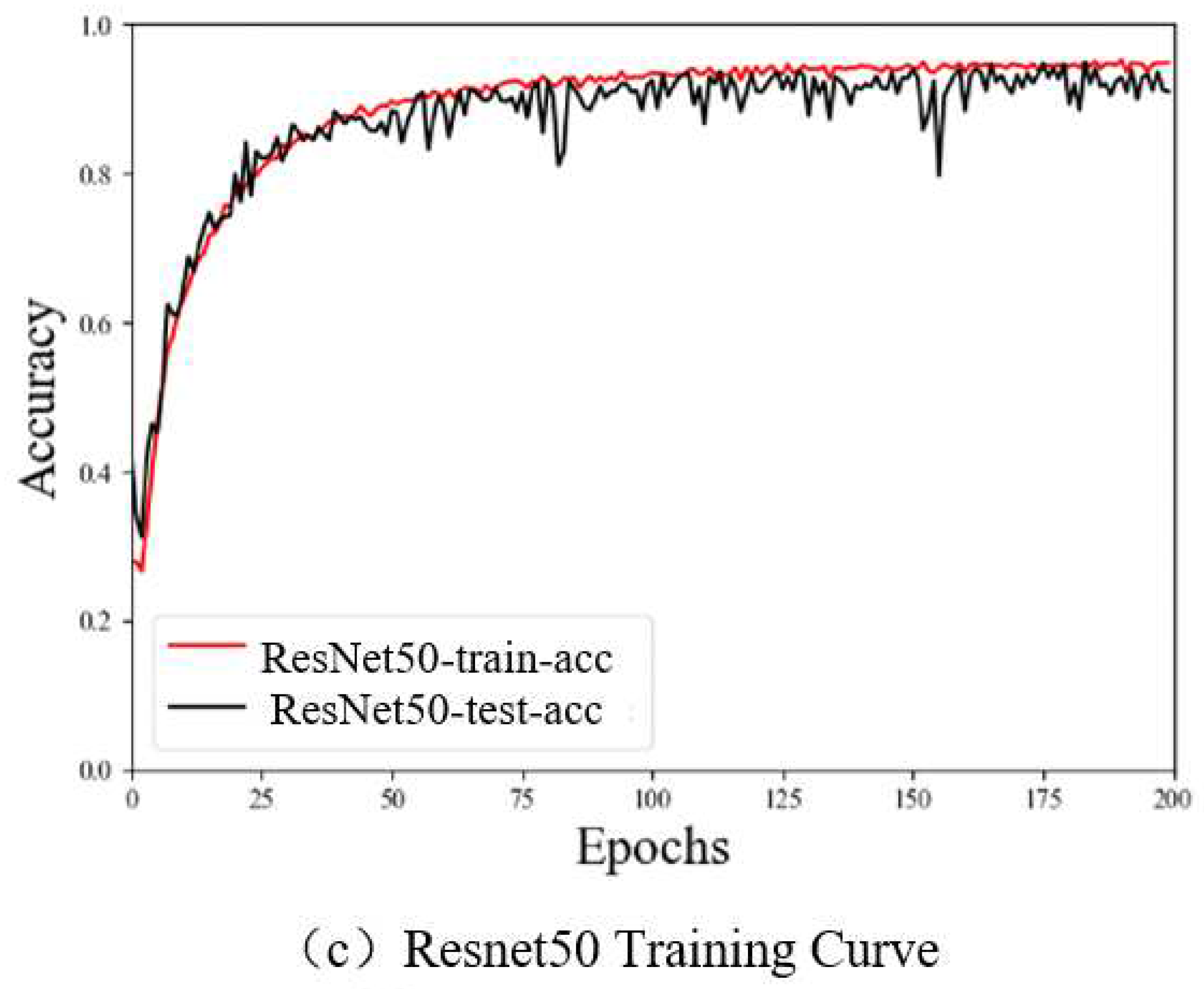

3.2. Performance Benchmarks

4. Discussion

5. Conclusion

Author Contributions

Foundation Items

Abbreviations

References

- Xuan Chuanzhong, Wu Pei, Ma Yanhua, Zhang Lina, Han Ding, Liu Yanqiu (2015). Vocal signal recognition of ewes based on power spectrum and formant analysis method. Transactions of the Chinese Society of Agricultural Engineering. 31(24):219-224. (in Chinese).

- Xuan Chuanzhong, Ma Yanhua, Wu Pei, Zhang Lina, Hao Min, Zhang Xiyu (2016). Behavior classification and recognition for facility breeding sheep based on acoustic signal weighted feature. Transactions of the Chinese Society of Agricultural Engineering. 32(19):195-202. (in Chinese).

- Sharma S, Shah D J (2013). A Brief Overview on Different Animal Detection Methods. Signal and Image Processing: An International Journal. 4(3):77-81.

- Zhu W, Drewes J, Gegenfurtner K R (2013). Animal Detection in Realistic Images: Effects of Color and Image Database. PLoS ONE. 8(10):e75816.

- Baratchi M, Meratnia N, Havinga P J M, et al (2013). Sensing solutions for collecting spatio-temporal data for wildlife monitoring applications: a review. Sensors. 13(5):6054-6088.

- Chen Zhanqi, Zhang Yuan, Wang Wenzhi, Li Dan, He Jie, Song Rende (2022). Multiscale Feature Fusion Yak Face Recognition Algorithm Based on Transfer Learning. Smart Agriculture. 4(2):77-85.

- Qin Xing, Song Gefang (2019). Pig Face Recognition Based on Bilinear Convolution Neural Network. Journal of Hangzhou Dianzi University. 39(2):12-17.

- Chen Zhengtao, Huang Can, Yang Bo, Zhao Li, Liao Yong (2021). Yak Face Recognition Algorithm of Parallel Convolutional Neural Network Based on Transfer Learning. Journal of Computer Applications. 41(5):1332-1336.

- Corkery G P, Gonzales-Barron U A, Butler F, et al (2007). A Preliminary Investigation on Face Recognition as a Biometric Identifier of Sheep. Transactions of the ASABE. 50(1):313-320.

- Wei Bin (2020). Face detection and recognition of goats based on deep learning. Northwest A&F University. (in Chinese).

- Heng Yang, Renqiao Zhang, Peter Robinson (2015). Human and Sheep Facial Landmarks Localisation by Triplet Interpolated Features. CoRR.

- Aya Salama, Aboul Ella Hassanien, Aly Fahmy (2019). Sheep identification using a hybrid deep learning and Bayesian optimization approach. IEEE Access.

- Alam Noor (2019). Sheep facial expression pain rating scale: using Convolutional Neural Networks. Harbin Institute of Technology.

- Hutson M (2017). Artificial intelligence learns to spot pain in sheep. Science.

- Hongcheng Xue, Junping Qin, Chao Quan, et al (2021). Open Set Sheep Face Recognition Based on Euclidean Space Metric. Mathematical Problems in Engineering.

- Zhang Hongming, Zhou Lixiang, Li Yongheng, et al (2022). Research on Sheep Face Recognition Method Based on Improved Mobile-FaceNet. Transactions of the Chinese Society for Agricultural Machinery.

- Shang Cheng, Wang Meili, Ning Jifeng, et al (2022). Identification of dairy goats with high similarity based on joint loss optimization. Journal of Image and Graphics.

- Xue Hong-cheng (2021). Research on sheep face recognition based on key point detection and Euclidean space measurement. Inner Mongolia University of Technology.

- Yang Jialin (2022). Research and implementation of lightweight sheep face recognition method based on attention mechanism. Northwest A&F University.

- Wang Z (2004). Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Transactions on Image Processing.

- Chu J L, Krzyzak A (2014). Analysis of feature maps selection in supervised learning using Convolutional Neural Networks. In Proceedings of the 27th Canadian Conference on Artificial Intelligence, Montreal, Canada. 59-70.

- Simonyan K, Zisserman A (2014). Very Deep Convolutional Networks for Large-Scale Image Recognition. Computer Vision and Pattern Recognition.

- Szegedy C, Liu W, Jia Y, et al (2015). Going deeper with convolutions. In 2015 Conference on Computer Vision and Pattern Recognition, Boston, USA: IEEE. 1-9.

- Barbedo J G A (2018). Factors influencing the use of deep learning for plant disease recognition. Biosystems Engineering. 172:84-91.

- Dong S, Wang P, Abbas K (2021). A survey on deep learning and its applications. Computer Science Review. 40:100379.

- Lin W C, Tsai C F, Zhong J R (2022). Deep learning for missing value imputation of continuous data and the effect of data discretization. Knowledge-Based Systems. 239:108079.

- LeCun Y, Bottou L, Bengio Y, et al (1998). Gradient-based learning applied to document recognition. Proceedings of the IEEE. 86(11):2278-2324.

- LeCun Y, Boser B, Denker J S, et al (1989). Backpropagation applied to handwritten zip code recognition. Neural Computation. 1(4):541-551.

- Rumelhart D E, Hinton G E, Williams R J (1986). Learning representations by back-propagating errors. Nature. 323(6088):533-536.

- He K M, Zhang X Y, Ren S Q, et al (2016). Deep residual learning for image recognition. IEEE Conference on Computer Vision and Pattern Recognition. 770-778.

- Liu W Y, Wen Y D, Yu Z D, et al (2017). SphereFace: deep hypersphere embedding for face recognition. 2017 IEEE Conference on Computer Vision and Pattern Recognition. 6738-6746.

- Wang H, Wang Y T, Zhou Z, et al (2018). CosFace: large margin cosine loss for deep face recognition. Conference on Computer Vision and Pattern Recognition. 5265-5274.

- Hasnat A, Bohne J, Milgram J, et al (2017). DeepVisage: making face recognition simple yet with powerful generalization skills. 2017 IEEE International Conference on Computer Vision Workshops. 1682-1691.

- Deng J K, Guo J, Xue N N, et al (2019). ArcFace: additive angular margin loss for deep face recognition. 2019 IEEE Conference on Computer Vision and Pattern Recognition. 4690-4699.

- Balduzzi D, Frean M, Leary L, et al (2017). The shattered gradients problem: If resnets are the answer, then what is the question? In 2017 34th International Conference on Machine Learning, Sydney, Australia: PMLR. 342-350.

| Model parameter | VGG16 | GoogLeNet | ResNet50 |
|---|---|---|---|
| Input shape | 224 × 224 × 3 | 224 × 224 × 3 | 224 × 224 × 3 |
| Total parameters | 138 M | 4.2 M | 5.3 M |
| Base learning rate | 0.001 | 0.001 | 0.001 |
| Binary Softmax | 107 | 107 | 107 |
| Epochs | 200 | 200 | 200 |
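The settings in the table above are identical for all three backbones, so they can be held in one shared configuration object. The following is a minimal sketch only: the class name `TrainConfig` and its field names are hypothetical, and reading the "Binary Softmax" row as the width of the softmax output (107) is an assumption, not something the source states.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container for the shared settings listed in the table."""
    input_shape: tuple = (224, 224, 3)   # height, width, channels
    base_learning_rate: float = 0.001
    softmax_outputs: int = 107           # assumption: the "Binary Softmax" row
    epochs: int = 200


# The three backbones compared in the paper share the same settings.
BACKBONES = ("VGG16", "GoogLeNet", "ResNet50")
configs = {name: TrainConfig() for name in BACKBONES}

for name, cfg in configs.items():
    print(name, cfg)
```

Keeping the shared values in one frozen object makes it harder for the backbones to drift apart accidentally during a comparison.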

| Model | Precision (%) | Recall (%) | F1-score (%) | Parameters | Cost (ms) |
|---|---|---|---|---|---|
| VGG16 | 82.26 | 85.38 | 83.79 | 8.3×10⁶ | 547 |
| GoogLeNet | 88.03 | 90.23 | 89.11 | 15.2×10⁶ | 621 |
| ResNet50 | 93.67 | 93.22 | 93.44 | 9.9×10⁶ | 456 |
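As a consistency check on the table above, the F1-score column can be recomputed from the precision and recall columns. This sketch assumes the standard definition of F1 as the harmonic mean of precision and recall; the helper name `f1_score` and the dictionary layout are illustrative, not taken from the paper.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) copied from the table above.
reported = {
    "VGG16": (82.26, 85.38, 83.79),
    "GoogLeNet": (88.03, 90.23, 89.11),
    "ResNet50": (93.67, 93.22, 93.44),
}

# Recompute F1 for each model from its precision and recall.
recomputed = {name: f1_score(p, r) for name, (p, r, _) in reported.items()}

for name, (_, _, f1) in reported.items():
    print(f"{name}: reported {f1:.2f}, recomputed {recomputed[name]:.2f}")
```

The recomputed values agree with the reported column to within about 0.01 percentage points. Differences of that size are what one would expect if the precision and recall figures were rounded before publication.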

| Study | Number of Samples | Classifier Model | Recognition Rate |
|---|---|---|---|
| Corkery et al. [9] | 450 | Cosine Distance | 96% |
| Wei et al. [10] | 3,121 | VGGFace | 91% |
| Yang et al. [11] | 600 | Cascaded Regression | 90% |
| Shang et al. [17] | 1,300 | ResNet18 | 93% |
| Xue et al. [18] | 6,559 | SheepFaceNet | 89% |
| This study | 5,350 | ResNet50 | 93% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).