1. Introduction

The rapid advancement of artificial intelligence (AI) has led to applications in many domains, including recommendation systems (RSs) [1,2,3]. RSs play a crucial role in enhancing user experience by providing personalized suggestions based on individual preferences and needs [4,5]. In museums and cultural spaces, where visitors seek meaningful and engaging experiences, advanced RSs can greatly contribute to visitor enjoyment and overall satisfaction [6,7].

This work focuses on introducing large language models (LLMs), and more specifically the Generative Pre-trained Transformer 4 (GPT-4) [8], a knowledge-based LLM, as an RS for museums. GPT-4, an evolution of previous models, possesses exceptional natural language processing capabilities and has the potential to offer valuable recommendations to museum visitors. However, as a language model, GPT-4 lacks inherent context awareness, which is essential for providing accurate and relevant recommendations in museum settings [9].

To address this limitation, the current study proposes fine-tuning GPT-4 by incorporating contextual information and user instructions during the training process. The fine-tuned GPT-4 becomes a context-aware RS, capable of adapting its suggestions to user input and to contextual factors such as location, time of visit, and other relevant parameters. By considering context, the system aims to provide personalized recommendations that align with user preferences and desires, ultimately enhancing the overall museum experience.

The primary objective of this contribution is to evaluate the effectiveness of GPT-4 as a context-aware RS in the museum domain. This is achieved through a number of user studies that measure the system’s ability to provide accurate and relevant recommendations, as well as its impact on user engagement and satisfaction within the museum environment. Through these evaluations, this work aims to advance the field of personalized museum recommendations, benefiting both museum visitors and curators.

The rest of this paper is structured as follows. Section 2 provides an overview of related work on AI recommendation systems and discusses existing approaches in the cultural domain. Section 3 describes the methodology for training the MAGICAL system on a virtual museum. Section 4 discusses the evaluation outcomes along with their implications. Finally, Section 5 concludes the paper by summarizing the findings and outlining future directions for research and implementation.

2. Related Work

Recommendation systems have been extensively studied and applied in various domains, including e-commerce, entertainment, and online platforms. However, their application in cultural heritage (CH), and more specifically in museums, is relatively new and presents unique challenges and opportunities. Moreover, in the last couple of years, the continuous development of GPT has brought significant changes to various sectors, reshaping how people interact with technology. Among the fields profoundly affected by advances in GPT, CH research stands out prominently [10,11,12,13,14].

Agapiou and Lysandrou [15] explore the use of ChatGPT [16], an advanced AI LLM, in remote sensing archaeology. The primary objective is to gain insight into the potential applications of this new language model and to understand its capabilities. The authors formulated specific questions based on their scientific expertise and research interests and posed them to ChatGPT. The model provided responses that appeared satisfactory, although they lacked the comprehensiveness typically achieved through traditional literature review methods.

Another work [17] examines established museum chatbots and the platforms used to implement them. It also presents the outcomes of a systematic evaluation of both chatbots and platforms and introduces an innovative method for developing intelligent chatbots specifically designed for museums. That work prioritizes graph-based, distributed, and collaborative multi-chatbot conversational AI systems within museum environments. It highlights knowledge graphs as a key technology, enabling chatbot users to access a vast amount of information while meeting the need for machine-understandable content in conversational AI. Furthermore, the proposed architecture aims to provide an efficient deployment solution by employing distributed knowledge graphs that let collaborating chatbots share knowledge when necessary, so that the system can also serve as a valuable RS.

Finally, Cai et al. [18] present an AI interactive RS device, powered by a robust database management system and tailored to the distinct needs of various cultural tourists, which increases visitor satisfaction and promotes deeper engagement with the exhibits. Additionally, by leveraging data analysis and learning algorithms, AI technology uncovers underlying cultural values, providing insights and inspiration for designing exhibition content and thereby facilitating the digital transformation of museums. Through experimental research, that study demonstrates that integrating an AI interactive device based on a database management system significantly improves the accuracy of the exhibition experience, enhances the effectiveness of voice guidance, streamlines visitor flow management, and incorporates an intelligent RS.

The aforementioned studies provide valuable insight into the development and evaluation of RSs in the museum domain. However, the utilization of GPT-4 as a context-aware RS in museums, as proposed in this paper, represents a novel contribution that combines the strengths of knowledge-based models and fine-tuning techniques to provide personalized and contextually relevant recommendations.

3. MAGICAL as a Recommendation System

MAGICAL (Museum AI Guide for Augmenting Cultural Heritage with Intelligent Language model) [10] is a GPT-4-based system designed to act as a digital tour guide in museums. It uses the capabilities of the language model to compose texts and hold dialogues with the visitor. For this purpose, the language model has been trained for use in a specific museum or exhibition and has been provided with all the data necessary to take on the role of the expert. It maintains the speaking style given to it as an instruction, can respond in many languages, always remains polite, and avoids any racial, religious or other discrimination. It can create narratives with real or fictional characters to engage the visitor emotionally, and evaluation has shown that it is able to make recommendations.

In the current work, the objective is to enhance MAGICAL and turn it into a smart RS by providing it with knowledge of the museum space: the arrangement of objects, their relative positions and distances, their topics, the time each visitor is expected to spend at each object, etc. Additional details about each exhibit (descriptions, real or imaginary stories surrounding it, dates, information about the creator, etc.) are also supplied to the system, in an effort to transfer to it the knowledge and experience of someone who knows the space and the exhibits very well. The performance of the enhanced system can then be measured by whether it can correlate visitors’ choices with the experience it has accumulated, recommending next steps and keeping the user motivated.
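The kinds of knowledge listed above (position, topic, expected visiting time, creator details) can be thought of as structured records that are then written out as the natural-language sentences the system receives. The sketch below assumes a hypothetical `Exhibit` record and `to_instruction` helper; the field names, the coordinate frame in metres, the sentence template and the sample exhibits are illustrative only and are not taken from MAGICAL.

```python
from dataclasses import dataclass


@dataclass
class Exhibit:
    """Hypothetical record for one exhibit (all field names are illustrative)."""

    name: str
    room: str
    topic: str
    creator: str
    x_m: float  # position on the floor plan in metres (assumed coordinate frame)
    y_m: float
    dwell_min: int  # expected time a visitor spends here, in minutes


def distance_m(a: Exhibit, b: Exhibit) -> float:
    """Straight-line distance on the same floor; ignores walls and actual walking routes."""
    return ((a.x_m - b.x_m) ** 2 + (a.y_m - b.y_m) ** 2) ** 0.5


def to_instruction(e: Exhibit, neighbour: Exhibit) -> str:
    """Render one record as a plain-English sentence of the kind given to the model."""
    return (
        f"The exhibit '{e.name}' by {e.creator} is in the {e.room} and deals with "
        f"{e.topic}. Visitors usually spend about {e.dwell_min} minutes there. "
        f"It is about {distance_m(e, neighbour):.0f} metres from '{neighbour.name}'."
    )


if __name__ == "__main__":
    # Hypothetical exhibits; names and creators are invented for illustration.
    a = Exhibit("Shifting Tides", "Modern Painting Gallery", "change over time",
                "a fictional painter", 0.0, 0.0, 5)
    b = Exhibit("Chrysalis", "Modern Painting Gallery", "rebirth",
                "a fictional painter", 3.0, 4.0, 8)
    print(to_instruction(a, b))
```

Keeping the knowledge in records and generating the sentences from them is one way to avoid the contradictions between instructions that, as the evaluation notes, cause wrong answers.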

The proposed approach is evaluated on a virtual museum of contemporary art, specifically designed for this purpose (virtual not in the sense of a digital version of an existing museum, but as an entirely fictional one). The virtual museum is named the “Metamorphosis Museum of Modern Art” (MMMA) and is placed in Athens, Greece. The architectural design of its spaces, the distances from various reference points and the ways to change floors are provided to GPT-4, along with places to rest, eat and drink, the toilets, the museum shop, the cloakroom and the lockers. The locations of digital screens with directions and navigation maps are also mentioned.

Although there is a wealth of existing literature on the design of museums, and of virtual museums in particular [19,20,21,22,23,24], no specific methodology has been adopted in the current work. The reasons for not adhering to existing design practices can be summarized as follows: (i) the LLM is expected to work and perform outside of any known museum design rules, (ii) the specific virtual museum will not be accessible to visitors, and (iii) at this stage, the objective is to assess the LLM’s ability to learn about the designed museum in detail, so that it can then recommend the next exhibit or route.

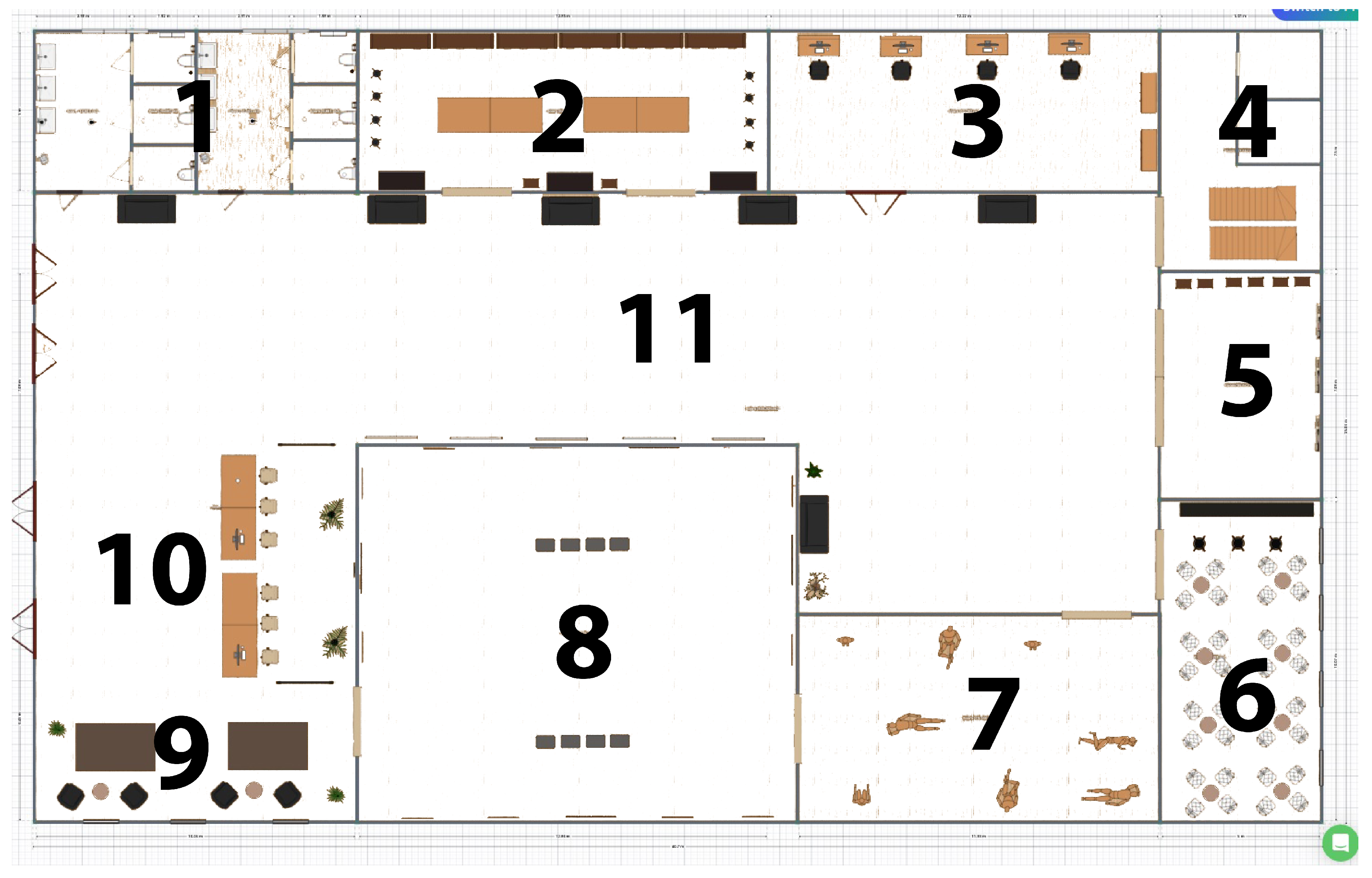

Two- and three-dimensional diagrams of MMMA [25] have been designed with the Planner 5D tool [26], and the same software has been used to create the floor plan [27]. Using the diagram, one can navigate through the museum, understand its layout, the relative distances between points and the ways of moving from one floor to another, and judge whether GPT-4 gives correct navigation instructions to the visitor. The design of the museum is kept minimalistic, as detailed design of spaces and exhibits is not the goal of this project. Also, from an architectural point of view, the building may contain design errors, as it is used for evaluation purposes and does not depict an actual place. Therefore, throughout the design, whether deliberately or not, the application of a specific museum set-up method, architectural rules, and references to existing works of art have been avoided. The aim was for GPT-4 to have no prior connection with the site and the exhibits and to rely solely on the information provided during its training.

The Design of Space

As the language model is not going to provide directions to places outside the museum, MMMA’s exact location in Athens, Greece does not need to be specified. However, its interior layout must be provided to the LLM; it consists of three levels, as follows:

Ground floor:

Entrance Hall: An immersive and welcoming space with interactive installations or digital artworks that introduce visitors to the museum’s theme of metamorphosis.

Temporary Exhibitions: Rotating exhibitions that highlight contemporary artists, emerging art movements or thematic explorations. This space is versatile and adaptable to different art forms, such as paintings, sculptures, installations, photography or new media. Two rooms were designed and named Temporary Exhibitions A and Temporary Exhibitions B.

First floor:

Modern Painting Gallery: A collection of modern paintings from various styles and periods, showcasing the evolution of techniques, styles and subject matter in modern art.

Sculpture Garden: Sculptures or 3D models of sculptures, allowing visitors to explore different forms, materials and concepts in modern sculpture.

Video and Digital Art: A space dedicated to video installations, digital art and multimedia presentations. It can feature immersive video projections, interactive installations and digital artworks that push the boundaries of technology and artistic expression.

Second floor:

Art Installation: Immersive, large-scale installations that engage the senses and challenge traditional notions of art. These installations can explore themes of transformation, identity and societal change.

Art Performance Space: A stage for live or recorded performances, including dance, theater or experimental art forms that blur the boundaries between art disciplines.

Experimental Gallery: Unconventional and boundary-pushing art forms, such as conceptual art, participatory art or interactive experiences that invite visitors to engage with the artwork.
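The three-level layout above maps naturally onto a small lookup table, which is one way to keep room names consistent across the many instruction sentences. The `LAYOUT` dictionary and the `floor_of` and `layout_sentences` helpers below are a hypothetical illustration, not part of MAGICAL; only the floor and room names come from the list above.

```python
# Room names per level, copied from the layout description; the data
# structure itself is an illustrative assumption.
LAYOUT: dict[str, list[str]] = {
    "Ground floor": ["Entrance Hall", "Temporary Exhibitions A", "Temporary Exhibitions B"],
    "First floor": ["Modern Painting Gallery", "Sculpture Garden", "Video and Digital Art"],
    "Second floor": ["Art Installation", "Art Performance Space", "Experimental Gallery"],
}


def floor_of(room: str) -> str:
    """Return the level a room is on, or raise KeyError for an unknown room."""
    for floor, rooms in LAYOUT.items():
        if room in rooms:
            return floor
    raise KeyError(f"Unknown room: {room}")


def layout_sentences() -> list[str]:
    """One plain-English sentence per room, suitable for the model's instructions."""
    return [
        f"The {room} is on the {floor.lower()}."
        for floor, rooms in LAYOUT.items()
        for room in rooms
    ]


if __name__ == "__main__":
    print(floor_of("Sculpture Garden"))
    for sentence in layout_sentences():
        print(sentence)
```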

Figure 1 shows the ground floor with numbered spaces, whose descriptions are summarized in Table 1. In this floor plan, the Entrance and Exit (on the left of the figure) are set to face west, so the long sides of the rectangular building face north and south. To train the language model on the layout of this floor, 30 sentences or short paragraphs of varying length are used, describing each room individually, the exhibits, the museum’s operating rules, general descriptions and instructions for visitors, distance measurements, etc. These descriptions are preceded by 12 additional long sentences of general instructions for the whole building: the name and location of the museum, its purpose, and the role of the system itself as a “helpful museum guide who works at MMMA and provides personal guidance and recommendations, according to the visitor needs and the distances involved”.
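How these sentences are delivered to GPT-4 is not specified here. One plausible arrangement, assumed in the sketch below, is to concatenate the general instructions and the per-floor descriptions into a single system message in the role/content message format used by OpenAI’s Chat Completions API, with the visitor’s question following as a user message. The `build_messages` helper, the “You are a” framing and the sample sentences are hypothetical; only the quoted role description comes from the text above. No API call is made.

```python
ROLE = ("You are a helpful museum guide who works at MMMA and provides personal "
        "guidance and recommendations, according to the visitor needs and the "
        "distances involved.")


def build_messages(general: list[str], floors: dict[str, list[str]],
                   question: str) -> list[dict[str, str]]:
    """Assemble a chat-style message list: one system message, then the visitor's question."""
    parts = [ROLE, *general]
    for floor, sentences in floors.items():
        parts.append(f"{floor}:")  # header line before each floor's descriptions
        parts.extend(sentences)
    return [
        {"role": "system", "content": "\n".join(parts)},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    # Hypothetical sample sentences standing in for the 12 general and per-floor ones.
    msgs = build_messages(
        ["The museum is the Metamorphosis Museum of Modern Art in Athens, Greece."],
        {"Ground floor": ["The Entrance and Exit are on the west side of the building."]},
        "Where is the cloakroom?",
    )
    print(msgs[0]["content"])
```

Keeping the building-wide instructions ahead of the floor descriptions mirrors the ordering described in the text; follow-up turns of a dialogue would be appended as further user and assistant messages.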

GPT-4 has been presented as a multimodal AI system capable of recognizing image content and describing it in text [8]. When this feature was publicly released for a short period, a few brief tests were performed within the current work, which showed how easily the model describes even complex images. However, at the time of writing, this feature is not available in the developer API, so automatic description of images could not be tested. Such a feature would allow much faster, and perhaps more accurate, model training directly from the floor plan images.

Figure 2 shows the first floor, with its spaces numbered and described in Table 2. The first-floor descriptions consist of 24 sentences that are provided to the model during training. They include information about the architecture (size of rooms, distances, entrances and exits, windows, etc.), the exhibits, their creators and more general instructions on how to move around the space. Personal information and stories about the creators of the exhibits are also given. As discussed before, these are non-existent persons, so their stories are works of fiction. A more general, humorous story is also provided, to examine whether the language model will use it in its dialogues. It narrates an attempt by a famous hacker to break into the server area, which is closed to the public, in order to steal museum data.

Finally, Figure 3 shows the second floor and Table 3 gives short descriptions of each area. A total of 41 sentences or paragraphs are used to describe the second floor. More instructional text is needed here because 15 contemporary art objects in the Experimental Gallery room are described in detail. Each of these objects was given a name, a description and some information about its creator. For the other two main areas of the floor, it is assumed that an event is either running or will start later on the current day, and descriptions are provided for the event titles and their creators.

In the Art Installations room, an interactive projection called “Digital Realmscape: A Multisensory Journey” has been placed. In this area, there are screens through which users can interact with the space, while video projectors display content throughout the room. The Art Installation is equipped with a smart floor that senses the presence, location and number of visitors, and the projected content changes accordingly. In the Art Performance Space, a concert is scheduled to start at a specific time on the current day. The system is given details about the concert, the artist and the genre, and can answer queries about them. Details about the space, its equipment, and the distances and routes to the toilets, stairs and other areas are also encoded in the system.

4. Evaluation

To function as an RS, the LLM requires a large amount of data about the museum’s operation. OpenAI’s language models process text in the form of tokens [28], data units that roughly correspond to one syllable of a word. This mapping is not exact: common words may occupy a single token, while infrequent words may be split into several tokens, sometimes down to individual letters. In earlier versions of GPT, there was a limit of up to

tokens per conversation. Although this number is considered sufficient for small-scale applications and simple experiments, in the case of MAGICAL more than

tokens are estimated to be exchanged during each dialogue. When errors occur, new instruction sentences have to be written for the model, increasing the number of tokens further.
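A simple way to check whether a set of instructions plus the dialogue so far still fits under a per-conversation token limit is sketched below. Exact counts require the model’s own tokenizer (OpenAI publishes it as the `tiktoken` library); to stay dependency-free, this sketch instead assumes the common rule of thumb of roughly four characters per token for English text, so its estimates are approximate, and non-English text such as Greek typically needs more tokens than estimated. The limit is passed in as a parameter, and the `reply_reserve` default is an assumed value.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length (English heuristic)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_budget(instructions: list[str], dialogue: list[str], limit: int,
                reply_reserve: int = 500) -> bool:
    """True if instructions + dialogue so far + room for a reply fit within `limit`.

    Ignores the small per-message formatting overhead of chat requests.
    """
    used = sum(estimate_tokens(s) for s in instructions + dialogue)
    return used + reply_reserve <= limit
```

Each added correction sentence raises `used`, which is why fixing errors by adding instructions pushes a conversation towards the limit.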

The language model produces satisfactory results in the evaluation experiments, working as expected. It does sometimes give wrong answers, but this behavior is attributed to contradictions or deficiencies in the instructions. Its responses contain no syntactical or grammatical errors. Although English is the language most commonly used, switching to another language is very easy. Greek was used as the second language during evaluation, and here the model showed some small weaknesses, predominantly in its use of the language: there were minor syntactical and semantic errors, presumably because the model performs best in English.

A particularly positive finding is that the model can answer questions on which it has not been explicitly trained.

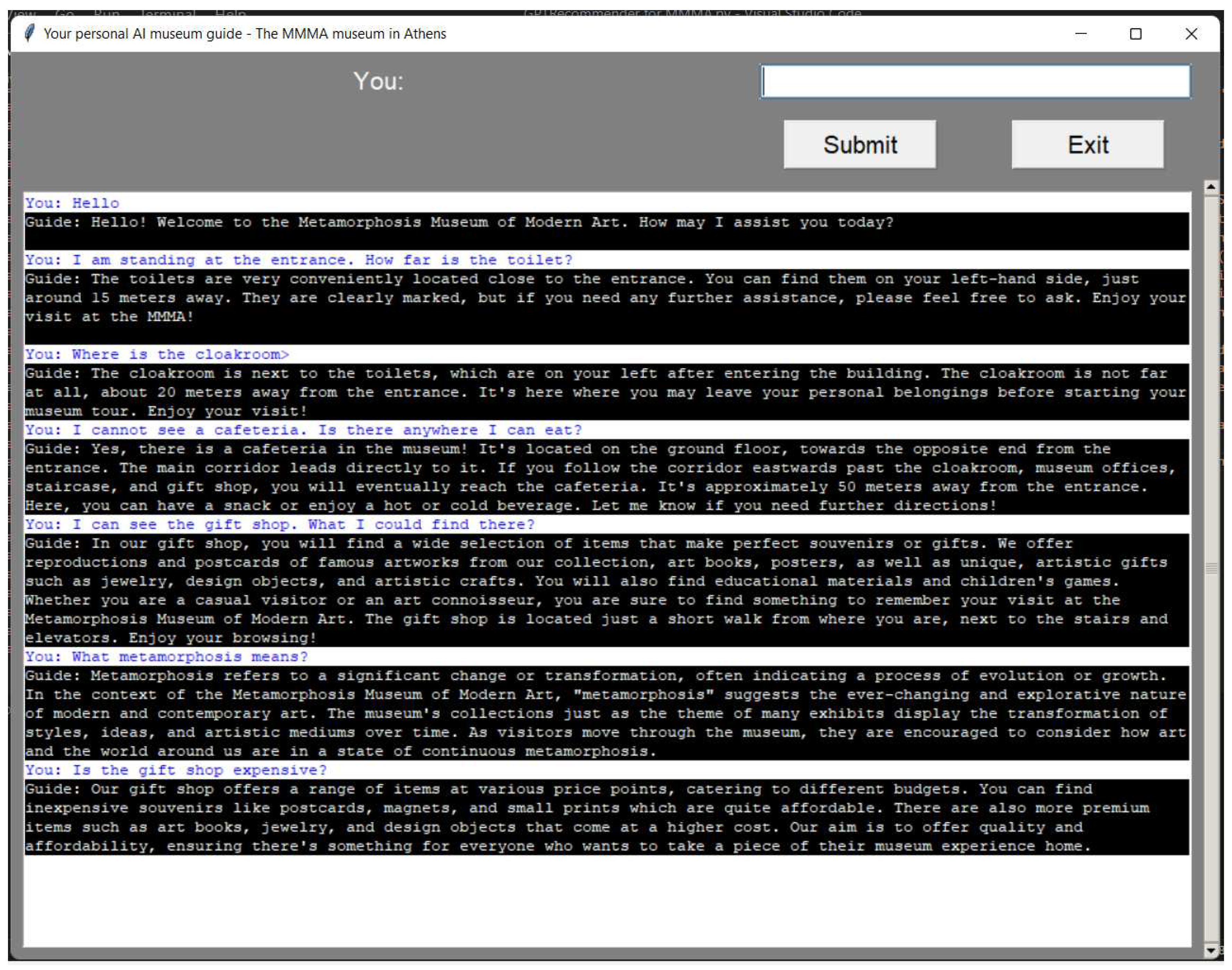

Figure 4 displays the dialogue from one of the evaluation experiments. The system correctly answers questions about directions to the area and is then asked whether the museum’s gift shop is expensive. The answer it returns is entirely logical and correct, even though this information was not included in the training data. The preceding question in the same dialogue is “What metamorphosis means?”. Here again, the system had not been given any relevant instructions and answered on its own, in context.

Another positive aspect of the model is that it can combine data to produce insights. In one example, with the visitor assumed to be inside a room on the second floor, the question “how far is the exit of the museum?” was asked. The instructions the system received during training describe each floor separately. The model added the distance from the visitor’s position to the elevator, calculated the time to reach the first floor, and added the distance from the elevator to the exit on the ground floor before giving the correct answer.
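The reasoning the model performed in this example amounts to summing route segments across floors and converting them to time. The sketch below writes that arithmetic out explicitly; the `Segment` type, the segment lengths, the walking speed and the elevator time are all hypothetical values chosen for illustration, not figures from the MMMA descriptions.

```python
from dataclasses import dataclass

WALKING_SPEED_M_PER_MIN = 60.0  # assumed average indoor walking pace


@dataclass
class Segment:
    description: str
    metres: float = 0.0     # walking distance for this leg
    fixed_min: float = 0.0  # non-walking time, e.g. waiting for and riding the elevator


def route_summary(segments: list[Segment]) -> tuple[float, float]:
    """Return (total walking distance in metres, total time in minutes)."""
    metres = sum(s.metres for s in segments)
    minutes = metres / WALKING_SPEED_M_PER_MIN + sum(s.fixed_min for s in segments)
    return metres, minutes


if __name__ == "__main__":
    # Hypothetical route: second-floor room -> elevator -> ground floor -> west exit.
    route = [
        Segment("room to second-floor elevator", metres=30),
        Segment("elevator ride down to the ground floor", fixed_min=1.0),
        Segment("ground-floor elevator to west exit", metres=60),
    ]
    dist, mins = route_summary(route)
    print(f"About {dist:.0f} m, roughly {mins:.1f} minutes")
```

Because each floor is described separately in the instructions, the answer depends on chaining per-floor facts in this way rather than on a single stored distance.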

The cost of training the model for a particular application depends heavily on the GPT version employed: the newest version reduces the required cost tenfold, and training the model for a new domain is relatively easy. A training methodology has been developed so that the application can easily be transferred to any space, cultural or otherwise.
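Assuming the museum knowledge is supplied to the model as prompt text, cost scales linearly with the number of tokens processed times the per-token price, so a tenfold cheaper model makes the same workload tenfold cheaper. The sketch below shows this arithmetic; the workload size and both price sets are placeholders chosen only to illustrate the ratio, and actual OpenAI prices differ by model, by input versus output tokens, and over time.

```python
def cost_usd(input_tokens: int, output_tokens: int,
             price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Cost of one workload given prices per 1,000 input and output tokens."""
    return (input_tokens / 1000 * price_in_per_1k
            + output_tokens / 1000 * price_out_per_1k)


if __name__ == "__main__":
    # Hypothetical workload and placeholder prices: the newer model is priced
    # at one tenth of the older one.
    usage = {"input_tokens": 200_000, "output_tokens": 50_000}
    older = cost_usd(**usage, price_in_per_1k=0.01, price_out_per_1k=0.02)
    newer = cost_usd(**usage, price_in_per_1k=0.001, price_out_per_1k=0.002)
    print(f"older: ${older:.2f}  newer: ${newer:.2f}  ratio: {older / newer:.1f}x")
```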

5. Conclusions

Large language models remain largely unexplored in areas such as cultural heritage, yet they are developing rapidly and offer even greater possibilities. GPT-4 is a text composition system that shows great flexibility. Although it is trained on a huge amount of data, it can be guided with great ease and take on various roles for applications in cultural heritage, education, tourism, video games, etc. The experiments demonstrated that it can be transformed into a very informative recommendation system, holding natural language dialogues and even answering complex questions. This project continues a set of research applications related to GPT-4 [10], and the next step will be to integrate voice commands. Communication with MAGICAL is intended to take place orally in natural language, without the use of screens. Once the application reaches its final form, it is expected to be tested in a real museum and evaluated by visitors.

Author Contributions

Conceptualization, G.T.; methodology, G.T.; software, G.T.; validation, G.T., M.K. and G.A.; formal analysis, G.T.; investigation, G.T.; resources, G.T.; data curation, G.T. and G.C.; writing—original draft preparation, G.T. and M.K.; writing—review and editing, G.T., M.K. and G.A.; visualization, G.T.; supervision, G.C.; project administration, G.T. and M.K.; funding acquisition, M.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| CH | Cultural Heritage |
| GPT | Generative Pre-trained Transformer |
| LLM | Large Language Model |
| MAGICAL | Museum AI Guide for Augmenting Cultural Heritage with Intelligent Language model |
| MMMA | Metamorphosis Museum of Modern Art |
| RS | Recommendation System |
| VR | Virtual Reality |

References

- Zhang, Q.; Lu, J.; Jin, Y. Artificial intelligence in recommender systems. Complex & Intelligent Systems 2021, 7, 439–457.

- Fayyaz, Z.; Ebrahimian, M.; Nawara, D.; Ibrahim, A.; Kashef, R. Recommendation systems: Algorithms, challenges, metrics, and business opportunities. Applied Sciences 2020, 10, 7748.

- Yenduri, G.; Ramalingam, M.; Chemmalar Selvi, G.; Supriya, Y.; Srivastava, G.; Maddikunta, P.K.R.; Deepti Raj, G.; Jhaveri, R.H.; Prabadevi, B.; Wang, W.; et al. GPT (Generative Pre-trained Transformer)–A Comprehensive Review on Enabling Technologies, Potential Applications, Emerging Challenges, and Future Directions.

- Khanal, S.S.; Prasad, P.; Alsadoon, A.; Maag, A. A systematic review: machine learning based recommendation systems for e-learning. Education and Information Technologies 2020, 25, 2635–2664.

- Renjith, S.; Sreekumar, A.; Jathavedan, M. An extensive study on the evolution of context-aware personalized travel recommender systems. Information Processing & Management 2020, 57, 102078.

- Alexandridis, G.; Chrysanthi, A.; Tsekouras, G.E.; Caridakis, G. Personalized and content adaptive cultural heritage path recommendation: an application to the Gournia and Çatalhöyük archaeological sites. User Modeling and User-Adapted Interaction 2019, 29, 201–238.

- Konstantakis, M.; Alexandridis, G.; Caridakis, G. A personalized heritage-oriented recommender system based on extended cultural tourist typologies. Big Data and Cognitive Computing 2020, 4, 12.

- Huang, H.; Zheng, O.; Wang, D.; Yin, J.; Wang, Z.; Ding, S.; Yin, H.; Xu, C.; Yang, R.; Zheng, Q.; et al. ChatGPT for Shaping the Future of Dentistry: The Potential of Multi-Modal Large Language Model. arXiv preprint 2023, arXiv:2304.03086.

- Siu, S.C. ChatGPT and GPT-4 for Professional Translators: Exploring the Potential of Large Language Models in Translation. Available at SSRN 4448091, 2023.

- Trichopoulos, G.; Konstantakis, M.; Caridakis, G.; Katifori, A.; Koukouli, M. Crafting a Museum Guide Using GPT4. Preprints.org 2023, 2023061618, p. 12.

- Hazan, S. The Dance of the Doppelgängers: AI and the cultural heritage community. In Proceedings of EVA London 2023. BCS Learning & Development, 2023, pp. 77–84.

- Hettmann, W.; Wölfel, M.; Butz, M.; Torner, K.; Finken, J. Engaging Museum Visitors with AI-Generated Narration and Gameplay. In Proceedings of the International Conference on ArtsIT, Interactivity and Game Creation. Springer; 2022; pp. 201–214.

- Bongini, P.; Becattini, F.; Del Bimbo, A. Is GPT-3 All You Need for Visual Question Answering in Cultural Heritage? In Proceedings of the European Conference on Computer Vision. Springer; 2022; pp. 268–281.

- Mann, E.; Dortheimer, J.; Sprecher, A. Toward a Generative Pipeline for an AR Tour of Contested Heritage Sites. In Proceedings of the 2022 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR). IEEE; 2022; pp. 130–134.

- Agapiou, A.; Lysandrou, V. Interacting with the Artificial Intelligence (AI) Language Model ChatGPT: A Synopsis of Earth Observation and Remote Sensing in Archaeology. Heritage 2023, 6, 4072–4085.

- OpenAI. GPT-4 Technical Report. arXiv preprint 2023, arXiv:2303.08774.

- Varitimiadis, S.; Kotis, K.; Pittou, D.; Konstantakis, G. Graph-based conversational AI: Towards a distributed and collaborative multi-chatbot approach for museums. Applied Sciences 2021, 11, 9160.

- Cai, P.; Zhang, K.; Pan, Y. Application of AI Interactive Device Based on Database Management System in Multidimensional Design of Museum Exhibition Content. 2023.

- Baloian, N.; Biella, D.; Luther, W.; Pino, J.; Sacher, D. Designing, Realizing, Running, and Evaluating Virtual Museum – a Survey on Innovative Concepts and Technologies. JUCS - Journal of Universal Computer Science 2021, 27, 1275–1299.

- Bannon, L.; Benford, S.; Bowers, J.; Heath, C. Hybrid design creates innovative museum experience. Commun. ACM 2005, 48, 62–65.

- Vermeeren, A.; Calvi, L.; Sabiescu, A.; Rocchianesi, R.; Stuedahl, D.; Giaccardi, E.; Radice, S. Future Museum Experience Design: Crowds, Ecosystems and Novel Technologies; 2018; pp. 1–16.

- Falco, F.D.; Vassos, S. Museum Experience Design: A Modern Storytelling Methodology. The Design Journal 2017, 20, S3975–S3983.

- Kropf, M.B. The Family Museum Experience: A Review of the Literature. The Journal of Museum Education 1989, 14, 5–8.

- Besoain, F.; Jego, L.; Gallardo, I. Developing a Virtual Museum: Experience from the Design and Creation Process. Information 2021, 12.

- MMMA - Planner 5D. Available online (accessed on 19 July 2023).

- Planner 5D: House Design Software | Home Design in 3D. Available online (accessed on 19 July 2023).

- Urquhart, C. Container of dreams. PhD thesis, Southern Cross University, 2019.

- Wang, S.; Liu, Y.; Xu, Y.; Zhu, C.; Zeng, M. Want to reduce labeling cost? GPT-3 can help. arXiv preprint 2021, arXiv:2108.13487.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).