1. Introduction

As modern manufacturing becomes increasingly discrete and intricate, a crucial characteristic is that companies master core technology in-house while outsourcing the production of other components to downstream factories. As a result, quality control assumes paramount importance in the production process. At the same time, as big data, cloud computing, and the Internet of Things are gradually deployed across industry, sensors and intelligent data acquisition are helping to improve the entire life cycle of an asset, from design, manufacturing, and distribution to maintenance and recycling [1]. The dynamic and complex nature of the production floor, characterized by intermittent, fluctuating, stochastic, and non-linear behavior, makes it difficult to use these data effectively. Consequently, developing a robust quality prediction model is a formidable undertaking.

Quality prediction is a significant concern in industrial intelligence and a typical task for industrial analytics [2]. Its purpose is twofold: to predict quality, and to make suitable adjustments based on the predictions, thereby raising the product qualification rate. At present, discrete manufacturing facilities predominantly rely on Manufacturing Execution Systems (MES), whose use has grown remarkably, to manage and analyze their manufacturing data. Meanwhile, manufacturing plants typically use random sampling inspection to identify defects in the parts received from their downstream suppliers. However, quality problems in supplier parts can significantly impede production, causing divergence in both the supply chain and quality-related factors [3]. Predictive manufacturing systems are emerging as a new paradigm for solving quality problems caused by quality divergences that arise in the production process, propagate downstream along the production chain, and would otherwise go undetected.

From a technological perspective, research on the efficient processing of multivariate heterogeneous data over the past decade has been hampered by problems such as missing data, duplication, noisy and nonlinear data structures, and the coupling of multiple production stages. To improve product quality and ensure production stability, key quality indicators play an important role in quality control and forecasting [4-6]. Many studies have addressed the efficient handling of industrial multivariate heterogeneous data and the extraction of quality-related elements, including data-driven and deep learning methods [7-11]. For example, a method based on rough set theory, a particle swarm algorithm, and a least squares support vector machine [12] models the 5M1E manufacturing data of discrete manufacturing to help companies accomplish quality prediction and quality control. A data-driven real-time learning model handles nonlinear processes subject to uncertain perturbations in industrial environments, which improves fault detection accuracy [13]. A framework for data-driven bottleneck discovery in manufacturing execution systems [14] has been proposed to reduce the quality fluctuations caused by uncertain perturbations. Combining a manufacturing execution system with a computerized maintenance system [15] supports prediction and decision-making for core equipment maintenance in the production process and helps digitalize the discrete shop floor. In [16], critical manufacturing feature points are classified according to assembly requirements by means of a comprehensive coordination model, and a distribution method that minimizes integrated errors in the manufacturing process is established and applied to aircraft assembly. However, these studies do not account for the complexity of discrete manufacturing: they mainly address univariate prediction and control, whereas the large volume of data in an MES requires extracting the data associated with quality characteristics for analysis, and no link between quality and raw data has previously been established.

The Manufacturing Execution System (MES) comprises several key modules [17]: the CONFIG basic parameter setting module, the PM production management module, the PMO production site monitoring module, the QC quality control module, and the REPAIR repair station module. The QC module, which follows ISO 9001 product line specifications, uploads test results to the database [18]. Qualified products proceed to the next station, while unqualified products are sent for rework. In recent years, many advances in deep learning have been integrated into manufacturing systems, making this a highly promising way to extend the predictive capabilities of QC modules and to optimize quality control and process efficiency. In [19], the XGBoost method is combined with the manufacturing system to prevent undetected defects from propagating down the production line and to improve the recall rate for defective automotive products. In [20], a deep learning-based intelligent monitoring system selects the features most relevant to quality to predict the product pass rate and improve factory quality.

Neural networks also provide novel solutions for nonlinear prediction in manufacturing systems. In [21], deep learning is applied to quality management, using a feature pyramid network to extract quality features of aluminum castings with improved accuracy. In [22], smart manufacturing device data are analyzed, and device identification accuracy is improved by using an LSTM on a cloud server to find correlations in the data. In [23], supervised LSTM networks learn quality-relevant hidden variables to predict dynamic quality properties. In [24], a 2DConvLSTMAE method predicts the speed of multiple machines in metal can manufacturing simultaneously, reducing training time and improving prediction performance. In [25], a scheduling model for the workshop cycle scheduling problem is established using an LRNNN, which reduces the complexity of the problem and makes it more convenient to use in actual production. The contemporary challenge for discrete manufacturing quality prediction is real-time monitoring of product quality. To address this challenge, the use of high-performance, dynamic, synchronous recurrent neural networks is proposed. This proposal stems from an empirical survey of how multi-dimensional inspection data are actually generated, and how quality features are extracted from them, within manufacturing systems.

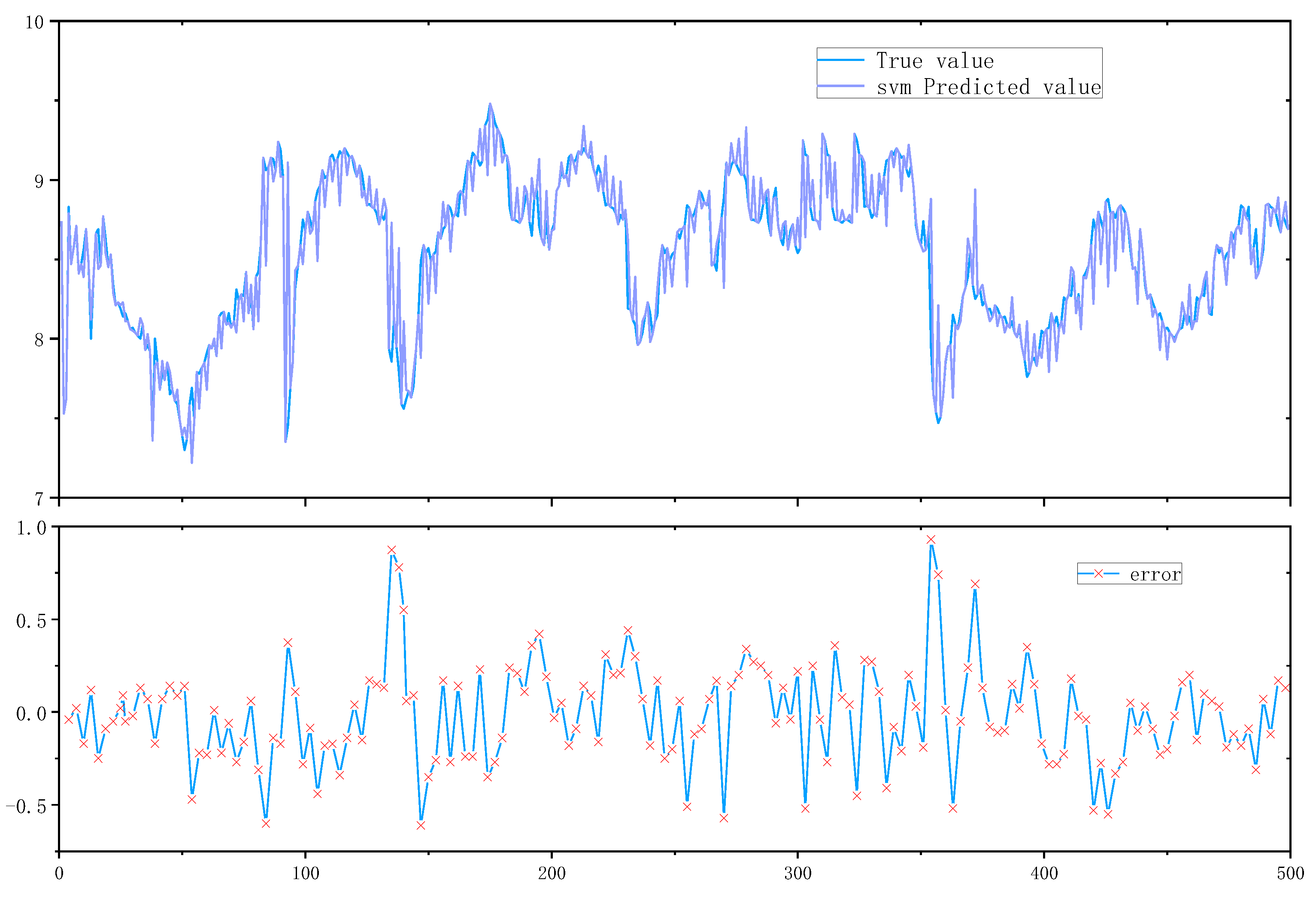

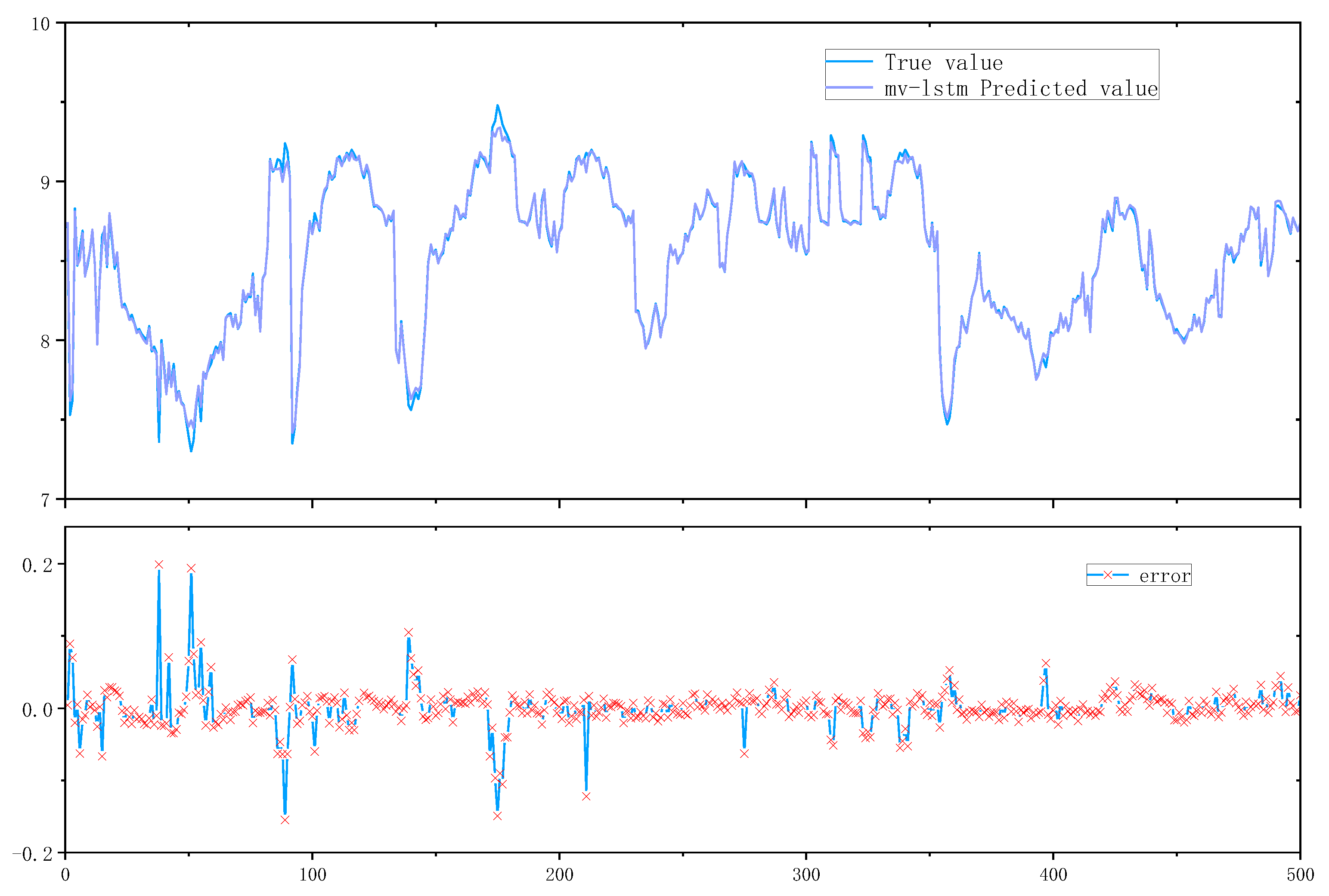

Based on the above analysis, and considering the complexity of quality prediction in the product production process, this paper proposes a fuzzy quality evaluation that represents the quality issues at each stage of production as data, followed by quality prediction with a multivariate long short-term memory (MV-LSTM) model. The proposed model is validated on a drinking water dispenser production line: it is trained on historical data comprising the test indices (power, flow rate, heating rate, and leakage current) and the evaluation results, and it predicts, in the short term, the quality level of the next batch of products at that node of the assembly line. Section 2 presents the theoretical background, the implementation principles of the gateway deployment, and the approach. Section 3 presents the experimental results and insights. Section 4 summarizes the performance of the proposed method on the data set and suggests possible improvements to the model.

2. MATERIALS AND METHODS

2.1. Data Acquisition

Data were collected from the water dispenser production line of Qixi Intelligent Technology Co., Ltd. in Cixi City. Interfaces deployed at each production stage transmit the inspection data, which are stored on a server; the real-time data for each product ID can be viewed on a mobile terminal.

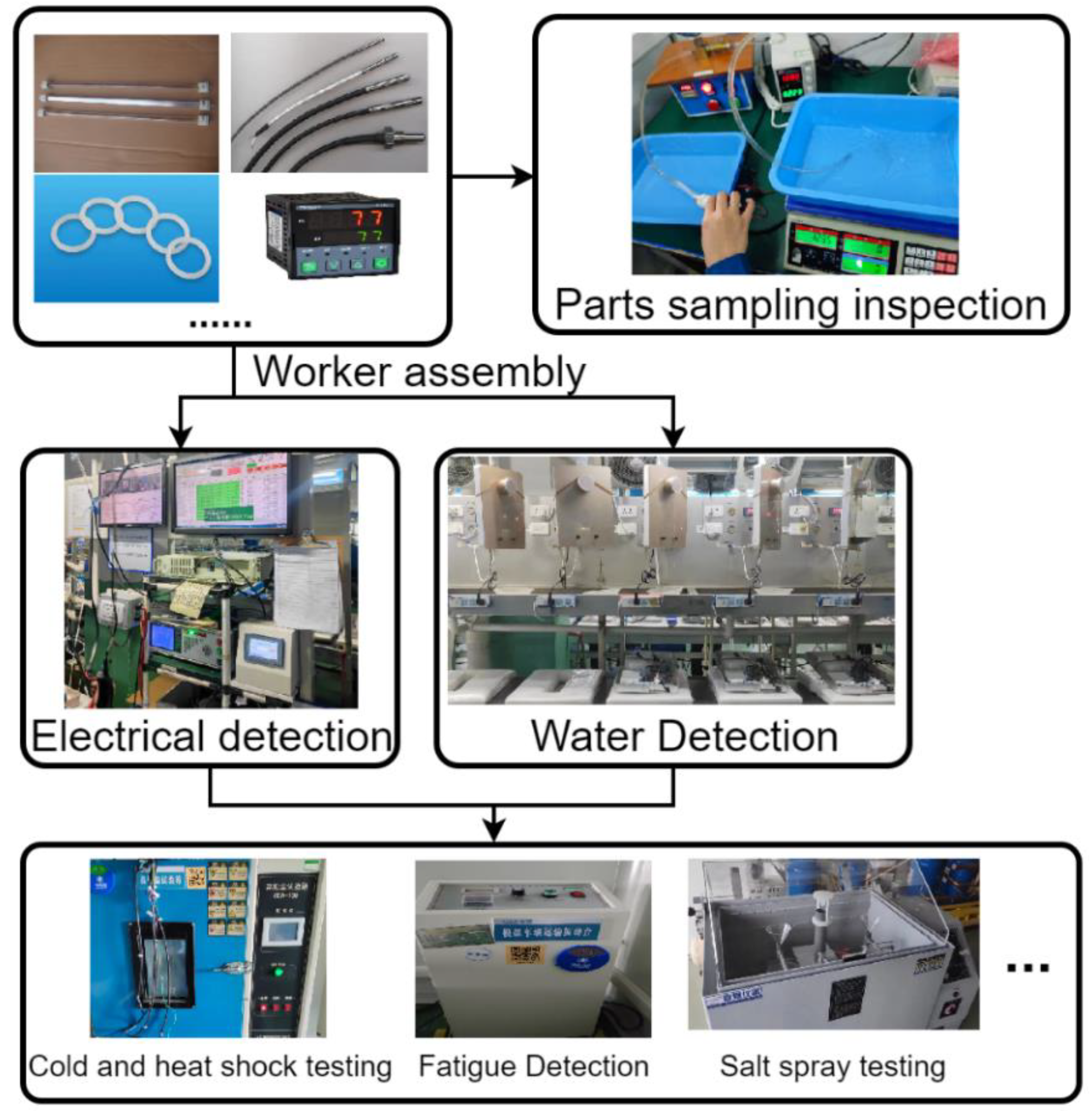

For each part, the quality inspector samples a certain proportion of the same manufacturer's batch to check conformity, and the sample data are usually taken as the quality data for that batch of parts; the number of samples can be increased to improve the reliability of detection and prevent quality differences from going unnoticed. Semi-finished products are tested after a given degree of assembly. Water dispenser testing is divided into electrical testing and water testing: electrical testing measures specific quantities with instruments such as oscilloscopes, while water testing uses flow and temperature sensors to measure how specific values change over time. Finished products undergo cold and heat shock tests, fatigue tests, and smoke tests, which the quality inspector evaluates according to the actual situation.

Figure 1. Multi-stage detection data collection for water dispenser production line.


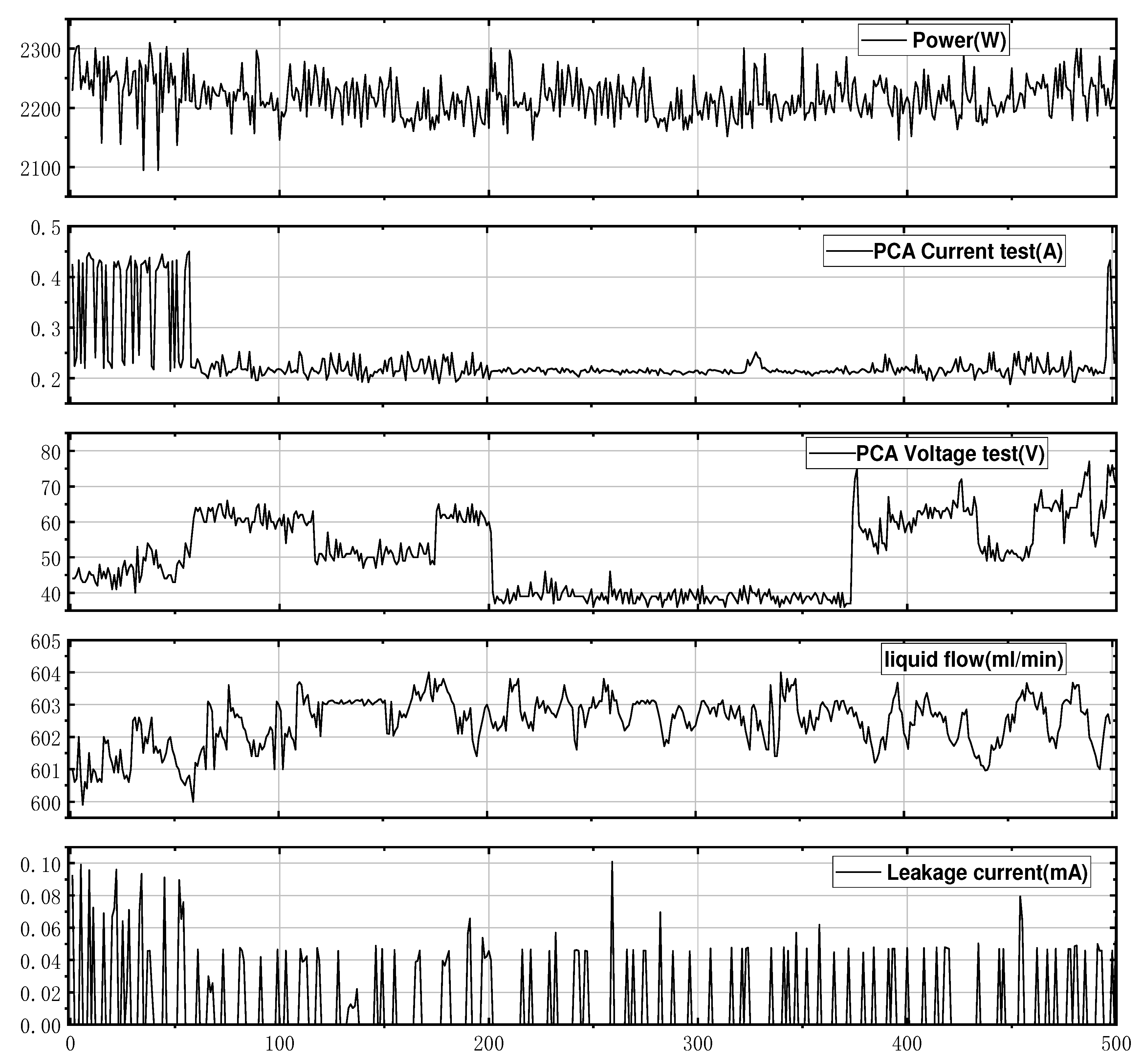

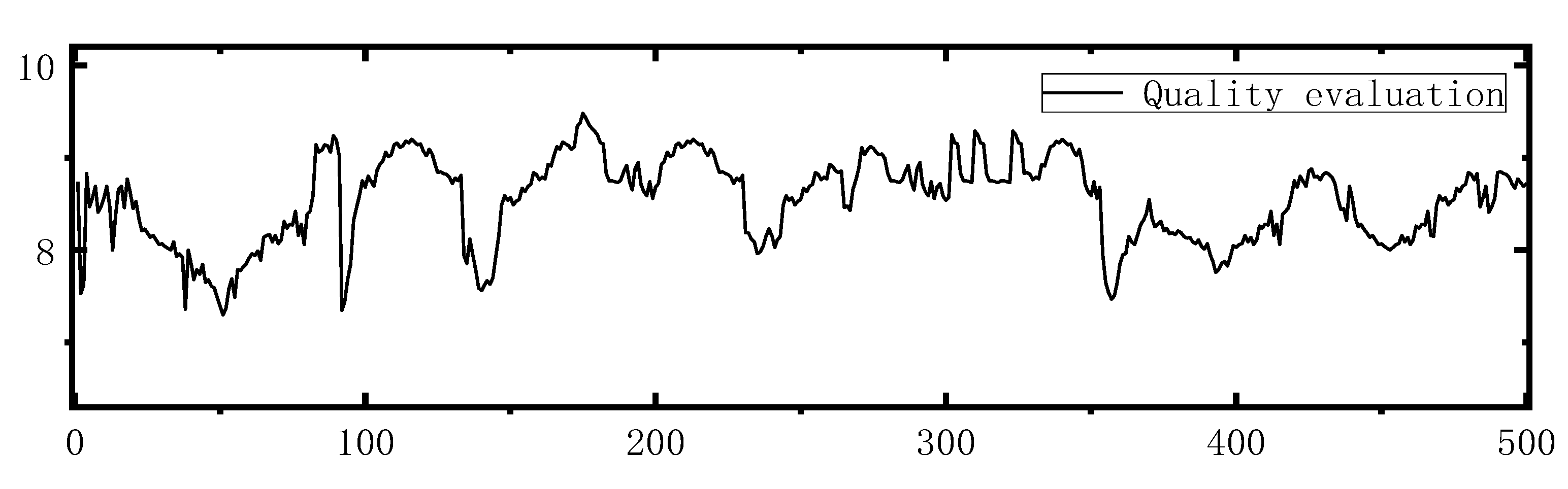

By traversing the gateway data of the drinking fountains produced by QIXI and extracting the semi-finished product stage data from the supply chain and the manufacturing execution system of the production line, we evaluated the quality level of 500 drinking fountains produced consecutively on the same line to discover quality divergence points. Because an LSTM model generally reflects only the time-series dynamics of the variables and cannot extract quality features, we extract the quality features at this stage using fuzzy theory. The exported real-time data files are mainly stored in JSON format, so we traverse each variable to prepare it for input into the model. After traversal, the tested semi-finished product variables of the water dispenser include power, PCA Current test, PCA Voltage test, flow output, and leakage current. Missing values are filled by interpolation. Data may be missing for many reasons, such as human error or sensor failure; the specific methods are described in [26].
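The traversal and gap-filling steps can be sketched as follows. This is a minimal illustration under assumptions: the exported file is taken to be a JSON array of per-product records, and the key names (`product_id`, `power`, `pca_current`, and so on) are hypothetical placeholders, since the schema is not given. Linear interpolation is used as one simple interpolation choice; the methods in [26] may differ.

```python
import json

import numpy as np

# Hypothetical field names; the real export schema is not given in the text.
FIELDS = ["power", "pca_current", "pca_voltage", "flow_output", "leakage_current"]


def records_to_matrix(json_text, fields=FIELDS):
    """Traverse per-product JSON records into an (n_products, n_fields) array.

    Records are ordered by the (hypothetical) "product_id" key, and a
    missing key or a JSON null becomes NaN so it can be filled later.
    """
    records = sorted(json.loads(json_text), key=lambda r: r["product_id"])
    rows = []
    for record in records:
        row = [record.get(name) for name in fields]
        rows.append([np.nan if value is None else float(value) for value in row])
    return np.array(rows, dtype=float)


def fill_missing_linear(column):
    """Fill NaNs in one variable by linear interpolation over product order.

    Gaps before the first or after the last observed value take the
    nearest observed value (np.interp's default end behaviour).
    """
    column = np.asarray(column, dtype=float).copy()
    missing = np.isnan(column)
    if missing.all():
        raise ValueError("cannot interpolate a column with no observed values")
    positions = np.arange(column.size)
    column[missing] = np.interp(positions[missing], positions[~missing], column[~missing])
    return column
```

Applying `fill_missing_linear` to each column of the matrix returned by `records_to_matrix` yields a complete array aligned by product order.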

Figure 4. Semi-finished product manufacturing data traversal.


2.2. LSTM prediction model

Traditional neural networks are fully connected from the input layer through the hidden layers to the output layer, but they lack connections between neurons within a layer, which can lead to large deviations when processing sequential data [27]. In contrast, recurrent neural networks (RNNs) contain connections between neurons within the hidden layers, so they can retain information from previous computations and use it in the current output. The input to the hidden layer consists of both the output of the input layer and the output of the hidden layer at the previous time step. RNNs are commonly trained on sequential data with the back-propagation through time (BPTT) algorithm, which moves the parameters along the negative gradient direction until convergence. During optimization, however, computing the partial derivative of the parameters at a given moment requires tracing back through all previous time steps, and the overall partial derivative is the sum over all time steps. Because the chain rule multiplies the derivatives of the activation functions across time steps, this product can shrink or grow exponentially, leading to the problems known as “gradient vanishing” and “gradient explosion” [28].
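The multiplicative effect can be seen with a deliberately simplified example: a scalar RNN with a sigmoid activation in which every time step's pre-activation is held at the same value, so the gradient factor from the last hidden state back to the first is one local derivative raised to the power of the number of steps. The function name and the fixed pre-activation are illustrative assumptions, not part of the paper's model.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def bptt_gradient_factor(weight: float, steps: int, pre_activation: float = 0.0) -> float:
    """Gradient factor dh_T/dh_0 for a toy scalar RNN h_t = sigmoid(weight * h_{t-1} + ...).

    Every step uses the same pre-activation, so the chain rule reduces to
    the same local derivative multiplied `steps` times.
    """
    s = sigmoid(pre_activation)
    local_derivative = weight * s * (1.0 - s)  # sigmoid'(z) = s(1 - s) <= 0.25
    return local_derivative ** steps


for weight in (1.0, 8.0):
    for steps in (5, 20, 50):
        print(f"weight={weight}, steps={steps}: {bptt_gradient_factor(weight, steps):.3e}")
```

With weight 1 the local derivative is 0.25 and the factor collapses toward zero (vanishing); with weight 8 the local derivative is 2 and the factor grows as 2 to the power of the number of steps (exploding).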

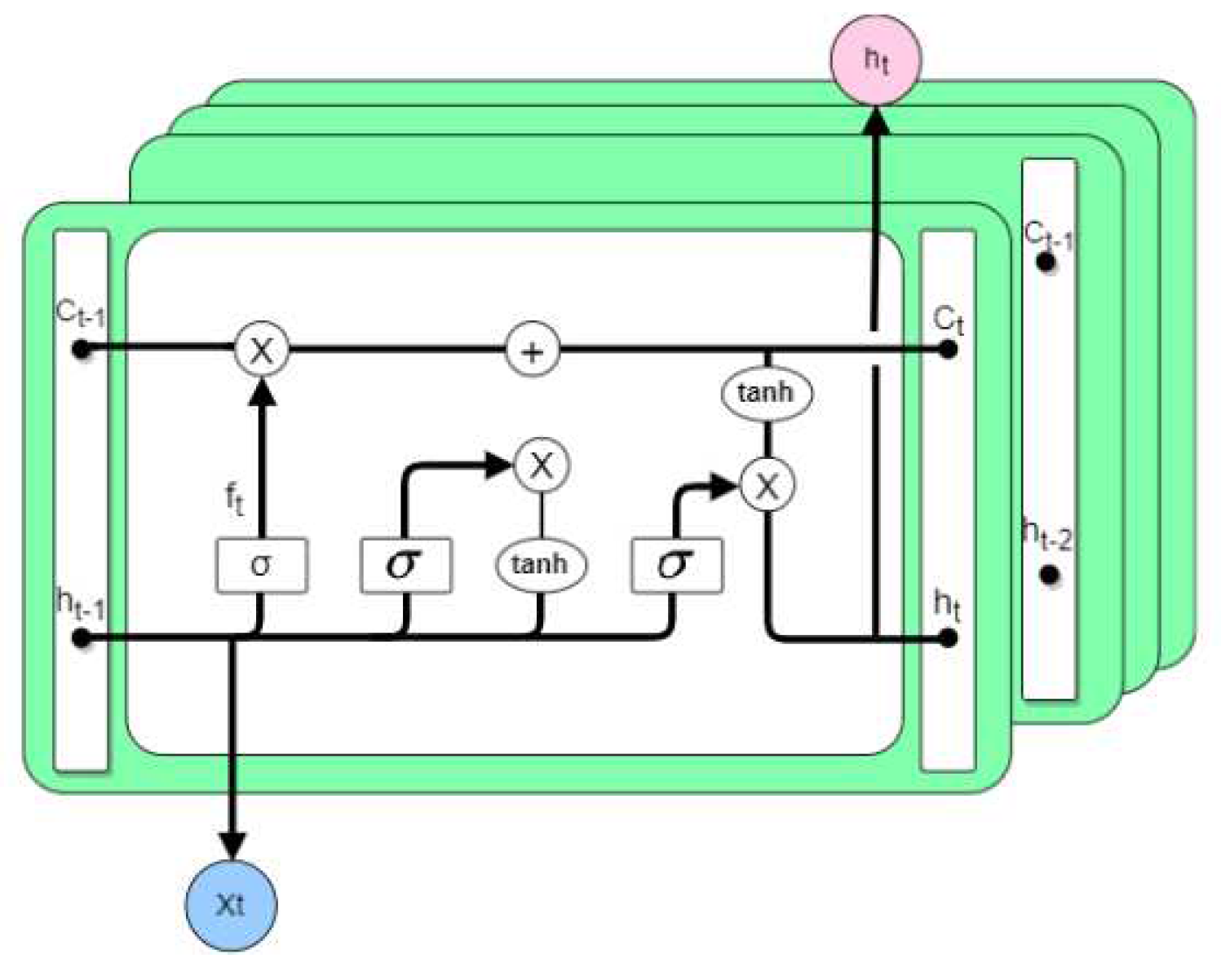

The LSTM is an improved recurrent neural network designed to extract temporal information and temporal features. Compared with the RNN, it introduces memory cells and a forget gate, so that information can be memorized selectively and noise can be filtered out, reducing the memory burden.

In Figure 2, the input gate learns the quality-related sequence, the forget gate controls which information is retained in the memory cell, and the output gate controls the output at the current time step. At time step t, the cell is updated as follows:
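The gate equations are not reproduced in this version of the text. For reference, the widely used LSTM formulation with a forget gate is given below; we assume, without confirmation from the text, that the paper's equations follow it. Here $x_t$ is the input at step $t$, $h_{t-1}$ the previous hidden state, $c_t$ the memory cell, $[\cdot,\cdot]$ concatenation, $\sigma$ the logistic sigmoid, and $\odot$ element-wise multiplication; these symbol names are our own.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c [h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

The forget gate $f_t$ scales how much of $c_{t-1}$ is kept, which is the selective memory described above, and the additive update of $c_t$ is what lets gradients pass through many steps without being repeatedly multiplied by activation derivatives.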

Like the RNN, the LSTM is updated by back-propagation. The LSTM can fit faster than the RNN, but it has many more parameters and therefore requires a large amount of training data.
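The remark about parameter counts can be made concrete. Each LSTM gate has the same weight and bias shapes as a single simple RNN layer, so an LSTM layer has four times as many parameters. The sketch below counts one bias vector per gate, as Keras does (PyTorch's `nn.LSTM` keeps two bias vectors per gate, so its count is slightly higher); the input width of 5 (one per test index) and 64 hidden units are illustrative assumptions, not the paper's settings.

```python
def rnn_params(input_dim: int, hidden: int) -> int:
    """Parameters of a simple RNN layer: W acting on [h_{t-1}, x_t] plus a bias."""
    return hidden * (hidden + input_dim) + hidden


def lstm_params(input_dim: int, hidden: int) -> int:
    """An LSTM layer repeats that block for the forget, input, candidate and output paths."""
    return 4 * rnn_params(input_dim, hidden)


# Illustrative sizes: 5 input features, 64 hidden units.
print(rnn_params(5, 64))   # 4480
print(lstm_params(5, 64))  # 17920
```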

Like the RNN, the LSTM network propagates errors backward: the error term propagates along the time series and between layers. After the gradients of the horizontal (recurrent) and vertical (input) weights and of the bias terms are obtained through the hidden-layer structure, each weight and bias term is updated. The calculation is the same as for the RNN, and the learning rate α controls the size of each gradient update and hence the speed at which the error decreases. To evaluate the prediction performance of the trained model, we introduce three evaluation metrics [39], defined as follows:

The root mean square error (RMSE) is the square root of the mean square error (MSE). The rationale is the same as for the standard deviation: the MSE is expressed in squared units, so its magnitude differs from that of the data and does not directly reflect the degree of dispersion. Taking the square root of the MSE therefore yields the RMSE, which is in the same units as the data:

The mean absolute error (MAE) is a commonly used regression loss that measures the average magnitude of the prediction errors irrespective of their direction. It is the mean of the absolute differences between the target and predicted values, as expressed in Equation 7:

Unlike the RMSE, the mean absolute percentage error (MAPE) is relatively insensitive to outliers, and it serves as a robust statistical indicator of prediction accuracy, as expressed in Equation 8:

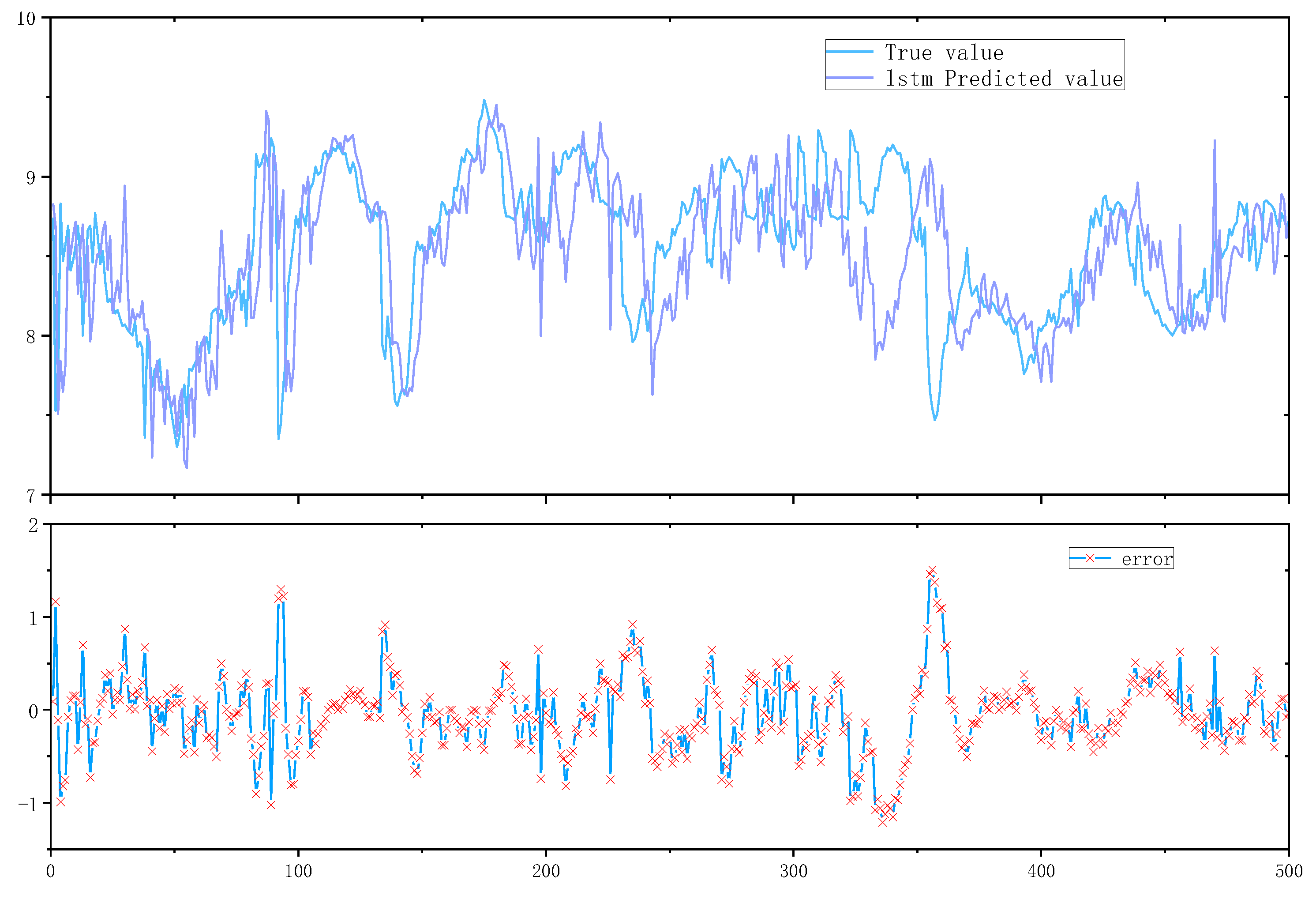

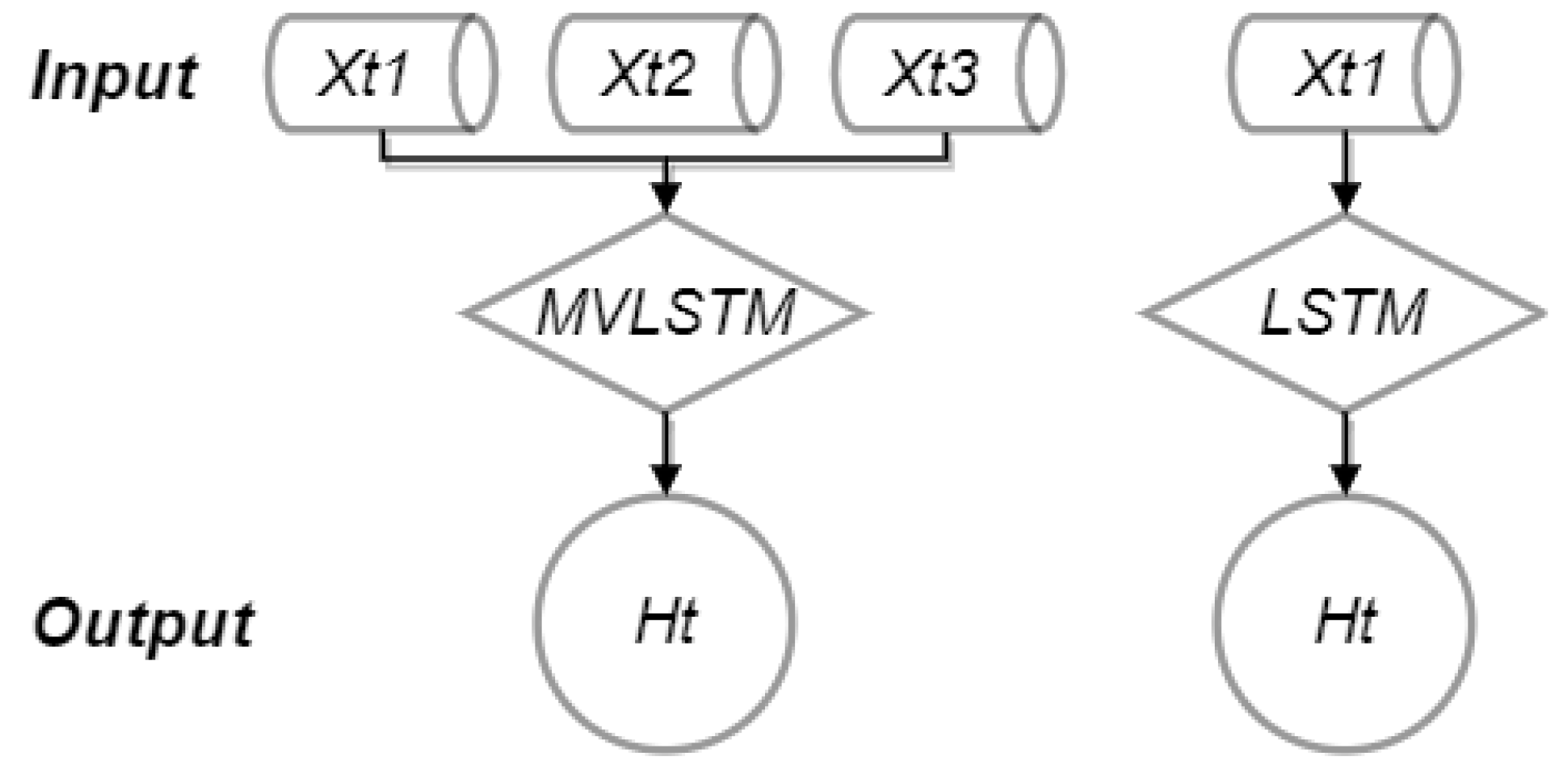
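The three metrics can be computed as in the following sketch. It assumes the usual definitions (RMSE and MAE averaged over the test samples, MAPE reported as a percentage); MAPE is undefined when a true value is zero, so the sketch rejects that case rather than guessing a convention.

```python
import numpy as np


def rmse(y_true, y_pred) -> float:
    """Root mean square error: square root of the mean squared error."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def mae(y_true, y_pred) -> float:
    """Mean absolute error: average error magnitude, ignoring its sign."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))


def mape(y_true, y_pred) -> float:
    """Mean absolute percentage error, in percent."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    if np.any(y_true == 0):
        raise ValueError("MAPE is undefined when a true value is zero")
    return float(np.mean(np.abs((y_true - y_pred) / y_true)) * 100.0)


y_true, y_pred = [2.0, 4.0, 5.0], [2.0, 5.0, 3.0]
print(rmse(y_true, y_pred))  # about 1.291
print(mae(y_true, y_pred))   # 1.0
print(mape(y_true, y_pred))  # about 21.67
```

Because RMSE squares each error before averaging, the single error of 2 dominates it more than it dominates MAE, which is why RMSE reacts more strongly to outlying predictions.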

The improved MV-LSTM prediction model is proposed to suit the production characteristics of discrete manufacturing; compared with the traditional LSTM, it expands the input layer to multiple dimensions. By learning the mapping between manufacturing parameters and quality characteristics from historical data, and by tuning the number of neurons, LSTM layers, and Dense layers, the model improves prediction accuracy so that quality divergence points can be found.

2.3. Data preprocessing

2.3.1. Data pre-processing

The 500 filtered product records were divided into two parts in production order, 80% as the training set and 20% as the test set, so that the temporal correlation of the manufacturing process is preserved. To help the model fit, we normalize the data so that the inputs lie between 0 and 1. The normalization formula is as follows:
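The formula itself is missing here. Reconstructed from the symbol definitions given with it, the min-max normalization reads:

```latex
x_t^{i} = \frac{x_i - x_{\min}}{x_{\max} - x_{\min}}
```

Because $x_{\max}$ and $x_{\min}$ are taken from the training set, test values outside the training range map slightly outside $[0, 1]$.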

The normalized feature value for sequence i is denoted by $x_t^i$. The maximum and minimum values of a given feature in the training dataset are denoted by $x_{\max}$ and $x_{\min}$, respectively, and the actual value of sequence i is denoted by $x_i$. The power, PCA Current test, PCA Voltage test, flow output, and leakage current were all normalized to the range 0–1 using this equation.
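A minimal sketch of the split and normalization, assuming the 500 products are stored as rows of a NumPy array in production order (the function names and the random placeholder data are hypothetical). The minimum and maximum are taken from the training rows only, as described above, and then applied unchanged to the test rows.

```python
import numpy as np


def chronological_split(data: np.ndarray, train_fraction: float = 0.8):
    """Split rows in production order: earlier products train, later products test."""
    n_train = int(round(len(data) * train_fraction))
    return data[:n_train], data[n_train:]


def fit_minmax(train: np.ndarray):
    """Per-feature x_min and x_max, computed on the training set only."""
    return train.min(axis=0), train.max(axis=0)


def apply_minmax(x: np.ndarray, x_min: np.ndarray, x_max: np.ndarray) -> np.ndarray:
    """(x - x_min) / (x_max - x_min), guarding against constant features."""
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    return (x - x_min) / span


# Placeholder data: 500 products x 5 test indices (not real measurements).
rng = np.random.default_rng(0)
data = rng.normal(size=(500, 5))
train, test = chronological_split(data)
x_min, x_max = fit_minmax(train)
train_scaled = apply_minmax(train, x_min, x_max)
test_scaled = apply_minmax(test, x_min, x_max)
```

Fitting the scaling on the training rows only keeps information about later products out of the training step.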

2.3.2. Model construction

Two models are considered: a univariate LSTM, which predicts the temporal characteristics of a single column of data $x_i$, and the MV-LSTM, which extends the one-dimensional $x_i$ data to multiple dimensions. The secondary indicators that strongly influence the quality characteristics, and thus carry the main influences on the objective function, are fed into the MV-LSTM.

The MV-LSTM network is constructed with the number of LSTM layers, the number of Dense layers, and the number of neurons as the parameters that regulate prediction performance, and the relationship between the target and the acquired variables can be expressed as follows:

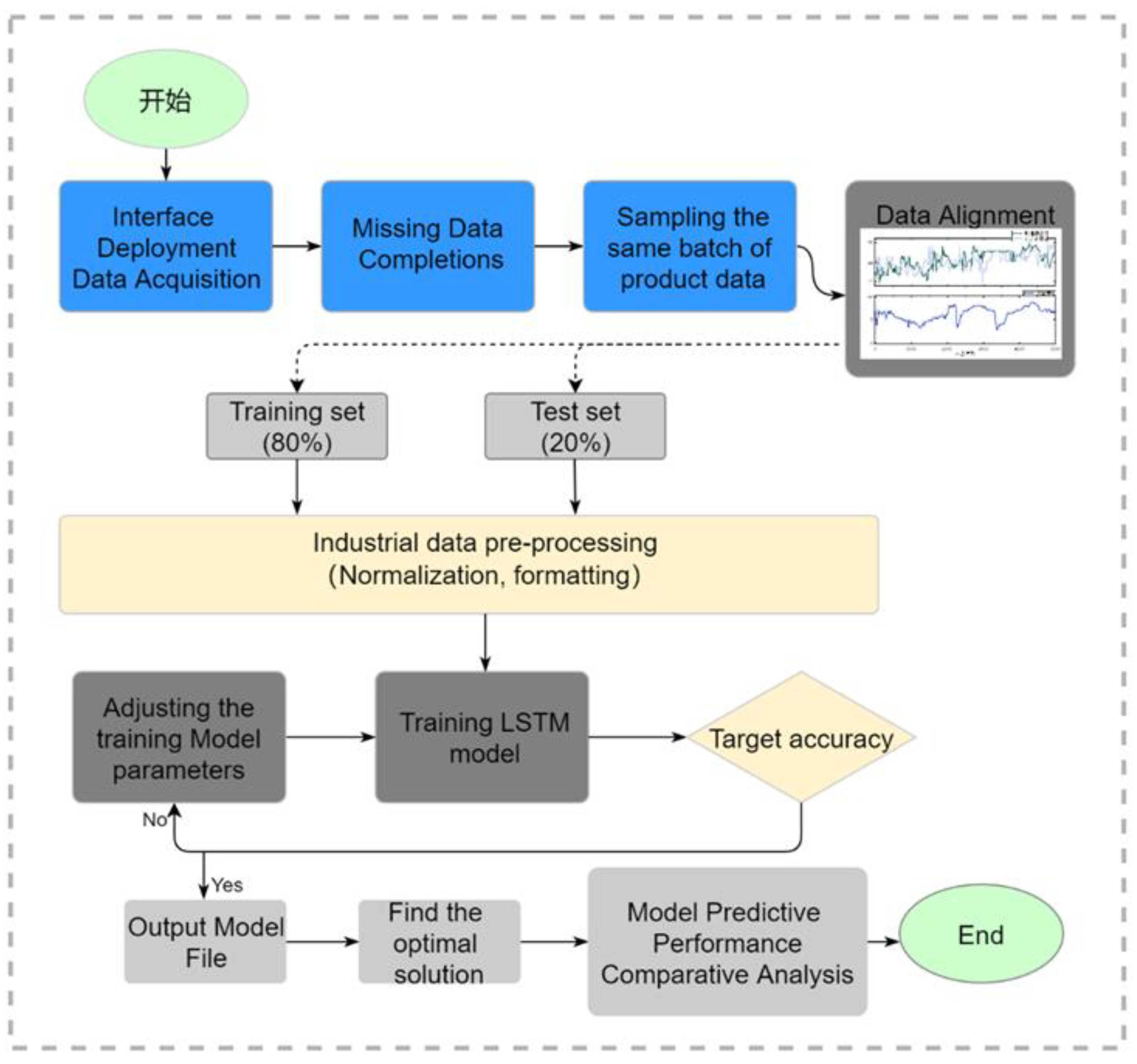

The Adam algorithm is used to optimize prediction performance, and hyperparameters are tuned by specifying candidate values, training a model for each specified combination, and selecting the best-performing solution. The tuned variables are the number of LSTM hidden layers, the number of Dense hidden layers, the number of neurons, and the memory step; different models are trained over these parameters with RMSE as the loss function, and the optimal parameters are finally determined. The main process of the model is shown in Figure 4.

Figure 4. Framework of LSTM network method for product production stage quality prediction.

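The hyperparameter search described above can be sketched as an exhaustive grid search, which is our reading of training every specified combination. The candidate values below are hypothetical (the search ranges are not listed), and `toy_rmse` is a stand-in for the real step of training an MV-LSTM with Adam and returning its RMSE, which is not reproduced here.

```python
from itertools import product

# Hypothetical candidate values; the search ranges are not given in the text.
SEARCH_SPACE = {
    "lstm_layers": [1, 2],
    "dense_layers": [1, 2],
    "units": [32, 64],
    "memory_step": [5, 10],
}


def grid_search(evaluate, space):
    """Evaluate every combination in `space` and keep the lowest RMSE."""
    names = list(space)
    best_params, best_score = None, float("inf")
    for values in product(*(space[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_rmse(lstm_layers, dense_layers, units, memory_step):
    """Hypothetical stand-in for 'train an MV-LSTM with Adam and return test RMSE'."""
    return abs(units - 64) / 64 + abs(memory_step - 10) / 10 + 0.1 * lstm_layers + 0.05 * dense_layers


best_params, best_score = grid_search(toy_rmse, SEARCH_SPACE)
print(best_params, round(best_score, 3))
```

The cost grows as the product of the candidate-list lengths (16 trainings here), which is why grids for LSTM tuning are usually kept small.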

A gateway is deployed to collect and analyze the manufacturing data of drinking fountains produced consecutively on a single production line. Missing values are filled, and the records are aligned by product sequence number. Data for 400 sequential products are used as the training set and data for the remaining 100 products as the test set. The data are normalized and formatted for input into the model. The LSTM parameters are trained on the 400 training products, and the optimal prediction is obtained by tuning the parameters. Once the prediction results have been tuned, the parameter file is exported. Finally, the best method for the drinking water line production data is determined through comparative analysis.
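Formatting the normalized data for the model typically means cutting it into sliding windows whose length equals the memory step. The sketch below assumes, as one plausible reading of the text, that each window of consecutive products is used to predict the quality target of the next product; the function name and the target definition are assumptions. The resulting arrays have the (samples, time steps, features) shape expected by common LSTM layers such as Keras `LSTM`.

```python
import numpy as np


def make_windows(features: np.ndarray, target: np.ndarray, memory_step: int):
    """Cut a product-ordered series into LSTM inputs.

    X[k] holds the feature rows of products k .. k + memory_step - 1 and
    y[k] is the (assumed) quality target of product k + memory_step.
    """
    if len(features) != len(target):
        raise ValueError("features and target must have the same number of products")
    n_windows = len(features) - memory_step
    if n_windows <= 0:
        raise ValueError("memory_step must be smaller than the number of products")
    X = np.stack([features[k:k + memory_step] for k in range(n_windows)])
    y = np.asarray(target)[memory_step:]
    return X, y  # X: (samples, memory_step, n_features), y: (samples,)
```

For the univariate LSTM, `features` would have a single column; for the MV-LSTM, one column per test index.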

4. Conclusion

This study employs a quality assessment approach to analyze product quality in light of the inherent risks present throughout the production process. Given the influence of uncertainty and complexity on product quality at the production level, a fuzzy evaluation method is utilized to establish the relationship between each testing index and quality. This is achieved by constructing a multivariate input prediction model for stage quality prediction, which facilitates timely product rework and reduces factory costs.

In the factory production process, the QC module that collects inspection data in the manufacturing execution system is extended with prediction capabilities. This supports production companies' improvement activities and enables early detection of product quality issues. The proposed model is validated using the QIXI production line as a case study, demonstrating that the multivariate long short-term memory model fits nonlinear data well and is well suited to the systematic transformation of the production line.

Many manufacturers strive to strengthen quality improvement activities to prevent defective products from leaving the factory and damaging their reputation. Meeting this demand requires a higher level of quality prediction, because failed predictions lead to poor decisions. The model used in this paper achieves higher accuracy than traditional methods and facilitates enterprise quality management and risk control. In the future, manufacturing execution systems will trend toward greater intelligence, leveraging big data and deep learning to manage the production of key equipment and assess production levels at each stage. This will enable enterprises to identify quality divergence points earlier.