Submitted: 19 June 2023

Posted: 22 June 2023

Abstract

Keywords:

1. Introduction

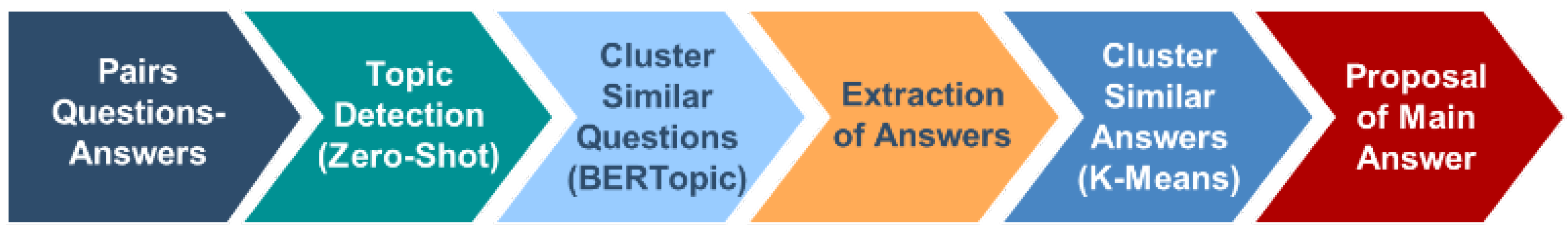

2. Architecture

2.1. Zero-Shot Topic Classification

2.2. Detection of Response Inconsistencies

3. Data Annotations

3.1. Zero-Shot Topic Classification

3.2. Detection of Response Inconsistencies

4. Results

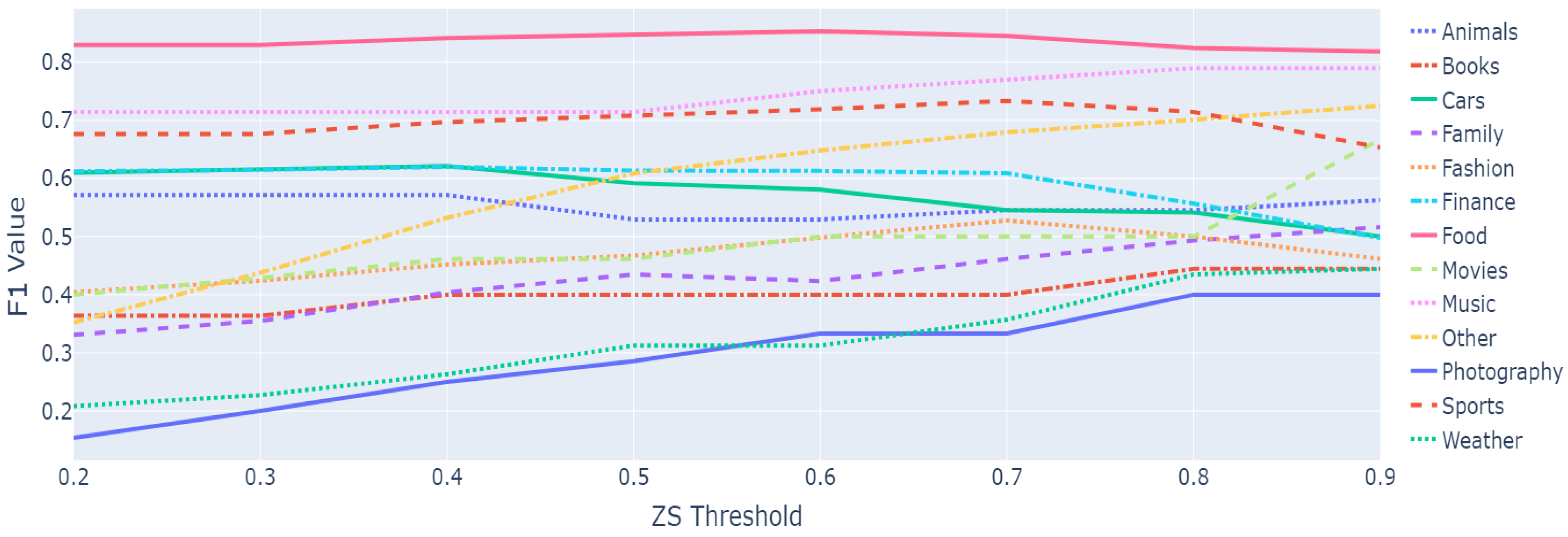

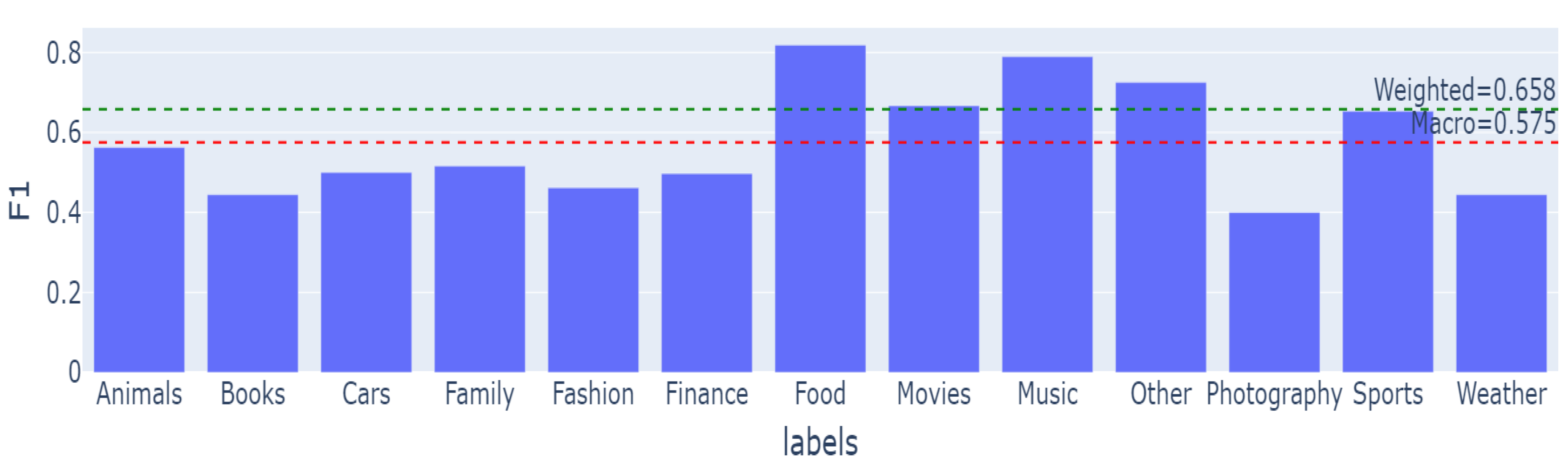

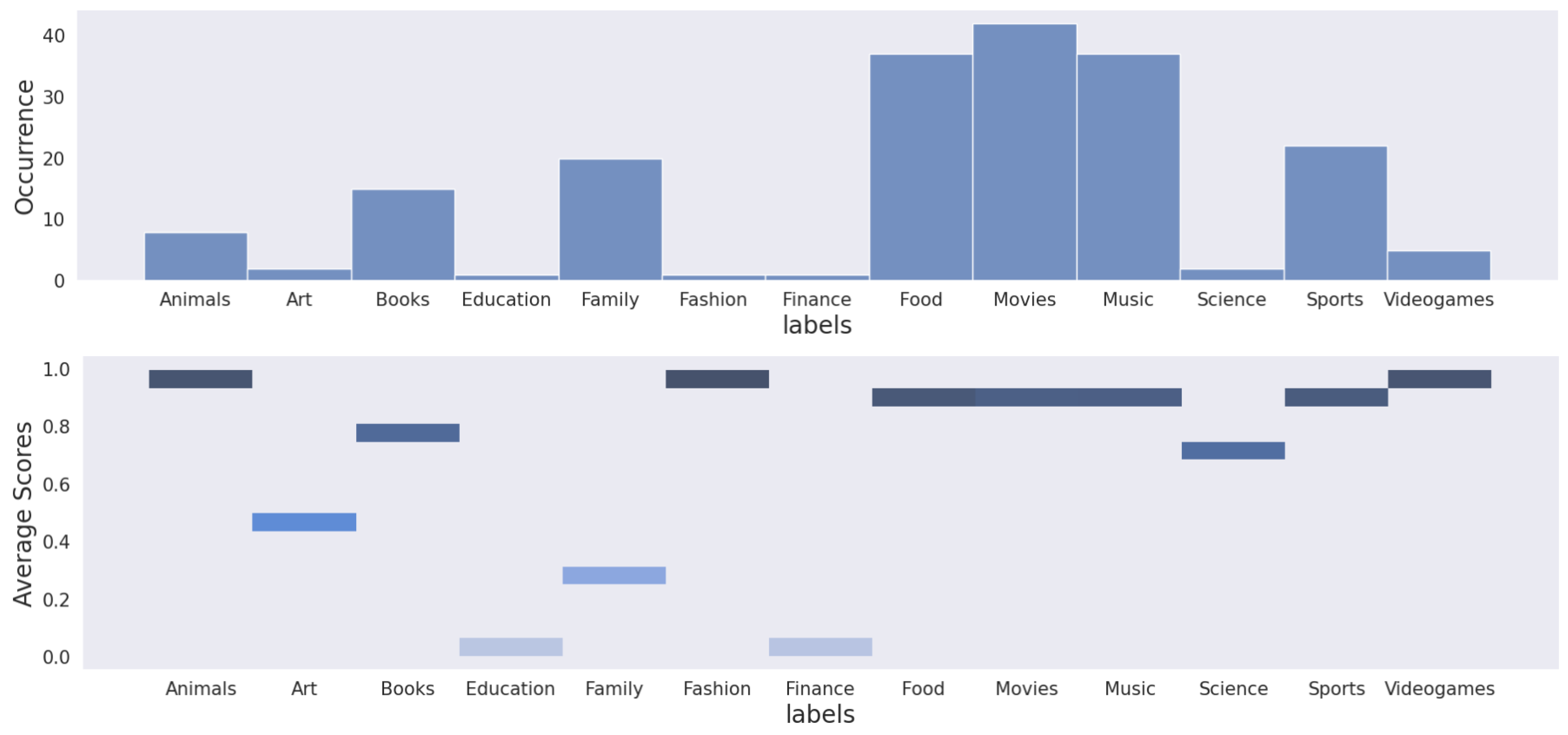

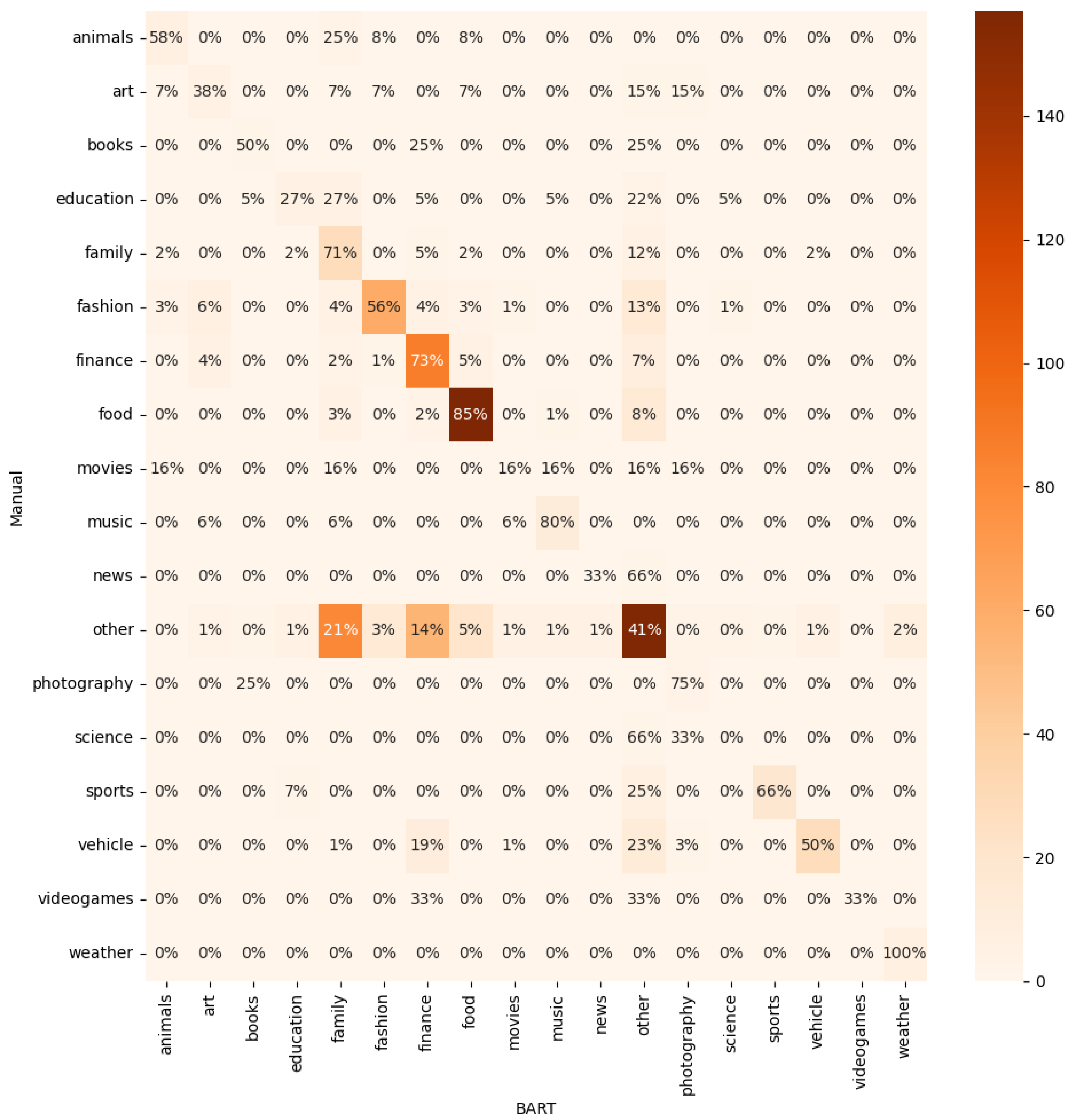

4.1. Zero-Shot Topic Classification

4.1.1. Original Results on DailyDialog - Topic Level

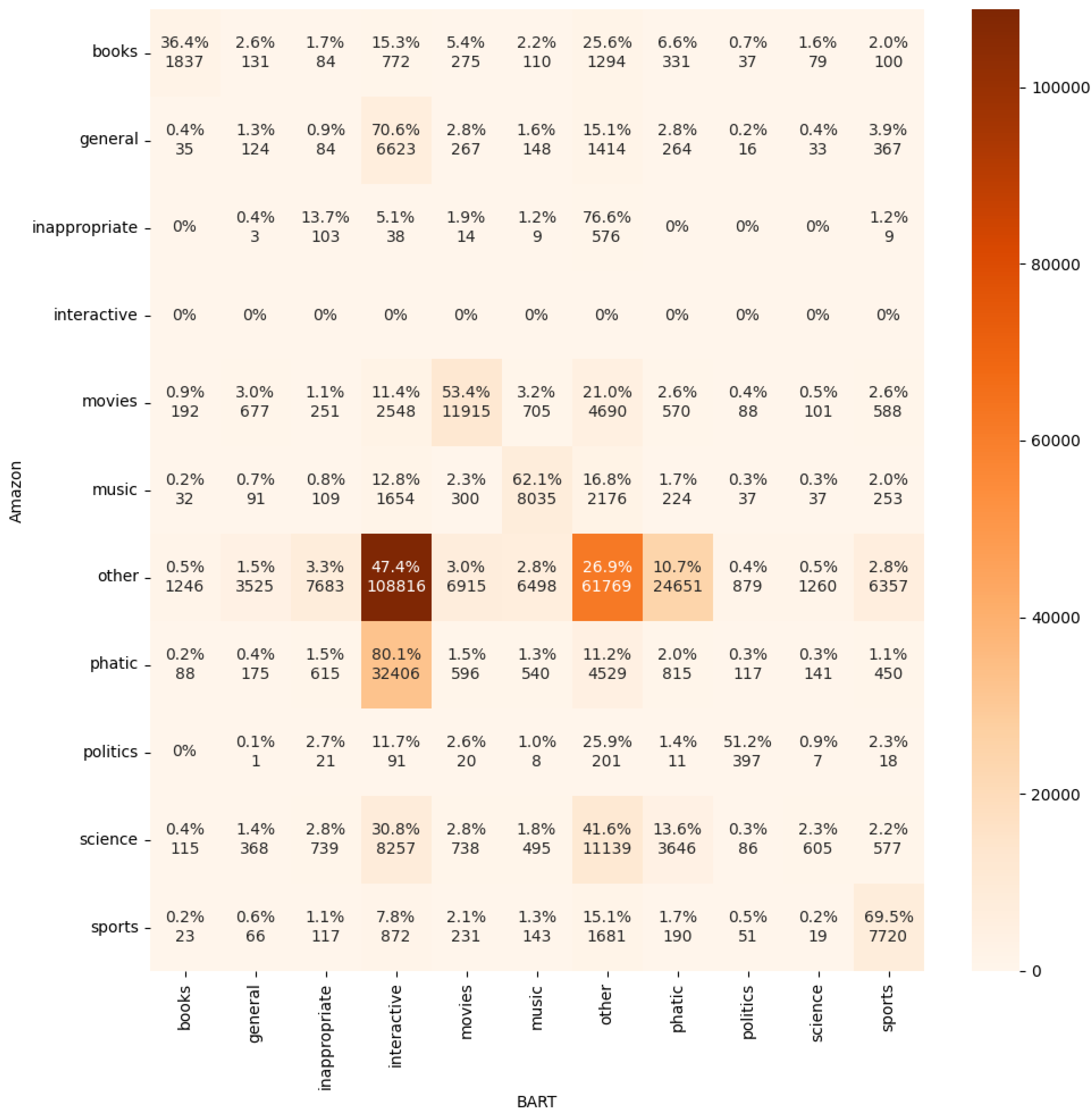

4.1.2. New Results on SGC5 - Topic and Subtopic Level

Topic-level scores:

- 2: Only the BART topic is correct.
- 1: Only the CoBot topic is correct.
- 0: Both the BART and the CoBot topics are correct.
- -1: Neither the BART nor the CoBot topic is correct.

Subtopic-level scores:

- 1: The BART subtopic is correct.
- 0: The BART subtopic is not correct.
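As a rough illustration of how the topic-level scores above can be turned into per-classifier accuracies, the sketch below counts a sample as a BART hit when its score is 2 or 0, and as a CoBot hit when its score is 1 or 0. The counts are the topic-level hits reported in the scores table of this document (82, 3, 101 and 14 out of 200). The function name `topic_accuracies` is hypothetical, and reading the scores this way is an assumption rather than the authors' stated procedure.

```python
def topic_accuracies(score_counts):
    """Derive per-classifier accuracy (%) from topic-level annotation scores.

    Assumed reading of the scores: 2 = only BART correct, 1 = only CoBot
    correct, 0 = both correct, -1 = neither correct.
    """
    total = sum(score_counts.values())
    if total == 0:
        raise ValueError("no annotated samples")
    bart_hits = score_counts.get(2, 0) + score_counts.get(0, 0)
    cobot_hits = score_counts.get(1, 0) + score_counts.get(0, 0)
    return {
        "bart": 100 * bart_hits / total,
        "cobot": 100 * cobot_hits / total,
    }


# Topic-level hit counts taken from the scores table in this document.
counts = {2: 82, 1: 3, 0: 101, -1: 14}
acc = topic_accuracies(counts)
print(acc)  # {'bart': 91.5, 'cobot': 52.0}
```

Under this reading, the "both correct" samples count towards each classifier, so the two percentages do not sum to 100.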

4.1.3. New Results on DailyDialog - Topic and Subtopic Level

4.2. BART Classifier Latency

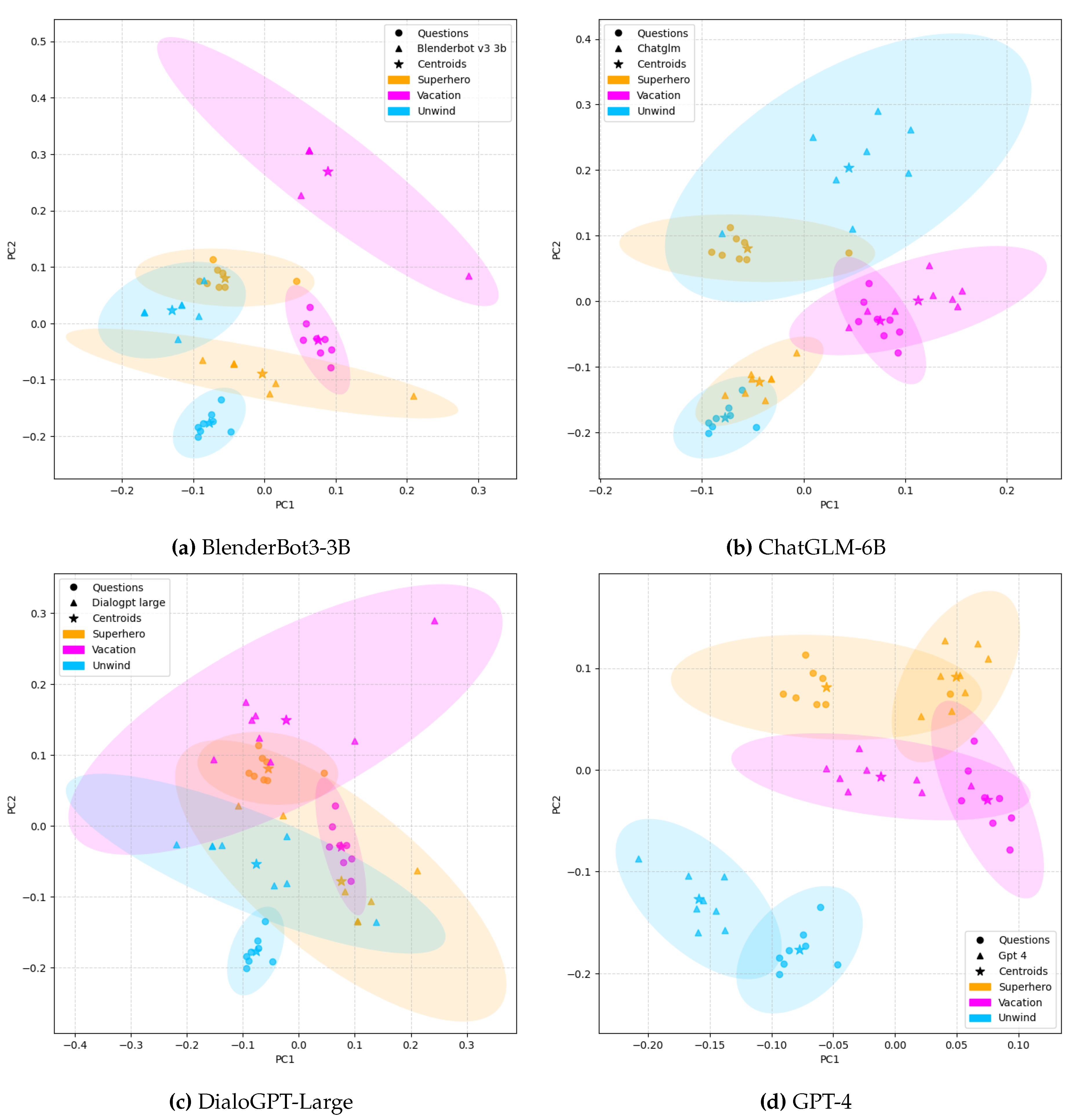

4.3. Detection of Response Inconsistencies

5. Conclusions and Future Work

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1

| Superhero Topic Questions | GPT-4 Model Responses |
|---|---|
| Original: Who is your favorite superhero? | My favorite superhero is definitely Spider-Man because of his relatable struggles and character development through the years. |
| Paraphrase: Which superhero do you hold as your favorite? | I really enjoy Spider-Man because of his relatable struggles and witty humor, plus the iconic red and blue costume. |
| Paraphrase: What superhero character do you admire the most? | I personally admire Spider-Man the most because of his relatability and sense of responsibility towards his community. |
| Paraphrase: Who is the superhero you resonate with the most? | I really resonate with Spider-Man because he’s relatable, deals with everyday struggles, and always tries to do what’s right. |
| Paraphrase: What is your preferred superhero of all time? | My all-time favorite superhero is Spider-Man because of his relatability and the way he balances his personal life with heroism. |
| Paraphrase: Which fictional hero holds a special place in your heart? | Harry Potter has a special place in my heart because of his bravery, growth, and the magical world he introduced me to. |
| Paraphrase: Who is the superhero that you find most inspiring? | My all-time favorite superhero is Spider-Man because he teaches us the importance of responsibility and resilience in tough situations. |
| Paraphrase: What superhero character do you consider your favorite? | I absolutely love Spider-Man because of his relatability, witty humor, and the way he deals with everyday challenges. |
| Average Annotation: 2.0 | Average Prediction: 3.0 |

| Vacation Topic Questions | GPT-4 Model Responses |
|---|---|
| Original: What is your dream vacation destination? | My dream vacation destination would be exploring the beautiful landscapes of New Zealand and experiencing its rich culture. |
| Paraphrase: Where is your ultimate dream vacation spot? | My ultimate dream vacation spot would be Bora Bora, relaxing on a beautiful overwater bungalow and enjoying the crystal clear water. |
| Paraphrase: What destination do you fantasize about for a perfect vacation? | I dream about relaxing on a beautiful tropical beach like Bali or Maldives, with warm turquoise water and palm trees. |
| Paraphrase: Which place would be your dream getaway location? | My dream getaway location would be the Amalfi Coast in Italy, with its beautiful scenery, charming towns, and delicious cuisine. |
| Paraphrase: If you could choose any place for a dream vacation, where would it be? | I’d absolutely love to visit New Zealand for a dream vacation, immersing myself in its breathtaking landscapes and rich culture. |
| Paraphrase: What is the vacation destination you yearn to visit the most? | I’ve always dreamed of visiting the Maldives, spending my days relaxing on the sandy beaches and swimming in crystal-clear waters. |
| Paraphrase: Where would you love to go on your dream vacation? | I would love to go to Bora Bora for my dream vacation, staying in an overwater bungalow and enjoying the beautiful beaches! |
| Paraphrase: Which location tops your list for an ideal vacation spot? | I absolutely love the idea of vacationing in Santorini, Greece, with its beautiful beaches, stunning sunsets, and unique architecture. |
| Average Annotation: 5.0 | Average Prediction: 4.0 |

| Unwind Topic Questions | GPT-4 Model Responses |
|---|---|
| Original: How do you like to unwind after a long day? | I love to relax with a good book, watch my favorite shows on Netflix, or enjoy a soothing cup of tea. |
| Paraphrase: What is your preferred method of relaxation after a tiring day? | I really enjoy winding down with a good book, cozying up on the couch, and sipping on some calming chamomile tea. |
| Paraphrase: How do you like to de-stress and unwind following a long day? | I love curling up with a good book, watching a movie, or taking a bubble bath to de-stress after a long day. |
| Paraphrase: What activities do you find most rejuvenating after a busy day? | After a busy day, I find relaxing with a good book, watching a movie, or going for a walk outside to be most rejuvenating. |
| Paraphrase: How do you prefer to unwind and recharge after a hectic day? | I love curling up with a good book, taking a warm bath, or watching my favorite TV show to relax after a long day! |
| Paraphrase: What is your go-to way of unwinding after a long and exhausting day? | I love taking a warm bath, listening to soothing music, and practicing mindfulness meditation to unwind after a tough day. |
| Paraphrase: What helps you relax and find peace of mind after a demanding day? | I love taking a warm bath, listening to soothing music, and practicing mindfulness meditation to unwind after a tough day. |
| Paraphrase: How do you like to wind down and find tranquility at the end of a long day? | I like to relax by reading a good book, listening to soothing music, or taking a warm bath to find tranquility. |
| Average Annotation: 5.0 | Average Prediction: 4.0 |

| Proposed Topics List | |
|---|---|
| Topics | Subtopics |
| animals | cats, dogs, pets. |
| art | ballet, cinema, museum, painting, theater. |
| books | author, genre, harry potter, plot, title. |
| education | history, mark, professor, school, subject, university. |
| family | parents, friends, relatives, marriage, children. |
| fashion | catwalk, clothes, design, dress, footwear, jewel, model. |
| finance | benefits, bitcoins, buy, finances, investment, sell, stock market, taxes. |
| food | drinks, fish, healthy, meal, meat, vegetables, dessert. |
| movies | actor, director, genre, plot, synopsis, title. |
| music | band, dance, genre, lyrics, rhythm, singer, song. |
| news | exclusive, fake news, interview, press, trending. |
| photography | camera, lens, light, optics, zoom. |
| science | math, nature, physics, robots, space. |
| sports | baseball, basketball, coach, exercise, football, player, soccer, tennis. |
| vehicle | bike, boat, car, failure, fuel, parts, plane, public transport, speed. |
| videogames | arcade, computer, console, nintendo, play station, xbox. |
| weather | cloudy, cold, hot, raining, sunny. |
| other | |

| CoBot Topics List | | | |
|---|---|---|---|
| 1. books | 2. general | 3. interactive | 4. inappropriate |
| 5. movies | 6. music | 7. phatic | 8. politics |
| 9. science | 10. sports | 11. other | |

| Question | Paraphrases |
|---|---|
| What is your favorite sport? | Which is the sport you like the most? |
| | My favorite sport is basketball, and yours? |
| | What kind of sport do you like? |
| What is your favorite book? | What is the title of your favorite book? |
| | Which book you always like to read? |
| | Hi!! I like reading, which book is your favorite one? |
| What is your job? | What do you do for a living? |
| | What do you do for work? |

| Question | Paraphrases |
|---|---|
| What is your favorite hobby? | What leisure activity do you enjoy the most? |
| | Which pastime brings you the most satisfaction? |
| | What is the hobby that you find most appealing? |
| Who is your favorite superhero? | What superhero character do you admire the most? |
| | What is your preferred superhero of all time? |
| | Which fictional hero holds a special place in your heart? |
| What’s your favorite type of cuisine? | Which cuisine do you find most appealing? |
| | Which type of cooking brings you the greatest delight? |
| | What style of food do you consider your favorite? |

| Model | Accuracy (%) | F1 Score | Precision | Recall |
|---|---|---|---|---|
| cross-encoder/nli-deberta-v3-base | 10.08 | 0.08 | 0.22 | 0.15 |
| cross-encoder/nli-deberta-v3-large | 7.55 | 0.06 | 0.18 | 0.15 |
| facebook/bart-large-mnli | 25.98 | 0.26 | 0.30 | 0.29 |
| typeform/distilbert-base-uncased-mnli | 0.83 | 0.01 | 0.09 | 0.02 |

| Scores | Topic level: Hits | Topic level: Accuracy (%) | Subtopic level: Hits | Subtopic level: Accuracy (%) |
|---|---|---|---|---|
| 2 | 82/200 | 41 | - | - |
| 1 | 3/200 | 1.5 | 143/200 | 71.5 |
| 0 | 101/200 | 50.5 | 57/200 | 28.5 |
| -1 | 14/200 | 7 | - | - |

| Speaker | Sentence | Topic | Subtopic |
|---|---|---|---|
| Human1: | I know. I’m starting a new diet the day after tomorrow. | food | healthy |
| Human2: | It’s about time. | | |
| Human1: | I have something important to do, can you fast the speed? | vehicle | speed |
| Human2: | Sure, I’ll try my best. Here we are. | | |
| Human1: | Do you know this song? | music | song |
| Human2: | Yes, I like it very much. | | |
| Human1: | Where are you going to find one? | other | - |
| Human2: | I have no idea. | | |
| Human1: | I wish to buy a diamond ring. | finance | investment |
| Human2: | How many carats diamond do you want? | | |
| Human1: | It’s a kitty. | animals | pets |
| Human2: | Oh, Jim. I told you. No pets. It’ll make a mess of this house. | | |

| Level | Accuracy (%) | F1 Score | Precision | Recall |
|---|---|---|---|---|
| Topics | 57.90 | 0.45 | 0.43 | 0.53 |
| Subtopics | 88.95 | 0.67 | 0.70 | 0.68 |

| Dataset | No. of Labels | Average Time (ms) |
|---|---|---|
| SGC5 | 11 | 27 |
| DailyDialog Topic | 18 | 39 |
| DailyDialog Subtopic | 6 | 19 |

| Chatbot | Avg. No. Responses | Avg. Predicted | MSE |
|---|---|---|---|
| Seeker | 4.0±1.7 | 4.0±1.7 | 3.1 |
| DialoGPT-Large | 4.3±2.1 | 3.1±2.0 | 5.4 |
| BlenderBot2 (400M) | 4.0±1.6 | 4.6±2.2 | 2.4 |
| BlenderBot2 (2.7B) | 3.7±1.6 | 3.3±1.9 | 2.8 |
| Overall | 4.0±1.7 | 3.8±2.0 | 3.4 |

| Chatbot | Avg. No. Responses | Avg. Predicted | MSE |
|---|---|---|---|
| ChatGLM | 4.6±1.4 | 3.8±0.8 | 3.2 |
| BlenderBot3 (3B) | 3.7±1.2 | 2.5±0.7 | 2.9 |
| GPT-4 | 3.4±1.1 | 3.5±0.8 | 1.8 |
| DialoGPT-Large | 5.1±1.5 | 3.3±0.9 | 5.0 |
| Overall | 4.2±1.3 | 3.3±0.8 | 3.3 |

| Chatbot | Avg. No. Responses | Avg. Predicted | MSE |
|---|---|---|---|
| ChatGLM | 4.6±1.4 | 3.7±0.8 | 3.1 |
| BlenderBot3 (3B) | 3.7±1.2 | 2.5±0.8 | 3.5 |
| GPT-4 | 3.4±1.1 | 3.5±0.7 | 1.6 |
| DialoGPT-Large | 5.1±1.5 | 3.4±1.0 | 4.7 |
| Overall | 4.2±1.3 | 3.3±0.9 | 3.2 |

| Type of Question | BlenderBot3 (Human) | BlenderBot3 (Pred.) | ChatGLM (Human) | ChatGLM (Pred.) | DialoGPT-Large (Human) | DialoGPT-Large (Pred.) | GPT-4 (Human) | GPT-4 (Pred.) |
|---|---|---|---|---|---|---|---|---|
| Vacation | 3.0 | 2.0 | 3.0 | 3.0 | 4.0 | 2.0 | 2.0 | 3.0 |
| Superhero | 2.0 | 2.0 | 5.0 | 5.0 | 5.0 | 4.0 | 5.0 | 4.0 |
| Unwind | 5.0 | 3.0 | 5.0 | 4.0 | 6.0 | 4.0 | 3.0 | 2.0 |
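To make the MSE columns in the chatbot tables easier to interpret, the following minimal sketch computes a mean squared error between human-annotated and predicted numbers of distinct responses. It uses only the three GPT-4 rows of the table above, so the result is not expected to match the reported MSE values, which are presumably computed over the full question set. The helper `mean_squared_error` is a hypothetical name, and the assumption is that the standard MSE definition applies.

```python
def mean_squared_error(human, predicted):
    """Standard MSE between two equally long sequences of numbers."""
    if len(human) != len(predicted) or not human:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((h - p) ** 2 for h, p in zip(human, predicted)) / len(human)


# GPT-4 columns of the table above, in row order: Vacation, Superhero, Unwind.
gpt4_human = [2.0, 5.0, 3.0]
gpt4_pred = [3.0, 4.0, 2.0]

mse = mean_squared_error(gpt4_human, gpt4_pred)
print(mse)  # 1.0
```

Each of the three predictions is off by exactly one response, so every squared error is 1 and the mean is 1.0.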

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).