Submitted: 05 June 2023

Posted: 06 June 2023


Abstract

Keywords:

1. Introduction

1.1. Research Contribution

- Investigation of Multus-Medium reviews, using the discriminative and unique features of both text and images to recommend better products.

- A BiLSTM-embedded CNN and feature fusion combined with a sentiment analytic model. The proposed MMOM produces better recommendations.

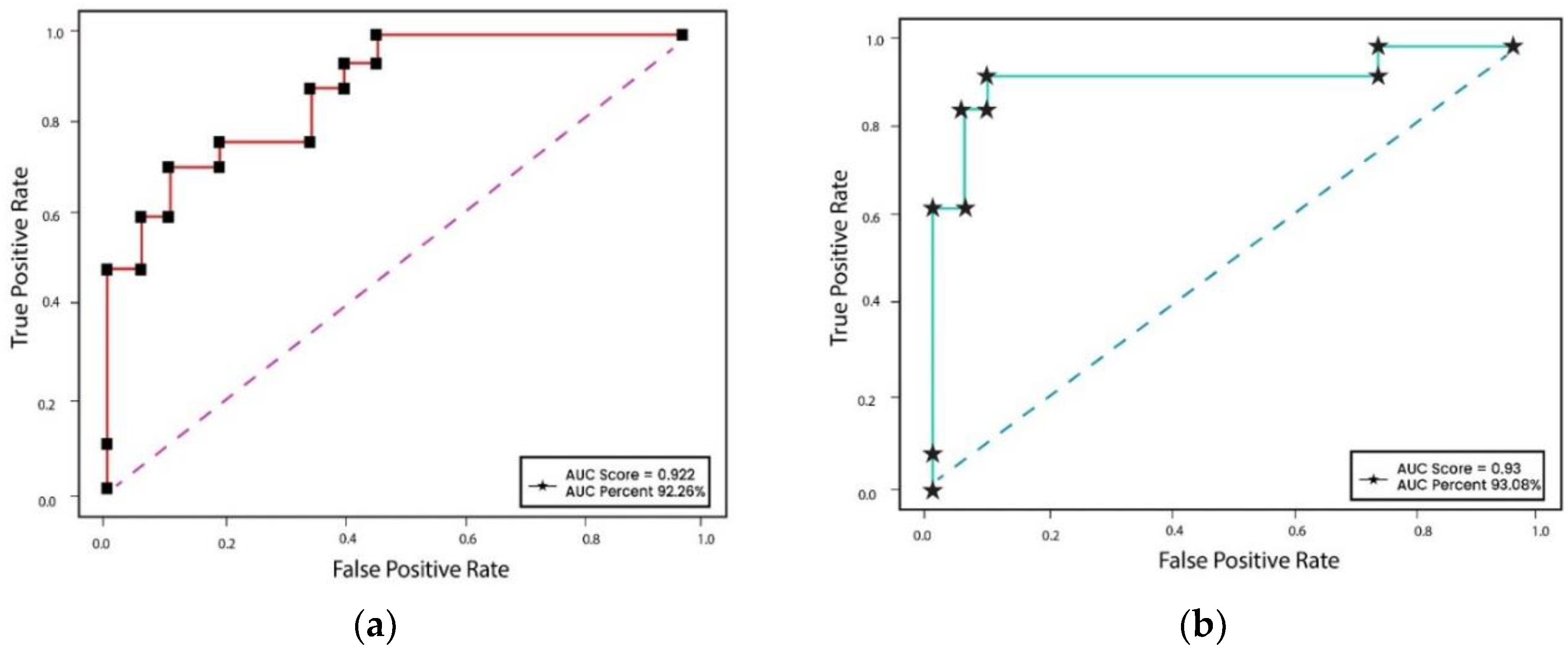

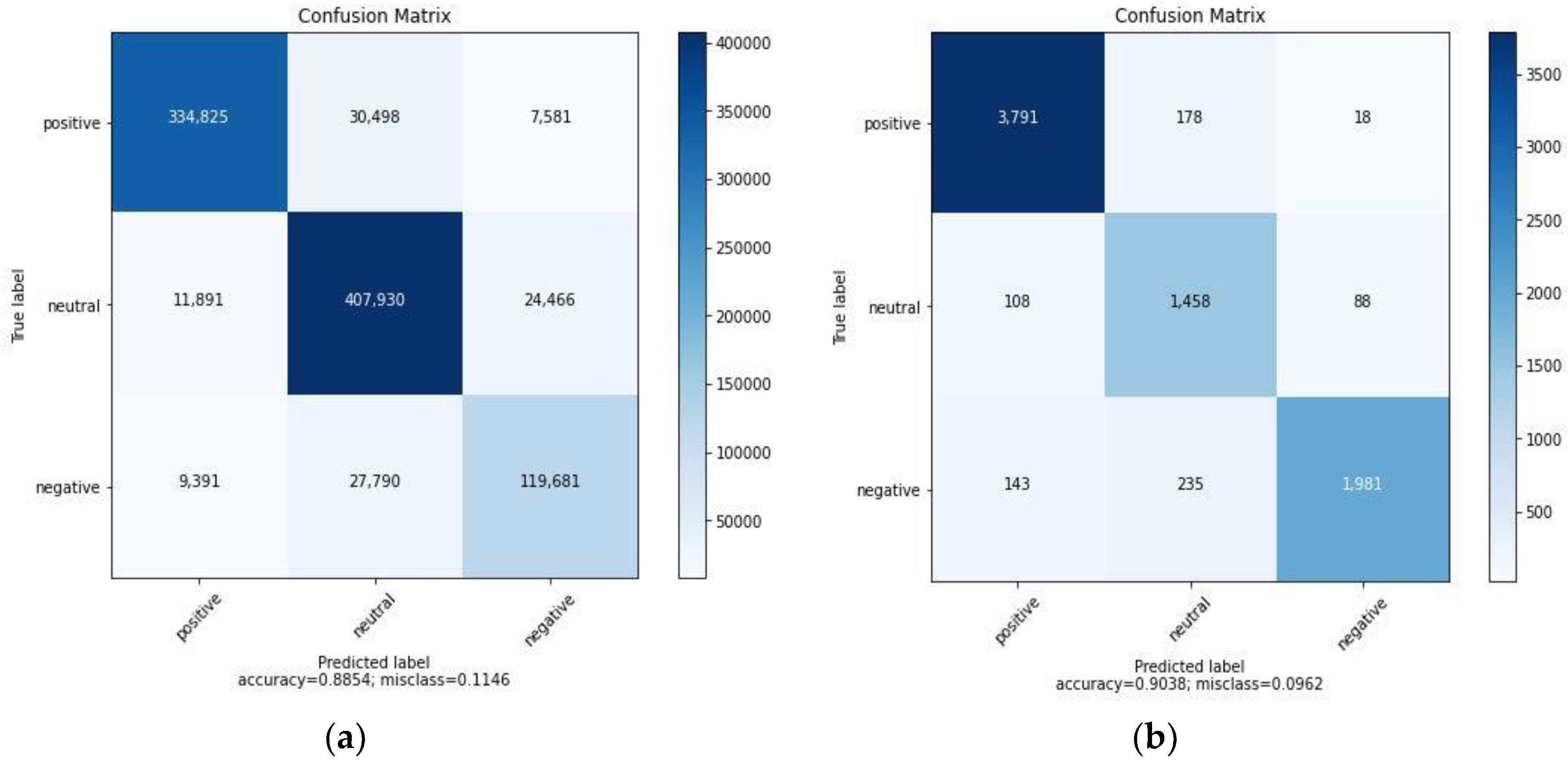

- Experimental results show that the accuracy, F1 score, and ROC on Flickr8k are 90.38%, 88.75%, and 93.08%, and on the Twitter dataset 88.54%, 86.34%, and 92.26%, respectively. The accuracy of the proposed model is 7.34% and 9.54% higher than that of the other two mentioned techniques.

2. Literature Review

2.1. Text Reviews-based Recommendation

2.2. Image Reviews-based Recommendation

2.3. Multus-Medium Reviews-based Recommendation

3. Research Methodology

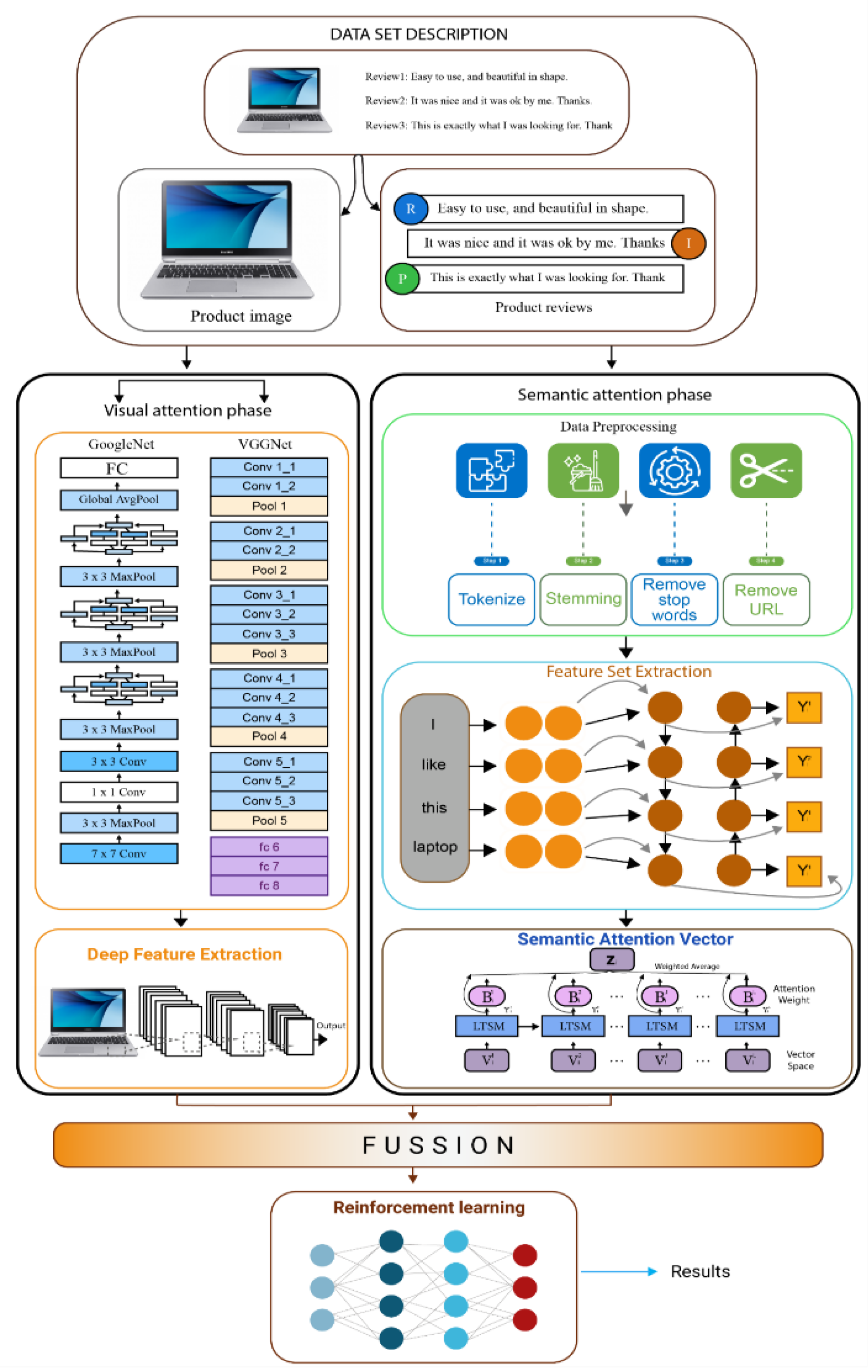

- The methodology begins with aspect and opinion extraction, followed by aspect refinement and identification. Data are collected from the Twitter and Flickr8k datasets, which provide a diverse range of product-related reviews and images.

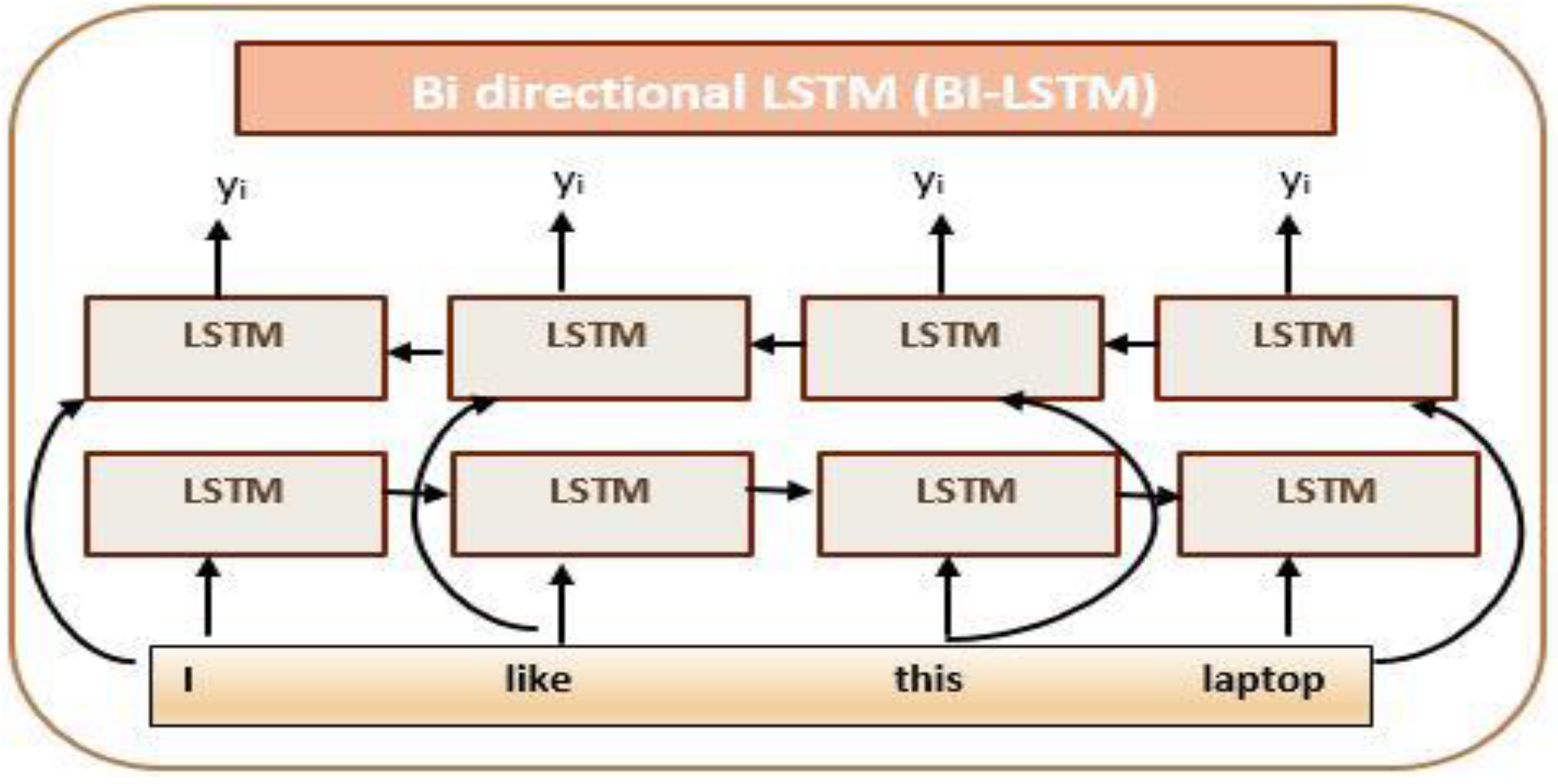

- Feature extraction uses a semantic attention model for text and visual attention models for images. Text-based features are extracted with a BiLSTM after preprocessing steps such as tokenization, lowercasing, and stemming.

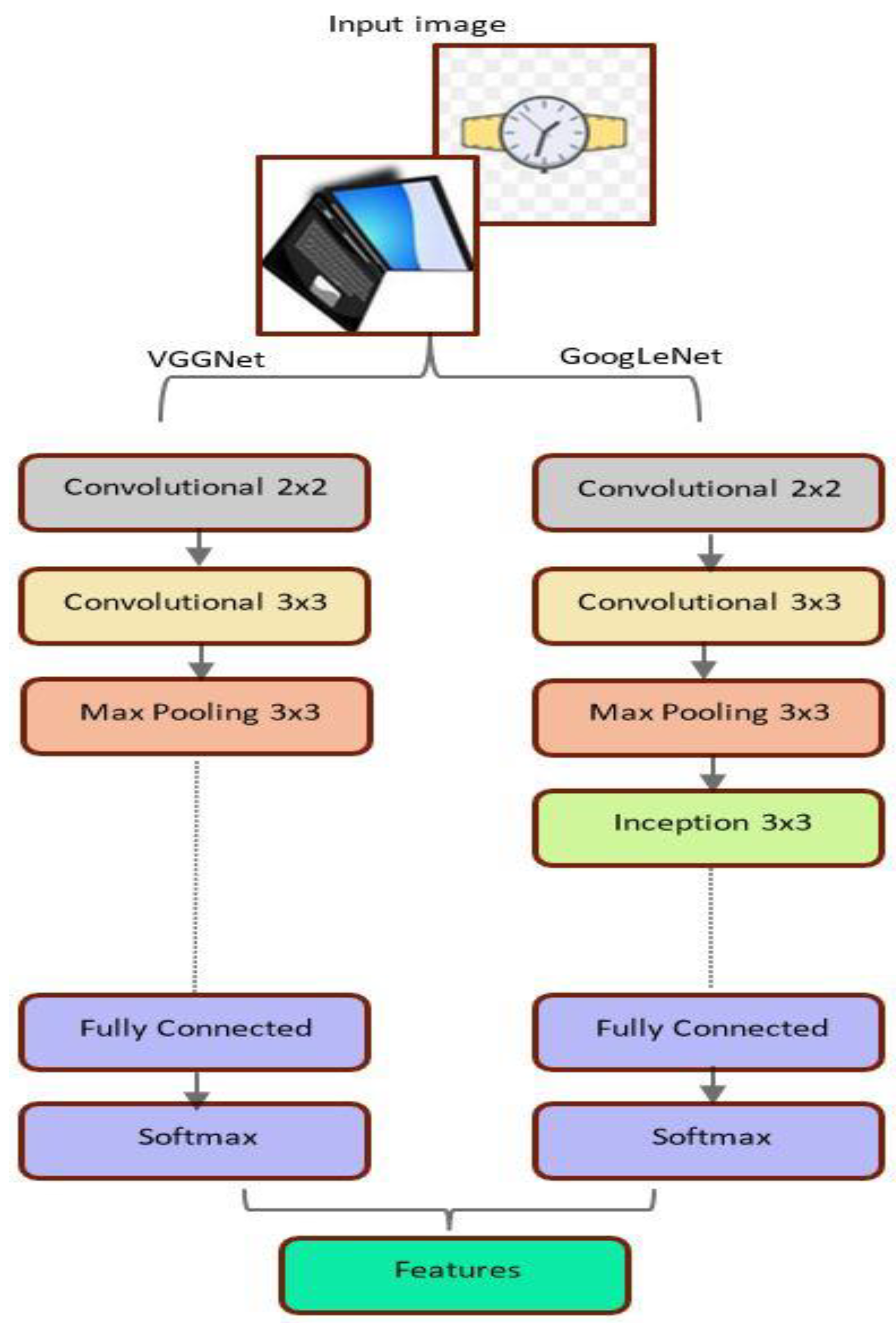

- In parallel, the visual attention models use the GoogLeNet and VGGNet architectures to extract low-level features from product images. These pre-trained models capture visual characteristics such as product quality and price.

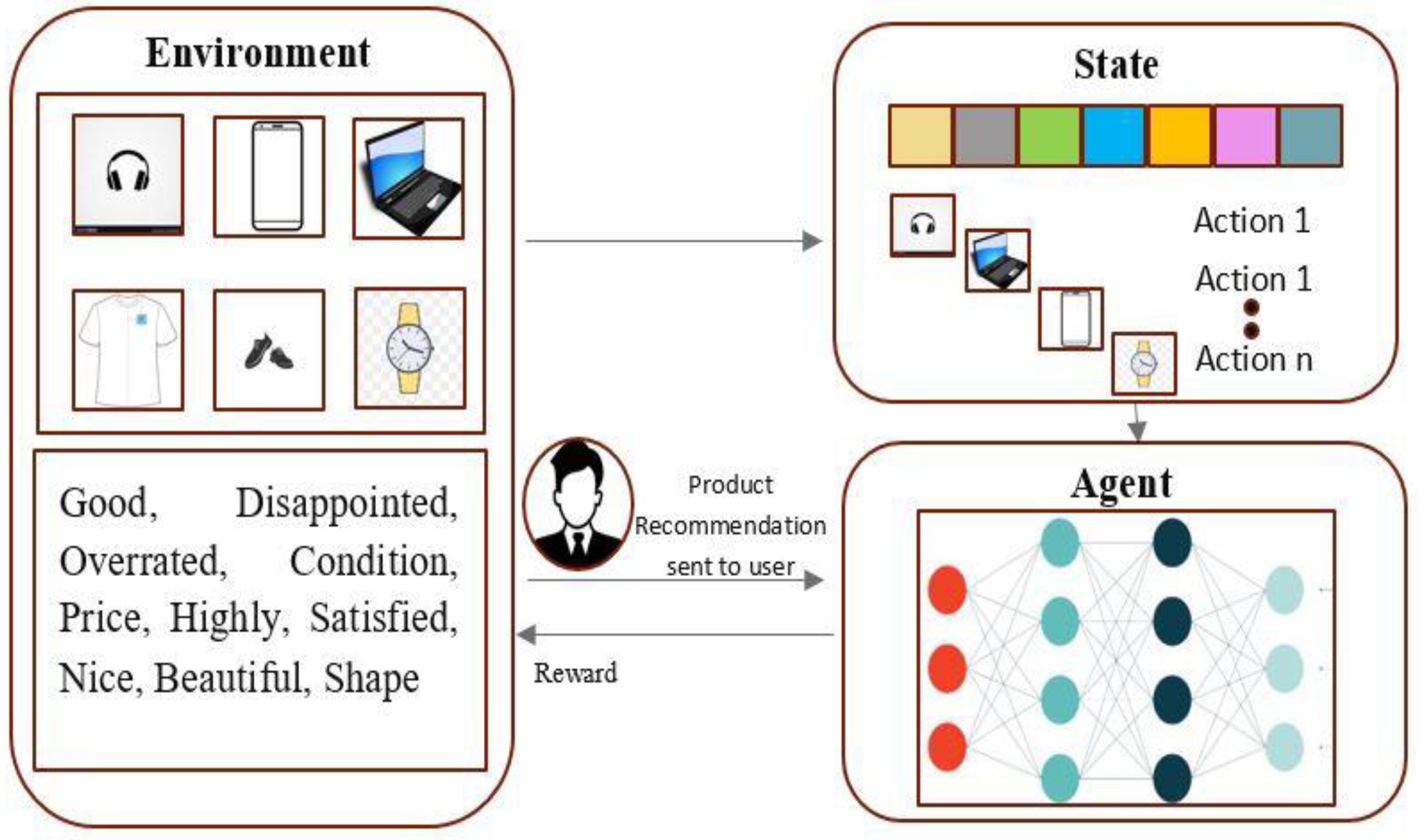

- The fusion step combines the outputs of the semantic and visual attention models into a unified feature vector set. This fusion harnesses the strengths of both textual and visual information for more accurate recommendations.

- Reinforcement learning is applied to further enhance the recommendation system. It models the recommendation process as a Markov decision process, continuously adapting recommendations based on user feedback and behavior.
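The Markov decision process view in the last step can be illustrated with a small sketch. The source does not say which reinforcement learning algorithm is used, so tabular Q-learning with an epsilon-greedy policy is an assumption here, as are the toy user model, the product names, the reward values, and the choice of "sentiment of the most recent feedback" as the state.

```python
import random
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update and return the new Q-value."""
    best_next = max(q[(next_state, a)] for a in actions)
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
    return q[(state, action)]


def choose_action(q, state, actions, epsilon, rng):
    """Epsilon-greedy choice: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


def simulate(episodes=2000, seed=0):
    """Train on a toy, hypothetical user model; return the Q-table and actions."""
    rng = random.Random(seed)
    actions = ["product_a", "product_b", "product_c"]  # hypothetical catalogue
    # Hypothetical probability that the user reacts positively to each product.
    like_prob = {"product_a": 0.2, "product_b": 0.8, "product_c": 0.5}
    q = defaultdict(float)
    state = "neutral"  # assumed state: sentiment of the latest user feedback
    for _ in range(episodes):
        action = choose_action(q, state, actions, epsilon=0.1, rng=rng)
        liked = rng.random() < like_prob[action]
        reward = 1.0 if liked else -1.0  # assumed reward scheme
        next_state = "positive" if liked else "negative"
        q_learning_update(q, state, action, reward, next_state, actions)
        state = next_state
    return q, actions
```

Each simulated interaction plays the role of user feedback: the recommendation is the action, the observed sentiment becomes the next state, and the Q-table is adjusted after every step, which is one way to read "continuously adapting recommendations".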

3.1. Data Set Description

3.2. Semantic Attention Phase for Feature Extraction

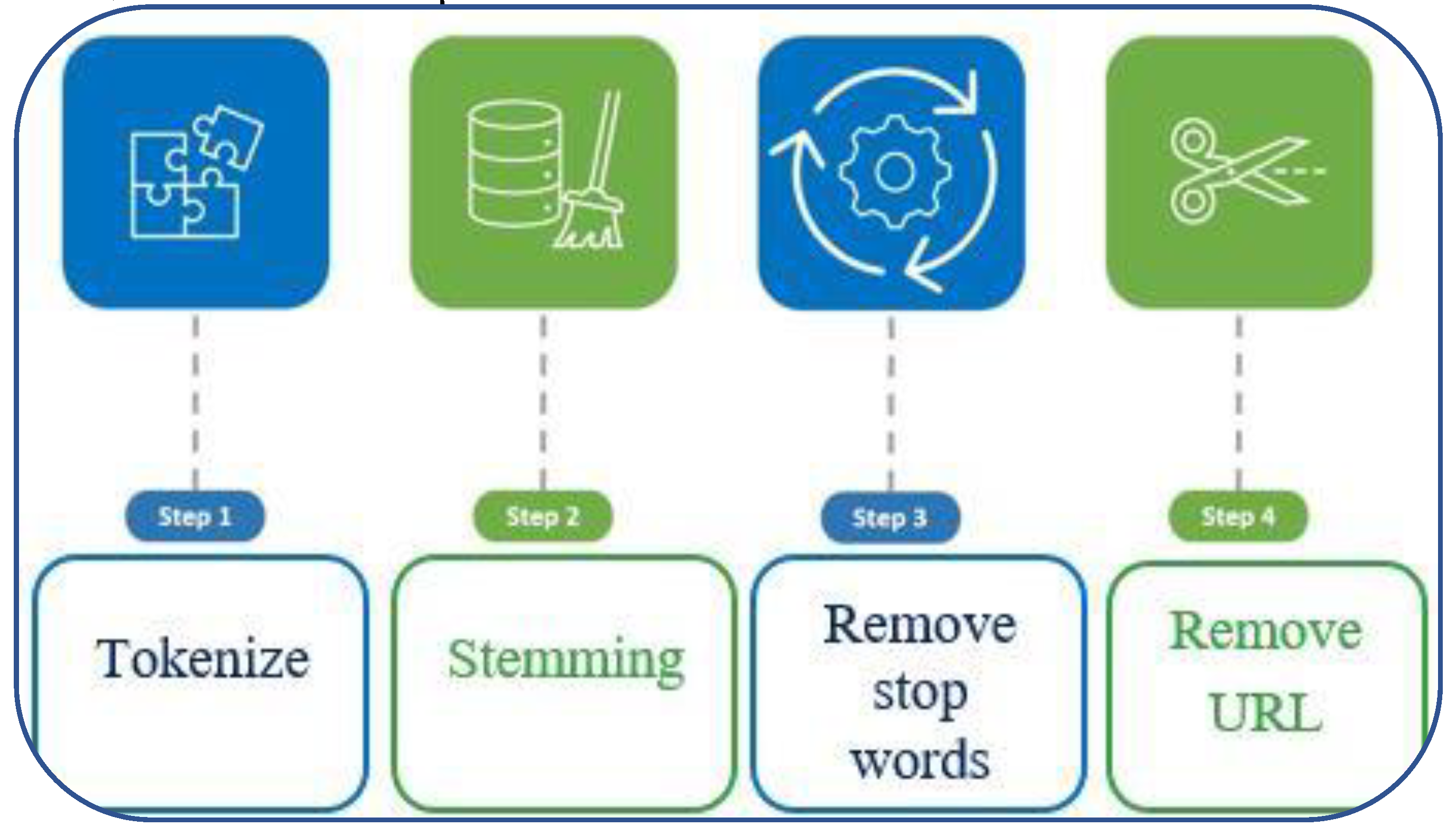

3.2.1. Data Preprocessing

| Algorithm 1. Data Preprocessing | |
|---|---|
| 1. | Input: DSi : A data set of reviews |
| 2. | Output: Wall : All preprocessed words |
| 3. | Initialize Wall ⟵ Φ |
| 4. | For Each DS ∈ DSi |
| 5. | St ⟵ tokenize (DS) |
| 6. | St΄ ⟵ lowercase (St) |
| 7. | For Each Si ∈ St΄ |
| 8. | Si΄ ⟵ normalize (Si) |
| 9. | Si΄΄ ⟵ stem (Si΄) |
| 10. | Si΄΄΄ ⟵ transliteration (Si΄΄) |
| 11. | Wall ⟵ Wall ∪ {Si΄΄΄} |
| 12. | End For |
| 13. | End For |
| 14. | Return Wall |
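Algorithm 1 can be sketched in Python as follows. This is a minimal illustration using only the standard library, not the authors' implementation: whitespace tokenization, the tiny suffix list used for stemming, and ASCII folding as a stand-in for transliteration are all assumptions, and the function names are hypothetical.

```python
import unicodedata

# Hypothetical, deliberately tiny suffix list; a real system would likely
# use an established stemmer instead.
SUFFIXES = ("ing", "ed", "es", "s")


def normalize(token):
    """Drop every character that is not a letter or a digit."""
    return "".join(ch for ch in token if ch.isalnum())


def stem(token):
    """Strip the first matching suffix if at least three characters remain."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def transliterate(token):
    """Fold accented characters to ASCII (a stand-in for transliteration)."""
    decomposed = unicodedata.normalize("NFKD", token)
    return decomposed.encode("ascii", "ignore").decode("ascii")


def preprocess(reviews):
    """Tokenize, lowercase, normalize, stem, and transliterate each review."""
    w_all = []
    for review in reviews:
        tokens = [t.lower() for t in review.split()]  # tokenize + lowercase
        for token in tokens:
            word = transliterate(stem(normalize(token)))
            if word:  # skip tokens that were pure punctuation
                w_all.append(word)
    return w_all


print(preprocess(["The caf\u00e9's coffee was AMAZING, loved it!"]))
```

The order of the inner steps follows the algorithm (normalize, then stem, then transliterate), and every processed token is appended to the output list so that the function returns all preprocessed words.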

3.2.2. Feature Set Extraction

3.3. Visual Attention Model for Image-based Feature Extraction

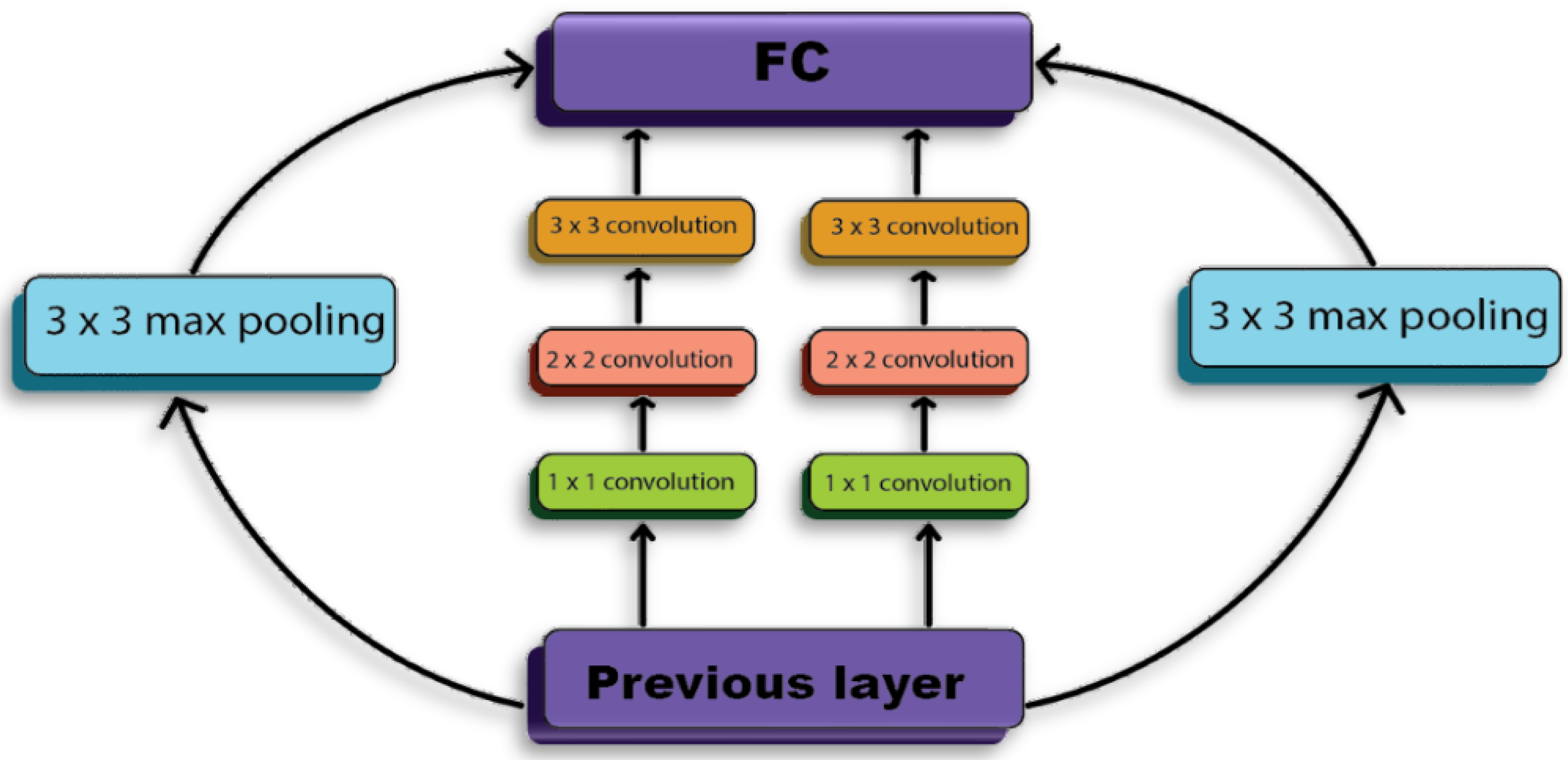

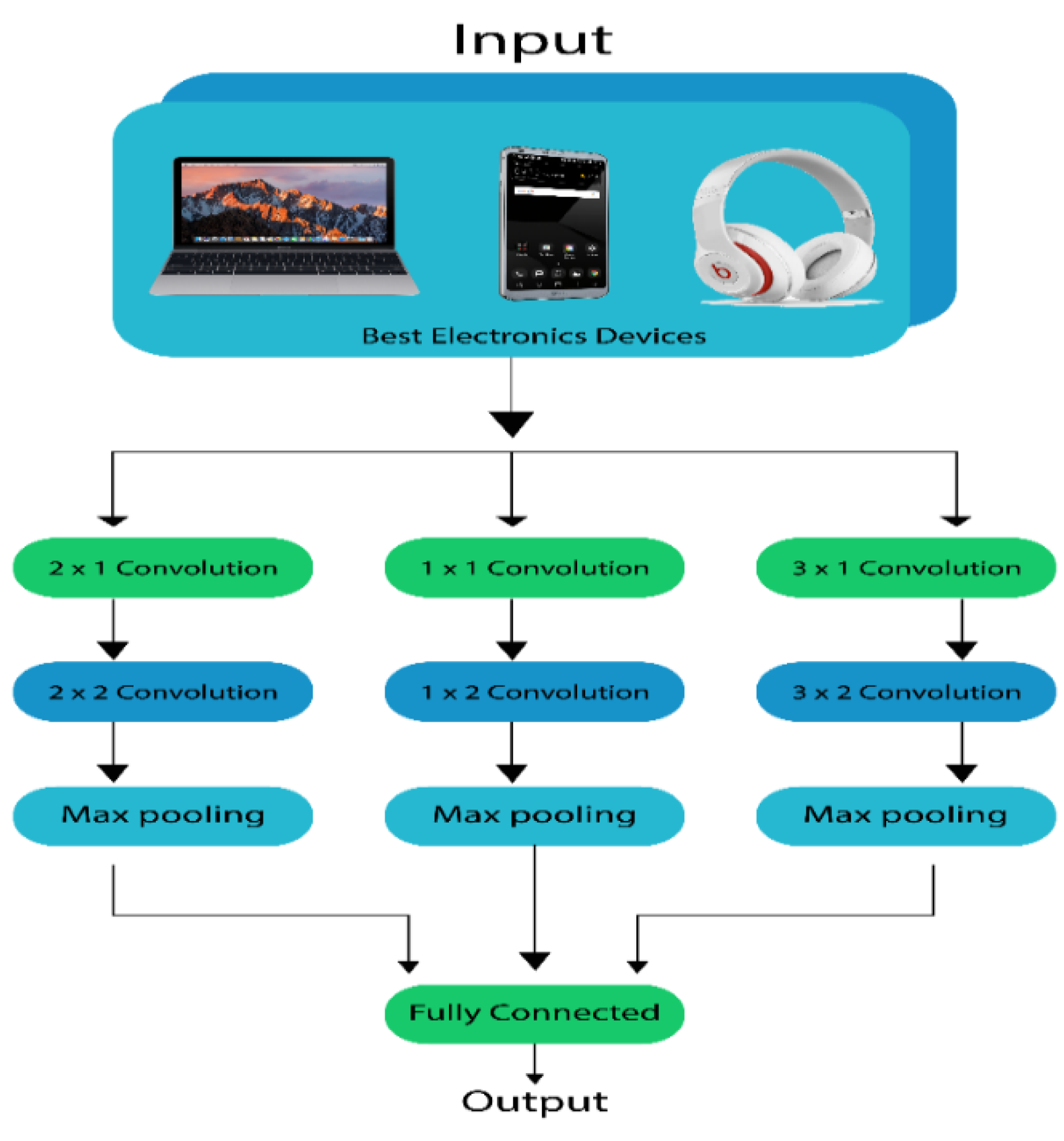

3.3.1. Feature Extraction using GoogLeNet and VGGNet

- GoogLeNet

- VGGNet

- Embedding GoogLeNet and VGGNet

3.3.2. Deep Feature Extraction

3.3.3. Applying Fusion
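Section 3 describes fusion as combining the outputs of the semantic and visual attention models into a unified feature vector. The fusion operator itself is not specified in the text, so the sketch below assumes the simplest option: L2-normalize each modality and concatenate. The function names and the example vectors are hypothetical.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit Euclidean length; leave a zero vector unchanged."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0.0:
        return list(vector)
    return [x / norm for x in vector]


def fuse(text_features, image_features):
    """Concatenate normalized text and image features into one vector."""
    return l2_normalize(text_features) + l2_normalize(image_features)


# Hypothetical feature vectors standing in for BiLSTM and CNN outputs.
fused = fuse([3.0, 4.0], [0.0, 5.0, 0.0])
print(len(fused), fused)
```

Normalizing before concatenation keeps one modality from dominating the fused vector purely because its raw feature values are larger.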

3.3.4. Applying Reinforcement Learning

4. Experimental Results

4.1. Performance Evaluation Measure

4.2. Outcome of the Experiment

4.2.1. Baselines

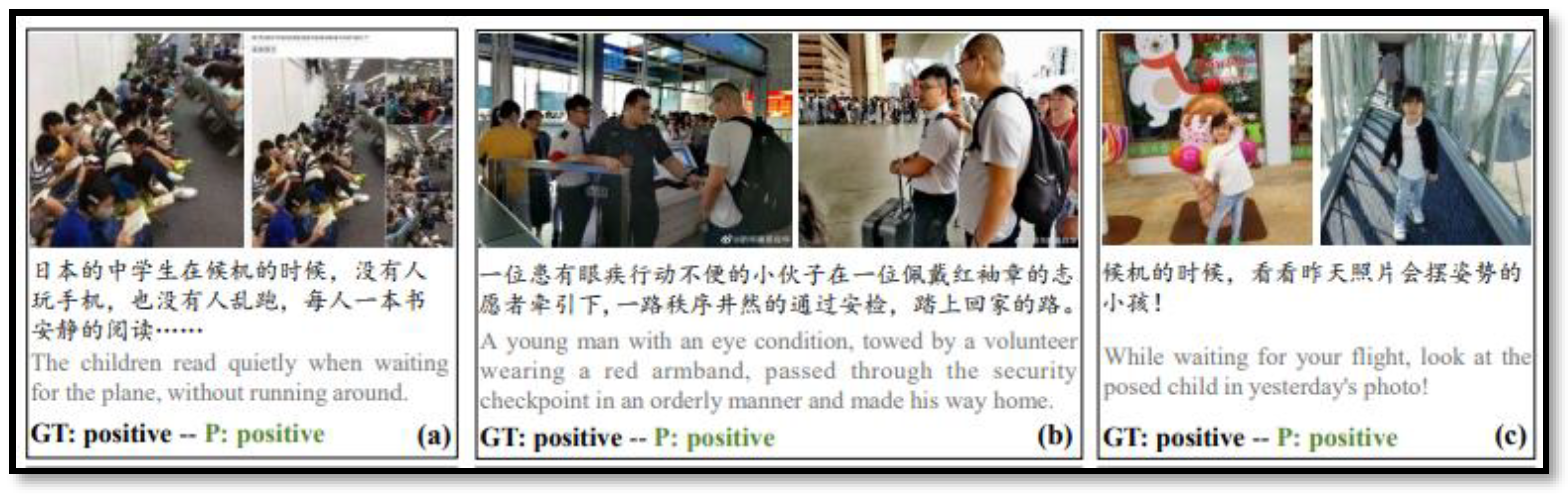

- AHRM [24]: A multimodal (positive, negative) analysis of social images using an attention-based heterogeneous relational model (AHRM).

- DMAF [23]: A deep multimodal attentive fusion (DMAF) method for sentiment analysis. It collects supplementary and non-redundant information for more accurate sentiment categorization by automatically drawing attention to the regions and words associated with affect.

- AMGN [25]: Attention-Based Modality-Gated Networks (AMGN), which leverage the correlation between the image and text modalities to extract the discriminative features required for multimodal sentiment analysis.

- For the Flickr8k dataset:

- AHRM model achieved an F1 score of 87.1% and an accuracy of 87.5%.

- DMAF model achieved an F1 score of 85% and an accuracy of 85.9%.

- MMOM model achieved the highest F1 score of 88.75% and the highest accuracy of 90.38%.

- For the Twitter (t4sa) dataset:

- DMAF model achieved an F1 score of 76.9% and an accuracy of 76.3%.

- AMGN model achieved an F1 score of 79.1% and an accuracy of 79%.

- MMOM model achieved an F1 score of 86.34% and an accuracy of 88.54%.
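The accuracy margins between MMOM and each baseline follow directly from the figures listed above. The short sketch below recomputes them as percentage-point differences; the dictionary layout and the function name are illustrative only.

```python
# Accuracies (%) as reported in the baseline comparison.
results = {
    "Flickr8k": {"AHRM": 87.5, "DMAF": 85.9, "MMOM": 90.38},
    "Twitter (t4sa)": {"DMAF": 76.3, "AMGN": 79.0, "MMOM": 88.54},
}


def accuracy_margins(scores, proposed="MMOM"):
    """Return the percentage-point gap between the proposed model and each baseline."""
    return {
        model: round(scores[proposed] - accuracy, 2)
        for model, accuracy in scores.items()
        if model != proposed
    }


for dataset, scores in results.items():
    print(dataset, accuracy_margins(scores))
```

On these numbers the gap over AMGN on the Twitter (t4sa) dataset is 9.54 percentage points.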

4.2.2. Performance of Proposed Research

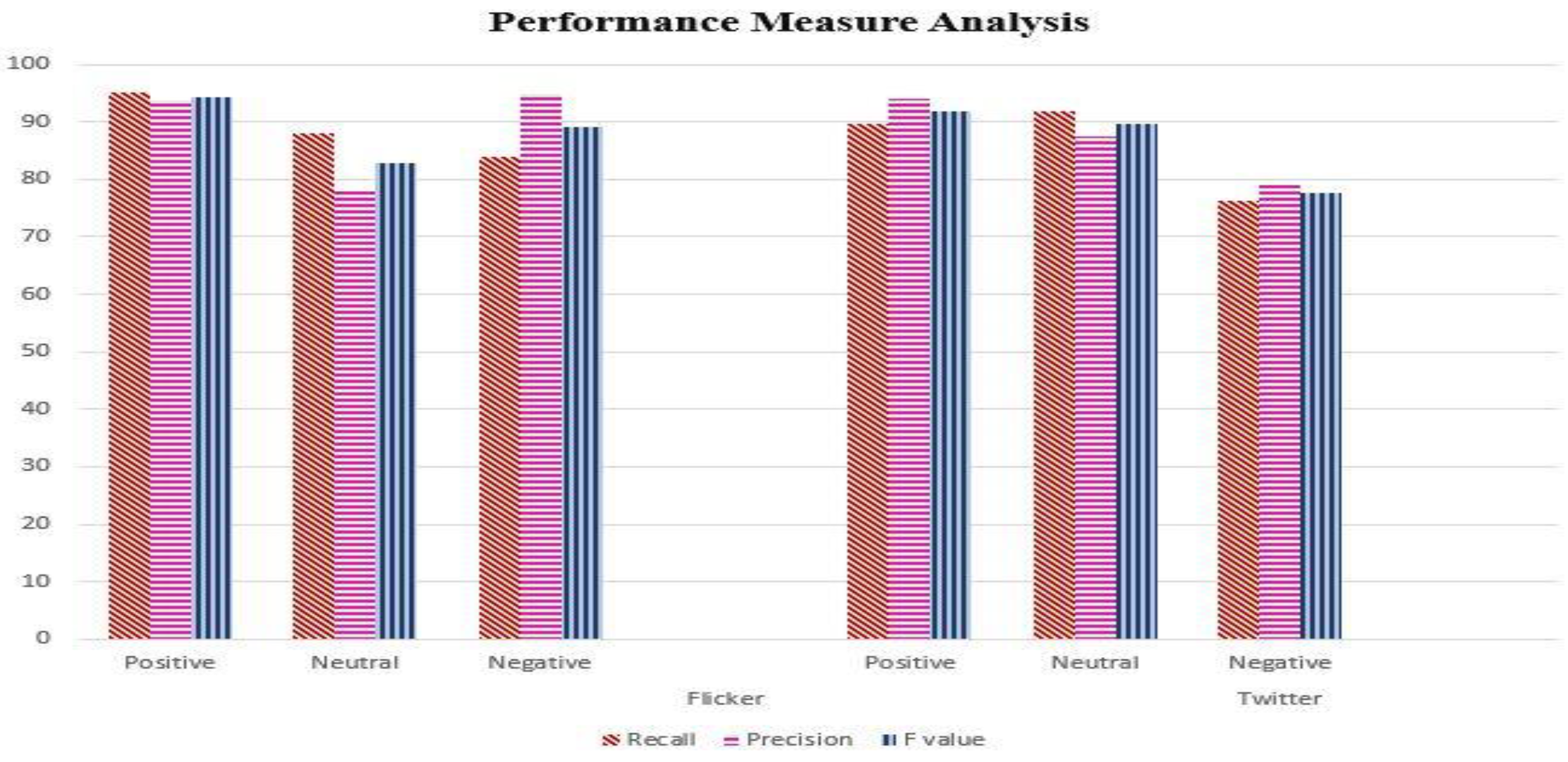

- For the Flickr8k dataset:

- Positive class: The proposed model achieved a recall of 95.08%, meaning it correctly identified most positive instances, and a precision of 93.79%, meaning most instances predicted as positive were correct. The F value for the positive class was 94.43%, reflecting a good balance between precision and recall.

- Neutral class: The model achieved a recall of 88.15% and a precision of 77.93% for the neutral class. The F value for the neutral class was 82.72%.

- Negative class: The proposed model achieved a recall of 83.89% and a precision of 94.92% for the negative class. The F value for the negative class was 89.11%.

- Average: The average recall across all classes was 89.04%, the average precision was 88.88%, and the average F value was 88.75%.

- For the Twitter (t4sa) dataset:

- Positive class: The proposed model achieved a recall of 89.79% and a precision of 94.02% for the positive class. The F value for the positive class was 91.86%.

- Neutral class: The model achieved a recall of 91.82% and a precision of 87.5% for the neutral class. The F value for the neutral class was 89.61%.

- Negative class: The proposed model achieved a recall of 76.3% and a precision of 78.9% for the negative class. The F value for the negative class was 77.57%.

- Average: The average recall across all classes was 85.97%, the average precision was 86.80%, and the average F value was 86.34%.
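The per-class figures above relate to each other in a standard way: the F value is the harmonic mean of precision and recall, and the reported averages are consistent with an unweighted (macro) mean over the three classes. The sketch below assumes those standard definitions, which the text does not state explicitly, and uses the Flickr8k recall and precision values as input. Recomputed values can differ from the reported ones in the last decimal place because of rounding.

```python
def f_value(precision, recall):
    """Harmonic mean of precision and recall (the F1 score)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_average(values):
    """Unweighted mean over classes."""
    return sum(values) / len(values)


# (recall, precision) in percent for the Flickr8k classes, as reported.
flickr8k = {
    "positive": (95.08, 93.79),
    "neutral": (88.15, 77.93),
    "negative": (83.89, 94.92),
}

for label, (recall, precision) in flickr8k.items():
    print(label, round(f_value(precision, recall), 2))

print("avg recall", round(macro_average([r for r, _ in flickr8k.values()]), 2))
print("avg precision", round(macro_average([p for _, p in flickr8k.values()]), 2))
```

A macro average weights each class equally regardless of how many samples it has, which matters here because the classes are unevenly sized.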

4.3. Discussion

5. Conclusions and Future Work

Funding

Conflicts of Interest


References

1. Horrigan, J. Online shopping, Pew Internet & American Life Project. 2018, Washington, DC. Available at: <http://www.pewinternet.org/Reports/2008/Online-Shopping/01-Summary-of-Findings.aspx> [Accessed 8/8/2014].

2. Lei, Z.; Yin, D.; Mitra, S.; Zhang, H. Swayed by the reviews: Disentangling the effects of average ratings and individual reviews in online word-of-mouth. Production and Operations Management 2022, 31, 2393–2411.

3. Nawaz, A.; Awan, A. A.; Ali, T.; Rana, M. R. R. Product’s behaviour recommendations using free text: an aspect based sentiment analysis approach. Cluster Computing 2020, 23, 1267–1279.

4. Rana, M. R. R.; Nawaz, A.; Iqbal, J. A survey on sentiment classification algorithms, challenges and applications. Acta Universitatis Sapientiae, Informatica 2018, 10, 58–72.

5. Al-Abbadi, L.; Bader, D.; Mohammad, A.; Al-Quran, A.; Aldaihani, F.; Al-Hawary, S.; Alathamneh, F. The effect of online consumer reviews on purchasing intention through product mental image. International Journal of Data and Network Science 2022, 6, 1519–1530.

6. Kurdi, B.; Alshurideh, M.; Akour, I.; Alzoubi, H.; Obeidat, B.; Alhamad, A. The role of digital marketing channels on consumer buying decisions through eWOM in the Jordanian markets. International Journal of Data and Network Science 2020, 6, 1175–1186.

7. Bhuvaneshwari, P.; Rao, A. N.; Robinson, Y. H.; Thippeswamy, M. N. Sentiment analysis for user reviews using Bi-LSTM self-attention based CNN model. Multimedia Tools and Applications 2020, 81, 12405–12419.

8. Rana, M. R. R.; Rehman, S. U.; Nawaz, A.; Ali, T.; Imran, A.; Alzahrani, A.; Almuhaimeed, A. Aspect-Based Sentiment Analysis for Social Multimedia: A Hybrid Computational Framework. Computer Systems Science & Engineering 2023, 46.

9. Khan, A. Improved multi-lingual sentiment analysis and recognition using deep learning. Journal of Information Science 2023, 01655515221137270.

10. Chaudhry, N. N.; Yasir, J.; Farzana, K.; Zahid, M.; Zafar I., K.; Umar, S.; Sadaf, H. J. Sentiment analysis of before and after elections: Twitter data of US election 2020. Electronics 2021, 10, 2082.

11. El-Affendi, M. A.; Khawla, A.; Amir, H. A novel deep learning-based multilevel parallel attention neural (MPAN) model for multidomain Arabic sentiment analysis. IEEE Access 2021, 9, 7508–7518.

12. Gastaldo, P.; Zunino, R.; Cambria, E.; Decherchi, S. Combining ELM with random projections. IEEE Intelligent Systems 2013, 28, 46–48.

13. Medhat, W.; Hassan, A.; Korashy, H. Sentiment analysis algorithms and applications: A survey. Ain Shams Engineering Journal 2014, 5, 1093–1113.

14. Nawaz, A.; Ali, T.; Hafeez, Y.; Rehman, S. U.; Rashid, M. R. Mining public opinion: a sentiment based forecasting for democratic elections of Pakistan. Spatial Information Research 2022, 1–13.

15. Schuckert, M.; Liu, X.; Law, R. Hospitality and tourism online reviews: Recent trends and future directions. Journal of Travel & Tourism Marketing 2015, 32, 608–621.

16. Agoraki, M.; Aslanidis, N.; Kouretas, G. P. US banks’ lending, financial stability, and text-based sentiment analysis. Journal of Economic Behavior & Organization 2022, 197, 73–90.

17. Abdi, A.; Shamsuddin, S. M.; Hasan, S.; Piran, J. Deep learning-based sentiment classification of evaluative text based on Multi-feature fusion. Information Processing & Management 2019, 56, 1245–1259.

18. Onan, A. Sentiment analysis on product reviews based on weighted word embeddings and deep neural networks. Concurrency and Computation: Practice and Experience 2021, 33, e5909.

19. Yang, J.; She, D.; Sun, M.; Cheng, M. M.; Rosin, P. L.; Wang, L. Visual sentiment prediction based on automatic discovery of affective regions. IEEE Transactions on Multimedia 2020, 20, 2513–2525.

20. Tuinhof, H.; Pirker, C.; Haltmeier, H. Image-based fashion product recommendation with deep learning. In International Conference on Machine Learning, Optimization, and Data Science; 2018.

21. Meena, Y.; Kumar, P.; Sharma, A. Product recommendation system using distance measure of product image features. In 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS); 2018.

22. Wang, L.; Guo, W.; Yao, X.; Zhang, Y.; Yang, J. Multimodal Event-Aware Network for Sentiment Analysis in Tourism. IEEE MultiMedia 2021, 28, 49–58.

23. Huang, F.; Zhang, X.; Zhao, Z.; Xu, J.; Li, Z. Image–text sentiment analysis via deep multimodal attentive fusion. Knowledge-Based Systems 2019, 167, 26–37.

24. Xu, J.; Li, Z.; Huang, F.; Li, C.; Philip, S. Y. Social image sentiment analysis by exploiting multimodal content and heterogeneous relations. IEEE Transactions on Industrial Informatics 2020, 17, 2974–2982.

25. Huang, F.; Wei, K.; Weng, J.; Li, Z. Attention-based modality-gated networks for image-text sentiment analysis. ACM Transactions on Multimedia Computing, Communications, and Applications 2020, 16, 1–19.

26. Ghorbanali, A.; Sohrabi, M. K.; Yaghmaee, F. Ensemble transfer learning-based multimodal sentiment analysis using weighted convolutional neural networks. Information Processing & Management 2022, 59, 102929.

27. AL-Sammarraie, Y. Q.; Khaled, A. Q.; AL-Mousa, M. R.; Desouky, S. F. Image Captions and Hashtags Generation Using Deep Learning Approach. In 2022 International Engineering Conference on Electrical, Energy, and Artificial Intelligence (EICEEAI) 2022, 1–5.

28. Gaspar, A.; Alexandre, L. A. A multimodal approach to image sentiment analysis. In Intelligent Data Engineering and Automated Learning–IDEAL 2019. 20th International Conference 2019, 14–16.

29. Wu, S.; Fei, H.; Ren, Y.; Li, B.; Li, F.; Ji, D. High-order pair-wise aspect and opinion terms extraction with edge-enhanced syntactic graph convolution. IEEE/ACM Transactions on Audio, Speech, and Language Processing 2021, 29, 2396–2406.

30. Ahmed, Z.; Duan, J.; Wu, F.; Wang, J. EFCA: An extended formal concept analysis method for aspect extraction in healthcare informatics. In 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) 2021, 1241–1244.

31. Rana, M. R. R.; Rehman, S. U.; Nawaz, A.; Ali, T.; Ahmed, M. A Conceptual Model for Decision Support Systems Using Aspect Based Sentiment Analysis. Proceedings of The Romanian Academy Series A-Mathematics Physics Technical Sciences Information Science 2021, 22, 381–390.

32. Bhende, M.; Thakare, A.; Pant, B.; Singhal, P.; Shinde, S.; Dugbakie, B. N. Integrating Multiclass Light Weighted BiLSTM Model for Classifying Negative Emotions. Computational Intelligence and Neuroscience 2022.

33. Xiao, Y.; Li, C.; Thürer, M.; Liu, Y.; Qu, T. User preference mining based on fine-grained sentiment analysis. Journal of Retailing and Consumer Services 2022, 68, 103013.

34. Luong, M. T.; Pham, H.; Manning, C. D. Effective approaches to attention-based neural machine translation. arXiv 2015, arXiv:1508.04025.

35. Wang, Y.; Huang, M.; Zhu, X.; Zhao, L. Attention-based LSTM for aspect-level sentiment classification. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing 2016, 606–615.

36. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2015, 1–9.

37. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

38. Yu, Z.; Dong, Y.; Cheng, J. Research on Face Recognition Classification Based on Improved GoogleNet. Security and Communication Networks 2022, 1–6.

39. Goswami, A. D.; Bhavekar, G. S.; Chafle, P. V. Electrocardiogram signal classification using VGGNet: a neural network based classification model. International Journal of Information Technology 2023, 15, 119–128.

40. Fan, Y.; Wang, Y.; Yu, H.; Liu, B. Movie recommendation based on visual features of trailers. In Innovative Mobile and Internet Services in Ubiquitous Computing: Proceedings of the 11th International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing (IMIS-2017) 2018, 242–253.

41. Andreeva, E.; Ignatov, D. I.; Grachev, A.; Savchenko, A. V. Extraction of visual features for recommendation of products via deep learning. In Analysis of Images, Social Networks and Texts: 7th International Conference, AIST 2018, Moscow, Russia 2018, 5–7.

42. Wang, M.; Cao, D.; Li, L.; Li, S.; Ji, R. Microblog sentiment analysis based on cross-media bag-of-words model. In Proceedings of International Conference on Internet Multimedia Computing and Service 2014, 76–80.

43. Cao, D.; Ji, R.; Lin, D.; Li, S. A cross-media public sentiment analysis system for microblog. Multimedia Systems 2016, 22, 479–486.

44. Sokolova, M.; Lapalme, G. Classification of opinions with non-affective adverbs and adjectives. In Proceedings of the International Conference RANLP-2009 2009.

| Ref | Proposed Methodology | Dataset | Result Accuracy | Limitation |
|---|---|---|---|---|
| [16] | Empirical analysis | U.S. commercial bank dataset | 79% | Requires complex training and a large dataset |
| [17] | RNN-LSTM algorithm | Movie review datasets, Document Understanding Conferences (DUC) datasets | 74.78% | Loss of sequential and contextual information |
| [18] | CNN-LSTM | 93,000 tweets (Twitter dataset) | 89% | Unable to normalize polarity intensity of words |
| [19] | R-CNN | IAPS, ArtPhoto, Twitter, Flickr, Instagram | 83.05% | Requires a more dependable recurrent model |
| [20] | Convolutional neural network | Fashion dataset | 87% | Dictionary-based and domain-specific |
| [21] | Deep feature model using regression | Amazon product reviews | 83% | Fails to produce multi-dimensional features |
| [22] | Multi-modal event-aware network | Weibo posts | 89% | Unable to manage out-of-vocabulary words |
| [23] | Image-Text Sentiment Analysis model | Twitter dataset, Getty dataset | 76% on Twitter data, 87% on Getty photos | Costly and time-consuming to search for opinionated words |
| [24] | AHRM | Flickr8k dataset, Getty dataset | 87% | Features are lost during extraction |
| [25] | Attention-Based Modality-Gated Networks for Image-Text Sentiment Analysis | Flicker8kW, Twitter | 88% on FlickrW images, 79% on Twitter data | Weight-based, unreliable method for feature extraction |

| Dataset | Positive | Neutral | Negative | Sum |
|---|---|---|---|---|
| Twitter (t4sa) | 372,904 | 444,287 | 156,862 | 974,053 |
| Flickr8k | 3,999 | 1,711 | 2,382 | 8,092 |

| Datasets | Models | F1 score | Accuracy |
|---|---|---|---|
| Flickr8k dataset | AHRM | 87.1% | 87.5% |
| | DMAF | 85% | 85.9% |
| | MMOM | 88.75% | 90.38% |
| Twitter (t4sa) | DMAF | 76.9% | 76.3% |
| | AMGN | 79.1% | 79% |
| | MMOM | 86.34% | 88.54% |

| Dataset | Class | Recall (%) | Precision (%) | F value (%) |
|---|---|---|---|---|
| Flickr8k | Positive | 95.08 | 93.79 | 94.43 |
| | Neutral | 88.15 | 77.93 | 82.72 |
| | Negative | 83.89 | 94.92 | 89.11 |
| | Average | 89.04 | 88.88 | 88.75 |
| Twitter (t4sa) | Positive | 89.79 | 94.02 | 91.86 |
| | Neutral | 91.82 | 87.5 | 89.61 |
| | Negative | 76.3 | 78.9 | 77.57 |
| | Average | 85.97 | 86.80 | 86.34 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).