Submitted: 24 May 2023

Posted: 26 May 2023

Abstract

Keywords:

1. Introduction

1.1. Speech perception in noise

1.2. Temporal resolution

1.3. Working memory

1.4. Frequency discrimination

1.5. Different music styles and instruments

2. Material and Methods

2.1. Participants

2.2. Procedure

2.2.1. Speech-in-Babble

2.2.2. Gaps-in-Noise

2.2.3. Digit Span

2.2.4. Word Recognition – Rhythm Component (WRRC)

2.2.5. Frequency Discrimination Limen

2.3. Statistical Analysis

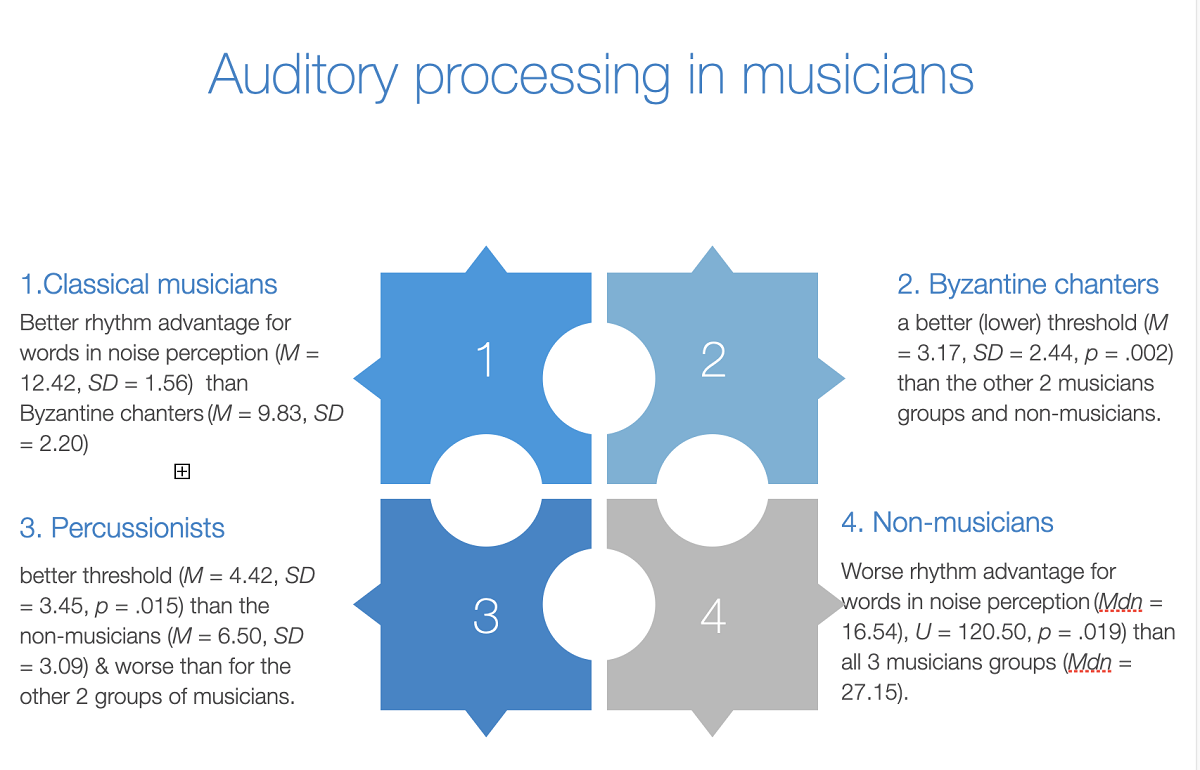

3. Results

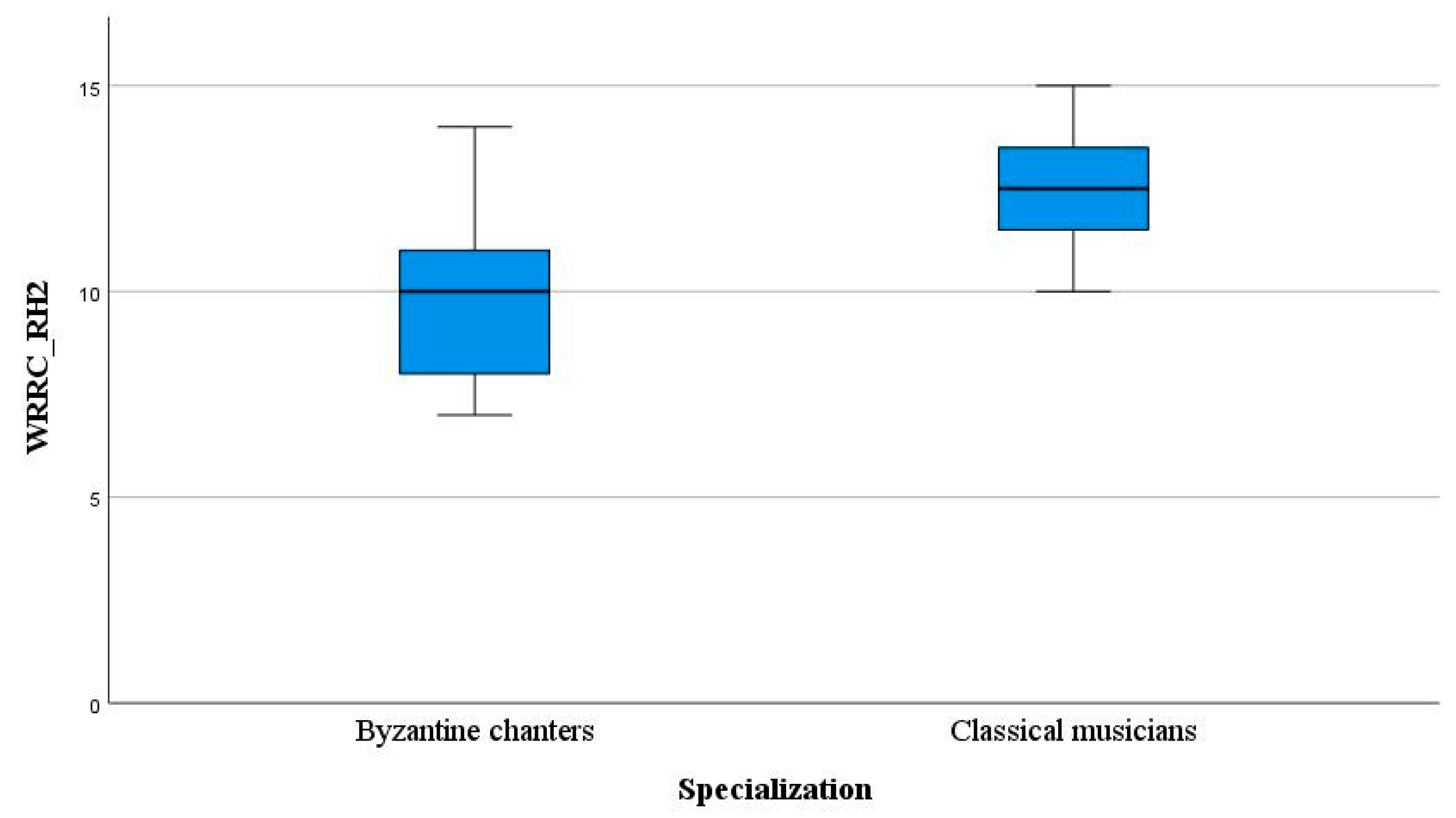

3.1. Word Recognition Rhythm Component

3.2. Gaps-in-Noise

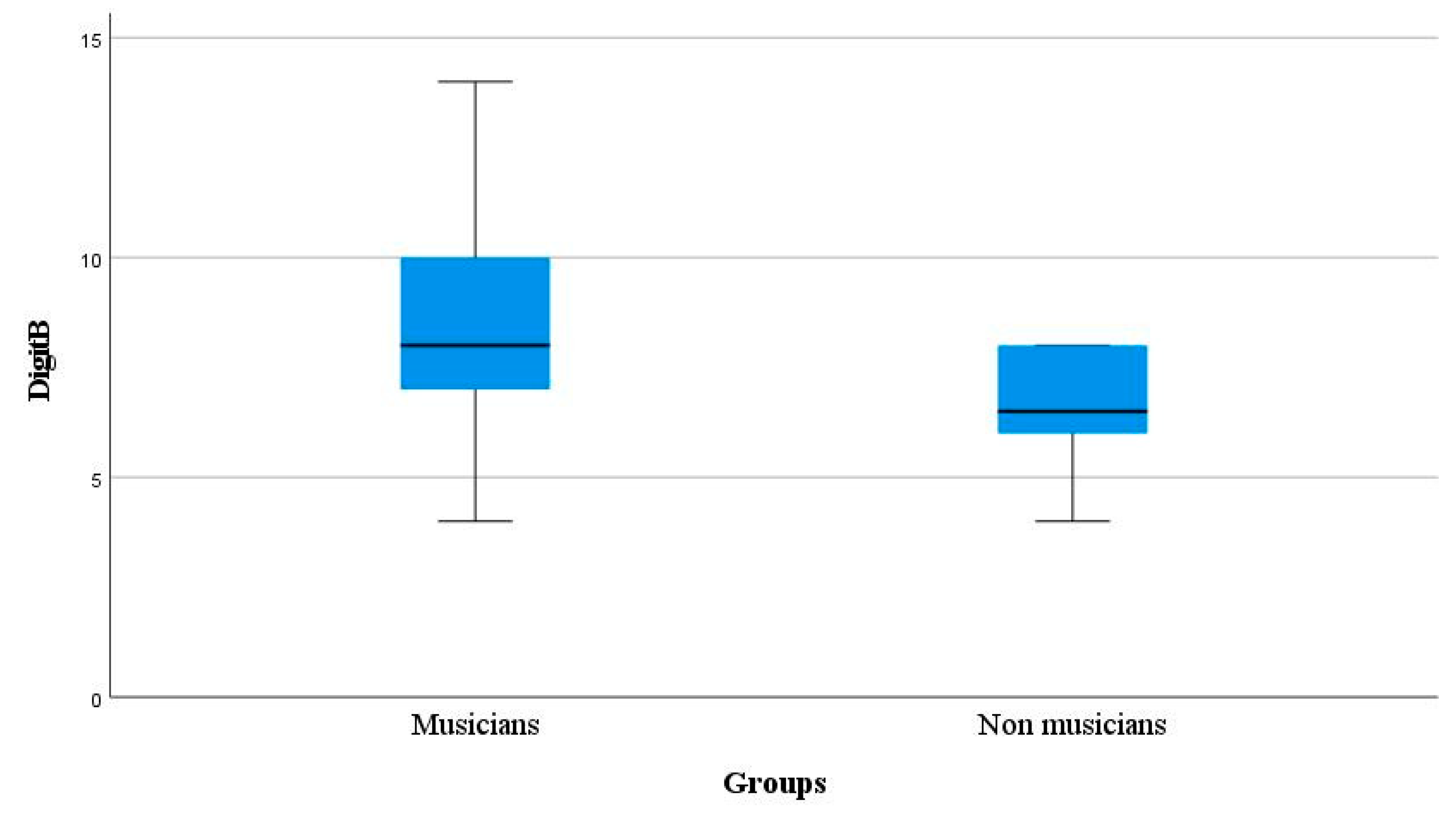

3.3. Digit Span

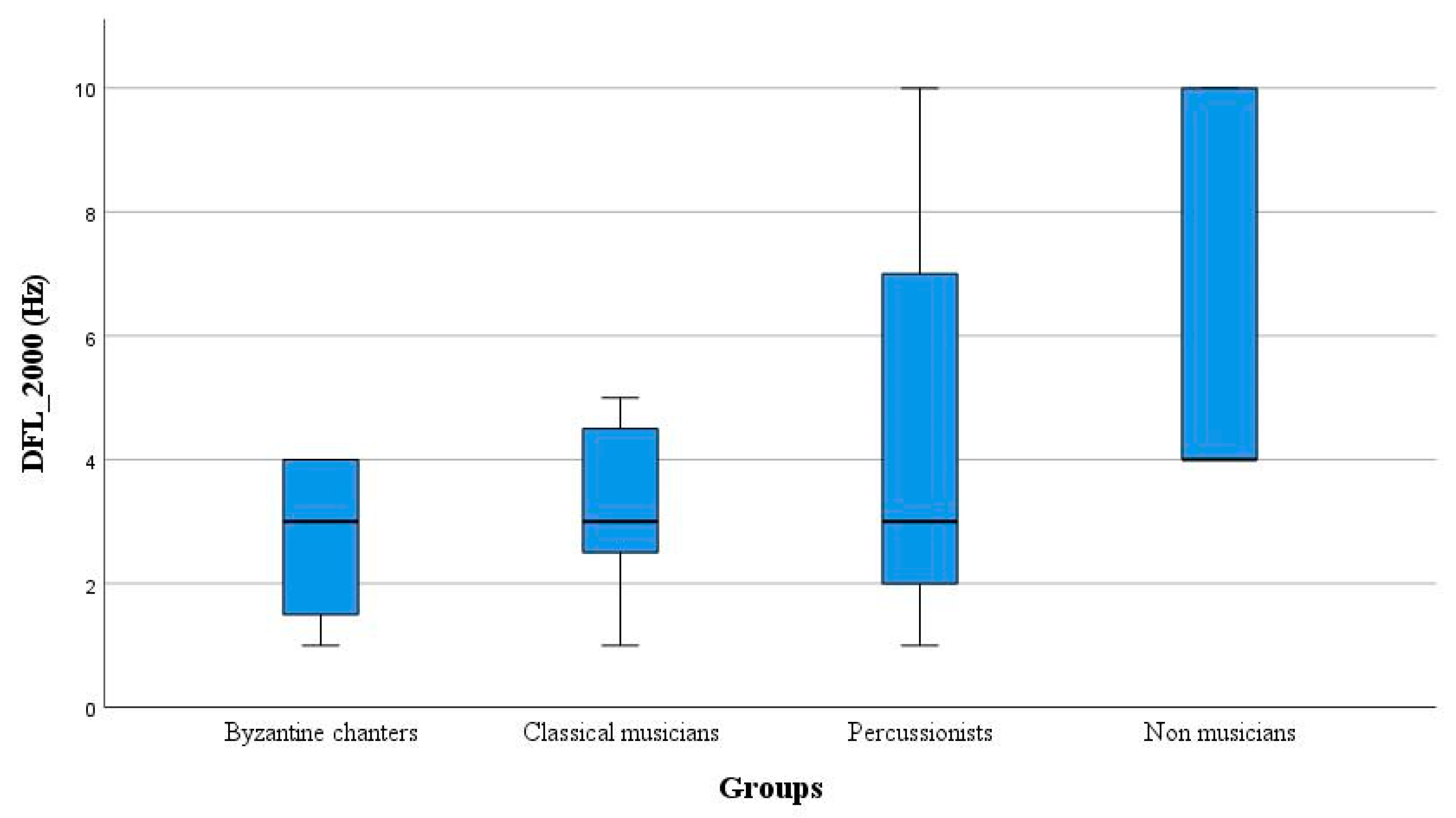

3.4. Frequency Discrimination Limen

4. Discussion

5. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations:

| Group | Age |
|---|---|
| Byzantine chanters | 39.17 (13.361) |
| Classical musicians | 37.92 (11.866) |
| Percussionists | 32.75 (10.635) |
| Non-musicians | 33.25 (10.678) |
| Total | 35.77 (11.661) |

| Frequency/ear | 4 kHz LE | 8 kHz LE | Average hearing threshold LE | 4 kHz RE | 8 kHz RE | Average hearing threshold RE |
|---|---|---|---|---|---|---|
| Participant 1 | 40 dB | 40 dB | <20 dB | 45 dB | 40 dB | >20 dB (21.7 dB) |
| Participant 2 | 30 dB | 20 dB | <20 dB | 30 dB | 20 dB | <20 dB |
| Participant 3 | 45 dB | 0 dB | <20 dB | 45 dB | 20 dB | <20 dB |

| | S | R | S | R | S | R | S | R | S | R |
|---|---|---|---|---|---|---|---|---|---|---|
| 500 Hz (part1) | 500 | 530 | 500 | 510 | 500 | 504 | 500 | 520 | 500 | 501 |
| 1000 Hz (part2) | 1000 | 1002 | 1000 | 1050 | 1000 | 1020 | 1000 | 1004 | 1000 | 1003 |
| 2000 Hz (part3) | 2000 | 2005 | 2000 | 2020 | 2000 | 2003 | 2000 | 2010 | 2000 | 2001 |

| Group | RH1 | RH2 |
|---|---|---|
| Classical musicians | 13.33 (1.30) | 12.42 (1.56) |
| Byzantine musicians | 13.17 (1.11) | 9.83 (2.20) |
| Percussionists | 13.17 (1.33) | 10.92 (1.37) |
| Non-musicians | 12.25 (0.96) | 11.33 (1.96) |

| Group | SinB_RE | SinB_LE |
|---|---|---|
| Classical musicians | -0.183 (0.3664) | -1.233 (0.2309) |
| Byzantine chanters | -0.083 (0.5357) | -1.208 (0.1975) |
| Percussionists | -0.300 (0.3766) | -1.142 (0.2811) |
| Non-musicians | -0.283 (0.3950) | -1.117 (0.2823) |

| Group | GIN_RE | GIN_LE |
|---|---|---|
| Classical musicians | 5.33 (1.43) | 5.67 (1.23) |
| Byzantine chanters | 5.50 (1.31) | 5.83 (1.46) |
| Percussionists | 5.50 (1.67) | 5.58 (1.31) |
| Non-musicians | 4.92 (0.66) | 5.42 (0.66) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).