Submitted:

18 May 2023

Posted:

19 May 2023

Abstract

Keywords:

1. Introduction

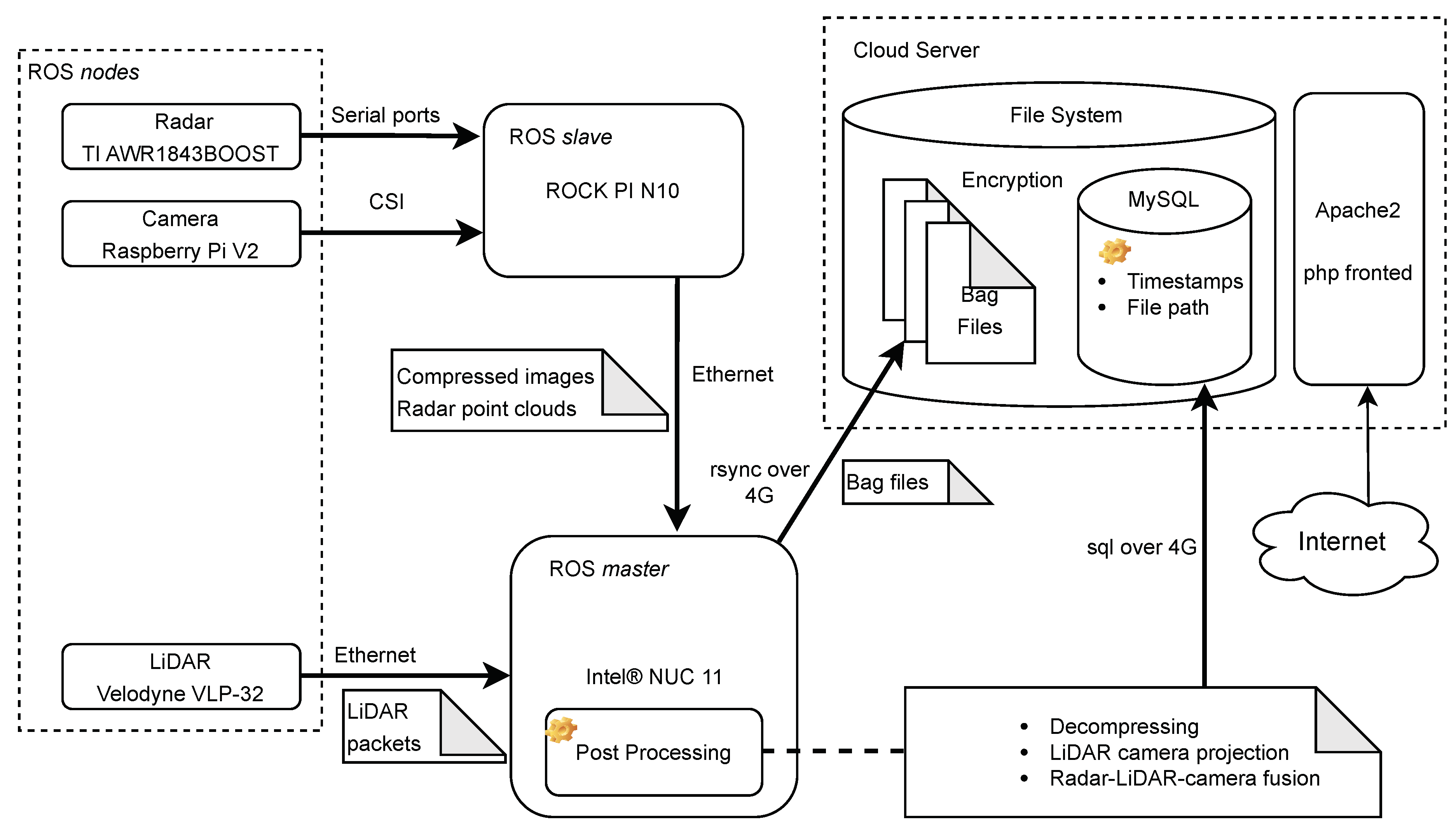

- We present a general-purpose, scalable, end-to-end AV data collection framework for collecting high-quality multi-sensor radar, LiDAR, and camera data.

- We implement and demonstrate a prototype of the framework, whose source code is available at: https://github.com/Claud1234/distributed_sensor_data_collector.

- The dataset collection framework includes backend data processing and multimodal sensor fusion algorithms.

2. Related Work

2.1. Dataset collection framework for autonomous driving

2.2. Multimodal sensor system for data acquisition

3. Methodology

3.1. Sensor Calibration

3.1.1. Intrinsic Calibration

3.1.2. Extrinsic Calibration

- Before the extrinsic calibration, each sensor was intrinsically calibrated and published its processed data as ROS messages. However, to keep data transmission efficient and reliable and to save bandwidth, the ROS drivers for the LiDAR and camera sensors were programmed to publish only Velodyne packets and compressed images. Additional scripts and operations were therefore required to convert the sensor data into the ROS message types expected by the extrinsic calibration tools. Table 1 lists the message types of the sensors and the associated post-processing.

- The calibration relies on a human operator to match each LiDAR point with its corresponding image pixel. It is therefore recommended to pick distinctive features, such as the intersections of the black and white squares or the corners of the checkerboard.

- The point-pixel matches should be picked with the checkerboard at different locations that cover the full Field of View (FOV) of all sensors. For camera sensors, make sure that pixels near the image edges are selected. For LiDAR sensors, variety in depth (the distance between the checkerboard and the sensor) is critical.

- Human error is inevitable when pairing points and pixels. It is therefore suggested to select as many pairs as possible and to repeat the calibration to ensure high accuracy.
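One way to spot mismatched point-pixel pairs after a calibration run is to reproject each picked LiDAR point with the estimated extrinsics and measure how far it lands from the picked pixel. The following is a minimal sketch of that check, assuming an ideal pinhole camera without lens distortion. The function names, the intrinsic parameters (`fx`, `fy`, `cx`, `cy`), and the toy values are illustrative assumptions, not part of the prototype or of the calibration tools.

```python
import math


def project_point(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project one 3D LiDAR point into pixel coordinates (pinhole model, no distortion)."""
    # Transform from the LiDAR frame to the camera frame: p_cam = R * p + t.
    x, y, z = (
        sum(rotation[r][c] * point_lidar[c] for c in range(3)) + translation[r]
        for r in range(3)
    )
    if z <= 0:
        return None  # the point lies behind the camera
    return (fx * x / z + cx, fy * y / z + cy)


def reprojection_errors(pairs, rotation, translation, fx, fy, cx, cy):
    """Return the pixel distance between each picked pixel and its reprojected LiDAR point."""
    errors = []
    for point_lidar, (u_picked, v_picked) in pairs:
        projected = project_point(point_lidar, rotation, translation, fx, fy, cx, cy)
        if projected is None:
            errors.append(math.inf)
            continue
        errors.append(math.hypot(projected[0] - u_picked, projected[1] - v_picked))
    return errors


# Toy values (assumed): identity extrinsics and a 640x480 camera.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pairs = [
    ((0.0, 0.0, 5.0), (320.0, 240.0)),  # consistent pair
    ((1.0, 0.0, 5.0), (423.0, 244.0)),  # pair picked a few pixels off
]
print(reprojection_errors(pairs, identity, (0.0, 0.0, 0.0), 500.0, 500.0, 320.0, 240.0))
```

Pairs with a noticeably larger error than the rest are candidates for re-picking before the calibration is repeated.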

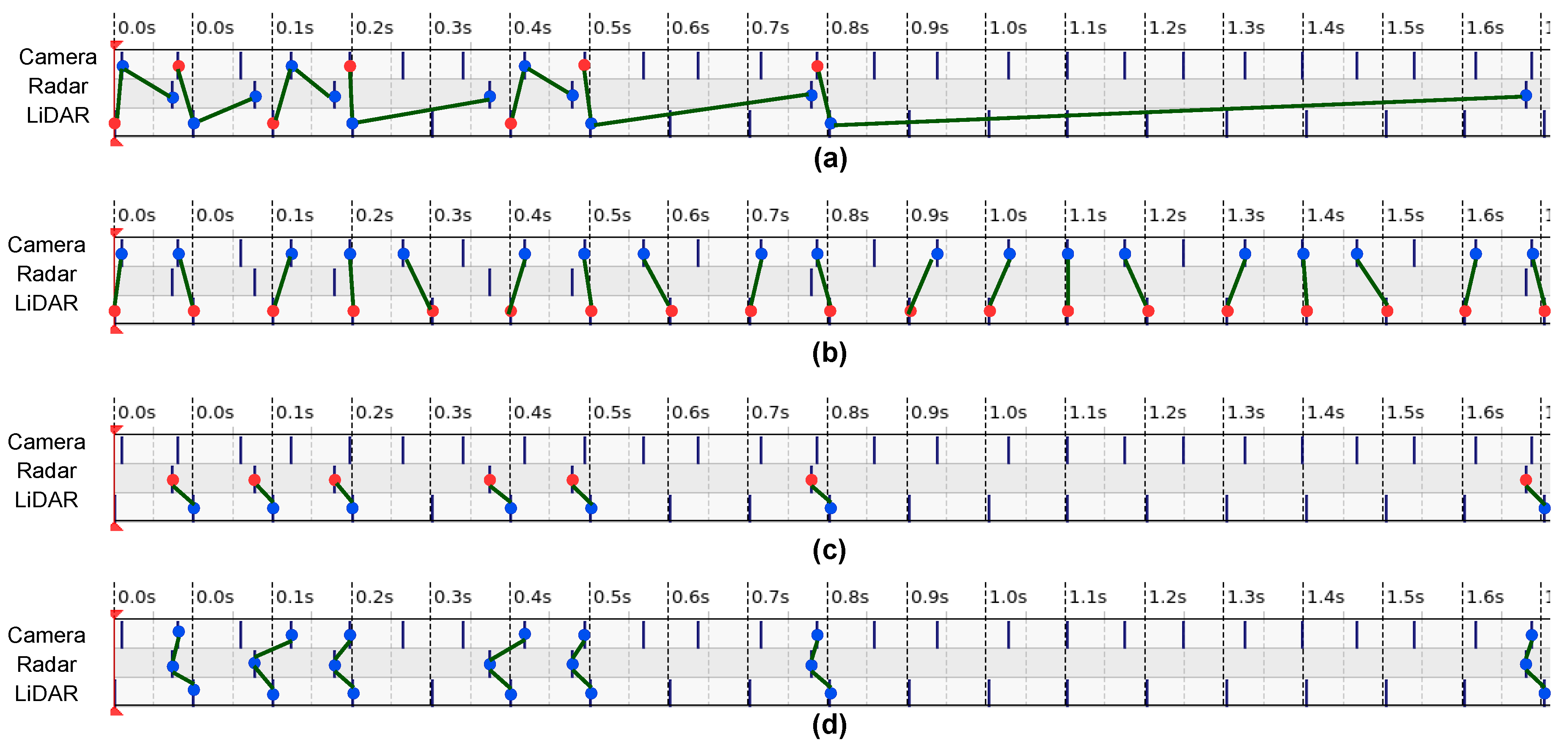

3.2. Sensor Synchronization

- Absolute timestamp: the time at which the data was produced by the sensor. It is usually created by the sensor’s ROS driver and written in the header of each message.

- Relative timestamp: the time at which the data arrives at the central processing unit, which is the Intel® NUC 11 in our prototype.
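Whichever timestamp is used, synchronization comes down to pairing messages from two streams whose stamps are close enough. The sketch below shows a simplified nearest-timestamp matcher. It is an illustration only, not the synchronizer used in the prototype; the function name, the tolerance value, and the sample stamps are assumptions.

```python
def match_by_timestamp(stamps_a, stamps_b, tolerance):
    """Pair each timestamp in stamps_a with the nearest unused one in stamps_b.

    Both lists must be sorted in ascending order (seconds). Pairs further apart
    than `tolerance` are dropped, and each element of stamps_b is used at most once.
    Returns a list of (index_in_a, index_in_b) tuples.
    """
    pairs = []
    j = 0
    for i, t_a in enumerate(stamps_a):
        # Advance j while the next candidate in stamps_b is at least as close to t_a.
        while j + 1 < len(stamps_b) and abs(stamps_b[j + 1] - t_a) <= abs(stamps_b[j] - t_a):
            j += 1
        if j < len(stamps_b) and abs(stamps_b[j] - t_a) <= tolerance:
            pairs.append((i, j))
            j += 1  # consume the matched message so it is not reused
    return pairs


# Assumed sample stamps in seconds, e.g. camera frames versus LiDAR scans.
camera_stamps = [0.00, 0.10, 0.20, 0.30]
lidar_stamps = [0.01, 0.12, 0.31, 0.45]
print(match_by_timestamp(camera_stamps, lidar_stamps, tolerance=0.025))
```

With these sample values the third camera frame has no LiDAR scan within the tolerance and is discarded, which mirrors how unmatched messages are dropped during synchronization.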

3.3. Sensor Fusion

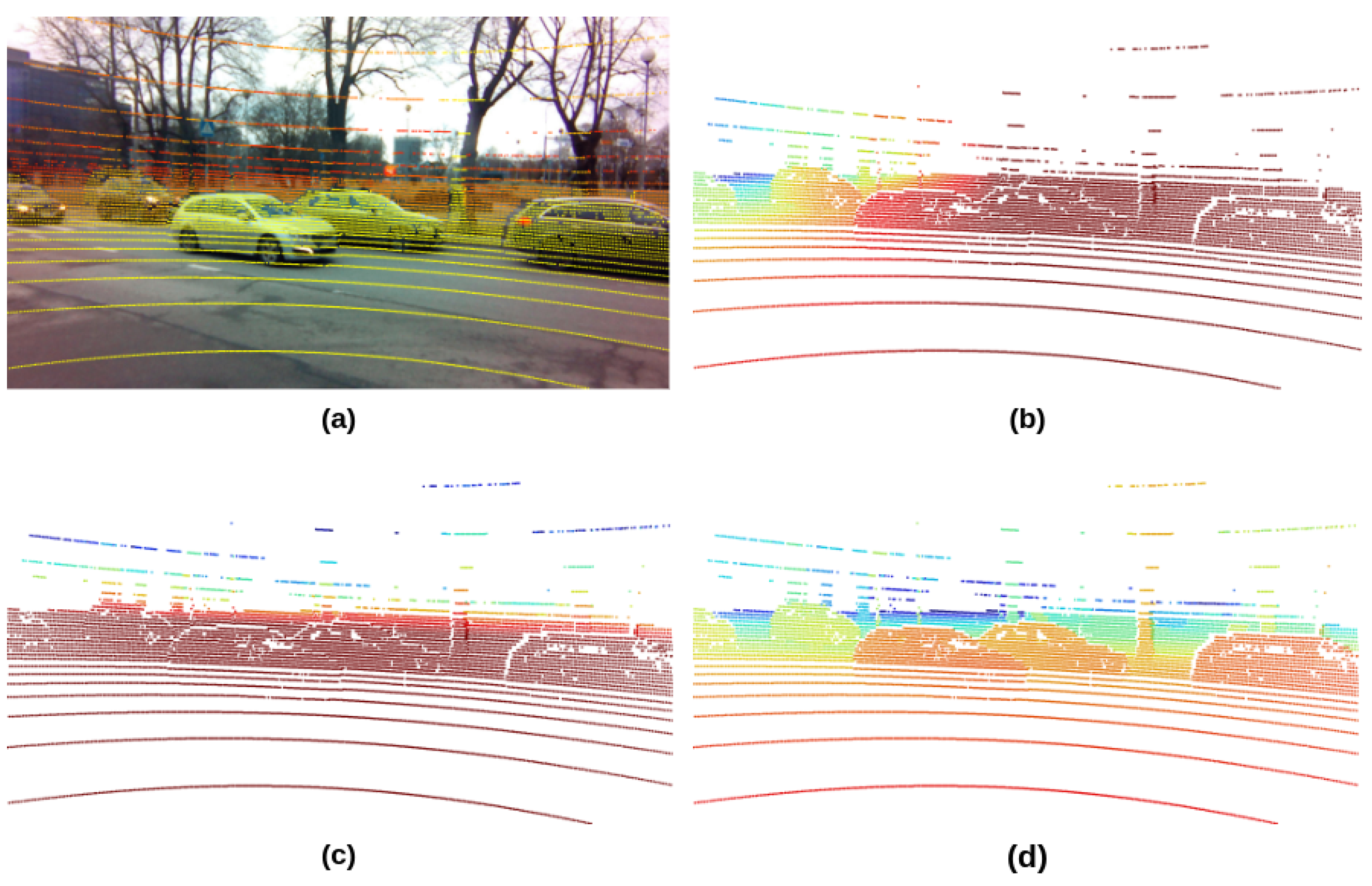

- In the first step, camera-LiDAR fusion yields the largest number of fusion results. Only a few messages are discarded during sensor synchronization because the camera and LiDAR sensors have close and homogeneous frame rates. The projection of the LiDAR point clouds onto the camera images can therefore be easily adapted as input data for neural networks.

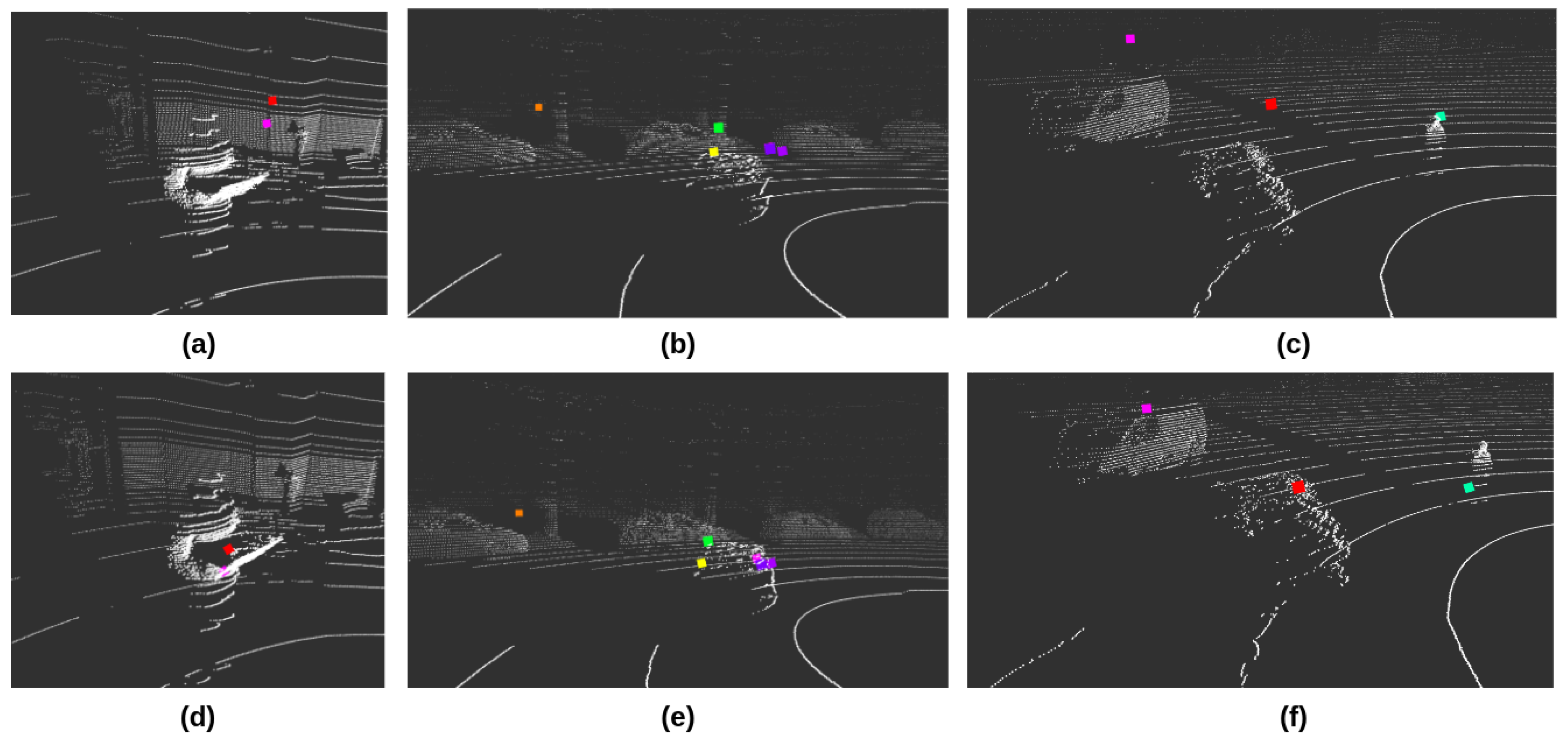

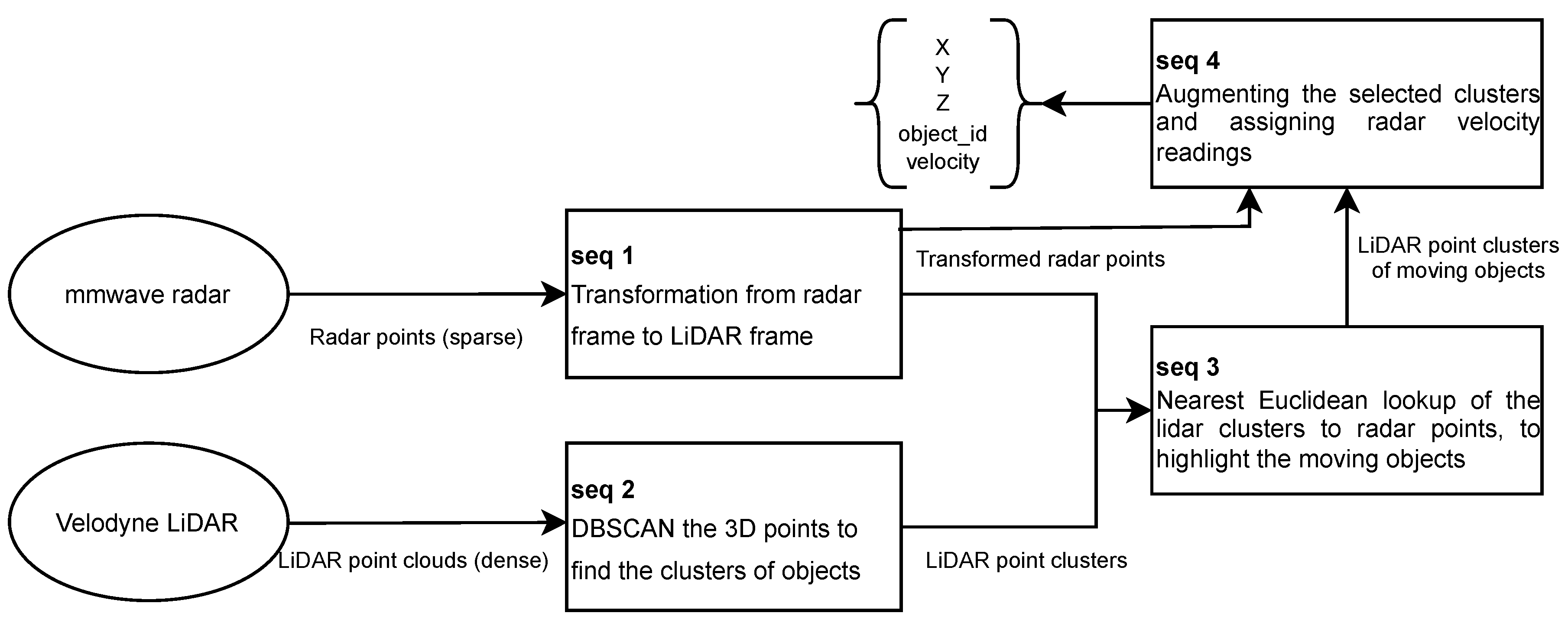

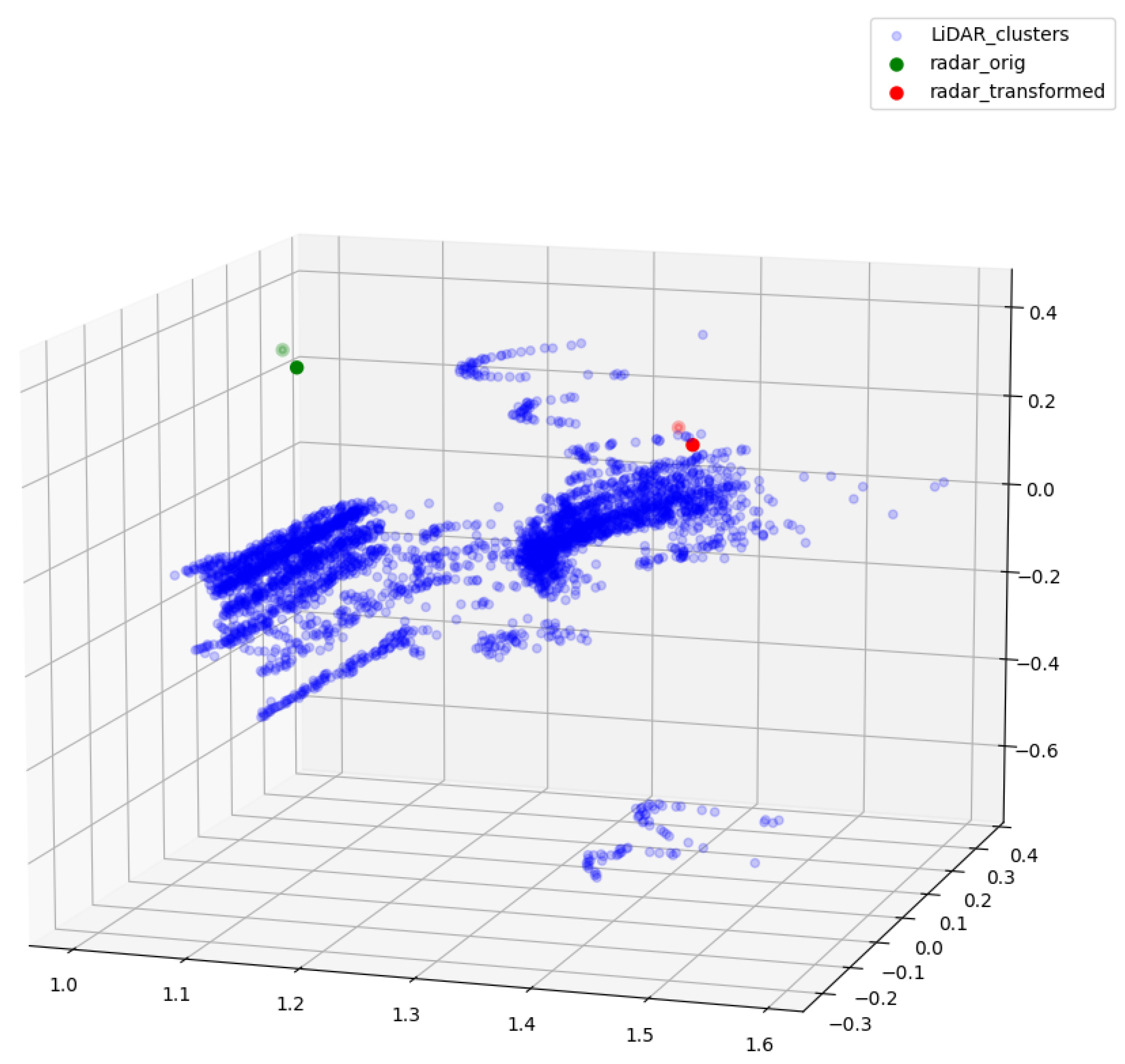

- In the second step, the fusion of the LiDAR and radar points gives the dataset the capability to filter moving objects out of dense LiDAR point clouds and to be aware of the objects’ relative velocity.

- The full camera-LiDAR-radar fusion combines the results of the first two fusion stages, which consumes little computing power and causes only minor delays.
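To make the second step more concrete, the sketch below selects the LiDAR points that lie close to a radar detection reporting a non-negligible velocity. This is only one plausible way to associate the two point sets, not the method implemented in the framework. It assumes that both sensors' points are already expressed in the same reference frame, and the function name, the `position`/`velocity` keys, the radius, and the speed threshold are hypothetical.

```python
import math


def lidar_points_near_moving_radar(lidar_points, radar_detections, radius, min_speed):
    """Return indices of LiDAR points within `radius` metres of a moving radar detection.

    lidar_points:     list of (x, y, z) tuples
    radar_detections: list of dicts with a "position" (x, y, z) and a radial "velocity" in m/s
    """
    # Keep only detections whose speed exceeds the threshold (assumed criterion).
    moving = [d for d in radar_detections if abs(d["velocity"]) >= min_speed]
    selected = []
    for idx, point in enumerate(lidar_points):
        if any(math.dist(point, d["position"]) <= radius for d in moving):
            selected.append(idx)
    return selected


# Assumed toy data: two LiDAR points on a moving object, one on a static object.
lidar = [(5.0, 0.0, 0.0), (5.2, 0.1, 0.0), (20.0, 3.0, 0.0)]
radar = [
    {"position": (5.1, 0.0, 0.0), "velocity": -2.0},  # approaching object
    {"position": (20.0, 3.0, 0.0), "velocity": 0.0},  # static object
]
print(lidar_points_near_moving_radar(lidar, radar, radius=0.5, min_speed=0.3))
```

The selected indices can then be used either to remove the moving objects from the point cloud or to attach the radar velocity to the corresponding LiDAR points.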

3.3.1. LiDAR Camera Fusion

- LiDAR point clouds are stored in a sparse triplet format, where N is the number of points in the LiDAR data.

- The LiDAR point cloud is transformed to the camera reference frame by multiplying it by the LiDAR-to-camera transform matrix.

- The transformed LiDAR points are projected onto the camera plane, preserving the original triplet structure. In essence, the transformed LiDAR matrix is multiplied by the camera projection matrix; as a result, the projected LiDAR matrix contains the LiDAR point coordinates on the camera plane (pixel coordinates).

- The camera frame width W and height H are used to cut off all LiDAR points that are not visible in the camera view. Using the projected LiDAR matrix from the previous step, we compute the row indices whose pixel coordinates fall within the camera frame and store them in an index array. Because the camera-frame-transformed LiDAR matrix and the camera-projected matrix have the same shape, it is safe to apply the derived indices to both.

- The resulting footprint images are initialized at the camera frame resolution and populated with black pixels (zero value).

- Zero-value footprint images are populated as follows:

Algorithm 1. LiDAR transposition, projection, and populating the images.
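The steps listed above can be sketched in a few lines of plain Python. This is a hedged illustration rather than the authors' Algorithm 1: it assumes a 4x4 homogeneous LiDAR-to-camera transform, a 3x4 camera projection matrix, and three footprint images that store the camera-frame x, y, and z coordinates of each projected point. The function name, the behind-the-camera check, and the toy matrices are assumptions.

```python
import math


def project_lidar_to_images(points, lidar_to_camera, projection, width, height):
    """Project LiDAR points into the camera frame and fill three footprint images.

    points:          list of (x, y, z) triplets in the LiDAR frame
    lidar_to_camera: 4x4 homogeneous transform (nested lists)
    projection:      3x4 camera projection matrix (nested lists)
    Returns three height x width images (nested lists), one per camera-frame coordinate.
    """
    # Zero-initialised footprint images at the camera resolution.
    images = [[[0.0] * width for _ in range(height)] for _ in range(3)]
    for x, y, z in points:
        homogeneous = (x, y, z, 1.0)
        # LiDAR frame -> camera frame.
        cam = [sum(row[k] * homogeneous[k] for k in range(4)) for row in lidar_to_camera]
        if cam[2] <= 0:
            continue  # behind the camera (assumed extra check)
        # Camera frame -> image plane, then divide by depth to get pixel coordinates.
        proj = [sum(row[k] * cam[k] for k in range(4)) for row in projection]
        u = math.floor(proj[0] / proj[2])
        v = math.floor(proj[1] / proj[2])
        # Keep only the points that land inside the camera frame.
        if 0 <= u < width and 0 <= v < height:
            for channel in range(3):
                images[channel][v][u] = cam[channel]
    return images


# Assumed toy setup: identity extrinsics and a 640x480 pinhole camera.
T = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
P = [[500.0, 0.0, 320.0, 0.0], [0.0, 500.0, 240.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
cloud = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (10.0, 0.0, 5.0), (0.0, 0.0, -5.0)]
footprints = project_lidar_to_images(cloud, T, P, 640, 480)
print(footprints[2][240][320], footprints[0][240][420])
```

In this toy setup the third point projects outside the 640-pixel width and the fourth lies behind the camera, so only the first two points reach the footprint images. A vectorized implementation would replace the per-point loop with matrix products and an index mask, as the steps above describe.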

3.3.2. Radar LiDAR and Camera Fusion

4. Prototype Setup

4.1. Hardware Configurations

4.1.1. Processing unit configurations

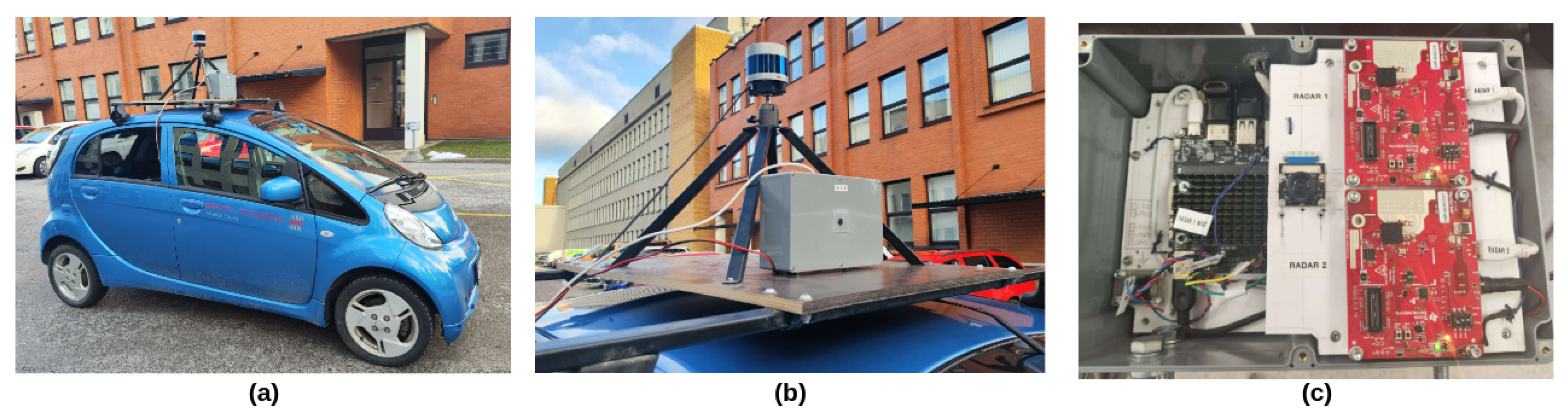

- All sensors must be modular, so that they can work independently and be easily interchanged. Independent and modular processing units are therefore needed to initiate the sensors and transfer the data.

- Some sensors have hardware limitations. For example, our radar sensors rely on serial ports for communication, and practical tests showed that the cable length affects communication performance. The computer serving the radar sensors therefore has to stay nearby.

- The main processing unit must provide enough computational resources to support complex operations such as real-time data decompression and database writing.

4.1.2. Sensor installation

4.2. Software System

4.3. Cloud server

5. Conclusions

Funding

Acknowledgments

Conflicts of Interest


| Sensor | Message Type of Topic Published by Driver | Message Type of Topic Subscribed by Calibration Processes |
|---|---|---|
| LiDAR Velodyne VLP-32C | velodyne_msgs/VelodyneScan | sensor_msgs/PointCloud2 (LiDAR-camera extrinsic); velodyne_msgs/VelodyneScan (radar-LiDAR extrinsic) |
| Camera Raspberry Pi V2 | sensor_msgs/CompressedImage | sensor_msgs/Image (camera intrinsic); sensor_msgs/Image (LiDAR-camera extrinsic) |
| Radar TI AWR1843BOOST | sensor_msgs/PointCloud2 | sensor_msgs/PointCloud2 (radar intrinsic); sensor_msgs/PointCloud2 (radar-LiDAR extrinsic) |

| Sensor | FoV (°) | Range (m)/Resolution | Update Rate (Hz) |
|---|---|---|---|
| Velodyne VLP-32 | 40 (vertical) | 200 | 20 |
| Raspberry Pi V2 | 160 (D) | 3280x2464 | 90 in 640x480 |
| TI mmwave AWR1843BOOST | 100 (H), 40 (V) | 4 cm (range resolution), 0.3 m/s (velocity resolution) | 10-100 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).